Abstract

In practical applications of deep learning-based Synthetic Aperture Radar (SAR) Automatic Target Recognition (ATR) systems, new target categories emerge continuously. Such systems must therefore learn incrementally, acquiring new knowledge while retaining previously learned information. To mitigate catastrophic forgetting in Class-Incremental Learning (CIL), this paper proposes a CIL method for SAR ATR named Multi-center Prototype Feature Distribution Reconstruction (MPFR). It has two core components. First, a Multi-scale Hybrid Attention feature extractor is designed. Trained via a feature space optimization strategy, it fuses and extracts discriminative features from both SAR amplitude images and Attributed Scattering Center (ASC) data, while preserving feature space capacity for new classes. Second, each class is represented by multiple prototypes to capture complex feature distributions. Old-class knowledge is retained by modeling the feature distribution of each old class through parameterized Gaussian diffusion, which alleviates feature confusion in incremental phases. Experiments on public SAR datasets show that MPFR outperforms existing approaches, including recent SAR-specific CIL methods. Ablation studies validate each component’s contribution, confirming MPFR’s effectiveness in addressing CIL for SAR ATR without storing historical raw data.

1. Introduction

Synthetic Aperture Radar (SAR) is an advanced active microwave imaging system. It produces high-resolution two-dimensional terrain imagery and operates independently of weather and lighting conditions. This all-weather, day-and-night Earth observation capability is valuable for continuous monitoring across diverse environments [1]. SAR imaging has been widely adopted in topographic mapping, environmental monitoring, disaster assessment, agricultural management, and resource surveying [2,3].

With the rapid development of artificial intelligence, deep learning-based SAR Automatic Target Recognition (ATR) has become a core technology in remote sensing image interpretation and resource monitoring. These systems are critical in real-world scenarios—disaster response, maritime monitoring, infrastructure inspection, and strategic resource assessment. They automatically and accurately identify vehicles, vessels, buildings, and other targets from SAR imagery, supporting safety and resource management [4,5,6,7,8,9].

However, operational environments and mission requirements evolve dynamically. Conventional SAR ATR systems face a key challenge: new target types emerge constantly, requiring models to adapt without full retraining. Most existing deep learning-based SAR ATR methods use a static learning paradigm, trained on a fixed set of target categories [10,11]. When new classes appear, these systems suffer from catastrophic forgetting—sharp degradation in recognition performance of previously learned classes as new knowledge is added [12,13].

This challenge underscores the pressing need for SAR ATR systems that support continuous learning. There is growing demand for systems that efficiently integrate new target class knowledge without full retraining, while maintaining recognition performance on previously learned classes. This need has driven research into incremental learning methods for SAR ATR.

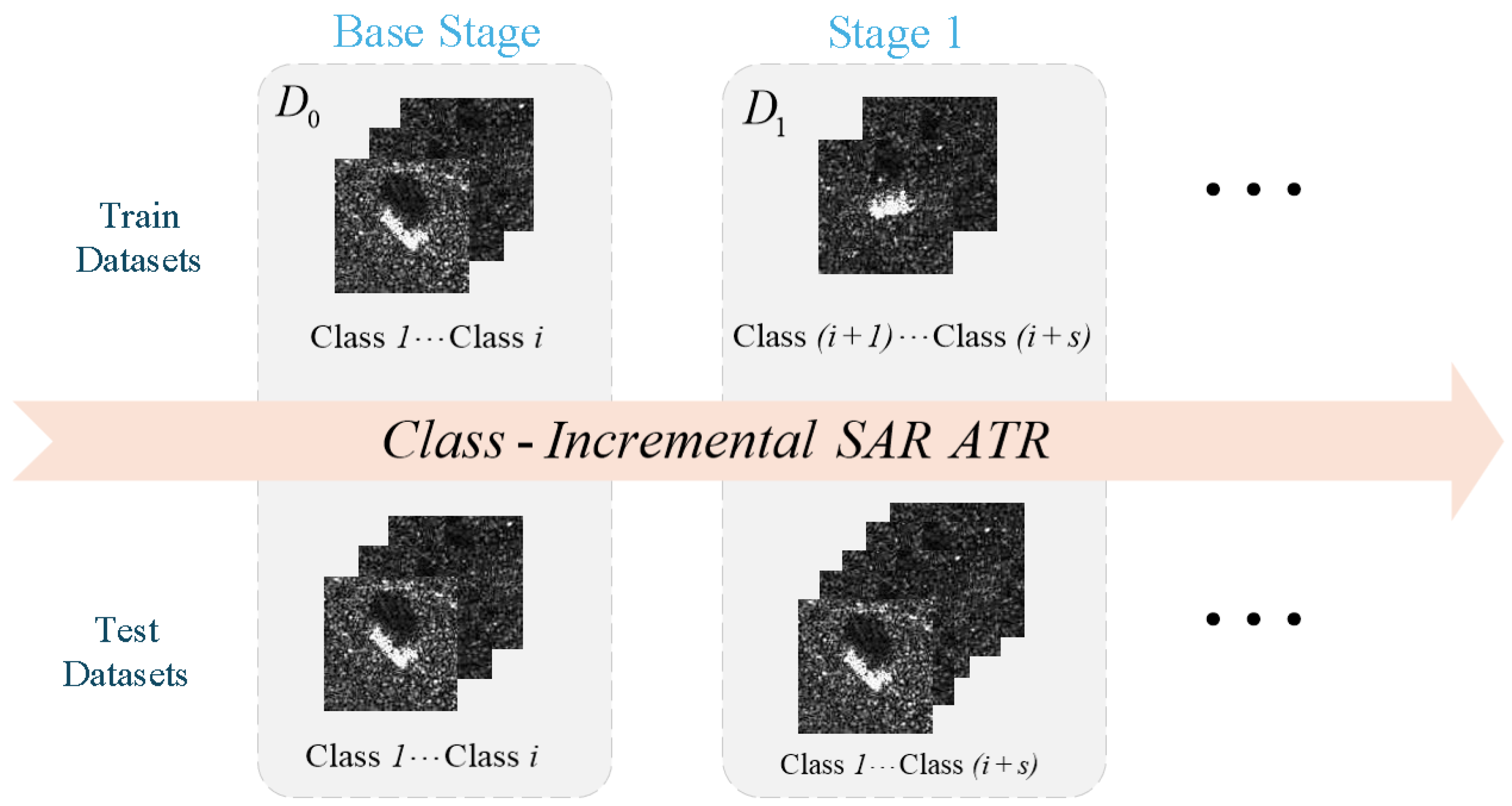

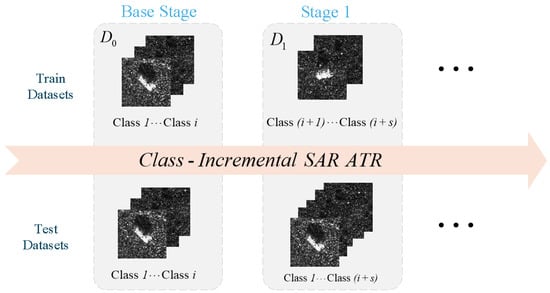

Class-Incremental Learning (CIL) provides a structured framework to address catastrophic forgetting in SAR ATR. CIL enables models to learn progressively from a sequence of non-overlapping SAR datasets that arrive incrementally. The model must acquire new knowledge while maintaining classification accuracy across all previously encountered classes. After each learning phase, the model is evaluated on all learned classes [14], as shown in Figure 1. This reflects real-world usability in evolving operational settings.

Figure 1.

Class-Incremental Learning for Synthetic Aperture Radar Automatic Target Recognition.

CIL methods fall into three main categories: regularization-based, replay-based, and parameter-isolation approaches. Regularization techniques like PASS [15] use prototype augmentation to enhance class discriminability across incremental states. Replay methods such as IL2A [16] leverage class distribution information to replay past class features without storing raw samples.

A recent trend is freezing the feature extractor after initial learning and only incrementally updating the classifier. This avoids storing old-class exemplars and simplifies model updates. Methods like FeTrIL [17] (generating synthetic features), SimpleCIL [18], and FeCAM [19] follow this trend.

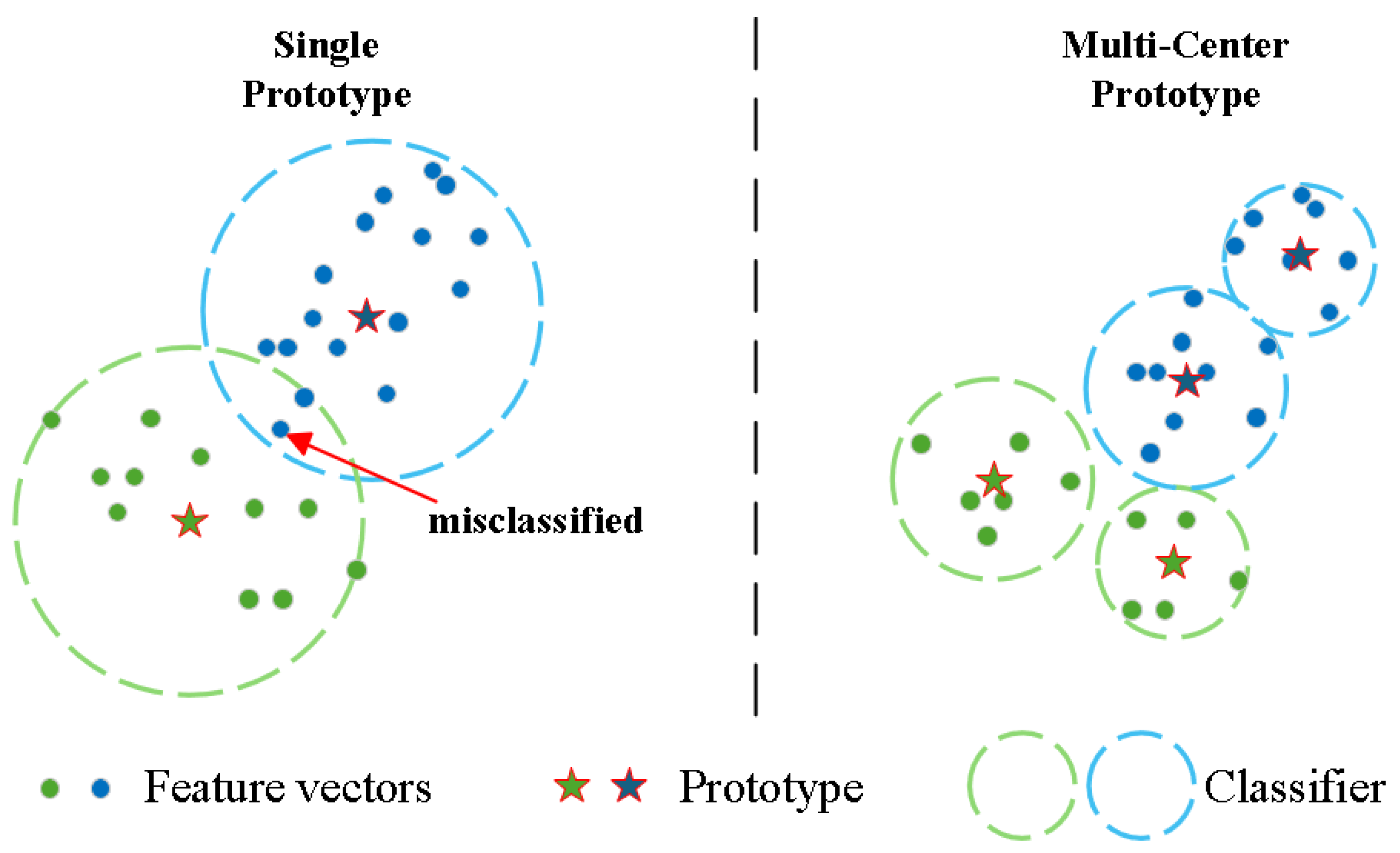

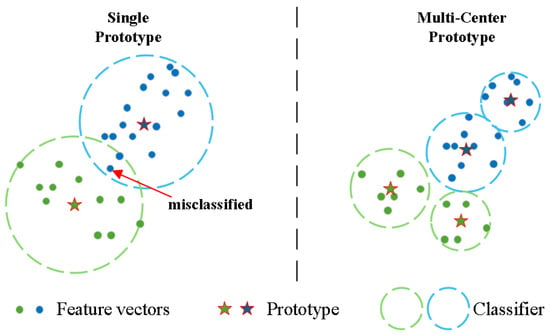

However, many current approaches represent each class with a single prototype centroid. This oversimplification causes problems in SAR image recognition. SAR targets have complex, multi-modal feature distributions due to aspect-dependent scattering and target configuration variations [20]. When new classes are introduced without retraining the feature extractor, their feature distributions often overlap significantly with old classes in the embedding space. The single-prototype representation fails to capture these complex manifolds, leading to severe feature confusion and misclassification (Figure 2).

Figure 2.

Comparison of single-prototype vs. multi-center prototype representations. Single prototypes fail to capture complex class distributions, leading to feature confusion and classification errors at decision boundaries. Multi-center prototype representation captures intrinsic manifold structure, preserving topological relationships and reducing inter-class interference.

Although FeTrIL and SimpleCIL streamline the incremental learning pipeline, they cannot handle such complex distributions effectively. Their reliance on global centroids or simple feature generation fails to model intra-class diversity. Thus, SAR target recognition requires methods that finely represent each class’s complex feature distributions.

To address these issues, this paper proposes the Multi-center Prototype Feature Distribution Reconstruction (MPFR) method. MPFR differs fundamentally from single-prototype approaches by modeling each class distribution with multiple cluster centroids. This enables faithful reconstruction of complex feature manifolds during incremental phases. Combined with multi-modal SAR feature fusion, MPFR robustly captures the intricate scattering characteristics of radar targets while mitigating catastrophic forgetting.

MPFR operates in two phases: base phase (initial model training and prototype selection) and incremental phases (feature reconstruction and classifier updates). During incremental learning, synthetic features of old classes are generated from stored multi-center prototypes. These are combined with new target class real features to update the classifier. This mitigates catastrophic forgetting while preserving inter-class discrimination, even with SAR noise and high variability.

The main contributions of this paper are threefold:

- Novel CIL Framework: MPFR departs from single-prototype approaches by using multiple prototypes per class. This accurately captures the complex, multi-modal feature distributions of SAR targets caused by aspect-dependent scattering and configuration variations.

- Advanced Multi-modal Feature Fusion: A Multi-scale Hybrid Attention feature extractor fuses SAR amplitude images with physically meaningful Attributed Scattering Center features. A joint supervision strategy optimizes the feature space for incremental learning.

- Comprehensive Experimental Validation: Extensive evaluations on MSTAR [21] and SAR-AIRcraft-1.0 [22] datasets show MPFR achieves state-of-the-art performance. It outperforms existing CIL approaches in average accuracy and forgetting rate. Ablation studies validate each component’s contribution.

2. Related Work

2.1. SAR Automatic Target Recognition

SAR Automatic Target Recognition (ATR) has evolved significantly, transitioning from traditional model-based approaches to modern deep learning techniques. Early SAR ATR systems predominantly relied on template matching and model-based methods [23,24,25,26]. While these methods offer strong interpretability, they are often too simplistic to handle the complexities of real-world SAR ATR scenarios.

The rise of deep learning has revolutionized SAR ATR, with Convolutional Neural Networks (CNNs) demonstrating substantial performance improvements. Pioneering work by [3] introduced CNN architectures specifically tailored for SAR target classification. Subsequent research explored various network designs, including deeper architectures [9], multi-scale networks [27], attention mechanisms [28,29,30], and pre-trained models such as SARATR-X [31]. More recently, methods incorporating electromagnetic scattering characteristics and feature fusion [20,32,33,34] have made considerable progress in achieving both high recognition accuracy and physical interpretability.

2.2. Class-Incremental Learning

Class-Incremental Learning (CIL) is a pivotal domain in continual learning designed to overcome the plasticity–stability dilemma. Early approaches primarily relied on regularization. Methods such as LwF [35] introduced distillation losses to preserve the responses of the old model on new data. However, their performance often degrades significantly as the number of incremental tasks increases.

Replay-based methods constitute another major direction. iCaRL [36] pioneered the use of exemplar replay. WA [37] improved this by aligning weight magnitudes. PODNet [38] enhanced replay efficiency via spatial-based distillation. More recently, methods like IL2A [16] explored feature-level replay to circumvent privacy and storage constraints.

A prominent trend is decoupling feature extraction from classifier learning. Approaches such as FeTrIL [17], SimpleCIL [18], and FeCAM [19] typically freeze the backbone after the initial stage and focus on optimizing the classifier using robust feature representations.

In the broader computer vision domain, significant progress has been made with exemplar-free approaches. A notable method is Adversarial Drift Compensation (ADC) [39]. ADC introduces a mechanism to adversarially perturb current task samples to mimic old class distributions, compensating for semantic drift without storing exemplars. While highly effective on optical datasets (e.g., ImageNet, CIFAR), its application to the complex, speckle-noise-rich SAR domain remains an open challenge, as the generated adversarial perturbations may not fully align with the physical scattering properties of radar targets.

Integrating CIL into SAR ATR introduces unique challenges. Our work focuses on the standard CIL setting where training data for new classes is abundant, but access to historical data is restricted. In this context, the primary challenge is preserving the complex manifold structures of learned classes without overwriting them with new information.

Several domain-specific methods have been proposed to address this. MLAKDN [40] utilizes multilevel adaptive knowledge distillation, while IncSAR [41] employs a dual fusion framework. Furthermore, recent work based on MIM-CIL [42] aims to mitigate forgetting by maximizing the dependency between historical and current representations.

However, despite these advancements, most existing SAR CIL methods still rely on global constraints or assume relatively compact, unimodal class distributions. They often fail to explicitly model the fragmented, multi-modal feature manifolds caused by large viewpoint variations in radar imagery. Our proposed MPFR method addresses this gap by employing a multi-center prototype strategy. Unlike methods based solely on information maximization or distillation, MPFR explicitly reconstructs the diverse geometric modes of target features.

3. Proposed Method

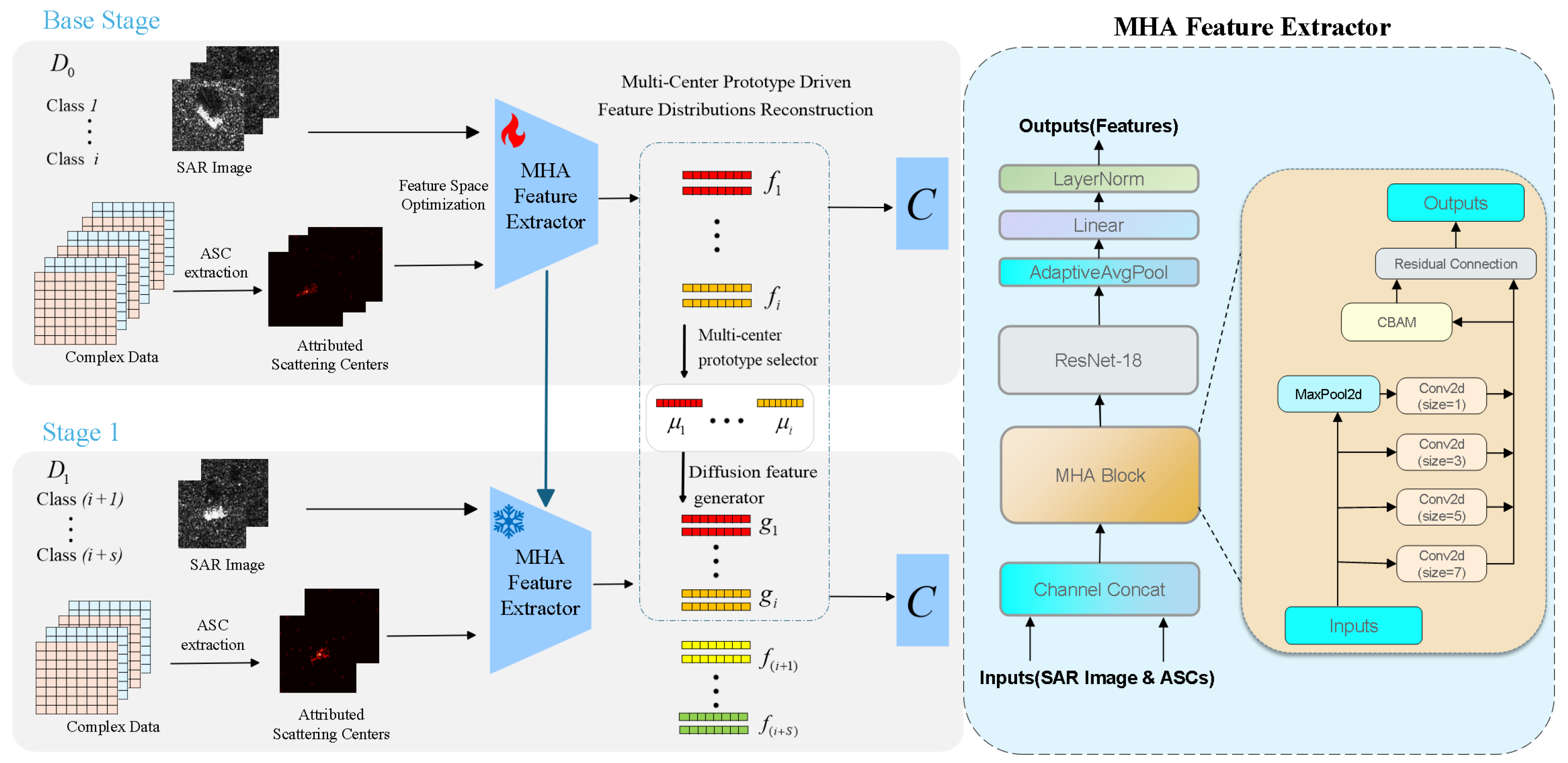

This section details the MPFR method. It first presents the overall framework, clarifying the workflow of the base phase and incremental phase. Then it elaborates on three core components: Multi-scale Hybrid Attention-based Feature Fusion (extracting discriminative multi-modal features), Feature Space Optimization (using joint supervision to enhance feature discriminability), and Multi-Center Prototype Feature Distribution Reconstruction (modeling complex class distributions and generating synthetic features). Each component’s design principles and implementation details are explained.

3.1. Overall Framework

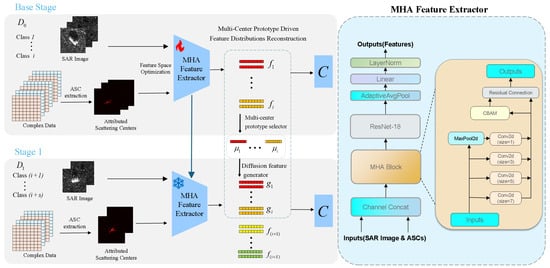

The MPFR framework operates in two distinct phases: base phase and incremental phase, as shown in Figure 3. The base phase focuses on training a high-performance feature extractor and selecting multi-center prototypes. The incremental phase generates synthetic old-class features via diffusion and updates the classifier using a hybrid feature space. This balances feature extractor stability and classifier plasticity, addressing catastrophic forgetting.

Figure 3.

Overall framework of the proposed method, showing the base phase for initial feature extraction and prototype selection, and the incremental phase for feature reconstruction and classifier updating.

In the base phase, a gradient-based method [43] extracts Attributed Scattering Centers (ASC) that model targets’ high-frequency electromagnetic scattering characteristics. These ASC features are fused with SAR amplitude images via a feature space optimization strategy to jointly train a multi-scale hybrid attention feature extractor.

The trained feature extractor F processes the initial training dataset to generate feature vectors, which train the initial classifier C. A multi-center prototype selector then identifies representative prototypes for each class and stores them in the prototype set.

During incremental learning, the diffusion feature generator synthesizes old-class feature representations using the preserved prototype set. These reconstructed features are combined with new target features extracted by the frozen F to form a hybrid feature space for training the current incremental classifier C. This allows the frozen F and updated C to recognize all previous targets while adapting to new classes.

After each incremental phase, the multi-center prototype selector identifies new-class prototypes and adds them to the prototype set.

Algorithm 1 formally describes MPFR’s complete class-incremental learning procedure:

Algorithm 1. Class-Incremental Learning Procedure of MPFR.
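As a minimal sketch of the workflow described in this subsection (an outline under stated assumptions, not a verbatim listing of Algorithm 1), the base and incremental phases can be written as one loop. The function name `run_mpfr` and the four callables passed to it are hypothetical placeholders for the frozen extractor F, the multi-center prototype selector, the diffusion feature generator, and the classifier training routine. The sketch assumes the extractor has already been trained and frozen.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Feature = Sequence[float]
Sample = Tuple[object, int]              # (raw input, class label)
Prototype = Tuple[Feature, float, int]   # (centroid, spread, sample count)


def run_mpfr(
    phases: List[List[Sample]],
    extract: Callable[[object], Feature],
    select_prototypes: Callable[[List[Feature]], List[Prototype]],
    generate: Callable[[Prototype], List[Feature]],
    train_classifier: Callable[[List[Tuple[Feature, int]]], object],
):
    """Outline of the base phase (phases[0]) and incremental phases (phases[1:])."""
    prototype_set: Dict[int, List[Prototype]] = {}
    classifiers = []
    for phase_data in phases:
        # Frozen extractor F: new-class samples become real feature vectors.
        new_feats = [(extract(x), y) for x, y in phase_data]
        # Old classes are represented only by features regenerated from prototypes.
        old_feats = [
            (f, label)
            for label, protos in prototype_set.items()
            for p in protos
            for f in generate(p)
        ]
        # Classifier C is (re)trained on the hybrid feature space.
        classifiers.append(train_classifier(old_feats + new_feats))
        # Store multi-center prototypes for the classes learned in this phase.
        by_class: Dict[int, List[Feature]] = {}
        for f, y in new_feats:
            by_class.setdefault(y, []).append(f)
        for label, feats in by_class.items():
            prototype_set[label] = select_prototypes(feats)
    return classifiers, prototype_set
```

In the base phase the prototype set is empty, so the classifier sees only real features. From the first incremental phase onward, no raw old-class samples are needed.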

3.2. Multi-Scale Hybrid Attention-Based Feature Fusion

This subsection details the Multi-scale Hybrid Attention (MHA) feature extractor designed to fuse SAR amplitude images and scattering center features. The workflow proceeds in sequence through scattering center extraction, ASC reconstructed image generation, dual-modal feature extraction, multi-scale encoding, hybrid attention fusion, and backbone refinement, as shown in Figure 3.

Attributed scattering center features are extracted from raw SAR echo data using gradient-based optimization. The process starts with a parameterized scattering center model that represents high-frequency electromagnetic scattering. For each scattering center, attributes such as position, type, amplitude, and frequency-dependent characteristics are estimated by solving a nonlinear optimization problem that fits the echo simulated from the ASC model parameters of all k scattering centers to the measured SAR echo data. Implementing the gradient-based solver in a deep learning framework keeps this optimization efficient.
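One plausible way to write this fitting problem is shown below. It is a sketch under assumptions: a squared-error data term is assumed, and the symbols $E$ (measured echo), $\hat{E}$ (simulated echo), and $\theta_i$ (parameters of the i-th scattering center) are introduced here for illustration only.

```latex
\hat{\Theta} = \arg\min_{\Theta} \left\| E - \hat{E}(\Theta) \right\|_2^2,
\qquad \Theta = \{\theta_i\}_{i=1}^{k}
```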

Extracted ASC parameters are rasterized into feature maps via spatial projection. Each scattering center contributes to its corresponding pixel location according to its attributes, generating spatially aligned representations compatible with the SAR amplitude images.
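The rasterization step can be illustrated with a small sketch. It assumes that each scattering center has already been reduced to a `(row, col, amplitude)` triple in pixel coordinates and that only the amplitude magnitude is projected. Both choices, and the function name `rasterize_asc`, are illustrative assumptions rather than the exact projection used in MPFR.

```python
from typing import List, Tuple


def rasterize_asc(
    centers: List[Tuple[float, float, float]],
    height: int,
    width: int,
) -> List[List[float]]:
    """Accumulate scattering-center amplitudes onto an image-aligned grid.

    Each center is (row, col, amplitude) in pixel coordinates; this layout
    is an assumption made for illustration.
    """
    grid = [[0.0] * width for _ in range(height)]
    for row, col, amplitude in centers:
        r, c = int(round(row)), int(round(col))
        if 0 <= r < height and 0 <= c < width:  # ignore centers outside the chip
            grid[r][c] += abs(amplitude)        # magnitude also handles complex values
    return grid
```

The resulting grid has the same spatial layout as the amplitude image, so the two can be stacked along the channel axis before fusion.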

The fusion mechanism uses a multi-scale architecture with hybrid attention for adaptive feature weighting. The feature extractor processes the concatenated input through parallel convolutional paths with different receptive fields, and the multi-scale encoded features are concatenated for a comprehensive representation.

The hybrid attention fusion module [44] then refines the features adaptively in two sequential steps: channel attention emphasizes informative feature channels, and spatial attention addresses misalignment and highlights discriminative regions. Finally, the attention-weighted features are refined via a ResNet-18 backbone.

This approach captures comprehensive information through multi-scale encoding and enables intelligent feature selection via hybrid attention. It leverages complementary advantages of SAR amplitude information and scattering center features, ensuring robust performance in complex electromagnetic environments.
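The fusion pipeline of this subsection can be summarized symbolically. The following is a sketch under assumptions: all symbols are introduced here for illustration, the number of parallel branches $S$ and their kernel sizes are left unspecified, and the channel and spatial attention maps $M_{\mathrm{c}}$ and $M_{\mathrm{s}}$ are assumed to act as multiplicative gates.

```latex
\begin{aligned}
X &= \mathrm{Concat}(I_{\mathrm{amp}},\, I_{\mathrm{ASC}}) \\
F_s &= \mathrm{Conv}_{s}(X), \quad s = 1,\dots,S \\
F_{\mathrm{ms}} &= \mathrm{Concat}(F_1,\dots,F_S) \\
F_{\mathrm{ca}} &= M_{\mathrm{c}}(F_{\mathrm{ms}}) \odot F_{\mathrm{ms}} \\
F_{\mathrm{sa}} &= M_{\mathrm{s}}(F_{\mathrm{ca}}) \odot F_{\mathrm{ca}} \\
f &= \mathrm{ResNet18}(F_{\mathrm{sa}})
\end{aligned}
```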

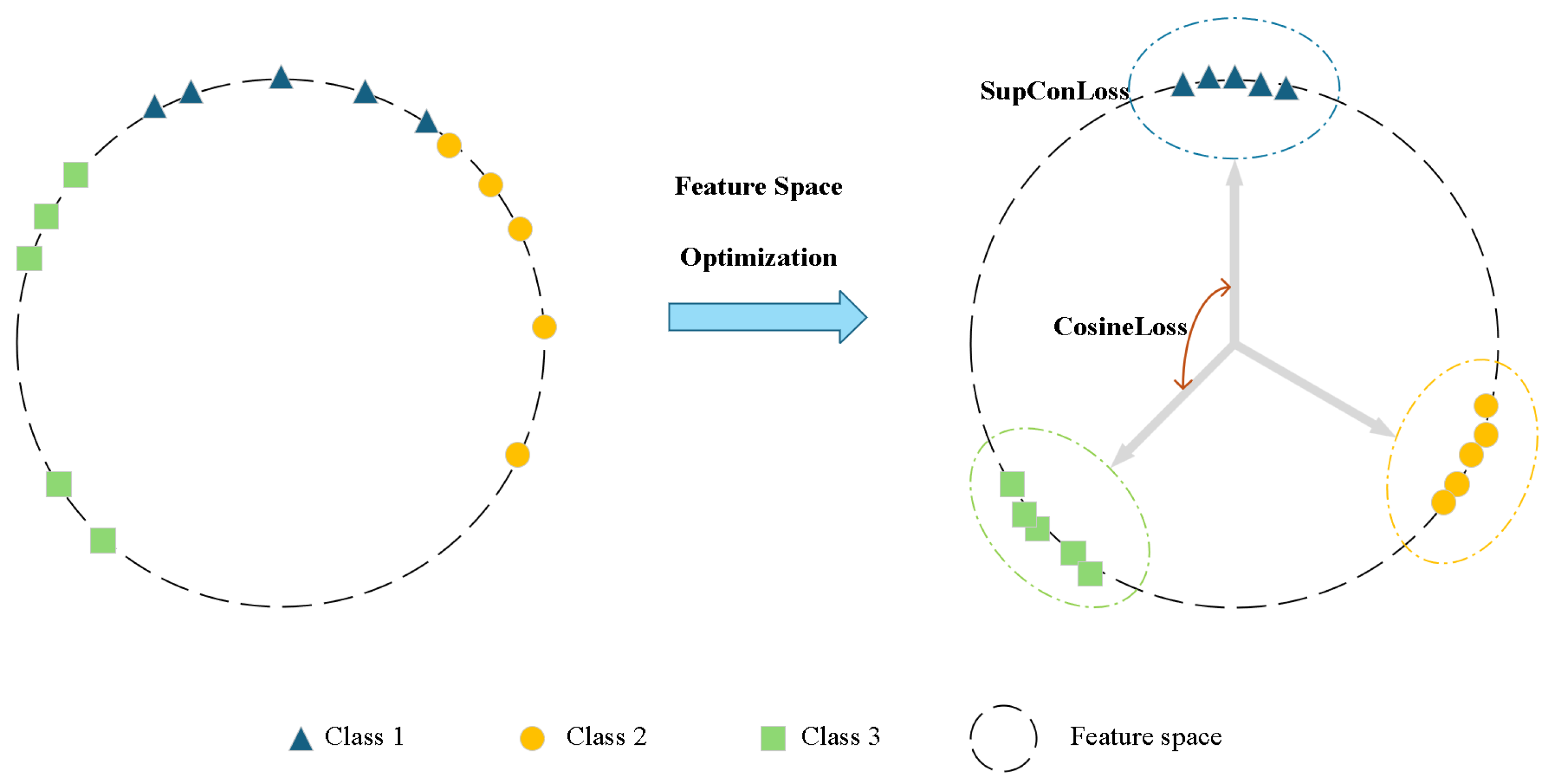

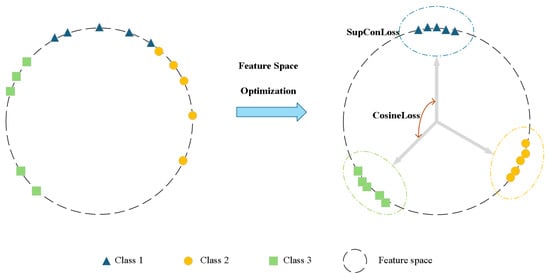

3.3. Feature Space Optimization for Backbone Network Training

To ensure the MHA feature extractor produces discriminative features for incremental learning, a joint supervision strategy combines Supervised Contrastive Loss [45] and Additive Angular Margin Loss [46] (Figure 4). This dual-loss strategy optimizes intra-class compactness and inter-class separability, laying a foundation for subsequent multi-center prototype learning.

Figure 4.

Illustration of the joint supervision strategy for feature space optimization. The framework combines supervised contrastive loss and additive angular margin loss to achieve intra-class compactness and inter-class distinctiveness in the learned feature representations.

Supervised contrastive loss structures the feature space by pulling same-class features together and pushing different-class features apart. For a batch of normalized feature vectors $\{z_i\}_{i=1}^{N}$, the loss is:

$$\mathcal{L}_{SC} = -\frac{1}{N}\sum_{i=1}^{N}\frac{1}{|P(i)|}\sum_{p \in P(i)} \log \frac{\exp(z_i \cdot z_p / \tau)}{\sum_{a \neq i}\exp(z_i \cdot z_a / \tau)}$$

Here, $P(i)$ is the set of indices of the samples that share the class of sample i (positive pairs), $\tau$ is the temperature hyperparameter, and N is the batch size. This guides the model to learn semantically consistent feature embeddings, enhancing robustness.
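To make the supervised contrastive loss concrete, the following is a minimal pure-Python sketch of the standard formulation from [45]. The function name, the default temperature of 0.1, and the choice to average only over anchors that have at least one positive are assumptions made for illustration, not settings reported for MPFR.

```python
import math
from typing import Sequence


def supervised_contrastive_loss(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    temperature: float = 0.1,
) -> float:
    """Supervised contrastive loss over L2-normalized feature vectors."""
    n = len(features)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an anchor without a positive pair contributes nothing
        sims = [
            sum(a * b for a, b in zip(features[i], features[j])) / temperature
            for j in range(n)
        ]
        # Log of the denominator: sum over every sample except the anchor itself.
        peak = max(sims[j] for j in range(n) if j != i)
        log_denom = peak + math.log(
            sum(math.exp(sims[j] - peak) for j in range(n) if j != i)
        )
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0
```

When same-class features coincide and different-class features are orthogonal, the loss is close to zero. Shuffling the labels on the same features makes it large, which is the behavior the loss is designed to penalize.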

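Additive angular margin loss imposes an angular constraint on the classification layer to improve discriminability. Let $W_j$ be the normalized classification layer weight vector of class j, and let $\theta_{y_i}$ be the angle between feature $z_i$ and its ground-truth class weight $W_{y_i}$. The loss is:

$$\mathcal{L}_{AAM} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{s\cos(\theta_{y_i}+m)}}{e^{s\cos(\theta_{y_i}+m)}+\sum_{j=1,\, j\neq y_i}^{C} e^{s\cos\theta_j}}$$

Here, $\theta_j$ is the angle between $z_i$ and $W_j$, s is a scale factor, m is the additive angular margin, and C is the total number of classes. The angular margin m explicitly increases inter-class separation.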
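The effect of the angular margin can be checked numerically with a short sketch of the standard additive angular margin loss from [46], evaluated for a single sample. The function names and the default values `s=30.0` and `m=0.5` are illustrative assumptions, not the hyperparameters used in the experiments.

```python
import math
from typing import List, Sequence


def _unit(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def additive_angular_margin_loss(
    feature: Sequence[float],
    weights: Sequence[Sequence[float]],
    label: int,
    s: float = 30.0,
    m: float = 0.5,
) -> float:
    """Additive angular margin loss for one sample."""
    f = _unit(feature)
    cosines = [sum(a * b for a, b in zip(f, _unit(w))) for w in weights]
    logits = []
    for j, cos_j in enumerate(cosines):
        if j == label:
            # Add the margin to the angle of the ground-truth class only.
            theta = math.acos(max(-1.0, min(1.0, cos_j)))
            logits.append(s * math.cos(theta + m))
        else:
            logits.append(s * cos_j)
    # Numerically stable softmax cross-entropy on the scaled logits.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]
```

For a feature lying exactly between two class weights, the loss with `m=0` equals log 2, while any positive margin raises it. Training therefore has to push the feature closer to its own class weight than a plain softmax would require.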

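The joint training objective combines both losses via weighted summation:

$$\mathcal{L} = \lambda_1 \mathcal{L}_{SC} + \lambda_2 \mathcal{L}_{AAM}$$

Here, $\mathcal{L}_{SC}$ is the supervised contrastive loss, $\mathcal{L}_{AAM}$ is the additive angular margin loss, and $\lambda_1$ and $\lambda_2$ are balancing hyperparameters. Together, the contrastive loss promotes intra-class aggregation and the angular margin loss promotes inter-class separation, yielding fused features that are both invariant and highly discriminative.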

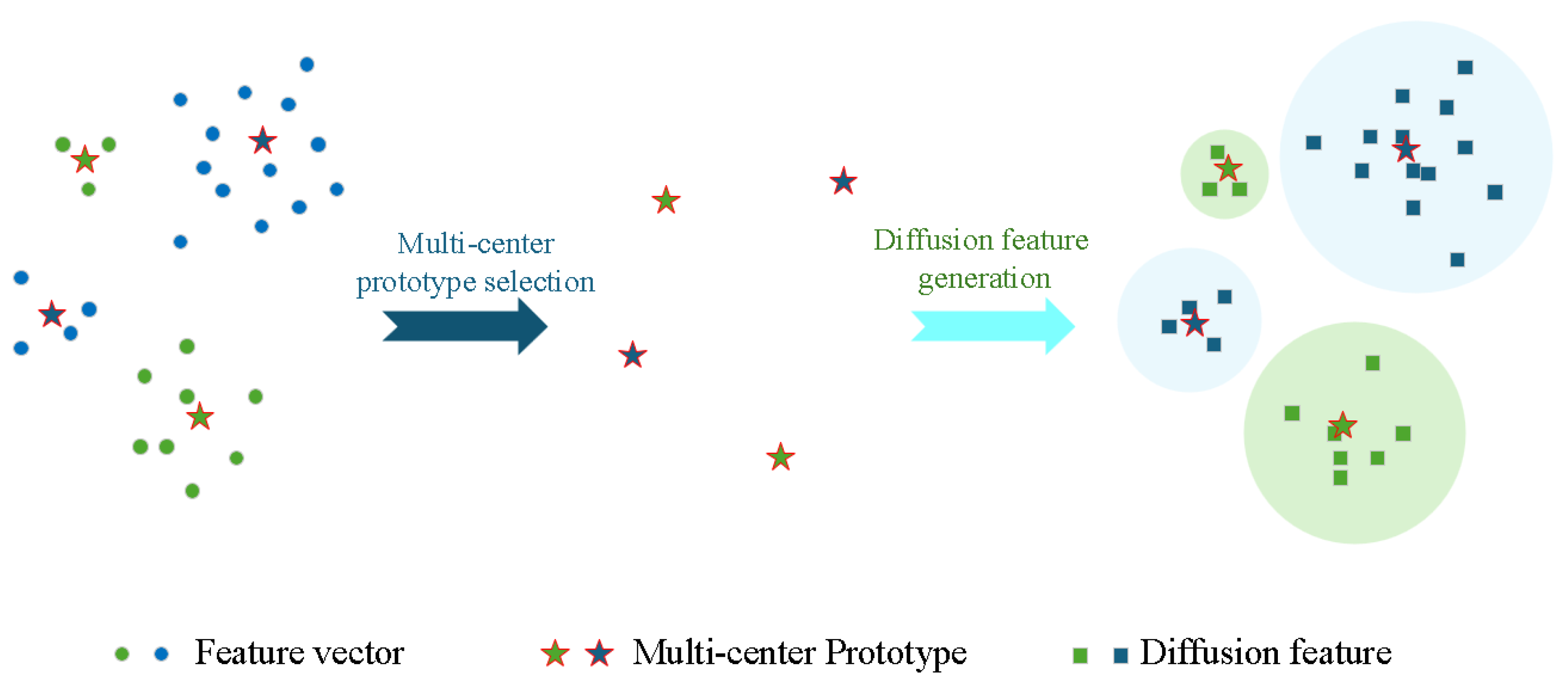

3.4. Multi-Center Prototype Feature Distribution Reconstruction

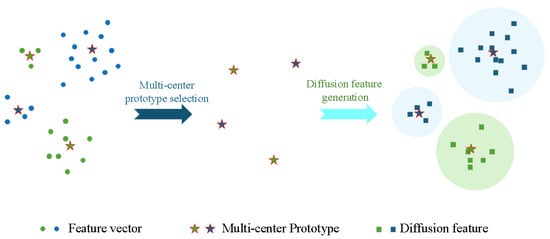

This subsection presents the core innovation of MPFR: Multi-Center Prototype Feature Distribution Reconstruction. In CIL with a frozen feature extractor, single-prototype class representation causes feature confusion, especially in noisy SAR ATR. MPFR addresses this by capturing complex class distributions through two key steps: multi-center prototype extraction and feature distribution reconstruction, as shown in Figure 5.

Figure 5.

Feature distribution reconstruction for two classes: feature representations of each class are clustered into m clusters (shown with an illustrative value of m for clarity, which differs from the value used in the experiments), and the cluster centers are preserved as multi-center prototypes. The left side shows the original feature vectors and multi-center prototypes, while the right side demonstrates the diffusion features generated from these prototypes, reconstructing the feature space distribution for incremental learning.

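For each new class in the current incremental phase, K-means clustering [47] with m clusters is applied to the extracted feature vectors of that class. This yields m cluster centroids $\{c_j\}_{j=1}^{m}$ as multi-center prototypes. The clustering objective minimizes the within-cluster sum of squares:

$$\min_{\{S_j\},\{c_j\}} \sum_{j=1}^{m}\sum_{f \in S_j} \lVert f - c_j \rVert^2$$

Here, f is a feature vector, $S_j$ is the set of feature vectors assigned to cluster j, and $\lVert f - c_j \rVert$ is the Euclidean distance between f and centroid $c_j$.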
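Multi-center prototype extraction can be sketched with a small K-means routine (Lloyd's algorithm). The function name `select_prototypes` and the deterministic farthest-point initialization are illustrative assumptions. The text specifies only that K-means [47] is used, so any standard implementation could be substituted.

```python
from typing import List, Sequence, Tuple


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_prototypes(
    features: List[Sequence[float]], m: int, max_iters: int = 100
) -> Tuple[List[List[float]], List[int]]:
    """Cluster one class's features into m groups; return (centroids, assignments)."""
    # Deterministic farthest-point initialization (an illustrative choice).
    centroids = [list(features[0])]
    while len(centroids) < m:
        farthest = max(features, key=lambda f: min(_sq_dist(f, c) for c in centroids))
        centroids.append(list(farthest))
    assignments: List[int] = []
    for _ in range(max_iters):
        # Assignment step: nearest centroid in Euclidean distance.
        updated = [
            min(range(m), key=lambda j: _sq_dist(f, centroids[j])) for f in features
        ]
        if updated == assignments:
            break  # converged
        assignments = updated
        # Update step: each centroid becomes the mean of its members.
        for j in range(m):
            members = [f for f, a in zip(features, assignments) if a == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignments
```

Only the centroids, together with per-cluster statistics such as member counts, need to be stored after a phase. The raw features can then be discarded.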

It is worth noting that a common alternative to dealing with intra-class diversity is reformulating the problem as subclass classification, where fine-grained labels are treated as mutually exclusive subclasses. However, such an approach relies on the availability of detailed, manually annotated sub-labels, which are often expensive or unavailable in non-cooperative SAR scenarios.

In contrast, our MPFR method utilizes unsupervised clustering to automatically discover these latent multi-modal distributions. By representing each class with m adaptive centroids without requiring explicit subclass supervision, MPFR effectively captures the intrinsic geometric structure of the data while avoiding the prohibitive cost of fine-grained annotation. Consequently, multi-center prototypes offer a more practical and robust solution for modeling complex SAR feature distributions than rigid subclass definitions.

During incremental training, old-class feature distributions are reconstructed by generating synthetic features around the preserved multi-center prototypes. For each centroid, as many diffusion features are generated as there were original feature points in its cluster. The standard deviation of each cluster is derived from the mean distance between the cluster’s features and its centroid, and the diffusion features are drawn from a Gaussian distribution centered at the centroid with this standard deviation.

A class’s complete reconstructed feature space is the union of the diffusion feature sets of all its centroids. Classifier C is then trained on a hybrid feature space that combines the reconstructed diffusion features of old classes with the real feature vectors of new classes. This mitigates catastrophic forgetting while maintaining inter-class discrimination, even with SAR noise and variability.
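The reconstruction step can be sketched as follows. The sketch assumes an isotropic Gaussian around each centroid and, as a labeled assumption, maps the mean member-to-centroid distance to a standard deviation by dividing by the square root of the feature dimension. That mapping roughly matches the expected radius of an isotropic Gaussian and is not necessarily the exact formula used in MPFR. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Prototype = Tuple[Sequence[float], float, int]  # (centroid, sigma, sample count)


def cluster_sigma(
    members: List[Sequence[float]], centroid: Sequence[float], scale: float = 1.0
) -> float:
    """Spread derived from the mean member-to-centroid distance.

    The mapping sigma = scale * mean_distance / sqrt(dim) is an assumption.
    """
    mean_dist = sum(math.dist(f, centroid) for f in members) / len(members)
    return scale * mean_dist / math.sqrt(len(centroid))


def diffuse(
    centroid: Sequence[float], sigma: float, count: int, rng: random.Random
) -> List[List[float]]:
    """Draw `count` synthetic features from an isotropic Gaussian at the centroid."""
    return [[c + rng.gauss(0.0, sigma) for c in centroid] for _ in range(count)]


def reconstruct_class(
    prototypes: List[Prototype], rng: random.Random
) -> List[List[float]]:
    """Union of the diffusion feature sets of all prototypes of one class."""
    feats: List[List[float]] = []
    for centroid, sigma, count in prototypes:
        feats.extend(diffuse(centroid, sigma, count, rng))
    return feats
```

The hybrid training set is then the reconstructed features of every old class concatenated with the real features of the new classes.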

4. Experiments and Results

This section validates MPFR’s effectiveness through comprehensive experiments. It first describes experimental settings (datasets, implementation details, evaluation metrics) to ensure reproducibility. Then it compares MPFR with state-of-the-art CIL methods on two public SAR datasets, analyzing performance advantages. Finally, ablation studies quantify the contribution of each core component, verifying the rationality of the proposed framework.

4.1. Datasets and Configurations

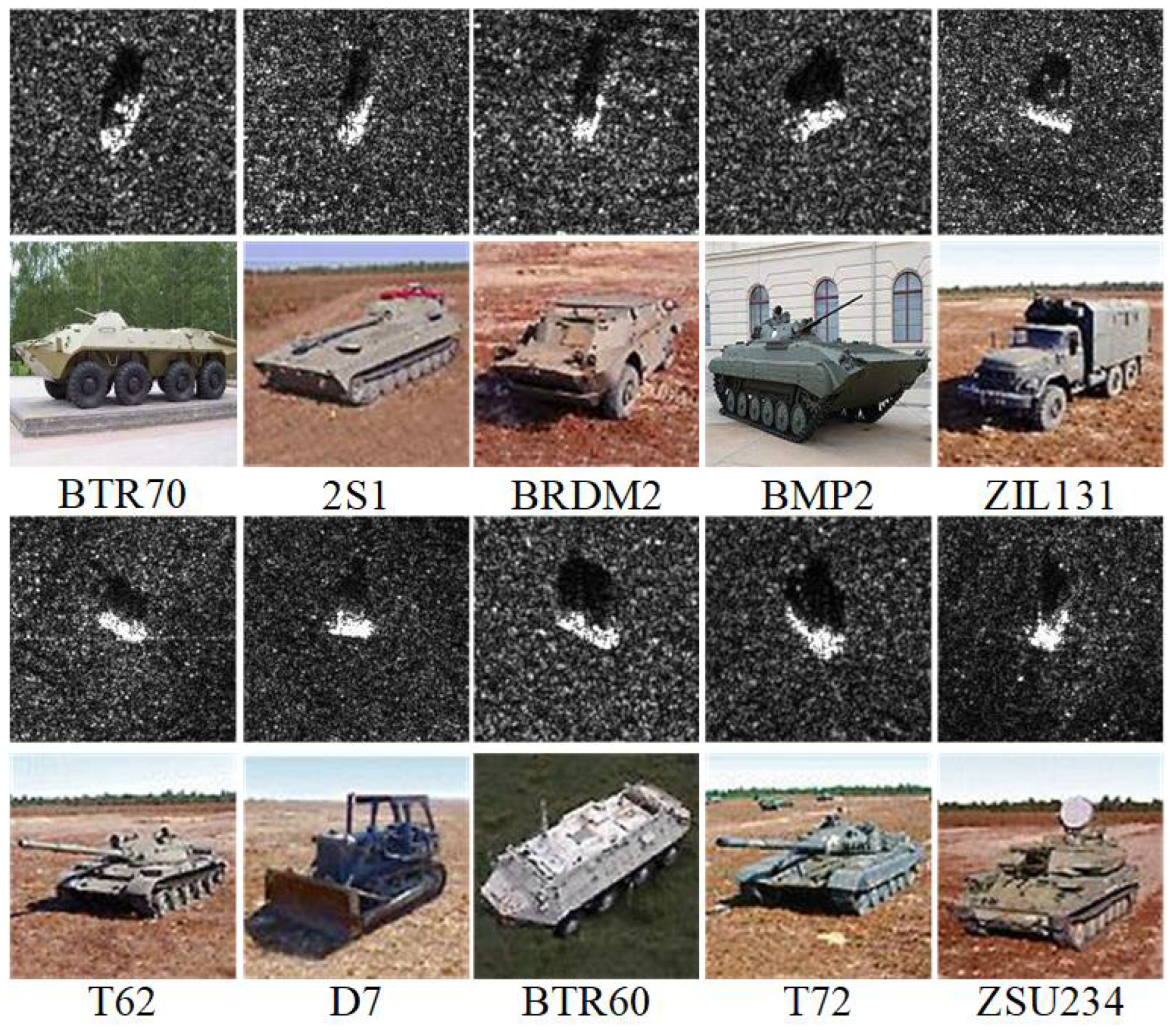

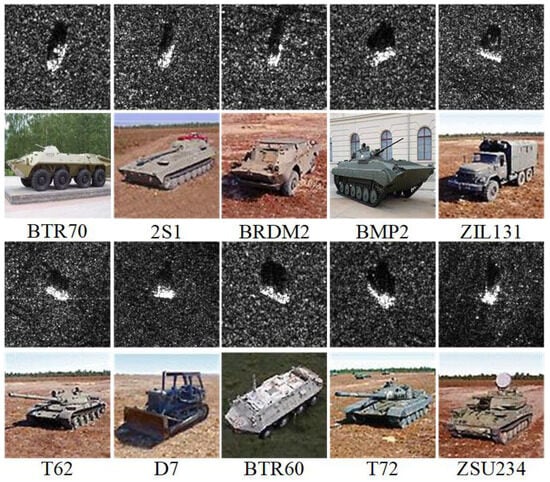

4.1.1. MSTAR Dataset

The Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset [21], developed by Sandia National Laboratories, is a widely recognized benchmark in the SAR target recognition field. Acquired in the X-band at a resolution of 0.3 m, it encompasses a diverse set of vehicles with variations in configuration, depression angle, and operating conditions, making it a standard for evaluating SAR ATR algorithms. For this study, we adopt the Standard Operating Condition (SOC) scenario with a cross-depression angle protocol: training samples are collected at a 17° depression angle, while testing samples are acquired at 15° to simulate differences between training and deployment conditions.

Three incremental learning scenarios with varying difficulty are designed:

- Setting A: 6 incremental phases, 1 new class per phase

- Setting B: 3 incremental phases, 2 new classes per phase

- Setting C: 2 incremental phases, 3 new classes per phase

Figure 6 illustrates the targets in both SAR and optical imagery. Table 1 details the dataset configuration, with 4 base classes and remaining classes distributed across incremental phases.
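The three MSTAR settings differ only in how the six non-base classes are grouped into phases. A minimal sketch of that split is given below. The integer class ids and their ordering are placeholders, and `split_classes` is a hypothetical helper rather than part of the released protocol.

```python
from typing import List


def split_classes(class_ids: List[int], num_base: int, per_phase: int) -> List[List[int]]:
    """Return [base classes, phase-1 classes, phase-2 classes, ...]."""
    base, rest = class_ids[:num_base], class_ids[num_base:]
    if len(rest) % per_phase:
        raise ValueError("incremental classes must divide evenly across phases")
    return [base] + [rest[i:i + per_phase] for i in range(0, len(rest), per_phase)]


# MSTAR: 10 classes in total, 4 of them in the base phase.
NEW_CLASSES_PER_PHASE = {"A": 1, "B": 2, "C": 3}
MSTAR_SPLITS = {
    name: split_classes(list(range(10)), num_base=4, per_phase=n)
    for name, n in NEW_CLASSES_PER_PHASE.items()
}
```

Each entry of `MSTAR_SPLITS` holds the base group followed by 6, 3, or 2 incremental groups for Settings A, B, and C, respectively.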

Figure 6.

MSTAR dataset SAR and optical imagery.

Table 1.

MSTAR dataset configurations for class-incremental learning.

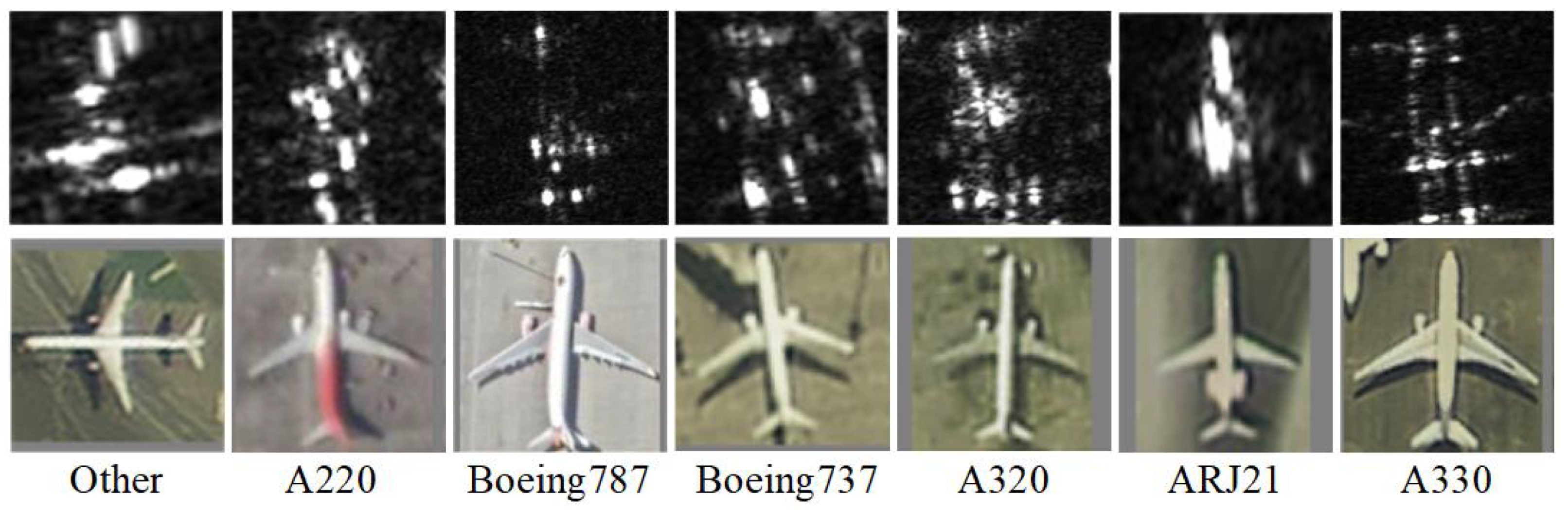

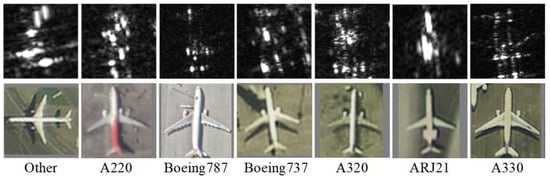

4.1.2. SAR-AIRcraft-1.0 Dataset

The SAR-AIRcraft-1.0 dataset [22], from the Aerospace Information Research Institute, Chinese Academy of Sciences, contains high-resolution SAR images of commercial aircraft captured by the GaoFen-3 satellite in spotlight mode. It includes seven aircraft categories: A220, A320, A330, ARJ21, Boeing737, Boeing787, and others. Challenges include complex background clutter and fine-grained inter-class variations.

A single incremental configuration is used for SAR-AIRcraft-1.0:

- Setting D: 3 incremental phases, 1 new class per phase

Figure 7 shows SAR and optical imagery of targets. Table 2 details the dataset configuration, with 4 base classes and 3 incrementally added classes.

Figure 7.

SAR-AIRcraft-1.0 Dataset SAR and optical imagery.

Table 2.

SAR-AIRcraft-1.0 dataset configuration for class-incremental learning.

4.2. Implementation Details

Our implementation follows a consistent protocol across all experiments. For the proposed MPFR method, we leverage both SAR amplitude images and complex-valued data when using the MSTAR dataset, while for SAR-AIRcraft-1.0, we utilize only SAR amplitude images due to data availability. All comparative methods employ a ResNet-18 backbone as the feature extractor with SAR amplitude images as input.

Input images are normalized to the range [0, 1] and resized to a fixed input size. All experiments are implemented using PyTorch (v1.12.1; Meta Platforms, Menlo Park, CA, USA) and executed on NVIDIA GeForce RTX 4090 GPUs (NVIDIA Corporation, Santa Clara, CA, USA). Models are trained for 100 epochs using the Adam optimizer with a batch size of 128. The initial learning rate is set to 0.001 and decayed following a cosine annealing schedule. For our MPFR method, the number of prototypes per class, m, is set based on empirical analysis.
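For reference, the cosine annealing schedule can be written in closed form. The sketch below assumes that the schedule spans all 100 epochs and decays to a minimum learning rate of zero. Neither detail is stated above, and the function name is hypothetical.

```python
import math


def cosine_annealed_lr(
    epoch: int,
    total_epochs: int = 100,
    base_lr: float = 1e-3,
    min_lr: float = 0.0,
) -> float:
    """Learning rate at a given epoch under cosine annealing."""
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

Under these assumptions the rate starts at 0.001, passes through half that value at epoch 50, and reaches the minimum at epoch 100.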

4.3. Evaluation Metrics

We employ two principal metrics to comprehensively evaluate class-incremental learning performance:

- Average Accuracy (A): The mean top-1 classification accuracy across all phases (the base phase and every incremental phase), providing an overall measure of learning capability:

  $$A = \frac{1}{T+1}\sum_{t=0}^{T} A_t$$

  where T denotes the total number of incremental phases and $A_t$ represents the accuracy at phase t, with t = 0 denoting the base phase.

- Forgetting Rate (FR): Quantifies the stability of knowledge retention by measuring performance degradation on base classes after completing all incremental phases:

  $$FR = A_0^{base} - A_T^{base}$$

  where $A_0^{base}$ indicates the accuracy on base classes after initial training, and $A_T^{base}$ denotes the corresponding accuracy after all T incremental phases.

Both metrics are computed over three independent runs, and we report mean values with standard deviations to indicate run-to-run variability.
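The two metrics and the run-level summary can be sketched in a few lines. Taking the forgetting rate as the plain difference between the initial and final base-class accuracies, and using the sample standard deviation across runs, are assumptions made for this sketch. The function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def average_accuracy(phase_accuracies: Sequence[float]) -> float:
    """Mean accuracy over the base phase and all incremental phases."""
    return sum(phase_accuracies) / len(phase_accuracies)


def forgetting_rate(base_acc_initial: float, base_acc_final: float) -> float:
    """Drop in base-class accuracy between initial training and the last phase."""
    return base_acc_initial - base_acc_final


def summarize_runs(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of a metric across independent runs."""
    return mean(values), stdev(values)
```

With T incremental phases, `phase_accuracies` holds T + 1 values, one for the base phase and one per incremental phase.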

4.4. Comparative Evaluation

We conduct extensive comparative experiments against several state-of-the-art class-incremental learning methods, including FeTrIL [17], WA [37], SimpleCIL [18], iCaRL [36], LwF [35], ADC [39], and MIM-CIL [42].

To ensure a fair comparison and isolate the contribution of the incremental learning strategies, all comparative methods are implemented using the identical ResNet-18 backbone network. Standardizing the feature extractor means that performance differences among them can be attributed to the incremental learning algorithms rather than to discrepancies in model capacity. All methods are evaluated on both MSTAR [21] and SAR-AIRcraft-1.0 [22] datasets under identical experimental conditions: the same input size, the same data augmentation, and identical optimizer settings.

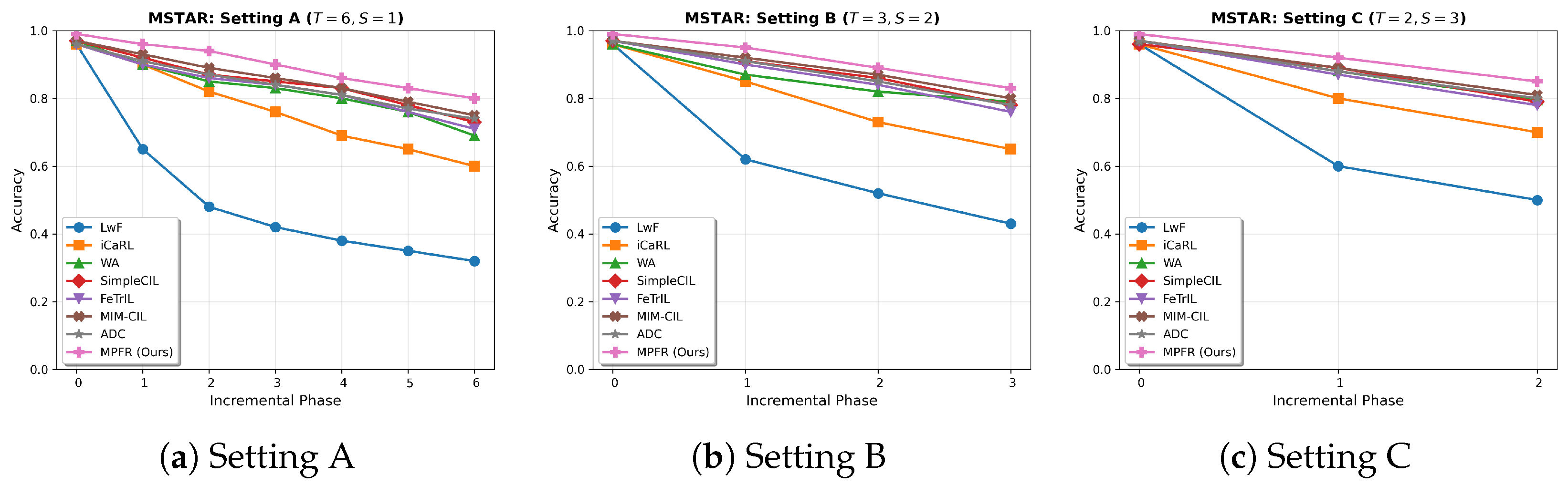

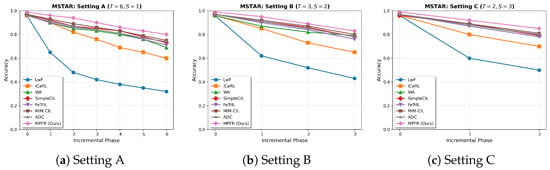

4.4.1. Results on MSTAR Dataset

Table 3 presents the comprehensive comparison on the MSTAR dataset across three incremental configurations.

Table 3.

Comparative results on MSTAR dataset under different incremental configurations.

The experimental results reveal that our MPFR method achieves the highest average accuracy across all settings (e.g., 89.7% in Setting A), as shown in Figure 8, representing a 2.2% absolute improvement over MIM-CIL and a 3.3% improvement over ADC. This performance advantage can be attributed to the fundamental differences in how these methods model the SAR feature space.

Figure 8.

Accuracy trends across incremental phases on MSTAR dataset: (a) Setting A, (b) Setting B, (c) Setting C. Our MPFR method demonstrates superior performance with minimal accuracy degradation.

Regarding SimpleCIL and FeTrIL, these methods typically rely on a single Gaussian prototype or mean vector to represent each class. As discussed in Section 3.4, SAR targets exhibit extreme aspect sensitivity, forming “disjointed blobs” in the feature space rather than a single compact cluster. Single-prototype methods average out these distinct modes, losing critical topological information. MPFR’s multi-center approach preserves these modes, leading to significantly lower forgetting rates (19.8% vs. 24.7% for SimpleCIL).

MIM-CIL effectively maximizes global mutual information between old and new tasks to reduce forgetting, but it focuses on statistical dependency rather than geometric structure. In scenarios where training and testing depression angles differ (MSTAR SOC, 17° vs. 15°), preserving the geometry of specific scattering topologies is crucial. MPFR explicitly reconstructs these geometric sub-structures via local clustering, providing a more fine-grained representation than MIM-CIL’s global optimization, which is consistent with the 2.2% accuracy gain.

Unlike ADC, which employs adversarial perturbations to estimate and compensate for feature drift, MPFR uses Gaussian diffusion based on actual stored centroids. While ADC’s generated “drifted” samples are based on a learned adversarial direction, they may not fully capture the complex, non-linear scattering variations of radar targets. In contrast, MPFR ensures that the reconstructed features remain faithful to the original multi-modal distribution of the target, resulting in superior stability.

4.4.2. Results on SAR-AIRcraft-1.0 Dataset

Table 4 shows the performance comparison on the more challenging SAR-AIRcraft-1.0 dataset.

Table 4.

Comparative results on SAR-AIRcraft-1.0 dataset (Setting D).

On this challenging dataset, our method achieves an average accuracy of 92.3%, outperforming the second-best method (MIM-CIL) by 2.2% and reducing the forgetting rate by 3.1%. The SAR-AIRcraft-1.0 dataset contains complex background clutter and fine-grained inter-class variations (e.g., distinguishing Boeing 737 from 787). Methods like ADC and MIM-CIL may struggle to disentangle the target features from background noise when enforcing global constraints. MPFR’s advantage here lies in its ability to cluster features that share similar semantic content (aircraft structure) while potentially isolating outlier clusters caused by background variations. By reconstructing the distribution around these clean, multi-modal centers, MPFR maintains high discriminability even for fine-grained categories, demonstrating that geometric reconstruction is a robust strategy for complex real-world SAR imagery.

4.4.3. Computational Efficiency Analysis

To evaluate the computational efficiency of the proposed method, we compared the training costs of MPFR against several existing approaches, including recent methods like ADC and MIM-CIL. To ensure a fair comparison, all methods were evaluated using the same ResNet-18 backbone on an NVIDIA RTX 4090 GPU. Table 5 details the average accuracy and training time per incremental phase on the MSTAR dataset under Setting A.

Table 5.

Comparison of computational efficiency and performance on MSTAR dataset (Setting A). Training time represents the average duration per incremental phase.

As shown in Table 5, regularization-based and replay-based methods (LwF, iCaRL, WA) incur higher computational overhead (2.6–3.5 min) due to gradient backpropagation. Similarly, ADC and MIM-CIL require significant computation (2.6 min and 2.9 min) for adversarial generation or mutual information optimization.

In contrast, our MPFR method achieves the highest accuracy (89.7%) with a training time of just 0.6 min. While SimpleCIL and FeTrIL are faster (0.2 min and 0.5 min), they suffer from notable performance drops compared to MPFR (e.g., −4.7% for SimpleCIL and −6.3% for FeTrIL). MPFR effectively balances the trade-off, offering superior performance with a highly efficient update speed suitable for real-time applications.

4.4.4. Cross-Dataset Step-Wise Incremental Learning

To robustly evaluate the generalizability of MPFR under severe domain shifts and continuous adaptation scenarios, we designed a rigorous Cross-Dataset Step-wise Incremental Learning experiment (Setting E). Unlike standard settings where the domain remains constant, this scenario requires the model—initially trained on ground targets (MSTAR)—to progressively learn aerial targets (SAR-AIRcraft-1.0) in multiple steps.

- Setting E Configuration (Ground-to-Air Step-wise Transfer):

  - Base Phase: All 10 classes from the MSTAR dataset (Ground Vehicles).

  - Incremental Phases (): The 7 classes from SAR-AIRcraft-1.0 are added sequentially over 3 phases to simulate a continuous stream of new aerial threats:

    - Phase 1: 3 classes (A220, A320, A330).

    - Phase 2: 2 classes (ARJ21, Boeing737).

    - Phase 3: 2 classes (Boeing787, Other).
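The Setting E schedule can be written down as a small configuration sketch. The aircraft class names and phase sizes are taken from the list above; the MSTAR class names are placeholders, since the ten labels are not enumerated in this section, and the helper names `build_schedule` and `cumulative_class_counts` are hypothetical.

```python
from itertools import accumulate

# Placeholder names: the ten MSTAR class labels are not listed in this section.
MSTAR_CLASSES = [f"mstar_class_{i}" for i in range(10)]

# Aircraft classes per incremental phase, as listed in the Setting E configuration.
INCREMENTAL_PHASES = [
    ["A220", "A320", "A330"],
    ["ARJ21", "Boeing737"],
    ["Boeing787", "Other"],
]


def build_schedule(base_classes, phases):
    """Return the classes introduced at each phase (phase 0 is the base phase)."""
    return [list(base_classes)] + [list(p) for p in phases]


def cumulative_class_counts(schedule):
    """Number of classes the classifier must separate after each phase."""
    return list(accumulate(len(phase) for phase in schedule))


schedule = build_schedule(MSTAR_CLASSES, INCREMENTAL_PHASES)
counts = cumulative_class_counts(schedule)
for phase_id, (new, total) in enumerate(zip(schedule, counts)):
    print(f"phase {phase_id}: +{len(new)} classes, {total} total")
```

After the final phase the classifier must separate 17 classes drawn from two different domains.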

This setting is particularly challenging because the feature extractor, frozen after learning ground vehicle features, must accommodate aircraft features that possess significantly different scattering structures and background clutter distributions.

Table 6 summarizes the performance. We report the Average Accuracy (A) across all phases (Base + 3 Incremental phases) and the Final Forgetting Rate (FR) of the base MSTAR classes after the final phase.
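The two reported metrics can be illustrated with a minimal sketch. It assumes that the Average Accuracy is the mean of the overall accuracies measured after each phase, and that the Final Forgetting Rate is the drop in base-class accuracy between the base phase and the final phase; the exact definitions used in the paper may differ. All numbers below are hypothetical and are not results from Table 6.

```python
def average_accuracy(phase_accuracies):
    """Mean of the overall accuracies measured after each phase."""
    return sum(phase_accuracies) / len(phase_accuracies)


def final_forgetting_rate(base_acc_initial, base_acc_final):
    """Accuracy drop on the base classes between the base and final phases."""
    return base_acc_initial - base_acc_final


# Hypothetical accuracies (%), for illustration only.
overall_acc = [98.0, 92.0, 88.0, 86.0]  # base phase + 3 incremental phases
a_bar = average_accuracy(overall_acc)
fr = final_forgetting_rate(base_acc_initial=98.0, base_acc_final=91.0)
print(f"A = {a_bar:.1f}%, FR = {fr:.1f}%")
```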

Table 6.

Comparative results of Cross-Dataset Step-wise Incremental Learning (Setting E). The model learns 10 MSTAR classes followed by 7 SAR-AIRcraft classes in 3 steps ().

As illustrated in Table 6, MPFR outperforms all comparative methods in this cross-domain continuous learning scenario. Standard replay-free methods like SimpleCIL and FeTrIL experience performance drops because their single-prototype representations struggle to adapt to the “alien” distribution of aircraft targets when the backbone is frozen on ground vehicle data. The domain shift causes the new aircraft features to be loosely distributed, which single centroids fail to capture accurately, leading to overlap with old classes.

In contrast, MPFR’s multi-center strategy flexibly models the dispersed scattering features of the new aerial targets as multiple sub-clusters. This capability allows the model to find “open spaces” in the embedding space for new classes without disrupting the decision boundaries of the existing MSTAR classes. The low forgetting rate (5.8%) confirms that MPFR effectively isolates the new domain knowledge from the old, making it highly suitable for real-world multi-domain recognition systems.
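The contrast between single-centroid and multi-center representations can be seen in a toy example. The sketch below is not the MPFR classifier: it is a plain nearest-prototype rule in a two-dimensional embedding, with hand-picked coordinates, where a new class has two modes lying on either side of an old class.

```python
import math


def nearest_class(feature, prototypes):
    """Return the label whose closest prototype is nearest to `feature`.

    `prototypes` maps a class label to a list of one or more centers.
    """
    best_label, best_dist = None, math.inf
    for label, centers in prototypes.items():
        for center in centers:
            dist = math.dist(feature, center)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label


# Toy 2-D embedding: the new class has two modes around an old class.
new_modes = [(-3.0, 0.0), (3.0, 0.0)]
old_center = (0.0, 0.5)

single = {"old": [old_center], "new": [(0.0, 0.0)]}  # mean of the two modes
multi = {"old": [old_center], "new": new_modes}      # one center per mode

query_old = (0.0, 0.2)  # a sample that belongs to the old class
print(nearest_class(query_old, single))  # the averaged centroid claims it
print(nearest_class(query_old, multi))   # the per-mode centers leave it alone
```

With a single centroid, the mean of the two new-class modes lands in the region occupied by the old class and captures its samples. Keeping one center per mode leaves that region untouched.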

4.5. Ablation Studies

To quantitatively assess the individual contributions of each proposed component and verify the robustness of our framework, we conduct comprehensive ablation studies on the MSTAR dataset using the Setting A () configuration.

4.5.1. Multi-Scale Hybrid Attention and Training Strategy

We first evaluate the effectiveness of the Multi-scale Hybrid Attention (MHA) feature fusion module and the joint supervision training strategy. Table 7 presents the comparative results of different feature fusion approaches and training strategies.

Table 7.

Ablation study on MHA feature fusion and training strategy.

The results demonstrate the progressive improvement achieved by each component. The integration of ASC features with SAR amplitude images provides a 3.2% accuracy improvement over using SAR data alone, confirming the value of complementary electromagnetic scattering characteristics. The MHA fusion mechanism further enhances performance by 2.2% compared to simple concatenation, highlighting the importance of multi-scale processing and adaptive feature weighting. Most significantly, the joint supervision strategy provides an additional 4.1% improvement, validating that the combination of supervised contrastive loss and additive angular margin loss effectively enhances feature discriminability and robustness for incremental learning.
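To make the margin component of the joint supervision concrete, the following sketch shows how an additive angular margin changes the logit of the target class. It covers only the margin term (the supervised contrastive term is omitted), and `scale` and `margin` are illustrative values, not the hyperparameters used in this paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def arcface_logits(feature, class_weights, target, scale=30.0, margin=0.5):
    """Scaled cosine logits with an angular margin added to the target class.

    `scale` and `margin` are illustrative defaults, not values from the paper.
    """
    logits = []
    for idx, w in enumerate(class_weights):
        cos_theta = max(-1.0, min(1.0, cosine(feature, w)))
        if idx == target:
            cos_theta = math.cos(math.acos(cos_theta) + margin)
        logits.append(scale * cos_theta)
    return logits


def cross_entropy(logits, target):
    """Softmax cross-entropy for a single sample (numerically stable)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


weights = [(1.0, 0.0), (0.0, 1.0)]  # toy class directions
feat = (0.9, 0.3)                   # toy feature belonging to class 0
plain = arcface_logits(feat, weights, target=0, margin=0.0)
margined = arcface_logits(feat, weights, target=0, margin=0.5)
print(cross_entropy(plain, 0), cross_entropy(margined, 0))
```

The margin lowers the target logit, so the same feature incurs a larger loss until it moves closer in angle to its class direction. This is the mechanism by which such a loss encourages compact, well-separated class regions.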

4.5.2. Multi-Center Prototype Strategy

We further investigate the effectiveness of the multi-center prototype strategy compared to single-center approaches, with particular attention to the impact of prototype quantity on performance and storage efficiency. Table 8 presents the quantitative results.

Table 8.

Ablation study on multi-center prototype strategy.

The multi-center prototype approach consistently outperforms the single-center baseline, with the configuration achieving optimal performance (89.7% average accuracy, 19.8% forgetting rate). This represents a substantial 5.8% improvement in accuracy and 15.8% reduction in forgetting rate compared to the single-prototype approach.

A single prototype forces the assumption of unimodal Gaussian distribution, which severely underfits the true feature manifold of SAR targets that exhibit significant aspect-dependent scattering. Our multi-center approach addresses this by approximating the complex distribution through a Gaussian Mixture Model. The performance improvement from to demonstrates that increasing prototype diversity effectively captures the underlying data structure. However, the slight performance degradation at suggests the onset of over-clustering, where the model begins to fit noise rather than meaningful semantic modes. The choice of represents an optimal balance between performance and storage efficiency.
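The idea of approximating a class with several centers and later reconstructing its features can be sketched as follows. This is a simplified illustration, not the MPFR implementation: it assumes plain k-means with a deterministic farthest-point initialization and a diagonal Gaussian around each center, whereas the paper's parameterized Gaussian diffusion may be defined differently. The function names and toy data are hypothetical.

```python
import math
import random


def kmeans(points, k, iters=20):
    """Lloyd's k-means with farthest-point initialization."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        )
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [
            min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points
        ]
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the previous center if a cluster empties
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, assign


def fit_prototypes(points, k):
    """Store each cluster's center, per-dimension std, and member count."""
    centers, assign = kmeans(points, k)
    protos = []
    for j, center in enumerate(centers):
        members = [p for p, a in zip(points, assign) if a == j]
        if not members:
            continue
        std = tuple(
            math.sqrt(sum((p[d] - center[d]) ** 2 for p in members) / len(members))
            for d in range(len(center))
        )
        protos.append((center, std, len(members)))
    return protos


def sample_pseudo_features(protos, n, seed=0):
    """Draw n features from the Gaussian mixture defined by the prototypes."""
    rng = random.Random(seed)
    weights = [count for _, _, count in protos]
    out = []
    for _ in range(n):
        center, std, _ = rng.choices(protos, weights=weights)[0]
        out.append(tuple(rng.gauss(c, s) for c, s in zip(center, std)))
    return out


# Toy class with two aspect-dependent modes (synthetic data).
rng = random.Random(1)
features = [(rng.gauss(-3, 0.3), rng.gauss(0, 0.3)) for _ in range(50)]
features += [(rng.gauss(3, 0.3), rng.gauss(0, 0.3)) for _ in range(50)]

protos = fit_prototypes(features, k=2)
pseudo = sample_pseudo_features(protos, 200)
print([tuple(round(c, 2) for c in center) for center, _, _ in protos])
```

Only the centers, spreads, and counts need to be stored per class, so old-class features can be regenerated in later phases without keeping raw images.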

4.5.3. Impact of Feature Extractor Backbone

To verify the generalization capability of the MPFR framework and ensure that its performance is not strictly dependent on the ResNet-18 architecture, we evaluated our method using various feature extractor backbones with different parameter scales. Specifically, we compared the lightweight ResNet-18 with deeper Convolutional Neural Networks (VGG-16 [48], ResNet-50 [49]) and Vision Transformer architectures (ViT-S/16 and ViT-B/16 [50]). For ViT models, we utilized weights pre-trained on ImageNet and fine-tuned them on the base classes to accommodate the SAR data scale. All experiments were conducted under the MSTAR Setting A () configuration.

Table 9 summarizes the performance and model size (number of parameters) across different architectures.

Table 9.

Performance comparison of MPFR using different feature extractor backbones (MSTAR Setting A).

The results indicate that the MPFR framework is architecture-agnostic and functions effectively across different types of backbones. Among the CNNs, ResNet-18 has the fewest parameters (11.7 M) yet achieves competitive accuracy (89.7%), outperforming the much larger VGG-16 (138.4 M). This justifies our choice of ResNet-18 as the default backbone for resource-constrained onboard SAR applications.

When comparing CNNs with Transformers of similar size, ViT-S/16 (22.1 M) outperforms ResNet-50 (25.6 M) by 0.4% in accuracy and reduces the forgetting rate by 0.4%. This suggests that the Transformer architecture provides highly discriminative feature representations for SAR targets, which effectively complements our multi-center prototype reconstruction. In terms of scalability, scaling up to ViT-B/16 (86.6 M) results in the highest average accuracy (91.2%). However, the marginal gain over ViT-S/16 (0.3%) comes at a cost of nearly four times the parameters (86.6 M vs. 22.1 M), highlighting ViT-S/16 as a highly efficient alternative for high-performance scenarios.
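The size trade-off between the two ViT variants can be checked with a few lines of arithmetic. The parameter counts and the 0.3-point gain are the figures quoted in the text; the per-parameter ratio is only a rough illustrative measure, not a metric reported in the paper.

```python
# Parameter counts in millions, as quoted in the text.
params_m = {
    "ResNet-18": 11.7,
    "ViT-S/16": 22.1,
    "ResNet-50": 25.6,
    "ViT-B/16": 86.6,
    "VGG-16": 138.4,
}

ratio = params_m["ViT-B/16"] / params_m["ViT-S/16"]
extra_params = params_m["ViT-B/16"] - params_m["ViT-S/16"]
gain = 0.3  # accuracy gain of ViT-B/16 over ViT-S/16, in percentage points

print(f"ViT-B/16 has {ratio:.2f}x the parameters of ViT-S/16")
print(f"{gain / extra_params * 10:.3f} points gained per extra 10 M parameters")
```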

Despite the superior performance of ViT architectures, we retained ResNet-18 as the default backbone in our main experiments to ensure a fair comparison with existing baselines (e.g., FeTrIL, SimpleCIL), which predominantly report results using ResNet-18.

5. Conclusions

In this paper, we proposed a novel Class-Incremental Learning method named Multi-center Prototype Feature Distribution Reconstruction (MPFR) to address the challenge of catastrophic forgetting in SAR ATR systems. Recognizing that standard single-prototype approaches fail to capture the complex, multi-modal feature manifolds caused by the aspect-sensitivity of SAR targets, MPFR introduces a robust mechanism to model and reconstruct these distributions without storing raw historical data.

Our method rests on two pillars: a high-performance feature extraction module and a distribution reconstruction mechanism. The Multi-scale Hybrid Attention network, optimized via a joint supervision strategy comprising Supervised Contrastive Loss and Additive Angular Margin Loss, successfully fuses SAR amplitude information with physically meaningful Attributed Scattering Centers. This ensures the learned embeddings are both compact and discriminative. Furthermore, the Multi-center Prototype strategy approximates the feature space of old classes using multiple cluster centroids and Gaussian diffusion. This allows the classifier to retain knowledge of the topological structure of historical data while adapting to new categories.

Extensive experiments on the MSTAR and SAR-AIRcraft-1.0 datasets validated the superiority of MPFR. It consistently outperformed state-of-the-art methods, including recent SAR-specific CIL approaches and general CIL frameworks, achieving up to 92.3% average accuracy with minimal forgetting. Ablation studies further confirmed that multi-center modeling is critical for handling the high intra-class variance inherent in radar imagery.

Future work will focus on three key directions. First, to address the challenge of hyperparameter selection noted in the ablation studies, we plan to investigate adaptive clustering mechanisms to automatically determine the optimal number of centroids per class based on the complexity of their feature distributions. Second, building on the promising results from the cross-dataset experiments, we aim to further enhance the model’s robustness against severe domain shifts and speckle noise, exploring its applicability in more complex cross-sensor incremental learning scenarios to support multi-platform cooperative reconnaissance. Finally, we will extend the framework to Open-Set Recognition (OSR) settings, enabling the system to not only incrementally learn new classes but also effectively detect and reject unknown targets in dynamic operational environments.

Author Contributions

Conceptualization, K.Z.; Methodology, K.Z.; Validation, Z.K.; Investigation, B.W.; Data curation, L.Z.; Project administration, P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Publicly available datasets were analyzed in this study. The MSTAR dataset can be found in reference [21]. The SAR-AIRcraft-1.0 dataset is openly available in the Journal of Radars repository at https://radars.ac.cn/web/data/getData?dataType=SAR-Airport (accessed on 29 September 2025) or in reference [22].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wiley, C.A. Synthetic Aperture Radars. IEEE Trans. Aerosp. Electron. Syst. 1985, AES-21, 440–443.

- Xu, G.; Zhang, B.; Yu, H.; Chen, J.; Xing, M.; Hong, W. Sparse Synthetic Aperture Radar Imaging From Compressed Sensing and Machine Learning: Theories, applications, and trends. IEEE Geosci. Remote Sens. Mag. 2022, 10, 32–69.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Chen, H.; He, M.; Yang, Z.; Gan, L. MCEM: Multi-Cue Fusion with Clutter Invariant Learning for Real-Time SAR Ship Detection. Sensors 2025, 25, 5736.

- Zheng, J.; Li, M.; Li, X.; Zhang, P.; Wu, Y. Revisiting Local and Global Descriptor-Based Metric Network for Few-Shot SAR Target Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

- Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional Neural Network With Data Augmentation for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368.

- Feng, S.; Ji, K.; Zhang, L.; Ma, X.; Kuang, G. SAR Target Classification Based on Integration of ASC Parts Model and Deep Learning Algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10213–10225.

- Shi, Y.; Du, L.; Li, C.; Guo, Y.; Du, Y. Unsupervised domain adaptation for SAR target classification based on domain- and class-level alignment: From simulated to real data. ISPRS J. Photogramm. Remote Sens. 2024, 207, 1–13.

- Gu, Y.; Tao, J.; Feng, L.; Wang, H. Using VGG16 to Military Target Classification on MSTAR Dataset. In Proceedings of the 2021 2nd China International SAR Symposium (CISS), Shanghai, China, 3–5 November 2021; pp. 1–3.

- Pan, Q.; Liao, K.; He, X.; Bu, Z.; Huang, J. A Class-Incremental Learning Method for SAR Images Based on Self-Sustainment Guidance Representation. Remote Sens. 2023, 15, 2631.

- Zhang, Y.; Xing, M.; Zhang, J.; Vitale, S. SCF-CIL: A Multi-Stage Regularization-Based SAR Class-Incremental Learning Method Fused with Electromagnetic Scattering Features. Remote Sens. 2025, 17, 1586.

- Zhou, D.-W.; Sun, H.-L.; Ning, J.; Ye, H.-J.; Zhan, D.-C. Continual learning with pre-trained models: A survey. In Proceedings of the IJCAI, Jeju, Republic of Korea, 3–9 August 2024; pp. 8363–8371.

- Zhou, D.-W.; Wang, F.-Y.; Ye, H.-J.; Zhan, D.-C. PyCIL: A Python toolbox for class-incremental learning. Sci. China Inf. Sci. 2023, 66, 197101.

- Zhou, D.-W.; Wang, Q.-W.; Qi, Z.-H.; Ye, H.-J.; Zhan, D.-C.; Liu, Z. Class-Incremental Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9851–9873.

- Zhu, F.; Zhang, X.-Y.; Wang, C.; Yin, F.; Liu, C.-L. Prototype Augmentation and Self-Supervision for Incremental Learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 5867–5876.

- Zhu, F.; Cheng, Z.; Zhang, X.-Y.; Liu, C.-L. Class-incremental learning via dual augmentation. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–14 December 2021; pp. 1–12.

- Petit, G.; Popescu, A.; Schindler, H.; Picard, D.; Delezoide, B. FeTrIL: Feature Translation for Exemplar-free Class-incremental Learning. arXiv 2023, arXiv:2211.13131.

- Zhou, D.-W.; Cai, Z.-W.; Ye, H.-J.; Zhan, D.-C.; Liu, Z. Revisiting Class-incremental Learning with Pre-trained Models: Generalizability and Adaptivity are All You Need. Int. J. Comput. Vis. 2025, 133, 1012–1032.

- Goswami, D.; Liu, Y.; Twardowski, B.; van de Weijer, J. FeCAM: Exploiting the heterogeneity of class distributions in exemplar-free continual learning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; pp. 6582–6595.

- Datcu, M.; Huang, Z.; Anghel, A.; Zhao, J.; Cacoveanu, R. Explainable, Physics-Aware, Trustworthy Artificial Intelligence: A paradigm shift for synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2023, 11, 8–25.

- Diemunsch, J.R.; Wissinger, J. Moving and stationary target acquisition and recognition (MSTAR) model-based automatic target recognition: Search technology for a robust ATR. In Algorithms for Synthetic Aperture Radar Imagery III; SPIE: Bellingham, WA, USA, 1998; Volume 2757, pp. 228–242.

- Wang, Z.; Kang, Y.; Zeng, X.; Wang, Y.; Zhang, T.; Sun, X. SAR-AIRcraft-1.0: High-resolution SAR aircraft detection and recognition dataset. J. Radars 2023, 12, 906–922.

- Clemente, C.; Pallotta, L.; Gaglione, D.; De Maio, A.; Soraghan, J.J. Automatic target recognition of military vehicles with Krawtchouk moments. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 493–500.

- Akbarizadeh, G. A new statistical-based kurtosis wavelet energy feature for texture recognition of SAR images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4358–4368.

- Raeisi, A.; Akbarizadeh, G.; Mahmoudi, A. Combined method of an efficient cuckoo search algorithm and nonnegative matrix factorization of different Zernike moment features for discrimination between oil spills and lookalikes in SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4193–4205.

- Ding, B.; Wen, G.; Zhong, J.; Ma, C.; Yang, X. A robust similarity measure for attributed scattering center sets with application to SAR ATR. Neurocomputing 2017, 219, 130–143.

- Cao, R.; Sui, J. A Dynamic Multi-Scale Feature Fusion Network for Enhanced SAR Ship Detection. Sensors 2025, 25, 5194.

- Wei, D.; Du, Y.; Du, L.; Li, L. Target detection network for SAR images based on semi-supervised learning and attention mechanism. Remote Sens. 2021, 13, 2686.

- Patnaik, N.; Raj, R.; Misra, I.; Kumar, V. Demystifying SAR with attention. Expert Syst. Appl. 2025, 276, 127182.

- Feng, S.; Ji, K.; Wang, F.; Zhang, L.; Ma, X.; Kuang, G. PAN: Part attention network integrating electromagnetic characteristics for interpretable SAR vehicle target recognition. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–17.

- Li, W.; Yang, W.; Hou, Y.; Liu, L.; Liu, Y.; Li, X. SARATR-X: Toward Building a Foundation Model for SAR Target Recognition. IEEE Trans. Image Process. 2025, 34, 869–884.

- Zhao, C.; Wang, D.; Zhang, S.; Kuang, G. Multi-Level Structured Scattering Feature Fusion Network for Limited Sample SAR Target Recognition. Remote Sens. 2025, 17, 3186.

- Hui, X.; Liu, Z.; Wang, L.; Zhang, Z.; Yao, S. Bidirectional Interaction Fusion Network Based on EC-Maps and SAR Images for SAR Target Recognition. IEEE Trans. Instrum. Meas. 2025, 74, 1–13.

- Huang, Z.; Wu, C.; Yao, X.; Zhao, Z.; Huang, X.; Han, J. Physics inspired hybrid attention for SAR target recognition. ISPRS J. Photogramm. Remote Sens. 2024, 207, 164–174.

- Li, Z.; Hoiem, D. Learning without Forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

- Rebuffi, S.-A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental Classifier and Representation Learning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

- Zhao, B.; Xiao, X.; Gan, G.; Zhang, B.; Xia, S.-T. Maintaining Discrimination and Fairness in Class Incremental Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13208–13217.

- Douillard, A.; Cord, M.; Ollion, C.; Robert, T.; Valle, E. Podnet: Pooled outputs distillation for small-tasks incremental learning. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 86–102.

- Goswami, D.; Soutif-Cormerais, A.; Liu, Y.; Kamath, S.; Twardowski, B.; Van de Weijer, J. Resurrecting Old Classes with New Data for Exemplar-Free Continual Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 23335–23345.

- Yu, X.; Dong, F.; Ren, H.; Zhang, C.; Zou, L.; Zhou, Y. Multilevel adaptive knowledge distillation network for incremental SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Karantaidis, G.; Pantsios, A.; Kompatsiaris, I.; Papadopoulos, S. IncSAR: A dual fusion incremental learning framework for SAR target recognition. IEEE Access 2025, 13, 12358–12372.

- Zhang, L.; Xu, T.; Chen, J. SAR Incremental Automatic Target Recognition Based on Mutual Information Maximization. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Luan, J.; Huang, X.; Ding, J. Efficient Attributed Scattering Center Extraction Using Gradient-Based Optimization for SAR Image. IEEE Antennas Wirel. Propag. Lett. 2025, 24, 3634–3638.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. arXiv 2021, arXiv:2004.11362.

- Deng, J.; Guo, J.; Yang, J.; Xue, N.; Kotsia, I.; Zafeiriou, S. ArcFace: Additive Angular Margin Loss for Deep Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5962–5979.

- Liu, M.; Jiang, X.; Kot, A.C. A Multi-prototype Clustering Algorithm. Pattern Recognit. 2009, 42, 689–698.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 4 May 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.