Highlights

What are the main findings?

- Both LDA and SHAP consistently selected overlapping key biomechanical features, confirming high agreement in feature relevance.

- Angular features achieved higher classification effectiveness (F1 = 0.89), whereas distance-based features provided greater stability and resistance to calibration inaccuracies.

What are the implications of the main findings?

- The identified angular and distance-based features provide a starting point for developing more advanced models, which may achieve better performance when combined with more complex algorithms and larger datasets.

- Reliable assessment of lateral lunges in VR can be achieved with a simplified sensor set: headset plus sensors on feet and shanks.

Abstract

Virtual reality (VR) technologies are increasingly applied in rehabilitation, offering interactive physical and spatial exercises. A major challenge remains the objective assessment of movement quality, known as human motion quality assessment (HMQA). This study aimed to identify biomechanical features differentiating correct and incorrect execution of a lateral lunge and to determine the minimal number of sensors required for reliable VR-based motion analysis, prioritising interpretability. Thirty-two healthy adults (mean age: 26.4 ± 8.5 years) performed 211 repetitions recorded with the HTC Vive Tracker system (7 sensors + headset). Repetitions were classified by a physiotherapist using video observation and predefined criteria. The analysis included joint angles, angular velocities and accelerations, and Euclidean distances between 28 sensor pairs, evaluated with Linear Discriminant Analysis (LDA) and SHapley Additive exPlanations (SHAP). Angular features achieved higher LDA performance (F1 = 0.89) than distance-based features (F1 = 0.78), whereas the distance-based features proved more stable and less sensitive to calibration errors. Comparison of SHAP and LDA showed high agreement in identifying key features, including hip flexion, knee rotation acceleration, and spatial relations between the headset and the foot or shank sensors. The findings indicate that simplified sensor configurations may provide reliable diagnostic information, highlighting opportunities for interpretable VR-based rehabilitation systems in home and clinical settings.

1. Introduction

The rapid development and growing availability of virtual reality (VR) technology mean that it is being applied increasingly across a wide range of scientific disciplines. Helou et al. provide a comprehensive overview of VR applications in medicine [1], highlighting areas such as rehabilitation, public health training, clinical training for professionals, and medical image visualisation. VR supports rehabilitation by serving as a multifaceted tool that offers interactive physical and spatial exercises. Examples include its use in the rehabilitation of neurological patients [2,3] and orthopaedic patients [4,5]. VR can also be used to analyse the movements of athletes or patients, enabling researchers to better understand the mechanics of movement sequences and to develop personalised training sequences that enhance performance or support rehabilitation [6,7,8].

The most commonly used VR systems consist of base stations, goggles (headsets), and handheld controllers. These kits enable the mapping of the user’s body and objects within a virtual environment, while also providing natural and intuitive interaction with its elements. The basic equipment can be complemented by HTC Vive Tracker sensors, which operate with headsets and controllers in a VR environment, but can also function independently as a standalone motion-tracking system. These sensors enable the recording of quantitative data describing performed motor tasks, such as joint angles and body-segment velocities and positions [5,6,7]. This VR accessory-based tracking system provides a cost-effective solution for recording motion in six degrees of freedom (6DOF) within a global coordinate system, allowing for the measurement of both the position and orientation of objects. Numerous studies highlight the potential of this technology for analysing the movements of VR users, demonstrating the high precision of the data obtained and the repeatability of results comparable to those of advanced optoelectronic systems [9,10,11,12].

As part of the tracking system, the proprietary eMotion application (Wrocław, Poland) was developed as a platform for the acquisition and analysis of kinematic data. The programme enables the recording and processing of motion data from VR devices, as well as the calculation of lower-limb joint angles using two implemented measurement protocols: Simplified6DOF, which allows rapid measurements without anatomical calibration, and ISB6DOF, which complies with the guidelines of the International Society of Biomechanics and requires calibration. A detailed description of the application is provided by Żuk et al. [9].

In parallel with the tracking system, a VR motion-based game was developed to support the rehabilitation and training process. This objective is achieved through engaging VR gameplay, during which users are encouraged to perform planned movement sequences; points are earned by hitting or avoiding virtual objects, whether stationary or moving. An example of its application is the use of the system to conduct exercise sessions for orthopaedic patients [4].

However, designing the game mechanics and creating a motion-tracking system are not sufficient to achieve the broader goal of assessing the quality of the repetitions performed, as users can collect points in various ways rather than through a single, strictly planned movement pattern.

Human Motion Quality Assessment (HMQA) refers to the objective evaluation of the quality and efficiency of human movements during physical activity, training, or rehabilitation. Its purpose is both to monitor the correctness of performed motor tasks and to identify potential errors or irregularities in movement. HMQA can be understood in a broad context, ranging from comparisons of movement repeatability with expert reference patterns to assessments of patients’ movements against normative data or those of healthy individuals [13,14]. In practice, motion analysis involves the measurement of kinematic parameters (joint angles, body-segment trajectories, velocities, and accelerations), spatiotemporal parameters (e.g., for gait: speed, cadence, stride length and width, gait-phase duration, symmetry, and variability), and selected structural and functional indicators (e.g., inter-joint distances, segment rotation) [15,16,17,18,19,20,21]. Movement quality assessment can be performed using rules, templates, or statistical methods, allowing for the classification of movements, the identification of errors, and the monitoring of exercise repeatability. HMQA can also be considered a specific form of human activity recognition (HAR), in which movement classification is based on subtle differences in the performance of motor tasks [22,23].

Human motion analysis commonly relies on basic preprocessing procedures, such as filtering, segmentation, and normalisation [9,24]. The main analysis typically employs classical techniques, including signal transforms (FFT, STFT, Wavelet) [25,26,27,28], Dynamic Time Warping (DTW) [29,30], Empirical Mode Decomposition (EMD) [31], Kalman filters [32,33], and probabilistic and statistical models, including Hidden Markov Models (HMM) [34,35,36], Dynamic Bayesian Networks [37,38], Gaussian Mixture Models (GMM) [39,40], and ARMA [41] and ARIMA [42] time-series models. Contemporary approaches also include machine-learning and deep-learning algorithms such as SVM [43,44], KNN [43,44,45], PCA [46,47], LDA [48], multiple regression [49], and cluster analysis [50] as well as ANN [51,52], LSTM [53,54], BiLSTM [55,56], CNN, CNN-LSTM, FCN [57,58,59,60], ResNet [61], and transformer-based models [62,63].

The number of possible methods, models, and parameters is vast, and many advanced algorithms remain a ‘black box’ in practice, making it difficult to interpret the results and determine which features are responsible for correct classification and why. One solution is to use SHapley Additive exPlanations (SHAP), which provide a better understanding of the contribution of individual features to the decisions made by the classification model [64].

The aim of this study is to identify the key biomechanical features that distinguish between correct and incorrect performance of a selected physical exercise, and to determine the minimum number of sensors required for reliable analysis of VR users’ movement data. The priority was not to maximise classification accuracy, but to employ methods that ensure high interpretability of the results.

2. Materials and Methods

2.1. Measurement Setup and Procedure

Thirty-two healthy, injury-free adults (13 women and 19 men) were included in the study (mean age: 26.4 ± 8.5 years; mean height: 176 ± 9.1 cm; mean mass: 69.8 ± 10.6 kg). The study was approved by the local Ethics Committee, and all participants provided written informed consent.

Seven HTC Vive Trackers (versions 2.0 and 3.0) and an HTC Vive head-mounted display were used to record lower-limb joint kinematics, together with two Lighthouse 2.0 base stations from the HTC Vive Pro Full Kit (HTC, New Taipei City, Taiwan), placed 5.7 m apart. All sensors were connected to a ROG Strix G15 laptop (Asus, Taipei, Taiwan) using an 8-port powered USB 3.0 hub (iTec, Ostrava, Czech Republic).

Participants were prepared for data collection by attaching Vive Trackers to their lower limbs using Vortex attachment straps. The sensors were placed at the following locations: the right side of the pelvis, the lateral aspects of the thighs, the distal lateral shanks, and the dorsal aspects of the feet. In addition, participants wore the VR headset for tracking purposes only, without visual occlusion, and held the controllers in their hands.

Measurements were performed using the acquisition module of the proprietary eMotion software (version 2.8), which collects data from Vive Trackers and the HTC Vive VR system, as described in detail by Żuk et al. [9]. The study used the Simplified6DOF protocol, in which joint angles are defined as angles between trackers; precise sensor placement along the axes of the body segments is therefore crucial for measurement accuracy. The protocol assumes that the axes of the body segments coincide with the axes of the trackers. The foot tracker was the only exception: its exact position was not critical, because a correction procedure set the ankle joint angle to 0° in an upright standing position with parallel feet. Data were recorded during the exercises and subsequently processed in the eMotion software to calculate joint angles. During data acquisition with the Vive Trackers, video recordings were captured simultaneously using a Galaxy S20 FE smartphone (Samsung Electronics, Suwon, South Korea) at a resolution of 1920 × 1080 pixels and 30 frames per second.

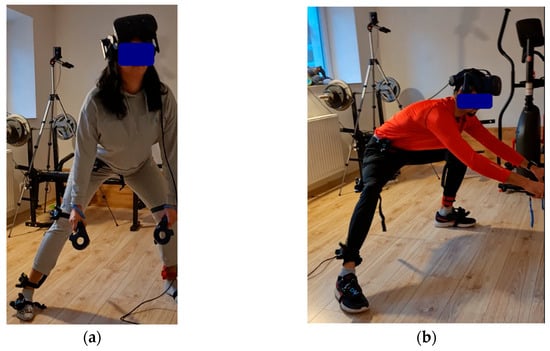

After recording a static standing position, participants received instructions and performed a side lunge, completing three repetitions on each side. If additional repetitions were performed, all of them were included in the analysis. In three cases, the measurement was repeated twice. A total of 211 lunge repetitions were recorded, of which 110 were classified as correct and 100 as incorrect. A physiotherapist assessed each repetition and assigned it to a category according to predefined criteria, based on observation and analysis of the video recordings. The successive phases of correct execution, along with examples of common errors, are shown in Figure 1 and Figure 2.

Figure 1.

Correct execution of the lateral lunge exercise.

Figure 2.

Incorrect execution of the lateral lunge: (a) absence of pelvic lowering; (b) flexion of both lower limbs accompanied by a hunched posture.

The starting position for the exercise involved standing with the feet parallel and no more than hip-width apart, with the torso upright and the pelvis in a neutral position. The leading leg then moved laterally in a straight line, without rotation or forward displacement, while the knee remained aligned above the ankle as the centre of gravity was lowered. The hip was lowered with a simultaneous backward movement of the pelvis. During this movement, the supporting leg remained straight or slightly flexed, with the foot firmly grounded. The torso leaned slightly forward while maintaining the natural spinal curvature, without slouching, and the pelvis remained symmetrical without rotation. The range of motion resulted from hip and knee flexion and was smooth and within physiological limits. The return to the starting position was achieved by dynamically pushing off with the leading leg, while maintaining balance and active control of the torso.

Repetitions that failed to meet the above criteria were considered incorrect. The most common errors observed included excessive forward displacement of the leading knee during lowering, simultaneous flexion of both lower limbs instead of only the leading leg, failure to clearly lower the centre of gravity, and performing the movement without fully returning to the starting position (defined as placing the feet hip-width apart and keeping the torso upright).

2.2. Preprocessing

Data analysis was performed in the MATLAB environment (R2026b). Data were prepared in several stages, including the processing of sensor trajectories and joint angles. After manual segmentation into individual repetitions, the signals were low-pass filtered using a Butterworth filter with a 6 Hz cut-off frequency, time-normalised to 101 frames, and corrected for offsets introduced by the eMotion angle calculation algorithms. The offset correction involved calculating the mean of the first five frames and shifting the signal by 180° if the absolute value of this mean exceeded 165°. Sign corrections were also applied to selected hip, knee, and ankle rotations to account for differences between coordinate systems, in accordance with the system developers’ recommendations for standardising the directions of rotation [9]. The limbs were treated functionally (leading vs. supporting leg).
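The time normalisation and the 180° offset rule described above can be sketched in NumPy as follows. This is a minimal illustration, not the MATLAB code used in the study. Two points are assumptions: normalisation is done by linear interpolation, and the 180° shift is applied towards zero (subtracting 180° when the initial mean is positive, adding it when negative), since the text does not state the sign. The sketch also assumes NaN-free input.

```python
import numpy as np

def time_normalise(signal, n_frames=101):
    """Linearly resample a 1-D signal onto n_frames points (0-100% of the repetition).

    Assumes the signal contains no NaN values.
    """
    signal = np.asarray(signal, dtype=float)
    old_t = np.linspace(0.0, 1.0, signal.size)
    new_t = np.linspace(0.0, 1.0, n_frames)
    return np.interp(new_t, old_t, signal)

def correct_180_offset(angle, n_first=5, threshold=165.0):
    """Shift an angle curve by 180 deg if the mean of its first frames exceeds the threshold.

    Assumption: the shift moves the curve towards zero, i.e. 180 deg is
    subtracted for a positive initial mean and added for a negative one.
    """
    angle = np.asarray(angle, dtype=float)
    start_mean = np.nanmean(angle[:n_first])
    if abs(start_mean) > threshold:
        return angle - np.sign(start_mean) * 180.0
    return angle
```

The 6 Hz Butterworth filtering step is omitted here; in Python it could be implemented with `scipy.signal.butter` and `scipy.signal.filtfilt`, although the filter order used in the study is not reported.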

During this stage, the quality of the raw sensor trajectories was checked, and unnatural signal jumps or losses were identified. The affected fragments were marked as NaN in both the trajectories and the corresponding angles; this avoided discarding entire observations and preserved as many samples as possible. At this stage, the following signals were identified as defective: 65 from the hip sensor, 20 from the right thigh, 12 from the right foot, 8 from the left thigh, 11 from the left shank, and 7 from the left foot. A visual inspection of the angular waveforms was then performed, and any further anomalies were marked as NaN in the angular data only. The analysis also identified artefacts arising from the limitations of representing rotation with Euler angles (including gimbal lock). Among other effects, these artefacts produced flattened knee flexion waveforms accompanied by unnatural variability in the abduction angle; such data were also rejected. The final results of the angular data cleaning process are summarised in Table 1.
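The study does not report the criterion used to detect unnatural jumps, so the following is only a hypothetical sketch of how such fragments could be flagged automatically: frames reached by an implausibly large frame-to-frame displacement are set to NaN, and the returned mask could then be applied to the corresponding joint angles. The threshold `max_step` is an assumption chosen for illustration.

```python
import numpy as np

def mark_jumps_as_nan(traj, max_step=0.05):
    """Set frames that follow an implausible frame-to-frame jump to NaN.

    traj: (n_frames, 3) array of tracker positions in metres.
    max_step: hypothetical per-frame displacement threshold in metres.
    Returns the cleaned copy and a boolean mask of rejected frames.
    """
    traj = np.asarray(traj, dtype=float).copy()
    step = np.linalg.norm(np.diff(traj, axis=0), axis=1)
    bad = np.zeros(len(traj), dtype=bool)
    # Flag the frame at the end of each oversized step; a single spike
    # therefore flags two frames (the spike and the frame after it).
    bad[1:] = step > max_step
    traj[bad] = np.nan
    return traj, bad
```

Marking samples rather than deleting repetitions matches the strategy described above: downstream statistics can simply ignore NaN values.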

Table 1.

The final results of the angular data cleaning process.

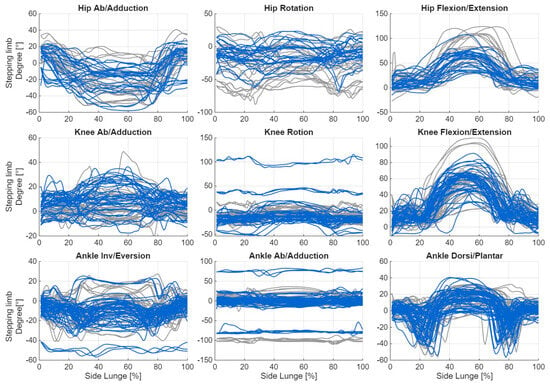

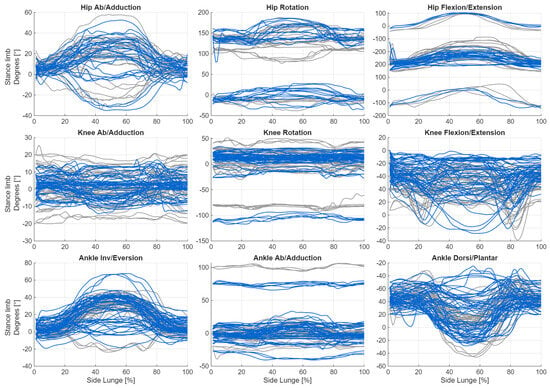

Figure 3 and Figure 4 show the changes in joint angles during the lateral lunge performed on the right side, plotted separately for consecutive repetitions. Despite the applied correction, visible offsets remain in the data. The directions of the curves are also not always consistent (e.g., abduction/adduction of the hip joint in the supporting leg). The next step was therefore to compute angular velocities and accelerations, which, as derivatives of the signal, are independent of constant offsets; a reversed curve direction changes only their sign, not their magnitude. These derived measures can therefore provide valuable information about the movement strategy even when the direction of rotation is uncertain.
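The offset argument can be checked numerically with a short sketch using central differences (`np.gradient`). The sampling rate `fs` is an assumption: the text does not say whether derivatives were taken on the raw recordings or on the 101-frame normalised signals (in the latter case the step would be one frame).

```python
import numpy as np

def angular_derivatives(angle_deg, fs):
    """Angular velocity (deg/s) and acceleration (deg/s^2) by central differences.

    fs: sampling rate in Hz (assumed; not specified in the text).
    """
    dt = 1.0 / fs
    velocity = np.gradient(np.asarray(angle_deg, dtype=float), dt)
    acceleration = np.gradient(velocity, dt)
    return velocity, acceleration

# A constant offset drops out of the derivatives, while a flipped
# direction only reverses their sign.
t = np.linspace(0.0, 1.0, 91)
angle = 30.0 * np.sin(2 * np.pi * t)
v_ref, a_ref = angular_derivatives(angle, fs=90)
v_off, a_off = angular_derivatives(angle + 180.0, fs=90)
v_flip, _ = angular_derivatives(-angle, fs=90)
```

Because a sign flip swaps the minimum and maximum, descriptors such as ROM or standard deviation of the derivatives are unaffected by it, whereas the mean, minimum, and maximum are not.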

Figure 3.

Plot of changes in joint angle values during the execution of the lateral lunge for the stepping limb, shown separately for consecutive repetitions (blue: incorrect, grey: correct).

Figure 4.

Plot of changes in joint angle values during the execution of the lateral lunge for the stance limb, shown separately for consecutive repetitions (blue: incorrect, grey: correct).

Since the trajectories themselves depend on the coordinate system as well as on individual anthropometric characteristics, it was decided to analyse the distances between the sensors. These were calculated as the Euclidean distances between all possible pairs of sensors at each time instant, according to the formula:

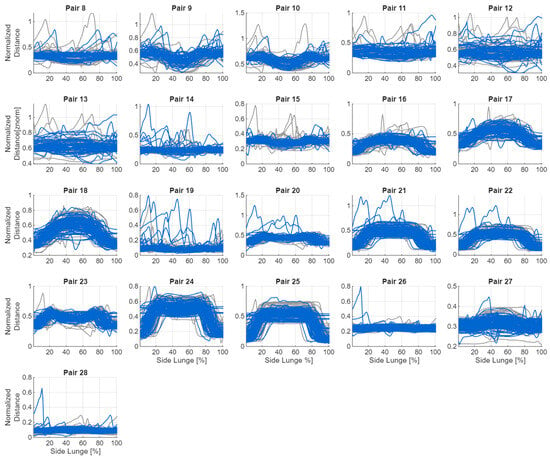

If the coordinates of either sensor contained a NaN value, a missing value was assigned to the corresponding sensor pair and frame. To limit the impact of anthropometric differences, all distances were normalised relative to a reference distance: the distance between the goggles and the foot of the leading limb in the first frame. When the coordinates of this foot contained NaN, the distance between the goggles and the supporting foot was used instead. All spatial values were then divided by this reference distance to obtain a comparable scale across participants. All 28 possible pairs of the eight sensors (the seven lower-limb trackers and the goggles) were analysed (Table 2). Hand trajectories were excluded because the participants did not receive clear instructions on upper-limb positioning. Figure 5 and Figure 6 show the changes in distance between the sensors during the exercise.
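The distance computation and the normalisation with a fallback reference can be sketched as below. This is an illustrative NumPy version, not the study's MATLAB implementation; the sensor indices passed as `ref_pair` and `fallback_pair` are hypothetical and depend on how the sensors are ordered.

```python
import itertools
import numpy as np

def normalised_pair_distances(pos, ref_pair, fallback_pair):
    """Euclidean distances between all sensor pairs, scaled by a reference distance.

    pos: (n_sensors, n_frames, 3) positions; NaN marks rejected samples.
    ref_pair: (headset, leading foot) indices; fallback_pair: (headset,
    supporting foot) indices. Both index pairs are hypothetical.
    Returns the list of index pairs and an (n_pairs, n_frames) array.
    """
    pos = np.asarray(pos, dtype=float)
    pairs = list(itertools.combinations(range(pos.shape[0]), 2))
    # A NaN coordinate in either sensor makes that frame's distance NaN.
    dist = np.stack([np.linalg.norm(pos[i] - pos[j], axis=-1) for i, j in pairs])
    # Reference: headset to leading foot in the first frame, else supporting foot.
    ref = np.linalg.norm(pos[ref_pair[0], 0] - pos[ref_pair[1], 0])
    if np.isnan(ref):
        ref = np.linalg.norm(pos[fallback_pair[0], 0] - pos[fallback_pair[1], 0])
    return pairs, dist / ref
```

With eight sensors, `itertools.combinations` yields exactly the 28 pairs mentioned above.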

Table 2.

Sensor pairs and their assigned identification numbers.

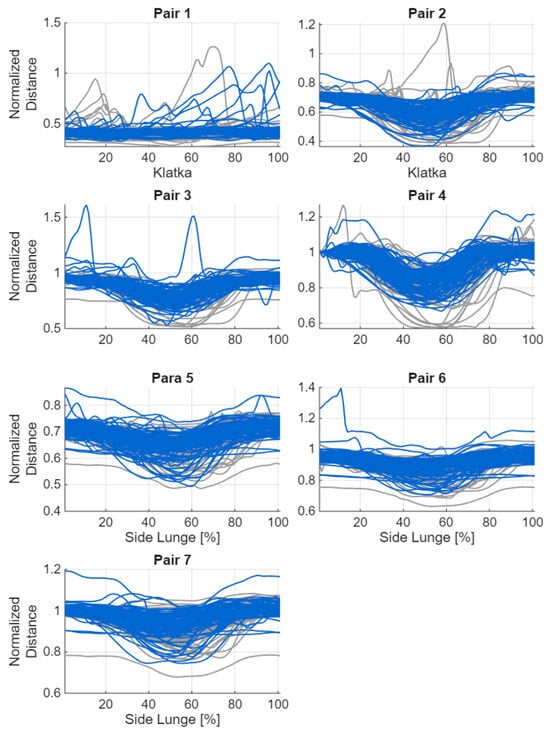

Figure 5.

Changes in distances between sensor pairs without goggles during the exercise (blue: incorrect, grey: correct).

Figure 6.

Changes in distances between sensor pairs with goggles during the exercise (blue: incorrect, grey: correct).

The procedure described above ensured consistent, orderly, and standardised datasets covering joint angles with their corresponding angular velocities and accelerations, as well as the distances between the sensors used during the measurements. Each repetition was also labelled with its correctness (‘correct’/‘incorrect’), the side on which the exercise was performed (‘right’/‘left’), and a ‘subjectID’ to identify repetitions performed by the same participant. The data prepared in this way provided the foundation for the subsequent analysis.

2.3. Statistical Analysis

Because the angular signals were frequently shifted along the vertical axis (i.e., offset by a constant), only offset-insensitive measures were calculated for the angles, namely standard deviation, range of motion (ROM), skewness, and kurtosis. For angular velocities and accelerations, a more complete set of statistics was computed, including the mean, median, minimum, maximum, standard deviation, quartiles, coefficient of variation (CV), ROM, skewness, and kurtosis. The same set of measures was calculated for the distances between sensors. In total, 72 angular features, 200 velocity and acceleration features, and 224 distance-based features were obtained.
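The four offset-insensitive angle descriptors can be sketched as follows. The estimator choices are assumptions: the standard deviation uses N − 1, and skewness and kurtosis use biased moment estimators with non-excess kurtosis (3 for a normal distribution), mirroring MATLAB's defaults; NaN samples are dropped first.

```python
import numpy as np

def offset_insensitive_features(x):
    """SD, ROM, skewness and kurtosis of one angle curve, ignoring NaN samples.

    Assumed estimators: SD with N - 1; biased (population) moments for
    skewness and kurtosis; kurtosis is not excess (normal = 3).
    """
    x = np.asarray(x, dtype=float)
    x = x[~np.isnan(x)]
    d = x - x.mean()
    m2, m3, m4 = (d**2).mean(), (d**3).mean(), (d**4).mean()
    return {
        "sd": x.std(ddof=1),
        "rom": x.max() - x.min(),
        "skewness": m3 / m2**1.5,
        "kurtosis": m4 / m2**2,
    }
```

All four are computed from deviations about the mean (or from the max–min difference), so adding a constant offset to the curve leaves them unchanged.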

The analysis was conducted at the level of individual repetitions, comparing correct and incorrect performances. The normality of each distribution was assessed using the Shapiro–Wilk test, after which either Student’s t-test or the Wilcoxon–Mann–Whitney test was applied, depending on the outcome. For each feature, the p-value and effect size (Cohen’s d) were calculated. In parallel, a Spearman correlation analysis was performed, and redundant features (|r| > 0.98) were removed.
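Cohen's d and the Spearman-based redundancy filter can be sketched as below. Spearman's r is computed as the Pearson correlation of average ranks. The greedy rule (keep a feature unless it correlates above the threshold with one already kept, in list order) is an assumption, since the text does not say which member of a redundant pair was removed. The sketch assumes NaN-free, non-constant feature columns.

```python
import numpy as np

def cohens_d(a, b):
    """Cohen's d using the pooled standard deviation of the two groups."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = a.size, b.size
    pooled = np.sqrt(((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2))
    return (a.mean() - b.mean()) / pooled

def average_ranks(x):
    """Ranks 1..n, with tied values sharing their average rank."""
    x = np.asarray(x, float)
    ranks = np.empty(x.size)
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, x.size + 1)
    for v in np.unique(x):
        tie = x == v
        ranks[tie] = ranks[tie].mean()
    return ranks

def drop_redundant(X, names, threshold=0.98):
    """Keep features whose |Spearman r| with every already-kept feature is <= threshold.

    X: (n_repetitions, n_features), assumed NaN-free with non-constant columns.
    """
    R = np.column_stack([average_ranks(col) for col in X.T])
    rho = np.corrcoef(R, rowvar=False)
    kept = []
    for j in range(X.shape[1]):
        if all(abs(rho[j, k]) <= threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]
```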

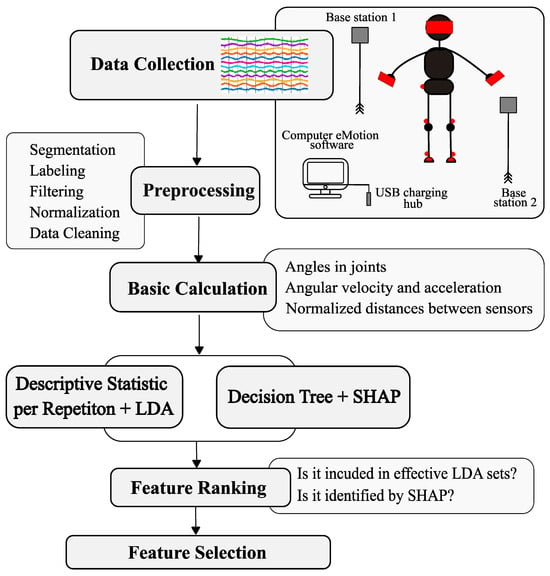

In addition, the classification potential of each feature was assessed using the area under the curve (AUC) of the receiver operating characteristic (ROC), identifying variables that simultaneously met the following criteria: AUC ≥ 0.55, Cohen’s d ≥ 0.5, and p < 0.1. This method identified seven distance features and 117 angular features. Due to the small number of distance features meeting the AUC criterion, feature sets selected on the basis of significance and correlation tests (48 features) were used for further analysis. The final set consisted of statistical parameters derived from angular and distance signals (e.g., ROM, deviations, quartiles, and distribution shape measures), which served as input data for the LDA classification model. Figure 7 illustrates the workflow of the study.
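The single-feature AUC and the three-part screen can be sketched as follows. The AUC is computed as the probability that a randomly chosen incorrect repetition scores higher than a correct one (the Mann–Whitney formulation), with ties counted as one half. Two details are assumptions not stated in the text: the screen treats AUC as direction-free (max(AUC, 1 − AUC)), and it compares the absolute value of Cohen's d.

```python
import numpy as np

def auc_rank(scores_pos, scores_neg):
    """ROC AUC as P(positive score > negative score), ties counted as 1/2."""
    pos = np.asarray(scores_pos, float)[:, None]
    neg = np.asarray(scores_neg, float)[None, :]
    return (pos > neg).mean() + 0.5 * (pos == neg).mean()

def passes_screen(auc, d, p, auc_min=0.55, d_min=0.5, p_max=0.1):
    """AUC >= 0.55, Cohen's d >= 0.5 and p < 0.1, as in the text.

    Assumptions: AUC is made direction-free and |d| is used.
    """
    return max(auc, 1 - auc) >= auc_min and abs(d) >= d_min and p < p_max
```

Taking max(AUC, 1 − AUC) lets a feature that is systematically lower in incorrect repetitions pass the screen as well; if the original criterion was one-sided, that line would simply use `auc` directly.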

Figure 7.

Workflow of the analysis procedure applied in the study. Red: schematic marking of sensors during measurements.

2.4. Linear Discriminant Analysis (LDA)

To determine which movement parameters—both individual features and combinations thereof—best distinguished between correct and incorrect performances, classical linear discriminant analysis was applied (Statistics and Machine Learning Toolbox; fitcdiscr function with equal a priori class probabilities: ‘Prior’, ‘uniform’). This method identifies a linear boundary between groups based on the feature distributions, assuming a common covariance matrix [65].
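The decision rule of two-class LDA with a shared covariance matrix and equal priors can be written in a few lines of NumPy. This sketch only illustrates the method; it is not MATLAB's `fitcdiscr`. With uniform priors the log-prior terms cancel, leaving a linear discriminant w·x + b with w = S⁻¹(μ₁ − μ₀) and b = −½ w·(μ₀ + μ₁), where S is the pooled within-class covariance. Label 1 is assumed to denote an incorrect repetition.

```python
import numpy as np

class TwoClassLDA:
    """Linear discriminant with a pooled covariance and equal class priors."""

    def fit(self, X, y):
        X, y = np.asarray(X, float), np.asarray(y)
        X0, X1 = X[y == 0], X[y == 1]
        mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
        # Pooled within-class covariance (shared by both classes).
        S = ((X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)) / (len(X) - 2)
        self.w = np.linalg.solve(S, mu1 - mu0)
        # Equal priors: the boundary passes through the midpoint of the means.
        self.b = -0.5 * self.w @ (mu0 + mu1)
        return self

    def decision_function(self, X):
        return np.asarray(X, float) @ self.w + self.b

    def predict(self, X):
        return (self.decision_function(X) > 0).astype(int)
```

The weight vector `w` also offers a direct, interpretable view of how each standardised feature shifts the decision, which fits the study's emphasis on interpretability.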

To prevent information leakage between the training and test sets, the data were split according to participant ID so that repetitions from the same individual never appeared in both sets simultaneously. Each experiment was repeated twenty times, with participants randomly assigned to five validation folds per iteration. In each iteration, the training data were standardised using a Z-score, while the test data were rescaled using the mean and standard deviation parameters calculated from the training set. This approach ensured standardisation of the feature scale while maintaining the isolation of the test data. The results were then averaged, allowing assessment of both the effectiveness and repeatability of the models. The significance of the results was verified using a simplified permutation test (50 permutations) without group validation.
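Participant-grouped folds with training-only standardisation can be sketched as below. Whole participants are shuffled and split into five folds, so no participant contributes repetitions to both training and test data. Repeating the procedure twenty times would correspond to looping over twenty seeds. The seeding scheme is an assumption made for reproducibility.

```python
import numpy as np

def grouped_folds(subject_ids, n_folds=5, seed=0):
    """Randomly assign whole participants to folds; yields (train_idx, test_idx)."""
    subject_ids = np.asarray(subject_ids)
    rng = np.random.default_rng(seed)
    subjects = rng.permutation(np.unique(subject_ids))
    for fold_subjects in np.array_split(subjects, n_folds):
        test = np.isin(subject_ids, fold_subjects)
        yield np.flatnonzero(~test), np.flatnonzero(test)

def zscore_train_test(X_train, X_test):
    """Standardise both sets with the mean and SD of the training fold only."""
    mu = np.nanmean(X_train, axis=0)
    sd = np.nanstd(X_train, axis=0, ddof=1)
    return (X_train - mu) / sd, (X_test - mu) / sd
```

Usage: `for it in range(20): for tr, te in grouped_folds(ids, seed=it): ...`, where each fold would fit the classifier on standardised `X[tr]` and evaluate it on `X[te]`.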

The features used were statistical parameters computed for individual repetitions (e.g., range of motion of a given joint, standard deviation, median, or kurtosis), with each feature representing a single numerical value characterising the course of the movement.

The analysis compared sets of features of varying sizes: single features, pairs, triples, and sets of four (the latter were obtained by adding one feature to the ten best sets from the previous step). The number of features was limited to a maximum of four in accordance with recommendations for the stability of linear classifiers, which state that the number of samples in each class should be at least 5–10 times greater than the number of parameters [66]. This condition was satisfied in both classes.
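The construction of the four-element sets can be sketched as follows: the ten best three-element sets are each extended by every feature not already in them, and duplicates arising from different triples are kept once. The score used for ranking triples (for example, the mean F1 for the incorrect class) is an assumption for illustration.

```python
def extend_top_sets(scored_triples, all_features, top_k=10):
    """Build four-element sets by adding one feature to each of the top_k triples.

    scored_triples: list of (frozenset_of_3_features, score) pairs; the score
    is assumed to be a quality metric such as mean F1 (higher is better).
    Returns a set of frozensets, so duplicate quadruples appear once.
    """
    best = sorted(scored_triples, key=lambda item: item[1], reverse=True)[:top_k]
    quads = set()
    for triple, _ in best:
        for f in all_features:
            if f not in triple:
                quads.add(frozenset(triple | {f}))
    return quads
```

Limiting the search to extensions of the best triples keeps the number of four-element models manageable, and with at least 100 repetitions per class, four features still leave 25 samples per parameter, within the 5–10 guideline cited above.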

The effectiveness of the models was assessed using four metrics: accuracy, the F1-score for the incorrect class, AUC, and the standard deviation of F1 (Std F1). Together, these metrics allowed the accuracy, stability, and reliability of the classification to be evaluated simultaneously. Particular emphasis was placed on the F1-score for the incorrect class, which combines precision and sensitivity (recall): in the context of the designed application, it is especially important to detect incorrect performances correctly, as these are the most relevant for the safety of training or rehabilitation. Table 3 presents the best combinations of distance-based features, and Table 4 the best combinations of angular features, highlighting the biomechanical parameters that most effectively distinguished between correct and incorrect performances.
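F1 for the incorrect class treats "incorrect" as the positive label, so a model is rewarded for catching incorrect repetitions (recall) without raising too many false alarms (precision). A minimal sketch, with the label strings as an assumption:

```python
def f1_for_class(y_true, y_pred, positive="incorrect"):
    """F1 = 2PR / (P + R), treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

Std F1 would then be the standard deviation of this value across the repeated cross-validation runs (e.g. `statistics.stdev` over the twenty per-run scores).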

Table 3.

Comparison of distance-based sensor sets: the three best overall, the two top-performing two-element and three-element sets, and, for illustration, the weakest three-element combination. Stability assessed using Std_F1 thresholds (<0.015 very stable; 0.015–0.025 acceptable; >0.025 increased variability).

Table 4.

Best four-element set for angular data, with top two- and three-element sets included for comparison. Stability assessed using Std_F1 thresholds (<0.015 very stable; 0.015–0.025 acceptable variability; >0.025 increased variability). 1: Stepping limb; 2: Stance limb.

2.5. SHapley Additive exPlanations (SHAP)

The classification analysis considered all calculated statistical features—both those selected in previous significance tests and other descriptive parameters. The features were grouped into blocks, which in practice represented an equal division of the full feature list according to their order. This approach facilitated the organisation of the data and the interpretation of the impact of individual feature groups on the model, without omitting any features. The detailed composition of the blocks is not presented; however, each block included a complete set of features for all signal types.
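Dividing the ordered feature list into near-equal contiguous blocks can be sketched as below. The number of blocks is not reported, so `n_blocks` is a hypothetical parameter; when the list does not divide evenly, the first blocks receive one extra feature.

```python
def split_into_blocks(items, n_blocks):
    """Split an ordered list into n_blocks contiguous blocks of near-equal size."""
    size, extra = divmod(len(items), n_blocks)
    blocks, start = [], 0
    for i in range(n_blocks):
        # The first `extra` blocks take one additional item.
        stop = start + size + (1 if i < extra else 0)
        blocks.append(items[start:stop])
        start = stop
    return blocks
```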

The analysis was performed in the MATLAB environment (Statistics and Machine Learning Toolbox). For each block, twenty cross-validation iterations were conducted using a 5-fold scheme in two variants: with grouping by participants and with random assignment. The training data were standardised separately in each iteration using Z-scores, while the test data were scaled using parameters derived from the training set. A single decision tree (fitctree) was used for classification. The impact of individual features was assessed based on Shapley values, calculated using the shapley function, with the global importance of each feature defined as the average absolute value of its Shapley coefficients. When Shapley values were unavailable, the predictorImportance measure was used as an alternative. The analysis was performed only for blocks that met the effectiveness criteria (presented in Table 5), and feature rankings were generated for these blocks (examples are listed in Table 6).
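Given a matrix of Shapley values (one row per test observation, one column per feature), the global importance described above is the mean absolute value per column. The sketch below assumes the values have already been computed for the "incorrect" class and pooled across test folds; how they were pooled in the study is not stated.

```python
import numpy as np

def global_shap_importance(shap_values, feature_names):
    """Rank features by mean |Shapley value| across observations.

    shap_values: (n_observations, n_features) array, assumed to be pooled
    from the test folds for the 'incorrect' class.
    """
    importance = np.mean(np.abs(np.asarray(shap_values, float)), axis=0)
    order = np.argsort(importance)[::-1]
    return [(feature_names[j], float(importance[j])) for j in order]
```

Taking absolute values before averaging prevents positive and negative contributions from cancelling, so a feature that pushes predictions strongly in both directions is still ranked as important. Features with zero importance can then be discarded, as in the selection described below.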

Table 5.

Criteria used in a decision tree to select a set of features for subsequent stages of analysis.

Table 6.

Results of metrics for sample blocks based on a decision tree with random validation applied to angular features.

Selected features from the blocks were combined into a new data matrix, on which cross-validation was performed again and SHAP values were calculated, generating a ranking of features according to their average impact. In the next iteration, 50% of the highest-ranked features were retained to assess the effect of feature reduction on model performance. For angular data, only one block met the criteria in grouped validation (acc = 0.60; F1 = 0.61), with half of its features advancing to subsequent stages. In random validation, four blocks were fully included and ten blocks partially included in the subsequent stages. However, the metrics were highly variable.

In the case of angular features, because the results deteriorated after combining selected feature sets and recalculating the metrics, only the features from the best-rated random-validation blocks and from the best grouped-validation block were retained for further analysis, provided that their weights were greater than zero. This procedure resulted in a total of 42 features. A summary of selected parameters is provided in Table 7. Notably, the selected angular features mainly relate to the hip joint of the supporting leg, whereas for velocity and acceleration, features associated with the hip and knee joints of the leading leg predominate.

Table 7.

Angular feature selection based on a decision tree with SHAP values (1: stepping limb, 2: stance limb).

For the distance data, the same feature selection procedure was applied as for the angular data. In the second stage, a six-step SHAP-based selection was carried out, with the number of analysed features reduced by half at each step. In the case of random validation, the best results were achieved using an eleven-element feature set (details are provided in Table 8). However, a larger 41-element set was ultimately selected for constructing the rankings, as it provided both good performance and the highest stability, and its size was comparable to the angular feature set. For grouped validation, none of the blocks met the criteria required for inclusion in the subsequent stages of analysis.
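The step-wise halving can be sketched as a loop that re-ranks the current features and keeps the top half. The ranking callback stands in for re-fitting the tree and recomputing mean |SHAP| at each step; it is hypothetical, as is the choice to round odd counts up.

```python
def successive_halving(features, rank_fn, n_steps):
    """Keep the top half (rounded up) of the features at each step.

    rank_fn(features) must return the features ordered by importance, e.g.
    by mean |SHAP| after re-fitting the model (hypothetical callback).
    Returns the feature set evaluated at each of the n_steps steps.
    """
    current = list(features)
    history = [current]
    for _ in range(n_steps - 1):
        ranked = rank_fn(current)
        current = ranked[: (len(ranked) + 1) // 2]
        history.append(current)
    return history
```

Each set in `history` would then be evaluated by cross-validation, so that performance and stability can be compared across set sizes, as was done when choosing between the eleven- and 41-element sets.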

Table 8.

Metric results for sample blocks based on a decision tree with random validation applied for distance features.

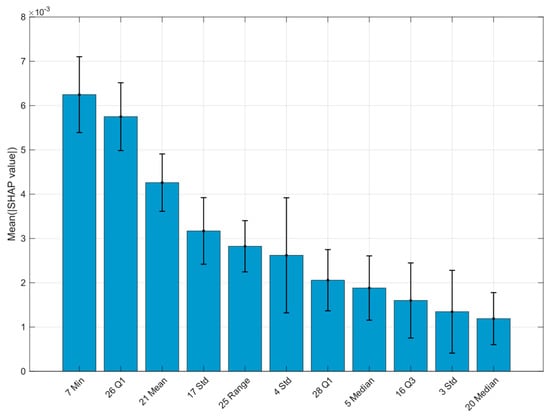

As an illustration of the approach used, a SHAP bar chart (feature importance plot) is presented for the block of distance features with the highest classification effectiveness, showing the global importance of the individual parameters (Figure 8). No analogous visualisations are provided for the remaining blocks, as they do not contribute any additional key information within the adopted framework.

Figure 8.

SHAP-based ranking of distance features. Pair numbers and statistical descriptors are shown on the x-axis.

2.6. Feature Selection

The next stage involved a ranking analysis of the features selected using two independent approaches, with the aim of identifying biomechanical variables consistently regarded as significant. Features selected by SHAP for all data types (angles, velocities, accelerations, distances) and those highlighted by LDA were considered, but only from effective sets (F1 ≥ 0.70, AUC ≥ 0.75). These sets were divided into individual features, and the frequency of occurrence of each feature was counted. The analysis then focused on features that recurred most often in the most significant sets. Features that recurred multiple times and had the greatest impact on model performance were considered the most important, allowing the relative importance of individual variables to be compared and the key parameters for classification to be identified.
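The counting step can be sketched with `collections.Counter`: sets are first filtered by the effectiveness thresholds, then each feature is counted once per effective set. The input format, one `(features, f1, auc)` tuple per evaluated set, is an assumption.

```python
from collections import Counter

def feature_frequency(model_sets, f1_min=0.70, auc_min=0.75):
    """Count how often each feature occurs in effective sets.

    model_sets: iterable of (features, f1, auc) tuples, one per evaluated set.
    Returns the number of effective sets and a Counter of feature occurrences.
    """
    effective = [feats for feats, f1, auc in model_sets if f1 >= f1_min and auc >= auc_min]
    counts = Counter(f for feats in effective for f in set(feats))
    return len(effective), counts
```

Dividing each count by the number of effective sets gives the share of effective sets containing that feature, and intersecting `set(counts)` with the SHAP-selected features yields the overlap between the two approaches.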

3. Results

In the angular-data analysis, 4412 of the 13,440 LDA sets met the predefined criteria. These sets contained 115 unique features, 17 of which overlapped with features selected using SHAP. Of these 115 features, 15 described joint-angle values (Table 9).

Table 9.

Feature selection results for joint-angle data using LDA and SHAP. 1: Stepping limb; 2: Stance limb.

The features appearing most frequently in the effective LDA sets were also selected by SHAP. All joint-angle value features identified by both LDA and SHAP corresponded to hip flexion of the supporting leg. The effective LDA sets included 52 features for angular velocities and 48 features for angular accelerations. These findings suggest that movement dynamics are an important indicator. They also indicate that correcting for offsets when computing first- and second-order derivatives from angle values may be important.

Table 10 summarises the angular features that occur most frequently in the effective LDA sets, as well as those identified by SHAP.

Table 10.

Selected features combining joint angles, accelerations, and velocities using decision tree and SHAP analysis. 1: Stepping limb; 2: Stance limb.

In the case of distance data and LDA, 36 of the 3926 evaluated sets met the predefined effectiveness thresholds. These were constructed from 14 unique features tested in multiple combinations, including three that overlapped with features identified by SHAP. These three highlighted features correspond to the following sensor pairs:

Goggles—foot of the leading leg (100%)

Shank of the leading leg—shank of the supporting leg (11.1%)

Shank of the leading leg—foot of the leading leg (8.3%)

Table 11 summarises the results for the distance features.

Table 11.

Selected distance-based features identified using decision tree and SHAP analysis.

In addition, several features recurred frequently in the effective LDA sets even though they were not identified in the SHAP analysis:

Standard deviation of the distance between the goggles and the thigh of the leading leg.

Median distance between the shank of the leading leg and the foot of the supporting leg.

Standard deviation of the distance between the shank of the leading leg and the foot of the supporting leg.

Minimum angular acceleration of the hip joint of the supporting leg in rotation and in flexion/extension.

The analysis showed that key diagnostic information can be obtained primarily from the goggles and sensors placed on the feet and shanks for distance analysis, and from the hip and knee joint angles for angular data.
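The distance features above can be sketched as follows. The device labels are an assumption: one tracker on the hip and one on each thigh, shank and foot plus the headset, which is consistent with 7 trackers and 28 sensor pairs, but left/right labels are used instead of leading/supporting. The set of summary statistics (median, standard deviation, minimum, maximum) is likewise illustrative.

```python
import itertools

import numpy as np

# Hypothetical device labels: headset + 7 trackers -> C(8, 2) = 28 pairs
DEVICES = ["headset", "hip", "thigh_L", "thigh_R",
           "shank_L", "shank_R", "foot_L", "foot_R"]


def distance_features(positions):
    """Summarise the per-frame Euclidean distance for every device pair.

    positions: dict mapping device name -> (T, 3) array of positions (metres).
    Returns dict mapping (device_a, device_b) -> dict of summary statistics.
    """
    feats = {}
    for a, b in itertools.combinations(DEVICES, 2):
        d = np.linalg.norm(positions[a] - positions[b], axis=1)  # shape (T,)
        feats[(a, b)] = {
            "median": float(np.median(d)),
            "sd": float(np.std(d, ddof=1)),
            "min": float(d.min()),
            "max": float(d.max()),
        }
    return feats


# Usage with synthetic trajectories
rng = np.random.default_rng(0)
T = 100
positions = {dev: rng.normal(size=(T, 3)) for dev in DEVICES}
positions["headset"] = np.tile([0.0, 0.0, 1.7], (T, 1))  # stationary head
positions["foot_R"] = np.zeros((T, 3))                    # stationary foot
feats = distance_features(positions)
print(len(feats), feats[("headset", "foot_R")])
```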

4. Discussion

To achieve the study objective, the side lunge was selected as the motor task due to its widespread use in functional training and lower-limb rehabilitation [67]. This exercise involves a complex, multi-joint movement in an open kinematic chain, producing rich and diverse biomechanical signals. An additional advantage is its asymmetrical nature, which allows assessment of both the supporting and stepping limbs, as well as analysis of how sensor placement affects data quality. Consequently, the side lunge is a sufficiently challenging yet controlled task, enabling reliable evaluation of both the significance of individual movement characteristics and sensor configurations in VR conditions.

The variety of available approaches in human motion analysis reflects the complexity of the field and makes it challenging to select appropriate methods for implementation and interpretation in practical systems, such as VR applications supporting rehabilitation. This paper therefore focuses on identifying the key biomechanical features that distinguish between correct and incorrect lunge execution and on determining the minimal number of sensors required for reliable analysis of VR users’ movement data. This is a first step toward developing simple yet effective methods for assessing movement in a VR environment. To this end, simple descriptive statistical parameters were analysed, and methods with high interpretability were applied, namely LDA and a decision tree model interpreted using SHAP.
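For readers less familiar with LDA, a two-class version with a pooled covariance matrix, together with the F1 score used as an effectiveness criterion, can be sketched in NumPy. This is an illustrative assumption rather than the study's implementation: class priors are taken from training frequencies, the data are synthetic, and predictions are made on the training data only for brevity (a real evaluation would use held-out data).

```python
import numpy as np


def fit_lda(X, y):
    """Two-class LDA with a pooled (shared) covariance matrix.

    Returns (w, b) such that class 1 is predicted when X @ w + b > 0.
    """
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    n0, n1 = len(X0), len(X1)
    # Pooled within-class covariance: the equal-covariance assumption of LDA
    S0 = np.atleast_2d(np.cov(X0, rowvar=False))
    S1 = np.atleast_2d(np.cov(X1, rowvar=False))
    S = ((n0 - 1) * S0 + (n1 - 1) * S1) / (n0 + n1 - 2)
    w = np.linalg.solve(S, mu1 - mu0)
    # Midpoint threshold shifted by the log prior ratio
    b = -0.5 * (mu0 + mu1) @ w + np.log(n1 / n0)
    return w, b


def predict(X, w, b):
    return (X @ w + b > 0).astype(int)


def f1_score(y_true, y_pred):
    """F1 = 2TP / (2TP + FP + FN) for the positive class 1."""
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    return float(2 * tp / (2 * tp + fp + fn)) if tp else 0.0


# Usage with synthetic, well-separated classes (0 = incorrect, 1 = correct)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(3.0, 1.0, (100, 2))])
y = np.repeat([0, 1], 100)
w, b = fit_lda(X, y)
f1 = f1_score(y, predict(X, w, b))
print(f"F1 = {f1:.2f}")
```

The weight vector `w` is what makes LDA interpretable here: each entry scales one input feature in a single linear score.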

These methods have previously been applied in similar contexts. For example, SHAP has been used for feature selection in classifying types of locomotion transitions, demonstrating that this technique can substantially reduce the number of analysed channels while maintaining high classification accuracy [22,68]. LDA has also been employed in motion analysis, including the classification of fitness exercises using data from inertial sensors [48], and, in combination with HMMs, for feature reduction in human activity recognition (HAR) tasks, where this approach substantially improved balanced classification accuracy [69].

The integration of SHAP provides a critical advantage over ‘black-box’ deep learning models, such as the LSTM and CNN architectures used by Spilz and Munz [53]. While deep learning often yields high accuracy, it offers limited clinical interpretability. SHAP makes it possible to pinpoint the specific biomechanical indicators that drive the classification, such as knee rotation acceleration, which could allow physiotherapists to provide targeted, evidence-based feedback to patients.

Based on the analysis, six features were selected that were jointly identified by the linear LDA model and the non-linear decision tree model, along with four additional features that were not selected by SHAP but appeared frequently in effective LDA sets. These features were considered to have the greatest potential for assessing lunge performance and, importantly, are supported by a clear biomechanical rationale (Table 12).

Table 12.

Importance of the selected biomechanical features.

The analysis revealed that key information for distinguishing between correct and incorrect lunge executions can originate from different sets of sensors, depending on the type of data analysed. For distance-related parameters, the most informative signals came from the goggles and the sensors placed on the feet and shanks, whereas in the analysis of angular data, features related to the hip and knee joints predominated. Notably, among the angular features, the angular acceleration of knee rotation in the leading leg emerged most frequently, highlighting the potential value of derivative features rather than raw joint angles alone. With the knee flexed, the range of motion in this plane can reach up to 44° [70]. At the same time, all joint-angle features selected by both methods referred to hip flexion and extension in the supporting leg.

Clear signal offsets were observed in the angular data, likely resulting from calibration imperfections, measurement errors, or variability in sensor placement during recordings. Such offsets should be expected, particularly in home use, where VR users are unlikely to calibrate the system as precisely as in laboratory conditions. Consequently, it is especially important to design analysis methods that are robust to baseline shifts and to uncertainty in signal orientation.

The LDA classification results indicated that angular data outperformed distance data (F1 = 0.89 ± 0.007 vs. 0.78 ± 0.004 for the best sets), although angular features were more sensitive to local signal fluctuations and incurred a higher computational cost. In contrast, distance features were more stable, less computationally demanding, and more resistant to calibration imperfections. Our classification performance (F1 = 0.89 for angular data) is broadly in line with other state-of-the-art systems for human movement quality assessment (HMQA), although the reported metrics are not directly comparable. For instance, Yu and Xiong achieved a correlation of r = 0.86 using DTW for rehabilitation exercises [29], while Pereira et al. reported 96% accuracy in error detection using inertial sensors alone [71]. Our findings also align with those of Vox et al. regarding the inherent limitations of VR-based tracking, where joint-angle discrepancies can range from ±6° to ±42° depending on the sensor configuration and calibration [72].
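One possible reason for the greater robustness of distance features can be shown with a short sketch: the Euclidean distance between two sensors does not change if the whole tracking frame is rotated and translated, as might happen with an imperfect room calibration. The trajectories, rotation angle and shift below are arbitrary assumptions; the sketch also shows that displacing a single sensor, such as a slipping tracker, does change the distances.

```python
import numpy as np


def rotation_z(deg):
    """Rotation matrix about the z-axis (angle in degrees)."""
    r = np.deg2rad(deg)
    c, s = np.cos(r), np.sin(r)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


rng = np.random.default_rng(42)
headset = rng.normal(size=(50, 3))   # synthetic trajectories (metres)
foot = rng.normal(size=(50, 3))

# Hypothetical global calibration error: rotate and shift the whole frame
R, shift = rotation_z(12.0), np.array([0.30, -0.10, 0.05])
headset_cal = headset @ R.T + shift
foot_cal = foot @ R.T + shift

d_true = np.linalg.norm(headset - foot, axis=1)
d_cal = np.linalg.norm(headset_cal - foot_cal, axis=1)

# A slipping foot tracker (per-sensor offset) is not a common transform
d_slip = np.linalg.norm(headset - (foot + np.array([0.02, 0.0, 0.0])), axis=1)

print("global error preserves distances:", np.allclose(d_true, d_cal))
print("sensor slip preserves distances:", np.allclose(d_true, d_slip))
```

Joint angles computed from tracker orientations have no such built-in invariance to orientation errors of individual trackers, which is consistent with, though not proof of, the sensitivity observed for angular features.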

Practical implementation in home settings faces significant hardware challenges. In our study, thigh-mounted sensors were prone to shifting during dynamic lunges, and the hip sensor frequently lost line of sight with the base stations. These real-world constraints justify our focus on a simplified sensor configuration (headset, shanks, and feet), which proved more robust and less sensitive to such artefacts while maintaining diagnostic reliability. The choice of appropriate sensors and features should therefore depend on the target application. In home systems, where simplicity and robustness to user errors are priorities, distance-based metrics represent a promising solution. In clinical contexts, by contrast, where measurement conditions and sensor placement can be controlled more precisely, full kinematic analysis may offer greater diagnostic value, with angular acceleration and angular velocity data being as important as joint-angle measurements.

The sensor placed on the hip produced erroneous data records most frequently. This may have resulted from a defect in that particular sensor or from its mounting location, which frequently interrupted the line of sight between the sensor and the base station. Practical experience during the study also showed that sensors placed on the thighs often shifted or slipped, which may have further compromised the quality of the recorded data.

In the presented analysis, the LDA assumption of equal covariance matrices between classes was not always satisfied, which may have partially affected classification performance. As noted by Brobbey et al. [70], violating this assumption may reduce the accuracy of LDA, although it does not invalidate the usefulness of the resulting findings. Models that allow class-specific covariance matrices, such as Quadratic Discriminant Analysis (QDA), often achieve higher effectiveness in such cases but require larger datasets, which limits their use in exploratory analyses. In this study, the priority was not to construct an optimal classifier but to identify the features that best differentiate the studied groups; the results therefore retain substantial explanatory value despite the partial violation of the LDA assumptions.
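The difference between the two models can be stated compactly with the standard discriminant functions for class $k$ with mean $\boldsymbol{\mu}_k$, prior $\pi_k$, and covariance matrix $\boldsymbol{\Sigma}$ (shared, LDA) or $\boldsymbol{\Sigma}_k$ (class-specific, QDA); an observation $\mathbf{x}$ is assigned to the class with the largest $\delta_k(\mathbf{x})$:

```latex
\begin{aligned}
\delta_k^{\mathrm{LDA}}(\mathbf{x}) &= \mathbf{x}^{\top}\boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_k
  - \tfrac{1}{2}\,\boldsymbol{\mu}_k^{\top}\boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_k + \ln \pi_k \\
\delta_k^{\mathrm{QDA}}(\mathbf{x}) &= -\tfrac{1}{2}\ln\lvert\boldsymbol{\Sigma}_k\rvert
  - \tfrac{1}{2}\,(\mathbf{x}-\boldsymbol{\mu}_k)^{\top}\boldsymbol{\Sigma}_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k) + \ln \pi_k
\end{aligned}
```

With $p$ features and $K$ classes, QDA estimates $K$ covariance matrices with $p(p+1)/2$ free parameters each instead of a single pooled matrix, which is why it needs larger samples to be estimated reliably.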

Importantly, even assessments performed by experienced physiotherapists are not fully repeatable. For instance, in a study on the reliability of the ARAT test in stroke survivors, Kendall’s coefficient of concordance (W = 0.711) indicated good but incomplete agreement between specialists [71]. In the context of this study, a key limitation was that repetitions were assessed by a single evaluator, which restricts the objectivity of the results. Additionally, there were no clear guidelines on the positioning of the leading leg’s foot during the exercise, which may have led to variations in foot placement in both the correct and incorrect performance groups. This heterogeneity may explain why ankle-related parameters were selected less often among the angular features.
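When several evaluators are available, agreement can be quantified with Kendall's W, the statistic cited above. A minimal sketch without tie correction is given below; because correct/incorrect labels produce many ties, a tie-corrected W (or, for two raters with binary labels, Cohen's kappa) would be more appropriate in practice. The example ratings are hypothetical.

```python
def kendalls_w(ratings):
    """Kendall's coefficient of concordance W (no tie correction).

    ratings: list of m lists, one per rater, each scoring the same n items.
    W = 12 * S / (m^2 * (n^3 - n)), where S is the sum of squared deviations
    of the per-item rank sums from their mean m * (n + 1) / 2.
    """
    m, n = len(ratings), len(ratings[0])
    rank_sums = [0.0] * n
    for scores in ratings:
        # Convert this rater's scores to ranks 1..n (ties broken by position)
        order = sorted(range(n), key=lambda i: scores[i])
        for rank, item in enumerate(order, start=1):
            rank_sums[item] += rank
    mean_rank_sum = m * (n + 1) / 2
    s = sum((r - mean_rank_sum) ** 2 for r in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical quality scores from three raters for four repetitions
print(kendalls_w([[1, 2, 3, 4], [10, 20, 30, 40], [5, 6, 7, 8]]))  # full agreement
print(kendalls_w([[1, 2, 3], [3, 2, 1]]))                          # opposite rankings
```

W ranges from 0 (no agreement) to 1 (identical rankings), so the cited W = 0.711 sits well above chance but below perfect concordance.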

The analysis relied exclusively on simple statistical parameters and basic classification models, namely LDA and a single decision tree. For the decision tree, features were grouped into blocks, which may have influenced how the model was trained. Alternative block-building strategies could yield different SHAP results and affect the interpretation of feature importance.
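How feature grouping affects attributions can be illustrated with exact Shapley values computed over blocks rather than individual features. The sketch below enumerates all coalitions of blocks, which is feasible only for a few blocks; it is not the TreeSHAP algorithm implemented in the shap library, and the value function, block names and numbers are hypothetical.

```python
from itertools import combinations
from math import factorial


def block_shapley(value, blocks):
    """Exact Shapley values in which each player is a block of features.

    value: function mapping a frozenset of block names to a payoff, e.g. the
           model output when only those blocks are present.
    blocks: list of block names.
    """
    n = len(blocks)
    phi = {}
    for b in blocks:
        others = [o for o in blocks if o != b]
        total = 0.0
        for k in range(n):  # coalition sizes 0..n-1 drawn from the other blocks
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                total += weight * (value(s | {b}) - value(s))
        phi[b] = total
    return phi


# Hypothetical payoff: additive block effects plus a hip-knee interaction
EFFECTS = {"hip_angles": 0.20, "knee_accelerations": 0.10, "distances": 0.05}


def value(present):
    v = sum(EFFECTS[b] for b in present)
    if {"hip_angles", "knee_accelerations"} <= present:
        v += 0.04  # interaction term, split equally between the two blocks
    return v


phi = block_shapley(value, list(EFFECTS))
print(phi)
```

Here the interaction is shared equally, giving 0.22, 0.12 and 0.05. If the hip and knee blocks were merged into one block, that block would receive a single attribution of 0.34, so the reported importances depend on how the blocks are defined.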

In summary, the presented results provide a valuable foundation for further experimental work. They highlight the potential of both angular and distance-based approaches and identify areas that warrant further investigation.

In subsequent research stages, it is advisable to apply more advanced machine learning methods, such as decision tree ensembles or neural networks, using the key biomechanical features identified in this study. Additionally, it is recommended to expand the exercise database, increase the size and diversity of the participant group, and develop a detailed movement assessment protocol covering all critical aspects of exercise performance. This approach should increase the stability of feature selection, improve classification accuracy, and better adapt the systems for use in both clinical and home rehabilitation settings.

5. Limitations and Future Work

This study, while identifying key biomechanical features, has several limitations. First, side-lunge performance was classified by a single physiotherapist, which limits objectivity and repeatability. Second, only 32 healthy participants were included (mean age 26.4 ± 8.5 years), limiting generalizability to clinical populations. General exercise guidelines lacked precise instructions for foot positioning, potentially increasing variability and affecting ankle-related parameters. Technical challenges included sensor shifts and intermittent line-of-sight loss, which may have affected data quality. Simple statistical parameters and highly interpretable models (LDA, single decision tree) were used, and SHAP feature-block grouping may have influenced feature importance assessments. Finally, VR goggles did not fully occlude vision, limiting ecological validity.

Anomalous samples, such as missing data, line-of-sight loss, or anatomically implausible spikes, were removed during preprocessing. No formal anomaly-detection criteria were established, because such events were sporadic and heterogeneous. The analysis therefore focused on feature identification rather than on model robustness under data-loss conditions. Future work will include explicit anomaly-detection criteria and sensitivity analyses.
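As one example of the kind of explicit criterion mentioned above, a spike detector based on the modified z-score of frame-to-frame differences (using the median absolute deviation, MAD) is sketched below. This is a hypothetical criterion, not the procedure used in this study; the threshold of 6 and the synthetic signal are assumptions that would need tuning against real recordings.

```python
import numpy as np


def flag_anomalies(signal, z_thresh=6.0):
    """Flag samples that are missing (NaN) or follow an implausible jump.

    Uses the modified z-score 0.6745 * (d - median) / MAD of the
    frame-to-frame differences d. The threshold is a hypothetical choice.
    """
    x = np.asarray(signal, dtype=float)
    flags = np.isnan(x)
    diff = np.diff(x)
    valid = ~np.isnan(diff)
    if not valid.any():
        return flags
    med = np.median(diff[valid])
    mad = np.median(np.abs(diff[valid] - med))
    if mad > 0:
        z = np.zeros_like(diff)
        z[valid] = 0.6745 * (diff[valid] - med) / mad
        # diff[i] = x[i + 1] - x[i], so a large jump flags sample i + 1
        flags[1:] |= np.abs(z) > z_thresh
    return flags


# Usage with a synthetic joint-angle signal
t = np.linspace(0.0, 2.0 * np.pi, 200)
sig = 30.0 * np.sin(t)   # synthetic joint angle (degrees)
sig[100] += 50.0         # implausible spike
sig[150] = np.nan        # missing sample (e.g. line-of-sight loss)
flags = flag_anomalies(sig)
print(np.flatnonzero(flags))
```

A single spike flags both the spiked sample and the one after it, because the signal jumps back; a production criterion would probably merge such neighbouring flags.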

The findings provide a foundation for further research. Future studies should include participants with a broader range of ages and motor abilities, especially clinical populations, and incorporate multiple independent evaluators to enable inter-rater agreement metrics. System performance should also be evaluated under non-professional, home-based conditions. While this study focused on interpretability, future work will examine more complex data-driven models (CNNs, LSTMs), validate the results against gold-standard motion analysis, and assess combined angular and distance features. Additionally, extending the analysis to other physical exercises will help generalise the findings across different movement patterns. Finally, a standardised protocol for movement execution, including foot orientation and stride range, will reduce variability and improve diagnostic relevance.

6. Conclusions

Angular and distance data provide complementary information: angular features yielded higher LDA classification effectiveness in distinguishing between correct and incorrect side lunge performances, whereas distance features proved more stable, resistant to calibration inaccuracies, and less computationally demanding, making them especially suitable for home applications.

Key variables were selected both in the global analysis (LDA) and in the analysis of the local influence of individual parameters (SHAP). The high consistency between these approaches suggests that the selected features are stable and reliable.

The most important angular features included: hip joint flexion of the supporting limb, movement dynamics in this joint (angular acceleration of rotation and flexion/extension), and angular acceleration of rotation in the knee joint of the leading leg.

The key distance features comprised the distances between the goggles and the foot of the leading leg, between the shank of the leading leg and the shank of the supporting leg, and between the shank and foot of the leading leg, along with statistical measures (standard deviation, median) of the distances between the goggles and the thigh of the leading leg and between the shank of the leading leg and the foot of the supporting leg.

An extensive set of sensors is not necessary for a reliable assessment of the side lunge. The analysis showed that the most useful information can be obtained from the goggles and sensors placed on the feet and shanks, while sensors on the hip and thighs are more prone to artefacts. As a result, it is possible to design simplified sensor configurations, appropriately adapted to home and clinical rehabilitation settings.

Author Contributions

U.C.: Conceptualization, Methodology, Investigation, Data curation, Formal analysis, visualisation, Writing—original draft, Writing—review and editing. M.Ż.: Project administration, Funding acquisition, Conceptualization, Methodology, Software, Supervision, Writing—review and editing. M.P.: Software, Methodology, Validation. C.P.: Validation, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Centre for Research and Development in Poland, grant number LIDER/37/0200/L-10/18/NCBR/2019. The APC was funded by the institutional funds of Wroclaw University of Science and Technology.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Senate Committee on Research Ethics at the Academy of Physical Education named after the Polish Olympians in Wroclaw.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LDA | Linear Discriminant Analysis |
| SHAP | SHapley Additive exPlanations |
| VR | Virtual Reality |
| HMQA | Human Movement Quality Assessment |
| FFT | Fast Fourier Transform |
| STFT | Short-Time Fourier Transform |
| DTW | Dynamic Time Warping |
| EMD | Empirical Mode Decomposition |
| HMM | Hidden Markov Model |
| GMM | Gaussian Mixture Model |
| ARMA | Autoregressive Moving Average |
| ARIMA | Autoregressive Integrated Moving Average |
| SVM | Support Vector Machine |
| KNN | k-Nearest Neighbours |
| PCA | Principal Component Analysis |
| ANN | Artificial Neural Network |
| LSTM | Long Short-Term Memory |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| CNN LSTM | Convolutional Neural Network combined with LSTM |
| FCN | Fully Convolutional Network |
| ResNet | Residual Network |
| ROM | Range of Motion |
| AUC | Area Under the Curve |
| ROC | Receiver Operating Characteristic |
| HAR | Human Activity Recognition |
| QDA | Quadratic Discriminant Analysis |

References

- Helou, S.; Khalil, N.; Daou, M.; El Helou, E. Virtual reality for healthcare: A scoping review of commercially available applications for head-mounted displays. Digit. Health 2023, 9, 20552076231178619. [Google Scholar] [CrossRef]

- Matys-Popielska, K.; Popielski, K.; Matys, P.; Sibilska-Mroziewicz, A. Immersive Virtual Reality Application for Rehabilitation in Unilateral Spatial Neglect: A Promising New Frontier in Post-Stroke Rehabilitation. Appl. Sci. 2024, 14, 425. [Google Scholar] [CrossRef]

- Ceradini, M.; Losanno, E.; Micera, S.; Bandini, A.; Orlandi, S. Immersive VR for upper-extremity rehabilitation in patients with neurological disorders: A scoping review. J. Neuroeng. Rehabil. 2024, 21, 75. [Google Scholar] [CrossRef] [PubMed]

- Czajkowska, U.; Żuk, M.; Pezowicz, C.; Popek, M.; Łopusiewicz, M.; Kentel, M. Virtual Reality and Motion Capture in Orthopedic Rehabilitation: A Preliminary Study Using the eMotion System. Gait Posture 2025, 121, 51–52. [Google Scholar] [CrossRef]

- Tang, P.; Cao, Y.; Vithran, D.T.A.V.; Xiao, W.; Wen, T.; Liu, S.; Li, Y. The Efficacy of Virtual Reality on the Rehabilitation of Musculoskeletal Diseases: Umbrella Review. J. Med. Internet Res. 2025, 27, e64576. [Google Scholar] [CrossRef]

- Kantha, P.; Hsu, W.-L.; Chen, P.-J.; Tsai, Y.-C.; Lin, J.-J. A novel balance training approach: Biomechanical study of virtual reality-based skateboarding. Front. Bioeng. Biotechnol. 2023, 11, 1136368. [Google Scholar] [CrossRef] [PubMed]

- Oagaz, H.; Schoun, B.; Choi, M.-H. Real-time posture feedback for effective motor learning in table tennis in virtual reality. Int. J. Hum. Comput. Stud. 2022, 158, 102731. [Google Scholar] [CrossRef]

- Shi, Y.; Peng, Q. A VR-based user interface for the upper limb rehabilitation. Procedia CIRP 2018, 78, 115–120. [Google Scholar] [CrossRef]

- Żuk, M.; Wojtków, M.; Popek, M.; Mazur, J.; Bulińska, K. Three-dimensional gait analysis using a virtual reality tracking system. Measurement 2022, 188, 110627. [Google Scholar] [CrossRef]

- Czajkowska, U.; Żuk, M.; Pezowicz, C.; Popek, M.; Łopusiewicz, M.; Bulińska, K. Low-Cost Motion Tracking Systems in the Kinematics Analysis of VR Game Users—Preliminary Study. In Innovations in Biomedical Engineering 2024; Gzik, M., Paszenda, Z., Piętka, E., Milewski, K., Jurkojć, J., Eds.; Lecture Notes in Networks and Systems; Springer Nature: Cham, Switzerland, 2025; Volume 1202, pp. 15–28. [Google Scholar] [CrossRef]

- Merker, S.; Pastel, S.; Bürger, D.; Schwadtke, A.; Witte, K. Measurement Accuracy of the HTC VIVE Tracker 3.0 Compared to Vicon System for Generating Valid Positional Feedback in Virtual Reality. Sensors 2023, 23, 7371. [Google Scholar] [CrossRef]

- Caserman, P.; Garcia-Agundez, A.; Konrad, R.; Göbel, S.; Steinmetz, R. Real-time body tracking in virtual reality using a Vive tracker. Virtual Real. 2019, 23, 155–168. [Google Scholar] [CrossRef]

- Venek, V.; Kranzinger, S.; Schwameder, H.; Stöggl, T. Human Movement Quality Assessment Using Sensor Technologies in Recreational and Professional Sports: A Scoping Review. Sensors 2022, 22, 4786. [Google Scholar] [CrossRef] [PubMed]

- Hindle, B.R.; Keogh, J.W.L.; Lorimer, A.V. Inertial-Based Human Motion Capture: A Technical Summary of Current Processing Methodologies for Spatiotemporal and Kinematic Measures. Appl. Bionics Biomech. 2021, 2021, 6628320. [Google Scholar] [CrossRef]

- Winter, D.A. Biomechanics and Motor Control of Human Movement, 1st ed.; Wiley: Hoboken, NJ, USA, 2009. [Google Scholar] [CrossRef]

- Kidziński, Ł.; Yang, B.; Hicks, J.L.; Rajagopal, A.; Delp, S.L.; Schwartz, M.H. Deep neural networks enable quantitative movement analysis using single-camera videos. Nat. Commun. 2020, 11, 4054. [Google Scholar] [CrossRef] [PubMed]

- Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the Pose Tracking Performance of the Azure Kinect and Kinect v2 for Gait Analysis in Comparison with a Gold Standard: A Pilot Study. Sensors 2020, 20, 5104. [Google Scholar] [CrossRef]

- Deng, D.; Ostrem, J.L.; Nguyen, V.; Cummins, D.D.; Sun, J.; Pathak, A.; Little, S.; Abbasi-Asl, R. Interpretable video-based tracking and quantification of parkinsonism clinical motor states. npj Park. Dis. 2024, 10, 122. [Google Scholar] [CrossRef]

- Haney, M.M.; Hamad, A.; Leary, E.; Bunyak, F.; Lever, T.E. Automated Quantification of Vocal Fold Motion in a Recurrent Laryngeal Nerve Injury Mouse Model. Laryngoscope 2019, 129, E247–E254. [Google Scholar] [CrossRef]

- Dos’Santos, T.; Thomas, C.; Jones, P.A. The effect of angle on change of direction biomechanics: Comparison and inter-task relationships. J. Sports Sci. 2021, 39, 2618–2631. [Google Scholar] [CrossRef]

- Swinton, P.A.; Lloyd, R.; Keogh, J.W.L.; Agouris, I.; Stewart, A.D. A Biomechanical Comparison of the Traditional Squat, Powerlifting Squat, and Box Squat. J. Strength Cond. Res. 2012, 26, 1805–1816. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Pang, J.; Tao, P.; Ji, Z.; Chen, J.; Meng, L.; Xu, R.; Ming, D. Locomotion transition prediction at Anticipatory Locomotor Adjustment phase with SHAP feature selection. Biomed. Signal Process. Control 2024, 92, 106105. [Google Scholar] [CrossRef]

- Hussain, A.; Adeel Zahid, M.; Ahmed, U.; Nazeer, S.; Zafar, K.; Rauf Baig, A. Time-Series Data to Refined Insights: A Feature Engineering-Driven Approach to Gym Exercise Recognition. IEEE Access 2024, 12, 100343–100354. [Google Scholar] [CrossRef]

- Robertson, D.G.E.; Caldwell, G.E.; Hamill, J.; Kamen, G.; Whittlesey, S.N. Research Methods in Biomechanics, 2nd ed.; Human Kinetics: Champaign, IL, USA, 2014. [Google Scholar] [CrossRef]

- Van Bladel, A.; De Ridder, R.; Palmans, T.; Van Der Looven, R.; Verheyden, G.; Meyns, P.; Cambier, D. Defining characteristics of independent walking persons after stroke presenting with different arm swing coordination patterns. Hum. Mov. Sci. 2024, 93, 103174. [Google Scholar] [CrossRef] [PubMed]

- Teufl, S.; Preston, J.; Van Wijck, F.; Stansfield, B. Quantifying upper limb tremor in people with multiple sclerosis using Fast Fourier Transform based analysis of wrist accelerometer signals. J. Rehabil. Assist. Technol. Eng. 2021, 8, 2055668320966955. [Google Scholar] [CrossRef] [PubMed]

- Kuduz, H.; Kaçar, F. A deep learning approach for human gait recognition from time-frequency analysis images of inertial measurement unit signal. Int. J. Appl. Methods Electron. Comput. 2023, 11, 165–173. [Google Scholar] [CrossRef]

- Too, J.; Rahim, A.; Mohd, N. Classification of Hand Movements based on Discrete Wavelet Transform and Enhanced Feature Extraction. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 83–89. [Google Scholar] [CrossRef]

- Yu, X.; Xiong, S. A Dynamic Time Warping Based Algorithm to Evaluate Kinect-Enabled Home-Based Physical Rehabilitation Exercises for Older People. Sensors 2019, 19, 2882. [Google Scholar] [CrossRef]

- Gu, Y.; Pandit, S.; Saraee, E.; Nordahl, T.; Ellis, T.; Betke, M. Home-Based Physical Therapy with an Interactive Computer Vision System. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; IEEE: Piscataway, NJ, USA, 2020; pp. 2619–2628. [Google Scholar] [CrossRef]

- Safi, K.; Aly, W.H.F.; AlAkkoumi, M.; Kanj, H.; Ghedira, M.; Hutin, E. EMD-Based Method for Supervised Classification of Parkinson’s Disease Patients Using Balance Control Data. Bioengineering 2022, 9, 283. [Google Scholar] [CrossRef]

- Zhang, J.-H.; Li, P.; Jin, C.-C.; Zhang, W.-A.; Liu, S. A Novel Adaptive Kalman Filtering Approach to Human Motion Tracking With Magnetic-Inertial Sensors. IEEE Trans. Ind. Electron. 2020, 67, 8659–8669. [Google Scholar] [CrossRef]

- Palmieri, P.; Melchiorre, M.; Scimmi, L.S.; Pastorelli, S.; Mauro, S. Human Arm Motion Tracking by Kinect Sensor Using Kalman Filter for Collaborative Robotics. In Advances in Italian Mechanism Science; Niola, V., Gasparetto, A., Eds.; Mechanisms and Machine Science; Springer International Publishing: Cham, Switzerland, 2021; Volume 91, pp. 326–334. [Google Scholar] [CrossRef]

- Calin, A.D. Gesture Recognition on Kinect Time Series Data Using Dynamic Time Warping and Hidden Markov Models. In Proceedings of the 2016 18th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 24–27 September 2016; IEEE: Piscataway, NJ, USA, 2017; pp. 264–271. [Google Scholar] [CrossRef]

- Kleine, J.D.; Rybarczyk, Y. Hidden Markov Model approach for the assessment of tele-rehabilitation exercises. Int. J. Artif. Intell. 2018, 16, 1–19. [Google Scholar]

- Haghighi Osgouei, R.; Soulsby, D.; Bello, F. Rehabilitation Exergames: Use of Motion Sensing and Machine Learning to Quantify Exercise Performance in Healthy Volunteers. JMIR Rehabil. Assist. Technol. 2020, 7, e17289. [Google Scholar] [CrossRef] [PubMed]

- Georga, E.I.; Gatsios, D.; Tsakanikas, V.; Kourou, K.D.; Liston, M.; Pavlou, M.; Kikidis, D.; Bibas, A.; Nikitas, C.; Bamiou, D.E.; et al. A Dynamic Bayesian Network Approach to Behavioral Modelling of Elderly People during a Home-based Augmented Reality Balance Physiotherapy Programme. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5544–5547. [Google Scholar] [CrossRef]

- Peterson, K.D. An efficient dynamic Bayesian network classifier structure learning algorithm: Application to sport epidemiology. J. Complex Netw. 2020, 8, cnaa036. [Google Scholar] [CrossRef]

- Chen, D.; Li, G.; Zhou, D.; Ju, Z. A Novel Curved Gaussian Mixture Model and Its Application in Motion Skill Encoding. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 7813–7818. [Google Scholar] [CrossRef]

- Remedios, S.M.; Armstrong, D.P.; Graham, R.B.; Fischer, S.L. Exploring the Application of Pattern Recognition and Machine Learning for Identifying Movement Phenotypes During Deep Squat and Hurdle Step Movements. Front. Bioeng. Biotechnol. 2020, 8, 364. [Google Scholar] [CrossRef]

- Mei, F.; Hu, Q.; Yang, C.; Liu, L. ARMA-Based Segmentation of Human Limb Motion Sequences. Sensors 2021, 21, 5577. [Google Scholar] [CrossRef] [PubMed]

- Tron, T.; Resheff, Y.S.; Bazhmin, M.; Weinshall, D.; Peled, A. ARIMA-based motor anomaly detection in schizophrenia inpatients. In Proceedings of the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Las Vegas, NV, USA, 4–7 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 430–433. [Google Scholar] [CrossRef]

- Nasrabadi, A.M.; Eslaminia, A.R.; Bakhshayesh, P.R.; Ejtehadi, M.; Alibiglou, L.; Behzadipour, S. A new scheme for the development of IMU-based activity recognition systems for telerehabilitation. Med. Eng. Phys. 2022, 108, 103876. [Google Scholar] [CrossRef]

- Safira, S.M.; Harry Gunawan, P.; Kurniawan, W.Y.; Gede Karang Komala Putra, I.; Reganata, G.P.; Kadek Winda Patrianingsih, N.; Wahyu Surya Dharma, I.G.; Kadek Arya Sugianta, I.; Udhayana, K.F.R. Comparative Analysis of the K-Nearest Neighbor Method and Support Vector Machine in Human Fall Detection. In Proceedings of the 2023 3rd International Conference on Intelligent Cybernetics Technology & Applications (ICICyTA), Denpasar, Bali, Indonesia, 13–15 December 2023; IEEE: Piscataway, NJ, USA, 2024; pp. 148–153. [Google Scholar] [CrossRef]

- Qi, W.; Yao, H.; Song, H. Research on human activity classification based on K-nearest algorithm model. In Proceedings of the International Conference on Cloud Computing, Performance Computing, and Deep Learning (CCPCDL 2023), Huzhou, China, 17–19 February 2023; Subramaniam, K., Saxena, S., Eds.; SPIE: Nuremberg, Germany, 2023; p. 68. [Google Scholar] [CrossRef]

- Moreira, J.; Silva, B.; Faria, H.; Santos, R.; Sousa, A. Systematic Review on the Applicability of Principal Component Analysis for the Study of Movement in the Older Adult Population. Sensors 2022, 23, 205. [Google Scholar] [CrossRef]

- Van Andel, S.; Mohr, M.; Schmidt, A.; Werner, I.; Federolf, P. Whole-body movement analysis using principal component analysis: What is the internal consistency between outcomes originating from the same movement simultaneously recorded with different measurement devices? Front. Bioeng. Biotechnol. 2022, 10, 1006670. [Google Scholar] [CrossRef] [PubMed]

- Crema, C.; Depari, A.; Flammini, A.; Sisinni, E.; Haslwanter, T.; Salzmann, S. IMU-based solution for automatic detection and classification of exercises in the fitness scenario. In Proceedings of the 2017 IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, USA, 13–15 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, X.; Shi, Y. Application of multiple regression models in the analysis of kinematic parameters in competitive gymnastics. Appl. Math. Nonlinear Sci. 2024, 9, 20230256. [Google Scholar] [CrossRef]

- Gwak, G.; Hwang, U.; Kim, J. Clustering of shoulder movement patterns using K-means algorithm based on the shoulder range of motion. J. Bodyw. Mov. Ther. 2025, 41, 164–170. [Google Scholar] [CrossRef]

- Contreras Rodriguez, L.A.; Cardiel, E.; Soto, A.L.; Antonio Barraza Madrigal, J.; Hernandez Rodriguez, P.R. Human Upper Limb Motion Recognition Using IMU sensors and Artificial Neural Networks. In Proceedings of the 2022 19th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Mexico City, Mexico, 9–11 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Robles, D.; Benchekroun, M.; Lira, A.; Taramasco, C.; Zalc, V.; Irazzoky, I.; Istrate, D. Real-time Gait Pattern Classification Using Artificial Neural Networks. In Proceedings of the 2022 IEEE International Workshop on Metrology for Living Environment (MetroLivEn), Cosenza, Italy, 25–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 76–80. [Google Scholar] [CrossRef]

- Spilz, A.; Munz, M. Automatic Assessment of Functional Movement Screening Exercises with Deep Learning Architectures. Sensors 2022, 23, 5. [Google Scholar] [CrossRef]

- Mottaghi, E.; Akbarzadeh-T., M.-R. Automatic Evaluation of Motor Rehabilitation Exercises Based on Deep Mixture Density Neural Networks. J. Biomed. Inform. 2022, 130, 104077. [Google Scholar] [CrossRef]

- Alawneh, L.; Mohsen, B.; Al-Zinati, M.; Shatnawi, A.; Al-Ayyoub, M. A Comparison of Unidirectional and Bidirectional LSTM Networks for Human Activity Recognition. In Proceedings of the 2020 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Austin, TX, USA, 23–27 March 2020; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6. [Google Scholar] [CrossRef]

- De Assis Neto, S.R.; Santos, G.L.; Da Silva Rocha, E.; Bendechache, M.; Rosati, P.; Lynn, T.; Takako Endo, P. Detecting Human Activities Based on a Multimodal Sensor Data Set Using a Bidirectional Long Short-Term Memory Model: A Case Study. In Challenges and Trends in Multimodal Fall Detection for Healthcare; Ponce, H., Martínez-Villaseñor, L., Brieva, J., Moya-Albor, E., Eds.; Studies in Systems, Decision and Control; Springer International Publishing: Cham, Switzerland, 2020; Volume 273, pp. 31–51.

- Jung, D.; Nguyen, M.D.; Han, J.; Park, M.; Lee, K.; Yoo, S.; Kim, J.; Mun, K.-R. Deep Neural Network-Based Gait Classification Using Wearable Inertial Sensor Data. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3624–3628.

- Ozdemir, M.A.; Kisa, D.H.; Guren, O.; Akan, A. Hand gesture classification using time–frequency images and transfer learning based on CNN. Biomed. Signal Process. Control 2022, 77, 103787.

- Yen, C.-T.; Liao, J.-X.; Huang, Y.-K. Human Daily Activity Recognition Performed Using Wearable Inertial Sensors Combined With Deep Learning Algorithms. IEEE Access 2020, 8, 174105–174114.

- Du, C.; Graham, S.; Depp, C.; Nguyen, T. Assessing Physical Rehabilitation Exercises using Graph Convolutional Network with Self-supervised regularization. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico, 1–5 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 281–285.

- Archana, N.; Hareesh, K. Real-time Human Activity Recognition Using ResNet and 3D Convolutional Neural Networks. In Proceedings of the 2021 2nd International Conference on Advances in Computing, Communication, Embedded and Secure Systems (ACCESS), Ernakulam, India, 2–4 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 173–177.

- Sharifi-Renani, M.; Mahoor, M.H.; Clary, C.W. BioMAT: An Open-Source Biomechanics Multi-Activity Transformer for Joint Kinematic Predictions Using Wearable Sensors. Sensors 2023, 23, 5778.

- Mourchid, Y.; Slama, R. D-STGCNT: A Dense Spatio-Temporal Graph Conv-GRU Network based on transformer for assessment of patient physical rehabilitation. Comput. Biol. Med. 2023, 165, 107420.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Aguilera-Morillo, M.C.; Aguilera, A.M. Multi-class classification of biomechanical data: A functional LDA approach based on multi-class penalized functional PLS. Stat. Model. 2020, 20, 592–616.

- McLachlan, G.J. Discriminant Analysis and Statistical Pattern Recognition, 1st ed.; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 1992.

- Flanagan, S.P.; Wang, M.-Y.; Greendale, G.A.; Azen, S.P.; Salem, G.J. Biomechanical Attributes of Lunging Activities for Older Adults. J. Strength Cond. Res. 2004, 18, 599.

- Zhang, G.; Ji, Z.; Wang, Z.; Meng, L.; Xu, R. The cross-subject locomotion transition prediction during previous double-stance phases with SHAP feature selection. In Proceedings of the 2024 11th International Conference on Biomedical and Bioinformatics Engineering, Osaka, Japan, 8–11 November 2024; ACM: New York, NY, USA, 2025; pp. 140–145.

- Hartmann, Y.; Liu, H.; Schultz, T. Feature Space Reduction for Human Activity Recognition Based on Multi-Channel Biosignals. In Proceedings of the 14th International Joint Conference on Biomedical Engineering Systems and Technologies; SciTePress - Science and Technology Publications: Setúbal, Portugal, 2021; pp. 215–222.

- Mossberg, K.A.; Smith, L.K. Axial Rotation of the Knee in Women. J. Orthop. Sports Phys. Ther. 1983, 4, 236–240.

- Pereira, A.; Folgado, D.; Nunes, F.; Almeida, J.; Sousa, I. Using Inertial Sensors to Evaluate Exercise Correctness in Electromyography-based Home Rehabilitation Systems. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Vox, J.P.; Weber, A.; Wolf, K.I.; Izdebski, K.; Schüler, T.; König, P.; Wallhoff, F.; Friemert, D. An Evaluation of Motion Trackers with Virtual Reality Sensor Technology in Comparison to a Marker-Based Motion Capture System Based on Joint Angles for Ergonomic Risk Assessment. Sensors 2021, 21, 3145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.