Abstract

Reconstructing animatable humans, together with their surrounding static environments, from monocular, motion-blurred videos is still challenging for current neural rendering methods. Existing monocular human reconstruction approaches achieve impressive quality and efficiency, but they are designed for clean intensity inputs and mainly focus on the foreground human, leading to degraded performance under motion blur and incomplete scene modeling. Event cameras provide high temporal resolution and robustness to motion blur, making them a natural complement to standard video sensors. We present E-Sem3DGS, a semantically augmented 3D Gaussian Splatting framework that leverages hybrid event-intensity streams to jointly reconstruct explicit 3D volumetric representations of human avatars and static scenes. E-Sem3DGS maintains a single set of 3D Gaussians in Euclidean space, each endowed with a learnable semantic attribute that softly separates dynamic human and static scene content. We initialize human Gaussians from Skinned Multi-Person Linear (SMPL) model priors with semantic values set to 1 and scene Gaussians by sampling a surrounding cube with semantic values set to 0, then jointly optimize geometry, appearance, and semantics. To mitigate motion blur, we derive optical flow from events and use it to supervise image-based optical flow between rendered frames, enforcing temporal coherence in high-motion regions and sharpening both humans and backgrounds. On the motion-blurred ZJU-MoCap-Blur dataset, E-Sem3DGS improves the average full-frame PSNR from 21.75 to 32.56 (+49.7%) over previous methods. On MMHPSD-Blur, our method improves PSNR from 25.23 to 28.63 (+13.48%).

1. Introduction

Reconstructing photorealistic, animatable human avatars, together with their surrounding static environments, from sensory input is a pivotal challenge in computer vision, with transformative applications in extended reality (XR), gaming, and visual try-on [1,2,3,4]. Recent advances in neural rendering integrate body articulation into Neural Radiance Fields (NeRFs) [5,6,7,8] and point-based rendering such as 3D Gaussian Splatting (3DGS) [9,10,11,12], enabling high-fidelity reconstruction of clothed human geometry and appearance from sparse or monocular videos. While these methods can utilize 3D volumetric representations to render high-quality human avatars under novel poses, they typically assume clean intensity inputs and mainly focus on the foreground human, often leaving static scenes under-modeled and degrading in the presence of motion blur [13].

Three-Dimensional Gaussian Splatting (3DGS) has emerged as an efficient alternative to NeRFs, offering fast inference and high-fidelity rendering with reduced computational cost [10]. Methods such as 3DGS-Avatar [9] and ASH [11] leverage 3DGS to render animatable human avatars by integrating articulated deformation with Multi-Layer Perceptrons (MLPs) or Convolutional Neural Networks (CNNs) for real-time performance. HUGS [12] extends this line of work to jointly model humans and static backgrounds by employing two separate sets of 3D Gaussians—one for the human and one for the scene—yet guided by precomputed human foreground masks [14,15]. However, maintaining dual Gaussian sets increases representation and optimization complexity, and the reliance on 2D foreground masks becomes problematic under motion blur, where mask predictions are noisy and misaligned. This limits scene realism and robustness in immersive applications such as augmented reality (AR) [1,2].

Motion blur is a common artifact in monocular videos of fast-moving subjects, introducing temporal ambiguities that challenge consistent reconstruction of both geometry and appearance across frames [16]. Intensity-based deblurring methods such as MPR [17] and NAFNet [18] attempt to restore sharp frames, but they struggle in highly dynamic scenes with complex motion patterns [19]. Event cameras, which asynchronously capture per pixel brightness changes, naturally complement standard video sensors by providing high-temporal-resolution data that is robust to motion blur [20]. Hybrid event-intensity approaches, including EFNet [19] and D2Net [21], improve deblurring by fusing event streams and intensity images, yet they are typically formulated as 2D pre-processing modules that are decoupled from downstream 3D reconstruction and have limited generalization across diverse scenes. In human pose estimation, EventHPE [13] demonstrates that event-derived optical flow can drive high-precision 3D human pose and shape estimations under motion blur, highlighting the potential of event-based motion cues for dynamic 3D reconstruction.

In this work, we address the joint reconstruction of animatable humans and static scenes from monocular, motion-blurred videos by introducing E-Sem3DGS, a semantically augmented 3D Gaussian Splatting framework that leverages event-based optical flow. Building upon 3DGS-Avatar [9], E-Sem3DGS maintains a single set of 3D Gaussians, each endowed with a learnable semantic attribute that softly separates dynamic human content from static scene content within a unified representation, as illustrated in Figure 1. We initialize human Gaussians from Skinned Multi-Person Linear (SMPL) model priors with their semantic values set to 1 and scene Gaussians by sampling a surrounding cube with their semantic values set to 0, then jointly optimize geometry, appearance, and semantics. Unlike HUGS [12], which relies on separate Gaussian sets and precomputed foreground masks, our unified semantic representation simplifies the pipeline and avoids dependence on external segmentation under blur.

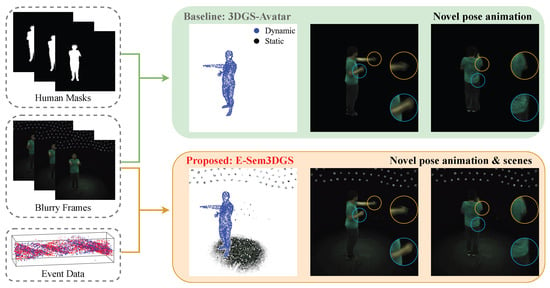

Figure 1.

Comparison between the baseline 3DGS-Avatar [9] and our E-Sem3DGS. While the baseline relies on human masks and suffers from motion-blur artifacts in fast-moving scenes, our method integrates event data and semantic features, enabling improved human-body reconstruction and consistent static-scene modeling.

To mitigate motion blur, we integrate event-based optical flow supervision. Specifically, we derive optical flow from event streams and use it to supervise image-based optical flow between rendered images at consecutive time steps, with the loss selectively applied to regions exhibiting high motion magnitude. This design enforces temporal coherence in high-motion regions and sharpens both human and background reconstructions, in contrast to approaches that apply 2D deblurring as a separate pre-processing stage [17,18,19,21]. Compared to 3DGS-Avatar [9] and HUGS [12] cascaded with intensity-based [17,18] or event-intensity deblurring [19,21] methods, our approach achieves superior performance on the ZJU-MoCap-Blur [6] and MMHPSD-Blur [13] datasets. On motion-blurred ZJU-MoCap-Blur, for full-frame rendering encompassing both humans and scenes, E-Sem3DGS improves the average PSNR from to , corresponding to a relative gain over 3DGS-Avatar [9]. On MMHPSD-Blur, our method improves PSNR from to , corresponding to a gain over HUGS [12].

Compared with prior 3DGS-based methods that either require precomputed masks for human–scene separation or treat deblurring as a stand-alone 2D pre-processing step, E-Sem3DGS integrates semantic disentanglement and event-based motion cues directly into a unified 3D representation and optimization pipeline.

In summary, our main contributions are threefold:

- We propose E-Sem3DGS, a semantically augmented 3D Gaussian Splatting framework that unifies human and scene reconstruction within a single Gaussian representation, enabling efficient human–scene disentanglement and high-quality rendering from monocular videos under motion blur.

- We introduce an event-based optical flow supervision strategy that exploits event-derived flows to guide image-based flows between rendered frames, enhancing temporal consistency and mitigating motion blur in high-motion regions.

- We construct motion-blurred ZJU-MoCap-Blur and MMHPSD-Blur benchmarks and conduct extensive experiments, showing that E-Sem3DGS significantly outperforms strong baselines and state-of-the-art methods on both human and full-frame reconstruction in blurry scenes.

2. Related Work

2.1. Neural Human Rendering

Early photorealistic rendering and animation utilized complex multi-camera setups [22] and manual rigging of human body meshes [23,24]. Subsequent statistical body-shape models [25,26,27,28,29] facilitated the representation of diverse body shapes yet lacked fine details such as clothing, hair, and accessories. Neural Radiance Fields (NeRFs) [5] have transformed 3D reconstruction by modeling geometry and appearance for view synthesis from multi-view images without extensive setup. Originally developed for static scenes [30,31], NeRF has been adapted for dynamic human rendering by incorporating body encodings [32,33,34] or by learning a canonical NeRF representation and transforming camera rays from the observation space to the canonical space to retrieve radiance and density values from the canonical NeRF [7,35,36]. However, most NeRF-based methods, reliant on large MLPs, suffer from slow training (hours to days) and rendering (seconds) [9,12]. Optimized schemes, such as learning functions at grid points [37,38], hash encoding [31], or the elimination of learnable components [39,40], have been developed. Three-Dimensional Gaussian Splatting (3DGS) [10] offers an efficient alternative to NeRF, modeling scenes as sets of 3D Gaussians splatted onto the image plane via alpha blending. The field of 3D Gaussian-based avatar reconstruction [9,12,41,42] has rapidly advanced. However, most existing methods primarily focus on reconstructing human avatars in isolation, often neglecting the concurrent reconstruction of static background scenes [9,41,42]. HUGS [12] represents a state-of-the-art approach by maintaining separate Gaussian sets for the human and scene. However, this hard separation relies heavily on precomputed 2D foreground masks [14]. In motion-blurred scenarios, mask prediction becomes unreliable, leading to severe error propagation where human parts are misclassified as background and fail to deform. In contrast, E-Sem3DGS employs a unified representation with soft, learnable semantic attributes. We use semantics as a differentiable gating mechanism to functionally control the deformation pathway. This enables a self-correcting convergence behavior: even if points are initially misclassified, flow-based supervision can update their semantic attributes, dynamically “activating” their deformation capabilities. This unified representation simplifies optimization complexity by maintaining a single Gaussian set while ensuring geometric robustness against mask failures.

2.2. Deblurring Neural Rendering

Several methods [43,44,45] have been developed to adapt NeRF and 3DGS for the generation of sharp outputs from blurry inputs. Deblur-NeRF [43] pioneered deblurring in NeRF for blurry inputs during training, using a compact MLP to model spatially dependent blur kernels. Subsequent advancements leverage physical priors from the blurring process [44] and jointly optimize Gaussian parameters with the camera trajectory to enhance rendering quality for dynamic human reconstruction [45].

With the development of event cameras, some works [16,46,47] have optimized NeRF and 3DGS solely using event streams. Recent works also integrate events and images for 3D reconstruction to mitigate blur from extreme camera shake [48,49,50]. DE-NeRF [51] reconstructs deformable neural radiance fields for fast-moving objects using event streams and sparse sharp RGB frames. EvaGaussians [49] integrates event streams to explicitly model motion blur and guide deblurring reconstruction, jointly optimizing 3DGS parameters and camera motion for high-fidelity novel view synthesis. EaDeblur-GS [50] utilizes an Adaptive Deviation Estimator (ADE) network and novel loss functions to achieve sharp 3D reconstructions. However, regarding the utilization of event streams, these methods [49,50,51] predominantly rely on event generation models, minimizing the discrepancy between captured events and those simulated based on brightness changes. While rigorous, this strategy requires precise calibration of sensor parameters (e.g., contrast thresholds), limiting generalization across different devices and lighting conditions. Notably, ExFMan [52] introduces a neural rendering framework that reconstructs high-quality dynamic humans from monocular blurry videos by leveraging event camera data and velocity-aware losses to mitigate motion blur. However, this approach explicitly estimates a 3D velocity field from the deformation network’s derivatives. It relies heavily on the accuracy of this internal velocity estimation, which is prone to failure under complex, non-linear articulated motions, and its event supervision remains sensitive to sensor calibration. In contrast, our E-Sem3DGS adopts event-based optical flow as a robust intermediate representation. By supervising the motion field directly with external flow cues, we abstract away sensor-specific signal variations and provide explicit geometric guidance.

2.3. Video Deblurring Methods

Motion blur in monocular videos presents significant challenges for 3D human reconstruction due to its ill-posed nature [19,21]. Traditional intensity-based (RGB or grayscale) deblurring methods [53,54,55] estimate 2D blur kernels or leverage supervised deep learning with paired blurry–sharp datasets [17,18,56] to recover sharp frames. Event-intensity hybrid methods exploit the high temporal resolution of event cameras [19,21] to complement standard intensity data for motion deblurring. For 3D human reconstruction, these methods can serve as a two-stage baseline, first deblurring images, then reconstructing the 3D human model. However, their limited generalization across varied scenes and lack of human-specific priors often lead to failures in handling complex motion [16,52]. In contrast, our framework integrates event-based optical flow supervision to align rendered image flows and event-derived flows, emphasizing high-motion regions to provide explicit geometric cues, improving reconstruction accuracy.

3. Preliminaries

3.1. 3DGS-Avatar

3DGS-Avatar [9] introduces an efficient method for reconstructing animatable human avatars from monocular videos using 3D Gaussian Splatting (3DGS). It initializes a collection of 3D Gaussians () in a canonical space derived from an SMPL mesh [26] and transforms them to the observation space via non-rigid and rigid deformations. The non-rigid deformation module is expressed as follows:

where is the deformation network that maps the canonical position () and a latent code ( [57]), which encodes SMPL pose and shape parameters, to the Gaussian’s position, scale, and rotation offsets, as well as a feature vector:

resulting in deformed Gaussians with , , and . The rigid deformation uses Linear Blend Skinning (LBS) [26]:

where a skinning MLP () predicts weights at position and are bone transformations. A neural color model is applied to generate view-dependent appearance from canonicalized viewing directions, a per Gaussian color feature, a pose-dependent feature, and a per frame latent code, while as-isometric-as-possible constraints on Gaussian positions and covariances enhance generalization to unseen poses.

3.2. Event-Based Optical Flow

Integrating event information into neural rendering techniques [16,46,47] commonly involves directly utilizing raw event data, often by deriving reconstruction losses from event generation models. However, this direct approach can be sensitive to diverse event sensor characteristics and varying acquisition environments. Inspired by EventHPE [13] and its ability to robustly extract motion information, we adopt an alternative strategy, inferring explicit geometric clues in the form of event optical flow. Specifically, EventHPE uses an unsupervised encoder–decoder Convolutional Neural Network (CNN)—namely, FlowNet [13,58]—to estimate optical flow from event frames. Its loss function combines a photometric term (warped image pixel differences) and a smoothness term (penalizing flow discrepancies).

In our method, this event-derived optical flow plays a crucial role. We apply this supervision primarily to regions exhibiting high motion intensity, i.e., areas where the event optical flow magnitude is significant. This selective application allows us to focus our deblurring efforts precisely where motion blur is most severe and where event data provides the most reliable motion estimates. By integrating these precise geometric constraints, our method significantly enhances the reconstruction of dynamic humans from blurry monocular videos, effectively mitigating severe motion blur.

4. Method

4.1. Overview

This section introduces the E-Sem3DGS framework, which reconstructs dynamic human avatars and static scenes from monocular, jointly calibrated blurry videos and event data, along with provided SMPL parameters [26]. Our approach leverages event-aided semantic 3DGS to effectively mitigate motion blur and bypasses the need for human foreground masks by semantically distinguishing human and scene points. The method augments 3D Gaussians with a semantic logit vector initialized from the SMPL mesh for human Gaussians and random cubic sampling for scene Gaussians, with human Gaussians deformed via rigid and non-rigid transformations while scene Gaussians remain static. Deformed human Gaussians and static scene Gaussians are rendered through a single rasterization process supervised by a frozen flow network using event-based optical flow to address motion blur, as depicted in Figure 2. Semantic logits enhance human–scene segmentation (Section 4.2), a scene-color MLP ensures robust static-region appearance (Section 4.3), and event-based flow supervision mitigates dynamic blur (Section 4.4). Joint optimization of semantic Gaussians, human deformation, skinning, event-based flow, and color networks is conducted to reconstruct humans and scenes from blurry video inputs and event data (Section 4.5).

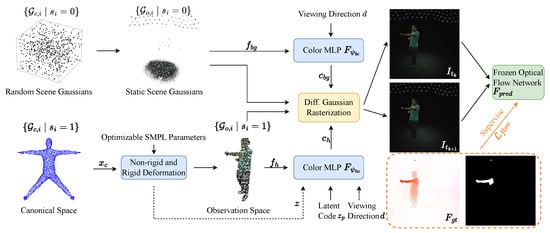

Figure 2.

Overview of our approach. For a fixed hybrid sensor (e.g., Dynamic and Active-pixel Vision Sensor (DAVIS) camera [59]) capturing aligned event and intensity frame data, given a camera pose and human pose, E-Sem3DGS creates an avatar and renders human and scene images for specified human poses. The method represents human and scene regions with a set of Gaussians endowed with semantic attributes, where human Gaussians undergo deformation, while scene Gaussians remain static. Leveraging the event camera’s high temporal resolution, event-based optical flow supervision effectively mitigates dynamic blur from high-speed human motion during rendering.

4.2. Semantic 3D Gaussians

We introduce semantic attributes to the 3D Gaussian primitives to enable explicit segmentation of dynamic human bodies and static scenes. Each 3D Gaussian () in the canonical space, defined by its mean , scaling factor (), rotation quaternion (), opacity (), and color features (), is augmented with a semantic logit vector (, where represents the background (0) and human (1) classes).

The semantic logits () are initialized based on input labels. Given a point cloud with N points and corresponding labels (), we initialize the logits as follows:

where is a scalar hyperparameter that controls the initial class confidence, ensuring high probability for the correct class. The semantic probabilities are computed via a softmax activation:

where is a learnable parameter optimized during training. The predicted class for each Gaussian is obtained as

and segmentation masks are derived as for the human class and for the background class, identifying points belonging to each class. Semantic attributes are integrated into the 3DGS pipeline, where human points are deformed from canonical to observation space using rigid and non-rigid transformations, then rasterized alongside background points for unified rendering of both parts.

4.3. Scene-Color MLP

We introduce a scene-color MLP to model static background appearance in monocular video to achieve higher expressiveness, flexibility, and robustness to noise or blur data compared to traditional spherical harmonics (SH) methods. For scene Gaussians (), the MLP takes the feature vector () and the SH basis () of the viewing direction () as input, predicting the appearance colors via

where is an MLP with one 64-dimension hidden layer and is the canonicalized direction derived from the relative position of the 3D Gaussian and the camera center. This approach enables fine-grained, non-linear color modeling and leverages end-to-end optimization for improved reconstruction quality while ensuring robust color prediction for static regions.

4.4. Event-Flow Supervision

To enhance the reconstruction of motion-blurred human bodies in monocular videos, we incorporate event-based optical flow [13] as a supervisory signal, leveraging the high temporal resolution of event cameras. We employ a lightweight, frozen flow network—either SPyNet [60] or MaskFlowNetS [61]—to predict optical flow () from pairs of rendered frames (, where denotes the current time step in the frame sequence ()). The flow network, denoted as , is defined as follows:

where the frames are resized to a resolution scale of via bilinear interpolation to reduce computational cost, i.e.,

and is the bilinear resize operator.

The predicted flow () is supervised by event-based optical flow to enhance the rendering quality of fast-moving human body parts in motion-blurred monocular videos. The flow loss is defined as follows:

where is a mask selecting pixels with significant flow magnitude, ensures numerical stability, and denotes the L1 norm of the vector difference. This masked L1 loss focuses supervision on regions with substantial motion, enhancing robustness to motion blur.

This supervision strategy is grounded in the premise that, despite originating from different modalities, both flows represent the same underlying physical velocity field. Consequently, minimizing their discrepancy enforces 2D motion consistency on the image plane. While this acts as geometric guidance for the projected deformation rather than strict 3D consistency under complex self-occlusions, it effectively provides structural constraints where intensity data is ambiguous. Moreover, the employment of flow networks acts as a robustness filter, abstracting away sensor noise and contrast threshold sensitivities to focus optimization on the correction of geometric deviations. Note that our framework is modular: while we employ specific pre-trained models in our experiments, the flow estimation module is compatible with general-purpose event optical flow networks (e.g., E-RAFT [62]), ensuring broad applicability across different datasets.

4.5. Optimization

We jointly optimize the 3D semantic Gaussians (), comprising canonical human and scene Gaussians, along with human deformation, skinning, color networks for human modeling [9], and the scene-color MLP () (Section 4.3), using event-based optical flow supervision with a frozen flow network to reconstruct dynamic human avatars and static scenes from blurry monocular videos and event data. The optimization is driven by a loss function combining (1) L1 loss for alignment of rendered and ground-truth images, (2) event-based flow loss (Section 4.4) for motion supervision, (3) perceptual loss [9] to provide robustness to local misalignments, (4) skinning loss based on SMPL priors, and (5) as-isometric-as-possible regularization losses for human Gaussians’ position and coherence. Note that the integration of event cues is achieved via gradient-based supervision rather than direct feature concatenation. The flow-loss gradients specifically guide the deformation in high-motion regions, effectively fusing temporal motion cues with the 3D geometry during backpropagation. Furthermore, structural integrity is maintained without external depth priors by employing skinning loss and as-isometric-as-possible regularization to penalize unphysical distortions.

5. Experiments

5.1. Datasets

ZJU-MoCap-Blur. Following 3DGS-Avatar [9], we select six sequences (377, 386, 387, 392, 393, and 394) from the ZJU-MoCap dataset [6] and generate motion-blurred images using Super-SloMo [63] to simulate realistic monocular video conditions. We select view “1” to focus on reconstructing both humans and scenes from a fixed viewpoint, aiming to minimize hardware costs and isolate high-speed human motion from camera ego-motion. Since this dataset lacks real event data, we employ a simulation strategy for event-based supervision. Specifically, the target optical flow is inferred from the sharp ground-truth images using the pretrained RAFT model [64]. This serves as a high-fidelity proxy for ideal event optical flow, which would naturally be free from motion-blur artifacts. To ensure training and test sets evenly sample the sequence and minimize train–test discrepancies arising from a single 360° human rotation, we apply an interleaved split. Specifically, we partition the sequence into consecutive blocks of 10 frames each, assigning the first 7 frames of every block to the training set and the subsequent 3 frames to the test set. This strategy ensures both comprehensive sampling across the entire sequence and a consistent train–test ratio for optical flow-based training. Human masks derived from RobustVideoMatting [15] enable comparisons with methods requiring human masks, with rendering quality assessed via PSNR, SSIM, and LPIPS metrics for both full images and human regions defined by bounding boxes.

MMHPSD-Blur. The MMHPSD dataset [13], captured using a single fixed CeleX-V event camera, provides event–grayscale image pairs, SMPL ground-truth parameters, and event-based optical flow. Specifically, the provided optical flow is inferred from event frames using the unsupervised FlowNet framework proposed in EventHPE [13,58]. Technically, this inference aggregates asynchronous events into multi-channel frames to explicitly encode polarity and high-temporal-resolution information into the motion estimation. Originally designed for 3D human pose estimation, the dataset is now extended for 3D reconstruction and rendering of dynamic human avatars in this work. To evaluate performance across diverse motion speeds and subjects, six sequences (s1g2t3, s5g1t1, s7g1t1, s10g3t4, s14g2t2, and s15g3t4) are selected. Motion-blurred images are generated using Super-SloMo [63] to replicate the visual effects of motion blur in monocular videos, with human masks derived via RobustVideoMatting [15] to enable comparisons with methods reliant on human segmentation.

5.2. Comparison with Baselines

Baseline methods include 3DGS-Avatar [9], which is a state-of-the-art method specifically designed for animatable human avatar rendering using 3D Gaussian Splatting, and HUGS [12], a prominent approach that simultaneously reconstructs and renders both animatable humans and static scenes within the 3DGS framework. For a fair comparison, the HUGS implementation adopts the official codebase [12], with scene point-cloud initialization modified from the original COLMAP-based approach [65,66] to random cubic sampling, denoted as HUGS†. To extend 3DGS-Avatar for simultaneous human and scene rendering, scalar semantic attributes are incorporated, with initialization combining the human body-mesh sampling and random cube sampling, setting initial semantic values to , referred to as 3DGS-Avatar*. Additionally, 3DGS-Avatar* and HUGS† are cascaded with intensity-based deblurring methods (MPR [17] and NAFNet [18]) and Intensity+Event-based deblurring methods (EFNet [19] and D2Net [21]) for comparison. Deblurring results are input to 3DGS-Avatar* and HUGS† for 3D reconstruction, denoted as Deblurring method + Reconstruction method.

First, we analyze the performance on the ZJU-MoCap-Blur dataset from multiple perspectives. Quantitative Evaluation on Full-Frame Rendering: Table 1 evaluates PSNR, SSIM, and LPIPS metrics across full images. 3DGS-Avatar, designed for human rendering, exhibits the lowest performance across sequences. Incorporating scalar semantic attributes in 3DGS-Avatar (3DGS-Avatar*) enables simultaneous human and scene rendering, boosting the PSNR from to , with an improvement of . Cascading with intensity deblurring enhances HUGS† accuracy, whereas 3DGS-Avatar* remains unchanged, which is attributed to the limited discriminative power of scalar semantic attributes initialized at 0.5, constraining its responsiveness to deblurred inputs. Furthermore, D2Net with Intensity + Event methods further improves deblurring for HUGS†, while EFNet, trained on datasets with significant domain gaps relative to the current dataset, shows improvement over blurred inputs but underperforms compared to intensity-only deblurring. Our proposed method, integrating one-hot semantic attributes and event flow supervision, achieves the highest accuracy across all sequences. Quantitative Evaluation within Human Bounding Boxes: Table 2 reports average rendering metrics within human bounding boxes; the ZJU-MoCap-Blur dataset exhibits minimal background detail within these regions. 3DGS-Avatar*, with basic human–scene discrimination, increases the PSNR from to compared to 3DGS-Avatar, with the PSNR and SSIM trailing only the proposed method when cascaded with the Intensity + Event (D2Net) deblurring method. The proposed method yields the best PSNR, SSIM, and LPIPS values within human bounding boxes. Qualitative Analysis: In Figure 3, each column illustrates the input blurred image, D2Net + HUGS†, D2Net + 3DGS-Avatar*, the proposed method, and the reference image. Relative to the original blurry frames (with motion trailing highlighted in the first column’s yellow-circled zoomed inset), both the cascaded methods and the proposed method mitigate this artifact, demonstrating the benefit of event information in reducing dynamic blur. Compared to D2Net + HUGS† and D2Net + 3DGS-Avatar*, the proposed method delivers sharper arm and hand contours, underscoring the advantage of event flow supervision over direct event deblurring cascades. Additionally, in the reconstruction of the static scene, HUGS†’s optimization proves challenging with random background initialization, and 3DGS-Avatar*’s scalar semantic attributes yield inadequate results (e.g., top regions), whereas the proposed method achieves a more realistic reconstruction.

Table 1.

Quantitative results on the ZJU-MoCap-Blur dataset.

Table 2.

Quantitative results within human bounding boxes on ZJU-MoCap-Blur.

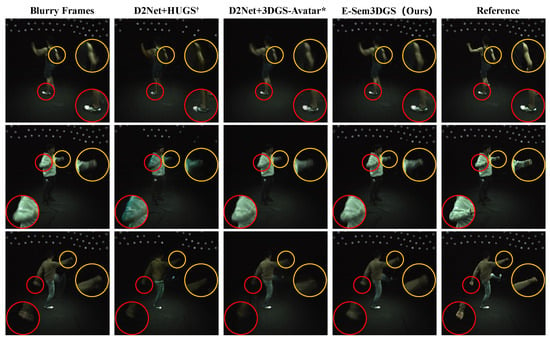

Figure 3.

Qualitative analysis on the ZJU-MoCap-Blur dataset. Our E-Sem3DGS method, utilizing event information, significantly mitigates motion blur in dynamic regions (e.g., reduced arm-motion ghosting in the yellow-circled zoomed area and clearer shoe details in the red-circled area of the first row) compared to original blurred frames. Against tandem RGB-event deblurring approaches (D2Net + HUGS† [12,21] and D2Net + 3DGS-Avatar* [9,21]), E-Sem3DGS demonstrates superior reconstruction fidelity in static background details, particularly in upper image regions.

Then, we extend the evaluation to the performance on the MMHPSD-Blur dataset. Table 3 records per sequence results, with the proposed method attaining the highest PSNR and SSIM values, alongside the lowest LPIPS across most sequences. Unlike ZJU-MoCap-Blur, HUGS† ranks second, with performance degrading when cascaded with deblurring methods due to the dataset’s complex background. Switching scene initialization from COLMAP to random sampling increases optimization difficulty for the two Gaussian groups (human + scene), where HUGS†’s reconstruction capability outweighs the impact of human blur severity. Table 4 presents quantitative results within human bounding boxes, with the proposed method achieving the best metric performance. Figure 4 displays the blurred image, HUGS†, 3DGS-Avatar*, the proposed method, and the reference image. As noted, HUGS† (second column) maintains the human foreground and static scene background via two Gaussian groups, but random background initialization, unlike COLMAP’s facilitative structure, heightens optimization challenges, impairing human rendering quality. 3DGS-Avatar* outperforms HUGS† for human rendering but misclassifies much of the background as human due to limited scalar semantic attribute capacity, causing co-deformation. The proposed method accurately reconstructs both humans and scenes, with event information supervision yielding clearer contours compared to the original blurred image.

Table 3.

Quantitative results on the MMHPSD-Blur dataset.

Table 4.

Quantitative results within human bounding boxes on MMHPSD-Blur.

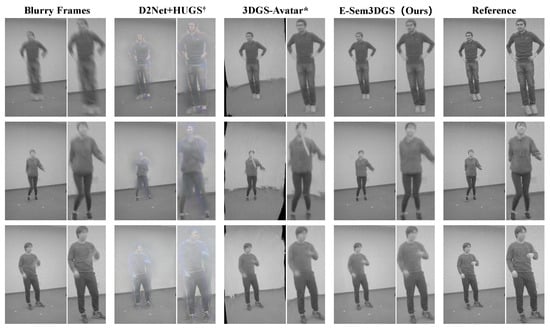

Figure 4.

Qualitative evaluation on the MMHPSD-Blur dataset. For a fair comparison, HUGS [12] uses random background initialization instead of COLMAP [65,66], causing foreground and background points to interfere with human point optimization. 3DGS-Avatar* [9], with an initial semantic attribute of , misclassifies background points as human, degrading background rendering. Our E-Sem3DGS accurately reconstructs static scenes and leverages event information to enhance human body clarity compared to blurred images (see first row, columns one and four).

5.3. Ablation Study

Ablation experiments are conducted on Subject 386 of the ZJU-MoCap-Blur dataset unless otherwise specified. As shown in Table 5, comparisons are made using only blurred image inputs, evaluating the effect of semantic attributes. 3DGS-Avatar [9], focused on human rendering, achieves a PSNR of when tasked with simultaneous human and scene reconstruction due to its lack of scene modeling capability. Adding scalar semantic attributes enables differentiation of human and scene Gaussians, raising the PSNR to . Upgrading to one-hot semantic attributes further enhances this distinction, yielding the best rendering metrics, with a PSNR of .

Table 5.

Ablation study on semantic attributes in 3D Gaussians.

Next, the integration of event information is ablated, as detailed in Table 6. Building on the blurred image baseline (last row of Table 5), the E2VID approach [67,68] converts events to grayscale images and blends them with blurry intensity images using a foreground human mask. This process disrupts image continuity and temporal consistency, lowering the PSNR from to . Applying D2Net [21] for Intensity+Event deblurring modestly improves rendering, though the cascaded deblurring–reconstruction approach is limited by the deblurring model’s training scenarios. Incorporating event loss supervision [16,69], which computes loss from the log-intensity differences between pairs of rendered images compared to real events, effectively leverages event data to address motion blur, significantly enhancing rendering performance. While event loss supervision is effective, it often lacks generalizability across different event cameras due to varying sensor parameters (e.g., contrast thresholds) and inconsistent data formats (e.g., the polarity-free CeleX-V sensor in MMHPSD [13]). In contrast, optical flow [60,64,70,71] provides a more robust and universal representation of motion. Its effectiveness as an intermediate modality is well documented across a range of event-based downstream tasks, including Visual–Inertial Odometry (VIO) [72,73], keypoint detection [74], and human pose estimation [13]. By leveraging supervision from event-based optical flow to deblurring, our method achieves the highest evaluation metrics, improving the baseline with a gain of in PSNR ( vs. ), a gain of in SSIM ( vs. ), and a reduction of in LPIPS ( vs. ).

Table 6.

Ablation study on different event utilization methods.

Since the introduction of 3D Gaussian Splatting (3DGS) [10], the initialization problem has been a critical focus for researchers. In Table 7, we explore the impact of different initialization strategies on the reconstruction performance of our proposed method. When both human and scene points are randomly initialized, successful reconstruction is achieved. This is because our method leverages SMPL model parameters as a prior to optimize all learnable parameters, including 3D Gaussians, the deformation network, the skinning network, and the color network. Nonetheless, initializing only the human points based on the SMPL model while leaving scene points uninitialized leads to inferior reconstruction quality compared to random initialization of both human and scene points, as 3DGS relies on initial points for splitting and cloning. SMPL-based human initialization with random scene points improves performance over dual random initialization, with gray initialization of scene points to distinguish white human points, achieving the best rendering quality.

Table 7.

Ablation study on 3DGS initialization strategies.

In our method, we employ semantic differentiation between human and scene Gaussians. Table 8 illustrates the impact of semantic initialization on reconstruction performance. Assigning an initial semantic value of to all points (with human Gaussians corresponding to a semantic value of 1 and scene Gaussians corresponding to a semantic value of 0) enables successful reconstruction. However, this initialization significantly increases the learning complexity for the Gaussian model and associated networks. In contrast, initializing points on and inside the SMPL model surface with a semantic value of 1 and all other points with a semantic value of 0 results in superior rendering performance. Therefore, in our method, we initialize human Gaussians based on the SMPL model, randomly initialize scene Gaussians using a cubic volume, and assign a semantic value of 1 to Gaussians on and inside the SMPL model surface, with all other Gaussians assigned a value of 0. The performance gap suggests that while random initialization allows for reasonable convergence, the lack of explicit geometric guidance leads the optimization into suboptimal local minima with ambiguous semantic separation. In contrast, our SMPL-based initialization acts as a strong geometric prior, placing the system within a favorable basin of attraction. Furthermore, the stability during optimization is maintained by the synergy of loss constraints: the masked flow loss prevents dynamic parts from drifting into the static background, while the photometric intensity loss anchors the background, preventing it from drifting into the dynamic class. This mechanism effectively focuses the optimization on boundary refinement, though it remains sensitive to gross tracking errors where the prior is spatially decoupled from the image content.

Table 8.

Comparison of 3DGS semantic initialization strategies.

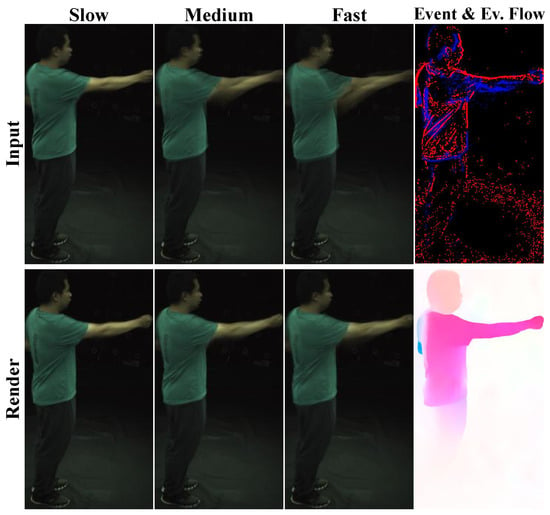

Figure 5 illustrates the rendering performance of our method under different blur levels (slow, medium, and fast), with the first row displaying input blurry images and the second row showing the corresponding rendering results. As the speed of the arm motion increases, the blur level in the arm region intensifies accordingly. Across varying blur levels, our method, by incorporating constraints from event optical flow, consistently produces arm contours that are sharper than those in the input blurry images. However, as the blur level increases, the arm edges in the rendering results become progressively more blurred, aligning with the changes observed in the input images.

Figure 5.

Rendering performance under different blur levels. This figure illustrates our method’s rendering results (bottom row) from blurry input images (top row) across slow, medium, and fast blur levels. Our method consistently generates sharper arm contours compared to the input, demonstrating effective blur mitigation across different motion speeds. By leveraging event-derived optical flow rather than raw event streams, our design provides more stable and sensor-agnostic motion cues, enabling robust deblurring across diverse acquisition conditions. The last column displays the raw event stream and the derived event-based optical flow, where color hue indicates motion direction and saturation indicates flow magnitude.

To align with sustainable computing standards, we report our method’s efficiency. On a single NVIDIA RTX 3090 Ti GPU, training takes ∼35 min for a ∼400-frame sequence (256 × 256). Furthermore, inference operates at ∼19 FPS, demonstrating a favorable balance between high-fidelity reconstruction and computational cost.

6. Limitations and Future Work

Despite the effectiveness of our method in reconstructing both human bodies and scenes from a monocular hybrid sensor camera, several limitations remain. First, while optical flow supervision enhances reconstruction, it also introduces additional training complexity due to the computational overhead. However, during inference, rendering relies solely on the optimized 3D Gaussians and associated networks. Additionally, regarding hardware, the framework relies on hybrid sensors providing aligned intensity frames to ensure high-fidelity static scene reconstruction. Second, our evaluations are limited to indoor scenes due to the scarcity of real-world event camera datasets, leaving outdoor robustness under dynamic lighting and complex backgrounds unexplored. It is worth noting that despite these data constraints, our framework is theoretically extensible to broader settings. For varying camera trajectories, the optical flow supervision remains mathematically valid, as it naturally captures combined ego-motion and object motion. For multi-person scenes, our unified semantic representation can be extended by assigning distinct semantic identifiers to different subjects. Furthermore, the use of optical flow as an intermediate modality acts as a spatio-temporal filter, offering inherent robustness against sensor noise (e.g., hot pixels) and background clutter typical of unstructured environments. Furthermore, the limited pose space in training data may lead to suboptimal performance on unseen poses, especially for out-of-distribution motions.

To address these challenges, future work could explore the following directions. First, our method has demonstrated robust performance across diverse indoor and controlled lighting conditions. For a more comprehensive evaluation of its real-world applicability and robustness, future work could focus on testing its performance in more unconstrained settings, including complex outdoor environments characterized by dynamic natural light and intricate shadow variations, as well as scenarios involving moving cameras and multi-person interactions. Second, integrating generative models, such as diffusion-based approaches [75,76], could help augment the pose space and improve the model’s ability to generalize to previously unseen poses, improving performance in real-world scenarios. Finally, leveraging large-scale human motion datasets, such as AMASS [77], combined with zero-shot learning techniques, could enable the model to generalize across new identities, capturing a broader range of body shapes and appearances.

7. Conclusions

We presented E-Sem3DGS, a semantically augmented 3D Gaussian Splatting framework for joint reconstruction of animatable humans and static scenes from monocular intensity frames and collocated event streams. By maintaining a single set of 3D Gaussians endowed with learnable semantic attributes, our method explicitly disentangles dynamic human content from static backgrounds within a unified representation: human Gaussians are deformed through articulated networks, while scene Gaussians remain static. To cope with severe motion blur, we derive optical flow from events and use it to supervise image-based optical flow between rendered views, enforcing temporal coherence in high-motion regions and sharpening both geometry and appearance. Extensive experiments on synthetic and real-world motion-blurred datasets demonstrate that E-Sem3DGS consistently outperforms strong baselines and state-of-the-art methods on both human and full-frame reconstruction, achieving superior PSNR, SSIM, and LPIPS metrics. In future work, we plan to extend our framework to more complex interactions and multi-person scenarios and to further explore training on large-scale real event-camera datasets.

Author Contributions

Conceptualization, H.S.; data curation, J.Z.; methodology, X.Y. and H.S.; supervision, K.Y. and K.W.; validation, X.Y., J.Z. and S.G.; visualization, X.Y.; writing—original draft, X.Y. and H.S.; writing—review and editing, K.Y. and K.W.; project administration, K.Y. and K.W.; funding acquisition, K.Y. and K.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Zhejiang Province (Grant No. LZ24F050003); the National Natural Science Foundation of China (Grant Nos. 12174341 and 62473139); the Hunan Provincial Research and Development Project (Grant 2025QK3019); the Open Research Project of the State Key Laboratory of Industrial Control Technology, China (Grant No. ICT2025B20); and the State Key Laboratory of Autonomous Intelligent Unmanned Systems (opening project number ZZKF2025-2-10).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available in Refs. [6,13].

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, L.; Peng, S.; Zhou, X. Towards efficient and photorealistic 3D human reconstruction: A brief survey. Vis. Inform. 2021, 5, 11–19. [Google Scholar] [CrossRef]

- Kyrlitsias, C.; Michael-Grigoriou, D. Social interaction with agents and avatars in immersive virtual environments: A survey. Front. Virtual Real. 2022, 2, 786665. [Google Scholar] [CrossRef]

- Morgenstern, W.; Bagdasarian, M.T.; Hilsmann, A.; Eisert, P. Animatable Virtual Humans: Learning Pose-Dependent Human Representations in UV Space for Interactive Performance Synthesis. IEEE Trans. Vis. Comput. Graph. 2024, 30, 2644–2650. [Google Scholar] [CrossRef]

- Ren, Y.; Zhao, C.; He, Y.; Cong, P.; Liang, H.; Yu, J.; Xu, L.; Ma, Y. LiDAR-aid Inertial Poser: Large-scale Human Motion Capture by Sparse Inertial and LiDAR Sensors. IEEE Trans. Vis. Comput. Graph. 2023, 29, 2337–2347. [Google Scholar] [CrossRef]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2022, 65, 99–106. [Google Scholar] [CrossRef]

- Peng, S.; Zhang, Y.; Xu, Y.; Wang, Q.; Shuai, Q.; Bao, H.; Zhou, X. Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9050–9059. [Google Scholar]

- Jiang, T.; Chen, X.; Song, J.; Hilliges, O. InstantAvatar: Learning Avatars from Monocular Video in 60 Seconds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 16922–16932. [Google Scholar]

- Yu, Z.; Cheng, W.; Liu, X.; Wu, W.; Lin, K.Y. MonoHuman: Animatable Human Neural Field from Monocular Video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 16943–16953. [Google Scholar]

- Qian, Z.; Wang, S.; Mihajlovic, M.; Geiger, A.; Tang, S. 3DGS-Avatar: Animatable Avatars via Deformable 3D Gaussian Splatting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 5020–5030. [Google Scholar]

- Kerbl, B.; Kopanas, G.; Leimkuehler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. (TOG) 2023, 42, 1–14. [Google Scholar] [CrossRef]

- Pang, H.; Zhu, H.; Kortylewski, A.; Theobalt, C.; Habermann, M. ASH: Animatable Gaussian Splats for Efficient and Photoreal Human Rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 1165–1175. [Google Scholar]

- Kocabas, M.; Chang, J.H.R.; Gabriel, J.; Tuzel, O.; Ranjan, A. HUGS: Human Gaussian Splats. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 505–515. [Google Scholar]

- Zou, S.; Guo, C.; Zuo, X.; Wang, S.; Wang, P.; Hu, X.; Chen, S.; Gong, M.; Cheng, L. EventHPE: Event-based 3D Human Pose and Shape Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10976–10985. [Google Scholar]

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3992–4003. [Google Scholar]

- Lin, S.; Yang, L.; Saleemi, I.; Sengupta, S. Robust high-resolution video matting with temporal guidance. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 3132–3141. [Google Scholar]

- Rudnev, V.; Elgharib, M.; Theobalt, C.; Golyanik, V. EventNeRF: Neural Radiance Fields from a Single Colour Event Camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 4992–5002. [Google Scholar]

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.; Shao, L. Multi-Stage Progressive Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14816–14826. [Google Scholar]

- Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the European Conference on Computer Vision(ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 17–33. [Google Scholar]

- Sun, L.; Sakaridis, C.; Liang, J.; Jiang, Q.; Yang, K.; Sun, P.; Ye, Y.; Wang, K.; Gool, L.V. Event-Based Fusion for Motion Deblurring with Cross-modal Attention. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 412–428. [Google Scholar]

- Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 154–180. [Google Scholar] [CrossRef]

- Shang, W.; Ren, D.; Zou, D.; Ren, J.S.; Luo, P.; Zuo, W. Bringing Events Into Video Deblurring with Non-Consecutively Blurry Frames. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4511–4520. [Google Scholar]

- Debevec, P. The light stages and their applications to photoreal digital actors. In Proceedings of the Computer Graphics and Interactive Techniques-Asia(SIGGRAPH Asia), Singapore, Singapore, 28 November–1 December 2012; pp. 1–6. [Google Scholar]

- Alexander, O.; Rogers, M.; Lambeth, W.; Chiang, J.; Ma, W.; Wang, C. The digital emily project: Achieving a photorealistic digital actor. IEEE Comput. Graph. Appl. 2010, 30, 20–31. [Google Scholar] [CrossRef] [PubMed]

- Alexander, O.; Fyffe, G.; Busch, J.; Yu, X.; Ichikari, R.; Jones, A.; Debevec, P.; Jimenez, J.; Danvoye, E.; Antionazzi, B.; et al. Digital ira: Creating a real-time photoreal digital actor. In Proceedings of the ACM SIGGRAPH 2013 Posters, Anaheim, CA, USA, 21–25 July 2013; p. 1. [Google Scholar]

- Anguelov, D.; Srinivasan, P.; Koller, D.; Thrun, S.; Rodgers, J.; Davis, J. SCAPE: Shape completion and animation of people. ACM Trans. Graph. (TOG) 2005, 24, 408–416. [Google Scholar] [CrossRef]

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A skinned multi-person linear model. ACM Trans. Graph. (TOG) 2015, 34, 1–16. [Google Scholar] [CrossRef]

- Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.A.; Tzionas, D.; Black, M.J. Expressive Body Capture: 3D Hands, Face, and Body From a Single Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10967–10977. [Google Scholar]

- Osman, A.A.A.; Bolkart, T.; Black, M.J. STAR: Sparse Trained Articulated Human Body Regressor. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 598–613. [Google Scholar]

- Osman, A.A.A.; Bolkart, T.; Tzionas, D.; Black, M.J. SUPR: A sparse unified part-based human representation. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 568–585. [Google Scholar]

- Barron, J.T.; Mildenhall, B.; Verbin, D.; Srinivasan, P.P.; Hedman, P. Mip-NeRF 360: Unbounded Anti-Aliased Neural Radiance Fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5460–5469. [Google Scholar]

- Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. (TOG) 2022, 41, 1–15. [Google Scholar] [CrossRef]

- Noguchi, A.; Sun, X.; Lin, S.; Harada, T. Neural articulated radiance field. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 5742–5752. [Google Scholar]

- Su, S.; Yu, F.; Zollhöfer, M.; Rhodin, H. A-NeRF: Articulated Neural Radiance Fields for Learning Human Shape, Appearance, and Pose. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; pp. 12278–12291. [Google Scholar]

- Xu, H.; Alldieck, T.; Sminchisescu, C. H-NeRF: Neural Radiance Fields for Rendering and Temporal Reconstruction of Humans in Motion. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; pp. 14955–14966. [Google Scholar]

- Guo, C.; Jiang, T.; Chen, X.; Song, J.; Hilliges, O. Vid2Avatar: 3D Avatar Reconstruction from Videos in the Wild via Self-supervised Scene Decomposition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12858–12868. [Google Scholar]

- Peng, S.; Dong, J.; Wang, Q.; Zhang, S.; Shuai, Q.; Zhou, X.; Bao, H. Animatable neural radiance fields for modeling dynamic human bodies. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 14294–14303. [Google Scholar]

- Chen, A.; Xu, Z.; Geiger, A.; Yu, J.; Su, H. TensoRF: Tensorial Radiance Fields. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 333–350. [Google Scholar]

- Reiser, C.; Peng, S.; Liao, Y.; Geiger, A. KiloNeRF: Speeding up Neural Radiance Fields with Thousands of Tiny MLPs. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 14315–14325. [Google Scholar]

- Fridovich-Keil, S.; Yu, A.; Tancik, M.; Chen, Q.; Recht, B.; Kanazawa, A. Plenoxels: Radiance Fields without Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5491–5500. [Google Scholar]

- Liu, L.; Gu, J.; Zaw Lin, K.; Chua, T.S.; Theobalt, C. Neural sparse voxel fields. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020; pp. 15651–15663. [Google Scholar]

- Hu, S.; Hu, T.; Liu, Z. GauHuman: Articulated Gaussian Splatting from Monocular Human Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 20418–20431. [Google Scholar]

- Jung, H.; Brasch, N.; Song, J.; Perez-Pellitero, E.; Zhou, Y.; Li, Z.; Navab, N.; Busam, B. Deformable 3D Gaussian Splatting for Animatable Human Avatars. arXiv 2023, arXiv:2312.15059. [Google Scholar] [CrossRef]

- Ma, L.; Li, X.; Liao, J.; Zhang, Q.; Wang, X.; Wang, J.; Sander, P.V. Deblur-NeRF: Neural Radiance Fields from Blurry Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12851–12860. [Google Scholar]

- Lee, D.; Lee, M.; Shin, C.; Lee, S. DP-NeRF: Deblurred Neural Radiance Field with Physical Scene Priorss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12386–12396. [Google Scholar]

- Chen, W.; Liu, L. Deblur-GS: 3D Gaussian Splatting from Camera Motion Blurred Images. Proc. ACM Comput. Graph. Interact. Tech. 2024, 7, 1–15. [Google Scholar] [CrossRef]

- Xiong, T.; Wu, J.; He, B.; Fermuller, C.; Aloimonos, Y.; Huang, H.; Metzler, C.A. Event3DGS: Event-based 3D Gaussian Splatting for High-Speed Robot Egomotion. In Proceedings of the Conference on Robot Learning (CoRL), Munich, Germany, 6–9 November 2024; pp. 4100–4118. [Google Scholar]

- Yin, X.; Shi, H.; Bao, Y.; Bing, Z.; Liao, Y.; Yang, K.; Wang, K. E-3DGS: 3D Gaussian Splatting with Exposure and Motion Events. Appl. Opt. 2025, 64, 3897–3908. [Google Scholar] [CrossRef]

- Qi, Y.; Zhu, L.; Zhang, Y.; Li, J. E2NeRF: Event Enhanced Neural Radiance Fields from Blurry Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 13208–13218. [Google Scholar]

- Yu, W.; Feng, C.; Tang, J.; Yang, J.; Tang, Z.; Jia, X.; Yang, Y.; Yuan, L.; Tian, Y. EvaGaussians: Event Stream Assisted Gaussian Splatting from Blurry Images. arXiv 2024, arXiv:2405.20224. [Google Scholar] [CrossRef]

- Weng, Y.; Shen, Z.; Chen, R.; Wang, Q.; Wang, J. EaDeblur-GS: Event assisted 3D Deblur Reconstruction with Gaussian Splatting. arXiv 2024, arXiv:2407.13520. [Google Scholar]

- Ma, Q.; Paudel, D.P.; Chhatkuli, A.; Van Gool, L. Deformable Neural Radiance Fields using RGB and Event Cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3567–3577. [Google Scholar]

- Chen, K.; Wang, Z.; Wang, L. ExFMan: Rendering 3D Dynamic Humans with Hybrid Monocular Blurry Frames and Events. arXiv 2024, arXiv:2409.14103. [Google Scholar] [CrossRef]

- Cho, S.; Lee, S. Fast motion deblurring. ACM Trans. Graph. (TOG) 2009, 28, 1–8. [Google Scholar] [CrossRef]

- Gupta, A.; Joshi, N.; Zitnick, C.L.; Cohen, M.F.; Curless, B. Single image deblurring using motion density functions. In Proceedings of the European Conference on Computer Vision (ECCV), Heraklion, Greece, 5–11 September 2010; pp. 171–184. [Google Scholar]

- Hyun Kim, T.; Mu Lee, K. Segmentation-free dynamic scene deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 2766–2773. [Google Scholar]

- Chakrabarti, A. A neural approach to blind motion deblurring. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 221–235. [Google Scholar]

- Mihajlovic, M.; Zhang, Y.; Black, M.J.; Tang, S. LEAP: Learning articulated occupancy of people. In Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10461–10471. [Google Scholar]

- Zhu, A.Z.; Yuan, L.; Chaney, K.; Daniilidis, K. EV-FlowNet: Self-supervised optical flow estimation for event-based cameras. arXiv 2018, arXiv:1802.06898. [Google Scholar]

- Brandli, C.; Berner, R.; Yang, M.; Liu, S.C.; Delbruck, T. A 240× 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341. [Google Scholar] [CrossRef]

- Ranjan, A.; Black, M.J. Optical flow estimation using a spatial pyramid network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2720–2729. [Google Scholar]

- Zhao, S.; Sheng, Y.; Dong, Y.; Chang, E.I.; Xu, Y. MaskFlownet: Asymmetric Feature Matching with Learnable Occlusion Mask. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6277–6286. [Google Scholar]

- Gehrig, M.; Millhäusler, M.; Gehrig, D.; Scaramuzza, D. E-raft: Dense optical flow from event cameras. In Proceedings of the International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 197–206. [Google Scholar]

- Jiang, H.; Sun, D.; Jampani, V.; Yang, M.H.; Learned-Miller, E.; Kautz, J. Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9000–9008. [Google Scholar]

- Teed, Z.; Deng, J. RAFT: Recurrent All-Pairs Field Transforms for Optical Flow. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 402–419. [Google Scholar]

- Schönberger, J.L.; Zheng, E.; Frahm, J.M.; Pollefeys, M. Pixelwise view selection for unstructured multi-view stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 501–518. [Google Scholar]

- Schonberger, J.L.; Frahm, J.M. Structure-from-motion revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113. [Google Scholar]

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. High Speed and High Dynamic Range Video with an Event Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1964–1980. [Google Scholar] [CrossRef] [PubMed]

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. Events-to-video: Bringing modern computer vision to event cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3857–3866. [Google Scholar]

- Klenk, S.; Koestler, L.; Scaramuzza, D.; Cremers, D. E-NeRF: Neural Radiance Fields From a Moving Event Camera. IEEE Robot. Autom. Lett. 2023, 8, 1587–1594. [Google Scholar] [CrossRef]

- Gallego, G.; Rebecq, H.; Scaramuzza, D. A unifying contrast maximization framework for event cameras, with applications to motion, depth, and optical flow estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3867–3876. [Google Scholar]

- Shiba, S.; Aoki, Y.; Gallego, G. Secrets of event-based optical flow. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 628–645. [Google Scholar]

- Zhu, A.Z.; Atanasov, N.; Daniilidis, K. Event-based visual inertial odometry. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5816–5824. [Google Scholar]

- Lee, M.S.; Jung, J.H.; Kim, Y.J.; Park, C.G. Event-and Frame-Based Visual-Inertial Odometry with Adaptive Filtering Based on 8-DOF Warping Uncertainty. IEEE Robot. Autom. Lett. 2024, 9, 1003–1010. [Google Scholar] [CrossRef]

- Liu, M.; Delbruck, T. EDFLOW: Event Driven Optical Flow Camera with Keypoint Detection and Adaptive Block Matching. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5776–5789. [Google Scholar] [CrossRef]

- Poole, B.; Jain, A.; Barron, J.T.; Mildenhall, B. DreamFusion: Text-to-3D using 2D Diffusion. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Lin, C.H.; Gao, J.; Tang, L.; Takikawa, T.; Zeng, X.; Huang, X.; Kreis, K.; Fidler, S.; Liu, M.; Lin, T. Magic3D: High-resolution Text-to-3D Content Creation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 300–309. [Google Scholar]

- Mahmood, N.; Ghorbani, N.; Troje, N.F.; Pons-Moll, G.; Black, M.J. AMASS: Archive of motion capture as surface shapes. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5441–5450. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.