Highlights

What are the main findings?

- We introduced a novel homogeneous hybrid image sensing technique.

- The motion blur in images captured by a CMOS Image Sensor (CIS) can be compensated effectively.

What are the implications of the main findings?

- The world’s smallest pixel size of the hybrid image sensor can be achieved.

- The proposed technique can compensate the motion blur of a CIS image of a jogging subject captured at a distance of 3 m.

Abstract

We propose and demonstrate a novel motion blur-free hybrid image sensing technique. Unlike previous hybrid image sensors, the proposed homogeneous technique provides both 60 fps CMOS Image Sensor (CIS) frames and 1440 fps pseudo Dynamic Vision Sensor (DVS) frames without any performance degradation caused by static bad pixels. To achieve fast readout, we implemented two one-side ADCs on two photodiodes (PDs), and the pixel output settling time is reduced significantly by column switch control. The high-speed pseudo DVS frames are obtained by differentiating fast-readout CIS frames, which, in turn, enables the world’s smallest pseudo DVS pixel (1.8 μm). In addition, we confirmed that the CIS (50 Mp resolution) and DVS (0.78 Mp resolution) data obtained from the hybrid image sensor can be transmitted over the MIPI (4.5 Gb/s four-lane D-PHY) interface without signal loss. The results showed that the motion blur of a 60 fps CIS frame can be compensated dramatically by using the proposed pseudo DVS frames together with a deblur algorithm. Finally, using event simulation, we verified that 1440 fps pseudo DVS frames can compensate the motion blur of a CIS image of a jogging subject captured at a distance of 3 m.

1. Introduction

Over the last three decades, the pixel resolution of the CMOS Image Sensor (CIS) has grown beyond 100 Mp, which can compete with the human eye [1]. In addition, there is an increasing demand for a motion blur-free high-speed image sensor because mobile users suffer from image quality degradation due to motion blur, especially in low-illuminance conditions [2]. Recently, it has been proposed that hybrid sensors can mitigate motion blur by utilizing the event-based high-speed Dynamic Vision Sensor (DVS) [3,4]. However, because these heterogeneous hybrid sensors merge the DVS into a CIS at the pixel level, the image quality of the CIS can be degraded by the DVS pixels (i.e., DVS pixels are treated as defective pixels in the CIS image). Moreover, the DVS pixel size has a fundamental limit of about 4 μm due to the large number of required (~30) transistors. To address these problems, we report a novel homogeneous hybrid sensing technique that has the smallest pseudo DVS pixel size (1.8 μm) and can obtain motion blur-free high-speed (~1000 fps) video frames without any performance degradation caused by static bad pixels. In this paper, we propose and demonstrate that pseudo DVS event data can be extracted from the frame difference of the fast-readout CIS. The calculation results showed that 1440 fps pseudo DVS frames (1 Mp) can be transmitted over the interface between the sensor and the mobile AP together with 60 fps CIS video frames without any signal loss. In addition, we confirmed that the motion blur generated in the CIS video frame can be compensated by using the proposed pseudo DVS event data and a deblur algorithm. Section 2 explains the conventional motion-deblur methods, namely the two-sensor and heterogeneous hybrid sensor methods. Section 3 describes the technical details of the proposed hybrid sensing technique, such as the operating principle, readout circuit schematic, required data bandwidth, and motion blur compensation. Section 4 discusses the specifications, compares them with previous hybrid sensors, and estimates the use case coverage. Conclusions are given in Section 5.

2. Conventional Motion-Deblur Methods

2.1. Two-Sensor Method

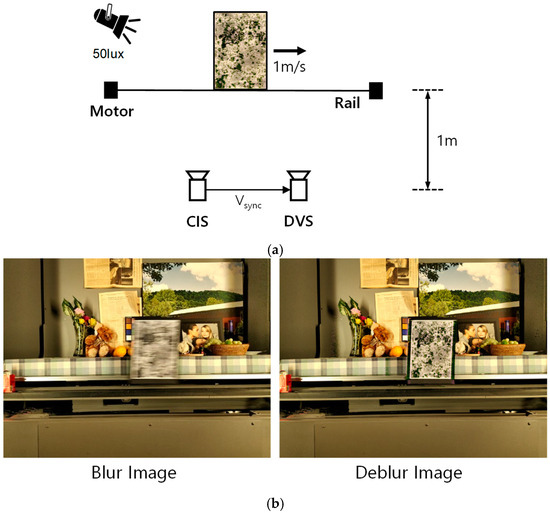

We recently developed DVSs with small pixel sizes (4 μm and 9 μm) [5,6]. The DVS is a unique image sensor inspired by biological visual systems. Each DVS pixel produces a stream of asynchronous events, just as the ganglion cells of a biological retina do. By processing only the information of local pixels with relative intensity changes, instead of entire images at fixed frame rates, the computational requirements can be reduced dramatically. Thanks to the sparsity and binary nature of DVS images, vision tasks can also be achieved with low computational cost and latency. For example, it has been reported that the motion blur of a CIS image can be compensated simply by synthesizing it with the images obtained from the double integral of DVS events [7]. To perform motion blur compensation based on the conventional two-sensor approach, we set up the experiment shown in Figure 1a. We used a dead leaves chart attached to a moving metal panel as the target subject. The illumination was set to 50 lux and the distance between the image sensors and the chart was 1 m. To compensate the motion blur, the CIS and DVS cameras were temporally synchronized. In the experiment, we captured the images with the exposure time set to 66.67 ms. In general, the image quality degradation due to motion blur becomes worse as the exposure time increases. Figure 1b shows that a blurred CIS image is clearly restored by using the deblur technique based on the DVS.

Figure 1. (a) Experimental setup and (b) deblur results using DVS and event-based double integral technique [7].
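The event-based double integral idea referenced above [7] can be illustrated for a single pixel. The following is a minimal sketch and not the implementation used in the experiment: it assumes the exposure is split into sub-frames, that each event changes the log intensity by a fixed contrast step `c`, and that the blurred value is the average of the sub-frame intensities. The function name, the default `c = 0.2`, and the sample values are hypothetical.

```python
import math

def edi_latent_intensity(blurred, polarities, c=0.2):
    """Recover the latent intensity at the start of the exposure for one pixel.

    blurred    : blurred (time-averaged) intensity over the exposure
    polarities : event polarity per sub-frame transition (+1 ON, -1 OFF, 0 none)
    c          : assumed contrast step in the log domain (hypothetical value)
    """
    # First integral: accumulate events to get the log-intensity change
    # of every sub-frame relative to the first one.
    log_gain = [0.0]
    for p in polarities:
        log_gain.append(log_gain[-1] + c * p)
    # Second integral: average the relative intensities over the exposure.
    mean_gain = sum(math.exp(g) for g in log_gain) / len(log_gain)
    return blurred / mean_gain

# Synthetic check: build a blurred value from a known latent intensity.
latent = 100.0
events = [1, 1, -1]
gains = [1.0, math.exp(0.2), math.exp(0.4), math.exp(0.2)]
blurred = latent * sum(gains) / len(gains)
recovered = edi_latent_intensity(blurred, events)
```

In this synthetic case the recovered value matches the latent intensity up to floating-point error, because the blur was generated with the same event model. With real events, quantization of the contrast step leaves a residual error, which is consistent with the ghosting discussed for Figure 2.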

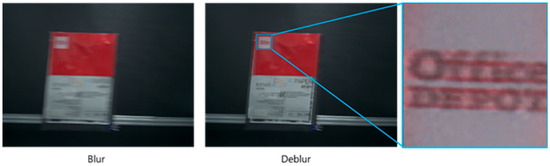

However, during the performance evaluation of motion-deblur based on the conventional two-sensor approach, we found severe artifacts that degrade image quality, caused by discrepancies between the CIS and the DVS. For example, because a BW (black and white) DVS only outputs intensity changes, it cannot restore the color information of the CIS accurately, as shown in Figure 2. In particular, red ghosts are observed around the text (Office DEPOT) in the deblurred image. This is because the pixel resolution, capture time, and optical axis differ between the CIS and the DVS. In addition, the DVS has a quantization error, which, in turn, causes ghosts in the compensated color intensity due to an imperfect event integral. The conventional technique can also suffer from various problems such as calibration mismatch, disparity, and occlusions.

Figure 2. Color information loss of the conventional technique.

2.2. Heterogeneous Hybrid Sensor Method

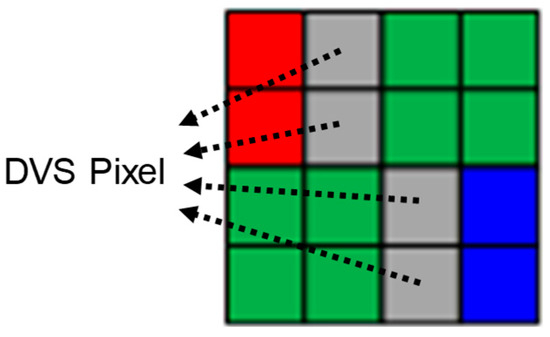

To avoid the discrepancy issues of the conventional two-sensor approach, a hybrid sensor has recently been proposed [3,4]. Figure 3 shows the color filter array pattern of the hybrid sensor. The DVS pixels are located between the R/B pixels. These pixels adopt white color filters, and four PDs are connected and used as one DVS pixel. Because the CIS and DVS pixels are separated from each other, this sensor is considered to be a heterogeneous-type hybrid sensor.

Figure 3. Color filter array pattern of the heterogeneous hybrid sensor [4].

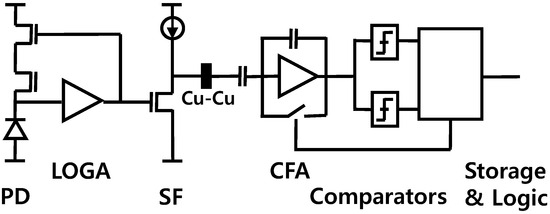

The DVS pixel structure is shown in Figure 4. The DVS pixel is composed of a PD, a log trans-impedance amplifier (LOGA), a source follower (SF), a capacitive-feedback amplifier (CFA), two comparators, their following event storages, and in-pixel logic [5]. This results in about 30 transistors per pixel, which makes it difficult to reduce the pixel pitch below 9 μm. This constraint can be resolved by adopting in-pixel Cu-Cu (copper–copper) wafer bonding technology and dividing the pixel circuits between top and bottom chips. For example, the circuitry from the PD to the SF is placed in the top chip, whereas the rest, from the CFA onward, is placed in the bottom chip. This allows the pixel pitch to be reduced to <5 μm. The DVS pixel size can be reduced further to around 4 μm by optimizing the allocation of circuitry. However, in principle, the heterogeneous hybrid sensor has a fundamental limit in DVS pixel size of around 4 μm.

Figure 4. DVS pixel structure.

3. Proposed Hybrid Image Sensing Technique

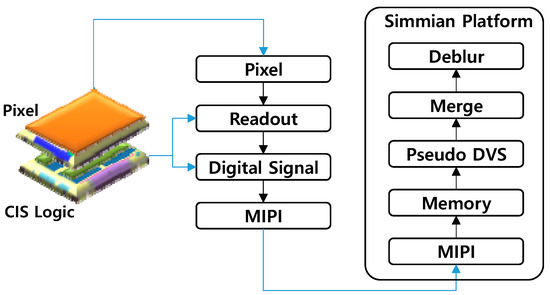

To mitigate the undesirable artifacts and critical problems of the conventional approaches, we propose and demonstrate a novel homogeneous hybrid sensing technique. Figure 5 shows the operating structure of the proposed hybrid image sensing technique. We developed a 4032 × 3024 CIS with Phase Detection Auto-Focus (PDAF) in all pixels. The size of the PD is 0.9 μm by 1.8 μm. Two PDs compose a single unit pixel. In order to achieve the fast readout, we implemented two ADCs on two PDs. In addition, as for the binning mode, the pixel output settling time can be reduced significantly by using the column switch control for the fast-readout operation, by which, in turn, horizontal random and pattern noises can be decreased as well. As a result, the 1 Mp, 1440 fps CIS images captured by the fast-readout circuit were transmitted to the Image Signal Processing (ISP) chain in the digital logic module. We verified the 1440 fps frame generation by capturing the fabricated sensor chip [8]. The pseudo DVS frame can be generated by differentiating 1440 fps CIS frames [9].

$$\Delta L = L_t - L_{t-1} = \ln\left(\frac{Y_t}{Y_{t-1}}\right)$$

where $L_t$ and $Y_t$ are the log and luma intensity values of the CIS image at time $t$. When the log difference $\Delta L$ exceeds the predetermined threshold (i.e., 20%), the pseudo DVS event signal is generated. Using numerical simulation based on the frame difference in CIS images [10], we empirically found that 15–30% is the optimum sensitivity range, because it improves edge sharpness and reduces pixel noise. In the experiment, we performed the frame differentiation between 1440 fps CIS frames using the HW emulation board (Simmian board), as shown in Figure 5. Finally, the 60 fps CIS and 1440 fps pseudo DVS frames can be transmitted to the application algorithm module. Thus, the proposed homogeneous hybrid sensing technique can reduce the pseudo DVS pixel size to 1.8 μm, which breaks the fundamental limit (~4 μm) of the conventional heterogeneous hybrid sensor.

Figure 5. Operating structure of the proposed hybrid sensing technique.
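The frame-difference rule described in this section can be sketched in a few lines. This is an illustrative sketch only, not the logic running on the emulation board: it assumes that the 20% threshold is applied symmetrically to the log difference as ±0.2, that ON and OFF events use the 2-bit codes 01 and 10, and that a small offset `eps` is added to avoid taking the logarithm of zero. The function and parameter names are hypothetical, and plain Python lists are used for clarity where a real pipeline would be vectorized or implemented in hardware.

```python
import math

NO_EVENT, ON_EVENT, OFF_EVENT = 0b00, 0b01, 0b10

def pseudo_dvs_frame(prev_luma, curr_luma, threshold=0.2, eps=1.0):
    """Return a 2-bit event code per pixel from two consecutive luma frames."""
    events = []
    for prev_row, curr_row in zip(prev_luma, curr_luma):
        row = []
        for y_prev, y_curr in zip(prev_row, curr_row):
            # eps avoids log(0) for dark pixels (an assumption of this sketch).
            delta_l = math.log((y_curr + eps) / (y_prev + eps))
            if delta_l > threshold:
                row.append(ON_EVENT)      # brightness increased
            elif delta_l < -threshold:
                row.append(OFF_EVENT)     # brightness decreased
            else:
                row.append(NO_EVENT)      # change below threshold
        events.append(row)
    return events

# One-row example: unchanged, brighter, and darker pixels.
prev_frame = [[100, 100, 100]]
curr_frame = [[100, 150, 60]]
event_frame = pseudo_dvs_frame(prev_frame, curr_frame)
```

Raising `threshold` suppresses noise-induced events at the cost of missing low-contrast edges, which is the trade-off behind the sensitivity range reported above.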

3.1. Fast-Readout Structure

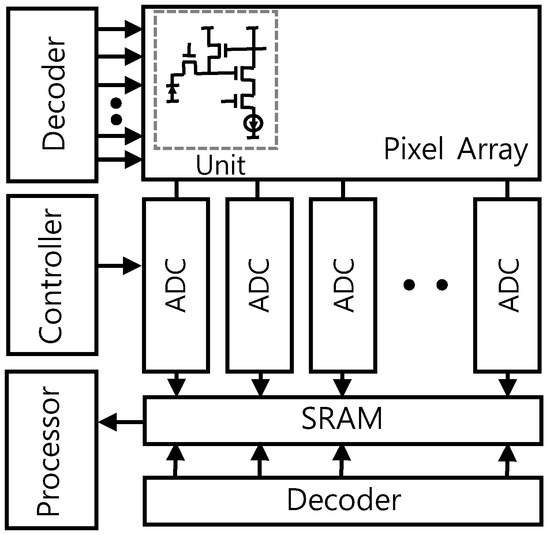

The block diagram of the image sensor is illustrated in Figure 6 [11]. The CIS is exposed to light and the image information is converted to electric charge by the PD in each pixel. The electric charge of each pixel is converted to an analog voltage through the readout circuit. Finally, this voltage is converted to a digital code by the column ADCs, and the digital processor then improves the image quality. The row and column address decoders generate the enable signals from an address input for sequential data readout.

Figure 6. Block diagram of the image sensor.

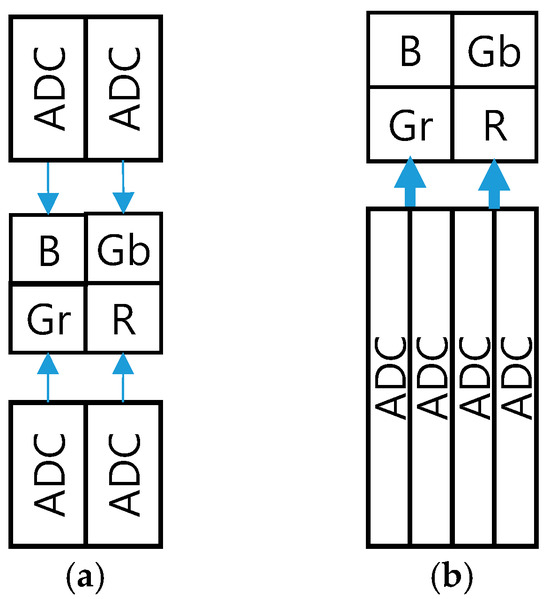

Figure 7a shows the readout structure of the conventional high-speed CIS [12], in which two-side single-slope ADCs are utilized in the pixel units. In contrast, we utilized 0.9 μm pitch one-side ADCs and optimized the digital processing and floorplan to reduce the power consumption and chip size, as shown in Figure 7b.

Figure 7. (a) Conventional and (b) proposed readout structures.

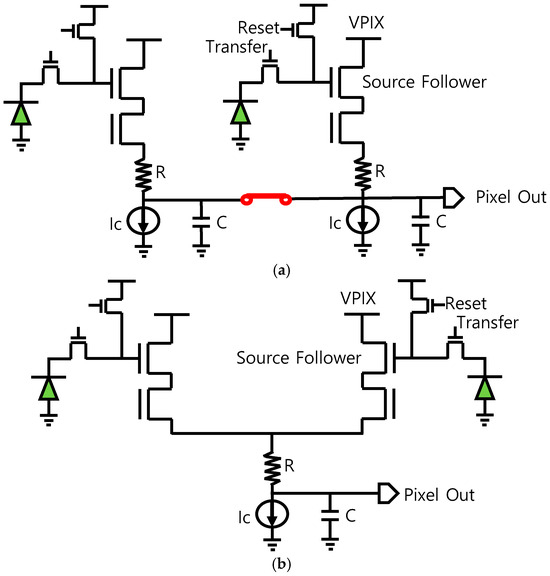

Figure 8 shows the equivalent pixel readout circuits. We found that the required current of the proposed readout structure can be four times lower than that of the conventional method. In addition, the time constant (τ) of the pixel output of the proposed structure can be described as follows:

$$\tau = \left(\frac{1}{4\sqrt{K_n I_c}} + R\right) \times C$$

where $K_n$ is the follower transistor transconductance [13], $I_c$ is the bias current, and $R$ and $C$ are the resistance and capacitance of the pixel output, respectively. Thus, the time constant of the pixel output of the proposed circuit can be smaller than that of the conventional circuit by a factor of $\sqrt{2}$ because the bias current is shared in the pixel readout. Our estimated results showed that the analog power of the readout circuit based on a two-side ADC was 308 mW, whereas that based on a one-side ADC was only about 215 mW in the case of FHD resolution at high-speed (960 fps) operation.

Figure 8. The equivalent pixel readout circuits (a) conventional and (b) proposed.
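One way to read the $\sqrt{2}$ statement is sketched below. It rests on two assumptions that the text does not state explicitly: that the time constant has the form $\tau = \left(\frac{1}{4\sqrt{K_n I_c}} + R\right) C$, and that sharing the bias current effectively doubles the current $I_c$ seen by the source follower. Under these assumptions, only the follower term $\tau_{\mathrm{SF}}$ scales with the bias current:

$$\tau_{\mathrm{SF}}(I_c) = \frac{C}{4\sqrt{K_n I_c}}, \qquad \frac{\tau_{\mathrm{SF}}(2I_c)}{\tau_{\mathrm{SF}}(I_c)} = \sqrt{\frac{I_c}{2I_c}} = \frac{1}{\sqrt{2}} \approx 0.71$$

The $RC$ term is unchanged by the bias current, so the full $\sqrt{2}$ reduction would apply only when the follower term dominates the settling.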

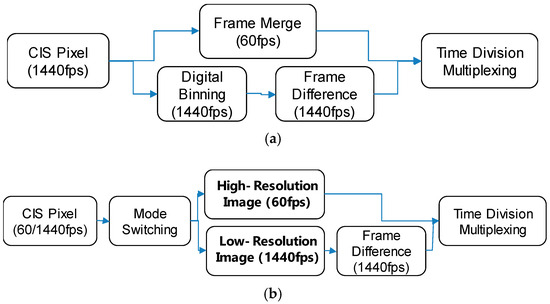

3.2. Hybrid Image Generation

Figure 9 shows the operating principles of hybrid image signal generation. The high-resolution low-speed (60 fps) CIS image can be obtained by merging the 1440 fps images over 24 frames, as shown in Figure 9a. In addition, the low-resolution high-speed (1440 fps) pseudo DVS images can be generated by differentiating the CIS frames. In this case, the pseudo DVS frames are obtained during the time period of the merged CIS frame (i.e., the time-shared method). Alternatively, the high-resolution low-speed and low-resolution high-speed images can be captured alternately by switching the pixel readout circuits, as shown in Figure 9b, so that the pseudo DVS and CIS frames do not share each other’s capturing time period (i.e., the non-time-shared method). Because the time-shared method requires high-resolution and fast-readout operation, the non-time-shared method would be more power-efficient for use in a mobile phone. As a proof of concept, we demonstrate the time-shared hybrid sensing technique in this paper.

Figure 9. Hybrid image signal generation (a) time-shared method and (b) non-time-shared method.
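The time-shared method can be pictured as two outputs derived from the same fast frame stream. The sketch below is a simplified illustration under stated assumptions: the merge is taken to be a per-pixel average over 24 fast frames (1440 fps / 60 fps), the CIS and pseudo DVS outputs share one resolution, and frames are flat lists of pixel values. The function name and the synthetic data are hypothetical.

```python
def time_shared_hybrid(fast_frames, frames_per_cis=24):
    """Split a fast frame stream into merged CIS frames and difference frames."""
    cis_frames, diff_frames = [], []
    last_start = len(fast_frames) - frames_per_cis + 1
    for start in range(0, last_start, frames_per_cis):
        group = fast_frames[start:start + frames_per_cis]
        # Merge: per-pixel average over the group (averaging is an assumption).
        merged = [sum(px) / len(group) for px in zip(*group)]
        cis_frames.append(merged)
    # Differences between consecutive fast frames feed the pseudo DVS path.
    for prev, curr in zip(fast_frames, fast_frames[1:]):
        diff_frames.append([c - p for p, c in zip(prev, curr)])
    return cis_frames, diff_frames

# 48 synthetic two-pixel frames, i.e. two 60 fps periods at 1440 fps.
fast = [[float(k), 2.0 * k] for k in range(48)]
cis, diffs = time_shared_hybrid(fast)
```

With 48 input frames the sketch yields two merged frames and 47 difference frames. The difference frames would then pass through the log-threshold step to become pseudo DVS events.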

3.3. Hybrid Image Transmission

To transmit the hybrid image sensor data over the MIPI interface, we propose a concurrent data transmission technique, as shown in Figure 10. For example, the CIS frame and a series of DVS frames generated in different vertical channels can be merged at the Camera Serial Interface (CSI) block. The CSI-2 block can transmit 10 Gb/s data through a four-lane D-PHY transmitter. The CIS and DVS frames can be interleaved within a transmission frame and then transmitted over the MIPI interface.

Figure 10. Hybrid image data transmission over the MIPI interface.

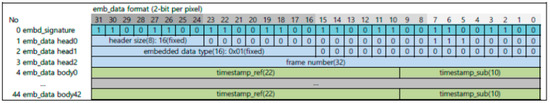

In general, the DVS constructs a 2-bit image frame. Thus, each 2-bit pixel value can be represented as 00 (no event), 01 (ON event), 10 (OFF event), or 11 (not assigned). To packetize the DVS event data, we defined two kinds of frame format. Firstly, the raw data transfer mode sends out the 2-bit pixel data in a format similar to RAW2, as shown in Figure 11. The embedded data of the RAW2 format includes a timestamp and frame number information. This embedded data is divided into a header and a body. The header size value is fixed at 16 bytes and the data type value is fixed at 1. The frame counter in the header is incremented by one at each frame. In addition, the body includes the timestamp value of each frame. The same value is repeated to match the total number of bytes of the embedded data.

Figure 11. RAW2 embedded header packet.
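The 2-bit pixel encoding of the raw data transfer mode can be illustrated by packing four event codes into each byte. This is a minimal sketch: the codes 00, 01, and 10 follow the text, but the bit order within a byte (first pixel in the least significant bits) and the function name are assumptions, and the embedded header is not modeled.

```python
def pack_raw2(event_codes):
    """Pack 2-bit event codes (0, 1 or 2) into bytes, four pixels per byte."""
    packed = bytearray()
    for i in range(0, len(event_codes), 4):
        chunk = event_codes[i:i + 4]
        byte = 0
        for slot, code in enumerate(chunk):
            if code not in (0b00, 0b01, 0b10):
                raise ValueError(f"unassigned event code: {code}")
            # First pixel goes to the two least significant bits (assumed order).
            byte |= code << (2 * slot)
        packed.append(byte)
    return bytes(packed)

# Four pixels: no event, ON, OFF, no event.
payload = pack_raw2([0, 1, 2, 0])
```

At four pixels per byte, a 1 Mp event frame occupies about 0.25 MB before any embedded data is added.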

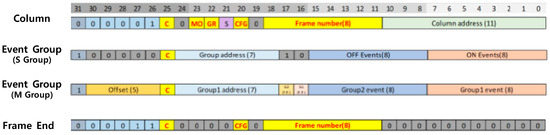

Secondly, the compressed frame transfer mode can send out the 2-bit pixel data more efficiently than the raw data transfer mode. In this case, DVS event packets can be distinguished by the first 6 bits of each column, as Figure 12 illustrates. The column packet includes the data for the column address, frame number, and frame information. The event group packet represents the data for the group address and group events. Lastly, the frame end packet includes the frame end code for checking data loss. This compressed frame transfer mode can improve the data processing capacity significantly compared to the raw data transfer mode. Previously, a fully synthesized word-serial group address-event representation (G-AER) had been published, which handles massive events in parallel by binding neighboring pixels into a group to acquire data (i.e., pixel events) at high speed, even with high resolution [6]. This G-AER-based compressed method can transmit the sparse event data up to 1.3 Geps at 1 Mp resolution [5].

Figure 12. 32-bit DVS event packet.

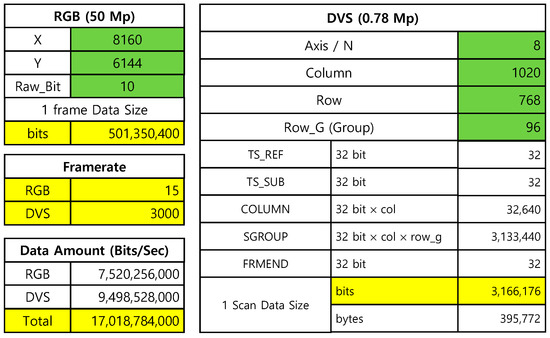

Figure 13 shows the required bandwidth calculated when the CIS and DVS resolutions are 50 Mp and 0.78 Mp, respectively. In this case, we assumed that the CIS and DVS frame rates were 15 fps and 3000 fps, and that the CIS bit depth was 10 bits. It should be noted that the compressed frame transfer mode (G-AER) was assumed as the DVS frame format [6]. The results show that the required bandwidth was estimated to be 17 Gb/s, which can be transmitted through the MIPI v2.1 (4.5 Gb/s four-lane D-PHY) interface. When the RAW2 mode is used for the DVS transmission, the required bandwidth is calculated to be 12.2 Gb/s. Recent SoCs/ISPs support the MIPI D-PHY v1.2 interface at up to 10 Gb/s. In this case, the maximum DVS frame rate is 1000 fps (resolution = 1 Mp). However, because the latest image sensor with the MIPI D-PHY v2.1 interface [8] can support 18 Gb/s, the maximum resolution and frame rate of the DVS become 1 Mp and 3000 fps.

Figure 13. Hybrid image data transmission over the MIPI interface (RGB represents CIS).
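The RAW2 bandwidth figure quoted above can be reproduced with simple arithmetic. The sketch below assumes an uncompressed payload of resolution × bit depth × frame rate and ignores protocol overhead and embedded data. The helper name is hypothetical.

```python
def raw_bandwidth_gbps(pixels, bits_per_pixel, fps):
    """Uncompressed payload rate in Gb/s (protocol overhead ignored)."""
    return pixels * bits_per_pixel * fps / 1e9

cis = raw_bandwidth_gbps(50e6, 10, 15)      # 50 Mp, 10-bit, 15 fps
dvs = raw_bandwidth_gbps(0.78e6, 2, 3000)   # 0.78 Mp, 2-bit RAW2, 3000 fps
total = cis + dvs
link = 4 * 4.5                              # four lanes at 4.5 Gb/s each
print(f"CIS {cis:.2f} + DVS {dvs:.2f} = {total:.2f} Gb/s (link {link:.1f} Gb/s)")
```

The sum of about 12.2 Gb/s fits within the 18 Gb/s four-lane link. By the same arithmetic, a 1 Mp, 2-bit DVS stream needs 2 Gb/s at 1000 fps and 6 Gb/s at 3000 fps.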

3.4. Motion Blur Compensation

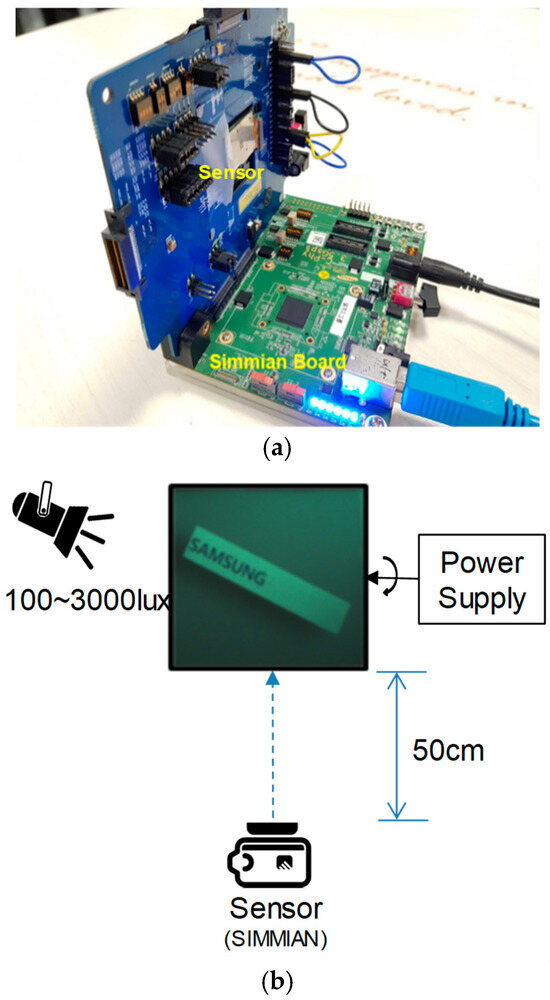

Recently, it has been reported that the motion blur of the conventional CIS can be compensated by synthesizing its image with the images obtained from the DVS [7]. To perform the motion blur compensation, we set up the experiment shown in Figure 14. We used a Samsung logo attached to a rotating fan as the target subject. We controlled the moving speed by adjusting the motor bias current. The illumination was set to 100–3000 lux and the distance between the image sensor and the fan was 50 cm. When the illumination was less than 50 lux, the pseudo DVS signal could not be observed clearly at 1440 fps. On the other hand, the CIS image became saturated at illuminations above 5000 lux.

Figure 14. (a) Sensor and HW emulation board and (b) experimental setup.

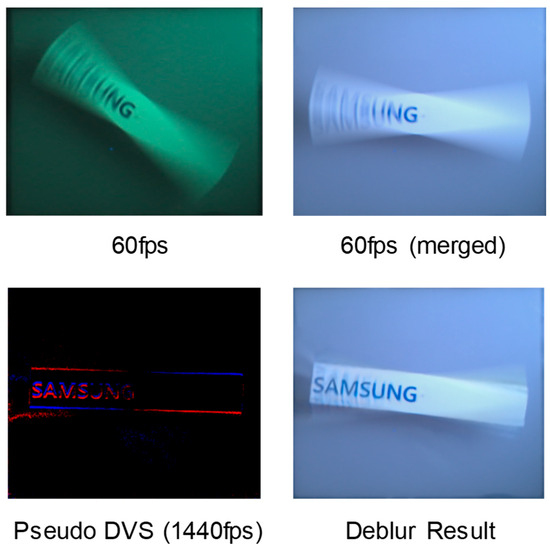

In the experiment, we captured 1 Mp (CIS and pseudo DVS) images while changing the exposure time, as shown in Figure 15. We confirmed that the image quality degradation due to motion blur becomes worse as the exposure time increases, whereas the hybrid sensing images are clearly restored by using a deep learning deblur algorithm based on the pseudo DVS. We utilized the Time Lens algorithm (without any modifications for this experiment), which is a CNN framework that combines warping and synthesis-based interpolation approaches to compensate the motion blur [14]. MTF50 is defined as the frequency at which the spectrum value decreases to 50% of the low-frequency component; it can be used to measure the performance of motion-deblur [15] and the sharpness for YOLO-based object detection [16]. To measure the sharpness, we utilized the MTF50 ratio between the ground truth and blurred images. The MTF50 ratio was measured to be 0.4 and 0.8 before and after compensation, respectively. It should be noted that the motion-deblur performance based on the proposed pseudo DVS would be the same as that of the heterogeneous hybrid sensor because the pseudo DVS signal is theoretically identical to a real DVS event. In this paper, we demonstrated the CIS motion-deblur and pseudo DVS generation technique at 1 Mp resolution. This is because the readout speed at full CIS resolution (12 Mp) is limited to 120 fps, and so 1 Mp resolution was used as a concept validation for obtaining 1440 fps CIS frames. However, for the real chip implementation, as shown in Figure 9, it is necessary to develop either a full 12 Mp, 1440 fps fast-readout CIS or a CIS switchable between 12 Mp, 60 fps and 1 Mp, 1440 fps.

Figure 15. Motion-deblur results using the proposed hybrid image sensing technique (CIS and pseudo DVS resolution: 1 Mp).
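The MTF50 ratio used as the sharpness metric can be computed from sampled MTF curves. The following is a minimal sketch, assuming the MTF has already been measured at increasing spatial frequencies, that the first sample represents the low-frequency component, and that the ratio is taken as the test image's MTF50 divided by the ground truth's. The function names and the sample curves are hypothetical, not measured data.

```python
def mtf50(freqs, mtf):
    """Frequency at which the MTF first falls to 50% of its low-frequency value."""
    half = 0.5 * mtf[0]
    for i in range(1, len(mtf)):
        if mtf[i] <= half:
            # Linear interpolation between the two samples around the crossing.
            f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
            return f0 + (half - m0) * (f1 - f0) / (m1 - m0)
    return None  # the curve never drops to 50%

def mtf50_ratio(freqs, mtf_test, mtf_reference):
    """MTF50 of a test image relative to a reference (ground-truth) image."""
    return mtf50(freqs, mtf_test) / mtf50(freqs, mtf_reference)

# Hypothetical curves in cycles/pixel, for illustration only.
freqs = [0.0, 0.1, 0.2, 0.3, 0.4]
sharp = [1.0, 0.9, 0.7, 0.5, 0.3]
blurred = [1.0, 0.6, 0.4, 0.2, 0.1]
ratio = mtf50_ratio(freqs, blurred, sharp)
```

A ratio close to 1 means the test image retains nearly all of the reference sharpness, while smaller values indicate stronger residual blur.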

4. Discussion

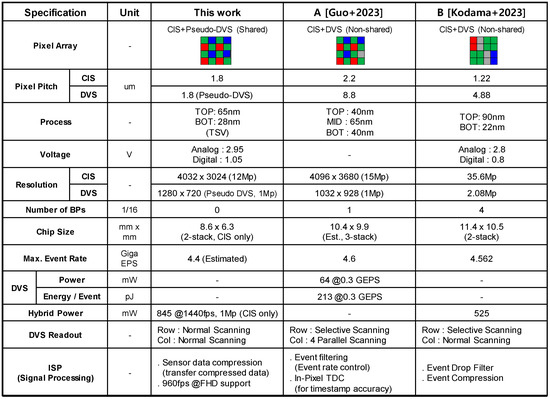

Figure 16 compares the specifications. The high-speed CIS of the proposed hybrid sensing technique was implemented in a 65 nm 1P 5M back-illuminated CIS process and a 28 nm 1P 8M standard CMOS process. The top chip includes 12 M pixels with a 1.8 μm pitch, and the ADCs and logic circuits are located on the bottom chip. The specifications (pseudo DVS pixel pitch = 1.8 μm) of the proposed sensing technique are superior to those of the previously published sensors [3,4]. In addition, unlike the others, there is no performance degradation due to bad pixels. In particular, the proposed hybrid sensing technique has the world’s smallest pseudo DVS pixel, which breaks the fundamental limit of 4 μm imposed by the large number of required (~30) transistors. However, the proposed hybrid sensing technique is inferior to the heterogeneous hybrid sensor in terms of power consumption and event latency. Specifically, the power consumption of the heterogeneous sensor is lower than that of the proposed hybrid sensing technique (i.e., 525 mW [4] vs. 845 mW), even though its number of pixels is two times larger (i.e., 2 Mp vs. 1 Mp). This is because the transistors in the DVS circuit operate in the sub-threshold region [6], while the proposed hybrid sensing technique needs a high-speed readout circuit. We expect that the power consumption of the proposed technique can be reduced through optimization of the readout pipeline, improvement of the high-speed ADC design, and partial row scanning [17]. In addition, the event latency of the proposed sensing technique is larger than that of a real DVS (i.e., 1 ms vs. 10 μs) because of the CIS frame differentiation. Basically, a DVS generates event-driven, frame-free signals asynchronously in response to temporal contrast [18,19,20]. In the proposed hybrid image sensing technique, however, there is a trade-off between event latency and power consumption, unlike in a DVS. For example, to obtain a 10 μs latency with the proposed technique, it is necessary to increase the CIS frame rate up to 100,000 fps. In this case, the power consumption would exceed several hundred watts.

Figure 16. Comparisons of specifications (A [3] and B [4]).
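The latency figures above follow from the frame period, under the simplifying assumption that the event latency $t_{\mathrm{lat}}$ of a frame-difference scheme is about one frame period of the fast readout at frame rate $f$:

$$t_{\mathrm{lat}} \approx \frac{1}{f}, \qquad \frac{1}{1440\ \mathrm{fps}} \approx 0.69\ \mathrm{ms}, \qquad f = \frac{1}{10\ \mu\mathrm{s}} = 100{,}000\ \mathrm{fps}$$

The first value is consistent with the roughly 1 ms latency quoted for the proposed technique, and the second gives the frame rate needed to match a 10 μs DVS latency.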

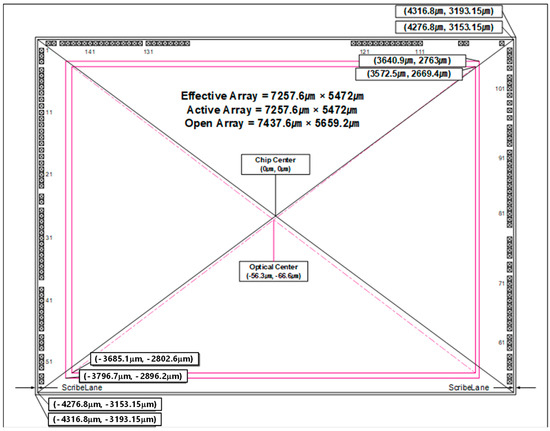

Figure 17 shows the chip dimensions (high-speed CIS only). This chip is fabricated in the SAMSUNG ISOCELL process, developed for imaging applications to realize a high-efficiency and low-power photo sensor. The sensor consists of 4032 × 3024 effective pixels that fit the 1/1.76-inch optical format. The CIS has on-chip 10-bit ADC arrays to digitize the pixel output and on-chip Correlated Double Sampling (CDS) to drastically reduce Fixed Pattern Noise (FPN). It incorporates on-chip camera functions such as defect correction, exposure setting, white balance setting, and image data compression. In addition, the CIS is programmable through a CCI serial interface and includes on-chip One-Time Programmable (OTP) Non-Volatile Memory (NVM). The detailed technical specifications are available online [8].

Figure 17. Chip dimension (CIS only, top view).

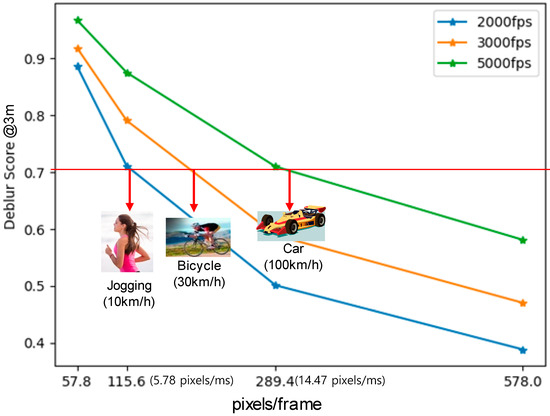

Lastly, we estimated the use case coverage of the proposed hybrid sensing technique, as shown in Figure 18. Through numerical simulations, we empirically found that the motion-deblur tolerance can be described by the MTF50 ratio. For example, when the MTF50 ratio falls below 0.7, the image is noticeably blurred. Thus, we defined the deblur score as the MTF50 ratio and set the target value to 0.7. The motion blur depends on the object speed and the distance between the camera and the object. As the object speed increases and the distance decreases, the motion blur increases, which, in turn, decreases the deblur score. To compensate such large motion blur, the frame rate of the hybrid sensing technique must be increased. Thus, we observed the deblur performance while varying the DVS frame rate and the motion speed of the subject by using the DVS simulator [10] and the event-based double integral technique [7]. Here, the pseudo DVS signal is numerically generated by frame differentiation [10] and the motion blur is compensated by the double integral of the event signal. Our numerical results show that, because the pseudo DVS frame rate of the proposed hybrid sensing technique is 1440 fps, it can compensate the motion blur of a CIS image of a jogging subject captured at a distance of almost 3 m. To capture a motion blur-free image of a moving car, our estimation shows that the DVS frame rate should be higher than 5000 fps. In these high-speed use cases (>2000 fps), because the power consumption of the proposed technique would increase beyond several watts, the heterogeneous hybrid sensor would be more suitable for sports, automotive, and military applications. However, for mobile camera and VR applications, the proposed technique can also be utilized effectively, because there is no image quality degradation due to defective pixels. Beyond motion-deblur, we expect that high-speed (1440 fps) video frame reconstruction can be achieved by using the proposed hybrid sensing technique and a frame-interpolation algorithm [21].

Figure 18. The use case coverage of the motion-deblur simulated with varying DVS frame rates.

5. Conclusions

We proposed and demonstrated a high-speed (1440 fps) motion blur-free image sensing technique based on hybrid CIS and pseudo DVS frame generation. The proposed hybrid sensing technique has the world’s smallest pseudo DVS pixel compared to its competitors (i.e., 1.8 μm vs. 4.88 μm). In addition, we confirmed that the proposed sensing technique can improve the motion blur performance dramatically without any image quality degradation caused by static bad pixels. However, because the proposed technique utilizes a high-speed readout circuit, its power consumption is larger than that of the previous heterogeneous hybrid sensor (i.e., 845 mW vs. 525 mW). In addition, the event latency of the proposed technique is larger than that of a real DVS (i.e., 1 ms vs. 10 μs) because of the CIS frame differentiation. Through numerical simulations, we found that the proposed hybrid sensing technique can compensate the motion blur of a CIS image of a jogging subject at a distance of 3 m (target MTF50 ratio = 0.7). In conclusion, we envision that, as image sensors become even more widespread, for example, in low-light automotive sensing and high-speed action cameras, our proposed hybrid sensing technique will play a critical role in the development of such applications.

Author Contributions

Conceptualization, writing—review, and editing, P.K.J.P.; technical discussion, J.K. (Junseok Kim); project administration, J.K. (Juhyun Ko). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Dataset available on request from the authors.

Acknowledgments

The authors are thankful to Moo-young Kim, Jun-hyuk Park, Ji-won Im, and Bong-ki Son for providing valuable data and giving useful comments.

Conflicts of Interest

Authors Junseok Kim and Juhyun Ko were employed by the company Samsung Electronics. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CMOS | Complementary Metal-Oxide Semiconductor |
| CIS | CMOS Image Sensor |
| DVS | Dynamic Vision Sensor |
| MIPI | Mobile Industry Processor Interface |
| fps | Frames Per Second |
| R/B | Red/Blue |
| PD | Photodiode |
| LOGA | LOG Trans-Impedance Amplifier |
| SF | Source Follower |
| CFA | Capacitive-Feedback Amplifier |
| PDAF | Phase Detection Auto-Focus |
| ISP | Image Signal Processing |
| ADC | Analog to Digital Converter |
| CSI | Camera Serial Interface |
| FHD | Full High Definition |
| CDS | Correlated Double Sampling |
| FPN | Fixed Pattern Noise |
| CCI | Camera Control Interface |
| OTP | One-Time Programmable |
| NVM | Non-Volatile Memory |
| MTF | Modulation Transfer Function |

References

1. Kim, M.; Kim, D.; Chang, K.; Woo, K.; Yoon, K.; Ko, H.; Kim, J.; Cho, K.; Ji, H.; Kim, S.; et al. A 0.5 μm pixel-pitch 200-Megapixel CMOS Image Sensor with Partially Removed Front Deep Trench Isolation for Enhanced Noise Performance and Sensitivity. In Proceedings of the International Image Sensor Workshop, Hyogo, Japan, 2–5 June 2025.

2. Nakabayashi, T.; Hasegawa, K.; Matsugu, M.; Saito, H. Event-based blur kernel estimation for blind motion deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Vancouver, BC, Canada, 17–24 June 2023.

3. Guo, M.; Chen, S.; Gao, Z.; Yang, W.; Bartkovjak, P.; Qin, Q.; Hu, X.; Zhou, D.; Huang, Q.; Uchiyama, M.; et al. A three-wafer-stacked hybrid 15-Mpixel CIS + 1-Mpixel EVS with 4.6-Gevent/s readout, in-pixel TDC, and on-chip ISP and ESP function. IEEE J. Solid-State Circuits 2023, 58, 2955–2964.

4. Kodama, K.; Sato, Y.; Yorikado, Y.; Berner, R.; Mizoguchi, K.; Miyazaki, T.; Tsukamoto, M.; Matoba, Y.; Shinozaki, H.; Niwa, A.; et al. 1.22 μm 35.6Mpixel RGB hybrid event-based vision sensor with 4.88 μm-pitch event pixels and up to 10k event frame rate by adaptive control on event sparsity. In Proceedings of the International Solid-State Circuits Conference, San Francisco, CA, USA, 19–23 February 2023.

5. Suh, Y.; Choi, S.; Ito, M.; Kim, J.; Lee, Y.; Seo, J.; Jung, H.; Yeo, D.; Namgung, S.; Bong, J.; et al. A 1280 × 960 Dynamic Vision Sensor with a 4.95-μm pixel pitch and motion artifact minimization. In Proceedings of the IEEE International Symposium on Circuits and Systems, Seville, Spain, 10–21 October 2020.

6. Son, B.; Suh, Y.; Kim, S.; Jung, H.; Kim, J.; Shin, C.; Park, P.; Lee, K.; Park, J.; Woo, J.; et al. A 640×480 dynamic vision sensor with a 9 μm pixel and 300Meps address-event representation. In Proceedings of the International Solid-State Circuits Conference, San Francisco, CA, USA, 5–9 February 2017.

7. Pan, L.; Scheerlinck, C.; Yu, X.; Hartley, R.; Liu, M.; Dai, Y. Bringing a blurry frame alive at high frame-rate with an event camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

8. ISOCELL 2LD Specifications. Available online: https://semiconductor.samsung.com/image-sensor/mobile-image-sensor/isocell-2ld/ (accessed on 21 November 2025).

9. Hu, Y.; Liu, S.-C.; Delbruck, T. v2e: From video frames to realistic DVS events. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 19–25 June 2021.

10. Radomski, A.; Georgiou, A.; Debrunner, T.; Li, C.; Longinotti, L.; Seo, M.; Kwak, M.; Shin, C.; Park, P.; Ryu, H.; et al. Enhanced Frame and Event-Based Simulator and Event-Based Video Interpolation Network. arXiv 2021, arXiv:2112.09379.

11. Kim, Y.; Jung, Y.; Sul, H.; Koh, K. A 1/1.12-inch 1.4 μm-pitch 50Mpixel 65/28nm stacked CMOS image sensor using multiple sampling. In Proceedings of the IEEE International Symposium on Circuits and Systems, Monterey, CA, USA, 21–25 May 2023.

12. Haruta, T.; Nakajima, T.; Hashizume, J.; Umebayashi, T.; Takahashi, H.; Taniguchi, K.; Kuroda, M.; Sumihiro, H.; Enoki, K.; Yamasaki, T.; et al. A 1/2.3inch 20Mpixel 3-layer stacked CMOS image sensor with DRAM. In Proceedings of the International Solid-State Circuits Conference, San Francisco, CA, USA, 5–9 February 2017.

13. Salama, K.; Gamal, A. Analysis of active pixel sensor readout circuit. IEEE Trans. Circuits Syst. 2003, 50, 941–945.

14. Tulyakov, S.; Gehrig, D.; Georgoulis, S.; Erbach, J.; Gehrig, M.; Li, Y.; Scaramuzza, D. Time lens: Event-based video frame interpolation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 19–25 June 2021; Available online: https://github.com/uzh-rpg/rpg_timelens (accessed on 30 November 2025).

15. Lin, H.; Mullins, D.; Molloy, D.; Ward, E.; Collins, F.; Denny, P.; Glavin, M.; Deegan, B.; Jones, E. Optimizing camera exposure time for automotive applications. Sensors 2024, 24, 5135.

16. Muller, P.; Braun, A.; Keuper, M. Examining the impact of optical aberrations to image classification and object detection models. arXiv 2025, arXiv:2504.18510.

17. Hyun, J.; Kim, H. Low-power CMOS image sensor with multi-column-parallel SAR ADC. J. Sens. Sci. Technol. 2021, 30, 2093–7563.

18. Brandli, C.; Berner, R.; Yang, M.; Liu, S.-C.; Delbruck, T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

19. Serrano-Gotarredona, T.; Linares-Barranco, B. A 128 × 128 1.5% contrast sensitivity 0.9% FPN 3us latency 4mW asynchronous frame-free dynamic vision sensor using transimpedance preamplifiers. IEEE J. Solid-State Circuits 2013, 48, 827–838.

20. Lenero-Bardallo, J.A.; Serrano-Gotarredona, T.; Linares-Barranco, B. A 3.6 μs latency asynchronous frame-free event driven dynamic-vision-sensor. IEEE J. Solid-State Circuits 2011, 46, 1443–1455.

21. Sun, L.; Sakaridis, C.; Liang, J.; Sun, P.; Cao, J.; Zhang, K.; Jiang, Q.; Wang, K.; Gool, L. Event-based frame interpolation with ad-hoc deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Vancouver, BC, Canada, 17–24 June 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).