Abstract

Movement artifact direction and magnitude are critical parameters in noise detection and image analysis, especially for single-frame images where temporal information is unavailable. This paper introduces the Movement Artifact Direction Estimation (MADE) algorithm, a signal processing-based approach that performs 3D geometric analysis to estimate both the direction (in degrees) and weighted quantity (in pixels) of movement artifacts. Motivated by computational challenges in medical image quality assessment systems such as LUIAS, this work investigates directional multiplicative noise characterization using controlled experimental conditions with optical camera imaging. The MADE algorithm operates on multi-directional quantification outputs from a preprocessing pipeline—MAPE, ROPE, and MAQ. The methodology is designed for computational efficiency and instantaneous processing, providing interpretable outputs. Experimental results using precision-controlled apparatus demonstrate robust estimation of movement artifact direction and magnitude across a range of image shapes and velocities, with principal outputs aligning closely to ground truth parameters. The proposed MADE algorithm offers a methodological proof of concept for movement artifact analysis in single-frame images, emphasizing both directional accuracy and quantitative assessment under controlled imaging conditions.

1. Introduction

Motion blur is a prevalent artifact in images caused by camera movement or subject motion during exposure, significantly degrading image quality and making content analysis challenging. In medical imaging, this phenomenon, also known in as movement artifact, occurs across photography, computer vision, and medical imaging applications, resulting in blurred images that lack sharpness and detail, leading to potential misinterpretation of image content [1,2,3]. Movement artifacts present significant challenges across diverse imaging modalities, from Magnetic Resonance Imaging (MRI) procedures where patient movement during scanning causes ghosting and blurring artifacts [4,5], to endoscopic ultrasonography where motion affects vessel detection capabilities [6], and ultrasound pulse-echo techniques where tracking moving objects requires sophisticated signal processing [7,8,9]. The impact of motion blur extends to clinical applications such as colonoscopy where blurry frames from motion, out-of-focus conditions, and water jets can lead to missed detections [10], and medical imaging quality assessment where movement artifacts significantly affect evaluation accuracy [11]. Specialized measurement techniques have been developed for various applications, including photoacoustic velocimetry for blood flow measurement in microvasculature using cross-correlation of successive signal pairs [12] and super-resolution ultrasound imaging with two-stage motion estimation methods combining affine and nonrigid estimation [13]. Accurately estimating the direction of motion blur is crucial for effective image restoration and analysis, as the directional information provides valuable insights about camera or subject movement, enabling improved image quality enhancement and supporting advanced image analysis tasks [14,15,16,17,18]. The assessment of motion blur quality has been advanced through the development of objective blur level metrics using point spread function analysis [19] and structural similarity indices that degrade with movement artifacts [20].

Deep learning approaches have gained significant attention for motion blur detection and estimation in recent years. Convolutional neural networks (CNNs) have shown promising results in identifying blur patterns and estimating blur parameters [21,22,23,24,25]. Notable implementations include Motion Correction-Net (MC-Net) for motion compensation in brain MRI achieving approximately 40 ms processing times [21], end-to-end networks for rotary motion deblurring addressing polar coordinate transformation errors [24], and two-stage deblurring modules using high frequency residual learning [25]. Advanced architectures encompass classification models for directional analysis [26], autoencoder frameworks for blind deconvolution [27], attention mechanisms, generative adversarial networks, and transformer-based approaches [28,29]. Despite achieving high accuracy in controlled scenarios, these methods face significant limitations: (1) requirement for large annotated datasets, (2) high computational complexity during training and inference with GPU dependency, (3) limited interpretability of learned features, and (4) domain-specific performance that may not generalize across imaging conditions [30], rendering them impractical for real-time or resource-constrained applications.

Multi-frame approaches leverage temporal information from sequential image acquisitions to estimate motion blur direction and magnitude, analyzing frame-to-frame variations, optical flow patterns, and temporal gradients to infer motion characteristics [31,32]. Several studies have explored these sequential methods, including optical flow-based tracking [33] and multi-frame joint detection approaches [34], but these approaches share the common limitation of requiring multiple frames for analysis. Advanced motion correction techniques have been developed for medical imaging applications, including elastic motion correction in PET imaging through mass preservation optical flow algorithms [35] and cone-beam computed tomography movement artifact correction using morphological analysis of blood vessels [36]. The literature reveals a progression from simple frame differencing to sophisticated optical flow estimation and multi-object tracking systems [37,38], with applications extending to moving object detection using enhanced optical flow estimation and feature threshold segmentation [39]. Frequency domain methods utilize Fourier transforms and spectral analysis to identify motion blur characteristics, typically assuming that lower frequencies correspond to motion blur while higher frequencies represent sharpness and edges [40,41]. Cortés-Osorio et al. [16] introduced discrete cosine transform (DCT) for velocity estimation from single motion blurred images, achieving mean absolute errors of 4.66° in direction estimation. Advanced frequency domain approaches include reorganized DCT representation for autofocus applications [42], frequency-Bessel transform methods for extracting multi-order dispersion curves from background noise [43], and time-domain inverse digital recursive methods for characteristic frequency signal removal [44]. However, the fundamental limitation of frequency domain methods is the loss of precise spatial localization information. While these techniques can identify the presence and general characteristics of motion blur, they cannot pinpoint the exact location and direction of blur artifacts in the spatial domain [45,46], limiting their effectiveness for targeted correction and analysis.

Single-frame images present particularly challenging scenarios for motion blur analysis, as they lack temporal information that multi-frame approaches rely upon. The need for low-latency processing capabilities in applications such as medical imaging, surveillance systems, and computer vision further emphasizes the importance of computationally efficient solutions for single-frame directional estimation [47,48,49,50,51]. Theoretical foundations for modern signal processing approaches provide essential frameworks for addressing these challenges through comprehensive methodologies encompassing stochastic signals, parameter estimation, and adaptive filtering techniques [52].

Iterative approaches apply multiple noise reduction techniques and measure signal-to-noise ratios (SNRs), selecting optimal results through comparative analysis [53,54]. These methods often combine spatial and temporal information, repeatedly applying different filtering strategies until achieving satisfactory SNR metrics, but this iterative nature makes them costly and time-consuming. Time-domain methodologies for motion assessment have emerged as alternatives to traditional approaches, offering advantages in specific applications where temporal analysis provides superior motion characterization [55]. Advanced signal processing techniques for time-of-flight and frequency estimation have shown promise in reducing background noise interference and improving parameter extraction accuracy [56], with comprehensive reviews of measurement techniques emphasizing the importance of fluctuating characteristics in dynamic systems [57]. Specialized system approaches have been developed for specific applications, including SABER (Systems Approach to Blur Estimation and Reduction) for X-ray radiographs using point spread function modeling and numerical optimization [58], and QP-based visual servoing schemes that limit motion blur through velocity constraints and image gradient sharpness metrics [59].

Traditional spatial domain methods focus on direct analysis of pixel intensities, gradients, and local features to identify blur characteristics, including edge detection, gradient analysis, and local variance computations [60,61]. Recent studies have explored robust estimation procedures and adaptive filtering techniques for motion parameter identification [45,46], while specialized applications have demonstrated movement artifact elimination in optical coherence tomography angiograms [62] and controllable motion-blur effects for post-processing applications [63]. Advanced spatial domain techniques include convolved feature vector based adaptive fuzzy filters for impulse noise removal [64], super-resolution algorithms with outline feature extraction for small object detection [65], and precision coordinate alignment methods using motion capture systems [66]. However, most existing spatial methods lack sophisticated geometric analysis capabilities for precise directional estimation. Current spatial domain approaches generally lack comprehensive geometric frameworks for motion blur direction estimation, and few approaches integrate advanced geometric analysis with signal processing principles to achieve precise directional localization in 3D space representations.

Despite these advances, several critical gaps remain in the literature that limit the effectiveness of existing motion blur direction estimation methods. First, most frequency-domain approaches lack the ability to provide precise directional information in the spatial domain [67], as they cannot pinpoint the exact location and orientation of motion blur artifacts [40,46,68,69]. While these methods may detect the presence of motion blur, they often fail to deliver the spatial localization and directional vectors necessary for targeted correction, which is especially problematic in applications requiring geometric analysis of blur patterns. Second, the computational complexity of current methods—particularly deep learning and frequency-domain techniques—poses significant challenges for low-latency or large-scale applications, as they demand substantial resources and processing time [21,22]. Third, many existing approaches are domain-specific and rely on assumptions about noise characteristics or motion patterns that may not generalize across different image types or acquisition conditions.

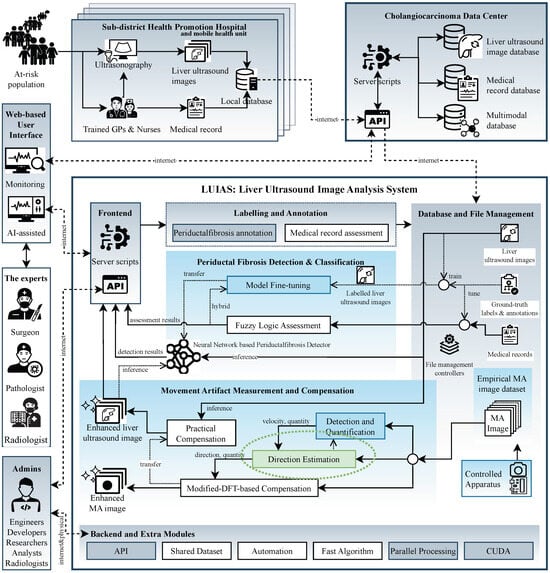

The need for robust directional multiplicative noise characterization is particularly evident in AI-based image analysis systems where classifier performance depends on image quality. In systems such as the Liver Ultrasound Image Analysis System (LUIAS) for cholangiocarcinoma surveillance system [14,47], multiple factors including acoustic noise, probe angle selection, and acquisition conditions affect periductal fibrosis detection and classification robustness. While many degradation factors have established mitigation pathways, multiplicative noise in single static images remains challenging to characterize computationally. Multiplicative noise captures degradations that scale with signal intensity—such as motion-induced patterns—which cannot be addressed through conventional additive noise reduction alone. This motivates developing signal processing frameworks that quantify such patterns under controlled conditions, providing methodological foundations for future domain-specific investigations. The present work addresses this through optical imaging experiments where ground-truth parameters are precisely controlled, enabling rigorous validation without ultrasound physics confounds. Critically, precise directional and magnitude estimates would enable future compensation strategies that could systematically enhance periductal fibrosis detection and classification performance in such systems. Figure 1 illustrates the relationship between this methodological work and LUIAS motivation, where system infrastructure components are highlighted in gray, recent research developments are indicated in blue, and the green block with dotted ellipse delineates the specific scope addressed in this paper.

Figure 1.

Overview of the Liver Ultrasound Image Analysis System (LUIAS) in the Cholangiocarcinoma Surveillance System.

A critical research gap remains: existing methods lack computationally efficient frameworks for characterizing multiplicative noise in single-frame images under controlled conditions with known ground-truth parameters. While frequency-domain and deep learning approaches offer sophisticated analysis, they sacrifice either spatial precision or computational tractability. Moreover, developing efficient movement artifact compensation strategies fundamentally requires precise direction and magnitude estimates—yet no prior work provides both parameters simultaneously through computationally tractable signal processing approaches validated under controlled conditions where ground-truth is known.

We propose the Movement Artifact Direction Estimation (MADE) framework—a signal processing approach using controlled optical imaging to characterize directional multiplicative noise through mathematical modeling and empirical validation with precision-controlled apparatus. The MADE algorithm performs 3D geometric analysis on multi-directional quantification outputs from a preprocessing pipeline (MAPE, ROPE, MAQ), extracting directional features across four axes and transforming them into precise directional estimates. This methodology delivers spatial domain analysis, low computational complexity, and comprehensive multi-directional capability validated through controlled experiments. This work addresses the estimation component, providing the directional and magnitude parameters that future compensation algorithms would require as inputs. While motivated by LUIAS computational challenges (Figure 1), this study establishes fundamental signal processing methodologies using optical imaging, with artifact compensation strategies and domain-specific applicability reserved for future work.

2. Materials and Methods

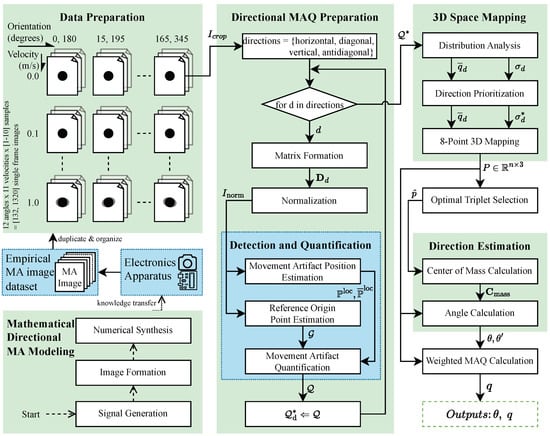

This section presents our signal processing framework for movement artifact direction estimation in single-frame images. The methodology combines a detection and quantification pipeline (MAPE, ROPE, MAQ) with the MADE algorithm for 3D geometric analysis. The pipeline quantifies movement artifacts across four principal directions, while MADE transforms these outputs into precise directional estimates through optimal triplet selection, center of mass calculation, and weighted distance computation. Figure 2 illustrates the methodology overview, with blue components representing previous work and green components indicating novel contributions.

Figure 2.

Overview of the proposed Movement Artifact Direction Estimation methodology.

2.1. Signal-Domain Representation of Movement Artifacts

This section establishes the mathematical foundation for modeling movement artifacts from a signal processing perspective, distinct from conventional pixel-based image processing approaches and ultrasound acoustic beamforming. The proposed model captures both rotational and translational components of motion, providing a rigorous analytical basis for subsequent algorithmic development and empirical validation.

In signal processing theory, the observed signal is represented as a combination of the original signal , multiplicative noise , and additive noise :

For movement artifact analysis, emphasis is placed on the multiplicative component , where motion introduces structured spatial distortions rather than stochastic interference. The transformation from the spatial domain to the signal domain enables deterministic modeling of movement-induced modulation as a function of motion parameters.

Let denote the spatial image function and represent the spatial-to-signal transformation operator. The movement artifact manifests as a multiplicative modulation term parameterized by the orientational angle , yielding the observed signal:

Here, serves as the fundamental control parameter governing the multiplicative structure, enabling directional characterization of motion-induced distortions through signal-domain analysis.

Within this framework, the object’s motion is described by a continuous-time parametric trajectory that combines rotational and translational components:

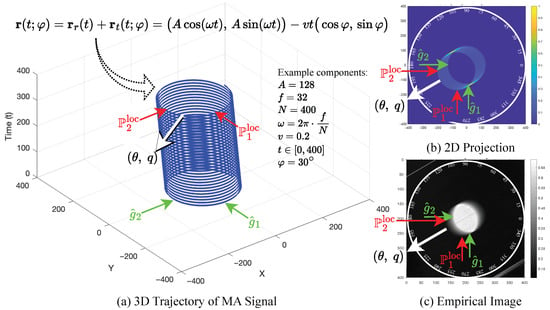

where is the oscillation amplitude, is the angular frequency, is the linear velocity, is the exposure time, and defines the motion orientation. The rotational term induces cyclic displacement, while the translational drift produces monotonic elongation aligned with . For conceptual illustration, see Figure 3a.

Figure 3.

Mathematical modeling of movement artifact signal. Each subfigure contains five arrows pointing to the same corresponding areas: green arrows ( and ) indicate some origin points, red arrows ( and ) indicate some movement artifact points, and white arrows highlight the most important parameters targeted by this paper, , estimated direction in degrees and distance in pixels.

The image formation process is governed by the integration of a spatial point spread function (PSF) along the parametric trajectory . The PSF h is assumed normalized () and compactly supported, representing the system’s response to a point source. Typical choices include the Gaussian kernel,

where is the standard deviation controlling the spread of the blur, and are spatial coordinates. For an idealized point response, the Kronecker delta is used:

where denotes the Dirac delta function, representing an impulse at the origin. Physically, h describes how light or signal from a single point is distributed in space, controlling the degree of sharpness or blur in the resulting image. The observed image is generated by accumulating the PSF along the trajectory , effectively forming a superposition of the PSF at each position visited during the motion:

This formulation can be equivalently expressed as a convolution of the PSF h with a trajectory-induced measure :

where is a measure (e.g., Dirac or distribution) supported along the path traced by , assigning mass to each location visited during exposure. This links the physical motion model directly to the observed image structure.

To quantify the directional extent of blur, we define the arc-length projection as:

where A represents the amplitude of oscillatory motion, is the angular frequency, and v denotes the constant velocity component. The variable t is time, while specifies the direction along which the blur is measured. The integrand computes the instantaneous speed along the trajectory, combining both oscillatory and linear motion effects.

This explicit expression demonstrates how the orientational angle modulates the total directional path elongation, linking the underlying motion parameters to the observed blur characteristics in the image.

For practical simulation, the above integrals are approximated by a Riemann sum with step size : , , . The discrete trajectory samples are

where K is a discrete Kronecker or Gaussian kernel, and the approximation error is under standard smoothness of . The mapping yields . All non-angular parameters affect scale or smoothness, but only determines the orientation of the drift and thus the anisotropy of the resulting blur.

The directional blur pattern is a deterministic functional of , i.e., for fixed parameters. However, the inverse problem—estimating from a single observed frame I—is non-trivial due to the entanglement of rotational and drift components and the absence of temporal context. This motivates our proposed estimation pipeline, which leverages multi-directional statistical features (MAPE, ROPE, MAQ) and geometric optimization (MADE) to approximate the inverse mapping .

The is the known ground-truth or fundamental orientation parameter that determines the direction of the movement artifact, while denotes the estimated direction obtained by our methodology. The governs the orientation of the drift, which in turn shapes the anisotropic spatial patterns observed in the image. Our methodology systematically extracts and quantifies these patterns through a multi-stage pipeline, culminating in a robust estimation of the underlying movement artifact direction . For visual interpretation, Figure 3b illustrates the 2D projection of the modeled movement artifact, while Figure 3c presents the corresponding empirical image, demonstrating how the theoretical framework translates to practical image analysis.

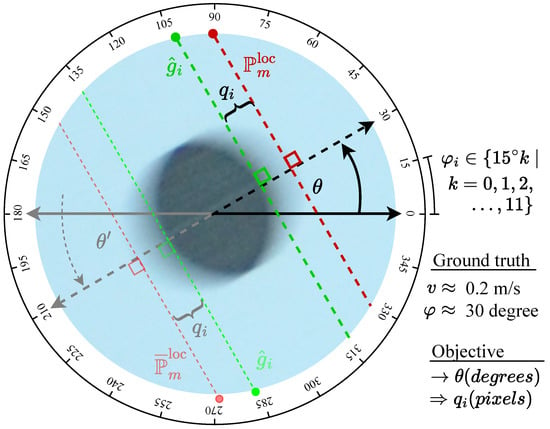

To clarify the problem statement and the principal parameters estimated by our approach, Figure 4 illustrates a unified circular object moving across a uniform background, captured as it traverses from right to left in front of the camera at a velocity . The motion occurs at angles and , with ground truth labels available for every increment from to and their complementary angles, enabling comprehensive analysis. Both velocity v and orientation are known during ground truthing and serve as reference labels for evaluating the proposed method. The figure highlights the two principal parameters estimated—direction and magnitude —as well as the outputs of the Detection and Quantification Pipeline ( and ), which are adaptively incorporated as required by the procedure. Solid lines and parameters denote primary analysis, while faint lines and parameters represent secondary or complementary analysis.

Figure 4.

Unified object movement and principal parameters for movement artifact estimation.

2.2. Data Preparation

The data preparation stage primarily employs empirical acquisition of single-frame images exhibiting controlled movement artifacts, with mathematical synthesis serving to confirm theoretical hypotheses. This approach ensures that the proposed methodology is grounded in real-world imaging conditions while maintaining analytical rigor. For empirical validation, we constructed a real-world dataset using a precision-controlled apparatus that induces motion in a unified black object on white background across 12 orientation configurations, spanning orientation angles with uniform angular spacing . Each primary orientation inherently defines its corresponding complementary orientation , establishing bidirectional symmetry pairs that comprehensively cover all possible orientations within the full angular domain . For each orientation configuration, the apparatus generates motion at 11 predefined velocities with uniform velocity spacing , resulting in distinct parameter combinations. For each parameter combination , multiple image acquisitions are performed with repetition count , yielding a comprehensive dataset cardinality of images, with theoretical bounds total image instances. The apparatus consists of a velocity control subsystem (a geared DC motor rotating a metallic rod with an object, allowing fine-grained speed control) and a shutter triggering subsystem (a microcontroller triggers the camera when the object interrupts a laser beam, ensuring consistent capture timing). Each acquired image is annotated with its ground truth velocity (v) and orientation (), providing precise reference data for validation. Multiple images are acquired for each velocity–orientation combination, and all images are post-processed for spatial consistency by cropping and alignment to center the object and standardize the region of interest. This unified approach, combining mathematical modeling and empirical acquisition with comprehensive orientation and velocity coverage, ensures that the dataset is both physically meaningful and analytically tractable, providing a robust foundation for subsequent movement artifact quantification and analysis.

2.3. Detection and Quantification Pipeline

The detection and quantification pipeline is designed to extract robust, multi-directional features that quantify movement artifacts in single-frame images. This stage consists of three core algorithms—MAPE, ROPE, and MAQ—each grounded in classical signal processing and tailored for computational efficiency and interpretability.

Movement Artifact Position Estimation (MAPE) systematically detects and localizes movement artifacts by analyzing the spatial structure of normalized signals along each scanline. For a normalized image matrix , each row m is treated as a one-dimensional signal . The algorithm computes the normalized impulse response (self-similarity profile) for each scanline:

Peaks in are detected using a prominence criterion, which ensures that only structurally significant features are retained. Prominence is defined as the vertical distance between a peak and its lowest contour line, i.e., the minimum value to which the signal must descend before rising to a higher peak.

Let denote the set of lag indices l where attains a local maximum that satisfies the prominence criterion. The lag position of the second most prominent peak for each scanline m is then defined as:

where arg max returns the lag index of the second most prominent peak (excluding , which corresponds to the main peak at zero lag). Here, the notation (for “second peak”) with subscript “loc” (for “location”) is used to denote the set of detected second peak locations. The set of all detected artifact positions across all scanlines is , and a symmetry-based lag complementation is applied to account for both displacement directions, yielding the complementary set . The resulting sets and succinctly encode artifact peak positions and their complements, forming the basis for quantification. For all sums involving , we define whenever or (i.e., zero-padding is used for out-of-bounds indices).

ROPE estimates the undistorted reference origin for each scanline, providing a stable anchor for artifact quantification. Each scanline is smoothed using an exponential moving average (EMA) with parameter :

The first difference (slope) of the EMA sequence is computed to highlight structural transitions:

The dominant transition for each scanline i is identified as the index where the slope attains its maximum absolute value:

This transition is retained only if its magnitude exceeds a threshold , where is a tunable parameter and is the maximum slope for scanline i. The set of reference origins is then defined as

where captures the most significant structural boundaries for each scanline, providing robust reference points for subsequent artifact quantification.

MAQ quantifies the spatial displacement between detected artifact positions and their reference origins, incorporating adaptive filtering to suppress outliers. For each scanline, the MAQ value is defined as the spatial distance between the reference origin and the closest valid artifact position (from either or ), subject to a plausibility threshold :

with resulting in suppression (). The final set provides a robust, scanline-wise quantification of movement artifact magnitude.

This detection and quantification pipeline yields a compact, multi-directional summary of movement artifacts, with each step mathematically grounded and computationally efficient. The outputs—artifact positions, reference origins, and quantified displacements—serve as critical inputs for the subsequent geometric analysis in the MADE algorithm, enabling precise and interpretable motion blur direction estimation.

2.4. MADE Algorithm

The MADE algorithm represents the core innovation of our methodology, transforming the directional MAQ sets from the detection and quantification pipeline into precise directional estimates through 3D geometric analysis. The algorithm employs a systematic four-stage approach: directional MAQ preparation, 3D space mapping with reliability prioritization, optimal triplet selection, and direction estimation with weighted distance calculation. This geometric framework transcends traditional signal processing limitations by integrating spatial relationships and statistical reliability measures to achieve unprecedented accuracy in single-frame motion blur direction estimation.

2.4.1. Directional MAQ Preparation

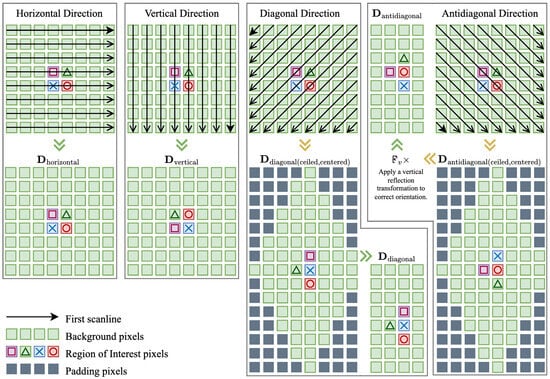

The MADE algorithm begins by applying the detection and quantification pipeline to four directional components: horizontal, vertical, diagonal, and antidiagonal. Each direction requires a specific enumeration strategy to preserve geometric structure and enable optimal feature extraction without interpolation artifacts.

For a given centered cropped image , directional matrices are constructed as follows:

Horizontal and Vertical Directions: These correspond directly to image rows and columns, respectively. The horizontal matrix uses the original image structure (), while the vertical matrix applies a 90° rotation (). Diagonal and Antidiagonal Directions: These require more complex processing involving element collection along diagonal lines, center alignment, and spatial cropping to maintain consistency. The diagonal direction collects elements where , while the antidiagonal direction uses the constraint . Detailed formulations for these transformations, including centering offsets, cropping boundaries, and reflection operations, are provided in Appendix A, with the illustration shown in Figure 5.

Figure 5.

Matrix formation process for directional MAQ preparation.

After matrix construction, row-wise normalization is applied to ensure consistent intensity scaling:

where and are the row mean and standard deviation, respectively (see Appendix A.1 for complete definitions).

Each normalized directional matrix is processed through the detection and quantification pipeline (MAPE, ROPE, MAQ) to yield directional MAQ sets:

With all four directional MAQ sets computed and spatially aligned, the algorithm proceeds to 3D space mapping for integrated geometric analysis and robust direction estimation.

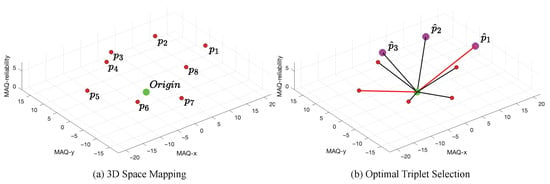

2.4.2. Three-Dimensional Space Mapping

In this stage, the MADE algorithm integrates the directional MAQ statistics to construct a geometric representation in 3D space. For each direction d, let denote the number of lines in the d-th direction of . The mean and standard deviation of the MAQ values for direction d are defined as

where is the MAQ value for the k-th line in direction d.

To enhance the influence of variability and to allow for tunable sensitivity in the geometric mapping, the standard deviation for each direction is scaled by a configurable boost factor :

where is a user-defined parameter. This boosted standard deviation is used as a measure of directional uncertainty or variability, and its role as the third dimension in the 3D mapping allows the algorithm to emphasize or de-emphasize the effect of dispersion in the quantification process. A higher increases the impact of variability, while a lower reduces it, providing flexibility for different application requirements.

To prioritize directions with greater reliability, a value swapping strategy is applied to the set . The rationale is that a lower standard deviation indicates more consistent (and thus more reliable) MAQ measurements in that direction. Therefore, the smallest value in is assigned the highest reliability score, and the largest is assigned the lowest. This is achieved by swapping the smallest and largest values, as well as the two intermediate values, effectively inverting the order of significance. Let be the ordered set such that . The reliability scores are then defined by

where are the directions corresponding to the original order of . This inversion ensures that directions with lower variability are given higher reliability scores, which will be reflected in the geometric analysis.

Each direction and its complement are then mapped to a point in 3D space, with coordinates determined by the mean MAQ value and the corresponding reliability score. Let denote the image center (or origin). The eight 3D points are defined as

where is the reliability score for direction d after the value swapping operation.

This coordinate system encodes the directional MAQ mean as spatial displacement in the x and y axes, and the reliability score as the z axis, as illustrated in Figure 6a. The resulting 3D geometric configuration yields a set of points , which serves as the input for the subsequent optimal triplet selection process. Briefly, the next optimal triplet selection section determines the three consecutive adjacent points in 3D space that maximize the total distance from the origin, ensuring both adjacency constraints and geometric significance for directional estimation. This step is critical for accurately identifying the predominant movement artifact direction by selecting the most representative triplet from the eight available points, as illustrated in Figure 6b.

Figure 6.

(a) Three-dimensional space mapping: Directional MAQ means are represented as points to in 3D space (MAQ-x, MAQ-y, MAQ-reliability), each correlated to the origin (green, “MAQ Origin”). (b) Optimal triplet selection: The three most distant adjacent points (, , ) are highlighted, with red lines indicating the maximal distances included in the selected triplet.

2.4.3. Optimal Triplet Selection

Given the points matrix where represents the total number of directional points, and the origin point , the algorithm first calculates the Euclidean distance from the origin to each point:

where represents the i-th point coordinates and is the corresponding distance from the origin.

To identify the optimal triplet, the selection process enumerates all possible consecutive adjacent triplets with wrap-around connectivity. For each triplet starting at index i, the three consecutive points are defined as:

For each candidate triplet, the total distance is computed as the sum of individual distances:

The objective is to maximize this total distance while maintaining the adjacency constraint:

Accordingly, the optimal triplet is defined as the three consecutive points corresponding to the indices determined by :

For consistency in subsequent analysis, the optimal triplet is directly utilized in the center of mass calculation described in Section 2.4.4.

This selection approach ensures that the identified triplet maximizes the collective distance from the origin while preserving spatial adjacency, which is critical for maintaining geometric coherence in the subsequent center of mass computation. The adjacency constraint prevents the selection of noncontiguous points, thereby supporting a robust and interpretable direction estimation process.

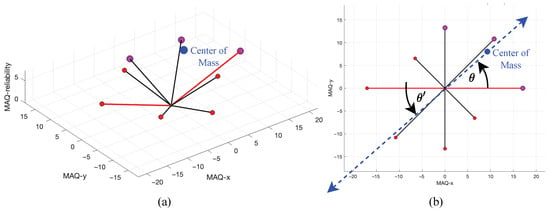

2.4.4. Direction Estimation

The direction estimation process constitutes the principal outcome of the MADE algorithm, yielding the estimated direction angle of the movement artifact in degrees. This step transforms the optimal triplet selection results into a definitive directional estimate that serves as the foundation for subsequent calculations, including the estimation of the actual MAQ distance in pixels.

The direction estimation process begins with the computation of the 3D center of mass from the optimal triplet obtained from the previous stage. The center of mass represents the geometric centroid of the three most significant points in 3D space and serves as a representative point indicating the overall directionality of the movement artifact. The 3D center of mass is calculated as the arithmetic mean of the three optimal triplet coordinates:

where represents the center of mass coordinates in 3D space.

The primary direction vector is established from the origin to the center of mass, creating a directional vector that encapsulates the predominant movement artifact orientation. This vector is then projected onto the 2D plane (x-y coordinates) for angular representation, as the directional estimation focuses on the spatial plane where the movement artifacts manifest.

The direction angle is computed using the four-quadrant inverse tangent function to ensure proper angular representation across all quadrants:

where is the quadrant correction factor defined as:

This formulation ensures correct angular determination across all four quadrants of the coordinate system, avoiding the inherent ambiguity of the standard arctangent function. To ensure the angle representation falls within the standard range , a normalization process is applied:

Additionally, the complementary angle is calculated to provide a complete directional reference:

Figure 7 illustrates the center of mass calculation for the optimal triplet, highlighting the geometric relationship between the estimated direction and its distance from the origin. The blue dot marks the center of mass, with the blue dashed line indicating its spatial displacement. Figure 7a provides a 3D perspective, while Figure 7b presents the corresponding 2D (top-down) view. The bidirectional blue dashed line with arrowheads denotes the estimated movement artifact direction, which serves as the principal output of the proposed methodology and forms the basis for subsequent weighted distance calculation.

Figure 7.

Illustration of center of mass calculation and its geometric interpretation, (a) 3D view, (b) 2D view.

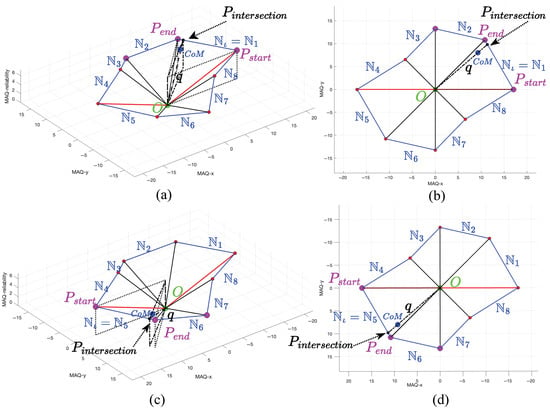

2.4.5. Weighted Distance Calculation

The weighted distance calculation refines the estimated movement artifact magnitude by aligning it with the principal direction determined in the previous stage. While directional MAQ values quantify artifacts along predefined axes, this step computes the true magnitude in pixels along the actual artifact orientation. The process constructs a circular adjacency matrix connecting each 3D point to its neighbor, forming an 8-sided polygon boundary around the origin. The intersection of the direction vector with the corresponding polygon edge yields the weighted distance, representing the artifact’s true strength along its principal direction. Figure 8 illustrates the geometric relationships and key steps in this procedure.

Figure 8.

Illustration of the weighted distance calculation process. Subfigures (a,b) present the 3D and 2D views of the calculation along the corresponding line segment, while (c,d) depict the complementary relationships, respectively.

The neighbor connectivity matrix is constructed such that each row i contains the coordinates of point and its circular neighbor :

where the neighbor index follows circular adjacency, i.e., next_idx , ensuring that the last point connects back to the first and creates a closed polygon boundary. The resulting neighbor connectivity matrix establishes line segments that form the boundary of an 8-sided polygon in 3D space. Each row represents a directed edge connecting consecutive points, with the general structure:

where each row i contains the start point coordinates followed by the end point coordinates , with circular indexing ensuring that the final row connects back to the first point. This matrix representation enables systematic identification of polygon edges for subsequent ray-line intersection calculations, where each row defines a line segment through its start and end coordinates in 3D space.

The algorithm performs angular segmentation by dividing the 360° space into 8 equal segments of 45° each, corresponding to the polygon edges. The line segment identification process determines which polygon edge contains the center of mass angle :

where for points. For the boundary case where , the line index is adjusted to maintain circular continuity:

Once the appropriate line segment is identified, the algorithm extracts the start and end points of the selected polygon edge in 3D space:

The ray-line intersection calculation employs parametric equations to find the intersection between the direction ray from the origin and the identified polygon edge. The direction ray is defined by its origin and direction :

where is the origin in 2D space and is the parameter along the ray.

The corresponding line segment is described by its endpoints and :

To identify the intersection, we equate the ray and segment parametric forms:

This yields a linear system for the parameters t and s:

where the parameter t represents the distance along the ray direction from the origin , while s denotes the relative position along the line segment, with at and at . Values of s between 0 and 1 correspond to points within the segment, and clamping s ensures the intersection point remains within the segment bounds. If the determinant of this matrix is sufficiently small, the ray and segment are nearly parallel, and the midpoint of the segment is selected as a fallback to ensure robustness. Otherwise, we solve for t and s and clamp s to the interval to guarantee the intersection point remains within the segment bounds.

To solve for the intersection, the system is addressed for the parametric coefficients, with special handling for the case of nearly parallel lines where (with typically set to ). In such situations, the midpoint of the line segment is used as a fallback:

To ensure the intersection remains within the segment, the parameter s is clamped to the interval :

The corresponding 3D intersection point is then obtained by linear interpolation between the segment endpoints:

The weighted distance is finally computed as the Euclidean distance from the origin to this intersection point:

The final output of the proposed method is the pair , where is the estimated movement artifact direction (in degrees) as defined in Equation (35) and Section 2.1, and q is the weighted artifact magnitude (in pixels) along the principal direction. Together, provide a precise and interpretable quantification of movement artifacts, supporting downstream applications with geometric accuracy and computational efficiency.

3. Results

This section presents the results and analysis in the same sequence as outlined in the methodology overview (Figure 2), ensuring clarity and logical progression. First, we report the outcomes of the mathematical directional movement artifact modeling, followed by the data preparation results. Next, we detail the findings from the directional movement artifact quantification preparation. Finally, we present the integrated results of 3D space mapping, optimal triplet selection, direction estimation, and weighted movement artifact quantification calculation, as these components are closely interdependent and best interpreted together.

3.1. Signal-Domain Representation of Movement Artifacts Results

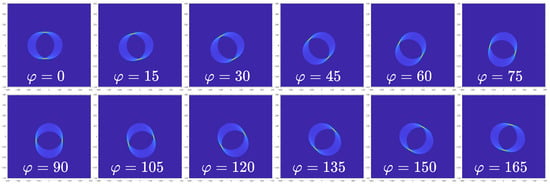

This section presents results synthesized from the mathematical model described previously, focusing on the effects of orientation () and velocity (v) on movement artifact formation. By systematically varying from to in increments, the model demonstrates clear directional movement artifact patterns, supporting the theoretical basis for estimating artifact direction , as illustrated in Figure 9.

Figure 9.

Synthesized movement artifact patterns for varying orientation angles with m/s.

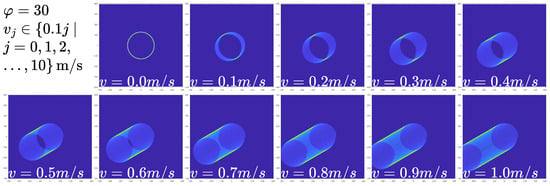

The visibility of movement artifacts increases with velocity, becoming prominent at m/s. However, excessively high velocities may produce artifacts that are indistinguishable from valid image structures, potentially exceeding the optimal detection range. In practical imaging scenarios, including medical applications, observable movement artifacts typically occur within the 0 to m/s range, as shown in Figure 10.

Figure 10.

Synthesized movement artifact patterns for varying velocities v with .

These synthesized results validate the model’s capacity to predict movement artifact direction and support the feasibility of the proposed estimation framework. Nevertheless, empirical validation requires real-world datasets with properties beyond those achievable through simulation, motivating the development of the experimental dataset described in Section 3.2.

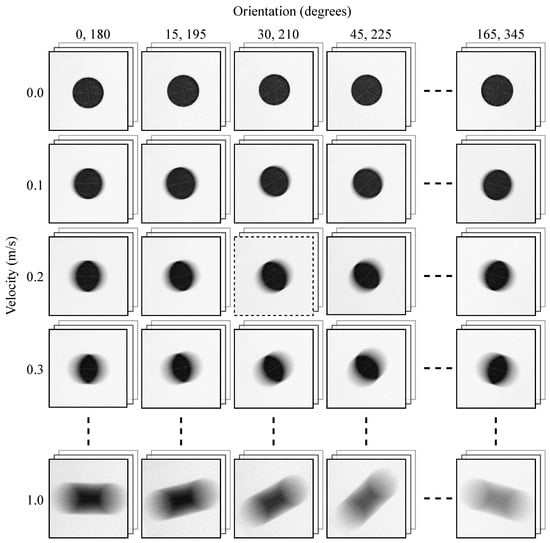

3.2. Data Preparation Results

The empirical image employed in the proposed methodology was acquired using a precision-controlled electronic apparatus, as described in Section 2.2. The resulting images are systematically organized according to the two principal parameters: orientation () and velocity (v). Specifically, columns represent orientation angles from , , , up to , and their complementary angles from , , , up to , arranged left to right. Rows correspond to velocities from 0, , , up to m/s, arranged top to bottom. For each combination, at least one sample image is acquired and processed, as shown in Figure 11. The empirical image at orientation and m/s velocity (highlighted by the dashed frame) is selected for detailed illustration in Section 3.3.

Figure 11.

Empirical images for all orientations and velocities in the dataset.

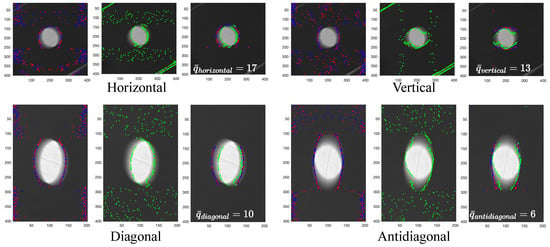

3.3. Directional MAQ Preparation Results

To quantify movement artifacts in pixels, empirical images at each orientation and velocity are processed using the detection and quantification pipeline. While all images undergo this procedure, results for the orientation and m/s velocity are presented in Figure 12 to illustrate the internal algorithmic steps. The figure displays outputs for MAPE, ROPE, and MAQ, arranged left to right for horizontal, diagonal, vertical, and antidiagonal directions. MAPE identifies artifact locations and their complements (red and blue dots), ROPE estimates the reference origin (green dots), and MAQ quantifies the pixel-wise displacement between artifact and origin, suppressing irrelevant data. These results correspond to the original image coordinates and exemplify the pipeline’s operation on a single instance.

Figure 12.

Directional MAQ preparation results for the empirical image at orientation and m/s velocity.

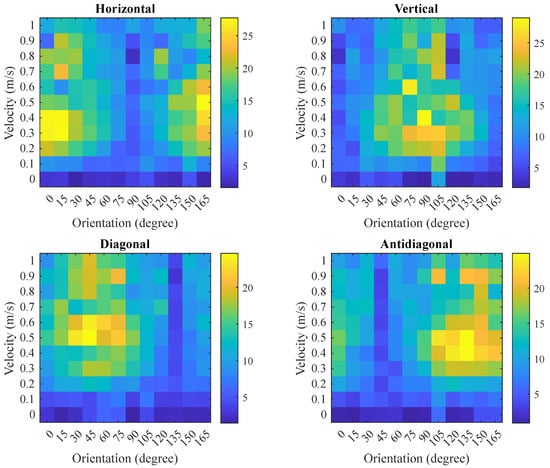

When applied across the entire dataset, the MAQ values for each image and direction are aggregated and visualized as a heatmap in Figure 13. The heatmap reveals that the horizontal, diagonal, vertical, and antidiagonal directions exhibit maximal MAQ responses at , , , and , respectively. In each direction, the MAQ value increases with velocity up to approximately m/s before declining, reflecting the relationship between artifact magnitude and motion parameters.

Figure 13.

Directional MAQ preparation results heatmap for all orientations and velocities. Color bar indicates MAQ value in pixels.

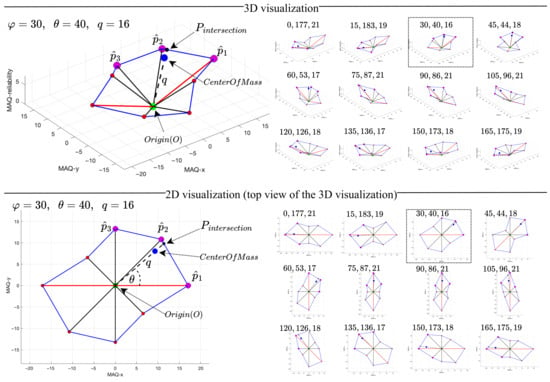

3.4. Direction Estimation and Weighted Distance Calculation Results

This section presents the experimental outputs of the proposed methodology: the estimated movement artifact direction (in degrees) and the weighted distance q (in pixels). Figure 14 illustrates representative results for and q at a velocity of m/s across all tested orientations ( to in increments), with both 3D and 2D visualizations provided. The 2D view corresponds to the top-down projection of the 3D space (excluding the MAQ-reliability axis, which does not affect calculation). Ground truth orientation is systematically compared to estimated direction using angular error and velocity-dependent analysis across m/s, demonstrating that estimated directions closely align with true orientations and confirming the effectiveness of the proposed approach. Figure 14 provides a comprehensive visual summary, while Table 1 reports the detailed estimated directions for all orientations and velocities in the dataset.

Figure 14.

Visualization of the integrated results of the proposed MADE algorithm for the empirical image at m/s velocity.

Table 1.

Estimated movement artifact direction () in degrees for each velocity (v) in m/s and orientation () in degrees.

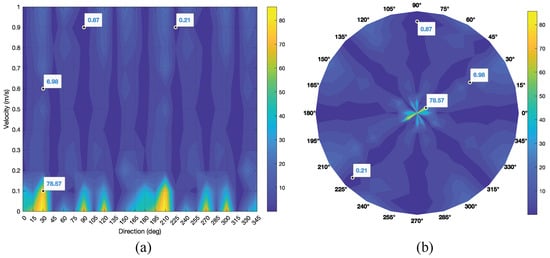

Table 1 summarizes the estimated movement artifact direction (degrees) across velocities v and ground-truth orientations . The tabulated values reveal a clear velocity dependence: estimation errors are notably higher at very low velocities ( m/s), where artifacts are present but lack sufficient separability to expose a dominant orientation. As v increases beyond m/s, directional cues become more pronounced and estimates stabilize across , yielding consistently low angular deviations. This behavior is consistent with the bidirectional nature of movement artifacts, where energy may manifest along both the primary and complementary angles; consequently, for certain pairs, estimates may fall within either 0– or 180– while still indicating the correct orientation class. For clarity, Figure 15 visualizes the error structure over , complementing the trends in the table.

Figure 15.

Estimation error for each velocity and orientation, (a) Cartesian coordinates, (b) polar coordinates.

Figure 15 presents the angular error distribution as a function of orientation and velocity. Figure 15a shows that errors peak at low velocities, particularly around at m/s, and then decrease markedly as v increases; for example, the error drops to approximately near at m/s. Beyond m/s, the error surface flattens across orientations, indicating stable estimation in the moderate-velocity regime. Figure 15b highlights the angular structure from to , with the lowest errors concentrated along the principal axes (, , , ). Error symmetry about complementary angles reflects the intrinsic bidirectionality; occasional flips can occur in low-SNR regimes but become rare once m/s.

Confining analysis to the four principal axes provides a favorable accuracy–efficiency trade-off for direction estimation. As emphasized in Section 2.4.1, these axes capture the dominant error minima, enabling substantial computational savings (approximately a reduction) while maintaining marginal angular deviation on the order of under typical conditions. Practically, an operational threshold emerges near m/s; once m/s, directional estimation becomes consistently high-performance and remains stable at greater velocities. The often-cited m/s boundary pertains to a separate magnitude-oriented consideration discussed subsequently and does not impose an upper limit on the reliability of the direction estimates.

In the following context, we turn to the weighted movement artifact magnitude q (pixels) to characterize where the upper-velocity constraint arises and how it informs practical operation.

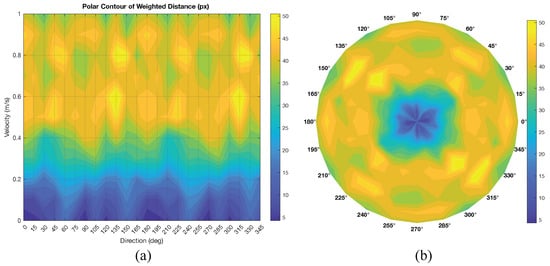

Table 2 reports the estimated weighted movement artifact magnitude q (in pixels) as a function of velocity v across orientations. These values are magnitude estimates—not errors—and they exhibit a clear velocity-driven trend: q remains small at low velocities, increases steadily with v, and approaches its maximum near m/s. This behavior is expected, since lower motion naturally yields smaller artifact magnitudes; consequently, there is no lower-bound limitation for q—small values at low v are valid and informative, rather than a failure mode. The visualizations in Figure 16 are consistent with the tabulated results: Figure 16a (Cartesian coordinates) highlights the growth of q with increasing v followed by a gentle roll-off, while Figure 16b (polar coordinates) shows radially expanding magnitudes with largely symmetric behavior over orientations. The only practical constraint for interpreting q arises beyond approximately m/s, where magnitude responses become ambiguous due to saturation and overlap of artifact structures with image content, leading to reduced separability. Therefore, in the context of weighted distance estimation, the upper-velocity regime defines the sole limitation, whereas low-velocity regimes simply reflect proportionally small but valid q values. These findings provide a pixel-scale, quantitatively interpretable measure of movement artifact strength that is directly actionable for downstream compensation and image quality assessment.

Table 2.

Estimated weighted movement artifact distance (q) in pixels for each velocity (v) in m/s and direction () in degrees.

Figure 16.

Plottings of estimated weighted movement artifact distance (q) in pixels, (a) Cartesian coordinates; (b) polar coordinates.

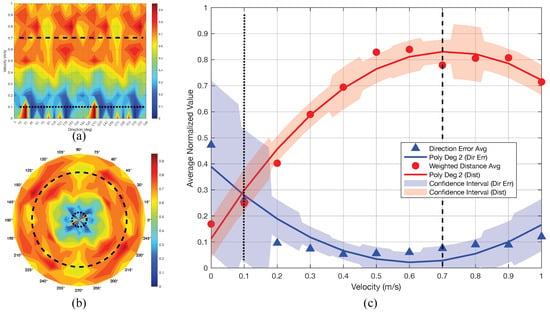

In practical applications, the overall system performance is determined by jointly considering the limitations of both movement artifact direction estimation and weighted distance quantification, which are directly influenced by changes in velocity v. This relationship is visualized in Figure 17. Figure 17a,b display the system’s capabilities in Cartesian and polar coordinates, respectively. The contour maps in these subfigures represent the average sum of normalized direction errors and normalized weighted distances, with values ranging from 0 (dark blue, indicating optimal performance) to 1 (dark red, indicating boundary limitation).

Figure 17.

Comprehensive performance evaluation of the proposed methodology. (a) Integrated performance analysis for direction estimation and weighted distance quantification, presented in Cartesian coordinates. (b) Corresponding performance visualization in polar coordinates. (c) Polynomial regression analysis of performance metrics.

The dark blue regions correspond to velocity ranges where there is sufficient information to accurately estimate both the direction and magnitude of movement artifacts. In contrast, dark red regions indicate performance degradation—either due to insufficient data at low velocities (making direction estimation unreliable) or ambiguity at high velocities (where weighted distance quantification becomes indistinct and artifacts may be confused with inherent image features). The continuous high-performance zone, spanning velocities from approximately to m/s (dotted to dashed lines), defines the operational “safe zone” for the proposed method. This range encompasses typical velocities encountered in practical imaging scenarios, where acceptable motion artifacts are generally below m/s.

Figure 17c presents a quadratic polynomial regression analysis of the normalized errors. The blue triangles and line represent the normalized average direction estimation error and its trend, with shaded areas indicating standard deviation across velocity ranges. The lower bound of reliable performance is observed near m/s (dotted line). The red line shows the normalized average weighted distance, with an upper bound of approximately m/s (dashed line).

Optimal system performance is achieved when the blue line (direction error) is minimized and the red line (weighted distance) is maximized, confirming that the most reliable operation occurs within the – m/s velocity range.

The computational complexity analysis presented in Table 3 highlights the practical efficiency of the proposed signal processing framework relative to conventional movement artifact direction estimation methods. Deep learning and multi-frame approaches incur significantly greater computational costs ( and , respectively) and resource requirements, limiting their suitability for real-time and single-frame applications. In contrast, the proposed pipeline—comprising MAPE, ROPE, MAQ, and the MADE algorithm—achieves comprehensive multi-directional estimation with an overall complexity of and minimal demands on memory, CPU, and GPU resources, as further detailed in Table 4.

Table 3.

Computational complexity analysis per image for major movement artifact direction estimation methods and proposed algorithm components.

Table 4.

System resource usage comparison for major movement artifact direction estimation methods and proposed algorithm components.

Furthermore, the MADE algorithm leverages 3D geometric analysis to deliver precise direction estimation with negligible additional overhead, while the entire pipeline remains highly efficient and suitable for real-time processing. The lightweight nature of the proposed method further ensures its applicability on single-board computers (e.g., Raspberry Pi), Microcontrollers (e.g., ESP32) and system-on-chip (SoC) platforms, supporting practical integration in embedded and portable imaging systems. The results confirm that the proposed method not only matches or exceeds the directional accuracy of existing techniques but also offers significant improvements in efficiency, scalability, and single-frame capability. These findings substantiate the theoretical and practical contributions of the signal processing approach, positioning it as a robust alternative to deep learning and frequency domain methods for motion blur analysis, especially movement artifact direction estimation.

4. Discussion

The proposed method leverages signal processing principles to enable real-time movement artifact direction estimation with minimal computational resources, contrasting sharply with deep learning and multi-frame methods that demand substantial hardware and computational complexity. The pipeline’s simplicity allows deployment on conventional embedded systems (Raspberry Pi, ESP32, system-on-chip platforms) requiring only 10 MB RAM and a single CPU core, making it ideal for resource-constrained and portable imaging applications.

The framework is grounded in discrete signal processing, utilizing spatial self-similarity analysis, correlation, and related operations. While the core processes are straightforward, practical implementations may benefit from fast algorithms, such as the Fast Fourier Transform, to further reduce computational complexity from to in certain sub-components. Additionally, the pipeline is amenable to parallelization; for example, the Directional MAQ Preparation step can be distributed across GPU cores, as each image row is processed independently, potentially reducing processing time by a factor of N.

Although the primary focus is on movement artifact direction estimation across the full angular range (–), direct comparison of estimation errors with other methods is challenging due to differences in task objectives, accuracy metrics, and processing speed priorities. The diversity of approaches—ranging from deep learning and multi-frame analysis to frequency domain and conventional computer vision—further complicates fair benchmarking. This work emphasizes a theoretically fundamental signal processing approach, enabling robust operation on real-world data and resource-limited hardware.

Several limitations merit consideration for practical deployment. The methodology demonstrates robust performance under controlled conditions but faces challenges including illumination variability, where intensity variations may be misinterpreted as movement artifacts, and noise sensitivity in low-quality images, despite EMA noise suppression in ROPE. Complex background scenarios with textured backgrounds or multiple objects may introduce false positives, while the motion pattern assumptions of linear motion with oscillatory components may limit accuracy for irregular movement patterns. The dataset employed features velocity increments of 0.1 m/s, which may obscure subtle phenomena at low velocities and impact directional accuracy. Finer sampling intervals and denser orientation steps (e.g., reducing from 15° to 10°, 5°, or 1°) could enhance resolution but require extensive data preparation. While the current pipeline focuses on four principal directions, adaptive selection of additional axes (e.g., 8, 16, or 32) may further improve accuracy, albeit with increased computational demands. Expanding to more complex patterns, including irregular object geometries, textured surfaces, multi-object scenarios, and realistic medical imaging conditions with tissue heterogeneity, could reveal new insights into algorithm robustness and applicability. Future validation should include varying noise levels (Gaussian, salt-and-pepper, and speckle), illumination conditions (non-uniform lighting, shadows, and contrast variations), motion complexity (non-linear trajectories, acceleration patterns), and adaptive parameter selection for enhanced robustness across diverse imaging modalities and clinical scenarios.

Future research will focus on integrating the estimated direction and magnitude parameters with Modified-DFT-based compensation techniques to evaluate overall artifact correction performance. The methodology will then be extended to real-world imaging applications, particularly the LUIAS, where movement artifacts significantly impact image quality assessment. By enabling accurate artifact characterization and compensation, this work aims to enhance surveillance capabilities and improve detection accuracy for high-risk patients, supporting timely identification of abnormalities by healthcare professionals.

5. Conclusions

This work presents a signal processing-based framework for movement artifact direction estimation in single-frame images, addressing key limitations of existing deep learning, frequency domain, and multi-frame approaches. By integrating multi-directional preprocessing algorithms (MAPE, ROPE, MAQ) with the MADE algorithm’s 3D geometric analysis, the proposed method achieves comprehensive estimation of two principal outputs: the direction of movement artifacts (in degrees) and their weighted quantity (in pixels). These outputs provide both precise angular information and quantitative assessment of artifact magnitude, supporting interpretable and actionable results for downstream applications.

Experimental results demonstrate robust performance and instantaneous processing capability under typical computing environments. Theoretically, the approach offers a fundamental spatial domain solution for accurate movement artifact quantification and direction estimation. Practically, it demonstrates potential applicability in domains such as medical imaging, surveillance, and embedded systems. Limitations include dataset granularity and the scope of tested image shapes, which may be addressed in future work by expanding sampling intervals and exploring adaptive multi-directional analysis. Future research will focus on applying the methodology to real-world movement artifact compensation, particularly in the LUIAS, to enhance image quality and surveillance capabilities.

In summary, the proposed framework delivers efficient and scalable movement artifact direction estimation, with explicit emphasis on both directional accuracy and quantitative measurement, advancing the theoretical and practical aspects of signal processing and image analysis.

Author Contributions

W.N. conceived the idea, designed the methodology, and wrote the manuscript. S.S. supervised the project and provided critical feedback on the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The dataset and code that support the findings of this study will be published publicly on GitHub at https://github.com/nonsakhoo (accessed on 2 December 2025). Until then, they are available from the corresponding author upon reasonable request.

Acknowledgments

This work was supported by the Graduate Production Strengthening Promotion Fund, Department of Computer Science, College of Computing, Khon Kaen University, Thailand. The authors used artificial intelligence (AI) tools for grammar correction, minor stylistic adjustments, and LaTeX formatting support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| DCT | Discrete Cosine Transform |

| DFT | Discrete Fourier Transform |

| EMA | Exponential Moving Average |

| LUIAS | Liver Ultrasound Image Analysis System |

| MADE | Movement Artifact Direction Estimation |

| MAPE | Movement Artifact Position Estimation |

| MAQ | Movement Artifact Quantification |

| ROPE | Reference Origin Point Estimation |

| SNR | Signal-to-Noise Ratio |

Appendix A. Mathematical Formulations for Directional Matrix Construction

This appendix provides the complete mathematical formulations for diagonal and antidiagonal matrix construction referenced in Section 2.4.1.

Appendix A.1. Diagonal Direction Processing

Elements are collected along lines where the sum of row and column indices is constant (), yielding diagonal vectors. Each diagonal of length is defined as:

To maintain spatial consistency, diagonals are reversed and center-aligned within a matrix of size , where :

where is the centering offset.

Central cropping is then applied to remove padding artifacts:

with cropping boundaries determined by halving the matrix dimensions around the center:

Appendix A.2. Antidiagonal Direction Processing

Elements are collected along lines where the difference of column and row indices plus the row count is constant (), yielding antidiagonal vectors:

Following the same centering and cropping procedure as for diagonals, the final antidiagonal matrix is obtained by applying a vertical reflection transformation to correct orientation:

where denotes the vertical reflection operator that reverses the row order.

Appendix A.3. Row-Wise Normalization

For each row k in the directional matrix , the row mean and standard deviation are defined as:

The normalization ensures that each scanline has zero mean and unit variance, providing the normalized image matrix that serves as input to the detection and quantification pipeline.

References

- Tang, J.; Postnov, D.D.; Kilic, K.; Erdener, S.E.; Lee, B.; Giblin, J.T.; Szabo, T.L.; Boas, D.A. Functional Ultrasound Speckle Decorrelation-Based Velocimetry of the Brain. Adv. Sci. 2020, 7, 2001044. [Google Scholar] [CrossRef] [PubMed]

- Lyu, Q.; Shan, H.; Xie, Y.; Kwan, A.C.; Otaki, Y.; Kuronuma, K.; Li, D.; Wang, G. Cine Cardiac MRI Motion Artifact Reduction Using a Recurrent Neural Network. IEEE Trans. Med. Imaging 2021, 40, 2170–2181. [Google Scholar] [CrossRef] [PubMed]

- Atkinson, D.; Hill, D.L.G.; Stoyle, P.N.R.; Summers, P.E.; Keevil, S.F. Automatic correction of movement artifacts in magnetic resonance images using an entropy focus criterion. IEEE Trans. Med. Imaging 1997, 16, 903–910. [Google Scholar] [CrossRef] [PubMed]

- Edelman, V.; Chassidim, H.; Rabaev, I. Reducing Patient Movement During Magnetic Resonance Imaging: A Case Study. Electronics 2025, 14, 668. [Google Scholar] [CrossRef]

- Kostyrko, B.; Rubarth, K.; Althoff, C.; Zibell, M.; Neizert, C.A.; Poch, F.; Torsello, G.F.; Gebauer, B.; Lehmann, K.; Niehues, S.M.; et al. Evaluation of Different Registration Algorithms to Reduce Motion Artifacts in CT-Thermography (CTT). Diagnostics 2023, 13, 2076. [Google Scholar] [CrossRef] [PubMed]

- Yamashita, Y.; Yoshikawa, T.; Yamazaki, H.; Kawaji, Y.; Tamura, T.; Hatamaru, K.; Itonaga, M.; Ashida, R.; Ida, Y.; Maekita, T.; et al. A Novel Endoscopic Ultrasonography Imaging Technique for Depicting Microcirculation in Pancreatobiliary Lesions without the Need for Contrast-Enhancement: A Prospective Exploratory Study. Diagnostics 2021, 11, 2018. [Google Scholar] [CrossRef]

- Nonsakhoo, W.; Sirisawat, P.; Saiyod, S.; Benjamas, N. Angle of arrival estimation by using stereo ultrasonic technique for local positioning system. In Proceedings of the 2017 IEEE 13th International Colloquium on Signal Processing and its Applications (CSPA), Penang, Malaysia, 10–12 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 112–117. [Google Scholar] [CrossRef]

- Nonsakhoo, W.; Saiyod, S.; Moungmingsuk, N. Angle-of-Arrival Analysis of Multi-channel Stereo Ultrasonic Signal for Angular Coordinates Positioning. In Proceedings of the 2018 2nd International Conference on Imaging, Signal Processing and Communication (ICISPC), Kuala Lumpur, Malaysia, 20–22 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 125–131. [Google Scholar] [CrossRef]

- Povolny, A.; Kikura, H.; Ihara, T. Ultrasound Pulse-Echo Coupled with a Tracking Technique for Simultaneous Measurement of Multiple Bubbles. Sensors 2018, 18, 1327. [Google Scholar] [CrossRef]

- Hossain, M.S.; Ono, N.; Kanaya, S.; Altaf-Ul-Amin, M. No-Reference Blurred Image Detection from Colonoscopy Videos Using Walsh-Hadamard Transform and Kolmogorov Smirnov Test. In Proceedings of the 2024 IEEE International Conference on Imaging Systems and Techniques (IST), Tokyo, Japan, 14–16 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Kastryulin, S.; Zakirov, J.; Pezzotti, N.; Dylov, D.V. Image Quality Assessment for Magnetic Resonance Imaging. IEEE Access 2023, 11, 14154–14168. [Google Scholar] [CrossRef]

- Fujinami, K.; Shirai, K. Performance Evaluation of Cross-Correlation Based Photoacoustic Measurement of a Single Object with Sinusoidal Linear Motion. Appl. Sci. 2023, 13, 13202. [Google Scholar] [CrossRef]

- Harput, S.; Christensen-Jeffries, K.; Brown, J.; Li, Y.; Williams, K.J.; Davies, A.H.; Eckersley, R.J.; Dunsby, C.; Tang, M.-X. Two-Stage Motion Correction for Super-Resolution Ultrasound Imaging in Human Lower Limb. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2018, 65, 803–814. [Google Scholar] [CrossRef]

- Saiyod, S.; Wayalun, P.; Khorinphan, C.; Chaichawananit, J.; Boonkwang, S. A novel technique of liver ultrasound image enhancement by modified Fourier transform for Bile Duct Cancer surveillance. In Proceedings of the International Computer Science and Engineering Conference (ICSEC), Chiang Mai, Thailand, 14–17 December 2016; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar] [CrossRef]

- Wayalun, P.; Saiyod, S.; Chamadol, N. A hybrid technique for enhancement of Periductal fibrosis ultrasound images for Cholangiocacinoma surveillance. In Proceedings of the 2014 6th International Conference on Information Technology and Electrical Engineering (ICITEE), Yogyakarta, Indonesia, 7–8 October 2014; IEEE: Piscataway, NJ, USA, 2014. [Google Scholar] [CrossRef]

- Cortés-Osorio, J.A.; Gómez-Mendoza, J.B.; Riaño-Rojas, J.C. Velocity Estimation From a Single Linear Motion Blurred Image Using Discrete Cosine Transform. IEEE Trans. Instrum. Meas. 2019, 68, 4038–4050. [Google Scholar] [CrossRef]

- Lu, Q.; Zhou, W.; Fang, L.; Li, H. Robust Blur Kernel Estimation for License Plate Images From Fast Moving Vehicles. IEEE Trans. Image Process. 2016, 25, 2311–2323. [Google Scholar] [CrossRef] [PubMed]

- Tani, J.; Mishra, S.; Wen, J.T. Motion Blur-Based State Estimation. IEEE Trans. Control Syst. Technol. 2016, 24, 1012–1019. [Google Scholar] [CrossRef]

- Abdullah-Al-Mamun, M.; Tyagi, V.; Zhao, H. A New Full-Reference Image Quality Metric for Motion Blur Profile Characterization. IEEE Access 2021, 9, 156361–156371. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, X.; Rawson, M.; Balan, R.; Herskovits, E.H.; Melhem, E.R.; Chang, L.; Wang, Z.; Ernst, T. Motion Correction for Brain MRI Using Deep Learning and a Novel Hybrid Loss Function. Algorithms 2024, 17, 215. [Google Scholar] [CrossRef]

- Adke, D.; Karnik, A.; Berman, H.; Mathi, S. Detection and Blur-Removal of Single Motion Blurred Image using Deep Convolutional Neural Network. In Proceedings of the 2021 International Conference on Artificial Intelligence and Computer Science Technology (ICAICST), Yogyakarta, Indonesia, 29–30 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 79–83. [Google Scholar]

- Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. arXiv 2018, arXiv:1711.07064. [Google Scholar] [CrossRef]

- Qin, J.; Ma, Y.; Huang, J.; Cai, Z.; Fan, F.; Du, Y. An End-to-End Network for Rotary Motion Deblurring in the Polar Coordinate System. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 2422–2435. [Google Scholar] [CrossRef]

- Liu, K.-H.; Yeh, C.-H.; Chung, J.-W.; Chang, C.-Y. A Motion Deblur Method Based on Multi-Scale High Frequency Residual Image Learning. IEEE Access 2020, 8, 66025–66036. [Google Scholar] [CrossRef]

- Garcia, R.E.; Alvarez, J.; Marcia, R.F. Machine Learning for Classifying Images with Motion Blur. In Proceedings of the 2022 21st IEEE International Conference on Machine Learning and Applications (ICMLA), Nassau, Bahamas, 12–14 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 490–494. [Google Scholar]

- Mondal, S.; Das, S.; Ghosh, P. Non-Blind and Blind Deconvolution Methodologies in Restoration of Motion-Blurred images. In Proceedings of the 2024 International Conference on Big Data Analytics in Bioinformatics (DABCon), Kolkata, India, 21–23 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 01–06. [Google Scholar]

- Zhang, K.; Luo, W.; Zhong, Y.; Ma, L.; Liu, W.; Li, H. Adversarial Spatio-Temporal Learning for Video Deblurring. IEEE Trans. Image Process. 2019, 28, 291–301. [Google Scholar] [CrossRef]

- Huang, S.; Liang, Q.; Xie, K.; He, Z.; Wen, C.; He, J.; Zhang, W. Improved Transformer-Based Deblurring of Commodity Videos in Dynamic Visual Cabinets. Electronics 2024, 13, 1440. [Google Scholar] [CrossRef]

- Lin, H.-Y. Vehicle Speed Detection and Identification from a Single Motion Blurred Image. In Proceedings of the 2005 Seventh IEEE Workshops on Applications of Computer Vision (WACV/MOTION’05), Breckenridge, CO, USA, 5–7 January 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 461–467. [Google Scholar]

- Kale, K.; Pawar, S.; Dhulekar, P. Moving object tracking using optical flow and motion vector estimation. In Proceedings of the 2015 4th International Conference on Reliability, Infocom Technologies and Optimization (ICRITO) (Trends and Future Directions), Noida, India, 2–4 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6. [Google Scholar]

- Yazdi, M.; Bouwmans, T. New trends on moving object detection in video images captured by a moving camera: A survey. Comput. Sci. Rev. 2018, 28, 157–177. [Google Scholar] [CrossRef]

- Chen, M.; Hashimoto, K. Vision System for Coarsely Estimating Motion Parameters for Unknown Fast Moving Objects in Space. Sensors 2017, 17, 2820. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Jia, D.; He, Z.; Wu, N. Multi-Frame Joint Detection Approach for Foreign Object Detection in Large-Volume Parenterals. Mathematics 2025, 13, 1333. [Google Scholar] [CrossRef]

- Hong, I.; Jones, J.; Casey, M. Ultrafast Elastic Motion Correction via Motion Deblurring. In Proceedings of the 2014 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC), Seattle, WA, USA, 8–15 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–2. [Google Scholar]

- Jung, Y.; Lee, H.; Jun, H.; Cho, S. Evaluation of Motion Artifact Correction Technique for Cone-Beam Computed Tomography Image Considering Blood Vessel Geometry. J. Clin. Med. 2024, 13, 2253. [Google Scholar] [CrossRef]

- Sengar, S.S.; Mukhopadhyay, S. Moving object detection based on frame difference and W4. Signal Image Video Process. 2017, 11, 1357–1364. [Google Scholar] [CrossRef]

- Chapel, M.-N.; Bouwmans, T. Moving objects detection with a moving camera: A comprehensive review. Comput. Sci. Rev. 2020, 38, 100310. [Google Scholar] [CrossRef]

- Ding, J.; Zhang, Z.; Yu, X.; Zhao, X.; Yan, Z. A Novel Moving Object Detection Algorithm Based on Robust Image Feature Threshold Segmentation with Improved Optical Flow Estimation. Appl. Sci. 2023, 13, 4854. [Google Scholar] [CrossRef]

- Shen, Y.; Dang, J.; Lei, T.; Luo, W. Motion blur parameters estimation based on frequency and spatial domain analysis. In Proceedings of the 2012 2nd International Conference on Consumer Electronics, Communications and Networks (CECNet), Yichang, China, 21–23 April 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 387–390. [Google Scholar]

- Soe, A.K.; Zhang, X. A simple PSF parameters estimation method for the de-blurring of linear motion blurred images using wiener filter in OpenCV. In Proceedings of the International Conference on Systems and Informatics (ICSAI2012), Yantai, China, 19–20 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1855–1860. [Google Scholar]

- Zhang, Z.; Liu, Y.; Xiong, Z.; Li, J.; Zhang, M. Focus and Blurriness Measure Using Reorganized DCT Coefficients for an Autofocus Application. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 15–30. [Google Scholar] [CrossRef]

- Chen, S.; Liu, D.; Cheng, F.; Xu, J. Multi-Mode Imaging of Ambient Background Noise for Karst Detection in the Limestone Area Based on Frequency-Bessel Transform. Appl. Sci. 2023, 13, 5135. [Google Scholar] [CrossRef]

- Xu, Y.; Xie, X.; Zhou, L.; Xi, B.; Yan, L. Noise Characteristics and Denoising Methods of Long-Offset Transient Electromagnetic Method. Minerals 2023, 13, 1084. [Google Scholar] [CrossRef]

- Tanaka, M.; Yoneji, K.; Okutomi, M. Motion Blur Parameter Identification from a Linearly Blurred Image. In Proceedings of the 2007 Digest of Technical Papers International Conference on Consumer Electronics, Las Vegas, NV, USA, 10–14 January 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 1–2. [Google Scholar]

- Ye, W.; Ma, K.-K. Blurriness-Guided Unsharp Masking. IEEE Trans. Image Process. 2018, 27, 4465–4477. [Google Scholar] [CrossRef] [PubMed]

- Nonsakhoo, W.; Saiyod, S.; Sirisawat, P.; Suwanwerakamtorn, R.; Chamadol, N.; Khuntikeo, N. Liver Ultrasound Image Classification of Periductal Fibrosis Based on Transfer Learning and FCNet for Liver Ultrasound Images Analysis System. In Proceedings of the 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 19–20 February 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Pang, Y.; Zhu, H.; Li, X.; Pan, J. Motion Blur Detection With an Indicator Function for Surveillance Machines. IEEE Trans. Ind. Electron. 2016, 63, 5592–5601. [Google Scholar] [CrossRef]

- Gong, H.; Zhou, Z.; Pan, J.; Yang, M.; Ding, H.; Zhu, X.; Wu, Q. Image Motion Blur Mechanism-Based Measurement Method for Low-Frequency Vibration Amplitude and Direction. IEEE Sens. J. 2024, 24, 41301–41310. [Google Scholar] [CrossRef]