Abstract

Neuroimaging assumes spatial and temporal uniformity, yet brain activity exhibits a multifractal cascade structure with intermittent bursts and long-range dependencies. We use controlled simulations to test how well standard sampling strategies (random, grid-based, and hierarchical, across a range of sensor counts) recover statistical properties (mean, variability, burstiness, and fractal dimension) from synthetic multifractal brain fields. Estimation errors deviate substantially from the classical $N^{-1/2}$ scaling expected under independent sampling. For higher-order statistics such as burstiness, error curves are much flatter in log–log space: orders-of-magnitude increases in sensor density yield only modest improvement. Random sampling gives the lowest and most stable errors for all four statistics, whereas region-based hierarchical sampling fails to converge even for the mean and standard deviation. These results suggest that, if brain activity is multifractal, current neuroimaging underestimates brain complexity and variability, with implications for interpreting both healthy and pathological brain function.

1. Introduction

A hippocampal sharp-wave ripple lasts 50 ms and spans roughly 1 mm³, yet fMRI samples every 2 s across 27 mm³ voxels. This mismatch reflects a deeper problem: neuroimaging instruments (EEG: electrodes; MEG: sensors; fMRI: millimeter voxels sampled at intervals of seconds) assume spatial and temporal uniformity that the brain does not possess [1,2]. Growing evidence from animal models and humans points instead to multifractal cascade structure [3,4,5,6,7,8,9], where variability is distributed unevenly across scales, producing intermittent bursts (hippocampal sharp-wave ripples, cortical up-states) nested within ongoing fluctuations [10,11]. Scale-free avalanche behavior in human MEG [12] and cross-frequency phase synchronization [13] reveal dependencies that linear models cannot explain. If this multifractal structure is fundamental, then current sampling must systematically mischaracterize brain function.

Multifractal cascades violate the independent and identically distributed (IID) assumptions underlying neuroimaging [14,15,16,17,18,19,20]. Their hallmarks (scale invariance, long-range dependence, and regionally heterogeneous scaling [21,22,23]) mean that limited sampling can underestimate variability, miss rare but consequential events, and obscure hierarchical organization [24,25,26,27]. Standard preprocessing of brain activity may suppress informative variability, plausibly contributing to under-detection of complexity changes in neurological and psychiatric conditions [28,29,30,31]. Classical intuitions about sampling efficiency ($N^{-1/2}$ convergence) become unreliable [32], with implications for both normal function and pathology.

Most prior work has examined temporal multifractality using long time series (EEG, MEG, fMRI) [3,4,5] and methods such as multifractal detrended fluctuation analysis [33,34,35]. We address a complementary but distinct issue: the spatial multifractality of brain activity fields, i.e., how variability is distributed across cortical regions at a given moment. This spatial focus interrogates fundamental measurement constraints: how many sensors are needed, which layouts are robust, and whether estimation error follows classical $N^{-1/2}$ convergence or the slower rates expected in multifractal systems.

We use controlled simulations to probe what different sampling methods can recover from synthetic brain fields generated by multifractal cascades. We examine (i) how sampling density (from to ) affects estimation accuracy for mean, variability, burstiness, and fractal dimension; (ii) how random, grid-based, and hierarchical layouts compare across regions with varying complexity; and (iii) whether estimation error follows the IID benchmark [32] or exhibits slower convergence characteristic of multifractal cascade structure [14,15,16,17,18,19,20].

Brain dynamics span roughly ten orders of magnitude in space and time [36,37,38,39], making sampling limits particularly acute. By comparing estimates to known ground truth, we identify which statistical measures degrade, which sampling strategies remain resilient, and where sensor density offers diminishing returns. The results have direct implications for neuroimaging design: recognizing multifractality motivates sampling and inference strategies matched to hierarchical dependencies across spatial and temporal scales, potentially improving sensitivity to clinically relevant changes [40,41,42,43] and bridging cellular and large-scale dynamics [36,37,38,39].

2. Methods

2.1. Generation of Synthetic Brain Data

2.1.1. Lattice and Global Settings

All simulations were performed in Matlab 2024a (MathWorks, Natick, MA, USA) on a lattice. We ran 100 independent simulations and simulated time steps per simulation. Sample sizes were as follows: .

2.1.2. Template Generation (Mask and Regions)

We constructed a disc-like brain mask centered at the image midpoint with a radius of the image size. The binary disc was randomly eroded by retaining only pixels where an auxiliary uniform noise field satisfied , then Gaussian-smoothed ( pixels) and thresholded at . Mesoscale heterogeneity was imposed by six Gaussian “blob” regions inside . For each blob , a random in-mask center was selected; a Gaussian field with width was formed, multiplied by , and thresholded at to assign its voxels to a region label i. Voxels in not assigned by any blob were set to a catch-all region, yielding disjoint regions that partition .

2.1.3. Regional Multiplicative Cascade

We initialized on and generated a quad-tree multiplicative cascade independently within each . The recursion depth was . At each level, the current sub-quadrant received an independent lognormal multiplier:

with . Region-specific burst factors were drawn once per region per simulation as follows:

Multipliers were applied only within . No renormalization across levels was applied. Region fields were merged and masked to to form .
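The recursion described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' MATLAB code: the function name `quadtree_cascade`, the optional `mask` argument, and the mean-one parameterization of the lognormal multiplier are hypothetical choices, and the lattice size, depth, and multiplier width used in the study are not reproduced here.

```python
import math
import random


def quadtree_cascade(size, depth, sigma, rng, mask=None):
    """Build a size x size field with a quad-tree lognormal cascade.

    Assumptions (not taken from the paper): `size` is a power of two and
    each multiplier is lognormal with unit mean, exp(N(-sigma^2/2, sigma^2)).
    If `mask` is given, multipliers are applied only where mask[r][c] is true.
    No renormalization across levels is applied.
    """
    field = [[1.0] * size for _ in range(size)]

    def recurse(r0, c0, length, level):
        # Stop at the requested depth or when a square can no longer be split.
        if level == depth or length < 2:
            return
        half = length // 2
        for dr in (0, half):
            for dc in (0, half):
                # One independent multiplier per sub-quadrant.
                w = math.exp(rng.gauss(-0.5 * sigma ** 2, sigma))
                for r in range(r0 + dr, r0 + dr + half):
                    for c in range(c0 + dc, c0 + dc + half):
                        if mask is None or mask[r][c]:
                            field[r][c] *= w
                recurse(r0 + dr, c0 + dc, half, level + 1)

    recurse(0, 0, size, 0)
    return field
```

Calling `quadtree_cascade(16, 3, 0.4, random.Random(0))` returns a strictly positive 16 × 16 field that is piecewise constant on 2 × 2 blocks. A region-specific burst factor could enter by scaling `sigma` per region, but the exact rule used in the study is not recoverable from the text.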

2.1.4. Temporal Evolution

To induce temporal persistence without additional regional modulation, the field evolved according to the following:

where each is a fresh cascade on generated as above but using a uniform region mask (a single region); is IID Gaussian noise with standard deviation supported on . Non-negative activity was enforced at each step via ; values outside were zeroed.

2.1.5. Multifractal Analysis

We estimated the spatial multifractal spectrum with the Chhabra–Jensen direct method [44,45]. For dyadic box sizes starting at 4 pixels and increasing up to , we tiled the field and computed normalized box masses from the mean activity within each box. For moment orders in steps of 1, we formed and estimated

as slopes from linear regressions versus across the available scales. Only q-values whose fits satisfied (i.e., ) were retained. All analyses used the final snapshot within the mask .
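For readers unfamiliar with the Chhabra–Jensen direct method, the following Python sketch shows its core steps: normalized box masses, the q-weighted measure, and regression of the two weighted sums against the log box size. Function names are hypothetical, box masses are formed from sums rather than means (equivalent after normalization when all boxes are the same size), and the goodness-of-fit filter on q is omitted because its threshold is not recoverable from the text.

```python
import math


def box_masses(field, box):
    """Normalized masses of non-empty, non-overlapping box x box tiles."""
    n = len(field)
    masses = []
    for r0 in range(0, n - box + 1, box):
        for c0 in range(0, n - box + 1, box):
            m = sum(field[r][c]
                    for r in range(r0, r0 + box)
                    for c in range(c0, c0 + box))
            if m > 0:
                masses.append(m)
    total = sum(masses)
    return [m / total for m in masses]


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def chhabra_jensen(field, box_sizes, qs):
    """Return {q: (alpha(q), f(q))} estimated across the given box sizes."""
    n = len(field)
    log_eps = [math.log(b / n) for b in box_sizes]
    spectrum = {}
    for q in qs:
        alpha_sums, f_sums = [], []
        for b in box_sizes:
            p = box_masses(field, b)
            z = sum(pi ** q for pi in p)
            mu = [pi ** q / z for pi in p]  # q-weighted measure
            alpha_sums.append(sum(m * math.log(pi) for m, pi in zip(mu, p)))
            f_sums.append(sum(m * math.log(m) for m in mu if m > 0.0))
        spectrum[q] = (ols_slope(log_eps, alpha_sums),
                       ols_slope(log_eps, f_sums))
    return spectrum
```

As a sanity check, a spatially uniform field yields α(q) = f(q) = 2 for every q (a single point in the spectrum), whereas a cascade field yields a spread of α values whose range is the spectrum width.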

2.2. Sampling Strategies

We compared three strategies at each N: Random draws N distinct in-mask voxels uniformly; Grid uses a stride and scans row–column order, accepting in-mask coordinates until exactly N are collected; Hierarchical allocates samples per region and distributes the remainder uniformly at random over regions, then samples uniformly without replacement within each .
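The three layouts can be illustrated with a short Python sketch. It is a hedged approximation of the procedure described above: the function names are hypothetical, the grid stride is passed in as a parameter because the stride formula is not recoverable from the text, and the per-region base allocation is assumed here to be the integer part of N divided by the number of regions.

```python
import random


def sample_random(mask_coords, n, rng):
    """Draw n distinct in-mask coordinates uniformly at random."""
    return rng.sample(mask_coords, n)


def sample_grid(mask, n, stride):
    """Scan in row-column order with a fixed stride, keeping in-mask points.

    Stops as soon as n points are collected; returns fewer than n if the
    stride is too coarse for the mask.
    """
    size = len(mask)
    picked = []
    for r in range(0, size, stride):
        for c in range(0, size, stride):
            if mask[r][c]:
                picked.append((r, c))
                if len(picked) == n:
                    return picked
    return picked


def sample_hierarchical(regions, n, rng):
    """Allocate samples per region, then sample without replacement within each.

    `regions` maps a region label to its list of coordinates. The base quota
    n // K per region is an assumption; the remainder is spread uniformly at
    random over regions, as described in the text.
    """
    labels = sorted(regions)
    quota = {k: n // len(labels) for k in labels}
    for _ in range(n - sum(quota.values())):
        quota[rng.choice(labels)] += 1
    picked = []
    for k in labels:
        take = min(quota[k], len(regions[k]))  # a small region may cap its quota
        picked.extend(rng.sample(regions[k], take))
    return picked
```

Note that the equal-quota rule samples small regions more densely than large ones, which is the unequal inclusion probability that matters when moments are estimated without weights.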

2.3. Statistical Analysis

2.3.1. Mean and Standard Deviation

Let and . Ground-truth mean and standard deviation were as follows:

For a sample , , and

2.3.2. Burstiness

Burstiness combined pooled skewness and excess kurtosis:
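The two ingredients of the burstiness index are standard moment ratios, sketched below in Python. The function name is hypothetical, population (biased) moments are assumed, and the rule used in the study to combine the two quantities into a single index is deliberately not reproduced because it is not recoverable from the text.

```python
def skew_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) using population central moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skew = m3 / m2 ** 1.5
    excess_kurt = m4 / m2 ** 2 - 3.0  # zero for a Gaussian
    return skew, excess_kurt
```

A sample dominated by one large value, such as `[0, 0, 0, 0, 10]`, gives a positive skewness, while an evenly spread sample such as `[1, 2, 3, 4, 5]` gives zero skewness and negative excess kurtosis. Both moments depend strongly on rare extreme values, which is why they are hard to recover from sparse samples.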

2.3.3. Fractal Dimension

For ground truth, we used box counting on within . Let and be the spatial mean and standard deviation over ; we thresholded at to obtain a binary pattern and counted occupied boxes for . We regressed on over all valid scales and took the negative slope as , clamped to ; if fewer than three valid scales existed, . For sampled data, we reconstructed a sparse image having observed values at the sampled coordinates and applied the same box-counting estimator when ; otherwise .
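A minimal Python sketch of a box-counting estimator of this kind follows. It takes an already thresholded binary pattern as input; the function name is hypothetical, the clamping range is omitted, and returning NaN when fewer than three valid scales exist is an assumption made here, not the fallback value used in the study.

```python
import math


def box_count_dimension(binary, box_sizes):
    """Estimate a box-counting dimension from a square binary pattern.

    Counts the boxes of each size that contain at least one true pixel and
    returns the negative OLS slope of log(count) against log(box size).
    """
    n = len(binary)
    xs, ys = [], []
    for b in box_sizes:
        count = 0
        for r0 in range(0, n, b):
            for c0 in range(0, n, b):
                if any(binary[r][c]
                       for r in range(r0, min(r0 + b, n))
                       for c in range(c0, min(c0 + b, n))):
                    count += 1
        if count > 0:  # a scale is valid only if some box is occupied
            xs.append(math.log(b))
            ys.append(math.log(count))
    if len(xs) < 3:
        return float("nan")  # assumed fallback; not the paper's value
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return -sxy / sxx
```

On a 16 × 16 lattice with box sizes 1, 2, 4, and 8, a fully occupied pattern gives a dimension of 2, a single occupied row gives 1, and a single occupied pixel gives 0. A sparse image built from a few sampled coordinates behaves like scattered points at small box sizes, which is one reason sparse sampling distorts this estimator.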

2.3.4. Error Metrics and Aggregation

For each statistic , sampling method, and N, percentage relative error was as follows:

with -regularization applied to for when needed. Errors were averaged across the 100 simulations.
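The error metric can be sketched as follows in Python. The names are hypothetical, and the regularization shown (flooring the denominator at a small constant) is one plausible reading of the regularization mentioned above rather than the exact rule used in the study.

```python
def percent_error(estimate, truth, eps=1e-12):
    """Percentage relative error, with the denominator floored at eps."""
    denom = max(abs(truth), eps)
    return 100.0 * abs(estimate - truth) / denom


def mean_percent_error(estimates, truths, eps=1e-12):
    """Average the percentage error over paired simulations."""
    errors = [percent_error(e, t, eps) for e, t in zip(estimates, truths)]
    return sum(errors) / len(errors)
```

For example, an estimate of 1.1 against a true value of 1.0 gives an error of about 10%. Because the error is relative, statistics whose true value is near zero (excess kurtosis can be) produce very large percentages, which is where the regularization matters.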

3. Results

3.1. Visualization of Synthetic Cascade Fields

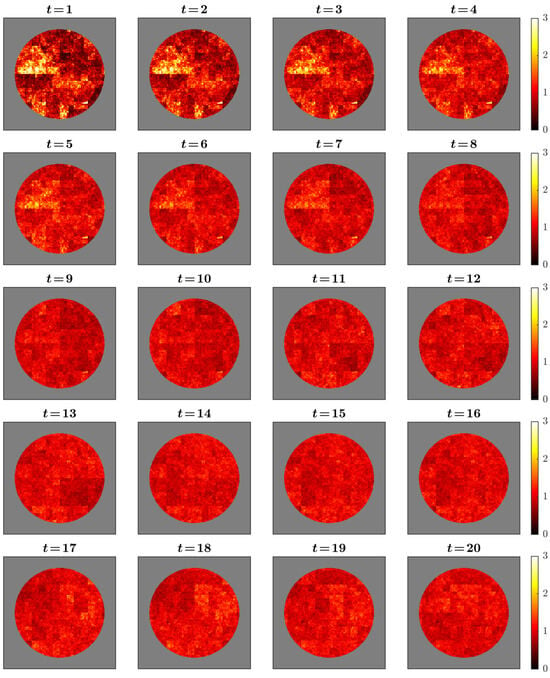

The simulated cascade fields captured the intended multifractal dynamics (Figure 1). Activity patterns appeared as intermittent clusters of high intensity embedded within broader regions of lower amplitude, consistent with the spatially heterogeneous distribution characteristic of multiplicative cascades. Successive time points showed mild temporal persistence, yielding gradual rather than abrupt transitions in spatial structure. Regional contrasts arose solely from controlled modulation of cascade multipliers across predefined masks, introduced to examine how spatial heterogeneity affects sampling accuracy. These patterns illustrate the internal behavior of the cascade model under the chosen parameters and do not assert correspondence to neurophysiological organization.

Figure 1.

Temporal evolution of the simulated cascade field across 20 time points. Values are shown within a disc-shaped mask with a slightly irregular boundary using a “hot” colormap (darker = lower amplitude, brighter = higher). The sequence illustrates spatial intermittency and mild temporal persistence characteristic of multiplicative cascades. Regional heterogeneity arises solely from region-specific modulation of cascade multipliers. Panels are illustrative of model behavior and do not assert neurophysiological realism.

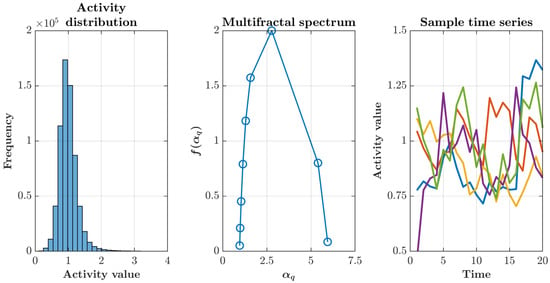

The statistical properties of this simulated activity were consistent with multifractal structure. Activity values across all time points exhibited a right-skewed, approximately lognormal distribution characteristic of multiplicative cascade processes, with a concentration of low-to-moderate values and a heavy tail extending toward rare but intense activity peaks (Figure 2, left). Analysis of the mass exponent $\tau(q)$ revealed nonlinear scaling, a deviation from the linear relationship expected for monofractal processes and therefore evidence of multifractality (Figure 2, middle). Time series extracted from five randomly selected points within the brain mask demonstrated heterogeneous temporal dynamics, with each location displaying distinct patterns of burstiness and autocorrelation while maintaining global statistical properties consistent with the underlying multifractal structure (Figure 2, right). Quantitative analysis of the multifractal spectrum confirmed spatial multifractality across all simulations. The spectrum width, defined as $\Delta\alpha = \alpha_{\max} - \alpha_{\min}$, averaged ( simulations), indicating multifractality.

Figure 2.

Statistical properties of the multifractal cascade simulation. (Left) Pooled histogram of activity values across all voxels and time points, showing a right-skewed, approximately lognormal distribution with a long upper tail, typical of multiplicative cascades. (Middle) Singularity (multifractal) spectrum $f(\alpha)$ versus $\alpha$ obtained using the Chhabra–Jensen direct method [44,45]. Its broad, concave shape with a clear maximum indicates genuine multifractality; the spectrum’s width reflects intermittency and spatial heterogeneity. (Right) Sample time series from five randomly chosen locations illustrating heterogeneous temporal dynamics with intermittent bursts and mild persistence. Together, these features validate the cascade generator and motivate testing how different sampling layouts recover statistics under multifractal structure.

3.2. Sampling Performance Across Statistics

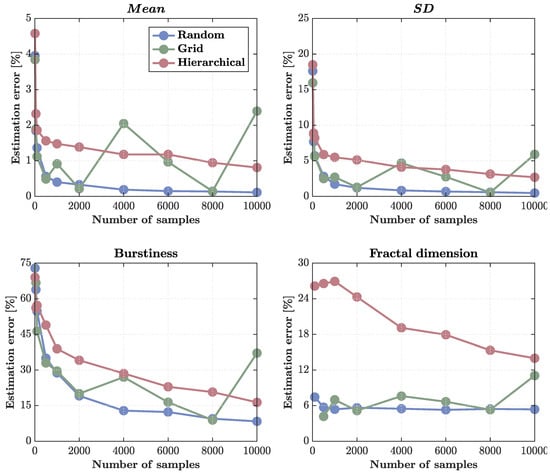

Estimation accuracy improved with increasing sample size for all metrics, but the rate and stability of convergence differed sharply across statistics and sampling strategies (Figure 3 and Figure 4).

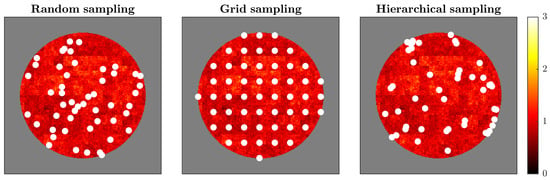

Figure 3.

Comparison of sampling strategies applied to the multifractal brain simulation. The figure shows three sampling approaches (50 sampling points each, indicated by white dots) overlaid on the final time point of simulated brain activity. (Left) Random sampling distributes points uniformly across the brain mask without considering the underlying spatial structure. (Middle) Grid sampling arranges points in a regular geometric lattice, providing systematic spatial coverage. (Right) Hierarchical sampling allocates points across predefined regions, ensuring representation from all spatial zones while maintaining randomness within each region. The background colormap shows activity intensity from low (dark red/black) to high (bright yellow). Each strategy reflects a different principle for sensor placement, with implications for capturing multifractal dynamics: random sampling assumes spatial homogeneity, grid sampling prioritizes geometric regularity, and hierarchical sampling respects regional structure while balancing coverage.

Figure 4.

Estimation errors for different sampling methods across sample sizes. Percentage errors are shown for four statistics (mean, standard deviation, burstiness, and fractal dimension) estimated from the multifractal cascade simulation as a function of sample size ( samples). (Top left) Mean estimation error shows that only random sampling achieves classical $N^{-1/2}$ convergence, reaching near-zero error () by . Grid sampling fluctuates with sample size, and hierarchical sampling fails to converge, maintaining residual errors around due to redundancy within clusters. (Top right) Standard deviation errors show similar trends: random sampling converges rapidly, grid sampling remains noisy, and hierarchical sampling shows persistent bias and elevated error () even at high N. (Bottom left) Burstiness estimation error remains high for all methods, reflecting the intrinsic difficulty of capturing intermittent, heavy-tailed dynamics. Random sampling performs best ( at ), while hierarchical and grid sampling converge slowly and irregularly. (Bottom right) Fractal dimension errors are smallest and most stable under random sampling (), with grid sampling moderately higher and hierarchical sampling showing persistently higher error () due to spatial clustering and reduced effective sample size. Overall, hierarchical sampling shows weak to no convergence even for first-order statistics, owing to spatial redundancy in clustered designs.

First-order statistics (mean and standard deviation) were the most reliably estimated. For the mean, random sampling achieved near-perfect convergence, with errors dropping below by and approaching zero () at . Grid sampling performed moderately well but showed irregular fluctuations with sample size, likely reflecting aliasing with the cascade’s underlying spatial periodicities. Hierarchical sampling failed to achieve full convergence: its errors plateaued around , indicating that clustered, region-based allocation introduces redundancy that limits statistical independence even at large N (Figure 4, top left).

The same pattern held for the standard deviation (Figure 4, top right). Random sampling again achieved the lowest and most stable errors ( at high densities), grid sampling remained erratic, and hierarchical sampling retained a systematic upward bias, with residual errors of at the highest N. This lack of convergence suggests that hierarchical clustering effectively reduces the number of independent observations, yielding an effective sample size smaller than the nominal N.

Higher-order statistics exhibited markedly poorer scaling. Burstiness estimation remained dominated by large errors across all methods (Figure 4, bottom left). Random sampling outperformed the others, reducing errors from roughly at minimal sampling to about at , whereas grid and hierarchical sampling converged slowly and irregularly, plateauing at ∼15% and ∼30%, respectively. These patterns reflect the intrinsic difficulty of recovering heavy-tailed, intermittent statistics from finite, spatially correlated samples.

Fractal dimension estimation displayed similar trends (Figure 4, bottom right). Random sampling again produced the most consistent results (∼5% error across the entire range), grid sampling fluctuated between , and hierarchical sampling showed systematically higher errors (>15%), again reflecting the reduced independence of clustered observations.

Overall, these results demonstrate that random sampling offers the most statistically efficient and stable recovery of both first- and higher-order quantities, while hierarchical designs suffer from redundancy that prevents convergence even for simple moments. The findings emphasize that, in multifractal fields, the structure of sampling matters as much as the number of samples: clustered layouts degrade effective resolution and bias estimates, whereas decorrelated designs (random or jittered grids) preserve information across scales.

3.3. Scaling Properties

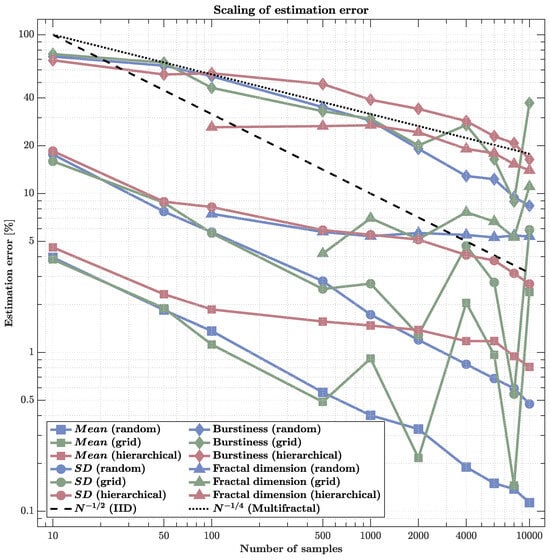

Analysis of the relationship between sample size and estimation error in log–log coordinates revealed qualitatively distinct scaling regimes across metrics and sampling strategies (Figure 5).

Figure 5.

Scaling of estimation error across sampling schemes and metrics (log–log). Errors are shown for mean, standard deviation, burstiness, and fractal dimension as sample size N increases. The black dashed line indicates the classical IID rate $N^{-1/2}$; the dotted line shows the slower rate typical of multifractal structure. Mean and standard deviation errors for random sampling closely track $N^{-1/2}$, while for grid sampling, they initially do so before flattening at high N. By contrast, under hierarchical sampling, even first-order statistics (mean and standard deviation) do not converge: errors remain elevated and non-monotonic with N, indicating that the branching, clustered design yields highly redundant observations, reduces effective sample size, and can bias moment estimates. Higher-order quantities (burstiness and dimension) converge far more slowly for all schemes, roughly near , with errors still at . Estimation accuracy thus depends as much on the sampling structure as on N. Hierarchical strategies are ill-suited for estimating moments; decorrelated designs (random or jittered grid) are preferable. Moreover, even large increases in N provide only modest gains for higher-order, multiscale statistics.

First-order statistics (mean and standard deviation) showed scaling near the classical $N^{-1/2}$ rate under random sampling, achieving subpercent errors at high densities (). Grid sampling followed a similar trend but exhibited mild irregularities due to aliasing with the cascade structure. Hierarchical sampling, however, deviated sharply: its errors remained roughly constant or even fluctuated with N, indicating non-convergence and effective loss of independence among samples caused by clustering within correlated regions.

Higher-order metrics demonstrated fundamentally slower scaling. Burstiness errors decreased approximately as , consistent with multifractal cascade behavior, and remained above even at maximal sampling. Fractal dimension estimates showed similar sluggish improvement, saturating around error despite increasing N by two orders of magnitude. These scaling behaviors collectively indicate that statistical efficiency collapses in multifractal fields: the gains from additional sensors diminish sharply once spatial correlations dominate, leaving residual estimation bias that cannot be reduced by density alone.
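The scaling exponents discussed in this section are slopes of error against sample size in log–log coordinates. A minimal Python sketch of that fit is given below; the function name is hypothetical, and the fit is a plain ordinary least-squares regression, which may differ from the procedure used to draw the reference lines in Figure 5.

```python
import math


def scaling_exponent(sample_sizes, errors):
    """Return the OLS slope of log(error) against log(N).

    A slope near -0.5 corresponds to the classical IID rate N^(-1/2);
    a slope closer to zero indicates slower convergence.
    """
    xs = [math.log(n) for n in sample_sizes]
    ys = [math.log(e) for e in errors]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx
```

Applied to synthetic errors of the form `5 * N ** -0.5`, the function returns -0.5; applied to errors that stay constant with N, it returns 0. Non-monotonic error curves, such as those reported for hierarchical sampling, are poorly summarized by a single slope and should be inspected directly.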

These results have direct implications for neuroimaging and large-scale sensing. While simple averages converge efficiently, metrics reflecting intermittency and scale-dependent organization (e.g., burstiness, fractal dimension) display structural limits on sampling precision. Thus, denser sensor arrays may provide little benefit unless sensor placement actively decorrelates measurements or adapts to the cascade hierarchy itself.

4. Discussion

Our simulations reveal a fundamental constraint: if brain activity exhibits multifractal cascade properties, then current neuroimaging systematically underestimates its complexity. The non-classical scaling between sample size and estimation error ( rather than ) indicates that even substantial increases in sensor density yield only modest gains for complex statistics. This directly challenges the assumption that incremental growth in sensor count proportionally improves measurement accuracy. Notably, even 2000 sampling points—far beyond routine practice—left large errors for burstiness and fractal dimension, raising doubts about whether standard neuroimaging can recover the full complexity of neural activity. These results point to a measurement challenge that transcends any single modality and argue for rethinking acquisition and analysis rather than simply scaling hardware.

The poor and often non-monotonic behavior of hierarchical sampling for the mean and standard deviation is expected in clustered designs applied to multifractal fields. First, clustering induces positive intra-cluster correlation $\rho$, so the effective sample size collapses from N to $N_{\mathrm{eff}} = N/[1 + (m-1)\rho]$, where m is the average within-region sample count and $\rho$ is the intra-region correlation. In multifractal fields, $\rho$ is large at many scales, making neighboring observations highly redundant and biasing moment estimates toward regional centroids. Second, our allocation rule (a floor of per region) creates unequal inclusion probabilities that are not corrected by weights; small, low-variance regions become over-represented while intermittent, high-amplitude “hot spots” are under-sampled. This design effect depresses $N_{\mathrm{eff}}$ and explains why errors do not decrease with N as $N^{-1/2}$ and can even fluctuate when N crosses dyadic cascade scales (aliasing with the cascade hierarchy). Practically, this means that adding sensors within the same regional clusters yields diminishing returns for first-order statistics.
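The design-effect argument can be made concrete with the standard approximation for clustered samples, in which the effective sample size is N divided by 1 + (m − 1)ρ. The Python sketch below uses a hypothetical function name, and the numbers in the usage note are illustrative values, not estimates from the simulations.

```python
def effective_sample_size(n, m, rho):
    """Effective sample size under intra-cluster correlation.

    n   : nominal number of samples
    m   : average number of samples per cluster (region)
    rho : intra-cluster correlation, between 0 and 1
    """
    return n / (1.0 + (m - 1.0) * rho)
```

With 700 samples spread over clusters of 100 and an intra-cluster correlation of 0.5, the effective sample size is about 14, so the expected error is closer to that of 14 independent samples than of 700. When ρ is zero, or when each cluster holds a single sample, the effective and nominal sample sizes coincide.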

A second, practical implication is that sampling design matters, although not in the direction that region-based stratification might suggest. In our simulations, random sampling gave the lowest and most stable errors for burstiness and fractal dimension, grid sampling was intermediate and irregular, and hierarchical sampling performed worst (Figure 4, bottom row). Allocating sensors by region therefore does not by itself improve accuracy: without weights that correct for unequal inclusion probabilities, it concentrates samples in correlated neighborhoods and under-samples localized, intermittent structure. Regular grids can alias with the dyadic cascade hierarchy, which may explain their erratic errors. Taken together, these findings favor decorrelated layouts (random or jittered) over both technologically convenient uniform grids and unweighted region-based allocation; whether anatomically informed, multi-resolution arrays can do better once allocation is properly weighted remains to be tested.

The heavy-tailed activity distributions observed here also have implications for preprocessing. Standard filtering and averaging procedures designed to reduce noise may suppress precisely those bursts that carry information, thereby masking individual differences and transients of clinical or cognitive relevance. In parallel, the slow convergence of higher-order estimates suggests that classical pipelines may underestimate true variability, potentially encouraging overconfidence in group-level summaries [46,47].

In our simulations, apparent “regional” differences in estimation accuracy reflect methodological artifacts—interactions between scaling properties and spatial sampling schemes—not intrinsic biological variation. Certain combinations of scaling behavior may require denser sampling to yield stable multifractal estimates. We therefore refrain from drawing inferences about dynamic brain properties solely from simulations and view these results as guidance for designing and testing sampling strategies that must be validated with empirical data. This interpretation is consistent with persistent difficulties in characterizing some areas (e.g., subcortical structures) despite hardware advances [48,49]; however, any claim about true regional differences must await direct empirical confirmation. Region-specific underestimation of burstiness and complexity, if present in vivo, could be consequential for neurological and psychiatric disorders given the diagnostic and prognostic value of neural variability [28,29,30,31].

These limitations are not insurmountable. Several complementary approaches could substantially improve the recovery of multifractal structure from limited samples. Model-based reconstruction that encodes cascade-like priors (e.g., lognormal/multiplicative cascades with long-range dependence) together with spatial regularizers consistent with intermittency may recover more structure than purely geometric interpolation [50]. Multiscale integration across modalities can leverage complementary spatial and temporal sensitivities via joint inverse modeling, cross-modal constraints, and harmonized preprocessing [51,52,53]. Adaptive sampling that reallocates sensors toward emergent hotspots—using information-theoretic criteria [54] in a closed loop—may optimize coverage of intermittent events. Finally, analyses explicitly tailored to multifractal processes—for example, wavelet-leader/wavelet-based estimators and detrended fluctuation approaches [33,34,35]—augmented with robust/quantile estimators and surrogate-data tests, may extract more information from sparse or noisy data than standard statistics.

Several limitations warrant acknowledgment. The synthetic brain model simplifies anatomy and physiology; incorporating white-matter connectivity and region-specific dynamics is a clear next step. Parameter choices were theory-driven and should be validated against empirical neuroimaging. Although we focused on spatial sampling, temporal constraints also shape inference and interpretability. Moreover, realistic sensor noise and physiological artifacts were not modeled here and should be included in future work to increase ecological validity.

Our findings are predictive, not confirmatory: they arise from controlled simulations that assume multifractal cascade structure and idealized sampling conditions. We have not demonstrated that identical error-scaling deviations occur in empirical neuroimaging, nor that our cascade generator is a mechanistic model of neural activity. The simulations establish what would happen if multifractal structure is present; empirical validation requires multimodal datasets with harmonized preprocessing, validated complexity metrics, and cross-scale analyses. This represents a clear priority for future work.

Within these constraints, the results carry a clear implication: if neural activity exhibits multifractal cascade properties, commonly used sampling strategies will systematically under-represent emergent complex dynamics [55]. Progress will not come primarily from denser uniform arrays—which our results show provide diminishing returns—but from optimized sampling strategies, multiscale integration, and analysis methods explicitly designed for intermittent, nonlinear structure. Given the hierarchical organization of the brain—from neurons to networks to systems—measurement and analysis should be comparably hierarchical [56,57,58,59]. If multifractality proves fundamental to neural dynamics, acknowledging it will be pivotal for next-generation diagnostics and interventions that operate effectively in the presence of cascade-like variability. The question is no longer whether to account for multifractality, but how.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing does not apply to this article as no new data were created or analyzed in this study.

Conflicts of Interest

The author declares no competing interest.

References

- Goense, J.; Bohraus, Y.; Logothetis, N.K. fMRI at high spatial resolution: Implications for BOLD-models. Front. Comput. Neurosci. 2016, 10, 66. [Google Scholar] [CrossRef] [PubMed]

- Kriegeskorte, N.; Cusack, R.; Bandettini, P. How does an fMRI voxel sample the neuronal activity pattern: Compact-kernel or complex spatiotemporal filter? NeuroImage 2010, 49, 1965–1976. [Google Scholar] [CrossRef]

- Czoch, A.; Kaposzta, Z.; Mukli, P.; Stylianou, O.; Eke, A.; Racz, F.S. Resting-state fractal brain connectivity is associated with impaired cognitive performance in healthy aging. GeroScience 2024, 46, 473–489. [Google Scholar] [CrossRef]

- Mukli, P.; Nagy, Z.; Racz, F.S.; Herman, P.; Eke, A. Impact of healthy aging on multifractal hemodynamic fluctuations in the human prefrontal cortex. Front. Physiol. 2018, 9, 1072. [Google Scholar] [CrossRef]

- Racz, F.S.; Mukli, P.; Nagy, Z.; Eke, A. Multifractal dynamics of resting-state functional connectivity in the prefrontal cortex. Physiol. Meas. 2018, 39, 024003. [Google Scholar] [CrossRef]

- Racz, F.S.; Stylianou, O.; Mukli, P.; Eke, A. Multifractal dynamic functional connectivity in the resting-state brain. Front. Physiol. 2018, 9, 1704. [Google Scholar] [CrossRef]

- Racz, F.S.; Stylianou, O.; Mukli, P.; Eke, A. Multifractal and entropy analysis of resting-state electroencephalography reveals spatial organization in local dynamic functional connectivity. Sci. Rep. 2019, 9, 13474. [Google Scholar] [CrossRef]

- Racz, F.S.; Czoch, A.; Kaposzta, Z.; Stylianou, O.; Mukli, P.; Eke, A. Multiple-resampling cross-spectral analysis: An unbiased tool for estimating fractal connectivity with an application to neurophysiological signals. Front. Physiol. 2022, 13, 817239. [Google Scholar] [CrossRef]

- Stylianou, O.; Racz, F.S.; Kim, K.; Kaposzta, Z.; Czoch, A.; Yabluchanskiy, A.; Eke, A.; Mukli, P. Multifractal functional connectivity analysis of electroencephalogram reveals reorganization of brain networks in a visual pattern recognition paradigm. Front. Hum. Neurosci. 2021, 15, 740225. [Google Scholar] [CrossRef] [PubMed]

- Buzsáki, G. Hippocampal sharp wave-ripple: A cognitive biomarker for episodic memory and planning. Hippocampus 2015, 25, 1073–1188. [Google Scholar] [CrossRef] [PubMed]

- Destexhe, A.; Contreras, D. Neuronal computations with stochastic network states. Science 2006, 314, 85–90. [Google Scholar] [CrossRef]

- Palva, J.M.; Palva, S. The correlation of the neuronal long-range temporal correlations, avalanche dynamics with the behavioral scaling laws and interindividual variability. In Criticality in Neural Systems; Plenz, D., Niebur, E., Eds.; Wiley-VCH Verlag GmbH & Co.: Weinheim, Germany, 2014; pp. 105–126. [Google Scholar] [CrossRef]

- Zhigalov, A.; Arnulfo, G.; Nobili, L.; Palva, S.; Palva, J.M. Relationship of fast-and slow-timescale neuronal dynamics in human MEG and SEEG. J. Neurosci. 2015, 35, 5385–5396. [Google Scholar] [CrossRef]

- Kelty-Stephen, D.G.; Mangalam, M. Fractal and multifractal descriptors restore ergodicity broken by non-Gaussianity in time series. Chaos Solitons Fractals 2022, 163, 112568. [Google Scholar] [CrossRef]

- Mandelbrot, B.B.; Van Ness, J.W. Fractional Brownian motions, fractional noises and applications. SIAM Rev. 1968, 10, 422–437. [Google Scholar] [CrossRef]

- Mangalam, M.; Kelty-Stephen, D.G. Ergodic descriptors of non-ergodic stochastic processes. J. R. Soc. Interface 2022, 19, 20220095. [Google Scholar] [CrossRef]

- Mangalam, M.; Metzler, R.; Kelty-Stephen, D.G. Ergodic characterization of nonergodic anomalous diffusion processes. Phys. Rev. Res. 2023, 5, 023144. [Google Scholar] [CrossRef]

- Mangalam, M.; Sadri, A.; Hayano, J.; Watanabe, E.; Kiyono, K.; Kelty-Stephen, D.G. Multifractal foundations of biomarker discovery for heart disease and stroke. Sci. Rep. 2023, 13, 18316. [Google Scholar] [CrossRef] [PubMed]

- Mangalam, M.; Kelty-Stephen, D.G. Multifractal perturbations to multiplicative cascades promote multifractal nonlinearity with asymmetric spectra. Phys. Rev. E 2024, 109, 064212. [Google Scholar] [CrossRef]

- Mangalam, M.; Likens, A.D.; Kelty-Stephen, D.G. Multifractal nonlinearity as a robust estimator of multiplicative cascade dynamics. Phys. Rev. E 2025, 111, 034126. [Google Scholar] [CrossRef] [PubMed]

- Fraiman, D.; Chialvo, D.R. What kind of noise is brain noise: Anomalous scaling behavior of the resting brain activity fluctuations. Front. Physiol. 2012, 3, 307.

- Linkenkaer-Hansen, K.; Nikouline, V.V.; Palva, J.M.; Ilmoniemi, R.J. Long-range temporal correlations and scaling behavior in human brain oscillations. J. Neurosci. 2001, 21, 1370–1377.

- He, B.J.; Zempel, J.M.; Snyder, A.Z.; Raichle, M.E. The temporal structures and functional significance of scale-free brain activity. Neuron 2010, 66, 353–369.

- Aru, J.; Aru, J.; Priesemann, V.; Wibral, M.; Lana, L.; Pipa, G.; Singer, W.; Vicente, R. Untangling cross-frequency coupling in neuroscience. Curr. Opin. Neurobiol. 2015, 31, 51–61.

- Liu, Q.; Ganzetti, M.; Wenderoth, N.; Mantini, D. Detecting large-scale brain networks using EEG: Impact of electrode density, head modeling and source localization. Front. Neuroinform. 2018, 12, 4.

- Priesemann, V.; Valderrama, M.; Wibral, M.; Le Van Quyen, M. Neuronal avalanches differ from wakefulness to deep sleep–evidence from intracranial depth recordings in humans. PLoS Comput. Biol. 2013, 9, e1002985.

- Roberts, J.A.; Boonstra, T.W.; Breakspear, M. The heavy tail of the human brain. Curr. Opin. Neurobiol. 2015, 31, 164–172.

- Catarino, A.; Churches, O.; Baron-Cohen, S.; Andrade, A.; Ring, H. Atypical EEG complexity in autism spectrum conditions: A multiscale entropy analysis. Clin. Neurophysiol. 2011, 122, 2375–2383.

- Fernández, A.; López-Ibor, M.I.; Turrero, A.; Santos, J.M.; Morón, M.D.; Hornero, R.; Gómez, C.; Méndez, M.A.; Ortiz, T.; López-Ibor, J.J. Lempel–Ziv complexity in schizophrenia: A MEG study. Clin. Neurophysiol. 2011, 122, 2227–2235.

- Lai, M.C.; Lombardo, M.V.; Chakrabarti, B.; Sadek, S.A.; Pasco, G.; Wheelwright, S.J.; Bullmore, E.T.; Baron-Cohen, S.; Suckling, J.; MRC AIMS Consortium. A shift to randomness of brain oscillations in people with autism. Biol. Psychiatry 2010, 68, 1092–1099.

- Racz, F.S.; Stylianou, O.; Mukli, P.; Eke, A. Multifractal and entropy-based analysis of delta band neural activity reveals altered functional connectivity dynamics in schizophrenia. Front. Syst. Neurosci. 2020, 14, 49.

- Fisher, R.A. On the mathematical foundations of theoretical statistics. Philos. Trans. R. Soc. Lond. Ser. A 1922, 222, 309–368.

- Ihlen, E.A. Introduction to multifractal detrended fluctuation analysis in Matlab. Front. Physiol. 2012, 3, 141.

- Kantelhardt, J.W.; Zschiegner, S.A.; Koscielny-Bunde, E.; Havlin, S.; Bunde, A.; Stanley, H.E. Multifractal detrended fluctuation analysis of nonstationary time series. Phys. A Stat. Mech. Its Appl. 2002, 316, 87–114.

- Oświecimka, P.; Kwapień, J.; Drożdż, S. Wavelet versus detrended fluctuation analysis of multifractal structures. Phys. Rev. E 2006, 74, 016103.

- Breakspear, M. Dynamic models of large-scale brain activity. Nat. Neurosci. 2017, 20, 340–352.

- Buzsáki, G.; Watson, B.O. Brain rhythms and neural syntax: Implications for efficient coding of cognitive content and neuropsychiatric disease. Dialogues Clin. Neurosci. 2012, 14, 345–367.

- He, B.J. Scale-free brain activity: Past, present, and future. Trends Cogn. Sci. 2014, 18, 480–487.

- Varela, F.; Lachaux, J.P.; Rodriguez, E.; Martinerie, J. The brainweb: Phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2001, 2, 229–239.

- Fernández, A.; Gómez, C.; Hornero, R.; López-Ibor, J.J. Complexity and schizophrenia. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2013, 45, 267–276.

- Pijnenburg, Y.A.; Vd Made, Y.; Van Walsum, A.V.C.; Knol, D.; Scheltens, P.; Stam, C.J. EEG synchronization likelihood in mild cognitive impairment and Alzheimer’s disease during a working memory task. Clin. Neurophysiol. 2004, 115, 1332–1339.

- Cohen, J.R. The behavioral and cognitive relevance of time-varying, dynamic changes in functional connectivity. NeuroImage 2018, 180, 515–525.

- Fornito, A.; Zalesky, A.; Breakspear, M. The connectomics of brain disorders. Nat. Rev. Neurosci. 2015, 16, 159–172.

- Chhabra, A.; Jensen, R.V. Direct determination of the f(α) singularity spectrum. Phys. Rev. Lett. 1989, 62, 1327.

- Kelty-Stephen, D.G.; Lane, E.; Bloomfield, L.; Mangalam, M. Multifractal test for nonlinearity of interactions across scales in time series. Behav. Res. Methods 2023, 55, 2249–2282.

- Mangalam, M.; Kelty-Stephen, D.G. Point estimates, Simpson’s paradox, and nonergodicity in biological sciences. Neurosci. Biobehav. Rev. 2021, 125, 98–107.

- Papo, D.; Zanin, M.; Martínez, J.H.; Buldú, J.M. Beware of the small-world neuroscientist! Front. Hum. Neurosci. 2016, 10, 96.

- Duyn, J.H. The future of ultra-high field MRI and fMRI for study of the human brain. NeuroImage 2012, 62, 1241–1248.

- Tambalo, S.; Scuppa, G.; Bifone, A. Segmented echo planar imaging improves detection of subcortical functional connectivity networks in the rat brain. Sci. Rep. 2019, 9, 1397.

- Rabuffo, G.; Fousek, J.; Bernard, C.; Jirsa, V. Neuronal cascades shape whole-brain functional dynamics at rest. eNeuro 2021, 8, ENEURO.0283-21.2021.

- Liu, Z.; He, B. fMRI–EEG integrated cortical source imaging by use of time-variant spatial constraints. NeuroImage 2008, 39, 1198–1214.

- Muraskin, J.; Brown, T.R.; Walz, J.M.; Tu, T.; Conroy, B.; Goldman, R.I.; Sajda, P. A multimodal encoding model applied to imaging decision-related neural cascades in the human brain. NeuroImage 2018, 180, 211–222.

- Ou, W.; Nummenmaa, A.; Ahveninen, J.; Belliveau, J.W.; Hämäläinen, M.S.; Golland, P. Multimodal functional imaging using fMRI-informed regional EEG/MEG source estimation. NeuroImage 2010, 52, 97–108.

- Ince, R.A.; Giordano, B.L.; Kayser, C.; Rousselet, G.A.; Gross, J.; Schyns, P.G. A statistical framework for neuroimaging data analysis based on mutual information estimated via a Gaussian copula. Hum. Brain Mapp. 2017, 38, 1541–1573.

- Chialvo, D.R. Emergent complex neural dynamics. Nat. Phys. 2010, 6, 744–750.

- Buzsáki, G. Time, space, memory and brain–body rhythms. Nat. Rev. Neurosci. 2025, 1–18.

- Lynn, C.W.; Bassett, D.S. The physics of brain network structure, function and control. Nat. Rev. Phys. 2019, 1, 318–332.

- Presigny, C.; De Vico Fallani, F. Colloquium: Multiscale modeling of brain network organization. Rev. Mod. Phys. 2022, 94, 031002.

- Steinke, G.K.; Galán, R.F. Brain rhythms reveal a hierarchical network organization. PLoS Comput. Biol. 2011, 7, e1002207.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).