Abstract

Accurate estimation of attitude, line of sight (LOS), and carrier-phase ambiguity is essential for the performance of Guidance, Navigation, and Control (GNC) systems operating under highly dynamic and uncertain conditions. Traditional sensor fusion and filtering methods, although effective, often require precise modeling and high-grade sensors to maintain robustness. This paper investigates a deep learning-based estimation framework for attitude, LOS, and GNSS ambiguity through the fusion of onboard sensors, namely GNSS, IMU, and semi-active laser (SAL), with remote sensing information. Two neural network estimators are developed to address the most critical components of the navigation chain: GNSS carrier-phase ambiguity and gravity-vector reconstruction in the body frame, which are integrated into a hybrid guidance and navigation scheme for attitude and LOS determination. These learning-based estimators capture nonlinear relationships between sensor measurements and physical states, improving generalization under degraded conditions. The proposed system is validated in a six-degree-of-freedom (6-DoF) simulation environment that includes full aerodynamic modeling of guided artillery rockets. Comparative analyses demonstrate that the learning-based ambiguity and gravity estimators reduce overall latency, enhance estimation accuracy, and improve guidance precision compared to conventional networks. The results suggest that deep learning-based sensor fusion can serve as a practical foundation for next-generation low-cost GNC systems, enabling precise and reliable operation in scenarios with limited observability or sensor degradation.

1. Introduction

Accurate and robust state estimation lies at the core of modern Guidance, Navigation, and Control (GNC) systems. In highly dynamic flight environments—such as spin-stabilized artillery rockets, guided munitions, and small unmanned aerial vehicles (UAVs)—the ability to determine the platform’s attitude, line of sight (LOS), and GNSS carrier-phase ambiguity is fundamental to maintaining stable control, ensuring target tracking, and achieving mission success. Each of these estimation tasks is intrinsically nonlinear and sensitive to sensor noise, modeling errors, and environmental degradation. Over the past several decades, a variety of classical approaches have been developed to address these challenges, relying on integrated INS/GNSS navigation and observer-based filtering techniques [1,2,3]. While these methods are mathematically elegant and computationally efficient, they depend heavily on accurate system models and high-grade inertial sensors, which limits their applicability in low-cost or resource-constrained platforms.

The continuous evolution of artificial intelligence and machine learning—particularly deep neural networks (DNNs)—has recently enabled the development of data-driven estimation frameworks capable of learning complex nonlinear relationships directly from multisensor data [4,5,6,7]. Neural architectures, ranging from multilayer perceptrons to recurrent and convolutional models, have shown a strong capacity to infer latent physical relationships in the presence of uncertainty. In aerospace applications, these models can approximate the dynamics of flight systems and fuse information from heterogeneous sensors, compensating for missing data or modeling inaccuracies. Reinforcement learning approaches have even demonstrated the ability to optimize guidance strategies and control laws for intercepting maneuvering targets under partially observable conditions [8,9]. These results suggest that learning-based estimation has the potential to complement or even replace traditional deterministic algorithms in demanding GNC contexts.

Throughout this work, the full projectile dynamics and guidance environment are modeled using a six-degree-of-freedom simulation framework implemented in MATLAB/Simulink R2024b, which incorporates aerodynamic, kinematic, and actuator subsystems. This environment provides the synthetic data required to train and validate the different components of the architecture. All neural networks are trained using the TensorFlow v2.16 framework with the Keras API, ensuring reproducibility and efficiency.

Attitude estimation is one of the most critical components in any GNC architecture. Conventional techniques combine inertial sensor integration with GNSS measurements in complementary or Kalman-based filters [10,11,12,13,14]. Although effective under nominal conditions, these methods are vulnerable to accumulated drift, bias instability, and measurement dropout. In contrast, deep neural networks trained on synchronized GNSS/IMU data can learn the underlying nonlinear mapping between raw signals and the corresponding orientation or gravity vector, eliminating the need for precise analytical models. Several studies have shown that fusing accelerometer data with GNSS observables enables robust attitude estimation even in the absence of high-grade gyroscopes, thereby reducing overall system cost and complexity. This is particularly advantageous for guided projectiles and small UAVs, where size, weight, and power constraints limit the onboard sensor suite.

In parallel, the estimation of the line-of-sight (LOS) vector plays a central role in terminal guidance systems employing semi-active laser (SAL) seekers. Classical methods reconstruct the LOS from geometric relationships between the projectile, the seeker, and the target’s laser spot [15,16,17,18]. However, in rapidly spinning projectiles or under turbulent flight conditions, nonlinear aerodynamic effects and sensor misalignment introduce significant estimation errors. Hybrid schemes integrating analytical guidance laws with neural correction modules have demonstrated improved performance by compensating for unmodeled dynamics and optical distortions [19,20,21]. These methods extend the operational envelope of SAL-based systems, allowing reliable target tracking at high spin rates and varying Mach numbers. In addition, neural LOS estimators can operate with partial signal information, enhancing robustness when the photodiode array experiences occlusion or a degraded signal-to-noise ratio.

Beyond attitude and LOS, GNSS carrier-phase ambiguity resolution remains a persistent challenge for achieving centimeter-level accuracy in real-time navigation and attitude determination. The well-known Least-Squares Ambiguity Decorrelation Adjustment (LAMBDA) algorithm and its derivatives have established the mathematical foundation for integer ambiguity fixing [22,23]. Subsequent research has explored triple-frequency GNSS combinations to accelerate convergence and enhance precision [24,25], as well as Bayesian and multi-frequency extensions that exploit statistical prior knowledge [26,27] and an innovative NRTK framework for mobile platforms [28]. However, these algorithms are often sensitive to multipath effects, measurement outliers, and low signal-to-noise conditions. Recent work has proposed convolutional and recurrent neural networks to model the nonlinear dependencies among code and carrier-phase observables, thus improving ambiguity resolution in real time under degraded environments [29,30,31]. These neural solutions provide adaptive capabilities that classical estimators inherently lack, enabling faster ambiguity convergence without compromising accuracy.

The use of high-fidelity aerodynamic modeling and six-degree-of-freedom (6-DoF) simulations has been essential for validating such estimation and guidance frameworks. Nonlinear projectile models incorporating aerodynamic coefficients dependent on Mach number, spin rate, and angle of attack allow researchers to assess the behavior of estimators under realistic conditions [32,33,34]. These simulation environments can emulate cross-coupling between rotational and translational dynamics, actuator saturation, and time-varying external disturbances such as crosswinds or gravity anomalies. Moreover, the inclusion of complete actuator models (e.g., nose-mounted canards) permits the evaluation of guidance and control laws in closed-loop conditions, ensuring that estimator errors translate meaningfully into guidance performance metrics such as miss distance or Circular Error Probable (CEP).

While these studies have significantly advanced individual estimation domains, a notable gap remains: most existing works treat attitude, LOS, and GNSS ambiguity as separate problems handled by independent modules. In classical system architectures, attitude and LOS are recovered from inertial and SAL or optical sensors, whereas GNSS ambiguity resolution is performed by dedicated algorithms, each with its own data pipeline, filtering scheme, and computational budget. This separation often leads to redundant sensor processing, delays in information propagation, and limited exploitation of the correlations that naturally exist between the underlying quantities. For instance, accurate attitude estimation stabilizes the coordinate transformations required for LOS reconstruction, and robust ambiguity resolution provides consistent GNSS baselines for orientation determination. In this work we do not attempt to replace the entire chain with a single monolithic network; instead, we focus on two key bottlenecks—gravity vector reconstruction and GNSS double-difference ambiguity estimation—and study how learning-based estimators for these tasks, when embedded in a unified 6-DoF navigation and guidance framework, impact the overall attitude and LOS restitution performance.

In summary, this work proposes and assesses a hybrid learning-based navigation framework in which two neural estimators replace classical gyroscopic instrumentation and analytical ambiguity resolution within a realistic GNC chain for spin-stabilized projectiles. A gravity vector network and a GNSS double-difference ambiguity network are designed, trained on data generated by a high-fidelity 6-DoF simulation, and embedded in the full guidance and attitude-reconstruction pipeline. The paper reports a structured, multi-phase experimental campaign that compares classification and regression formulations, MLP and RNN architectures, time-window lengths, and training hyperparameters, providing practical design guidelines for similar aerospace estimation problems. End-to-end Monte Carlo simulations show that the proposed learning-based estimators achieve attitude restitution accuracy close to RTK-like performance while relying on lower-cost sensing and without explicit analytical ambiguity resolution. By integrating standard deep learning models into a physics-consistent 6-DoF testbed and quantifying their impact at system level, the work advances the application of neural estimation techniques to low-cost, high-dynamic GNC systems.

The proposed system is validated in a high-fidelity 6-DoF simulation environment representative of spin-stabilized projectiles, including nonlinear aerodynamics and realistic GNSS, IMU and SAL sensor models. The gravity vector and GNSS double-difference ambiguity networks are trained on synthetic datasets generated from this environment and subsequently embedded in the full guidance and attitude-restoration chain. Model performance is evaluated through Monte Carlo campaigns, including cases with degraded GNSS availability, SAL noise corruption and sensor misalignments, analysing ambiguity accuracy, gravity reconstruction error and the resulting attitude and LOS restitution. The experimental study compares MLP and RNN architectures, classification- and regression-based formulations for ambiguity, and different input time windows, and includes a reference RTK control simulation as a classical baseline. The results show that the learning-based estimators—in particular the classification-based ambiguity networks combined with the learned gravity estimator and SAL information in the terminal phase—can significantly improve ambiguity resolution robustness and gravity vector reconstruction, leading to attitude restitution RMSEs close to those of the RTK baseline while relying on lower-cost sensing and enhancing guidance performance in challenging flight conditions.

The framework provides qualitative benefits in terms of scalability and integration. By unifying multiple estimation tasks within a single network, the architecture simplifies onboard implementation and facilitates adaptive reconfiguration. The shared backbone can be extended to incorporate additional sensing modalities—such as radar or infrared cameras—without significant architectural modifications. Furthermore, the model’s differentiable nature enables end-to-end optimization with respect to downstream control and guidance objectives, opening pathways toward fully learned GNC systems.

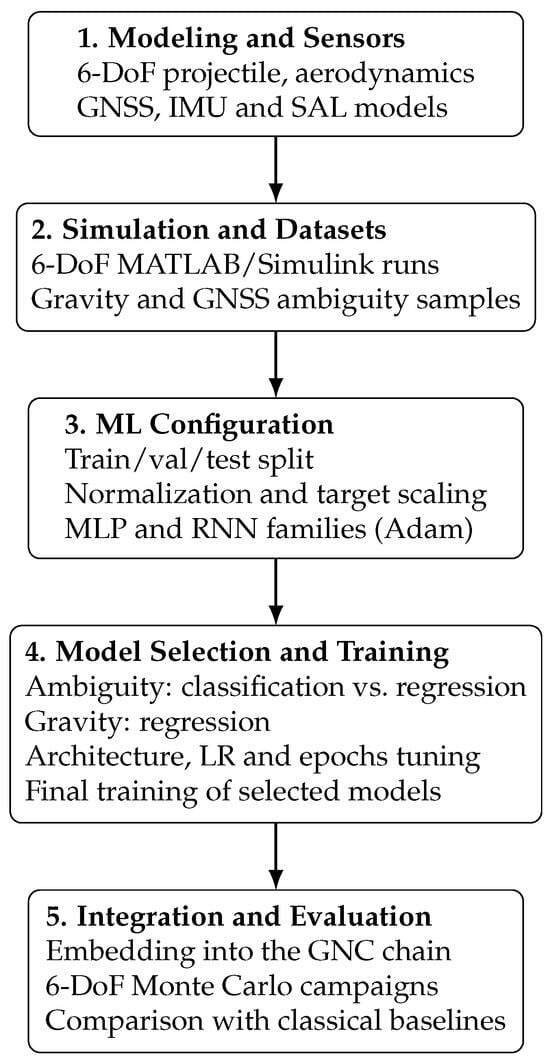

The remainder of this article is structured as follows. Section 2 presents the mathematical formulation underlying the projectile dynamics, sensor models, and the estimation problem. Section 3 introduces the proposed neural network architecture, detailing the network design, training strategy, and loss functions used for joint attitude, LOS, and GNSS ambiguity estimation. Section 4 describes the complete simulation environment and experimental setup, including the MATLAB/Simulink R2024b 6-DoF model and the procedures used to generate the training and validation datasets; it then reports the results of the Monte Carlo campaigns and compares the performance of the proposed framework against classical baselines. Finally, Section 5 summarizes the main contributions and discusses potential avenues for future research. To provide a clear overview of the methodology and to help readers navigate the transition from the theoretical formulation to the experimental implementation, Figure 1 presents the complete workflow of the proposed framework. The diagram summarizes all major steps of the study, from the mathematical modeling and dataset generation to the machine-learning procedure, model selection, and final integration within the GNC system.

Figure 1.

Compact workflow of the proposed learning-based framework, from modeling and dataset generation to model selection, training, and integration into the GNC system.

2. Mathematical Formulation

This section presents the formal mathematical foundation of the proposed neural network based estimation framework. The formulation integrates the physical models of projectile dynamics, the sensor measurement equations, and the data-driven learning structure used to jointly estimate attitude, line of sight (LOS), and GNSS ambiguity. All vector quantities are expressed in , unless otherwise stated, and follow standard aerospace notation conventions [21,22,32].

2.1. Characterization of the Aerial Platform

The proposed GNC strategy is implemented on a guided rocket equipped with a maneuvering assembly consisting of four forward-mounted canard control surfaces. These canards are mechanically isolated from the main airframe, allowing independent roll control of the forebody. A canard, in aeronautical terms, refers to a small lifting surface located ahead of the main wing, primarily intended to enhance stability and maneuverability, often substituting the conventional horizontal tail. In this configuration, the canard pairs are operated in a differential manner—two by two—to generate the desired control forces and the corresponding aerodynamic moments required for attitude regulation. Figure 2 clarifies the configuration of the vehicle. The forces depicted in the figure are described later in this section.

Figure 2.

Scheme of the rocket vehicle.

Table 1 and Table 2 summarize the principal physical and aerodynamic properties of the vehicle, including thrust characteristics, total and propellant mass, inertia tensor components, and aerodynamic coefficients. These data were derived from a combination of computational fluid dynamics (CFD) analyses, experimental testing, and validation through wind tunnel campaigns (see [32] for additional details). To ensure smoothness and continuity in both thrust and aerodynamic coefficient variations, cubic-spline interpolation was applied at intermediate data points. Based on the reported inertia distribution, the vehicle exhibits symmetry about its principal planes.

Table 1.

Mass and inertia parameters of the aerial vehicle (values adjusted within ).

Table 2.

Aerodynamic coefficients of the vehicle.

2.2. Equations Governing the Flight Dynamics

To formulate the flight dynamics model, two reference frames are introduced for projecting forces and moments: the North–East–Down (NED) frame and the body-fixed frame.

The NED frame, denoted by the subscript e, is an Earth-fixed coordinate system in which the $x_e$ axis points toward geographic north, the $z_e$ axis is perpendicular to the Earth’s surface and directed downward (nadir), and the $y_e$ axis points east, completing a right-handed triad.

The body-fixed frame, denoted by the subscript b, is attached to the vehicle itself. The $x_b$ axis points forward within the longitudinal symmetry plane, $z_b$ is perpendicular to $x_b$ and directed downward within the same plane, and $y_b$ completes a right-handed coordinate system. The origin of this frame coincides with the vehicle’s center of gravity, and the entire triad is rigidly attached to the roll-decoupled control assembly.

The following section presents the governing equations of flight dynamics. Assuming the vehicle behaves as a rigid body, its motion is described by the classical Newton–Euler formulation. These six coupled differential equations describe both translational and rotational motion of the center of gravity as a function of the total external forces and moments acting on the body. The resultant aerodynamic, thrust, and gravitational contributions are expressed in Equations (1) and (2).

where: is the lift force, the drag force, the thrust, the Magnus force, the pitch damping contribution, the gravitational force, and the Coriolis term.

Similarly, denotes the pitch damping moment, the overturning moment, the Magnus moment, and the spin damping moment.

The total external force vector and moment vector summarize all aerodynamic, gravitational, and inertial effects acting on the vehicle at each instant of flight. These terms are projected into the selected reference frame for integration within the full Newton–Euler dynamic model.
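Because the display equations and symbols are not reproduced in the text above, the two sums can only be sketched here. The block below lists the contributions in the order they are named in the text; every symbol is an illustrative assumption rather than the notation of Equations (1) and (2).

```latex
% Illustrative notation only: all symbols below are assumed, not taken from the source.
\begin{align}
  \vec{F}_{\mathrm{ext}} &= \vec{F}_{L} + \vec{F}_{D} + \vec{F}_{T} + \vec{F}_{M}
                           + \vec{F}_{P} + \vec{F}_{G} + \vec{F}_{C}, \\
  \vec{M}_{\mathrm{ext}} &= \vec{M}_{P} + \vec{M}_{O} + \vec{M}_{M} + \vec{M}_{S},
\end{align}
% F_L: lift, F_D: drag, F_T: thrust, F_M: Magnus force, F_P: pitch damping force,
% F_G: gravity, F_C: Coriolis; M_P: pitch damping moment, M_O: overturning moment,
% M_M: Magnus moment, M_S: spin damping moment.
```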

The external forces expressed in the Earth frame are nonlinear functions of the vehicle’s motion variables—such as aerodynamic velocity, total angle of attack, and Mach number—as well as of the aerodynamic coefficients and atmospheric properties. Due to the strong nonlinear coupling between these quantities, the explicit analytical formulation is omitted here; instead, their functional dependencies are presented. Additional mathematical details can be found in [32].

The lift force in the Earth frame is defined in Equation (3) as a nonlinear function of the angle of attack , velocity vector , and Mach number M:

The drag force, shown in Equation (4), depends primarily on , Mach number, and air density:

The pitch damping contribution, given in Equation (5), is influenced by angular momentum and moment of inertia:

The Magnus force, presented in Equation (6), depends on spin rate and cross-coupling effects:

The thrust contribution is modeled in Equation (7), as a function of the thrust direction vector :

The gravitational force is represented by Equation (8), which depends on the gravitational acceleration vector and the vehicle mass m:

Finally, Equation (9) expresses the Coriolis contribution, which arises from the Earth’s angular velocity and the relative motion of the vehicle:

Here, and denote the linear and cubic lift coefficients, respectively, while represents the total angle of attack. The coefficients and correspond to the drag model (linear and quadratic terms). The vector is the angular momentum expressed in the Earth frame, and , are the principal moments of inertia in the body frame. is the pitch damping coefficient, and the Magnus force coefficient. The vector represents the vehicle pointing direction, the gravity vector in the Earth frame, the Earth’s rotation vector, and the velocity of the vehicle in the same frame. Finally, S denotes the reference aerodynamic surface and the local air density.
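Of the contributions listed above, the gravitational and Coriolis terms have simple closed forms, so they lend themselves to a short illustration. The sketch below assumes a constant gravity magnitude of 9.80665 m/s² (the standard value, not necessarily the one used in the paper), the standard Earth rotation rate, and the usual Coriolis expression −2m(Ω × v); the function names are hypothetical.

```python
import math

OMEGA_EARTH = 7.292115e-5  # Earth's rotation rate [rad/s] (standard value)
G_STANDARD = 9.80665       # assumed constant gravity magnitude [m/s^2]


def cross(a, b):
    """Vector cross product a x b for 3-element sequences."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def gravity_force_ned(mass, g=G_STANDARD):
    """Gravitational force in NED axes: aligned with the Down axis."""
    return [0.0, 0.0, mass * g]


def earth_rate_ned(latitude_rad):
    """Earth's angular velocity resolved in NED axes at a given latitude."""
    return [OMEGA_EARTH * math.cos(latitude_rad),
            0.0,
            -OMEGA_EARTH * math.sin(latitude_rad)]


def coriolis_force_ned(mass, velocity_ned, latitude_rad):
    """Coriolis force -2 m (Omega x v), with v the velocity in NED axes."""
    omega_cross_v = cross(earth_rate_ned(latitude_rad), velocity_ned)
    return [-2.0 * mass * c for c in omega_cross_v]
```

For a 10 kg body moving east at 100 m/s on the equator, `coriolis_force_ned(10.0, [0.0, 100.0, 0.0], 0.0)` has a small negative Down component, that is, a slight upward force, which is the expected sign for eastward motion.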

Similarly, the aerodynamic and inertial moments acting on the vehicle in the Earth frame are given by the following expressions. These include the contributions of overturning, pitch damping, Magnus, and spin damping effects, all of which depend nonlinearly on the vehicle’s dynamic state, aerodynamic parameters, and flow conditions.

The pitch damping moment, defined in Equation (10), depends on the vehicle’s angular motion and the coefficient :

The overturning moment is described in Equation (11), where and represent the linear and cubic coefficients:

The Magnus moment, expressed in Equation (12), accounts for rotational flow coupling:

The spin damping moment, shown in Equation (13), represents the stabilizing torque due to roll damping:

Here, denotes the pitch damping moment coefficient, while and are the linear and cubic overturning moment coefficients, respectively. The coefficient corresponds to the Magnus moment contribution, and represents the spin damping moment coefficient. The variables and describe the position and velocity vectors in the Earth frame, is the angular momentum, M the Mach number, the air density, and S the vehicle’s reference surface. The principal moments of inertia and are included to account for the coupling between translational and rotational dynamics in the roll-decoupled configuration.

The control forces and moments, generated by the maneuvering system composed of four independently actuated canards, are expressed in Equations (14) and (15). These nonlinear relations depend on the aerodynamic flow, canard deflection, and local orientation of each surface.

Here, is the forward-pointing unit vector lying in the vehicle’s plane of symmetry, while denotes the deflection angle of the i-th canard. The vector represents the surface normal to the corresponding canard aerodynamic plane. The term is the aerodynamic coefficient associated with the normal force on each fin, S is the vehicle reference area, and denotes the characteristic surface area of each canard element. The total control force and moment are obtained by summing the individual contributions of the four actuators.

As previously stated, a Newton–Euler formulation is adopted to describe the equations of motion of the vehicle. The dynamics are expressed in both the body-fixed frame (denoted as b) and the flat-Earth frame (denoted as e). These frames are related through the Euler angles of yaw ($\psi$), pitch ($\theta$), and roll ($\phi$), which define the vehicle’s orientation in space. The complete formulation is presented in Equations (16) and (17).

The translational motion of the center of mass, expressed in the body-fixed reference frame, follows Newton’s second law as shown in Equation (16):

where represents the linear velocity of the vehicle in body axes, is the angular velocity vector, and m is the total mass of the system. The term accounts for the Coriolis effects arising from the rotation of the body frame.

Similarly, the rotational motion of the vehicle is governed by Euler’s equation, shown in Equation (17):

where is the angular momentum vector expressed in body axes, defined as , with being the inertia tensor referred to the body frame. The cross product represents the gyroscopic coupling between roll, pitch, and yaw dynamics.

Equations (16) and (17) together constitute the six-degree-of-freedom (6-DoF) Newton–Euler equations of motion. To ensure consistency, all control forces and moments (, ) as well as external forces and moments (, ) must be expressed in the body-fixed coordinate system prior to their inclusion in these dynamic equations.
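As a minimal sketch of how the body-axis Newton–Euler equations can be evaluated numerically, the function below returns the time derivatives of the linear and angular velocity. It assumes a constant mass, a constant diagonal inertia tensor, and a single rigid body (the roll-decoupled assembly and propellant burn are ignored); the function and argument names are hypothetical and are not taken from the paper's implementation.

```python
def cross(a, b):
    """Vector cross product a x b for 3-element sequences."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def newton_euler_rhs(v_b, omega_b, force_b, moment_b, mass, inertia_diag):
    """Right-hand side of the rigid-body equations in body axes.

    Translational:  m (dv/dt + omega x v) = F
    Rotational:     dH/dt + omega x H = M,  with H = I omega

    Assumptions (not from the source): constant mass and a constant,
    diagonal inertia tensor given as inertia_diag = (Ix, Iy, Iz).
    force_b and moment_b are the total (external plus control) force and
    moment, already expressed in body axes.
    """
    omega_cross_v = cross(omega_b, v_b)
    v_dot = [force_b[i] / mass - omega_cross_v[i] for i in range(3)]

    angular_momentum = [inertia_diag[i] * omega_b[i] for i in range(3)]
    omega_cross_h = cross(omega_b, angular_momentum)
    omega_dot = [(moment_b[i] - omega_cross_h[i]) / inertia_diag[i]
                 for i in range(3)]
    return v_dot, omega_dot
```

Feeding these derivatives to any standard ODE integrator (for example a fixed-step Runge–Kutta scheme) advances the body-axis state; the attitude kinematics would have to be integrated alongside them.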

2.3. Attitude Determination Equations

The vehicle’s attitude can be geometrically characterized by identifying two non-collinear direction vectors, denoted here as and , expressed in two distinct reference frames—for example, the conventional Earth-fixed North–East–Down (NED) frame and the body-fixed coordinate system.

These two direction vectors, and , span a unique plane in three-dimensional space. The orientation of this plane is determined by its associated normal vector, , which is computed as the vector cross product of and , as defined in Equation (18).

The transformation between the Earth reference frame and the body-fixed coordinate system can be expressed through a rotation tensor, denoted as . For convenience and numerical stability, each direction vector () is normalized according to . The rotation tensor can then be assembled following Equation (19), where the normalized direction vectors are arranged as column components.

This approach is fundamental in determining the spatial orientation of flight vehicles, as it allows the precise mapping between the Earth and body axes. By employing a rotation tensor, onboard sensors can be correctly aligned with the vehicle’s reference frame, thereby ensuring accurate measurement interpretation. Such information is essential for the guidance, navigation, and control (GNC) system, allowing the onboard computer to continuously correct deviations and maintain the desired trajectory. Overall, this method provides a reliable and computationally efficient framework for attitude estimation in aerospace systems.
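The two-vector construction can be illustrated with a short sketch. It assumes that the rotation tensor maps Earth-frame components to body-frame components and that it is recovered by stacking the normalized vectors and their normal as columns in each frame, then solving B = R E for R. This is one plausible reading of Equations (18) and (19), not a transcription of them, and all function names are hypothetical.

```python
import math


def cross(a, b):
    """Vector cross product a x b for 3-element sequences."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def normalize(v):
    """Scale v to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector (collinear inputs?)")
    return [c / norm for c in v]


def frame_matrix(a, b):
    """3x3 matrix (list of rows) with columns a_hat, b_hat, (a x b)_hat."""
    cols = [normalize(a), normalize(b), normalize(cross(a, b))]
    return [[cols[j][i] for j in range(3)] for i in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via cross products of its rows."""
    r0, r1, r2 = m
    c0, c1, c2 = cross(r1, r2), cross(r2, r0), cross(r0, r1)
    det = sum(r0[i] * c0[i] for i in range(3))
    if abs(det) < 1e-12:
        raise ValueError("direction vectors are (nearly) collinear")
    return [[c0[i] / det, c1[i] / det, c2[i] / det] for i in range(3)]


def matmul(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_earth_to_body(a_e, b_e, a_b, b_b):
    """Solve B = R E for R, where E and B hold the same vectors in each frame."""
    return matmul(frame_matrix(a_b, b_b), inverse3(frame_matrix(a_e, b_e)))
```

With noise-free inputs the result is exactly the underlying rotation. With noisy measurements the matrix is generally not orthogonal, so a re-orthogonalization step would normally follow.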

The use of multiple vectors instead of only three provides redundancy and significantly increases the robustness of the attitude estimation. The number of sensors employed in this study to provide the sources for those vectors was selected based on a trade-off between computational cost and accuracy. Increasing the number of sources improves the geometric conditioning of the rotation matrix but also increases system complexity.

Consequently, Equation (19) can be generalized to accommodate an arbitrary number of direction vectors, as expressed in Equation (20).
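One common way to write such a generalization, given here only as a sketch under assumed notation, stacks the N normalized direction vectors as columns in each frame and solves for the rotation in the least-squares sense through the right pseudo-inverse. The symbols E, B, and R below are illustrative and may differ from those of Equation (20).

```latex
% Assumed notation: \hat{u}_{i,e} and \hat{u}_{i,b} are the i-th unit vector
% expressed in the Earth and body frames, respectively.
\begin{align}
  \mathbf{E} &= \begin{bmatrix} \hat{u}_{1,e} & \hat{u}_{2,e} & \cdots & \hat{u}_{N,e} \end{bmatrix}, \qquad
  \mathbf{B} = \begin{bmatrix} \hat{u}_{1,b} & \hat{u}_{2,b} & \cdots & \hat{u}_{N,b} \end{bmatrix}, \\
  \mathbf{B} &= \mathbf{R}\,\mathbf{E}
  \quad\Longrightarrow\quad
  \mathbf{R} \approx \mathbf{B}\,\mathbf{E}^{\mathsf{T}} \left( \mathbf{E}\,\mathbf{E}^{\mathsf{T}} \right)^{-1},
  \qquad N \ge 3 .
\end{align}
% For N = 3 with linearly independent columns this reduces to R = B E^{-1}.
```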

The next subsections outline two complementary methods for deriving the required vector pairs.

2.3.1. Velocity, Line of Sight and Gravity Vector Method

As mentioned earlier, determining the vehicle’s attitude requires identifying at least two distinct vectors expressed in two different reference frames. In this work, the velocity vector and the line-of-sight (LOS) vector are used as the reference pair.

If a GNSS sensor suite is installed on the vehicle, the velocity vector can be directly obtained from GNSS measurements expressed in the Earth-fixed frame (denoted by subscript e). The velocity components in this frame, namely , , and , define the velocity vector as shown in Equation (21).

In parallel, the same velocity vector can also be computed in the body-fixed frame from a triad of accelerometers, one mounted along each principal axis. By integrating their measured accelerations over time, the velocity vector in the body frame is obtained as expressed in Equation (22). In this equation, , , and denote the acceleration components measured along the body axes, and represents the estimated angular velocity vector, also expressed in body coordinates. It should be noted that, at this stage, remains unknown; the algorithm used to estimate it will be presented in the following sections.

Similarly, the line-of-sight (LOS) vector must be determined in both the Earth-fixed and body-fixed reference frames, denoted as and , respectively. The vector can be readily obtained from GNSS sensor data, as it directly provides the vehicle and target positions in Earth coordinates. However, determining requires the use of the semi-active laser (SAL) seeker, which becomes operational only when the vehicle is sufficiently close to the target. Consequently, during the initial and mid-course flight phases, when the SAL signal is unavailable, an additional reference vector is required to enable continuous and reliable attitude estimation.
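The Earth-frame LOS follows directly from the two GNSS-derived positions. The sketch below assumes that both positions are available in the same local NED frame; the function name and the choice to return the range alongside the unit vector are illustrative.

```python
import math


def los_unit_vector(vehicle_pos_ned, target_pos_ned):
    """Unit vector from the vehicle to the target in NED axes, plus range [m]."""
    delta = [t - v for t, v in zip(target_pos_ned, vehicle_pos_ned)]
    slant_range = math.sqrt(sum(d * d for d in delta))
    if slant_range == 0.0:
        raise ValueError("vehicle and target positions coincide")
    return [d / slant_range for d in delta], slant_range
```

For a vehicle at [0, 0, −1000] m and a target at [3000, 4000, −1000] m, the function returns the unit vector [0.6, 0.8, 0.0] and a range of 5000 m.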

The gravity vector constitutes a natural candidate for this purpose. Determining the gravity vector in the Earth-fixed reference frame is straightforward, as it is always aligned with the axis. Its expression is given in Equation (23), where g denotes the gravitational acceleration, assumed constant in this model with a value of . It should be noted that higher accuracy can be achieved using more refined gravity models, in which g varies with latitude, longitude, and altitude.

However, the gravity vector expressed in the body-fixed reference frame, , is also required. Although several methods exist to estimate , most are either complex or require additional sensors. For example, one approach involves identifying the constant component of the measured acceleration using a low-pass filter. In this method, the jerk in the body frame is computed as the time derivative of the acceleration; integration of this term yields the non-constant component of the motion, which is then subtracted from the measured acceleration to isolate the gravity component. Nonetheless, this technique becomes invalid when the vehicle undergoes rotational motion.
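To make the low-pass idea concrete, the sketch below applies a first-order exponential filter to a sequence of body-frame accelerometer samples. It is a simplified stand-in for the jerk-based variant described above, not the same algorithm; the smoothing factor `alpha` and the function name are assumptions. As the text notes, such a filter only isolates gravity when the body is not rotating.

```python
def low_pass_gravity(accel_samples, alpha=0.02):
    """Estimate the slowly varying (gravity) part of accelerometer data.

    accel_samples: iterable of 3-element body-frame acceleration samples.
    alpha: smoothing factor in (0, 1]; smaller values filter more heavily.
    Only meaningful when the body frame is not rotating.
    """
    samples = list(accel_samples)
    if not samples:
        raise ValueError("at least one accelerometer sample is required")
    estimate = list(samples[0])
    for sample in samples[1:]:
        # First-order IIR update: keep most of the old estimate, blend in
        # a small fraction of the new measurement.
        estimate = [(1.0 - alpha) * g + alpha * s
                    for g, s in zip(estimate, sample)]
    return estimate
```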

Another classical method to estimate relies on the integration of the inertial mechanization equations [35]. However, such an approach requires gyroscopes to provide angular rate information, contradicting the low-cost, gyro-free philosophy of the proposed system.

Therefore, the cornerstone of the present attitude estimation framework lies in accurately determining the gravity vector in the body-fixed frame using only accelerometer data. The Neural Network methodology section introduces an estimation method that remains valid for both rotating and non-rotating vehicles while relying exclusively on accelerometers.

2.3.2. Array of GNSS Sensors Methodology

In the context of a vehicle equipped with an array of Global Navigation Satellite System (GNSS) antennas, the pair of vectors required for attitude determination can be directly obtained from the spatial distribution of GNSS sensors. A minimum of three non-collinear antennas (and up to eight in the tests conducted in this research) is required to reconstruct the rigid-body attitude.

The eight GNSS antennas are installed along the vehicle body as follows: the reference antenna is located at the center of mass, coinciding with the origin of the body-fixed frame. Two lateral antennas are mounted symmetrically at coordinates [0, 0.1, 0] m and [0, −0.1, 0] m in body axes. Two additional antennas are positioned along the longitudinal direction—one near the nose at [1, 0, 0] m and another near the tail at [−1, 0, 0] m. Finally, three antennas are mounted near the fin roots at [−0.8, 0.1, 0.1] m, [−0.8, −0.1, 0.1] m, and [−0.8, 0, −0.1] m. This geometric configuration provides full spatial coverage for accurate reconstruction of the orientation, while minimizing aerodynamic interference and preserving symmetry about the longitudinal axis.

Each antenna continuously transmits its GNSS position to the onboard computer. Taking one of them (denoted ) as the reference, the vectors connecting it to the remaining antennas are computed as for . After normalization, the following set of unit vectors is obtained in both coordinate frames:
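As a rough illustration of the baseline construction, the body-frame unit vectors implied by the antenna layout listed above can be computed as follows. The coordinates are those given in the text; taking the center-of-mass antenna as the reference, and the function and variable names, are assumptions made for this sketch.

```python
import math

# Antenna positions in body axes [m], as listed in the text.
# Index 0 is the reference antenna at the center of mass.
ANTENNAS_BODY = [
    (0.0, 0.0, 0.0),
    (0.0, 0.1, 0.0),
    (0.0, -0.1, 0.0),
    (1.0, 0.0, 0.0),
    (-1.0, 0.0, 0.0),
    (-0.8, 0.1, 0.1),
    (-0.8, -0.1, 0.1),
    (-0.8, 0.0, -0.1),
]


def unit_baselines(antennas, ref_index=0):
    """Unit vectors from the reference antenna to every other antenna."""
    ref = antennas[ref_index]
    baselines = []
    for i, pos in enumerate(antennas):
        if i == ref_index:
            continue
        delta = [p - r for p, r in zip(pos, ref)]
        length = math.sqrt(sum(d * d for d in delta))
        if length == 0.0:
            raise ValueError("antenna %d coincides with the reference" % i)
        baselines.append([d / length for d in delta])
    return baselines
```

Applying the same function to the GNSS-derived antenna positions in the Earth frame yields the matching set of vectors needed for the attitude solution.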

2.4. Sensor Models

As outlined in the introduction, the main goal of this research is to streamline onboard navigation architectures by minimizing the reliance on complex and costly instrumentation. In conventional guidance and navigation systems, gyroscopes are typically used to measure angular velocity and orientation. Although these sensors provide accurate short-term attitude information, their performance tends to deteriorate in highly dynamic flight conditions, while maintaining precision under such regimes significantly increases system cost and complexity. To address this limitation, the proposed approach seeks to eliminate the dependency on gyroscopes by leveraging the fusion of Global Navigation Satellite System (GNSS) measurements, accelerometer data, and photodetector-based line-of-sight information. By integrating these complementary sources, the navigation solution enhances overall accuracy and robustness without the need for high-grade inertial sensors. This section presents the mathematical models used to characterize the behavior of each sensor and their role within the data fusion framework.

2.4.1. GNSS Sensor Modeling

Global Navigation Satellite System (GNSS) receivers determine position and attitude by measuring the carrier phase offset, denoted as , expressed in units of the carrier wavelength . Under ideal conditions, this observable allows for sub-centimeter accuracy, theoretically reaching approximately 0.003 m. However, such precision requires resolving the integer phase ambiguity N, representing the total number of complete wavelength cycles between a satellite and a receiver antenna. This ambiguity resolution remains one of the key challenges in high-accuracy GNSS-based navigation.

Several sources of error contribute to measurement uncertainty, including the satellite clock bias , the receiver clock bias , propagation delays caused by the ionosphere and troposphere, , and local effects such as thermal noise and multipath interference . Combining these elements, the pseudo-range observable, —which represents the apparent distance between satellite and receiver —can be expressed as:

where and denote the signal reception and transmission times, respectively, and c is the speed of light. Equation (24) combines deterministic components (geometric range, clock biases) and stochastic components (multipath, atmospheric delays).

In attitude determination applications, not all error terms can be explicitly estimated.

Nevertheless, since several error sources are common to all receivers, differencing techniques can be applied to mitigate or eliminate their impact. For each satellite , a line-of-sight (LOS) unit vector from receiver to the satellite is defined as . Given that the baseline between receivers is negligible compared to the satellite range, the LOS direction can be considered identical for all receivers and is denoted as . Consequently, the relative geometry between receivers and observing the same satellite can be expressed as

The difference between two pseudo-range measurements from distinct receivers tracking the same satellite, known as the single difference, is given by (26):

The differencing operation removes common-mode errors, such as satellite clock bias and atmospheric propagation delay, which are approximately identical across closely spaced receivers.

To further suppress receiver-dependent biases, a double difference is formed by subtracting the single difference of one satellite, , from that of another, . This yields the double-differenced observable , defined by (27):

The receiver clock bias term cancels completely in Equation (27), which significantly improves robustness in the carrier-phase solution. However, multipath-related components, , cannot be eliminated through differencing since they vary with antenna position and local scattering environment.
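The cancellation described above can be checked numerically. The sketch below is illustrative only: the satellite positions, clock biases, and delays are hypothetical values, the sign convention (range plus c times receiver-minus-satellite clock bias plus atmospheric delay) is a common textbook choice rather than necessarily that of Equation (24), and multipath and thermal noise are deliberately left out.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def pseudo_range(sat, rcv, sat_clock, rcv_clock, atmo):
    """Geometric range plus clock and atmospheric terms, all in metres."""
    return math.dist(sat, rcv) + C * (rcv_clock - sat_clock) + atmo


# Hypothetical scenario (Earth-fixed frame, metres and seconds).
SATS = {"j": (15.0e6, 10.0e6, 18.0e6), "k": (-12.0e6, 14.0e6, 17.0e6)}
SAT_CLOCK = {"j": 3.0e-6, "k": -1.5e-6}   # satellite clock biases
ATMO = {"j": 4.2, "k": 6.8}               # delay per satellite, shared by both receivers
RCVS = {"a": (0.0, 0.0, 0.0), "b": (1.0, 0.0, 0.0)}
RCV_CLOCK = {"a": 2.0e-4, "b": -7.0e-5}   # receiver clock biases


def single_difference(sat_id):
    """Receiver a minus receiver b for one satellite.

    Satellite clock bias and atmospheric delay cancel; the receiver
    clock bias difference remains.
    """
    rho_a = pseudo_range(SATS[sat_id], RCVS["a"], SAT_CLOCK[sat_id], RCV_CLOCK["a"], ATMO[sat_id])
    rho_b = pseudo_range(SATS[sat_id], RCVS["b"], SAT_CLOCK[sat_id], RCV_CLOCK["b"], ATMO[sat_id])
    return rho_a - rho_b


def geometric_single_difference(sat_id):
    """Same difference computed from error-free geometric ranges."""
    return math.dist(SATS[sat_id], RCVS["a"]) - math.dist(SATS[sat_id], RCVS["b"])


# Differencing across satellites removes the remaining receiver clock term.
double_difference = single_difference("j") - single_difference("k")
geometric_dd = geometric_single_difference("j") - geometric_single_difference("k")
```

In this noise-free setting the double difference matches the purely geometric one to within floating-point rounding, whereas each single difference is still offset by the receiver clock term.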

As noted by [29], multipath effects are not well modeled by white noise due to their temporal and spatial correlation. Although multipath has a substantial influence on ground or low-altitude navigation—particularly in environments with reflective surfaces such as airports—its contribution diminishes considerably during cruise flight, where the primary source of reflection is the missile body itself [36]. This assumption has been adopted in the present work.

Alternative approaches for compensating correlated GNSS errors include the use of deep learning architectures, such as recurrent or long short-term memory (LSTM) networks [29,37]. Nevertheless, given that the present study focuses on mid- and high-altitude flight, the simplified differencing model presented in Equation (27) provides a suitable trade-off between computational efficiency and estimation accuracy for the proposed navigation framework.

As detailed previously, GNSS sensors can provide highly accurate measurements of carrier phase differences, expressed through double-differenced observations. Once the integer carrier ambiguity term, , is determined—either by estimation or by correction through a trained neural network as introduced in subsequent sections—the double-difference of pseudo-ranges, , can be computed directly from carrier-phase data, as shown in Equation (27). Applying this to the previously defined geometric relationship in Equation (25), the following expression is obtained:

where is the baseline vector between sensors and , and and are the unit vectors pointing toward satellites and , respectively. This expression links the measured double-difference in carrier phase to the geometric configuration of the GNSS satellite constellation and the sensor array mounted on the vehicle.

Equation (28) can be reformulated in matrix form to simultaneously account for all available satellites, as shown in Equation (29), where the Moore–Penrose pseudo-inverse of the geometry matrix H yields an estimate of the baseline vector in a least-squares sense:

where is the column vector containing the set of double-differenced pseudo-range measurements with respect to a reference satellite , as defined in Equation (30), and H is the geometry matrix composed of the relative satellite direction cosines, as expressed in Equation (31):

The vectors are obtained directly from the GNSS navigation data, representing the direction from the vehicle to each satellite in the Earth-fixed reference frame. Equation (28) therefore defines an overdetermined system that can be optimally solved through Equation (29) whenever is invertible. If this condition is not met, an approximate solution for the baseline vector, , can be obtained by minimizing the residual norm according to
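The least-squares step described above can be sketched with NumPy. This is an illustration under stated assumptions, not the authors' code: the function name `estimate_baseline`, the synthetic satellite directions, and the sign convention (each double difference equals the difference of LOS unit vectors dotted with the baseline) are choices made here for the example.

```python
import numpy as np


def estimate_baseline(los, dd_ranges, ref=0):
    """Least-squares baseline from double-differenced ranges.

    los       : (n, 3) unit vectors towards each satellite, Earth-fixed frame
    dd_ranges : (n - 1,) double differences relative to satellite `ref`, metres
    """
    los = np.asarray(los, dtype=float)
    others = [i for i in range(len(los)) if i != ref]
    H = los[others] - los[ref]  # rows of direction-cosine differences
    # lstsq returns the minimum-norm least-squares solution, i.e. pinv(H) @ dd,
    # and remains defined even when H^T H is singular.
    b_hat, *_ = np.linalg.lstsq(H, np.asarray(dd_ranges, dtype=float), rcond=None)
    return b_hat


# Synthetic, noise-free check with hypothetical satellite directions.
raw = np.array([
    [0.1, 0.2, 0.97],
    [0.6, -0.3, 0.74],
    [-0.5, 0.5, 0.70],
    [0.3, 0.7, 0.65],
    [-0.4, -0.6, 0.69],
])
LOS = raw / np.linalg.norm(raw, axis=1, keepdims=True)
B_TRUE = np.array([1.0, 0.0, 0.0])      # e.g. reference-to-nose baseline
DD = (LOS[1:] - LOS[0]) @ B_TRUE        # assumed measurement model
B_HAT = estimate_baseline(LOS, DD)
```

Using `lstsq` covers both cases mentioned in the text with one call: the ordinary pseudo-inverse solution when the geometry is well conditioned, and the residual-norm minimizer otherwise.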

The recovered baseline vectors among all sensor pairs define the spatial geometry of the GNSS array rigidly attached to the vehicle body. By comparing these vectors expressed in the Earth-fixed frame and in the body-fixed frame, the rotation matrix relating both reference systems can be reconstructed using the same formalism introduced in Equation (29). This provides an independent and highly accurate estimation of the vehicle’s attitude without relying on gyroscopes or additional attitude sensors.
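As an illustration of this comparison, the sketch below fits a rotation matrix to matching vector pairs. It is a minimal example under assumptions: the unconstrained fit uses the pseudo-inverse, in the spirit of Equation (29), and the final SVD projection onto a proper rotation is an extra step added here for numerical hygiene, not something stated in the text. The function name and test vectors are hypothetical.

```python
import numpy as np


def rotation_from_pairs(v_body, v_earth):
    """Estimate R such that v_earth ≈ R @ v_body for matching (n, 3) vector sets."""
    B = np.asarray(v_body, dtype=float).T    # 3 x n
    E = np.asarray(v_earth, dtype=float).T   # 3 x n
    R_ls = E @ np.linalg.pinv(B)             # unconstrained least-squares fit
    # Added assumption: project the fit onto the nearest proper rotation.
    U, _, Vt = np.linalg.svd(R_ls)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


# Three non-coplanar body-frame baselines taken from the antenna layout.
raw = np.array([[0.0, 0.1, 0.0], [1.0, 0.0, 0.0], [-0.8, 0.1, 0.1]])
V_BODY = raw / np.linalg.norm(raw, axis=1, keepdims=True)
R_TRUE = rot_z(np.radians(30.0)) @ rot_y(np.radians(10.0))
V_EARTH = (R_TRUE @ V_BODY.T).T
R_EST = rotation_from_pairs(V_BODY, V_EARTH)
```

At least three non-coplanar pairs are needed for the pseudo-inverse fit to determine all nine matrix entries; with noisy data, additional pairs improve the conditioning of the solution.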

Consequently, the angular restitution approach represents a complementary and self-contained method for attitude determination, valid throughout all flight phases. When fused with accelerometer, GNSS, and semi-active laser (SAL) data, it contributes to a robust hybrid navigation system capable of maintaining accurate attitude and position estimates even under high-dynamic flight conditions.

2.4.2. Accelerometers

An accelerometer measures the specific force acting on the vehicle, that is, the instantaneous rate of change of velocity within the body-fixed reference frame. In this study, each accelerometer is modeled as an ideal sensor affected by a bias and additive white noise process, with a standard deviation of approximately , which reflects the performance of typical high-grade navigation devices. The sensor model can therefore be represented as

where denotes the measured acceleration vector in the body frame, is the true specific acceleration of the vehicle, represents the accelerometer bias (assumed to vary slowly with time), and is a zero-mean Gaussian noise vector.

The velocity in body axes, obtained by integrating the measured acceleration, is subsequently employed, together with other onboard measurements, to determine the vehicle’s inertial velocity and orientation through sensor fusion. It is important to note that, because of this integration, even small bias or noise components in the acceleration accumulate into drift in the velocity estimate over time. Such errors must therefore be mitigated through complementary or Kalman-based filtering strategies.
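The drift mechanism can be made concrete with a single-axis sketch of the bias-plus-white-noise model. All numerical values below (bias, noise standard deviation, sample time, duration) are hypothetical and chosen only for illustration; they are not the sensor parameters used in the study.

```python
import random


def simulate_velocity_drift(bias, sigma, dt, duration, seed=0):
    """Integrate the accelerometer error (bias + white noise) into a velocity error.

    bias     : constant accelerometer bias, m/s^2
    sigma    : standard deviation of the white noise, m/s^2
    dt       : sample time, s
    duration : total integration time, s
    """
    rng = random.Random(seed)
    velocity_error = 0.0
    for _ in range(int(round(duration / dt))):
        a_true = 0.0                                    # vehicle at rest in this toy case
        a_meas = a_true + bias + rng.gauss(0.0, sigma)  # sensor model
        velocity_error += (a_meas - a_true) * dt        # rectangular integration
    return velocity_error


# Hypothetical numbers: 0.01 m/s^2 bias, 0.05 m/s^2 noise, 100 Hz, 60 s.
DRIFT = simulate_velocity_drift(bias=0.01, sigma=0.05, dt=0.01, duration=60.0)
```

The bias contributes a velocity error that grows linearly with time (bias times duration), while the white noise adds a random-walk component that grows with the square root of time, which is why an external correction is required.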

Beyond their role in kinematic state propagation, accelerometer measurements are also used for gravity vector estimation. As detailed in subsequent sections, the magnitude of the measured acceleration is exploited to infer the direction of the local gravity vector once the translational motion component has been removed. Accurate knowledge of the vehicle’s velocity magnitude is essential for this purpose, as it enables separation of inertial and gravitational contributions within the measured acceleration signal.

2.4.3. Semi-Active Laser Kit

Laser-based guidance provides a reliable means of steering a vehicle toward a designated target using the reflection of a laser beam. In this approach, an external laser illuminates the target continuously, and part of the reflected radiation is scattered in multiple directions. When the vehicle enters the region where a portion of this scattered energy reaches its optical seeker, a semi-active laser (SAL) kit detects the direction of the incoming energy and generates control signals to adjust the vehicle trajectory toward the illuminated spot. This optical seeker, therefore, constitutes the primary element of the terminal guidance system.

The SAL kit employs a quadrant photo-detector composed of four independent photodiodes that convert incident light into electrical currents, denoted as , , , and . The relative distribution of these intensities defines the position of the laser spot centroid on the detector surface. Following the formulation in [19], the normalized coordinates of the centroid on the sensor plane can be estimated as

The corresponding radial distance from the detector center, , can then be determined as

Although these computed coordinates are conformally related to the actual centroid position, a correction is required to obtain the true radial displacement, , on the detector plane. Experimental calibration data provide the nonlinear mapping , as shown in Table 3. A cubic spline interpolation is then applied to approximate this relationship as a continuous function.

Table 3.

Experimental calibration between measured and actual spot displacement.

Once the calibration function is known, the corrected spot coordinates on the detector plane can be obtained using Equation (36):

where is the physical radius of the photo-detector. From these coordinates , the direction of the line-of-sight (LOS) vector can be reconstructed in the body-fixed coordinate system, considering the geometric offset between the photo-detector and the vehicle’s center of mass.
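The processing chain from photodiode currents to corrected spot coordinates can be sketched as follows. Several details are assumptions made for the example and should be checked against [19] and Equation (36): the quadrant numbering, the usual sum-and-difference centroid formula, and the form of the correction, which here rescales the spot along its original direction using a user-supplied calibration function that returns a normalized displacement. The function names are hypothetical.

```python
import math


def spot_centroid(i1, i2, i3, i4):
    """Normalized centroid (x, y) from the four photodiode currents.

    Assumed quadrant layout: 1 = (+x, +y), 2 = (-x, +y), 3 = (-x, -y), 4 = (+x, -y).
    """
    total = i1 + i2 + i3 + i4
    if total <= 0.0:
        raise ValueError("no laser energy detected")
    x = ((i1 + i4) - (i2 + i3)) / total
    y = ((i1 + i2) - (i3 + i4)) / total
    return x, y


def corrected_spot(x, y, calibration, detector_radius):
    """Rescale the measured centroid along its direction.

    calibration     : callable mapping measured radius to normalized true radius
                      (e.g. an interpolant of the calibration table)
    detector_radius : physical radius of the photo-detector, metres
    """
    r = math.hypot(x, y)
    if r == 0.0:
        return 0.0, 0.0
    scale = detector_radius * calibration(r) / r
    return x * scale, y * scale


# Usage with an identity calibration and a hypothetical 5 mm detector radius.
X, Y = spot_centroid(2.0, 1.0, 1.0, 2.0)
XC, YC = corrected_spot(X, Y, lambda r: r, 0.005)
```

In practice the `calibration` argument would be the cubic-spline interpolant of the experimental table; the identity function is used here only so that the example stays self-contained.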

It is important to note that the SAL seeker provides useful information only during the terminal phase of the flight—when the reflected laser energy is strong enough to be detected. Nevertheless, this phase is also the most critical, as small errors in relative positioning between target and vehicle (on the order of a few meters) can cause significant impact deviations. Consequently, integrating a high-precision terminal guidance device such as a semi-active laser seeker is strongly recommended for this stage of the mission.

The fusion of the SAL sensor data with measurements from GNSS and inertial accelerometers enables an accurate estimation of the line-of-sight vector and overall vehicle attitude. This multisensor hybridization ensures precise trajectory correction during terminal homing, enhancing both hit accuracy and robustness under real flight conditions.

4. Results, Discussion, and Selection of the Final ML Model

The experimental campaign was organized into five consecutive phases, each building on the outcomes of the previous one:

1. Determination of the optimal neural network type and learning task (regression or classification).
2. Identification of the most suitable network architecture and parameter configuration.
3. Fine-tuning of the initial learning rate and number of epochs.
4. Generation of the final model, where each output is predicted by an individual network.
5. Evaluation of the model’s performance when applied to an angular restitution problem.

The following sections present the main results obtained at each stage and the rationale for selecting the optimal configuration for both neural network families.

4.1. Phase 1—Selection of the Neural Network Type and Learning Task

Training deep neural models can occasionally lead to convergence to local minima. To mitigate this issue and ensure the reliability of the results, each configuration was trained three times with different random seeds, and the best-performing iteration was retained for comparison.

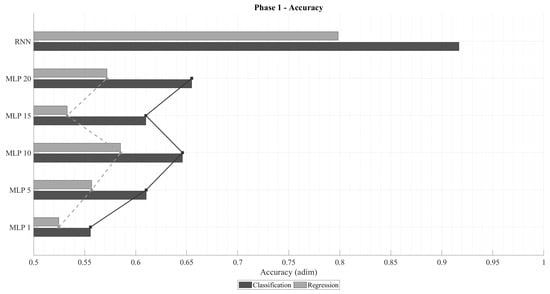

Figure 3 illustrates the accuracy obtained for both regression and classification tasks using Multi-Layer Perceptrons (MLP) with various sliding time-window lengths, as well as Recurrent Neural Networks (RNN). As expected, classification networks outperform regression ones in terms of accuracy, since their training objective explicitly minimizes categorical cross-entropy. A clear trend can be observed: accuracy improves as the time window increases, up to an optimal range beyond which performance begins to degrade. For regression, the optimal window appears near , whereas for classification, the maximum likely occurs between and . This pattern aligns with physical intuition: the relevance of past samples diminishes over time, and excessively long input sequences increase the model’s complexity, leading to reduced generalization capability. In all cases, RNN architectures consistently outperform their MLP counterparts, confirming that temporal dependencies play a critical role in predicting .

Figure 3.

Accuracy of MLP and RNN architectures in classification and regression tasks for different time-window lengths.

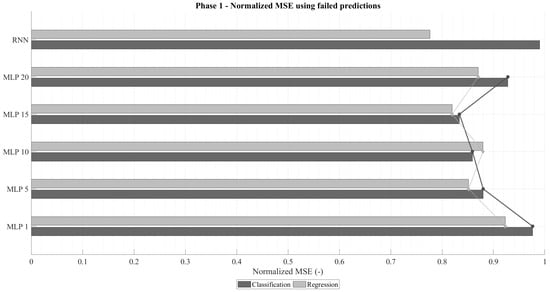

Figure 4 presents the normalized mean squared error (MSE) computed only for failed predictions. As anticipated, regression networks exhibit lower errors than classification ones, given that their loss function directly minimizes the MSE. Analogously to the accuracy trend, the MSE decreases with increasing time-window size, reaching a minimum before rising again. In an ideal failure scenario, the error would correspond to a one-unit deviation from the correct value. Among all tested configurations, the RNN-regression models consistently achieved the lowest values, while RNN-classification networks produced the highest. This is attributable to the fact that classification networks prioritize categorical accuracy, occasionally making riskier predictions that result in larger deviations when they fail.

Figure 4.

Normalized MSE in failed predictions for MLP and RNN architectures under regression and classification tasks.

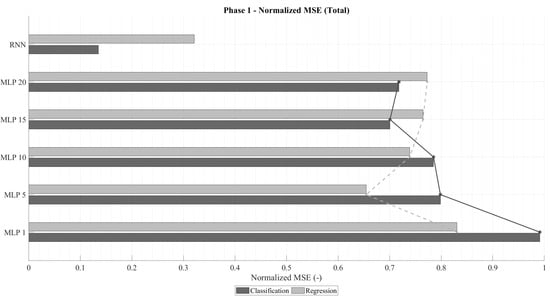

The emphasis on analyzing the MSE in the case of failure stems from the fact that highly accurate classification networks naturally exhibit low overall MSE, even when their individual mispredictions deviate substantially. This effect is illustrated in Figure 5, which shows the total normalized MSE across all predictions. Despite regression models being trained to minimize this metric, classification networks can outperform them in total MSE whenever their accuracy becomes sufficiently high. This trend becomes particularly evident for longer time windows in both MLP and RNN configurations.

Figure 5.

Total normalized MSE for MLP and RNN architectures in classification and regression configurations.

In summary, two architectures stand out from this phase: (1) a recurrent classification network with very high accuracy and moderate deviations in failed predictions, and (2) a recurrent regression network with lower accuracy but notably smaller deviations when errors occur. These two configurations will serve as the basis for the subsequent analysis, where architectural refinements and parameter optimizations are explored to further enhance model performance.

4.2. Phase 2—Selection of the Architecture and Parameters

This phase focuses on identifying the most suitable neural network architecture and parameter configuration for both learning tasks. Three network scales were tested to assess the influence of architectural complexity on performance:

- Big Architecture (BA): 800, 400, 200, and 100 neurons in the dense layers.

- Medium Architecture (MA): 400, 200, 100, and 50 neurons.

- Little Architecture (LA): 200, 100, 50, and 25 neurons.

Within each architecture, several recurrent configurations were examined, varying the number of neurons in the recurrent layer (100, 125, 150, 175, and 200) and the activation function (tanh and ReLU). As in Phase 1, each configuration was trained three times with different random seeds, and the best-performing repetition was selected for evaluation.

The evaluation metrics were chosen according to the learning formulation: accuracy for classification networks and normalized MSE for regression networks, considering only failed predictions. This normalization enables a consistent comparison between architectures of different sizes and output ranges.
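The two metrics can be computed together from a list of predictions. The sketch below is one plausible reading rather than the authors' exact definition: a prediction counts as failed when its rounded value differs from the true integer, and the squared error is normalized by the square of the output's dynamic range. The function name and the sample values are hypothetical.

```python
def failure_metrics(y_true, y_pred, out_min, out_max):
    """Return (accuracy, normalized MSE over failed predictions).

    y_true  : true integer targets
    y_pred  : network outputs (real-valued for regression, integer for classification)
    out_min : lower end of the output's dynamic range
    out_max : upper end of the output's dynamic range
    """
    span = float(out_max - out_min)
    # A prediction fails when rounding it does not recover the true integer.
    failures = [(t, p) for t, p in zip(y_true, y_pred) if round(p) != t]
    accuracy = 1.0 - len(failures) / len(y_true)
    if not failures:
        return accuracy, 0.0
    nmse = sum(((p - t) / span) ** 2 for t, p in failures) / len(failures)
    return accuracy, nmse


# Hypothetical example: one of four predictions rounds to the wrong integer.
ACCURACY, NMSE_FAILED = failure_metrics(
    y_true=[3, -2, 0, 5],
    y_pred=[3.1, -0.8, 0.2, 5.0],
    out_min=-10,
    out_max=10,
)
```

Restricting the error to failed predictions keeps the metric from being dominated by the many correct samples, which is what allows a high-accuracy network and a low-deviation network to be compared on separate axes.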

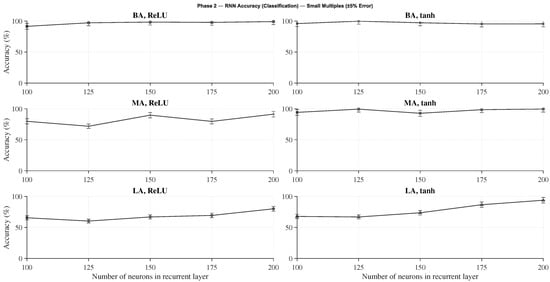

Figure 6 shows the classification accuracy obtained across the various architectures and configurations. The results reveal that using the tanh activation function in the recurrent layer consistently outperforms ReLU. Moreover, larger architectures achieve higher accuracy, though the improvement between the Medium (MA) and Big (BA) configurations is relatively modest. The Big Architecture using tanh shows stable accuracy across all recurrent-layer sizes (from 100 to 200 neurons), suggesting robustness with respect to this parameter. Therefore, to balance performance and computational efficiency, the configuration with 100 recurrent neurons is selected as the reference model for the classification task.

Figure 6.

Accuracy of classification networks with different RNN architectures and activation functions.

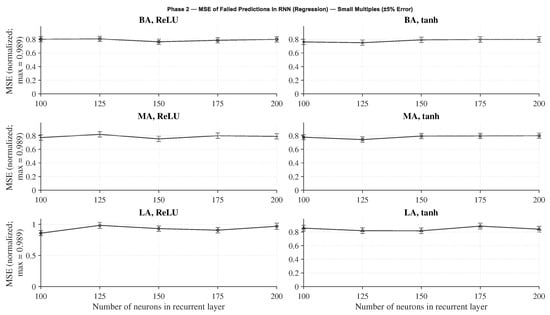

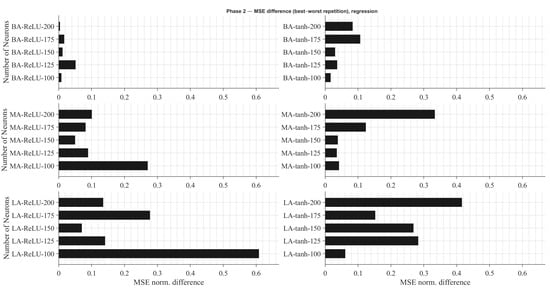

Figure 7 presents the normalized mean squared error (MSE) for the regression networks under the same architectural variations. As in the classification case, larger architectures and the use of tanh activation yield the best results, with optimal performance observed at 100 recurrent neurons. The performance gap between Medium and Big Architectures is minimal, indicating that the MA configuration could serve as a computationally lighter alternative with negligible accuracy loss. However, when considering robustness to initialization, the Big Architecture shows lower sensitivity to seed variability, as depicted in Figure 8.

Figure 7.

Normalized MSE in failed predictions for regression networks using different RNN architectures and activation functions.

Figure 8.

Difference in normalized MSE between best and worst training repetitions for each RNN architecture in regression tasks.

Figure 8 quantifies the difference in MSE between the best and worst training repetitions for each tested architecture. Variability decreases as the network size increases, confirming that larger models provide more stable convergence behavior. Consequently, the Big Architecture with 100 recurrent neurons and tanh activation in the recurrent layer is selected as the preferred configuration for regression networks as well.

In summary, both analyses indicate that the Big Architecture (BA) with 100 recurrent neurons and tanh activation provides the most balanced trade-off between accuracy, stability, and computational cost for both network types. The next phase focuses on fine-tuning the learning rate and number of epochs to further refine the performance of this selected configuration.

4.3. Phase 3—Setting the Number of Epochs and Initial Learning Rate

This phase aims to determine the optimal initial learning rate (LR) and number of training epochs required to obtain a well-converged model before proceeding to the final training phase. In Phase 4, the selected configuration will be trained using an expanded dataset, without checkpoints or a dedicated validation set, in order to maximize the amount of data available for learning.

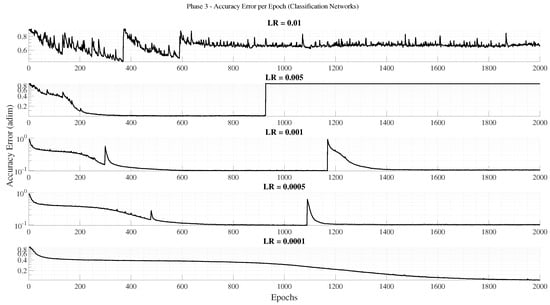

A total of 2000 epochs were executed for each experiment, testing five different initial learning rates: , , , , and . For each learning rate, the best-performing repetition—defined as the one achieving the highest accuracy on the test set for classification networks—was selected.

Figure 9 summarizes the validation accuracy error across epochs for the different initial learning rates. Intermediate values (0.005, 0.001, 0.0005) provide better generalization than the extreme cases, and the lowest validation error is obtained with an initial learning rate of . Consequently, this learning rate was adopted for the subsequent phases, together with a reduced maximum training horizon of 600 epochs for the final models.

Figure 9.

Accuracy error on the validation set across epochs for different initial learning rates in classification networks.

For regression networks, the training dynamics differ from those of classification. While these models can achieve low global mean squared error (), they may still yield relatively large deviations in failed predictions. Beyond a certain number of epochs, the network tends to prioritize minimizing overall by tolerating a few large errors rather than many small ones— a behavior consistent with the trends observed in Figure 6. To prevent this imbalance and maintain consistent accuracy across predictions, the regression models in Phase 4 will adopt an initial learning rate of and a total of 600 training epochs, representing a compromise between convergence quality and computational efficiency.

In summary, the classification networks perform best with an initial learning rate of and approximately 1050 epochs, whereas the regression networks achieve their optimal trade-off at a learning rate of and 600 epochs. These values will serve as the baseline configuration for the final model training in the next phase.

4.4. Phase 4—Training the Final Model

In this final phase, the complete models were trained and validated—one neural network for each value—using the optimal architecture and parameters determined in the previous stages. To maximize the available data, the validation set was merged with the training set, thereby enlarging the effective training base. Unlike the earlier phases, no checkpoints were used; instead, the final network obtained at the end of training was retained for evaluation. If a network failed to meet the reliability thresholds—defined as at least accuracy for classification networks or a normalized mean squared error () lower than in the case of regression networks—training was restarted from scratch with a different random seed until the desired criteria were achieved.

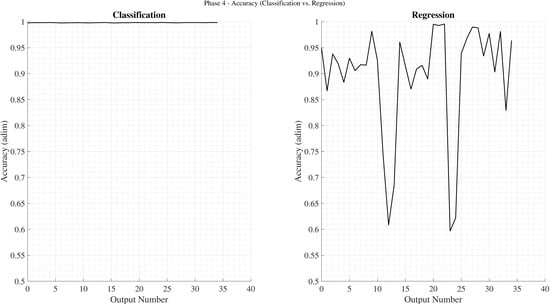

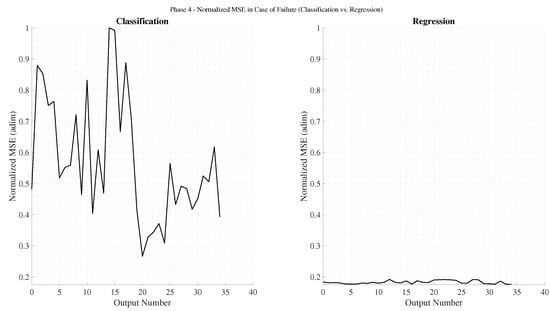

Figure 10 presents the accuracy obtained by the final classification and regression networks. Although the regression networks display greater variability, none of them surpasses the classification networks in accuracy. A similar trend is observed when analyzing MSE values for failed predictions, shown in Figure 11. These results confirm that minimizing the frequency of failures with larger deviations (as achieved by classification networks) is preferable to minimizing smaller errors that occur more frequently (as seen in regression models). Notably, the lowest MSE achieved by the regression models is only marginally higher than that of the best-performing classification model.

Figure 10.

Accuracy of the final classification and regression networks.

Figure 11.

Normalized MSE in failed predictions for the final classification and regression networks.

Temporal Dependence of Prediction Failures

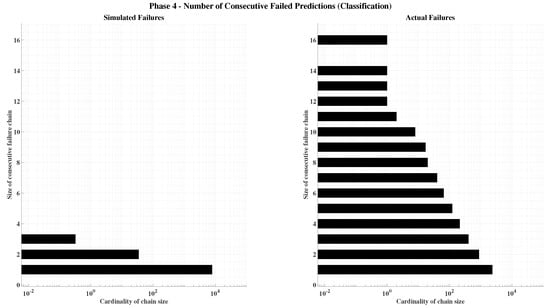

A deeper analysis was conducted to determine whether prediction failures are temporally correlated, that is, whether an error at time step t is influenced by an error at time step t − 1. To evaluate this, results from 1000 independent simulations were analyzed. Each simulation recorded the number of prediction failures per historical data sequence (the test set includes 20 historical datasets of 5760 samples each) across all 35 networks comprising the model. Synthetic results were then generated by randomly redistributing the same number of failures uniformly across time. The number and size of failure clusters were then tallied and averaged across the 1000 trials.
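The cluster analysis described above can be sketched in a few lines. This is an illustrative reconstruction under assumptions, not the authors' script: failures are represented as a boolean sequence, a cluster is a run of consecutive failures, and the randomized baseline is obtained by shuffling the same sequence so that the number of failures is preserved. The function names are hypothetical.

```python
import random
from collections import Counter


def cluster_sizes(failed):
    """Histogram {run length: count} of consecutive True entries."""
    sizes = Counter()
    run = 0
    for flag in failed:
        if flag:
            run += 1
        elif run:
            sizes[run] += 1
            run = 0
    if run:  # close a run that reaches the end of the sequence
        sizes[run] += 1
    return sizes


def shuffled_baseline(failed, trials=1000, seed=0):
    """Average cluster histogram after redistributing the failures at random."""
    rng = random.Random(seed)
    flags = list(failed)
    total = Counter()
    for _ in range(trials):
        rng.shuffle(flags)  # same number of failures, random positions
        total.update(cluster_sizes(flags))
    return {size: count / trials for size, count in sorted(total.items())}


# Toy sequence: clusters of length 2, 1 and 3.
SEQUENCE = [False, True, True, False, True, False, False, True, True, True]
OBSERVED = cluster_sizes(SEQUENCE)
BASELINE = shuffled_baseline(SEQUENCE, trials=200)
```

Comparing `OBSERVED` with `BASELINE` for each cluster size shows whether long runs occur more often than chance alone would produce, which is the signature of temporal correlation.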

As shown in Figure 12, the randomized classification data display a greater number of isolated failures (single events uncorrelated with neighboring time steps) compared with the real model outcomes. However, the actual model produces larger and more diverse failure clusters (up to 16 consecutive time steps), whereas the randomized data rarely exceed four. A similar yet more pronounced behavior appears in the regression models (Figure 13), reflecting their lower overall accuracy. Empirically, this confirms that the conditional probability of failure given a previous failure is higher than the probability of failure given a previous success, i.e., $P(F_t \mid F_{t-1}) > P(F_t \mid S_{t-1})$, where $F_t$ and $S_t$ denote a failure and a success at time step $t$, respectively. This observation is consistent with the temporal nature of RNNs, where consecutive inputs are similar and thus yield correlated prediction outcomes. It should be noted, however, that in a Simple RNN architecture, the recurrent connections propagate processed input information, not previous prediction errors, between consecutive time steps.

Figure 12.

Cardinality of consecutive failures in the final classification model.

Figure 13.

Cardinality of consecutive failures in the final regression model.

Inter-Network Dependence of Prediction Failures

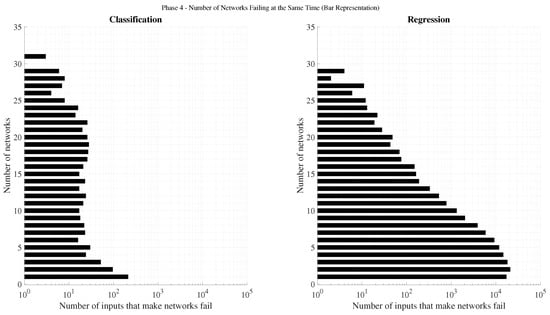

Each input sample is processed by all 35 networks within the model, resulting in 35 parallel outputs per time step. To assess whether failures in one network influence those in others, the number of simultaneous incorrect outputs across networks was analyzed. Figure 14 shows the distribution of instances in which K networks () failed simultaneously for the same input.

Figure 14.

Cardinality of simultaneous incorrect outputs across the 35 networks in the final classification and regression models.

In the worst-case scenario, assuming a 1% individual failure rate (99% accuracy), the probability of 20 networks failing concurrently for the same input would be on the order of , or roughly one event every samples. Nevertheless, this phenomenon was observed more than ten times within only 115,200 instances. This clearly indicates statistical dependence between networks: the probability that a network m fails given that another network n also fails at the same time step is significantly higher than the probability of failure given that the remaining networks succeed, i.e.,

where , , and .

This interdependence arises from the inherent architectural and parameter similarities among the networks, which were trained independently but share the same structural design and data characteristics. As a result, they exhibit correlated behavior under certain input conditions, particularly when the input features lie near the network decision boundaries.
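The independence baseline used in the worst-case argument above can be reproduced with a binomial model. The sketch assumes that the 35 networks fail independently with the same 1% probability, which is exactly the hypothesis the observed data contradict; the function names are illustrative.

```python
from math import comb


def prob_exactly_k(n, k, p):
    """Binomial probability that exactly k of n independent networks fail."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


def prob_at_least_k(n, k, p):
    """Probability that k or more of n independent networks fail."""
    return sum(prob_exactly_k(n, j, p) for j in range(k, n + 1))


N_NETWORKS = 35
FAILURE_RATE = 0.01   # 99% accuracy per network
N_SAMPLES = 115_200   # 20 datasets x 5760 samples

P_20_OR_MORE = prob_at_least_k(N_NETWORKS, 20, FAILURE_RATE)
EXPECTED_EVENTS = N_SAMPLES * P_20_OR_MORE
```

Under independence, the expected number of such events in the test set is many orders of magnitude below one, so observing more than ten of them is strong evidence that the networks' failures are correlated.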

The following subsection presents the angular restitution results, demonstrating the applicability and performance of the proposed algorithms in a complete attitude determination scenario.

4.5. Angular Restitution Results

In order to assess the effectiveness of the proposed attitude determination algorithm, a non-linear flight dynamics model, as developed by [54], was employed in a series of simulation experiments. Monte Carlo simulations were used to evaluate the performance of the closed-loop system [54] under various sources of uncertainty, including initial conditions, sensor data acquisition, atmospheric conditions, thrust characteristics, and aerodynamic coefficients (with their associated dispersion bounds).

Vector pairs used for restitution. Consistent with the methodology established in the previous sections, the angular restitution relies on pairs of vectors defined in both the Earth-fixed and body-fixed frames to reconstruct the rotation matrix (see Equation (20)). In this work, two complementary sources of vector pairs were employed: (i) Across the whole trajectory, vector pairs are derived from the outputs of the neural networks: the GNSS-based ambiguity network provides the inter-antenna baselines in the Earth frame (via the double-difference formulation previously defined), while the gravity-estimation network supplies the gravity direction in the body frame; these vectors are stacked to form the correspondence sets used in Equation (20). (ii) During the terminal phase, the vector pairs obtained from the semi-active laser (SAL) seeker are incorporated, i.e., the line of sight (LOS) to the target in Earth axes from GNSS/geometry and the LOS in body axes from the SAL photodiode measurements. Thus, when SAL data become available, the restitution set is augmented with these SAL-based pairs, increasing observability and improving the conditioning of the least-squares attitude solution.

The simulation tests involved the execution of a predefined set of nominal, regular Euler-angle commands to enable a direct comparison between the estimated and true attitudes. Two families of commands were used: (a) an ascending ramp with constant slope between 0 and 10 s, reaching final amplitudes from 5° to 45° in 5° steps; and (b) a sinusoid at 1 rad/s with amplitudes in the same range. All runs were carried out in MATLAB/Simulink R2024b on a desktop computer (2.8 GHz CPU, 8 GB RAM).

To quantify restitution quality, the root mean squared error (RMSE) over the full simulation horizon is computed for each Euler angle as
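A minimal sketch of this metric follows, assuming the standard definition of RMSE (square root of the mean squared difference between estimated and true angle over all samples). Wrapping the angle difference to the interval from −180° to 180° is an addition made here to avoid spurious errors near the ±180° boundary; it is not stated in the text, and the function names are hypothetical.

```python
import math


def wrap_deg(angle):
    """Wrap an angle difference to the interval [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def euler_rmse(estimated, true):
    """RMSE in degrees between estimated and true histories of one Euler angle."""
    if len(estimated) != len(true) or not true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [wrap_deg(e - t) ** 2 for e, t in zip(estimated, true)]
    return math.sqrt(sum(squared) / len(squared))


# Toy example: constant errors of +1 and -1 degree give an RMSE of 1 degree.
RMSE_EXAMPLE = euler_rmse([1.0, -1.0], [0.0, 0.0])
```

The same function would be applied separately to the roll, pitch, and yaw histories of each run, so that the three angles can be tabulated independently.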

As a reference baseline for the ambiguity-resolution approach using neural networks, a control simulation with RTK was executed under identical initial and boundary conditions. Signals were generated with an Orolia GSG-8 (Skydel engine) configured for RTK differential simulation [45]. Antenna positions, the vehicle reference point, and the motion profile were assumed known.

Results for regression-based networks (REG), classification-based networks (CLAS), and the RTK control are summarized in Table 5, Table 6 and Table 7. For each Euler angle, rows report RMSE for the ramp (“Constant”), the sinusoid (“Sin”), and their average. The last column gives the across-amplitude average. As anticipated, classification networks deliver RMSEs comparable to the classical RTK baseline and consistently lower than those of regression networks, while benefiting from the additional SAL-based vector pairs in the terminal segment.

Table 5.

Root Mean Squared Errors for roll angles.

Table 6.

Root Mean Squared Errors for pitch angles.

Table 7.

Root Mean Squared Errors for yaw angles.

These outcomes reflect the high redundancy and precision of the sensor suite: most samples are highly accurate, and the restitution least-squares fit (Equation (20)) effectively attenuates residual inconsistencies with largest . For the angular restitution trials we adopted the “Big Architecture” defaults from Section 3.2.4 and trained for 2000 epochs to ensure convergence under the combined (NN+SAL) vector-set regime.

Overall, the average error across all amplitudes remains below one degree, which is fully compatible with guidance, navigation, and control requirements for low-cost aerial platforms. The joint use of NN-derived vector pairs throughout the trajectory and SAL-based pairs in the terminal segment proves effective and robust for attitude restitution across diverse operational scenarios.

5. Conclusions

This work presented a hybrid, learning-based navigation framework in which two neural networks estimate the gravity vector in body axes and the GNSS double-difference carrier-phase ambiguities, complemented in the terminal phase by a semi-active laser (SAL) seeker.

A multi-phase experimental campaign was carried out to (i) select the learning formulation and network family for each task (classification vs. regression, MLP vs. RNN), (ii) tune architectures, input time windows and other hyperparameters, (iii) adjust the learning rate and training horizon, and (iv) train the gravity vector estimator together with one specialized network per ambiguity term before validating the full angular restitution pipeline. These steps rely on standard supervised learning methods rather than on a new training algorithm; the contribution of the study lies in how such methods are configured, embedded and assessed within a realistic 6-DoF navigation and guidance framework. Across the different phases, classification-based ambiguity networks achieved the highest prediction accuracy, whereas regression models yielded the lowest per-failure errors; to compare both families on a common basis, the mean squared error was normalized with respect to the dynamic range of each output. The final model set—comprising one gravity network and one ambiguity network per —meets the prescribed reliability thresholds, with the classification configuration providing the most favorable compromise between accuracy and normalized MSE in end-to-end attitude restitution.

Against an RTK control setup, the proposed approach achieved comparable precision, remaining within the same order of magnitude while relying on lower-cost sensing and without explicit analytical ambiguity resolution. Monte Carlo trials with a non-linear 6-DoF flight model confirmed robust closed-loop behavior under uncertainty in initial conditions, sensing, atmosphere, thrust, and aerodynamics. In the terminal segment, introducing SAL-based vector pairs improved conditioning and reduced restitution error, yielding average angular RMSEs below one degree across the tested maneuver set—values compatible with guidance, navigation, and control requirements for cost-effective aerial platforms.

Scope and limitations. To bound training cost and demonstrate feasibility, data generation assumed a fixed site (URJC, Madrid) and a 24 h window, with representative satellite visibility and sensor noise models. These constraints do not cover all geodetic conditions or constellation geometries. Nevertheless, the methodology is readily extensible: future work will (i) generalize GNSS simulation across latitude, longitude, altitude, and time, (ii) reduce sensor count via architecture sharing or learning based on multiple neural networks, (iii) fuse both networks with classical filters for consistency checks, and (iv) exploit SAL more deeply for terminal-phase refinement and contingency handling.

Main takeaway. Learning gravity in body axes from accelerations and learning the carrier-phase ambiguities from GNSS observables provide two independent, mutually reinforcing vector sources for attitude determination, supplemented by SAL near impact. This redundancy, coupled with normalized, task-agnostic evaluation, delivers accurate, scalable attitude estimation suitable for onboard implementation in low-cost aerospace vehicles, and establishes a solid foundation for broader, global deployments in future studies.

Author Contributions

R.d.C. conducted the experimentation and performed the data analysis and calculations. L.C. contributed to the manuscript layout and text preparation. Both authors collaborated in the funding acquisition. Conceptualization, R.d.C. and L.C.; methodology, R.d.C.; software, R.d.C.; validation, R.d.C. and L.C.; formal analysis, R.d.C.; investigation, R.d.C.; resources, R.d.C.; data curation, R.d.C.; writing—original draft preparation, R.d.C.; writing—review and editing, L.C.; visualization, R.d.C.; supervision, L.C.; project administration, R.d.C. and L.C.; funding acquisition, R.d.C. and L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the “Agencia Estatal de Investigación in Spain (MCIN/AEI/10.13039/501100011033)” Project under Grant PID2020-112967GB-C33, in part by the “NextGenerationEU” Project under Grant TED2021-130347B-I00, in part by Rey Juan Carlos University Project under Grant 2023/00004/010-F919, and in part by the “Unión Europea–Next Generation EU” Project under Grant URJC-AI-22.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bryne, T.H.; Hansen, J.M.; Rogne, R.H.; Sokolova, N.; Fossen, T.I.; Johansen, T.A. Nonlinear observers for integrated INS/GNSS navigation: Implementation aspects. IEEE Control Syst. Mag. 2017, 37, 59–86.

- Hu, G.; Gao, B.; Zhong, Y.; Gu, C. Unscented Kalman filter with process noise covariance estimation for vehicular INS/GPS integration system. Inf. Fusion 2020, 64, 194–204.

- Schmidt, G.T.; Phillips, R.E. INS/GPS Integration Architecture Performance Comparisons. NATO RTO-EN-SET-116 Lecture Series 2011. Available online: https://www.semanticscholar.org/paper/INS-GPS-Integration-Architecture-Performance-Schmidt-Phillips/1cb8e282e25d90048c1232778f3fbb21eb4c9de8 (accessed on 20 October 2025).

- Murtagh, F. Multilayer perceptrons for classification and regression. Neurocomputing 1991, 2, 183–197.

- MacKay, D.J.C. Bayesian interpolation. Neural Comput. 1992, 4, 415–447.

- Møller, M.F. A scaled conjugate gradient algorithm for fast supervised learning. Neural Netw. 1993, 6, 525–533.

- Foresee, F.D.; Hagan, M.T. Gauss–Newton approximation to Bayesian learning. In Proceedings of the International Conference on Neural Networks, Houston, TX, USA, 12 June 1997; Volume 3, pp. 1930–1935.

- Gaudet, B.; Furfaro, R.; Linares, R. Reinforcement learning for angle-only intercept guidance of maneuvering targets. Aerosp. Sci. Technol. 2020, 99, 105746.

- Razmi, H.; Afshinfar, S. Neural network-based adaptive sliding mode control design for position and attitude control of a quadrotor UAV. Aerosp. Sci. Technol. 2019, 91, 12–27.

- De Celis, R.; Cadarso, L. Attitude determination algorithms through accelerometers, GNSS sensors, and gravity vector estimator. Int. J. Aerosp. Eng. 2018, 2018, 5394057.

- De Celis, R.; Cadarso, L. Hybridized attitude determination techniques to improve ballistic projectile navigation, guidance and control. Aerosp. Sci. Technol. 2018, 77, 138–148.

- Xu, Y.; Wang, K.; Jiang, C.; Li, Z.; Yang, C.; Liu, D.; Zhang, H. Motion-constrained GNSS/INS integrated navigation method based on BP neural network. Remote Sens. 2022, 15, 154.

- Zhang, P.; Zhao, Y.; Lin, H.; Zou, J.; Wang, X.; Yang, F. A novel GNSS attitude determination method based on primary baseline switching for a multi-antenna platform. Remote Sens. 2020, 12, 747.

- Dong, Y.; Li, W.; Li, D.; Liu, C.; Xue, W. Intelligent Tracking Method for Aerial Maneuvering Target Based on Unscented Kalman Filter. Remote Sens. 2024, 16, 3301.

- Nesline, F.W.; Zarchan, P. Line-of-sight reconstruction for faster homing guidance. J. Guid. Control Dyn. 1985, 8, 3–8.

- Zarchan, P. Tactical and Strategic Vehicle Guidance, 7th ed.; AIAA: Reston, VA, USA, 2012.

- Lechevin, N.; Rabbath, C.A. Robust discrete-time proportional-derivative navigation guidance. J. Guid. Control Dyn. 2012, 35, 1007–1013.

- Theodoulis, S.; Gassmann, V.; Wernert, P.; Dritsas, L.; Kitsios, I.; Tzes, A. Guidance and control design for a class of spin-stabilized fin-controlled projectiles. J. Guid. Control Dyn. 2013, 36, 517–531.

- De Celis, R.; Cadarso, L. Spot-centroid determination algorithms in semiactive laser photodiodes for artillery applications. J. Sens. 2019, 2019, 7938415.

- Esper-Chaín, R.; Escuela, A.M.; Fariña, D.; Sendra, J.R. Configurable quadrant photodetector: An improved position sensitive device. IEEE Sens. J. 2015, 16, 109–119.

- Solano-López, P.; De Celis, R.; Cadarso, L. Sensor hybridization using neural networks for rocket terminal guidance. Aerosp. Sci. Technol. 2021, 111, 106527.

- Teunissen, P.J.G.; De Jonge, P.J.; Tiberius, C.C.J.M. The least-squares ambiguity decorrelation adjustment: Its performance on short GPS baselines and short observation spans. J. Geod. 1997, 71, 589–602.

- Teunissen, P.J.G. The LAMBDA method for the GNSS compass. Artif. Satell. 2006, 41, 89–103.

- Geng, J.; Bock, Y. Triple-frequency GPS precise point positioning with rapid ambiguity resolution. J. Geod. 2013, 87, 449–460.

- Li, B. Review of triple-frequency GNSS: Ambiguity resolution, benefits and challenges. J. Glob. Position. Syst. 2018, 16, 1.

- Henkel, P.; Günther, C. Reliable integer ambiguity resolution using multi-frequency code carrier combinations. Navigation 2012, 59, 61–75.

- Scataglini, T.; Pagola, F.; Cogo, J.; Garcia, J. Attitude estimation using GPS carrier phase single differences. IEEE Lat. Am. Trans. 2014, 12, 847–852.

- Chen, W.; Ding, J.; Wang, Y.; Mi, X.; Liu, T. A novel network RTK technique for mobile platforms: Extending high-precision positioning to offshore environments. TransNav Int. J. Mar. Navig. Saf. Sea Transp. 2025, 19, 371–380.

- Tao, Y.; Liu, C.; Chen, T.; Zhao, X.; Liu, C.; Hu, H.; Zhou, T.; Xin, H. Real-time multipath mitigation in multi-GNSS short baseline positioning via CNN-LSTM method. Math. Probl. Eng. 2021, 2021, 6573230.

- Mosavi, M.R.; Shafiee, F. Narrowband interference suppression for GPS navigation using neural networks. GPS Solut. 2016, 20, 341–351.

- de Celis, R.; Solano-López, P.; Barroso, J.; Cadarso, L. Neural network-based ambiguity resolution for precise attitude estimation with GNSS sensors. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 6702–6716.

- de Celis, R.; Cadarso, L.; Sánchez, J. Guidance and control for high dynamic rotating artillery rockets. Aerosp. Sci. Technol. 2017, 64, 204–212.

- Nguyen, N.V.; Tyan, M.; Lee, J.-W. Efficient framework for vehicle design and 6DoF simulation using multi-fidelity analysis and data fusion. In Proceedings of the 17th AIAA/ISSMO Multidisciplinary Analysis and Optimization Conference, Washington, DC, USA, 13–17 June 2016; p. 3365.

- Lee, H.-I.; Sun, B.-C.; Tahk, M.-J.; Lee, H. Control design of spinning rockets based on co-evolutionary optimization. Control Eng. Pract. 2001, 9, 149–157.

- Britting, K.R. Inertial Navigation Systems Analysis; John Wiley & Sons: Washington, DC, USA, 1971; pp. 65–69. ISBN 0-471-10485-X.

- Harris, M.; Schlais, P.; Murphy, T.; Joseph, A.; Kazmierczak, J. GPS and GALILEO airframe multipath error bounding method and test results. In Proceedings of the 33rd International Technical Meeting of the Institute of Navigation (ION GNSS+), San Diego, CA, USA, 22–25 September 2020; pp. 114–139.

- Fang, W.; Jiang, J.; Lu, S.; Gong, Y.; Tao, Y.; Tang, Y.; Liu, J. A LSTM algorithm estimating pseudo measurements for aiding INS during GNSS signal outages. Remote Sens. 2020, 12, 256.

- Yu, J.-Y.; Zhang, Y.-A.; Gu, W.-J. An approach to integrated guidance/autopilot design for missiles based on terminal sliding mode control. In Proceedings of the 2004 International Conference on Machine Learning and Cybernetics (IEEE Cat. No. 04EX826), Shanghai, China, 26–29 August 2004; IEEE: Shanghai, China, 2004; Volume 1, pp. 610–615.

- Jankovic, M.; Paul, J.; Kirchner, F. GNC architecture for autonomous robotic capture of a non-cooperative target: Preliminary concept design. Adv. Space Res. 2016, 57, 1715–1736.

- Mohamed, M.; Dongare, V. Aircraft neural modeling and parameter estimation using neural partial differentiation. Aircr. Eng. Aerosp. Technol. 2018, 90, 764–778.

- Yu, Y.; Yao, H.; Liu, Y. Aircraft dynamics simulation using a novel physics-based learning method. Aerosp. Sci. Technol. 2019, 87, 254–264.

- Villa, J.; Taipalmaa, J.; Gerasimenko, M.; Pyattaev, A.; Ukonaho, M.; Zhang, H.; Raitoharju, J.; Passalis, N.; Perttula, A.; Aaltonen, J.; et al. aColor: Mechatronics, Machine Learning, and Communications in an Unmanned Surface Vehicle. arXiv 2020, arXiv:2003.00745.

- Yadav, N.; Yadav, A.; Kumar, M. An Introduction to Neural Network Methods for Differential Equations; Springer: Cham, Switzerland, 2015.

- Tatar, M.; Masdari, M. Investigation of pitch damping derivatives for the Standard Dynamic Model at high angles of attack using neural network. Aerosp. Sci. Technol. 2019, 92, 685–695.

- Safran. GSG-8 Advanced GNSS Simulator. Available online: https://safran-navigation-timing.com/document/gsg-8-data-sheet/ (accessed on 23 November 2025).

- Mao, A.; Harrison, C.G.A.; Dixon, T.H. Noise in GPS coordinate time series. J. Geophys. Res. Solid Earth 1999, 104, 2797–2816.

- Kavzoglu, T.; Saka, M.H. Modelling local GPS/levelling geoid undulations using artificial neural networks. J. Geod. 2005, 78, 520–527.

- Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and segmentation of satellite orthoimagery using convolutional neural networks. Remote Sens. 2016, 8, 329.

- Mahmon, N.A.; Ya’acob, N. A review on classification of satellite image using Artificial Neural Network (ANN). In Proceedings of the 2014 IEEE 5th Control and System Graduate Research Colloquium, Shah Alam, Malaysia, 11–12 August 2014; IEEE: Shah Alam, Malaysia, 2014; pp. 153–157.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Sola, J.; Sevilla, J. Importance of input data normalization for the application of neural networks to complex industrial problems. IEEE Trans. Nucl. Sci. 1997, 44, 1464–1468.