Highlights

What are the main findings?

- A novel main orientation representation algorithm of feature points is presented for visible and infrared porcine body images.

- A novel visible and infrared porcine body image registration model is constructed to enhance registration accuracy in variable illumination conditions.

What is the implication of the main finding?

- The visible and infrared porcine body image registration method can achieve a lower average root-mean-square error than current registration algorithms.

Abstract

The safety of animal-related agricultural products has attracted wide attention. To obtain a multi-feature representation of porcine bodies for health detection, visible and infrared imaging is valuable because it provides images of the same porcine body in different modalities. However, directly registering visible and infrared porcine body images can easily misalign structural information and spatial position, owing to the different resolutions and spectra of multi-source images. To overcome this problem, a novel multi-source image feature representation method based on contour angle orientation is proposed and named Gabor-Ordinal-based Contour Angle Orientation (GOCAO). Moreover, a visible and infrared porcine body image registration method is described and named GOCAO-Rough to Fine (GOCAO-R2F). First, contour and texture features of the porcine body are acquired using a Gabor filter with variable scales and an ordinal operation. Second, feature points on the contours are obtained by curvature scale space (CSS), and the main orientation of each feature point is determined by GOCAO. Third, modified scale-invariant feature transform (MSIFT) features are extracted along the main orientation and matched with bilateral matching. Finally, accurate matches are obtained by R2F. Experimental results show that the proposed registration algorithm accurately matches multi-source images for porcine body multi-feature detection and achieves a lower average root-mean-square error than current registration algorithms.

1. Introduction

As quality of life continues to improve, safety issues in agricultural products, especially those related to animals, have attracted public attention. Porcine body health is vital to the safety of animal-related products, and an effective multi-source porcine body image analysis method can achieve non-contact porcine body health detection.

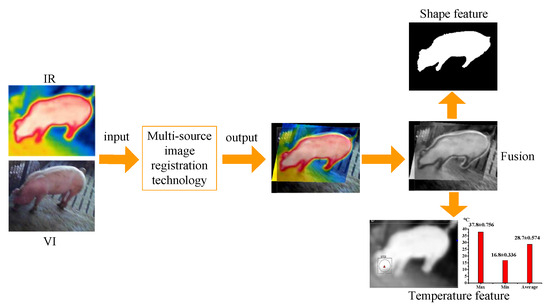

To detect porcine body health accurately, weight estimation [1] and behavior detection [2] are used to identify abnormalities in a timely manner. Deep learning-based methods now fit 2D image features to 3D models to calculate weight and behavioral features accurately [3,4]. However, a large number of 3D models is difficult to acquire in practice, which reduces the reliability of animal 3D reconstruction. Because the construction of 3D models relies on porcine body shape features, which are also an important manifestation of animal health, shape feature extraction is crucial for accurate detection of porcine body health. Moreover, an increase in porcine body temperature is one of the earliest signs of adverse health conditions. Therefore, to detect the health status of the porcine body in time, shape and temperature features are taken as the objects of porcine body health representation. However, it is difficult to obtain both shape and temperature features from single-source images, and directly registering multi-source porcine body images is prone to misaligning structural information and spatial position.

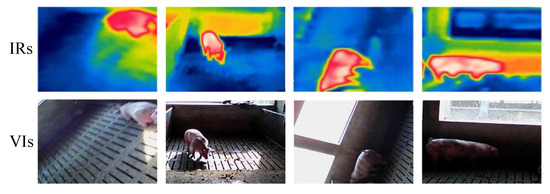

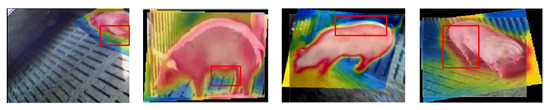

To obtain porcine body shape and temperature features, the multi-source porcine body images are acquired with a FLIR C2 camera (FLIR Systems, USA), which captures visible and infrared images simultaneously; its main technical indicators are shown in Table 1. However, because thermal infrared detectors and digital cameras differ in spectrum and imaging resolution, the acquired multi-source images may be misaligned, which seriously affects multi-source porcine body image fusion and multi-feature detection. Examples of this misalignment are shown in Figure 1. To extract shape and temperature features of the porcine body effectively, visible and infrared image registration is therefore an essential step before multi-source image fusion and multi-feature detection, as shown in Figure 2.

Table 1.

Main technical indicators of FLIR C2.

Figure 1.

The misalignment phenomenon of multi-source porcine body images.

Figure 2.

The procedure of porcine body multi-feature detection.

The aim of visible and infrared image registration is to align two images captured from different viewpoints, in different modalities, or at different times. Gray-based methods use local similarity descriptors to optimize the alignment [5], and mainly include methods based on mutual information (MI) [6], mean squared difference (MSD) [7], and cross correlation (CC) [8]. However, registration methods based on CC have high computational complexity, which lowers the efficiency of the algorithm and limits its practical applicability [9]. Because the local gray mapping relationships differ between multi-source images and noise interferes with them, registration methods based on MI and MSD can suffer from low accuracy [10,11].

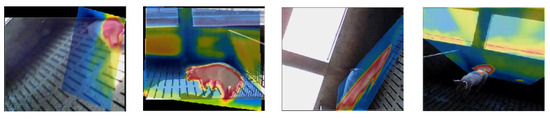

Feature-based methods extract various features and construct a deformation function to minimize the feature variability between multi-view, multi-source, and multi-time images [12,13]. The most commonly used methods include SIFT [14,15], BRIEF [16], SURF [17,18], and ORB [19]. Because multi-source images differ markedly, these methods have a relatively high error rate in registration. To enhance the robustness of multi-modal feature descriptors, a partial intensity invariant feature descriptor (PIIFD) was proposed [20]. However, PIIFD lacks scale invariance, so it cannot establish reliable correspondences between visible and infrared porcine body images. To enhance scale invariance and reduce the influence of different spectra and resolutions in multi-source images, a contour angle orientation (CAO) method was proposed [21]. However, existing methods cannot meet the requirements of visible and infrared porcine body image registration, especially under variable illumination conditions. CAO-C2F has been shown to be the most accurate of four state-of-the-art methods for registering multi-source images (SIFT-LPM, SI-PIIFD-LPM, EG-SURF-RANSAC, and CAO-C2F) [21], especially for multi-source images with obvious contour features. Examples of visible and infrared porcine body images registered by CAO-C2F are shown in Figure 3. It can be seen that CAO-C2F does not register multi-source porcine body images effectively, especially under poor illumination.

Figure 3.

The examples of registered visible and infrared porcine body images based on CAO-C2F.

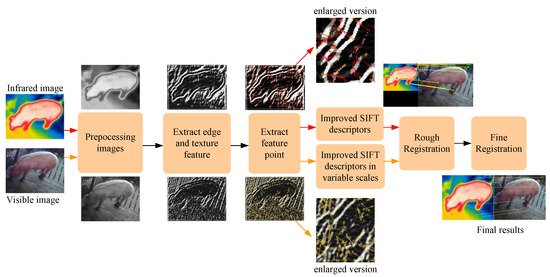

Deep learning-based methods now have great advantages in multi-source image registration, but they require a large amount of training data with ground-truth registrations [22]. Therefore, considering the lack of ground truth in multi-source registration, a novel visible and infrared porcine body image registration algorithm is proposed and named GOCAO-Rough to Fine (GOCAO-R2F), as illustrated in Figure 4.

Figure 4.

The flowchart of the proposed visible and infrared porcine body image registration framework.

The main contributions of the proposed visible and infrared image registration algorithm are summarized as follows:

- A novel main orientation representation algorithm of feature points is presented for visible and infrared porcine body images.

- A novel visible and infrared porcine body image registration model is constructed to enhance registration accuracy in variable illumination conditions.

- The visible and infrared porcine body image registration method can achieve a lower average root-mean-square error than current registration algorithms.

2. Materials and Methods

In this section, a visible and infrared porcine body image registration algorithm is presented in detail. First, the proposed visible and infrared image registration algorithm is provided to obtain reliable multi-source porcine body images. Second, the multi-feature is represented in view of multi-source porcine body fusion images.

2.1. Multi-Source Porcine Body Image Registration

2.1.1. Gabor-Ordinal-Based Contour Angle Orientation

To achieve spectrum and resolution invariance of visible and infrared porcine body images, a novel multi-source image representation algorithm is proposed and named Gabor-Ordinal-based Contour Angle Orientation (GOCAO).

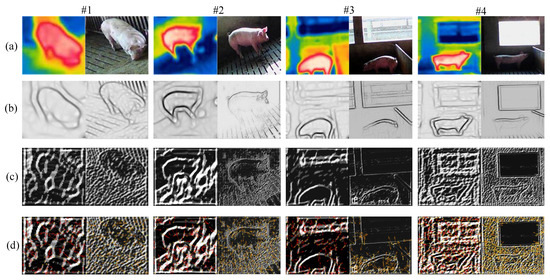

First, due to the adjustable center frequency and orientation of the Gabor filter [23], the porcine body feature maps are acquired by the Gabor filter with variable scales, which can effectively represent the porcine body contour features, and the sketch maps of the Gabor-based contour feature F(x,y) in multi-source porcine body images with variable illumination conditions are shown in Figure 5b.

where G(.) and I(.) represent an even-symmetric Gabor filter and the multi-source porcine body images, respectively. f and θ are the center frequency and angle, respectively. σ and γ are the scale and length-width ratio of the envelope, respectively. ∗ is the convolution operation. xθ and yθ are the coordinates rotated by θ in the x and y directions, which are given as follows:
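The Gabor-based contour feature described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the author's implementation: it uses a common cosine-phase form of the even-symmetric Gabor filter, the usual rotated coordinates xθ = x cos θ + y sin θ and yθ = −x sin θ + y cos θ, and it combines the filter bank by taking the maximum absolute response. The function names, default parameter values, and the combination rule are all hypothetical.

```python
import numpy as np

def even_gabor_kernel(f, theta, sigma, gamma, half_size):
    """Even-symmetric (cosine-phase) Gabor kernel on a square grid.

    f: center frequency, theta: angle (radians), sigma: envelope scale,
    gamma: length-width ratio of the envelope (assumed parameterisation).
    """
    ys, xs = np.mgrid[-half_size:half_size + 1,
                      -half_size:half_size + 1].astype(float)
    # Rotated coordinates x_theta and y_theta.
    x_t = xs * np.cos(theta) + ys * np.sin(theta)
    y_t = -xs * np.sin(theta) + ys * np.cos(theta)
    envelope = np.exp(-(x_t ** 2 + (gamma * y_t) ** 2) / (2.0 * sigma ** 2))
    return envelope * np.cos(2.0 * np.pi * f * x_t)

def convolve2d_same(image, kernel):
    """Plain 'same'-size 2D convolution with zero padding (NumPy only)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)))
    flipped = kernel[::-1, ::-1]
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + image.shape[0],
                                          j:j + image.shape[1]]
    return out

def gabor_contour_map(image, freqs, thetas, sigma=3.0, gamma=0.5, half_size=7):
    """Maximum absolute response over a small bank of frequencies and angles.

    The max-abs combination is an assumption made for this sketch.
    """
    responses = [
        np.abs(convolve2d_same(image, even_gabor_kernel(f, t, sigma, gamma, half_size)))
        for f in freqs for t in thetas
    ]
    return np.max(responses, axis=0)
```

For example, `gabor_contour_map(img, freqs=[0.05, 0.1], thetas=np.linspace(0, np.pi, 4, endpoint=False))` returns a map of the same size as `img` in which edges aligned with any of the four orientations respond strongly.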

Figure 5.

Sketch maps of contour feature under variable illumination conditions: (a) porcine body infrared and visible images; (b) Gabor-based porcine body contour feature maps; (c) Gabor-Ordinal-based porcine body contour feature maps; (d) Gabor-Ordinal-based contour angle orientation feature maps.

Second, to improve the robustness and richness of contour feature extraction, the ordinal features are obtained by the Ordinal filter with triple lobes [24]. The sketch maps of Gabor-Ordinal-based contour feature S in multi-source porcine body images with variable illumination conditions are shown in Figure 5c.

where ω and δ are the central position and scale of the ordinal filter, respectively. Np and Nn are the number of positive and negative lobes, respectively. Constant coefficients Cn and Cp are adopted to maintain a balance between positive and negative lobes. F(.) represents Gabor-based contour feature maps, Γj is the jth contour feature in S, and Ns is the number of contour features in S. n represents the number of pixels in each contour, Pj represents a pixel in the jth contour feature, and each pixel is viewed as a feature point in this paper.
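As an illustration of the triple-lobe ordinal operation described above, the following sketch builds a zero-sum kernel from one positive and two negative Gaussian lobes and keeps only the sign of the filtered response. It is a sketch under assumptions rather than the filter used in the paper: the lobe layout (one central positive lobe, two horizontal negative lobes), the lobe spacing, and the function names are hypothetical, and the coefficients are chosen so that Cp·Np = Cn·Nn.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gaussian_lobe(size, centre, delta):
    """Discrete Gaussian lobe centred at (row, col), normalised to sum to 1."""
    rows, cols = np.mgrid[0:size, 0:size].astype(float)
    lobe = np.exp(-((rows - centre[0]) ** 2 + (cols - centre[1]) ** 2)
                  / (2.0 * delta ** 2))
    return lobe / lobe.sum()

def trilobe_ordinal_kernel(size=15, spacing=4, delta=1.5):
    """Tri-lobe ordinal kernel: N_p = 1 positive lobe, N_n = 2 negative lobes."""
    c = size // 2
    positive = [(c, c)]
    negative = [(c, c - spacing), (c, c + spacing)]
    # Balance the lobes so that C_p * N_p == C_n * N_n (zero-sum kernel).
    c_p = 1.0 / len(positive)
    c_n = 1.0 / len(negative)
    kernel = np.zeros((size, size))
    for centre in positive:
        kernel += c_p * gaussian_lobe(size, centre, delta)
    for centre in negative:
        kernel -= c_n * gaussian_lobe(size, centre, delta)
    return kernel

def ordinal_code(feature_map, kernel):
    """Binary ordinal feature: 1 where the filtered response is positive."""
    pad = kernel.shape[0] // 2
    padded = np.pad(np.asarray(feature_map, dtype=float), pad)
    windows = sliding_window_view(padded, kernel.shape)
    response = np.einsum("ijkl,kl->ij", windows, kernel)
    return (response > 0).astype(np.uint8)
```

Because the kernel sums to zero, a uniform brightness offset does not change the response, which is the property that makes ordinal features attractive under variable illumination.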

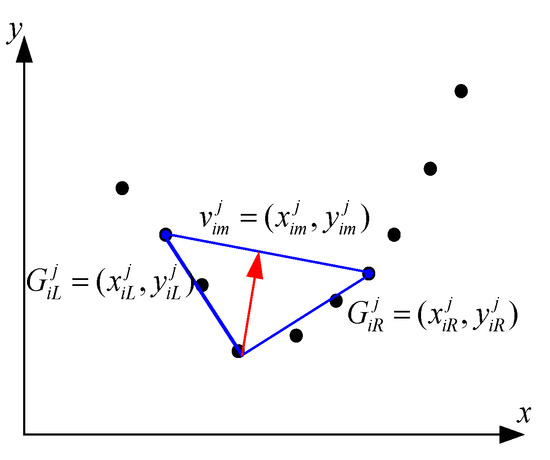

Next, the feature points of visible and infrared porcine body images are represented by curvature scale space (CSS) [25] and assumed as GiLj = (xiLj, yiLj) and GiRj = (xiRj, yiRj), respectively. The orientation of the angular bisector vector vimj is displayed in Figure 6, and it can be defined as follows:

where ximj and yimj represent the horizontal and vertical orientation of the jth angular bisector vector in the ith pair of multi-source porcine body images, respectively. xiLj and yiLj represent the horizontal and vertical orientation of the jth angular bisector vector in the ith visible porcine body image, respectively. xiRj and yiRj represent the horizontal and vertical orientation of the jth angular bisector vector in the ith infrared porcine body image, respectively.
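The orientation of an angular bisector can be illustrated as follows. This is only a geometric sketch of one plausible reading: the angle is taken at a feature point between two reference points, the bisector is the sum of the two unit direction vectors, and its orientation is reported in degrees. The two reference points are passed in explicitly, so the sketch does not depend on how they are chosen; the function and argument names are hypothetical.

```python
import math

def bisector_orientation(point, left, right):
    """Orientation in degrees, in [0, 360), of the bisector of the angle
    at `point` formed with the reference points `left` and `right`."""
    ux, uy = left[0] - point[0], left[1] - point[1]
    vx, vy = right[0] - point[0], right[1] - point[1]
    nu, nv = math.hypot(ux, uy), math.hypot(vx, vy)
    if nu == 0.0 or nv == 0.0:
        raise ValueError("reference points must differ from the feature point")
    # The sum of the two unit vectors points along the angular bisector.
    bx, by = ux / nu + vx / nv, uy / nu + vy / nv
    if math.hypot(bx, by) < 1e-12:
        raise ValueError("points are collinear and opposite; bisector undefined")
    return math.degrees(math.atan2(by, bx)) % 360.0
```

For a right angle at the origin with reference points on the positive x and y axes, the bisector orientation is 45°, independent of how far the reference points are from the feature point.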

Figure 6.

Sketch map of computing the orientation of the Gabor-Ordinal-based feature point.

Finally, the Gabor-Ordinal-based contour angle orientation of each feature point is indicated as follows:

where ximj and yimj represent the horizontal and vertical orientation of the jth angular bisector vector in the ith pair of multi-source porcine body images, respectively. Ordinalij represents the jth feature point in the ith Gabor ordinal feature map. Moreover, the Gabor-Ordinal-based contour angle orientation feature maps are viewed in Figure 5d.

2.1.2. Multi-Source Porcine Body Feature Rough to Fine Registration

Because SIFT is scale and rotation invariant, modified SIFT (MSIFT) is adopted to improve the grayscale invariance of multi-source Gabor-Ordinal-based porcine body feature images [26]. In MSIFT, the gray gradient magnitudes are normalized to reduce the interference of different spectra, and the 16-bin orientation histogram is converted to an orientation histogram with only eight bins. Considering the rich scale information of visible porcine body images, multi-scale MSIFT features are extracted along the main orientation, which is determined by GOCAO. Moreover, the threshold of MSIFT is chosen empirically according to the illumination.
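The two MSIFT modifications mentioned above (normalized gradient magnitudes and a 16-bin histogram reduced to eight bins) can be sketched as follows. The text does not spell out the conversion rule, so this sketch assumes that opposite directions are summed, as is done in partial-intensity-invariant descriptors; the normalization by the maximum magnitude and the function name are likewise assumptions.

```python
import numpy as np

def folded_orientation_histogram(gx, gy, n_bins=16):
    """Magnitude-weighted orientation histogram with n_bins bins over
    [0, 2*pi), folded to n_bins // 2 bins by summing opposite directions."""
    gx = np.asarray(gx, dtype=float)
    gy = np.asarray(gy, dtype=float)
    mag = np.hypot(gx, gy)
    peak = mag.max()
    if peak > 0:
        mag = mag / peak  # normalise gradient magnitudes (assumed rule)
    angle = np.arctan2(gy, gx) % (2.0 * np.pi)
    bins = np.floor(angle / (2.0 * np.pi) * n_bins).astype(int) % n_bins
    hist = np.bincount(bins.ravel(), weights=mag.ravel(), minlength=n_bins)
    half = n_bins // 2
    # Bin k and bin k + half describe opposite directions; add them.
    return hist[:half] + hist[half:]
```

With this folding, a gradient field and its sign-reversed copy yield the same eight-bin histogram, which is useful when an edge appears bright-to-dark in one modality and dark-to-bright in the other.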

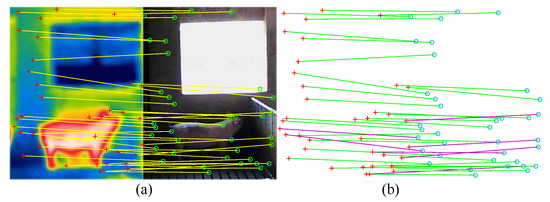

Then, the random sample consensus (RANSAC) algorithm [27] is adopted to estimate the scale parameters, which removes obviously incorrect matches. The matched visible and infrared porcine body feature points after rough registration are denoted as Prv and Pri, respectively, as shown in Figure 7.
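The role of RANSAC in rejecting obvious mismatches can be illustrated with a minimal sketch. It assumes a simple isotropic scale-plus-translation model between the two point sets, which is a simplification chosen for brevity and not necessarily the transformation model used in the paper; the function name, tolerance, and iteration count are hypothetical.

```python
import numpy as np

def ransac_scale_translation(src, dst, n_iter=200, tol=2.0, seed=0):
    """Estimate dst ~ s * src + t with RANSAC and flag inlier matches.

    src, dst: (N, 2) arrays of putative matched points.
    Returns ((s, t), inlier_mask).
    """
    rng = np.random.default_rng(seed)
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    best_model = (1.0, np.zeros(2))
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        # A scale and a translation are fixed by two matches.
        i, j = rng.choice(len(src), size=2, replace=False)
        dp = src[i] - src[j]
        denom = dp @ dp
        if denom == 0.0:
            continue
        s = ((dst[i] - dst[j]) @ dp) / denom
        t = dst[i] - s * src[i]
        residual = np.linalg.norm(s * src + t - dst, axis=1)
        inliers = residual < tol
        if inliers.sum() > best_inliers.sum():
            best_model, best_inliers = (s, t), inliers
    return best_model, best_inliers
```

Matches whose mask entry is `False` are the "obvious incorrect matches" that are dropped before fine registration; the surviving pairs play the role of the rough matches.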

Figure 7.

Sketch map of rough registration: (a) rough registration result; (b) possible correct matches in green lines and identified mismatches in purple lines.

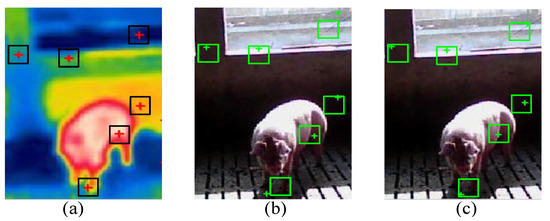

Finally, because the multi-source Gabor-Ordinal-based porcine body feature images have different resolutions, the spatial positions of the feature points deviate from each other. To overcome this problem, fine matches are obtained with the method proposed in [9], which reduces the deviation of the rough matches, and the location of Prv is updated. The sketch map is shown in Figure 8. It can be seen that fine registration corrects the location errors.

Figure 8.

Feature points location of fine registration: (a) Infrared registration. (b) Visible rough registration. (c) Visible fine registration.

2.2. Porcine Body Multi-Feature Representation

To test the effectiveness of visible and infrared image registration, a porcine body multi-feature representation method based on multi-source image fusion is adopted, which was previously proposed by the author [28].

3. Results

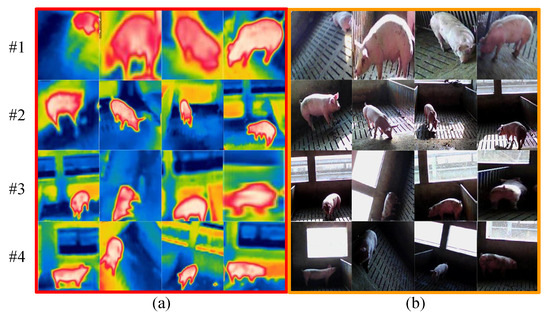

The proposed visible and infrared porcine body registration model is implemented in MATLAB (v2014a) on a notebook with a 2.6 GHz Intel Core CPU and 8 GB of RAM. To evaluate the performance of the proposed multi-source image registration algorithm, a self-collected multi-source porcine body database is built with the FLIR C2 and shown in Figure 9. The visible and infrared images are divided into four groups, denoted #1, #2, #3, and #4, according to the intensity of the visible porcine body images under variable illumination conditions.

Figure 9.

Sketch maps of self-collected database: (a) infrared porcine body images; (b) visible porcine body images.

To verify the registration performance of the proposed algorithm, it is compared with five state-of-the-art visible and infrared image registration methods: Dense [29], CAO-C2F [21], SI-PIIFD-LPM [20], EG-SURF-RANSAC [30], and SIFT-LPM [31]. Moreover, three objective evaluation metrics are adopted to assess the registration and detection results: root-mean-square error (RMSE) [32], Precision–Recall [33], and Accuracy [34].

RMSE is the root-mean-square error between experimental registration points acquired through registration methods and reference registration points acquired through manual calibration; its formula is as follows:

where (xi,yi) and (xiref,yiref) are the coordinates of the ith matching point and reference matching point, respectively. N is the number of final registration points, which is acquired via R2F matching. The smaller the RMSE, the higher the registration accuracy.
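With the symbols defined above, the RMSE takes its standard form, written out here for reference:

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left[\left(x_i - x_i^{ref}\right)^2 + \left(y_i - y_i^{ref}\right)^2\right]}
```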

Precision is the ratio of the number of correct matches to the total number of matches, both of which are acquired through the registration method, whose formula is as follows:

where total matches contain correct matches and false matches. Correct matches are the number of those matches that satisfy

False matches are the rest of the total matches. The distance threshold is chosen empirically. The higher the precision, the more accurate the feature registration method.

Recall is the ratio of the number of correct matches to the number of correct matches in the feature points described by CSS, which represents the ability of the registration method to obtain correct matches from multi-source porcine body images taken under variable illumination conditions. Its formula is as follows:

where correspond features represent the number of correct matches in the feature points described by CSS. The larger the recall, the better the ability of the registration algorithm to distinguish feature points.
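The precision and recall definitions above can be written compactly as follows. The correct-match criterion is an assumption: a match is taken to be correct when its distance to the reference point is below the empirically chosen threshold, denoted here by the hypothetical symbol ε.

```latex
\mathrm{Precision} = \frac{\text{correct matches}}{\text{total matches}}
                   = \frac{\text{correct matches}}{\text{correct matches} + \text{false matches}},
\qquad
\mathrm{Recall} = \frac{\text{correct matches}}{\text{correspond features}}

% Assumed criterion for a correct match (\varepsilon is the distance threshold):
\sqrt{\left(x_i - x_i^{ref}\right)^2 + \left(y_i - y_i^{ref}\right)^2} < \varepsilon
```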

Accuracy is used to measure the detection rate of the porcine body shape feature and is defined as follows:

where TP and TN are true positives and negatives, respectively. FP and FN are false positives and negatives, respectively. The better the accuracy, the more accurate the porcine body shape feature detection method.
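The four metrics can be computed with a few lines of code. The sketch below follows the verbal definitions in this section, with accuracy taken in its standard form (TP + TN) / (TP + TN + FP + FN); the distance-threshold test for a correct match and all function names are assumptions made for illustration.

```python
import math

def rmse(points, reference):
    """Root-mean-square error between matched and reference points."""
    if not points or len(points) != len(reference):
        raise ValueError("point lists must be non-empty and of equal length")
    total = sum((x - xr) ** 2 + (y - yr) ** 2
                for (x, y), (xr, yr) in zip(points, reference))
    return math.sqrt(total / len(points))

def precision_recall(points, reference, threshold, n_corresponding):
    """Precision and recall of a set of matches.

    A match counts as correct when its distance to the reference point is
    below `threshold` (assumed criterion). `n_corresponding` is the number
    of corresponding features among the CSS feature points.
    """
    correct = sum(1 for (x, y), (xr, yr) in zip(points, reference)
                  if math.hypot(x - xr, y - yr) < threshold)
    total = len(points)
    precision = correct / total if total else 0.0
    recall = correct / n_corresponding if n_corresponding else 0.0
    return precision, recall

def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)
```

As a usage example with made-up numbers, two matches at (0, 0) and (3, 4) against reference points that both lie at (0, 0) have distances 0 and 5, so with a threshold of 1 only the first match is correct.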

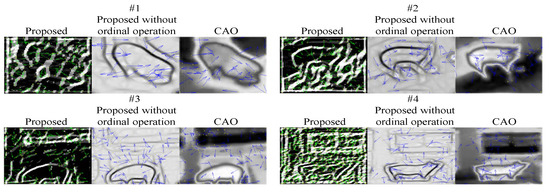

3.1. Comparisons of Main Orientation

The main orientation is a key property of Gabor-Ordinal-based feature points in visible and infrared porcine body image registration. The more correct matches that share the same main orientation, the more precise the final matches will be. In this section, two main orientations are regarded as the same when the absolute error of the angle is less than 5°.
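The 5° criterion needs care near the 0°/360° boundary, where two nearly identical orientations can differ numerically by almost 360°. A minimal sketch of a wrap-aware comparison is given below; the function name and the choice of degrees as the unit are assumptions.

```python
import numpy as np

def orientation_agreement(theta_a_deg, theta_b_deg, tol_deg=5.0):
    """Boolean mask: True where two main orientations differ by less than
    tol_deg, measured on the circle so that 359 and 2 degrees are 3 apart."""
    a = np.asarray(theta_a_deg, dtype=float)
    b = np.asarray(theta_b_deg, dtype=float)
    # Map the signed difference into [-180, 180) before taking |.|.
    diff = np.abs((a - b + 180.0) % 360.0 - 180.0)
    return diff < tol_deg
```

For instance, `orientation_agreement([10, 359, 90], [12, 2, 100])` flags the first two pairs as agreeing and the third as disagreeing.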

To demonstrate the performance of GOCAO, it is compared with CAO, which has been shown to give the best representation of the main orientation among four state-of-the-art visible and infrared image registration methods [21]. Four sketch maps are shown, one for an image chosen under each of the four illumination conditions, and the results are given in Figure 10. It can be seen that the proposed method extracts significantly more feature points with a main orientation than CAO, that is, it yields the largest number of extracted feature points. It therefore lays the foundation for feature matching on multi-source porcine body images.

Figure 10.

The comparisons of the main orientation.

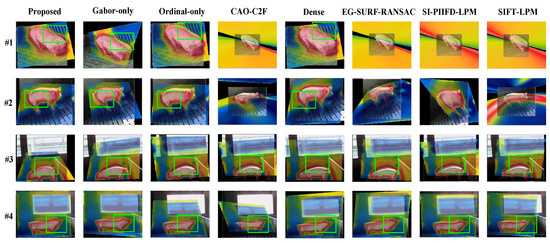

3.2. The Registration Performance of the Proposed Method

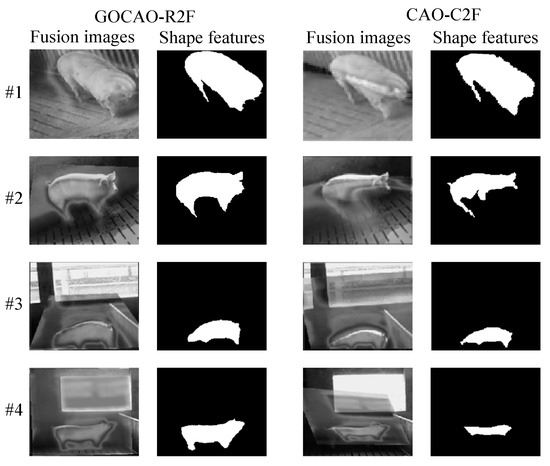

For a qualitative analysis of registration performance, four multi-source porcine body image pairs are used, one chosen under each of the four illumination conditions, and the registration results are shown in Figure 11. It can be seen that the proposed method effectively registers contour features in visible and infrared porcine body images, especially under poor illumination. Moreover, the results reflect the contributions of Gabor filtering and the Ordinal operation.

Figure 11.

The registration results based on different multi-source image registration methods with variable illumination conditions.

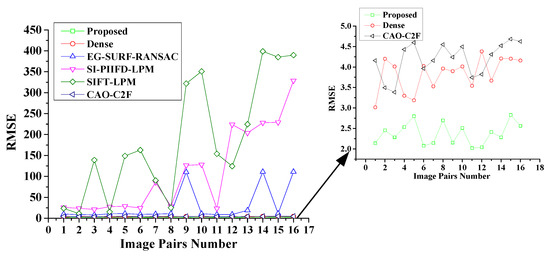

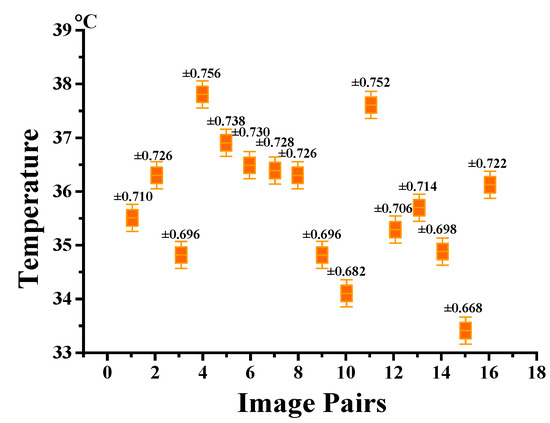

For a quantitative analysis of the registration performance, the RMSEs of the considered methods are shown in Figure 12. GOCAO-R2F achieves the lowest RMSE among the six considered registration methods. Since a smaller RMSE indicates better registration performance, the five state-of-the-art visible and infrared image registration methods fail to effectively align most of the porcine body images under variable illumination conditions, whereas the proposed multi-source image registration method succeeds.

Figure 12.

The RMSE of the six considered methods with variable illumination conditions.

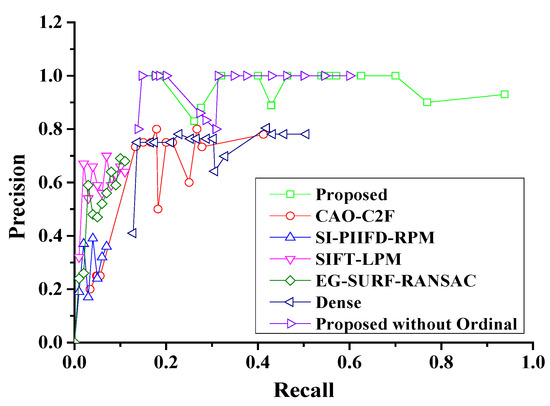

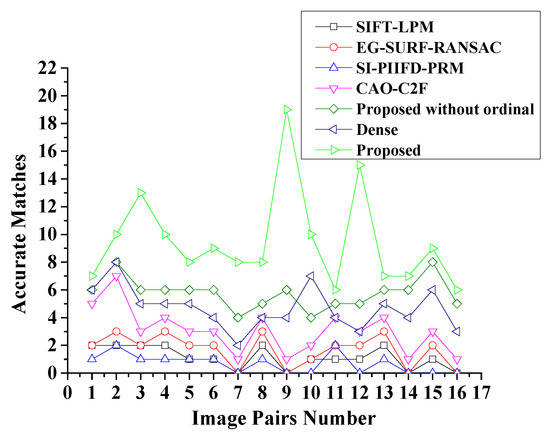

Moreover, the Precision–Recall curves of the considered algorithms are shown in Figure 13. It can be seen that GOCAO-R2F has the highest average precision among the seven considered algorithms, owing to the rich contour and texture features and the robust main orientation description of GOCAO. Meanwhile, GOCAO-R2F achieves the most matched feature points in each multi-source porcine body image pair, as shown in Figure 14.

Figure 13.

The Precision–Recall curves of the seven considered algorithms.

Figure 14.

The number of accurate matches based on the seven considered methods.

To assess the running efficiency of the proposed registration method, the average running times of the considered visible and infrared registration methods are shown in Table 2; the multi-source porcine body images have the same size in every run. The results show that GOCAO-R2F is more efficient than the other considered state-of-the-art methods, except CAO-C2F and Dense. A possible reason is that the proposed method takes a long time to represent multi-source porcine body image features, which reduces the overall efficiency of the registration algorithm.

Table 2.

The average running times of multi-source image registration methods.

3.3. The Porcine Body Multi-Feature Representation

To demonstrate the accuracy of the proposed registration algorithm for porcine body multi-feature representation, the same visible and infrared porcine body image pairs as in Section 3.2 are used.

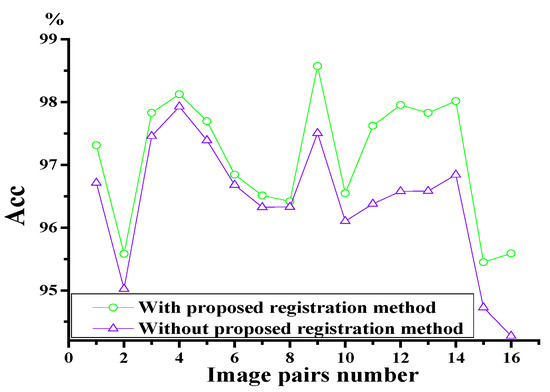

Because CAO-C2F has the best registration performance among the five considered comparative methods, which is proven in Figure 12, the extracted shape features are only compared with those of CAO-C2F. In this paper, MGANFuse [28] is used to obtain multi-source porcine body fusion images and shape features with variable illumination conditions, which are shown in Figure 15. To validate the detection performance of porcine body shape features with the presented registration algorithm, an average accuracy is adopted to measure porcine body shape features, as shown in Figure 16. It can be seen that the representation method based on the proposed registration model can more effectively represent porcine body shape features, especially in poor illumination.

Figure 15.

The porcine body shape features under variable illumination conditions.

Figure 16.

The average accuracy of porcine body shape features with different registration methods.

Then, the temperature features are extracted in view of shape features [35], and the porcine body temperature features are obtained and shown in Figure 17. It can be seen that due to a 2% error of FLIR C2, the obtained temperature features have a certain range of error, and the highest temperature features with floating error are all in the normal range of animal temperature.

Figure 17.

The porcine body temperature features under variable illumination conditions.

4. Discussion

A limitation of the proposed multi-source porcine body image registration method is that it is strongly affected by bright illumination, which reduces the accuracy of multi-source porcine body image matching, as shown in Figure 18. The figure shows slight deviations in the matching of porcine body shape edge features under brighter illumination, which can affect the accuracy of porcine body shape feature extraction. A possible reason is that the visible porcine body image contains too many feature points under brighter illumination, which can easily cause mismatches in visible and infrared porcine body image registration. A possible solution is to replace the Ordinal operation with the Local Binary operation [36], which is more robust to variable illumination conditions and can reduce the effect of bright illumination.

Figure 18.

The examples of bad situations.

5. Conclusions

A novel visible and infrared porcine body image registration algorithm called GOCAO-R2F is presented for multi-feature detection. Firstly, porcine body contour features are acquired by a Gabor filter with variable scales and an ordinal operation. Secondly, feature points on the contours are obtained via CSS, and the main orientation of each feature point is determined using GOCAO. Thirdly, modified SIFT features are extracted along the main orientation and registered via R2F. Finally, the porcine body multi-feature is represented on the basis of the registration results. Experimental results show that the presented registration algorithm accurately matches visible and infrared images for porcine body multi-feature representation and achieves a lower average root-mean-square error than current registration algorithms.

This work mainly focuses on registering multi-source porcine body images in variable illumination conditions. The limited number of multi-source porcine body image pairs and the limited automation performance of the presented registration method can reduce the adaptability of the algorithm. Future studies should focus on robust feature representation of multi-source porcine body image and an adaptive registration model for visible and infrared porcine body images under occlusion and noisy environments, which can improve the robustness and accuracy of the porcine body multi-feature representation method in view of multi-source image registration. In addition, it is necessary to continuously improve the multi-source porcine body image database.

Author Contributions

Conceptualization, Z.Z.; methodology, Z.Z.; software, Z.Z.; validation, S.Z.; writing—original draft preparation, Z.Z.; writing—review and editing, S.Z.; funding acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Tianjin Education Commission Research Program, grant number 2023KJ194. The funder is Zhen Zhong.

Data Availability Statement

Data will be made available on request.

Acknowledgments

The author would like to thank her colleagues for their support of this work. The detailed comments from the anonymous reviewers are gratefully acknowledged.

Conflicts of Interest

Author Zhen Zhong was employed by the Post-Doctoral Workstation, Tianjin Development Zone Zhonghuan System Electronic Engineering Co., Ltd., Tianjin 300074, China. The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Bhoj, S.; Tarafdar, A.; Chauhan, A.; Singh, M.; Gaur, G.K. Image processing strategies for pig liveweight measurement: Updates and challenges. Comput. Electron. Agric. 2022, 193, 106693.

2. Gosztolai, A.; Günel, S.; Lobato-Ríos, V. LiftPose3D: A deep learning-based approach for transforming two-dimensional to three-dimensional poses in laboratory animals. Nat. Methods 2021, 18, 975–981.

3. Rueegg, N.; Zuffi, S.; Schindler, K.; Black, M.J. BARC: Learning to regress 3D dog shape from images by exploiting breed information. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3876–3884.

4. Marshall, J.D.; Li, T.; Wu, J.H.; Dunn, T.W. Leaving flatland: Advances in 3D behavioral measurement. Curr. Opin. Neurobiol. 2022, 73, 102522.

5. Kalantar, B.; Mansor, S.B.; Halin, A.A.; Shafri, H.; Zand, M. Multiple moving object detection from UAV videos using trajectories of matched regional adjacency graphs. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5198–5213.

6. Yang, T.; Tang, Q.; Li, L.; Song, J.; Zhu, C.; Tang, L. Nonrigid registration of medical image based on adaptive local structure tensor and normalized mutual information. J. Appl. Clin. Med. Phys. 2019, 20, 99–110.

7. Kybic, J.; Unser, M. Fast parametric elastic image registration. IEEE Trans. Image Process. 2003, 12, 1427–1442.

8. Cao, X.; Yang, J.; Zhang, J.; Wang, Q.; Yap, P.T.; Shen, D. Deformable Image Registration Using a Cue-Aware Deep Regression Network. IEEE Trans. Biomed. Eng. 2018, 65, 1900–1911.

9. Photiou, C.; Pitris, C. Dual-angle optical coherence tomography for index of refraction estimation using rigid registration and cross-correlation. J. Biomed. Opt. 2019, 24, 106001.

10. Liu, X.; Wang, M.; Song, Z. Multi-modal image registration based on multi-feature mutual information. J. Med. Imaging Health Inform. 2019, 9, 153–158.

11. Zou, B.; He, Z.; Zhao, R.; Zhu, C.; Liao, W.; Li, S. Non-rigid retinal image registration using an unsupervised structure-driven regression network. Neurocomputing 2020, 404, 14–25.

12. Yu, K.; Ma, J.; Hu, F.; Ma, T.; Fang, B. A grayscale weight with window algorithm for infrared and visible image registration. Infrared Phys. Technol. 2019, 99, 178–186.

13. Xu, G.; Wu, Q.; Cheng, Y.; Yan, F.; Yu, Q. A robust deformed image matching method for multi-source image matching. Infrared Phys. Technol. 2021, 115, 103691.

14. Chang, H.H.; Wu, G.L.; Chiang, M.H. Remote sensing image registration based on modified SIFT and feature slope grouping. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1363–1367.

15. Safdari, M.; Moallem, P.; Satari, M. SIFT Detector Boosted by Adaptive Contrast Threshold to Improve Matching Robustness of Remote Sensing Panchromatic Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 675–684.

16. Lam, S.K.; Jiang, G.Y.; Wu, M.Q.; Cao, B. Area-time efficient streaming architecture for FAST and BRIEF detector. IEEE Trans. Circuits Syst. II Express Briefs 2018, 66, 282–286.

17. Hassanin, A.; El-Samie, F.; Banby, G. A real-time approach for automatic defect detection from PCBs based on SURF features and morphological operations. Multimed. Tools Appl. 2019, 78, 34437–34457.

18. Sedaghat, A.; Mohammadi, N. High-resolution image registration based on improved SURF detector and localized GTM. Int. J. Remote Sens. 2019, 40, 2576–2601.

19. Tian, X.; Zhou, G.; Xu, M. Image copy-move forgery detection algorithm based on ORB and novel similarity metric. IET Image Process. 2020, 14, 2092–2100.

20. Du, Q.; Fan, A.; Ma, Y.; Fan, F.; Mei, X. Infrared and visible image registration based on scale-invariant PIIFD feature and locality preserving matching. IEEE Access 2018, 6, 64107–64121.

21. Jiang, Q.; Liu, Y.; Yan, Y.; Deng, J.; Jiang, X. A contour angle orientation for power equipment infrared and visible image registration. IEEE Trans. Power Deliv. 2021, 36, 2559–2569.

22. Zhang, J.; Wang, Y.; Dai, J.; Cavichini, M.; Bartsch, D.U.G.; Freeman, W.R.; An, C. Two-step registration on multi-modal retinal images via deep neural networks. IEEE Trans. Image Process. 2021, 31, 823–838.

23. Zhong, Z.; Gao, W.; Khattak, A.M.; Wang, M. A novel multi-source image fusion method for pig-body multi-feature detection in NSCT domain. Multimed. Tools Appl. 2020, 79, 26225–26244.

24. Zhong, Z.; Wang, M.; Gao, W.; Zheng, L. A novel multisource pig-body multifeature fusion method based on Gabor features. Multidimens. Syst. Signal Process. 2021, 32, 381–404.

25. Chen, H.X.; Yung, N.H. Corner detector based on global and local curvature properties. Opt. Eng. 2008, 47, 057008.

26. Chen, J.; Tian, N.; Lee, J.; Zheng, R.T.S.; Laine, A.F. A partial intensity invariant feature descriptor for multimodal retinal image registration. IEEE Trans. Biomed. Eng. 2010, 57, 1707–1718.

27. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

28. Zhong, Z.; Yang, J. A Novel Pig-body Multi-feature Representation Method Based on Multi-source Image Fusion. Measurement 2022, 204, 111968.

29. Li, L.Z.; Han, L.; Ding, M.T.; Cao, H.Y. Multimodal image fusion framework for end-to-end remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5607214.

30. Tang, C.; Tian, G.Y.; Chen, X.; Wu, J.; Li, K.; Meng, H. Infrared and visible images registration with adaptable local-global feature integration for rail inspection. Infrared Phys. Technol. 2017, 87, 31–39.

31. Yang, H.; Li, X.; Ma, Y.; Zhao, L.; Chen, S. A high precision feature matching method based on geometrical outlier removal for remote sensing image registration. IEEE Access 2019, 7, 180027–180038.

32. Gao, J.; Cai, X.F. Image matching method based on multi-scale corner detection. In Proceedings of the 2017 13th International Conference on Computational Intelligence and Security (CIS), Hong Kong, China, 15–18 December 2017; pp. 125–129.

33. Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

34. Nemalidinne, S.M.; Gupta, D. Nonsubsampled contourlet domain visible and infrared image fusion framework for fire detection using pulse coupled neural network and spatial fuzzy clustering. Fire Saf. J. 2018, 101, 84–101.

35. Zhong, Z. A novel visible and infrared image fusion method based on convolutional neural network for pig-body feature detection. Multimed. Tools Appl. 2022, 81, 2757–2775.

36. Zhang, W.; Shan, S.; Chen, X.; Gao, W. Local Gabor binary patterns based on mutual information for face recognition. Int. J. Image Graph. 2007, 7, 777–793.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).