Abstract

Modern dental education increasingly calls for smarter tools that combine precision with meaningful feedback. In response, this study presents the Intelligent Dental Handpiece (IDH), a next-generation training tool designed to support dental students and professionals by providing real-time insights into their techniques. The IDH integrates motion sensors and a lightweight machine learning system to monitor and classify hand movements during practice sessions. The system classifies three motion states: Alert (10°–15° deviation), Lever Range (0°–10°), and Stop Range (>15°), based on IMU-derived features. A dataset collected from 61 practitioners was used to train and evaluate four families of machine learning models: Logistic Regression, Random Forest, Support Vector Machine (Linear, RBF, and Polynomial kernels), and a Neural Network. Performance across models ranged from 98.52% to 100% accuracy, with Random Forest and Logistic Regression achieving perfect classification and AUC scores of 1.00. Motion features such as Deviation, Take Time, and Device type were most influential in predicting the motion state. The IDH offers a practical and scalable solution for improving dexterity, safety, and confidence in dental training environments.

1. Introduction

Dental procedures demand a high level of dexterity, accuracy, and experience. Therefore, preclinical dental education plays a crucial role in helping students develop essential motor skills before engaging directly in patient care [1]. During this training phase, students usually practice on conventional dental simulators, which have played an important role in dental education since 1894 [2,3]. Despite their many limitations, conventional simulators have enhanced student training across a broad range of standardized technical procedures using dental instruments [4]. They remain an essential tool for simulating diverse dental procedures and preparing students for real-world dental practice [5].

An advancement in dental simulators is haptic simulation, which provides realistic video, audio, and tactile feedback to improve fine motor skills while allowing unlimited training time, leading to enhanced student performance [3,6,7].

Dental handpieces have been used by dentists for decades; however, the increased complexity of procedures and the need for enhanced clinical outcomes have highlighted the need for more advanced dental handpieces that integrate real-time feedback, performance monitoring, and evaluation [8]. This could be achieved by integrating Artificial Intelligence (AI) techniques into dental instruments, enabling precision-driven feedback [9,10,11].

Recent advances in Machine Learning (ML) and Deep Learning (DL) have significantly enhanced the ability to analyze and classify human motion across domains such as healthcare, robotics, sports, and human–computer interaction. Techniques like Support Vector Machines (SVMs), Random Forests, and deep neural networks have been widely applied to inertial measurement unit (IMU) data for gesture recognition and motion classification [12,13]. For instance, CNN-LSTM and CNN-GRU architectures have demonstrated high accuracy in recognizing dynamic gestures from IMU signals [13]. Reinforcement learning approaches, such as deep Q-networks, have also been explored for hand gesture recognition using EMG-IMU data [14]. In wearable systems, real-time hand tracking using deep learning models like MobileNet-SSD has shown promising results in human-computer interaction applications [15]. These methods, although developed for various applications, share core principles: feature extraction, temporal modeling, and classification. These principles are directly transferable to dental training. The proposed work builds on these foundations by applying and comparing multiple ML models for classifying dental handpiece motion states using IMU data.

Different commercial haptic dental handpieces are currently being used, as summarized in Table 1 [16]. Simodont is the most widely adopted solution, offering immersive virtual reality and high-fidelity haptic feedback for a broad range of dental procedures. On the other hand, newer solutions, such as Virtual Reality Dental Training System (VRDTS) and Intelligent Dental Simulation System (IDSS), integrate advanced features such as AI-based assessment and real-time performance tracking. Notably, IDSS emphasizes intelligent feedback and automated scoring [17,18].

Table 1.

Comparison of commercial dental simulation tools.

Compared to the commercial systems mentioned in Table 1, the proposed Intelligent Dental Handpiece (IDH) offers several unique advantages. Unlike haptic-based simulators, the IDH focuses on real-time motion classification using IMU data and machine learning models, enabling lightweight and cost-effective feedback. It supports cloud-based analytics, provides immediate visual and auditory alerts, and is built using compact, low-cost components. Furthermore, the IDH is accompanied by a publicly available dataset collected from 61 practitioners, supporting reproducibility and further research, features that are rarely offered by commercial tools. These characteristics make the IDH a scalable and accessible solution for dental skill assessment and training.

In [27], the authors presented a virtual training system (hapTEL) based on haptic technology. It allowed students to practice various procedures such as caries removal, dental drilling, and cavity preparation. Their prototype consisted of a PC, a Planar 3D stereo display, a camera head along with a Falcon haptic device, and a modified dental handpiece.

In [28], the authors introduced a robotic system guided by vision to improve spatial positioning accuracy during dental procedures. It integrated an enhanced force-feedback system and real-time visual guidance.

Multiple review papers have examined the acceptability and assessment of haptic and virtual dental solutions. In [29], the authors studied how the performance of learners trained to prepare access cavities with Simodont evolved, and also determined learner acceptability of the Haptic Virtual Reality simulator (HVRS). Their results showed that Simodont is a reasonable option for endodontic access cavity training and was acceptable to learners. In [30], the authors examined the use of virtual assessment tools in dental education in studies published between 2000 and 2024. They concluded that virtual assessments have enhanced the accuracy and comprehensiveness of dental student evaluations; however, they also highlighted important challenges, such as the need for significant investment in technology, infrastructure, and training.

This research introduces the Intelligent Dental Handpiece (IDH), a novel integration of embedded sensor technology, real-time data processing, and smart feedback mechanisms into a conventional dental handpiece. Unlike traditional tools, the IDH captures motion and behavioral data during use, providing auditory and visual cues that alert the user to deviations in technique. These features aim not only to enhance procedural precision but also to support skill development and supervised learning, making it particularly valuable for dental education and training environments.

This paper presents the technical architecture, functionalities, and use-case scenarios of the IDH. It further evaluates its potential to transform current practices by enhancing the accuracy, accountability, and quality assurance in dental procedures. The work aims to lay a foundation for intelligent, data-driven systems within clinical dentistry, ultimately advancing both patient safety and practitioner development.

2. Prototype Design

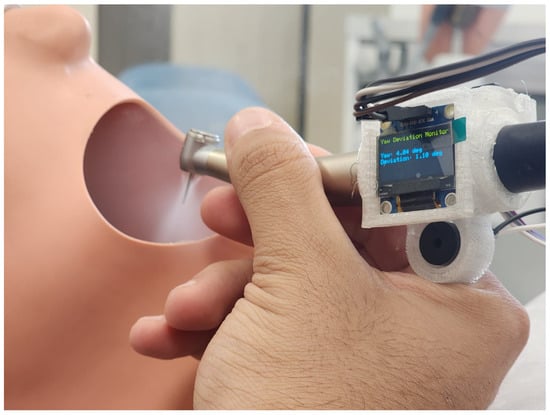

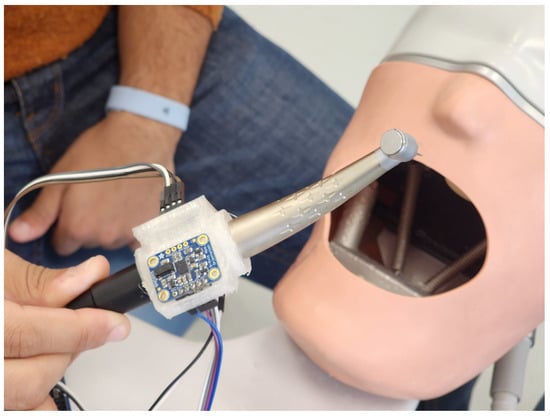

The system consists of a dental handpiece with a 3D-printed compartment that houses the components used to track and measure students’ movements during practice. Figure 1 and Figure 2 showcase a dental training setup designed to track the movement of a dental handpiece in a simulated clinical setting. The device captures sensor data at a sampling rate of one sample per second. Each motion segment is evaluated as a short time series comprising consecutive sensor readings. To precisely identify the start and end of each movement, a manual trigger mechanism is employed: the practitioner presses a control button on the handpiece to mark the onset of the motion, and releasing the button marks the motion’s conclusion.

Figure 1.

Intelligent dental handpiece in practice on phantom head model of a dental manikin.

Figure 2.

Another angle showing the intelligent dental handpiece in practice on phantom head model of a dental manikin.

The device motion is tracked by an LSM6DS3TR-C IMU (STMicroelectronics, Geneva, Switzerland) and transmitted to a cloud server to be processed by the developed machine learning model. The prototype components are as follows:

- Air-turbine drill handpiece manufactured by NSK (Nakanishi Inc., Tochigi, Japan);

- Microcontroller: XIAO nRF52840 manufactured by XIAO (Birmingham, UK);

- IMU: LSM6DS3TR-C manufactured by STMicroelectronics (Plan-les-Ouates, Switzerland);

- OLED: 0.96-inch SSD1306 (128×64 resolution) manufactured by Solomon Systech (Hong Kong, China);

- Buzzer;

- On/Off switch;

- PCB.

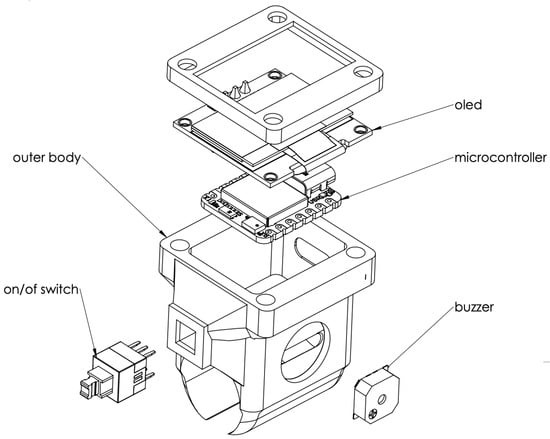

The schematic design of the 3D compartment can be seen in Figure 3. The setup is being tested on a dental manikin, commonly used for training and practice, creating a realistic environment for evaluating hand control and technique. By capturing real-time motion data, this system could help assess skill levels, improve training methods, and even contribute to advancements in robotic-assisted dentistry. Ultimately, this kind of technology has the potential to refine how dental students and professionals develop precision and dexterity in their work.

Figure 3.

The developed AI-based device main compartment components.

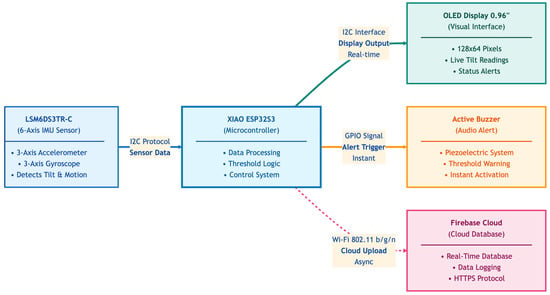

The system architecture in Figure 4 of the Intelligent Dental Handpiece is designed to seamlessly integrate motion sensing, real-time feedback, and cloud-based analytics into a compact and user-friendly setup. At its core, the LSM6DS3TR-C sensor captures six degrees of motion—acceleration and angular velocity—transmitting data via I2C to the XIAO ESP32S3 microcontroller (XIAO, Birmingham, UK). This microcontroller processes the data, applies threshold logic, and coordinates responses across the system. A 0.96" OLED display provides live visual feedback, while an active buzzer delivers immediate audio alerts when deviations occur. To support long-term tracking and remote analysis, the system uploads motion data asynchronously to a Firebase cloud database using Wi-Fi. Together, these components create a responsive and intelligent training tool that enhances precision, awareness, and skill development in dental education.

Figure 4.

System diagram.

The developed prototype was used to collect 3720 data records from 61 practitioners (29 male and 31 female), with an average age of 38. Data was collected during the operation of opening cavities for fillings. The dataset comprises three balanced classes based on handpiece deviation angles: Class 0 (Alert) with 1239 samples (33.3%) representing deviation angles between 10° and 15°, Class 1 (Lever Range) with 1265 samples (34.0%) representing deviation angles between 0° and 10°, and Class 2 (Stop Range) with 1216 samples (32.7%) representing deviation angles exceeding 15°. Each data record includes the following parameters: ID, State, Time, Deviation, roll, pitch, yaw, acceleration, and velocity.
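The mapping from deviation angle to motion state described above can be sketched as a simple threshold rule. This is a minimal illustration rather than the device firmware: the function name `classify_deviation` is hypothetical, and both the handling of the exact boundary values (10° and 15°) and the use of the absolute angle are assumptions, since the text does not specify them.

```python
def classify_deviation(angle_deg: float) -> str:
    """Map a deviation angle in degrees to one of the three motion states."""
    magnitude = abs(angle_deg)  # assumption: only the size of the deviation matters
    if magnitude <= 10.0:       # assumption: exactly 10 degrees counts as Lever Range
        return "Lever Range"
    if magnitude <= 15.0:       # assumption: exactly 15 degrees counts as Alert
        return "Alert"
    return "Stop Range"


# Class indices as listed in the dataset description
STATE_TO_CLASS = {"Alert": 0, "Lever Range": 1, "Stop Range": 2}

for angle in (4.0, 12.5, 18.0):
    state = classify_deviation(angle)
    print(f"{angle:5.1f} deg -> {state} (class {STATE_TO_CLASS[state]})")
```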

Each practitioner (user) has a unique ID to store their data separately. The State parameter defines the motion range observed, with values corresponding to one of the following: Lever Range, Alert, or Stop Range. The Time parameter represents the timestamp at which each record was collected. The roll, pitch, and yaw parameters describe the rotation axes of the handpiece during the procedure. The acceleration and velocity parameters indicate the linear dynamics of the tool’s movement during operation.

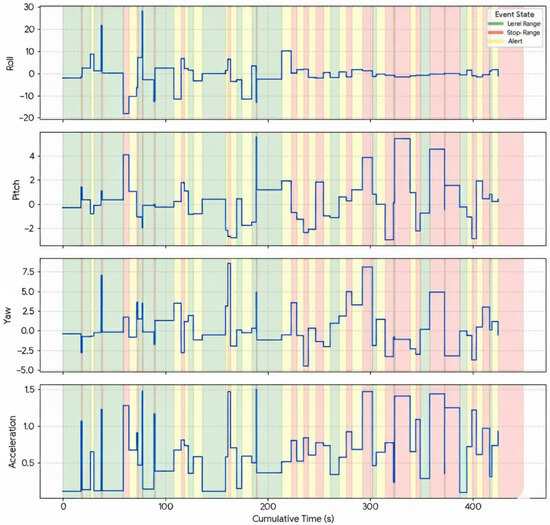

Figure 5 illustrates examples of the collected signals, where roll, pitch, yaw, and acceleration are plotted over time with color-coded background regions representing each state class (Level Range, Stop Range, and Alert). These visualizations help clarify the temporal and angular behavior of the handpiece across different states. More about the dataset is detailed in [31].

Figure 5.

Visualization of collected signals for one practitioner showing roll, pitch, yaw, and acceleration over cumulative time. Background colors indicate motion states: green for Level Range, yellow for Alert, and red for Stop Range.

3. Methodology

This section presents the design and implementation of the proposed intelligent dental handpiece, focusing on data acquisition, preprocessing, and the motion analysis techniques.

The IMU is the main sensory component; it captures six degrees of freedom (DOF) of motion data, including acceleration, angular velocity, and orientation (roll, pitch, and yaw), which are then analyzed to assess dexterity and control. The following subsections elaborate on the preprocessing of IMU data, feature extraction, ML models, and performance evaluation.

3.1. Data Preprocessing

The dataset includes device-specific metadata together with data gathered by the IMU, and contains both numerical and categorical variables. Deviation, Device, roll, pitch, yaw, acceleration, and velocity are the primary parameters. The target variable, State, holds the class label assigned through manual observation during data collection. Several preprocessing steps were applied to prepare the dataset for model training and evaluation.

3.1.1. Feature Selection

The features most relevant to model training and assessment were identified through exploratory analysis. The selected features comprise device-specific data (Device and Deviation) and motion parameters (acceleration, roll, pitch, yaw, and velocity).

3.1.2. Time Column Normalization

Every data record in the gathered dataset has a timestamp field. To ease numerical processing and guarantee consistent input ranges across all observations, the time values were converted to the number of seconds elapsed since the recording started. Min-Max scaling was then applied to normalize them to a standard range of [0, 1], which lessens the influence of temporal magnitude differences on model training [32].
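The two steps of this transformation can be illustrated with a short pandas sketch. The timestamps below are invented for illustration, and the column names `Time` and `Time_norm` are assumptions; only the procedure (elapsed seconds followed by Min-Max scaling) comes from the description above.

```python
import pandas as pd

# Hypothetical timestamps for one recording session
df = pd.DataFrame({"Time": pd.to_datetime([
    "2024-01-01 10:00:00",
    "2024-01-01 10:00:01",
    "2024-01-01 10:00:02",
    "2024-01-01 10:00:04",
])})

# Step 1: seconds elapsed since the first record of the session
elapsed = (df["Time"] - df["Time"].iloc[0]).dt.total_seconds()

# Step 2: Min-Max scaling, x' = (x - min) / (max - min), giving values in [0, 1]
df["Time_norm"] = (elapsed - elapsed.min()) / (elapsed.max() - elapsed.min())

print(df["Time_norm"].tolist())  # [0.0, 0.25, 0.5, 1.0]
```

In a full pipeline the minimum and maximum would normally be taken from the training split only, so that the test split does not influence the scaling.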

3.1.3. Categorical Encoding

The State column is a categorical parameter that denotes the operating state of the handpiece. Its values were therefore converted to numerical labels to guarantee compatibility with classification algorithms [33]. This transformation preserved class information without introducing ordinal bias.
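One possible way to perform this encoding is scikit-learn's `LabelEncoder`; the text does not name the encoder that was used, so this choice is an assumption. `LabelEncoder` assigns integers in sorted order of the label strings, which happens to reproduce the class indices reported for the dataset (Alert = 0, Lever Range = 1, Stop Range = 2).

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical excerpt of the State column
states = ["Lever Range", "Alert", "Stop Range", "Alert", "Lever Range"]

encoder = LabelEncoder()
y = encoder.fit_transform(states)

print(list(encoder.classes_))  # ['Alert', 'Lever Range', 'Stop Range']
print(y.tolist())              # [1, 0, 2, 0, 1]

# The mapping is reversible, so predictions can be reported as state names
print(list(encoder.inverse_transform([2, 0])))  # ['Stop Range', 'Alert']
```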

3.1.4. Data Splitting

The train_test_split function from the scikit-learn library was used to divide the dataset into training and testing sections. A traditional 80/20 ratio was applied, with 20% of the data being used for performance evaluation and 80% being used to train the models. The random_state parameter was set to 42 in order to guarantee experiment repeatability.
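A minimal sketch of this split is shown below. The feature matrix is a random placeholder with the same number of records as the dataset, not the real data, and `stratify=y` is included on the assumption that the stratified sampling listed in the general configuration of Section 3.3.2 also applies here.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder labels with the class sizes reported for the dataset
y = np.repeat([0, 1, 2], [1239, 1265, 1216])
# Placeholder features (seven columns chosen arbitrarily for illustration)
X = np.random.default_rng(0).normal(size=(y.size, 7))

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.20,    # 80/20 split
    stratify=y,        # assumption: keep class proportions in both parts
    random_state=42,   # fixed seed for repeatability
)

print(X_train.shape[0], X_test.shape[0])  # 2976 744
print(np.bincount(y_test))                # per-class counts in the test part
```

With 3720 records, the 20% test portion contains 744 records, which matches the total number of instances in the confusion matrices reported in Section 5.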

3.2. Machine Learning Models

The analysis included a range of classifiers: Logistic Regression (LR), Random Forest (RF), the three main Support Vector Machine (SVM) kernels (Linear, RBF, Polynomial), and a Neural Network (NN) model. This mix covers both simple linear models and more complex deep-learning approaches, which helps identify the best pattern recognition method for the IMU sensor data. Each model was trained using the preprocessed data.

3.2.1. Random Forest

An ensemble-based classifier, the Random Forest model builds several decision trees and combines their predictions [34]. It was selected for its inherent robustness against overfitting, its capacity to handle complex feature interactions, and its stability in analyzing high-dimensional, noisy sensor data.

3.2.2. SVM (Kernel-Based)

The Support Vector Machine (SVM) classifier is highly regarded for its ability to locate the optimal separating hyperplane between classes by maximizing the decision margin. By explicitly exploring the Linear, Radial Basis Function (RBF), and Polynomial kernels, the study ensured that the best decision boundary, from a simple hyperplane to a complex, non-linear one, could be identified [35,36].

3.2.3. Logistic Regression

A linear classifier that models the likelihood of an outcome, Logistic Regression was included as a highly interpretable performance benchmark [37,38]. It is crucial for assessing how much of the classification problem can be solved by simple linear relationships.

3.2.4. Neural Network (NN)

A Neural Network (MLP) was selected to leverage its capacity for deep feature learning and modeling highly non-linear, hierarchical patterns within the time-series sensor data. NN models provide a state-of-the-art benchmark, particularly when complex spatial or temporal dependencies exist in motion data [39,40].

3.3. Hyperparameter Optimization Framework

Hyperparameter tuning was performed using Randomized Search with stratified k-fold cross-validation to optimize model performance and generalization. Randomized search efficiently explores vast hyperparameter spaces by randomly sampling from specified distributions, in contrast to exhaustive grid search, which analyzes every possible combination [41]. This method is particularly advantageous when computational resources are limited or when certain parameters have minimal performance impact [42].

3.3.1. Randomized Search Methodology

Randomized search was selected over exhaustive grid search based on empirical studies demonstrating comparable performance at significantly reduced computational cost [41].

The randomized search process samples hyperparameter combinations from predefined distributions, evaluating each configuration through cross-validation to estimate generalization performance. The number of search iterations was determined based on each model’s parameter space complexity, balancing thorough exploration with computational feasibility. Model-specific iteration counts ranged from 15 to 30, as detailed in Table 2.

Table 2.

Hyperparameter optimization configuration by model.

3.3.2. Cross-Validation Strategy

All models employed 5-fold stratified cross-validation to obtain reliable performance estimates and to guard against overfitting to a single validation split [43]. Stratification maintains the original class distribution within each fold, which is critical for imbalanced datasets and ensures that model performance estimates are representative across all classes.

General Configuration:

- Cross-Validation Method: 5-fold stratified cross-validation;

- Primary Optimization Metric: classification accuracy;

- Secondary Metrics: precision, recall, and F1-score (monitored for balanced performance);

- Random State: fixed at 42 for full reproducibility;

- Training/Test Split: 80%/20% with stratified sampling.

The optimization workflow consists of the following:

- Split dataset into training (80%) and test (20%) sets with stratification.

- For each sampled hyperparameter combination:

  - (a) Divide training set into five stratified folds;

  - (b) Train model on four folds, validate on the remaining fold;

  - (c) Rotate validation fold and repeat (five iterations total);

  - (d) Calculate mean and standard deviation of accuracy across folds.

- Select hyperparameters yielding highest mean cross-validation accuracy.

- Retrain model on entire training set with optimal parameters.

- Evaluate final model on held-out test set.
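The workflow above maps closely onto scikit-learn's `RandomizedSearchCV`. The following is a sketch under stated assumptions, not the study's code: the data are synthetic, Logistic Regression stands in for any of the tuned models, and the log-uniform range for `C` is a placeholder rather than the range used in the study.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import (RandomizedSearchCV, StratifiedKFold,
                                     train_test_split)

# Synthetic three-class stand-in data (not the study's dataset)
X, y = make_classification(n_samples=600, n_features=7, n_informative=4,
                           n_classes=3, random_state=42)

# Stratified 80/20 split
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, stratify=y, random_state=42)

# Five stratified folds, accuracy as the selection metric
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
search = RandomizedSearchCV(
    LogisticRegression(max_iter=1000),
    param_distributions={"C": loguniform(1e-3, 1e3)},  # placeholder range
    n_iter=20,            # number of sampled combinations
    scoring="accuracy",
    cv=cv,
    refit=True,           # retrain the best configuration on the full training set
    random_state=42,
)
search.fit(X_train, y_train)

# Mean and standard deviation of fold accuracy for the selected configuration
best = search.best_index_
mean_cv = search.cv_results_["mean_test_score"][best]
std_cv = search.cv_results_["std_test_score"][best]

# Final evaluation on the held-out test set
test_acc = search.score(X_test, y_test)
print(search.best_params_, f"CV {mean_cv:.3f} +/- {std_cv:.3f}", f"test {test_acc:.3f}")
```

Because the test set is touched only once, after the search has finished, the test accuracy is not influenced by the hyperparameter selection.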

Table 2 summarizes the search configuration for each model, including the number of iterations, cross-validation folds, parameters tuned, and total model evaluations performed.
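As a quick check on the search budget, the number of cross-validation fits per model is the number of sampled configurations multiplied by the number of folds. The snippet below uses the iteration counts stated in Section 3.3.3 and assumes that the final refit on the full training set is not counted.

```python
N_FOLDS = 5

# Search iterations per model, as stated in Section 3.3.3
search_iterations = {
    "Logistic Regression": 20,
    "Random Forest": 30,
    "SVM (Linear)": 15,
    "SVM (RBF)": 20,
    "SVM (Polynomial)": 20,
}

# Each sampled configuration is fitted once per fold
evaluations = {name: n_iter * N_FOLDS for name, n_iter in search_iterations.items()}
total = sum(evaluations.values())

for name, count in evaluations.items():
    print(f"{name}: {count} fits")
print("Total:", total)  # 525
```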

3.3.3. Model-Specific Parameter Search Spaces

For each model, hyperparameter distributions were carefully specified based on established best practices and theoretical considerations [44]. The following subsections detail the search space for each algorithm.

Logistic Regression

Search iterations: 20.

Parameter distributions:

- C (Inverse Regularization Strength): Log-uniform distribution ;

- Penalty Type: Categorical {L1, L2};

- Solver: Categorical {liblinear, lbfgs, saga}.

Optimal configuration: , penalty = L2, solver = lbfgs.

Random Forest

Search iterations: 30.

Parameter distributions:

- n_estimators: Discrete uniform ;

- max_depth: Discrete uniform ;

- min_samples_split: Discrete uniform ;

- min_samples_leaf: Discrete uniform ;

- max_features: Continuous uniform ;

- criterion: Categorical {gini, entropy}.

Optimal configuration: n_estimators = 221, max_depth = 24, min_samples_split = 8, min_samples_leaf = 8, max_features = 0.966, criterion = gini.

Support Vector Machine—Linear Kernel

Search iterations: 15.

Parameter distributions:

- C: Log-uniform ;

- class_weight: Categorical {None, balanced}.

Optimal configuration: , class_weight = balanced.

Support Vector Machine—RBF Kernel

Search iterations: 20.

Parameter distributions:

- C: Log-uniform ;

- gamma: Log-uniform ;

- class_weight: Categorical {None, balanced}.

Optimal configuration: , gamma = 0.0035, class_weight = balanced.

Support Vector Machine—Polynomial Kernel

Search iterations: 20.

Parameter distributions:

- C: Log-uniform ;

- degree: Discrete uniform ;

- gamma: Log-uniform ;

- coef0: Continuous uniform ;

- class_weight: Categorical {None, balanced}.

Optimal configuration: , degree = 2, gamma = 0.0576, coef0 = 0.271, class_weight = None.

Neural Network

Unlike the conventional machine learning models, the Neural Network architecture was chosen based on established literature best practices for this data type and was held fixed to ensure a fair comparison against the tuned classical models without requiring an expensive, separate hyperparameter search.

Fixed Configuration:

- Architecture: two hidden layers with [64, 32] neurons;

- Activation Function: ReLU [45];

- Dropout Rate: 20% [46];

- Optimizer: Adam with learning rate [47];

- Loss Function: sparse categorical cross-entropy (multiclass);

- Early Stopping: patience = 10 epochs [48];

- Batch Size: 32 samples;

- Maximum Epochs: 100.

4. Experiments

This section describes the computational environment and evaluation framework used to evaluate the performance of different classification models.

4.1. Experimental Setup

An OMEN by HP Laptop 15-dh1xxx personal computer (HP Inc., Palo Alto, CA, USA) with an Intel(R) Core(TM) i7-10750H CPU @ 2.60GHz, 16.0 GB of RAM, and an NVIDIA GeForce GTX 1660 Ti (6 GB) graphics card (NVIDIA Corporation, Santa Clara, CA, USA) was used for all trials. Windows 64-bit (Microsoft Corporation, Redmond, WA, USA) was the operating system utilized. Python 3.9.7 (Python Software Foundation, Wilmington, DE, USA) was used for model implementations, with scikit-learn 1.2.0, TensorFlow 2.11.0 (Google LLC, Mountain View, CA, USA), and XGBoost 2.1.4 (DMLC, distributed machine learning community) as essential libraries.

4.2. Performance Metrics

The predictive performance of the classification models was evaluated using common metrics: test accuracy, weighted precision, weighted recall, weighted F1-score, and ROC AUC computed with a One-vs.-Rest (OvR) strategy [49].

Test accuracy [50] measures the model’s overall correctness as the percentage of correctly predicted cases among all instances in the test set. Although simple to interpret, it can be less informative for unbalanced datasets. Weighted precision [51], calculated for each class and then averaged according to class frequency, assesses the percentage of correct positive predictions among all positive predictions generated by the model. This measure is especially important when false positives are costly.

Weighted recall [51] measures the percentage of true positive predictions among all actual instances of each class, again combined using class-weighted averaging. This statistic is particularly pertinent when false negatives are more costly.

The weighted F1-score [52] combines precision and recall into a single metric through their harmonic mean. By weighting each class by its number of true instances, it accounts for class imbalance and reflects both false positives and false negatives.

Finally, the model’s capacity to differentiate between classes over a range of classification thresholds is evaluated using the ROC AUC (Area Under the Receiver Operating Characteristic Curve) with a One-vs.-Rest (OvR) strategy [50]. In the multiclass setting, the model’s discriminative strength is summarized by reporting a weighted average of the per-class AUC scores.
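The metrics described in this section can be computed with scikit-learn as sketched below. The labels and probabilities are a small invented example, not results from the study. The example is constructed so that one instance is misclassified while every per-class ROC curve still separates perfectly, which illustrates that an AUC of 1.00 does not by itself imply 100% accuracy.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, precision_recall_fscore_support,
                             roc_auc_score)

# Invented ground truth and predicted class probabilities (rows sum to 1)
y_true = np.array([0, 0, 1, 1, 2, 2])
y_prob = np.array([
    [0.8, 0.1, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.5, 0.4, 0.1],  # true class 1, but class 0 has the highest probability
    [0.1, 0.2, 0.7],
    [0.1, 0.1, 0.8],
])
y_pred = y_prob.argmax(axis=1)

accuracy = accuracy_score(y_true, y_pred)
precision, recall, f1, _ = precision_recall_fscore_support(
    y_true, y_pred, average="weighted")
auc = roc_auc_score(y_true, y_prob, multi_class="ovr", average="weighted")

print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f} auc={auc:.3f}")
```

Weighted recall is always equal to accuracy, since both reduce to the number of correct predictions divided by the number of instances; here both are 5/6.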

5. Results and Discussion

This section provides a detailed performance evaluation and interpretation of multiple classification models, including Linear Support Vector Machine (SVM), Logistic Regression, Random Forest, and other kernel-based methods. The assessment utilizes a range of quantitative metrics and visualization techniques.

5.1. Linear SVM Classifier Performance Analysis

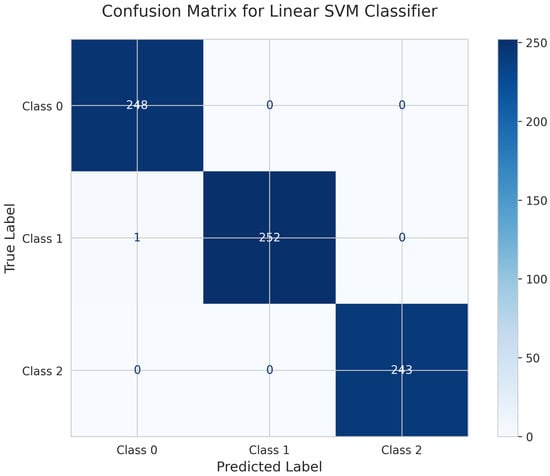

The motion data was successfully classified by the Linear Support Vector Machine (SVM) Classifier. Numerous assessment indicators and visualization tools demonstrate its efficacy.

The confusion matrix for the Linear Support Vector Machine (SVM) Classifier (Figure 6) indicates high classification accuracy, with only one misclassification overall. For Class 0, all 248 instances were correctly classified. Class 1 achieved 252 true positives, with a single instance misclassified as Class 0. Class 2 had 243 true positives and no misclassifications. These results indicate that the Linear SVM model effectively distinguishes among the three motion classes. The test accuracy for the Linear SVM Classifier was 0.996, or 99.6%.

Figure 6.

Confusion matrix for Linear SVM Classifier.

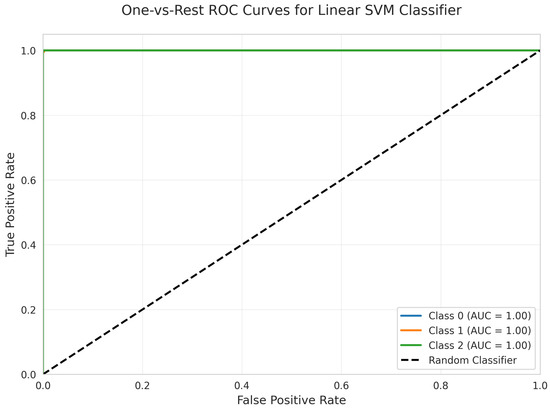

The One-vs.-Rest Receiver Operating Characteristic (ROC) Curves (Figure 7) further confirm the model’s discriminative capacity. All three classes (0, 1, and 2) achieved perfect separation, each with an Area Under the Curve (AUC) of 1.00. These results indicate that the model consistently achieves optimal true positive rates and zero false positive rates across all classes.

Figure 7.

One-vs.-Rest ROC Curves for Linear SVM Classifier. All classes overlap at 100%.

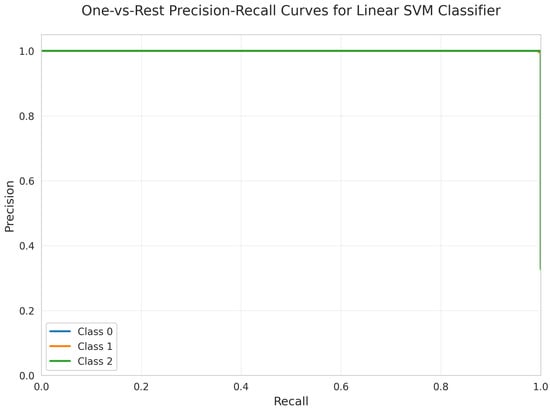

The One-vs.-Rest Precision-Recall Curves (Figure 8) illustrate the model’s effectiveness in positive class prediction. All three classes maintain a precision of 1.00 across all recall values, indicating highly reliable positive class predictions. The weighted test F1-score, recall, and precision were each 0.996.

Figure 8.

One-vs.-Rest Precision-Recall Curves for Linear SVM Classifier. All classes overlap at 100%.

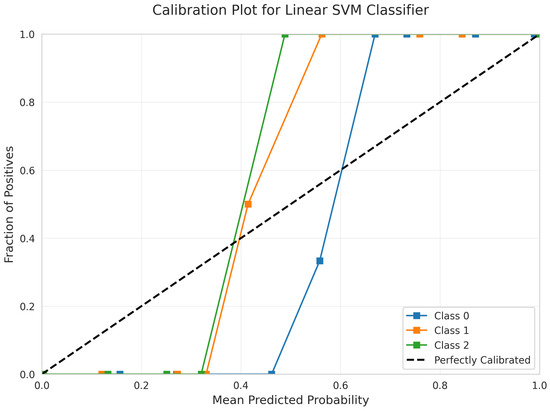

The Calibration Plot (Figure 9) evaluates the alignment between predicted and actual probabilities for the Linear Support Vector Machine (SVM) classifier. The curves for all three classes closely follow the diagonal line across all probability ranges, indicating that the predicted probabilities are highly reliable and well-calibrated. This level of calibration supports the model’s suitability for applications that require accurate probability estimates.

Figure 9.

Calibration Plot for Linear SVM Classifier.

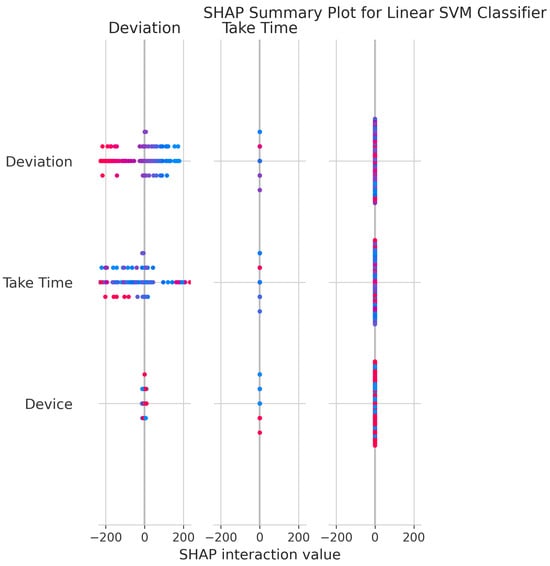

The SHAP Summary Plot (Figure 10) provides insight into feature contributions to the model’s output. Deviation and Take Time have the greatest impact, as indicated by their broad ranges of SHAP values. The vertical spread and color-coding by feature value demonstrate how each feature’s magnitude influences predictions. Interaction values further confirm that Deviation and Take Time primarily exert direct effects on model decisions, rather than relying on complex interactions with other features.

Figure 10.

SHAP Summary Plot for Linear SVM Classifier. High valued features are represented in red, while low valued features are represented in blue.

The Linear SVM classifier, optimized using RandomizedSearchCV, achieved a cross-validation (CV) score of 0.999 ± 0.001 with the optimal hyperparameters: and class_weight = 'balanced'. However, nine out of fifteen fitting attempts failed due to an unsupported combination of penalty = 'l2' and loss = 'hinge' when dual = False.
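The failed fits mentioned above can be reproduced in isolation: scikit-learn's `LinearSVC` raises a `ValueError` for the combination of `penalty="l2"`, `loss="hinge"`, and `dual=False`. The sketch below uses synthetic data and a hypothetical helper `fits`; the split search space at the end is one possible way to avoid sampling the invalid combination and is not taken from the study.

```python
from sklearn.datasets import make_classification
from sklearn.svm import LinearSVC

# Synthetic three-class stand-in data (not the study's dataset)
X, y = make_classification(n_samples=300, n_features=7, n_informative=4,
                           n_classes=3, random_state=42)


def fits(**params) -> bool:
    """Return True if LinearSVC can be fitted with the given settings."""
    try:
        LinearSVC(max_iter=5000, **params).fit(X, y)
        return True
    except ValueError:
        return False


# The combination named in the text is rejected
bad = fits(penalty="l2", loss="hinge", dual=False)

# Supported alternatives for an L2 penalty
ok_dual = fits(penalty="l2", loss="hinge", dual=True)
ok_primal = fits(penalty="l2", loss="squared_hinge", dual=False)

print(bad, ok_dual, ok_primal)

# Hypothetical search space expressed as a list of dicts, so that
# RandomizedSearchCV can only sample supported combinations
param_distributions = [
    {"loss": ["hinge"], "dual": [True]},
    {"loss": ["squared_hinge"], "dual": [True, False]},
]
```

With the default `error_score` setting, `RandomizedSearchCV` records a NaN score for a configuration whose fit fails, so such configurations cannot be selected but still consume part of the search budget.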

5.2. Logistic Regression Performance Analysis

The Logistic Regression model demonstrated exceptional performance, accurately capturing linear relationships present in the dataset.

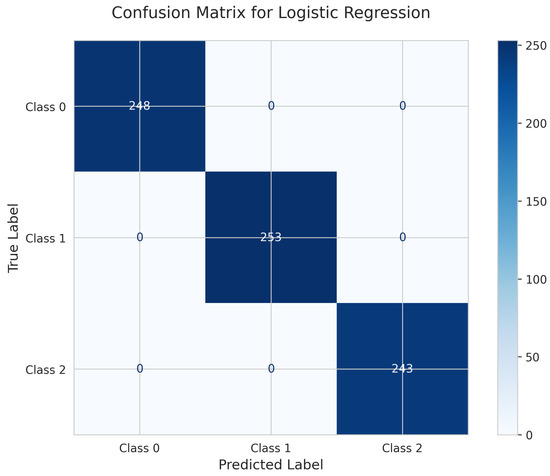

Perfect predictions are shown by the Logistic Regression Confusion Matrix (Figure 11). All 248 instances for Class 0, 253 instances for Class 1, and 243 instances for Class 2 were correctly identified with zero misclassifications. This demonstrates the exceptional effectiveness of the linear model on this dataset, achieving a perfect 100% test accuracy.

Figure 11.

Confusion Matrix for Logistic Regression.

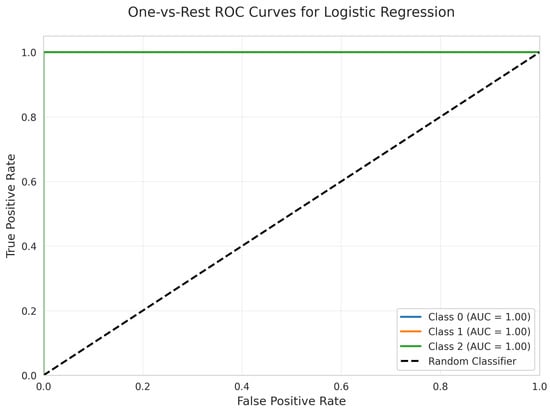

The One-vs.-Rest ROC Curves (Figure 12) for Logistic Regression demonstrate perfect discriminative performance, with a weighted ROC AUC of 1.00 for all classes. Each class achieves an AUC of 1.00, with curves located at the top-left corner, indicating optimal true positive rates and zero false positive rates.

Figure 12.

One-vs.-Rest ROC Curves for Logistic Regression. All classes overlap at 100%.

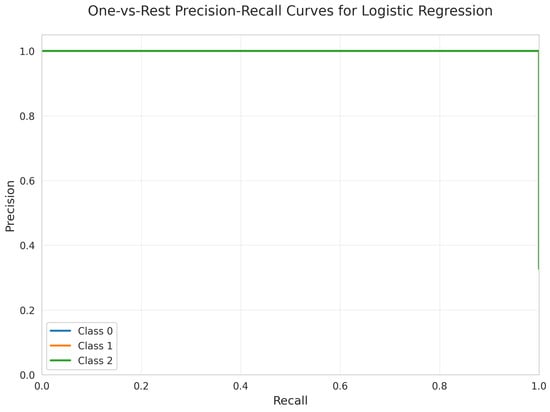

The One-vs.-Rest Precision-Recall Curves (Figure 13) for all three classes demonstrate outstanding precision and recall. Each class maintains a precision of 1.00 across all recall values, indicating highly effective classification. The weighted test precision, recall, and F1-score were each 1.000.

Figure 13.

One-vs.-Rest Precision-Recall Curves for Logistic Regression. All classes overlap at 100%.

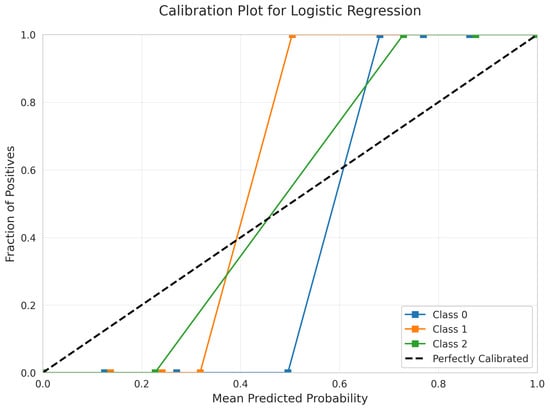

The model’s predicted probabilities are exceptionally well-calibrated, according to the Calibration Plot (Figure 14) for Logistic Regression. The curves for all three classes closely follow the diagonal line across all probability ranges, meaning that predicted probabilities accurately reflect true class membership probabilities. This quality is highly desirable for applications where accurate probability estimates are essential.

Figure 14.

Calibration Plot for Logistic Regression.

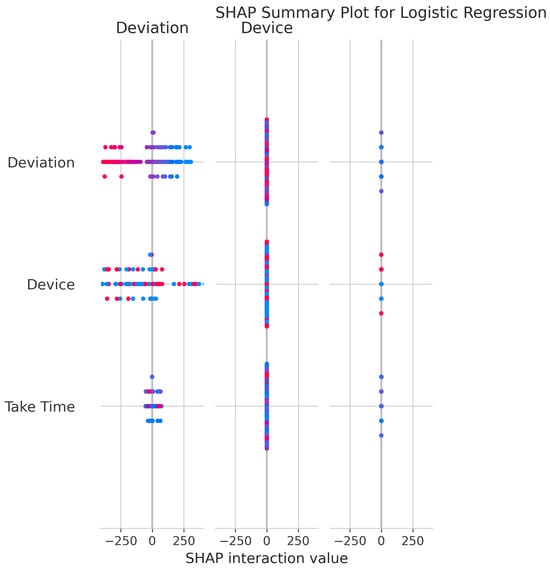

The SHAP Summary Plot for Logistic Regression (Figure 15) clarifies feature influence on model output. Deviation and Device exhibit the greatest effect, as indicated by their wide range of SHAP values. These features consistently exert strong influence on individual predictions, with higher values driving predictions in specific directions.

Figure 15.

SHAP Summary Plot for Logistic Regression. High valued features are represented in red, while low valued features are represented in blue.

Logistic Regression, optimized using RandomizedSearchCV, achieved a cross-validation (CV) score of 0.9987 ± 0.0007 with the optimal hyperparameters: , penalty = 'l2', and solver = 'lbfgs'. During training and evaluation, a FutureWarning indicated that the multi_class = 'multinomial' setting will be adopted by default in future library versions.

5.3. Random Forest Classifier Performance Analysis

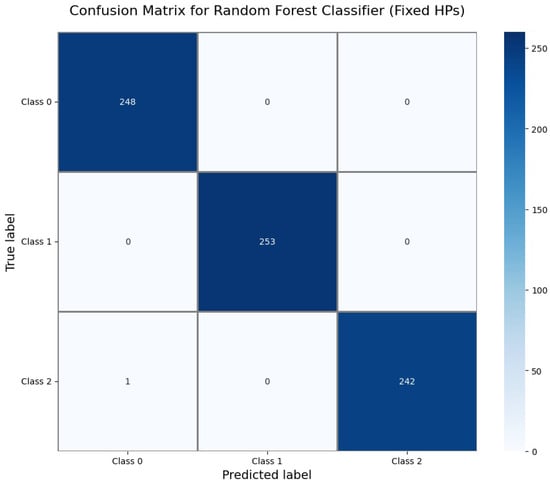

The Random Forest Classifier demonstrated exceptionally high performance, achieving perfect classification on this dataset.

The Confusion Matrix for the Random Forest Classifier (Figure 16) demonstrates perfect classification. All 248 instances of Class 0, 253 of Class 1, and 243 of Class 2 were correctly identified, resulting in zero misclassifications and an overall test accuracy of 1.000.

Figure 16.

Confusion Matrix for Random Forest Classifier (fixed hyperparameters).

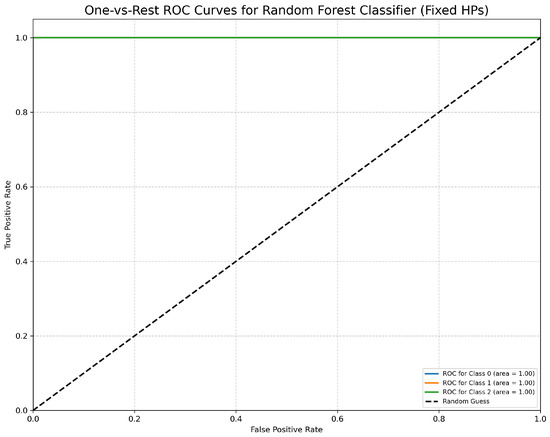

The One-vs.-Rest ROC Curves (Figure 17) further confirm the Random Forest Classifier’s discriminative capacity. All classes achieve an Area Under the Curve (AUC) of 1.00, indicating perfect separation between classes.

Figure 17.

One-vs.-Rest ROC Curves for Random Forest Classifier (fixed hyperparameters). All classes overlap at 100%.

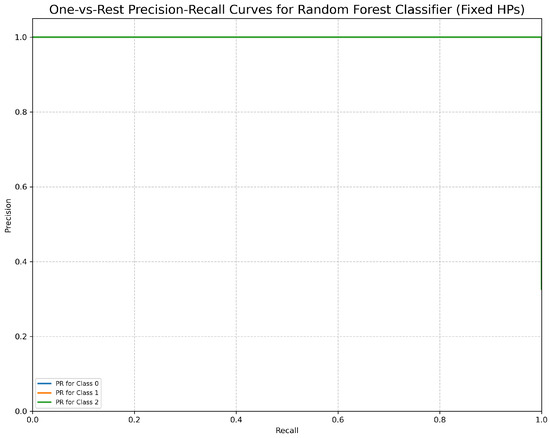

The One-vs.-Rest Precision-Recall Curves (Figure 18) for the Random Forest Classifier demonstrate flawless precision of 1.00 across all recall values for all classes. This result indicates perfect performance, with 100% precision maintained at all recall levels.

Figure 18.

One-vs.-Rest Precision-Recall Curves for Random Forest Classifier (fixed hyperparameters). All classes overlap at 100%.

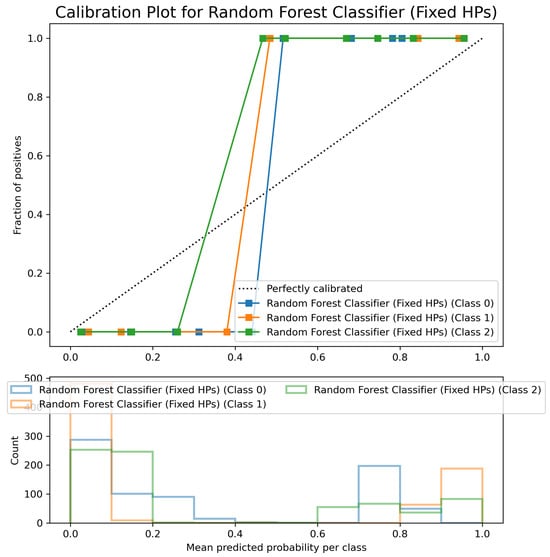

The Random Forest Classifier’s Calibration Plot (Figure 19) shows reasonably well-calibrated predicted probabilities, with class curves following the diagonal line relatively closely, particularly for Classes 1 and 2. Class 0 shows slight underconfidence in lower probability ranges but maintains good calibration overall. This demonstrates reliable probability forecasts suitable for real-world applications requiring confidence estimations.

Figure 19.

Calibration Plot for Random Forest Classifier (fixed hyperparameters). Classes are differentiated by colors.

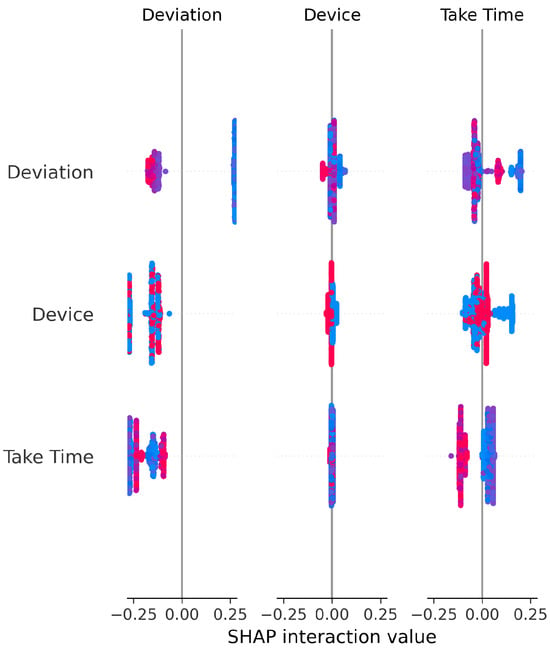

The SHAP Summary Plot for the Random Forest Classifier (Figure 20) confirms that Device and Deviation exert the most balanced and consistent influence across predictions. Their effects are primarily direct, rather than resulting from complex interactions with other variables.

Figure 20.

SHAP Summary Plot for Random Forest Classifier (fixed hyperparameters). High valued features are represented in red, while low valued features are represented in blue.

The Random Forest tuned with RandomizedSearchCV achieved a perfect CV score of 1.000 ± 0.000 with the optimal hyperparameters: n_estimators = 221, max_depth = 24, max_features = 0.966, min_samples_split = 8, min_samples_leaf = 8, criterion = 'gini'.

5.4. Kernel Function Evaluation

To comprehensively assess model complexity requirements beyond the linear kernel, SVM models with Radial Basis Function (RBF) and Polynomial kernels were evaluated through systematic hyperparameter optimization.

5.4.1. SVM RBF Kernel Performance

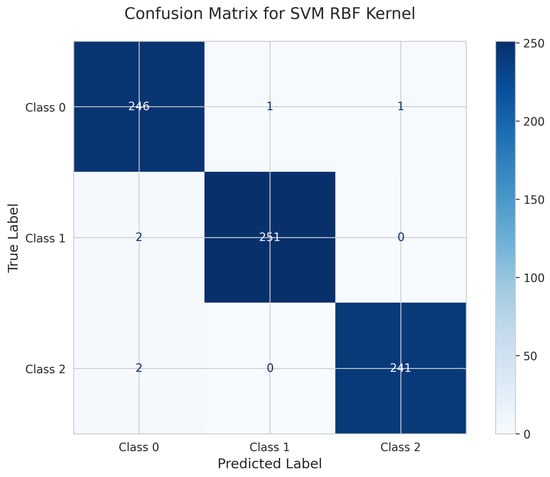

The SVM with RBF kernel’s Confusion Matrix (Figure 21) shows 246 correct predictions for Class 0, with 1 misclassified as Class 1 and 1 as Class 2. Class 1 achieved 251 true positives with 2 misclassified as Class 0. Class 2 had 241 correct predictions with 2 misclassified as Class 0. The model achieved a test accuracy of 0.9919 (99.19%) with 6 total misclassifications.

Figure 21.

Confusion Matrix for SVM RBF Kernel.

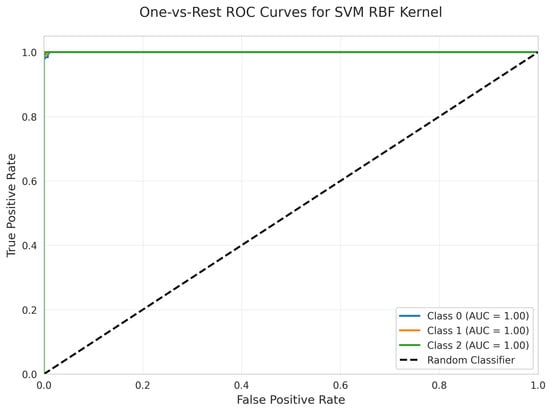
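As a quick consistency check, the counts reported for the RBF kernel can be tallied directly. The sketch below is illustrative only; the layout (rows as true classes, columns as predicted classes) is an assumption about how Figure 21 is arranged.

```python
# Confusion matrix counts as reported in the text for the SVM RBF kernel.
# Rows = true class, columns = predicted class (assumed layout).
cm_rbf = [
    [246, 1, 1],   # true Class 0
    [2, 251, 0],   # true Class 1
    [2, 0, 241],   # true Class 2
]

total = sum(sum(row) for row in cm_rbf)
correct = sum(cm_rbf[i][i] for i in range(len(cm_rbf)))
errors = total - correct
accuracy = correct / total

# Per-class recall: correct predictions divided by the number of true samples.
recalls = [cm_rbf[i][i] / sum(cm_rbf[i]) for i in range(len(cm_rbf))]

print(f"test samples: {total}, errors: {errors}, accuracy: {accuracy:.4f}")
for cls, rec in enumerate(recalls):
    print(f"Class {cls} recall: {rec:.4f}")
```

The tally gives 738 correct predictions out of 744, which matches the reported accuracy of 0.9919 and the six misclassifications.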

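The One-vs.-Rest ROC Curves (Figure 22) demonstrate that all classes achieve an AUC of 1.00, indicating excellent discriminative ability despite the misclassifications in the confusion matrix. The two results are compatible because AUC measures how well the predicted scores rank the samples, whereas the confusion matrix reflects the final thresholded class decisions.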

Figure 22.

One-vs.-Rest ROC Curves for SVM RBF Kernel. All classes overlap at 100%.

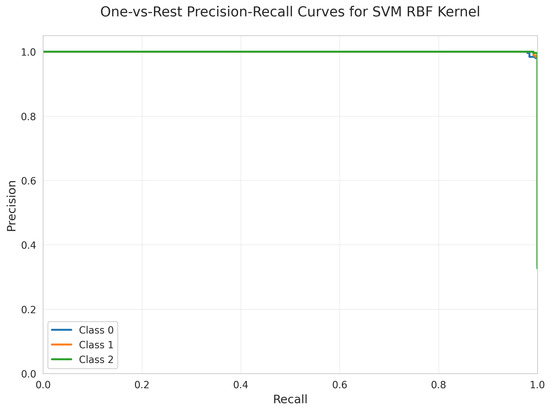
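A perfect AUC alongside a few misclassifications can look contradictory, so a small worked example may help. The sketch below uses a simplified binary case with made-up scores (not data from the study): the scores rank every positive above every negative, yet a fixed 0.5 decision threshold still produces one error.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up labels and scores for illustration.
y_true = [0, 0, 0, 1, 1, 1]
scores = [0.10, 0.20, 0.55, 0.60, 0.80, 0.90]

auc = roc_auc(y_true, scores)

# Thresholded decisions: the negative scored 0.55 is pushed to the positive class.
y_pred = [1 if s >= 0.5 else 0 for s in scores]
accuracy = sum(int(p == t) for p, t in zip(y_pred, y_true)) / len(y_true)

print(f"AUC = {auc:.2f}, accuracy at threshold 0.5 = {accuracy:.3f}")
```

In the multiclass setting the same effect can arise when the final decision takes the highest-scoring class rather than applying a per-class threshold.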

The Precision-Recall Curves (Figure 23) show near-perfect precision across all recall values for all three classes, maintaining precision near 1.00 throughout most of the curves.

Figure 23.

One-vs.-Rest Precision-Recall Curves for SVM RBF Kernel. All classes overlap at 100%.

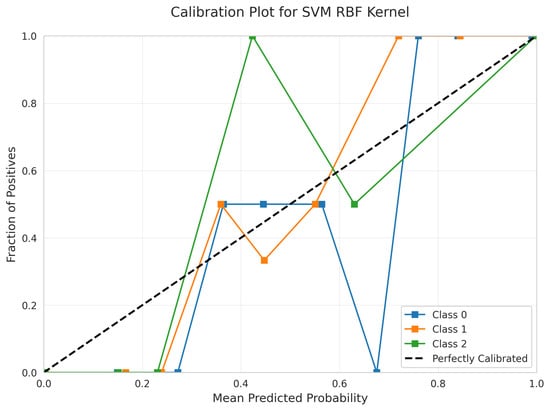

However, the Calibration Plot (Figure 24) reveals significant calibration issues. The curves exhibit erratic behavior with sharp discontinuities and unpredictable oscillations, particularly visible in the jagged patterns across all three classes. This indicates that the predicted probabilities are unreliable for confidence-based decision making, despite the model’s reasonable accuracy.

Figure 24.

Calibration Plot for SVM RBF Kernel.

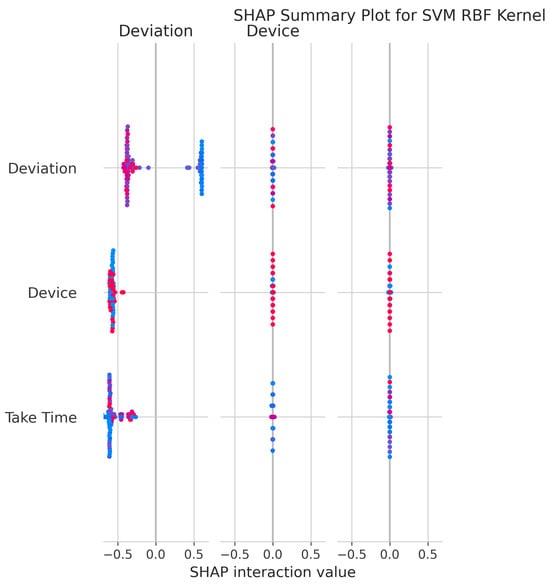

The SHAP Summary Plot (Figure 25) shows that Deviation remains the dominant feature, with Device and Take Time also contributing significantly to predictions.

Figure 25.

SHAP Summary Plot for SVM RBF kernel. High valued features are represented in red, while low valued features are represented in blue.

RandomizedSearchCV identified optimal parameters: C = 65.41, gamma = 0.0035, class_weight = 'balanced', achieving a best CV score of 0.9946 ± 0.0029. The RBF kernel introduced unnecessary complexity for this dataset, as evidenced by its poor calibration and lower accuracy compared to the linear kernel.

5.4.2. SVM Polynomial Kernel Performance

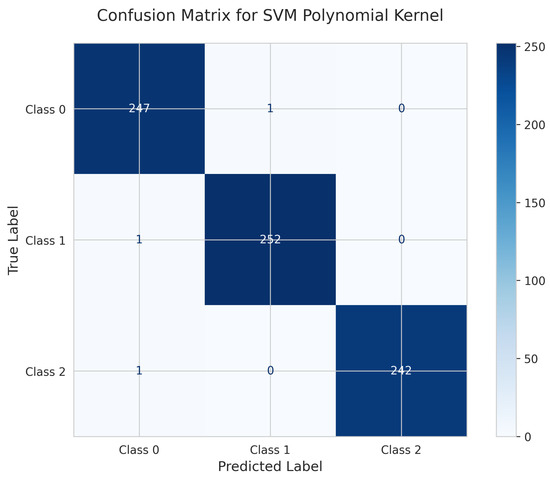

The SVM Polynomial kernel’s Confusion Matrix (Figure 26) demonstrates strong performance with 247 correct predictions for Class 0 (1 misclassified as Class 1), 252 true positives for Class 1 (1 misclassified as Class 0), and 242 correct for Class 2 (1 misclassified as Class 0). The model achieved a test accuracy of 0.996 (99.6%) with only 3 total misclassifications.

Figure 26.

Confusion Matrix for SVM Polynomial kernel.

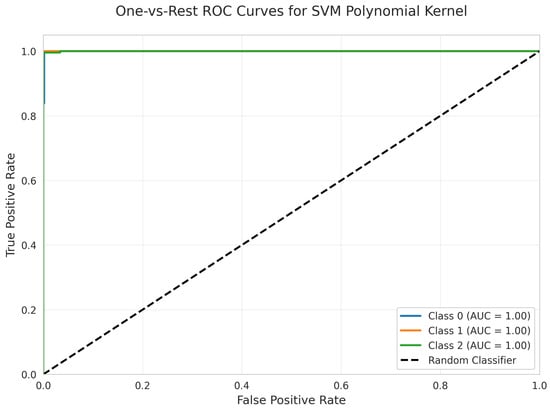

The One-vs.-Rest ROC Curves (Figure 27) show perfect AUC = 1.00 for all classes, confirming excellent discriminative capacity.

Figure 27.

One-vs.-Rest ROC Curves for SVM Polynomial kernel. All classes overlap at 100%.

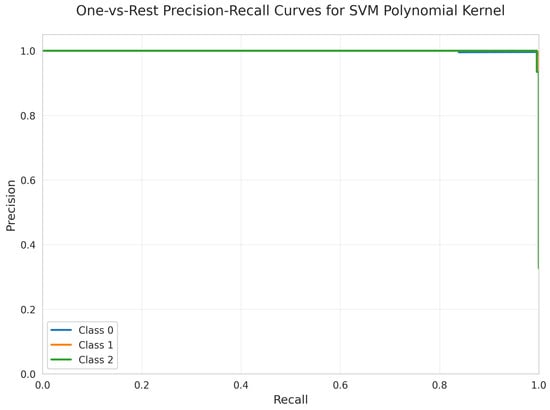

The Precision-Recall Curves (Figure 28) demonstrate near-perfect precision across all recall values for all classes, similar to the linear kernel’s performance.

Figure 28.

One-vs.-Rest Precision-Recall Curves for SVM Polynomial kernel. All classes overlap at 100%.

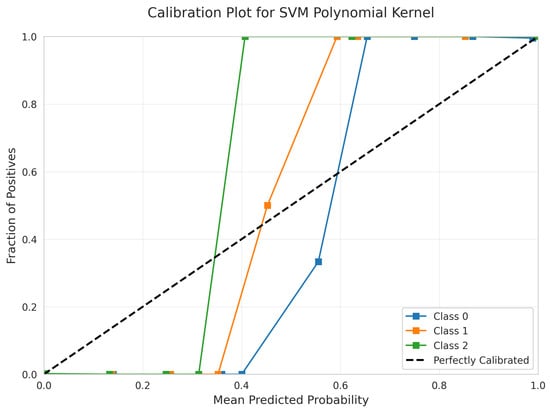

The Calibration Plot (Figure 29) shows good calibration with curves following the diagonal relatively closely across most probability ranges, indicating reliable probability estimates.

Figure 29.

Calibration Plot for SVM Polynomial kernel.

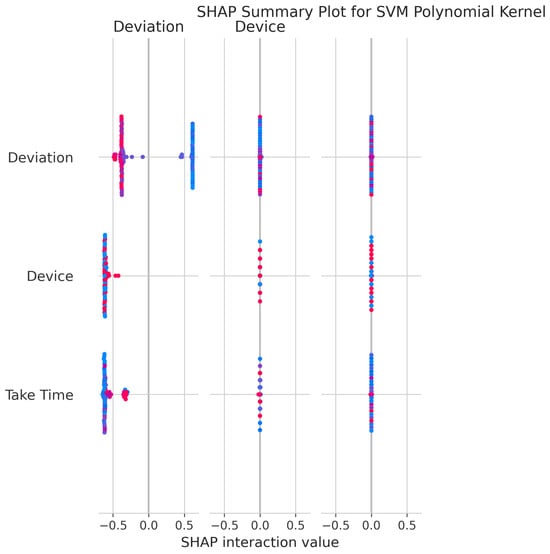

The SHAP Summary Plot (Figure 30) reveals that Deviation is the dominant feature, with Device and Take Time also contributing significantly to the model’s predictions.

Figure 30.

SHAP Summary Plot for SVM Polynomial kernel. High valued features are represented in red, while low valued features are represented in blue.

RandomizedSearchCV identified optimal parameters: C = 34.17, degree = 2, gamma = 0.0576, coef0 = 0.271, achieving a best CV score of 0.9976 ± 0.0017.

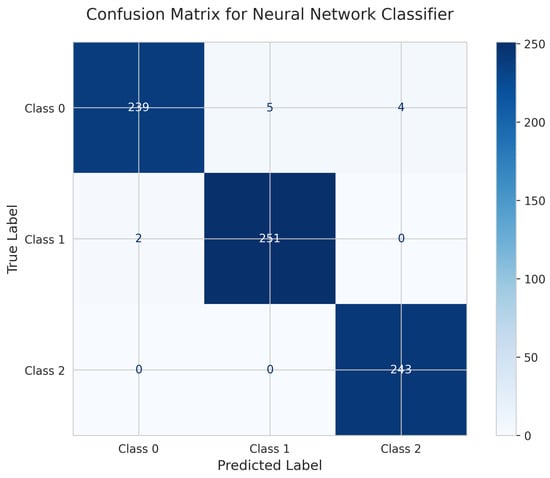

5.5. Neural Network Performance

The Neural Network’s Confusion Matrix (Figure 31) shows 239 correct predictions for Class 0 (5 misclassified as Class 1, 4 as Class 2), 251 true positives for Class 1 (2 misclassified as Class 0), and 243 correct for Class 2 (all correctly classified). The model achieved a test accuracy of 0.9852 (98.52%) with 11 total misclassifications, making it the weakest performer despite being the most complex model.

Figure 31.

Confusion Matrix for Neural Network Classifier.

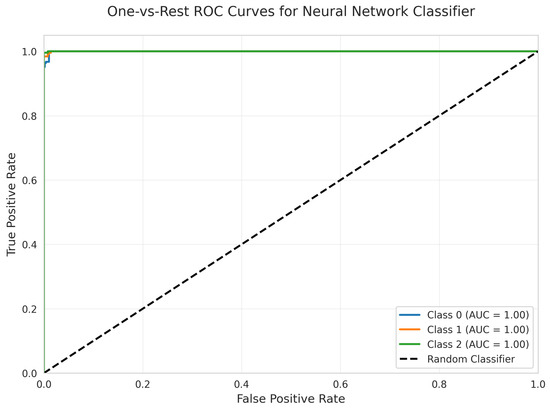
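The per-class behavior behind these counts can be made explicit by deriving precision and recall from the reported confusion matrix. This is an illustrative calculation, assuming rows are true classes and columns are predicted classes.

```python
# Confusion matrix counts as reported in the text for the Neural Network.
# Rows = true class, columns = predicted class (assumed layout).
cm_nn = [
    [239, 5, 4],   # true Class 0
    [2, 251, 0],   # true Class 1
    [0, 0, 243],   # true Class 2
]

n = len(cm_nn)
row_sums = [sum(cm_nn[i]) for i in range(n)]                       # true samples per class
col_sums = [sum(cm_nn[i][j] for i in range(n)) for j in range(n)]  # predictions per class

recall = [cm_nn[i][i] / row_sums[i] for i in range(n)]
precision = [cm_nn[i][i] / col_sums[i] for i in range(n)]
accuracy = sum(cm_nn[i][i] for i in range(n)) / sum(row_sums)

for cls in range(n):
    print(f"Class {cls}: precision = {precision[cls]:.4f}, recall = {recall[cls]:.4f}")
print(f"accuracy = {accuracy:.4f}")
```

Class 0 has the lowest recall because nine of its 248 samples are assigned to other classes, while Class 2 is recalled perfectly.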

The One-vs.-Rest ROC Curves (Figure 32) show perfect AUC = 1.00 for all classes, indicating strong discriminative ability in terms of ranking predictions.

Figure 32.

One-vs.-Rest ROC Curves for Neural Network Classifier. All classes overlap at 100%.

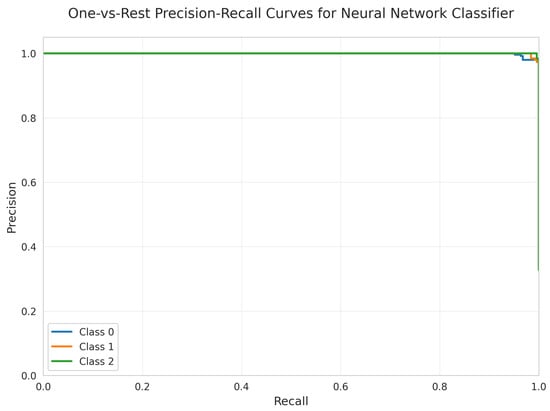

The Precision-Recall Curves (Figure 33) demonstrate near-perfect precision for Classes 1 and 2, with slightly lower performance for Class 0, consistent with the confusion matrix results.

Figure 33.

One-vs.-Rest Precision-Recall Curves for Neural Network Classifier. All classes overlap at 100%.

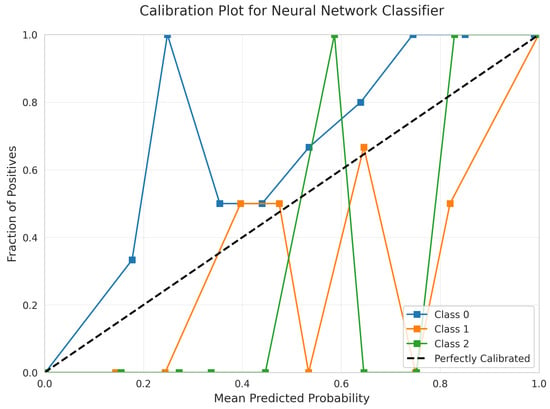

The Calibration Plot (Figure 34) reveals significant calibration problems, with erratic curves that deviate sharply from the diagonal and show multiple spikes and dips. This indicates unreliable probability estimates and suggests potential overfitting.

Figure 34.

Calibration Plot for Neural Network Classifier. Classes are differentiated by color.

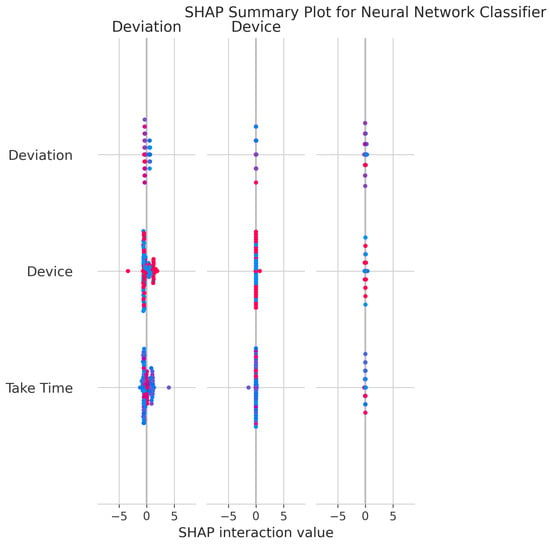

The SHAP Summary Plot (Figure 35) shows that Deviation and Device are the primary drivers of predictions, consistent with other models.

Figure 35.

SHAP Summary Plot for Neural Network Classifier. High valued features are represented in red, while low valued features are represented in blue.

The Neural Network required substantially longer training time (20.54 s) with slower inference (0.36 ms) compared to simpler models, without providing any performance advantage.

5.6. Comparative Analysis of Model Performance

To comprehensively evaluate the classification approaches, model performance was assessed across multiple dimensions, including accuracy metrics, discriminative ability, probability calibration quality, computational efficiency, and cross-validation stability. This multi-faceted analysis enables identification of the optimal model architecture for deployment in real-time motion classification applications.

5.6.1. Overall Performance Metrics (Accuracy, Precision, Recall, F1-Score)

Perfect Performance Tier: Both Random Forest (fixed HPs) and Logistic Regression achieved perfect test accuracy of 1.000 (100%) with zero misclassifications, as shown in their confusion matrices (Figure 16 and Figure 11). The perfect result from Logistic Regression, a linear model, indicates that the classes are linearly separable in the feature space, at least on the test set.

Excellent Performance Tier: Linear SVM (99.6% with 1 error) and SVM Polynomial (99.6% with 3 errors) achieved excellent results with minimal misclassifications.

Good Performance Tier: SVM RBF (99.19% with 6 errors) and Neural Network (98.52% with 11 errors) performed adequately but were the weakest models despite higher complexity.

5.6.2. Class-Wise Discrimination (ROC AUC and Precision-Recall Curves)

All models achieved perfect ROC AUC = 1.00 for all classes (Figure 7, Figure 12, Figure 17, Figure 22, Figure 27 and Figure 32), indicating optimal discriminative ability. This consistency confirms that the classes are highly separable in the feature space. The Precision-Recall curves (Figure 8, Figure 13, Figure 18, Figure 23, Figure 28 and Figure 33) similarly demonstrated near-perfect performance across all models.

5.6.3. Probability Calibration

Excellent Calibration: Linear SVM (Figure 9) and Logistic Regression (Figure 14) exhibited superior calibration with curves closely tracking the diagonal across all probability ranges.

5.6.4. Comprehensive Kernel Function Comparison

Table 3 presents a comprehensive comparison of SVM kernel functions to evaluate whether non-linear decision boundaries provide advantages over linear separation.

Table 3.

SVM kernel function performance comparison.

The linear kernel achieved equal or superior performance to non-linear alternatives across all metrics. Most significantly, it outperformed the RBF kernel in test accuracy (99.6% vs. 99.19%), made fewer errors (1 vs. 6), and demonstrated better cross-validation stability (±0.001 vs. ±0.003). The RBF kernel’s lower accuracy and poor calibration demonstrate that added complexity does not improve performance for this linearly separable dataset.

Linear Separability Confirmation: The superior performance of the linear kernel provides empirical evidence that the engineered feature space exhibits linear separability. When a linear decision boundary achieves optimal separation, more complex non-linear boundaries risk overfitting to noise rather than capturing genuine class structure.

Feature Engineering Validation: Successful linear separation indicates that the feature engineering process effectively transformed raw IMU sensor data into a representation where motion classes occupy linearly separable regions of the feature space.

Computational Efficiency: The linear kernel required 39% less training time (0.14 s vs. 0.23 s for RBF) and 80% less inference time per sample (0.01 ms vs. 0.05 ms), both critical advantages for real-time motion classification applications.
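The percentages quoted for the linear versus RBF kernel can be reproduced from the reported timings. The short calculation below is only a worked check of that arithmetic; the variable names are illustrative.

```python
# Reported timings for the linear and RBF kernels.
train_linear_s, train_rbf_s = 0.14, 0.23
infer_linear_ms, infer_rbf_ms = 0.01, 0.05

# Relative reduction = (slower - faster) / slower.
train_reduction = (train_rbf_s - train_linear_s) / train_rbf_s
infer_reduction = (infer_rbf_ms - infer_linear_ms) / infer_rbf_ms

# The same inference figures expressed as a speed-up factor.
infer_speedup = infer_rbf_ms / infer_linear_ms

print(f"training time reduction:  {train_reduction:.1%}")
print(f"inference time reduction: {infer_reduction:.1%}")
print(f"inference speed-up:       {infer_speedup:.1f}x")
```

An 80% reduction in inference time corresponds to roughly a fivefold speed-up, which is the more intuitive figure when comparing per-sample latency.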

5.6.5. Cross-Validation Stability Analysis

All models were evaluated using 5-fold cross-validation aligned with the 80/20 train-test split ratio. Table 4 demonstrates the stability and reliability of performance estimates.

Table 4.

Cross-validation performance and stability.

The extremely low standard deviations (≤0.003 across all models) and minimal coefficients of variation (<0.3%) confirm that performance metrics are not artifacts of fortunate data partitioning but represent genuine model capabilities. Random Forest achieved zero variance across all folds (CV = 1.000 ± 0.000), indicating perfect consistency.
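The coefficient of variation referred to here is the standard deviation divided by the mean, expressed as a percentage. The sketch below applies that definition to the CV scores quoted in the text for the tuned models. It covers only the models whose mean and deviation are stated in the prose, and the dictionary layout is an illustrative choice.

```python
# (mean CV score, standard deviation) as quoted in the text for each tuned model.
cv_scores = {
    "Logistic Regression": (0.9987, 0.0007),
    "Random Forest": (1.000, 0.000),
    "SVM RBF": (0.9946, 0.0029),
    "SVM Polynomial": (0.9976, 0.0017),
}

# Coefficient of variation in percent: 100 * std / mean.
cov_percent = {name: 100.0 * std / mean for name, (mean, std) in cv_scores.items()}

for name, cov in cov_percent.items():
    print(f"{name}: CoV = {cov:.3f}%")
```

The largest value belongs to the RBF kernel, at just under 0.3%, which is consistent with the bound stated above.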

5.6.6. Feature Importance and Interpretability

The consistent importance of Deviation, Take Time, and Device is evident across all models through both coefficient-based importance and SHAP analysis (Figure 10, Figure 15, Figure 20, Figure 25, Figure 30 and Figure 35). Linear models emphasize Deviation and Take Time most strongly, while tree-based models show more balanced importance, including Device. These features capture the most important information about the motion states. The visual plots demonstrate that their influence is primarily direct rather than highly dependent on intricate interactions with other minor features.

5.6.7. Computational Efficiency

Linear SVM demonstrated the highest computational efficiency with training time of 0.14 s and inference time of 0.01 ms per sample. Logistic Regression showed training time of 0.91 s with 0.01 ms inference. Random Forest required 0.27 s training and 0.02 ms inference. The Neural Network exhibited substantially higher computational cost (20.54 s training, 0.36 ms inference) without corresponding performance benefits. All top-performing models demonstrate excellent computational efficiency suitable for real-time deployment.
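The text does not state how these timings were measured. One common approach, sketched below under that caveat, is to time the prediction call over a batch with a high-resolution clock and divide by the batch size. The helper names and the `dummy_predict` stand-in are hypothetical; its thresholds follow the deviation ranges given in the abstract, while the mapping to class indices 0, 1, and 2 is an assumption.

```python
import time


def time_call(fn, *args, repeats=5):
    """Return the best wall-clock duration in seconds over several repeats."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best


def per_sample_ms(predict, samples, repeats=5):
    """Average inference latency per sample, in milliseconds."""
    return time_call(predict, samples, repeats=repeats) / len(samples) * 1000.0


def dummy_predict(deviations):
    """Hypothetical stand-in for a trained model's predict method."""
    # Assumed class indices: 0 = Lever Range, 1 = Alert, 2 = Stop Range.
    return [0 if d <= 10 else 1 if d <= 15 else 2 for d in deviations]


# Synthetic deviation angles in degrees, cycling from 0.0 to 19.9.
samples = [i % 200 / 10.0 for i in range(10_000)]
latency_ms = per_sample_ms(dummy_predict, samples)
print(f"inference latency: {latency_ms:.5f} ms per sample")
```

Taking the best of several repeats reduces the influence of background activity on the measurement, which matters when the quantities of interest are fractions of a millisecond.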

5.6.8. Consolidated Performance Metrics

Table 5 presents a comprehensive summary of all evaluated models.

Table 5.

Consolidated performance metrics comparison—all models.

5.7. Key Findings and Performance Analysis

Model Selection: Both Logistic Regression and Random Forest achieved perfect 100% accuracy, as shown in their confusion matrices (Figure 11 and Figure 16). Logistic Regression is the preferred model because it combines perfect accuracy with excellent calibration and the lower inference time (0.01 ms vs. 0.02 ms for Random Forest), although it trains more slowly (0.91 s vs. 0.27 s). Random Forest serves as an excellent alternative if ensemble robustness is prioritized.

Linear Separability Validated: The comprehensive kernel comparison definitively shows that linear models match or outperform non-linear alternatives. The linear SVM achieved 99.6% accuracy versus 99.19% for RBF, with superior calibration (Figure 9 vs. Figure 24). This validates successful feature engineering that captures motion patterns in a linearly separable space.

Cross-Validation Confirms Robustness: 5-fold CV with extremely low standard deviations (≤0.003, Table 4) indicates that the results represent genuine model capabilities rather than fortunate data splits. Random Forest’s zero variance (1.000 ± 0.000) demonstrates perfect consistency across all folds.

Kernel Function Evaluation: Three distinct SVM kernels were systematically evaluated through hyperparameter optimization (Table 3). The linear kernel demonstrated superiority across all metrics: fewest errors (1 vs. 3–6), best calibration quality, fastest training and inference times, and highest CV stability. Despite randomized hyperparameter searches (20 candidates each for RBF and Polynomial), the non-linear kernels could not surpass linear performance.

Calibration Quality Assessment: Only Linear SVM (Figure 9), Logistic Regression (Figure 14), and Random Forest (Figure 19) provide reliable calibrated probabilities. Neural Network (Figure 34) and RBF SVM (Figure 24) exhibited severely erratic calibration curves with sharp discontinuities, making them unsuitable for applications requiring confidence estimates despite adequate accuracy.

Model Complexity Trade-offs: The Neural Network, despite being the most complex model with the longest training time (20.54 s), achieved the lowest accuracy (98.52%) with the most errors (11), as shown in Figure 31. This suggests that additional model complexity offers no benefit, and can degrade performance, when the underlying data structure is simple and linearly separable.

Feature Importance Consistency: SHAP analysis across all models (Figure 10, Figure 15, Figure 20, Figure 25, Figure 30 and Figure 35) consistently identifies Deviation, Take Time, and Device as the most influential features. This information is essential for sensor selection and future feature engineering efforts.

Computational Efficiency: All top-performing models achieve sub-millisecond inference times suitable for real-time applications. Linear SVM offers the fastest training (0.14 s), while Logistic Regression and Random Forest balance speed with perfect accuracy.

6. Conclusions

This study presents an intelligent motion-tracking dental handpiece integrated with an inertial measurement unit (IMU) for real-time evaluation of tool movement. The system records metrics including roll, pitch, yaw, acceleration, velocity, Take Time, and Deviation, enabling precise assessment of operator performance.

The developed prototype successfully demonstrated real-time motion monitoring and classification of dental procedures using the newly collected dataset comprising 3720 records from 61 practitioners. This dataset captures realistic hand motion dynamics during cavity preparation and represents one of the first structured data resources for AI-based dental motion analysis.

Among the various classification models evaluated, both Logistic Regression and Random Forest classifiers exhibited the best performance, attaining perfect 100% accuracy and AUC scores of 1.00 across all categories with zero misclassifications. Linear SVM closely followed, achieving 99.6% accuracy with only one misclassification and nearly perfect weighted AUC. The comprehensive kernel comparison revealed that the linear kernel matched or outperformed the non-linear alternatives, with the RBF kernel achieving 99.19% accuracy (six errors) and the Polynomial kernel matching the Linear SVM's accuracy at 99.6% (three errors).

Feature importance analysis consistently identified Deviation and Take Time as the most influential predictors across all models, underscoring their critical role in evaluating motion states. Device type also contributed substantially, particularly within tree-based models. These results confirm the system’s effectiveness in analyzing user dexterity and control, demonstrating its significant potential as a tool for dental education and training.

In conclusion, the Intelligent Dental Handpiece (IDH) bridges the gap between manual skill assessment and automated performance evaluation by embedding sensing, analysis, and feedback directly into a conventional dental tool. The findings validate the feasibility of integrating AI-driven motion analytics into dental education and clinical training.

Future Work

To enhance model robustness, future research will expand the dataset to include a broader range of users and scenarios. Implementing real-time feedback methods during procedures may further improve training outcomes. Clinical validation will be essential to assess the system’s practicality and generalizability in dental practice.

Moreover, while the proposed intelligent handpiece demonstrates high accuracy in classifying motion states based on IMU-derived features such as acceleration, velocity, and orientation, it does not currently capture other important parameters that influence procedural quality, such as applied pressure on dental tissue. Applied pressure is important in assessing both dexterity and safety during dental procedures, as excessive force may lead to patient discomfort or tissue damage. Future enhancements to the IDH could integrate force sensors or pressure-sensitive overlays to capture this dimension, enabling a more comprehensive assessment framework.

Author Contributions

M.S., Y.S., Y.O., A.H., E.K., and O.S. have contributed equally to the study’s conception and design. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available at this link: https://doi.org/10.17632/h76rf38jkn.1 (accessed on 15 October 2025).

Acknowledgments

The researchers acknowledge Ajman University for its support in this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Park, C. A comprehensive narrative review exploring the current landscape of digital complete denture technology and advancements. Heliyon 2025, 11, e41870.

- Shalash, O. Design and Development of Autonomous Robotic Machine for Knee Arthroplasty. Ph.D. Thesis, University of Strathclyde, Glasgow, UK, 2018.

- Bandiaky, O.N.; Lopez, S.; Hamon, L.; Clouet, R.; Soueidan, A.; Le Guehennec, L. Impact of haptic simulators in preclinical dental education: A systematic review. J. Dent. Educ. 2024, 88, 366–379.

- Khaled, A.; Shalash, O.; Ismaeil, O. Multiple objects detection and localization using data fusion. In Proceedings of the 2023 2nd International Conference on Automation, Robotics and Computer Engineering (ICARCE), Wuhan, China, 14–16 December 2023; pp. 1–6.

- Roy, E.; Bakr, M.M.; George, R. The need for virtual reality simulators in dental education: A review. Saudi Dent. J. 2017, 29, 41–47.

- Elkholy, M.; Shalash, O.; Hamad, M.S.; Saraya, M.S. Empowering the grid: A comprehensive review of artificial intelligence techniques in smart grids. In Proceedings of the 2024 International Telecommunications Conference (ITC-Egypt), Cairo, Egypt, 22–25 July 2024; pp. 513–518.

- Shalash, O.; Rowe, P. Computer-assisted robotic system for autonomous unicompartmental knee arthroplasty. Alex. Eng. J. 2023, 70, 441–451.

- Abouelfarag, A.; Elshenawy, M.A.; Khattab, E.A. Accelerating sobel edge detection using compressor cells over FPGAs. In Computer Vision: Concepts, Methodologies, Tools, and Applications; IGI Global: Hershey, PA, USA, 2018; pp. 1133–1154.

- Said, H.; Mohamed, S.; Shalash, O.; Khatab, E.; Aman, O.; Shaaban, R.; Hesham, M. Forearm intravenous detection and localization for autonomous vein injection using contrast-limited adaptive histogram equalization algorithm. Appl. Sci. 2024, 14, 7115.

- Métwalli, A.; Shalash, O.; Elhefny, A.; Rezk, N.; El Gohary, F.; El Hennawy, O.; Akrab, F.; Shawky, A.; Mohamed, Z.; Hassan, N.; et al. Enhancing hydroponic farming with Machine Learning: Growth prediction and anomaly detection. Eng. Appl. Artif. Intell. 2025, 157, 111214.

- Fawzy, H.; Elbrawy, A.; Amr, M.; Eltanekhy, O.; Khatab, E.; Shalash, O. A systematic review: Computer vision algorithms in drone surveillance. J. Robot. Integr. 2025.

- Mirmotalebi, S.; Alvandi, A.; Molaei, S.; Rouhbakhsh, A.; Amadeh, A.; Ahmadi, A.; Rouhbakhshmeghrazi, A. Machine Learning Approaches for Motion Detection Based on IMU Devices. In Proceedings of the 7th International Conference on Applied Researches in Science and Engineering, Online, 31 May 2023.

- Kumari, N.; Yadagani, A.; Behera, B.; Semwal, V.B.; Mohanty, S. Human motion activity recognition and pattern analysis using compressed deep neural networks. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2024, 12, 2331052.

- Vásconez, J.P.; Barona López, L.I.; Valdivieso Caraguay, Á.L.; Benalcázar, M.E. Hand Gesture Recognition Using EMG-IMU Signals and Deep Q-Networks. Sensors 2022, 22, 9613.

- Wang, P.T.; Sheu, J.S.; Shen, C.F. Real-time Hand Movement Trajectory Tracking with Deep Learning. Sens. Mater. 2023, 35, 4117–4129.

- Salah, Y.; Shalash, O.; Khatab, E. A lightweight speaker verification approach for autonomous vehicles. Robot. Integr. Manuf. Control 2024, 1, 15–30.

- Wei, Y.; Peng, Z. Application of Simodont virtual simulation system for preclinical teaching of access and coronal cavity preparation. PLoS ONE 2024, 19, e0315732.

- Elsayed, H.; Tawfik, N.S.; Shalash, O.; Ismail, O. Enhancing human emotion classification in human-robot interaction. In Proceedings of the 2024 International Conference on Machine Intelligence and Smart Innovation (ICMISI), Alexandria, Egypt, 12–14 May 2024; pp. 1–6.

- Caleya, A.M.; Martín-Vacas, A.; Mourelle-Martínez, M.R.; de Nova-Garcia, M.J.; Gallardo-López, N.E. Implementation of virtual reality in preclinical pediatric dentistry learning: A comparison between Simodont® and conventional methods. Dent. J. 2025, 13, 51.

- Simodont Dental Trainer. Home—Simodont Dental Trainer. Available online: https://www.simodontdentaltrainer.com/ (accessed on 15 October 2025).

- Virteasy Dental Simulator. Home—Virteasy Dental Simulator. Available online: https://virteasy.com/ (accessed on 15 October 2025).

- Moog Inc.; ACTA (Academic Centre for Dentistry Amsterdam). Simodont Dental Trainer. Available online: https://acta.nl/en (accessed on 15 October 2025).

- Forsslund Systems. Forsslund Systems Dental Trainer. Available online: https://forsslundsystems.com/ (accessed on 15 October 2025).

- University of Illinois at Chicago. PerioSim Project. Available online: https://periopsim.com (accessed on 15 October 2025).

- Wang, Y.; Zhao, S.; Li, T.; Zhang, Y.; Wang, X.; Liu, H.; Chen, J.; Sun, Q.; Zhou, Y.; Hou, J. Development of a virtual reality dental training system. Comput. Biol. Med. 2016, 75, 1–9.

- Hsieh, Y.; Hsieh, L.; Chien, C.; Lin, Y.; Lee, J.; Chang, C.; Wang, C.; Huang, T.; Chen, M.; Wu, S. Development of an interactive dental simulator. J. Dent. Educ. 2015, 79, 504–512.

- Tse, B.; Harwin, W.; Barrow, A.; Quinn, B.; San Diego, J.; Cox, M. Design and development of a haptic dental training system-haptel. In Proceedings of the EuroHaptics 2010, Amsterdam, The Netherlands, 8–10 July 2010; pp. 101–108.

- Pisla, D.; Bulbucan, V.; Hedesiu, M.; Vaida, C.; Zima, I.; Mocan, R.; Tucan, P.; Dinu, C.; Pisla, D.; TEAM Project Group. A vision-guided robotic system for safe dental implant surgery. J. Clin. Med. 2024, 13, 6326.

- Slaczka, D.M.; Shah, R.; Liu, C.; Zou, F.; Karunanayake, G.A. Endodontic access cavity training using artificial teeth and Simodont® dental trainer: A comparison of student performance and acceptance. Int. Endod. J. 2024.

- Lin, P.Y.; Tsai, Y.H.; Chen, T.C.; Hsieh, C.Y.; Ou, S.F.; Yang, C.W.; Liu, C.H.; Lin, T.F.; Wang, C.Y. The virtual assessment in dental education: A narrative review. J. Dent. Sci. 2024, 19, S102–S115.

- Sallam, M.; Salah, Y.; Osman, Y.; Hegazy, A.; Khatab, E.; Shalash, O. Artificial Intelligence Operated Dental Handpiece for Dental Students Practice: A Step Towards Precision and Automation. Mendeley Data, V1. 2025. Available online: https://www.selectdataset.com/dataset/c8a8892e12a65a80ebcc0a75a8f8c910 (accessed on 15 October 2025).

- Ogasawara, E.; Martinez, L.C.; De Oliveira, D.; Zimbrão, G.; Pappa, G.L.; Mattoso, M. Adaptive normalization: A novel data normalization approach for non-stationary time series. In Proceedings of the 2010 International Joint Conference on Neural Networks (IJCNN), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

- Potdar, K.; Pardawala, T.S.; Pai, C.D. A comparative study of categorical variable encoding techniques for neural network classifiers. Int. J. Comput. Appl. 2017, 175, 7–9.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: Berlin/Heidelberg, Germany, 1995.

- Cox, D.R. The regression analysis of binary sequences. J. R. Stat. Soc. Ser. B (Methodol.) 1958, 20, 215–242.

- Hosmer, D.W., Jr.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Hutter, F.; Hoos, H.H.; Leyton-Brown, K. Sequential model-based optimization for general algorithm configuration. In International Conference on Learning and Intelligent Optimization; Springer: Berlin/Heidelberg, Germany, 2011; pp. 507–523.

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. Int. Jt. Conf. Artif. Intell. (IJCAI) 1995, 14, 1137–1145.

- Probst, P.; Boulesteix, A.L.; Bischl, B. Tunability: Importance of hyperparameters of machine learning algorithms. J. Mach. Learn. Res. 2019, 20, 1–32.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Prechelt, L. Early stopping—But when? In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–69.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer: Berlin/Heidelberg, Germany, 2009.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- Sasaki, Y. The Truth of the F-Measure; Technical Report; School of Computer Science, University of Manchester: Manchester, UK, 2007.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).