MMFNet: A Mamba-Based Multimodal Fusion Network for Remote Sensing Image Semantic Segmentation

Abstract

1. Introduction

2. Related Work

2.1. Single Modal Semantic Segmentation

2.2. Multimodal Semantic Segmentation (MSS)

3. Methodology

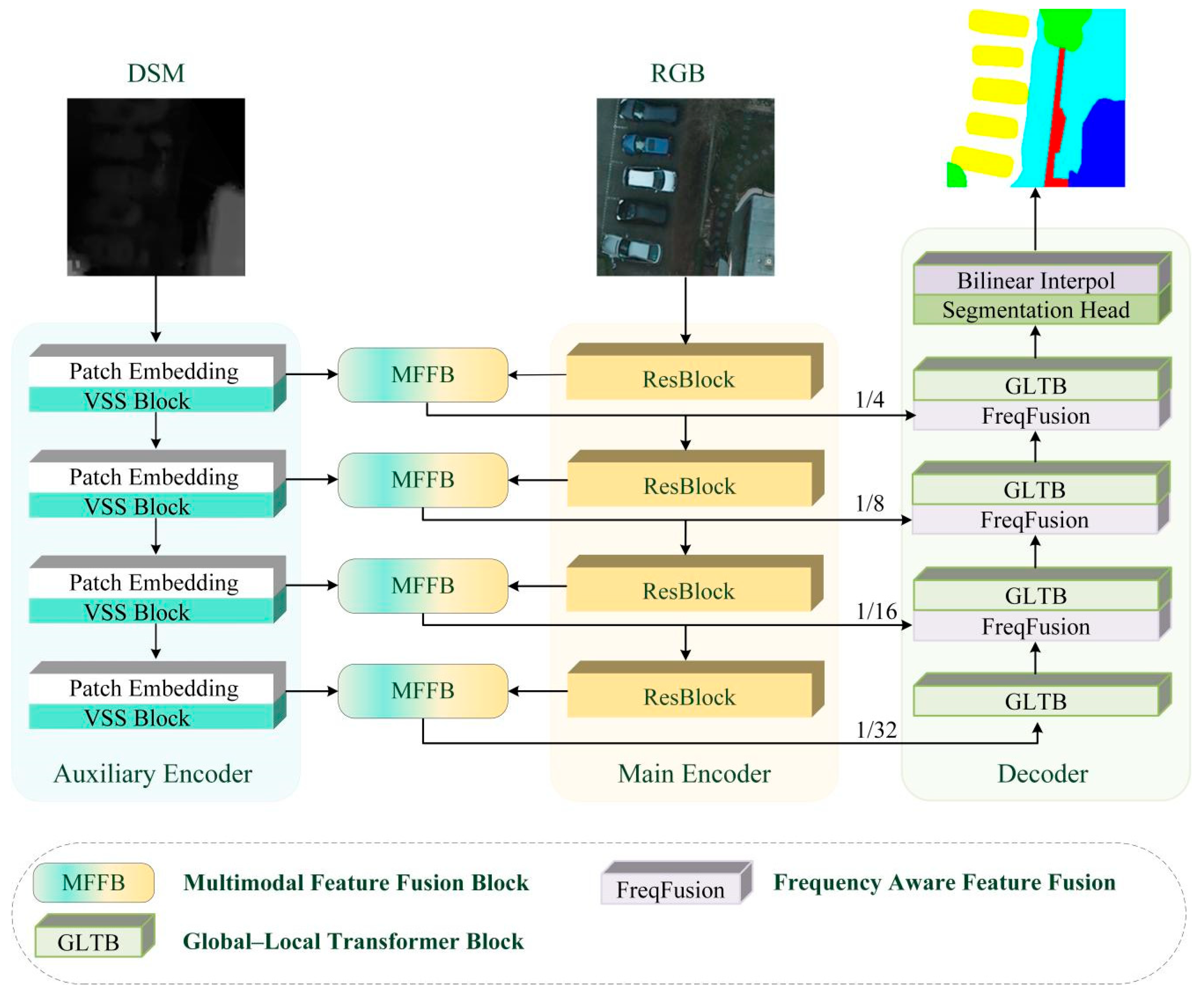

3.1. Framework of MMFNet

3.2. Dual Branch Encoder

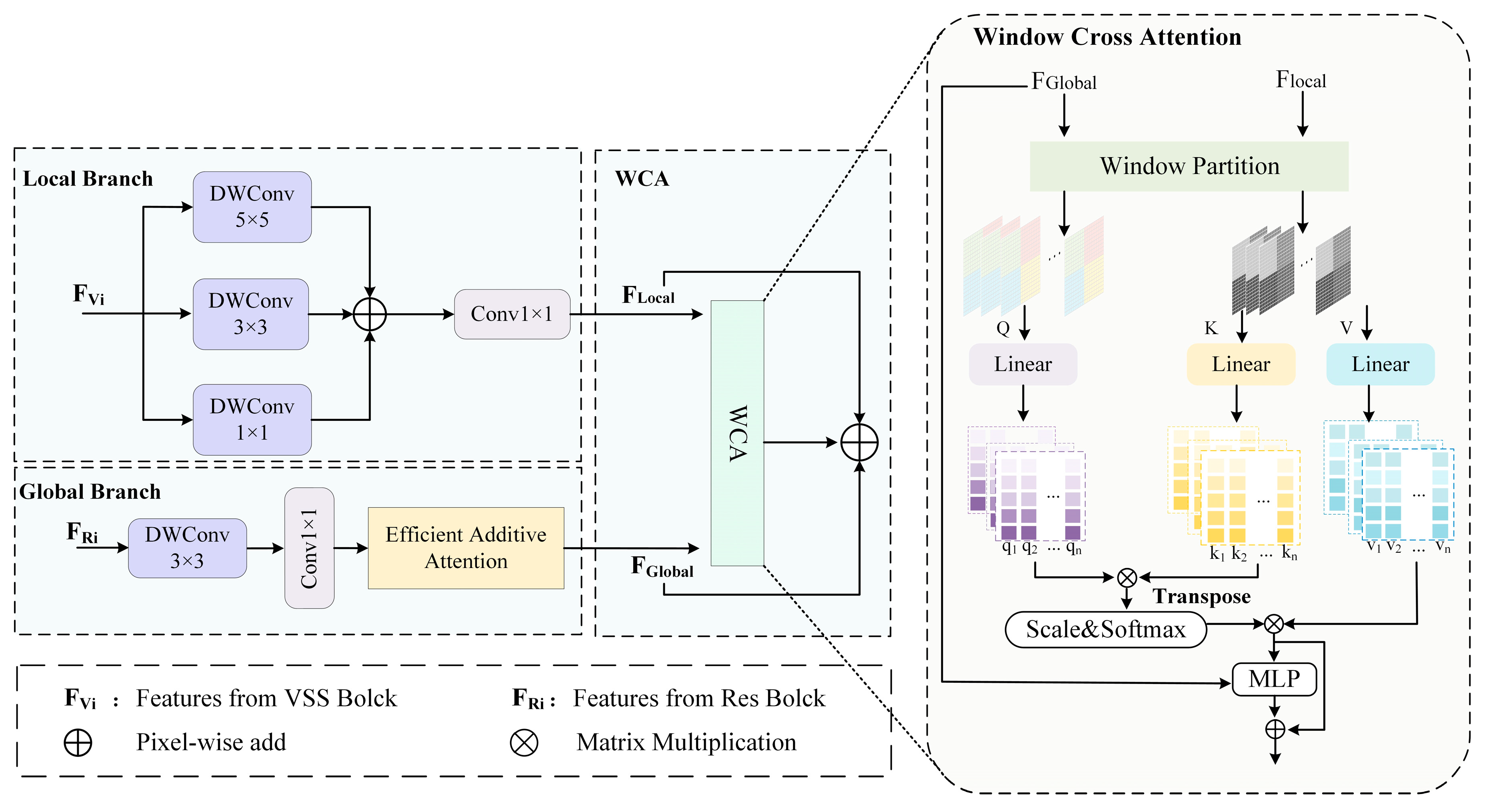

3.3. Multimodal Feature Fusion Block

3.4. Transformer Decoder

3.5. Loss Function

4. Experiment and Results

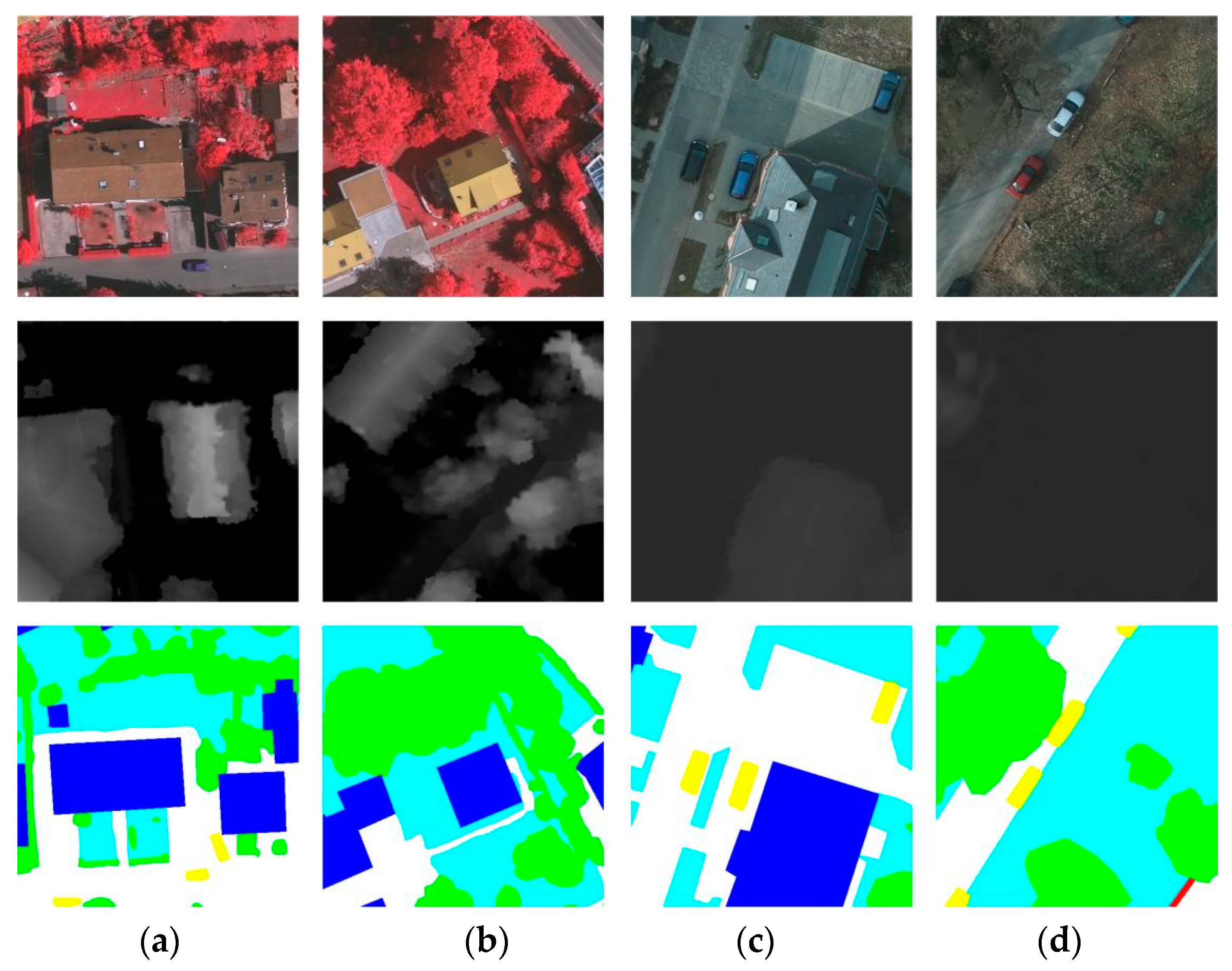

4.1. Dataset

4.2. Evaluation Metrics
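The results tables report overall accuracy (OA), mean F1 (mF1), and mean IoU (mIoU). As a minimal sketch, assuming the standard confusion-matrix definitions of these metrics (the paper's own formulas are not reproduced in this section and may differ in detail), they could be computed as follows. The function name `segmentation_metrics` and the toy matrix are hypothetical and serve only as an illustration.

```python
def segmentation_metrics(conf):
    """Compute OA, per-class IoU and F1, mIoU and mF1 from a confusion matrix.

    conf[i][j] counts pixels of true class i predicted as class j.
    Standard definitions are assumed, not taken from the paper.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    correct = sum(conf[i][i] for i in range(n))
    ious, f1s = [], []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                          # true class c, predicted otherwise
        fp = sum(conf[r][c] for r in range(n)) - tp     # predicted c, true class differs
        union = tp + fp + fn
        ious.append(tp / union if union else 0.0)
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return {
        "OA": correct / total if total else 0.0,
        "IoU": ious,
        "F1": f1s,
        "mIoU": sum(ious) / n,
        "mF1": sum(f1s) / n,
    }


# Hypothetical 3-class confusion matrix, for illustration only.
toy = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
metrics = segmentation_metrics(toy)
print(metrics["OA"], metrics["mIoU"], metrics["mF1"])
```

Working from a single accumulated confusion matrix keeps all three metrics consistent with one another, since each is derived from the same true-positive, false-positive, and false-negative counts.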

4.3. Experiment Setup

4.4. Experimental Results and Analysis

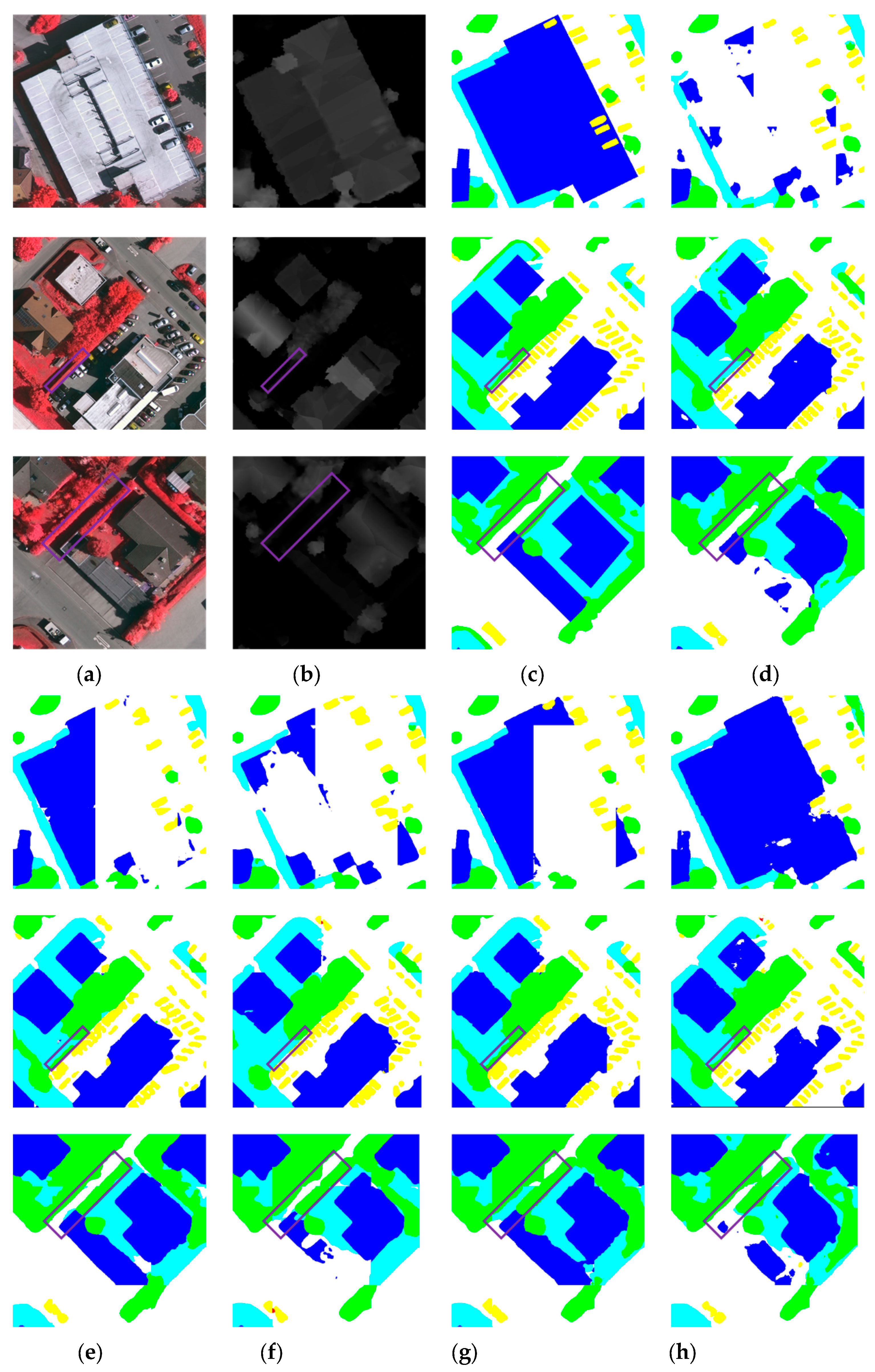

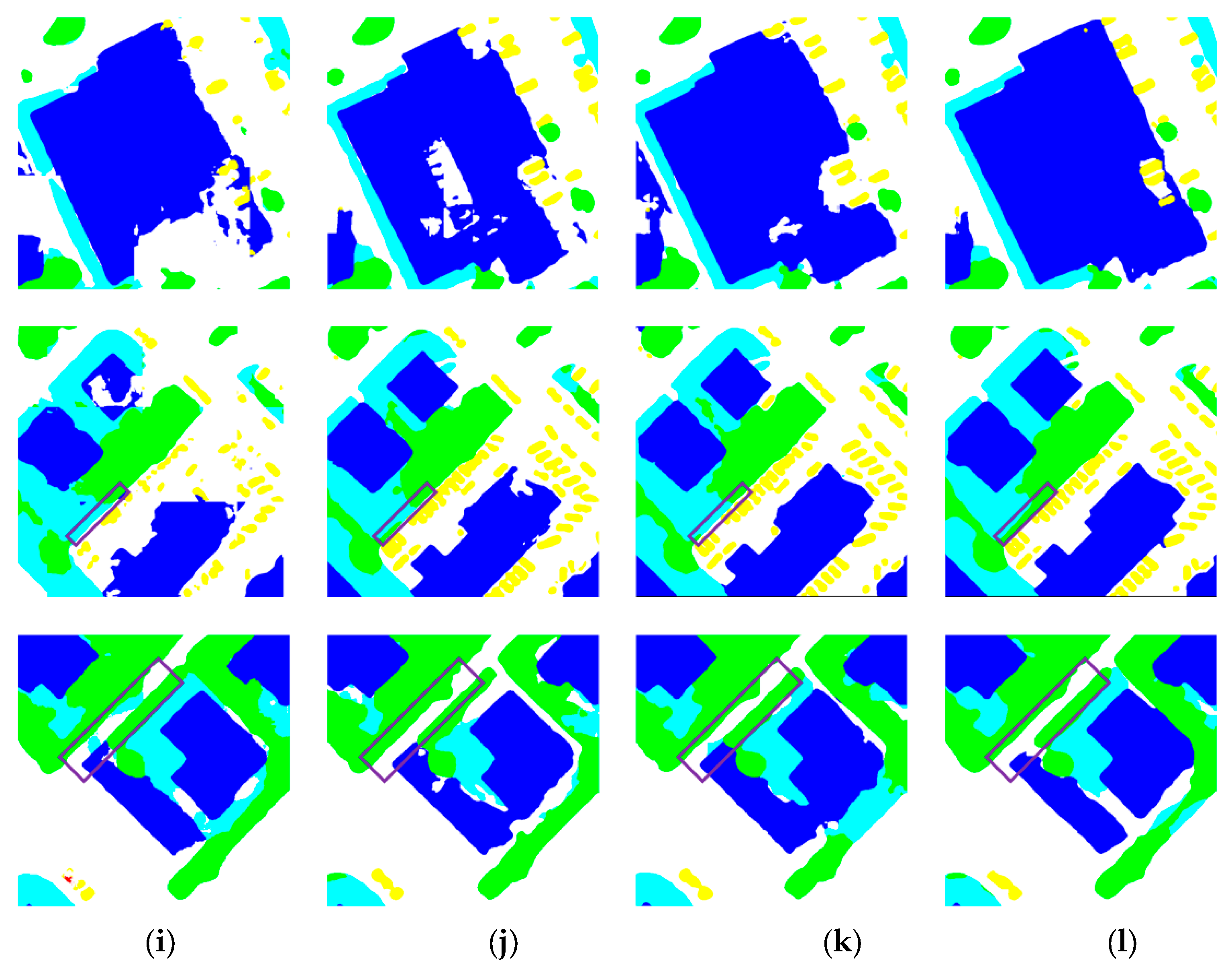

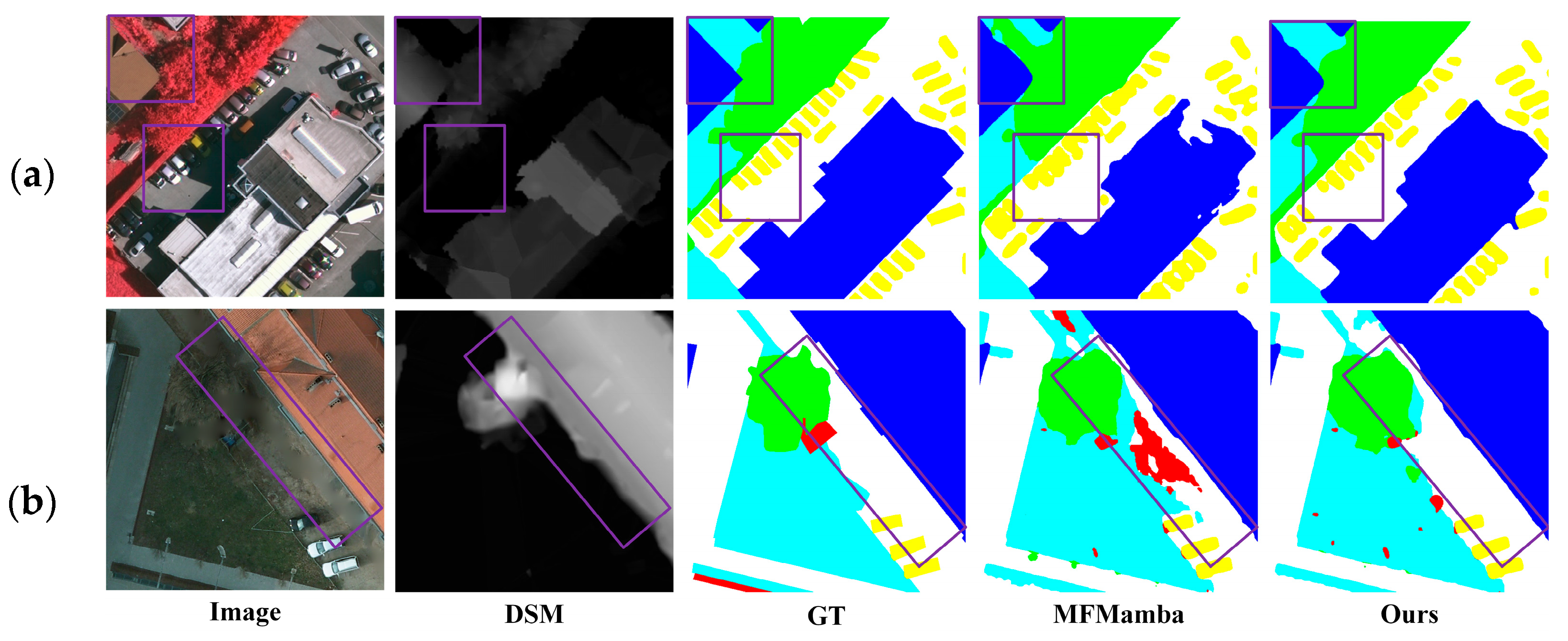

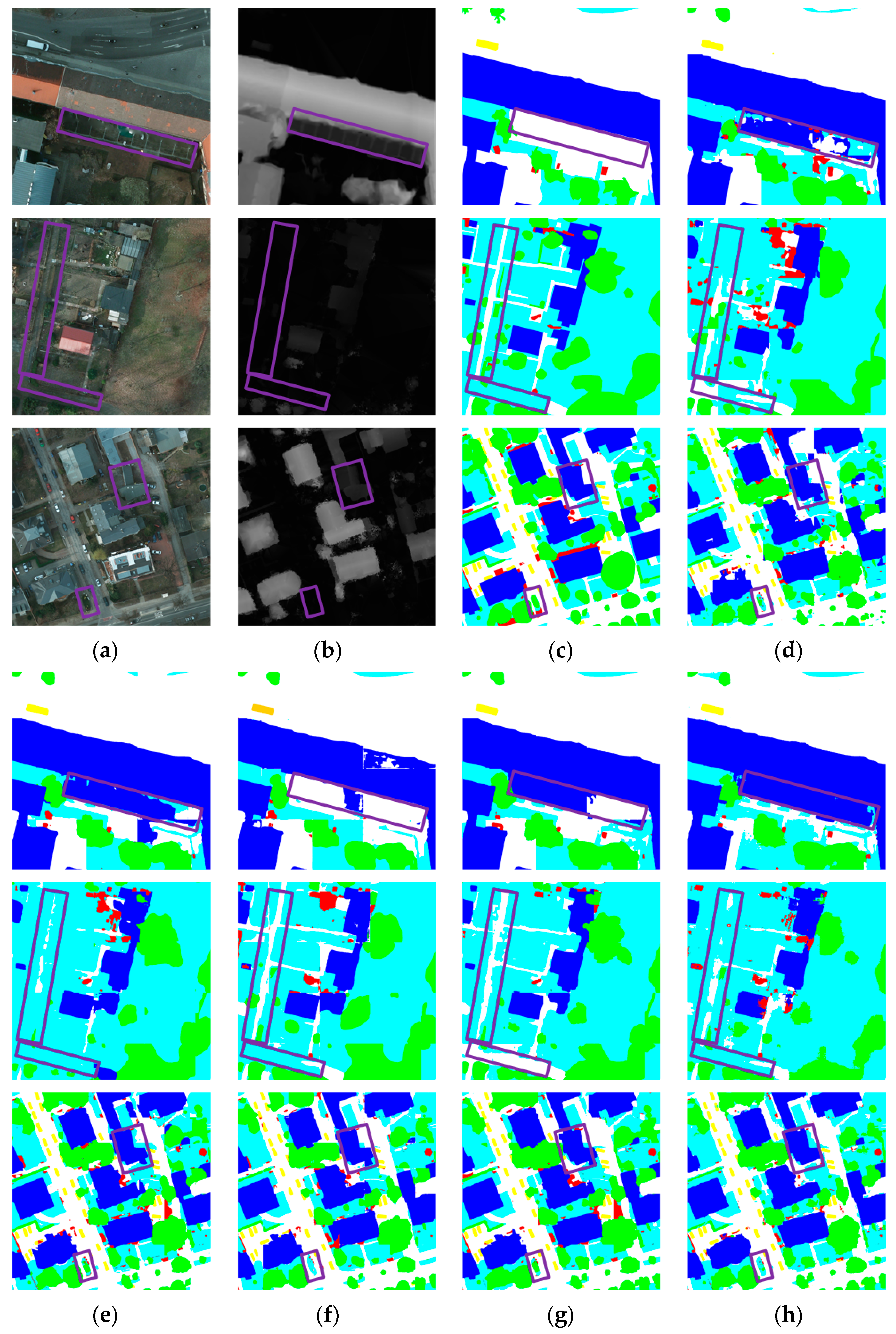

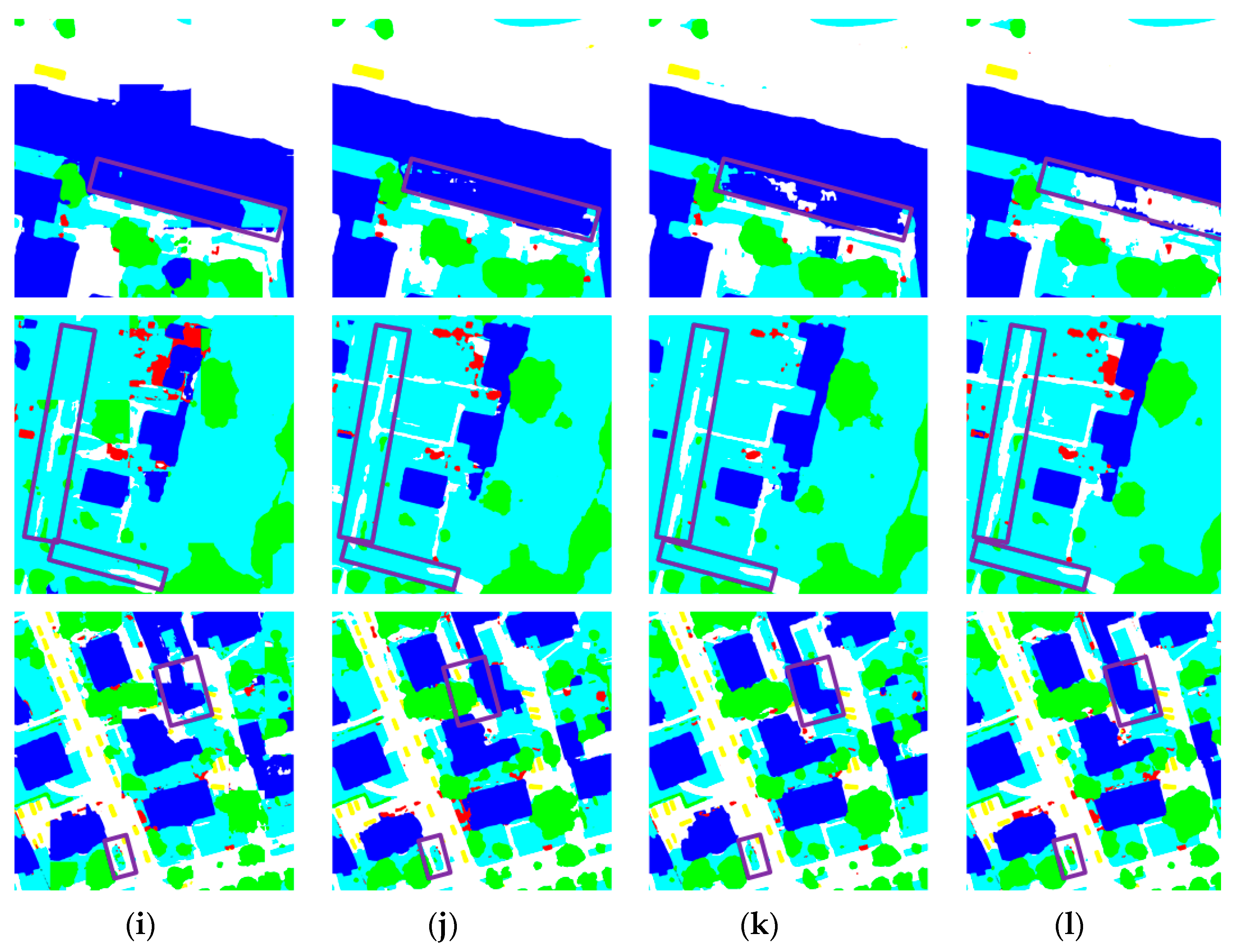

4.4.1. Comparison Results on the Vaihingen Dataset

4.4.2. Comparison Results on the Potsdam Dataset

4.4.3. Computational Complexity Analysis

4.5. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Backbone | Imp. IoU | Bui. IoU | Low. IoU | Tre. IoU | Car IoU | OA | mF1 | mIoU |
|---|---|---|---|---|---|---|---|---|---|
| PSPNet | ResNet-18 | 79.27 | 89.50 | 60.41 | 77.25 | 71.45 | 87.58 | 86.31 | 75.76 |
| Swin | Swin-T | 81.49 | 89.93 | 63.08 | 75.05 | 64.97 | 86.74 | 84.87 | 74.90 |
| UNetFormer | ResNet-18 | 79.33 | 88.67 | 61.86 | 73.56 | 70.73 | 86.65 | 85.31 | 74.83 |
| DCSwin | Swin-T | 81.47 | 89.81 | 63.69 | 74.82 | 70.54 | 87.70 | 86.11 | 76.07 |
| CMFNet | VGG-16 | 86.59 | 94.25 | 66.75 | 82.75 | 77.03 | 91.39 | 89.50 | 81.47 |
| VMamba | VMamba-T | 82.58 | 89.06 | 67.35 | 77.83 | 57.07 | 82.23 | 84.56 | 74.78 |
| RS3Mamba | R18-Mamba-T | 85.21 | 92.34 | 66.51 | 82.94 | 81.21 | 90.87 | 89.28 | 81.64 |
| MFMamba | R18-Mamba-T | 86.04 | 94.02 | 66.14 | 83.64 | 77.43 | 91.37 | 89.48 | 81.46 |
| Ours | R18-Mamba-T | 87.55 | 94.37 | 68.92 | 84.21 | 82.42 | 92.06 | 90.77 | 83.50 |

| Model | Backbone | Imp. IoU | Bui. IoU | Low. IoU | Tre. IoU | Car IoU | OA | mF1 | mIoU |
|---|---|---|---|---|---|---|---|---|---|
| PSPNet | ResNet-18 | 78.98 | 88.93 | 68.23 | 68.42 | 77.77 | 86.12 | 82.51 | 76.47 |
| Swin | Swin-T | 79.28 | 90.50 | 69.98 | 70.41 | 79.64 | 87.05 | 83.69 | 77.96 |
| UNetFormer | ResNet-18 | 84.51 | 92.08 | 72.70 | 71.42 | 83.45 | 89.19 | 89.20 | 80.83 |
| DCSwin | Swin-T | 82.96 | 92.50 | 71.31 | 71.24 | 82.29 | 88.31 | 88.71 | 80.06 |
| CMFNet | VGG-16 | 85.55 | 93.65 | 72.23 | 74.65 | 91.25 | 89.97 | 91.01 | 83.37 |
| VMamba | VMamba-T | 84.82 | 91.24 | 75.16 | 75.38 | 88.04 | 81.52 | 90.40 | 82.93 |
| RS3Mamba | R18-Mamba-T | 86.95 | 94.46 | 75.50 | 76.28 | 92.98 | 90.73 | 87.39 | 85.24 |
| MFMamba | R18-Mamba-T | 87.34 | 94.90 | 75.09 | 76.81 | 92.88 | 90.89 | 91.92 | 85.41 |
| Ours | R18-Mamba-T | 87.41 | 95.71 | 76.54 | 77.50 | 93.13 | 91.32 | 92.31 | 86.06 |
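
As a quick consistency check on the two comparison tables above, the reported mIoU of the proposed model appears to equal the unweighted mean of its five per-class IoU values. The sketch below assumes that definition and recomputes the mean for the two "Ours" rows; the variable names are illustrative.

```python
# Per-class IoU of the "Ours" rows, copied from the two comparison tables
# (order: Imp., Bui., Low., Tre., Car), paired with the reported mIoU.
ours_rows = {
    "first comparison table": ([87.55, 94.37, 68.92, 84.21, 82.42], 83.50),
    "second comparison table": ([87.41, 95.71, 76.54, 77.50, 93.13], 86.06),
}

computed = {}
for name, (per_class_iou, reported_miou) in ours_rows.items():
    # Assumption: mIoU is the unweighted mean over the five listed classes.
    computed[name] = sum(per_class_iou) / len(per_class_iou)
    gap = abs(computed[name] - reported_miou)
    print(f"{name}: computed {computed[name]:.3f}, reported {reported_miou:.2f}, gap {gap:.3f}")
```

For both rows the recomputed mean agrees with the reported mIoU to within about 0.01, which is what one would expect if the published per-class values were rounded to two decimals.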

| Method | FLOPs (G) | Parameters (M) | mIoU (%) |
|---|---|---|---|
| PSPNet | 64.15 | 65.60 | 75.76 |
| Swin | 60.28 | 59.02 | 74.90 |
| UNetFormer | 5.99 | 11.72 | 74.83 |
| DCSwin | 40.08 | 66.95 | 76.07 |
| CMFNet | 159.55 | 104.07 | 81.47 |
| VMamba | 12.41 | 29.94 | 74.78 |
| RS3Mamba | 19.78 | 43.32 | 81.64 |
| MFMamba | 19.12 | 62.43 | 81.46 |
| Ours | 19.15 | 69.85 | 83.50 |
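
To make the trade-off in the complexity table easier to read, the following sketch expresses each method's FLOPs and parameter count relative to the proposed model, together with the mIoU difference. It only rearranges the numbers in the table; the dictionary layout and variable names are illustrative.

```python
# (FLOPs in G, parameters in M, mIoU in %), copied from the complexity table.
complexity = {
    "PSPNet": (64.15, 65.60, 75.76),
    "Swin": (60.28, 59.02, 74.90),
    "UNetFormer": (5.99, 11.72, 74.83),
    "DCSwin": (40.08, 66.95, 76.07),
    "CMFNet": (159.55, 104.07, 81.47),
    "VMamba": (12.41, 29.94, 74.78),
    "RS3Mamba": (19.78, 43.32, 81.64),
    "MFMamba": (19.12, 62.43, 81.46),
    "Ours": (19.15, 69.85, 83.50),
}

ours_flops, ours_params, ours_miou = complexity["Ours"]

relative = {}
for method, (flops, params, miou) in complexity.items():
    # Ratios above 1 mean the method is more expensive than the proposed model.
    relative[method] = (flops / ours_flops, params / ours_params, ours_miou - miou)
    flops_ratio, params_ratio, miou_gain = relative[method]
    print(f"{method:<11} FLOPs x{flops_ratio:.2f}  params x{params_ratio:.2f}  "
          f"mIoU gain of Ours: {miou_gain:+.2f}")
```

Read this way, the table shows the proposed model at roughly the same FLOPs as RS3Mamba and MFMamba but with more parameters, and at far fewer FLOPs than CMFNet.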

| Dataset | Bands | Imp. OA (%) | Bui. OA (%) | Low. OA (%) | Tre. OA (%) | Car OA (%) | mF1 (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| Vaihingen | NIRRG | 92.04 | 96.06 | 80.64 | 92.04 | 88.36 | 90.04 | 82.29 |
| Vaihingen | NIRRG + DSM | 93.57 | 97.21 | 81.16 | 91.50 | 88.39 | 90.77 | 83.50 |
| Potsdam | RGB | 92.45 | 97.50 | 88.94 | 86.63 | 96.11 | 91.63 | 84.91 |
| Potsdam | RGB + DSM | 92.70 | 98.01 | 88.78 | 87.65 | 96.38 | 92.31 | 86.06 |

| WCA | FreqFusion | Imp. | Bui. | Low. | Tre. | Car | OA | mF1 | mIoU |
|---|---|---|---|---|---|---|---|---|---|
| √ | × | 87.53 | 95.49 | 75.71 | 77.48 | 93.30 | 91.13 | 92.21 | 85.90 |
| × | √ | 87.32 | 95.31 | 75.44 | 77.50 | 93.09 | 90.97 | 92.12 | 85.73 |
| √ | √ | 87.41 | 95.71 | 76.54 | 77.50 | 93.13 | 91.32 | 92.31 | 86.06 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qiu, J.; Chang, W.; Ren, W.; Hou, S.; Yang, R. MMFNet: A Mamba-Based Multimodal Fusion Network for Remote Sensing Image Semantic Segmentation. Sensors 2025, 25, 6225. https://doi.org/10.3390/s25196225
