Abstract

The instance segmentation of power distribution equipment in infrared images is a prerequisite for determining its overheating fault, which is crucial for urban power grids. Due to the specific characteristics of power distribution equipment, the objects are generally characterized by small scale and complex structure. Existing methods typically use a backbone network to extract features from infrared images. However, the inherent down-sampling operations lead to information loss of objects. The content regions of small-scale objects are compressed, and the edge regions of complex-structured objects are fragmented. In this paper, (1) the first unmanned aerial vehicle-based infrared dataset PDI for power distribution inspection is constructed with 16,596 images, 126,570 instances, and 7 categories of power equipment. It has the advantages of large data volume, rich geographic scenarios, and diverse object patterns, as well as challenges of distribution imbalance, category imbalance, and scale imbalance of objects. (2) A reconstruction error (RE)-guided instance segmentation framework, coupled with an object reconstruction decoder (ORD) and a difference feature enhancement (DFE) module, is proposed. The former reconstructs the objects, where the reconstruction result indicates the position and degree of information loss of the objects. Therefore, the difference map between the reconstruction result and the input image effectively replays the object features. The latter adaptively compensates for object features by global fusion between the difference features and backbone features, thereby enhancing the spatial representation of objects. Extensive experiments on the constructed and publicly available datasets demonstrate the strong generalization, superiority, and versatility of the proposed framework.

1. Introduction

Power distribution equipment is critical infrastructure responsible for delivering low- to medium-voltage electrical power and includes devices such as arresters, breakers, and insulators. Power distribution equipment is susceptible to overheating due to overloaded operations during peak consumption periods (e.g., summer and winter) and prolonged exposure to extreme weather conditions (e.g., icing and typhoons). This can cause equipment failure and may potentially result in widespread power outages. Consequently, regular monitoring of power distribution equipment is essential for ensuring the safe and stable operation of urban power grids [1,2].

The temperature distribution of power distribution equipment can be intuitively visualized in infrared images, as infrared sensors can effectively capture the thermal radiation differences between the power distribution equipment and its surroundings [3,4]. This imaging capability enables accurate temperature measurement and is unaffected by external lighting conditions [5,6].

In the early stages, infrared inspection was typically performed by workers climbing pole towers with handheld infrared cameras to manually examine each piece of power distribution equipment. This method was not only cumbersome and labor-intensive, but also posed significant safety risks, including electrocution and falls from height [7]. In recent years, unmanned aerial vehicles (UAVs) equipped with infrared cameras have been widely adopted due to their high maneuverability and operational flexibility [8,9]. UAVs can easily navigate complex urban environments, such as dense buildings and trees, and observe power distribution equipment from multiple perspectives. Notably, UAVs can cover several kilometers of scenarios in a single operation, significantly improving infrared inspection efficiency.

Instance segmentation, a fundamental task in computer vision, aims at locating the position of each object and recognizing its category within an image [10]. It has become a crucial prerequisite for assessing the operational status of each piece of power distribution equipment [11]. Traditional methods, such as region growing [12], rely on hand-crafted features, such as color and texture. These hand-crafted features are usually designed based on simple image statistics or heuristic rules, which are limited in their representation capabilities. In contrast, modern methods like Mask RCNN [13] have achieved significant advancements by automatically learning complex and nonlinear spatial and semantic relationships between foreground objects and background from large-scale images. As a result, modern methods have been successfully transferred from visible images of natural scenarios to infrared images of power scenarios [14].

The challenge of accurately locating and recognizing power distribution equipment in images stems from the following reasons. Firstly, power distribution equipment, such as a bushing, is physically small, and when imaged from an operational distance by UAVs, it occupies a minuscule number of pixels, making it a small-scale object. Secondly, power distribution equipment, like an insulator or a disconnector, possesses intricate geometries, rendering it a complex-structured object. Therefore, due to these specific characteristics, power distribution equipment is generally characterized by small scale and complex structure. Meanwhile, due to the hardware limitations of infrared sensors, the resolution of infrared images is low. In this situation, the above challenge becomes even more severe.

Modern methods typically employ backbone networks to extract hierarchical features from infrared images. However, the inherent down-sampling operations in backbone networks lead to information loss of objects, as illustrated in Figure 1. Specifically, the content regions of small-scale objects collapse, i.e., decrease or even disappear. Their features can be reduced from several pixels to a single pixel or even less, which significantly compresses their content information, making them indistinguishable from noise or background. The edge regions of objects with complex structures are discretized, i.e., jagged and fragmented. The discretization process causes a sharp and continuous edge to become a jagged, broken series of disconnected points, fragmenting the structural integrity of the object’s boundary. Therefore, missed recognition and coarse localization of objects frequently occur in modern methods.

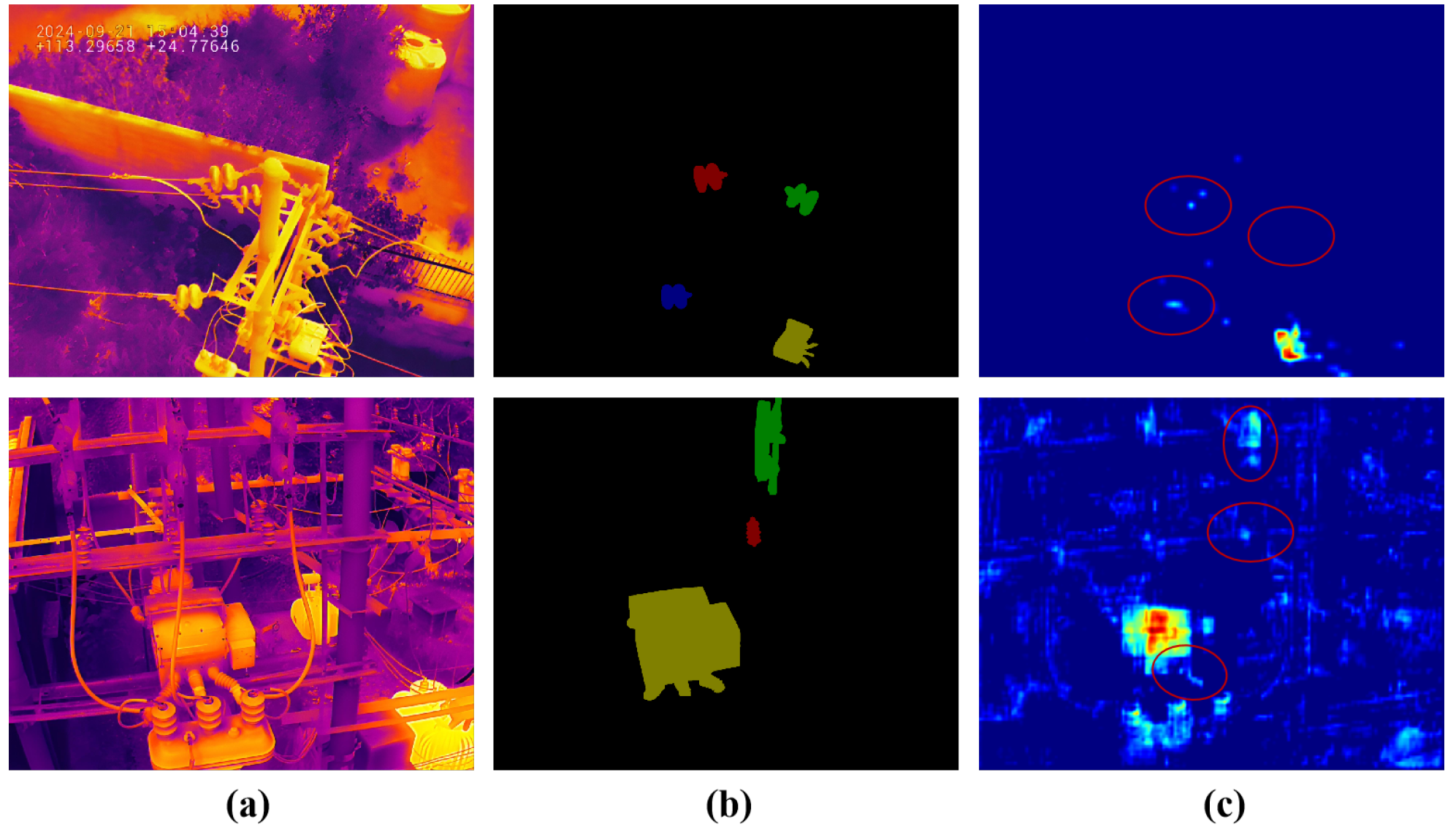

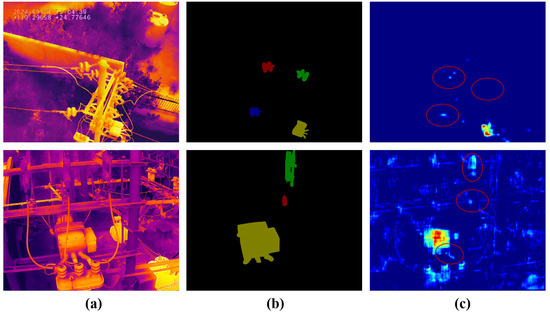

Figure 1.

Schematic diagram illustrating the information loss caused by down-sampling operations in the backbone network. (a) Input infrared image; (b) ground truth; (c) feature maps from the 4th and 1st levels of the backbone network. The content regions of small-scale objects are compressed, with their features nearly erased, while the edge regions of objects with complex structures are discretized, resulting in fragmented features, as highlighted by the red circles.

To control the information loss of objects, super resolution (SR) [15,16] and improved down-sampling (IDS) operations [17,18] are considered effective approaches. Specifically, SR reconstructs high-resolution (HR) counterparts from low-resolution images. Although super-resolution increases the information capacity of objects by enhancing pixel density, it is susceptible to generating fake textures and undesirable artifacts. Furthermore, HR images increase the computational burden of models. In contrast, IDS performs a weighted summation of all pixels within the sampling region to aggregate their information. However, this process can be laborious.

To resolve the above problems, a reconstruction error (RE)-guided instance segmentation framework is proposed, whose core idea is to replay and compensate for object features to enhance their spatial feature representations. The framework consists of two main components.

Specifically, (1) an object reconstruction decoder (ORD) is proposed to reconstruct the object regions from the backbone features. The reconstruction result indicates the position and degree of information loss of objects. Correspondingly, the difference map (reconstruction error map) between the reconstruction result and the input image effectively replays the object features. (2) A difference feature enhancement (DFE) module is proposed, which adaptively compensates for object features through global interaction and fusion between the difference features and the backbone features, thereby enhancing the spatial features of objects. The ORD and the DFE module are constrained by the pixel-wise error between the reconstruction result and the input image, which is sensitive to fine-grained pixel changes of objects and possesses self-supervision capability.

In addition, a real-world infrared dataset, PDI, consisting of 16,596 images, 126,570 (120K+) instances, and 7 critical categories of power equipment, is constructed. The dataset covers scenarios from hundreds of geographic regions and object patterns from multiple observation viewpoints. Compared to existing infrared datasets, PDI is the first UAV-based infrared dataset for distribution inspection and offers the largest data volume, rich scenarios, and diverse object patterns. Meanwhile, this dataset poses challenges of scale imbalance, category imbalance, and distribution imbalance of objects.

The proposed framework effectively captures small-scale objects and preserves the edge integrity of objects with complex structures, providing insight into the scale imbalance problem. Extensive experiments on both the constructed and publicly available datasets demonstrate the superiority, generalization, and versatility (i.e., model-agnostic design) of the proposed framework, thereby greatly advancing power distribution inspection. Our code is publicly available at https://github.com/yisun98 (accessed on 23 September 2025).

The contributions of this paper can be summarized as follows:

- The first real-world UAV-based infrared dataset for distribution inspection is proposed. This dataset offers the largest data volume, diverse geographic scenarios, and varied instance patterns, while also presenting challenges such as scale imbalance, distribution imbalance, and category imbalance. It establishes a comprehensive performance benchmark and validates the superiority of the proposed framework.

- An object reconstruction mechanism is proposed to effectively mitigate the information loss of objects by reconstructing the object regions. This approach reveals the correlation between reconstruction and degradation, providing effective object features via reconstruction error.

- A difference feature enhancement (DFE) module is proposed to enhance the spatial feature representations of objects, effectively preserving both the content integrity and the edge continuity of objects.

The remainder of this paper is organized as follows. Section 2 briefly reviews instance segmentation methods. Section 3 describes the proposed framework in detail. Section 4 introduces the constructed dataset. Section 5 provides experiments and analysis to explore the limitations of existing methods and the working mechanism of the core components. Section 6 discusses the complexity and application scenarios of the proposed framework. Section 7 gives conclusions and points out future work.

2. Related Work

2.1. General Instance Segmentation

Traditional Methods: Region growing is a representative traditional method [12]. It begins by randomly selecting several seed pixels as starting points, and then progressively merges neighboring pixels based on a defined similarity measure to form connected regions, with each region corresponding to a distinct object. The similarity between pixels can be determined by features such as color, texture, and gradient. Notably, region growing is sensitive to gradients, enabling it to effectively capture object boundaries. However, this method is prone to generating isolated or fragmented regions in the presence of noise. More broadly, traditional methods like region growing heavily rely on hand-crafted features, which are typically designed using basic image statistics or simple heuristic rules [10]. As a result, their feature representation capabilities are inherently limited, often leading to performance bottlenecks in complex or dynamic scenarios. Furthermore, the design of hand-crafted features requires domain-specific knowledge, introducing human intervention bias and restricting generalization ability.

Modern Methods: In recent years, deep convolutional neural networks (DCNNs) have emerged as the mainstream solution. This can be attributed to their ability to automatically learn complex and nonlinear spatial and semantic relationships between foreground objects and backgrounds from large-scale image datasets, significantly enhancing performance. Modern methods can be categorized into two-stage and one-stage approaches. Two-stage methods, primarily based on the RCNN family, employ a region proposal network (RPN) to generate candidate object regions. The RPN operates on each pixel of the feature map using predefined anchors of different aspect ratios, thereby reducing the likelihood of missed detections. Representative two-stage methods include Mask RCNN [13] and Cascade RCNN [19]. In contrast, one-stage methods, based on the SOLO and YOLO families, can directly predict object instances. For example, the SOLO family divides the feature map into a grid of cells, with each cell responsible for predicting an object if the object's center falls within that cell. Representative one-stage methods include SOLOv2 [20] and YOLACT [21].

2.2. Instance Segmentation of Power Infrared Scenarios

In recent years, both two-stage and one-stage modern methods have achieved remarkable performance on visible images of natural scenarios [8]. Transfer learning is a technique that allows models to generalize knowledge learned in a source domain to a target domain, achieving competitive performance. With the support of transfer learning, modern methods have been increasingly applied to infrared images of power scenarios, leveraging knowledge learned from visible domains to improve performance in infrared domains.

For example, Zhao et al. [22] presented category-specific image patches as distinct spatial priors to better distinguish visually similar objects. Zhou et al. [14] proposed a shuffle-polarized self-attention module to capture long-range spatial dependencies, thereby enhancing objects with complex structures. Li et al. [8] constructed a multi-task learning framework that jointly performs super-resolution and instance segmentation, facilitating the transfer of high-resolution spatial texture and details to improve object discriminability. Li et al. [23] presented a spatial transformation network to extract the multi-scale spatial context of objects. These studies collectively demonstrate that state-of-the-art modern methods are effective for power infrared scenarios. However, information loss of objects can still lead to relatively coarse results.

3. Methodology

3.1. Overall Architecture

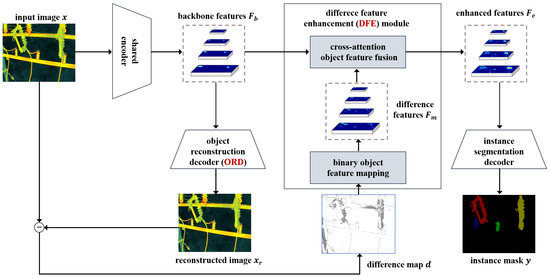

The overall architecture of the proposed framework is illustrated in Figure 2, which consists of four parts:

(1) Shared Encoder: A backbone network, such as ResNet, pretrained on large-scale datasets (e.g., ImageNet), coupled with a neck network, such as FPN [24], is employed to extract multi-scale backbone features $\{F_l\}_{l=1}^{L}$ from the input infrared image $I \in \mathbb{R}^{H \times W \times 3}$:

$$\{F_l\}_{l=1}^{L} = \mathrm{Encoder}(I) \tag{1}$$

where $H$ and $W$ denote the height and width of the image, $C$ is the number of channels of each feature map $F_l$, and $l$ indicates the feature level. Importantly, the encoder is shared across both the object reconstruction decoder and the instance segmentation decoder, ensuring feature consistency.

In general, the backbone network involves inherent down-sampling operations, typically implemented by max-pooling layers or convolutional layers with a stride greater than one [17]. These down-sampling operations are essential for constructing hierarchical feature representations and expanding the receptive field. However, they inevitably result in information loss of objects, where the content regions of small-scale objects are compressed (e.g., diminished or even lost), and the edge regions of objects with complex structures are discretized (e.g., fragmented and jagged), making their features less distinguishable.
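The collapse of small objects under repeated down-sampling can be illustrated with a toy example. The sketch below is not the backbone used in this paper: it assumes plain 2×2 average pooling as a stand-in for strided convolution or max-pooling, a hypothetical 16×16 image, and a 3×3 object, and it counts how many cells still respond above a threshold at each level.

```python
def avg_pool2x2(img):
    """Down-sample a 2D list by averaging non-overlapping 2x2 blocks."""
    h, w = len(img), len(img[0])
    return [
        [(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
         for c in range(0, w - 1, 2)]
        for r in range(0, h - 1, 2)
    ]


def object_pixels(img, thresh=0.5):
    """Count cells whose response is strictly above the threshold."""
    return sum(v > thresh for row in img for v in row)


# Hypothetical 16x16 image: zero background with a 3x3 bright object.
image = [[0.0] * 16 for _ in range(16)]
for r in range(5, 8):
    for c in range(5, 8):
        image[r][c] = 1.0

# Three successive 2x down-sampling steps (16 -> 8 -> 4 -> 2).
levels = [image]
for _ in range(3):
    levels.append(avg_pool2x2(levels[-1]))

counts = [object_pixels(level) for level in levels]
print(counts)
```

In this toy setting the object shrinks from nine responding pixels to a single cell after one step and falls below the threshold after three, which mirrors the content compression described above.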

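(2) Object Reconstruction Decoder: The image $\hat{I}$ is reconstructed from the backbone features $\{F_l\}_{l=1}^{L}$ such that it approximates the original input image $I$ as closely as possible:

$$\hat{I} = \mathrm{ORD}\left(\{F_l\}_{l=1}^{L}\right) \tag{2}$$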

This process follows a self-supervised learning paradigm, where the input image itself serves as the supervision signal. The reconstruction is object-centric: object regions are preserved with their original pixel values, while non-object regions are suppressed to zero. Correspondingly, the reconstruction result explicitly reflects the position and degree of information loss of objects. Moreover, the reconstruction is constrained by the pixel-wise error between it and the input image, revealing fine-grained pixel-level details of objects.

(3) Difference Feature Enhancement Module: The difference map (reconstruction error map) $D$ between the reconstructed image $\hat{I}$ and the input image $I$ is used as input. This map describes spatial discrepancies in object regions, effectively replaying the object features. The difference feature enhancement module extracts difference features from $D$ and adaptively compensates for object features through a global interaction mechanism between the backbone features $\{F_l\}_{l=1}^{L}$ and the difference features, yielding the enhanced features $\{\tilde{F}_l\}_{l=1}^{L}$:

$$\{\tilde{F}_l\}_{l=1}^{L} = \mathrm{DFE}\left(D, \{F_l\}_{l=1}^{L}\right) \tag{3}$$

(4) Instance Segmentation Decoder: The final instance-level prediction is generated through three parallel branches: a classification branch for recognizing object categories, a regression branch for locating objects via bounding boxes, and a segmentation branch for delineating object boundaries [13]. These branches share the enhanced spatial features $\{\tilde{F}_l\}_{l=1}^{L}$ as input. The prediction $\hat{Y}$ is supervised using the corresponding instance masks $Y$ associated with the input infrared image $I$:

$$\hat{Y} = \mathrm{Decoder}\left(\{\tilde{F}_l\}_{l=1}^{L}\right) \tag{4}$$

where $\hat{Y}$ includes the predicted category set $\hat{c}$, bounding box set $\hat{b}$, and binary mask set $\hat{m}$. $\hat{c}$ contains the highest-probability category for each object, $\hat{b}$ consists of the coordinates of the upper-left and lower-right corners of each bounding box $(x_1, y_1, x_2, y_2)$, and $\hat{m}$ represents the binary mask for each object.

Figure 2.

Overall architecture of the proposed framework.

The proposed framework is explicitly designed to mitigate information loss of power distribution equipment, reflecting its specific characteristics. For content compression of small-scale objects, the ORD counteracts loss by enforcing the model to learn features capable of reconstruction. The DFE module then takes the difference map between the reconstructed result and the input image to re-inject these features, effectively compensating for lost content and enhancing focus on small regions. For edge fragmentation of objects with complex structures, the object reconstruction loss acts as a per-pixel spatial constraint, which is highly sensitive to errors along edges and fine details. Consequently, the ORD learns object features that accurately reconstruct complex edges, and the resulting difference map serves as a self-supervised attention map highlighting edge regions. The DFE module’s fusion mechanism uses this attention map to adaptively sharpen and enhance feature representations along object boundaries, restoring structural continuity.

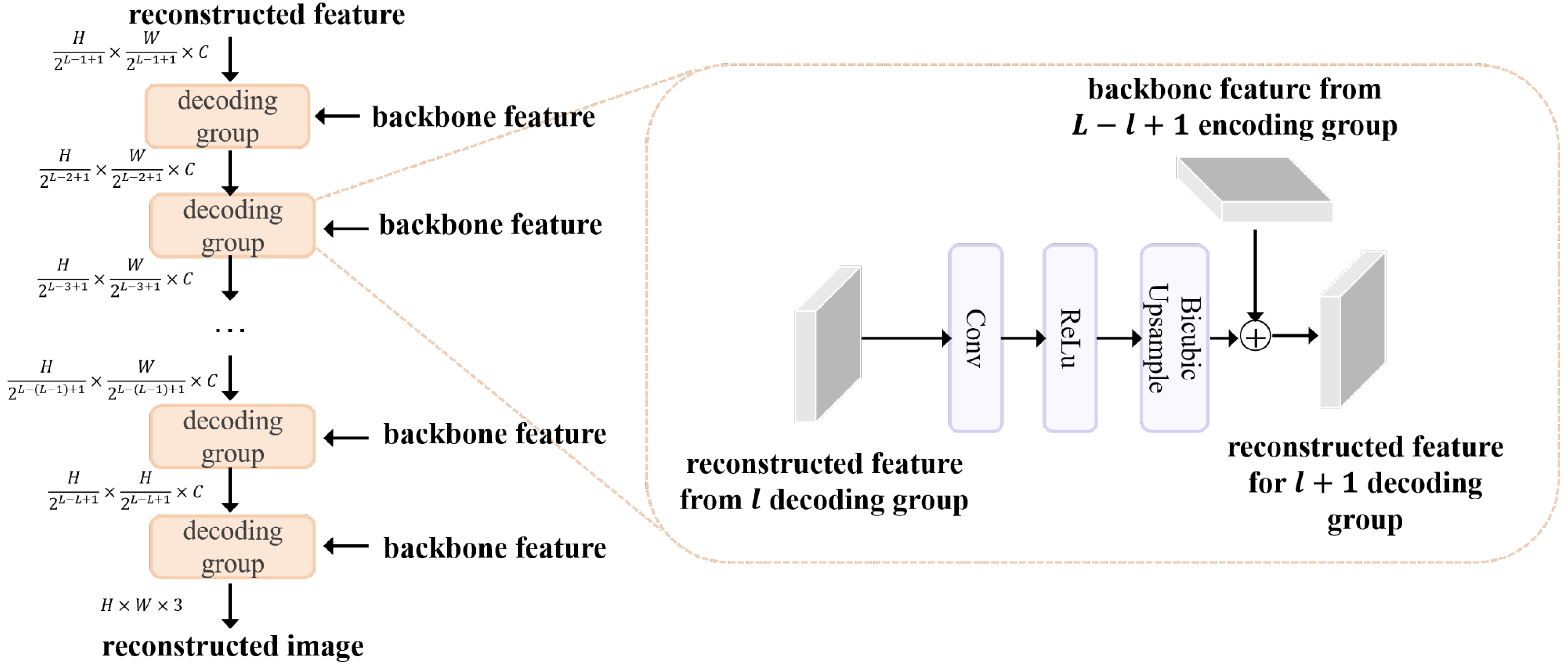

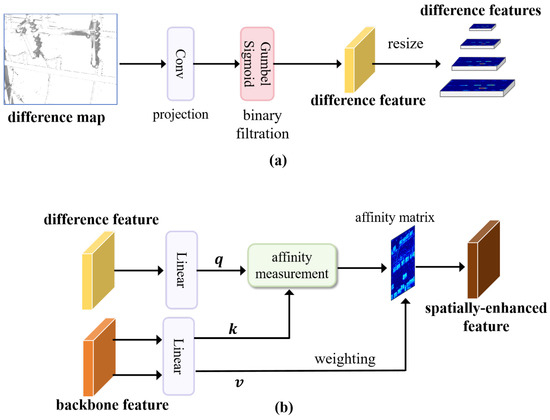

3.2. Object Reconstruction Decoder

The information loss of objects makes it difficult to preserve sufficient features for reconstructing their original morphology. The object reconstruction decoder (ORD) is proposed to reconstruct object regions from the backbone features, where the reconstruction result reflects the position and degree of information loss of objects. We present the architecture of ORD in Figure 3.

Figure 3.

Architecture of the ORD, which consists of multiple decoding groups. The detailed structure and feature dimension of each decoding group are presented.

In general, the ORD adopts a symmetric architecture with respect to the shared encoder. Each decoding group comprises a convolutional layer, a ReLU activation, and a bicubic interpolation operation. These layers serve to reduce feature channels, nonlinearly activate features, and upsample features. The decoding process is defined as:

where is the output feature, is the feature level, and . The input and output channels of the convolutional layer are both C, except for the last layer, which has an output channel of 3. After L decoding processes, the reconstructed image is generated, maintaining the same resolution as the input image .

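The bicubic interpolation operation [16] is a standard method for up-sampling images or feature maps, providing smoother results than nearest-neighbor or bilinear interpolation. The formula for bicubic interpolation is given by:

$$F(x, y) = \sum_{m=-1}^{2} \sum_{n=-1}^{2} F(i+m, j+n)\, W(x - i - m)\, W(y - j - n)$$

$$W(t) = \begin{cases} (a+2)|t|^{3} - (a+3)|t|^{2} + 1, & |t| \le 1 \\ a|t|^{3} - 5a|t|^{2} + 8a|t| - 4a, & 1 < |t| < 2 \\ 0, & \text{otherwise} \end{cases}$$

where $F(x, y)$ is the interpolated feature value at the continuous coordinate $(x, y)$, $F(i+m, j+n)$ is the feature value at integer grid point $(i+m, j+n)$, $(i, j)$ is the integer part of coordinate $(x, y)$, $m, n \in \{-1, 0, 1, 2\}$ are offsets indicating the neighborhood around $(i, j)$, $W(\cdot)$ is the cubic convolution kernel, and $a$ is a kernel parameter.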
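As an illustration of bicubic interpolation, the following minimal sketch samples a 2D feature map at a continuous coordinate using the standard cubic convolution kernel. The function names, the border clamping, and the default kernel parameter `a=-0.5` are assumptions made for this example; the value of the kernel parameter used in the framework is not stated here.

```python
import math


def cubic_kernel(t, a=-0.5):
    """Cubic convolution kernel; a=-0.5 is an assumed default."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t ** 3 - (a + 3) * t ** 2 + 1
    if t < 2:
        return a * t ** 3 - 5 * a * t ** 2 + 8 * a * t - 4 * a
    return 0.0


def bicubic_sample(feat, x, y, a=-0.5):
    """Interpolate the 2D list `feat` at continuous (row, col) = (x, y).

    The 4x4 neighborhood around the integer part of (x, y) is weighted by
    the separable cubic kernel. Out-of-range indices are clamped to the
    border, which is an assumption of this sketch.
    """
    h, w = len(feat), len(feat[0])
    i, j = math.floor(x), math.floor(y)
    value = 0.0
    for m in range(-1, 3):
        for n in range(-1, 3):
            r = min(max(i + m, 0), h - 1)
            c = min(max(j + n, 0), w - 1)
            weight = cubic_kernel(x - (i + m), a) * cubic_kernel(y - (j + n), a)
            value += feat[r][c] * weight
    return value


# A 4x4 ramp whose value equals the column index.
ramp = [[float(c) for c in range(4)] for _ in range(4)]
print(bicubic_sample(ramp, 1, 2))      # sampling exactly on a grid point
print(bicubic_sample(ramp, 1.5, 1.5))  # sampling between grid points
```

On integer coordinates the kernel weights reduce to a single 1, so the original feature value is returned unchanged; between grid points the four weights along each axis sum to 1.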

Compared to existing SR-based and IDS-based methods, the object reconstruction mechanism has three distinctive advantages: (1) Object-centric reconstruction: Unlike SR, which reconstructs the entire image including uninformative background, this mechanism guides the model to focus reconstruction on informative object regions. This is a more efficient approach. When calculating the reconstruction loss, the object regions are preserved with their original pixel values, while non-object regions are suppressed to zero. This masking strategy also enhances the model’s sensitivity to object regions. (2) Pixel change sensitivity: Unlike IDS, the reconstruction process is constrained by per-pixel errors between the reconstruction result and the input image. The reconstruction loss provides a strong, fine-grained gradient signal for every pixel, making the output highly responsive to even minor pixel value changes. (3) Self-supervised learning: The supervision signal (the original image) is freely available and requires no additional external data or manual annotation (e.g., high-resolution images needed by SR). Furthermore, SR is susceptible to generating fake textures and artifacts, and HR images increase the computational burden. This mechanism is therefore more practical.

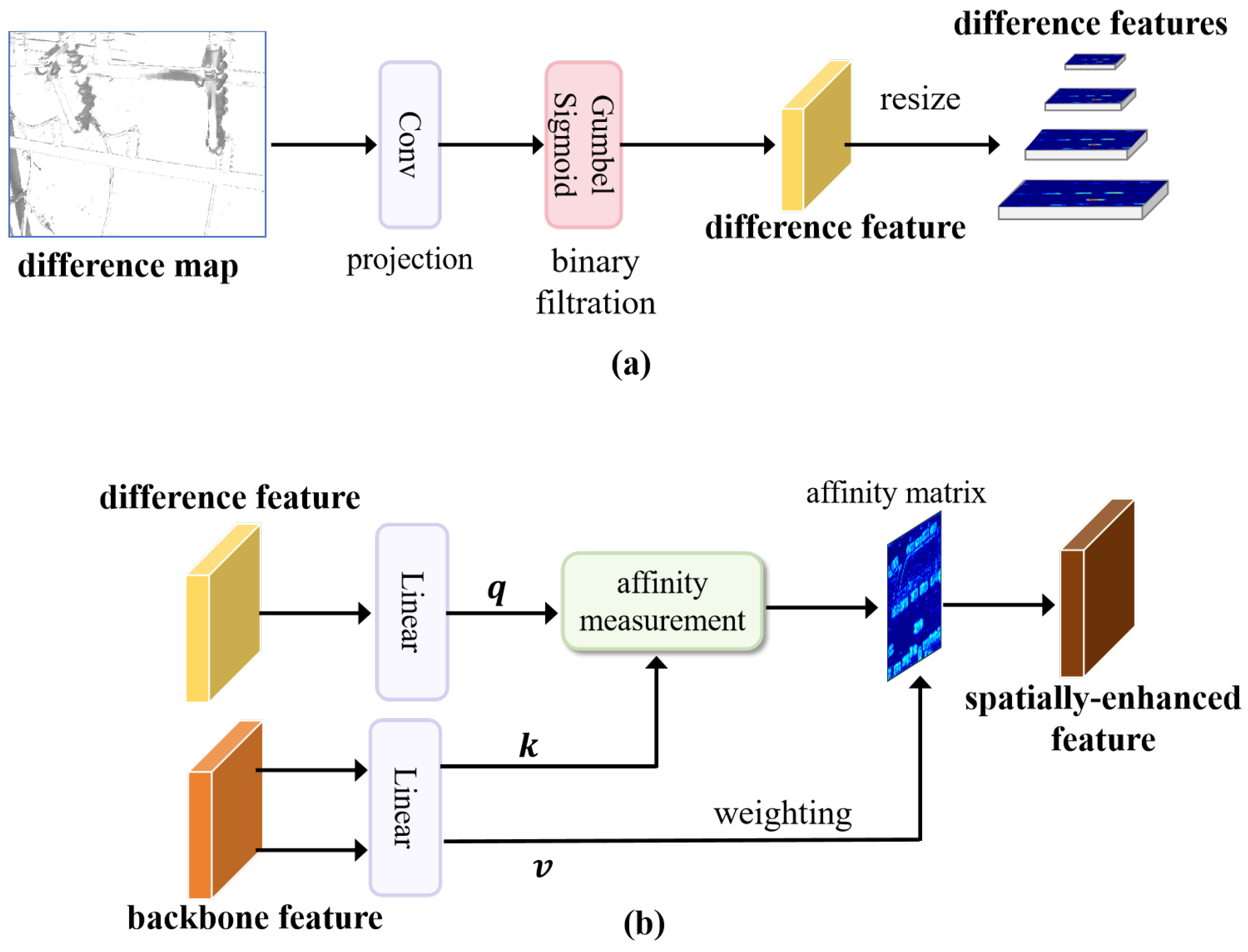

3.3. Difference Feature Enhancement Module

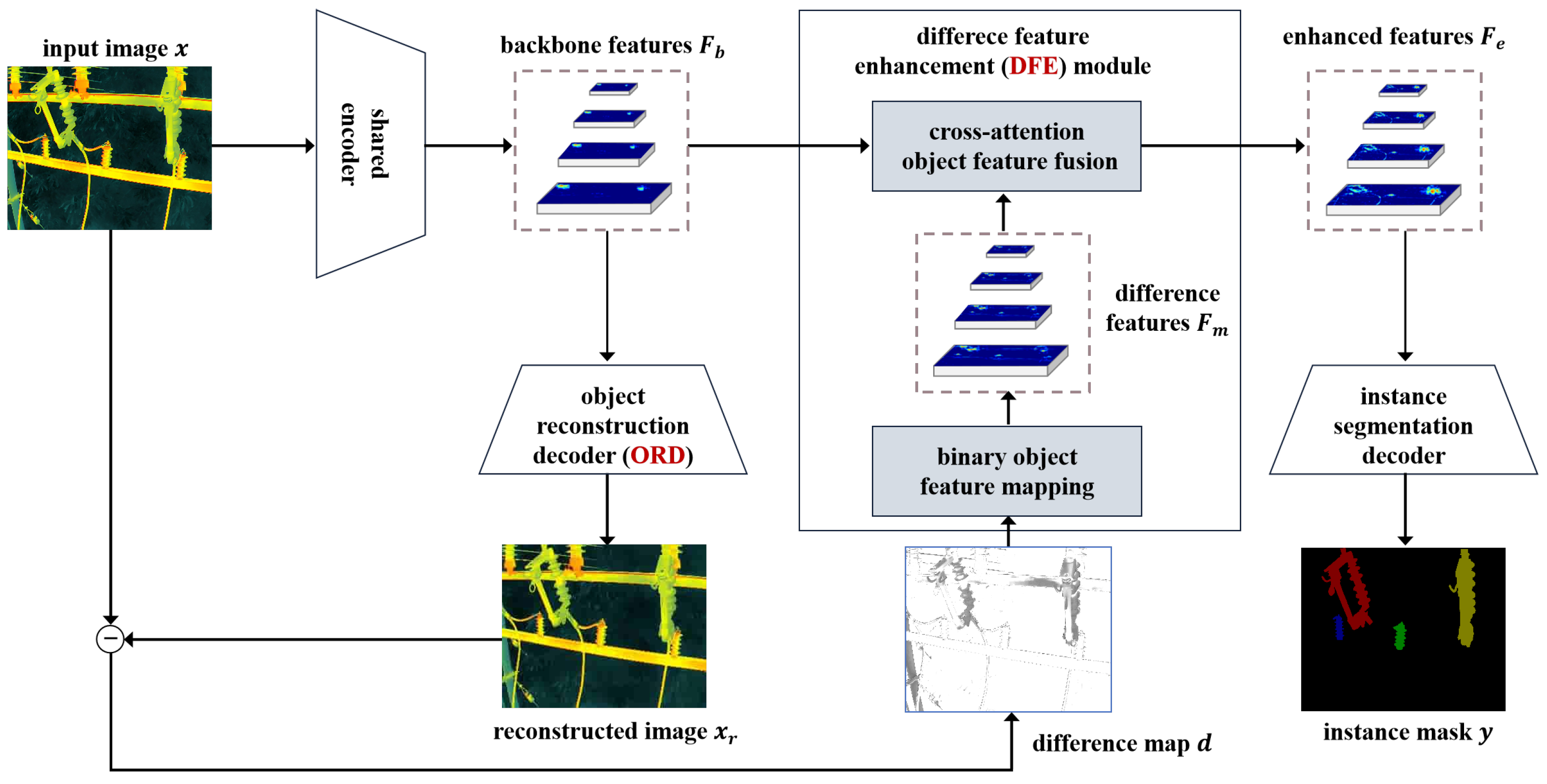

The difference map (reconstruction error map) between the reconstruction result and the input image explicitly replays object prior clues. Therefore, a difference feature enhancement (DFE) module (Figure 4) is proposed. It projects the difference map from the image space into the feature space, applies binary filtering with the Gumbel-sigmoid function to suppress background noise, and finally adaptively compensates object-related clues via global interaction and fusion between the difference features and the backbone features. In this way, the spatial feature representations of objects are effectively enhanced.

Figure 4.

Architecture of the DFE module. (a) Projection and filtration, used for binary object feature mapping; (b) embedding, used for cross-attention object feature fusion.

The DFE module consists of the following three steps:

(1) Projection and filtration for binary object feature mapping. The difference map $D$ is generated by subtracting the reconstructed image $\hat{I}$ from the input image $I$ and taking the absolute value:

$$D = \mathrm{Abs}(I - \hat{I}) \tag{6}$$

where Abs denotes the element-wise absolute value. A convolutional layer is then applied to project the difference map from the low-dimensional image space into the high-dimensional feature space:

$$F_d = \mathrm{Conv}(D) \tag{7}$$

where $F_d$ is the difference feature, and the numbers of input and output channels are 3 and $C$, respectively.

Due to pixel differences between the input image $I$ and the reconstructed image $\hat{I}$, the difference map tends to be activated at nearly every pixel, introducing significant background noise. To address this, a Gumbel-sigmoid function [25] is applied to the difference feature $F_d$ to generate a binary mask $M$, highlighting object regions:

$$M = \sigma\left(\frac{F_d + g_1 - g_2}{\tau}\right) \tag{8}$$

where $\sigma$ is the sigmoid function, $\tau$ is a temperature parameter controlling the sharpness of the approximation, and $g_1$ and $g_2$ are independent samples from the Gumbel distribution. This function remains differentiable while separating foreground from background and enhancing object contrast.
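The binary filtering step can be sketched for a single scalar activation as follows. This is a forward-pass illustration only, under the assumption that the standard two-sample Gumbel-sigmoid form is used; the function names, the 0.5 threshold for the hard decision, and the temperature value are hypothetical, and a trainable implementation would additionally need a straight-through gradient in a deep learning framework.

```python
import math
import random


def sample_gumbel(rng):
    """Draw one sample from the standard Gumbel(0, 1) distribution."""
    u = min(max(rng.random(), 1e-10), 1.0 - 1e-10)  # avoid log(0)
    return -math.log(-math.log(u))


def stable_sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def gumbel_sigmoid(logit, tau, rng, hard=True):
    """Perturb a logit with Gumbel noise and squash it with temperature tau.

    With hard=True the soft value is thresholded at 0.5 (an assumption of
    this sketch) to give a binary decision of 0.0 or 1.0.
    """
    g1, g2 = sample_gumbel(rng), sample_gumbel(rng)
    soft = stable_sigmoid((logit + g1 - g2) / tau)
    if hard:
        return 1.0 if soft > 0.5 else 0.0
    return soft


rng = random.Random(0)
object_like = [gumbel_sigmoid(10.0, 1.0, rng) for _ in range(200)]
background_like = [gumbel_sigmoid(-10.0, 1.0, rng) for _ in range(200)]
print(sum(object_like) / 200, sum(background_like) / 200)
```

Strongly positive activations are kept almost always and strongly negative ones are suppressed almost always, while a lower temperature makes the soft output closer to a hard 0/1 decision.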

(2) Embedding for cross-attention feature fusion. To align the difference features with the backbone features at multiple scales, bicubic interpolation is applied to resample with scale factors :

where the scale factor aligns with the ratio of adjacent levels in the backbone feature hierarchy.

After alignment, taking features of any scale as an example, a set of linear transformations is used to produce the query $Q$ from the aligned difference feature $F_d^l$, and the key $K$ and value $V$ from the backbone feature $F_l$:

$$Q = \mathrm{Linear}(F_d^l), \quad K = \mathrm{Linear}(F_l), \quad V = \mathrm{Linear}(F_l) \tag{10}$$

where Linear denotes a fully connected layer.

The cross-attention fusion is then modeled as global feature affinity measurement and weighting:

$$\tilde{F}_l = \mathrm{Softmax}\left(\frac{Q K^{T}}{\sqrt{d}}\right) V \tag{11}$$

where $T$ denotes matrix transpose, and $\sqrt{d}$ is a scaling factor that normalizes the dot products. The resulting enhanced feature $\tilde{F}_l$ captures spatially compensated feature representations of objects.
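The fusion step can be sketched as scaled dot-product cross-attention on flattened features. The sketch below assumes the linear projections have already been applied, uses the feature dimension of the query as the scaling term, and works on small hypothetical lists rather than real feature maps; it illustrates the mechanism and is not the module's actual implementation.

```python
import math


def softmax(row):
    """Softmax over a list, shifted by the maximum for stability."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention.

    queries: N x d list (here: projected difference features)
    keys:    M x d list (here: projected backbone features)
    values:  M x c list (here: projected backbone features)
    Returns an N x c list in which every row is an affinity-weighted
    average of the value rows.
    """
    d = len(queries[0])
    fused = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        fused.append([sum(w * v[c] for w, v in zip(weights, values))
                      for c in range(len(values[0]))])
    return fused


# Hypothetical example: one query that matches the first key strongly.
Q = [[10.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
print(cross_attention(Q, K, V))
```

Because every query position attends to every key position, each output location can draw on backbone features from anywhere in the map, which is the global (pixel-to-pixel) interaction referred to in the text.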

The DFE module has the following advantages: (1) Global spatial awareness: Object-related clues are re-injected into the backbone features through global (pixel-to-pixel) interaction and fusion, restoring degraded object features. (2) Plug-and-play capability: The enhanced features can be seamlessly integrated into existing one-stage and two-stage instance segmentation decoders. They improve the content integrity of small-scale objects and preserve edge continuity for objects with complex structures.

3.4. Objective Function

The objective function of the proposed framework consists of the object reconstruction loss and the instance segmentation loss.

(1) Object Reconstruction Loss $\mathcal{L}_{rec}$: The object reconstruction loss is defined as the pixel-wise mean absolute error (MAE) [26] between the reconstructed image $\hat{I}$ and the input image $I$:

$$\mathcal{L}_{rec} = \left\| \hat{I} - I \right\|_{1} \tag{12}$$

where $\|\cdot\|_{1}$ denotes the $\ell_1$-norm. Importantly, $\mathcal{L}_{rec}$ is computed only within object regions and can be regarded as a variant of deep supervision. Compared with the mean squared error (MSE), the MAE loss is less sensitive to large outliers, which is advantageous for image reconstruction. It yields sharper results and is more tolerant of occasional bright pixels (e.g., light reflections) that would otherwise dominate the gradient of the MSE loss.
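One possible reading of the object-centric reconstruction loss is sketched below. It assumes that a binary object mask (derived from the instance annotations) is available, that the target keeps original pixel values inside objects and is zero elsewhere, and that the absolute error is averaged over all pixels; the function name and the normalization are assumptions of this example rather than details confirmed by the text.

```python
def masked_mae(recon, image, mask):
    """Mean absolute error against an object-masked target.

    recon, image: 2D lists of pixel intensities with the same shape
    mask:         2D list of 0/1 flags, 1 marking object pixels
    The target equals the input pixel inside objects and 0.0 elsewhere.
    """
    total, count = 0.0, 0
    for rec_row, img_row, mask_row in zip(recon, image, mask):
        for rec, pix, flag in zip(rec_row, img_row, mask_row):
            target = pix if flag else 0.0
            total += abs(rec - target)
            count += 1
    return total / count


# Hypothetical 2x2 example with two object pixels.
image = [[0.2, 0.8], [0.5, 0.1]]
mask = [[0, 1], [1, 0]]
recon = [[0.0, 0.6], [0.5, 0.0]]
print(masked_mae(recon, image, mask))
```

Only the under-reconstructed object pixel contributes to the loss in this example, so the gradient signal concentrates on the regions where object information was lost.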

(2) Instance Segmentation Loss $\mathcal{L}_{ins}$: Without loss of generality, the instance segmentation loss consists of a classification loss $\mathcal{L}_{cls}$, a regression loss $\mathcal{L}_{reg}$, and a segmentation loss $\mathcal{L}_{seg}$ [13]. Specifically, $\mathcal{L}_{cls}$ determines the category of each object, $\mathcal{L}_{reg}$ determines the bounding box of each object, and $\mathcal{L}_{seg}$ determines the mask of each object. The overall formulation is:

$$\mathcal{L}_{ins} = \mathcal{L}_{cls}(\hat{c}, c) + \mathcal{L}_{reg}(\hat{b}, b) + \mathcal{L}_{seg}(\hat{m}, m)$$

where $\hat{c}$ and $c$ are the predicted and ground-truth categories, $\hat{b}$ and $b$ are the predicted and ground-truth bounding boxes, and $\hat{m}$ and $m$ are the predicted and ground-truth masks. In practice, $\mathcal{L}_{cls}$ and $\mathcal{L}_{seg}$ are implemented with the cross-entropy loss, while $\mathcal{L}_{reg}$ is computed with the smooth L1 loss [27]. The cross-entropy loss is the standard choice for multi-class classification and pixel-level segmentation, as it penalizes the divergence between predicted and actual distributions, thereby encouraging high confidence for the correct category and suppressing incorrect predictions. The smooth L1 loss, combining the advantages of MAE and MSE, behaves like MSE for small residuals (ensuring stable gradients) and like MAE for large residuals (enhancing robustness to outliers), making it highly effective for bounding box regression.
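The piecewise behavior of the smooth L1 loss can be made concrete with a short sketch. The transition point `beta=1.0` and the averaging over coordinates are common conventions assumed here, not values stated in the text.

```python
def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss averaged over coordinates.

    Residuals smaller than beta are penalized quadratically (MSE-like);
    larger residuals are penalized linearly (MAE-like).
    """
    total = 0.0
    for p, t in zip(pred, target):
        r = abs(p - t)
        if r < beta:
            total += 0.5 * r * r / beta
        else:
            total += r - 0.5 * beta
    return total / len(pred)


# Hypothetical box regression residuals: one small, one large.
print(smooth_l1([0.5], [0.0]))  # quadratic regime
print(smooth_l1([3.0], [0.0]))  # linear regime
```

The two branches meet at a residual of `beta` with the same value and slope, so the gradient stays bounded for poorly localized boxes while remaining smooth near zero.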

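(3) Total Loss: The overall training objective is defined as:

$$\mathcal{L} = \mathcal{L}_{ins} + \lambda \mathcal{L}_{rec} \tag{16}$$

where $\mathcal{L}_{ins}$ and $\mathcal{L}_{rec}$ are the instance segmentation loss and the object reconstruction loss, and $\lambda$ is a weighting factor to balance the two losses. In this work, $\lambda$ is set to 1, assigning equal importance to both reconstruction and segmentation objectives.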

3.5. Training and Testing Pipelines

We define the input, output, and detailed intermediate steps of the proposed framework in Algorithms 1 and 2.

Algorithm 1. Training pipeline of the RE-guided instance segmentation framework

Input:

- Input infrared image
- Ground-truth instance segmentation mask (includes the category, bounding box, and mask for each piece of power distribution equipment)

Output:

- Predicted instance segmentation result (includes the category, bounding box, and mask for each piece of power distribution equipment)
- Reconstructed infrared image

Steps:

1. Extract multi-scale backbone features (Equation (1)).
2. Reconstruct the image from the multi-scale backbone features (Equation (2)).
3. Compute the reconstruction error (difference map) (Equation (6)).
4. Project and filter the difference map to obtain object features (Equations (7) and (8)).
5. Align the object features with the backbone features to get multi-scale difference features (Equation (9)).
6. Fuse the multi-scale backbone and difference features (Equations (10) and (11)).
7. Predict the instance segmentation mask from the multi-scale fused features (Equation (4)).
8. Compute the object reconstruction loss (Equation (12)).
9. Compute the instance segmentation loss (Equations (13)–(15)).
10. Update the network parameters by minimizing the total loss (Equation (16)).

Return: the predicted instance segmentation result and the reconstructed infrared image

Algorithm 2. Testing pipeline of the RE-guided instance segmentation framework

Input:

- Input infrared image

Output:

- Predicted instance segmentation result (includes the category, bounding box, and mask for each piece of power distribution equipment)

Steps:

1. Extract multi-scale backbone features (Equation (1)).
2. Reconstruct the infrared image from the multi-scale backbone features (Equation (2)).
3. Compute the reconstruction error (difference map) (Equation (6)).
4. Project and filter the difference map to obtain object features (Equations (7) and (8)).
5. Align the object features with the backbone features to get multi-scale difference features (Equation (9)).
6. Fuse the multi-scale backbone and difference features (Equations (10) and (11)).
7. Predict the instance segmentation mask from the multi-scale fused features (Equation (4)).

Return: the predicted instance segmentation result

4. Dataset Construction

4.1. Image Acquisition

The UAV platforms used for image acquisition are primarily manufactured by DJI, including models such as the Mavic and Air series. These UAVs are equipped with uncooled vanadium oxide (VOx) microbolometer radiometric infrared cameras, which operate based on passive thermal imaging. The infrared cameras provide a thermal sensitivity of less than 50 mK@f/1.0 and cover a spectral wavelength range of 8∼14 μm. Infrared images are recorded in R-JPEG* format (16-bit) with a spatial resolution of 640 × 512 pixels. The cameras are further fitted with lenses offering a diagonal field of view (DFOV) of 40.6° and a focal length of 13.5 mm.

The temperature effect in infrared imaging may influence subsequent overheating analysis. To address this, the infrared cameras are equipped with hardware-level compensation and an internal calibration mechanism. Specifically, ambient temperature variations affecting the sensor are mitigated through an internal shutter-based non-uniformity correction (NUC). During flight, the cameras periodically initiate a calibration cycle in which the shutter briefly blocks the sensor to measure and correct for thermal drift. In addition, each infrared image contains embedded pixel-wise radiometric temperature metadata, which can be extracted using standard third-party thermal analysis tools. This ensures that the recorded imagery remains reliable for subsequent temperature-related overheating analysis.

With the support of the China Southern Power Grid (CSG), infrared data acquisition is carried out by workers from the local power supply bureau. The power distribution equipment is observed under operating conditions.

4.2. Equipment Categories and Functional Roles

Within distribution systems, each type of equipment fulfills a critical role in ensuring system safety and reliability. Specifically, bushings enable the safe passage of conductors through transformer tanks while providing electrical insulation. Insulators deliver essential mechanical support and electrical isolation for overhead lines and substation equipment. Disconnectors provide visible isolation points in circuits, facilitating safe maintenance operations. Arresters protect equipment from voltage surges induced by lightning strikes or switching events. Breakers interrupt fault currents and isolate faulty sections of the network. Switches offer circuit protection by interrupting overcurrent conditions through fuse operation. Finally, terminals ensure reliable and insulated connections between power cables and associated equipment.

All of the aforementioned power distribution equipment belongs to the distribution network tier. The operating voltage primarily falls within the medium- or low-voltage range (e.g., 10 kV, 35 kV).

4.3. Image Annotation and Extension

(1) Category: Each object is assigned a unique category ID based on a fixed mapping scheme. The dataset covers seven critical categories of power distribution equipment: bushing, insulator, disconnector, arrester, breaker, switch, and terminal.

(2) Instance Mask: Each object is annotated with dense boundary points to form a polygonal mask, and the enclosed region is taken as its corresponding instance-level mask. The number of points ranges from tens to hundreds, depending on the object’s structural complexity and category. Truncated objects are annotated only if the visible portion exceeds 60% of the original object size. All annotations strictly follow the COCO annotation format, with each infrared image paired with a corresponding JSON annotation file. In total, 16,596 infrared images are annotated, containing 126,570 (120K+) instances. Following a predefined 8:2 ratio, the dataset is split into training and testing subsets, containing 13,276 and 3,320 images, respectively, with 107,486 and 23,484 annotated instances.

(3) Annotation Extension for Other Downstream Tasks: For semantic segmentation, annotations are generated without distinguishing individual objects within the same category. For object detection, annotations are defined as the minimum enclosing bounding rectangles of each object, represented by the coordinates of their four vertices.

(4) Annotation Quality Control: To ensure annotation consistency and dataset reliability, the following strategies were adopted:

- Annotation team: Seven professionally trained annotators from a data service company conducted the labeling, having received prior instruction on the specific categories of power distribution equipment.

- Annotation guidelines: A detailed guideline document was prepared, including category definitions, correct/incorrect annotation examples, and rules for handling special cases (e.g., occlusion and truncation).

- Quality supervision: Two senior engineers from China Southern Power Grid supervised the entire process. They continuously reviewed randomly selected subsets from each annotator. Ambiguities were discussed and resolved collectively, with iterative updates to the guidelines.

- Inter-annotator agreement: A randomly selected subset was cross-annotated by different annotators, and the average precision between the two sets of annotations was calculated. A high score quantitatively confirmed annotation consistency.
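The annotation extension in item (3) can be illustrated with a minimal Python sketch that derives a detection box from a polygon mask. It assumes the minimum enclosing rectangle is axis-aligned (the text does not state whether rotated rectangles are used). The helper names `polygon_to_box` and `polygon_area` are hypothetical and are not part of the PDI tooling.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_to_box(polygon: List[Point]) -> List[Point]:
    """Four vertices of the axis-aligned minimum enclosing rectangle.

    Vertices are returned in the order top-left, top-right,
    bottom-right, bottom-left (image coordinates, y pointing down).
    """
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    return [(x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)]


def polygon_area(polygon: List[Point]) -> float:
    """Area enclosed by a simple polygon (shoelace formula)."""
    twice_area = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical polygon mask with four boundary points.
mask = [(10.0, 20.0), (30.0, 25.0), (28.0, 60.0), (12.0, 55.0)]
box = polygon_to_box(mask)
```

The same polygon area could also be used to check the 60% visibility rule for truncated objects, given an estimate of the full object area.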

4.4. Comparison with Existing Infrared Datasets

Table 1 provides a comparative analysis between the proposed infrared dataset (PDI) and existing infrared datasets.

Table 1.

Comparison of existing infrared datasets and the constructed PDI dataset.

(1) Advantages:

- Largest scale of infrared images and instances: The PDI dataset contains 16,596 infrared images and 126,570 annotated instances, making it several times larger than existing infrared datasets in both image and annotation volume. This scale meets the data requirements of modern data-driven methods and supports more accurate instance segmentation of power distribution equipment.

- First UAV-based infrared dataset for power distribution inspection: While most existing infrared datasets focus on transmission lines or substations, PDI is the first to specifically target power distribution inspection using UAVs. Power distribution plays a critical role in delivering electricity to end-users, including households, industrial facilities, and commercial buildings, and is therefore essential for urban power grid reliability. Moreover, PDI includes seven critical categories of power distribution equipment, captured under real-world operating conditions.

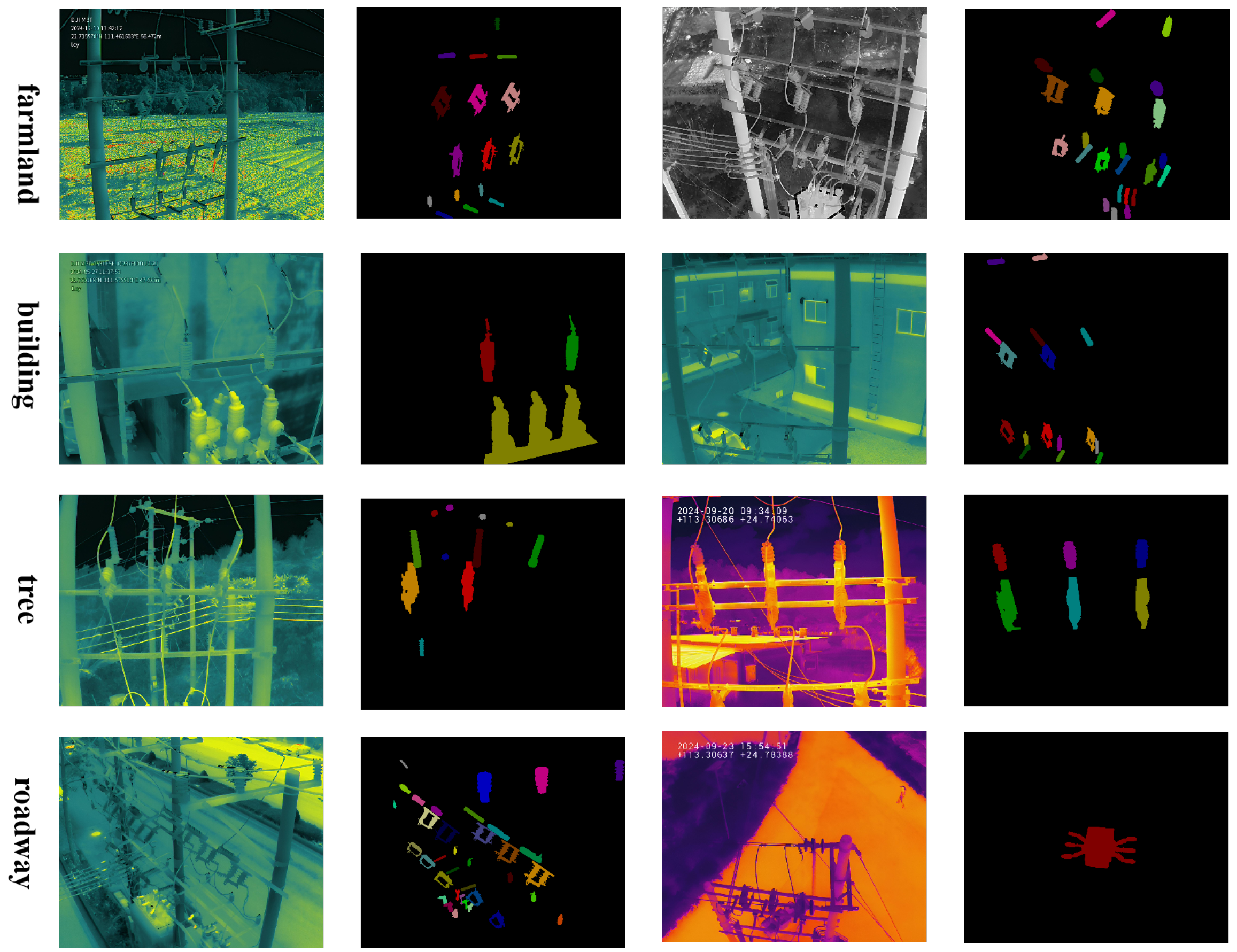

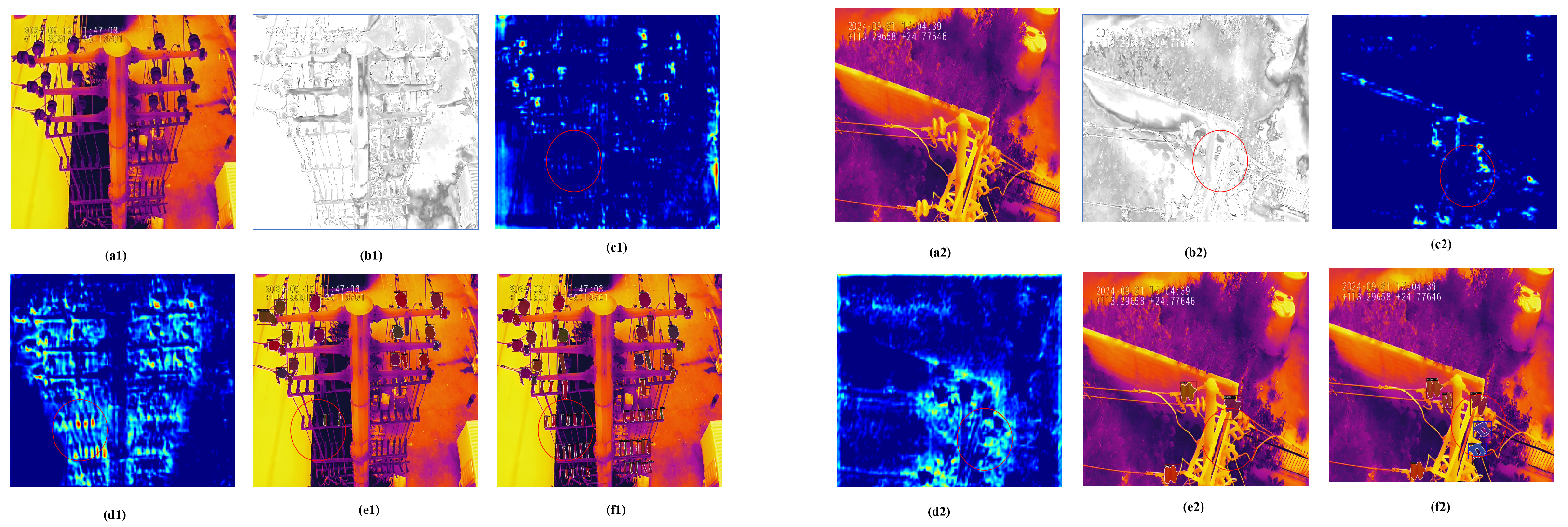

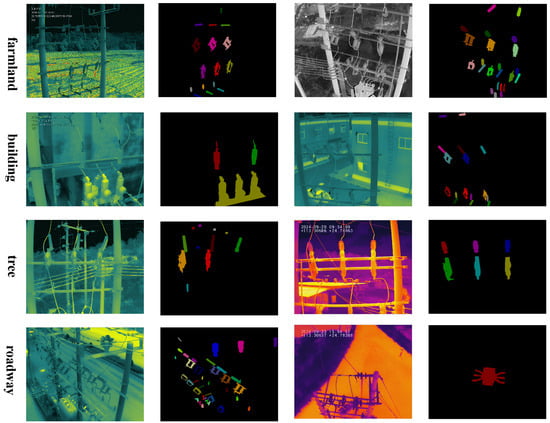

- Rich scenarios and diverse instance patterns: Power distribution equipment exhibits significant variations in appearance. Different viewpoints introduce arbitrary orientations, partial deformations, and occlusions; different flight altitudes lead to drastic scale changes; and different inspection periods result in diverse thermal radiation distributions. In addition, PDI covers hundreds of geographical regions across Guangdong Province (e.g., Yunfu City, Dongguan City), where equipment is embedded in complex backgrounds such as trees, buildings, farmland, and roadways. Representative examples are shown in Figure 5.

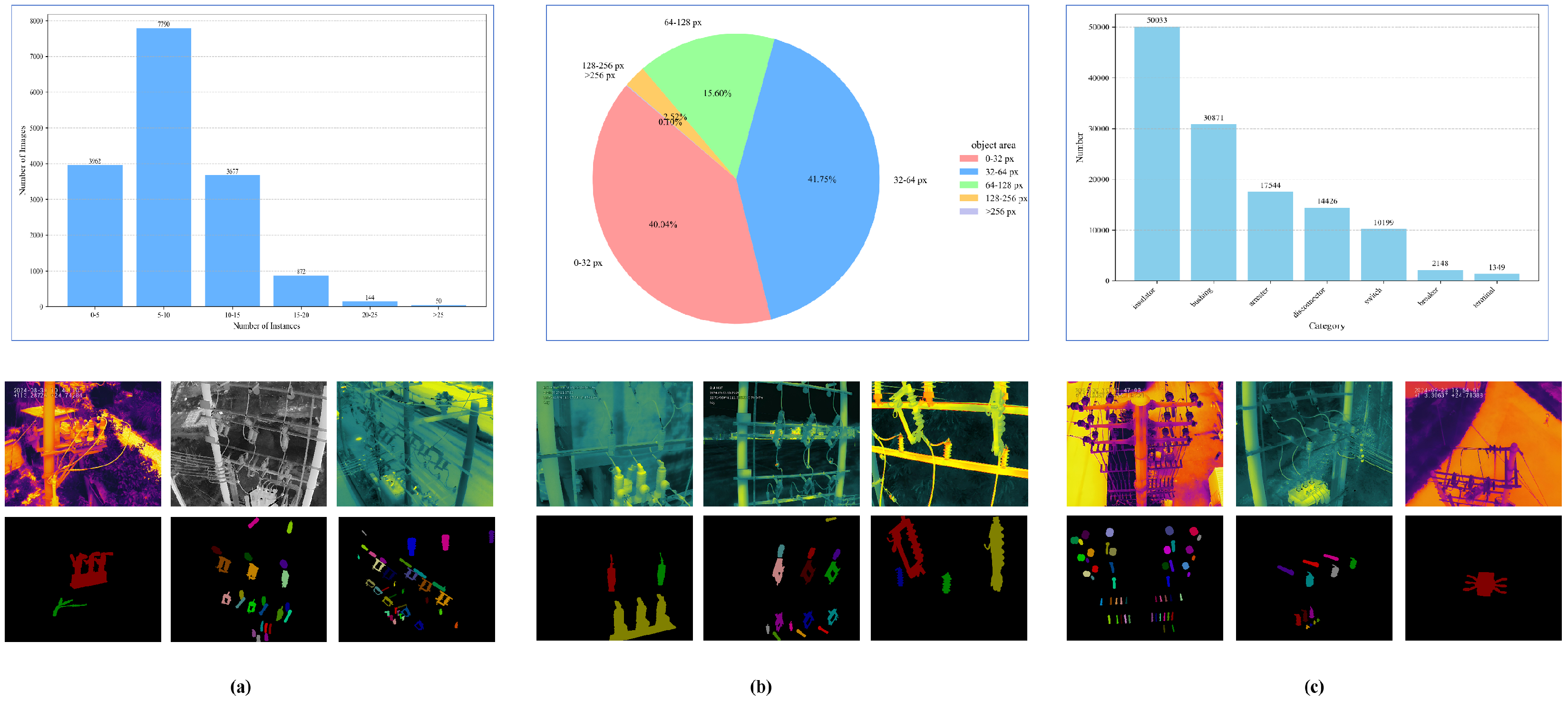

Figure 5. Examples of infrared images and instance masks from the constructed PDI dataset.

(2) Challenges:

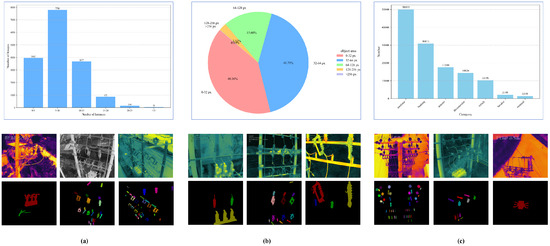

- Distribution imbalance: The number of instances per image varies considerably. Some images contain up to 49 instances, while others include only a single instance. The most common range is 5∼9 instances per image, whereas images with more than 30 instances are rare. This irregular distribution is illustrated in Figure 6a.

Figure 6. The challenges with the corresponding data samples of the constructed PDI dataset. Each power distribution equipment is considered as an instance. (a) Distribution imbalance. The number of instances in each infrared image varies considerably, with some having only 2 instances, while others have dozens of instances. (b) Scale imbalance. There are significant scale differences between instances of the same category and different categories, with some only occupying a few pixels, while others occupy hundreds of pixels. (c) Category imbalance. Instances of some categories appear frequently, while instances of other categories only appear a few times.

- Scale imbalance: Significant scale variations exist both within and across categories. For example, the largest switch measures 401 × 403 pixels (far exceeding the 96 × 96 reference, covering nearly 50% of the image area), while the smallest bushing is only 3 × 5 pixels (well below 32 × 32, less than 1% of the image area). Moreover, even within the same category, scale differences are dramatic: the largest bushing reaches 157 × 368 pixels (about 20% of the image area), whereas the smallest switch is only 7 × 15 pixels (less than 1% of the image area). Across categories, the variation is also evident, ranging from 32 × 32 pixels to 256 × 256 pixels, as shown in Figure 6b.

- Category imbalance: The frequency of categories is highly uneven. The most frequent category, insulator, contains 50,033 instances, while the least frequent, terminal, has only 1,349 instances. This results in a long-tailed distribution with an imbalance ratio exceeding 30×, as illustrated in Figure 6c.
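The scale and category figures quoted in the challenges above can be checked with a short Python sketch. It assumes the 32 × 32 and 96 × 96 references are the usual COCO small/medium/large area thresholds, and it uses the width × height product as a proxy for instance area (COCO itself uses mask area). The function names are hypothetical.

```python
IMAGE_W, IMAGE_H = 640, 512  # PDI image resolution stated in Section 4


def coco_size_bucket(width: int, height: int) -> str:
    """Bucket an instance by area using the COCO 32^2 / 96^2 thresholds."""
    area = width * height
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:
        return "medium"
    return "large"


def image_area_fraction(width: int, height: int) -> float:
    """Fraction of the 640 x 512 image covered by a width x height box."""
    return (width * height) / (IMAGE_W * IMAGE_H)


def imbalance_ratio(head_count: int, tail_count: int) -> float:
    """Ratio between the most and least frequent category counts."""
    return head_count / tail_count


largest_switch = coco_size_bucket(401, 403)   # largest switch in the text
smallest_bushing = coco_size_bucket(3, 5)     # smallest bushing in the text
ratio = imbalance_ratio(50033, 1349)          # insulator vs. terminal
```

Under these assumptions the largest switch covers about 49% of the image and the insulator-to-terminal ratio is roughly 37, which agrees with the "nearly 50%" and "exceeding 30×" statements.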

5. Experiment

5.1. Comparison Methods and Implementation Details

All comparison models are implemented on the MMDetection 3.1.0 platform with Python 3.8.19 and PyTorch 1.12.1. All experiments are conducted on an Ubuntu 22.04 LTS system equipped with an NVIDIA GeForce RTX 4090 GPU (24 GB).

A total of ten state-of-the-art models are evaluated, including six two-stage methods: Mask RCNN [13], Cascade RCNN [19], Mask2Former [34], PowerNet (Mask R-CNN) [8], SCNet [35], and DetectoRS [36]; and four one-stage methods: QueryInst [37], RTMDet [38], SOLOv2 [20], and YOLACT [21]. Each model is initialized with COCO-pretrained weights and trained with the default hyperparameter configurations provided by MMDetection.

The proposed framework (RE) is model-agnostic. To evaluate its compatibility and effectiveness, four representative modern methods are adopted as baselines: Mask RCNN, Cascade RCNN, SOLOv2, and YOLACT. By integrating them with the proposed framework, we obtain RE-Mask RCNN, RE-Cascade RCNN, RE-SOLOv2, and RE-YOLACT.

For optimization, stochastic gradient descent (SGD) with a momentum of 0.9 and a weight decay of 0.0001 is employed. The batch size is set to 8, and training is performed for 100 epochs. The initial learning rate is 0.02 and decays by a factor of 0.1 at the 50th and 75th epochs. Each infrared image is mapped to the RGB color space and normalized to the range [−1, 1]. Data augmentation includes random scaling, random translation, and random horizontal flipping with a probability of 0.5.
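The step schedule and input normalization described above can be sketched in plain Python. This is an illustration only, not the MMDetection configuration used in the experiments. It assumes that the decayed rate takes effect from the milestone epoch onward (epochs counted from 1) and that the RGB-mapped images are 8-bit. Both function names are hypothetical.

```python
def step_lr(epoch: int, base_lr: float = 0.02,
            milestones=(50, 75), gamma: float = 0.1) -> float:
    """Learning rate in effect during a given 1-based epoch.

    The rate is multiplied by `gamma` once for every milestone
    that has been reached.
    """
    reached = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** reached


def normalize_pixel(value: float, max_value: float = 255.0) -> float:
    """Map a pixel value from [0, max_value] to [-1, 1]."""
    return value / max_value * 2.0 - 1.0


# Learning rate at the start, after the first decay, after the second decay.
schedule = [step_lr(e) for e in (1, 50, 75, 100)]
```

With these defaults the rate is 0.02 for epochs 1 to 49, 0.002 for epochs 50 to 74, and 0.0002 for the remaining epochs.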

5.2. Evaluation Metrics

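The standard COCO evaluation protocol [39] defines two primary metrics: detection average precision ($AP^{box}$) and segmentation average precision ($AP^{seg}$), which evaluate object localization and mask quality, respectively. AP is computed as the area under the precision–recall (PR) curve, where precision and recall are given by:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN},$$

where $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives, respectively. A prediction counts as a true positive when its intersection over union (IoU) with a ground-truth instance reaches the chosen threshold: $IoU^{box}$ measures the overlap between predicted and ground-truth bounding boxes, while $IoU^{seg}$ measures the overlap between predicted and ground-truth masks. To ensure comprehensive evaluation, COCO averages AP across multiple IoU thresholds (0.5:0.95 with a step of 0.05).

In addition to AP, the F1-score is employed as a complementary metric that balances precision and recall:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$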

Unlike AP, which integrates performance over all recall levels, the F1-score is computed at a specific operating point (e.g., IoU = 0.5). This makes it particularly useful in class-imbalanced scenarios, where a single balanced indicator of detection accuracy and completeness is needed.
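The quantities used in this subsection can be made concrete with a small Python sketch. It computes precision, recall, and F1 from raw counts, and IoU for boxes and masks. The box format `(x1, y1, x2, y2)` and the representation of a mask as a set of pixel coordinates are assumptions made for illustration. This is not the COCO evaluation code.

```python
from typing import Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall, and F1 from true/false positive and negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mask_iou(a: Set[Tuple[int, int]], b: Set[Tuple[int, int]]) -> float:
    """Intersection over union of two masks given as sets of (row, col) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


# The ten IoU thresholds 0.50, 0.55, ..., 0.95 over which COCO averages AP.
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

A prediction would be matched at each threshold in `COCO_IOU_THRESHOLDS`, whereas the F1-score is evaluated at a single threshold such as 0.5.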

5.3. Ablation Studies

To validate the effectiveness of the proposed framework, Mask RCNN [13] is adopted as the baseline, and the experimental results on the PDI dataset are presented in Table 2.

Table 2.

Performance of ablation studies. ORD: object reconstruction decoder, DFE: difference feature enhancement module, F: filtration in the DFE module. The symbol ✓ denotes the selected item.

5.3.1. Effect of ORD

The ORD is applied independently to generate reconstructed images, operating in parallel with the ISD. As shown in rows 1 and 2 of Table 2, RE-Mask RCNN achieves performance gains of 1.12% ($AP^{box}$: 53.50 → 54.10) and 1.07% ($AP^{seg}$: 46.80 → 47.30) compared to the original Mask RCNN. This improvement is attributed to the ORD reconstructing object regions, thereby enhancing the model’s ability to distinguish foreground objects from the background.
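The percentages reported in the ablation study appear to be relative gains over the baseline rather than absolute AP points. The following Python sketch, with a hypothetical helper `relative_gain`, reproduces the two figures quoted above from the reported before and after values.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Relative improvement over the baseline, in percent."""
    return (improved - baseline) / baseline * 100.0


# Values quoted for rows 1 and 2 of Table 2.
gain_first = round(relative_gain(53.50, 54.10), 2)   # 53.50 -> 54.10
gain_second = round(relative_gain(46.80, 47.30), 2)  # 46.80 -> 47.30
```

Read this way, a gain of 0.60 AP points on a baseline of 53.50 corresponds to 1.12%, and 0.50 points on 46.80 corresponds to 1.07%.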

5.3.2. Effect of DFE Module

The DFE module is applied in conjunction with ORD. As shown in rows 1 and 3 of Table 2, $AP^{seg}$ of RE-Mask RCNN improves by 0.43% compared to the baseline. By embedding the difference map as object features into the model, the DFE module strengthens the spatial features of objects. However, $AP^{box}$ decreases by 0.57%, likely due to background noise introduced during feature compensation.

5.3.3. Effect of Filtration in DFE Module

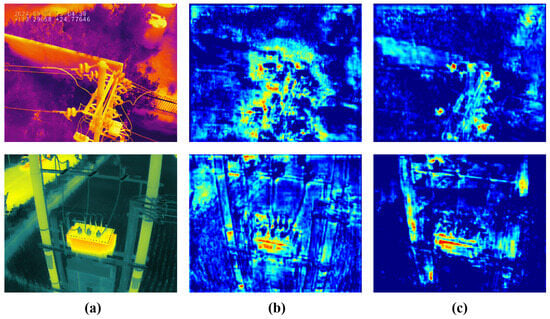

The pixel value differences between the reconstructed image and the input image produce varying degrees of activation in the difference image, and much of this activation is noise that can be confused with objects. To address this, a Gumbel-sigmoid function is employed for binary filtering while maintaining differentiability. As shown in rows 1 and 4 of Table 2, RE-Mask RCNN achieves performance gains of 3.18% ($AP^{box}$: 53.50 → 55.20) and 2.56% ($AP^{seg}$: 46.80 → 48.00) compared to the original Mask RCNN. As illustrated in Figure 7, the Gumbel-sigmoid function effectively suppresses incorrect activations caused by noise, allowing the model to preserve object-related features and thereby enhancing the reliability of object features.

Figure 7.

Visualization of filtration. (a) Input infrared image, (b,c) difference feature maps before and after filtration in the DFE module.
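To make the filtration step more tangible, the following Python sketch shows the forward pass of a generic Gumbel-sigmoid gate applied to a small difference map. It is a common textbook formulation, not necessarily the exact one used in the DFE module. The temperature `tau`, the 0.5 threshold, and all function names are assumptions. In an autograd framework the hard mask would typically be combined with a straight-through estimator so that gradients flow through the soft value; that part is not shown here.

```python
import math
import random


def _sample_gumbel(rng) -> float:
    """Draw one sample from the standard Gumbel(0, 1) distribution."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep log() finite
    return -math.log(-math.log(u))


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gumbel_sigmoid(logit: float, tau: float = 1.0, hard: bool = True, rng=random) -> float:
    """Perturb a logit with Gumbel noise, squash it, and optionally binarize."""
    noisy = (logit + _sample_gumbel(rng) - _sample_gumbel(rng)) / tau
    soft = _sigmoid(noisy)
    if hard:
        return 1.0 if soft > 0.5 else 0.0
    return soft


def filter_difference_map(diff_logits, tau: float = 1.0, rng=random):
    """Binary keep/drop mask for a 2D list of difference-map logits."""
    return [[gumbel_sigmoid(v, tau, True, rng) for v in row] for row in diff_logits]


# Strongly positive logits are kept and strongly negative ones are dropped.
mask = filter_difference_map([[50.0, -50.0]], rng=random.Random(0))
```

Multiplying the difference features by such a mask keeps confident activations and zeroes out weak, noisy ones.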

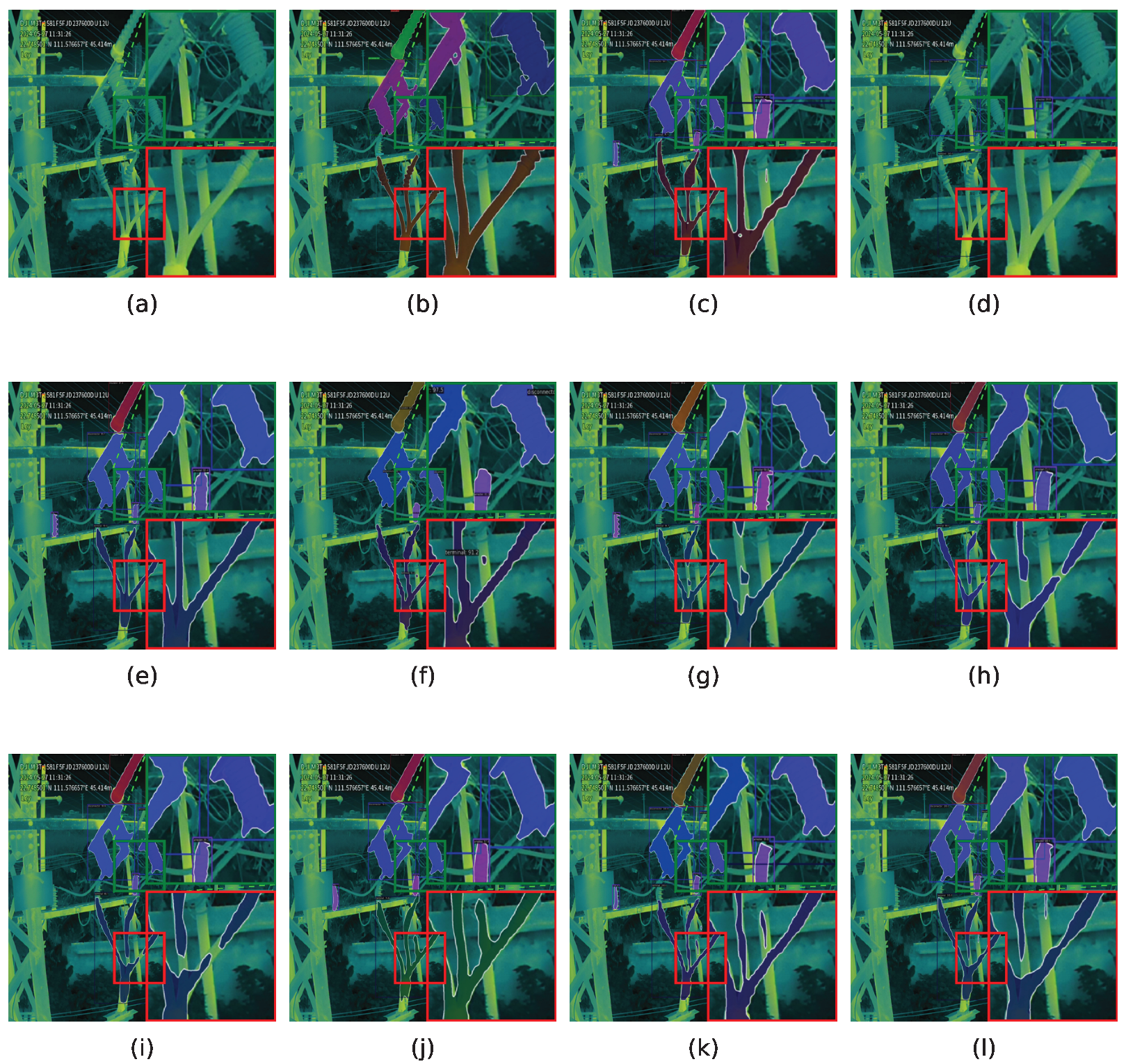

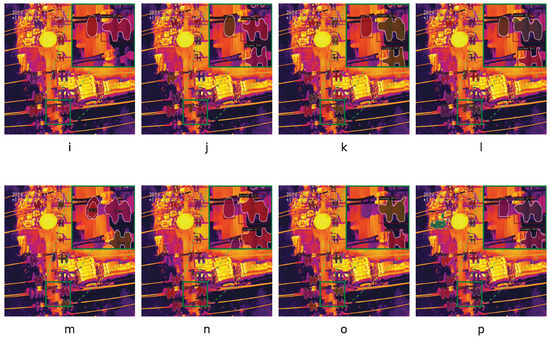

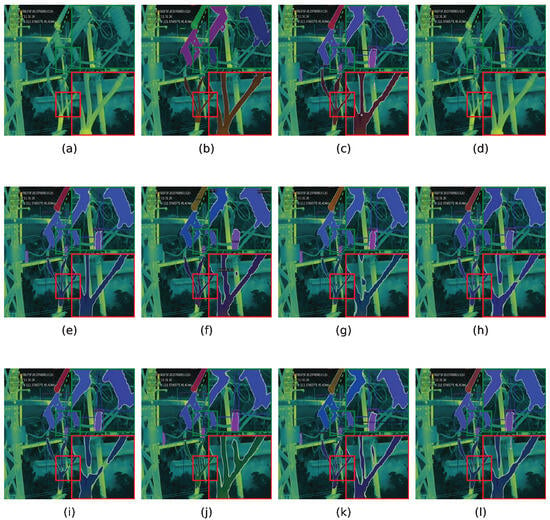

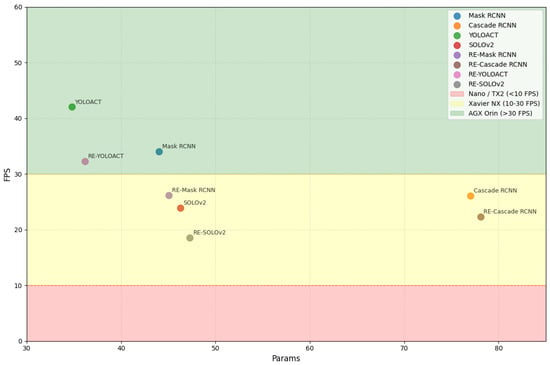

5.4. Qualitative Results

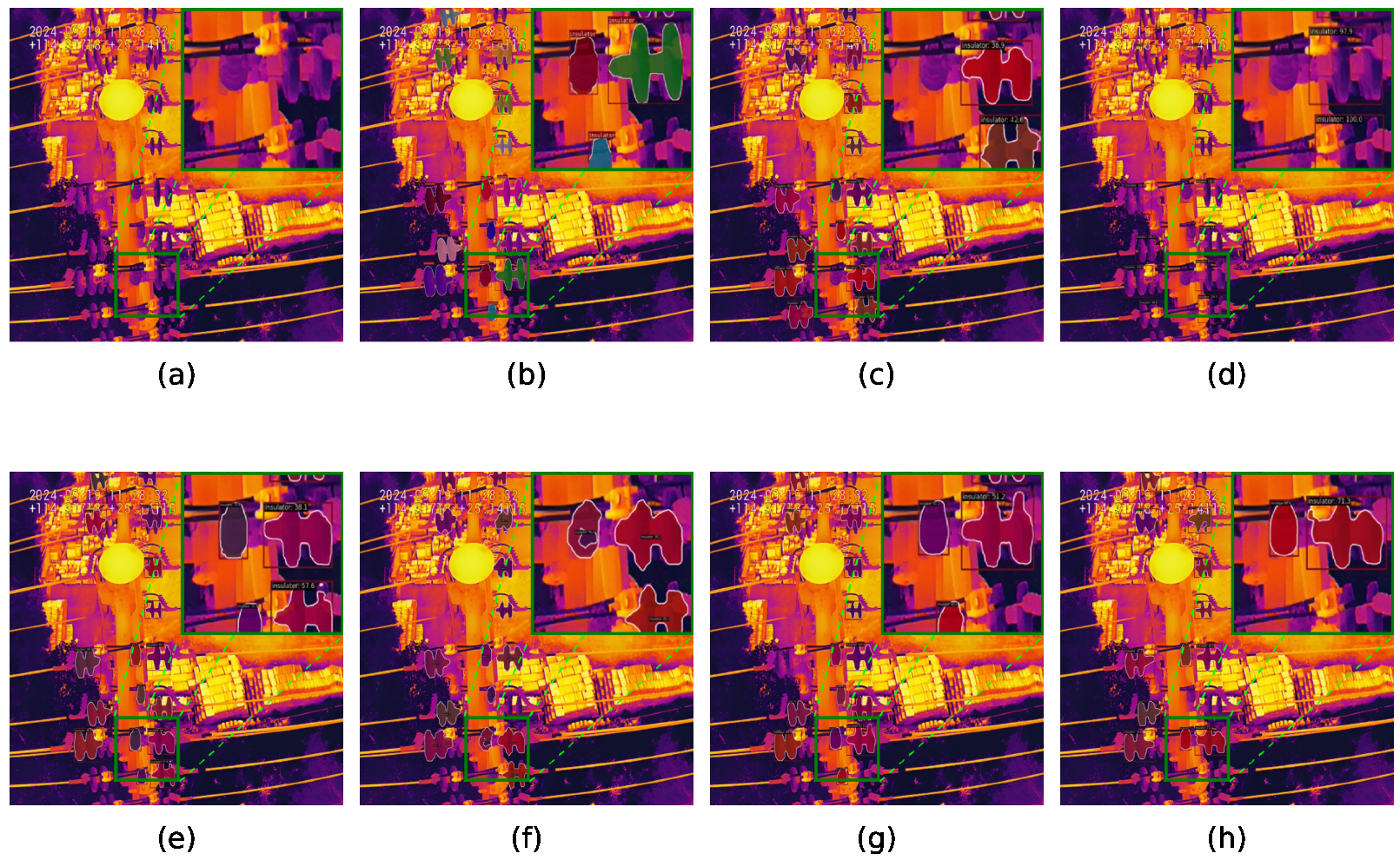

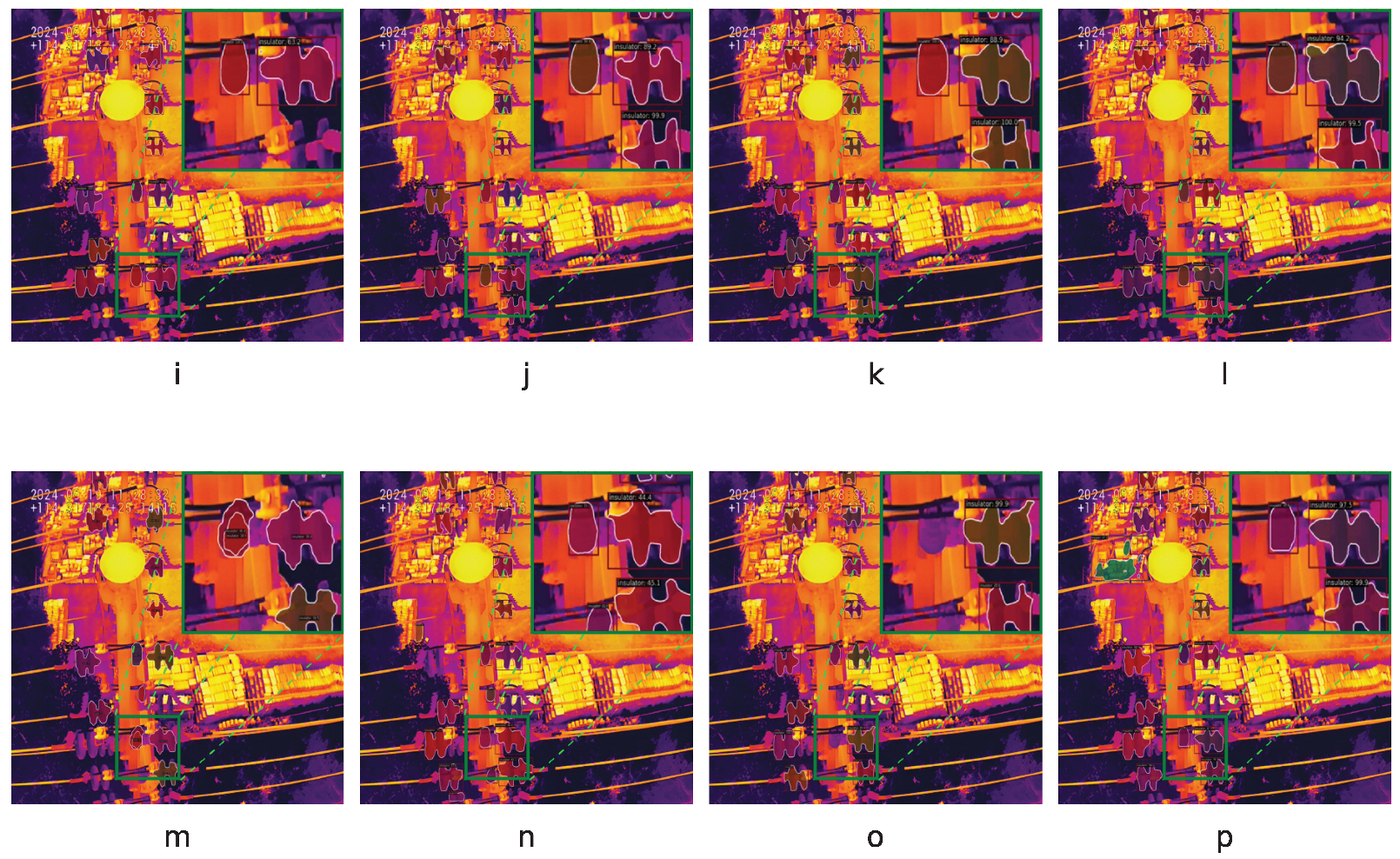

The instance segmentation results of different methods are shown in Figure 8 and Figure 9, with highlighted regions in the upper-right and lower-right corners. Cascade RCNN [19] employs multi-stage threshold refinement to reduce missed detections of small-scale insulators, while RTMDet [38] leverages dilated convolution to preserve object context, improving recognition of large-scale disconnectors. However, both methods struggle with precise edge localization. QueryInst [37] balances detection of small-scale insulators and large-scale disconnectors via object-level querying, but exhibits limitations in low-contrast scenarios, often failing to delineate edges clearly and occasionally incorporating background noise. DetectoRS [36] sometimes misrecognizes terminals, SOLOv2 [20] may detect insulators repeatedly, and Mask RCNN [13] often fails to localize more than half of the terminals. In contrast, RE-Cascade RCNN accurately captures small-scale insulators with high confidence, and RE-SOLOv2 preserves the structural integrity of complex disconnectors, producing smooth and complete edges. Compared with the ground truth, the proposed framework consistently improves both object recognition and localization by suppressing information loss and enhancing the spatial features of objects.

Figure 8.

Instance segmentation results of insulators on the PDI dataset. (a) Input, (b) ground truth, (c) QueryInst, (d) DetectoRS, (e) Mask2Former, (f) RTMDet, (g) SCNet, (h) PowerNet, (i) Mask RCNN, (j) RE-Mask RCNN, (k) Cascade RCNN, (l) RE-Cascade RCNN, (m) SOLOv2, (n) RE-SOLOv2, (o) YOLACT, (p) RE-YOLACT.

Figure 9.

Instance segmentation results of disconnectors and terminals on the PDI dataset. (a) Input, (b) ground truth, (c) QueryInst, (d) DetectoRS, (e) Mask2Former, (f) RTMDet, (g) SCNet, (h) PowerNet, (i) Mask RCNN, (j) RE-Mask RCNN, (k) Cascade RCNN, (l) RE-Cascade RCNN, (m) SOLOv2, (n) RE-SOLOv2, (o) YOLACT, (p) RE-YOLACT.

5.5. Quantitative Results

The instance segmentation performance of different methods is reported in Table 3. The proposed framework demonstrates consistent improvements across both overall and single-category metrics. For example, RE-Cascade RCNN achieves $AP^{box}$ of 57.70 and $AP^{seg}$ of 47.70, surpassing the original Cascade RCNN by 3.78% and 1.71%, respectively. Similarly, RE-YOLACT improves $AP^{box}$ to 52.50 and $AP^{seg}$ to 43.10, outperforming the original YOLACT by 4.37% and 5.38%, respectively. For small-scale bushings, RE-Mask RCNN shows gains of 2.00% in $AP^{box}$ and 2.86% in $AP^{seg}$ compared to Mask RCNN. For insulators with complex edges, RE-Mask RCNN achieves improvements of 1.79% in $AP^{box}$ and 1.70% in $AP^{seg}$. These results indicate that the proposed framework effectively distinguishes diverse object categories and maintains high accuracy under challenging conditions, ranking first across multiple scenarios and demonstrating robust generalization capability.

Table 3.

$AP^{box}$ and $AP^{seg}$ performance evaluation of different methods on the constructed PDI dataset. The left value is $AP^{box}$ and the right value is $AP^{seg}$.

5.6. Extended Experiments

5.6.1. Category Imbalance

Category imbalance is a key challenge in the PDI dataset, affecting model performance. To address this, two common strategies are employed: category-balanced sampling (CBS) and loss re-weighting (LRW). CBS over-samples images from tail categories, such as terminal and arrester, often combined with data augmentation (e.g., random affine transformations) to expand diversity. LRW modifies the classification loss, for instance using focal loss [40] or weighting inversely proportional to category frequency, to emphasize tail categories without adding data.
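The two strategies can be sketched in a few lines of Python. The example below computes inverse-frequency weights, which could serve either as loss weights (LRW) or as relative sampling probabilities (CBS), and evaluates the standard focal loss for a single prediction. Only the insulator and terminal counts come from the dataset description. The normalization to a mean weight of 1, the defaults `gamma=2.0` and `alpha=1.0`, and the function names are assumptions.

```python
import math
from typing import Dict


def inverse_frequency_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Weights proportional to 1 / count, rescaled so that they average to 1."""
    inverse = {name: 1.0 / n for name, n in counts.items()}
    scale = len(inverse) / sum(inverse.values())
    return {name: w * scale for name, w in inverse.items()}


def focal_loss(p_true: float, gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Focal loss for the probability assigned to the correct class.

    With gamma = 0 and alpha = 1 this reduces to the usual cross-entropy.
    """
    p = min(max(p_true, 1e-12), 1.0)  # avoid log(0)
    return -alpha * (1.0 - p) ** gamma * math.log(p)


# Head and tail category counts reported for the PDI dataset.
weights = inverse_frequency_weights({"insulator": 50033, "terminal": 1349})
```

With these two categories the terminal weight is about 37 times the insulator weight, which mirrors the imbalance ratio of the dataset. Focal loss instead down-weights easy examples regardless of category, so a confident correct prediction contributes far less than an uncertain one.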

Experiments are conducted using Mask RCNN [13] and RE-Mask RCNN, with results summarized in Table 4. For tail categories, CBS improves terminal $AP^{seg}$ from 11.70 to 13.50 and arrester from 44.60 to 45.10, demonstrating enhanced localization without affecting major categories. LRW primarily boosts recognition, with terminal $AP^{box}$ rising from 41.00 to 42.40 and $AP^{seg}$ from 11.70 to 12.90, reflecting increased classification confidence.

Table 4.

Comparison between two common category imbalance strategies. CBS: category-balanced sampling, LRW: loss re-weighting. The left value is $AP^{box}$ and the right value is $AP^{seg}$.

Overall, RE-Mask RCNN with CBS achieves 55.80 $AP^{box}$ and 48.40 $AP^{seg}$, while RE-Mask RCNN with LRW attains 55.40 $AP^{box}$ and 48.30 $AP^{seg}$, both exceeding the baseline RE-Mask RCNN. These results indicate that CBS and LRW effectively mitigate tail category deficiencies, and RE-Mask RCNN consistently benefits from these strategies, providing a practical solution for class imbalance in power equipment instance segmentation.

5.6.2. Scale Imbalance

The prevalence of small-scale objects is the primary manifestation of scale imbalance in the PDI dataset, affecting both inter-category size variation (e.g., switches are generally larger than bushings) and extreme intra-category variation (the largest and smallest bushings can differ by orders of magnitude). The proposed framework is specifically designed to address this challenge, particularly improving detection for small-scale objects.

Analysis of F1-score and recall metrics in Table 5 demonstrates that RE-based methods consistently enhance recall across most categories, reflecting increased sensitivity to positive samples. For instance, RE-Mask RCNN achieves an average recall of 84.33, outperforming the baseline Mask RCNN. Notable gains occur in terminal (+3.28) and arrester (+1.31), indicating improved detection of challenging objects. F1-score improvements are generally more modest but still positive; RE-Mask RCNN raises the F1-score for breaker from 92.08 to 92.63 and for arrester from 74.22 to 74.55, demonstrating better balance between precision and recall. RE-Cascade RCNN shows an average recall increase of 2.70, with a substantial gain of 4.80 for switch, though its F1-score slightly decreases from 81.80 to 81.70, suggesting a minor precision-recall trade-off. In contrast, RE-YOLACT and RE-SOLOv2 exhibit marginal improvements, with categories such as insulator and disconnector showing near-baseline performance, implying limited gains from the framework in these architectures. Overall, the RE-based framework effectively enhances detection and feature representation for small-scale and challenging objects.

Table 5.

F1-score and recall performance evaluation of different methods on the constructed PDI dataset. The left value is the recall and the right value is the F1-score.

5.6.3. Power Transmission Inspection

To verify the versatility and effectiveness of the proposed framework, experiments are conducted on the publicly available HRSeg dataset [8], which contains 7 categories of transmission equipment, including composite insulator, suspension clamp, C-style clamp, strain clamp, glass insulator, parallel groove clamp, and T-style clamp. The results are summarized in Table 6.

Table 6.

$AP^{box}$ and $AP^{seg}$ performance evaluation of different methods on the public HRSeg dataset. The left value is $AP^{box}$ and the right value is $AP^{seg}$.

RE-Mask RCNN improves $AP^{box}$ and $AP^{seg}$ by 2.80 and 2.10 points, respectively, compared to Mask RCNN (51.70 vs. 48.90 and 41.60 vs. 39.50). Similarly, RE-YOLACT achieves gains of 1.30 in $AP^{box}$ and 0.80 in $AP^{seg}$ (44.60 vs. 43.30 and 31.40 vs. 30.60). These improvements demonstrate that the proposed framework consistently enhances both single-category and full-category performance.

Overall, the results indicate that the framework is applicable to power transmission inspection and delivers favorable performance on both the publicly available HRSeg dataset and the constructed PDI dataset.

6. Discussion

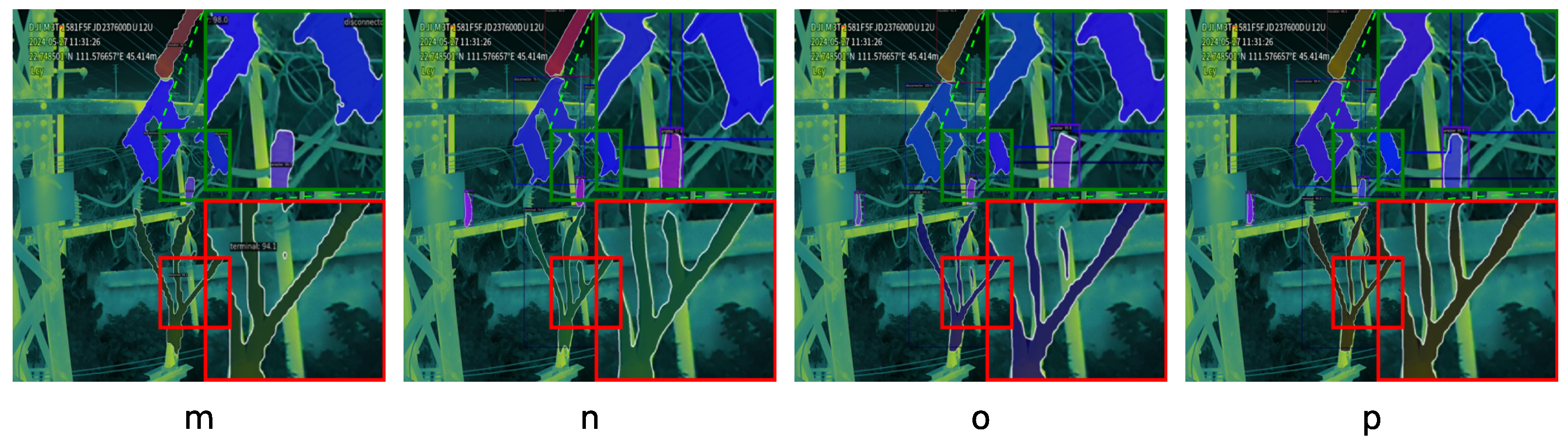

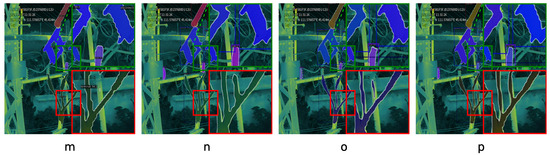

6.1. Framework Complexity Analysis

Table 7 reports the average running time (ART) and the number of parameters (Params) of different methods on the PDI dataset, with an input infrared image resolution of 640 × 512. The proposed framework is model-agnostic, allowing seamless integration into both two-stage and one-stage architectures. Although the ORD and DFE modules increase ART and Params compared to the baselines, the resulting accuracy improvements justify this overhead.

Table 7.

Analysis of model complexity. ART: the average running time of each image, Params: the number of parameters, FPS: the frames per second.

For example, RE-Mask RCNN adds 8.80 ms in ART and 1.04M parameters relative to Mask RCNN, while achieving gains of 3.18% and 2.56% in $AP^{box}$ and $AP^{seg}$, respectively. This trade-off highlights the advantage of enhancing object features without excessively compromising efficiency. Moreover, the framework maintains competitive ART and Params compared to other strong baselines. The efficiency is due to the linear time and space complexity of ORD and DFE, keeping computational costs manageable even in resource-constrained environments.

Furthermore, we contextualize Params and FPS relative to typical UAV onboard computing systems (e.g., NVIDIA Jetson platforms), as shown in Figure 10. Each model is positioned according to computational cost and achievable inference speed from Table 7. The background regions indicate the suitability ranges of different Jetson platforms: red (<10 FPS) for Jetson Nano/TX2, yellow (10–30 FPS) for Jetson Xavier NX, and green (>30 FPS) for Jetson AGX Orin.
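The banding described above can be expressed as a small Python sketch. It assumes that FPS is the reciprocal of the per-image ART measured in milliseconds and that a value of exactly 10 or 30 FPS falls into the middle band; the text does not specify either point. The function names are hypothetical.

```python
def fps_from_art(art_ms: float) -> float:
    """Frames per second implied by an average running time in milliseconds."""
    return 1000.0 / art_ms


def jetson_tier(fps: float) -> str:
    """Map an inference speed to the platform band used in Figure 10."""
    if fps < 10.0:
        return "Jetson Nano/TX2"      # red band, below 10 FPS
    if fps <= 30.0:
        return "Jetson Xavier NX"     # yellow band, 10 to 30 FPS
    return "Jetson AGX Orin"          # green band, above 30 FPS


# Hypothetical model with an average running time of 50 ms per image.
tier = jetson_tier(fps_from_art(50.0))
```

A model that needs 50 ms per image runs at 20 FPS and therefore falls into the yellow band under these assumptions.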

Figure 10.

Instance segmentation models for NVIDIA Jetson platform suitability.

YOLACT and RE-YOLACT occupy the upper-left region, providing a favorable trade-off between Params and FPS, making them suitable for real-time deployment on mid-power platforms such as Xavier NX. In contrast, heavier models like Cascade RCNN and RE-Cascade RCNN lie toward the lower right, requiring high-power platforms like AGX Orin for near real-time performance. These results are obtained without model pruning, quantization, or knowledge distillation, suggesting further potential for deployment on low-power platforms via model compression and optimization without substantial accuracy loss.

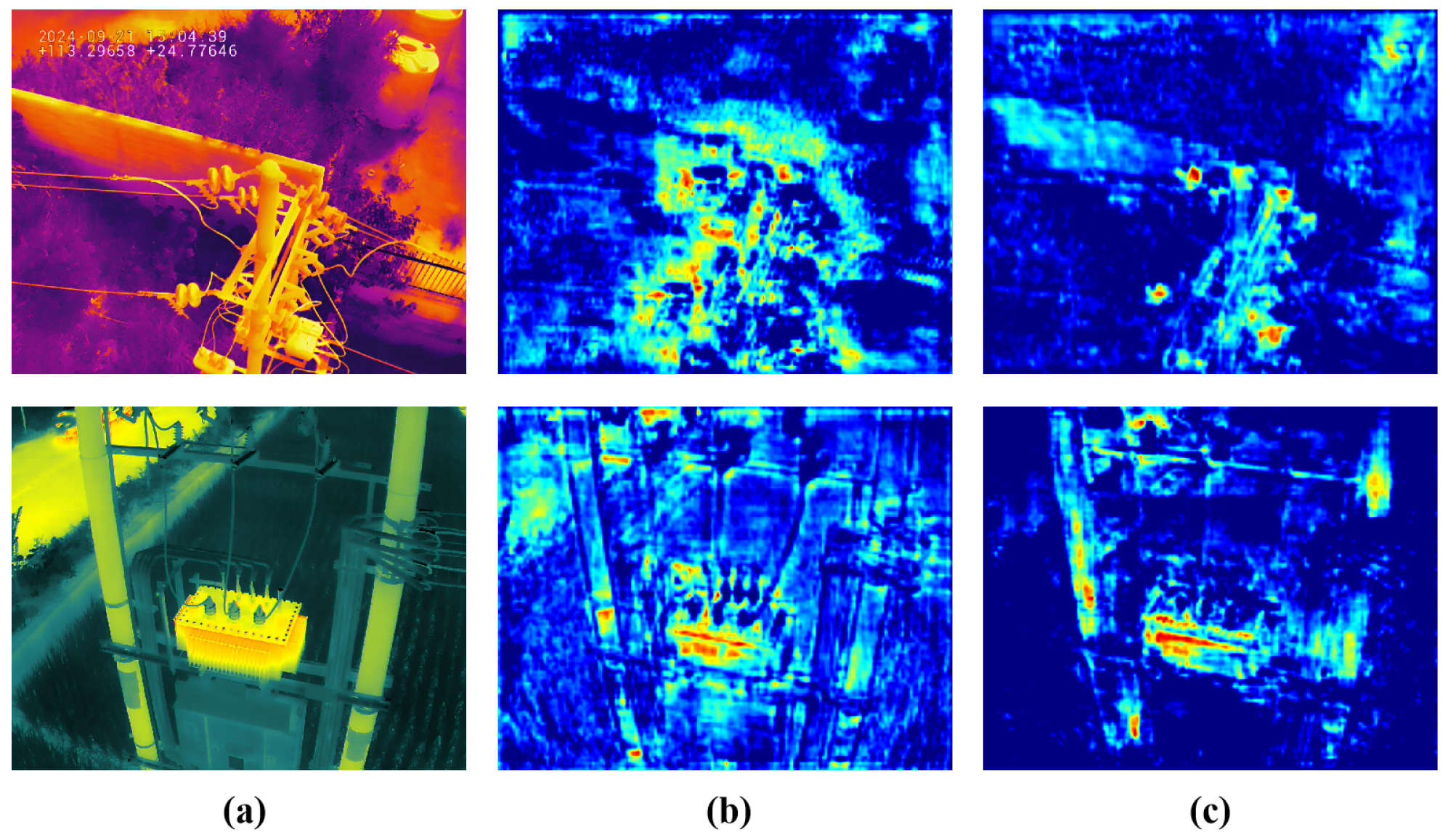

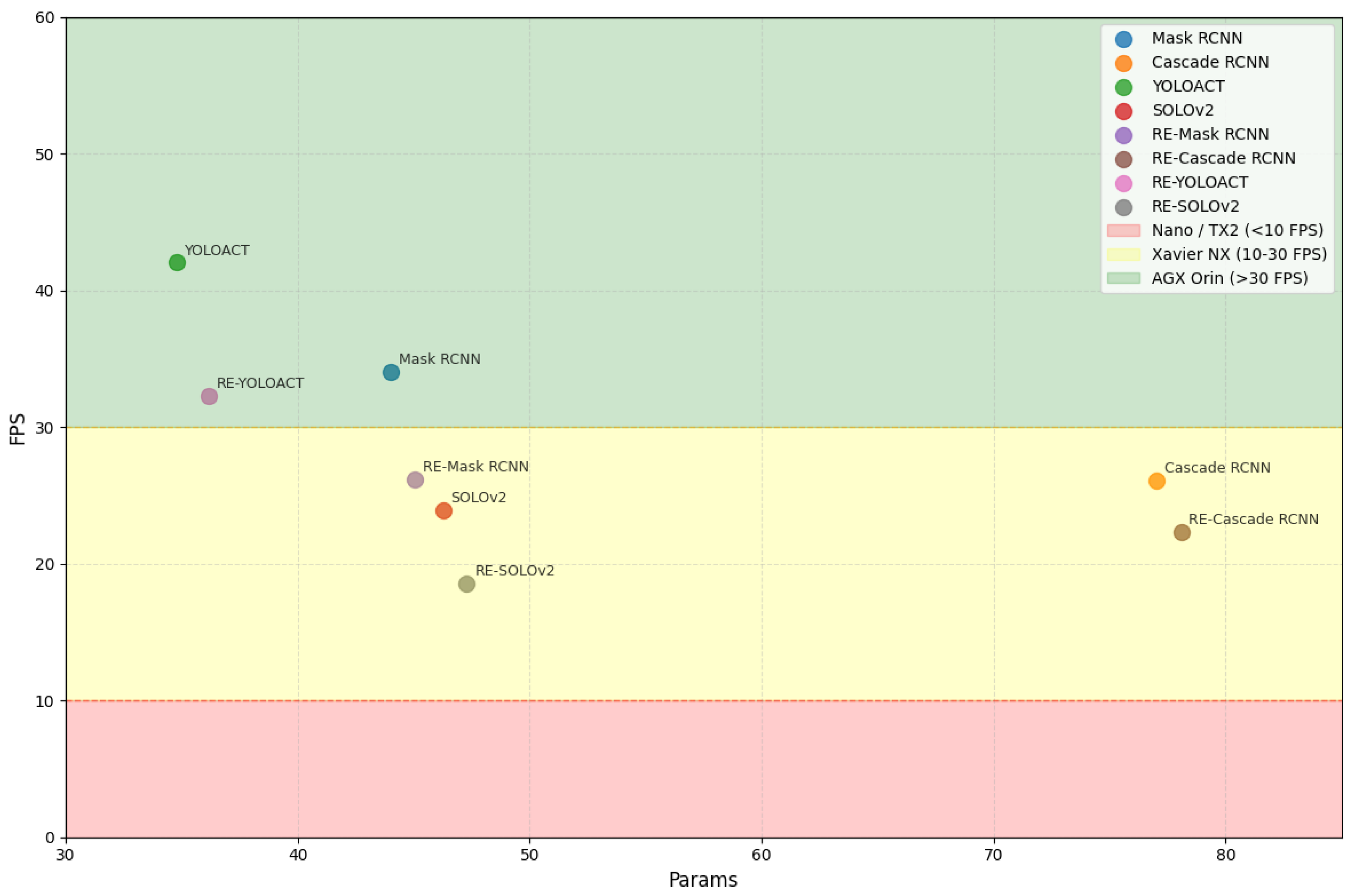

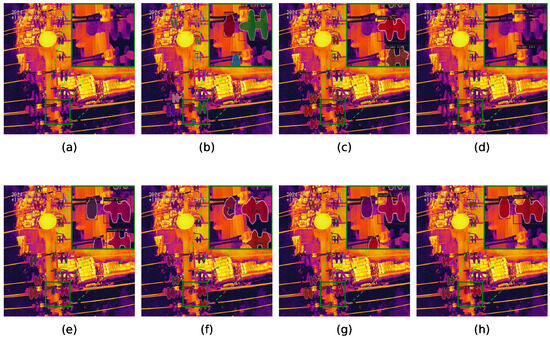

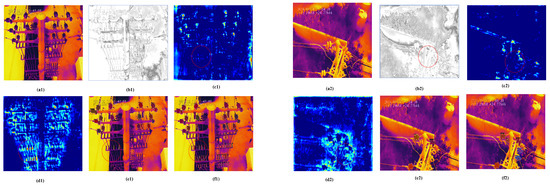

6.2. Visualization of the ORD and DFE Module

Figure 11 visualizes the effects of the ORD and DFE modules, with Mask RCNN [13] as the baseline. Two representative challenges are highlighted: small-scale insulators on the left suffer from content compression, while disconnectors with complex structures on the right exhibit severe edge fragmentation. These issues lead to sparse backbone features, resulting in collapsed content for small insulators and fragmented edges for disconnectors. The difference image between the ORD reconstruction and the input clearly indicates the location and degree of information loss, with high-error regions corresponding to small objects and structural discontinuities. By integrating the ORD and DFE modules, object features are progressively reconstructed and refined: small insulator content is enhanced, and fractured edges of disconnectors are connected. Corresponding instance segmentation results demonstrate that the proposed framework achieves more accurate and structurally consistent predictions than the baseline.

Figure 11.

Visualization of the ORD and DFE module. (a1,a2) Input infrared image, (b1,b2) the difference image between the ORD result and the input infrared image, (c1–d2) feature maps before and after the DFE module, (e1–f2) instance masks of the baseline and the proposed method. Mask RCNN is selected as the baseline. Red circles highlight key regions in the two examples, which are labeled 1 and 2, respectively.

6.3. Application Discussion

The proposed framework can be applied in two critical scenarios for preventing grid outages:

- High-Accuracy Preventive Maintenance Analytics: Designed for offline processing of UAV-captured images, this scenario prioritizes maximum accuracy over computational cost. Accurate instance segmentation of all power distribution equipment serves as the foundation for subsequent automated temperature diagnosis, supporting reliable preventive maintenance.

- Real-Time Onboard Screening: Although the current framework emphasizes accuracy, its model-agnostic nature allows integration with lightweight models (e.g., YOLACT). Future work will focus on pruning and quantization for deployment on embedded platforms (e.g., NVIDIA Jetson), enabling UAVs to perform real-time preliminary screening and generate immediate alerts for overheating. As shown in Table 7, current models are unoptimized, indicating additional potential for low-power real-time deployment.

7. Conclusions

This paper addresses the information loss that objects suffer during down-sampling and the resulting limitations of existing methods. An object reconstruction decoder is first proposed to replay object features by reconstructing the object regions. A difference feature enhancement module is then proposed to adaptively compensate for the object features. Together, they improve the spatial feature representation of objects and show strong generalization and superiority on the constructed PDI dataset. PDI is the first infrared dataset oriented to power distribution inspection. It offers large data volume, rich scenarios, and diverse object patterns, and it poses the challenges of scale imbalance, distribution imbalance, and category imbalance, which makes it a useful benchmark for real-world power distribution inspection. The proposed framework provides an intuitive insight into the problem of scale imbalance. However, due to the imaging characteristics of infrared images, objects generally have low contrast and are easily confused with the background. In future work, we will focus on the following directions:

- Multi-modal fusion: Infrared images often exhibit low contrast, limiting object discrimination. Fusing complementary information from visible images, which provide rich spatial textures and fine-grained details, can significantly improve detection and localization accuracy, particularly in complex scenarios.

- Semi-supervised and self-supervised learning: Annotating instance-level masks in infrared images is costly, restricting large-scale labeled datasets. Semi-supervised or self-supervised learning can exploit abundant unlabeled data, serving as effective pre-training strategies to learn generalizable and discriminative features while reducing reliance on labeled samples.

- Resource-efficient deployment: UAV-based applications require efficient processing under strict resource constraints. Future work will explore model compression and acceleration techniques, including pruning, quantization, knowledge distillation, and lightweight architectures, to enable real-world deployment while maintaining performance in dynamic aerial environments.

Author Contributions

Resources and data curation, J.L. and J.Z.; writing—original draft preparation, Y.S.; writing—review and editing, B.S.; visualization, J.L.; supervision, J.L. and B.S.; project administration and funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the China Southern Power Grid Technology Project GDKJXM20231441.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We sincerely thank all anonymous reviewers for their valuable comments. We also thank Jiaxin Li for his suggestions on our work.

Conflicts of Interest

Authors Jinbin Luo and Jian Zhang were employed by the CSG Guangdong Power Grid Corporation company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Yin, Y.; Duan, Y.; Wang, X.; Han, S.; Zhou, C. YOLOv5s-TC: An improved intelligent model for insulator fault detection based on YOLOv5s. Sensors 2025, 25, 4893. [Google Scholar] [CrossRef]

- Ma, J.; Qian, K.; Zhang, X.; Ma, X. Weakly supervised instance segmentation of electrical equipment based on RGB-T automatic annotation. IEEE Trans. Instrum. Meas. 2020, 69, 9720–9731. [Google Scholar] [CrossRef]

- Wang, F.; Guo, Y.; Li, C.; Lu, A.; Ding, Z.; Tang, J.; Luo, B. Electrical thermal image semantic segmentation: Large-scale dataset and baseline. IEEE Trans. Instrum. Meas. 2022, 71, 1–13. [Google Scholar] [CrossRef]

- Xu, C.; Li, Q.; Jiang, X.; Yu, D.; Zhou, Y. Dual-space graph-based interaction network for RGB-thermal semantic segmentation in electric power scene. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1577–1592. [Google Scholar] [CrossRef]

- Cui, Y.; Lei, T.; Chen, G.; Zhang, Y.; Zhang, G.; Hao, X. Infrared small target detection via modified fast saliency and weighted guided image filtering. Sensors 2025, 25, 4405. [Google Scholar] [CrossRef]

- Zhao, L.; Ke, C.; Jia, Y.; Xu, C.; Teng, Z. Infrared and visible image fusion via residual interactive transformer and cross-attention fusion. Sensors 2025, 25, 4307. [Google Scholar] [CrossRef]

- Shi, W.; Lyu, X.; Han, L. SONet: A small object detection network for power line inspection based on YOLOv8. IEEE Trans. Power Deliv. 2024, 39, 2973–2984. [Google Scholar] [CrossRef]

- Li, D.; Sun, Y.; Zheng, Z.; Zhang, F.; Sun, B.; Yuan, C. A real-world large-scale infrared image dataset and multitask learning framework for power line surveillance. IEEE Trans. Instrum. Meas. 2025, 74, 1–14. [Google Scholar] [CrossRef]

- Huang, Y.; Zhang, Y.; He, Z.; Deng, Y. Video instance segmentation through hierarchical offset compensation and temporal memory update for UAV aerial images. Sensors 2025, 25, 4274. [Google Scholar] [CrossRef]

- Du, C.; Liu, P.X.; Song, X.; Zheng, M.; Wang, C. A two-pipeline instance segmentation network via boundary enhancement for scene understanding. IEEE Trans. Instrum. Meas. 2024, 73, 1–13. [Google Scholar] [CrossRef]

- Yang, L.; Wang, S.; Teng, S. Panoptic image segmentation method based on dynamic instance query. Sensors 2025, 25, 2919. [Google Scholar] [CrossRef]

- Brar, K.K.; Goyal, B.; Dogra, A.; Mustafa, M.A.; Majumdar, R.; Alkhayyat, A.; Kukreja, V. Image segmentation review: Theoretical background and recent advances. Inf. Fusion 2025, 114, 102608. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Zhou, J.; Liu, G.; Gu, Y.; Wen, Y.; Chen, S. A box-supervised instance segmentation method for insulator infrared images based on shuffle polarized self-attention. IEEE Trans. Instrum. Meas. 2023, 72, 1–11. [Google Scholar] [CrossRef]

- Wang, L.; Li, D.; Zhu, Y.; Tian, L.; Shan, Y. Dual super-resolution learning for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307. [Google Scholar] [CrossRef]

- Stergiou, A.; Poppe, R.; Kalliatakis, G. Refining activation downsampling with SoftPool. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10357–10366. [Google Scholar]

- Gao, X.; Dai, W.; Li, C.; Xiong, H.; Frossard, P. iPool—Information-based pooling in hierarchical graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 5032–5044. [Google Scholar] [CrossRef]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1483–1498.

- Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and fast instance segmentation. Adv. Neural Inf. Process. Syst. 2020, 33, 17721–17732.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Zhao, Z.; Feng, S.; Zhai, Y.; Zhao, W.; Li, G. Infrared thermal image instance segmentation method for power substation equipment based on visual feature reasoning. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

- Li, Y.; Liu, M.; Li, Z.; Jiang, X. CSSAdet: Real-time end-to-end small object detection for power transmission line inspection. IEEE Trans. Power Deliv. 2023, 38, 4432–4442.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Alexandridis, K.P.; Deng, J.; Nguyen, A.; Luo, S. Long-tailed instance segmentation using Gumbel optimized loss. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 353–369.

- Liu, D.; Zhang, J.; Qi, Y.; Wu, Y.; Zhang, Y. Tiny object detection in remote sensing images based on object reconstruction and multiple receptive field adaptive feature enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

- Cui, B.; Han, C.; Yang, M.; Ding, L.; Shuang, F. DINS: A diverse insulator dataset for object detection and instance segmentation. IEEE Trans. Ind. Informat. 2024, 20, 12252–12261.

- Wang, B.; Dong, M.; Ren, M.; Wu, Z.; Guo, C.; Zhuang, T.; Pischler, O.; Xie, J. Automatic fault diagnosis of infrared insulator images based on image instance segmentation and temperature analysis. IEEE Trans. Instrum. Meas. 2020, 69, 5345–5355.

- Choi, H.; Yun, J.P.; Kim, B.J.; Jang, H.; Kim, S.W. Attention-based multimodal image feature fusion module for transmission line detection. IEEE Trans. Ind. Informat. 2022, 18, 7686–7695.

- Zheng, H.; Liu, Y.; Sun, Y.; Li, J.; Shi, Z.; Zhang, C.; Lai, C.S.; Lai, L.L. Arbitrary-oriented detection of insulators in thermal imagery via rotation region network. IEEE Trans. Ind. Informat. 2022, 18, 5242–5252.

- Ou, J.; Wang, J.; Xue, J.; Wang, J.; Zhou, X.; She, L.; Fan, Y. Infrared image target detection of substation electrical equipment using an improved Faster R-CNN. IEEE Trans. Power Deliv. 2023, 38, 387–396.

- Li, J.; Xu, Y.; Nie, K.; Cao, B.; Zuo, S.; Zhu, J. PEDNet: A lightweight detection network of power equipment in infrared image based on YOLOv4-tiny. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

- Zhao, Z.; Feng, S.; Ma, D.; Zhai, Y.; Zhao, W.; Li, B. A weakly supervised instance segmentation approach for insulator thermal images incorporating sparse prior knowledge. IEEE Trans. Power Deliv. 2024, 39, 2693–2703.

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.

- Vu, T.; Kang, H.; Yoo, C.D. SCNet: Training inference sample consistency for instance segmentation. Proc. AAAI Conf. Artif. Intell. 2021, 35, 2701–2709.

- Qiao, S.; Chen, L.-C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10213–10224.

- Fang, Y.; Yang, S.; Wang, X.; Li, Y.; Fang, C.; Shan, Y.; Feng, B.; Liu, W. Instances as queries. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6910–6919.

- Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. RTMDet: An empirical study of designing real-time object detectors. arXiv 2022, arXiv:2212.07784.

- Li, W.; Liu, W.; Zhu, J.; Cui, M.; Yu, R.; Hua, X.; Zhang, L. Box2Mask: Box-supervised instance segmentation via level-set evolution. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5157–5173.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).