Abstract

In integrated circuit micrograph acquisition, image super-resolution reconstruction can significantly improve acquisition efficiency. Advances in deep learning have markedly improved the performance of image super-resolution reconstruction networks, but their memory demand at inference has also grown substantially, which limits their use in engineering practice and their deployment on resource-constrained devices. Against this backdrop, we design image super-resolution reconstruction networks based on feature reuse and structural reparameterization, so that the networks maintain reconstruction performance while being better suited to deployment in resource-limited environments. Traditional image super-resolution reconstruction networks often compute similar features redundantly through standard convolution operations, which wastes considerable computational resources. We use low-cost operations to generate some of these redundant features from the intrinsic features of the image, and we design a reparameterization layer using structural reparameterization. Building upon local feature fusion and local residual learning, we develop two efficient deep feature extraction modules and use them to form the image super-resolution reconstruction networks. Compared to performance-oriented image super-resolution reconstruction networks (e.g., DRCT), our network reduces the parameter count by 84.5% and shortens inference time by 49.8%. In comparison with lightweight image reconstruction algorithms, our method improves the mean structural similarity index by 3.24%. Experimental results demonstrate that the image super-resolution reconstruction network based on feature reuse and structural reparameterization achieves an excellent balance between network performance and complexity.

1. Introduction

The deep fusion of computer vision with microscopic imaging has significantly advanced image analysis and quantitative interpretation in material characterization [1], biological monitoring [2], and defect inspection [3], expediting developments in these fields. Microscopic imaging is a key inspection modality in integrated-circuit (IC) manufacturing, packaging, and post-silicon verification. In the manufacturing phase, it is used to evaluate pattern quality after lithographic development [4] and to detect film residues and defects after etching [5]. In the packaging and post-silicon verification phases, it is used to inspect die warpage, cracks, and contamination after bonding, and it supplies high-resolution images for hardware-Trojan localization and confirmation [6]. With increasing chip area and shrinking transistor feature sizes, the data volume and physical resolution required for large-area microscopic imaging have escalated sharply. Limited by diffraction, electron-beam aberrations, and the inherent trade-off between field of view and resolution, the throughput of existing microscopic systems is the principal bottleneck in acquiring high-resolution chip images and poses severe challenges to imaging timeliness [7].

Image super-resolution reconstruction generates a high-resolution (HR) image with enhanced details from one or multiple low-resolution (LR) images. In large-area imaging tasks such as wafer defect scanning, super-resolution markedly improves overall throughput by reducing the number of physical scans, decreasing data volume, and expediting subsequent processing [8]. As resolution requirements in IC inspection escalate, the physical dimensions and cost of high-resolution CMOS/CCD sensors increase sharply with pixel density, and noise rises as well; super-resolution algorithms can compensate for these hardware limitations [7]. Resolution breakthroughs and cost optimization in IC microscopic imaging are thus realized jointly through algorithmic innovation and hardware co-design.

As a classic yet challenging low-level vision task, image super-resolution reconstruction is inherently an ill-posed problem. Thanks to progress in efficient computing hardware and advanced algorithms [9], deep learning has demonstrated remarkable capabilities in processing large amounts of unstructured data, and deep learning-based approaches to image super-resolution reconstruction have gained significant momentum. Lim et al. proposed Enhanced Deep Super-Resolution (EDSR) [10], which adopts a residual backbone, removes the batch normalization (BN) layers, and, in addition to increasing the depth of the network, significantly increases the number of output features in each layer. Zhang et al. introduced the residual dense network (RDN) [11], which uses residual dense blocks to extract rich local features through densely connected convolutional layers. SwinIR [12] is an image super-resolution network based on the Swin Transformer. It leverages the hierarchical structure and shifted-window mechanism of the Swin Transformer to process images effectively and generate high-resolution outputs. HAT (Hybrid Attention Transformer) [13] combines channel attention and self-attention mechanisms, and this hybrid approach activates more input pixels for reconstruction. CPAT [14] proposes a Channel-Partitioned Attention Transformer that better captures long-range dependencies by sequentially expanding windows along the height and width of feature maps. DRCT [15] focuses on stabilizing the information flow and enlarging the receptive field by incorporating dense connections within residual blocks, combined with the shifted-window attention mechanism to capture global information adaptively.

High-performance image super-resolution reconstruction algorithms [10,11,12,13,14,15] have achieved excellent image reconstruction results. However, these methods often involve complex model architectures and a large number of parameters, leading to increased computational costs. They also require substantial memory to store model parameters and intermediate features, which limits their application on memory-constrained devices. Therefore, developing lightweight super-resolution models to balance image reconstruction performance and computational resource consumption is of great significance.

In recent years, lightweight image super-resolution reconstruction models have been studied extensively, with excellent results. IMDN [16] enhances feature extraction by employing an information distillation mechanism to gradually extract hierarchical features, which is effective for super-resolution reconstruction in complex scenes. MADNet [17] captures features at different scales using a multi-scale attention mechanism, thereby improving the model’s ability to perceive details. SwinIR-light [12] is a lightweight version of SwinIR that retains the advantages of the Swin Transformer while reducing model size and computational load, making it suitable for mobile devices. FIWHN [18] proposes a Feature Interaction Weighted Hybrid Network that keeps the model lightweight while reducing the impact of intermediate feature loss on reconstruction quality.

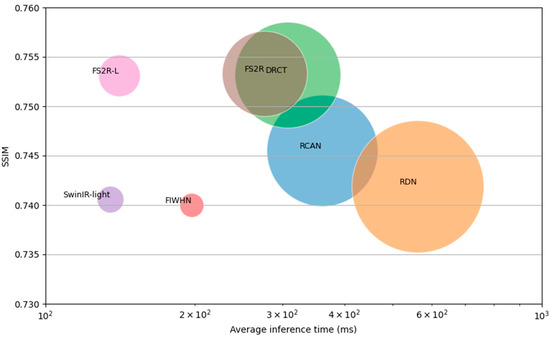

In this study, we exploit intrinsic features to generate redundant features via low-cost operations [19] and adopt structural reparameterization [20] to design a lightweight reparameterization layer (LR-Layer). Building upon local feature fusion and local residual learning [21], we propose two efficient deep feature extraction modules, the FS-Block (Feature reuse and Structural reparameterization Block) and the LFS-Block (Lightweight Feature reuse and Structural reparameterization Block). From these modules we assemble two efficient image super-resolution networks, FS2R (image super-resolution reconstruction network based on feature reuse and structural reparameterization) and FS2R-L. Compared with performance-oriented SR methods (e.g., RDN), FS2R reduces parameters by 58% and cuts device inference time by 35.8%. Against a typical lightweight baseline (e.g., SwinIR-light), it improves the SSIM [22] on benchmark datasets by 3.24% on average.

The primary contributions of this paper are as follows:

- We integrate feature reuse and structural reparameterization techniques into image super-resolution reconstruction, resulting in the LR-Layer (Lightweight Reparameterization Layer). By effectively fusing features, this approach significantly reduces the model’s parameter count.

- We propose two efficient deep feature extraction modules, the FS-Block (Feature reuse and Structural reparameterization Block) and the LFS-Block (Lightweight Feature reuse and Structural reparameterization Block), for fast and accurate image super-resolution reconstruction. While substantially reducing the model’s parameter count, our method achieves competitive results.

- We apply the model to IC microscopic image acquisition and conduct inference experiments on an edge platform, obtaining satisfactory results and advancing the practical deployment of image super-resolution.

2. Methods

2.1. Feature Reuse and Structural Reparameterization

Feature reuse is one of the essential strategies for improving model efficiency and performance in deep learning. Reusing feature maps that have already been computed avoids redundant calculations and thereby alleviates the computational burden of the model. Because multiple layers or operations can share the same feature representation, feature reuse can decrease the number of parameters in the model while still allowing it to learn rich and robust feature representations. Feature reuse can also accelerate training, because less computation is needed to process each sample. Common methods for feature reuse include residual connections [11,23], dense connections [11,24], feature pyramids [25], and multi-scale feature fusion [10].

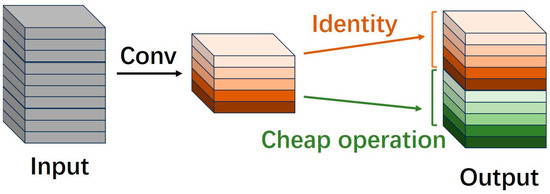

To further enhance the performance of neural network models, the scale of networks has been continuously expanding, leading to an increase in the number of features. Consequently, feature redundancy has gradually attracted the attention of researchers [19]. The problem is also prevalent in image super-resolution reconstruction, where a large number of similar features exist [26]. Repeatedly obtaining similar features through conventional convolution operations wastes significant computational resources. To address this, some researchers have proposed generating a portion of intrinsic features using regular convolutions and then obtaining redundant features via cheap operations, such as depthwise convolution and shifting operations (as shown in Figure 1). Concatenating these features reduces the number of parameters and the computational load while still producing a complete set of output features.

Figure 1.

Obtaining redundant features with cheap operations.
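The saving from cheap operations can be illustrated by counting weights. The following is a minimal sketch, assuming a ghost-style layer in the spirit of [19]: a standard convolution produces a fraction of the output channels and a depthwise convolution generates the rest. The function names, the ratio of 2, and the channel sizes are illustrative assumptions, not values taken from this paper.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_style_params(c_in: int, c_out: int, k: int,
                       ratio: int = 2, cheap_k: int = 3) -> int:
    """Weights when only c_out / ratio channels come from a standard convolution
    and the remaining channels come from a depthwise cheap_k x cheap_k convolution."""
    if c_out % ratio != 0:
        raise ValueError("c_out must be divisible by ratio")
    intrinsic = c_out // ratio
    redundant = c_out - intrinsic
    primary = c_in * intrinsic * k * k      # standard convolution
    cheap = redundant * cheap_k * cheap_k   # depthwise: one small filter per generated channel
    return primary + cheap


# Hypothetical layer: 64 input channels, 64 output channels, 3 x 3 kernels.
full = standard_conv_params(64, 64, 3)
ghost = ghost_style_params(64, 64, 3)
print(full, ghost, round(ghost / full, 3))
```

With these assumed sizes the ghost-style layer needs roughly half the weights of the standard convolution, because the depthwise branch contributes only a few hundred weights.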

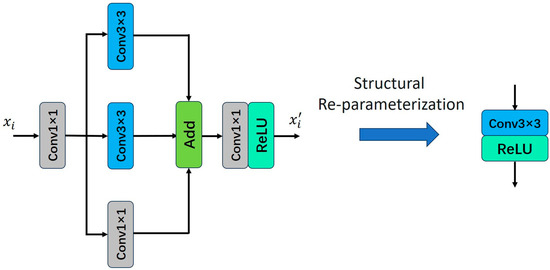

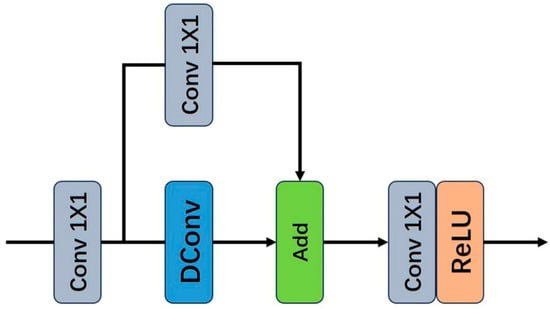

Structural reparameterization is an optimization technique in deep learning that improves model performance, efficiency, and generalization by using different network structures for training and inference [27]. At inference, it reduces the number of parameters in the model, thereby decreasing its complexity and computational cost. According to Chen et al. [21], structural reparameterization typically employs multiple linear operators to generate diverse feature maps during training. These operators are then fused into a single operator through parameter fusion [28], enabling faster inference. A schematic illustration of structural reparameterization is shown in Figure 2.

Figure 2.

Parallel structure for structural reparameterization.
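Parameter fusion relies on the linearity of convolution. As a worked identity, assume two parallel branches applied to the same input $x$ with the same stride: a 3 × 3 kernel $W_3$ with bias $b_3$ (zero padding 1) and a 1 × 1 kernel $W_1$ with bias $b_1$. These symbols are illustrative and are not the notation of this paper.

```latex
\begin{aligned}
y &= \left(W_3 * x + b_3\right) + \left(W_1 * x + b_1\right) \\
  &= \left(W_3 + \operatorname{pad}_{3 \times 3}(W_1)\right) * x + \left(b_3 + b_1\right)
\end{aligned}
```

Here $\operatorname{pad}_{3 \times 3}(W_1)$ places the 1 × 1 kernel at the centre of an otherwise zero 3 × 3 kernel, so the two training-time branches collapse into a single 3 × 3 convolution at inference. The identity holds only while no non-linearity sits between the branches and the sum.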

Two operations are commonly used to fuse features: concat and add. The concat operation joins two tensors along the channel dimension. It can be employed in deep learning models to increase the number of channels or feature dimensions, thereby enabling subsequent layers to capture the relationships between different features more effectively. The add operation, on the other hand, performs element-wise summation of two tensors to produce a new tensor. This facilitates gradient flow, enhances training stability, and mitigates the vanishing gradient problem in deep networks.

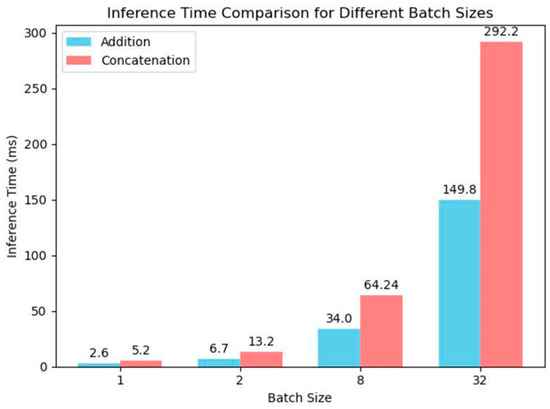

Although neither of the two feature fusion methods introduces additional parameters or FLOPs into the network, experimental validation [21] has shown that under the same batch size, the add operation incurs lower computational costs and shorter runtime compared to the concat operation, as illustrated in Figure 3.

Figure 3.

Comparison of inference time between concatenation and addition operations [21].
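The structural difference between the two fusion operations can be seen from the tensor shapes alone. The sketch below uses NumPy arrays with assumed sizes (16 channels, 8 × 8 spatial size); it shows shapes only and does not reproduce the timing measurements of [21].

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.standard_normal((1, 16, 8, 8))  # (batch, channels, height, width)
b = rng.standard_normal((1, 16, 8, 8))

fused_concat = np.concatenate([a, b], axis=1)  # channels are stacked: 16 + 16
fused_add = a + b                              # element-wise sum: channel count unchanged

print(fused_concat.shape)
print(fused_add.shape)
```

Concatenation writes twice as many values to memory, and the following layer must accept twice as many input channels, whereas addition keeps the tensor size fixed.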

2.2. The Reparameterization Layer and Deep Feature Extraction Module

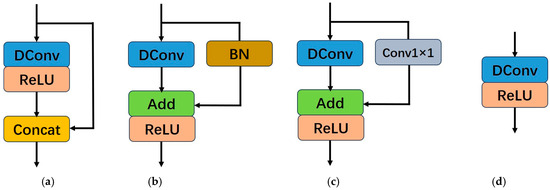

In contrast to the traditional convolution-activation operation, the method proposed in [19] for classification tasks first uses regular convolutions to generate a portion of intrinsic features and then obtains redundant features through cheap operations. The two types of features are concatenated using the concat operation. In this way, the method reduces the model’s parameter count and computational load while still producing a complete set of output features, as illustrated in Figure 4a. Note that the 1 × 1 convolutions used for channel transformations are omitted from Figure 4 for simplicity.

Figure 4.

Evolution of the LR-Layer for image super-resolution reconstruction. (a) Basic layer of GhostNet for classification tasks. (b) Basic layer of RepGhost for classification tasks. (c) Layer that we designed for the image super-resolution task. (d) Structure after the reparameterization operation.

As described in Section 2.1, compared with the concat operation, the addition operation incurs lower computational costs and has shorter runtime. Chen et al. [21] replaced the concat operation in the classification network module shown in Figure 4a with the addition operation. To comply with the rules of structural reparameterization, the ReLU, which is a non-linear operation, was moved to after the addition operation. Additionally, a batch normalization (BN) operation was introduced in the identity mapping branch to bring non-linearity during training, making the structure more flexible, as illustrated in Figure 4b.

The structure of the LR-Layer used in our image super-resolution reconstruction network is shown in Figure 4c. Compared with Figure 4b, the Batch Normalization operation in the identity mapping branch is replaced with a 1 × 1 convolution. Although batch normalization can reduce the difficulty of network training and prevent overfitting, for the task of image super-resolution reconstruction, the color distribution of the image is normalized after BN, which destroys the original contrast information of the image and thus affects the quality of the super-resolution reconstruction [10]. The 1 × 1 convolution, with its trainable parameters, can learn linear transformations of the input features and improve gradient flow in deep networks [29]. Therefore, it is introduced into the identity mapping branch to address these limitations.

The structure shown in Figure 4c can be reparameterized into the structure depicted in Figure 4d at inference, thereby enabling fast inference for the image super-resolution reconstruction network. During inference, our LR-Layer consists only of depthwise convolution (DConv) and activation functions. As a result, it adapts well to resource-limited devices [30].
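The equivalence between the training-time and inference-time structures can be checked numerically. The sketch below is an illustration under a stated assumption: the 1 × 1 convolution in the identity branch acts per channel (depthwise), so that it can be folded into the centre tap of a depthwise 3 × 3 kernel. The paper does not spell out this detail, and the helper `depthwise_conv3x3` is a hypothetical NumPy stand-in for a framework convolution. The activation is omitted because it is applied after the addition in both forms.

```python
import numpy as np


def depthwise_conv3x3(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Depthwise 3x3 cross-correlation with zero padding 1.

    x: (channels, height, width), w: (channels, 3, 3).
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))
w_dw = rng.standard_normal((4, 3, 3))  # depthwise 3x3 branch
w_pw = rng.standard_normal(4)          # per-channel 1x1 branch (assumed depthwise)

# Training-time form: two parallel linear branches, summed.
y_train = depthwise_conv3x3(x, w_dw) + w_pw[:, None, None] * x

# Inference-time form: fold the 1x1 weight into the centre tap of the 3x3 kernel.
w_fused = w_dw.copy()
w_fused[:, 1, 1] += w_pw
y_infer = depthwise_conv3x3(x, w_fused)

print(np.allclose(y_train, y_infer))
```

The two outputs agree up to floating-point error, which is why the extra branch adds no cost at inference.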

We replace the traditional convolution-activation operation with the LR-Layer, which is constructed based on feature reuse and structural reparameterization. The complete structure of the LR-Layer is shown in Figure 5.

Figure 5.

Complete LR-Layer structure.

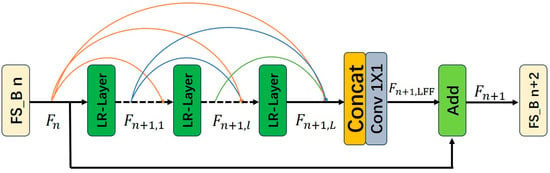

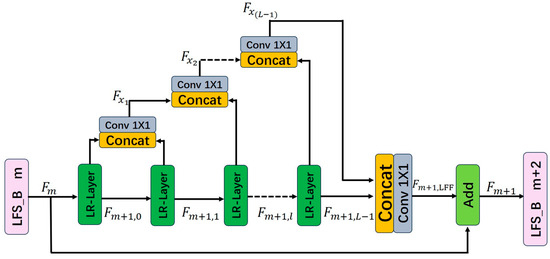

Building upon dense connectivity, local feature fusion, and local residual learning [11], we design two deep feature extraction blocks, the FS-Block (Feature Reuse and Structural Reparameterization Block) and the LFS-Block (Lightweight Feature Reuse and Structural Reparameterization Block), which use the LR-Layer as their basic unit. The structures of the two blocks are illustrated in Figure 6 and Figure 7, respectively.

Figure 6.

Structure of Feature Reuse and Structural Reparameterization Block.

Figure 7.

Structure of Lightweight Feature Reuse and Structural Reparameterization Block.

The Contiguous Memory (CM) mechanism is implemented by passing the output features of the preceding FS-Block to each LR-Layer within the current FS-Block. Let $F_{n-1}$ denote the output of the $(n-1)$-th FS-Block, which also serves as the input to the $n$-th FS-Block, and let $F_n$ denote the output of the $n$-th FS-Block. Both consist of $G_0$ features.

The output of the $c$-th LR-Layer in the $n$-th FS-Block can be expressed as:

$$F_{n,c} = H_{LR}\left(\left[F_{n-1}, F_{n,1}, \dots, F_{n,c-1}\right]; W_{n,c}\right) \tag{1}$$

In Equation (1), $H_{LR}$ denotes the LR-Layer operation and $W_{n,c}$ represents the weights of the $c$-th LR-Layer in the $n$-th FS-Block. Suppose $F_{n,c}$ consists of $G$ feature maps, where $G$ is the growth rate [31]. In $\left[F_{n-1}, F_{n,1}, \dots, F_{n,c-1}\right]$, the output features of the $(n-1)$-th FS-Block are concatenated with the output features of the 1st to $(c-1)$-th LR-Layers of the $n$-th FS-Block through the concat operation, resulting in $G_0 + (c-1) \times G$ feature maps. The outputs of the preceding FS-Block and of each LR-Layer within the current module are directly connected to all subsequent layers. This structure not only preserves the feedforward nature of the network but also extracts local dense features from the image.

After passing through $C$ LR-Layers in the $n$-th FS-Block, the image features require local feature fusion. The output features of the $(n-1)$-th FS-Block and the outputs of the LR-Layers within the $n$-th FS-Block are concatenated via the concat operation and then processed by a 1 × 1 convolution to control the output feature information:

$$F_{n,LF} = H_{LFF}^{n}\left(\left[F_{n-1}, F_{n,1}, \dots, F_{n,C}\right]\right) \tag{2}$$

The number of features in $F_{n,LF}$ equals $G_0$. To further enhance the information flow within the network, the FS-Block introduces local residual learning after local feature fusion. The output of the $n$-th FS-Block can be represented as:

$$F_n = F_{n-1} + F_{n,LF} \tag{3}$$

Let $F_{n-1}$ denote the output of the $(n-1)$-th LFS-Block, which also serves as the input to the $n$-th LFS-Block, and let $F_n$ denote the output of the $n$-th LFS-Block. Both consist of $G_0$ features.

The output of the $c$-th LR-Layer in the $n$-th LFS-Block can be expressed as:

In the LFS-Block, we design a branch bypass to replace the dense connections of the FS-Block. A dense connection concatenates the feature maps from all preceding layers, which leads to repeated reuse of early-stage features and high information redundancy. As the network deepens, the channel count increases linearly, causing the parameters of the 1 × 1 convolutions to grow quadratically. In contrast, the branch bypass reuses only the features from adjacent layers for feature propagation, which reduces redundancy. With the branch bypass, the network width becomes independent of depth, so the parameter count grows linearly rather than quadratically.

We define the output feature of the branch bypass as $F_{n,B}$. For an LFS-Block with $C$ LR-Layers, the bypass undergoes a series of fusion operations.

After passing through $C$ LR-Layers in the $n$-th LFS-Block, the image features require local feature fusion. The output features of the branch bypass and the outputs of the $C$-th LR-Layer are concatenated via the concat operation and then processed by a 1 × 1 convolution to control the output feature information:

$$F_{n,LF} = H_{LFF}^{n}\left(\left[F_{n,B}, F_{n,C}\right]\right) \tag{6}$$

The number of features in $F_{n,LF}$ equals $G_0$. The LFS-Block introduces local residual learning after local feature fusion. The output of the $n$-th LFS-Block can be represented as:

$$F_n = F_{n-1} + F_{n,LF} \tag{7}$$

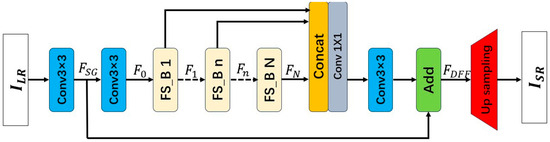
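The parameter-growth argument for dense connections versus the branch bypass can be checked with a small count. The sketch below is a simplified model rather than the exact block layout: it counts only the 1 × 1 channel-transform weights, assumes that the $c$-th densely connected layer sees $G_0 + (c-1) \times G$ input channels, and assumes that a bypass-style layer always sees a fixed width. The values $G_0 = 64$ and $G = 32$ and the function names are hypothetical.

```python
def dense_pointwise_params(num_layers: int, g0: int, growth: int) -> int:
    """Total 1x1-convolution weights when layer c sees g0 + (c - 1) * growth channels."""
    return sum((g0 + c * growth) * growth for c in range(num_layers))


def fixed_width_pointwise_params(num_layers: int, width: int, out: int) -> int:
    """Total 1x1-convolution weights when every layer sees the same number of channels."""
    return num_layers * width * out


for layers in (4, 8, 16):
    dense = dense_pointwise_params(layers, g0=64, growth=32)
    fixed = fixed_width_pointwise_params(layers, width=64, out=32)
    print(layers, dense, fixed)
```

Doubling the depth doubles the fixed-width count but more than doubles the dense count, which is the linear versus quadratic behaviour described in the text.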

2.3. The Architecture of the Image Super-Resolution Reconstruction Network Based on Feature Reuse and Structural Reparameterization

The overall architecture of the FS2R network is illustrated in Figure 8. The network consists of four components: a shallow feature extraction module, deep feature extraction modules (FS-Blocks), dense feature fusion, and an upsampling module. The input image and output image of the network are represented as $I_{LR}$ and $I_{SR}$, respectively. First, shallow feature extraction is performed on the input image using two 3 × 3 convolution layers. Specifically, the first 3 × 3 convolution layer extracts the features $F_{-1}$ from $I_{LR}$.

Figure 8.

Structure of Image super-resolution reconstruction network based on feature reuse and structural reparameterization.

The extracted features $F_{-1}$ are used for further shallow feature extraction and for global residual learning. The second 3 × 3 convolutional layer takes these features as input to further extract shallow features, and its output features $F_0$ serve as the input to the first FS-Block.

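Suppose the FS2R network uses $N$ FS-Blocks in total. We define the output features of the $n$-th FS-Block as $F_n$, which can be formulated as:

$$F_n = H_{FS,n}\left(F_{n-1}\right) = H_{FS,n}\left(H_{FS,n-1}\left(\cdots H_{FS,1}\left(F_0\right) \cdots\right)\right) \tag{10}$$

In Equation (10), $F_0$ is the shallow feature that enters the first FS-Block, and $H_{FS,n}$ denotes the operation of the $n$-th FS-Block. $H_{FS,n}$ is a composite function consisting of depthwise convolution, pointwise convolution, and a non-linear activation function. $F_n$ is obtained through the internal convolution operations within the $n$-th FS-Block and is therefore referred to as local features.

Dense feature fusion merges the local features from the FS-Blocks with the global residual features $F_{-1}$ extracted by the first shallow convolution layer:

$$F_{DF} = H_{DFF}\left(F_{-1}, F_1, \dots, F_N\right) \tag{11}$$

In Equation (11), $F_{DF}$ denotes the output features after dense feature fusion, and $H_{DFF}$ consists of a sequence of 1 × 1 and 3 × 3 convolutional operations.

After the fused features are obtained in the low-resolution space, an upsampling operation is conducted. This can be formally described as:

$$I_{SR} = H_{UP}\left(F_{DF}\right) \tag{12}$$

where $H_{UP}$ denotes the upsampling module and $I_{SR}$ is the output image of the network.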

In image super-resolution reconstruction networks, upsampling operations are one of the core components, used to upscale low-resolution images to the target high-resolution size. Current mainstream upsampling methods can be divided into two major categories: traditional interpolation methods and learnable upsampling methods, each with its own advantages and disadvantages in terms of reconstruction quality, computational efficiency, and high-frequency information retention.

By “upsampling from the low-resolution space” we mean that the upsampling operation itself contains no learnable parameters; it is a fixed, rule-based interpolation process executed entirely on the low-resolution feature maps. Traditional interpolation methods, including nearest-neighbor, bilinear, and bicubic interpolation, belong to this category. Learnable upsampling operations (deconvolution and sub-pixel convolution) also start from the input low-resolution features, but their upsampling kernels are learned during training.

Traditional interpolation methods are inherently incapable of introducing new high-frequency information and only perform smooth estimation based on existing pixels, thus causing varying degrees of high-frequency information loss, especially in critical areas such as high-frequency textures and edges.

Commonly used learnable upsampling methods include deconvolution and sub-pixel convolution. Deconvolution is versatile but prone to “checkerboard artifacts,” in which periodic patterns appear in the output image. When training is insufficient or the network design is unsuitable, these artifacts can mask the true details of the image, leading to loss or incorrect estimation of high-frequency information. Sub-pixel convolution is computationally efficient and is currently widely used in super-resolution networks. The upsampling method used in this paper is sub-pixel convolution, because it performs upsampling at the feature level and can better retain and learn high-frequency details.
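The rearrangement step of sub-pixel convolution can be sketched in a few lines. In a network, a convolution first expands the feature maps to $C \times r^2$ channels, and the rearrangement then trades channels for spatial resolution. The function below is a NumPy illustration of that rearrangement only, following the common pixel-shuffle channel ordering; it is not the authors' implementation.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange (C * r * r, H, W) features into (C, H * r, W * r)."""
    c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    c = c_in // (r * r)
    x = x.reshape(c, r, r, h, w)    # split channels into (C, r, r)
    x = x.transpose(0, 3, 1, 4, 2)  # reorder to (C, H, r, W, r)
    return x.reshape(c, h * r, w * r)


# Four 2 x 2 feature maps become one 4 x 4 map for a scale factor of 2.
features = np.arange(4 * 2 * 2, dtype=np.float32).reshape(4, 2, 2)
upscaled = pixel_shuffle(features, r=2)
print(upscaled.shape)
```

Each 2 × 2 output neighbourhood is filled from the four input channels at the same low-resolution position, so all learnable computation stays in the low-resolution space.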

3. Experimental Verification

3.1. Experimental Setup

This study uses 800 high-quality RGB images from the DIV2K [32] dataset and 2000 high-quality RGB images from the Flickr2K [33] dataset as the training set. We test the performance of our model on five benchmark datasets: Set5 [34], Set14 [35], BSD100 [36], Urban100 [37], and Manga109 [38]. LR images are generated via bicubic interpolation downsampling. The super-resolution results are assessed using the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) [22], both calculated on the Y channel of the images in the YCbCr color space.
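The Y-channel evaluation can be sketched as follows. This is a minimal illustration, assuming the ITU-R BT.601 studio-range conversion that is common in super-resolution benchmarks and an optional border crop; the paper does not state which conversion or crop was used, and the function names are hypothetical.

```python
import numpy as np


def rgb_to_y(img: np.ndarray) -> np.ndarray:
    """Luma (Y) of an RGB image with values in [0, 255], BT.601 studio range."""
    img = img.astype(np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def psnr_y(sr: np.ndarray, hr: np.ndarray, border: int = 0) -> float:
    """PSNR in dB between the Y channels of two (H, W, 3) images."""
    y_sr, y_hr = rgb_to_y(sr), rgb_to_y(hr)
    if border > 0:  # optionally ignore `border` pixels on every side
        y_sr = y_sr[border:-border, border:-border]
        y_hr = y_hr[border:-border, border:-border]
    mse = np.mean((y_sr - y_hr) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(255.0 ** 2 / mse))


# Toy example: a flat grey image and a copy that is 5 grey levels brighter.
hr = np.full((8, 8, 3), 128, dtype=np.uint8)
sr = hr + 5
print(round(psnr_y(sr, hr), 2))
```

Because PSNR depends only on the mean squared error, a reconstruction whose details are plausible but slightly misplaced can score lower than a smoother one.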

The size of the LR image patches is set to 64 × 64. For networks with different scaling factors, the corresponding HR image patches are automatically cropped from the training images. During each training iteration, one HR image patch is cropped from each training image and augmented by randomly applying one of the following operations: 90° rotation, horizontal flipping, or vertical flipping. The image super-resolution network was implemented using the PyTorch 2.1.2 deep learning framework and optimized using the Adam optimizer. The initial learning rate for all layers was set to $10^{-4}$. After 750 training epochs, the learning rate was reduced to $10^{-5}$; after 900 epochs, it was reduced to $10^{-6}$; and training was terminated after 1000 epochs.
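The learning-rate schedule and augmentation described above can be sketched as plain functions. This is an illustration under stated assumptions: epochs are counted from zero, and the three augmentations are chosen with equal probability. Neither detail is given in the text, and the function names are hypothetical.

```python
import numpy as np


def learning_rate(epoch: int) -> float:
    """Step schedule from the text: 1e-4, then 1e-5 after 750 epochs, 1e-6 after 900."""
    if epoch < 750:
        return 1e-4
    if epoch < 900:
        return 1e-5
    return 1e-6


def augment(patch: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Apply one randomly chosen operation to an (H, W, C) patch."""
    choice = rng.integers(3)
    if choice == 0:
        return np.rot90(patch, k=1, axes=(0, 1))  # 90-degree rotation
    if choice == 1:
        return patch[:, ::-1]                     # horizontal flip
    return patch[::-1]                            # vertical flip


rng = np.random.default_rng(0)
patch = rng.random((64, 64, 3))
print(learning_rate(0), learning_rate(750), learning_rate(900))
print(augment(patch, rng).shape)
```

In practice the same operation has to be applied to the LR patch and its HR counterpart. In PyTorch, an equivalent step schedule could be obtained with `torch.optim.lr_scheduler.MultiStepLR` using milestones 750 and 900 and a decay factor of 0.1; whether the authors used that scheduler is not stated.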

3.2. Network Performance Comparison

3.2.1. Quantitative Results

The proposed FS2R network is compared with eleven typical image super-resolution reconstruction networks, namely VDSR [39], DRCN [40], EDSR-baseline [10], CARN [41], IMDN [16], MADNet [17], SwinIR-light [12], RDN [11], CPAT [14], DRCT [15], and FIWHN [18], using objective evaluation metrics. Table 1, Table 2 and Table 3 show the quantitative comparisons of the super-resolution reconstruction results for scaling factors of ×2, ×3 and ×4, respectively. The best, second-best and third-best results in each table are indicated in bold, underlined and double underlined, respectively. Our FS2R network performs favorably on most datasets and surpasses the majority of models in terms of the Structural Similarity Index Measure (SSIM).

Table 1.

Quantitative comparison of different algorithms on five benchmark datasets with a scale factor of 2. The best, second-best and third-best results are indicated in bold, underlined and double underlined, respectively.

Table 2.

Quantitative comparison of different algorithms on five benchmark datasets with a scale factor of 3. The best, second-best and third-best results are indicated in bold, underlined and double underlined, respectively.

Table 3.

Quantitative comparison of different algorithms on five benchmark datasets with a scale factor of 4. The best, second-best and third-best results are indicated in bold, underlined and double underlined, respectively.

The Structural Similarity Index Measure (SSIM) evaluates the similarity between images based on three relatively independent metrics: luminance, contrast, and structure. The improved performance of our model in this regard implies that it can better capture and restore essential structural details, such as edges and textures. This results in a more natural and visually pleasing outcome that aligns better with human visual perception.
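For reference, the commonly used single-window form of SSIM between two image patches $x$ and $y$ combines the three comparisons into one expression. The constants below are the usual defaults; whether this paper uses exactly these settings is an assumption.

```latex
\mathrm{SSIM}(x, y) =
\frac{\left(2\mu_x\mu_y + C_1\right)\left(2\sigma_{xy} + C_2\right)}
     {\left(\mu_x^2 + \mu_y^2 + C_1\right)\left(\sigma_x^2 + \sigma_y^2 + C_2\right)},
\qquad C_1 = (K_1 L)^2,\quad C_2 = (K_2 L)^2
```

Here $\mu_x$ and $\mu_y$ are the local means, $\sigma_x^2$ and $\sigma_y^2$ the local variances, $\sigma_{xy}$ the local covariance, $L$ the dynamic range of the pixel values, and $K_1 = 0.01$, $K_2 = 0.03$ the usual default constants. The reported score is the mean over all local windows, so it rewards correct local structure even when absolute pixel values differ slightly.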

As shown in Table 1, FS2R achieves better objective evaluation metrics on the five benchmark test sets for the ×2 reconstruction task. Compared with performance-oriented models (such as RDN, CPAT, and DRCT), FS2R reduces the number of model parameters by 58.45%, 55.17%, and 35.31%, respectively. Nevertheless, the SSIM of FS2R (taking BSD100 as an example) is 0.9067, which is higher than that of RDN (0.9017), CPAT (0.9056), and DRCT (0.9051). Compared with other lightweight models (such as CARN, IMDN, and FIWHN), the SSIM of FS2R-L (taking BSD100 as an example) is higher by 0.97%, 0.77%, and 0.64%, respectively.

As shown in Table 2, FS2R achieves better objective evaluation metrics on the five benchmark test sets for the ×3 reconstruction task. Compared with performance-oriented models (such as RDN, CPAT, and DRCT), the SSIM of FS2R (taking BSD100 as an example) is 0.8342, which is higher than that of RDN (0.8093), CPAT (0.8174), and DRCT (0.8182). Compared with other lightweight models (such as CARN, IMDN, and FIWHN), the SSIM of FS2R-L (taking BSD100 as an example) is higher by 3.81%, 3.65%, and 3.26%, respectively.

As shown in Table 3, for the ×4 reconstruction task the SSIM of FS2R (taking BSD100 as an example) is 0.7533, which is higher than that of the performance-oriented models RDN (0.7419), CPAT (0.7527), and DRCT (0.7532). Compared with other lightweight models (such as CARN, IMDN, and FIWHN), the SSIM of FS2R-L (taking BSD100 as an example) is higher by 2.48%, 2.42%, and 1.77%, respectively.

The perceptual metrics of the reconstructed images from FS2R were compared against those from typical models, with the results presented in Table 4. LPIPS (Learned Perceptual Image Patch Similarity) [42] aligns more closely with human perception than traditional metrics (like PSNR, SSIM). The lower the LPIPS value, the more similar the two images are; conversely, a higher value signifies greater differences.

Table 4.

Quantitative comparison of the perceptual metric (LPIPS) of different algorithms on benchmark datasets with a scale factor of 4. The best, second-best and third-best results are indicated in bold, underlined and double underlined, respectively.

FS2R performs well on most datasets and achieves the best results on Set14 and Urban100 (0.1069 and 0.0121, respectively), indicating that the images reconstructed by FS2R agree better with human perception.

Different test sets have different data distributions. The BSD100 [36] test set mainly contains images of natural landscapes, animals, plants, and architecture, with relatively rich textures but clear structures. FS2R achieved better objective evaluation metrics on BSD100, indicating that the model structure of FS2R and the network weights obtained through training are more compatible with the data distribution of BSD100, resulting in superior performance on this dataset. This is manifested in the fact that FS2R achieved the highest SSIM scores in tests of various magnification factors. For specific objective metric comparisons, please refer to Table 1, Table 2 and Table 3.

Although the Set5 [34] and Set14 [35] test sets contain few images, each contains many repetitive patterns, sharp edges, and smooth areas. The Urban100 [37] test set contains a large number of urban architectural images with regular, dense geometric structures and long-range continuous edges. Manga109 [38] mainly consists of anime images with large areas of solid color, clear lines, and minimal natural noise. Compared with FS2R, performance-oriented models (such as DRCT [15] and CPAT [14]) can better model long-range dependencies, better maintain the coherence of lines and the purity of solid colors, and perform more stably across test sets with different data distributions. For example, the SSIM of FS2R is 0.9183 for the ×4 magnification factor on Manga109 [38], while under the same conditions the SSIM of DRCT [15] is 0.9304, which is 1.3% higher than that of FS2R, and the SSIM of CPAT [14] is 0.9309, which is 1.4% higher. This is one of the reasons why the SSIM of FS2R is slightly lower than that of performance-oriented models on the test sets other than BSD100.
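The relative differences quoted for Manga109 follow directly from the SSIM values in the text, as the short check below shows. The helper name is illustrative.

```python
def relative_gain_percent(value: float, baseline: float) -> float:
    """Relative difference of `value` over `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


fs2r = 0.9183  # SSIM of FS2R on Manga109, x4 (from the text)
drct_gain = round(relative_gain_percent(0.9304, fs2r), 1)  # DRCT
cpat_gain = round(relative_gain_percent(0.9309, fs2r), 1)  # CPAT
print(drct_gain, cpat_gain)  # 1.3 1.4
```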

Performance-oriented models, such as DRCT [15] and CPAT [14], have more parameters and more complex nonlinear transformations, enabling them to learn richer and more detailed image feature representations. These models also include attention mechanisms, which allow the model to adaptively focus on more important areas and allocate more computational resources to reconstructing these important regions. In contrast, FS2R and FS2R-L, which are designed with engineering applications in mind, strive to find a balance between model size and inference efficiency without adding complex attention structures. Our models aim to achieve good texture recovery and high perceptual evaluation (as shown in Table 4) through relatively simple network architectures. However, when dealing with complex structures that require extremely high precision and coordination, their capabilities are slightly inferior to those of performance-oriented models, and the reconstruction results may contain minor misalignments or blurriness. These minor misalignments or blurriness are detected by the structural similarity index, directly resulting in slightly lower SSIM metrics on test sets other than BSD100 [36] compared to performance-oriented models.

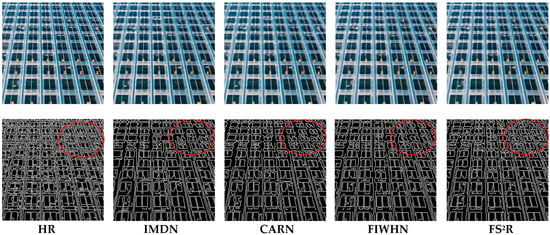

3.2.2. Subjective Evaluation

We carried out image reconstruction experiments at different scaling factors on benchmark datasets. Figure 9 and Figure 10 present the ×4 visual comparisons on the common test datasets. For img_36 from BSD100 [36] and img_88 from Urban100 [37], FS2R demonstrates superior grid structure recovery compared to other methods, confirming its effectiveness. As depicted in Figure 9 and Figure 10, FS2R’s local reconstruction of BSD100_img_36 and Urban_img_88 achieves results that are on par with or even surpass those of high-performance methods (e.g., RDN) and lightweight methods (e.g., FSRCNN, VDSR, EDSR-baseline, CARN, IMDN and FIWHN). FS2R effectively restores edges and textures, making details clearly visible. Notably, in the reconstruction of img_88, FS2R accurately recovers the textures of architectural structures. This visual comparison further underscores that FS2R reaches an advanced level of performance, meeting the needs of practical engineering applications.

Figure 9.

Reconstruction results comparison of typical algorithms and FS2R on BSD100 dataset with scale factor of 4.

Figure 10.

Reconstruction results comparison of typical algorithms and FS2R on Urban100 dataset with scale factor of 4.

As shown in Table 1, Table 2 and Table 3, FS2R attains higher SSIM than other models, yet it exhibits relatively lower PSNR. By analyzing the definitions of SSIM and PSNR and closely inspecting the reconstructed images, we conclude that FS2R focuses more on enhancing the structural information of images than on achieving absolute pixel value matching. Research has already demonstrated [23] that, in the task of image super-resolution reconstruction, a higher PSNR does not necessarily equate to better image reconstruction quality. Some reconstructed images may have high PSNR values, but their overly smooth details can result in worse perceived quality. We conducted edge extraction on the reconstructed images, and the results are displayed in Figure 11. The results show that the reconstructed images of FS2R possess richer edge information than those of other models. At the pixel level, these restored details may not be entirely consistent with the original image, which can lead to an increase in the mean squared error (MSE) and a decrease in the PSNR.

Figure 11.

Edge extraction on the reconstructed image of typical algorithms and FS2R on Urban100 (img_30) with scale factor of 4.
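The trade-off described above, in which sharper but slightly displaced details raise the MSE and lower the PSNR, can be illustrated with a toy example. The sketch below applies PSNR = 10·log10(peak² / MSE) to a hypothetical 1-D step edge. The signals, the helper names, and the simple finite-difference edge measure are illustrative assumptions; they are not the data or the edge extractor used for Figure 11.

```python
import math


def mse(a, b):
    """Mean squared error between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical signals."""
    err = mse(a, b)
    return math.inf if err == 0 else 10.0 * math.log10(peak ** 2 / err)


def max_gradient(signal):
    """Largest absolute difference between neighbours (a crude edge strength)."""
    return max(abs(b - a) for a, b in zip(signal, signal[1:]))


# Hypothetical 8-pixel scanline crossing a step edge.
reference = [0, 0, 0, 0, 255, 255, 255, 255]
# Sharp reconstruction whose edge is displaced by one pixel.
sharp_shifted = [0, 0, 0, 0, 0, 255, 255, 255]
# Smooth reconstruction whose edge is centred correctly but blurred.
blurred = [0, 0, 0, 64, 128, 191, 255, 255]

for name, signal in [("sharp, shifted", sharp_shifted), ("blurred", blurred)]:
    print(f"{name}: PSNR = {psnr(signal, reference):.2f} dB, "
          f"max gradient = {max_gradient(signal)}")
```

In this toy case the blurred edge obtains the higher PSNR, even though the displaced sharp edge has a much larger gradient, which mirrors the qualitative argument made for Figure 11.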

RepGhost [21], which is mainly used for classification tasks, uses batch normalization (BN) layers in the network to enhance feature expression and, through reparameterization, merges multiple branches into a single convolution to boost inference speed. FS2R, by contrast, is designed for image reconstruction and replaces BN layers with 1 × 1 convolutions to prevent BN from damaging image contrast information in super-resolution tasks. Compared with RepVGG [20], which is also mainly used for image classification, FS2R likewise uses reparameterization but additionally incorporates the lightweight idea of generating redundant features at low cost, thereby addressing feature redundancy in neural networks. Compared with GhostSR [26], which is also an image super-resolution reconstruction network but relies only on feature reuse, FS2R further compresses the model through structural reparameterization. FS2R-L introduces a bypass branch structure to replace dense connections, further reducing information redundancy and parameter growth. The proposed FS2R model thus builds on existing lightweight network models, retaining their advantages while further exploring the balance between model size and inference efficiency in engineering applications of neural networks.
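The principle behind merging parallel branches into a single convolution can be shown with a small numerical check. The sketch below is a generic RepVGG-style merge of one 3 × 3 branch and one 1 × 1 branch for a single channel without bias; it is an assumption-laden illustration of structural reparameterization in general, not the LR-Layer itself, whose exact branch layout is not restated in this section. All function names and values are hypothetical.

```python
def conv2d_same(image, kernel):
    """Single-channel 2-D cross-correlation with zero padding (output keeps input size)."""
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di][dj] * image[y][x]
            out[i][j] = acc
    return out


def merge_branches(kernel3x3, weight1x1):
    """Fold a 1x1 branch into a 3x3 kernel by adding its weight to the centre tap."""
    merged = [row[:] for row in kernel3x3]
    merged[1][1] += weight1x1
    return merged


# Hypothetical input and branch weights.
image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.5, -1.0, 0.25], [0.0, 2.0, -0.5], [1.0, 0.75, -0.25]]
w1 = 1.5

# Training-time form: two parallel branches whose outputs are summed.
branch3 = conv2d_same(image, k3)
branch1 = conv2d_same(image, [[w1]])
two_branch = [[a + b for a, b in zip(r3, r1)] for r3, r1 in zip(branch3, branch1)]

# Inference-time form: one convolution with the merged kernel.
single = conv2d_same(image, merge_branches(k3, w1))

same = all(abs(a - b) < 1e-9
           for ra, rb in zip(two_branch, single) for a, b in zip(ra, rb))
print("merged kernel reproduces the two-branch output:", same)
```

Because convolution is linear, the two forms give identical outputs, so the extra branch costs nothing at inference time once it has been folded into the main kernel.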

3.2.3. Ablation Study

A series of ablation experiments were designed to evaluate the effectiveness of the layers and modules in our model. The network was trained using images of size 64 × 64 and updated with the Adam optimizer, with an initial learning rate of 10⁻⁴. After 750 training epochs, the learning rate was reduced to 10⁻⁵; after 900 epochs, it was reduced to 10⁻⁶; and training was terminated after 1000 epochs. The ×4 super-resolution reconstruction performance of FS2R was evaluated on three benchmark datasets: Set14 [35], BSD100 [36] and Urban100 [37]. The experimental results of FS2R are documented in Table 5. Experiments revealed that the FS2R model achieves optimal performance in ×4 super-resolution reconstruction when employing 16 FS-Blocks, each comprising 8 LR-Layers. Table 6 shows that FS2R-L achieves optimal performance in ×4 super-resolution reconstruction when employing 12 LFS-Blocks, each comprising 8 LR-Layers.
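The step schedule described above can be written as a small function. This is a minimal sketch that assumes zero-based epoch indices and that each change takes effect at the start of the stated epoch; the function name is hypothetical and the training framework used by the authors is not specified here.

```python
TOTAL_EPOCHS = 1000  # training stops after 1000 epochs, as stated in the text


def learning_rate(epoch: int) -> float:
    """Piecewise-constant learning rate for a zero-based epoch index (assumed convention)."""
    if epoch < 750:
        return 1e-4  # initial learning rate
    if epoch < 900:
        return 1e-5  # after 750 epochs
    return 1e-6      # after 900 epochs, until training ends


# Print the rate at the boundaries of each stage.
for epoch in (0, 749, 750, 899, 900, TOTAL_EPOCHS - 1):
    print(epoch, learning_rate(epoch))
```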

Table 5.

Comparison of model parameter quantity and performance for different numbers of FS-Block and LR-Layer on FS2R. The best results in the table are indicated in bold.

Table 6.

Comparison of model parameter quantity and performance for different numbers of LFS-Block and LR-Layer on FS2R-L. The best results in the table are indicated in bold.

To further validate the contribution of redundant features generated by various low-cost operations to model performance and to demonstrate the merit of the structure designed in this paper, we performed substitution tests on the low-cost operation in the lightweight layer, using the FS2R model as the basis. We compared identity mapping, batch normalization, and 1 × 1 convolution (as proposed in this paper). The experimental results indicate that the lightweight layer structure designed in this paper obtains superior results in both objective metrics and subjective evaluation, with the metric statistics shown in Table 7.

Table 7.

Comparison of objective indicators of reconstruction effect when using different low-cost operations with the same model structure. The best results in the table are indicated in bold.

With the model structure held fixed, using 1 × 1 convolution as the low-cost operation improves the objective evaluation metrics by an average of 2.72% compared with batch normalization (BN) and by an average of 3.47% compared with identity mapping. The ablation experiments demonstrate that the model designed in this paper offers structural innovation and performance advantages in the task of image super-resolution reconstruction.

3.2.4. Inference Time

In engineering applications, in addition to the pursuit of performance of neural network models, the inference time of the model is also an important metric. We selected representative networks and conducted comparative experiments on reconstruction speed using high-performance GPU devices (NVIDIA GeForce RTX 3090) on the BSD100 dataset (×4). The test LR images were of size 64 × 64. An initial model warm-up operation was performed, as the first inference time may include network loading time. Subsequently, each model was repeatedly run 10 times to obtain the average inference time, as shown in Table 8.
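The measurement protocol above (one warm-up pass, then the mean over 10 timed runs) can be sketched as follows. The harness and the stand-in workload are hypothetical; the authors' actual benchmarking code and framework are not given in the text.

```python
import time


def average_inference_time(run_once, warmup_runs=1, timed_runs=10):
    """Return the mean wall-clock time in seconds of `run_once` over `timed_runs` calls.

    `warmup_runs` untimed calls are made first so that one-off costs such as
    model loading do not distort the average.
    """
    for _ in range(warmup_runs):
        run_once()
    start = time.perf_counter()
    for _ in range(timed_runs):
        run_once()
    return (time.perf_counter() - start) / timed_runs


def dummy_model():
    """Stand-in workload; a real test would run the network on a 64 x 64 LR image."""
    return sum(i * i for i in range(10_000))


print(f"average time per run: {average_inference_time(dummy_model) * 1e3:.3f} ms")
```

On a GPU, kernels are usually launched asynchronously, so a real measurement would also need to synchronize the device before reading the clock (for example with `torch.cuda.synchronize()` if the models run in PyTorch, which the text does not state); that step is omitted from this CPU-only sketch.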

Table 8.

Comparison of model parameter quantity and performance on high-performance GPU devices. The best, second-best and third-best results are indicated in bold, underlined and double-underlined, respectively.

While ensuring high-quality image super-resolution reconstruction, our model achieves a better balance between performance and model lightweighting. Compared to high-performance super-resolution networks, on the BSD100 dataset with scale ×4, FS2R obtains similar objective evaluation metrics with reduced parameter counts and even surpasses several representative algorithms in specific metrics. Compared to the advanced DRCT, FS2R reduces the parameter count by 35% while increasing the SSIM metric by 0.013%. Compared to RDN, FS2R reduces the parameter count by 58.5% and increases the SSIM metric by 1.54%. Compared to advanced lightweight networks, our model achieves improved evaluation metrics on certain datasets. Compared to FIWHN, FS2R increases the SSIM metric by 1.8%. Compared to SwinIR-light, FS2R increases the SSIM metric by 1.71%. An intuitive comparison is shown in Figure 12.

Figure 12.

Comparison of our model with other models in terms of performance, parameter count, and inference time.

To further validate the performance of FS2R in engineering applications, we conducted edge hardware inference tests using the Jetson Nano B01, with the results shown in Table 9. The Jetson Nano B01 is an embedded and edge computing AI development kit launched by NVIDIA, equipped with a quad-core ARM Cortex-A57 MPCore processor and a 128-core NVIDIA Maxwell GPU. Designed specifically for edge computing, it is capable of processing and analyzing data close to the data source. Its compact size, low power consumption, and powerful computing performance make it highly suitable for deployment in a variety of edge application scenarios.

Table 9.

Comparison of model parameter quantity and performance on edge hardware. The best and second-best results are indicated in bold and underlined, respectively.

3.3. Evaluation of Datasets in Different Fields

FS2R and FS2R-L are image super-resolution reconstruction networks constructed from LR-Layers. To validate the generalizability of the networks, we trained them and performed image reconstruction experiments on integrated circuit (IC) microscopic images. The training set is composed of REFICS [45] and a set of self-collected images. REFICS is a large-scale synthetic scanning electron microscope (SEM) dataset, which includes 800,000 SEM images spanning two technology nodes: 32 nm and 90 nm. We chose 5000 images with minimal noise and high clarity from the active area, polysilicon, and metal layers in REFICS, and combined them with 2000 self-collected high-definition IC microscopic images to form the training set. The self-collected high-definition IC micrographs were acquired from two distinct devices. The first device is fabricated in a 0.18 µm 1P6M BCD (Bipolar-CMOS-DMOS) process; its images were captured at 1800× magnification using an optical microscope. The second device is manufactured in a 55 nm 1P5M Bipolar-CMOS technology; its images were obtained at 200,000× magnification via scanning electron microscopy.

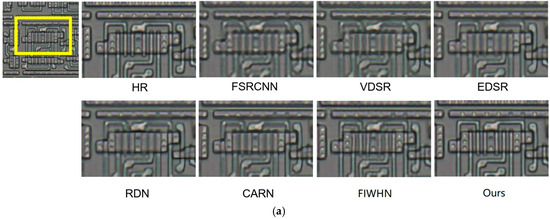

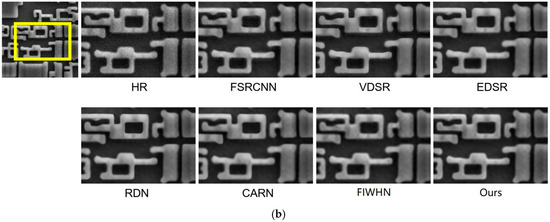

We used 50 self-collected images that do not overlap with the training set as the overall test set. In addition, 30 metal layer images, 30 poly layer images, and 30 DF area images, none of which overlap with the training set, form three independent test sets. We retrained several typical networks on our IC training dataset to compare reconstruction performance. The objective performance indicators of our model at ×4 scale are compared with those of other typical models in Table 10. The visual effects of super-resolution reconstruction of IC microscopic images by FS2R-L are demonstrated in Figure 13.

Table 10.

Quantitative comparison of different algorithms on IC test sets with scale factor of 4. The best, second-best and third-best results are indicated in bold, underlined and double-underlined, respectively.

Figure 13.

Comparison of reconstruction results of typical algorithms and FS2R-L on the IC dataset with scale factor of 4: (a) reconstruction result of the integrated circuit polysilicon layer obtained through an optical microscope; (b) reconstruction result of the integrated circuit active area obtained through a scanning electron microscope.

As shown in Table 10, FS2R achieves better objective evaluation metrics on the four IC image test sets for the ×4 reconstruction task. Compared with performance-oriented models (such as RDN), the structural similarity index of FS2R (taking the Overall test set as an example) is 0.9654, which is higher than that of RDN (0.9478). Compared with other lightweight models (CARN, IMDN, and SwinIR-light), the structural similarity index of FS2R-L (again on the Overall test set) is higher by 0.93%, 1.149%, and 1.07%, respectively.

The aim of developing FS2R is to significantly lower the hardware requirements and reduce image acquisition costs in the context of IC microscopic image acquisition. When engineers inspect the microscopic structure of integrated circuits, their main concern is the circuit’s structural characteristics, such as linewidths and edges. The structural similarity measured by SSIM directly reflects these requirements. A high SSIM index signifies that the reconstructed IC microscopic image agrees more closely with the actual circuit in key structural information, including line shape and edge position. If a model that focuses solely on achieving a high PSNR causes the edges in the microscopic images to be overly smooth, integrating such a model into the IC microscopic image acquisition process would be counterproductive.

Although FS2R’s SSIM advantage is not very noticeable in the subjective comparison on natural image test sets (as shown in Figure 9 and Figure 10), it is evident in the subjective comparison of IC microscopic image reconstruction: FS2R-L effectively restores the edges and textures of the input images, making the details of the integrated circuits clearly visible. In the reconstruction shown in Figure 13a, it is worth highlighting that FS2R-L accurately restores the edges where the polysilicon and active regions overlap. This visual comparison further highlights that FS2R has achieved an advanced performance level, meeting the requirements of practical engineering applications.

In order to further facilitate the practical deployment and application of FS2R in engineering contexts, two approaches were utilized in the task of integrated circuit microscopic image acquisition: standalone acquisition via scanning electron microscopy (SEM) and acquisition via SEM coupled with image super-resolution reconstruction. The acquisition efficiency of these two approaches was statistically compared and shown in Table 11. The super-resolution model employed in the experiment was FS2R, the edge device utilized was Jetson Nano B01, and the acquisition output image size was 256 × 256.

Table 11.

Comparison of the efficiency between standalone acquisition via SEM and acquisition via SEM coupled with image super-resolution reconstruction.

With the same electron beam dwell time and the same target, the SEM combined with super-resolution reconstruction acquisition mode requires 79.3% less time than acquiring microscopic images using the SEM alone. When our acquisition method uses a dwell time of 100 microseconds per pixel to further improve the quality of the acquired microscopic images, the acquisition time is still reduced by 73.1%.
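The source of this saving can be illustrated with a simplified timing model. The sketch below assumes that scan time is the number of scanned pixels multiplied by the dwell time, that a ×4 model is used so a 256 × 256 output requires only a 64 × 64 scan, and that the only added cost is the super-resolution inference time. Stage movement, focusing, and other overheads are ignored, and every numeric value in the usage lines is a hypothetical placeholder rather than a figure from Table 11.

```python
def scan_time_s(height_px, width_px, dwell_s_per_px):
    """Raster-scan time under the simplifying assumption: pixels x dwell time."""
    return height_px * width_px * dwell_s_per_px


def time_saving_fraction(out_h, out_w, scale, dwell_full_s, dwell_lr_s, sr_inference_s):
    """Fraction of acquisition time saved by scanning at low resolution and upscaling.

    dwell_full_s   : dwell time per pixel for SEM-only acquisition
    dwell_lr_s     : dwell time per pixel for the low-resolution scan
    sr_inference_s : time the super-resolution model needs per image
    """
    full = scan_time_s(out_h, out_w, dwell_full_s)
    low_res = scan_time_s(out_h // scale, out_w // scale, dwell_lr_s) + sr_inference_s
    return 1.0 - low_res / full


# Hypothetical placeholders (not measurements from the paper).
saving = time_saving_fraction(
    out_h=256, out_w=256, scale=4,
    dwell_full_s=20e-6, dwell_lr_s=20e-6, sr_inference_s=0.2,
)
print(f"time saved under these assumptions: {saving * 100:.1f}%")
```

With zero inference cost and equal dwell times, the model reduces to the pixel-count ratio, so a ×4 scale factor bounds the saving at 1 − 1/16; inference time and a longer dwell time on the low-resolution scan both reduce the saving below that bound.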

4. Conclusions

In response to the challenges of high computational complexity and large memory consumption in current image super-resolution networks, we propose FS2R and FS2R-L, networks that leverage feature reuse and structural reparameterization techniques. FS2R and FS2R-L enable fast and accurate extraction of local and global deep features from images. By leveraging intrinsic features and low-cost operations to generate redundant features, we design a reparameterization layer (LR-Layer) for feature reuse. Additionally, we develop the FS-Block and LFS-Block modules. These components collectively form the FS2R and FS2R-L networks. Experimental results indicate that FS2R achieves a good balance between performance and network complexity compared with other state-of-the-art algorithms. However, the reconstructed images still exhibit some edge blurring and artifacts, and, compared to advanced lightweight image super-resolution reconstruction algorithms, there is still considerable room to compress the model. In the future, we will focus on improving the image quality of the reconstructed results while further advancing the lightweight design of the model to better adapt it for resource-limited devices.

Author Contributions

Methodology, T.L.; software, T.L.; validation, T.L.; data curation, Q.L.; writing—original draft, T.L.; writing—review & editing, X.J.; project administration, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Cheng, X.; Liu, C.; Li, X.; Chen, T.; Zhang, T.; Sun, L. In-situ observation and kinetics study on shrinkage defect corrosion of ductile iron in NaCl solution. Corros. Sci. 2024, 232, 112034.

2. Sigal, Y.M.; Sahl, S.J. From Blur to Brilliance: The Ascendance of Advanced Microscopy in Neuronal Cell Biology. Annu. Rev. Neurosci. 2024, 47, 235–253.

3. Liu, J.M.; Zhu, J.L.; Yu, Z.; Feng, X.R.; Li, Z.; Zhong, L.; Zhang, J.S.; Gu, H.G.; Chen, X.G.; Jiang, H.; et al. Quasi-visualizable Detection of Deep Sub-wavelength Defects in Patterned Wafers by Breaking the Optical Form Birefringence. Int. J. Extrem. Manuf. 2025, 7, 015601.

4. Chen, H.D.; Ravichandran, J. A System Built for Both Deterministic Transfer Processes and Contact Photolithography. Adv. Eng. Mater. 2024, 26, 2401228.

5. Enni, K.; Sreelakshmy, K.; Lal R, M. Comparative Study on the Effects of Dry and Wet Etching on Surface Characteristics of Fused Silica Optics. Opt. Mater. 2025, 162, 116809.

6. Bhasin, S.; Danger, J.L.; Guilley, S.; Ngo, X.T.; Sauvage, L. Hardware Trojan Horses in Cryptographic IP Cores. In Proceedings of the 10th Workshop on Fault Diagnosis and Tolerance in Cryptography, Santa Barbara, CA, USA, 20 August 2013; pp. 15–29.

7. Sreehari, S.; Venkatakrishnan, S.V.; Bouman, K.L.; Simmons, J.P.; Drummy, L.F.; Bouman, C.A. Multi-resolution Data Fusion for Super-resolution Electron Microscopy. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2017.

8. Chien, J.; Lee, E. Deep-CNN-Based Layout-to-SEM Image Reconstruction with Conformal Uncertainty Calibration for Nanoimprint Lithography in Semiconductor Manufacturing. Electronics 2025, 14, 2973.

9. Yang, W.; Zhang, X.; Tian, Y.; Wang, W.; Xue, J. Deep Learning for Single Image Super-Resolution: A Brief Review. IEEE Trans. Multimedia 2019, 21, 3106–3121.

10. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017.

11. Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

12. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

13. Chen, X.; Wang, X.; Zhou, J.; Qiao, Y.; Dong, C. Activating More Pixels in Image Super-Resolution Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023.

14. Tran, D.P.; Hung, D.D.; Kim, D. Channel-Partitioned Windowed Attention and Frequency Learning for Single Image Super-Resolution. arXiv 2024, arXiv:2407.16232.

15. Hsu, C.C.; Lee, C.M.; Chou, Y.S. DRCT: Saving Image Super-Resolution Away from Information Bottleneck. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 17–21 June 2024.

16. Hui, Z.; Gao, X.; Yang, Y.; Wang, X. Lightweight Image Super-Resolution with Information Multi-Distillation Network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019.

17. Lan, R.; Sun, L.; Liu, Z.; Lu, H.; Pang, C.; Luo, X. MADNet: A Fast and Lightweight Network for Single-Image Super Resolution. IEEE Trans. Cybern. 2020, 51, 1443–1453.

18. Li, W.; Li, J.; Gao, G.; Zhang, K.; Liu, Y. Efficient Image Super-Resolution with Feature Interaction Weighted Hybrid Network. IEEE Trans. Multimed. 2024, 26, 2024–2032.

19. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

20. Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-Style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

21. Chen, C.; Guo, Z.; Zeng, H.; Xiong, P.; Dong, J. RepGhost: A Hardware-Efficient Ghost Module via Re-parameterization. arXiv 2022, arXiv:2211.06088.

22. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

23. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

24. Tong, T.; Li, G.; Liu, X.; Gao, Q. Image Super-Resolution Using Dense Skip Connections. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

25. Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

26. Nie, Y.; Han, K.; Liu, Z.; Xiao, A.; Deng, Y.; Xu, C.; Wang, Y. GhostSR: Learning Ghost Features for Efficient Image Super-Resolution. arXiv 2021, arXiv:2101.08525.

27. Luo, G.; Huang, M.; Zhou, Y.; Sun, X.; Jiang, G.; Wang, Z.; Ji, R. Towards Efficient Visual Adaption via Structural Re-Parameterization. arXiv 2023, arXiv:2302.08106.

28. Ding, X.; Zhang, X.; Han, J.; Ding, G. Diverse Branch Block: Building a Convolution as an Inception-Like Unit. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 10886–10895.

29. Howard, A.G.; Zhu, M.; Chen, B. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

30. Hu, M.; Feng, J.; Hua, J.; Lai, B.; Huang, J.; Gong, X.; Hua, X.-S. Online Convolutional Re-Parameterization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022.

31. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

32. Agustsson, E.; Timofte, R. Ntire 2017 Challenge on Single Image Super-Resolution: Dataset and Study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

33. Wang, Y.; Wang, L.; Yang, J.; An, W.; Guo, Y. Flickr1024: A Large-Scale Dataset for Stereo Image Super-Resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019.

34. Bevilacqua, M.; Roumy, A.; Guillemot, C.; Morel, M.-L.A. Low-Complexity Single-Image Super-Resolution Based on Nonnegative Neighbor Embedding. In Proceedings of the British Machine Vision Conference (BMVC), Surrey, UK, 3–7 September 2012.

35. Elad, M.; Milanfar, P. On Single Image Scale-Up Using Sparse-Representations. In Proceedings of the International Conference on Curves and Surfaces (ICCS), Avignon, France, 24–30 June 2010; pp. 711–730.

36. Martin, D.; Fowlkes, C.; Malik, J. A Database of Human Segmented Natural Images and Its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. In Proceedings of the International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; pp. 416–423.

37. Huang, J.B.; Singh, A.; Ahuja, N. Single Image Super Resolution from Transformed Self-Exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

38. Matsui, Y.; Ito, K.; Aramaki, Y.; Fujimoto, A.; Ogawa, T.; Yamasaki, T.; Aizawa, K. Sketch-based manga retrieval using manga109 dataset. Multimed. Tools Appl. 2017, 76, 21811–21838.

39. Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

40. Kim, J.; Lee, J.K.; Lee, K.M. Deeply-Recursive Convolutional Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1637–1645.

41. Ahn, N.; Kang, B.; Sohn, K.A. Fast, Accurate, and Lightweight Super-Resolution with Cascading Residual Network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 252–268.

42. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

43. Deng, W.; Yuan, H.; Deng, L.; Lu, Z. Reparameterized residual feature network for lightweight image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

44. Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

45. Wilson, R.; Lu, H.; Zhu, M.; Forte, D.; Woodard, D.L. REFICS: Assimilating data-driven paradigms into reverse engineering and hardware assurance on integrated circuits. IEEE Access 2021, 9, 131955–131976.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).