Highlights

What are the main findings?

- Proposed PFL-YOLO, a lightweight deep learning model specifically designed for sheep face recognition, integrating efficient feature extraction strategies.

- Achieved high-precision sheep face recognition with low computational complexity, balancing accuracy and efficiency effectively.

What is the implication of the main finding?

- Outperforms mainstream recognition models in extracting frontal face features of sheep, demonstrating superior performance in livestock monitoring.

- Enables feasible deployment on mobile devices, embedded systems, and surveillance networks, promoting practical applications in intelligent agriculture.

Abstract

Sheep face recognition technology is critical in key areas such as individual sheep identification and behavior monitoring. Existing sheep face recognition models typically require substantial computational resources, and when they are deployed on mobile or embedded devices, problems such as reduced recognition accuracy and increased recognition time arise. To address these problems, an improved sheep face recognition model based on YOLOv8n, Parameter Fusion Lightweight You Only Look Once (PFL-YOLO), is proposed. First, the Efficient Hybrid Conv (EHConv) module is integrated to enhance the model’s extraction of sheep face features. Second, the Residual C2f (RC2f) module is introduced to fuse multi-scale feature information effectively and improve the model’s information processing capability. Third, the Efficient Spatial Pyramid Pooling Fast (ESPPF) module is used to fuse features of different scales. Finally, the detection head is optimized through parameter fusion, yielding the Parameter Fusion Detection (PFDetect) module, which significantly reduces the number of model parameters and the computational complexity. The experimental results show that PFL-YOLO achieves an excellent performance–efficiency balance in sheep face recognition: mAP@50 and mAP@50:95 reach 99.5% and 87.4%, respectively, and its accuracy is close to or equal to that of mainstream benchmark models. Meanwhile, the model has only 1.01 M parameters, 45.1%, 83.7%, 66.6%, 71.4%, and 61.2% fewer than YOLOv5n, YOLOv7-tiny, YOLOv8n, YOLOv9-t, and YOLO11n, respectively. The model size is compressed to 2.1 MB, which is 44.7%, 82.5%, 65%, 72%, and 59.6% smaller than these same lightweight models, respectively. 
The experimental results confirm that PFL-YOLO maintains high recognition accuracy while remaining lightweight and can provide a new solution for sheep face recognition on resource-constrained devices.

1. Introduction

In today’s era of rapid technological development, intelligent agriculture has become an important trend in the field of agriculture. Castañeda-Miranda et al. and Chatterjee et al. used Internet of Things (IoT) technology to realize smart agriculture [,]. Castañeda-Miranda et al. combined IoT technology with artificial neural networks to monitor and collect environmental data in real time, enabling intelligent frost control and irrigation management []. Chatterjee et al. developed an IoT-based livestock health monitoring framework that identifies the health status of dairy cows and analyzes changes in their behavior to predict various dairy cow diseases []. IoT technology has significantly improved the efficiency of agricultural systems. Animal husbandry, a pillar industry of agriculture, is being driven toward precise and intelligent management by IoT, artificial intelligence, and other technologies. Zhang et al. discussed the application of Wearable Internet of Things (W-IoT) technology in smart farms to realize precision livestock breeding, in which accurate identification of individual animals is the key to precision management []. Many researchers have applied deep learning to facial recognition of pigs and cattle to achieve accurate individual identification [,]. Deep learning-driven animal facial recognition is shifting the livestock industry toward individual precision management, intelligent health monitoring, and automated behavioral analysis, providing core technological support for agricultural intelligence. Because sheep are widely farmed worldwide, accurate identification of individual sheep is of great significance for improving farming efficiency and ensuring product safety.

Traditional identification of individual sheep relies mainly on physical marking and manual observation: ear tags are easily lost, branding can cause infection, and spray paint becomes ineffective as the wool grows. Radio Frequency Identification (RFID) [] enables electronic identification but suffers from the risk of tag damage and high cost, while identification by experienced observation of body features is inefficient and difficult to scale up. These methods have significant limitations in durability, automation, and affordability. Biometric technologies are more adaptable, although not equally so: DNA testing is difficult to scale up because it is not real-time and is costly, and iris recognition is limited by equipment investment and the need for animal cooperation. Facial recognition, by contrast, uses deep learning to analyze sheep’s facial features and identify individuals, meeting the demands of both precise management and health-data tracking and providing an efficient and reliable solution for modern agriculture.

With the rapid development of deep learning technology, sheep face recognition has shown important application value in intelligent animal husbandry. Existing research mainly focuses on four directions: Convolutional Neural Networks (CNNs), Vision Transformers (ViTs), metric learning, and model lightweighting. Although each approach has made significant progress in specific scenarios, there is still room for improvement in model efficiency, feature representation capability, and adaptation to complex scenes. Early research focused on building end-to-end recognition frameworks using CNNs. Almog et al. collected 81 Assaf goat facial images, first localized the goat face with Faster R-CNN, and then classified it with a ResNet50V2 model using the ArcFace loss function, achieving an average accuracy of 95% on two datasets, but with 40.4 M parameters []. Billah et al. used a YOLOv4 model to recognize key parts of the goat’s face, with accuracies of 93%, 83%, 92%, and 85% for the face, eyes, ears, and nose, respectively; however, global facial features were not used, and detections may be missed []. Zhang et al. incorporated the CBAM attention mechanism into YOLOv4 and trained it by transfer learning to recognize sheep faces; the mAP@50 was 91.58% and 90.61% on two datasets, respectively, demonstrating the importance of attention in screening key features. However, the very large number of parameters and floating-point operations of YOLOv4 makes it difficult to meet the requirements of mobile deployment []. Zhang et al. incorporated the SE attention mechanism into AlexNet and used the Mish activation function. The recognition accuracy on the validation set reached 98.37% and remained about 96.58% after 100 days of tracking and collection. This demonstrates the long-term stability of the model, but its shallow network structure limits its ability to handle complex occlusion scenarios []. 
Guo et al. collected 10 categories of sheep facial images as a dataset and used knowledge distillation to transfer knowledge from YOLOv5x to YOLOv5s, enhancing feature extraction; the model achieved an accuracy of 92.75%, an mAP@50:95 of 94.67%, and an inference time of 12.63 ms. However, with 7.2 M parameters and a model size of 14.065 MB, it is still far from lightweight []. Pang et al. proposed the Attention Residual Module (ARM) and evaluated it on a self-constructed dataset of 4490 images of 38 sheep; combined with VGG16, GoogLeNet, and ResNet50, ARM improved accuracy by 10.2%, 6.65%, and 4.38%, respectively. However, the ARM module itself has 1.6 M parameters, which may not satisfy lightweight requirements when it is combined with other models [].

Other researchers have achieved good results in sheep face recognition with algorithms based on the Vision Transformer architecture. Li et al. combined MobileNetV2 with ViT and achieved 97.13% accuracy on a dataset of 7434 images of 186 sheep, with about one-fifth of the parameters and floating-point operations of ResNet-50 []. Zhang et al. improved the ViT model by introducing the LayerScale module and transfer learning, achieving 97.9% accuracy on a self-built dataset of 16,000 images of 160 sheep []. Zhang et al. designed a multiview image acquisition device and adopted T2T-ViT, introducing the SE attention mechanism, the LayerScale module, and the ArcFace loss function; the improved T2T-ViT model achieved a recognition accuracy of 95.9% and an F1-Score of 95.5%. However, ViT models are heavyweight, with huge numbers of parameters, which makes it difficult for them to meet lightweight requirements [].

In addition, some researchers have built on Siamese networks. Zhang et al. constructed two datasets, designed RF_Block and EI_Block, and introduced a 3D attention mechanism to construct SAM_Block. On the two datasets of 100 Small-tailed Han sheep, the accuracies were 97.2% and 90.5%, and under small-sample training they were 92.1% and 86.5%, respectively. However, the model size is 112.2 MB, and the average recognition time is 49.7 ms [].

Other researchers have focused on model lightweighting. Zhang et al. lightened the YOLOv5s model to obtain LSR-YOLO. On a self-constructed dataset of 63 Small-tailed Han sheep, LSR-YOLO has 4.8 M parameters and 12.3 GFLOPs, 25.5% and 33.4% lower than YOLOv5s, respectively, and a model size of only 9.5 MB with an mAP@50 of 97.8%; however, its parameter count and FLOPs still leave some room for compression []. Zhang et al. improved the YOLOv7-tiny model and obtained the YOLOv7-SFR algorithm through knowledge distillation. On a self-constructed sheep face dataset, it has 5.9 M parameters, a model size of 11.3 MB, an average recognition time of 3.6 ms, and an mAP@50 of 96.9%. However, introducing the DyHead module increased the parameters and model size by 3.3 M and 6.5 MB, respectively [].

Current research on lightweight sheep face recognition faces four core challenges. First, model parameters and computation are too large: the parameter counts and FLOPs of traditional CNNs (e.g., ResNet50V2, YOLOv4) and Transformer architectures (e.g., ViT) far exceed what mobile terminals can accommodate. Second, attention modules such as CBAM and ARM improve accuracy but introduce additional parameter overhead. Third, robustness in complex scenes is insufficient, and shallow networks (e.g., AlexNet) adapt poorly to occlusion. Fourth, it is difficult to balance lightweighting and accuracy: although existing methods (e.g., LSR-YOLO, YOLOv7-SFR) compress parameters, their FLOPs and model size still limit how lightweight they can become. It is therefore necessary to optimize parameters and computation in depth while guaranteeing accuracy.

Facing these challenges, this paper proposes the PFL-YOLO model, which aims to fill the gap in lightweight sheep face recognition and provide a new solution. PFL-YOLO is a lightweight redesign of YOLOv8n: by optimizing the model structure and parameter configuration, it significantly reduces the number of parameters and the computational complexity, making it better suited to mobile devices and other devices with limited computational resources while maintaining high recognition accuracy. The main contributions of this study are as follows:

- A sheep facial image dataset was constructed through multi-angle image acquisition of 60 Australian–Hu crossbred sheep (bred from Australian White Sheep and Hu Sheep) in the Linxia Hui Autonomous Prefecture, Gansu Province, China.

- To meet the requirements of lightweight sheep face recognition, this study proposes the PFL-YOLO model. The EHConv module is embedded in the backbone network, which greatly reduces the number of parameters and computations while maintaining the receptive field and enhances the ability to extract local fine features such as wool texture and horn shape. The RC2f module is designed to realize the efficient interaction between shallow detail features and deep semantic features through the residual ladder network structure. The ESPPF module is proposed to fuse features of different scales and enhance the semantic information of the sheep face feature map through adaptive spatial pyramid pooling. The PFDetect module is constructed, which greatly reduces the number of parameters and the computation of the detection head through cross-layer parameter sharing and an attention mechanism.

- The PFL-YOLO model keeps its parameter count, computational complexity, and model size extremely low while recognizing a single sheep face efficiently and accurately. In addition, it outperforms mainstream object recognition models in recognizing frontal sheep face features and is suitable for mobile devices, embedded devices, and surveillance systems.

2. Materials and Methods

2.1. Sheep Face Image Dataset

2.1.1. Image Acquisition

The sheep facial image dataset for this study was collected at the Luyuanxin Meat Sheep Industrial Park, Linxia County, Linxia Hui Autonomous Prefecture, Gansu Province, China. In July 2024, the researchers used a Redmi Note 12 Turbo mobile phone to record facial videos of 60 healthy 4- to 5-month-old Australian–Hu crossbred sheep at a resolution of 1920 × 1080 and 60 frames per second. The Australian–Hu crossbred sheep is a cross between the Australian White Sheep and the Hu Sheep; it is hornless or short-horned, with long ears, well-defined facial contours, light skin color, and a gentle expression. Because these sheep are timid and strongly gregarious, in their natural state they tend to cluster and move about in a disorderly way, so the face of the target sheep is often occluded by other sheep, which would hamper annotation in the later stages of the study. Therefore, the sheep were restrained during image capture.

Three sheep-house staff members, referred to as Z, R, and L for ease of presentation, performed the image acquisition. Z stood at the sheep’s front legs and firmly held the sheep’s neck to keep its head relatively still, while R stood at the sheep’s hind legs and worked closely with Z to stabilize its body. Holding the Redmi Note 12 Turbo phone, L filmed the sheep’s face from a distance of 0.25 m to 1.0 m, covering horizontal angles from 0° to 180° and vertical angles from 45° to 135°, with each recording lasting 1 to 2 min. After a sheep had been filmed, R spray-marked its tail to avoid filming it again.

2.1.2. Image Preprocessing

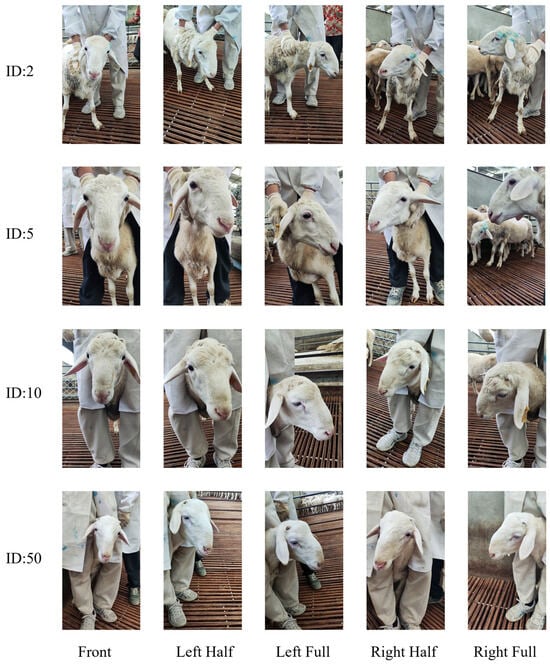

During data processing, the Structural Similarity Index Measure (SSIM) [] was used as the key index of the similarity between two images; an SSIM value close to 1 indicates that the two images are highly similar. The processing proceeded as follows. First, frames were extracted from each sheep face video at an interval of 30 frames (one frame every 0.5 s at 60 fps) to avoid the high similarity caused by small frame intervals, yielding 10,225 images at a resolution of 1920 × 1080. Second, in a manual screening stage, images that did not contain a sheep face, images in which the face was blurred, and images in which facial features were lost because of heavy noise or over-exposure were removed. Finally, SSIM was used to evaluate the similarity of the remaining images within each category, with an SSIM threshold of 0.75 to exclude highly similar images. After manual and SSIM screening, 6418 high-quality sheep face images were obtained. Sheep face images from different angles are shown in Figure 1. SSIM is defined as follows:

$$\mathrm{SSIM}(x,y)=\frac{(2\mu_x\mu_y+C_1)(2\sigma_{xy}+C_2)}{(\mu_x^2+\mu_y^2+C_1)(\sigma_x^2+\sigma_y^2+C_2)} \tag{1}$$

where $x$ and $y$ denote the two images; $\mu_x$ and $\mu_y$ are the mean values of images $x$ and $y$; $\sigma_x^2$ and $\sigma_y^2$ are their variances; and $\sigma_{xy}$ is their covariance. $C_1=(k_1L)^2$ and $C_2=(k_2L)^2$ are constants that prevent the denominator from being zero, where $L$ is the dynamic range of pixel values (usually 255) and $k_1$ and $k_2$ are small constants, typically 0.01 and 0.03.
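The frame sampling and SSIM screening described above can be sketched in plain Python. This is a minimal illustration, not the authors’ code: it evaluates the SSIM formula once over the whole image (a single window), whereas common implementations such as scikit-image’s `structural_similarity` average SSIM over local sliding windows. It also assumes a greedy rule in which an image is kept only if its SSIM with every image already kept is at most 0.75, because the text does not say how image pairs were compared. The function names are hypothetical.

```python
from typing import List, Sequence


def global_ssim(x: Sequence[float], y: Sequence[float],
                L: float = 255.0, k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM computed once over the whole image (single window).

    x, y: flattened grayscale pixel values of two equally sized images.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and have the same size")
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2  # stabilising constants C1 and C2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def sample_frames(frames: Sequence, interval: int = 30) -> list:
    """Keep every `interval`-th frame (0.5 s apart for a 60 fps video)."""
    return list(frames[::interval])


def drop_near_duplicates(images: List[Sequence[float]],
                         threshold: float = 0.75) -> List[Sequence[float]]:
    """Greedy filter: keep an image only if its SSIM to every kept image is <= threshold."""
    kept: List[Sequence[float]] = []
    for img in images:
        if all(global_ssim(img, k) <= threshold for k in kept):
            kept.append(img)
    return kept
```

For example, `drop_near_duplicates([a, a, c])` keeps `a` once and keeps `c` when `c` is structurally different from `a`. In practice the filter would be run separately on the images of each sheep, as the text describes.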

Figure 1.

Images of sheep’s faces at different angles: front, left half-profile, left profile, right half-profile, and right profile views of four sheep (IDs 2, 5, 10, and 50).

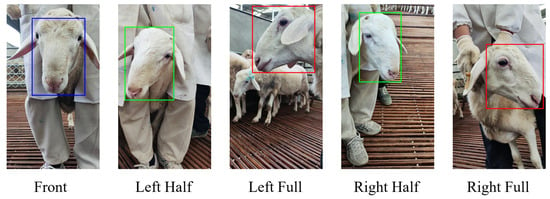

2.1.3. Data Annotations

Make Sense, a free and open-source online annotation tool, was used to annotate the 6418 sheep face images. Each face was labeled with a rectangular box extending from 2 cm above the sheep’s forehead to below its nose, and the box was drawn so as to exclude the sheep’s ear tags, reducing their influence on sheep face recognition. After labeling, the results were exported as .txt files in which each line contains the class ID, the x- and y-coordinates of the bounding-box center, and the box width and height. To ensure rigor, all annotation files were verified. After manual and SSIM screening, each image in the dataset contains exactly one valid annotated ground-truth (GT) target. Figure 2 shows annotation examples of the sheep’s face from different angles.

Figure 2.

Schematic labeling of different angles of the sheep’s face.
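As a sketch of the label format described above, the helpers below convert a pixel-space box into one normalized label line (class ID, center x, center y, width, height) and check that a line stays inside the image. The function names, the example pixel coordinates, and the zero-based class IDs 0 to 59 are illustrative assumptions, not details taken from the study.

```python
from typing import Tuple


def to_yolo_line(class_id: int, box: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Pixel box (x_min, y_min, x_max, y_max) -> 'id x_center y_center width height'.

    All four box values are normalised by the image width or height.
    """
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def is_valid_line(line: str, num_classes: int = 60, eps: float = 1e-6) -> bool:
    """Check one label line: known class ID and a box that stays inside the image."""
    parts = line.split()
    if len(parts) != 5:
        return False
    class_id = int(parts[0])
    x_c, y_c, w, h = map(float, parts[1:])
    if not 0 <= class_id < num_classes or w <= 0 or h <= 0:
        return False
    # every box edge must lie within [0, 1]; eps absorbs rounding to 6 decimals
    return (x_c - w / 2 >= -eps and x_c + w / 2 <= 1 + eps
            and y_c - h / 2 >= -eps and y_c + h / 2 <= 1 + eps)
```

For a 1920 × 1080 frame, a face box from (480, 270) to (1440, 810) for class 4 becomes `"4 0.500000 0.500000 0.500000 0.500000"`.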

2.1.4. Data Augmentation

To improve the robustness and generalization of the model, the Albumentations library was used to augment the sheep face dataset and simulate the facial appearance of sheep in different scenes. Six augmentation methods were selected to cover diverse scene variations such as different angles and light intensities: horizontal flip, vertical flip, random 90-degree rotation, shift-scale-rotate, random brightness and contrast adjustment, and hue, saturation, and luminance adjustment. Each time an image was augmented, one of these six methods was chosen at random; this random selection strategy improves generalization while avoiding over-augmentation of the dataset. After augmentation, the dataset was expanded to 12,836 images. It was then divided at a ratio of 8:1:1 into a training set (10,272 images), a validation set (1282 images), and a test set (1282 images) for model training, validation, and performance evaluation. After augmentation, the label files were verified, focusing on whether the center-point coordinates, widths, and heights fell outside the valid range. Table 1 shows the dataset split. Table 2 shows the number of images of each of the 60 sheep before and after data augmentation.

Table 1.

Split of the sheep face dataset.

Table 2.

The number of images per sheep before and after data augmentation.
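The one-of-six augmentation strategy and the subsequent label check can be illustrated without the Albumentations dependency. The sketch below, with hypothetical function names, shows how the geometric transforms move a normalized YOLO box and how the photometric transforms leave it unchanged. In Albumentations itself this kind of random choice is usually expressed with its `OneOf` wrapper, which the sketch does not reproduce. Shift-scale-rotate is omitted, and only one rotation direction is modeled.

```python
import random
from typing import Callable, Dict, Tuple

Box = Tuple[float, float, float, float]  # normalised YOLO box (x_c, y_c, w, h)


def horizontal_flip(b: Box) -> Box:
    x_c, y_c, w, h = b
    return (1.0 - x_c, y_c, w, h)


def vertical_flip(b: Box) -> Box:
    x_c, y_c, w, h = b
    return (x_c, 1.0 - y_c, w, h)


def rotate90_ccw(b: Box) -> Box:
    # a 90-degree counter-clockwise turn maps (x, y) -> (y, 1 - x) and swaps w and h
    x_c, y_c, w, h = b
    return (y_c, 1.0 - x_c, h, w)


def photometric(b: Box) -> Box:
    # brightness/contrast and hue/saturation changes do not move the box
    return b


# Hypothetical registry; shift-scale-rotate is left out because its box update
# depends on the randomly sampled affine parameters.
TRANSFORMS: Dict[str, Callable[[Box], Box]] = {
    "horizontal_flip": horizontal_flip,
    "vertical_flip": vertical_flip,
    "rotate90": rotate90_ccw,
    "brightness_contrast": photometric,
    "hue_saturation": photometric,
}


def augment_once(box: Box, rng: random.Random) -> Tuple[str, Box]:
    """Pick exactly one transform at random (one-of-N) and apply it to the box."""
    name = rng.choice(sorted(TRANSFORMS))
    return name, TRANSFORMS[name](box)
```

Because each image receives exactly one transform, boxes never accumulate several geometric changes, which keeps the post-augmentation boundary check simple.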

2.2. PFL-YOLO Model

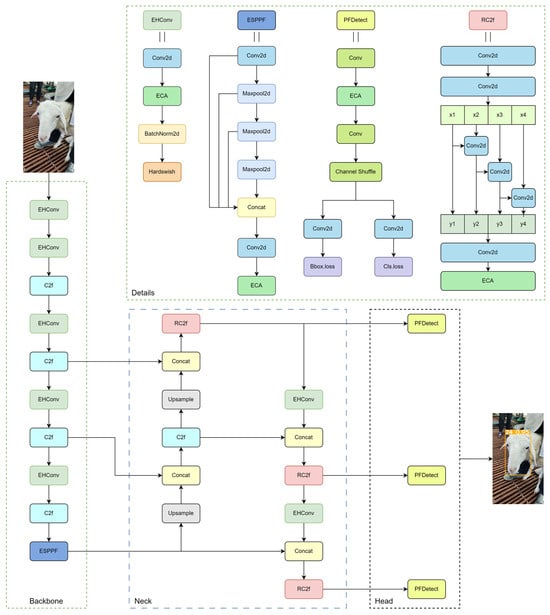

YOLOv8n, the lightest model in the YOLOv8 series, is widely used in lightweight recognition scenarios. On the self-built sheep face dataset, YOLOv8n achieved high recognition accuracy and had a relatively low demand for computational resources. However, in real production environments, mobile and embedded devices may face computational resource limitations, affecting the model’s recognition accuracy and detection speed. Based on this, this study lightened and improved the YOLOv8n model to reduce its dependence on computational resources, and in this paper, the improved model was named PFL-YOLO. Its network structure diagram is shown in Figure 3.

Figure 3.

The PFL-YOLO network consists of backbone, neck, and head parts and introduces four improved modules: EHConv, ESPPF, RC2f, and PFDetect. The Details section of the figure shows the structure of each improved module; every Conv2d layer uses grouped convolution. In addition, the ECA attention mechanism and the Hardswish activation function are incorporated into the architecture.

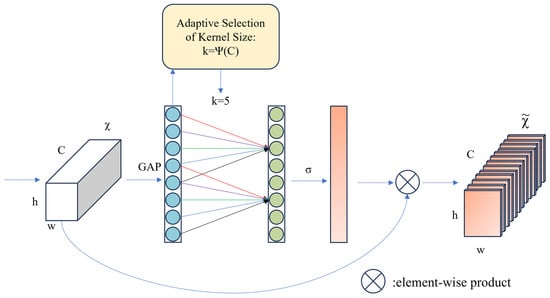

2.2.1. ECA Attention Mechanism

ECA (Efficient Channel Attention) [] is a channel attention mechanism that enables the model to focus on key parts of the input data, such as target regions in the labeled data. It adaptively reweights features along the channel dimension, directing the model’s attention to information-rich channels while suppressing relatively unimportant ones, thereby improving the model’s feature representation capability. Unlike attention mechanisms such as SE, the ECA module avoids channel dimensionality reduction and re-expansion; it is efficient and lightweight, which makes it well suited to lightweight models. The ECA module first obtains global features through Global Average Pooling. It then applies a one-dimensional convolution (Conv1D) across the channel descriptors, whose kernel size $k$ is computed adaptively from the number of channels $C$, followed by a Sigmoid activation; the output is reshaped to give one weight per channel. In the original ECA formulation the kernel size is

$$k=\left|\frac{\log_2 C}{\gamma}+\frac{b}{\gamma}\right|_{odd},$$

where $|t|_{odd}$ denotes the nearest odd number to $t$, with $\gamma=2$ and $b=1$. Finally, each channel is multiplied by its corresponding weight, making the network focus on the features that benefit the current task. The ECA module is shown in Figure 4.

Figure 4.

The network structure of the ECA attention mechanism.
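A minimal, dependency-free sketch of the ECA computation described above follows. The kernel-size rule and its defaults (γ = 2, b = 1) follow the reference ECA-Net code, which truncates to an integer and bumps even values up by one; whether PFL-YOLO uses the same values is not stated. The 1D kernel weights, which are learned in a real model, are passed in by hand here, and the function names are hypothetical.

```python
import math
from typing import List

Feature = List[List[List[float]]]  # C x H x W nested lists


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size k from the channel count C."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1  # force an odd size


def eca(x: Feature, weights: List[float]) -> Feature:
    """Apply ECA channel attention with a given (normally learned) 1D kernel."""
    c, k = len(x), len(weights)
    pad = k // 2
    # 1) global average pooling: one descriptor per channel
    gap = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # 2) 1D convolution across neighbouring channels: zero padding, no bias,
    #    and no channel dimensionality reduction
    conv = [sum(w * gap[i + j - pad] for j, w in enumerate(weights) if 0 <= i + j - pad < c)
            for i in range(c)]
    # 3) sigmoid turns each response into a channel weight in (0, 1)
    gates = [1.0 / (1.0 + math.exp(-v)) for v in conv]
    # 4) rescale every value of channel i by gate i
    return [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]
```

The only trainable parameters are the k kernel weights (for example, 3 for 64 channels and 5 for 256 channels), which is why ECA adds almost nothing to the parameter count.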

2.2.2. Hardswish Activation Function

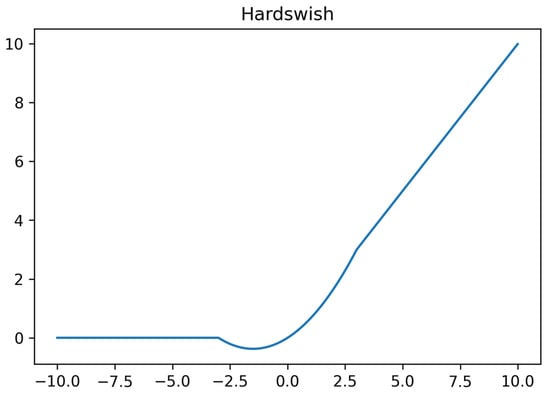

Hardswish is an activation function optimized for mobile devices and embedded systems. By replacing the sigmoid in the Swish function with a piecewise-linear approximation, it greatly improves computational efficiency while retaining Swish’s nonlinear properties. This is especially important for resource-constrained devices, where it can speed up the model without sacrificing much performance. Figure 5 shows the graph of the Hardswish activation function, which is defined as follows:

$$\mathrm{Hardswish}(x)=x\cdot\frac{\mathrm{ReLU6}(x+3)}{6}=\begin{cases}0, & x\le -3\\ \dfrac{x(x+3)}{6}, & -3<x<3\\ x, & x\ge 3\end{cases} \tag{2}$$

Figure 5.

The graph of the Hardswish activation function.
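The definition of Hardswish can be checked numerically against Swish, x · sigmoid(x). In the sketch below (function names are illustrative), the two curves are close over [-6, 6], and the gap is largest near x = ±3, where it is about 0.14.

```python
import math


def relu6(x: float) -> float:
    return min(max(0.0, x), 6.0)


def hardswish(x: float) -> float:
    # x * ReLU6(x + 3) / 6: only a clamp, a multiply, and a divide
    return x * relu6(x + 3.0) / 6.0


def swish(x: float) -> float:
    # x * sigmoid(x), which needs an exponential
    return x / (1.0 + math.exp(-x))
```

Avoiding the exponential is what makes Hardswish cheaper on mobile and embedded hardware.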

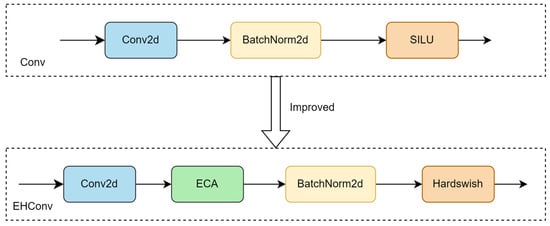

2.2.3. Improved EHConv Module

To strengthen the model’s ability to extract basic sheep face features and capture image details, the Conv module was improved to obtain the EHConv module. Specifically, grouped convolution is used in the Conv2d layer, which reduces model complexity while effectively maintaining feature extraction ability. The ECA attention mechanism is then added after the Conv2d layer to emphasize the feature channels that matter for sheep face recognition, improving the discriminative and expressive power of the features. BatchNorm2d is used to accelerate training and improve training efficiency. Finally, the Hardswish activation function is applied, which can provide a more efficient nonlinear transformation and helps the model learn more complex features. Figure 6 compares the Conv module before and after the improvement.

Figure 6.

A comparison of the Conv module before and after improvement.
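The parameter savings of grouped convolution in EHConv can be estimated with simple arithmetic: a k × k Conv2d with g groups has (C_in / g) · C_out · k² weights, so grouping divides the convolution weights by g. The sketch below compares a YOLOv8-style Conv block (Conv2d without bias plus BatchNorm2d) with an EHConv-style block. The group count g = 4 and the channel sizes in the example are hypothetical, since the text does not give them, and the ECA kernel size follows the ECA-Net default rule.

```python
import math


def conv2d_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = False) -> int:
    """Weights of a k x k Conv2d: each output channel sees only c_in / groups inputs."""
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must be divisible by groups")
    return (c_in // groups) * c_out * k * k + (c_out if bias else 0)


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def conv_block_params(c_in: int, c_out: int, k: int) -> int:
    """YOLOv8-style Conv: Conv2d (no bias) + BatchNorm2d (learnable gamma and beta)."""
    return conv2d_params(c_in, c_out, k) + 2 * c_out


def ehconv_block_params(c_in: int, c_out: int, k: int, groups: int) -> int:
    """Grouped Conv2d + ECA (one shared 1D kernel) + BatchNorm2d; Hardswish has no weights."""
    return conv2d_params(c_in, c_out, k, groups) + eca_kernel_size(c_out) + 2 * c_out
```

For a hypothetical 64 → 128-channel 3 × 3 layer, `conv_block_params(64, 128, 3)` gives 73,984 parameters and `ehconv_block_params(64, 128, 3, 4)` gives 18,693, so the ECA overhead (5 weights) is negligible next to the savings from grouping.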

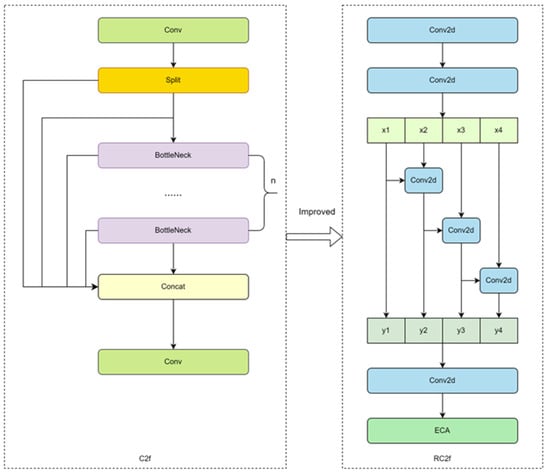

2.2.4. Improved RC2f Module

To address the performance and efficiency limitations of YOLOv8’s C2f module on resource-constrained devices, an improved RC2f module was proposed. The design of RC2f follows the module structure of Res2Net [], and the restructuring enables finer-grained processing of feature information. In RC2f, initial features are first extracted by two Conv2d layers and then split into four groups along the channel dimension. Starting from the second group [], each group processes its own information while receiving and integrating the channel information of the previous group, and cross-group feature fusion is realized by element-wise summation. This design enables the model to capture complex feature relationships more effectively. The fused features are concatenated along the channel dimension and further refined by a Conv2d layer. Finally, a lightweight ECA attention mechanism adaptively adjusts the channel weights, enhancing the model’s focus on key features while maintaining computational efficiency. These improvements cut unnecessary computation and improve computational efficiency. In scenarios such as sheep face recognition, feature information can also be processed more finely, which helps the model focus on the key parts of the sheep’s face. Figure 7 compares the C2f module before and after the improvement.

Figure 7.

Comparison of C2f module before and after improvement.
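The hierarchical, Res2Net-style fusion inside RC2f can be sketched on toy vectors. The sketch assumes the Res2Net convention: the first group passes through unchanged, the second is transformed on its own, and each later group is summed with the previous group’s output before its own transform. The per-group operators stand in for the Conv2d layers, the function names are hypothetical, and the exact wiring in RC2f may differ.

```python
from typing import Callable, List

Vector = List[float]


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def hierarchical_fuse(groups: List[Vector],
                      ops: List[Callable[[Vector], Vector]]) -> List[Vector]:
    """Res2Net-style processing of channel groups.

    y1 = x1; y2 = K2(x2); yi = Ki(xi + y_{i-1}) for i >= 3.
    """
    outputs = [groups[0]]  # first group is passed through unchanged
    for i in range(1, len(groups)):
        inp = groups[i] if i == 1 else add(groups[i], outputs[-1])
        outputs.append(ops[i](inp))
    return outputs


def concat(parts: List[Vector]) -> Vector:
    """Concatenate group outputs along the channel dimension."""
    return [v for part in parts for v in part]
```

Because each later group also sees the transformed output of the previous one, later groups accumulate a larger effective receptive field, which is how the module mixes fine and coarse information at little extra cost.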

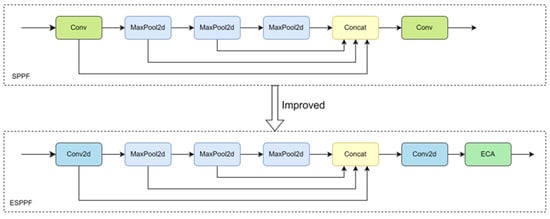

2.2.5. Improved ESPPF Module

To realize multi-scale feature fusion with fewer parameters and thereby enrich the semantic information of the sheep face feature map, the ESPPF module was proposed on the basis of the SPPF module. First, the first Conv module in SPPF is replaced by a grouped Conv2d layer, which effectively reduces the number of parameters. Next, after a series of pooling operations, the sheep facial features at different scales are fused along the channel dimension. A grouped Conv2d layer is then applied again to reduce the number of parameters and the computational complexity. Finally, the ECA attention mechanism filters the channel information to optimize the feature fusion. Figure 8 compares the SPPF module before and after the improvement.

Figure 8.

A comparison of the SPPF module before and after improvement.
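Why sequential pooling yields multi-scale features can be shown on a toy grid. Assuming ESPPF keeps SPPF’s three successive 5 × 5 max-pooling layers (stride 1, padding 2, as in YOLOv8’s SPPF), a single active cell spreads to 5 × 5, 9 × 9, and 13 × 13 regions, which matches parallel pooling windows of 5, 9, and 13. The grouped convolutions and ECA around the pooling are omitted, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def max_pool_same(grid: Grid, k: int = 5) -> Grid:
    """Stride-1 max pooling with padding k // 2; output keeps the input size.

    Out-of-range cells are ignored, which matches padding with -inf.
    """
    h, w = len(grid), len(grid[0])
    r = k // 2
    return [[max(grid[a][b]
                 for a in range(max(0, i - r), min(h, i + r + 1))
                 for b in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)]
            for i in range(h)]


def sppf_branches(grid: Grid, k: int = 5) -> List[Grid]:
    """Input plus three successive poolings; the real module concatenates them on channels."""
    y1 = max_pool_same(grid, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return [grid, y1, y2, y3]
```

Reusing each pooled map as the input to the next pooling gives the larger receptive fields at the cost of three small 5 × 5 poolings instead of one 13 × 13 pooling.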

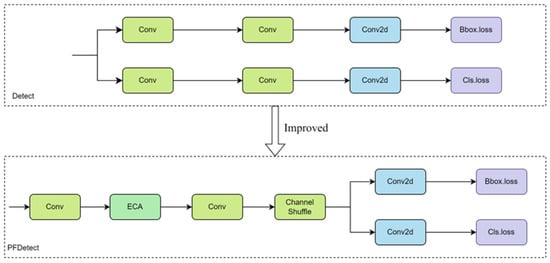

2.2.6. Improved PFDetect Detection Head

In this study, the original Detect head was optimized into the PFDetect head to make YOLOv8n lighter for the sheep face recognition task. The core idea of PFDetect is to merge structures so as to reduce the number of parameters and the computational complexity. First, the original Detect head contains two independent branches (bounding-box regression and classification), which increases the number of parameters and the computational burden. The branches were therefore merged so that they share a Conv module, which reduces the number of parameters, simplifies the model structure, and improves efficiency by sharing the feature extraction process. Second, to reduce the number of parameters further, grouped convolution is used in the merged Conv module, which effectively reduces parameters and computation. However, grouped convolution can limit information exchange between groups and weaken the expressiveness of features. Channel Shuffle [] was therefore used to improve information exchange between groups and preserve feature richness. In addition, to strengthen the model’s ability to capture key features, an ECA attention mechanism was added between the two Conv modules; it adaptively focuses on important feature channels, improving the model’s sensitivity to key information and its ability to discriminate features.

These improvements significantly reduce the number of parameters and computational complexity of the model, improve the operational efficiency of the model, and enhance its applicability on resource-constrained devices, providing strong technical support for real-time application scenarios such as sheep face recognition. The structure of Detect before and after the improvement is shown in Figure 9:

Figure 9.

Comparison of Detect module before and after improvement.
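Channel Shuffle itself is a pure reindexing: the channels are viewed as a (groups, channels per group) array, transposed, and flattened, so that the next grouped convolution receives channels from every previous group. A sketch on channel indices, with a hypothetical function name:

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """Reshape to (groups, per_group), transpose to (per_group, groups), flatten."""
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]
```

With 6 channels in 2 groups, `[0, 1, 2 | 3, 4, 5]` becomes `[0, 3, 1, 4, 2, 5]`, so each new group of three mixes channels from both original groups. The operation has no parameters, which is why it suits a head designed to minimize them.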

2.3. Experimental Configuration and Parameter Setting

Because the sheep face dataset in this study is large and its images have a high resolution of 1920 × 1080, training could take an excessively long time. To reduce training time and improve efficiency, a server was used throughout the experiments. The server ran CentOS 8.5, with an Intel(R) Xeon(R) Gold 6148 CPU @ 2.40 GHz (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA Tesla V100 GPU (NVIDIA Corporation, Santa Clara, CA, USA). The experimental environment settings are shown in Table 3. All models were trained on a single GPU without pretrained weights. The training hyperparameter settings are shown in Table 4.

Table 3.

Hardware configuration and software environment for algorithm operation.

Table 4.

Hyperparameter information during training.

2.4. Evaluation Indicators

To evaluate the accuracy of the PFL-YOLO model in recognizing sheep faces, precision, recall, mAP@50, mAP@50:95, and F1-Score were selected as accuracy metrics. The number of parameters, floating-point operations (FLOPs), model size, average detection time (Dt), and frame rate (FPS) were selected as lightweight metrics. Precision, recall, F1-Score, mAP (mean average precision), average detection time (Dt), and FPS are calculated as follows:

$$P=\frac{TP}{TP+FP} \tag{3}$$

$$R=\frac{TP}{TP+FN} \tag{4}$$

$$F1=\frac{2\times P\times R}{P+R} \tag{5}$$

$$mAP=\frac{1}{N}\sum_{i=1}^{N}AP_i \tag{6}$$

$$D_t=\frac{1}{M}\sum_{j=1}^{M}t_j \tag{7}$$

$$FPS=\frac{1000}{D_t} \tag{8}$$

where TP (True Positive) means that the model correctly recognizes a sheep’s ID; FP (False Positive) means that the model incorrectly recognizes a sheep’s ID as another sheep’s ID; and FN (False Negative) means that the model fails to recognize a sheep ID that is present. In Equation (6), N is the number of categories in the sheep face dataset, which is 60 here, and AP_i is the area under the P-R curve of category i, so mAP is the mean AP over all categories. mAP@50 is computed at an IoU threshold of 0.5, and mAP@50:95 averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05. The detection time t_j of image j is the sum of its pre-processing, inference, and post-processing times, M is the number of images in the test set, and Dt is expressed in milliseconds.
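The accuracy and speed metrics above translate directly into code. This sketch, with hypothetical function names, takes detection counts, P-R points, and per-image timings as given inputs; producing them requires matching predictions to ground truth at a chosen IoU threshold, which is not shown. Its AP uses all-point interpolation, one of several conventions, so values may differ slightly from those reported by a particular toolkit. FPS assumes Dt is in milliseconds.

```python
from typing import Sequence, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated area under a P-R curve (recalls sorted ascending)."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # precision envelope: make precision non-increasing from right to left
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(aps: Sequence[float]) -> float:
    """mAP: mean of the per-category APs (60 categories in this dataset)."""
    return sum(aps) / len(aps)


def mean_detection_time_ms(timings: Sequence[Tuple[float, float, float]]) -> float:
    """timings: per-image (pre_process, inference, post_process) in milliseconds."""
    return sum(sum(t) for t in timings) / len(timings)


def fps(dt_ms: float) -> float:
    return 1000.0 / dt_ms
```

For example, 90 correct identifications with 10 false positives and 10 misses give P = R = F1 = 0.9, and per-image times of 4 ms and 5 ms give Dt = 4.5 ms, or about 222 FPS.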

3. Results

3.1. Results and Analysis of Ablation Experiments

To understand the impact of each improved module on the model, ablation experiments were designed. All ablation experiments were conducted with the same server configuration and the same hyperparameters. The results are shown in Table 5 and Table 6, where √ indicates that a module is used and a blank cell indicates that it is not.

Table 5.

Precision indicators for ablation experiments.

Table 6.

Lightweighting parameters for ablation experiments.

Adding the ESPPF module alone to YOLOv8n reduced the number of parameters by 5.3% (0.16 M) but lowered recall by 0.7% and mAP@50 by 0.2%. This suggests that ESPPF contributes to lightweighting the model at some cost to detection performance, particularly recall.

On the basis of YOLOv8n+ESPPF, adding the EHConv module alone increased precision by 0.1%, reduced the number of parameters by 23.8% (0.72 M), and reduced FLOPs by 14.8% (1.2 G), but decreased recall by 0.2%. This suggests that EHConv mainly contributes to lightweighting while slightly improving precision, with relatively little impact on recall. Adding the RC2f module alone reduced the number of parameters by 24.8% (0.75 M), FLOPs by 13.5% (1.1 G), and model size by 25% (1.5 MB), but decreased recall by 0.2% and mAP@50:95 by 0.4%. RC2f therefore compresses the model substantially at an acceptable cost in detection performance. Adding the PFDetect module alone increased precision by 0.1% and reduced the number of parameters by 28.4% (0.86 M), FLOPs by 33.3% (2.7 G), and model size by 28.3% (1.7 MB), while decreasing mAP@50:95 by 0.4%. This suggests that PFDetect compresses the model considerably, slightly improves precision, and has a relatively small effect on mAP@50:95.

Based on the YOLOv8n+ESPPF model, the EHConv, RC2f, and PFDetect modules were then combined in pairs to explore how they interact. Combining EHConv with RC2f reduced mAP@50:95 by 0.9% but improved recall by 0.2% and reduced the number of parameters by 43.6% (1.31 M), model size by 41.2% (2.6 MB), and FLOPs by 27.1% (2.2 G). Combining EHConv with PFDetect reduced recall by 0.5% but reduced the number of parameters by 47% (1.42 M), model size by 45% (2.7 MB), and FLOPs by 46.9% (3.8 G). Combining RC2f with PFDetect reduced recall by 1.1% but reduced the number of parameters by 48% (1.45 M), model size by 46.7% (2.8 MB), and FLOPs by 45.6% (3.7 G).

After all four modules were integrated, the number of parameters was compressed to 33.4% (1.01 M) of that of YOLOv8n, and FLOPs were reduced by 59.3% (4.8 G). The core detection accuracy (mAP@50 of 99.5%) was maintained, although mAP@50:95 decreased by 1.0%. The combination of the four modules therefore achieves an effective balance between a lightweight model (1.01 M parameters, 2.1 MB) and high accuracy.
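The reduction percentages above appear to be measured against the original YOLOv8n rather than against YOLOv8n+ESPPF: 0.72 M is 23.8% of roughly 3.02 M, whereas it would be about 25% of the 2.86 M left after ESPPF. The short Python sketch below reproduces a few of the reported figures under that reading. The baseline values (about 3.02 M parameters and 8.1 GFLOPs) are back-calculated from the percentages in the text, not quoted from Table 6, and because the absolute savings are themselves rounded, some percentages may differ in the last digit.

```python
# Assumed YOLOv8n baseline, back-calculated from the percentages in the text
# (e.g. 1.01 M = 33.4% -> ~3.02 M; 4.8 G = 59.3% -> ~8.1 G); not quoted from Table 6.
BASELINE_PARAMS_M = 3.02
BASELINE_GFLOPS = 8.1


def reduction_pct(baseline: float, reduced_by: float) -> float:
    """Relative reduction, in percent, of an absolute saving against the baseline."""
    return 100.0 * reduced_by / baseline


def share_pct(baseline: float, value: float) -> float:
    """Remaining size as a percentage of the baseline."""
    return 100.0 * value / baseline


print(f"ESPPF parameter saving: {reduction_pct(BASELINE_PARAMS_M, 0.16):.1f}%")
print(f"Four-module FLOPs saving: {reduction_pct(BASELINE_GFLOPS, 4.8):.1f}%")
print(f"PFL-YOLO parameters vs. YOLOv8n: {share_pct(BASELINE_PARAMS_M, 1.01):.1f}%")
```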

3.2. Comparison Experiment

To evaluate model performance comprehensively, several lightweight versions of the YOLO series were selected for comparison: YOLOv5n, YOLOv7-tiny [], YOLOv8n, YOLOv9-t [], and YOLO11n, which are the most lightweight models in their respective series. To further investigate lightweight designs, this study also built several lightweight variants of YOLOv8n. Using Hugging Face's timm library, the layers of the YOLOv8n backbone before the SPPF module were replaced with more lightweight architectures: mobilenetv3_small_050, mobilenetv4_conv_small_035, ghostnet_050, and lcnet_035. Following timm's naming convention, the numeric suffix denotes the width multiplier: mobilenetv3_small_050 is the small variant of MobileNetV3 [] with a width of 0.5; mobilenetv4_conv_small_035 is the small convolutional variant of MobileNetV4 [] with a width of 0.35; ghostnet_050 is GhostNet [] with a width of 0.5; and lcnet_035 is LCNet [] with a width of 0.35. The resulting models are referred to as V3-YOLOv8n, V4-YOLOv8n, Ghost-YOLOv8n, and LCNet-YOLOv8n, respectively, in the subsequent analysis and comparison.
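A width multiplier does not scale every layer exactly. MobileNet-family reference code, and timm's own `make_divisible` helper, round each scaled channel count to a hardware-friendly multiple. The Python sketch below reproduces that rounding rule under the assumption of a divisor of 8 and a 90% round-down limit; the base channel counts in the usage lines are illustrative, not taken from any specific layer of the models above.

```python
from typing import Optional


def make_divisible(v: float, divisor: int = 8, min_value: Optional[int] = None,
                   round_limit: float = 0.9) -> int:
    """Round a scaled channel count to the nearest multiple of `divisor`,
    never dropping more than (1 - round_limit) of the requested width."""
    min_value = min_value or divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < round_limit * v:  # rounding down removed more than 10% of channels
        new_v += divisor
    return new_v


def scaled_channels(base_channels: int, width_multiplier: float) -> int:
    """Channels of a layer after applying a width multiplier such as 0.5 or 0.35."""
    return make_divisible(base_channels * width_multiplier)


print(scaled_channels(16, 0.5), scaled_channels(96, 0.35), scaled_channels(576, 0.5))
```

With these assumptions, a 0.35 multiplier leaves a 16-channel layer with 8 channels (50% of the original), because channel counts are floored at the divisor; the effective compression of narrow layers is therefore smaller than the nominal width suggests.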

3.2.1. PFL-YOLO: Optimal Balance of Performance and Efficiency

A comprehensive analysis of the YOLO series models and their improved versions shows that PFL-YOLO has distinct advantages. In terms of key performance metrics (Table 7), PFL-YOLO achieved 98.5% precision, 98.8% recall, 99.5% mAP@50, 87.4% mAP@50:95, and a 98.65% F1-Score. Although PFL-YOLO did not achieve the highest score on every criterion, it remained competitive on all major indicators and performed particularly well on mAP@50 and F1-Score. In terms of efficiency (Table 8), PFL-YOLO requires only 1.01 M parameters, 3.3 GFLOPs, and 2.1 MB of storage, with an average detection time of 11.2 ms, or approximately 89.4 FPS. Compared with models such as YOLOv5n and YOLOv7-tiny, PFL-YOLO substantially reduces computational requirements and model size while maintaining similar or better detection performance, which makes it well suited to deployment on resource-constrained devices.

Table 7.

Comparison of precision indicators.

Table 8.

Comparison of lightweight parameters.

Further comparison shows that although some models, such as YOLOv9-t, score slightly higher than PFL-YOLO in precision and recall, they require more parameters, more computation, and larger model sizes; for example, YOLOv9-t has 3.51 million parameters and 15.3 GFLOPs and infers more slowly than PFL-YOLO. In addition, the backbone-modified models such as V3-YOLOv8n and Ghost-YOLOv8n are similar to PFL-YOLO in parameter count and model size but inferior in detection performance, which again indicates that PFL-YOLO strikes a better balance between efficiency and performance.

In summary, PFL-YOLO combines strong detection performance with low computational cost and a small model size. This makes it particularly suitable for scenarios with strict real-time requirements and limited computational resources, such as object detection on mobile devices or in edge computing environments.
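The F1-Score and FPS reported for PFL-YOLO follow directly from its other numbers, which makes them easy to cross-check. A minimal Python sketch, assuming FPS = 1000 / Dt with Dt in milliseconds:

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall (both in percent)."""
    return 2 * precision_pct * recall_pct / (precision_pct + recall_pct)


def fps_from_dt(dt_ms: float) -> float:
    """Frames per second from the average per-image detection time in milliseconds."""
    return 1000.0 / dt_ms


print(f"F1  = {f1_score(98.5, 98.8):.2f}%")  # PFL-YOLO precision and recall (Table 7)
print(f"FPS = {fps_from_dt(11.2):.1f}")       # Dt = 11.2 ms (Table 8)
```

The F1-Score of 98.65% matches exactly. 1000 / 11.2 gives about 89.3 FPS rather than 89.4; a rate of 89.4 FPS corresponds to Dt ≈ 11.19 ms, so the small gap is presumably due to Dt being rounded to 11.2 ms.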

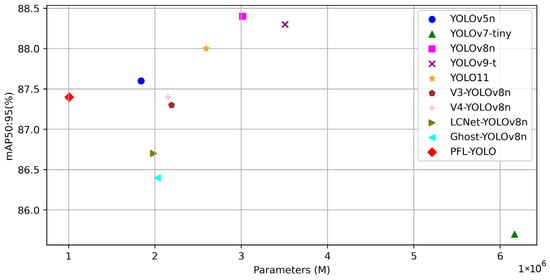

3.2.2. Balance of High Precision and Low Parameters

The PFL-YOLO model substantially reduces the number of parameters and computational complexity while maintaining high accuracy, lowering the demand for computational resources. It can therefore be deployed on resource-constrained devices to recognize sheep faces effectively in different scenarios, providing an efficient, lightweight solution for individual sheep recognition. Figure 10 plots accuracy against the number of parameters for the ten object detection models; models closer to the upper-left corner combine higher recognition accuracy with fewer parameters. PFL-YOLO achieves an mAP@50:95 of 87.4%. Among the other models, YOLOv8n has the highest mAP@50:95, at 88.4%; compared with YOLOv8n, PFL-YOLO loses only 1.0 percentage point while using about 2 M fewer parameters. PFL-YOLO thus achieves a balance between high accuracy and a lightweight design.

Figure 10.

Scatter plot of accuracy and number of parameters.
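The "upper-left corner" reading of Figure 10 is a Pareto-optimality argument: a model is worth considering only if no other model is at least as accurate with at most as many parameters. The Python sketch below finds that front. PFL-YOLO's values are from the text; YOLOv8n's parameter count (about 3.02 M) is back-calculated from the reported 33.4% ratio, and "Model-X" is a hypothetical entry included only to show a dominated point.

```python
from typing import List, NamedTuple, Sequence


class ModelPoint(NamedTuple):
    name: str
    params_m: float  # parameters, millions
    map50_95: float  # mAP@50:95, percent


def dominates(a: ModelPoint, b: ModelPoint) -> bool:
    """True if `a` is no larger and no less accurate than `b`, and strictly better in one."""
    no_worse = a.params_m <= b.params_m and a.map50_95 >= b.map50_95
    strictly_better = a.params_m < b.params_m or a.map50_95 > b.map50_95
    return no_worse and strictly_better


def pareto_front(models: Sequence[ModelPoint]) -> List[ModelPoint]:
    """Models not dominated by any other, ordered from smallest to largest."""
    front = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(front, key=lambda m: m.params_m)


models = [
    ModelPoint("PFL-YOLO", 1.01, 87.4),
    ModelPoint("YOLOv8n", 3.02, 88.4),  # parameter count approximate (back-calculated)
    ModelPoint("Model-X", 2.50, 86.0),  # hypothetical, for illustration only
]
print([m.name for m in pareto_front(models)])
```

Both PFL-YOLO and YOLOv8n lie on the front, which is why the choice between them is a trade-off rather than a strict ranking; the hypothetical Model-X is dominated by PFL-YOLO.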

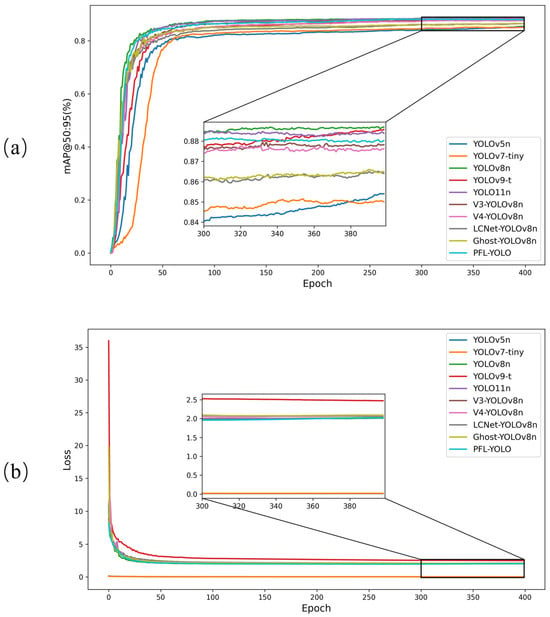

3.2.3. Comparison of Accuracy and Loss Curves for Different Models

Figure 11 shows the mAP@50:95 curves and the loss curves of the ten object detection models on the validation set. In Figure 11a, the mAP@50:95 of PFL-YOLO is slightly lower than those of YOLOv8n and YOLOv9-t but higher than those of the backbone-modified models. The lower accuracy of YOLOv5n and YOLOv7-tiny suggests their limitations in complex tasks. In the later training phase (epochs 300–400), mAP@50:95 is essentially stable for all models. In Figure 11b, the loss of every model decreases as training proceeds, indicating that the models gradually converge, and most losses stabilize at around epoch 100. The loss of PFL-YOLO fluctuates slightly at around epoch 300 but eventually stabilizes at around epochs 380–400.

Figure 11.

Comparison of model accuracy and loss curves. (a) mAP@50:95 curve graphs of different models. (b) Validation loss curves of different models.

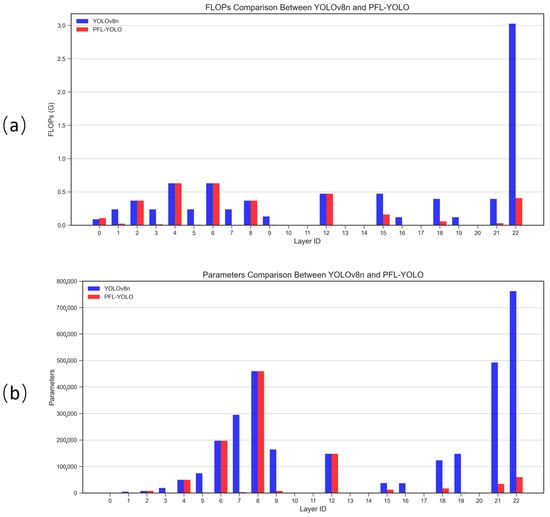

3.2.4. Comparison of Parameter Counts and FLOPs for Models

Figure 12 compares the number of parameters and FLOPs of YOLOv8n and PFL-YOLO layer by layer. In Figure 12a, PFL-YOLO requires far fewer FLOPs than YOLOv8n in most layers, especially in layer 22, where YOLOv8n requires 3.025 G FLOPs and PFL-YOLO only 0.405 G, indicating a clear advantage in computational efficiency. In Figure 12b, PFL-YOLO has far fewer parameters than YOLOv8n in layer 22 (59,861 versus 762,244), further demonstrating its lightweight advantage.

Figure 12.

A comparison of the number of parameters and FLOPs between YOLOv8n and PFL-YOLO. (a) A comparison of FLOPs at each layer between YOLOv8n and PFL-YOLO. (b) A comparison of the number of parameters in each layer between YOLOv8n and PFL-YOLO.

PFL-YOLO therefore requires fewer FLOPs and parameters than YOLOv8n, which makes it more suitable for resource-constrained environments.
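Per-layer counts like those in Figure 12 are sums of weight-tensor sizes, and the FLOPs of a layer grow with its output resolution. The Python sketch below computes both for a convolution followed by batch normalization, assuming a bias-free convolution as in YOLOv8's Conv block and counting FLOPs as twice the multiply–accumulate operations while ignoring BatchNorm and activation cost. It is a generic illustration of why grouped and depthwise convolutions save parameters; the internal structure of PFDetect is not described here, and this is not a model of it.

```python
from typing import Tuple


def conv_bn_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                 groups: int = 1) -> Tuple[int, int]:
    """Return (parameters, FLOPs) of a bias-free k x k convolution followed by BatchNorm."""
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    weights = c_out * (c_in // groups) * k * k  # convolution kernel, no bias term
    bn_params = 2 * c_out                        # BatchNorm scale and shift (running stats are buffers)
    flops = 2 * weights * h_out * w_out          # one multiply-accumulate = 2 FLOPs per weight per output pixel
    return weights + bn_params, flops


standard = conv_bn_cost(64, 64, 3, 80, 80)
depthwise = conv_bn_cost(64, 64, 3, 80, 80, groups=64)
pointwise = conv_bn_cost(64, 64, 1, 80, 80)
print(standard, depthwise, pointwise)
```

For a 64-channel 3 × 3 layer at 80 × 80 resolution, a standard convolution needs 36,992 parameters and about 0.47 GFLOPs, while a depthwise 3 × 3 plus pointwise 1 × 1 pair needs 704 + 4,224 = 4,928 parameters and about 0.06 GFLOPs.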

Table 9 compares PFL-YOLO with the other lightweight detection models. PFL-YOLO has only 1.01 M parameters (33.4% of YOLOv8n and 54.8% of YOLOv5n), requires only 3.3 GFLOPs (40.7% of YOLOv8n), and has a model size of 2.1 MB (55.2% of YOLOv5n). Compared with YOLOv7-tiny, it has 83.6% fewer parameters and 75.5% less computation while still maintaining high accuracy (mAP@50:95 of 87.4%) at very low resource consumption. In terms of the precision–efficiency balance, PFL-YOLO achieves an mAP@50 of 99.5%, equal to YOLOv9-t and YOLO11n and only 0.1% lower than YOLOv7-tiny (99.6%), and its mAP@50:95 (87.4%) is slightly lower than that of YOLOv8n (88.4%). It thus approaches the performance of mainstream models with 33.4% of the parameters and 40.7% of the computation of YOLOv8n, making it better suited to resource-constrained scenarios.

Table 9.

Experimental comparison results for lightweight detection models.

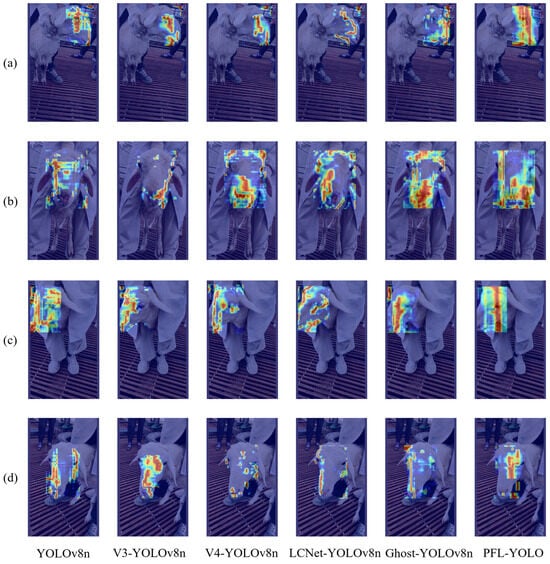

3.2.5. Comparison of Heatmap Effects of Different Models

Heatmaps visualize the regions an algorithm attends to through changes in color intensity, showing intuitively how likely the model judges different regions of the image to contain the target. In this study, Gradient-weighted Class Activation Mapping (Grad-CAM) [] was used to visualize sheep face recognition.
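Grad-CAM weights each channel of a chosen feature map by the spatial average of the gradient of the target score with respect to that channel, sums the weighted channels, and keeps only the positive part. The pure-Python sketch below shows that core computation on precomputed activations and gradients. Obtaining them from a detector (the choice of layer and of the score to back-propagate, such as a class confidence), upsampling the map to image size, and color mapping are omitted, and the function name is hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def grad_cam(activations: Sequence[Sequence[Sequence[float]]],
             gradients: Sequence[Sequence[Sequence[float]]]) -> Matrix:
    """Core Grad-CAM map from K feature maps A^k and gradients dy/dA^k (each H x W)."""
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradient over the spatial positions of channel k
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # weighted sum of channels, then ReLU to keep only positive evidence
    cam = [[max(0.0, sum(alphas[c] * activations[c][i][j] for c in range(k)))
            for j in range(w)] for i in range(h)]
    # normalize to [0, 1] for display; an all-zero map is returned unchanged
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

In a rendered heatmap, values near 1 are drawn in red and values near 0 in blue, which is how the attention regions in Figure 13 should be read.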

As shown in Figure 13, in images (a) and (b), the high-attention region of PFL-YOLO is noticeably larger than those of YOLOv8n and the other lightweight models. This suggests that PFL-YOLO captures key image information more comprehensively, especially the frontal features of the sheep face, and can use this larger effective region to improve recognition accuracy.

Figure 13.

Comparison of heatmap visualizations: (a) sheep face image with ID 4, (b) sheep face image with ID 6, (c) sheep face image with ID 11, and (d) sheep face image with ID 24. In the heatmaps, red indicates regions to which the model pays more attention, and blue indicates less attention.

For a quantitative comparison, the area of interest in each heatmap was computed by converting the heatmap to grayscale, binarizing it, extracting contours, and computing the area enclosed by the contours. Table 10 lists the resulting pixel areas for the different models. In image (a), PFL-YOLO's area of interest (20,066 pixels) far exceeded those of the other models. In image (b), it reached 38,888.5 pixels, again the largest. In image (c), PFL-YOLO's 27,417.5 pixels were lower than YOLOv8n's 29,417 pixels but still higher than those of the other lightweight models. In image (d), its 13,686 pixels were lower than those of YOLOv8n (17,062.5 pixels) and V3-YOLOv8n (17,556 pixels) but higher than those of some other lightweight models.

Table 10.

A comparison of the heatmap area of concern.

Overall, PFL-YOLO had the largest area of interest in images (a) and (b) and a competitive area in images (c) and (d), indicating its ability to capture key information comprehensively during detection. In contrast, models such as YOLOv8n, V3-YOLOv8n, V4-YOLOv8n, LCNet-YOLOv8n, and Ghost-YOLOv8n exhibited smaller areas of interest in some images, suggesting incomplete capture of key regions in those scenes.
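The half-pixel areas in Table 10 (for example, 38,888.5 pixels) are consistent with computing the polygon area enclosed by contour vertices rather than counting pixels. The Python sketch below shows the binarization and area steps, using the shoelace formula. The text does not name the library used; if OpenCV was used, `cv2.contourArea` computes the same polygon area. Contour extraction itself (for example, with `cv2.findContours`) is omitted, and the threshold is an assumed parameter.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def binarize(gray: Sequence[Sequence[int]], threshold: int) -> List[List[int]]:
    """Pixels brighter than `threshold` become 1 (attended), all others 0."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


def polygon_area(contour: Sequence[Point]) -> float:
    """Shoelace formula: area enclosed by a closed polygon given its vertices in order."""
    n = len(contour)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def attention_area(contours: Sequence[Sequence[Point]]) -> float:
    """Total area of interest: the sum of the areas of all extracted contours."""
    return sum(polygon_area(c) for c in contours)
```

A right triangle with legs of 3 pixels already gives 4.5, which shows how fractional areas arise from integer vertex coordinates.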

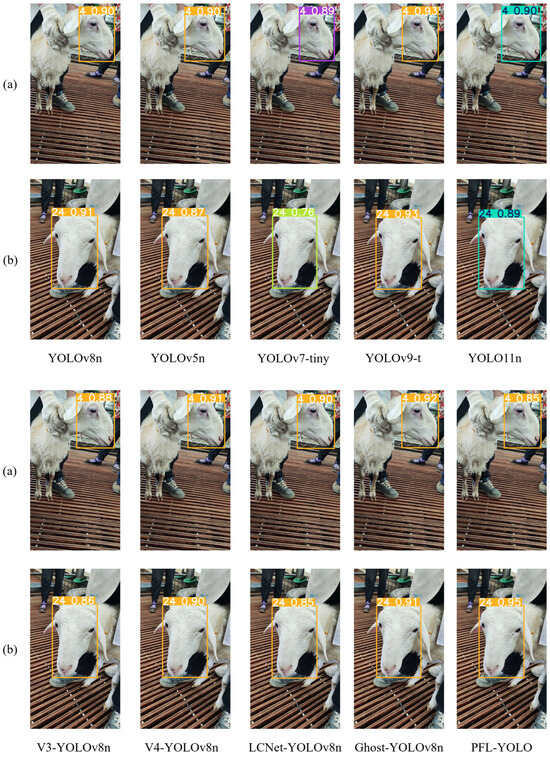

3.2.6. Comparison of Prediction Results of Different Models

To evaluate the performance of different object detection models in individual sheep recognition, two images were randomly selected from the test set for sheep face recognition. Figure 14 displays the predictions of the ten models. In image (a), most models show similar recognition ability, with prediction confidences clustered around 90%. PFL-YOLO has the lowest confidence, 85%, which is 5 percentage points lower than YOLOv8n; this suggests a limitation in handling the lateral features of sheep faces, which reduces its generalization ability in this specific scenario. Conversely, in image (b), PFL-YOLO achieves the highest confidence, 95%, which is 4 percentage points higher than YOLOv8n. These results highlight PFL-YOLO's advantage in capturing the frontal features of sheep faces, enabling robust recognition when frontal characteristics are prominent. Although PFL-YOLO performs suboptimally in some scenarios, its strength in recognizing frontal sheep face features remains significant.

Figure 14.

A comparison of the results of different models predicting sheep faces. (a) Sheep face image with ID 4 and (b) sheep face image with ID 24.

4. Discussion

Many researchers have studied lightweight sheep face recognition. For example, Zhang et al. built LSR-YOLO on the lightweight YOLOv5s model and, on a self-built dataset, reduced the number of parameters, FLOPs, and model size to some extent while achieving good mAP@50 []. Zhang et al. also developed the YOLOv7-SFR algorithm based on an improved YOLOv7-tiny model, which likewise performs well on a self-built sheep face dataset []. Compared with these two models, PFL-YOLO improves substantially in both parameter count and mAP@50: it maintains a higher mAP@50 (99.5%) while further reducing the number of parameters to 1.01 M, and it is also competitive in average detection time and FPS. This indicates that the lightweight strategy in this study balances model performance and resource consumption more efficiently and is better suited to sheep face recognition in resource-constrained environments.

The data in this study were collected manually, which is suitable for small farms but time-consuming and labor-intensive for large farms. An automated acquisition scheme could address this problem. Specifically, a 3 m × 3 m fenced area could be set up on a large farm, and one sheep at a time could be guided into the area and allowed to move freely while four video cameras installed around the fence record it. Image frames would then be extracted from the video at 30 frames per second and processed with a sheep face detection model (used only to locate sheep faces, not to identify individuals) to retain the images containing sheep faces, and each sheep would be assigned a unique ID number. Such an automated method would reduce reliance on manual labor, substantially improve efficiency, and make the acquired data reusable. Compared with manual collection, the automated scheme offers higher efficiency and greater scalability, providing technical support for the intelligent management of large-scale farms.
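The frame-filtering step of the proposed acquisition scheme can be sketched as follows. Because only one sheep is in the pen at a time, every face found during that session can be labeled with the same ID. The detector is passed in as a hypothetical callable returning face boxes; no particular model or video library is assumed, and decoding the video into frames is left out.

```python
from typing import Callable, Iterable, List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")
Box = Tuple[int, int, int, int]  # x1, y1, x2, y2 in pixels


def collect_face_samples(frames: Iterable[Frame],
                         detect_faces: Callable[[Frame], Sequence[Box]],
                         sheep_id: int) -> List[Tuple[int, int, Box]]:
    """Keep only frames in which the detector finds a face, labeled with the pen's sheep ID.

    Returns (sheep_id, frame_index, box) records; frames without faces are discarded.
    """
    samples: List[Tuple[int, int, Box]] = []
    for index, frame in enumerate(frames):
        # the detector only locates faces; identity comes from the one-sheep-per-pen protocol
        for box in detect_faces(frame):
            samples.append((sheep_id, index, box))
    return samples
```

Separating localization (the detector) from labeling (the pen protocol) means the detector never needs to know individual identities, which is what allows the collected images to serve later as training data for the recognition model.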

At this stage, the sheep face dataset constructed in this study contains only a single breed (Australian–Hu crossbred sheep), and all samples were collected in a sheep house. Although a series of data augmentation techniques improved the robustness of the dataset, it lacks sheep face images of other breeds and of complex scenes (heavy occlusion and low light). In future work, we will expand the dataset to include sheep faces of multiple breeds and age groups in complex scenes, to increase the diversity of the data and improve the generalization ability of the model. Robustness tests will then be conducted on the expanded dataset to evaluate sheep face recognition capability, with the aim of providing a high-precision, low-parameter general sheep face recognition model.

The mAP@50 and mAP@50:95 of PFL-YOLO on the self-built sheep face dataset are 99.5% and 87.4%, respectively; its mAP@50 is 0.1% higher than that of YOLOv8n, while the number of parameters is reduced by 66.6% and the computational complexity by 59.3%. Notably, the average detection time of the model is 11.2 ms, which is attributed mainly to the extra computational overhead of the bidirectional feature fusion structure in the RC2f module. Future research will focus on structured pruning and knowledge distillation, which may further reduce the number of parameters and FLOPs while maintaining accuracy. The resulting models could then be applied in livestock breeding scenarios to perform individual identification accurately and stably, supporting the intelligent management of the livestock industry.

5. Conclusions

To meet the lightweight requirements of sheep face recognition, this study proposes the PFL-YOLO model, which achieves efficient feature processing through four optimization strategies: the EHConv module is integrated into the backbone network to enhance the capture of image details; the SPPF module is upgraded to the ESPPF module to expand the receptive field and improve semantic representation; the RC2f module is used in the neck network to strengthen multi-scale feature fusion; and the PFDetect module, which applies a parameter fusion strategy, is introduced in the detection head to compress the number of parameters. The experimental results show that while maintaining a detection accuracy of 99.5% mAP@50, the model has only 1.01 M parameters (33.4% of YOLOv8n), its computational complexity is reduced to 3.3 GFLOPs (40.7% of YOLOv8n), and its size is compressed to 2.1 MB (35% of YOLOv8n). The model therefore shows clear advantages for the intelligent management of the sheep farming industry, enabling rapid and accurate identification of individual sheep and supporting functions such as precise feeding, disease monitoring, and behavior analysis.

Author Contributions

Conceptualization, G.L., L.K. and Y.D.; methodology, G.L.; software, G.L.; data curation, G.L.; writing—original draft preparation, G.L.; writing—review and editing, L.K. and Y.D.; visualization, G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. As the research has not yet concluded, the dataset has not been made public.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Castañeda-Miranda, A.; Castaño-Meneses, V.M. Internet of things for smart farming and frost intelligent control in greenhouses. Comput. Electron. Agric. 2020, 176, 105614.

- Chatterjee, P.S.; Ray, N.K.; Mohanty, S.P. LiveCare: An IoT-Based Healthcare Framework for Livestock in Smart Agriculture. IEEE Trans. Consum. Electron. 2021, 67, 257–265.

- Zhang, M.; Wang, X.; Feng, H.; Huang, Q.; Xiao, X.; Zhang, X. Wearable Internet of Things enabled precision livestock farming in smart farms: A review of technical solutions for precise perception, biocompatibility, and sustainability monitoring. J. Clean. Prod. 2021, 312, 127712.

- Li, G.; Shi, G.; Jiao, J. YOLOv5-KCB: A New Method for Individual Pig Detection Using Optimized K-Means, CA Attention Mechanism and a Bi-Directional Feature Pyramid Network. Sensors 2023, 23, 5242.

- Meng, Y.; Yoon, S.; Han, S.; Fuentes, A.; Park, J.; Jeong, Y.; Park, D.S. Improving Known–Unknown Cattle’s Face Recognition for Smart Livestock Farm Management. Animals 2023, 13, 3588.

- Voulodimos, A.S.; Patrikakis, C.Z.; Sideridis, A.B.; Ntafis, V.A.; Xylouri, E.M. A complete farm management system based on animal identification using RFID technology. Comput. Electron. Agric. 2009, 70, 380–388.

- Almog, H.; Edan, Y.; Godo, A.; Berenstein, R.; Lepar, J.; Ilan, H. Biometric identification of sheep via a machine-vision system. Comput. Electron. Agric. 2022, 194, 106713.

- Billah, M.; Wang, X.; Yu, J.; Jiang, Y. Real-time goat face recognition using convolutional neural network. Comput. Electron. Agric. 2022, 194, 106730.

- Zhang, X.; Xuan, C.; Ma, Y.; Su, H.; Zhang, M. Biometric facial identification using attention module optimized YOLOv4 for sheep. Comput. Electron. Agric. 2022, 203, 107452.

- Zhang, C.; Zhang, H.; Tian, F.; Zhou, Y.; Zhao, S.; Du, X. Research on sheep face recognition algorithm based on improved AlexNet model. Neural Comput. Appl. 2023, 35, 24971–24979.

- Guo, Y.; Yu, Z.; Hou, Z.; Zhang, W.; Qi, G. Sheep face image dataset and DT-YOLOv5s for sheep breed recognition. Comput. Electron. Agric. 2023, 211, 108027.

- Pang, Y.; Yu, W.; Zhang, Y.; Xuan, C.; Wu, P. An attentional residual feature fusion mechanism for sheep face recognition. Sci. Rep. 2023, 13, 17128.

- Li, X.; Xiang, Y.; Li, S. Combining convolutional and vision transformer structures for sheep face recognition. Comput. Electron. Agric. 2023, 205, 107651.

- Zhang, X.; Xuan, C.; Ma, Y.; Su, H. A high-precision facial recognition method for small-tailed Han sheep based on an optimised Vision Transformer. Animal 2023, 17, 100886.

- Zhang, X.; Xuan, C.; Ma, Y.; Tang, Z.; Gao, X. An efficient method for multi-view sheep face recognition. Eng. Appl. Artif. Intell. 2024, 134, 108697.

- Zhang, X.; Xuan, C.; Ma, Y.; Tang, Z.; Cui, J.; Zhang, H. High-similarity sheep face recognition method based on a Siamese network with fewer training samples. Comput. Electron. Agric. 2024, 225, 109295.

- Zhang, X.; Xuan, C.; Xue, J.; Chen, B.; Ma, Y. LSR-YOLO: A high-precision, lightweight model for sheep face recognition on the mobile end. Animals 2023, 13, 1824.

- Zhang, X.; Xuan, C.; Ma, Y.; Liu, H.; Xue, J. Lightweight model-based sheep face recognition via face image recording channel. J. Anim. Sci. 2024, 102, skae066.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. arXiv 2019, arXiv:1910.03151.

- Gao, S.-H.; Cheng, M.-M.; Zhao, K.; Zhang, X.-Y.; Yang, M.-H.; Torr, P. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 652–662.

- Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. arXiv 2018, arXiv:1807.11164.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Wang, C.-Y.; Yeh, I.H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

- Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4—Universal Models for the Mobile Ecosystem. arXiv 2024, arXiv:2404.10518.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. arXiv 2019, arXiv:1911.11907.

- Cui, C.; Gao, T.; Wei, S.; Du, Y.; Guo, R.; Dong, S.; Lu, B.; Zhou, Y.; Lv, X.; Liu, Q.; et al. PP-LCNet: A Lightweight CPU Convolutional Neural Network. arXiv 2021, arXiv:2109.15099.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Ramakrishna, V.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2019, 128, 336–359.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).