Abstract

The accurate identification of pulmonary nodules is critical for the early diagnosis of lung diseases; however, this task remains challenging due to inadequate feature representation and limited localization sensitivity. Current methodologies often utilize channel attention mechanisms and intersection over union (IoU)-based loss functions. Yet, they frequently overlook spatial context and struggle to capture subtle variations in aspect ratios, which hinders their ability to detect small objects. In this study, we introduce an improved YOLOV11 framework that addresses these limitations through two primary components: a spatial squeeze-and-excitation (SSE) module that concurrently models channel-wise and spatial attention to enhance the discriminative features pertinent to nodules and explicit aspect ratio penalty IoU (EAPIoU) loss that imposes a direct penalty on the squared differences in aspect ratios to refine the bounding box regression process. Comprehensive experiments conducted on the LUNA16, LungCT, and Node21 datasets reveal that our approach achieves superior precision, recall, and mean average precision (mAP) across various IoU thresholds, surpassing previous state-of-the-art methods while maintaining computational efficiency. Specifically, the proposed SSE module achieves a precision of 0.781 on LUNA16, while the EAPIoU loss boosts mAP@50 to 92.4% on LungCT, outperforming mainstream attention mechanisms and IoU-based loss functions. These findings underscore the effectiveness of integrating spatially aware attention mechanisms with aspect ratio-sensitive loss functions for robust nodule detection.

1. Introduction

Early identification and accurate diagnosis of pulmonary nodules are of great clinical significance in reducing mortality associated with lung diseases, improving treatment outcomes, and enhancing patients’ quality of life. Consequently, pulmonary nodule detection has become a crucial research focus in the field of medical image analysis. Current advancements in improving detection accuracy primarily concentrate on two aspects: enhancing channel attention mechanisms and optimizing IoU-based loss functions.

In terms of channel attention mechanisms, the squeeze-and-excitation (SE) module has shown effectiveness in modeling global inter-channel dependencies. For example, CSE-GAN [1] integrates SE into a 3D U-Net for enhanced segmentation performance, while SE-ResNeXt-50 [2] applies it to classification tasks. However, the reliance on global average pooling in these designs often leads to the dilution of critical features associated with small targets, thus limiting their effectiveness in small object detection scenarios. To address this limitation, we propose a novel spatial-SE (SSE) module that incorporates spatial attention mechanisms to enhance the network’s focus on local regions. By combining both channel and spatial attention, the SSE module enables the model to more precisely focus on small nodules, thereby improving overall detection performance. Comparative experiments on the LUNA16 dataset demonstrate that our SSE module achieves a detection precision of 0.781, surpassing mainstream attention modules such as coordinate attention (CA) [3], SIMAM [4], and MCA [5].

Regarding the optimization of IoU-based loss functions, the complete IoU (CIoU) loss proposed by Zhaohui Zheng et al. considers IoU, center distance, and aspect ratio differences, representing a relatively comprehensive bounding box regression objective. Nevertheless, the aspect ratio constraint in CIoU is indirectly introduced through the arctangent-based penalty term v, which responds weakly to slight variations in aspect ratio and remains insensitive to shape mismatches. This limitation may result in accumulated errors, especially in small object detection tasks that require precise box alignment. To mitigate this issue, we propose the enhanced aspect ratio penalty IoU (EAPIoU) loss function, which explicitly introduces a squared aspect ratio difference penalty in addition to the CIoU loss. This enhancement increases the sensitivity and clarity of the aspect ratio constraint, providing more substantial penalties for mismatched box shapes and improving bounding box fitting accuracy for small nodules. Ablation experiments on the LungCT [6] dataset reveal that incorporating EAPIoU into the same model architecture raises the mAP@50 to 92.4%, outperforming existing IoU variants such as WIoU, EIoU, GIoU, DIoU, and SIoU. Furthermore, experiments on the hyperparameter , which controls the strength of the aspect ratio penalty, indicate that setting achieves optimal detection performance by balancing localization robustness and model stability.

- We propose an SSE module based on YOLOv11 that integrates spatial and channel attention mechanisms, improving pulmonary nodule detection performance over existing attention modules in terms of precision and mAP.

- We design the EAPIoU loss function, which incorporates a penalty for aspect ratio differences to better align predicted and ground-truth boxes, leading to improved accuracy in small nodule detection.

- Extensive experiments demonstrate that our method outperforms existing approaches and YOLO variants in both accuracy and stability for small-target pulmonary nodule detection, supporting early diagnosis and treatment.

2. Related Work

Accurate detection of pulmonary nodules remains challenging in medical imaging. Existing studies mainly focus on two areas: improvements in attention mechanism modules and optimizations in loss functions.

In terms of improvements in attention modules, the application of the SE attention mechanism has made significant progress, but it also has some limitations. Early methods, such as CSE-GAN, utilized SE to enhance features; however, global average pooling lost spatial details, thereby reducing the accuracy of small nodules. Subsequent studies, such as SE-ResNeXt-50-CNN, applied SE and improved classification accuracy. However, they lacked spatial specificity and struggled to focus on the critical local regions of pulmonary nodules. Recent research has combined the SE mechanism with self-attention, as seen in the SE-ViT model, which enhances classification accuracy and interpretability through Grad-CAM visualization. Contemporary approaches [7] propose a multi-view attention framework employing random interpolation resizing and cross-view attention to enhance feature discrimination in few-shot scenarios. However, these methods still face issues with spatial detail loss, poor specificity, and insufficient feature representation.

To address these issues, our work proposes the SSE module, which combines channel and spatial attention mechanisms. This combination preserves global context information and enhances focus on local features, allowing for a more precise capture of the key features of pulmonary nodules.

In terms of optimizations in loss functions, recent IoU-based loss functions for bounding box regression, such as GIoU [8], CIoU [9], and DIoU [10], have enhanced bounding box localization in loss functions. GIoU introduces the smallest enclosing box to address gradient issues when boxes do not overlap but struggles with significant aspect ratio differences due to its focus on the enclosing box. CIoU builds upon GIoU by incorporating center distance and aspect ratio penalties, thereby improving localization accuracy while maintaining less responsiveness to significant aspect ratio differences. DIoU further enhances CIoU by focusing on center distance but still depends on angular penalties for aspect ratio optimization, which is insufficient for shape-inconsistent targets. Despite these advancements, these methods do not fully address aspect ratio differences and shape consistency, particularly for non-rectangular objects. To overcome these limitations, we propose EAPIoU, which introduces a squared aspect ratio penalty that directly measures the aspect ratio differences between predicted and ground-truth boxes. EAPIoU offers stronger gradient feedback, more accurate shape optimization, and scale invariance, enabling better adaptation to various object sizes and shape complexities, thus outperforming CIoU and DIoU in optimizing shape consistency.

3. Proposed Method

3.1. Overview

This work proposes an innovative spatial SE module, which combines the squeeze-and-excitation (SE) channel attention mechanism with spatial attention to enhance feature representation effectively. While traditional SE modules effectively capture inter-channel dependencies, they often compromise spatial information, particularly with small or detail-rich regions, reducing localization precision. To address this issue, we introduce a spatial attention mechanism on top of the SE module. By utilizing both max pooling and average pooling operations, the spatial features are extracted to generate spatial attention maps, which are fused with channel-attended feature maps. This enhancement enables the model to recalibrate features along the channel dimension while precisely highlighting key spatial regions, thereby enhancing detection accuracy for small objects, such as pulmonary nodules.

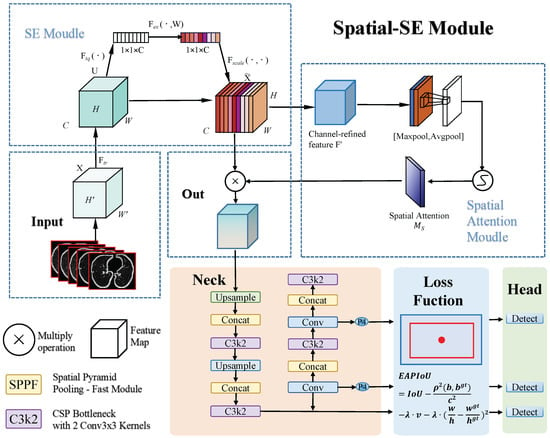

In the context of optimizing loss functions, we propose a novel explicit aspect ratio penalty IoU (EAPIoU) loss function to address the shortcomings of existing IoU-based loss functions, such as CIoU, when dealing with significant aspect ratio discrepancies. CIoU optimizes bounding box regression by incorporating penalties for center distance and aspect ratio. However, CIoU applies the aspect ratio penalty indirectly through an arctangent-based function, which responds weakly to small aspect ratio changes and fails to capture shape mismatches accurately. In contrast, the EAPIoU loss function explicitly introduces a squared aspect ratio difference penalty on top of CIoU. This modification significantly enhances the detection of small nodules while improving the model’s adaptability to complex shapes.This process is illustrated in Figure 1.

Figure 1.

This paper presents a pulmonary nodule detection framework based on YOLOv11. First, the input lung CT slice images are processed by the SE module to adjust feature map channel weights via channel attention. The feature maps then pass through the spatial-SE module, which applies max and average pooling to extract spatial information, generating a spatial attention map that is fused with the channel-attention-processed feature maps to improve the detection of small nodules. Next, the neck module performs feature fusion and upsampling, providing multi-scale features for detection. Finally, the EAPIoU loss function applies a squared penalty to the aspect ratio differences, optimizing bounding box regression and improving the detection accuracy of small nodules. The detection head outputs the location and class of pulmonary nodules using these refined features.

3.2. Spatial Squeeze-and-Excitation Module

In contemporary object detection tasks, particularly within the medical field, such as pulmonary nodule identification, capturing fine, localized features is crucial. Conventional convolutional networks often struggle with limited capacity to model global context or identify salient spatial regions. To address this, we propose a dual-branch attention mechanism named the spatial SE (SSE) module. It combines the benefits of global channel-wise recalibration with local spatial enhancement, enabling the network to learn more discriminative and context-aware representations.

The SSE module consists of (1) a squeeze-and-excitation (SE) block that emphasizes important channels by modeling inter-channel dependencies and (2) a spatial attention branch focusing on key locations in the feature map. This structure enables the model to leverage both global and local cues, thereby enhancing feature expressiveness for recognizing small objects.

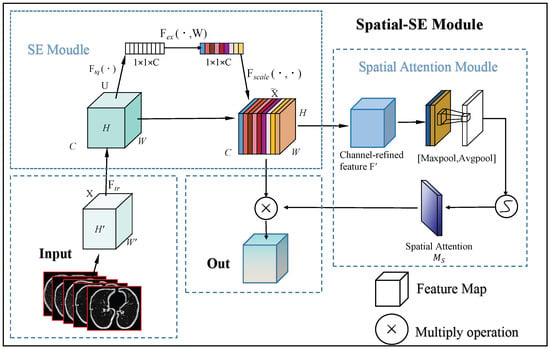

As illustrated in Figure 2, the SE module initially processes the input feature map, followed by the spatial attention module. Finally, their outputs are combined for the final refined feature map. The process is mathematically described as follows.

Figure 2.

This flowchart illustrates the process of the spatial SE Module. Initially, the input feature map passes through the SE module, where global average pooling and fully connected layers calculate attention weights for each channel, refining the feature map by channel-wise scaling. Next, the SSE module utilizes max and average pooling to extract spatial data, creating a spatial attention map that emphasizes crucial spatial areas. The final output is the feature map, enhanced by both channel and spatial attention, which improves small object detection.

3.2.1. Channel Attention via Squeeze-and-Excitation

Given an input feature map , where B denotes the batch size, C is the number of channels, and represent the spatial dimensions, the SE module applies a global average pooling (GAP) operation to generate a global context descriptor :

This descriptor captures the global distribution of activation strength in each channel, summarizing its overall importance.

To learn non-linear channel relationships, is passed through a two-layer fully connected network with a bottleneck structure (i.e., reduction ratio r), which enhances compactness and reduces computation:

Here, denotes the sigmoid activation function, and represents the learned channel attention weights. These weights are broadcast to each spatial location and applied via element-wise multiplication to recalibrate the input:

This process effectively suppresses the less important channels and amplifies the more informative ones, allowing the network to prioritize semantic-rich feature activations.

3.2.2. Spatial Attention Mechanism

Although the SE module captures the global channel-wise context, it lacks spatial discriminative ability. Therefore, we introduce a spatial attention mechanism to complement it by selectively focusing on informative regions in the spatial dimensions.

The output of the SE module, , is first aggregated along the channel axis through max pooling and average pooling operations, resulting in two 2D spatial context descriptors:

These two maps reflect the strongest and mean activations at each spatial location, respectively, capturing different forms of spatial importance.

The descriptors are concatenated and passed through a convolution layer followed by a sigmoid activation function to generate the spatial attention map :

This spatial map acts as a learned mask to emphasize critical regions in the feature map where important structures, such as lesions or nodules, are likely to be situated.

3.2.3. Final Fusion

Finally, the spatial attention map is applied to the channel-attended feature map by broadcasting it across all channels and performing element-wise multiplication:

The resulting output O is a feature map that has been refined both globally (via SE) and locally (via spatial attention), enabling the network to focus more effectively on discriminative patterns while preserving computational efficiency.

3.3. Enhanced Aspect Ratio Penalty IoU (EAPIoU)

Existing IoU-based regression losses, such as GIoU, DIoU, and CIoU, improve localization performance by incorporating geometric factors, including the central point distance and aspect ratio. Among them, CIoU introduces a geometric penalty term that accounts for the angular difference between aspect ratios using the arctan function. However, this formulation offers limited sensitivity to discrepancies in shape, notably when the predicted box diverges significantly in width–height proportion from the ground truth. To explicitly strengthen aspect ratio consistency, we propose a refined loss termed EAPIoU.

Let and represent the predicted and ground-truth bounding boxes, respectively. Based on the CIoU formulation, the proposed EAPIoU enhances the loss function by introducing a squared difference penalty between the width–height ratios of the predicted and ground-truth boxes.

The EAPIoU formulation is provided by the following:

where

- denotes the Euclidean distance between the center points of the predicted and ground-truth boxes;

- c represents the diagonal length of the smallest enclosing box covering both b and ;

- v is the original aspect ratio penalty term from CIoU, defined as follows:

- The final term is a newly introduced squared aspect ratio penalty, which enforces stronger constraints on shape consistency between b and .

The full computation logic of EAPIoU is described in Algorithm 1.

| Algorithm 1 Computation of EAPIoU Loss. |

| Input: Predicted box , Ground-truth box , IoU, coefficient |

| Output: Final penalized IoU score, EAPIoU |

|

The penalty coefficient moderates the impact of geometric and aspect ratio penalties. Unlike the original CIoU, which only targets angular aspect ratio differences, our approach measures the squared deviation of the width–height ratio, providing more responsive gradients during training.

This convex and symmetric squared aspect ratio penalty is better for optimizing bounding box shapes, particularly for small or elongated objects. Furthermore, its scale invariance ensures that it generalizes well across objects of varying sizes.

4. Experiment and Analysis

In this section, we design and conduct a series of experiments to comprehensively evaluate the performance of the proposed method and the effectiveness of its components. Specifically, the experiments include comparison studies, ablation experiments on the IOU function, ablation of key modules, analysis of IOU hyperparameters, and comparisons of different attention mechanisms. Through these systematic experiments, we thoroughly investigate the contributions and impacts of various factors on the model’s performance.

4.1. Datasets

To comprehensively evaluate our pulmonary nodule detection framework, we employ three publicly available datasets: LUNA16 [11], NODE21 [12], and LungCT [6].

LUNA16 [11] is derived from the LIDC-IDRI dataset and contains 888 low-dose chest CT scans annotated by four experienced radiologists. The dataset includes 1186 positive nodules (≥3 mm) and is partitioned into ten folds for cross-validation, following the challenge protocol.

NODE21 [12] is a chest radiograph dataset released for the NODE21 Challenge, aimed at promoting research in nodule detection and synthesis on X-ray images. It consists of 4882 frontal-view chest X-rays, with 1134 images containing 1476 annotated nodules marked using bounding boxes. The remaining 3748 images are negative samples. The dataset also provides synthetic data resources, including CT-derived nodule patches extracted from the LUNA16 dataset. The images originate from the JSRT, PadChest, ChestX-ray14, and Open-I datasets.

LungCT [6] is a curated CT-based dataset hosted on Roboflow Universe, designed for pulmonary nodule detection tasks. It comprises 1152 annotated CT slices with nodules marked using bounding boxes. The dataset comprises one object category, “nodule,” and is licensed under a CC BY 4.0 license, making it suitable for open-source research and model benchmarking. Together, these datasets provide diverse imaging modalities (CT and X-ray) and annotation types, enabling robust evaluation across different clinical scenarios.

4.2. Evaluation Metrics

In our experiments, we employed a comprehensive set of metrics to evaluate the model’s performance from multiple perspectives thoroughly. These metrics are elaborated as follows:

- Parameters (Param): The total number of model parameters, reflecting its capacity and complexity.

- GFLOPs: Giga floating-point operations per forward pass, indicating computational cost and efficiency.

- Precision: Ratio of true positives to all predicted positives, measuringcc the accuracy of positive predictions.

- Recall: Ratio of true positives to actual positives, measuring the model’s ability to detect relevant instances.

- mAP@50: Mean average precision at an IoU threshold of 0.5, the standard metric for object detection accuracy.

- mAP@50–90: Mean AP averaged over IoU thresholds from 0.5 to 0.9, assessing detection robustness across difficulties.

4.3. Implementation Details

The experiments were conducted on a workstation equipped with an Intel(R) Xeon(R) W-2255 CPU @ 3.70 GHz, 128 GB of RAM, and dual NVIDIA A5000 GPUs with 24 GB VRAM each. The system ran Ubuntu 20.04 with CUDA version 11.1. The deep learning framework used was PyTorch 1.8.0. The complete toolchain, including hardware configuration, software environment, and all training and evaluation parameters, is summarized in Table 1.

Table 1.

Experimental environment and training configuration.

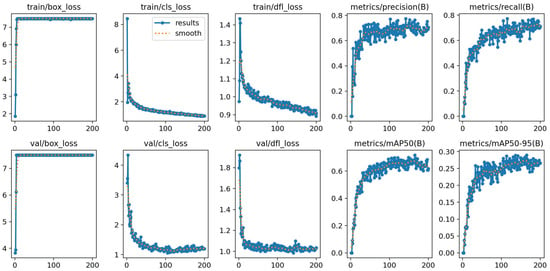

To further evaluate the training dynamics of the proposed YOLOV11-EAR model, we visualize the convergence behavior throughout the 200 training epochs. As illustrated in Figure 3, key loss components, namely box regression loss, classification loss, and distribution focal loss (DFL), exhibit a rapid decrease within the first 50 epochs, followed by a smooth convergence trend. The validation losses mirror this trajectory, indicating stable optimization without severe overfitting.

Figure 3.

Training and validation curves of YOLOV11-EAR across 200 epochs on the LUNA16 dataset. The plots include box loss, classification loss, distribution focal loss, as well as precision, recall, and mAP metrics.

In terms of detection performance, the precision, recall, and mean average precision (mAP@50 and mAP@50–95) on the validation set steadily increase and saturate over time. This suggests that jointly optimizing the spatial squeeze-and-excitation (SSE) module and the explicit aspect ratio penalty IoU (EAPIoU) loss leads to consistent improvements in both localization and classification capabilities.

These convergence profiles not only confirm the stability and reliability of the proposed framework during long-term training but also provide quantitative evidence that the additional modules do not introduce adverse optimization effects.

To ensure statistical robustness, each experiment was independently repeated ten times under identical settings. The results are reported with 95% confidence intervals computed using the sample standard deviation and Student’s t-distribution with 9 degrees of freedom. Furthermore, statistical significance was assessed using paired t-tests, confirming that the performance improvements of the proposed YOLOV11-EAR framework are statistically significant, with all p-values less than 0.05.

4.4. Comparison Experiment

To comprehensively validate the effectiveness of the proposed model in pulmonary nodule detection, we conducted systematic comparative experiments against ten representative YOLO models, including YOLOv3-tiny, YOLOv3, YOLOv4, YOLOv5, YOLOv6, YOLOv7, YOLOv8, YOLOv9, YOLOv10, and YOLOv11. All models were trained and tested under identical settings on three public benchmark datasets: LUNA16, NODE21, and LungCT. This ensured fairness and reproducibility in comparison. (All boldfaced numerical values in the tables are used to highlight the best-performing results for each metric, facilitating direct comparison across different settings.)

As shown in Table 2, our method consistently outperformed all baselines across key metrics, e.g., precision, recall, mAP@50, and mAP@50–90, on all datasets. On the LUNA16 dataset, for example, our model achieved precision of 0.781, mAP@50 of 75.5%, and mAP@50–90 of 33.3%, surpassing all tested YOLO variants. Similar superiority was observed on NODE21 and LungCT, where the proposed method attained 89.1% and 92.4% in detection accuracy, respectively.

Table 2.

Comparison experiments across three datasets: LUNA16 [11], Node21 [12], and LungCT [6], with 95% confidence intervals over 5 runs.

Notably, these improvements were achieved with only 2.5M parameters and 6.3 GFLOPs, highlighting the model’s lightweight and efficient architecture. Compared with computationally intensive models like YOLOv4 or YOLOv7, our method significantly reduces resource consumption while maintaining or exceeding performance, making it well-suited for deployment in real-time and resource-constrained clinical settings.

Furthermore, even when compared to the baseline YOLOv11, which shares the exact parameter count and computational complexity, our model shows substantial improvements in both localization accuracy and robustness. These gains can be attributed to the proposed architectural enhancements: the spatial SE attention module and the explicit aspect ratio penalty IoU (EAPIoU) loss, both of which improve feature representation and bounding box regression, especially for small, irregularly shaped nodules.

In summary, the comparative experiment convincingly demonstrates the superiority of our method in both accuracy and efficiency. Its balanced design makes it a promising solution for real-world applications in pulmonary nodule detection and other small object detection scenarios in medical imaging.

4.5. Ablation Study

To thoroughly investigate the contributions of individual components in our proposed method, we design and conduct a series of ablation studies. These studies aim to isolate and evaluate the impact of (1) the proposed IoU loss function (EAPIoU), (2) the attention module (spatial SE or SSE), and (3) the IoU loss hyperparameter . Specifically, we compare EAPIoU with multiple existing IoU-based losses to verify its superiority in bounding box regression. We further assess the performance gains brought by SSE and EAPIoU individually and in combination to understand their respective and joint effects. Finally, we tune the hyperparameter to identify the optimal balance between aspect ratio regularization and localization accuracy. Through these controlled experiments, we validate the robustness, effectiveness, and practical value of our key design choices.

4.5.1. Ablation Study on Different IoU Loss Variants

To validate the effectiveness of our proposed explicit aspect ratio penalty IoU (EAPIoU) loss, we conducted a series of controlled ablation experiments comparing it with five widely adopted IoU-based losses: WIoU, EIoU, GIoU, DIoU, and SIoU. All variants were integrated into a single detection framework, and experiments were conducted on three benchmark datasets: LUNA16, Node21, and LungCT, ensuring fair and consistent comparisons.

As shown in Table 3, EAPIoU achieves the highest precision (0.781) and mAP@50–90 (33.3%), surpassing SIoU by 1.6% in precision and 2.2% in mAP@50–90, which better reflects localization performance across IoU thresholds. Although SIoU attains the same recall (77.3%), its inferior mAP values indicate weaker shape alignment. This suggests that EAPIoU provides a more effective balance between sensitivity and box regression fidelity.

Table 3.

Ablation experiment of different IoU loss variants across datasets with 95% confidence intervals.

On the Node21 dataset, EAPIoU again shows a clear advantage, yielding precision of 0.895 and mAP@50 of 89.1%, outperforming SIoU by 2.3% and 1.9%, respectively. The mAP@50–90 of 51.4% reflects better overall localization quality, particularly for nodules with variable morphology.

For the more challenging LungCT dataset, EAPIoU reaches the highest precision (0.887), recall (82.5%), and mAP@50–90 (53.8%), outperforming even the strong baselines DIoU and SIoU by notable margins. This confirms EAPIoU’s robustness in handling diverse anatomical variations and small-object scenarios.

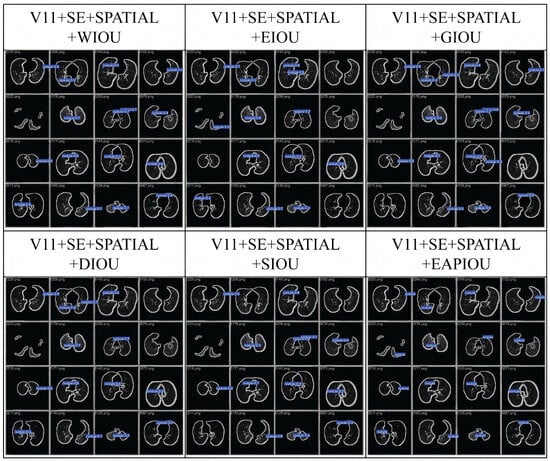

Overall, these results demonstrate that EAPIoU’s explicit modeling of aspect ratio discrepancies yields more accurate and stable bounding box regression compared to conventional geometric or angular-based penalties. Additionally, we compared intermediate detection results on the LUNA16 dataset. The following image presents a representative example for comparison.

Figure 4 shows that EAPIoU outperforms all other methods in every test. Compared to traditional IoU functions, EAPIoU can more accurately capture the boundaries of lung nodules, especially in complex and closely spaced regions. EAPIoU enhances detection accuracy by optimizing both the local and global shapes of objects, reducing false positives and false negatives. Additionally, EAPIoU significantly improves the intersection over union (IoU) metric, demonstrating its superiority in precise boundary alignment and shape recovery. Our results indicate that EAPIoU can effectively enhance the performance of lung nodule detection models, particularly in handling complex structures and nodules.

Figure 4.

Comparison of the detection process using IoU functions in LUNA16.

Overall, the ablation study conclusively shows that the proposed EAPIoU loss yields the best performance among the compared IoU loss variants, validating its effectiveness and practical value in improving object detection metrics.

4.5.2. Ablation Study on Attention and Loss Modules

To further quantify the individual and joint contributions of the spatial squeeze-and-excitation (SSE) attention mechanism and the proposed EAPIoU loss, we conduct a comprehensive ablation study across three datasets: LUNA16, Node21, and LungCT. The results are summarized in Table 4.

Table 4.

Comparison of module ablation experiment results with diverse confidence intervals.

Table 4 presents the results of an ablation study designed to evaluate the individual and joint contributions of the spatial squeeze-and-excitation (SSE) module and the proposed EAPIoU loss across LUNA16, Node21, and LungCT datasets. When both components are removed, the model exhibits the weakest performance across all datasets and evaluation metrics, underscoring the necessity of incorporating both spatial attention and shape-aware regression for accurate pulmonary nodule detection.

Introducing the SSE module alone leads to consistent improvements in both precision and mAP@50–90, particularly on LUNA16 and Node21. This demonstrates that spatial attention enhances the model’s ability to focus on discriminative local regions and suppress irrelevant backgrounds. For instance, precision on LUNA16 increases from 0.672 to 0.725, while mAP@50–90 rises from 28.7% to 30.1%. A paired t-test across five independent runs shows that these gains are statistically significant for mAP@50–90 on LUNA16.

Similarly, incorporating EAPIoU alone contributes significant gains, especially in recall and localization quality. By explicitly penalizing bounding box aspect ratio discrepancies, EAPIoU improves regression accuracy. On the LungCT dataset, for example, it improves mAP@50–90 from 48.5% to 51.2%, with a corresponding p-value of 0.004, confirming its advantage in challenging scenarios involving small or elongated nodules. These results highlight the robustness and general utility of EAPIoU across heterogeneous datasets.

The best performance is consistently observed when both modules are used together. Their combination yields substantial and complementary benefits across all datasets. On Node21, for example, the whole model reaches 0.895 precision and 51.4% mAP@50–90, outperforming all partial configurations. These results suggest that SSE contributes to spatial feature refinement, while EAPIoU enhances geometric alignment, together forming a robust framework for high-fidelity object detection in medical imaging.

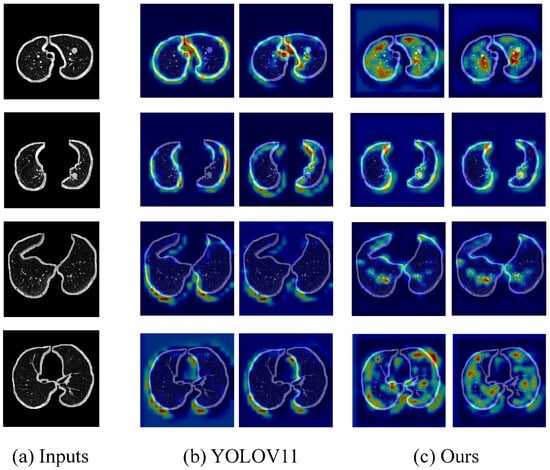

Additionally, we compared the heat maps of different module combinations on the LUNA16 dataset. A representative set of results is shown in the figure below.

As shown in Figure 5, we compare the heat maps generated by the original YOLOV11 model and our proposed method (YOLOV11+SSE+EAPIOU) on several pulmonary CT scan samples. The original YOLOV11 model exhibits issues such as dispersed target responses and false activations in non-nodule regions, as evidenced by the presence of high-response areas outside the actual nodules. In contrast, our method demonstrates more focused and intense activations over the pulmonary nodule regions, with improved spatial consistency and clearer boundary emphasis. The incorporation of the spatial SE module and the EAPIOU loss function enhances the model’s ability to suppress irrelevant background features while reinforcing its attention to clinically significant areas. These improvements contribute to better localization accuracy and model interpretability, validating the effectiveness of our enhancements in spatial awareness and feature discrimination.

Figure 5.

Comparison of heat maps between YOLOv11 and YOLOV11+SSE+EAPIOU.

4.5.3. Ablation Study on IoU Hyperparameter

To investigate the impact of the IoU loss weighting coefficient on model performance, we conducted a series of ablation experiments across the LUNA16, Node21, and LungCT datasets. The results, as presented in Table 5, show that the value of significantly affects detection accuracy and localization quality. To further understand the impact of on model performance, we performed a sensitivity analysis and provided guidelines for selecting in different clinical settings.

Table 5.

IoU hyperparameter experiment across datasets with 95% confidence intervals.

4.5.4. Sensitivity Analysis

We conducted a series of experiments where was varied across a range to analyze its impact on model performance. In these experiments, we tested values of within the range of [0.001, 1] to evaluate key performance indicators.

- •

- Too small: a (e.g., 0.001) underweights the regression loss, degrading both classification and localization performance.

- •

- Too large: a (e.g., 0.5 or 1) overemphasizes bounding box regression, which reduces classification quality and overall robustness.

- •

- outperforms all other settings across every metric and dataset, indicating that it provides the most favorable trade-off.

4.5.5. Clinical Application Guidelines for Selecting

Based on the consistent superiority of across all datasets and evaluation metrics, we recommend using this value as the default setting for various clinical diagnostic tasks. For early disease screening, where maximizing recall is crucial to minimize the risk of missed detections, offers optimal sensitivity. In tasks requiring precise localization, such as lesion boundary delineation or pre-surgical planning, also achieves the best bounding box regression accuracy. Moreover, for general medical image analysis tasks that demand a balanced performance between precision and recall, consistently delivers robust and reliable results. Therefore, we suggest as a universal setting across diverse medical imaging scenarios.

4.6. Comparison of Attention Mechanisms

To evaluate the effect of different attention mechanisms on detection performance, we conducted a comparative study across three datasets (LUNA16, Node21, and LungCT), integrating five representative modules: SE [25], CA [3], SIMAM [4], MCA [5], and our proposed spatial squeeze-and-excitation (SSE). The results are summarized in Table 6.

Table 6.

Variational attention mechanism experiments across datasets with 95% confidence intervals.

As illustrated in Table 6, the proposed spatial squeeze-and-excitation (SSE) module consistently achieves superior or competitive performance across all datasets and evaluation metrics. On the LUNA16 dataset, SSE outperforms all baselines in precision (0.781), recall (77.3%), and mAP@50 (75.5%), while also yielding the highest mAP@50–90 (33.3%). This reflects its effectiveness in enhancing localization accuracy for small nodules by focusing on spatially relevant features. A paired t-test on mAP@50–90 across five independent runs shows that SSE significantly outperforms the second-best attention (CA).

On the Node21 and LungCT datasets, SSE again surpasses other attention mechanisms, achieving the best results in all four metrics. For example, on Node21, it reaches precision of 0.895 and a mAP@50–90 of 51.4%, outperforming the next-best CA module by 2.4% and 1.2%, respectively. The observed gains are statistically significant for mAP@50–90. Similarly, on LungCT, SSE improves mAP@50–90 to 53.8%, showing strong generalization to more complex and heterogeneous nodular structures.

In contrast, modules such as SIMAM and MCA consistently lag, particularly in precision and mAP@50, indicating suboptimal spatial discrimination in the context of dense or small-object environments. While SE performs reasonably well and offers high recall, it lacks the spatial selectivity necessary for precise localization, especially under cluttered backgrounds.

These findings demonstrate that combining spatial and channel attention, as carried out in SSE, enables the model to emphasize critical lesion regions better while suppressing irrelevant context. The lightweight nature of SSE, along with its consistent improvements across diverse datasets, underscores its suitability for medical object detection tasks where fine-grained spatial awareness is essential.

4.7. Comparison with Mainstream Detectors

To comprehensively validate the performance of the proposed YOLOV11-EAR framework, we conducted quantitative comparisons against two widely used object detectors: faster R-CNN [26] and RetinaNet [27]. These experiments were performed on three benchmark datasets for pulmonary nodule detection, LUNA16, Node21, and LungCT, covering a variety of imaging conditions and nodule morphologies. The evaluation metrics include precision, recall, and mean average precision at IoU threshold 0.5 (mAP@50).

As shown in Table 7, YOLOV11-EAR consistently outperforms all baselines across all three datasets in terms of precision, recall, and mAP@50. On the LUNA16 dataset, our model achieves mAP of 75.5%, representing a 5.4% gain over faster R-CNN and a 6.6% gain over RetinaNet. Similar improvements are observed on Node21 and LungCT datasets, confirming the robustness and superior generalization capability of our framework.

Table 7.

Comparative results on three public datasets with 95% confidence intervals (best results are marked in bold).

These improvements can be attributed to the synergistic design of the spatial squeeze-and-excitation (SSE) module and the explicit aspect ratio penalty IoU (EAPIoU) loss function. The former enhances the spatial–semantic representation of nodules, while the latter enforces stronger geometric constraints, particularly benefiting the localization of irregularly shaped pulmonary nodules.

The superior performance across benchmarks validates YOLOV11-EAR as a robust and generalizable solution for real-world clinical applications, especially for detecting small and irregular pulmonary nodules under diverse acquisition settings.

4.8. Kernel Size Sensitivity Analysis in the SSE Module

To evaluate the impact of the convolutional kernel size in the spatial squeeze-and-excitation (SSE) module, we conducted a sensitivity analysis on the LUNA16 dataset. Specifically, we varied the kernel size of the spatial attention sub-module among {3, 5, 7, 9, and 11} to examine how different receptive field sizes affect detection performance.

During the experiments, all other network structures and training hyperparameters were kept consistent. Only the kernel size in the spatial attention branch was changed to ensure a fair comparison. Table 8 reports the model performance in terms of precision, recall, mAP@50, and mAP@50–90 under different kernel sizes.

Table 8.

Detection performance under different convolutional kernel sizes in the SSE module (LUNA16 dataset) with 95% confidence intervals.

As shown in Table 8, the model achieves the best overall performance when the kernel size is set to seven, attaining the highest values across all four metrics. This indicates that kernel size plays a critical role in the spatial attention mechanism. While smaller kernels may be inadequate for capturing broader spatial dependencies, huge kernels can introduce noise and diminish generalization. Therefore, selecting an appropriate kernel size is crucial for maximizing spatial representation capacity and enhancing detection accuracy in medical image analysis.

4.9. Generalizability Across Detection Frameworks

To rigorously evaluate the generalizability of the proposed spatial squeeze-and-excitation (SSE) module and explicit aspect ratio penalty IoU (EAPIoU) loss, we conducted transfer experiments by integrating them into two representative object detection frameworks: faster R-CNN and RetinaNet. These models were selected due to their structural diversity and widespread adoption in medical object detection tasks, thereby providing a robust testbed for assessing architectural independence.

All models were trained and evaluated on the LUNA16 dataset under identical training protocols with a shared ResNet-50 backbone to ensure fairness. Each framework was tested under four configurations: (i) the original baseline, (ii) integrated with SSE, (iii) using the EAPIoU loss, and (iv) combining both SSE and EAPIoU. Evaluation metrics included precision, recall, and mAP@50, consistent with prior benchmarks.

As shown in Table 9, integrating the SSE module and EAPIoU loss into both faster R-CNN and RetinaNet yields consistent performance gains across all evaluation metrics. For instance, in the faster R-CNN framework, introducing SSE alone improves mAP@50 from 70.1% to 73.1%, and EAPIoU alone raises it to 72.0%. The combined use of both modules achieves the best result of 74.7%, indicating a cumulative effect. A similar trend is observed with RetinaNet, where the complete configuration also yields a notable increase over the baseline.

Table 9.

Ablation study of SSE and EAPIoU across different detection frameworks (LUNA16 Dataset) with 95% confidence intervals.

These results demonstrate that the SSE module and EAPIoU loss are not tied to the YOLOV11-EAR architecture but can generalize well to different detection backbones and heads. The consistent improvements further support their modularity, robustness, and potential for broader adoption in various medical object detection tasks.

5. Discussion

This study addresses the challenge of detecting small and morphologically diverse pulmonary nodules in CT images by proposing the YOLOV11-EAR framework, which integrates a spatial squeeze-and-excitation (SSE) attention module and a novel explicit aspect ratio penalty IoU (EAPIoU) loss. We evaluate the model comprehensively on three publicly available datasets, LUNA16, Node21, and LungCT, covering various imaging conditions and nodule types. Experimental results demonstrate that the proposed method consistently outperforms existing mainstream methods in terms of precision, recall, and mAP, confirming its robustness and generalizability.

Our results are consistent with recent studies demonstrating the effectiveness of attention mechanisms in medical image feature extraction. Previous works such as SE-ResNeXt-50 [2] and CSE-GAN [1] mainly rely on channel attention and report improved detection performance. However, our findings suggest that channel attention alone is insufficient for retaining fine-grained spatial features. The SSE module addresses this issue by introducing spatial context, enabling more accurate localization of small and irregular nodules across diverse datasets.

Although the SSE module bears conceptual similarity to dual-attention mechanisms such as CBAM [28] and BAM [29], it differs significantly in three aspects: (1) SSE is specifically tailored for medical imaging tasks, emphasizing boundary preservation and local structure sensitivity; (2) unlike CBAM, which applies channel and spatial attention sequentially, SSE performs spatial squeezing and excitation in parallel and fuses it with channel-wise recalibration through additive interactions, achieving more stable optimization in low-contrast scenarios; (3) SSE adopts a lightweight design, ensuring minimal computational overhead when integrated into real-time detection frameworks.

To further evaluate the practical performance of our method, we conducted comparative experiments with two representative detection frameworks: faster R-CNN and RetinaNet. As shown in Table 7, YOLOV11-EAR consistently outperforms both models across all metrics and datasets. For instance, on the LungCT dataset, YOLOV11-EAR achieves mAP@50 of 0.924, significantly surpassing RetinaNet’s 0.882. This highlights the superior efficiency of our framework and the advantage of SSE and EAPIoU in capturing detailed structures and precise boundary fitting. These results demonstrate that YOLOV11-EAR not only performs well in lightweight scenarios but also surpasses mainstream models in general detection tasks.

In terms of regression loss, various IoU-based loss functions have been proposed in recent years, including GIoU [8], CIoU [9], and DIoU [10], which have significantly improved localization accuracy. GIoU introduces the smallest enclosing box to address gradient issues when boxes do not overlap but struggle with aspect ratio variation. CIoU enhances GIoU by incorporating center distance and aspect ratio penalties yet remains insufficient for shape-inconsistent targets. DIoU focuses on center distance but still relies on angular penalties for aspect ratio regularization. These approaches fail to resolve shape consistency issues, especially for non-rectangular objects, adequately. To this end, we propose EAPIoU, which introduces a squared aspect ratio penalty that directly measures the discrepancy between predicted and ground-truth boxes. EAPIoU offers stronger gradient feedback, improved shape optimization, and scale invariance, demonstrating superior generalization compared to CIoU and DIoU.

Interestingly, ablation experiments reveal that although SIoU achieves the highest recall, its mAP under stricter IoU thresholds is still inferior to EAPIoU. This suggests that SIoU’s emphasis on optimization dynamics may come at the expense of geometric precision, which is critical in medical imaging tasks that require accurate boundary delineation.

To verify the architecture-agnostic property of the proposed modules, we also integrated SSE and EAPIoU into faster R-CNN and RetinaNet for extended experiments. Results show consistent performance improvements across both frameworks, confirming the modules’ transferability and adaptability in diverse detection architectures. This further validates that SSE and EAPIoU are generic components rather than architecture-specific designs.

This study highlights the significance of shape-sensitive loss functions and spatially aware attention mechanisms in improving detection for low-contrast and small-object scenarios. The synergistic design of SSE and EAPIoU provides a comprehensive improvement in both feature localization and bounding box regression. Their robustness across multiple datasets and detection frameworks highlights their broad applicability in real-world medical settings.

Theoretically, our work challenges prevailing assumptions in object detection design, particularly the notion that aspect ratio mismatches can be effectively addressed via angular penalties. Our findings suggest that explicit ratio modeling is more effective in small-object detection tasks and should be emphasized in future loss function designs.

Despite its strengths, our method has several limitations. First, the datasets used (LUNA16, Node21, and LungCT) primarily include nodules mm in diameter. Further validation is required on datasets with extremely small or heterogeneous lesions. Second, the training pipeline is fully supervised, limiting its applicability in scenarios with scarce annotations or significant domain shifts.

Finally, although the model remains lightweight, further optimization is required for real-time deployment on mobile or edge devices. In future work, we plan to explore semi-supervised learning and domain adaptation techniques to enhance transferability and conduct broader evaluations on datasets such as LIDC-IDRI [30] and private clinical repositories. We also aim to integrate more efficient attention mechanisms to reduce computational cost while maintaining high accuracy.

6. Conclusions

In this work, we proposed a YOLOV11-based method for pulmonary nodule detection, targeting improved accuracy for nodules. A novel attention mechanism combining spatial and channel attention was introduced to enhance focus on critical local regions, effectively addressing the limitations of traditional SE-based modules. Experiments showed that our module outperformed SE, coordinate attention, SIMAM, and multi-head channel attention in terms of precision and mAP@50.

We also introduced the explicit aspect ratio penalty IoU (EAPIoU) loss, which explicitly penalizes aspect ratio differences between predicted and ground-truth boxes. This design significantly improved localization accuracy, particularly for small targets, and ablation studies confirmed its superiority over other IoU-based losses. Hyperparameter analysis further validated the stability and robustness of the proposed loss.

Despite strong performance, our method’s generalization to diverse datasets and real-time deployment remains to be explored. Future work will focus on integrating semi- or self-supervised learning to utilize unlabeled data and developing lighter attention modules to reduce computational costs for broader clinical use.

Author Contributions

Conceptualization, X.S.; Data curation, X.S. and H.X.; Formal analysis, H.X.; Funding acquisition, X.S., H.X., T.G. and N.C.; Methodology, X.S.; Resources, H.X. and T.G.; Software, X.S.; Supervision, J.G.; Validation, X.S. and H.X.; Visualization, N.C.; Writing—original draft, X.S. and T.G.; Writing—review and editing, X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tyagi, S.; Talbar, S.N. CSE-GAN: A 3D conditional generative adversarial network with concurrent squeeze-and-excitation blocks for lung nodule segmentation. Comput. Biol. Med. 2022, 147, 105781. [Google Scholar] [CrossRef] [PubMed]

- Priya, A.; Shyamala Bharathi, P. SE-ResNeXt-50-CNN: A deep learning model for lung cancer classification. Appl. Soft Comput. 2025, 171, 112696. [Google Scholar] [CrossRef]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 13708–13717. [Google Scholar] [CrossRef]

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; Proceedings of Machine Learning Research. PMLR: New York, NY, USA, 2021; Volume 139, pp. 11863–11874. [Google Scholar]

- Yu, Y.; Zhang, Y.; Cheng, Z.; Song, Z.; Tang, C. MCA: Multidimensional collaborative attention in deep convolutional neural networks for image recognition. Eng. Appl. Artif. Intell. 2023, 126, 107079. [Google Scholar] [CrossRef]

- Meter, W. LungCT Dataset. Roboflow Universe. Available online: https://universe.roboflow.com/water-meter-k29v4/lungct-tywmh (accessed on 30 May 2025).

- Li, P.; Tao, H.; Zhou, H.; Zhou, P.; Deng, Y. Enhanced Multiview attention network with random interpolation resize for few-shot surface defect detection. Multimed. Syst. 2025, 31, 36. [Google Scholar] [CrossRef]

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 658–666. [Google Scholar] [CrossRef]

- Wang, X.; Song, J. ICIoU: Improved Loss Based on Complete Intersection Over Union for Bounding Box Regression. IEEE Access 2021, 9, 105686–105695. [Google Scholar] [CrossRef]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000. [Google Scholar] [CrossRef]

- Setio, B.v.G.; Ciompi, F.; Litjens, G.; Economopoulos, T.; Niemeijer, M.; Jacobs, C.; van Riel, S.J.; Willems, T.; ten Kate, G.; Prokop, M. LUNA16: LUNG NODE ANALYSIS 2016. Med. Imaging 2017 Comput. Aided Diagn. 2017, 10134, 897–906. [Google Scholar] [CrossRef]

- Sogancioglu, E.; Murphy, K.; van Ginneken, B. NODE21: Public CXR Training Dataset; Zenodo: Geneva, Switzerland, 2021. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Ultralytics. YOLOv5. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 3 July 2025).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. [Google Scholar] [CrossRef]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar] [CrossRef]

- Ultralytics. YOLOv8 Docs. 2023. Available online: https://docs.ultralytics.com (accessed on 29 June 2025).

- Ultralytics. YOLOv9: Real-Time Object Detection. 2024. Available online: https://docs.ultralytics.com/zh/models/yolov9/ (accessed on 29 June 2025).

- Ultralytics. YOLOv10: Model Documentation and Resources. 2024. Available online: https://docs.ultralytics.com/zh/models/yolov10/ (accessed on 3 July 2025).

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725. Available online: https://docs.ultralytics.com/zh/models/yolo11/ (accessed on 3 July 2025).

- Cho, Y.J. Weighted Intersection over Union (wIoU) for evaluating image segmentation. Pattern Recognit. Lett. 2024, 185, 101–107. [Google Scholar] [CrossRef]

- Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157. [Google Scholar] [CrossRef]

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part VII. Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–19. [Google Scholar] [CrossRef]

- Park, J.; Woo, S.; Lee, J.Y.; Kweon, I.S. BAM: Bottleneck Attention Module. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018. [Google Scholar]

- Armato, S.G., III; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A.; et al. The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI): A Completed Reference Database of Lung Nodules on CT Scans. Med. Phys. 2011, 38, 915–931. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).