GDM-DTM: A Group Decision-Making-Enabled Dynamic Trust Management Method for Malicious Node Detection in Low-Altitude UAV Networks

Abstract

1. Introduction

- GDM-Based Trust Management: We integrate group decision-making (GDM) into UAV trust management, yielding the method termed GDM-DTM. GDM-DTM evaluates and aggregates trust values across four consistency parts (subjective, objective, global, and self-proof) to reach consensus-driven decisions, while its trust management component maintains the trustworthiness of each UAV. This approach significantly enhances malicious node detection in scenarios without ground infrastructure.

- Multi-dimensional Trust Modeling: A trust value calculation method based on multi-dimensional UAV attribute data is proposed to provide a more reliable trust computation approach.

- Proactive Trust Validation via Self-Proof Consistency: To counter collusion and false trust propagation, we introduce the concept of self-proof consistency to UAV environments and propose the self-proof consistency degree as a new metric for evaluating consensus levels. GDM-DTM shifts trust validation from passive estimation to proactive provision, which makes it more effective at identifying and adjusting discrepancies between opinions.

- Dynamic Threshold and Weight Adjustment: A dynamic threshold adjustment algorithm based on attribute weight distribution and node tolerance is introduced to enhance the system’s environmental adaptability in dynamic topologies and novel attack scenarios.

- We conduct detailed experiments to evaluate the effectiveness of GDM-DTM in trust evaluation and malicious node detection. The results show that GDM-DTM outperforms traditional methods and demonstrates stronger adaptability and robustness in complex attack scenarios.
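The multi-dimensional trust computation and threshold-based judgment described in these contributions can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each UAV attribute has already been scored in [0, 1] and that attribute weights are given; the function names, attribute names, weights, and the fixed threshold of 0.5 are illustrative only and are not the paper's formulas. The dynamic threshold and weight adjustment is not modeled here.

```python
from typing import Dict


def attribute_trust(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Hypothetical weighted aggregation of per-attribute trust scores.

    Each score is assumed to lie in [0, 1]; weights are non-negative and are
    normalized here so that the aggregated trust also lies in [0, 1].
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same attributes")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[name] * weights[name] / total for name in scores)


def is_malicious(trust: float, threshold: float) -> bool:
    """Hypothetical rule: flag a node whose aggregated trust falls below a fixed threshold."""
    return trust < threshold


# Illustrative attribute names and values, not taken from the paper.
scores = {"forwarding": 0.9, "position": 0.8, "energy": 0.5}
weights = {"forwarding": 0.5, "position": 0.3, "energy": 0.2}
trust = attribute_trust(scores, weights)
print(round(trust, 2), is_malicious(trust, threshold=0.5))
```

Normalizing the weights inside the function keeps the result on the same scale as the inputs, so a single threshold can be compared against it regardless of how the raw weights were produced.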

2. Related Work

2.1. UAV Trust Management

2.2. Group Decision-Making

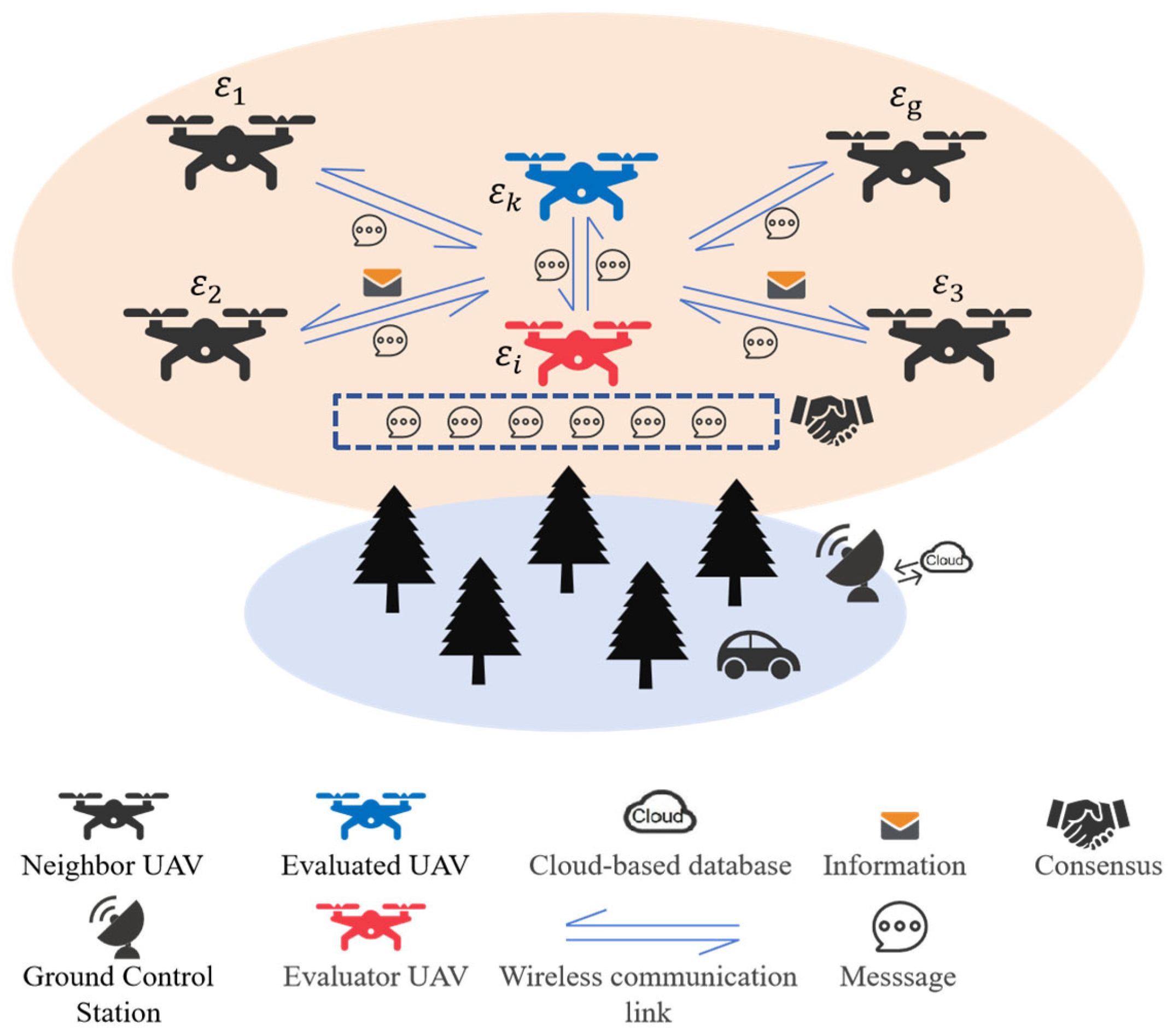

3. UAV System Architecture

4. Proposed GDM-DTM

4.1. Overview of the Proposed GDM-DTM

4.2. Consistency Evaluation Algorithm

4.2.1. Subjective Consistency Part

- Multi-dimensional Attribute Trust Computation

- Weight Calculation

- Subjective Consistency Degree Calculation

4.2.2. Objective Consistency Part

4.2.3. Global Consistency Part

4.2.4. Self-Proof Consistency Part

4.2.5. Consistency Degree Similarity Calculation

4.3. Dynamic Trust Adjustment Mechanism

- Case 1

- Case 2

- Case 3

- Case 4

4.4. State Update Mechanism

5. Performance Evaluation

5.1. Simulation Setup

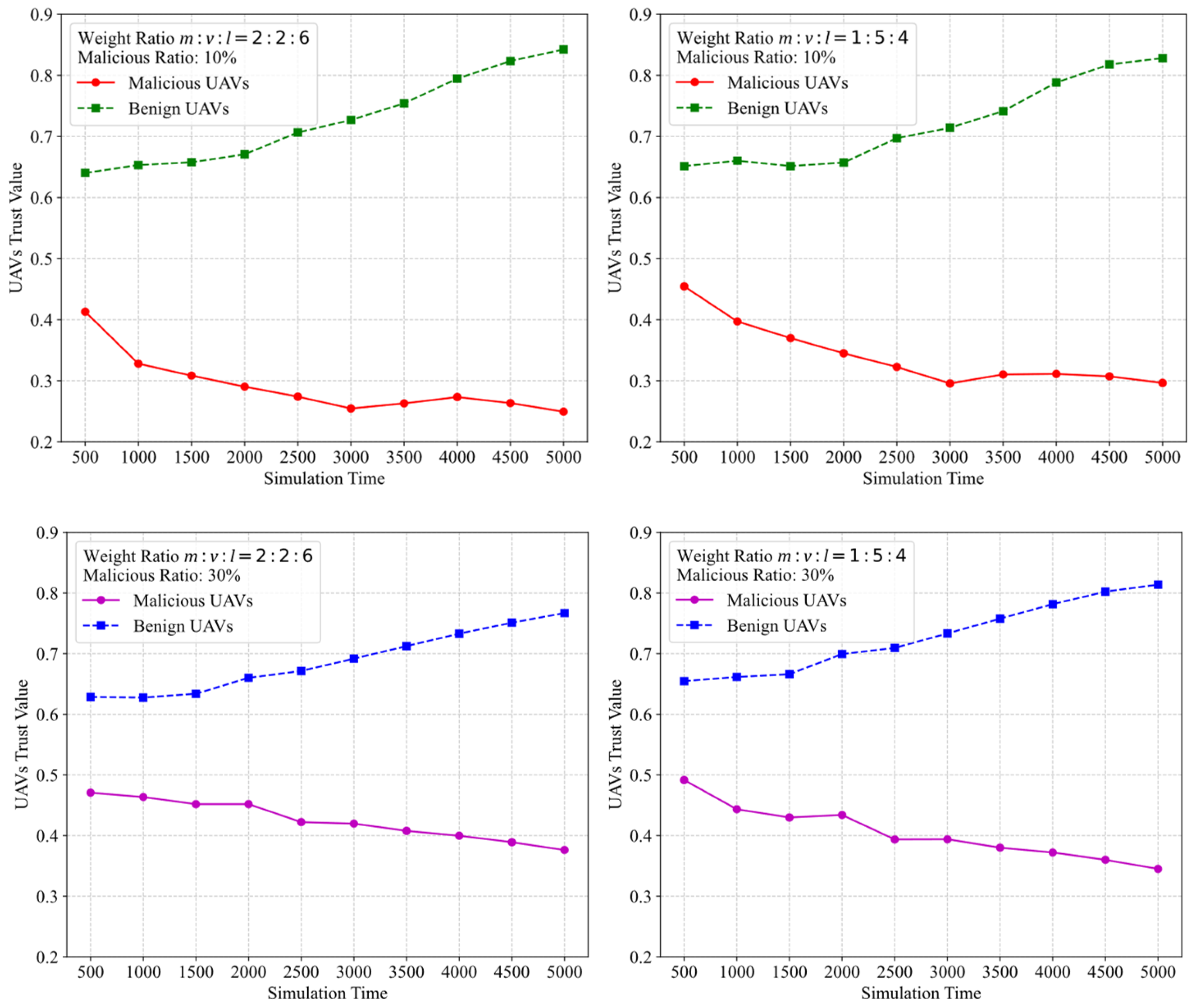

5.2. UAV Trust Value Calculation Evaluation Analysis

5.3. UAV Trust Management System Performance Analysis

5.3.1. Attack Model

5.3.2. Performance Evaluation Under MTGI

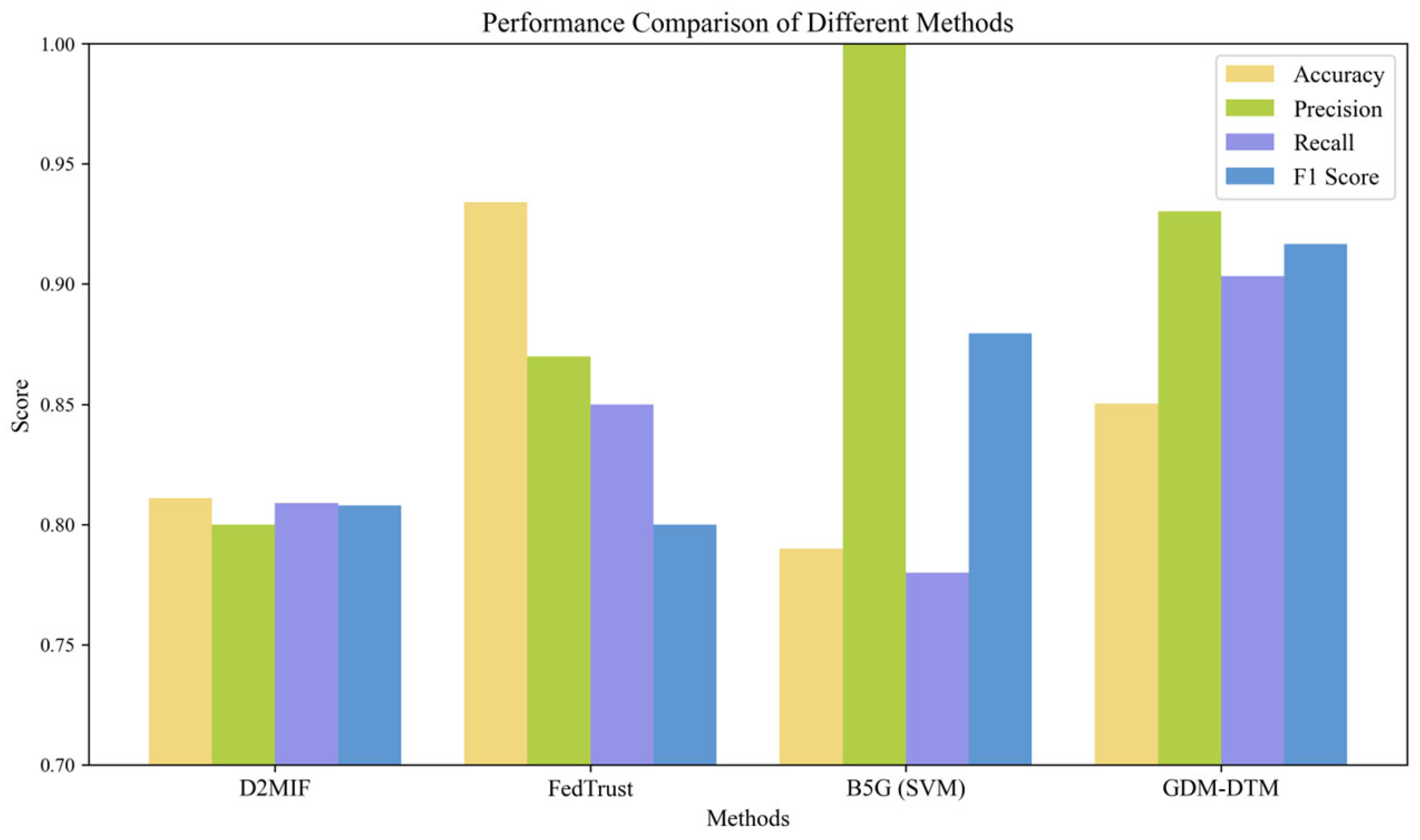

- Accuracy: The percentage of nodes, benign and malicious, that are correctly classified.

- Precision: The proportion of nodes predicted as malicious that are actually malicious.

- Recall: The proportion of actual malicious nodes that are correctly identified.

- F-score: The harmonic mean of precision and recall, which balances the two.

- Accuracy Performance

- Precision

- Recall

- F-score
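The four detection metrics above can be computed from the confusion matrix of node classifications. The sketch below treats "malicious" as the positive class and assumes that F-score means the balanced F1 score; the function name `detection_metrics` is hypothetical, and the ratios are returned in [0, 1] rather than as percentages.

```python
from typing import Dict, Sequence


def detection_metrics(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with 'malicious' (True) as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # malicious flagged as malicious
    tn = sum(1 for t, p in pairs if not t and not p)  # benign kept as benign
    fp = sum(1 for t, p in pairs if not t and p)      # benign wrongly flagged
    fn = sum(1 for t, p in pairs if t and not p)      # malicious missed
    n = len(pairs)
    accuracy = (tp + tn) / n if n else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Balanced F-score (F1): harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f_score": f1}


# Example: 3 malicious and 5 benign nodes; one attacker is missed, one benign node is misflagged.
truth = [True, True, True, False, False, False, False, False]
pred = [True, True, False, True, False, False, False, False]
print(detection_metrics(truth, pred))
```

In this example there are 2 true positives, 4 true negatives, 1 false positive and 1 false negative, so accuracy is 6/8 while precision, recall and F1 are all 2/3.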

5.3.3. Comparison of GDM-DTM with Other Models

6. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Zhou, Y. Unmanned Aerial Vehicles Based Low-Altitude Economy with Lifecycle Techno-Economic-Environmental Analysis for Sustainable and Smart Cities. J. Clean. Prod. 2025, 499, 145050.

- Zheng, K.; Fu, J.; Liu, X. Relay Selection and Deployment for NOMA-Enabled Multi-AAV-Assisted WSN. IEEE Sens. J. 2025, 25, 16235–16249.

- Teng, M.; Gao, C.; Wang, Z. A Communication-Based Identification of Critical Drones in Malicious Drone Swarm Networks. Complex Intell. Syst. 2024, 10, 3197–3211.

- Zilberman, A.; Stulman, A.; Dvir, A. Identifying a Malicious Node in a UAV Network. IEEE Trans. Netw. Serv. Manage. 2024, 21, 1226–1240.

- Meng, X.; Liu, D. GeTrust: A Guarantee-Based Trust Model in Chord-Based P2P Networks. IEEE Trans. Dependable Secur. Comput. 2018, 15, 54–68.

- You, X.; Hou, F.; Chiclana, F. A Reputation-Based Trust Evaluation Model in Group Decision-Making Framework. Inf. Fusion 2024, 103, 102082.

- Zhaofeng, M.; Lingyun, W.; Xiaochang, W. Blockchain-Enabled Decentralized Trust Management and Secure Usage Control of IoT Big Data. IEEE Internet Things J. 2020, 7, 4000–4015.

- Haseeb, K.; Saba, T.; Rehman, A. Trust Management with Fault-Tolerant Supervised Routing for Smart Cities Using Internet of Things. IEEE Internet Things J. 2022, 9, 22608–22617.

- Chen, X.; Ding, J.; Lu, Z. A Decentralized Trust Management System for Intelligent Transportation Environments. IEEE Trans. Intell. Transport. Syst. 2022, 23, 558–571.

- Deng, M.; Lyu, Y.; Yang, C. Lightweight Trust Management Scheme Based on Blockchain in Resource-Constrained Intelligent IoT Systems. IEEE Internet Things J. 2024, 11, 25706–25719.

- Xiang, X.; Cao, J.; Fan, W. Blockchain Enabled Dynamic Trust Management Method for the Internet of Medical Things. Decis. Support Syst. 2024, 180, 114184.

- Gu, C.; Ma, B.; Hu, D. A Dependable and Efficient Decentralized Trust Management System Based on Consortium Blockchain for Intelligent Transportation Systems. IEEE Trans. Intell. Transport. Syst. 2024, 25, 19430–19443.

- Zheng, J.; Xu, J.; Du, H. Trust Management of Tiny Federated Learning in Internet of Unmanned Aerial Vehicles. IEEE Internet Things J. 2024, 11, 21046–21060.

- Wang, P.; Xu, N.; Zhang, H. Dynamic Access Control and Trust Management for Blockchain-Empowered IoT. IEEE Internet Things J. 2022, 9, 12997–13009.

- Din, I.U.; Awan, K.A.; Almogren, A. Secure and Privacy-Preserving Trust Management System for Trustworthy Communications in Intelligent Transportation Systems. IEEE Access 2023, 11, 65407–65417.

- Wang, C.; Cai, Z.; Seo, D. TMETA: Trust Management for the Cold Start of IoT Services with Digital-Twin-Aided Blockchain. IEEE Internet Things J. 2023, 10, 21337–21348.

- Yu, Y.; Lu, Q.; Fu, Y. Dynamic Trust Management for the Edge Devices in Industrial Internet. IEEE Internet Things J. 2024, 11, 18410–18420.

- Xu, Q.; Zhang, L.; Qin, X. A Novel Machine Learning-Based Trust Management Against Multiple Misbehaviors for Connected and Automated Vehicles. IEEE Trans. Intell. Transport. Syst. 2024, 25, 16775–16790.

- A Review of Research on UAV Network Intrusion Detection and Trust Systems; National Cybersecurity Institute, Wuhan University: Wuhan, China, 2023.

- Liu, Z.; Guo, J.; Huang, F. Lightweight Trustworthy Message Exchange in Unmanned Aerial Vehicle Networks. IEEE Trans. Intell. Transport. Syst. 2023, 24, 2144–2157.

- Kundu, J.; Alam, S.; Koner, C. Trust-Based Dynamic Leader Selection Mechanism for Enhanced Performance in Flying Ad-Hoc Networks (FANETs). IEEE Trans. Intell. Transport. Syst. 2024, 25, 20616–20627.

- Liang, J.; Liu, W.; Xiong, N.N. An Intelligent and Trust UAV-Assisted Code Dissemination 5G System for Industrial Internet-of-Things. IEEE Trans. Ind. Inf. 2022, 18, 2877–2889.

- Zhang, Y.; Wang, W.; Shi, F. Reputation-Based Raft-Poa Layered Consensus Protocol Converging UAV Network. Comput. Netw. 2024, 240, 110170.

- Akram, J.; Anaissi, A.; Rathore, R.S. GALTrust: Generative Adverserial Learning-Based Framework for Trust Management in Spatial Crowdsourcing Drone Services. IEEE Trans. Consum. Electron. 2024, 70, 6196–6207.

- Akram, J.; Anaissi, A.; Rathore, R.S. Digital Twin-Driven Trust Management in Open RAN-Based Spatial Crowdsourcing Drone Services. IEEE Trans. Green Commun. Netw. 2024, 8, 1061–1075.

- Zha, Q.; Dong, Y.; Zhang, H. A Personalized Feedback Mechanism Based on Bounded Confidence Learning to Support Consensus Reaching in Group Decision Making. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 3900–3910.

- Zhou, X.; Li, S.; Wei, C. Consensus Reaching Process for Group Decision-Making Based on Trust Network and Ordinal Consensus Measure. Inf. Fusion 2024, 101, 101969.

- Zeng, Y.; Guvenc, I.; Zhang, R.; Geraci, G.; Matolak, D.W. (Eds.) Front Matter. In UAV Communications for 5G and Beyond; Wiley: Hoboken, NJ, USA, 2020; ISBN 978-1-119-57569-6.

- Keränen, A.; Ott, J.; Kärkkäinen, T. The ONE Simulator for DTN Protocol Evaluation. In Proceedings of the Second International ICST Conference on Simulation Tools and Techniques, Rome, Italy, 2–6 March 2009; ICST: Rome, Italy, 2009.

- Yan, J.; Zhao, X.; Li, Z. Deep-Reinforcement-Learning-Based Computation Offloading in UAV-Assisted Vehicular Edge Computing Networks. IEEE Internet Things J. 2024, 11, 19882–19897.

- Kurunathan, H.; Huang, H.; Li, K. Machine Learning-Aided Operations and Communications of Unmanned Aerial Vehicles: A Contemporary Survey. IEEE Commun. Surv. Tutor. 2022, 26, 496–533.

- Rahmani, M.; Delavernhe, F.; Mohammed Senouci, S. Toward Sustainable Last-Mile Deliveries: A Comparative Study of Energy Consumption and Delivery Time for Drone-Only and Drone-Aided Public Transport Approaches in Urban Areas. IEEE Trans. Intell. Transport. Syst. 2024, 25, 17520–17532.

- Ajakwe, S.O.; Kim, D.-S.; Lee, J.-M. Drone Transportation System: Systematic Review of Security Dynamics for Smart Mobility. IEEE Internet Things J. 2023, 10, 14462–14482.

- Liu, W.; Lin, H.; Wang, X. D2MIF: A Malicious Model Detection Mechanism for Federated-Learning-Empowered Artificial Intelligence of Things. IEEE Internet Things J. 2023, 10, 2141–2151.

- Awan, K.A.; Ud Din, I.; Zareei, M. Securing IoT with Deep Federated Learning: A Trust-Based Malicious Node Identification Approach. IEEE Access 2023, 11, 58901–58914.

- Abubaker, Z.; Javaid, N.; Almogren, A. Blockchained Service Provisioning and Malicious Node Detection via Federated Learning in Scalable Internet of Sensor Things Networks. Comput. Netw. 2022, 204, 108691.

| Method | Independent of Ground Infrastructure | Multi-Dimensional Trust Modeling | Resistance to Collusion Attacks | Dynamic Threshold/Weight Adjustment | Group Decision-Making Mechanism | Self-Proof Consistency Verification |
|---|---|---|---|---|---|---|
| GDM-DTM (ours) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| LTME [20] | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ |
| TDLS-FANET [21] | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ |
| UTCD [22] | ✗ | ✗ | ✓ | ✗ | ✗ | ✓ |
| Rep-Raft-Poa [23] | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ |
| GALTrust [24] | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ |
| TMIoDT [25] | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |

| Scenario | Condition | Operation |
|---|---|---|
| Agreement between subjective and objective (judged as benign) | | |
| Agreement between subjective and objective (judged as malicious) | | Mark as malicious node, block communication |
| Conflict between subjective and objective (subjective judged as benign) | | Divide into Case 1 and Case 2 |
| Conflict between subjective and objective (subjective judged as malicious) | | Divide into Case 3 and Case 4 |
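The scenario split in this table can be sketched as a small dispatch function. It assumes, hypothetically, that the subjective and objective views each judge a node benign when their trust value is at least a shared threshold; the names `Scenario` and `classify_scenario` are illustrative. The rules that separate Case 1 from Case 2 (and Case 3 from Case 4) are not modeled, so a conflict is only routed to the corresponding pair of cases.

```python
from enum import Enum


class Scenario(Enum):
    """The four rows of the scenario table (labels paraphrased from the table)."""
    AGREE_BENIGN = "agreement, judged benign"
    AGREE_MALICIOUS = "agreement, judged malicious: mark as malicious, block communication"
    CONFLICT_SUBJ_BENIGN = "conflict, subjective benign: resolve via Case 1 or Case 2"
    CONFLICT_SUBJ_MALICIOUS = "conflict, subjective malicious: resolve via Case 3 or Case 4"


def classify_scenario(subjective_trust: float, objective_trust: float, threshold: float) -> Scenario:
    # Hypothetical rule: each view judges a node benign when its trust reaches the threshold.
    subj_benign = subjective_trust >= threshold
    obj_benign = objective_trust >= threshold
    if subj_benign == obj_benign:
        # Both views agree on the verdict.
        return Scenario.AGREE_BENIGN if subj_benign else Scenario.AGREE_MALICIOUS
    # Views disagree: route by the subjective judgment, as the table does.
    return Scenario.CONFLICT_SUBJ_BENIGN if subj_benign else Scenario.CONFLICT_SUBJ_MALICIOUS


print(classify_scenario(0.8, 0.3, threshold=0.5).value)
```

Keying the conflict branch on the subjective judgment mirrors how the table groups Cases 1 and 2 versus Cases 3 and 4.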

| Parameter | Value |
|---|---|
| Simulation time | 5000 s |
| Number of drones | 120 |
| Speed distribution | 3–15 m/s |
| Storage buffer | 2 GB |
| Transmission speed (bandwidth) | 5 Mbit/s |
| Communication range | 300 m |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Y.; Gan, Y.; Wu, H.; Wang, C.; Ma, M.; Xiong, C. GDM-DTM: A Group Decision-Making-Enabled Dynamic Trust Management Method for Malicious Node Detection in Low-Altitude UAV Networks. Sensors 2025, 25, 3982. https://doi.org/10.3390/s25133982
