Abstract

Recurrent neural networks have proven effective in time series prediction tasks such as weather forecasting. However, deploying them on resource-limited systems such as microcontroller units (MCUs) is difficult in terms of both model size and prediction stability over longer prediction windows. In this context, we propose a variant of the LSTM model, which we call SE-LSTM (Single Embedding LSTM), that uses embeddings to represent seasonality and latent patterns as vectors learned from variables such as temperature and humidity. The proposal is systematically compared in two stages. The first stage compares it against reference architectures such as CNN-LSTM, TCN, LMU, and TPA-LSTM. The second stage, which includes deployment, compares it against the CNN-LSTM, LSTM, and TCN networks. Metrics such as the MAE and MSE are used together with the network weight (model size), a crucial aspect for MCUs such as the ESP32 or Raspberry Pi Pico. An analysis of memory usage, energy consumption, and generalization across different regions is also included. The results show that embeddings reduce the network size without sacrificing performance, which is crucial for edge computing. This research is part of a larger project focused on improving agricultural monitoring systems.

1. Introduction

Weather prediction using recurrent neural networks such as Long Short-Term Memory (LSTM) has proven effective for learning temporal dependencies [1]; however, implementing these networks on Microcontroller Units (MCUs) presents challenges, including the model size and the stability of performance over longer prediction windows [2]. Although solutions such as TPA-LSTM [3] or the Legendre Memory Unit (LMU) [4] improve accuracy, their complexity makes them unfeasible for these devices. Combining AI with the IoT can help address this problem [5,6]. Other architectures, such as CNN-LSTM [7] and TCN [8], can be implemented on MCUs and are included in this study for comparison. This research prioritizes a fully local solution that performs inference on an MCU. This brings several advantages: privacy, since data are not transferred to the cloud; minimal latency, due to on-premises execution; efficient use of computational resources; and accessibility in environments with limited connectivity, as demonstrated in comparative studies of distributed architectures [9] and in model optimization solutions for constrained hardware [10]. This approach enables more autonomous and efficient solutions in environments with low connectivity or without a robust infrastructure. Processing data locally also minimizes dependence on third parties and takes advantage of the low energy consumption of MCUs.

In this article, we present an LSTM model with embedding layers, a technique that, as demonstrated in [11], allows the size of a neural network to be reduced without sacrificing its performance. This is similar to how embeddings are used in natural language processing (NLP) to represent semantic relationships between words [12]. In this application, the embeddings are learned as vectors during training and capture the relationships between seasons from the temperature and humidity series. To capture seasonality explicitly, each sample was assigned a season label, grouping the months into quarters according to the meteorological convention and taking into account the hemisphere in which each meteorological station is located. In the southern hemisphere, the distribution is December–February (summer), March–May (autumn), June–August (winter), and September–November (spring); in the northern hemisphere, it is reversed: December–February (winter), March–May (spring), June–August (summer), and September–November (autumn) [13]. This categorical variable was encoded with embeddings, allowing the network to learn latent representations of seasonality and its influence on climatic variables such as temperature and humidity. As in other sequential tasks, such as time series modeling or NLP, the categorical variable is represented by embedding vectors that capture relationships and patterns over time. Once training is complete, these vectors are fed as an input to the LSTM. As a result, the network reduces the number of parameters and the final model size while maintaining the accuracy of a larger architecture.
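The season label described above can be sketched as a small mapping. In this hedged Python sketch (function names are hypothetical), the integer index follows the month grouping given in Section 2 (December–February = 0, March–May = 1, and so on), and only the human-readable name depends on the hemisphere; treating latitude 0 as northern is an arbitrary assumption.

```python
# Meteorological season index from the calendar month (1-12).
# Index convention (Section 2): Dec-Feb -> 0, Mar-May -> 1, Jun-Aug -> 2, Sep-Nov -> 3.
# The index does not depend on the hemisphere; only the season name does.
def season_index(month: int) -> int:
    if not 1 <= month <= 12:
        raise ValueError(f"month must be in 1..12, got {month}")
    # month % 12 maps Dec -> 0, Jan -> 1, ..., Nov -> 11; integer division groups by quarter.
    return (month % 12) // 3


_SOUTH = ("summer", "autumn", "winter", "spring")
_NORTH = ("winter", "spring", "summer", "autumn")


def season_name(month: int, latitude: float) -> str:
    # Assumption: latitude 0 is treated as northern hemisphere.
    names = _SOUTH if latitude < 0 else _NORTH
    return names[season_index(month)]
```

For the station used in this study (latitude −16.4333), `season_name(7, -16.4333)` gives "winter", while the same month at a northern latitude gives "summer"; the network itself only ever sees the integer index.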

We performed three main comparisons, all evaluating the MAE, MSE, RMSE, MAPE, RSE, and R² metrics for the time windows t + 1, t + 3, and t + 6. All of the results shown in the tables are normalized to facilitate comparison between models. The first comparison measured how much the model size could be reduced without losing performance, relative to other networks such as LMU, TPA-LSTM, CNN-LSTM, TCN, and the standard LSTM. The second comparison focused on deploying these models on various MCU boards, also considering inference time and power consumption, which are important in real-world applications [14]. For the compatibility reasons explained in the results and implementation sections, TPA-LSTM and LMU were not included. Finally, the third comparison validated the effect of using embeddings in the LSTM by contrasting the base model with the proposed SE-LSTM model, which integrates these vectors, across 13 cities at different latitudes.
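For reference, the six metrics can be computed as in the following minimal sketch. MAE, MSE, RMSE and R² follow their standard definitions. The exact RSE definition used by the authors is not stated, so the sketch assumes the relative squared error (residual sum of squares divided by the total sum of squares), for which R² = 1 − RSE. MAPE is undefined for zero targets, which can occur after 0–1 normalization, so the sketch skips them; this is an assumption, not necessarily what the authors did.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE, MAPE (%), RSE and R^2 for two equal-length sequences."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE skips zero targets (possible with min-max normalized data) to avoid division by zero.
    nz = [(t, e) for t, e in zip(y_true, errors) if t != 0]
    mape = 100.0 * sum(abs(e / t) for t, e in nz) / len(nz) if nz else float("nan")
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    # Assumption: RSE is the relative squared error SS_res / SS_tot.
    rse = ss_res / ss_tot if ss_tot > 0 else float("nan")
    r2 = 1.0 - rse if ss_tot > 0 else float("nan")
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape, "RSE": rse, "R2": r2}
```

Because all inputs are on the normalized 0–1 scale, absolute errors such as the MAE are comparable across models and variables, while the MAPE can become very large for targets close to zero.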

The model produces consistent predictions across different hardware platforms, which supports its portability. All resources (dataset, code, and trained models) are available as indicated in the Data Availability Statement to facilitate their adoption in practical applications.

2. Materials and Methods

This study used temperature and humidity data obtained from NASA’s POWER [15] at latitude −16.4333 and longitude −71.5617, covering the period from January 2020 to March 2025 at an hourly sampling frequency, for a total of 45,864 data points. These were split into 80% for training, 15% for validation, and 5% for testing. Temperature and humidity were selected as the main variables because of their high availability and temporal continuity across geographic locations, which allows the model's performance to be compared in different contexts. Furthermore, missing values (marked as −999) are very rare in these variables, which simplified processing and preserved temporal continuity without affecting the model. Other meteorological variables, such as solar radiation and wind speed, were excluded because of their lower availability and continuity in the datasets used. Each sample contains the date and time, as well as the temperature and humidity values, which were normalized to the range 0–1. Missing values (−999), which occur on certain dates and are flagged as such by POWER NASA, were handled as follows: where −999 appears no more than twice consecutively, each missing value was replaced by the average of the immediately adjacent values, preserving the continuity of the time series without introducing distortions; otherwise, the affected data were eliminated.
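The gap-handling rule and the 0–1 scaling can be sketched as follows. This is an illustrative reconstruction under stated assumptions: runs of one or two −999 values are filled with the mean of the two neighbouring valid values; longer runs (and runs at either end of the series, which lack a neighbour) are marked `None` and the corresponding time steps are removed from all variables together; the 80/15/5 split is chronological; and the min/max are fitted on the training portion only. The text does not state the split order or which portion the min/max came from, and all function names are hypothetical.

```python
MISSING = -999.0  # POWER fill value for missing data


def fill_short_gaps(series, max_run=2, missing=MISSING):
    """Fill runs of at most `max_run` missing values with the mean of the two
    neighbouring valid values; longer runs, or runs touching either end of the
    series, are replaced by None so those time steps can be removed."""
    out = list(series)
    n, i = len(out), 0
    while i < n:
        if out[i] != missing:
            i += 1
            continue
        j = i
        while j < n and out[j] == missing:
            j += 1  # j ends one past the missing run
        if j - i <= max_run and i > 0 and j < n:
            fill = (out[i - 1] + out[j]) / 2.0
        else:
            fill = None
        for k in range(i, j):
            out[k] = fill
        i = j
    return out


def drop_incomplete_rows(*columns):
    """Keep only time steps at which every variable has a value."""
    keep = [i for i in range(len(columns[0]))
            if all(col[i] is not None for col in columns)]
    return [[col[i] for i in keep] for col in columns]


def chronological_split(rows, train=0.80, val=0.15):
    """80/15/5 split without shuffling, so temporal order is preserved."""
    n = len(rows)
    a = round(n * train)
    b = a + round(n * val)
    return rows[:a], rows[a:b], rows[b:]


def min_max_fit(train_values):
    lo, hi = min(train_values), max(train_values)
    if hi == lo:
        raise ValueError("a constant series cannot be min-max scaled")
    return lo, hi


def min_max_apply(values, lo, hi):
    # Values outside the training range fall slightly outside [0, 1].
    return [(v - lo) / (hi - lo) for v in values]
```

A typical order would be `fill_short_gaps` on each variable, `drop_incomplete_rows` on all of them together, `chronological_split`, then `min_max_fit` on the training part and `min_max_apply` on every part.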

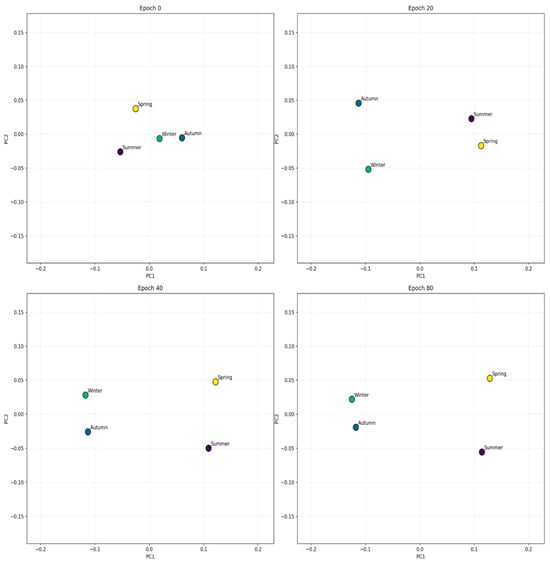

Because both temperature and humidity are related to the climatic seasons, the seasons were coded as an integer (0–3) and incorporated through a four-dimensional embedding layer. The coding groups the months into four seasons according to the meteorological convention: December–February (0), March–May (1), June–August (2), and September–November (3). This layer initializes its vectors randomly and, during training, adjusts them through backpropagation of the error, along with the rest of the network weights, to minimize the loss function. In this way, the model automatically learns to place seasons with similar weather patterns close to each other in the vector space, improving the LSTM's ability to capture temporal and seasonal relationships. The optimal number of embedding dimensions was determined by varying the parameters of the embedding layer and evaluating the model's overall performance.
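The behaviour of the embedding layer described above can be illustrated without a deep learning framework. The sketch below (all names hypothetical) shows that an embedding lookup is equivalent to multiplying a one-hot season vector by the 4×4 table, that a gradient step only changes the row of the season that was used, and how the season vector is appended to each of the 24 time steps. Plain SGD is used for brevity, whereas the paper trains with Adam, and the uniform initialization range is an assumption.

```python
import random

NUM_SEASONS, EMB_DIM = 4, 4


def init_table(rows=NUM_SEASONS, dim=EMB_DIM, seed=0):
    # Random initialization; the [-0.05, 0.05] range is an assumption.
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(rows)]


def lookup(table, season):
    # Forward pass of an embedding layer: return (a copy of) one row.
    return list(table[season])


def lookup_via_one_hot(table, season):
    # Same result written as one_hot(season) @ table.
    one_hot = [1.0 if r == season else 0.0 for r in range(len(table))]
    return [sum(one_hot[r] * table[r][c] for r in range(len(table)))
            for c in range(len(table[0]))]


def sgd_step(table, season, grad, lr=0.001):
    # With plain SGD, the gradient only reaches the row that was looked up,
    # so the vectors of the other seasons stay unchanged.
    table[season] = [w - lr * g for w, g in zip(table[season], grad)]


def concat_season(window, season_vec):
    # Append the same season vector to every time step: (24, 2) -> (24, 6).
    return [list(step) + list(season_vec) for step in window]
```

The one-hot view explains why the embedding is cheap: it behaves like a bias-free dense layer over a 4-element one-hot input, yet only 16 weights are stored.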

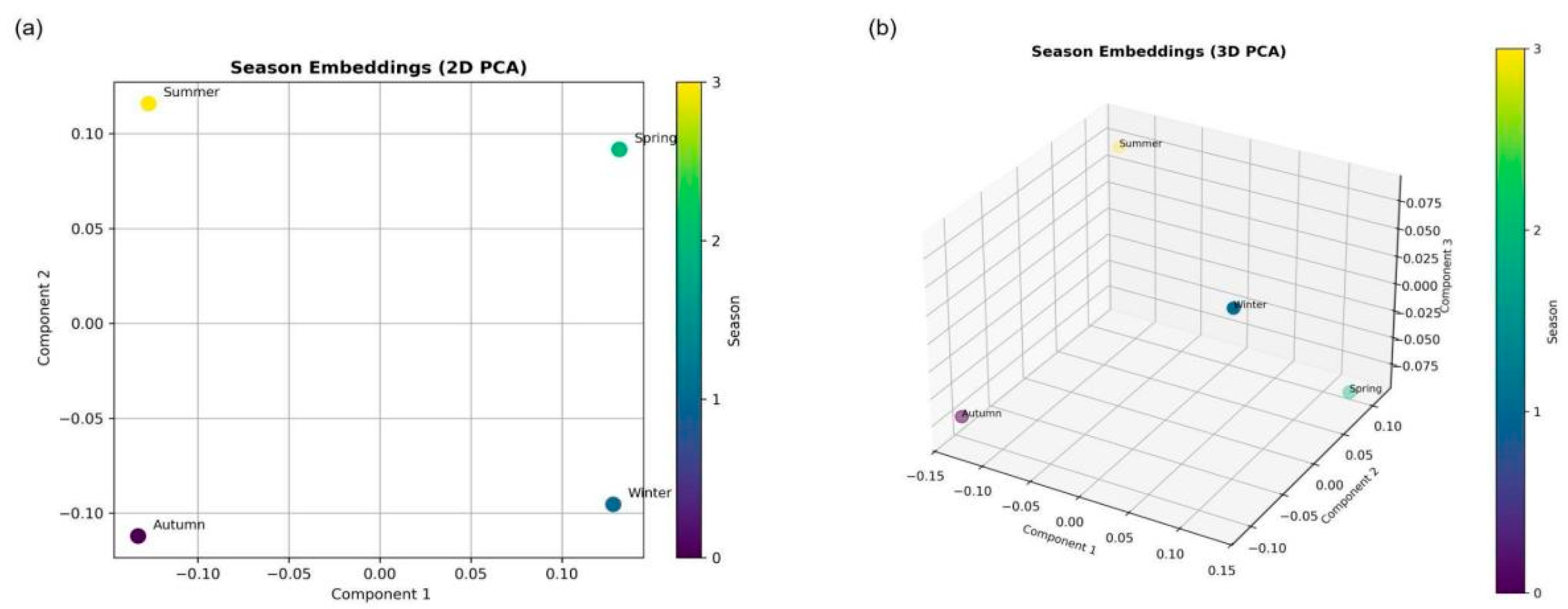

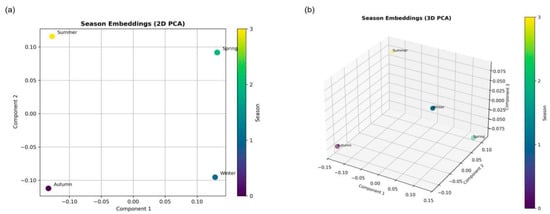

As illustrated in Figure 1, the relationships between the seasons are represented by vectors projected onto two and three dimensions:

Figure 1.

A PCA-based visualization of the 4D embeddings. (a) 2D projection. (b) 3D projection.

The arrangement of the points on a semicircle illustrates how the embedding vectors capture seasonal variations in the climate variables. Projected with PCA, these four-dimensional vectors reveal the relationships between seasons. Opposite seasons, such as summer and winter, tend to appear in opposite regions of the plane, because the embeddings have learned to represent their contrasting weather patterns (for example, opposite combinations of temperature and humidity). Spring and autumn, as transitional periods between these extremes, appear in the intermediate regions. This illustration shows how the model places seasons with similar climatic behaviors close to each other in the vector space.
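Figure 1 is a PCA projection of the four season vectors. As a hedged, dependency-free sketch (in practice a library routine such as scikit-learn's `PCA` would normally be used), the projection onto the top two principal components can be computed with power iteration and deflation. The input vectors in the test are synthetic; the real ones would come from the trained 4×4 embedding table.

```python
import math


def pca_project(vectors, k=2, iters=500):
    """Project row vectors onto their top-k principal components
    using power iteration with deflation on the sample covariance matrix."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    components = []
    for _ in range(k):
        vec = [1.0 / math.sqrt(d)] * d
        for _ in range(iters):
            nxt = [sum(cov[a][b] * vec[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in nxt))
            if norm == 0.0:
                break
            vec = [x / norm for x in nxt]
        # Rayleigh quotient gives the eigenvalue of the component just found.
        eigval = sum(vec[a] * sum(cov[a][b] * vec[b] for b in range(d)) for a in range(d))
        components.append(vec)
        # Deflation: remove this component so the next pass finds the following one.
        cov = [[cov[a][b] - eigval * vec[a] * vec[b] for b in range(d)] for a in range(d)]
    return [[sum(r[j] * c[j] for j in range(d)) for c in components] for r in centered]
```

Principal components are only defined up to sign, so a mirrored version of Figure 1 is an equally valid projection.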

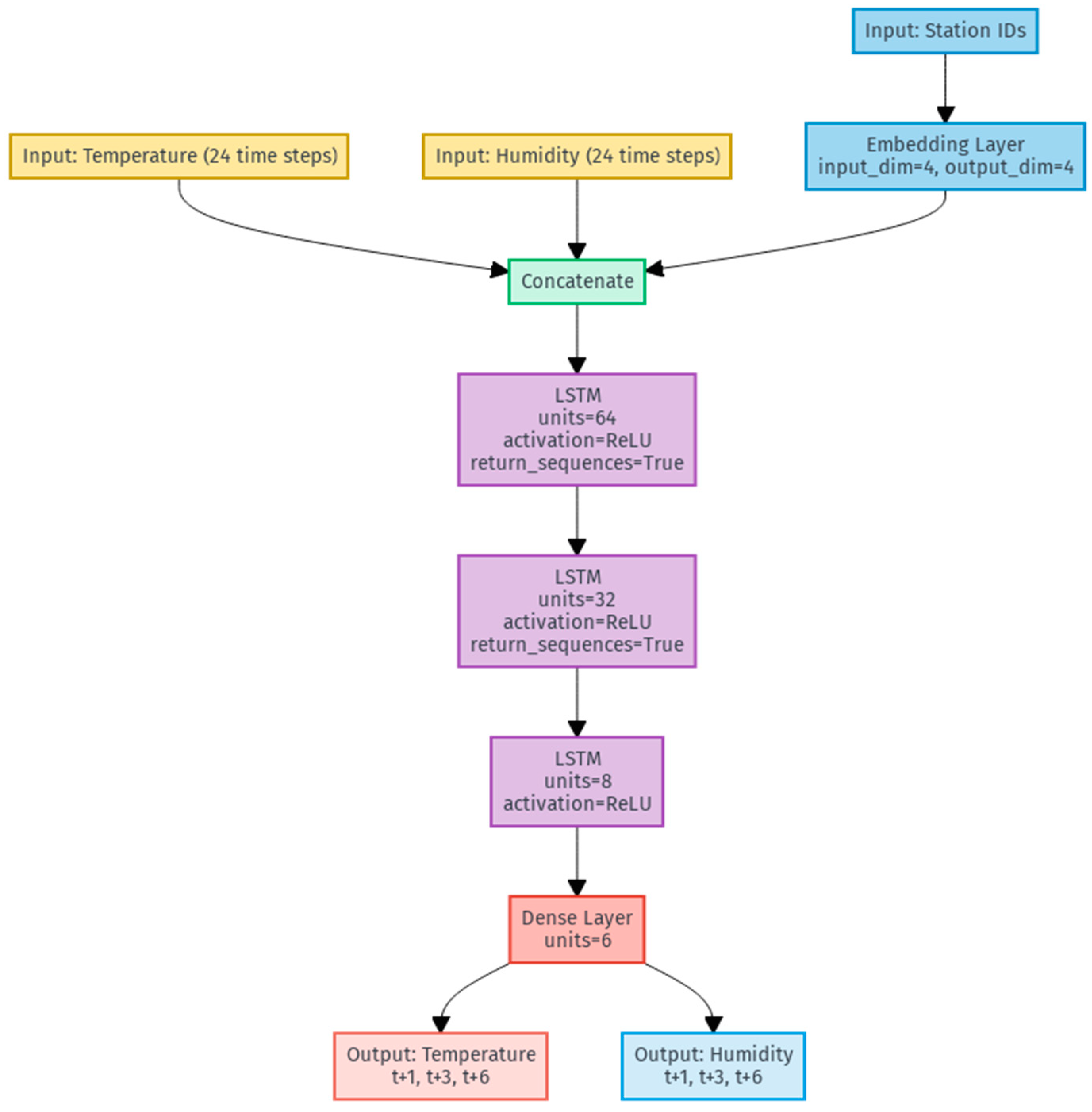

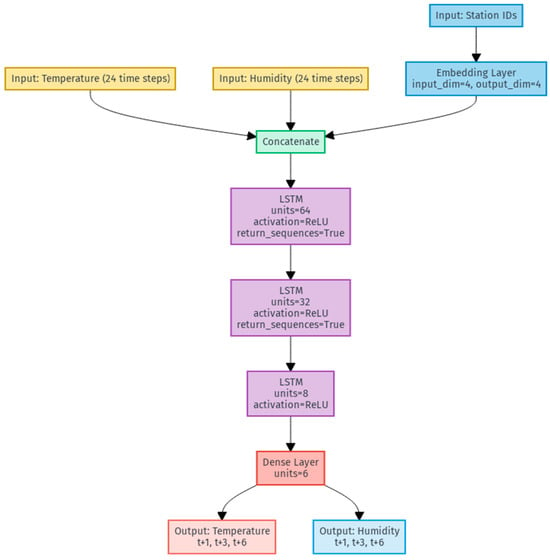

The architecture is a stacked LSTM network with three layers. The first layer has 64 units and uses return_sequences=True so that it outputs the full temporal sequence to the next layer. The second layer has 32 units and also uses return_sequences=True, and the third LSTM layer has 8 units. All of the LSTM layers use the ReLU activation function, which introduces nonlinearity. The output layer is a dense layer with 6 units and a linear activation, producing 3 temperature and 3 humidity predictions for the time windows t+1 h, t+3 h, and t+6 h. The model input consists of 24 samples of temperature and humidity, concatenated with the 4-dimensional season embedding learned during training.
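Since network weight is a key metric here, a quick sanity check is to count parameters. The sketch below applies the standard LSTM parameter formula used by Keras-style LSTM layers (four gates, each with input weights, recurrent weights and one bias vector). It assumes the season vector is concatenated at every time step, giving 6 input features (consistent with the (None, 24, 6) shape used later), and a 4×4 embedding table. It estimates raw float32 weight storage, not the size of a saved model file.

```python
def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, each with input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    return input_dim * units + units


def se_lstm_params(features=2, emb_dim=4, num_seasons=4, layers=(64, 32, 8), outputs=6):
    total = num_seasons * emb_dim        # embedding table
    in_dim = features + emb_dim          # temperature + humidity + season vector
    for units in layers:
        total += lstm_params(in_dim, units)
        in_dim = units                   # next layer consumes this layer's output
    total += dense_params(in_dim, outputs)  # linear output layer
    return total


params = se_lstm_params()
size_kib = params * 4 / 1024             # float32 = 4 bytes per weight
```

Under these assumptions the model has 31,974 parameters, about 125 KiB as float32; the first LSTM layer alone accounts for 18,176 of them, which is why its width dominates the memory budget.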

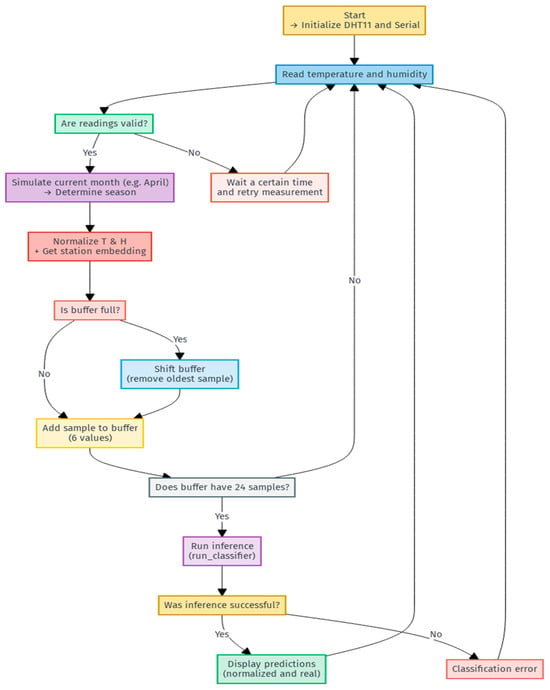

The flowchart in Figure 2 illustrates how the season embeddings are integrated with the LSTM network.

Figure 2.

A flowchart of the model.

The integration of embeddings with the LSTM network allows the model to learn the context of the seasons and the relationship between temperature and humidity over the following hours.

The model was trained for 115 epochs with the Adam optimizer (learning rate 0.001), a batch size of 64, and the MSE as the loss function. The hyperparameters were tuned progressively according to the behavior of the performance metrics on the test set, with the goal of achieving good performance.

Once training is complete, the model can predict future temperature and humidity in the aforementioned time windows. The learned embedding vectors are also obtained; their magnitudes can change from one training run to another, since a given run may give more weight to a certain variable during a certain season.

3. Results

This section is divided into four parts. The first part (the design stage) compares the performance of the proposed SE-LSTM model against other neural networks, namely CNN-LSTM, TCN, TPA-LSTM, LMU, and the standard LSTM, for the location described in Section 2. The second part analyzes the learning dynamics of the embeddings. The third part compares performance on various MCUs, and the fourth part validates the model in several cities around the world other than the one used in the first part.

3.1. Comparison at the Design Stage

The models compared were CNN-LSTM, TCN, LSTM, TPA-LSTM, LMU, and the proposed SE-LSTM model. The performance metrics used were the MAE, MSE, RMSE, MAPE, RSE, R², and the trained network weight. They were evaluated over three time horizons: t + 1 h, t + 3 h, and t + 6 h, where t is the time at which the previous 24 samples have been collected. An analysis of the variation in the metrics for each window and a summary of the overall performance are presented. The figures accompanying the tables show the model's predictions on the test data. All of the metrics were calculated on the test set for all of the networks analyzed.
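The windowing implied by these horizons can be sketched as follows. It assumes that t is the index of the last of the 24 input samples and that the six outputs are ordered as three temperature values followed by three humidity values; both the ordering and the function name are assumptions for illustration.

```python
HORIZONS = (1, 3, 6)  # t+1 h, t+3 h, t+6 h
LOOKBACK = 24         # previous 24 hourly samples


def make_windows(temp, hum, lookback=LOOKBACK, horizons=HORIZONS):
    """Build (input, target) pairs from two aligned hourly series.
    Input: `lookback` rows of [temp, hum].
    Target: [T(t+1), T(t+3), T(t+6), H(t+1), H(t+3), H(t+6)],
    where t is the index of the last input sample."""
    if len(temp) != len(hum):
        raise ValueError("series must have equal length")
    max_h = max(horizons)
    pairs = []
    # The last window must still have a target max_h steps ahead.
    for start in range(len(temp) - lookback - max_h + 1):
        t = start + lookback - 1
        x = [[temp[i], hum[i]] for i in range(start, t + 1)]
        y = [temp[t + h] for h in horizons] + [hum[t + h] for h in horizons]
        pairs.append((x, y))
    return pairs
```

A series of N hourly samples yields N − 24 − 6 + 1 windows, so the longest horizon determines how many samples at the end of each split can be used as inputs.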

3.1.1. First Window (t + 1)

In the first time window, the TPA-LSTM model shows the lowest errors (MSE, MAE, and RMSE) and a very high R², indicating its accuracy in nowcasting.

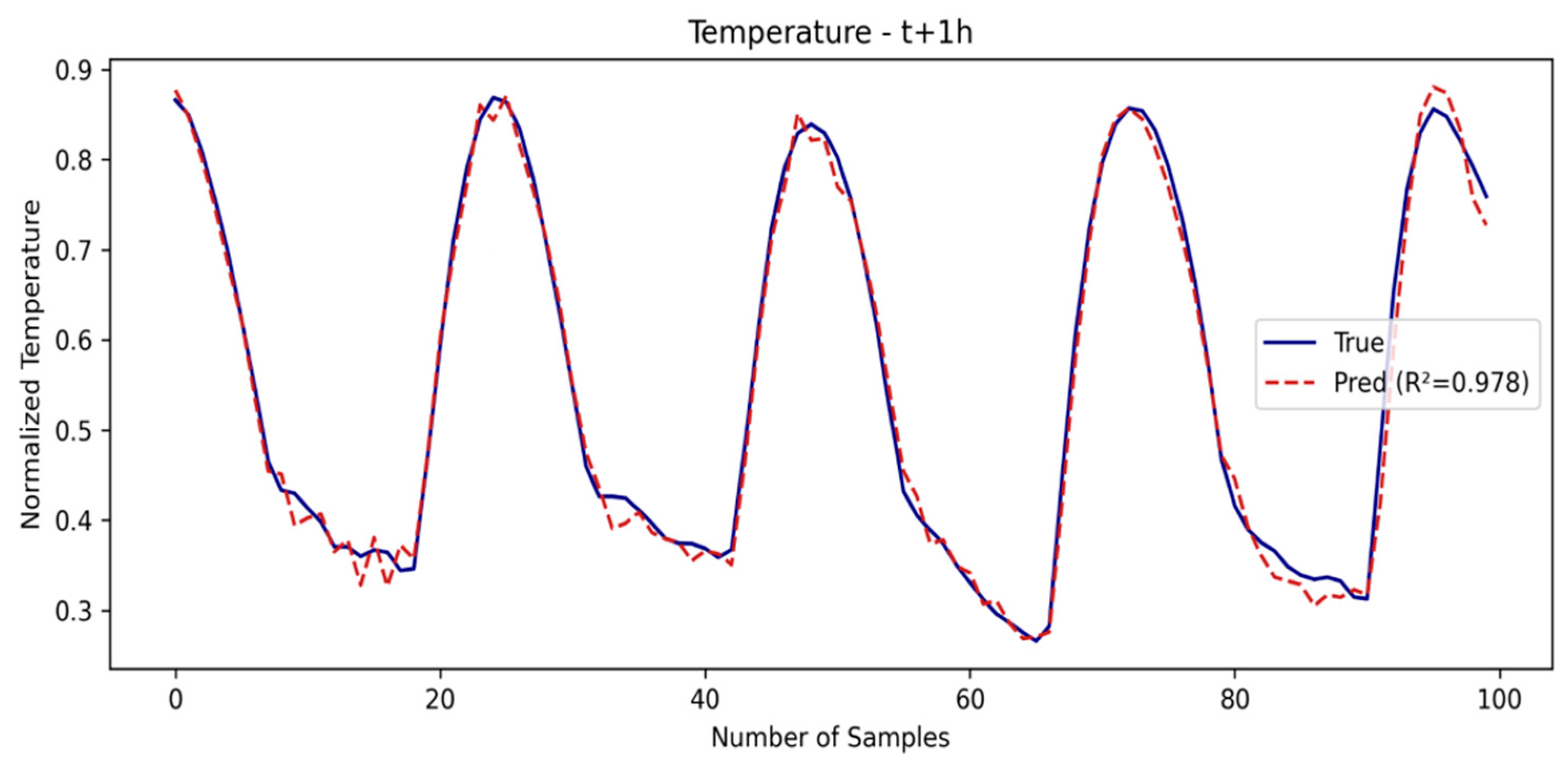

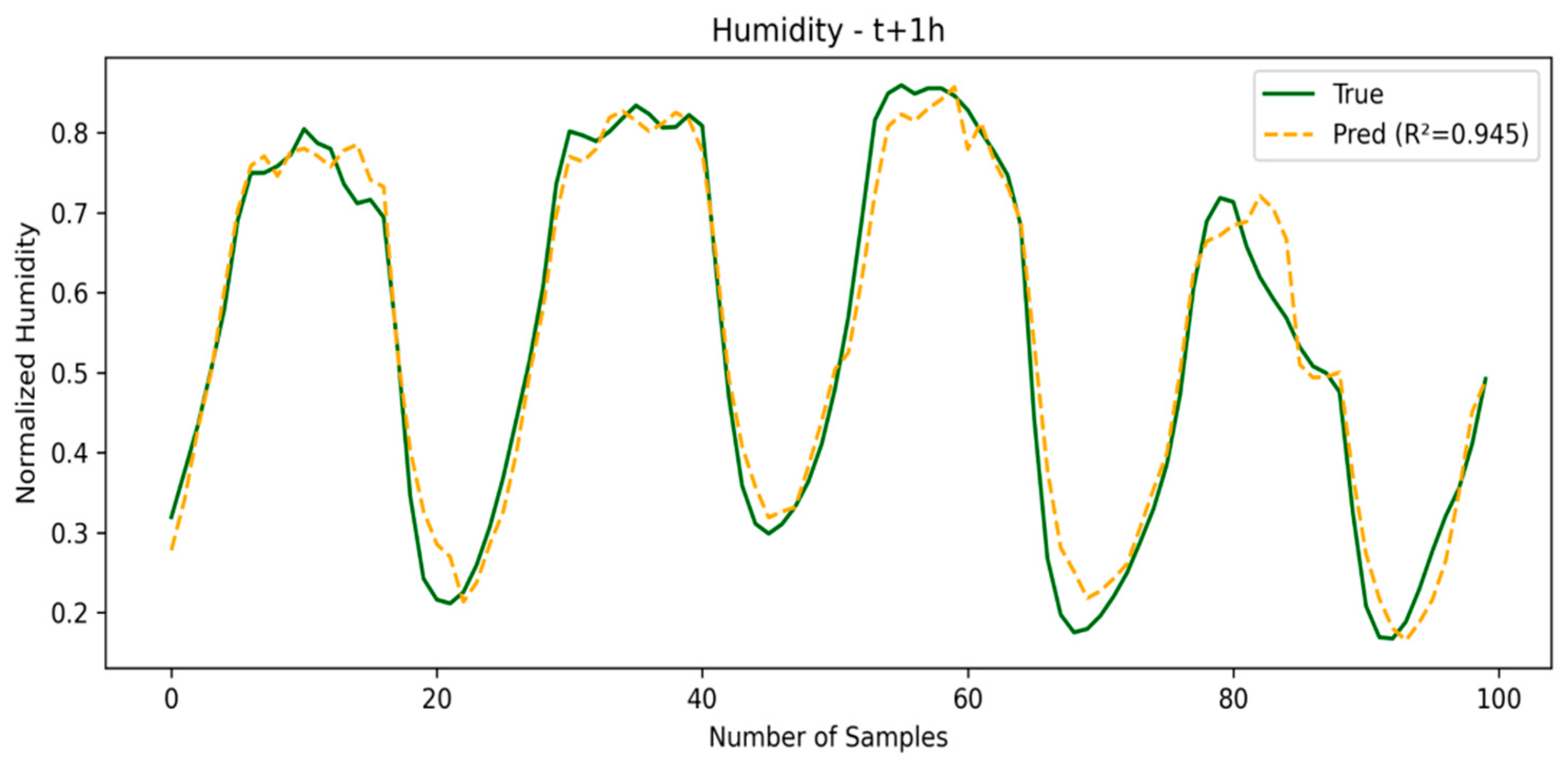

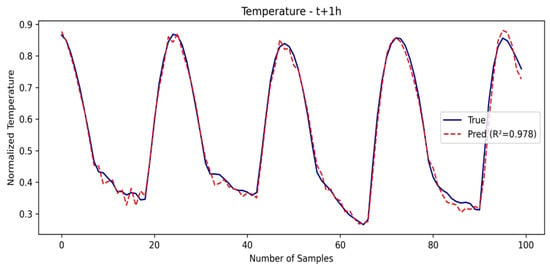

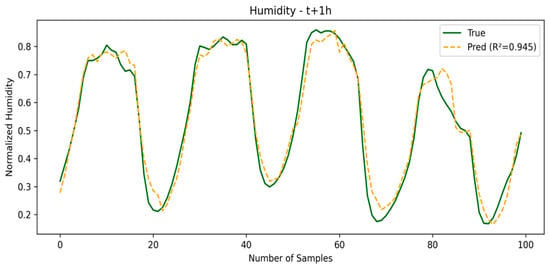

The SE-LSTM model also performs well, with competitive metrics and an R² of 0.9781 for temperature, indicating that the season embeddings help capture short-term patterns. The SE-LSTM predictions for temperature and humidity are shown in Figures 3 and 4, respectively, and the performance metrics are summarized in Table 1.

Figure 3.

Temperature prediction in the first window.

Figure 4.

Humidity prediction in the first window.

Table 1.

First time window (t + 1).

3.1.2. Second Window (t + 3)

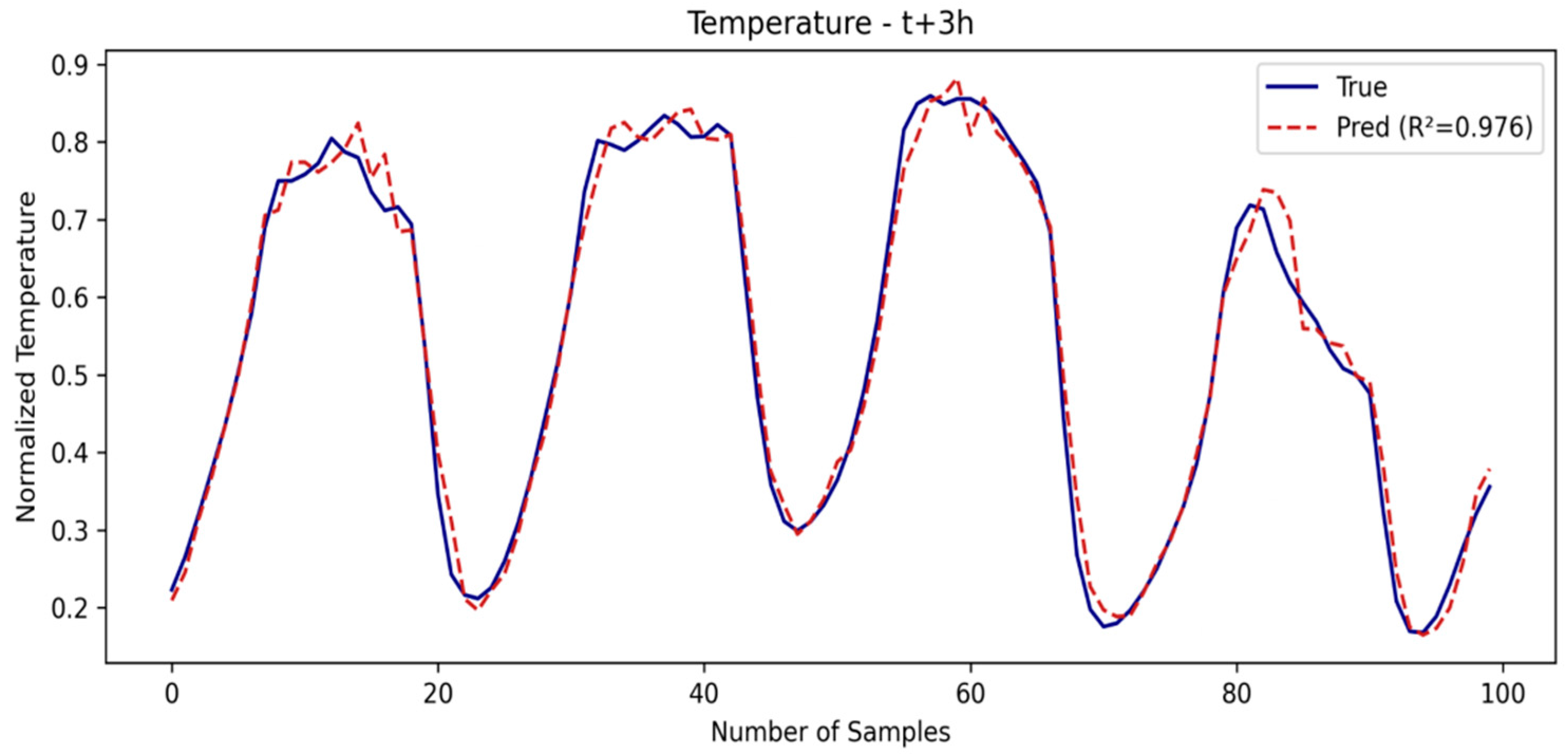

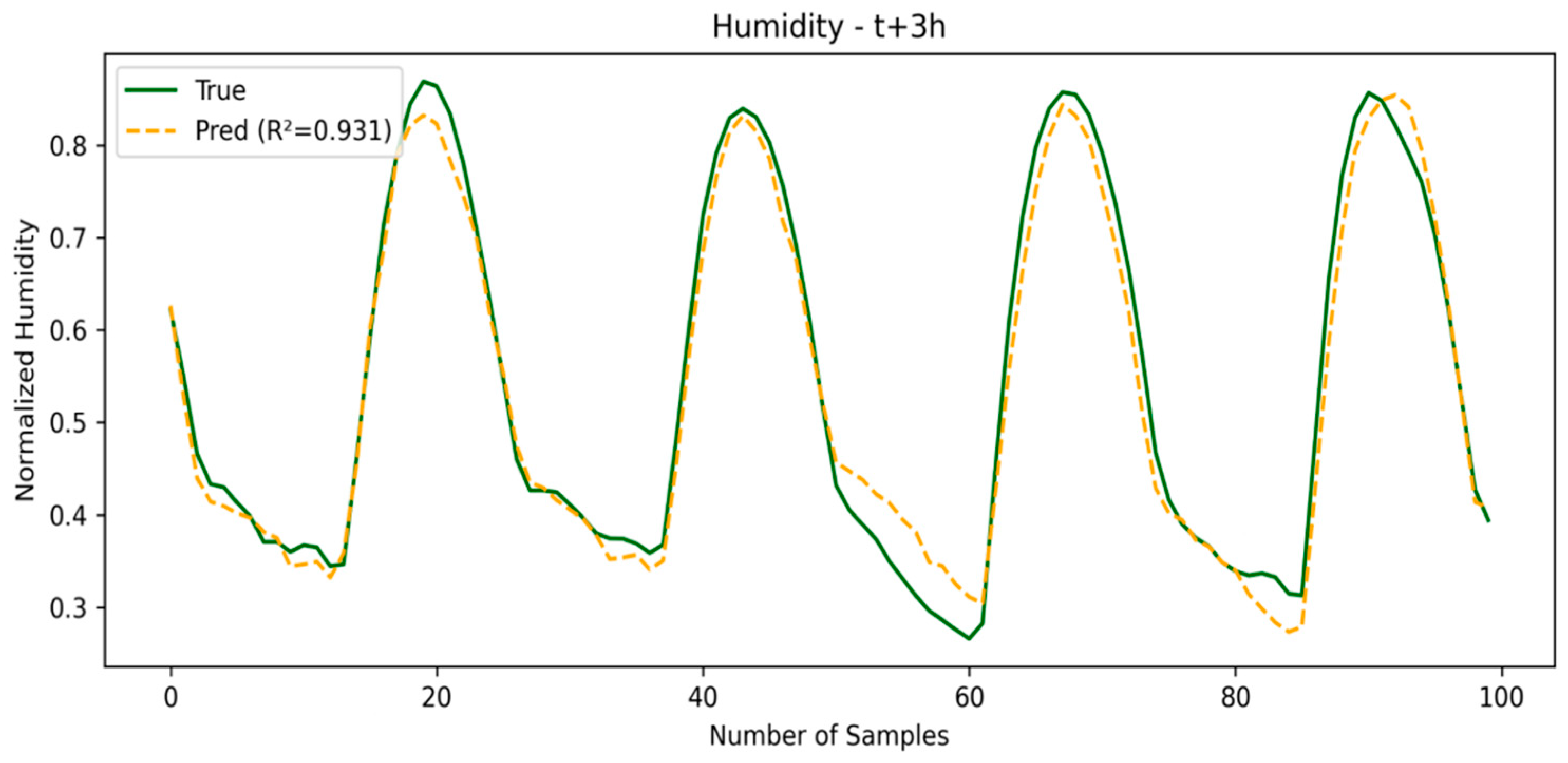

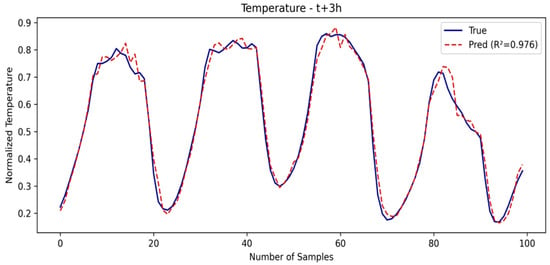

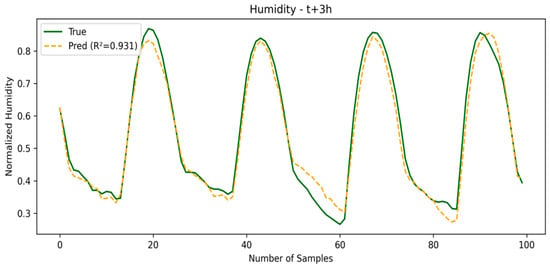

In this window, the errors increase for all of the models. SE-LSTM remains consistent, whereas the other models show greater performance degradation. This suggests that the embeddings in SE-LSTM help maintain stability in medium-term predictions, particularly for temperature. The SE-LSTM predictions for temperature and humidity are shown in Figures 5 and 6, respectively, and the performance metrics are summarized in Table 2.

Figure 5.

Temperature prediction in the second window.

Figure 6.

Humidity prediction in the second window.

Table 2.

Second time window (t + 3).

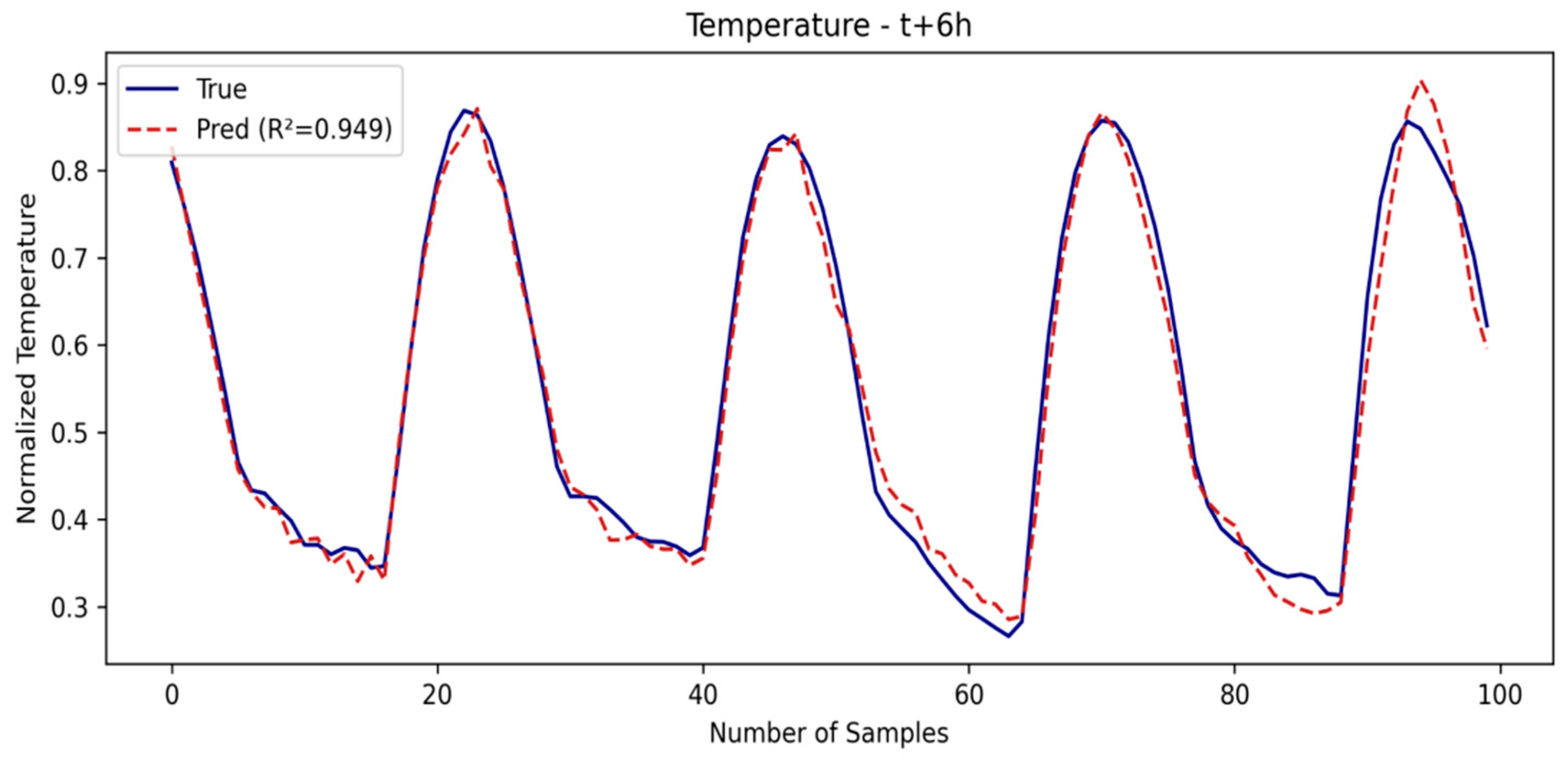

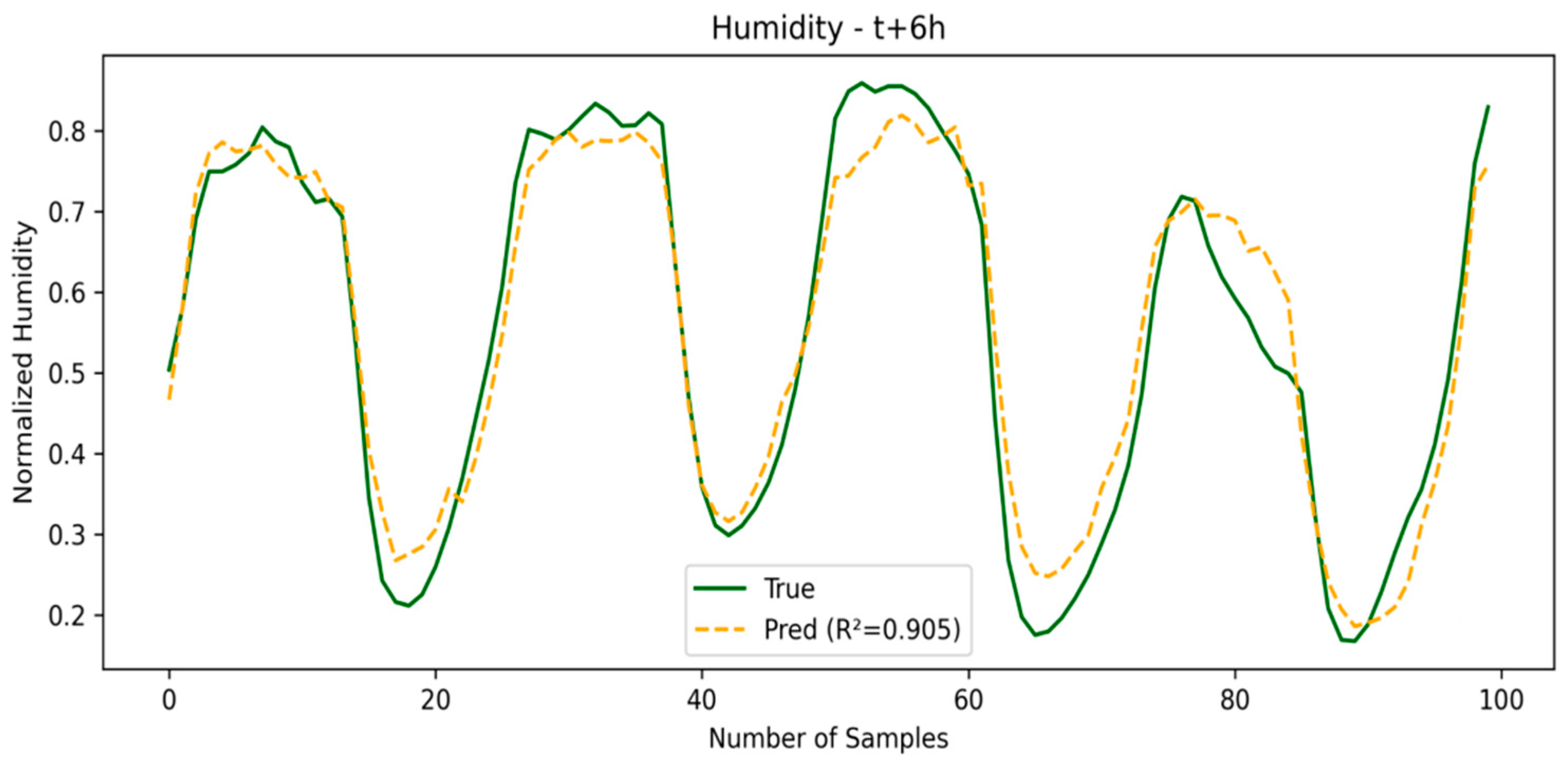

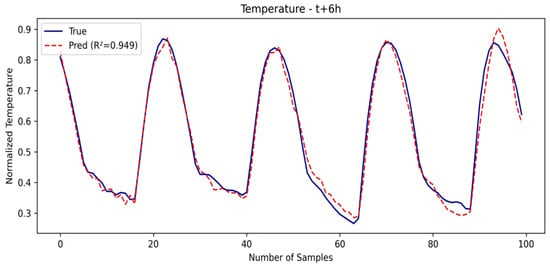

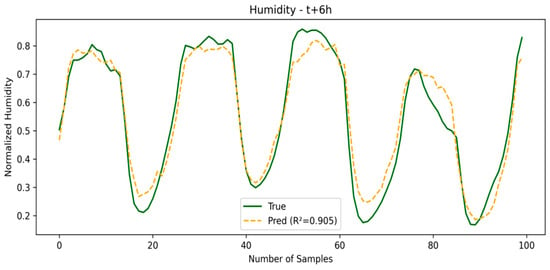

3.1.3. Third Window (t + 6)

As the prediction window grows, models such as TPA-LSTM and TCN offer a good balance between accuracy and generalization, whereas LSTM and CNN-LSTM show a more noticeable performance drop. Overall, SE-LSTM proves to be the model most resilient to degradation under high temporal uncertainty. The SE-LSTM predictions for temperature and humidity are shown in Figures 7 and 8, respectively, and the performance metrics are summarized in Table 3.

Figure 7.

Temperature prediction in the third window.

Figure 8.

Humidity prediction in the third window.

Table 3.

Third time window (t + 6).

As seen in Tables 1–4, the use of embeddings in SE-LSTM aims at an optimal balance between accuracy and efficiency, whereas TPA-LSTM and LSTM aim to maximize performance at the cost of greater complexity (more layers or neurons), which explains their larger weights. Overall, for this specific application, SE-LSTM slightly outperforms LSTM while having a lower weight, and outperforms LMU at a similar weight, making it more suitable for MCU applications. Models such as TCN and CNN-LSTM show competitive performance but with an intermediate weight.

Table 4.

Overall performance.

3.2. Learning Dynamics of the Embeddings

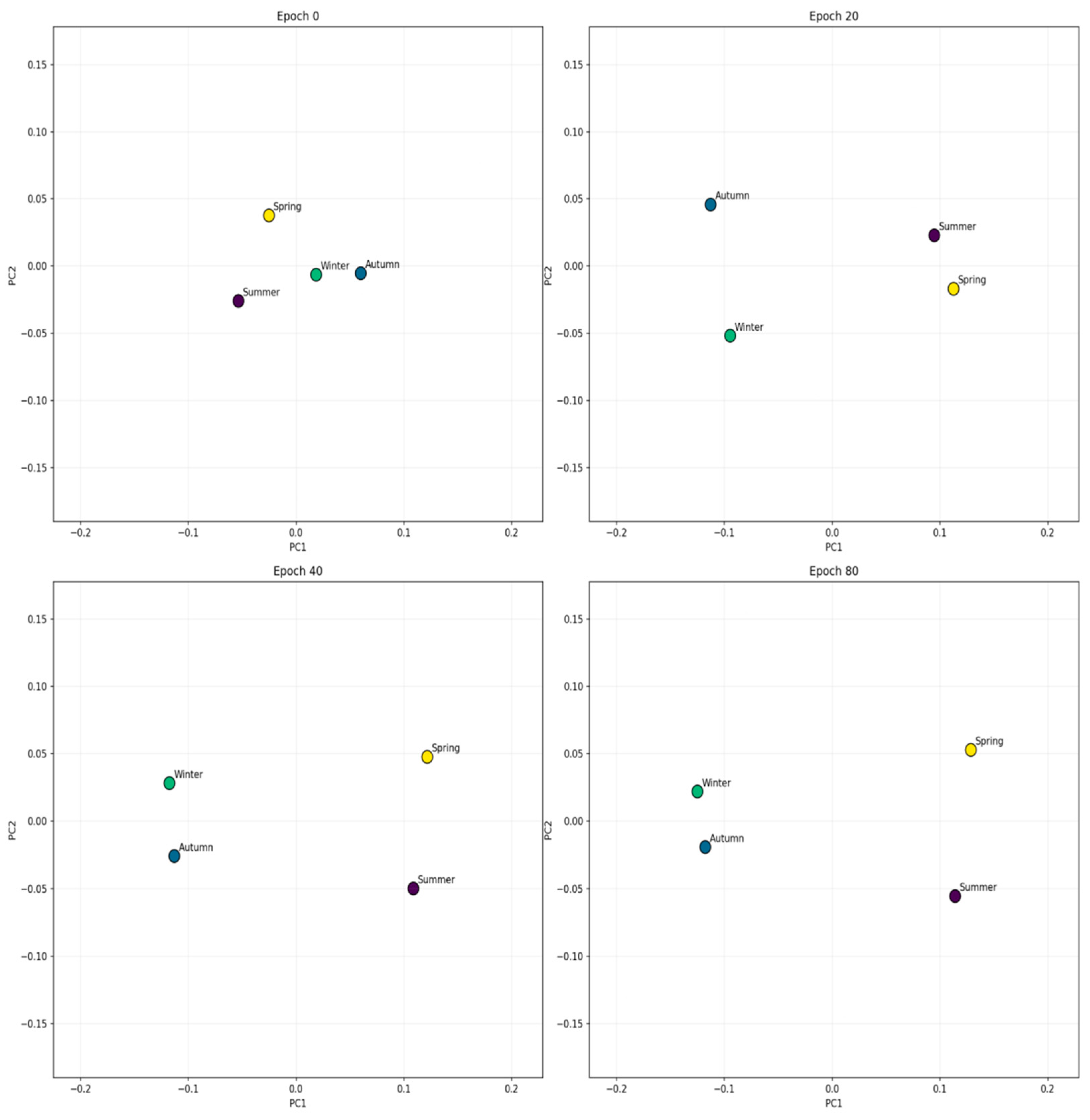

In the methodology section, it was explained that each season has an embedding vector that is adjusted during training. To verify that these vectors learn useful representations, we visualized how they change over the epochs. As seen in Figure 9, they progressively take shape between epochs 0 and 80, which shows how the model organizes its internal representation space as it trains.
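One hypothetical way to quantify what Figure 9 shows is to record the 4×4 embedding table at the end of every epoch (for example from a Keras `LambdaCallback(on_epoch_end=...)`, an assumption about tooling) and measure how far each season vector moves between consecutive epochs. The sketch below computes those displacements and the first epoch after which all vectors stay within a tolerance; the tolerance value is arbitrary.

```python
import math


def displacement_per_epoch(snapshots):
    """snapshots[e][s] is the vector of season s recorded after epoch e.
    Returns one list per consecutive pair of epochs with the Euclidean
    distance each season vector moved."""
    return [[math.dist(p, c) for p, c in zip(prev, curr)]
            for prev, curr in zip(snapshots, snapshots[1:])]


def first_stable_epoch(snapshots, tol=1e-3):
    """First epoch index from which no season vector moves more than `tol`
    in any later epoch; None if the vectors never settle."""
    moves = displacement_per_epoch(snapshots)
    for e in range(len(moves)):
        if all(max(step) <= tol for step in moves[e:]):
            return e
    return None
```

Plotting the displacements per season over the epochs gives a compact numerical counterpart to the trajectories in Figure 9.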

Figure 9.

Evolution of embedding vectors during training.

3.3. Comparison in MCUs

The LMU and TPA-LSTM networks cannot be converted to TFLite because they rely on advanced operations; therefore, this section compares the performance of the CNN-LSTM, LSTM, TCN, and SE-LSTM networks. The embedding layer is likewise not available in the TFLite deployment, but it can be emulated by supplying the learned vectors (obtained during training from the relationships between temperature and humidity) as an additional input. These vectors vary in magnitude between training runs; Table 5 shows the vectors obtained in one training session.

Table 5.

Vectors obtained after training.

The implementation is then as follows: 6 features (temperature, humidity, and the 4-dimensional season vector) over 24 time steps, i.e., an input of shape (None, 24, 6), which is finally flattened to an input of shape (None, 144).
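On the MCU, the emulated embedding amounts to building the 144-value vector by hand. In the following sketch, the table values are placeholders, not the vectors of Table 5. The feature order [temperature, humidity, e0..e3] with row-by-row flattening (the row-major layout a Keras `Flatten` typically produces) is an assumption that must match whatever order was used during training; the function name is hypothetical.

```python
# Hypothetical 4x4 season table standing in for the learned vectors of Table 5
# (the real values change from one training run to another).
SEASON_TABLE = [
    [0.12, -0.30, 0.05, 0.40],
    [-0.08, 0.22, -0.15, 0.10],
    [-0.35, 0.18, 0.27, -0.20],
    [0.05, -0.12, -0.02, 0.31],
]
LOOKBACK, N_FEATURES = 24, 6


def build_model_input(temps, hums, season, table=SEASON_TABLE):
    """Emulate the embedding layer: look up the season row, repeat it at every
    time step, and flatten the (24, 6) matrix row by row into 144 values."""
    if len(temps) != LOOKBACK or len(hums) != LOOKBACK:
        raise ValueError("expected 24 normalized samples per variable")
    vec = table[season]
    flat = []
    for t, h in zip(temps, hums):
        flat.extend([t, h, *vec])  # [T, H, e0, e1, e2, e3] for this hour
    return flat
```

Because the table is a constant after training, the lookup costs only 16 stored floats, and the resulting vector can be passed unchanged to the TFLite interpreter's input tensor.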

The architecture was also modified to a single LSTM layer with the ReLU activation function, followed by two dense layers with 128 and 36 neurons, respectively, both with ReLU activation, and an output layer with 6 neurons and a linear activation function, one per prediction time and variable. The batch size was 32, training ran for 80 epochs, and the remaining settings were unchanged. This configuration was applied to both the SE-LSTM and LSTM models. As Table 6 shows, using embeddings improves performance while the network weight remains the same.

Table 6.

Performance by prediction window.

3.3.1. Edge Impulse

Several environments can be used to deploy the model to a board; we chose the Edge Impulse platform [16] because it bundles the necessary libraries into a single downloadable .zip file. TensorFlow Lite was used for compilation, and, as shown in Table 7, the inference time is around 55 ms on an ESP32-S3, 671 ms on a Raspberry Pi Pico, and 74 ms on an ESP32. All inference times were measured with the same input data, a vector of size 144. To estimate the electrical charge consumed per inference (µAh), a Keweisi KWS-V20 USB tester was used while inferences ran continuously, without delay, for 20 min. The total accumulated charge was then divided by the number of inferences performed in that period, which was calculated from the average inference time of each device.
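The per-inference charge estimate described above reduces to a short calculation. The sketch assumes back-to-back inferences, so the number of runs is the test duration divided by the average inference time; the 600 µAh total used in the test is a made-up value, not a measurement from Table 7. A USB tester measures the whole board, so the result also includes idle and peripheral consumption, not only the model.

```python
def charge_per_inference_uah(total_charge_uah, duration_min, inference_ms):
    """Average charge per inference when inferences run back-to-back for
    `duration_min` minutes; returns (charge per inference, inference count)."""
    if inference_ms <= 0:
        raise ValueError("inference time must be positive")
    n_inferences = duration_min * 60_000 / inference_ms  # minutes -> milliseconds
    return total_charge_uah / n_inferences, n_inferences
```

For example, at about 55 ms per inference (ESP32-S3), 20 min of continuous operation corresponds to roughly 21,818 inferences, so the accumulated charge is spread over that many runs.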

Table 7.

Inference time and charge consumption per inference of various MCUs with SE-LSTM, TCN, CNN-LSTM, and LSTM.

3.3.2. Implementation

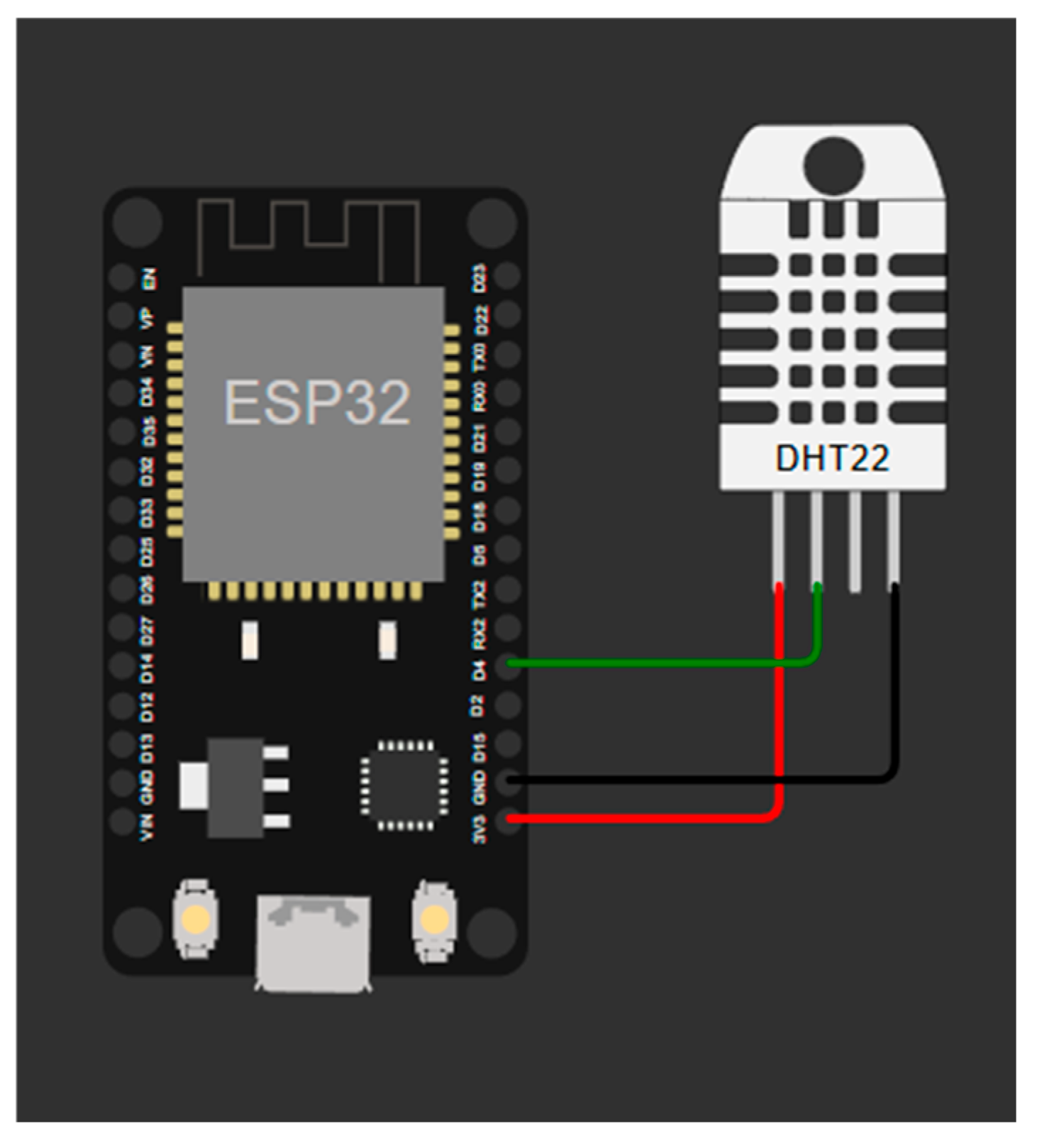

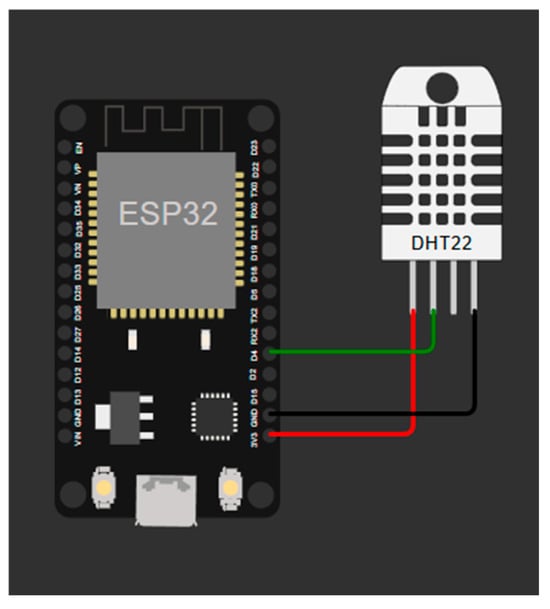

For the physical implementation, some aspects must be considered, such as how to obtain the month or season in which the inference is made, since it is an additional input to the network. To obtain this input, the ESP32 can retrieve the date through a local Wi-Fi connection, read it from an RTC module, or track elapsed time with the millis() function; alternatively, the embedding value can simply be fixed to a specific month. The connection of the DHT22 sensor to the ESP32 is shown in Figure 10.
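If the date is tracked with millis(), the counter's wrap-around has to be handled. On Arduino-style cores, millis() returns an unsigned 32-bit millisecond count that wraps after about 49.7 days, so a device running for a whole season cannot rely on the raw value. The Python sketch below (class name hypothetical) mirrors the usual unsigned-subtraction idiom by accumulating elapsed time modulo 2^32 from a known start date. Clock drift is ignored, and the resulting month would then be mapped to the season index using the grouping given in Section 2.

```python
from datetime import datetime, timedelta

MILLIS_WRAP = 2 ** 32  # millis() wraps after 2^32 ms (about 49.7 days)


class MonthTracker:
    """Derive the current month from a known start date plus millis() readings."""

    def __init__(self, start: datetime, first_millis: int = 0):
        self.start = start
        self.last_millis = first_millis
        self.elapsed_ms = 0

    def update(self, now_millis: int) -> int:
        # Difference modulo 2^32, like unsigned subtraction in C: correct across
        # one wrap-around as long as update() runs at least every ~49.7 days.
        self.elapsed_ms += (now_millis - self.last_millis) % MILLIS_WRAP
        self.last_millis = now_millis
        return (self.start + timedelta(milliseconds=self.elapsed_ms)).month
```

Calling `update()` once per hourly sample is more than enough to stay within the wrap-around limit; an RTC module or a Wi-Fi time source avoids the drift that this approach accumulates.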

Figure 10.

Connection of DHT22 sensor to ESP32 GPIO pin 4.

Given the same data, the network behaves exactly as in simulation; that is, a 144-value input vector produces the same output whether the inference runs on a computer or on an MCU. For correct operation, an algorithm is required to order the incoming data and update the input at the right times.

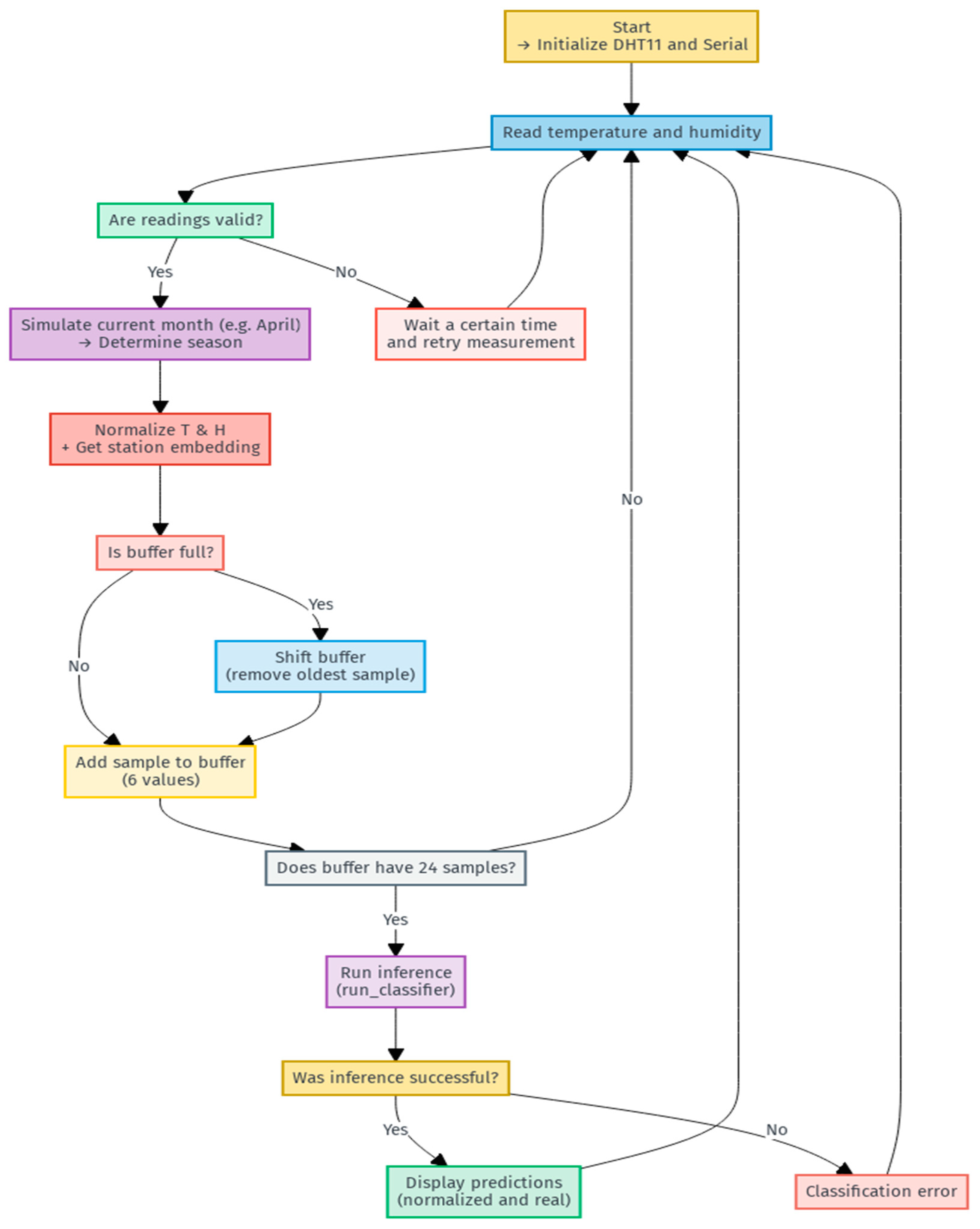

As shown in the flowchart in Figure 11, with a default month set, the input is updated every hour using a circular memory buffer. The number of buffers can be increased to obtain predictions in near real time, for example every minute or every 10, 15, or 20 min, where each buffer (independent of the others) is a separate input to the network; however, the available SRAM must be taken into account. Each buffer occupies 576 bytes (144 float values of 4 bytes each), so six active buffers consume 3456 bytes of RAM for the buffers alone. Once a buffer is full, it produces one output each time it is updated with a new value, so the system requires a 24 h calibration period only at startup before it begins predicting correctly.
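The buffer logic of Figure 11 can be sketched with a fixed-length queue. In this hedged Python version, `collections.deque(maxlen=24)` stands in for the static float array and head index that would typically be used in C on the MCU; each row holds the six features [T, H, e0..e3], so a full buffer flattens to the 144 values (576 bytes as float32) quoted above. Class and function names are hypothetical.

```python
from collections import deque

LOOKBACK, N_FEATURES, BYTES_PER_FLOAT = 24, 6, 4


class HourlyWindow:
    """Ring buffer holding the latest 24 rows of [T, H, e0, e1, e2, e3]."""

    def __init__(self, lookback=LOOKBACK):
        self.rows = deque(maxlen=lookback)  # appending to a full deque drops the oldest row

    def push(self, row):
        if len(row) != N_FEATURES:
            raise ValueError("each row must have 6 features")
        self.rows.append(list(row))

    def ready(self) -> bool:
        # True only after the initial 24 h calibration period (24 hourly rows).
        return len(self.rows) == self.rows.maxlen

    def as_input(self):
        # Oldest to newest, flattened to the 144 values fed to the model.
        return [v for row in self.rows for v in row]


def buffer_bytes(n_buffers, lookback=LOOKBACK):
    # float32 storage for the buffers alone (no other program state).
    return n_buffers * lookback * N_FEATURES * BYTES_PER_FLOAT
```

Running several `HourlyWindow` instances fed at staggered times gives more frequent predictions at a cost of 576 bytes each, matching the 3456 bytes quoted for six buffers.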

Figure 11.

Operation flow for real application.

3.4. Robustness Validation and Geographic Generalization

To evaluate the generalization capability of the proposed model, a geographic validation was performed using data from cities around the world, selected to represent a wide variety of climates and latitudes. In this evaluation, the SE-LSTM model was compared against a standard LSTM network using the RMSE and the coefficient of determination R² for temperature and humidity.

The results are summarized in Table 8. For temperature, SE-LSTM generally performs better, with a lower RMSE and a higher R² in most cities. For humidity, however, the performance is more variable: SE-LSTM achieves lower errors in some cases, while the classic LSTM achieves better R² values in several cities.

Table 8.

A comparison of the performance of the SE-LSTM and LSTM models in the prediction of temperature and humidity in different cities around the world.

4. Discussion

For each state-of-the-art architecture, as well as for the proposed model, the network parameters were tuned to achieve the best performance in this application, using the same dataset and test data so that the variation in overall performance between the models could be assessed.

It was observed that the fixed embedding approach limits the adaptability to extreme weather events (e.g., El Niño), so it would be desirable to explore online learning mechanisms that adjust the vectors during the operation.

This network could be optimized further to achieve higher performance, but any such optimization is constrained by the requirement to run on low-power embedded systems. As described in the implementation, six circular buffers consume approximately 3.4 KB of SRAM, an amount that is manageable on an MCU.

Although TPA-LSTM demonstrated better performance in the design phase, its exclusion from the embedded evaluation was due to its incompatibility with lightweight deployment frameworks, such as TensorFlow Lite, used in edge computing environments. This technical limitation reinforces the need for optimized architectures such as SE-LSTM, which maintain good predictive performance while meeting the local execution, energy efficiency, and low latency requirements of embedded systems.

Future work could test variables other than temperature and humidity, such as pressure, wind direction, and wind speed. The approach could also be extended to other fields that require predicting a single variable or multiple variables per model, with local execution or short inference times.

However, the model performs best in conditions similar to those of its training data; moving to a greenhouse or a very different biome requires retraining or readjusting the embeddings.

5. Conclusions

This research showed that embeddings in LSTM networks can play a key role in improving weather prediction on systems such as MCUs, reducing the network size without losing performance. This can be vital for fields such as precision agriculture or resource management, where latency, privacy, and energy efficiency are key considerations.

Although improvements in accuracy were observed, more complex models such as TPA-LSTM can offer even better performance. However, the choice of model must also take into account the device on which inference will be performed and the environment to be monitored. This solution is suited to remote scenarios such as rural, agricultural, or low-connectivity areas.

In tests on MCUs, SE-LSTM ran with inference times between 55 ms and 671 ms, and its power consumption was lower than that of other, lower-precision networks.

In short, while this study demonstrates progress in weather prediction, it opens the door to new research that could not only improve predictions but also be applied in other areas, thanks to edge computing and local data processing. This makes it a promising tool for a variety of future applications.

Author Contributions

Conceptualization, J.P.P.M.Y.; methodology, J.P.P.M.Y.; software, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; validation, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; formal analysis, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; investigation, J.P.P.M.Y.; resources, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; data curation, J.P.P.M.Y.; writing—original draft preparation, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; writing—review and editing, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; visualization, J.P.P.M.Y., D.J.C.Q., G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; supervision, G.A.E.E., M.A.V.S., D.D.Y.A.C. and A.O.S.; project administration, D.J.C.Q., G.A.E.E., M.A.V.S. and D.D.Y.A.C.; funding acquisition, D.J.C.Q., G.A.E.E., D.D.Y.A.C. and A.O.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Universidad Nacional de San Agustín de Arequipa (UNSA) through UNSA INVESTIGA (Contract N° PI-01-2024-UNSA).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All resources used in this research (dataset, code, and trained models) are available in the GitHub repository and Google Drive: https://github.com/JhanSudoAPT/SE-LSTM-MCU (accessed on 9 May 2025); https://drive.google.com/drive/folders/1NegTTShIXsTYjQVaiSDWSIg_QDC5_z5k?usp (accessed on 9 May 2025).

Acknowledgments

We would like to thank the National University of San Agustín de Arequipa.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to resolve spelling and grammatical errors. This change does not affect the scientific content of the article.

Abbreviations

| Abbreviation | Definition |
|---|---|
| LSTM | Long Short-Term Memory |
| MCUs | Microcontroller Units |
| RTC | Real-Time Clock |
| SE-LSTM | Seasonal Embedding Long Short-Term Memory |
| TPA-LSTM | Temporal Pattern Attention Long Short-Term Memory |
| LMU | Legendre Memory Unit |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |
| RSE | Residual Sum of Squares Error |
| R² | Coefficient of Determination |
| IoT | Internet of Things |
| PCA | Principal Component Analysis |
| GitHub | Collaborative development platform |
| NASA | National Aeronautics and Space Administration |
| POWER | NASA database with solar energy and meteorological data |
| RAM | Random Access Memory |
| µAh | Microampere-hour |

References

1. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

2. Codeluppi, G.; Davoli, L.; Ferrari, G. Forecasting air temperature on edge devices with embedded AI. Sensors 2021, 21, 3973.

3. Guo, Z.; Feng, L. Multi-step prediction of greenhouse temperature and humidity based on temporal position attention LSTM. Stoch. Environ. Res. Risk Assess. 2024, 38, 4907–4934.

4. Voelker, A.R.; Kajić, I.; Eliasmith, C. Legendre memory units: Continuous-time representation in recurrent neural networks. Adv. Neural Inf. Process. Syst. 2019, 32, 15544–15553.

5. Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

6. Srivastava, A.; Das, D.K. A comprehensive review on the application of Internet of Things (IoT) in smart agriculture. Wirel. Pers. Commun. 2022, 122, 1807–1837.

7. Elmaz, F.; Eyckerman, R.; Casteels, W.; Latré, S.; Hellinckx, P. CNN-LSTM architecture for predictive indoor temperature modeling. Build. Environ. 2021, 203, 108327.

8. Hewage, P.; Behera, A.; Trovati, M.; Pereira, E.; Ghahremani, M.; Palmieri, F.; Liu, Y. Temporal convolutional neural (TCN) network for an effective weather forecasting using time-series data from the local weather station. Soft Comput. 2020, 24, 16453–16482.

9. Andriulo, F.C.; Fiore, M.; Mongiello, M.; Traversa, E.; Zizzo, V. Edge computing and cloud computing for Internet of Things: A review. Informatics 2024, 11, 71.

10. King, T.; Zhou, Y.; Röddiger, T.; Beigl, M. MicroNAS for memory and latency constrained hardware aware neural architecture search in time series classification on microcontrollers. Sci. Rep. 2025, 15, 7575.

11. Liu, Z.; Song, Q.; Li, L.; Choi, S.H.; Chen, R.; Hu, X. PME: Pruning-based multi-size embedding for recommender systems. Front. Big Data 2023, 6, 1195742.

12. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

13. National Oceanic and Atmospheric Administration (NOAA). Meteorological Versus Astronomical Seasons. Available online: https://www.ncei.noaa.gov/news/meteorological-versus-astronomical-seasons (accessed on 26 May 2025).

14. Rzepka, K.; Szary, P.; Cabaj, K.; Mazurczyk, W. Performance evaluation of Raspberry Pi 4 and STM32 Nucleo boards for security-related operations in IoT environments. Comput. Netw. 2024, 242, 110252.

15. NASA Langley Research Center. POWER NASA: Prediction Of Worldwide Energy Resources Data Access Viewer. Available online: https://power.larc.nasa.gov/data-access-viewer/ (accessed on 26 May 2025).

16. Edge Impulse: Machine Learning for Edge Devices. Available online: https://www.edgeimpulse.com/ (accessed on 26 May 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).