Abstract

To address the limitations of traditional optimization methods in achieving high accuracy on high-dimensional problems, this paper introduces the snow leopard optimization (SLO) algorithm. SLO is a novel meta-heuristic approach inspired by the territorial behaviors of snow leopards. By emulating territory delineation, neighborhood relocation, and dispute mechanisms, SLO balances exploration and exploitation, enabling it to navigate vast and complex search spaces. The algorithm’s performance was evaluated on the CEC2017 benchmark and on high-dimensional genetic data feature selection tasks, demonstrating SLO’s competitive advantage in solving high-dimensional optimization problems. In the CEC2017 experiments, SLO ranked first in the Friedman test, outperforming several well-known algorithms, including ETBBPSO, ARBBPSO, HCOA, AVOA, WOA, SSA, and HHO. The effective application of SLO to high-dimensional genetic data feature selection further highlights its adaptability and practical utility, marking significant progress in high-dimensional optimization and feature selection.

1. Introduction

With the advancement of technology, the data collected by sensors have become increasingly high-dimensional and large in scale. The primary challenges in processing high-dimensional sensor data are the curse of dimensionality and data redundancy. As the number of features generated by sensors grows rapidly, the feature space becomes exceedingly complex, making it difficult for traditional algorithms to search efficiently and often trapping them in local optima. Moreover, sensor data typically contain many redundant and irrelevant features, which increase computational costs and reduce model accuracy. Feature selection is therefore crucial: selecting the most informative features not only enhances model performance but also reduces computational complexity, improving the ability to handle high-dimensional data. The main contribution of the snow leopard optimization (SLO) algorithm to feature selection in high-dimensional sensor data lies in its ability to mitigate the curse of dimensionality and optimize the feature selection process. The experimental results demonstrated that SLO provides a high-precision solution for sensor data feature selection problems.

In recent years, a growing number of complex real-world problems have been abstracted into mathematical models, many of which take the form of optimization problems. Scholars usually use optimization algorithms [1] to find optimal solutions to these problems, and in practice such algorithms can solve real problems and improve efficiency. To deal with building energy optimization problems [2,3,4], the butterfly optimization algorithm (BOA), the pelican optimization single candidate optimizer (POSCO), and the greedy strategy-based adaptive particle swarm optimization (GAPSO) algorithm were proposed. The hybrid sine cosine algorithm (HSCA) and a refined particle swarm optimization algorithm were used to solve engineering design optimization problems [5,6]. A wrapper feature selection technique based on the whale optimization algorithm (WOA) [7] was introduced to optimize feature subset identification, and the enhanced lemur optimization (ELO) [8] algorithm was used to identify the most relevant features. Many researchers have also proposed new optimization methods for healthcare [9], image segmentation [10], and cost-effectiveness problems [11,12].

This research sought to tackle the intricate challenges of high-dimensional optimization, where the vastness and complexity of the search space often hinder the performance of traditional algorithms. The aim of developing the snow leopard optimization (SLO) algorithm was to provide a robust solution that can efficiently navigate and solve these complex problems by striking an effective balance between exploration and exploitation. Through this work, our research aspires to advance optimization techniques, especially in fields requiring high-dimensional feature selection, such as bioinformatics and environmental science, where current methods may fall short.

2. Related Work

Optimization algorithms are powerful tools for solving real-life problems, especially in healthcare, engineering design, and image processing, and they play an important role in reducing costs, optimizing system performance, and improving accuracy. However, optimization problems, including constrained optimization problems [13], nonlinear optimization problems [14], and combinatorial optimization problems [15], have become more complex and challenging. Many scholars have proposed meta-heuristic optimization algorithms to better solve these optimization problems. Meta-heuristic algorithms [16,17,18] are a general class of optimization frameworks usually inspired by biological behavioral strategies [19,20,21,22], natural theories [23], and natural phenomena [24]. Abdollahzadeh [25] introduced the African vultures optimization algorithm (AVOA) to better balance diversity and resonance and improve optimization performance. Wang [26] introduced artificial rabbit optimization (ARO), inspired by rabbit survival strategies in nature. Guo [27] introduced a novel hermit crab optimization algorithm (HCOA), inspired by the distinctive behavior of hermit crabs searching for appropriate houses to survive; HCOA improved the robustness and accuracy of optimization in high-dimensional problems. Agushaka [28] proposed a novel population-based meta-heuristic algorithm, the gazelle optimization algorithm (GOA), which was inspired by the adaptive survival skills of gazelles in predator-rich habitats. Hashim [29] introduced the snake optimizer (SO), emulating the distinct mating behavior of snakes. Heidari [30] introduced the Harris hawks optimizer (HHO), derived from the strategic surprise pounce behavior of Harris hawks. Xue [31] introduced the sparrow search algorithm (SSA), a novel swarm optimization method inspired by the collective knowledge of sparrows and their foraging and anti-predation tactics. 
Dehghani [32] introduced Tasmanian devil optimization (TDO) modeled on the feeding strategies of the Tasmanian devil. Ghaedi [33] introduced the cat hunting optimization (CHO) algorithm, which adjusted the search radius around optimal solutions using trigonometric relations such as the cosine. The CHO delays rapid prey targeting, enhancing its capability to discover efficient global solutions. Hashim [34] proposed the honey badger algorithm (HBA), which was inspired by the intelligent foraging behavior of honey badgers.

Developments in fields such as oil [35,36] and environmental science [37,38] have introduced new challenges to existing technologies. In the task of feature selection [39,40], researchers have proposed numerous novel methods [41,42].

Nature-inspired optimization algorithms can solve existing optimization problems well, but these algorithms still have many limitations in solving certain optimization problems, such as easily falling into local optima [43]. To address these limitations, many researchers have proposed improved strategies based on existing optimization algorithms, to enhance their ability to solve optimization problems. These improvement methods can be divided into the following categories: adjusting parameters [44,45,46,47,48], improving the initialization strategy [49,50,51,52,53], changing the search strategy [54,55,56,57,58,59,60], and combining other optimization algorithms [61,62,63].

These optimization algorithms perform well on most optimization problems. However, as real-world problems become more complex, their dimensionality increases. Feature selection for genetic data is an example of a high-dimensional real-world optimization problem: high-dimensional genetic data contain a large number of redundant or correlated features [64], and reducing the number of features helps to improve the generalization performance of a classification model and avoid overfitting [65]. As the dimensionality of an optimization problem increases, it becomes more challenging to explore the solution space efficiently. High-dimensional problems have more decision variables, so the search space becomes deeper and wider, and local optima occur more frequently. Optimization algorithms therefore need to balance global and local search better, and their performance on high-dimensional problems can be improved by increasing their ability to escape from local optima. In high-dimensional problems, HHO is prone to becoming trapped in local optima because of its low global exploration efficiency. Lacking a strong local exploitation capacity, the horse herd optimization algorithm (HOA) [66] and SSA perform worse on high-dimensional optimization problems.

To address these challenges, we introduce the novel snow leopard optimization (SLO) algorithm, inspired by the behavior of snow leopards in nature, to better solve high-dimensional problems. More specifically, the main contributions of this paper are as follows:

- A novel snow leopard optimization (SLO) algorithm is proposed, which has a better performance on high-dimensional problems.

- The four different strategies of the SLO can provide a better balance between global search and local search.

- Compared with seven well-known optimizers, SLO was shown to be more effective in solving high-dimensional problems, including the CEC2017 benchmark and feature selection on high-dimensional genetic data.

3. Methods

High-dimensional problems arise from large increases in the number of decision variables. As the number of decision variables grows, the solution space becomes wider and the number of local optima increases. Balancing global and local search and improving the ability to escape from local optima are therefore the main challenges of high-dimensional problems. To address them, we developed the novel snow leopard optimization (SLO) algorithm, inspired by the real-world behavior of snow leopards, including territorial delineation, territory encroachment, and resource pillage. This section describes the relevant snow leopard behaviors and the details of SLO.

3.1. Behavior of Snow Leopards

The snow leopard is a big cat known as the “monarch of the icy realms”. Snow leopards are often found in icy alpine bare-rock and cold desert environments. They live both in solitary habitats, where they continuously seek a mate, and in group habitats, mainly during the early stages of mating and cub rearing. Snow leopards typically have extensive territories whose size depends on environmental conditions and the availability of food; a territory may range from tens to several hundreds of square kilometers. This territory provides food, habitat, and resources for reproduction. Snow leopard territories are also gender-related. During the mating season, males and females jointly seek food, and this cooperation helps females acquire sufficient nutrition to support pregnancy. Snow leopards without mating partners attempt to move in the vicinity of those without mates, trying to seize opportunities for mating. They generally operate within their territorial boundaries. However, in winter, as temperatures drop, they compete for food outside their territorial range, and this behavior becomes more frequent as temperatures decline.

3.2. Snow Leopard Optimization

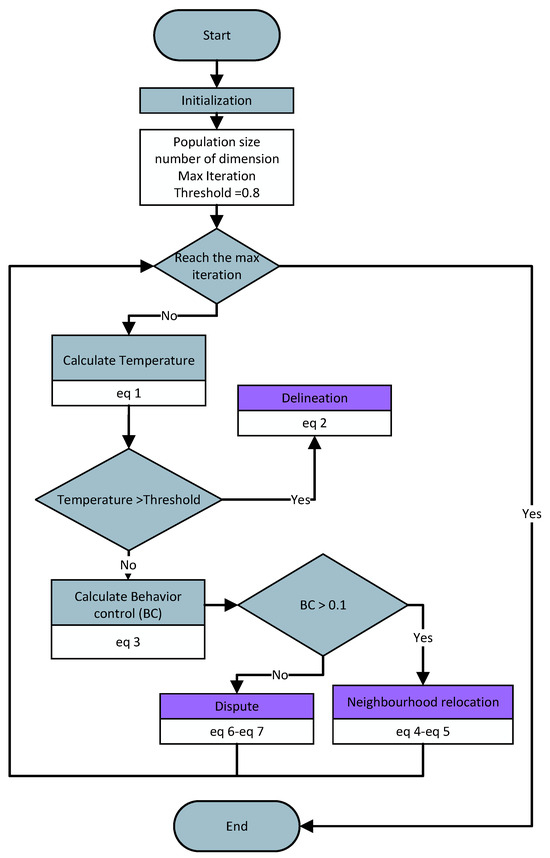

Inspired by snow leopard behavior, we introduce a novel meta-heuristic optimization algorithm, snow leopard optimization (SLO). The process of snow leopards seeking territories can be abstracted as an optimization algorithm searching for the optimal solution. In SLO, the search space represents the habitat of snow leopards in the natural world, and the territory of each individual corresponds to a candidate solution. Each snow leopard has a territorial superiority, which is associated with the fitness of the optimization function. Territorial superiority depends on many factors, such as resource abundance, geographical position, and food availability, just as decision variables determine the objective value of an optimization problem. Snow leopards with better territories have better territorial superiority (fitness). Among all individuals, the snow leopard with the best territorial superiority is referred to as the “Leopard King”. In the natural world, the territorial behaviors of snow leopards are associated with gender, territorial superiority, and living arrangements. Based on living arrangement and gender, we categorize snow leopards into solitary males, solitary females, co-residing males, and co-residing females. In the initialization phase, each male snow leopard is randomly assigned as either solitary or co-residing, and each co-residing male is assigned a cohabiting female snow leopard as a mating partner. Different types of snow leopards exhibit different territorial behaviors. Temperature also influences the behavior of snow leopards, so SLO introduces a temperature that depends on the iteration number, as given in Equation (1). When the temperature is high, snow leopards delineate territory; neighborhood relocation and dispute occur only when the temperature is low. 
As the temperature decreases, the probability of resource disputes increases, while the proportion of neighborhood relocations decreases. These strategies were used to guide the optimization algorithm to improve its efficiency and performance. A flowchart of SLO is shown in Figure 1.

In Equation (1), t is the current iteration number, and the other iteration term is the maximum number of iterations.
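The population structure and the temperature-gated switching between strategies can be illustrated with a short Python sketch. It is not the authors' implementation: because Equation (1) is not reproduced in this section, `temperature` is a hypothetical linearly decreasing schedule, and the gender split and pairing scheme in `init_roles` are likewise hypothetical. Only the thresholds (0.8 for the temperature, 0.1 for BC) are taken from the text; BC is passed in as a number because Equation (3) is not reproduced either.

```python
import random


def temperature(t, max_iter):
    # Hypothetical stand-in for Equation (1): falls linearly from 1 to 0.
    return 1.0 - t / max_iter


def init_roles(pop_size, rng):
    """Assign (gender, living arrangement) to each index (hypothetical scheme).

    The first half of the indices are males and the rest are females. Each
    male is randomly made solitary or co-residing; a co-residing male is
    paired with a still-unpaired female, who then also becomes co-residing.
    """
    males = range(pop_size // 2)
    females = list(range(pop_size // 2, pop_size))
    roles = {f: ("female", "solitary") for f in females}
    partner = {}
    unpaired = females[:]
    rng.shuffle(unpaired)
    for m in males:
        if unpaired and rng.random() < 0.5:
            f = unpaired.pop()
            roles[m] = ("male", "co-residing")
            roles[f] = ("female", "co-residing")
            partner[m], partner[f] = f, m
        else:
            roles[m] = ("male", "solitary")
    return roles, partner


def choose_phase(temp, bc):
    # Thresholds from the text: delineation while the temperature exceeds 0.8;
    # afterwards relocation if BC > 0.1, otherwise dispute (BC == 0.1 is not
    # specified in the text and is treated as a dispute here).
    if temp > 0.8:
        return "delineation"
    return "relocation" if bc > 0.1 else "dispute"


rng = random.Random(0)
roles, partner = init_roles(10, rng)
print(choose_phase(temperature(5, 100), bc=0.5))  # delineation
```

Keeping the pairing in a symmetric `partner` dictionary makes it easy for later strategies to look up the opposite-sex counterpart of any co-residing individual.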

Figure 1.

Flowchart of SLO.

3.3. Delineation

Territorial delineation is a crucial behavior that ensures the availability of survival resources. In SLO, delineation takes place in the early iterations, when the temperature is above a threshold of 0.8. Snow leopards search for territories near members of the opposite sex with the same living arrangement: solitary snow leopards thereby secure more mating opportunities, while co-residing snow leopards acquire territories with better access to survival resources. The delineation of these territories is not random. In SLO, it is based on the superiority of the snow leopard's own territory and the superiority of the selected opposite-sex territory. Stronger snow leopards tend to have better territorial superiority, and when choosing targets, snow leopards avoid those with a large gap in superiority to minimize competition. As a result, snow leopards with poorer territorial superiority do not approach those with better territorial superiority but rather stay away from them. Candidate territories for delineation are calculated as shown in Equation (2). With this strategy, individuals in SLO continuously obtain better territories, which prevents the population from converging too quickly in the early stages and strengthens global search.

In Equation (2), a fitness function evaluates territorial superiority. The two territory terms denote the territory of the i-th snow leopard (a solitary male, solitary female, co-residing male, or co-residing female) and the territory of its same-type counterpart of the opposite gender. The bounds are the maximum and minimum values of the search range, the random term is a value between 0 and 1, and the constant is set to 0.5.
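The approach-or-avoid logic of delineation can be sketched in Python for a minimization problem. This is a hypothetical update rule, not Equation (2): it keeps only the behaviors described in the text (move toward the opposite-sex counterpart when the individual's own territory is at least as good, move away otherwise, stay inside the search bounds) and assumes a greedy acceptance step; the constant 0.5 of Equation (2) is not modelled. `sphere` is an example objective chosen for illustration.

```python
import random


def sphere(x):
    # Example objective (minimization): a lower value means a better territory.
    return sum(v * v for v in x)


def delineation_step(x, mate, f, lb, ub, rng):
    """One hypothetical delineation move of individual x relative to its mate.

    If x is at least as good as its opposite-sex counterpart, it moves toward
    the counterpart; otherwise it moves away, mirroring the avoidance of much
    stronger rivals. Coordinates are clipped to [lb, ub], and the candidate
    replaces x only if it improves the objective (greedy selection).
    """
    direction = 1.0 if f(x) <= f(mate) else -1.0
    r = rng.random()  # random step size in [0, 1)
    cand = [min(max(xi + direction * r * (mi - xi), lb), ub)
            for xi, mi in zip(x, mate)]
    return cand if f(cand) < f(x) else x


rng = random.Random(1)
print(delineation_step([0.5, 0.5], [-1.0, 0.0], sphere, -5.0, 5.0, rng))
```

Greedy selection guarantees that a delineation step never worsens an individual's territory, while the "move away" branch keeps weaker individuals spread out early in the search.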

3.4. Neighborhood Relocation

Neighborhood relocation describes snow leopards leaving their existing territory to seek a better one nearby. It is a strategy that helps snow leopards adapt to change and ensure survival. However, it also comes with challenges, including competition with other snow leopards, the difficulty of finding a new territory, and uncertainty about whether the new territory offers an advantage. Because their competitors differ, different types of snow leopards relocate in different ways. The occurrence of neighborhood relocation is also related to temperature: the lower the temperature, the more likely snow leopards are to change their territories, so territorial encroachment becomes more frequent as the temperature decreases. In SLO, a behavioral control (BC) formula adaptively controls how often neighborhood relocation occurs; BC is calculated as shown in Equation (3).

In Equation (3), the random term is a value between 0 and 1, and the temperature is calculated using Equation (1).

Neighborhood relocation occurs only when BC exceeds 0.1. In this strategy, snow leopards select co-residing snow leopards of the same gender as their encroachment targets and undergo repeated territorial shifts influenced by these competitors. In addition, the relocation of male snow leopards is influenced by the leopard king, which holds the best territory in the area. Candidate territories for neighborhood relocation are generated using Equations (4) and (5).

In Equation (4), the two territory terms denote the territory of the i-th snow leopard (a solitary male, solitary female, co-residing male, or co-residing female) and the territory of its same-type counterpart of the same gender. The Gaussian term is a random value drawn from a normal distribution with mean 0 and variance 1, and the constant is set to 0.5.

In Equation (5), the two territory terms denote the territory of the i-th male snow leopard (solitary or co-residing) and the territory of the leopard king. The Gaussian term is a random value drawn from a normal distribution with mean 0 and variance 1, and the constant is set to 0.5.

To better explain the process of territorial neighborhood relocation in SLO, the pseudocode for the territorial neighborhood relocation strategy is shown in Algorithm 1. This strategy involves individuals continuously searching for superior territories around them, while being influenced by various factors. This enhances each individual’s local search capabilities, and at the same time, the population moves in the direction of the optimal solution, ensuring that the entire population evolves toward the optimal outcome.

Algorithm 1.

Neighborhood relocation.
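Because the pseudocode of Algorithm 1 is not reproduced here, the following Python sketch illustrates how a relocation candidate could combine the ingredients named in Equations (4) and (5): a same-type, same-gender peer, the leopard king for males, standard normal noise, and the constant 0.5. The additive functional form is an assumption, not the published equations, and bound handling and greedy selection are omitted for brevity.

```python
import random


def relocation_candidate(x, peer, king, is_male, rng, c=0.5):
    """Hypothetical neighborhood-relocation candidate for one individual.

    Every individual takes a Gaussian-scaled step toward its peer (cf. Eq. (4));
    males additionally take a Gaussian-scaled step toward the leopard king
    (cf. Eq. (5)). Each noise term is drawn from N(0, 1).
    """
    cand = []
    for xi, pi, ki in zip(x, peer, king):
        v = xi + c * rng.gauss(0.0, 1.0) * (pi - xi)
        if is_male:
            v += c * rng.gauss(0.0, 1.0) * (ki - xi)
        cand.append(v)
    return cand


rng = random.Random(2)
print(relocation_candidate([1.0, 2.0], [0.0, 1.0], [0.5, 0.5], True, rng))
```

Because each step is proportional to the distance to the peer or king, an individual that already shares their position is left unchanged, so the move is purely local around existing territories.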

3.5. Dispute

Within the snow leopard population, territorial disputes are a natural behavior that ensures an adequate supply of food and habitat resources. Disputes mainly occur near the leopard king and become more frequent as temperatures decrease: the leopard king holds the superior territory, which provides access to greater survival resources, and as temperatures drop, the resources available within territories decrease sharply. In SLO, both disputes and neighborhood relocations occur only when the temperature falls below 0.8. Specifically, disputes happen when BC is less than 0.1, and their likelihood increases with the number of iterations. In disputes, the primary target is the territory of the leopard king; in addition, solitary snow leopards fight individuals of the same sex to secure mating rights. Candidate territories during disputes are generated using Equations (6) and (7).

In Equation (6), the two territory terms denote the territory of the i-th snow leopard (of any type) and the territory of the leopard king, respectively. The Gaussian term is a random value drawn from a normal distribution with mean 0 and variance 1, the constant is set to 0.05, and the iteration-dependent variable is calculated using Equation (1).

In Equation (7), the territory terms denote the territory of the i-th solitary snow leopard (male or female), the territory of its same-type counterpart of the same gender, and the territory of the leopard king. The Gaussian term is a random value drawn from a normal distribution with mean 0 and variance 1, the constant is set to 0.05, and the iteration-dependent variable is calculated using Equation (1).

To provide a clear representation of how the territorial dispute behavior operates, its pseudocode is presented in Algorithm 2. With this strategy in place, individuals gravitate toward territories with global superiority. Different types of individuals are influenced by various contesting parties, resulting in diverse approaches to territorial disputes. This enhances the individual local search capabilities, ultimately improving the precision of later solutions.

Algorithm 2.

Dispute.
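Similarly, the dispute step can be sketched with the ingredients named in Equations (6) and (7): the leopard king's territory, a same-gender peer for solitary individuals, standard normal noise, the constant 0.05, and the temperature from Equation (1). The form below, which samples candidates around the king with a spread that shrinks as the temperature falls, is a hypothetical illustration and not the published formula.

```python
import random


def dispute_candidate(x, king, temp, rng, peer=None, c=0.05):
    """Hypothetical dispute candidate for one individual.

    Candidates are drawn around the leopard king. The step is scaled by the
    constant c, the current temperature, and N(0, 1) noise, so the search
    tightens as the temperature falls. Solitary individuals (peer given)
    scale their step by the distance to a same-gender peer (cf. Eq. (7));
    all others scale it by the distance to the king (cf. Eq. (6)).
    """
    cand = []
    for j, (xi, ki) in enumerate(zip(x, king)):
        ref = ki if peer is None else peer[j]
        cand.append(ki + c * temp * rng.gauss(0.0, 1.0) * (ref - xi))
    return cand


rng = random.Random(3)
print(dispute_candidate([3.0, 4.0], [1.0, 2.0], temp=0.5, rng=rng))
```

Tying the step size to the temperature is one simple way to realize the text's claim that disputes sharpen the precision of later solutions.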

4. Experiments and Results

In this article, seven algorithms, ETBBPSO, ARBBPSO, HCOA, AVOA, WOA, SSA, and HHO, were selected as a control group for comparison with SLO. To validate the performance of SLO on high-dimensional optimization problems, the highest recommended dimension of CEC2017 was used for benchmarking, and eight real high-dimensional gene datasets were used to validate its feature selection ability. This section describes these two groups of experiments and their results. All experiments were run in MATLAB R2020b on Windows 10.

4.1. Benchmark Test

CEC2017 is a well-known benchmark suite for optimization. It includes 29 benchmark functions: 2 unimodal functions, 7 simple multimodal functions, 10 hybrid functions, and 10 composition functions. CEC2017 defines problem dimensions of 10, 30, 50, and 100, and the 100-dimensional version was selected for this test. To reduce the randomness of the experimental results, SLO and each control group algorithm were run 36 times independently. The mean error (ME), calculated as shown in Equation (8), was used to evaluate the performance of all algorithms on CEC2017. To ensure a fair comparison, all algorithms used the parameter settings from their original papers, and all experiments used a population size of 100, a maximum of 10,000 iterations, and 100 dimensions.

In Equation (8), N is the number of independent runs, the absolute-value function is applied to each run's error, and the two remaining terms are the best value found by the algorithm in a run and the theoretical optimal value of the test function.
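Assuming Equation (8) takes the standard form ME = (1/N) Σ|F_i − F*|, which matches the definitions above, the metric can be computed with a short Python function; the run values in the example are made up for illustration.

```python
def mean_error(best_values, optimum):
    """Mean absolute error between each run's best value and the known optimum."""
    if not best_values:
        raise ValueError("at least one run is required")
    return sum(abs(v - optimum) for v in best_values) / len(best_values)


# Three illustrative runs on a function whose optimum is 100.0
print(mean_error([102.0, 98.0, 100.5], 100.0))  # 1.5
```

Taking the absolute value before averaging prevents errors of opposite sign from cancelling out across runs.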

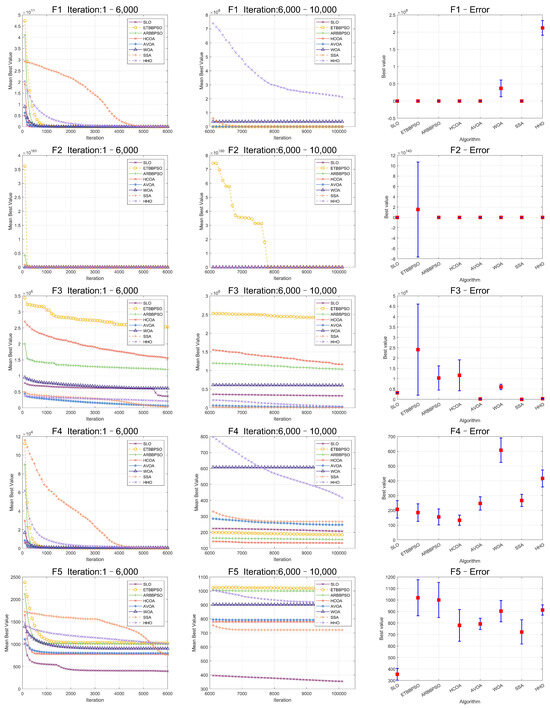

The Friedman test was used to analyze the ME. The ME, standard deviation (Std), and rank for each function are presented in Table 1 and Table 2, with the average rank of each algorithm at the bottom of Table 2. To show the convergence process and errors more intuitively, the ME convergence curves of SLO and the control group algorithms on CEC2017, together with error bars of the final ME, are shown in Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7.
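The average ranks in such a comparison are usually obtained with the standard Friedman procedure: rank the algorithms on each function (1 = lowest ME, with tied algorithms sharing the average rank), then average the ranks over all functions. A minimal Python sketch of this procedure, which also computes the Friedman chi-square statistic without tie correction, is shown below; the input numbers are illustrative only and are not taken from Tables 1 and 2.

```python
def rank_row(errors):
    """Rank algorithms on one function: 1 = lowest error, ties share the mean rank."""
    order = sorted(range(len(errors)), key=lambda a: errors[a])
    ranks = [0.0] * len(errors)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied errors.
        while j + 1 < len(order) and errors[order[j + 1]] == errors[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # mean of 1-based positions i..j
        i = j + 1
    return ranks


def friedman(table):
    """table[f][a] is the ME of algorithm a on function f.

    Returns the average rank of each algorithm and the Friedman chi-square
    statistic 12n / (k(k+1)) * (sum(R_a^2) - k(k+1)^2 / 4), without tie correction.
    """
    n, k = len(table), len(table[0])
    rows = [rank_row(r) for r in table]
    avg = [sum(row[a] for row in rows) / n for a in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return avg, chi2


avg, chi2 = friedman([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0]])
print(avg, chi2)  # [1.0, 2.5, 2.5] 3.0
```

With 29 functions, an average rank such as SLO's 2.4138 corresponds to a total rank sum of about 70 across the suite.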

Table 1.

Experimental results of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA on the first group of CEC2017 functions. Mean, Std, and Rank are dimensionless.

Table 2.

Experimental results of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA on the remaining CEC2017 functions, with the average rank at the end of the table. Mean, Std, and Rank are dimensionless.

Figure 2.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 1–function 5.

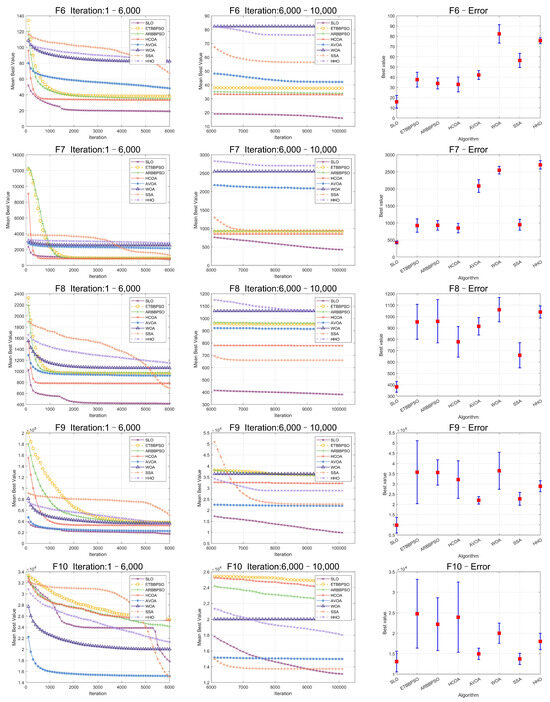

Figure 3.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 6–function 10.

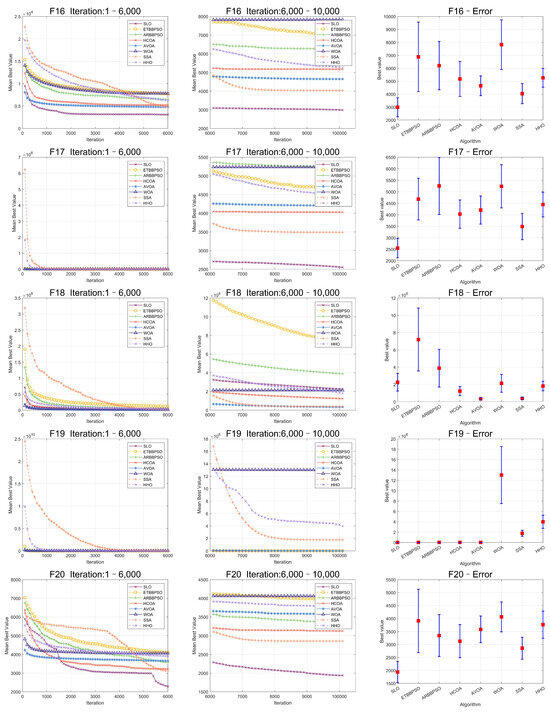

Figure 4.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 11–function 15.

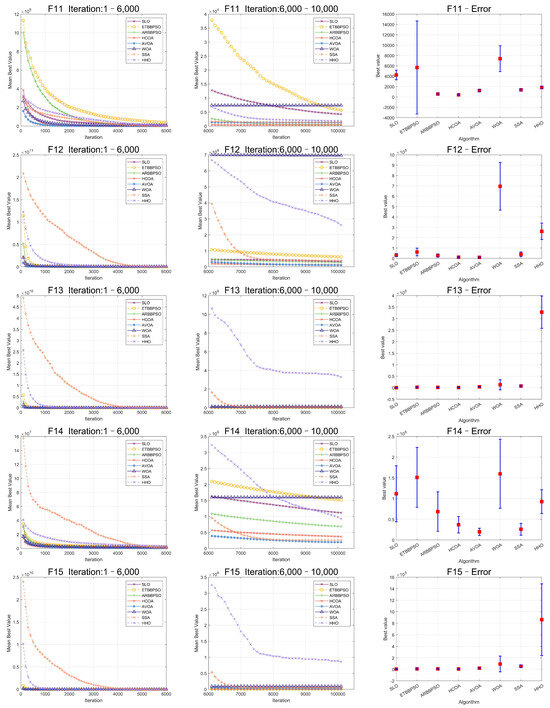

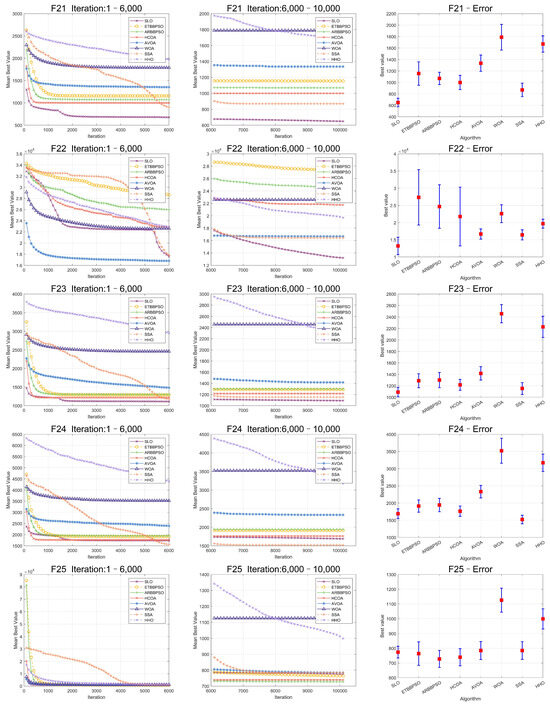

Figure 5.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 16–function 20.

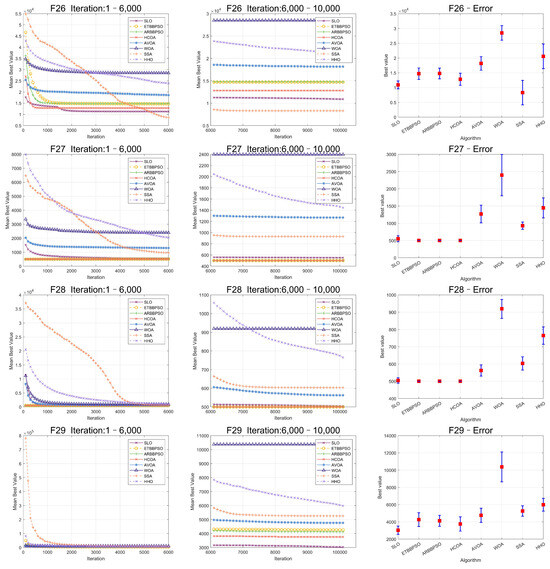

Figure 6.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 21–function 25.

Figure 7.

Convergence curves and error bars of SLO and control group algorithms on CEC2017 function 26–function 29.

Specifically, SLO ranked first on average among the eight algorithms, with an average rank of 2.4138, 21.35% better than that of the second-ranked algorithm, HCOA. Across the four types of CEC2017 test functions, SLO obtained better solutions than the control group algorithms on most of the simple multimodal, hybrid, and composition functions. It ranked first on 5 of the 7 simple multimodal functions, and on 60% of the hybrid functions and 50% of the composition functions. However, SLO was less competitive than the control algorithms on the unimodal functions. SLO never ranked last on any of the 29 functions. Overall, SLO was superior in solving high-dimensional benchmark functions.

As seen in Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7, SLO had a significant advantage in convergence speed on five of the functions, which can be attributed to its territorial delineation strategy. Except on four functions, SLO found the exact solution in the later stage. Meanwhile, the error bars show that SLO was robust, with small errors across the 36 independent runs. This indicates that SLO provides a good balance between global and local search.

4.2. Feature Selection

Eight real gene datasets were selected to further validate the performance of SLO on high-dimensional problems. Their details are shown in Table 3.

Table 3.

Details of datasets.

Each dataset was divided into a training set and a test set in an 80:20 ratio. The k-nearest neighbor (KNN) algorithm was used as the classifier to validate the results of feature selection. Because the high-dimensional gene datasets contain few training samples, K-fold cross-validation was used to reduce the chance of error in training. The search range of all algorithms was [0,1]. The selected features [40] were determined by Equation (9).

In Equation (9), the input is the value of the i-th dimension of the best (optimal) individual.
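Equation (9) maps the continuous position of the best individual in [0,1] to a feature subset. The Python sketch below assumes a common thresholding rule in which a feature is kept when its dimension exceeds 0.5; this threshold is an assumption and may differ from Equation (9).

```python
def selected_features(best, threshold=0.5):
    """Indices of the features kept by the best individual.

    Assumption: dimension i is selected when its value exceeds `threshold`
    (0.5 here is a hypothetical choice, not taken from Equation (9)).
    """
    return [i for i, v in enumerate(best) if v > threshold]


print(selected_features([0.9, 0.1, 0.5, 0.51]))  # [0, 3]
```

A strict inequality means a dimension sitting exactly on the threshold is dropped, which slightly favors smaller subsets.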

The parameters used in training are shown in Table 4. Ten-fold cross-validation provided a reliable assessment of model performance by ensuring that each data point was used for both training and validation across the folds [42]. In the KNN model, K was set to 5 to balance generalization capability and classification accuracy [41]. To measure feature selection performance comprehensively, the fitness of the optimization algorithms combined two parts: the classification accuracy after feature selection and the ratio of the number of selected features to the number of original features. The fitness of feature selection was calculated as shown in Equation (10).

In Equation (10), the accuracy term is the classification accuracy after feature selection, n and N are the number of selected features and the total number of features, respectively, and the two weighting coefficients are the constants 0.99 and 0.01, respectively.
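The wrapper evaluation described above (a KNN classifier with K = 5, scored by 10-fold cross-validation and combined with the feature ratio) can be sketched in plain Python. Two assumptions are made: Equation (10) is taken to have the common form 0.99·(1 − accuracy) + 0.01·n/N, where lower is better, and folds are assigned by index modulo 10 without shuffling or stratification. The 80:20 hold-out split is not shown, and the function names are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=5):
    # Majority vote among the k nearest training samples (Euclidean distance).
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def cv_accuracy(X, y, features, folds=10, k=5):
    """Pooled k-fold CV accuracy of KNN using only the selected feature indices."""
    Xs = [[row[f] for f in features] for row in X]
    correct = 0
    for fold in range(folds):
        test = [i for i in range(len(Xs)) if i % folds == fold]
        train = [i for i in range(len(Xs)) if i % folds != fold]
        tX, ty = [Xs[i] for i in train], [y[i] for i in train]
        correct += sum(knn_predict(tX, ty, Xs[i], k) == y[i] for i in test)
    return correct / len(Xs)


def fs_fitness(accuracy, n_selected, n_total, alpha=0.99, beta=0.01):
    # Assumed form of Equation (10): weighted error plus weighted feature ratio.
    return alpha * (1.0 - accuracy) + beta * n_selected / n_total


# Toy data: feature 0 separates the two classes, feature 1 is constant noise.
X = [[i if i < 10 else i + 100, 0.0] for i in range(20)]
y = [0 if i < 10 else 1 for i in range(20)]
acc = cv_accuracy(X, y, features=[0])
print(acc, fs_fitness(acc, n_selected=1, n_total=2))
```

With weights 0.99 and 0.01, accuracy dominates the fitness, and the feature ratio mainly breaks ties between subsets of similar accuracy.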

Table 4.

Details of parameters.

To reduce the variation in the experimental results, all algorithms were subjected to 20 separate experiments on each dataset. The average experimental results were chosen to compare the performance of the algorithms. The Friedman test method was used to evaluate the performance of SLO in feature selection. The results of the fitness and accuracy calculated by SLO and the control group algorithms are shown in Table 5 and Table 6. The mean number and standard deviation of the selected features are shown in Table 7.

Table 5.

Fitness values of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. The average ranks are at the end of the table. Mean, Std, and Rank are dimensionless.

Table 6.

Accuracy results of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. The average ranks are at the end of the table. Mean, Std, and Rank are dimensionless.

Table 7.

Number of selected features for SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. Mean, Std, and Rank are dimensionless.

As seen in Table 5, SLO achieved clearly better fitness values than the other algorithms. It ranked first on five of the eight datasets and first overall in the Friedman test, with the best average rank of 1.625, 0.375 lower (better) than that of the second-ranked algorithm, AVOA.

As seen in Table 6, SLO achieved classification accuracies above 70% on all eight datasets after feature selection, with the highest accuracy, 98.90%, on the Lymphoma dataset. In the Friedman test, SLO obtained five first ranks and the best average rank of 1.625, 0.15 lower (better) than that of the second-ranked algorithm, AVOA.

Table 7 shows that SLO also substantially reduced the number of selected features: compared with the other algorithms, it selected the fewest features on six of the eight datasets. Its greatest advantage was on the Colon dataset, where it selected an average of 27.28 features, 1.36% of the original features, a reduction of 1972.72 features.
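The Colon figures are internally consistent: 27.28 selected features plus 1972.72 removed features implies 2000 original features, so

$$
\frac{27.28}{2000} = 1.364\% \approx 1.36\%, \qquad 2000 - 27.28 = 1972.72 .
$$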

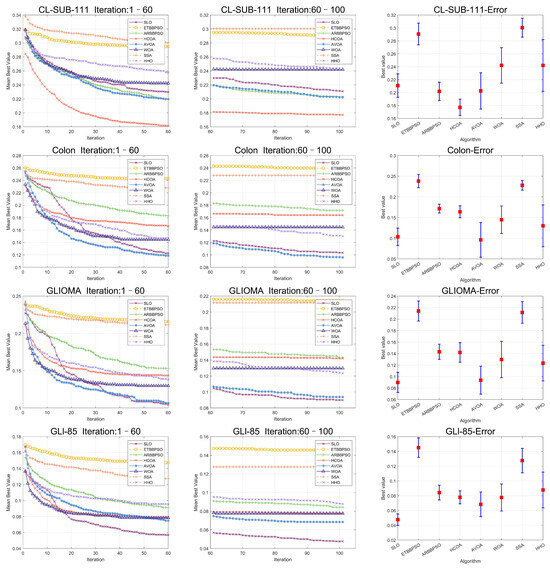

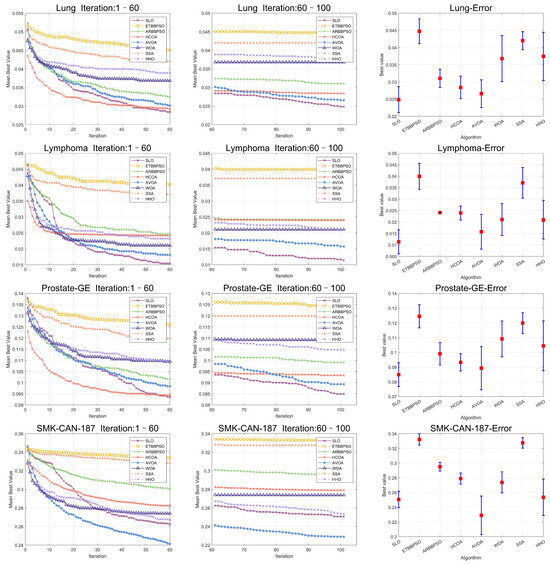

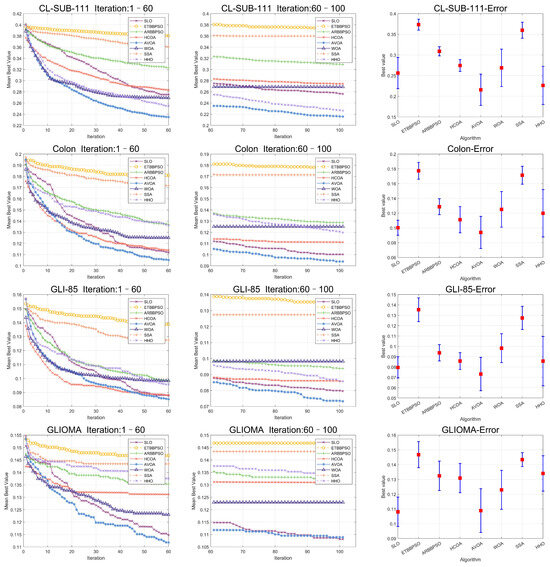

To visualize the fitness convergence of all algorithms on the eight datasets and their errors, the convergence curves and error bars of SLO and the control group algorithms are shown in Figure 8 and Figure 9.

Figure 8.

Convergence curves and error bars of SLO and the control group algorithms on CL-SUB-111, Colon, GLIOMA, and GLI-85.

Figure 9.

Convergence curves and error bars of SLO and the control group algorithms on Lung, Lymphoma, Prostate-GE, and SMK-CAN-187.

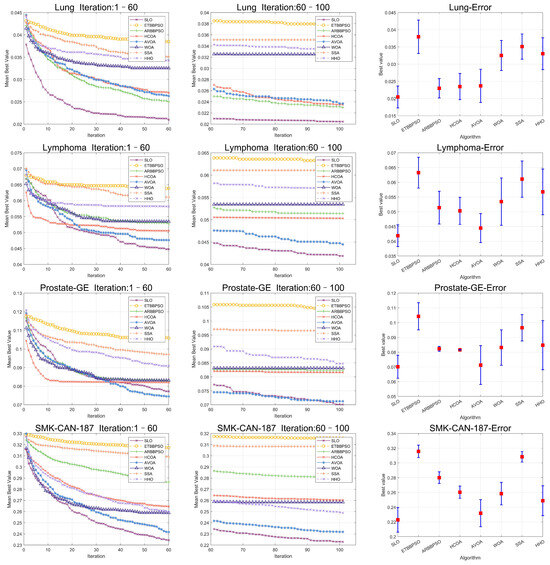

In addition, to strengthen the evidence, the experiments were repeated with 90% of the data used for training and 10% for testing. The results are shown in Table 8, Table 9 and Table 10, and the convergence curves and error bars of SLO and the control group algorithms are shown in Figure 10 and Figure 11.

Table 8.

Fitness values of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. The average ranks are at the end of the table. Mean, Std, and Rank are dimensionless.

Table 9.

The accuracy results of SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. Mean, Std, and Rank are dimensionless.

Table 10.

Number of selected features for SLO, HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. Mean, Std, and Rank are dimensionless.

Figure 10.

Convergence curves and error bars of SLO and the control group algorithms on CL-SUB-111, Colon, GLIOMA, and GLI-85.

Figure 11.

Convergence curves and error bars of SLO and the control group algorithms on Lung, Lymphoma, Prostate-GE, and SMK-CAN-187.

4.3. Discussion

Figure 8 and Figure 9 show that, although SLO converged more slowly than the other algorithms in the early iterations on the eight high-dimensional gene feature selection problems, its final solutions were notably more accurate than those of the control group. This behavior can be attributed to the nature of the feature selection problem, which has a limited search range. The territory delineation strategy of SLO does not converge rapidly at first, but it helps prevent premature convergence by reducing the likelihood of becoming trapped in a local optimum in the early stages.
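One way to read the "limited search range" is that, in wrapper feature selection, a continuous position is usually decoded into a binary feature mask, so the effective search space is the set of 2^D feature subsets. The sketch below shows one common wrapper formulation, not necessarily the one used for SLO. The 0.5 threshold, the leave-one-out 1-nearest-neighbour error (the manuscript lists KNN among its abbreviations, but k and the validation scheme are assumptions here), and the weight alpha = 0.99 trading error against subset size are all illustrative choices. The names `decode`, `loo_1nn_error`, and `fitness` are hypothetical.

```python
import math

# Assumptions (not taken from the paper): threshold 0.5, 1-NN leave-one-out
# error, and alpha = 0.99 weighting error against subset size.


def decode(position: list[float], threshold: float = 0.5) -> list[int]:
    """Indices of the features whose position component exceeds the threshold."""
    return [j for j, x in enumerate(position) if x > threshold]


def loo_1nn_error(X: list[list[float]], y: list[int], features: list[int]) -> float:
    """Leave-one-out error rate of a 1-nearest-neighbour classifier on `features`."""
    errors = 0
    for i, xi in enumerate(X):
        best_dist, best_label = math.inf, None
        for k, xk in enumerate(X):
            if k == i:
                continue  # leave the query sample out
            dist = sum((xi[j] - xk[j]) ** 2 for j in features)
            if dist < best_dist:
                best_dist, best_label = dist, y[k]
        if best_label != y[i]:
            errors += 1
    return errors / len(X)


def fitness(position: list[float], X: list[list[float]], y: list[int],
            alpha: float = 0.99) -> float:
    """alpha * error + (1 - alpha) * selected / total; lower is better."""
    features = decode(position)
    if not features:
        return 1.0  # an empty subset is treated as the worst possible solution
    error = loo_1nn_error(X, y, features)
    return alpha * error + (1 - alpha) * len(features) / len(position)


# Toy data: feature 0 separates the two classes, feature 1 is noise.
X = [[0.0, 5.0], [0.1, 1.0], [1.0, 4.0], [1.1, 0.0]]
y = [0, 0, 1, 1]
good = fitness([0.9, 0.2], X, y)  # selects feature 0 only
bad = fitness([0.1, 0.9], X, y)   # selects feature 1 only
```

On the toy data, the subset containing only the informative feature scores far lower (better) than the subset containing only the noisy one, which is the kind of landscape the optimizer searches.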

In addition, the territorial neighborhood relocation and dispute strategies of SLO improved local search efficiency: in the later stages, they refined the search around promising regions, which yielded more accurate solutions.

In the comparative evaluations, SLO was benchmarked against a control group of seven well-known optimization algorithms: HCOA, ARBBPSO, ETBBPSO, AVOA, SSA, HHO, and WOA. On the CEC2017 benchmark function set, SLO achieved the best average rank. On the eight real gene datasets, SLO outperformed the control algorithms in predictive accuracy and achieved the best average rank for the number of selected features. These results indicate that SLO maintains accuracy while effectively handling the challenges of high-dimensional feature selection.
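The average ranks used in a Friedman-style comparison can be computed as sketched below. This is a minimal sketch assuming lower scores are better and that tied scores on a problem share the mean of their ranks (the usual convention); the function `average_ranks` and the scores are hypothetical and do not reproduce the paper's tables.

```python
def average_ranks(results: dict[str, list[float]]) -> dict[str, float]:
    """Friedman-style average ranks (1 = best) for minimisation results.

    `results` maps each algorithm name to its score on every problem, in the
    same problem order. Tied scores on a problem share the mean of their ranks.
    """
    names = list(results)
    n_problems = len(next(iter(results.values())))
    totals = {name: 0.0 for name in names}
    for p in range(n_problems):
        ordered = sorted(names, key=lambda name: results[name][p])
        i = 0
        while i < len(ordered):
            j = i
            # extend j over a run of tied scores starting at position i
            while j + 1 < len(ordered) and results[ordered[j + 1]][p] == results[ordered[i]][p]:
                j += 1
            shared_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
            for k in range(i, j + 1):
                totals[ordered[k]] += shared_rank
            i = j + 1
    return {name: totals[name] / n_problems for name in names}


# Hypothetical scores for three algorithms on three problems (lower is better).
scores = {"A": [0.10, 0.20, 0.05], "B": [0.15, 0.20, 0.30], "C": [0.12, 0.25, 0.01]}
ranks = average_ranks(scores)
print(ranks)  # prints {'A': 1.5, 'B': 2.5, 'C': 2.0}
```

Scores where higher is better, such as accuracy, can be negated before ranking. If a significance test is also needed, SciPy's `scipy.stats.friedmanchisquare` computes the Friedman test statistic and p-value.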

5. Conclusions

In this paper, we introduced a novel optimization algorithm named snow leopard optimization (SLO). SLO is a meta-heuristic algorithm inspired by the behavior of snow leopards; it primarily emulates their territorial behaviors of territory delineation, neighborhood relocation, and disputes to address high-dimensional optimization problems. To demonstrate its performance on such problems, we evaluated SLO on two types of tests: benchmark functions and real-world problems. On the CEC2017 benchmark function set, SLO ranked first on average. Moreover, in feature selection on eight real gene datasets, it also ranked first on average in both predictive accuracy and the number of selected features.

Future work will focus on extending SLO to a wider range of optimization problems, including multi-objective and dynamic optimization. Improvements in computational efficiency and adaptability will also be explored so that SLO can be applied in real-time or resource-constrained environments.

However, some limitations of this work should be acknowledged. While SLO demonstrated strong performance on benchmark datasets, its effectiveness in extremely high-dimensional problems may decrease as the complexity of the search space increases. Moreover, like many meta-heuristic methods, the algorithm’s performance can be sensitive to parameter tuning. Future studies could explore adaptive parameter settings and hybrid techniques to further enhance the robustness and flexibility of SLO.

Author Contributions

Conceptualization, J.G., W.Y. and D.W.; methodology, Z.H. and Z.Y.; software, W.Y. and D.W.; validation, J.G., Z.H. and Z.Y.; formal analysis, M.S. and Y.S.; investigation, J.G., M.S. and Y.S.; resources, J.G.; data curation, M.S. and Y.S.; writing—original draft preparation, J.G.; writing—review and editing, Z.H. and Z.Y.; visualization, J.G.; supervision, M.S. and Y.S.; project administration, Z.H.; funding acquisition, J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Hubei Province (2022CFB076, 2023AFB003, 2024AFB002); the Education Department Scientific Research Program Project of Hubei Province of China (Q20222208); the Artificial Intelligence Innovation Project of Wuhan Science and Technology Bureau (No. 2023010402040016 and 2022010702040068); the China School Youth Fund Program (grant number XJZD202305); and JSPS KAKENHI Grant Number JP22K12185. The research was conducted under the auspices of the Hosei International Fund (HIF) Foreign Scholars Fellowship.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| BOA | Butterfly Optimization Algorithm |
| POSCO | Pelican Optimization Single Candidate Optimizer |
| GAPSO | Greedy-Strategy-Based Adaptive Particle Swarm Optimization |
| HSCA | Hybrid Sine Cosine Algorithm |
| WOA | Whale Optimization Algorithm |
| ELO | Enhanced Lemurs Optimization |
| AVOA | African Vultures Optimization Algorithm |
| ARO | Artificial Rabbit Optimization |
| HCOA | Hermit Crab Optimization Algorithm |
| GOA | Gazelle Optimization Algorithm |
| SO | Snake Optimizer |
| HHO | Harris Hawks Optimizer |
| SSA | Sparrow Search Algorithm |
| TDO | Tasmanian Devil Optimization |
| CHO | Cat Hunting Optimization |
| HBA | Honey Badger Algorithm |
| HOA | Horse Herd Optimization Algorithm |
| SLO | Snow Leopard Optimization |
| BC | behavioral control |
| ME | mean error |
| Std | standard deviation |
| KNN | K-Nearest Neighbor |

References

- Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Dandelion Optimizer: A nature-inspired metaheuristic algorithm for engineering applications. Eng. Appl. Artif. Intell. 2022, 114, 105075.

- Ghalambaz, M.; Yengejeh, R.J.; Davami, A.H. Building energy optimization using butterfly optimization algorithm. Therm. Sci. 2022, 26, 3975–3986.

- Yuan, X.; Karbasforoushha, M.A.; Syah, R.B.Y.; Khajehzadeh, M.; Keawsawasvong, S.; Nehdi, M.L. An Effective Metaheuristic Approach for Building Energy Optimization Problems. Buildings 2023, 13, 80.

- Ren, K.; Jia, L.; Huang, J.; Wu, M. Research on cutting stock optimization of rebar engineering based on building information modeling and an improved particle swarm optimization algorithm. Dev. Built Environ. 2023, 13, 100121.

- Brajević, I.; Stanimirović, P.S.; Li, S.; Cao, X.; Khan, A.T.; Kazakovtsev, L.A. Hybrid Sine Cosine Algorithm for Solving Engineering Optimization Problems. Mathematics 2022, 10, 4555.

- Mourtzis, D.; Angelopoulos, J. Reactive Power Optimization Based on the Application of an Improved Particle Swarm Optimization Algorithm. Machines 2023, 11, 724.

- Mafarja, M.; Mirjalili, S. Whale optimization approaches for wrapper feature selection. Appl. Soft Comput. 2018, 62, 441–453.

- Al-Khatib, R.M.; Al-qudah, N.E.A.; Jawarneh, M.S.; Al-Khateeb, A. A novel improved lemurs optimization algorithm for feature selection problems. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 101704.

- Gupta, P.; Bhagat, S.; Saini, D.K.; Kumar, A.; Alahmadi, M.; Sharma, P.C. Hybrid Whale Optimization Algorithm for Resource Optimization in Cloud E-Healthcare Applications. Comput. Mater. Contin. 2022, 71, 5659–5676.

- Lakshmi, S.A.; Anandavelu, K. Enhanced Cuckoo Search Optimization Technique for Skin Cancer Diagnosis Application. Intell. Autom. Soft Comput. 2023, 35, 3403–3413.

- Ren, H.J.; Ren, H.B.; Sun, Z.Q. HSFA: A novel firefly algorithm based on a hierarchical strategy. Knowl.-Based Syst. 2023, 279, 110950.

- Li, J.; Chen, R.; Liu, C.; Xu, X.; Wang, Y. Capacity Optimization of Independent Microgrid with Electric Vehicles Based on Improved Pelican Optimization Algorithm. Energies 2023, 16, 2539.

- Pathak, V.K.; Srivastava, A.K. A novel upgraded bat algorithm based on cuckoo search and Sugeno inertia weight for large scale and constrained engineering design optimization problems. Eng. Comput. 2022, 38, 1731–1758.

- Braik, M.; Hammouri, A.; Atwan, J.; Al-Betar, M.A.; Awadallah, M.A. White Shark Optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl.-Based Syst. 2022, 243, 108457.

- Schuetz, M.J.A.; Brubaker, J.K.; Katzgraber, H.G. Combinatorial optimization with physics-inspired graph neural networks. Nat. Mach. Intell. 2022, 4, 367–377.

- Zhao, S.; Zhang, T.; Ma, S.; Wang, M. Sea-horse optimizer: A novel nature-inspired meta-heuristic for global optimization problems. Appl. Intell. 2023, 53, 11833–11860.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

- Dehghani, M.; Trojovský, P.; Malik, O.P. Green Anaconda Optimization: A New Bio-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 121.

- Eisham, Z.K.; Haque, M.M.; Rahman, M.S.; Nishat, M.M.; Faisal, F.; Islam, M.R. Chimp optimization algorithm in multilevel image thresholding and image clustering. Evol. Syst. 2023, 14, 605–648.

- Pan, J.S.; Zhang, L.G.; Wang, R.B.; Snášel, V.; Chu, S.C. Gannet optimization algorithm: A new metaheuristic algorithm for solving engineering optimization problems. Math. Comput. Simul. 2022, 202, 343–373.

- Mohapatra, S.; Mohapatra, P. American zebra optimization algorithm for global optimization problems. Sci. Rep. 2023, 13, 5211.

- Trojovský, P.; Dehghani, M. Pelican Optimization Algorithm: A Novel Nature-Inspired Algorithm for Engineering Applications. Sensors 2022, 22, 855.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Khodadadi, N.; Mirjalili, S. Mountain Gazelle Optimizer: A new Nature-inspired Metaheuristic Algorithm for Global Optimization Problems. Adv. Eng. Softw. 2022, 174, 103282.

- Naruei, I.; Keynia, F.; Molahosseini, A.S. Hunter–prey optimization: Algorithm and applications. Soft Comput. 2022, 26, 1279–1314.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

- Guo, J.; Zhou, G.; Yan, K.; Shi, B.; Di, Y.; Sato, Y. A novel hermit crab optimization algorithm. Sci. Rep. 2023, 13, 9934.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Gazelle optimization algorithm: A novel nature-inspired metaheuristic optimizer. Neural Comput. Appl. 2023, 35, 4099–4131.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 2022, 242, 108320.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H.L. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst.-Int. J. Esci. 2019, 97, 849–872.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control. Eng. 2020, 8, 1708830.

- Dehghani, M.; Hubalovsky, S.; Trojovsky, P. Tasmanian Devil Optimization: A New Bio-Inspired Optimization Algorithm for Solving Optimization Algorithm. IEEE Access 2022, 10, 3151641.

- Ghaedi, A.; Bardsiri, A.K.; Shahbazzadeh, M.J. Cat hunting optimization algorithm: A novel optimization algorithm. Evol. Intell. 2023, 16, 417–438.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

- Meng, M.; Ge, H.; Shen, Y.; Ji, W.; Wang, Q. Rock fabric of tight sandstone and its influence on irreducible water saturation in Eastern Ordos Basin. Energy Fuels 2023, 37, 3685–3696.

- Meng, M.; Hu, Q.; Wang, Q.; Hong, Z.; Zhang, L. Effect of initial water saturation and water film on imbibition behavior in tight reservoirs using nuclear magnetic resonance technique. Phys. Fluids 2024, 36, 056603.

- Meng, M.; Peng, J.; Ge, H.; Ji, W.; Li, X.; Wang, Q. Rock fabric of lacustrine shale and its influence on residual oil distribution in the Upper Cretaceous Qingshankou Formation, Songliao Basin. Energy Fuels 2023, 37, 7151–7160.

- Guo, J.; Yan, Z.; Shi, B.; Sato, Y. A Slow Failure Particle Swarm Optimization Long Short-Term Memory for Significant Wave Height Prediction. J. Mar. Sci. Eng. 2024, 12, 1359.

- Ihianle, I.K.; Machado, P.; Owa, K.; Adama, D.A.; Otuka, R.; Lotfi, A. Minimising redundancy, maximising relevance: HRV feature selection for stress classification. Expert Syst. Appl. 2024, 239, 122490.

- Abdel-Salam, M.; Alzahrani, A.I.; Alblehai, F.; Zitar, R.A.; Abualigah, L. An improved Genghis Khan optimizer based on enhanced solution quality strategy for global optimization and feature selection problems. Knowl.-Based Syst. 2024, 302, 112347.

- Dhakal, P.; Tayara, H.; Chong, K.T. An ensemble of stacking classifiers for improved prediction of miRNA–mRNA interactions. Comput. Biol. Med. 2023, 164, 107242.

- Batool, S.; Zainab, S. A comparative performance assessment of artificial intelligence based classifiers and optimized feature reduction technique for breast cancer diagnosis. Comput. Biol. Med. 2024, 183, 109215.

- Zhang, H.; Cai, Z.; Ye, X.; Wang, M.; Kuang, F.; Chen, H.; Li, C.; Li, Y. A multi-strategy enhanced salp swarm algorithm for global optimization. Eng. Comput. 2022, 38, 1177–1203.

- Wang, Z.; Chen, Y.; Ding, S.; Liang, D.; He, H. A novel particle swarm optimization algorithm with Lévy flight and orthogonal learning. Swarm Evol. Comput. 2022, 75, 101207.

- Emam, M.M.; El-Sattar, H.A.; Houssein, E.H.; Kamel, S. Modified orca predation algorithm: Developments and perspectives on global optimization and hybrid energy systems. Neural Comput. Appl. 2023, 35, 15051–15073.

- Sheta, A.; Braik, M.; Al-Hiary, H.; Mirjalili, S. Improved versions of crow search algorithm for solving global numerical optimization problems. Appl. Intell. 2023, 53, 26840–26884.

- Ren, T.; Luo, T.; Jia, B.; Yang, B.; Wang, L.; Xing, L. Improved ant colony optimization for the vehicle routing problem with split pickup and split delivery. Swarm Evol. Comput. 2023, 77, 101228.

- Özbay, F.A. A modified seahorse optimization algorithm based on chaotic maps for solving global optimization and engineering problems. Eng. Sci. Technol. Int. J. 2023, 41, 101408.

- Song, X.; Zhang, Y.; Gong, D.; Liu, H.; Zhang, W. Surrogate Sample-Assisted Particle Swarm Optimization for Feature Selection on High-Dimensional Data. IEEE Trans. Evol. Comput. 2023, 27, 595–609.

- Wei, F.; Li, J.; Zhang, Y. Improved neighborhood search whale optimization algorithm and its engineering application. Soft Comput. 2023, 27, 17687–17709.

- Jia, H.; Sun, K.; Zhang, W.; Leng, X. An enhanced chimp optimization algorithm for continuous optimization domains. Complex Intell. Syst. 2022, 8, 65–82.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.S.; Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 2023, 215, 119269.

- Liang, X.; Cai, Z.; Wang, M.; Zhao, X.; Chen, H.; Li, C. Chaotic oppositional sine–cosine method for solving global optimization problems. Eng. Comput. 2022, 38, 1223–1239.

- Zhang, Y.; Kong, X. A particle swarm optimization algorithm with empirical balance strategy. Chaos Solitons Fractals X 2023, 10, 100089.

- Nama, S.; Saha, A.K.; Chakraborty, S.; Gandomi, A.H.; Abualigah, L. Boosting particle swarm optimization by backtracking search algorithm for optimization problems. Swarm Evol. Comput. 2023, 79, 101304.

- Yang, Q.; Zhu, Y.; Gao, X.; Xu, D.; Lu, Z. Elite Directed Particle Swarm Optimization with Historical Information for High-Dimensional Problems. Mathematics 2022, 10, 1384.

- Nadimi-Shahraki, M.H. An Effective Hybridization of Quantum-based Avian Navigation and Bonobo Optimizers to Solve Numerical and Mechanical Engineering Problems. J. Bionic Eng. 2023, 20, 1361–1385.

- Wang, H.; Zhao, J. A novel high-level target navigation pigeon-inspired optimization for global optimization problems. Appl. Intell. 2023, 53, 14918–14960.

- Chhabra, A.; Hussien, A.G.; Hashim, F.A. Improved bald eagle search algorithm for global optimization and feature selection. Alex. Eng. J. 2023, 68, 141–180.

- You, J.; Jia, H.; Wu, D.; Rao, H.; Wen, C.; Liu, Q.; Abualigah, L. Modified Artificial Gorilla Troop Optimization Algorithm for Solving Constrained Engineering Optimization Problems. Mathematics 2023, 11, 1256.

- Elaziz, M.A.; Abualigah, L.; Ewees, A.A.; Al-qaness, M.A.A.; Mostafa, R.R.; Yousri, D.; Ibrahim, R.A. Triangular mutation-based manta-ray foraging optimization and orthogonal learning for global optimization and engineering problems. Appl. Intell. 2023, 53, 7788–7817.

- Duan, Y.; Liu, C.; Li, S.; Guo, X.; Yang, C. Manta ray foraging and Gaussian mutation-based elephant herding optimization for global optimization. Eng. Comput. 2023, 39, 1085–1125.

- Horng, S.C.; Lin, S.S. Improved Beluga Whale Optimization for Solving the Simulation Optimization Problems with Stochastic Constraints. Mathematics 2023, 11, 1854.

- Alhenawi, E.; Al-Sayyed, R.; Hudaib, A.; Mirjalili, S. Feature selection methods on gene expression microarray data for cancer classification: A systematic review. Comput. Biol. Med. 2022, 140, 105051.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- MiarNaeimi, F.; Azizyan, G.; Rashki, M. Horse herd optimization algorithm: A nature-inspired algorithm for high-dimensional optimization problems. Knowl.-Based Syst. 2021, 213, 106711.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).