Abstract

The use of linear array pushbroom images poses a particular challenge in photogrammetric applications when transforming object coordinates to image coordinates. To address this issue, the Best Scanline Search/Determination (BSS/BSD) field focuses on determining the scanline, and thus the Exterior Orientation Parameters (EOPs), associated with each ground point. Current solutions are often impractical for real-time tasks because of their high computational time and complexity, since they apply the Collinearity Equation (CE) iteratively for each ground point. This study develops a novel BSD framework with lower computational complexity that does not require repeated use of the CE. The proposed BSD approach is based on a Linear Regression Model (LRM) and uses Simulated Control Points (SCOPs) and Simulated Check Points (SCPs). It comprises two main steps: a training phase and a test phase. In the training phase, the SCOPs are used to estimate the unknown parameters of the LRM; in the test phase, the SCPs are used to evaluate the accuracy and execution time of the method. The method was evaluated using ten different pushbroom images, 5 million SCPs, and a limited number of SCOPs. The Root Mean Square Error (RMSE) was on the order of $10^{-9}$ pixels, indicating very high accuracy. Furthermore, the proposed approach is more robust than previous well-known BSS/BSD methods across various pushbroom images, and its high speed (only 2–3 s) makes it suitable for practical and real-time applications.

1. Introduction

Over the past few decades, linear pushbroom cameras have become increasingly popular in various applications of remote sensing and photogrammetry [1], including global and topographic mapping, environmental monitoring, change detection, geological survey, target detection, and three-dimensional (3D) reconstruction [2,3]. Linear array pushbroom cameras can provide high-resolution panchromatic or multispectral satellite images with a wider field of view and high revisit frequency, making it easier to map at different scales [4,5].

However, processing linear pushbroom images is more complex than processing frame-type images [5,6,7]. In linear pushbroom imaging sensors, the two-dimensional (2D) image scene is formed by capturing sequential one-dimensional (1D) scanlines at different instants of time, each with its own Exterior Orientation Parameters (EOPs) [8,9]. This dynamic imaging geometry complicates the transformation from 3D ground space to 2D image space [10,11]. Unlike frame-type images, where each image has a single set of EOPs, the position and orientation of the perspective center of a pushbroom image vary from line to line [12,13]. Because of this line-by-line variation, the exposure time, and hence the EOPs, corresponding to a given ground point must first be determined. This problem is called Best Scanline Search/Determination (BSS/BSD) and is also known as determining the precise time of exposure [14,15]. Collinearity Equation (CE)-based object-to-image transformation requires the EOPs of the corresponding scanline, which are not known in advance for a ground point in a pushbroom image [16,17,18]. This transformation is fundamental to the geometric processing of linear pushbroom images, including DTM generation [19], image matching [19], epipolar resampling [20], stereoscopic measurements, and orthorectification [21,22].

A great deal of research has addressed object-to-image space transformation for pushbroom images through BSS. Several methods have been proposed to solve this problem, including the Sequential Search (SS), the Bisecting Window Search (BWS) [23], the Newton–Raphson (NR) method [24], the Central Perspective Plane (CPP) [25], the General Distance Prediction (GDP) [19], the Optimal Global Polynomial (OGP) using the Genetic Algorithm (GA) [21], and the Artificial Neural Network (ANN) [21].

In the SS, each ground point is back-projected from object space to image space using the EOPs of every image scanline, and the first component of the CE is evaluated; the scanline for which this value is zero, or closest to zero, is taken as the best scanline. The BWS greatly reduces the search space of the SS: starting from the first and last scanlines of the image, it repeatedly halves the search window according to the first component of the CE until the best scanline is reached.

The NR finds the root of the first component of the CE by exploiting the inherent characteristics of linear array pushbroom images; however, it still requires evaluating the CE for each ground point throughout the iterative process. The CPP, introduced for linear array aerial images, identifies the best scanline by determining the CPP corresponding to each ground point through an iterative solution of geometric equations. In its final, accuracy-improvement stage, the CE is also used iteratively, albeit with fewer iterations.

The GDP approach back-projects each ground point to image space through the CE using the EOPs of the first scanline. The GDP, which measures the distance between the projected image point and the first line of the image, is then computed and used to update the scanline estimate through specific mathematical formulations. This iterative process continues until a predetermined stopping criterion is satisfied, and the best scanline for the ground point is then identified by linear interpolation. Because this approach relies on an iterative framework with multiple interpolations, it has a moderate computational cost.
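To make the SS, BWS, and NR strategies concrete, the following minimal Python sketch applies them to a hypothetical toy pushbroom geometry: a satellite moving along the object X axis at constant height with a slowly drifting pitch angle, and a detector line along the image y axis, so that the best scanline is where the first CE component x vanishes. The constants and helper names (`eops`, `ce_first_component`, `ground_point_on_line`, and the search functions) are illustrative assumptions, not the sensor models or code of the cited works. Each call to `ce_first_component` stands for one CE evaluation, which is the per-point cost these methods pay.

```python
import numpy as np

# --- Hypothetical toy pushbroom geometry (illustration only) ---
F = 1.0                  # focal length (arbitrary units)
N_LINES = 10_000         # number of scanlines in the image
V = 0.7                  # along-track ground advance per scanline (m)
H = 500_000.0            # flying height above the datum (m)
PHI0, DPHI = 0.01, 1e-7  # pitch at scanline 0 and its drift per scanline (rad)


def eops(i):
    """Perspective centre and pitch angle of (possibly fractional) scanline i."""
    return np.array([V * i, 0.0, H]), PHI0 + DPHI * i


def ce_first_component(i, ground):
    """Along-track image coordinate x from the CE using the EOPs of scanline i."""
    centre, phi = eops(i)
    dX, _, dZ = ground - centre
    # Rotation about the Y axis: r1 = (cos, 0, -sin), r3 = (sin, 0, cos).
    num = np.cos(phi) * dX - np.sin(phi) * dZ
    den = np.sin(phi) * dX + np.cos(phi) * dZ
    return -F * num / den


def ground_point_on_line(i, y=300.0, z=0.0):
    """Ground point whose first CE component is exactly zero on scanline i."""
    centre, phi = eops(i)
    return np.array([centre[0] + np.tan(phi) * (z - centre[2]), y, z])


def sequential_search(ground):
    """SS: evaluate the CE on every scanline and keep the one closest to x = 0."""
    return min(range(N_LINES), key=lambda i: abs(ce_first_component(i, ground)))


def bisecting_window_search(ground):
    """BWS: halve the [first, last] window, keeping a sign change of x inside it.

    Assumes the root is bracketed by the first and last scanlines."""
    lo, hi = 0, N_LINES - 1
    x_lo = ce_first_component(lo, ground)
    while hi - lo > 1:
        mid = (lo + hi) // 2
        x_mid = ce_first_component(mid, ground)
        if np.sign(x_mid) == np.sign(x_lo):
            lo, x_lo = mid, x_mid
        else:
            hi = mid
    x_hi = ce_first_component(hi, ground)
    return lo if abs(x_lo) <= abs(x_hi) else hi


def newton_raphson(ground, i0=0.0, tol=1e-9, max_iter=50, h=1e-3):
    """NR: find the root of x(i) = 0 using a central-difference derivative."""
    i = float(i0)
    for _ in range(max_iter):
        x = ce_first_component(i, ground)
        dx_di = (ce_first_component(i + h, ground)
                 - ce_first_component(i - h, ground)) / (2 * h)
        step = x / dx_di
        i -= step
        if abs(step) < tol:
            break
    return i


g = ground_point_on_line(4321.37)
print(sequential_search(g), bisecting_window_search(g), newton_raphson(g))
```

In this toy setting, SS needs one CE evaluation per scanline (10,000 here), BWS on the order of $\log_2 N$ evaluations, and NR only a few iterations of three evaluations each. BWS returns an integer scanline, whereas NR refines a fractional one.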
Within the OGP and ANN frameworks, the best scanline is determined through a three-step procedure. First, the BSS is executed for many simulated points to compute approximate scanline values. Second, these points are transferred from object space to image space using the approximate scanline values, their positions are refined in image space, and a model is established that relates the final scanline values of these points to the computed approximate values. Third, this model is applied to other points without transferring them between the two spaces and independently of the CE. In the second step, the GA (for the OGP) and the ANN are employed separately to build this model.

However, all of these methods except the OGP and ANN require repeated, complex CE-based iterations with a high computational cost, which makes them inefficient, time-consuming, and unsuitable for practical applications. The authors of [21] proposed these two non-iterative BSD approaches, which do not use the CE. Although these methods provide good accuracy in less time than earlier methods, their accuracy and execution time depend heavily on the GA and ANN parameters and procedures, and they also require a number of control points, called Simulated Control Points (SCOPs), in the training phase.

As current BSS/BSD solutions remain computationally demanding, time-consuming, and unsuitable for real-time tasks, faster, non-iterative, and simpler methods are needed. Since Machine Learning (ML) methods offer simple implementation and high accuracy [26], this paper proposes a novel, fast, non-iterative BSD approach that takes a new look at the regression model as an ML method. The approach removes the need for iterative search and for the CE in the BSD process, significantly reducing the required time. Previous methods often involved iterative procedures or the CE, which made the object-to-image transformation time-consuming; in contrast, the proposed single-step Linear Regression Model (LRM) is fast and requires very little time. The method involves two main steps: a training step and a test step. In the training step, a few SCOPs are used to obtain the regression model parameters. In the test step, the BSD of a separate set of Simulated Check Points (SCPs) is performed through the fitted regression model without any CE iterations, resulting in a very short processing time. Ten pushbroom satellite images were used to evaluate the performance of the proposed method, which was also compared with previous methods, namely the BWS, NR, OGP, and ANN.

The rest of this paper is organized as follows. The relevant mathematical models, dataset description, and explanation of the proposed method are included in Section 2. The experimental results and accuracy assessments are provided in Section 3. Further discussion is given in Section 4. Finally, the conclusions are outlined in Section 5.

2. Materials and Methods

This section describes the datasets used, the theoretical foundations, and the proposed method. Figure 1 shows the flowchart of the proposed BSD method, which is based on a regression model and has two main stages: a training stage that uses SCOPs and an evaluation stage in which BSD is performed on SCPs. Section 2.3 provides further details on the generation of SCOPs and SCPs and on the proposed method, and Table 1 gives an overview of the method.

Figure 1.

The proposed BSD approach’s flowchart.

Table 1.

An overview of the proposed method.

2.1. Dataset Description

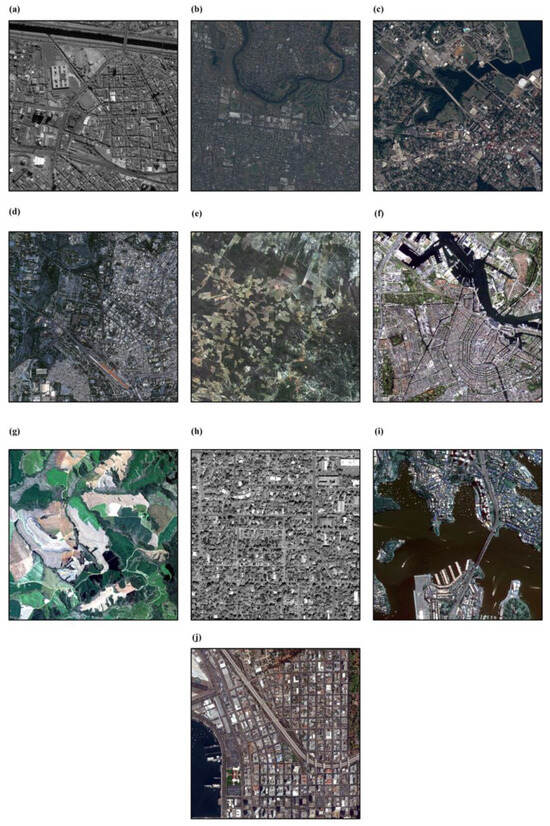

As reported in Table 2, the experimental datasets comprise ten satellite pushbroom images acquired by various sensors: IKONOS, Pleiades 1A and 1B, QuickBird, SPOT6, SPOT7, WorldView 1, and WorldView 2. The ten images were selected to cover a variety of sensor characteristics, including image dimensions and spatial resolution, as well as diverse land cover and topography, such as urban areas, flat terrain, agricultural regions, and combinations thereof, for a more thorough assessment of the proposed BSD (see Figure 2). The spatial resolution of these images ranges from 0.5 to 6 m. All images were accompanied by Rational Polynomial Coefficient (RPC) files, from which the necessary Ground Control Points (GCPs) were derived. The elevations of the GCPs and the average height of each study area were obtained from available Digital Elevation Model (DEM) resources.

Table 2.

Specifications of the experimental datasets.

Figure 2.

Employed images with different coverage area: (a) SPI, (b) MP, (c) AP, (d) JQB, (e) JS, (f) AS, (g) CS, (h) BWV, (i) SWV, and (j) SDWV.

2.2. The Collinearity Equation (CE)

From the photogrammetric point of view, the relationship between the 2D image space and the 3D ground space is established using mathematical models [21,27]. The CE is a well-known mathematical model based on the geometry of the image at the time of imaging, which has been widely used for pushbroom images [4,28]. In the case of pushbroom images, the extended CE can be expressed as Equation (1).
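For reference, a widely used form of the extended CE is sketched below in the notation defined after it; the rotation-matrix layout and sign convention are assumptions and may differ in detail from the authors' Equation (1). Under the usual assumption that the detector line lies along the image $y$ axis, the along-track coordinate $x$ vanishes for a point imaged on scanline $i$, which is why BSS methods test the first component against zero.

$$
\begin{aligned}
x &= -f\,\frac{r_{11}^{i}(X - X_S^{i}) + r_{12}^{i}(Y - Y_S^{i}) + r_{13}^{i}(Z - Z_S^{i})}{r_{31}^{i}(X - X_S^{i}) + r_{32}^{i}(Y - Y_S^{i}) + r_{33}^{i}(Z - Z_S^{i})}\\[4pt]
y &= -f\,\frac{r_{21}^{i}(X - X_S^{i}) + r_{22}^{i}(Y - Y_S^{i}) + r_{23}^{i}(Z - Z_S^{i})}{r_{31}^{i}(X - X_S^{i}) + r_{32}^{i}(Y - Y_S^{i}) + r_{33}^{i}(Z - Z_S^{i})}
\end{aligned}
\tag{1}
$$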

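where $(x, y)$ are the 2D image coordinates of an arbitrary point, $f$ is the focal length, $(X, Y, Z)$ are the 3D ground coordinates of that point, $i$ refers to the scanline number, and $(X_S^{i}, Y_S^{i}, Z_S^{i})$ and $(r_{11}^{i}, \ldots, r_{33}^{i})$ are, respectively, the position of the perspective center in object space and the elements of the rotation matrix formed from the rotation angles $\omega_i$, $\varphi_i$, and $\kappa_i$ (together, the EOPs of scanline $i$).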

According to Equation (1), reliable EOPs are necessary to use the CE. Therefore, given the dynamic nature of pushbroom images, a space resection method is required to obtain the EOPs of all scanlines. In this study, the Multiple Projection Center (MPC) model was used in the space resection step; its equations are given in Equation (2) [12,29].
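As a sketch, assuming (as is common for MPC-type models) that each EOP varies as a second-order polynomial of the scanline time $t_i$ around the reference scanline, the model can be written as follows. The coefficients $a_1, \ldots, g_2$ are hypothetical names, and the polynomial order actually used in this study may differ.

$$
\begin{aligned}
X_S^{i} &= X_0 + a_1 t_i + a_2 t_i^{2}, &\qquad \omega_i &= \omega_0 + d_1 t_i + d_2 t_i^{2},\\
Y_S^{i} &= Y_0 + b_1 t_i + b_2 t_i^{2}, &\qquad \varphi_i &= \varphi_0 + e_1 t_i + e_2 t_i^{2},\\
Z_S^{i} &= Z_0 + c_1 t_i + c_2 t_i^{2}, &\qquad \kappa_i &= \kappa_0 + g_1 t_i + g_2 t_i^{2}.
\end{aligned}
\tag{2}
$$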

where $t_i$ is the exposure time of the i-th scanline (equivalent to the satellite's along-track coordinate); $X_0, Y_0, \ldots, \kappa_0$ are the EOPs of the reference scanline, determined during the space resection phase; and $X_S^{i}, Y_S^{i}, \ldots, \kappa_i$ are the EOPs of the i-th scanline.

2.3. Proposed Method

2.3.1. SCOPs and SCPs Generation

As previously mentioned, the proposed method comprises two key phases—a training step and a testing step—utilizing two distinct groups of points: Simulated Control Points (SCOPs) and Simulated Check Points (SCPs). While both sets are generated in a similar manner, SCOPs are utilized during the training phase with a limited number of points, whereas SCPs are employed in the testing phase with a substantially larger quantity of points. To generate the points, regular grids are created separately in the image space for both SCOPs and SCPs, giving them image coordinates based on where they are placed in the grid. Then, the image-simulated points are transferred to the object space using the CE, the study area’s average height (determined from real GCPs), and the EOPs of all scanlines obtained through the MPC model. This results in sets of SCOPs and SCPs with known image coordinates as well as object coordinates, which are ready for processing and the evaluation of BSD.
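The generation procedure can be illustrated with a minimal Python sketch. It assumes a hypothetical toy sensor: `eops()` with a linear along-track position and a slowly drifting roll angle stands in for the EOPs that the study obtains from the MPC model, and the constants, function names, and grid sizes are illustrative only (far fewer SCPs than the five million used in the experiments). A regular grid of (row, column) image positions is projected to the plane of the average height through the inverse CE.

```python
import numpy as np

# --- Hypothetical toy sensor (illustration only, not the paper's data) ---
F = 1.0                       # focal length
PIXEL = 1e-6                  # detector pixel size in the same units as F
N_LINES, N_COLS = 8000, 6000  # image size (scanlines x detectors)
V, H = 0.7, 500_000.0         # ground advance per scanline and flying height (m)


def eops(i):
    """Hypothetical MPC-style EOPs of scanline i: position and roll angle omega."""
    centre = np.array([V * i, 0.0, H])
    omega = 1e-3 + 1e-11 * i ** 2   # slowly drifting roll (rad)
    return centre, omega


def rotation(omega):
    """Rotation matrix about the X axis."""
    c, s = np.cos(omega), np.sin(omega)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])


def ground_to_image(i, ground):
    """CE: project a ground point with the EOPs of scanline i, returning (x, y)."""
    centre, omega = eops(i)
    p = rotation(omega) @ (ground - centre)
    return -F * p[0] / p[2], -F * p[1] / p[2]


def image_to_ground(row, col, z_mean):
    """Inverse CE: intersect the image ray of (row, col) with the plane Z = z_mean."""
    centre, omega = eops(row)
    y = (col - N_COLS / 2) * PIXEL                     # x = 0 on the scanline itself
    ray = rotation(omega).T @ np.array([0.0, y, -F])   # ray direction in object space
    scale = (z_mean - centre[2]) / ray[2]
    return centre + scale * ray


def simulate_points(n_rows, n_cols, z_mean):
    """Regular image grid -> array of (row, col, X, Y, Z) simulated points."""
    rows = np.linspace(0, N_LINES - 1, n_rows)
    cols = np.linspace(0, N_COLS - 1, n_cols)
    return np.array([(r, c, *image_to_ground(r, c, z_mean))
                     for r in rows for c in cols])


scops = simulate_points(5, 10, z_mean=120.0)    # 50 SCOPs for training
scps = simulate_points(100, 100, z_mean=120.0)  # 10,000 SCPs for testing
```

Because every simulated point keeps the row it was generated from, that row serves as the exact scanline value against which the BSD result can later be checked.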

2.3.2. Linear Regression

One of the simplest and most widely used methods among statistical and machine learning algorithms is the linear regression model [30,31]. Linear regression is a method used to establish the linear relationship between dependent and independent variables [32,33].

In regression models, the independent variables predict the dependent variables [31]. The regression model with a single independent variable is known as Simple Linear Regression (SLR) [32]. The formula for SLR is given by Equation (3):
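In standard notation, consistent with the symbols defined below, the SLR model is:

$$
y = \beta_0 + \beta_1 x
\tag{3}
$$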

where $y$ is the dependent variable, $x$ is the independent variable, and $\beta_0$ and $\beta_1$ are the intercept and the coefficient of the linear term, respectively.

The goal of Multivariate Linear Regression (MLR) is to model the linear relationship between one or more independent variables and a dependent variable [32]. The MLR model is given by Equation (4):
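In standard notation, with $k$ independent variables (the symbol $k$ is an assumption) and a leading column of ones in $\mathbf{X}$ for the intercept, the MLR model can be written as follows. For the LRM–BSD described in Section 2.3.4, this corresponds to $k = 2$ with $x_1 = X$, $x_2 = Y$, and $y = r$.

$$
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k,
\qquad
\mathbf{y} = \mathbf{X}\boldsymbol{\beta}
\tag{4}
$$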

where $y$ is the single dependent variable, $(\beta_0, \ldots, \beta_k)$ are the coefficients forming the vector $\boldsymbol{\beta}$, and $(x_1, \ldots, x_k)$ are the independent variables forming the matrix $\mathbf{X}$.

Finally, the least squares method (LSM), Equation (5), is used to find the coefficients of the line or curve that best fits the data by minimizing the sum of squared residuals [34,35].
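With the observations stacked in the vector $\mathbf{y}$ and the design matrix $\mathbf{X}$, the standard least-squares estimate, assuming $\mathbf{X}^{\mathsf T}\mathbf{X}$ is invertible, is:

$$
\hat{\boldsymbol{\beta}}
= \arg\min_{\boldsymbol{\beta}} \lVert \mathbf{y} - \mathbf{X}\boldsymbol{\beta} \rVert^{2}
= \left(\mathbf{X}^{\mathsf T}\mathbf{X}\right)^{-1}\mathbf{X}^{\mathsf T}\mathbf{y}
\tag{5}
$$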

2.3.3. Polynomial Regression Model (PRM)

Polynomial regression is a type of multiple regression in which the relationship is modeled with an $n$-th degree polynomial. It is used when the relationship between the independent and dependent variables is curvilinear [36]. The general PR model is given by Equation (6) [37].
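In the notation defined below, the usual single-variable form of the PR model is:

$$
y = \beta_0 + \beta_1 x + \beta_2 x^{2} + \cdots + \beta_n x^{n}
\tag{6}
$$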

In Equation (6), $y$ is the dependent variable, $x$ is the independent variable, $(\beta_0, \ldots, \beta_n)$ are the regression coefficients, and $n$ is the degree (order) of the polynomial. With multiple independent variables, Equation (6) can be written in matrix form with an $\mathbf{X}$ matrix, as in Equation (4), but containing the polynomial terms up to degree $n$.

2.3.4. Linear Regression BSD Model

The Linear Regression Model (LRM)–BSD consists of two steps: a training step and a test step. In the training step, the SCOPs, whose ground and image coordinates are known, are used to estimate the unknown parameters of the MLR model by the LSM. The model takes the ground coordinates (X, Y) as input and the row (scanline) number r as the target, thereby establishing the transformation from ground space to image space. In the test step, the SCPs play the role of unknown ground points: the MLR model is applied to their ground coordinates (X, Y) to estimate their row values, and the accuracy is assessed using the Root Mean Square Error (RMSE). For a more thorough evaluation, the PRM is also applied within the proposed method using the same SCOPs and SCPs; its two steps and accuracy assessment are the same as for the MLR model.
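The two steps can be sketched in Python with NumPy as follows. This is a minimal illustration, not the authors' implementation: `fit_lrm` and `predict_rows` are hypothetical names, and the SCOP/SCP data come from an assumed, exactly affine ground-to-row relation (`hypothetical_row`) in UTM-like coordinates rather than from real images. The training step estimates the three unknowns of $r = b_0 + b_1 X + b_2 Y$ by least squares; the test step is a single vectorized evaluation with no CE and no iteration.

```python
import numpy as np


def fit_lrm(X, Y, rows):
    """Training step: least-squares fit of r = b0 + b1*Xn + b2*Yn (three unknowns)."""
    centre = np.array([X.mean(), Y.mean()])
    scale = np.array([X.std(), Y.std()])
    Xn, Yn = (X - centre[0]) / scale[0], (Y - centre[1]) / scale[1]
    A = np.column_stack([np.ones_like(Xn), Xn, Yn])   # design matrix
    coeffs, *_ = np.linalg.lstsq(A, rows, rcond=None)
    return coeffs, centre, scale


def predict_rows(model, X, Y):
    """Test step: evaluate the fitted LRM, with no CE and no iteration."""
    coeffs, centre, scale = model
    Xn, Yn = (X - centre[0]) / scale[0], (Y - centre[1]) / scale[1]
    return coeffs[0] + coeffs[1] * Xn + coeffs[2] * Yn


def hypothetical_row(X, Y):
    """Assumed, exactly affine ground-to-row relation used only to make test data."""
    return 10.0 + 0.5 * (X - 350_000.0) + 0.02 * (Y - 4_100_000.0)


rng = np.random.default_rng(0)
# 50 SCOPs (training) and 200,000 SCPs (testing) in UTM-like coordinates (m)
X_scop = rng.uniform(350_000, 356_000, 50)
Y_scop = rng.uniform(4_100_000, 4_106_000, 50)
X_scp = rng.uniform(350_000, 356_000, 200_000)
Y_scp = rng.uniform(4_100_000, 4_106_000, 200_000)

model = fit_lrm(X_scop, Y_scop, hypothetical_row(X_scop, Y_scop))
err = predict_rows(model, X_scp, Y_scp) - hypothetical_row(X_scp, Y_scp)
rmse = np.sqrt(np.mean(err ** 2))
drmax = np.max(np.abs(err))
print(f"RMSE = {rmse:.2e} rows, drmax = {drmax:.2e} rows")
```

Normalizing X and Y is an addition of this sketch that keeps the design matrix well conditioned for map coordinates in the hundreds of thousands of meters. Because the synthetic rows are exactly affine in (X, Y), the near-zero errors only confirm that the code is self-consistent; they are not the results reported in this study.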

2.3.5. Accuracy Assessment

Several criteria are used to evaluate the efficiency of the proposed method, both against previous research and with respect to its own key parameters: the Root Mean Square Error (RMSE), drmax (the maximum BSD error among all SCPs), and execution time. The RMSE and drmax are obtained by comparing the best scanline value derived from the proposed LRM–BSD approach with the exact scanline value known from the simulation stage. In addition, the number of SCOPs and the suitability of the models are examined to obtain a robust model.
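The two accuracy measures can be computed as in the following minimal sketch, where `bsd_accuracy` is a hypothetical helper and drmax is taken as the largest absolute difference between estimated and exact rows:

```python
import numpy as np


def bsd_accuracy(estimated_rows, exact_rows):
    """Return (RMSE, drmax) of BSD results against the exact simulated rows."""
    err = np.asarray(estimated_rows, dtype=float) - np.asarray(exact_rows, dtype=float)
    rmse = float(np.sqrt(np.mean(err ** 2)))
    drmax = float(np.max(np.abs(err)))  # largest absolute error over all SCPs
    return rmse, drmax


rmse, drmax = bsd_accuracy([100.1, 199.8, 300.2], [100.0, 200.0, 300.0])
print(round(rmse, 4), round(drmax, 4))  # 0.1732 0.2
```

Here the errors are 0.1, -0.2, and 0.2 rows, so the RMSE is $\sqrt{0.09/3} \approx 0.173$ and drmax is 0.2.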

3. Results

The performance of the proposed BSD method is assessed in both LRM and PRM modes. Since the model structure and the number of unknown parameters are known, the number of SCOPs required to solve the model and achieve the desired accuracy can be examined. Four configurations were tested, with 10, 30, 50, and 100 SCOPs per image, and five million SCPs were used for each image. The results are compared with the NR, BWS, ANN, and OGP methods using quantitative measures: the RMSE, computation time, drmax, and the number of required SCOPs.

The experiments were run on an Intel Core i7 processor at 2.90 GHz with HD Graphics 620 and 8 GB of RAM. The effect of the number of SCOPs was analyzed by applying the four groups of SCOPs, and the large number of SCPs provides a more reliable assessment of the robustness of the proposed BSD method. According to Table 3, increasing the number of SCOPs has a negligible effect on the final accuracy in terms of the RMSE and drmax. Likewise, the computation time does not differ significantly between the four groups (around 2 s). This shows that the number of SCOPs, and the difficulty of obtaining them, does not limit the proposed BSD: it achieves very high accuracy (an RMSE on the order of $10^{-9}$ pixels) with only a small number of SCOPs (up to 50) in a very short time.

Table 3.

The results of the proposed BSD by testing different number of SCOPs.

The SPI image contains dense urban buildings, which may explain why its accuracy is lower than that of the other images (by one order of magnitude in the RMSE). Moreover, although the CS image has the lowest resolution in the dataset (6 m), its RMSE reached the order of $10^{-10}$ pixels. Thus, across images with different characteristics, no correlation was found between the final accuracy and the number of SCOPs, land cover, or spatial resolution. This supports the robustness of the proposed BSD across a variety of satellite images.

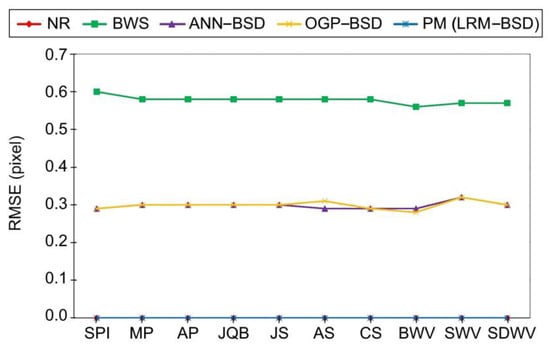

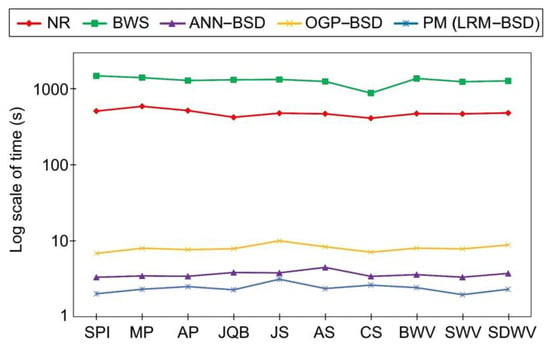

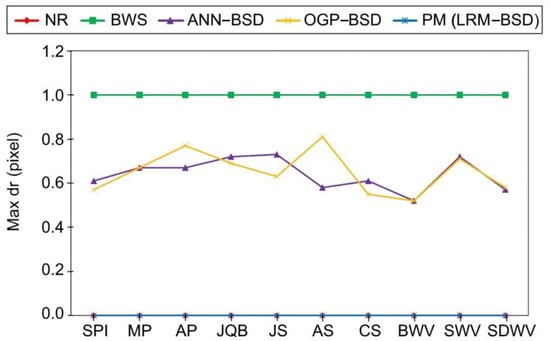

Table 4 reports the comparison of the proposed BSD with the NR, BWS, ANN–BSD, and OGP–BSD methods using quantitative metrics: the RMSE, computation time, drmax, and number of SCOPs. The results of this comparison are also illustrated in Figure 3, Figure 4 and Figure 5 for easier interpretation.

Table 4.

The results of the proposed BSD compared to some previous studies.

Figure 3.

The comparison of the proposed BSD with previous studies in terms of the RMSE. The NR and PM (proposed method) values are very close to zero.

Figure 4.

Comparison of the proposed BSD with previous studies in terms of time.

Figure 5.

The comparison of the proposed BSD with previous studies in terms of drmax. The NR and PM (proposed method) values are very close to zero.

The proposed method requires significantly less time than all the other methods: the NR and BWS require about 250 and 750 times more computation time, respectively. This large reduction is due to the non-iterative procedure of the proposed method, which does not use the CE. In contrast, the NR and BWS require many CE iterations for each ground point, and their accuracy depends on these iterations. Moreover, the BWS cannot achieve sub-pixel accuracy, which is essential for photogrammetric applications, since its drmax is one pixel. The BWS also has the longest computation time because of its large search space and the larger number of CE iterations it requires.

Furthermore, the differences between the RMSE and drmax of the proposed method and those of the NR are negligible (both have RMSE and drmax values on the order of $10^{-9}$ or $10^{-10}$ pixels). Compared with the ANN–BSD and OGP–BSD, the LRM achieves a lower RMSE (higher accuracy) using fewer SCOPs and in less time. The main reason for this difference is that the ANN–BSD and OGP–BSD require more SCOPs than the LRM in the error-modeling phase, and the number and distribution of these SCOPs directly affect the final modeling accuracy and the computation time needed to determine the best scanline reliably.

According to Figure 3, Figure 4 and Figure 5, the NR and LRM have the highest accuracy among the compared methods, with RMSEs of approximately zero, followed by the OGP and ANN with RMSEs of about 0.3 pixels. The BWS has the highest RMSE, 0.6 pixels. The BWS also has the longest computation time, 1300 s, because it must evaluate the CE more often for each ground point across all images. The NR has the second longest time, 500 s, which can also be attributed to the iterative application of the CE, although it requires fewer repetitions than the BWS. In contrast, the OGP has an average execution time of only 7 s, and the ANN and LRM have similar processing times of 2 to 3 s. Notably, the RMSE of the LRM is very close to zero, whereas that of the ANN is approximately 0.3 pixels. The behavior of drmax follows that of the RMSE: the BWS, with a drmax of one pixel, has the largest maximum error; the LRM and NR, with drmax values of approximately zero, lie at the bottom of the graph; and the ANN and OGP lie in between, achieving sub-pixel drmax values of 0.5 to 0.7 pixels. Based on this evidence, the proposed LRM is preferable to previous methods for real-time applications because of its high accuracy, short computation time, and minimal drmax.

4. Discussion

Searching for or determining the best scanline, in order to map object space onto image space, is a key issue when dealing with pushbroom images. Well-known existing methods in this domain commonly rely on the CE, which often leads to significant computational demands due to its intricate nature. To address this challenge, this study introduces a novel approach that reduces computation, minimizes time requirements, and eliminates the need for the CE.

The accuracy and time consumption of a BSS/BSD method directly affect the accuracy and total processing time of photogrammetric products, so the efficiency of the proposed method can be evaluated based on these two factors. As expected, the experiments demonstrated that the LRM–BSD achieved sub-pixel accuracy in the shortest time among the compared methods. This notable decrease in time is attributed to eliminating the per-point use of the CE. In previous BSS methods such as the NR and BWS, transforming ground coordinates to image coordinates required applying the CE iteratively, searching the image space for each projected ground point. Conversely, the proposed method removes the dependence on the CE by determining the best scanline for each ground point through an LRM, without any iterative search. The ANN and OGP also do not depend on the CE; nevertheless, the LRM–BSD is considered more advantageous because of its higher accuracy, its efficiency, and the smaller number of SCOPs it requires. The RMSE achieved by the LRM is suitable for photogrammetric applications, such as orthorectification, with low computation time for many points.

Since SCOPs are pivotal for training and computing the LRM parameters, their distribution and precision substantially affect the final accuracy of the proposed algorithm as measured on the SCPs. In the simulation of SCOPs and SCPs, the sole source of error is inaccuracy in the EOPs. Because these parameters were estimated from real GCPs through the MPC model, any errors in the GCPs propagate into the EOPs. Furthermore, the LRM itself carries a degree of error and uncertainty, which is a potential source of methodological error. Nonetheless, given the achieved RMSE and drmax, the influence of these error sources on the final accuracy can be considered negligible.

As mentioned earlier, providing precise SCOPs with a suitable distribution across each image leads to robust accuracy of the proposed method. With this in mind, the uncertainty of the LRM outputs was assessed in terms of two key parameters: drmax and the RMSE (overall accuracy). Based on the results for all images, the proposed method exhibits a minimal degree of uncertainty, which is advantageous. Thus, given its improved accuracy metrics compared with previous studies, the LRM is superior for photogrammetric applications.

In this study, the space resection phase to obtain EOPs preceded the LRM–BSD; if EOPs are available from other sources, this step can be omitted. Conversely, the LRM–BSD can also be used when EOPs are not provided, since they can be estimated by space resection; in that case, real GCPs must be established in addition to preparing a limited number of SCOPs for the training phase. This flexibility means the algorithm does not rely on a particular source of EOPs. Moreover, the LRM–BSD requires fewer SCOPs than the ANN and OGP BSD methods because its model has a fixed, small number of unknown parameters (three, based on the LRM structure). Although increasing the number of SCOPs did not significantly change the computation time or the RMSE, using a smaller number is preferable given the practical limits on providing SCOPs.

A further investigation using the PRM–BSD yielded the same accuracy as the LRM–BSD. The coefficients of the higher-order (nonlinear) terms were close to zero, indicating that the LRM is adequate for solving the BSD problem.
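The kind of check described above can be sketched in Python by fitting a second-order polynomial surface and inspecting the nonlinear coefficients. This is an illustration under stated assumptions, not the authors' procedure: `poly2_design` and `hypothetical_row` are hypothetical names, the 50 SCOPs are synthetic, and because the synthetic rows are exactly affine the quadratic coefficients vanish by construction, so the output only shows how the check is performed.

```python
import numpy as np


def poly2_design(Xn, Yn):
    """Second-order polynomial terms: 1, X, Y, X^2, XY, Y^2."""
    return np.column_stack([np.ones_like(Xn), Xn, Yn, Xn ** 2, Xn * Yn, Yn ** 2])


def hypothetical_row(X, Y):
    """Assumed affine ground-to-row relation (illustration only)."""
    return 10.0 + 0.5 * (X - 350_000.0) + 0.02 * (Y - 4_100_000.0)


rng = np.random.default_rng(1)
X = rng.uniform(350_000, 356_000, 50)          # 50 hypothetical SCOPs
Y = rng.uniform(4_100_000, 4_106_000, 50)
Xn = (X - X.mean()) / X.std()                   # normalize to keep X^2, Y^2 well scaled
Yn = (Y - Y.mean()) / Y.std()

coeffs, *_ = np.linalg.lstsq(poly2_design(Xn, Yn), hypothetical_row(X, Y), rcond=None)
print("linear terms:", coeffs[:3])
print("higher-order terms:", coeffs[3:])
```

With real SCOPs, higher-order coefficients that remain negligible relative to the linear ones would support the adequacy of the LRM, as reported above.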

5. Conclusions

This paper presents a BSD approach for object-to-image transformation of satellite pushbroom images based on the LRM, comprising training and test phases that use SCOPs and SCPs, respectively. The method is easy to implement and does not depend on iterative use of the CE. The accuracy assessment used four groups of SCOPs (10, 30, 50, and 100 points per image) along with five million SCPs. The LRM–BSD was also compared with the BWS, NR, ANN, and OGP methods in terms of the RMSE, drmax, and execution time. The experimental results indicate that the proposed LRM–BSD is faster than the other methods and achieves better sub-pixel accuracy. The LRM is 250 times faster than the NR, while both achieve the same level of accuracy, with RMSEs very close to zero pixels. The BWS cannot achieve accuracies better than 0.5 pixels based on the obtained drmax and requires a long execution time, 1300 s on average. The LRM also achieves much better accuracy than the ANN and OGP (whose RMSE values range from 0.28 to 0.32 pixels) in less time and with fewer SCOPs: these two methods require between 3 and 8 s and 500 SCOPs, whereas the LRM takes 2 s on average and needs at most 50 SCOPs. The proposed LRM has potential applications in orthophoto generation, epipolar resampling, and similar tasks, which will be the focus of future research. Applying the LRM to different aerial pushbroom images is also suggested to further confirm these findings.

Author Contributions

Conceptualization, S.S.A.N., M.J.V.Z. and E.G.; methodology, S.S.A.N.; software, S.S.A.N.; validation, S.S.A.N. and M.J.V.Z.; formal analysis, S.S.A.N. and M.J.V.Z.; investigation, S.S.A.N.; resources, S.S.A.N.; data curation, S.S.A.N.; writing—original draft preparation, S.S.A.N.; writing—review and editing, M.J.V.Z., F.Y. and E.G.; visualization, S.S.A.N. and E.G.; supervision, M.J.V.Z.; project administration, M.J.V.Z.; funding acquisition, F.Y. and E.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The satellite images to develop and evaluate the proposed method were freely downloaded from https://intelligence.airbus.com/ (Airbus-intelligence) and https://apollomapping.com/ (Apollo Mapping).

Acknowledgments

The authors express their gratitude to Airbus-intelligence and Apollo Mapping for supplying pushbroom satellite images. Additionally, the authors appreciate the valuable feedback received from the anonymous peer reviewers.

Conflicts of Interest

Ebrahim Ghaderpour is the CEO of Earth and Space Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| AP | image of Annapolis, Pleiades |
| AS | image of Amsterdam, SPOT |
| BSD | Best Scanline Determination |
| BSS | Best Scanline Search |
| BWS | Bisecting Window Search |
| BWV | image of Boulder, WorldView |
| CE | Collinearity Equation |
| CPP | Central Perspective Plane |
| CS | image of Curitiba, SPOT |
| DEM | Digital Elevation Model |
| DTM | Digital Terrain Model |
| EOPs | Exterior Orientation Parameters |
| GA | Genetic Algorithm |
| GCPs | Ground Control Points |
| GDP | General Distance Prediction |
| IOPs | Interior Orientation Parameters |
| JQB | image of Jaipur, QuickBird |
| JS | image of Jaicos, SPOT |
| LR | Linear Regression |
| LRM | Linear Regression Model |
| LSM | Least Squares Method |
| MLR | Multivariate Linear Regression |
| MP | image of Melbourne, Pleiades |
| MPC | Multiple Projection Center |
| NR | Newton–Raphson |
| OGP | Optimal Global Polynomial |
| PR | Polynomial Regression |
| PRM | Polynomial Regression Model |
| RAM | Random Access Memory |
| RMSE | Root Mean Square Error |
| RPCs | Rational Polynomial Coefficients |
| SCOPs | Simulated Control Points |
| SCPs | Simulated Check Points |
| SDWV | image of San Diego, WorldView |
| SLR | Simple Linear Regression |
| SPI | image of Sao Paulo, IKONOS |
| SS | Sequential Search |
| SWV | image of Sydney, WorldView |

References

- Gong, D.; Han, Y.; Zhang, L. Quantitative Assessment of the Projection Trajectory-Based Epipolarity Model and Epipolar Image Resampling for Linear-Array Satellite Images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 89–94.

- Gong, K. New Methods for 3D Reconstructions Using High Resolution Satellite Data. Ph.D. Thesis, University of Stuttgart, Stuttgart, Germany, 2021. Available online: http://elib.uni-stuttgart.de/bitstream/11682/11470/1/PhD_thesis_Ke_Gong.pdf (accessed on 18 October 2022).

- Wang, M.; Hu, J.; Zhou, M.; Li, J.M.; Zhang, Z. Geometric Correction of Airborne Linear Array Image Based on Bias Matrix. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL–1/W1, 369–372.

- Koduri, S. Modeling pushbroom scanning systems. In Proceedings of the 2012 14th International Conference on Modelling and Simulation, UKSim 2012, Cambridge, UK, 28–30 March 2012; pp. 402–406.

- Yang, S.; Zhu, S.; Li, Z.; Li, X.; Liu, T.; Wang, J.; Xie, J. Affine & scale-invariant heterogeneous pyramid features for automatic matching of high resolution pushbroom imagery from Chang’e 2 satellite. J. Earth Sci. 2016, 27, 716–726.

- Geng, X.; Xu, Q.; Lan, C.; Hou, Y.; Miao, J.; Xing, S. An Efficient Geometric Rectification Method for Planetary Linear Pushbroom Images Based on Fast Back Projection Algorithm. In Proceedings of the 2018 Fifth International Workshop on Earth Observation and Remote Sensing Applications (EORSA), Xi’an, China, 18–20 June 2018.

- Morgan, M.; Kim, K.O.; Jeong, S.; Habib, A. Epipolar resampling of space-borne linear array scanner scenes using parallel projection. Photogramm. Eng. Remote Sens. 2006, 72, 1255–1263.

- Draréni, J.; Roy, S.; Sturm, P. Plane-based calibration for linear cameras. Int. J. Comput. Vis. 2011, 91, 146–156.

- Jannati, M.; Zoej, M.J.V.; Mokhtarzade, M. Epipolar resampling of cross-track pushbroom satellite imagery using the rigorous sensor model. Sensors 2017, 17, 129.

- Jannati, M.; Zoej, M.J.V.; Mokhtarzade, M. A novel approach for epipolar resampling of cross-track linear pushbroom imagery using orbital parameters model. ISPRS J. Photogramm. Remote Sens. 2018, 137, 1–14.

- Wang, M.; Hu, F.; Li, J. Epipolar resampling of linear pushbroom satellite imagery by a new epipolarity model. ISPRS J. Photogramm. Remote Sens. 2011, 66, 347–355.

- Ahooei Nezhad, S.S.; Valadan Zoej, M.J.; Khoshelham, K.; Ghorbanian, A.; Farnaghi, M.; Jamali, S.; Youssefi, F.; Gheisari, M. Best Scanline Determination of Pushbroom Images for a Direct Object to Image Space Transformation Using Multilayer Perceptron. Remote Sens. 2024, 16, 2787.

- Marsetič, A.; Oštir, K.; Fras, M.K. Automatic orthorectification of high-resolution optical satellite images using vector roads. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6035–6047.

- Geng, X.; Xu, Q.; Xing, S.; Lan, C. A Generic Pushbroom Sensor Model for Planetary Photogrammetry. Earth Sp. Sci. 2020, 7, e2019EA001014.

- Habib, A.F.; Bang, K.I.; Kim, C.J.; Shin, S.W. True ortho-photo generation from high resolution satellite imagery. In Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2006; pp. 641–656.

- Jiang, Y.; Zhang, G.; Li, L.; Tan, Z.; Tang, Z.; Xu, Y. A novel inverse transformation algorithm for pushbroom TDI CCD imaging. Int. J. Remote Sens. 2022, 43, 1074–1090.

- Shen, X.; Wu, G.; Sun, K.; Li, Q. A fast and robust scan-line search algorithm for object-to-image projection of airborne pushbroom images. Photogramm. Eng. Remote Sens. 2015, 81, 565–572.

- Zhang, A.; Hu, S.; Meng, X.; Yang, L.; Li, H. Toward high altitude airship ground-based boresight calibration of hyperspectral pushbroom imaging sensors. Remote Sens. 2015, 7, 17297–17311.

- Geng, X.; Xu, Q.; Xing, S.; Lan, C. A Robust Ground-to-Image Transformation Algorithm and Its Applications in the Geometric Processing of Linear Pushbroom Images. Earth Sp. Sci. 2019, 6, 1805–1830.

- Geng, X.; Xu, Q.; Xing, S.; Lan, C.; Hou, Y. Real time processing for epipolar resampling of linear pushbroom imagery based on the fast algorithm for best scan line searching. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2013, XL–2/W2, 129–131.

- Nezhad, S.S.A.; Zoej, M.J.V.; Ghorbanian, A. A fast non-iterative method for the object to image space best scanline determination of spaceborne linear array pushbroom images. Adv. Sp. Res. 2021, 68, 3584–3593.

- Bang, K.I.; Kim, C. A New True Ortho-photo Generation Algorithm for High Resolution Satellite Imagery. Korean J. Remote Sens. 2010, 26, 347–359.

- Liu, J.; Wang, D. Efficient orthoimage generation from ADS40 level 0 products. J. Remote Sens. 2007, 11, 247.

- Chen, L.C.; Rau, J.Y. A Unified Solution for Digital Terrain Model and Orthoimage Generation from SPOT Stereopairs. IEEE Trans. Geosci. Remote Sens. 1993, 31, 1243–1252.

- Wang, M.; Hu, F.; Li, J.; Pan, J. A fast approach to best scanline search of airborne linear pushbroom images. Photogramm. Eng. Remote Sens. 2009, 75, 1059–1067.

- Al-Rbaihat, R.; Alahmer, H.; Al-Manea, A.; Altork, Y.; Alrbai, M.; Alahmer, A. Maximizing efficiency in solar ammonia–water absorption refrigeration cycles: Exergy analysis, concentration impact, and advanced optimization with GBRT machine learning and FHO optimizer. Int. J. Refrig. 2024, 161, 31–50.

- Huang, R.; Zheng, S.; Hu, K. Registration of aerial optical images with LiDAR data using the closest point principle and collinearity equations. Sensors 2018, 18, 1770.

- Safdarinezhad, A.; Zoej, M.J.V. An optimized orbital parameters model for geometric correction of space images. Adv. Sp. Res. 2015, 55, 1328–1338.

- Zoej, M.J.V. Photogrammetric Evaluation of Space Linear Array Imagery for Medium Scale Topographic Mapping. Ph.D. Thesis, University of Glasgow, Glasgow, UK, 1997. Available online: https://theses.gla.ac.uk/4777/1/1997zoejphd1.pdf (accessed on 18 October 2022).

- Kumari, K.; Yadav, S. Linear regression analysis study. J. Pract. Cardiovasc. Sci. 2018, 4, 33.

- Hope, T.M.H. Linear regression. In Machine Learning: Methods and Applications to Brain Disorders; Academic Press: Cambridge, MA, USA, 2019; pp. 67–81.

- Maulud, D.; Abdulazeez, A.M. A Review on Linear Regression Comprehensive in Machine Learning. J. Appl. Sci. Technol. Trends 2020, 1, 140–147.

- Uyanık, G.K.; Güler, N. A Study on Multiple Linear Regression Analysis. Procedia-Soc. Behav. Sci. 2013, 106, 234–240.

- Ghaderpour, E.; Pagiatakis, S.D.; Hassan, Q.K. A survey on change detection and time series analysis with applications. Appl. Sci. 2021, 11, 6141.

- Filzmoser, P.; Nordhausen, K. Robust linear regression for high-dimensional data: An overview. Wiley Interdiscip. Rev. Comput. Stat. 2021, 13, e1524.

- Ostertagová, E. Modelling using polynomial regression. Procedia Eng. 2012, 48, 500–506.

- Yee, G.P.; Rusiman, M.S.; Ismail, S.; Suparman; Hamzah, F.M.; Shafi, M.A. K-means clustering analysis and multiple linear regression model on household income in Malaysia. IAES Int. J. Artif. Intell. 2023, 12, 731–738.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).