Adapting Static and Contextual Representations for Policy Gradient-Based Summarization

Abstract

1. Introduction

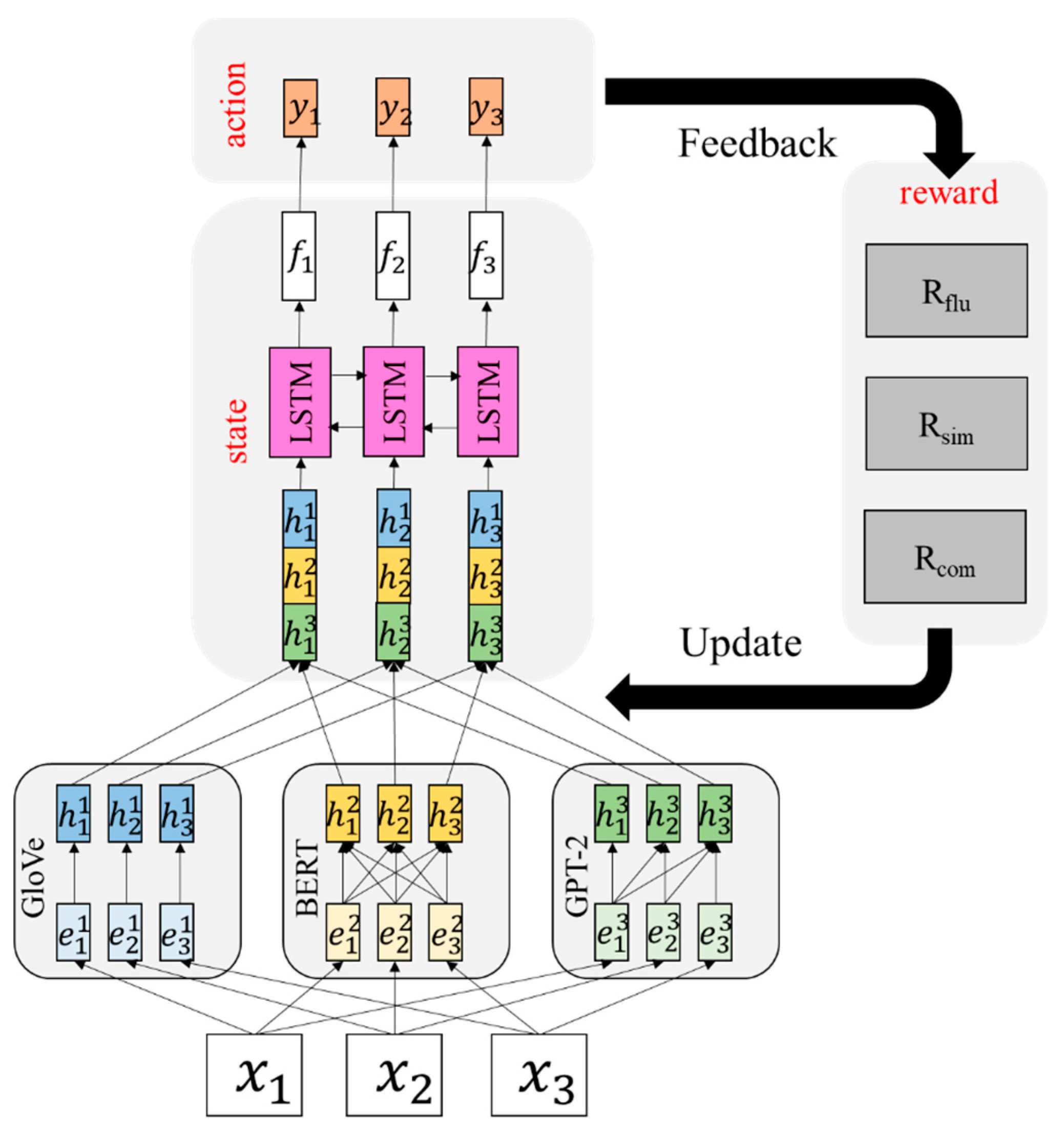

- We propose an automated model for unsupervised extractive text summarization based on a policy gradient reinforcement learning approach. The semantic representations of the text are extracted from static and contextual embeddings, including GloVe-, BERT- and GPT-based models.

- Empirical studies on the Gigaword dataset show that the proposed solution produces reasonable summaries and performs comparably to other state-of-the-art algorithms.

2. Related Work

3. Proposed Method

3.1. The Policy Gradient Reinforcement Learning Architecture

- State: Each document is treated as a state s. Each token is represented by the concatenation of its GloVe, BERT and GPT-2 embeddings. GloVe vectors are static embeddings, whereas BERT and GPT-2 produce contextual (dynamic) embeddings: BERT is trained with masked language modeling and GPT-2 with autoregressive language modeling. The concatenated embeddings are then passed to an LSTM layer that encodes sequential information and captures contextual features, and its outputs form the state representation [35,36].

- Action: In this work, selecting the important words of a sentence is treated as a sequence labelling problem. Given the state representation, the agent's action is to produce a sequence of binary labels, one per word, indicating whether that word is important.

- Reward: We apply three commonly used reward functions to measure the quality of the extracted text: fluency, similarity and compression [14,36,37]. The fluency reward judges whether the generated text is grammatically sound and semantically correct; its score is based on the average perplexity that a language model assigns to the generated text. The similarity reward measures the semantic similarity between the generated summary and the source document to ensure that the content is preserved; we use the cosine similarity between the embeddings of the generated summary and the source document as the similarity score. The compression reward encourages the agent to generate summaries close to a predefined length. Following prior work [14], the compression score is calculated from the length of the generated summary, the target summary length and a hyper-parameter.
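To make the three reward terms concrete, here is a minimal pure-Python sketch. Only the cosine similarity follows the description directly; the other two terms are assumptions. The text does not state how perplexity is turned into a reward or reproduce the compression formula of [14], so `fluency_reward` uses 1/perplexity (the geometric-mean token probability) and `compression_reward` uses a hypothetical Gaussian-shaped penalty whose width `sigma` stands in for the hyper-parameter. `total_reward` and its weights are likewise illustrative, not the authors' code.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Similarity reward: cosine between summary and document embeddings."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # undefined for zero vectors; treat as no similarity
    return dot / (norm_u * norm_v)


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from natural-log token probabilities given by a language model."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def fluency_reward(token_log_probs: Sequence[float]) -> float:
    # ASSUMPTION: reward = 1 / perplexity, so lower perplexity gives a
    # higher reward in (0, 1].
    return 1.0 / perplexity(token_log_probs)


def compression_reward(summary_len: int, target_len: int, sigma: float = 2.0) -> float:
    # HYPOTHETICAL form, not the formula of [14]: equals 1.0 when the summary
    # has the target length and decays as the gap grows; sigma is the
    # stand-in hyper-parameter.
    return math.exp(-((summary_len - target_len) ** 2) / (2.0 * sigma ** 2))


def total_reward(similarity: float, fluency: float, compression: float,
                 weights: tuple = (1.0, 1.0, 1.0)) -> float:
    # ASSUMPTION: the three rewards are combined as a weighted sum.
    ws, wf, wc = weights
    return ws * similarity + wf * fluency + wc * compression
```

For example, if a language model gives each of four tokens probability 0.5, the perplexity is 2 and the fluency reward is 0.5, while a summary of exactly the target length receives a compression reward of 1.0.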

3.2. Training Algorithm

| Algorithm 1: Policy Gradient-Based Summarization Model |
|---|
| Parameters θ for the policy network π. |
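Since only the header of Algorithm 1 survives here, the following is a minimal sketch of a REINFORCE-style policy-gradient step for the binary word-labelling action of Section 3.1; the exact update in Algorithm 1 may differ. It is a toy under stated assumptions: the hypothetical `LogisticTokenPolicy` replaces the LSTM policy network with a single logistic unit over token feature vectors, `reinforce_step` samples one label sequence per call, and the constant `baseline` is an illustrative variance-reduction choice. The update relies on the fact that the gradient of log Bernoulli(a; σ(z)) with respect to the logit z is a − p.

```python
import math
import random
from typing import Callable, List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LogisticTokenPolicy:
    """Toy stand-in for the policy network pi with parameters theta.

    ASSUMPTION: each token is a plain feature vector scored by one logistic
    unit; the LSTM encoder of the actual model is not reproduced here.
    """

    def __init__(self, dim: int) -> None:
        self.w: List[float] = [0.0] * dim  # theta: weights
        self.b: float = 0.0                # theta: bias

    def keep_probs(self, tokens: Sequence[Sequence[float]]) -> List[float]:
        """Probability of label 1 (important word) for each token."""
        return [sigmoid(sum(wj * xj for wj, xj in zip(self.w, x)) + self.b)
                for x in tokens]


def reinforce_step(policy: LogisticTokenPolicy,
                   tokens: Sequence[Sequence[float]],
                   reward_fn: Callable[[List[int]], float],
                   lr: float,
                   rng: random.Random,
                   baseline: float = 0.0) -> float:
    """Sample binary labels, score them, take one gradient-ascent step."""
    probs = policy.keep_probs(tokens)
    labels = [1 if rng.random() < p else 0 for p in probs]  # a ~ pi(.|s)
    reward = reward_fn(labels)
    advantage = reward - baseline
    for x, a, p in zip(tokens, labels, probs):
        # d log Bernoulli(a; sigmoid(z)) / dz = a - p
        g = advantage * (a - p)
        for j, xj in enumerate(x):
            policy.w[j] += lr * g * xj
        policy.b += lr * g
    return reward


def train_toy_policy(steps: int = 2000, seed: int = 0) -> LogisticTokenPolicy:
    """HYPOTHETICAL task: feature +1 marks a word to keep, -1 a word to drop."""
    tokens = [[1.0], [-1.0], [1.0], [-1.0]]
    target = [1, 0, 1, 0]

    def reward_fn(labels: List[int]) -> float:
        # Fraction of words labelled correctly (stand-in for the real rewards).
        return sum(a == t for a, t in zip(labels, target)) / len(target)

    policy = LogisticTokenPolicy(dim=1)
    rng = random.Random(seed)
    for _ in range(steps):
        reinforce_step(policy, tokens, reward_fn, lr=0.2, rng=rng, baseline=0.5)
    return policy
```

After `train_toy_policy()`, the keep probability is high for tokens with feature +1 and low for those with feature -1. In the actual model, the reward would instead come from the fluency, similarity and compression terms.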

4. Experiments and Results

4.1. Dataset

4.2. Evaluation Metric

4.3. Experimental Results

- Lead-8: This simple baseline takes the first eight words of the source document as the summary.

- Contextual Match [40]: This work uses two language models to generate summaries while keeping the output fluent: a generic pre-trained language model performs contextual matching, and a target-domain language model guides the fluency of the generated text.

- AdvREGAN [15]: This method uses cycle consistency to encode the input text and trains a generative adversarial network with reinforcement learning so that the generated text reads like human-written text.

- HC_title_8 [37]: This work extracts words from the input text with a hill-climbing discrete optimization algorithm, targeting a summary length of about eight words.

- HC_title_10 [37]: Identical to HC_title_8 except that the target summary length is about ten words.

- SCRL_L8 [36]: This approach fine-tunes BERT for sentence compression in a reinforcement learning setup.
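The Lead-8 baseline is simple enough to state in a few lines. This sketch assumes words are whitespace-separated tokens, as in the pre-tokenized, lower-cased Gigaword inputs shown in the qualitative examples; `lead_k` is a hypothetical helper name.

```python
def lead_k(document: str, k: int = 8) -> str:
    """Lead-k baseline: return the first k words of the source document.

    ASSUMPTION: words are whitespace-separated tokens.
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    return " ".join(document.split()[:k])
```

Applied to INPUT_1 from the examples, `lead_k` returns "un under-secretary-general for political affairs ibrahim gambari said"; a document shorter than k words is returned unchanged.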

4.4. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Luhn, H.P. The automatic creation of literature abstracts. IBM J. Res. Dev. 1958, 2, 159–165.

2. Ferreira, R.; de Souza Cabral, L.; Lins, R.D.; e Silva, G.P.; Freitas, F.; Cavalcanti, G.D.; Lima, R.; Simske, S.J.; Favaro, L. Assessing sentence scoring techniques for extractive text summarization. Expert Syst. Appl. 2013, 40, 5755–5764.

3. Nallapati, R.; Zhou, B.; Gulcehre, C.; Xiang, B. Abstractive text summarization using sequence-to-sequence RNNs and beyond. arXiv 2016, arXiv:1602.06023.

4. Miller, D. Leveraging BERT for extractive text summarization on lectures. arXiv 2019, arXiv:1906.04165.

5. Zhang, J.; Zhao, Y.; Saleh, M.; Liu, P. PEGASUS: Pre-training with extracted gap-sentences for abstractive summarization. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 11328–11339.

6. Rossiello, G.; Basile, P.; Semeraro, G. Centroid-based text summarization through compositionality of word embeddings. In Proceedings of the MultiLing 2017 Workshop on Summarization and Summary Evaluation Across Source Types and Genres, Valencia, Spain, 3 April 2017; pp. 12–21.

7. Radev, D.R.; Jing, H.; Styś, M.; Tam, D. Centroid-based summarization of multiple documents. Inf. Process. Manag. 2004, 40, 919–938.

8. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014; pp. 1532–1543.

9. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

11. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

12. Ethayarajh, K. How Contextual are Contextualized Word Representations? Comparing the Geometry of BERT, ELMo, and GPT-2 Embeddings. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 55–65.

13. Amplayo, R.K.; Angelidis, S.; Lapata, M. Unsupervised opinion summarization with content planning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2–9 February 2021; Volume 35, pp. 12489–12497.

14. Hyun, D.; Wang, X.; Park, C.; Xie, X.; Yu, H. Generating Multiple-Length Summaries via Reinforcement Learning for Unsupervised Sentence Summarization. arXiv 2022, arXiv:2212.10843.

15. Wang, Y.; Lee, H.Y. Learning to Encode Text as Human-Readable Summaries using Generative Adversarial Networks. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4187–4195.

16. Pasunuru, R.; Celikyilmaz, A.; Galley, M.; Xiong, C.; Zhang, Y.; Bansal, M.; Gao, J. Data augmentation for abstractive query-focused multi-document summarization. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2–9 February 2021; Volume 35, pp. 13666–13674.

17. Wang, H.; Wang, X.; Xiong, W.; Yu, M.; Guo, X.; Chang, S.; Wang, W.Y. Self-supervised learning for contextualized extractive summarization. arXiv 2019, arXiv:1906.04466.

18. Liu, L.; Lu, Y.; Yang, M.; Qu, Q.; Zhu, J.; Li, H. Generative adversarial network for abstractive text summarization. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

19. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

20. Sharma, G.; Sharma, D. Automatic Text Summarization Methods: A Comprehensive Review. SN Comput. Sci. 2022, 4, 33.

21. Sharma, G.; Gupta, S.; Sharma, D. Extractive text summarization using feature-based unsupervised RBM Method. In Cyber Security, Privacy and Networking: Proceedings of ICSPN 2021; Springer Nature Singapore: Singapore, 2022; pp. 105–115.

22. Liu, Y. Fine-tune BERT for extractive summarization. arXiv 2019, arXiv:1903.10318.

23. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 27.

24. Ma, X.; Keung, J.W.; Yu, X.; Zou, H.; Zhang, J.; Li, Y. AttSum: A Deep Attention-Based Summarization Model for Bug Report Title Generation. IEEE Trans. Reliab. 2023; early access.

25. Mendes, A.; Narayan, S.; Miranda, S.; Marinho, Z.; Martins, A.F.; Cohen, S.B. Jointly Extracting and Compressing Documents with Summary State Representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 3955–3966.

26. Lebanoff, L.; Song, K.; Dernoncourt, F.; Kim, D.S.; Kim, S.; Chang, W.; Liu, F. Scoring Sentence Singletons and Pairs for Abstractive Summarization. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2175–2189.

27. See, A.; Liu, P.J.; Manning, C.D. Get To The Point: Summarization with Pointer-Generator Networks. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, Canada, 30 July–4 August 2017; Volume 1, pp. 1073–1083.

28. Li, Y. Deep reinforcement learning: An overview. arXiv 2017, arXiv:1701.07274.

29. Alomari, A.; Idris, N.; Sabri, A.Q.M.; Alsmadi, I. Deep reinforcement and transfer learning for abstractive text summarization: A review. Comput. Speech Lang. 2022, 71, 101276.

30. Bian, J.; Huang, X.; Zhou, H.; Zhu, S. GoSum: Extractive Summarization of Long Documents by Reinforcement Learning and Graph Organized discourse state. arXiv 2022, arXiv:2211.10247.

31. Wu, Y.; Hu, B. Learning to extract coherent summary via deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

32. Hu, B.; Lu, Z.; Li, H.; Chen, Q. Convolutional neural network architectures for matching natural language sentences. Adv. Neural Inf. Process. Syst. 2014, 2, 2042–2050.

33. Liu, Y.; Liu, P.; Radev, D.; Neubig, G. BRIO: Bringing Order to Abstractive Summarization. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1, pp. 2890–2903.

34. Xiao, L.; He, H.; Jin, Y. FusionSum: Abstractive summarization with sentence fusion and cooperative reinforcement learning. Knowl. Based Syst. 2022, 243, 108483.

35. Zhang, X.; Lapata, M. Sentence Simplification with Deep Reinforcement Learning. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 584–594.

36. Ghalandari, D.G.; Hokamp, C.; Ifrim, G. Efficient Unsupervised Sentence Compression by Fine-tuning Transformers with Reinforcement Learning. arXiv 2022, arXiv:2205.08221.

37. Schumann, R.; Mou, L.; Lu, Y.; Vechtomova, O.; Markert, K. Discrete Optimization for Unsupervised Sentence Summarization with Word-Level Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 5032–5042.

38. Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 1999, 12.

39. Lin, C.Y.; Hovy, E. Automatic evaluation of summaries using n-gram co-occurrence statistics. In Proceedings of the 2003 Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics, Edmonton, Canada, 27 May–1 June 2003; pp. 150–157.

40. Zhou, J.; Rush, A.M. Simple Unsupervised Summarization by Contextual Matching. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 5101–5106.

41. Aharoni, R.; Narayan, S.; Maynez, J.; Herzig, J.; Clark, E.; Lapata, M. mFACE: Multilingual Summarization with Factual Consistency Evaluation. arXiv 2022, arXiv:2212.10622.

| DOCUMENT: The country joined several of its South American neighbors in allowing the unions when President Michelle Bachelet enacted a new law on Monday. This is a concrete step in the drive to end the difference between homosexual and heterosexual couples, Bachelet said. The new law will take effect in six months. It will give legal weight to cohabiting relationships between two people of the same sex and between a man and a woman. The Chilean government estimates that around 2 million people will be able to benefit from the change. The law is intended to end discrimination faced by common-law couples, such as not being allowed to visit partners in hospital, make medical decisions on their behalf or decide what to do with their remains. It also gives the couples greater rights in the realms of property, health care, pensions and inheritance. A number of South American nations have moved to allow same-sex civil unions in recent years. But marriage between people of the same sex is legal only in Argentina, Brazil and Uruguay. |

| EXTRACTIVE SUMMARY [4]: The law is intended to end discrimination faced by common-law couples, such as not being allowed to visit partners in hospital, make medical decisions on their behalf or decide what to do with their remains. |

| ABSTRACTIVE SUMMARY [5]: Chile has become the first country in the world to legalise same-sex civil unions. |

| | Training | Validation | Testing |
|---|---|---|---|
| Avg. input length | 31.19 | 31.32 | 29.70 |
| Avg. summary length | 8.04 | 8.31 | 8.79 |

| Parameter | Value |
|---|---|
| Batch size | 4 |
| GloVe embeddings size | 300 |
| BERT embeddings size | 768 |
| GPT-2 embeddings size | 768 |
| LSTM hidden units | 300 |
| Learning rate | 0.00005 |
| Dropout rate | 0.5 |
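The embedding sizes above determine how wide each token's input to the LSTM is. Assuming the three embeddings are concatenated directly with no projection layer, each token vector has 300 + 768 + 768 = 1836 dimensions. The sketch below records the sizes in a hypothetical `EmbeddingConfig` and checks shapes before concatenating; the names are illustrative, not taken from the authors' code.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class EmbeddingConfig:
    """Embedding and encoder sizes from the hyper-parameter table."""
    glove_dim: int = 300
    bert_dim: int = 768
    gpt2_dim: int = 768
    lstm_hidden: int = 300

    @property
    def token_feature_dim(self) -> int:
        # Width of the concatenated [GloVe; BERT; GPT-2] vector per token
        # (ASSUMPTION: no projection before the LSTM).
        return self.glove_dim + self.bert_dim + self.gpt2_dim


def concat_token_features(cfg: EmbeddingConfig,
                          glove: Sequence[float],
                          bert: Sequence[float],
                          gpt2: Sequence[float]) -> List[float]:
    """Concatenate one token's three embeddings after checking their sizes."""
    for name, vec, dim in (("GloVe", glove, cfg.glove_dim),
                           ("BERT", bert, cfg.bert_dim),
                           ("GPT-2", gpt2, cfg.gpt2_dim)):
        if len(vec) != dim:
            raise ValueError(f"{name} vector has length {len(vec)}, expected {dim}")
    return list(glove) + list(bert) + list(gpt2)
```

Checking lengths up front catches a common mistake, such as passing a sub-word vector of the wrong model, before it silently changes the LSTM input size.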

| Model | R-1 | R-2 | R-L |
|---|---|---|---|
| Lead-8 | 21.39 | 7.42 | 20.03 |
| Contextual Match | 26.48 | 10.05 | 24.41 |
| AdvREGAN | 27.29 | 10.01 | 24.59 |
| HC_title_8 | 26.32 | 9.63 | 24.19 |
| HC_title_10 | 27.52 | 10.27 | 24.91 |
| SCRL_L8 | 29.64 | 9.98 | 26.57 |
| Our Model | 29.75 | 10.26 | 26.98 |
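R-1, R-2 and R-L denote ROUGE-1, ROUGE-2 and ROUGE-L. The simplified sketch below computes their F1 form, assuming whitespace tokenization and F1 scoring; the official ROUGE toolkit adds preprocessing such as stemming, so its numbers can differ, and reported scores are usually multiplied by 100.

```python
from collections import Counter
from typing import Sequence


def _f1(overlap: int, cand_total: int, ref_total: int) -> float:
    """Harmonic mean of precision and recall (0.0 when nothing overlaps)."""
    if overlap == 0:
        return 0.0
    precision = overlap / cand_total
    recall = overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N F1 with clipped n-gram matches."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    overlap = sum((cand & ref).values())  # & keeps the minimum count per n-gram
    return _f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    """ROUGE-L F1 based on the longest common subsequence."""
    cand, ref = candidate.split(), reference.split()
    return _f1(lcs_length(cand, ref), len(cand), len(ref))
```

For INPUT_1 in the examples, the generated summary (11 tokens) and the gold summary (13 tokens) share four unigrams (peace, process, immense, challenges), so `rouge_n` gives a ROUGE-1 F1 of 2 × 4 / (11 + 13) = 1/3 under this tokenization.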

| INPUT_1: un under-secretary-general for political affairs ibrahim gambari said on wednesday that although the future of the peace process in the middle east is hopeful, it still faces immense challenges. |

| GOLD SUMMARY: un senior official says peace process in middle east hopeful with immense challenges |

| GEN SUMMARY: under-secretary-general ibrahim gambari said future peace process still faces immense challenges |

| INPUT_2: rose UNK, a soprano who performed ## seasons at the metropolitan opera and established herself as a premier voice in american opera, has died. |

| GOLD SUMMARY: rose UNK metropolitan opera star in the ####s and ##s dies at ## |

| GEN SUMMARY: rose UNK soprano who performed at the opera has died. |

| INPUT_3: an over-loaded minibus overturned in a county in southwestern guizhou province tuesday afternoon, killing ## passengers and injuring three others. |

| GOLD SUMMARY: traffic accident kills ## injures # in sw china |

| GEN SUMMARY: over-loaded minibus in county in southwestern guizhou killing passengers others. |

| INPUT_4: south african cricket captain graeme smith said on sunday he would not use politics as an excuse for south africa 's performance in the cricket world cup in the west indies. |

| GOLD SUMMARY: cricket: politics not to blame for world cup failure: smith |

| GEN SUMMARY: african captain smith said politics an excuse in cricket cup |

| Model | R-1 | R-2 | R-L |
|---|---|---|---|
| Our Model | 29.75 | 10.26 | 26.98 |
| GloVe | 11.98 | 1.03 | 11.37 |
| BERT | 28.19 | 8.62 | 25.64 |
| GPT-2 | 20.54 | 5.31 | 19.02 |
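The size of each ablation gap follows directly from the table. Assuming each ablation row uses only the named embedding instead of the concatenation, the hypothetical helper `score_gaps` below subtracts each row from the full model's scores, metric by metric.

```python
from typing import Dict, Tuple

Scores = Tuple[float, float, float]  # (R-1, R-2, R-L)

# Values copied from the ablation table.
ABLATION: Dict[str, Scores] = {
    "Our Model": (29.75, 10.26, 26.98),
    "GloVe": (11.98, 1.03, 11.37),
    "BERT": (28.19, 8.62, 25.64),
    "GPT-2": (20.54, 5.31, 19.02),
}


def score_gaps(results: Dict[str, Scores], full: str = "Our Model") -> Dict[str, Scores]:
    """Points by which the full model exceeds each variant, rounded to 2 decimals."""
    base = results[full]
    return {
        name: tuple(round(b - s, 2) for b, s in zip(base, scores))
        for name, scores in results.items()
        if name != full
    }
```

`score_gaps(ABLATION)` yields (17.77, 9.23, 15.61) for GloVe, (1.56, 1.64, 1.34) for BERT and (9.21, 4.95, 7.96) for GPT-2: in these numbers the BERT-only variant comes closest to the full model, and the GloVe-only variant trails by the widest margin.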

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, C.-S.; Jwo, J.-S.; Lee, C.-H. Adapting Static and Contextual Representations for Policy Gradient-Based Summarization. Sensors 2023, 23, 4513. https://doi.org/10.3390/s23094513
