Abstract

Point cloud registration is a crucial preprocessing step for point cloud data analysis and applications. Many deep-learning-based methods have recently been proposed to improve registration quality. These methods commonly train the model with the sum of two cross-entropy losses, which may lead to mismatches in overlapping regions. In this paper, we design a new loss function based on cross-entropy and apply it to the ROPNet point cloud registration model. We also improve ROPNet by adding a channel attention mechanism so that the network attends to both global and local information, which improves registration performance and reduces registration error. We test our method on the ModelNet40 dataset, and the experimental results demonstrate the effectiveness of the proposed method.

1. Introduction

Point cloud registration is the basis of 3D reconstruction [1,2], 3D localization [3,4,5,6], pose estimation [7,8,9], and other fields. With the development of deep learning, point cloud registration has evolved from traditional iterative closest point (ICP)-based [10] methods to deep-learning-based approaches, such as PCRNet [11], D3feat [12], the iterative distance-aware similarity matrix convolution network (IDAM) [13], point cloud registration with deep attention to the overlap region (PREDATOR) [14], and robust point matching (RPMNet) [15]. In recent years, these methods have achieved good registration results under certain conditions. However, most current models do not perform well in overlapping regions. In this paper, we address this issue from two aspects: the loss function and the backbone network. To demonstrate the effectiveness of our approach, we use the representative overlapping points network (ROPNet) [16] as an example throughout.

Loss functions measure the difference between the true and predicted values in deep learning and play an important role in back-propagation. Depending on the task, loss functions can be roughly divided into two categories. The first is used for regression tasks, such as mean absolute error (MAE), mean squared error (MSE), and Huber loss. The second is used for classification tasks, such as focal loss [20], center loss [17], circle loss [18], dice loss [19], and cross-entropy loss. Point cloud registration involves a classification problem and often uses cross-entropy as the loss function. However, researchers [19,20] have found that cross-entropy loss cannot achieve good results in some cases. For example, when positive and negative samples are severely imbalanced, negative samples dominate training, and a model trained with the original cross-entropy loss cannot predict accurately. To solve this problem, many improvements to the original cross-entropy have been proposed, such as weighted cross-entropy (WCE) [21], balanced cross-entropy (BCE) [22], dice loss [19], and focal loss [20]. Most of these approaches improve the cross-entropy loss by introducing weights; however, they often neglect the relationship between corresponding points in overlapping regions.

In this paper, to improve registration quality in overlapping areas, we introduce a new way of computing the loss based on cross-entropy. Specifically, we use the product of two cross-entropy losses instead of their sum. We prove the validity of the proposed loss function theoretically in Section 3.1. To verify its effectiveness, we apply it to a recently proposed network, ROPNet, which originally uses the sum of two cross-entropy losses to supervise the overlapping area. In addition, ROPNet emphasizes global information but ignores local information, which leads to mis-registration in regions with fine details. We therefore add a channel attention mechanism that focuses on important local information and complements the self-attention mechanism in the original model. Extensive experiments show that, compared with the original ROPNet, the proposed method improves the registration of overlapping point clouds. Our main contributions are as follows:

- A new loss function based on cross-entropy is designed, which helps improve point cloud registration accuracy, especially in overlapping regions.

- The performance of ROPNet is improved by adding a channel attention mechanism.

- Extensive experiments on commonly used datasets verify the effectiveness of the proposed method.

2. Related Work

In this section, we introduce some related work and preliminary knowledge, including the ROPNet model, cross-entropy loss, and attention mechanism.

2.1. ROPNet

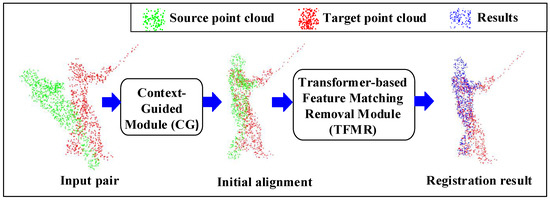

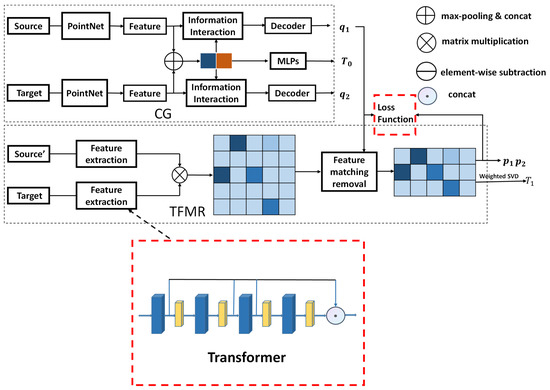

ROPNet is a point cloud registration model that uses representative points in the overlapping regions for registration. As shown in Figure 1, ROPNet consists of a context-guided (CG) module and a transformer-based feature matching removal (TFMR) module.

Figure 1.

The original point cloud registration model of ROPNet.

Generally speaking, ROPNet works in two stages: coarse alignment and fine alignment. In the first stage, the source and target point clouds are taken as input. The CG module extracts global features from the input and computes the overlap score between the two point clouds. The extracted global features are used for coarse alignment, and non-representative points are removed based on the overlap score. In the second stage, point features are generated with offset attention [23,24], and the representative points are used to generate accurate correspondences. Finally, the precise registration of the point clouds is carried out.
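The removal of non-representative points can be illustrated with a minimal Python sketch. It assumes, for illustration only, that "representative" means the k points with the highest predicted overlap scores; the function name `select_representative_points` and the parameter `k` are hypothetical and are not taken from the ROPNet code.

```python
from typing import List, Sequence


def select_representative_points(overlap_scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k points with the highest overlap scores.

    Hypothetical helper: keeps the top-k scores and drops the rest, which is
    one simple way to discard non-representative points.
    """
    k = max(0, min(k, len(overlap_scores)))
    # Rank indices by descending score; sorted() is stable, so ties keep input order.
    ranked = sorted(range(len(overlap_scores)), key=lambda i: -overlap_scores[i])
    # Return the kept indices in their original order.
    return sorted(ranked[:k])


scores = [0.9, 0.1, 0.8, 0.3]
print(select_representative_points(scores, 2))
```

A score threshold would be an equally plausible alternative; top-k keeps the number of retained points fixed, which is convenient when point clouds are processed in batches.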

2.2. Cross-Entropy Loss

The cross-entropy loss is a widely used loss function for classification problems. In point cloud registration models [16,25], p denotes the true distribution, which can be written as

$$p(x_i)=\begin{cases}1, & \text{if } x_i \text{ matches a point in the target point cloud},\\ 0, & \text{otherwise},\end{cases}$$

where $x_i$ is any point in the source point cloud.
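One common way to obtain such labels is a nearest-neighbor test after aligning the source with the ground-truth transformation. The sketch below assumes that reading of "matches"; the `radius` threshold and the function name `overlap_labels` are hypothetical, and the brute-force search is only meant for small examples.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def overlap_labels(source: Sequence[Point], target: Sequence[Point], radius: float) -> List[int]:
    """Return p(x_i) = 1 if some target point lies within `radius` of x_i, else 0.

    Assumes `source` is already expressed in the target frame (ground-truth
    transform applied). Brute-force O(N * M) nearest-neighbor search.
    """
    labels = []
    for x in source:
        nearest = min(math.dist(x, y) for y in target)
        labels.append(1 if nearest < radius else 0)
    return labels


src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
tgt = [(0.0, 0.0, 0.01)]
print(overlap_labels(src, tgt, radius=0.05))
```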

At the same time, q is defined as the predicted probability distribution, which gives the probability that each source point matches the target point cloud. It can be written as

$$q=\{q(x_1), q(x_2), \dots, q(x_n)\},\qquad q(x_i)\in[0,1],$$

where n is the number of points in the point cloud.

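Then the cross-entropy is defined as:

$$H(p,q)=-\frac{1}{n}\sum_{i=1}^{n}\Big[p(x_i)\log q(x_i)+\big(1-p(x_i)\big)\log\big(1-q(x_i)\big)\Big]$$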

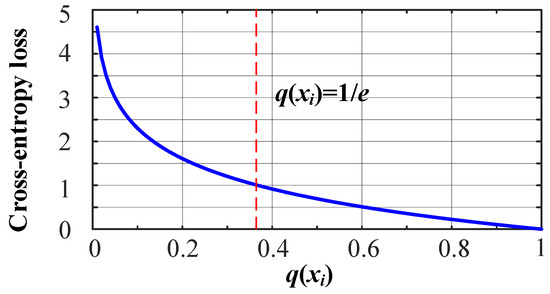

Figure 2 shows the curve of the simplified cross-entropy loss $-\log q$, i.e., the case $p=1$. We can see that the loss lies in $(0,1)$ when $q>1/e$. Moreover, the larger q is, the smaller the cross-entropy. This indicates that the difference between the true value and the predicted value becomes smaller as classification becomes more accurate.

Figure 2.

Simplified cross-entropy loss.
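To make the shape of this curve concrete, the following sketch computes the mean binary cross-entropy, assuming the standard binary form with labels $p\in\{0,1\}$ and predictions $q\in(0,1)$. For $p=1$ it reduces to the simplified loss $-\log q$, which equals 1 at $q=1/e$ and decreases as $q$ grows. The clamping constant `eps` is our own addition to keep the logarithm finite.

```python
import math
from typing import Sequence


def binary_cross_entropy(p: Sequence[int], q: Sequence[float], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy between labels p and predicted probabilities q."""
    if len(p) != len(q) or not p:
        raise ValueError("p and q must be non-empty and have equal length")
    total = 0.0
    for p_i, q_i in zip(p, q):
        q_i = min(max(q_i, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -(p_i * math.log(q_i) + (1 - p_i) * math.log(1.0 - q_i))
    return total / len(p)


# For p = 1 the loss is -log(q): it crosses 1 at q = 1/e and shrinks toward 0.
for q in (0.2, 1 / math.e, 0.5, 0.9):
    print(f"q={q:.3f}  loss={binary_cross_entropy([1], [q]):.3f}")
```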

2.3. Attention Mechanism

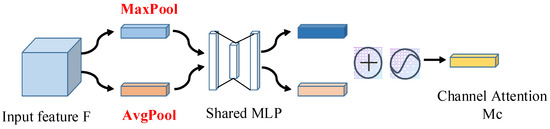

In recent years, a series of attention mechanisms have been proposed to improve the performance of deep learning models, such as the channel attention mechanism (CAM) [26], the spatial attention mechanism (SAM) [3], and mixed attention [27,28]. An attention mechanism focuses on the important parts of the obtained information. For example, channel attention models the correlation between different channels, capturing and reinforcing the important features on each channel, while spatial attention focuses on the regions relevant to the task. More succinctly, channel attention focuses on what is important, and spatial attention focuses on where it is important. The study in [29] found that channel-related information is more important than spatial information when embedding attention modules into point cloud features. Therefore, in this paper, we mainly improve model performance by adding a channel attention mechanism; see Figure 3.

Figure 3.

Channel attention module.
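As an illustration of how a channel attention module reweights point features, the sketch below follows the squeeze-and-excitation design of [26]: average each channel over all points, pass the result through a two-layer bottleneck (ReLU, then sigmoid), and rescale each channel. It is a generic SE-style block written with plain lists, not necessarily the exact module in Figure 3; the weight matrices `w1` and `w2` are hypothetical inputs, and biases are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def channel_attention(features: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Squeeze-and-excitation style channel attention on a C x N feature map.

    features: C rows (channels), each holding N per-point values.
    w1: (C // r) x C reduction weights; w2: C x (C // r) expansion weights.
    """
    # Squeeze: global average over the N points of each channel.
    squeezed = [sum(row) / len(row) for row in features]
    # Excitation: FC -> ReLU -> FC -> sigmoid, one weight per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(w_row, squeezed))) for w_row in w1]
    weights = [_sigmoid(sum(w * h for w, h in zip(w_row, hidden))) for w_row in w2]
    # Scale: multiply every value in channel c by weights[c].
    return [[a * v for v in row] for a, row in zip(weights, features)]


feats = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0]]
out = channel_attention(feats, w1=[[1.0, 1.0]], w2=[[10.0], [-10.0]])
# Channel 0 is kept almost unchanged; channel 1 is suppressed toward 0.
```

In an improved TFMR module, a block of this kind would sit after each offset-attention block; how its output is fused with the self-attention features is the design choice examined in Section 4.3.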

3. Proposed Method

In this section, we improve ROPNet by introducing the revised cross-entropy loss and the channel attention mechanism.

3.1. Modification of Loss Function

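In most overlap-based point cloud registration models, including ROPNet, the overlap loss is defined as:

$$\mathcal{L}_{ov}=\mathcal{L}_x+\mathcal{L}_y,$$

$$\mathcal{L}_x=-\frac{1}{N}\sum_{i=1}^{N}\Big[p_x(i)\log q_x(i)+\big(1-p_x(i)\big)\log\big(1-q_x(i)\big)\Big],$$

$$\mathcal{L}_y=-\frac{1}{M}\sum_{j=1}^{M}\Big[p_y(j)\log q_y(j)+\big(1-p_y(j)\big)\log\big(1-q_y(j)\big)\Big],$$

where $\mathcal{L}_x$ and $\mathcal{L}_y$ are the overlap supervision losses of the two point clouds, N and M are the numbers of points in the source and target point clouds, $p_x$ and $p_y$ are the true distributions of the source and target point clouds, and $q_x$ and $q_y$ are the predicted probability distributions of the source and target point clouds, respectively.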

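Next, we modify the loss function to reduce the cross-entropy. Since the overlap between the source and target point clouds is high in most point cloud registration tasks, we mainly consider the case where the predicted probabilities of the overlapping points are high, so that each cross-entropy term lies in $(0,1)$; from Figure 2, for the simplified loss this holds when $q>1/e$. Because $ab<a+b$ for any $a,b\in(0,1)$, the product of two such losses is smaller than their sum, and we propose the new loss function given in Definition 1.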

Definition 1.

An improved loss function with cross-entropy is defined as the product of the two overlap losses:

$$\mathcal{L}'_{ov}=\mathcal{L}_x\cdot\mathcal{L}_y.$$

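Denote by $\mathcal{L}_i$, $i\in\{x,y\}$, either of the two cross-entropy losses. According to the definition of the overlap loss, the contribution of a single point with true label $p$ and predicted probability $q$ can be expressed as

$$\mathcal{L}_i=-\big[p\log q+(1-p)\log(1-q)\big].$$

Taking the derivative of $\mathcal{L}_i$ with respect to $q$ gives

$$\frac{\partial\mathcal{L}_i}{\partial q}=-\frac{p}{q}+\frac{1-p}{1-q}.$$

When $p=1$, $\mathcal{L}_i=-\log q$, and the derivative becomes

$$\frac{\partial\mathcal{L}_i}{\partial q}=-\frac{1}{q}.$$

When $p=0$, $\mathcal{L}_i=-\log(1-q)$, and the derivative of $\mathcal{L}_i$ with respect to $q$ becomes

$$\frac{\partial\mathcal{L}_i}{\partial q}=\frac{1}{1-q}.$$

Combining the two cases, the derivative of the loss function can be expressed as

$$\frac{\partial\mathcal{L}_i}{\partial q}=\begin{cases}-\dfrac{1}{q}, & p=1,\\[4pt] \dfrac{1}{1-q}, & p=0.\end{cases}$$

Then, to compare the original loss $\mathcal{L}_{ov}=\mathcal{L}_x+\mathcal{L}_y$ with the proposed loss $\mathcal{L}'_{ov}=\mathcal{L}_x\mathcal{L}_y$, we compute their derivatives. Denote by $D$ and $D'$ the derivatives of $\mathcal{L}_{ov}$ and $\mathcal{L}'_{ov}$ with respect to $q_x$, respectively. Since $\mathcal{L}_y$ does not depend on $q_x$,

$$D=\frac{\partial\mathcal{L}_x}{\partial q_x},\qquad D'=\mathcal{L}_y\,\frac{\partial\mathcal{L}_x}{\partial q_x}.$$

According to Figure 2, when the predictions for the target point cloud are accurate enough that $0<\mathcal{L}_y<1$ (for the simplified loss, $q_y>1/e$), we have $D'=\mathcal{L}_yD$ and therefore $|D'|<|D|$. By symmetry, the same holds for the derivatives with respect to $q_y$.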

The above analysis shows that the absolute value of the derivative of the proposed loss function is smaller than that of the original loss function, which indicates that the proposed loss function performs better. Moreover, since the overlap between the source and target point clouds is high in most point cloud registration tasks, the proposed loss function can be generalized to other tasks based on overlapping point regions.
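The gradient comparison can be checked numerically with a short sketch. It assumes single-point binary cross-entropy terms with label $p=1$ for both point clouds and uses central finite differences; all function names are our own. The derivative of the sum $\mathcal{L}_x+\mathcal{L}_y$ with respect to $q_x$ equals $\partial\mathcal{L}_x/\partial q_x$, while that of the product $\mathcal{L}_x\mathcal{L}_y$ is scaled by $\mathcal{L}_y$.

```python
import math
from typing import Callable

LossCombiner = Callable[[float, float], float]


def bce_point(p: int, q: float) -> float:
    """Binary cross-entropy of a single point with label p and prediction q."""
    return -(p * math.log(q) + (1 - p) * math.log(1.0 - q))


def sum_loss(l_x: float, l_y: float) -> float:
    return l_x + l_y  # original overlap loss


def product_loss(l_x: float, l_y: float) -> float:
    return l_x * l_y  # proposed overlap loss


def grad_wrt_qx(combine: LossCombiner, q_x: float, q_y: float,
                p: int = 1, h: float = 1e-6) -> float:
    """Central finite-difference derivative of combine(L_x, L_y) with respect to q_x."""
    l_y = bce_point(p, q_y)  # L_y does not depend on q_x
    upper = combine(bce_point(p, q_x + h), l_y)
    lower = combine(bce_point(p, q_x - h), l_y)
    return (upper - lower) / (2.0 * h)


g_sum = grad_wrt_qx(sum_loss, q_x=0.8, q_y=0.7)       # approximately -1/0.8 = -1.25
g_prod = grad_wrt_qx(product_loss, q_x=0.8, q_y=0.7)  # approximately 1.25 * log(0.7)
print(abs(g_prod) < abs(g_sum))
```

The inequality relies on $\mathcal{L}_y<1$: with `q_y=0.2`, $\mathcal{L}_y=-\log 0.2\approx 1.61$ and the product's gradient becomes larger than the sum's, which matches the restriction of the analysis to well-predicted, high-overlap cases.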

3.2. Channel Attention-Based ROPNet

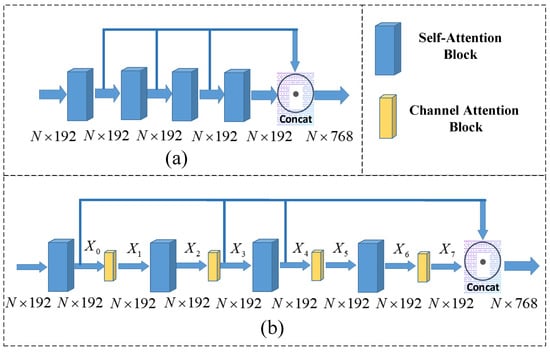

Figure 4a shows the original structure of the TFMR module, which contains offset-attention blocks. The offset-attention block serves as self-attention to generate global features, and the TFMR module fuses the multi-scale features obtained from multiple attention blocks. However, the original self-attention module extracts only global features and ignores local features. Hence, to make the features more complete, we add a channel attention module after each original attention module. There are many ways to add the channel attention module; empirically, we use the hybrid attention mechanism shown in Figure 4b. The improvement brought by the attention mechanism is demonstrated by the experiments in Section 4.

Figure 4.

(a) Initial TFMR structure; (b) improved structure.

3.3. Overall Improved Architecture

The overall architecture of the improved ROPNet is presented in Figure 5. Source’ denotes the source point cloud after the initial transformation. The figure also labels the initial transformation matrix and the final transformation matrix. The improvement has two aspects. First, we add a channel attention mechanism to the feature extraction part so that the network focuses more on local features. Second, the improved loss function presented in Section 3.1 is used after the true and predicted distributions are obtained.

Figure 5.

An overall architecture of the improved ROPNet.

4. Experiments

We conducted a series of experiments to demonstrate the effectiveness and advantages of the proposed method. First, we introduce the dataset and the evaluation metrics. Second, we compare the proposed loss function with the original one. Third, we conduct ablation experiments to show the effectiveness of the proposed model with the attention mechanism. Finally, we combine the improved model and the improved loss function to show the advantages of the proposed method. We use the same experimental settings, parameters, and evaluation metrics as [15].

4.1. Dataset and Evaluation Metrics

As with [15], we used the ModelNet40 dataset [30], which includes 12,311 CAD models in 40 categories. We trained the model on the first 20 categories and tested it on the remaining 20. Since ModelNet40 contains some symmetrical categories, we divided the data into two subsets: total objects (TO) and asymmetric objects (AO). AO contains no symmetrical objects, such as tents or vases. Following RPMNet [15], we generated the source and target point clouds by sampling 1024 points twice. We randomly generated three Euler angles within and translations within [−0.5, 0.5] on each axis. Then, we projected all points onto a random direction and deleted thirty percent of them to generate a partial point cloud. In addition, the model is also trained on noisy data. The noise is sampled from and clipped to [−0.05, 0.05].
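The noise and cropping steps can be sketched as follows. The Euler-angle range and the noise distribution are not recoverable from this text, so `sigma` is a placeholder value chosen only for illustration; the clip bound of 0.05 and the 70% retention ratio follow the description of the dataset. Keeping the points with the largest projections onto a random unit direction is our reading of "projected all points onto a random direction", and all names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def jitter(points: Sequence[Point], sigma: float, clip: float,
           rng: random.Random) -> List[Point]:
    """Add per-coordinate Gaussian noise clipped to [-clip, clip]."""
    return [
        tuple(c + max(-clip, min(clip, rng.gauss(0.0, sigma))) for c in p)
        for p in points
    ]


def crop_partial(points: Sequence[Point], keep_ratio: float,
                 rng: random.Random) -> List[Point]:
    """Keep the keep_ratio fraction of points lying furthest along a random direction."""
    d = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(c * c for c in d)) or 1.0
    d = [c / norm for c in d]  # random unit direction
    ranked = sorted(
        range(len(points)),
        key=lambda i: sum(a * b for a, b in zip(points[i], d)),
        reverse=True,
    )
    n_keep = int(round(keep_ratio * len(points)))
    return [points[i] for i in sorted(ranked[:n_keep])]


rng = random.Random(0)
cloud = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(1024)]
noisy = jitter(cloud, sigma=0.01, clip=0.05, rng=rng)  # sigma: placeholder value
partial = crop_partial(noisy, keep_ratio=0.7, rng=rng)  # delete 30% of the points
```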

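In the following experiments, we use the average isotropic rotation and translation errors as the evaluation metrics:

$$\text{Error}(R)=\angle\left(R_{gt}^{-1}R\right),\qquad \text{Error}(t)=\left\lVert t_{gt}-t\right\rVert_2,$$

where $R_{gt}$ and $t_{gt}$ are the ground-truth transformation, and R and t are the predicted transformation. The angle $\angle(A)=\arccos\frac{\operatorname{tr}(A)-1}{2}$, expressed in degrees, is used for the rotation matrix, and the Euclidean norm $\lVert\cdot\rVert_2$ is used for the translation vector; both errors are averaged over the test set.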
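A minimal sketch of the two metrics, assuming the isotropic definitions used by RPMNet [15]: the rotation angle of $R_{gt}^{\top}R$ in degrees (for a rotation matrix, the inverse equals the transpose) and the Euclidean distance between translations. Averaging over the test set is omitted, and the function names are our own.

```python
import math
from typing import Sequence

Matrix3 = Sequence[Sequence[float]]


def rotation_error_deg(r_gt: Matrix3, r_pred: Matrix3) -> float:
    """Isotropic rotation error: the angle of R_gt^T R_pred, in degrees."""
    # trace(R_gt^T R_pred) equals the sum of element-wise products.
    trace = sum(r_gt[i][j] * r_pred[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def translation_error(t_gt: Sequence[float], t_pred: Sequence[float]) -> float:
    """Isotropic translation error: Euclidean distance between translations."""
    return math.dist(t_gt, t_pred)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
print(rotation_error_deg(identity, rot_z_90))
print(translation_error((0.0, 0.0, 0.0), (3.0, 4.0, 0.0)))
```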

4.2. Improvement by New Loss Function

In this subsection, we compare the results obtained with different loss functions. In the following tables and figure legends, loss*loss denotes the proposed loss function, loss + loss denotes the original loss function, and 0*loss denotes the loss function that does not consider the overlapping region.

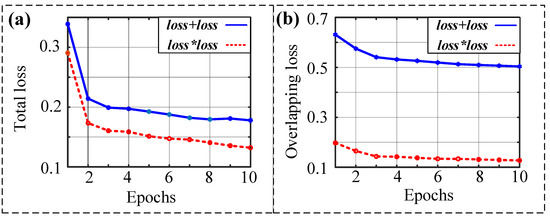

First, let us observe the convergence of the different loss functions over 10 epochs. Figure 6a depicts the total loss of the two methods. The total loss of the proposed loss function is smaller than that of the original one, and its curve also starts to decline from a lower value. Figure 6b shows the overlap loss computed by the different loss functions. Both curves decrease as the number of epochs increases, and their trends are consistent. More importantly, the product of the cross-entropy losses, i.e., $\mathcal{L}_x\cdot\mathcal{L}_y$, is smaller than their sum, i.e., $\mathcal{L}_x+\mathcal{L}_y$. These results illustrate that the proposed loss function performs better.

Figure 6.

Comparison of loss function decline in the case of 10 epochs: (a) total loss; (b) overlapping loss.

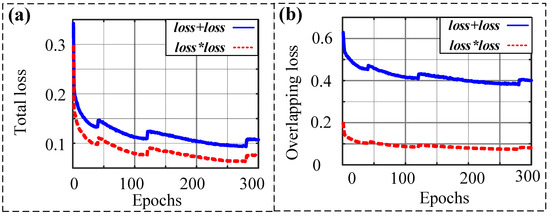

To obtain more reliable results, we also compare the loss functions over 300 training epochs. The convergence of the total loss and the overlap loss is shown in Figure 7a,b. The two loss functions follow the same trend, and the proposed loss function is smoother and smaller than the original one.

Figure 7.

Comparison of loss function decline in the case of 300 epochs: (a) total loss; (b) overlapping loss.

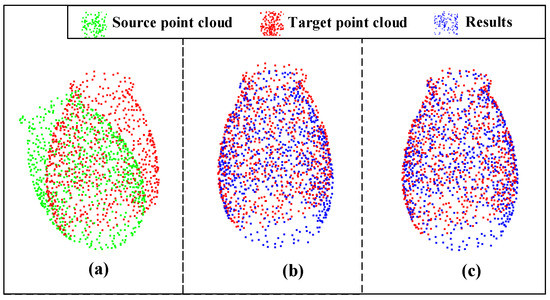

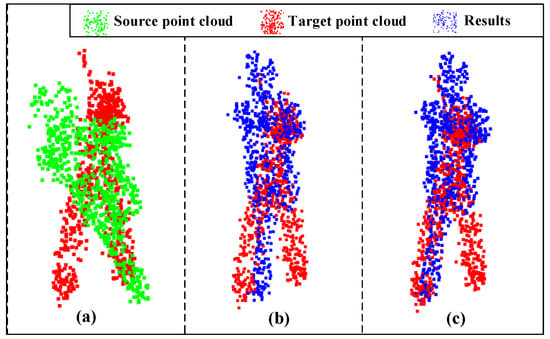

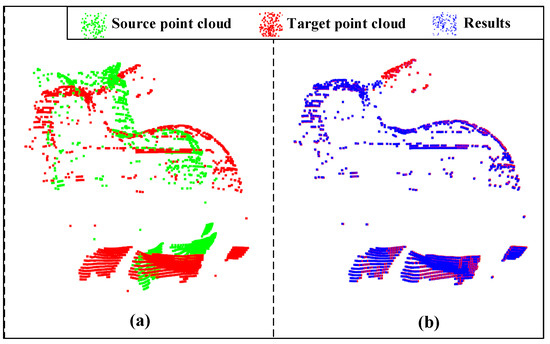

Next, we conducted two experiments to evaluate the different loss functions. Table 1 shows the evaluation metrics for the original and proposed loss functions after 300 training epochs, and Table 2 shows the results after 600 epochs. Each table is divided into two parts: the top half reports results in the noiseless condition and the bottom half in the noisy condition. In both tables, most metrics of the proposed loss function are smaller than those of the original loss function, which indicates that the proposed loss function performs better than the original one. Furthermore, in most cases, the larger the original value of a metric, the larger the error reduction. It is also worth noting that, compared with the loss function that does not consider the overlapping areas, the proposed loss function improves registration accuracy significantly. Taking the results after 300 training epochs as an example, this analysis is supported by the alignment visualizations for some samples shown in Figure 8 and Figure 9, in which the proposed method improves the alignment.

Table 1.

Results of metrics with different loss functions after 300 training epochs.

Table 2.

Results of metrics with different loss functions after 600 training epochs.

Figure 8.

Registration visualization under 300 epochs: (a) input; (b) ROPNet; (c) ours.

Figure 9.

Registration visualization under 300 epochs with Gaussian noise: (a) input; (b) ROPNet; (c) ours.

4.3. Ablation Experiment

In this subsection, we compare the results of the original ROPNet model and the ROPNet model with channel attention mechanism. Then, we analyze the results obtained from our proposed solution.

Table 3 shows the evaluation metrics of the original and proposed ROPNet models. Compared with the original ROPNet, most metrics, especially those of Schemes 1 and 3, are smaller. The larger the original value of a metric, the larger the improvement of the proposed model.

Table 3.

Results of metrics with different models.

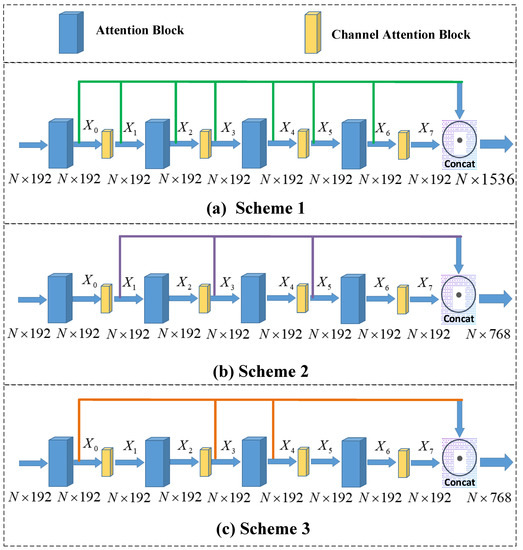

Next, we discuss the results of fusing the features at different positions. As mentioned in Section 3.2, there are many ways to add the channel attention module. Figure 10 illustrates three fusion schemes with different positions. Specifically, Figure 10a shows the stepwise fusion method, Figure 10b uses only the features extracted by the channel attention modules, and Figure 10c shows a mixed method. Table 3 reports the results of feature fusion at the different positions. Schemes 1 and 3 perform better than Scheme 2, because Scheme 2 ignores the global information. Moreover, Scheme 1 fuses all feature layers, which introduces feature redundancy. Therefore, we choose Scheme 3 as the feature fusion method.

Figure 10.

Comparison of different schemes: (a) Scheme 1 concat[x0, x1, x2, x3, x4, x5, x6, x7]; (b) Scheme 2 concat[x1, x3, x5, x7]; (c) Scheme 3 concat[x0, x3, x4, x7].
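The three schemes differ only in which intermediate feature maps are concatenated along the channel axis. The sketch below reproduces the index sets from the caption; treating `x0` to `x7` as a list of equally sized C x N feature maps (with the odd indices being channel attention outputs, as the description of Scheme 2 suggests) is our assumption, and the names `SCHEMES` and `fuse` are hypothetical.

```python
from typing import Dict, List, Sequence

FeatureMap = List[List[float]]  # C rows (channels) x N points

# Index sets taken from the caption of Figure 10.
SCHEMES: Dict[str, Sequence[int]] = {
    "scheme1": (0, 1, 2, 3, 4, 5, 6, 7),
    "scheme2": (1, 3, 5, 7),
    "scheme3": (0, 3, 4, 7),
}


def fuse(features: Sequence[FeatureMap], scheme: str) -> FeatureMap:
    """Concatenate the selected feature maps along the channel axis."""
    fused: FeatureMap = []
    for idx in SCHEMES[scheme]:
        fused.extend(list(row) for row in features[idx])  # copy each channel row
    return fused


# Eight toy feature maps, each with 2 channels over 4 points, filled with its index.
xs = [[[float(k)] * 4 for _ in range(2)] for k in range(8)]
print(len(fuse(xs, "scheme1")), len(fuse(xs, "scheme3")))
```

With C channels per map, Scheme 1 yields 8C fused channels against 4C for Schemes 2 and 3, which is one concrete way to see the redundancy argument.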

4.4. Combination of Two Improvements

In this subsection, we combine the proposed loss function with the improved model to show the effectiveness of our proposed method on ModelNet40.

Table 4 shows the evaluation metrics of the different methods. The combination of the improved loss function and the improved model performs best. For AO, compared with the original ROPNet, using both the improved loss function and the improved model reduces Error(R) and Error(t) by 4.83% and 14.16% without noise, and by 9.60% and 8.89% with noise. Compared with using only the improved loss function, the combination reduces Error(R) and Error(t) by 4.10% and 11.82% without noise, and by 5.34% and 9.56% with noise. Compared with using only the improved model, it reduces Error(R) and Error(t) by 7.20% and 20.18% without noise, and by 5.69% and 9.56% with noise. These results show that combining the improved loss function with the improved model effectively improves performance.

Table 4.

Overall improved results on ModelNet40
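The percentages quoted for Table 4 are relative reductions of an error metric with respect to a baseline, and the comparison with ICP by "a factor of more than ten" is the ratio of the two errors. A minimal sketch of that arithmetic follows; the numbers in the usage line are made-up illustrations, not values from Table 4, and the function names are our own.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage reduction of an error metric relative to a baseline value."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - improved) / baseline


def reduction_factor(baseline: float, improved: float) -> float:
    """How many times smaller the improved error is than the baseline."""
    if improved <= 0:
        raise ValueError("improved error must be positive")
    return baseline / improved


# Illustrative values only: an error dropping from 2.0 to 1.5 is a 25% reduction.
print(relative_reduction(2.0, 1.5))
```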

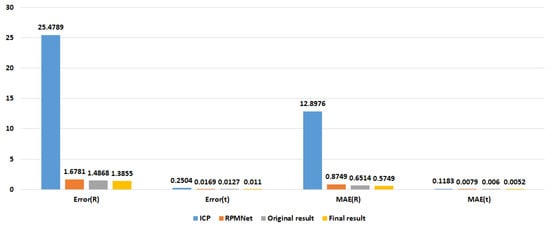

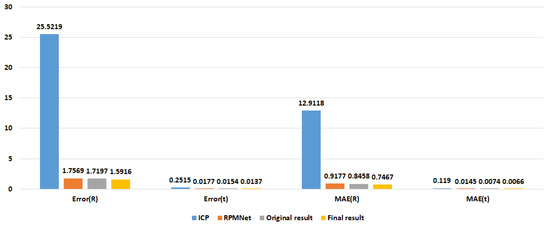

Figure 11 and Figure 12 compare ICP, RPMNet, ROPNet, and the proposed model in the form of bar charts. Our method outperforms both RPMNet and the original ROPNet. Compared with RPMNet, Error(R) and Error(t) are reduced by 17.44% and 34.91% without noise, and by 9.41% and 22.60% with noise, respectively. Compared with ICP, our method reduces Error(R) and Error(t) by more than a factor of ten.

Figure 11.

Registration results on ModelNet40.

Figure 12.

Registration results on ModelNet40 with Gaussian noise.

We also tested our method on the Stanford Bunny dataset, which consists of 35,947 points. We rotated the Bunny model by 30 degrees around the y-axis and then selected 1500 points from the original point cloud and from the rotated point cloud as the source and target point clouds, respectively. The final result is shown in Figure 13.

Figure 13.

Registration results on the Stanford Bunny model: (a) input; (b) result, Error(R): 0.3403, Error(t): 0.0219.
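The construction of the Bunny test pair can be sketched as follows: rotate the cloud by 30 degrees about the y-axis and draw 1500 points from each cloud. Whether the source and target share the same sampled indices is not stated, so independent sampling with a fixed seed is our assumption, as are the helper names `rotation_y`, `transform`, and `make_pair`; loading the Bunny file is omitted.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def rotation_y(angle_deg: float) -> List[List[float]]:
    """Right-handed rotation matrix about the y-axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def transform(rot: List[List[float]], points: Sequence[Point]) -> List[Point]:
    """Apply a 3x3 rotation to every point."""
    return [tuple(sum(rot[i][j] * p[j] for j in range(3)) for i in range(3)) for p in points]


def make_pair(points: Sequence[Point], angle_deg: float = 30.0, n_samples: int = 1500,
              seed: int = 0) -> Tuple[List[Point], List[Point]]:
    """Return (source, target): samples of the original and of the rotated cloud."""
    rng = random.Random(seed)
    rotated = transform(rotation_y(angle_deg), points)
    src_idx = rng.sample(range(len(points)), n_samples)  # independent draws (assumption)
    tgt_idx = rng.sample(range(len(points)), n_samples)
    return [points[i] for i in src_idx], [rotated[i] for i in tgt_idx]
```

Given the Bunny points as a list of (x, y, z) tuples, `make_pair(bunny_points)` returns the two 1500-point clouds.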

5. Conclusions

In this paper, we designed a new way of using the cross-entropy loss that combines two cross-entropy losses by their product instead of their sum. To verify the effectiveness of this loss, we applied it to the point cloud registration network ROPNet. In addition, we improved the original ROPNet model by introducing a channel attention mechanism that focuses on local features. Extensive experiments show that the proposed method improves the accuracy of the point cloud registration task. Compared with the original ROPNet, Error(R) and Error(t) on AO without noise are reduced by 4.83% and 14.16%. Compared with RPMNet, Error(R) and Error(t) with noise are reduced by 9.41% and 22.60%. Compared with traditional methods such as ICP, Error(R) and Error(t) are reduced by more than a factor of ten. We therefore expect the proposed method to benefit other point cloud registration models.

Author Contributions

Conceptualization, F.Y. and W.Z.; methodology, Y.L., F.Y. and W.Z.; software, validation, investigation, data curation, writing—original draft preparation, and visualization, Y.L.; writing—review and editing, F.Y. and W.Z.; supervision, W.Z.; funding acquisition, F.Y., and W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant 62101392, and in part by the Stability Module trial production Project of the State Grid Hubei Power Research Institute under Grant 2022420612000585.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 559–568.

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690.

- Elhousni, M.; Huang, X. Review on 3d lidar localization for autonomous driving cars. arXiv 2020, arXiv:2006.00648.

- Nagy, B.; Benedek, C. Real-time point cloud alignment for vehicle localization in a high resolution 3D map. In Proceedings of the 15th European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zhang, A.; Min, Z.; Zhang, Z.; Meng, M.Q.-H. Generalized point set registration with fuzzy correspondences based on variational Bayesian Inference. IEEE Trans. Fuzzy Syst. 2022, 30, 1529–1540.

- Fu, Y.; Brown, N.M.; Saeed, S.U.; Casamitjana, A.; Baum, Z.M.C.; Delaunay, R.; Yang, Q.; Grimwood, A.; Min, Z.; Blumberg, S.B.; et al. DeepReg: A deep learning toolkit for medical image registration. arXiv 2020, arXiv:2011.02580.

- Li, Y.; Harada, T. Lepard: Learning partial point cloud matching in rigid and deformable scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 20–25 June 2022; pp. 5554–5564.

- Min, Z.; Wang, J.; Pan, J.; Meng, M.-Q.H. Generalized 3-D point set registration with hybrid mixture models for computer-assisted orthopedic surgery: From isotropic to anisotropic positional error. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1679–1691.

- Min, Z.; Liu, J.; Liu, L.; Meng, M.-Q.H. Generalized coherent point drift with multi-variate gaussian distribution and watson distribution. IEEE Robot. Autom. Lett. 2021, 6, 6749–6756.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures. SPIE 1992, 1611, 586–606.

- Sarode, V.; Li, X.; Goforth, H.; Aoki, Y.; Srivatsan, R.A.; Lucey, S.; Choset, H. Pcrnet: Point cloud registration network using pointnet encoding. arXiv 2019, arXiv:1908.07906.

- Bai, X.; Luo, Z.; Zhou, L.; Fu, H.; Quan, L.; Tai, C.-L. D3feat: Joint learning of dense detection and description of 3d local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6359–6367.

- Li, J.; Zhang, C.; Xu, Z.; Zhou, H.; Zhang, C. Iterative distance-aware similarity matrix convolution with mutual-supervised point elimination for efficient point cloud registration. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 378–394.

- Huang, S.; Gojcic, Z.; Usvyatsov, M.; Wieser, A.; Schindler, K. Predator: Registration of 3d point clouds with low overlap. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4267–4276.

- Yew, Z.J.; Lee, G.H. Rpm-net: Robust point matching using learned features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11824–11833.

- Zhu, L.; Liu, D.; Lin, C.; Yan, R.; Gómez-Fernández, F.; Yang, N.; Feng, Z. Point cloud registration using representative overlapping points. arXiv 2021, arXiv:2107.02583.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 499–515.

- Sun, Y.; Cheng, C.; Zhang, Y.; Zhang, C.; Zheng, L.; Wang, Z.; Wei, Y. Circle loss: A unified perspective of pair similarity optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6398–6407.

- Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice loss for data-imbalanced NLP tasks. arXiv 2019, arXiv:1911.02855.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Pihur, V.; Datta, S.; Datta, S. Weighted rank aggregation of cluster validation measures: A monte carlo cross-entropy approach. Bioinformatics 2007, 23, 1607–1615.

- Jadon, S. A survey of loss functions for semantic segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Viña del Mar, Chile, 27–29 October 2020; pp. 1–7.

- Guo, M.H.; Cai, J.X.; Liu, Z.N.; Mu, T.J.; Martin, R.R.; Hu, S.M. Pct: Point cloud transformer. IEEE Comput. Vis. Media 2021, 7, 187–199.

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268.

- Xu, H.; Liu, S.; Wang, G.; Liu, G.; Zeng, B. Omnet: Learning overlapping mask for partial-to-partial point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3132–3141.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

- Qiu, S.; Wu, Y.; Anwar, S.; Li, C. Investigating attention mechanism in 3d point cloud object detection. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 403–412.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).