Abstract

Brain tumor segmentation in magnetic resonance (MR) images is a challenging research problem. With the advent of machine learning, tumor detection and segmentation have generated significant interest in the research community. This research presents an efficient tumor detection and segmentation technique using an adaptive moving self-organizing map and fuzzy K-means clustering (AMSOM-FKM). The proposed method mainly focuses on tumor segmentation by extracting the tumor region. AMSOM is an artificial neural network technique trained in an unsupervised manner. This research used the online Kaggle BraTS-18 brain tumor dataset, which consists of 1691 images. The dataset was partitioned into 70% training, 20% testing, and 10% validation sets. The proposed model consists of four phases: (a) noise removal, (b) feature selection, (c) image classification, and (d) tumor segmentation. First, the MR images were normalized using Wiener filtering, and the gray-level co-occurrence matrix (GLCM) was used to extract the relevant features. Tumor images were then separated from non-tumor images using AMSOM classification. Finally, FKM was used to distinguish the tumor region from the surrounding tissue. The proposed AMSOM-FKM technique and existing methods, i.e., K-means and fuzzy C-means (KMFCM) and hybrid self-organizing map-FKM (SOM-FKM), were implemented in MATLAB and compared in terms of sensitivity, precision, accuracy, and similarity index values. The proposed technique achieved more than 10% better results than the existing methods.

1. Introduction

With the development of image-processing technologies, medical imaging has attracted many researchers. Today's imaging techniques include computed tomography (CT), X-ray, positron emission tomography (PET), and magnetic resonance imaging (MRI). MRI is the most frequently used modality for diagnosing brain tumors [1]. Doctors decide on therapy by assessing the current condition of the tumor from the diagnostic findings [2].

Tumor treatment depends on the tumor's nature and size, and its shape and location are equally important. It is necessary to determine whether a tumor is benign or malignant. A brain tumor is a mass of irregular tissue that can grow uncontrollably. A benign tumor can usually be removed without affecting the surrounding healthy tissue and rarely redevelops [3]. Malignant tumors interfere with the function of neighboring brain cells, primarily because of the excessive growth of irregular tissue. A benign tumor [4] is a distinct mass of tissue in the brain and can occur at any age. Treatment can be carried out with various methods, such as radiotherapy or chemotherapy.

The critical distinction is that benign tumors are homogeneous, whereas malignant tumors are heterogeneous. Their treatment also differs [5]: chemotherapy and radiation therapy are used to destroy malignant tumors. MRI provides detailed information about the brain that supports successful treatment of brain tumors, so a tumor region can be quickly identified (Figure 1). Trained experts use MRI for qualitative and quantitative analysis that depends on human vision, and such visual inspection is limited to eight-bit greyscale. Doctors grade brain tumors as grade I, II, III, or IV.

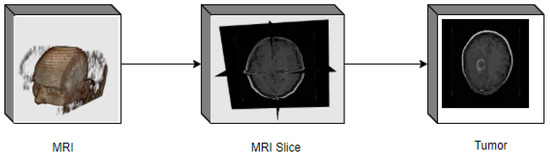

Figure 1.

MRI acquisition for brain tumor detection.

The test images in Figure 1 were collected with a 3 T Siemens Magnetom Spectra MR system and then converted into MRI slices. The brain is a complex organ closely enclosed by the skull. Neurologists note that MRI analysis is often carried out manually by slice selection; however, this is time-intensive and tedious, and can lead to incorrect diagnoses. The main goal of medical imaging is to obtain meaningful and accurate information from images with as little error as possible. Of the various medical imaging methods available, MRI is the most reliable and safest because it does not expose the body to ionizing radiation. The proposed work implements an energy-efficient hybrid segmentation approach to address this problem.

It combines an adaptive self-organizing map with k-means clustering [6]. Segmentation is intended to distinguish the tumor area from other pathological tissue and from normal tissue. The procedure uses GLCM features within the hybrid algorithm to segment tumors efficiently, and the tumor volume is measured after segmentation. With traditional methods, identifying a brain tumor in an MRI image is challenging. Image enhancement and feature identification are key steps in locating brain tumors. Feature extraction techniques include GLCM, statistical, texture, region-based, and wavelet features [7].

Additionally, there are various ways to extract the necessary features from an image, although using a very wide variety of features for classification can be a strong drawback. In general, segmenting the relevant region from the whole frame is challenging. Image segmentation splits an entire image into sets of regions with similar characteristics [8], such as grey level or colour, form, shape, contrast, rarity, and luminosity. This research presents brain tumor segmentation using a hybrid model. The proposed hybrid model uses an adaptive method that augments AMSOM with fuzzy K-means cluster formation. The key contributions of this research are as follows:

- This research proposes an automated workflow that can accurately identify and classify brain tumors. The proposed model's initial training features were compiled using the GLCM feature extraction method. GLCM is one of the best-known feature extraction techniques; it captures the textural relationships among an image's pixels;

- This research utilizes the online Kaggle brain tumor dataset;

- An FKM is used to distinguish the tumor region from the surrounding tissue;

- The proposed AMSOM-FKM technique and existing methods, i.e., K-means and fuzzy C-means (KMFCM) and hybrid self-organizing map-FKM (SOM-FKM), were implemented in MATLAB and compared in terms of sensitivity, precision, accuracy, and similarity index values;

- The proposed model achieves better precision, accuracy, and sensitivity than existing methods.

The rest of this paper is organized as follows: Section 2 covers the related work, Section 3.1 covers the dataset details, Section 3.2 explains the proposed method, Section 4 covers the experimental results and analysis, and Section 5 covers the conclusion and future work.

2. Related Work

Research [1] presented an automated ML-based system for detecting brain cancers in MRI scans. The system was implemented in three phases. The first phase determined whether the MR scans showed any signs of malignancy (binary approach). In the second phase, a multi-class method analyzed the MR scans to assign them to four classes, i.e., normal, glioma, meningioma, and pituitary tumor. Finally, class activation maps (CAMs) of each tumor type were generated as a supplementary resource to support specialists in identifying tumors. The ResNet50, InceptionV3, and MobileNet architectures achieved 100% overall accuracy for the binary task, while the figure for the VGG19 architecture was 99.71%. An automated brain tumor analysis method was presented in [2], focusing on detection, localization, and segmentation. On test data from 793 brain tumors, a 2-D superpixel segmentation method segmented the tumor accurately, with an average Dice index of 94 ± 2.6%. The approach was applied to MRI images from the BraTS2018 dataset to demonstrate its efficacy, and comparing its performance assessment parameters with those of the gold-standard approach showed its strength and clinical relevance.

An automatically optimized hybrid deep neural network (OHDNN)-based method for detecting brain tumors was presented in [3]. The method comprised two parts. First, the assembled images undergo pre-processing, including image enhancement and noise reduction. Next, the cleaned images are classified using the OHDNN. In addition, the adaptive rider optimization (ARO) technique was used to select classifier parameters to boost the performance of the convolutional neural network-long short-term memory (CNN-LSTM) classifier. The authors used an MRI image dataset in their experiments.

Brain tumor segmentation using CT was presented in [4]. CT scans can be used to diagnose traumatic brain injuries, malignant tumors, and skull fractures. In that study, images were extracted from a brain tumor database for testing. In the pre-processing phase, the images were cleaned of noise and high-frequency artefacts using median filters, which are nonlinear digital filters that reduce unwanted background noise in digital images and signals. The method combined a genetic algorithm (GA) with centroid optimizers such as grey wolf optimization (GWO) and social spider optimization (SSO) to improve the precision of the FCM centroids. Compared with prior studies, it achieved the best performance in a tumor image segmentation assessment, and the hybrid SSO-GA approach yielded the best accuracy (99.24%) compared with the individual algorithms. The study used MATLAB 2014 to build the classification and segmentation method for brain tumors.

A lightweight implementation of U-Net was presented in [5]. The architecture provided real-time MRI image segmentation without requiring much training data and without any extra data augmentation. In addition, the study illustrated how the three viewing planes could be used. The ResNet50 network was used to identify brain tumors in [6]. The authors analyzed the results using a variety of traditional data augmentation approaches and also proposed a principal component analysis-based technique. Networks trained from scratch and networks pretrained on the ImageNet dataset (transfer learning) were both evaluated. The best F1 score, 92.34%, was obtained with the ResNet50 network using the proposed strategy with transfer learning. Additionally, the Kruskal–Wallis test showed that the proposed approach differs from the other traditional methods at the 0.05 significance level.

An intracranial tumor segmentation (ICTS) dataset was constructed and presented in [7]. It was compiled from actual hospital radiosurgery procedures and annotated by experienced neurosurgeons and radiation oncologists. It includes contrast-enhanced T1-weighted images from 1500 patients, labelled with the tumors that needed to be removed. Artificial intelligence (AI)-based classification of brain cancers using convolutional neural network (CNN) methods on publicly available datasets was presented in [8]. It classifies tissue as tumor (neoplastic) or non-neoplastic. By combining a super-resolution approach with the ResNet50 architecture, the framework achieved an accuracy of 98.14%.

A contactless system for autonomously removing phantom tumor tissue was presented in [9]; its performance did not change as the size of the internal tumor varied from 7.5 to 12.5 mm. Automatic classification of brain tumor images without human interaction was presented in [10], in which several standard and hybrid ML models were constructed and evaluated in depth to find the most effective neural network model for brain tumor classification. Finally, a stacked classifier combining several distinct state-of-the-art methods was presented that outperformed the others, with reported performance figures of 99.2%, 99.1%, and 99.2%.

A convolutional layer performing a convolution operation for segmenting and recognizing brain tumors in MRI was presented in [11]. Several classification algorithms were used to determine whether an image was normal or pathological. After classification, Fuzzy C-Means (FCM) clustering and its related optimization approaches were used to identify the abnormal images and select those to segment. In [12], models were compared in detecting and classifying two distinct types of brain tumors. The features needed for classification were first extracted from several Inception modules, and the strategy was based on concatenating these features. With Inception-v3 and DenseNet201, the technique achieved the best performance in detecting brain tumors, with testing accuracies of 99.34% and 99.51%, respectively.

An image compression approach using a deep wavelet autoencoder (DWA) was presented in [13]. Combining the wavelet transform with the autoencoder dramatically reduced the size of the feature set passed to the subsequent DNN classification task. When evaluated on a dataset of brain images, the DWA-DNN classifier outperformed the other classifiers compared. A machine learning approach presented in [14] offered a noninvasive automated diagnostic approach for gliomas. First, standardized images were produced using image standardization techniques such as size normalization and background removal; next, low-contrast brain images were improved via modified dynamic histogram equalization; last, the skull was removed via outlier detection.

A multi-CNN technique for identifying brain cancers, combining multimodal information fusion with convolutional neural networks, was presented in [15]. The study used multimodal 3D-CNNs, an extension of 2D-CNNs, to extract brain lesion features of the different modalities in three dimensions. As a result, it could better capture the differences between modalities and avoid the 2D-CNNs' need for the full neighbourhood of lesions in their raw input. Adding a normalization layer between the convolution layers and the pooling layer sped up the network's convergence and mitigated overfitting. The test results demonstrated that the approach could accurately locate tumor lesions, with improved correlation coefficient, sensitivity, and specificity, and its detection accuracy was much higher than that of the compared methods.

A Sobel edge operator combined with a closed-contour algorithm and an image-dependent threshold was presented in [16]. In [17], a computer-aided diagnosis (CAD) method based on the multi-threshold K-means algorithm was used to detect the tumor area and shape. A study [18] reviewed numerous methods for diagnosing neoplasms, introduced various classification techniques, and developed a hybrid approach for classifying brain tumors from MRI images. Research [19] reported automated morphological identification and differentiation of non-enhancing tumors from stable brain tumors through localization processes. It implemented an automatic CNN segmentation method that considered local features of the input image together with global area features, and developed a fully automated brain tissue detection system using fluid-attenuated inversion recovery (FLAIR) MRI images.

A study [20] used a noise reduction technique that could remove specific image features. A hybrid approach based on the discrete wavelet transform was discussed in [21] to differentiate normal from abnormal MRI brain tumor images, together with a novel classification strategy using vector quantization to separate normal brain tissue segments from damaged segments. An improved approach with feature optimization for detecting brain tumors was used in [22]. Its scheme used a threshold algorithm and a comparative brain identification test to increase accuracy and reduce complexity during medical image segmentation, and the image boundaries were extracted with a soft edge detection operator. A robust, intelligent algorithm that reduces the impact of image artefacts and blurring was discussed in [23]. This approach extracted the MRI brain tumor region by thresholding, and morphological operations were used to determine edge boundaries and remove the skull correctly. Earlier optimization methods became trapped in local optima, certain implementations failed, and PSO lacked certain features [24].

To address this problem, [25] introduced SOM-FKM for segmentation and classification of MRI images, but it suffered from area overlap. It also introduced KMFCM and intensity adjustment by analyzing volume. In [26], thresholding was used in Alzheimer's disease to combine existing and enhanced segmentation methods for MRI segmentation. Nearly all of the methods mentioned above rely on segmentation of the MR brain image sequence, whereas the proposed approach clearly distinguishes the T1, T2, and FLAIR image sequences [27]. Table 1 summarizes the existing works.

Table 1.

Related works summary.

3. Materials and Methods

In any clinical evaluation technique, the overall performance of the developed analysis system depends on the database chosen for the problem to be solved.

3.1. Dataset

The proposed model used the online Kaggle BraTS 2018 dataset [27]. The images were acquired in a single imaging session on a Siemens MRI scanner (Siemens, Erlangen, Germany) with the following parameters: repetition time TR = 9.8 ms, echo time TE = 4.0 ms, flip angle = 10°, inversion time TI = 20 ms, delay time TD = 200 ms, 128 sagittal 1.25 mm gapless slices, and a 256 × 256 image matrix with 1 × 1 mm pixels [28]. Clinical tumor cases 21 to 37 were used, comprising 1696 images, 12 of which are shown in Figure 2. The brain MR imaging samples were classified as T1, T2, FLAIR (fluid-attenuated inversion recovery), and MRS (magnetic resonance spectroscopy) images.

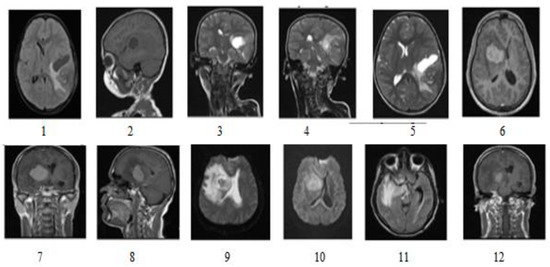

Figure 2.

The dataset includes different types of image sequences 1–12 (T1, T2, and FLAIR) [22].

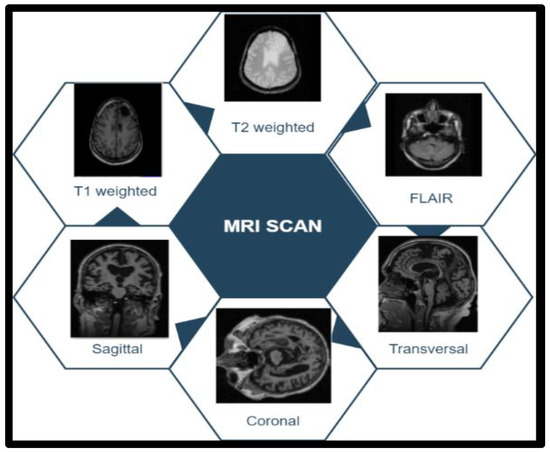

T1 images show white matter (the internal tissue area) in white and grey matter (the external tissue area) in grey [28]. In T2-weighted images, grey matter appears white and white matter appears grey. FLAIR imaging allows the radiologist to visualize brain tissue properly by suppressing the signal from fluid (water and cerebrospinal fluid) in sagittal, coronal, and transverse MRI slices. Figure 3 shows the tumor image categories in the dataset. The training set included 70% of the total images, the validation set 20%, and the testing set 10%. The T1, T2, and FLAIR image sequences were used for the proposed method.

Figure 3.

Tumor image categories in the dataset.
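A seeded random split reproducing the 70/20/10 proportions stated above can be sketched in Python. This is an illustration under assumptions: images are indexed by integers, the split is made per image, and `split_indices` is a hypothetical helper; the authors' actual (MATLAB) split procedure is not described.

```python
import numpy as np

def split_indices(n_images, train_frac=0.7, val_frac=0.2, seed=0):
    """Randomly partition image indices into train/validation/test subsets.

    The test subset receives whatever remains after the training and
    validation fractions (10% with the defaults).
    """
    rng = np.random.default_rng(seed)      # seeded for reproducibility
    order = rng.permutation(n_images)      # shuffled indices 0..n_images-1
    n_train = int(round(train_frac * n_images))
    n_val = int(round(val_frac * n_images))
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]
    return train, val, test

train, val, test = split_indices(1696)
print(len(train), len(val), len(test))     # → 1187 339 170
```

Splitting per image can place slices of the same patient in different subsets; splitting by patient identifier instead avoids that form of leakage between training and test data.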

3.2. Proposed Method

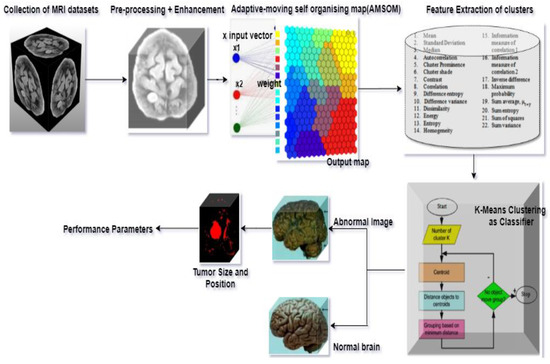

This research presents an efficient tumor detection and segmentation technique using an adaptive moving self-organizing map and fuzzy K-means clustering (AMSOM-FKM). The proposed approach mainly focuses on segmenting tumors by extracting the tumor region, and it was implemented in MATLAB. As shown in Figure 4, it consists of the following phases: pre-processing and enhancement, feature extraction, AMSOM segmentation, and fuzzy K-means clustering. Based on the algorithm, each MRI image was classified as normal or abnormal.

Figure 4.

Process flow of the proposed method.

3.2.1. Pre-Processing

The MRI images were pre-processed first and then used in the subsequent processes. During pre-processing, the input images were resized to 256 × 256 pixels without losing any image data. The essential tasks are to remove excessive image noise, patient annotations (name, age, sex, place and address of residence), and the skull. The RGB colour images were then converted to 256-level (0–255) greyscale. This helped to visualize image quality and to achieve a good signal-to-noise ratio. Image enhancement was carried out after the pre-processing steps [29].
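The resizing, greyscale conversion, and noise-removal steps can be sketched with NumPy and SciPy. This is not the authors' MATLAB code: bilinear interpolation, ITU-R BT.601 luma weights, and a 3 × 3 adaptive Wiener filter (the abstract names Wiener filtering) are our assumptions, `preprocess` is a hypothetical name, and skull stripping and removal of patient annotations are not shown.

```python
import numpy as np
from scipy import ndimage, signal

def preprocess(image, size=256, wiener_window=3):
    """Resize to size x size, convert to greyscale, and Wiener-filter an MR slice."""
    img = np.asarray(image, dtype=np.float64)
    if img.ndim == 3:
        # RGB -> grey with ITU-R BT.601 luma weights
        img = img[..., :3] @ np.array([0.299, 0.587, 0.114])
    # Bilinear (order=1) resampling onto a size x size grid
    img = ndimage.zoom(img, (size / img.shape[0], size / img.shape[1]), order=1)
    # Adaptive Wiener filter based on local mean/variance; the noise power
    # is estimated from the image because `noise` is not given
    img = signal.wiener(img, mysize=wiener_window)
    # Back to 8-bit grey levels 0-255
    return np.clip(np.rint(img), 0, 255).astype(np.uint8)
```

For example, `preprocess(rgb_slice)` on a 512 × 384 × 3 array returns a 256 × 256 `uint8` image. The window size trades noise suppression against edge blurring; 3 × 3 is simply SciPy's default.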

3.2.2. Image Enhancement

Numerous image processing methods have been developed to improve image quality. Histogram equalization (HE) is one of the most popular global methods: it redistributes the image's grey levels over the full range of the histogram [30]. In the next phase, the suggested hybrid method used the image brightness to improve image quality [31]. Let mbi and mbo denote the mean brightness of the input image and of the image f obtained after equalization, respectively; the grey-level pixel information of the result is given by the function g.
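Global histogram equalization and the mean-brightness values mbi and mbo can be sketched in NumPy as follows. This is the textbook CDF-mapping form of HE, offered as an illustration; the brightness-based variant used in [31] is not reproduced, and the function names are hypothetical.

```python
import numpy as np

def equalize_histogram(img):
    """Global histogram equalization of an 8-bit greyscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)   # pixel count per grey level
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]             # CDF at the darkest occupied level
    if cdf[-1] == cdf_min:                           # constant image: nothing to spread
        return img.copy()
    # Map every grey level through the normalised CDF onto the full 0-255 range
    lut = np.rint((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

def mean_brightness(img):
    """Mean grey level, e.g. mbi for the input and mbo for the equalized image."""
    return float(np.mean(img))
```

Typical use is `g = equalize_histogram(f)` followed by comparing `mean_brightness(f)` with `mean_brightness(g)`. Global HE tends to move the mean brightness toward the middle of the grey range, which is the usual motivation for brightness-preserving variants.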

3.2.3. Clustering

The adaptive moving self-organizing map (AMSOM) is a clustering technique used in [32], which was adopted in this work for MRI segmentation. In the first step, voxel intensities are evaluated and modelled using SOM (self-organizing map) prototypes. The SOM is an unsupervised learning method that maps inputs with similar characteristics to nearby units of a two- or higher-dimensional lattice, while dissimilar inputs map to distant units of the output space. The position vectors of the neurons were initialized to the neuron positions of a hexagonal grid. Let $x(t) \in \mathbb{R}^{D}$ denote the input vector presented at time $t$ and $w_i(t)$ the prototype (weight) vector of neuron $i$. The neuron whose prototype is closest to the input is the winning (best-matching) neuron $c$, computed at each iterative step as [33]:

$$c(t) = \arg\min_{i} \lVert x(t) - w_i(t) \rVert$$

The prototypes were updated sequentially:

$$w_i(t+1) = w_i(t) + \alpha(t)\, h_{ci}(t)\, \left[ x(t) - w_i(t) \right]$$

where $\alpha(t)$ is the exponentially decaying learning factor and $h_{ci}(t)$ is the neighbourhood function.

Both factors decrease over time. As the neighbourhood function shrinks, each update affects only a few units around the winner. Here, $r_i$ denotes the position of unit $i$ in the output space, and $\lVert r_c - r_i \rVert = \sqrt{\sum_{k} (r_{c,k} - r_{i,k})^2}$ is the Euclidean distance between the winning unit $c$ and unit $i$ in that space. After the initial phase, two symmetric matrices of the same size, T and P, are maintained: T(p, q) = 1 if neurons p and q are connected and 0 otherwise, and P(p, q) records the age of the edge between them [21]. An age of 0 means that the two neurons are nearest neighbours in the present epoch. The threshold $M_{Tr}$ is a function of the data dimension D [34,35,36].
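The winner search and sequential update can be illustrated with a conventional online SOM. This sketch is not AMSOM itself: it assumes a Gaussian neighbourhood, one exponential decay schedule shared by the learning rate and the neighbourhood radius, and fixed neuron positions on a hexagonal grid, and it omits AMSOM's moving neurons and the T and P matrices. The function names and default hyperparameters are hypothetical.

```python
import numpy as np

def hex_grid(rows, cols):
    """Output-space positions r_i of rows*cols neurons on a hexagonal lattice."""
    r, c = np.divmod(np.arange(rows * cols), cols)
    x = c + 0.5 * (r % 2)            # odd rows shifted by half a unit
    y = r * np.sqrt(3) / 2           # row spacing of a regular hexagonal grid
    return np.column_stack([x, y])

def train_online_som(X, rows=5, cols=5, epochs=10, alpha0=0.5, sigma0=2.0, seed=0):
    """Conventional online SOM (not AMSOM); X has shape (n_samples, n_features)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    R = hex_grid(rows, cols)
    W = X[rng.choice(len(X), rows * cols)]        # initial prototypes drawn from the data
    total_steps = epochs * len(X)
    t = 0
    for _ in range(epochs):
        for x in X[rng.permutation(len(X))]:
            decay = np.exp(-t / total_steps)      # exponential decay of alpha and sigma
            alpha, sigma = alpha0 * decay, sigma0 * decay
            c = np.argmin(np.linalg.norm(W - x, axis=1))   # winning neuron
            d2 = np.sum((R - R[c]) ** 2, axis=1)           # squared output-space distance to winner
            h = np.exp(-d2 / (2 * sigma ** 2))             # Gaussian neighbourhood h_ci(t)
            W += alpha * h[:, None] * (x - W)              # w_i += alpha * h_ci * (x - w_i)
            t += 1
    return W, R
```

For MRI intensities, `X` would be the pixel values reshaped to a single column, e.g. `train_online_som(img.reshape(-1, 1).astype(float))`; a 5 × 5 map matches the map size reported for Figure 7.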

In the adaptive learning phase, the neurons' weights are updated with the batch form of the SOM algorithm:

$$w_i(t+1) = \frac{\sum_{j} n_j(t)\, h_{ji}(t)\, \bar{x}_j(t)}{\sum_{j} n_j(t)\, h_{ji}(t)}$$

where $n_j(t)$ is the (integer) number of input vectors mapped to neuron $j$, $h_{ji}(t)$ is the neighbourhood function, and $\bar{x}_j(t)$ is the average of the input vectors $x$ mapped to neuron $j$. After the weight vectors are updated, the distances between the neuronal vectors $w_i$ are computed; these distances measure neuronal similarity in the input space [34].

In the corresponding update, $\delta_{ji}(t)$ is a distinct feature term (with value 0.01), $\mu$ is a neighbourhood function, and $\gamma$ controls, as a fraction, how quickly the neighbourhood shrinks. Once learning is complete, no neurons are added or deleted; however, the weight and position vectors continue to adapt at a lower rate [37]. The clusters are then produced with the following equation:

Here, the equation uses the membership element of each pixel in each cluster, the number of AMSOM clusters k, and the number of input pixels n.
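The batch weight update described in this subsection, in which each prototype becomes a neighbourhood-weighted average of the mean vectors of the inputs mapped to each unit, can be written in a few lines of NumPy. The sketch assumes the standard batch-SOM rule w_i(t+1) = Σ_j n_j(t) h_ji(t) x̄_j(t) / Σ_j n_j(t) h_ji(t), reads n_j(t) as the number of inputs mapped to unit j, and uses a Gaussian h_ji(t). AMSOM's neuron movement, edge ages, and neuron addition or removal are not included, and `batch_som_step` is a hypothetical name.

```python
import numpy as np

def batch_som_step(X, W, R, sigma):
    """One batch-SOM step: w_i = sum_j n_j h_ji xbar_j / sum_j n_j h_ji."""
    X, W, R = (np.asarray(a, dtype=float) for a in (X, W, R))
    K = len(W)
    # Winner (best-matching unit) for every input vector
    bmu = np.argmin(((X[:, None, :] - W[None, :, :]) ** 2).sum(axis=2), axis=1)
    n = np.bincount(bmu, minlength=K).astype(float)   # n_j(t): inputs mapped to unit j
    S = np.zeros((K, X.shape[1]))
    np.add.at(S, bmu, X)                              # S_j = n_j(t) * xbar_j(t)
    # Gaussian neighbourhood over output-space positions: H[j, i] = h_ji(t)
    d2 = ((R[:, None, :] - R[None, :, :]) ** 2).sum(axis=2)
    H = np.exp(-d2 / (2.0 * sigma ** 2))
    numerator = H.T @ S                               # sum_j h_ji n_j xbar_j
    denominator = H.T @ n                             # sum_j h_ji n_j
    return numerator / denominator[:, None]
```

Because n_j x̄_j is just the sum of the inputs won by unit j, the sketch never divides by n_j; the only division is by the neighbourhood-weighted counts. With a very small `sigma`, a unit that wins no inputs would get a zero denominator, so practical code keeps `sigma` above the lattice spacing or skips such units.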

3.2.4. Feature Extraction

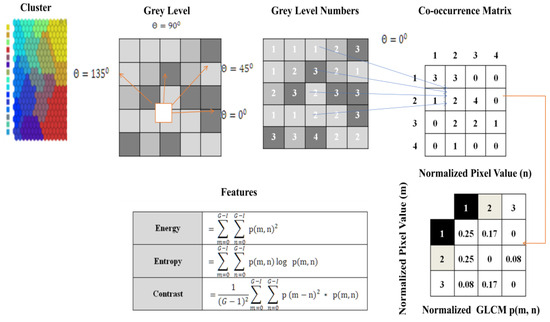

In this phase, the generated clusters were used for feature extraction. The grey-level co-occurrence matrix (GLCM) was constructed at a distance of d = 1 for angles of 0°, 45°, 90°, and 135°. The GLCM captures texture, i.e., the perceived surface quality of a region, such as smooth, silky, or rough [38,39,40,41,42,43].

Texture statistics are of two orders. First-order measures, such as variance, are computed from the original image values and do not consider relationships between neighbouring pixels. Second-order measures describe the relationship between pairs of (usually adjacent) pixels in the original image. GLCM is the feature extraction tool used here for texture analysis [44]. As Figure 5 shows, GLCM features characterize the surface of an image by counting how often pairs of pixels with specific values and in a given spatial relationship occur in the image [45]; a matrix is generated, and the statistical measures listed in Figure 6 are then extracted from it.

Figure 5.

Process of feature extraction using AMSOM cluster.

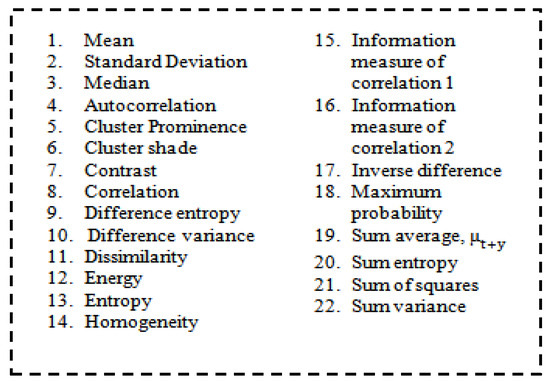

Figure 6.

List of features.
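The GLCM construction at d = 1 and a few standard second-order statistics can be sketched in NumPy. This is an illustration, not the authors' 22-feature set: quantisation to 8 grey levels, symmetric counting, and the five features shown are our assumptions; the offsets follow the convention of MATLAB's `graycomatrix` ([0 1], [-1 1], [-1 0], [-1 -1] for 0°, 45°, 90°, 135°); and the function names are hypothetical.

```python
import numpy as np

# (row, col) offsets for distance d = 1, as in MATLAB's graycomatrix
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def glcm(img, angle, levels=8):
    """Symmetric, normalised GLCM of an 8-bit image at distance 1."""
    q = (np.asarray(img, dtype=np.int64) * levels) // 256   # quantise 0-255 into `levels` bins
    dr, dc = OFFSETS[angle]
    H, W = q.shape
    r0, r1 = max(0, -dr), H - max(0, dr)
    c0, c1 = max(0, -dc), W - max(0, dc)
    a = q[r0:r1, c0:c1]                                     # reference pixels
    b = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]                 # neighbours at offset (dr, dc)
    P = np.zeros((levels, levels))
    np.add.at(P, (a.ravel(), b.ravel()), 1.0)               # count grey-level pairs
    P = P + P.T                                             # symmetric: count both directions
    return P / P.sum()

def glcm_features(P):
    """A few standard second-order statistics of a normalised GLCM."""
    i, j = np.indices(P.shape)
    mu_i, mu_j = np.sum(i * P), np.sum(j * P)
    sd_i = np.sqrt(np.sum(P * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(P * (j - mu_j) ** 2))
    nz = P[P > 0]
    return {
        "contrast": float(np.sum(P * (i - j) ** 2)),
        "energy": float(np.sum(P ** 2)),                    # angular second moment
        "homogeneity": float(np.sum(P / (1.0 + np.abs(i - j)))),
        "correlation": float(np.sum(P * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j))
        if sd_i > 0 and sd_j > 0 else float("nan"),        # undefined for flat regions
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }

def texture_features(img):
    """Average each feature over the four angles at d = 1."""
    per_angle = [glcm_features(glcm(img, a)) for a in OFFSETS]
    return {k: float(np.mean([f[k] for f in per_angle])) for k in per_angle[0]}
```

On a one-pixel checkerboard of 0 and 255, every horizontal pair differs by the full quantised range (levels 0 and 7), so the 0° contrast is 49, while diagonal neighbours are identical and the 45° contrast is 0. Averaging over the four angles makes the features approximately rotation-invariant.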

3.3. Classification

FKM assigned each object to a group, computed the distances between all groups according to the chosen linkage criterion, and merged the two closest groups until only one cluster remained. The distance was estimated using Equation (8) [46].

where k is the iteration index, N is the number of data points (pixels) in the input image, M is the number of clusters formed by FKM, m is the fuzziness coefficient, $d_{ij}^2 = \lVert x_i - c_j \rVert^2$ is the squared Euclidean distance between pixel $x_i$ and cluster centre $c_j$, and $u_{ij}$ is the membership coefficient that arises from the overlap of clusters.
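The quantities listed above (N pixels, M clusters, fuzziness coefficient m, squared Euclidean distances, and overlapping membership coefficients) are those of standard fuzzy c-means. The sketch below implements that standard algorithm, on the assumption that FKM follows the same alternating centre and membership updates; the function names, random initialisation, and stopping tolerance are hypothetical.

```python
import numpy as np

def fuzzy_cmeans(X, M=3, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Standard fuzzy c-means. X: (N, D) data; returns centres (M, D) and memberships (N, M)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    U = rng.random((len(X), M))
    U /= U.sum(axis=1, keepdims=True)                # memberships of each pixel sum to 1
    for _ in range(max_iter):
        Um = U ** m
        C = (Um.T @ X) / Um.sum(axis=0)[:, None]     # membership-weighted cluster centres
        d2 = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)   # squared distances d_ij^2
        d2 = np.fmax(d2, 1e-12)                      # avoid division by zero at a centre
        inv = d2 ** (-1.0 / (m - 1))
        U_new = inv / inv.sum(axis=1, keepdims=True) # u_ij = 1 / sum_l (d_ij / d_il)^(2/(m-1))
        converged = np.max(np.abs(U_new - U)) < tol
        U = U_new
        if converged:
            break
    return C, U

def fcm_objective(X, C, U, m=2.0):
    """J = sum_i sum_j u_ij^m * ||x_i - c_j||^2."""
    X = np.asarray(X, dtype=float)
    d2 = ((X[:, None, :] - C[None, :, :]) ** 2).sum(axis=2)
    return float(np.sum((U ** m) * d2))
```

For segmentation, the pixel intensities are passed as a column (`img.reshape(-1, 1)`), and `U.argmax(axis=1).reshape(img.shape)` gives a hard label map. Larger m makes memberships softer, which is how overlapping tissue boundaries are represented.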

3.4. Volume Estimation

Tumor volume was evaluated by connected-region analysis of the segmented images: the number of pixels covered by the tumor region, relative to the total number of pixels, was converted to mm³.
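Connected-region volume estimation can be sketched with SciPy's `ndimage.label`. Assumptions: the segmented tumor masks form a stack of slices, only the largest connected component is counted, and the voxel size is taken from the acquisition parameters in Section 3.1 (1 × 1 mm pixels, 1.25 mm gapless slices); `tumor_volume_mm3` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage

def tumor_volume_mm3(masks, pixel_mm=(1.0, 1.0), slice_mm=1.25):
    """Volume of the largest 3-D connected region in a stack of binary tumor masks."""
    vol = np.asarray(masks, dtype=bool)          # shape (slices, rows, cols)
    labels, n = ndimage.label(vol)               # face-connected (6-neighbour) components
    if n == 0:
        return 0.0
    sizes = np.bincount(labels.ravel())[1:]      # voxel count per component (skip background 0)
    voxel_mm3 = pixel_mm[0] * pixel_mm[1] * slice_mm
    return float(sizes.max() * voxel_mm3)
```

Keeping only the largest component discards isolated false-positive voxels; if several separate lesions should be measured, summing all component sizes is the alternative.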

3.5. Performance Parameters

The main quality parameters that determine the precision and efficiency of the proposed algorithm are statistical measures, such as the mean square error, evaluated using Equations (10)–(19). These measures are briefly defined in [47,48,49,50,51,52,53].

$$\text{Computational time} = T_{\text{pre-processing}} + T_{\text{segmentation}} + T_{\text{classification}}$$

where R is the raw image, S is the segmented image, TP is the number of true positives, TN the number of true negatives, FP the number of false positives, and FN the number of false negatives [53].
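The quantities R, S, TP, TN, FP, and FN lead to the usual overlap and error measures. The sketch below uses their standard definitions, on the assumption that Equations (10)–(19) follow them; an 8-bit peak value of 255 is assumed for PSNR, and the function names are hypothetical.

```python
import numpy as np

def confusion_counts(pred, truth):
    """TP, TN, FP, FN for binary segmentation masks."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    tp = int(np.sum(pred & truth))
    tn = int(np.sum(~pred & ~truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return tp, tn, fp, fn

def overlap_metrics(pred, truth):
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "sensitivity": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),      # equals the Tanimoto coefficient for binary masks
    }

def mse_psnr(R, S, max_value=255.0):
    """Mean square error and PSNR (dB) between raw image R and segmented image S."""
    R, S = np.asarray(R, float), np.asarray(S, float)
    mse = float(np.mean((R - S) ** 2))
    psnr = float("inf") if mse == 0 else float(10 * np.log10(max_value ** 2 / mse))
    return mse, psnr
```

The ratios are undefined when their denominators are zero (for example, sensitivity on a slice with no tumor pixels), which a production version would need to handle explicitly. The PSNR formula also makes the inverse relationship with MSE explicit.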

3.6. Proposed AMSOM-FKM Algorithm

The steps of the proposed Algorithm 1 are as follows.

| Algorithm 1 Proposed AMSOM-FKM Algorithm |
|---|
| Input: MRI image dataset |
| Output: Tumor and non-tumor images |

4. Result and Analysis

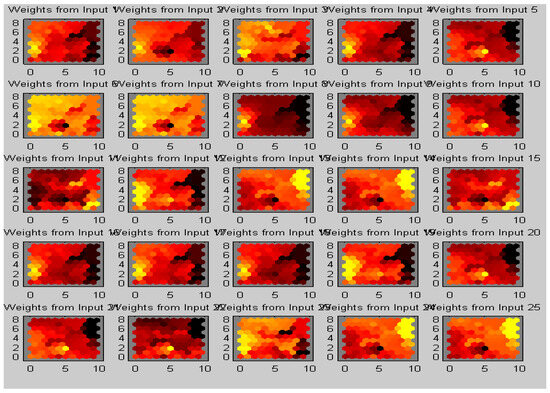

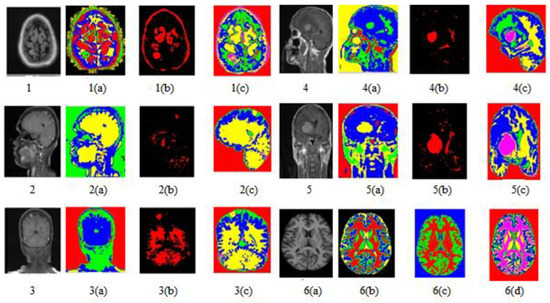

The work was carried out on a laptop with an Intel(R) Core(TM) i5-5005U CPU @ 2.00 GHz and 8 GB of RAM using MATLAB R2018b. The proposed algorithm was evaluated on 42 real MRI data sets from patients of different age groups and genders, predicting the tumor's type, position, and area. Pre-processing consisted of skull removal and image enhancement, followed by clustering, whose Voronoi output is shown in Figure 7. The segmentation results and the comparison of the algorithms are shown in Figure 8. The computed validation parameters show a lower mean square error than previous algorithms such as KMFCM and SOM-FKM.

Figure 7.

AMSOM Voronoi diagram illustrating 5 × 5 maps, with different colours indicating the clusters in each map.

Figure 8.

Image segmentation of T1, T2, and FLAIR image sequences.

Figure 8 shows all abnormal images. Figure 8(1) is an axial FLAIR image with meningioma; Figure 8(2) is a T1 sagittal image from a 35-year-old patient with a primitive neuroectodermal tumor (PNET); Figure 8(3) is a contrast-enhanced T1 coronal image; and Figure 8(4) and Figure 8(5) show unclear identification of the tumor region and portions of edema.

Figure 8(6) is a normal brain image. In Figure 8(1a,1b), the SOM-FKM and KMFCM algorithms did not identify the lateral ventricular system, GM, or WM, whereas the proposed AMSOM-FKM methodology achieved clear tissue separation and tumor identification in Figure 8(1c). SOM-FKM could not identify the tumor, whereas AMSOM-FKM delineated a clear tumor region; Figure 8(4a,5a) show unclear identification of the tumor region and edema with SOM-FKM and KMFCM.

In contrast, the proposed AMSOM-FKM algorithm produced clear tissue groups with separate tumor and edema regions, as shown in Figure 8(4c) and Figure 8(1c). Peak signal-to-noise ratio (PSNR) and mean square error (MSE) quantify the squared error between the original and reconstructed images. PSNR and MSE are inversely related, so a higher PSNR indicates better image quality. The obtained MSE and PSNR were 0.03 and 62.91 dB, respectively, which supports the algorithm's efficiency.

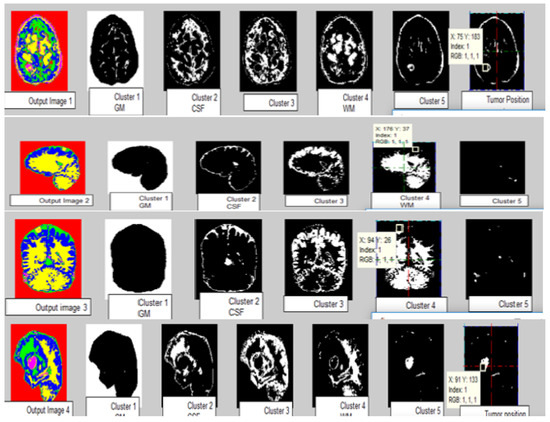

Figure 9 illustrates the clusters formed during extraction of the tumor region, together with the tumor identification and tissue segmentation used for size estimation. Output Image 1 produced five clusters, with the tumor in cluster 5 at position X = 75 mm, Y = 183 mm. Output Image 2 had five clusters, with the tumor at X = 176 mm, Y = 37 mm. In Output Image 3, the tumor was at X = 94 mm, Y = 26 mm. Output Image 4 had five clusters, with the tumor at X = 99 mm, Y = 133 mm.

Figure 9.

Tumor size and location using Output segmented images. Output Image 1 produces 5 clusters with the exact Tumor in cluster 5 with positions X = 75 mm, Y = 183 mm. Output Image 2 has 5 clusters with positions X = 176 mm and Y = 37 mm. Output Image 3 with position X = 94 mm, Y = 26 mm. Output Image 4 produces 5 clusters with positions X = 99 mm and Y = 133 mm.
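A tumor position such as those reported for Figure 9 can be obtained from the centroid of the pixels in the tumor cluster, scaled by the pixel spacing. This is a sketch under assumptions: X is taken along image columns and Y along rows with the origin at the top-left pixel, the pixel spacing is 1 × 1 mm as in Section 3.1, and `tumor_position_mm` is a hypothetical name; the coordinate convention used for Figure 9 is not stated.

```python
import numpy as np
from scipy import ndimage

def tumor_position_mm(cluster_labels, tumor_cluster, pixel_mm=(1.0, 1.0)):
    """Centroid (X, Y) in mm of the pixels assigned to the tumor cluster."""
    mask = np.asarray(cluster_labels) == tumor_cluster
    if not mask.any():
        return None                                   # cluster absent from this image
    row, col = ndimage.center_of_mass(mask.astype(float))
    return col * pixel_mm[1], row * pixel_mm[0]       # X along columns, Y along rows
```

Given a label map from the clustering step, `tumor_position_mm(labels, 5)` would return the centroid of cluster 5.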

Tumor size varied widely, and size correlates directly with disease status and the treatment approach. Identifying small tumors reduces misdiagnosis and supports early diagnosis. The machine learning algorithms for the model were selected based on the datasets. Underfitting and overfitting are the two main conditions that can affect accuracy: underfitting occurs when the model is too simple to capture the structure of the data, and overfitting occurs when it fits the training data too closely, often because the training data are limited. Therefore, a well-fitting model is required for good performance. Table 2 shows the experimental results of the proposed AMSOM-FKM and existing methods, i.e., KMFCM, SOM-FKM, and AMSOM [54].

Table 2.

Experimental results (Proposed vs. Existing Method).

Table 2 lists the size of the extracted tumor and edema regions, and Table 3 compares the methodologies and their accuracy. The 22 features used by the proposed AMSOM-FKM algorithm were the main factor in its outperforming the other techniques. The average accuracy was 98% for KMFCM [20], 94% for SOM-FKM [19], and 99.8% for the proposed method.

Table 3.

Estimation of tumor size.

Table 4 compares the results of different algorithms implemented by other researchers on MRI images containing one tumor region. Among these methods, the Otsu method achieved an accuracy of 97.3% and the hybrid clustering technique 97.69%. Table 4 indicates that accuracy improved as the number of features increased; hence, the proposed method used 22 features.

Table 4.

Accuracy analysis with various techniques.

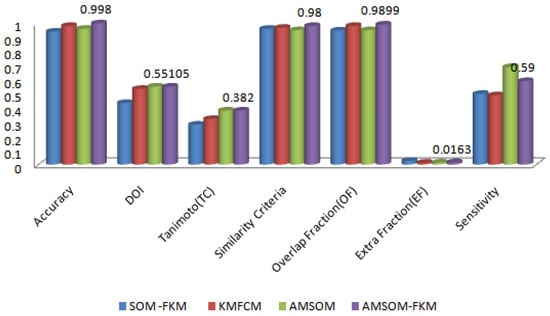

As Figure 10 shows, the validation parameters are plotted on the x-axis and their corresponding values on the y-axis, which is dimensionless. The parameters compared were accuracy, DOI, Tanimoto index, similarity criteria, overlap fraction, extra fraction, and sensitivity. The high accuracy indicates that the algorithm classified most pixels correctly, and the high sensitivity indicates that it detected most of the true tumor pixels. These results show that the proposed algorithm achieved better accuracy than the existing techniques.

Figure 10.

Comparative analysis of various algorithms over validation parameters.
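The parameters compared in Figure 10 are computed from the pixel-wise agreement between a segmentation mask and a ground-truth mask. A minimal sketch follows, assuming binary masks flattened to lists (1 = tumor). Accuracy, sensitivity, precision, the similarity index (Dice) and the Tanimoto (Jaccard) index use their standard definitions. The overlap fraction OF = TP/(TP+FN) and extra fraction EF = FP/(TP+FN) are assumed definitions that the text does not state; under them, OF coincides with sensitivity. DOI is omitted because its formula is not given here.

```python
def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, FN, TN for two equal-length binary masks (1 = tumor)."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def validation_metrics(pred, truth):
    """Overlap-based validation parameters; empty masks raise ZeroDivisionError."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": tp / (tp + fn),                    # true tumor pixels found
        "precision": tp / (tp + fp),
        "similarity_index": 2 * tp / (2 * tp + fp + fn),  # Dice coefficient
        "tanimoto": tp / (tp + fp + fn),                  # Jaccard index
        # Assumed definitions, not stated in the text:
        "overlap_fraction": tp / (tp + fn),
        "extra_fraction": fp / (tp + fn),
    }


truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(validation_metrics(pred, truth))
```

In this toy case there are 3 true positives, 1 false positive, 1 false negative and 5 true negatives, giving an accuracy of 0.8 and a Tanimoto index of 0.6.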

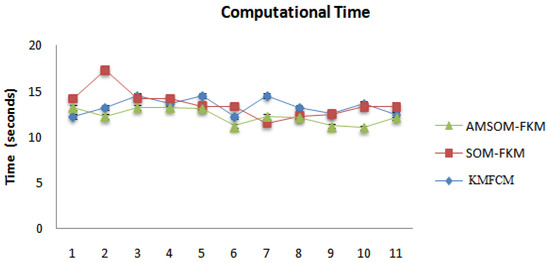

The proposed algorithm allows neurons to change positions during training, which provides better visualization and shorter training time, as shown in Figure 11. As a result, the number of clusters was adequate and the cluster points were accurate enough to segment the tissue regions properly. The proposed algorithm also achieved better DOI and TC values, with averages of 0.435105 and 0.282381, respectively. The accuracy reported in Table 4 shows that the proposed algorithm produced satisfactory results, with 99.8% accuracy. Table 5 presents the performance evaluation in terms of the Dice score and Jaccard index.

Figure 11.

Comparative analysis of various algorithms for time consumption.

Table 5.

Dice score and Jaccard Index analysis with various techniques.
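For any single pair of masks A and B, the Dice score D = 2|A∩B| / (|A| + |B|) and the Jaccard index J = |A∩B| / |A∪B| are related by D = 2J / (1 + J). Per image, the two scores in Table 5 therefore rank methods identically, although averages taken over many images need not satisfy the identity exactly. The sketch below checks the relation on hypothetical pixel-coordinate sets.

```python
def dice(a, b):
    """Dice score of two sets of pixel coordinates."""
    return 2 * len(a & b) / (len(a) + len(b))


def jaccard(a, b):
    """Jaccard (Tanimoto) index of two sets of pixel coordinates."""
    return len(a & b) / len(a | b)


def dice_from_jaccard(j):
    """Convert a per-image Jaccard index to the equivalent Dice score."""
    return 2 * j / (1 + j)


# Hypothetical 4 x 4 masks; the prediction is shifted down by one row.
truth = {(r, c) for r in range(1, 5) for c in range(4)}
pred = {(r, c) for r in range(0, 4) for c in range(4)}

print(dice(pred, truth), jaccard(pred, truth))  # 0.75 0.6
print(dice_from_jaccard(jaccard(pred, truth)))
```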

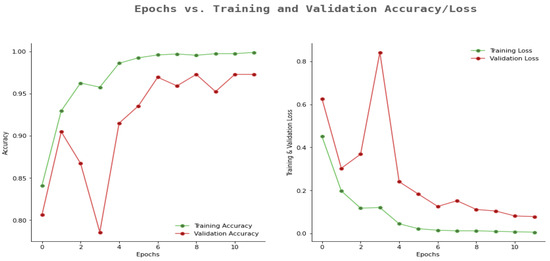

Similarly, Figure 12 shows the accuracy and loss of the proposed model over the training epochs. Training and validation accuracy improved as the number of epochs increased. Training and validation loss were higher when the number of epochs was small and decreased as the number of epochs increased.

Figure 12.

Proposed model accuracy and loss results.

5. Conclusions and Future Work

In this research, we developed AMSOM-FKM, an efficient brain tumor segmentation method based on an adaptive moving self-organizing map and the fuzzy K-means clustering method, using the Brats18 MRI tumor image database. Detecting and extracting the heterogeneous tumor area from the many MR brain images in the collection is difficult. The proposed method outperformed the AMSOM and FKM algorithms in segmenting and separating the tumor area. Classifiers for segmentation can be built by integrating prior information with features extracted from brain MR images. The proposed method and the existing KMFCM, SOM-FKM, and AMSOM methods were implemented in MATLAB and evaluated with several performance measures, i.e., detection rate, accuracy, validation loss, MSE, PSNR, and DOI. The proposed method achieved more than 10% better results than the existing methods.
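MSE and PSNR, listed above among the performance measures, have standard definitions. A minimal sketch follows, assuming 8-bit images (peak value 255) stored as nested lists; the two images are hypothetical. MSE is the mean squared pixel difference, and PSNR = 10 log10(peak² / MSE) in dB.

```python
import math


def mse(reference, test):
    """Mean squared error between two equal-sized images (nested lists)."""
    diffs = [(r - t) ** 2
             for row_r, row_t in zip(reference, test)
             for r, t in zip(row_r, row_t)]
    return sum(diffs) / len(diffs)


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(reference, test)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)


reference = [[100, 100], [100, 100]]
filtered = [[110, 90], [100, 100]]
print(mse(reference, filtered))             # 50.0
print(round(psnr(reference, filtered), 2))  # 31.14
```

A higher PSNR (lower MSE) means the processed image is closer to the reference.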

In future work, the proposed methodology could be used in radiology for continuous detection and localization of tumors. The same methods could also be used to classify and analyze other pathologies, such as Parkinson’s disease. The proposed soft computing algorithms could be implemented on a field-programmable gate array (FPGA) in a clinical MRI scanner so that brain regions and tissues can be easily visualized.

Author Contributions

Conceptualization, U.K.L., I.K. and K.R.; methodology, U.K.L.; software, P.M., F.H. and A.S.; validation, S.D., U.R. and I.K.; formal analysis, P.M.; resources, U.R., F.H. and A.S.; data curation, U.R., F.D. and I.K.; writing—original draft, S.D., F.D. and I.K.; supervision, S.S.; project administration, S.S.; funding acquisition, K.R. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank Prince Sattam Bin Abdulaziz University, project number (PSAU/2023/R/1444). The authors thank Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R236), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest related to this research.

References

- Turk, O.; Ozhan, D.; Acar, E.; Akinci, T.C.; Yilmaz, M. Automatic detection of brain tumors with the aid of ensemble deep learning architectures and class activation map indicators by employing magnetic resonance images. Z. Med. Physik. 2023, in press.

- Ahuja, S.; Panigrahi, B.K.; Gandhi, T.K. Enhanced performance of Dark-Nets for brain tumor classification and segmentation using colormap-based superpixel techniques. Mach. Learn. Appl. 2022, 7, 100212.

- Shanthi, S.; Saradha, S.; Smitha, J.A.; Prasath, N.; Anandakumar, H. An efficient automatic brain tumor classification using optimized hybrid deep neural network. Int. J. Intell. Netw. 2022, 3, 188–196.

- Vankdothu, R.; Hameed, M.A. Brain tumor segmentation of MR images using SVM and fuzzy classifier in machine learning. Meas. Sens. 2022, 24, 100440.

- Walsh, J.; Othmani, A.; Jain, M.; Dev, S. Using U-Net network for efficient brain tumor segmentation in MRI images. Healthc. Anal. 2022, 2, 100098.

- Anaya-Isaza, A.; Mera-Jimenez, L. Data Augmentation and Transfer Learning for Brain Tumor Detection in Magnetic Resonance Imaging. IEEE Access 2022, 10, 23217–23233.

- Lu, S.L.; Liao, H.C.; Hsu, F.M.; Liao, C.C.; Lai, F.; Xiao, F. The intracranial tumor segmentation challenge: Contour tumors on brain MRI for radiosurgery. Neuroimage 2021, 244, 118585.

- Deshpande, A.; Estrela, V.V.; Patavardhan, P. The DCT-CNN-ResNet50 architecture to classify brain tumors with super-resolution, convolutional neural network, and the ResNet50. Neurosci. Inform. 2021, 1, 100013.

- Onyema, E.M.; Shukla, P.K.; Dalal, S.; Mathur, M.N.; Zakariah, M.; Tiwari, B. Enhancement of patient facial recognition through deep learning algorithm: ConvNet. J. Healthc. Eng. 2021, 6, 2021.

- Majib, M.S.; Rahman, M.M.; Shahriar Sazzad, T.M.; Khan, N.I.; Dey, S.K. VGG-SCNet: A VGG Net based Deep Learning framework for Brain Tumor Detection on MRI Images. IEEE Access 2021, 9, 116942–116952.

- Wang, W.; Bu, F.; Lin, Z.; Zhai, S. Learning Methods of Convolutional Neural Network Combined with Image Feature Extraction in Brain Tumor Detection. IEEE Access 2020, 8, 152659–152668.

- Noreen, N.; Palaniappan, S.; Qayyum, A.; Ahmad, I.; Imran, M.; Shoaib, M. A Deep Learning Model Based on Concatenation Approach for the Diagnosis of Brain Tumor. IEEE Access 2020, 8, 55135–55144.

- Kumar Mallick, P.; Ryu, S.H.; Satapathy, S.K.; Mishra, S.; Nguyen, G.N.; Tiwari, P. Brain MRI Image Classification for Cancer Detection Using Deep Wavelet Autoencoder-Based Deep Neural Network. IEEE Access 2019, 7, 46278–46287.

- Song, G.; Huang, Z.; Zhao, Y.; Zhao, X.; Liu, Y.; Bao, M.; Han, J.; Li, P. A Noninvasive System for the Automatic Detection of Gliomas Based on Hybrid Features and PSO-KSVM. IEEE Access 2019, 7, 13842–13855.

- Li, M.; Kuang, L.; Xu, S.; Sha, Z. Brain Tumor Detection Based on Multimodal Information Fusion and Convolutional Neural Network. IEEE Access 2019, 7, 180134–180146.

- Alam, M.S.; Rahman, M.M.; Hossain, M.A.; Islam, M.K.; Ahmed, K.M.; Ahmed, K.T.; Miah, M.S. Automatic human brain tumor detection in MRI image using template-based K means and improved fuzzy C means clustering algorithm. Big Data Cogn. Comput. 2019, 3, 27.

- Aslam, A.; Khan, E.; Beg, M.M.S. Improved edge detection algorithm for brain tumor segmentation. In Proceedings of the Second International Symposium on Computer Vision and the Internet (VisionNet’15), Kerala, India, 10–13 August 2015.

- Chanchlani, A.; Chaudhari, M.; Shewale, B.; Jha, A. Tumor detection in brain MRI using clustering and segmentation algorithm. Imp. J. Interdiscip. Res. 2017, 3, 2122–2127.

- Lakra, A.; Dubey, R.B. A comparative analysis of MRI brain tumor segmentation technique. Int. J. Comput. Appl. 2015, 125, 5–14.

- Chadded, A. Automated feature extraction in brain tumor by magnetic resonance imaging using Gaussian mixture models. Int. J. Biomed. Image 2015, 2015, 1–11.

- Devkota, B.; Alsadoon, A.; Prasad, P.W.C.; Singh, A.K.; Elchouemi, A. Image segmentation for early stage brain tumor detection using mathematical morphological reconstruction. In Proceedings of the 6th International Conference on Smart Computing and Communications, ICSCC, Kurukshetra, India, 7–8 December 2017.

- Olenska, E.B.; Thoene, M.; Wlodarczyk, A.; Wojtkiewicz, J. Application of MRI for the diagnosis of neoplasms. Biomed Res Int. 2018, 2018, 2715831.

- Malik, V.; Mittal, R.; Mavaluru, D.; Narapureddy, B.R.; Goyal, S.B.; Martin, R.J.; Srinivasan, K.; Mittal, A. Building a Secure Platform for Digital Governance Interoperability and Data Exchange using Blockchain and Deep Learning-based frameworks. IEEE Access 2023, 11, 70110–70131.

- Hooda, M.; Shravankumar Bachu, P. Artificial Intelligence Technique for Detecting Bone Irregularity Using Fastai. In Proceedings of the International Conference on Industrial Engineering and Operations Management, Dubai, United Arab Emirates, 10–12 March 2020; pp. 2392–2399.

- Subramanian, M.; Cho, J.; Easwaramoorthy, V. Multiple types of Cancer classification using CT/MRI images based on Learning without Forgetting powered Deep Learning Models. IEEE Access 2023, 11, 10336–10354.

- Jazaeri, S.S.; Asghari, P.; Jabbehdari, S.; Javadi, H.H. Composition of caching and classification in edge computing based on quality optimization for SDN-based IoT healthcare solutions. J. Supercomput. 2023, 9, 1–51.

- Cui, S.; Mao, L.; Jiang, J.; Xiong, S. Automatic semantic segmentation of brain gliomas from MRI images using a deep cascaded neural network. J. Healthc. Eng. 2018, 2018, 4940593.

- Brain Tumour Dataset. Available online: https://www.kaggle.com/datasets/sartajbhuvaji/brain-Tumor-classification-mri (accessed on 19 July 2022).

- Dalal, S.; Khalaf, O.I. Prediction of occupation stress by implementing convolutional neural network techniques. J. Cases Inf. Technol. 2021, 23, 27–42.

- Sheikh Abdullah, S.N.H.; Bohani, F.A.; Nayef, B.H.; Sahran, S.; Akash, O.A.; Hussain, R.I.; Ismail, F. Round randomized learning vector quantization for brain tumor imaging. Comput. Math. Methods Med. 2016, 2016, 8603609.

- Jalalifar, S.A.; Soliman, H.; Sahgal, A.; Sadeghi-naini, A.; Member, S. Automatic Assessment of Stereotactic Radiation Therapy Outcome in Brain Metastasis using Longitudinal Segmentation on Serial MRI. IEEE J. Biomed. Health Inform. 2023, 1–12.

- Santosh, S.; Raut, A.; Kulkarni, S. Implementation of image processing for detection of brain tumours. In Proceedings of the IEEE International Conference on Computing Methodologies and Communication (ICCMC), Delhi, India, 3–5 July 2017.

- Prastawa, M.; Bullitt, E.; Ho, S.; Gerig, G. A brain tumor segmentation framework based on outlier detection. Malays. J. Comput. Sci. 2001, 8, 275–281.

- Ilhan, U.; Ilhan, A. Brain tumor segmentation based on a new threshold approach. In Proceedings of the 9th International Conference on Theory and Application of Soft Computing, ICSCCW 2017, Budapest, Hungary, 24–25 August 2017.

- Vijay, V.; Kavitha, A.R.; Rebecca, S.R. Automated brain tumor segmentation and detection in MRI using enhanced Darwinian particle swarm optimization (EDPSO). In Proceedings of the 2nd International Conference on Intelligent Computing, Communication & Convergence (ICCC), Bhubaneswar, India, 24–25 January 2016.

- Govindaraj, V.; Vishnuvarthanan, A.; Thiagarajan, A.; Kannan, M.; Murugan, P.R. Short Notes on Unsupervised Learning Method with Clustering Approach for Tumor Identification and Tissue Segmentation in Magnetic Resonance Brain Images. J Clin. Exp. Neuroimmunol. 2016, 1, 101.

- Rajan, P.G.; Sundar, C. Brain Tumor Detection and Segmentation by Intensity Adjustment. J. Med. Syst. 2019, 43, 282.

- Jazaeri, S.S.; Asghari, P.; Jabbehdari, S.; Javadi, H.H. Toward caching techniques in edge computing over SDN-IoT architecture: A review of challenges, solutions, and open issues. Multimed. Tools Appl. 2023, 5, 1–61.

- Behera, T.K.; Khan, M.A.; Bakshi, S. Brain MR Image Classification Using Superpixel-Based Deep Transfer Learning. IEEE J. Biomed. Health Inform. 2022, 1–11.

- Wadhwa, A.; Bhardwaj, A.; Verma, V.S. A review on brain tumor segmentation of MRI images. Magn. Reson. Imaging 2019, 61, 247–259.

- Soomro, T.A.; Zheng, L.; Afifi, A.J.; Ali, A.; Soomro, S.; Yin, M.; Gao, J. Image Segmentation for MR Brain Tumor Detection Using Machine Learning: A Review. IEEE Rev. Biomed. Eng. 2022, 16, 70–90.

- Zhuang, Y.; Liu, H.; Song, E.; Hung, C.C. A 3D Cross-Modality Feature Interaction Network with Volumetric Feature Alignment for Brain Tumor and Tissue Segmentation. IEEE J. Biomed. Health Inform. 2022, 27, 75–86.

- Dalal, S.; Onyema, E.M.; Kumar, P.; Maryann, D.C.; Roselyn, A.O.; Obichili, M.I. A hybrid machine learning model for timely prediction of breast cancer. Int. J. Model. Simul. Sci. Comput. 2022, 2023, 1–21.

- Liu, D.; Sheng, N.; He, T.; Wang, W.; Zhang, J.; Zhang, J. SGEResU-Net for brain tumor segmentation. Math. Biosci. Eng. 2022, 19, 5576–5590.

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

- Zhao, X.; Wu, Y.; Song, G.; Li, Z.; Zhang, Y.; Fan, Y. A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 2018, 43, 98–111.

- Dalal, S.; Onyema, E.M.; Malik, A. Hybrid XGBoost model with hyperparameter tuning for prediction of liver disease with better accuracy. World J. Gastroenterol. 2022, 28, 6551–6563.

- Kaya, I.E.; Pehlivanlı, A.Ç.; Sekizkardeş, E.G.; Ibrikci, T. PCA based clustering for brain tumor segmentation of T1w MRI images. Comput. Methods Programs Biomed. 2017, 140, 19–28.

- Zhang, W.; Yang, G.; Huang, H.; Yang, W.; Xu, X.; Liu, Y.; Lai, X. ME-Net: Multi-encoder net framework for brain tumor segmentation. Int. J. Imaging Syst. Technol. 2021, 31, 1834–1848.

- Li, Q.; Yu, Z.; Wang, Y.; Zheng, H. TumorGAN: A multi-modal data augmentation framework for brain tumor segmentation. Sensors 2020, 20, 4203.

- Wang, T.; Cheng, I.; Basu, A. Fluid vector flow and applications in brain tumor segmentation. IEEE Trans. Biomed. Eng. 2009, 56, 781–789.

- Abdel-Maksoud, E.; Elmogy, M.; Al-Awadi, R. Brain tumor segmentation based on a hybrid clustering technique. Egypt. Inform. J. 2015, 16, 71–81.

- Sachdeva, J.; Kumar, V.; Gupta, I.; Khandelwal, N.; Ahuja, C.K. A novel content-based active contour model for brain tumor segmentation. Magn. Reson. Imaging 2012, 30, 694–715.

- Hu, J.; Gu, X.; Gu, X. Mutual ensemble learning for brain tumor segmentation. Neurocomputing 2022, 504, 68–81.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).