Abstract

Due to the production environment, quality issues can arise on the surface of printed circuit boards (PCBs), which may cause significant economic losses during application. As a result, PCB surface defect detection has become an essential step in managing PCB production quality. With the continuous advancement of PCB production technology, PCB defects now tend to occupy small areas and vary widely in appearance. Global information plays a crucial role in detecting such small and variable defects. To address this challenge, we propose a novel defect detection framework named Defect Detection TRansformer (DDTR), which combines convolutional neural networks (CNNs) and transformer architectures. In the backbone, we employ the Residual Swin Transformer (ResSwinT), which extracts local detail information with ResNet and global dependency information with the Swin Transformer. This design captures multi-scale features and enhances their expressive capability. In the neck of the network, we introduce spatial and channel multi-head self-attention (SCSA), enabling the network to focus on advantageous features in different dimensions. In the head, we employ multiple cascaded detectors and classifiers to further improve defect detection accuracy. We conducted extensive experiments on the PKU-Market-PCB and DeepPCB datasets. Compared with existing common methods, DDTR achieved the highest F1-score and produced the most informative visualization results. Finally, ablation experiments confirmed the effectiveness and contribution of the individual modules within the DDTR framework.

1. Introduction

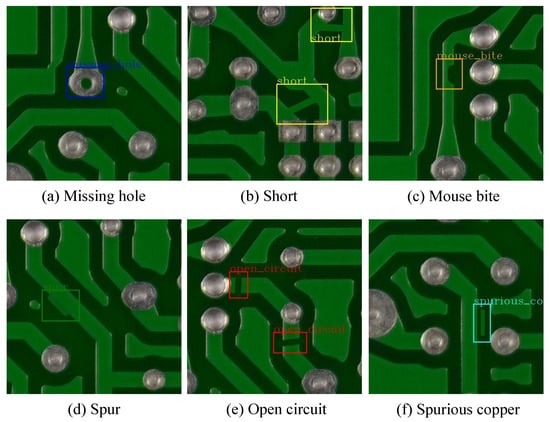

With the emergence of Industry 4.0, production processes have been enhanced by cyber-physical systems that use an increasing number of circuit boards to build intelligent systems. To ensure the integrity of the circuit board layout, every element, including the through-holes, must be carefully designed to guarantee high operational reliability. However, process uncertainty and noise make it difficult to ensure the integrity of every board produced, so various machine vision-based methods have been introduced to detect defects. As PCB production processes are upgraded, circuit density keeps increasing, and the resulting defects are small, numerous, and varied in shape, which requires PCB defect detection methods to be both highly precise and fast. The rapid and widespread adoption of deep learning has led to numerous deep learning-based techniques for electronic circuits, particularly for identifying flaws in printed circuit boards (PCBs). The primary purpose of a PCB is to mechanically support and electrically connect electronic components through pads, conductive tracks, and soldering. However, environmental factors make PCB surfaces highly vulnerable to quality issues that deviate from design and manufacturing specifications. For instance, Figure 1 displays six types of PCB defects: spur, mouse bite, spurious copper, missing hole, short circuit, and open circuit. These defects not only significantly affect the quality and performance of final products but also cause substantial economic losses for the industries involved. As a result, detecting flaws on PCB surfaces has become a crucial step in managing PCB production quality and has attracted significant attention from industry.

Figure 1.

Some defect examples. (a) Missing hole, (b) Short, (c) Mouse bite, (d) Spur, (e) Open circuit, (f) Spurious copper.

Through the adoption of automated optical inspection (AOI) techniques [1], manual inspections have been largely replaced, leading to enhanced detection accuracy and efficiency. While AOI systems are more convenient and cost-effective than human inspection, they heavily rely on visible imaging sensors, which can be limiting. The quality of PCB images captured by these visible imaging sensors is significantly impacted by illumination conditions, resulting in uneven brightness levels and decreased detection accuracy for various defect types.

Traditional defect detection methods use image-processing techniques, prior knowledge, and conventional machine learning to extract low-level defect features. However, these methods require dedicated classifiers for different defect categories, which limits their applicability across application scenarios. In recent years, a range of image processing algorithms has been investigated for PCB defect detection, including similarity measurement approaches [2], image subtraction methods [3], and binary morphological image processing [4]. Nevertheless, these techniques require the inspected images to be aligned with standard samples during inspection. Therefore, there is an urgent need for a defect detection framework that adapts seamlessly to diverse defect types.

The introduction of deep learning has brought significant advances to object detection, with techniques such as Fast R-CNN [5], RetinaNet [6], and You Only Look Once (YOLO) [7] demonstrating impressive feature extraction capabilities. However, for PCB defect detection these methods face limitations arising from the local receptive fields of convolutional neural networks (CNNs) [8,9]. Defect regions on PCBs often occupy only a small portion of the image, and even defects of the same category can vary significantly in morphology and pattern. Although various deep learning-based detectors have been developed to address these challenges, current detectors struggle to achieve high detection accuracy, fast detection speed, and low memory consumption simultaneously. Therefore, new approaches are needed that meet the requirements of high accuracy, efficient processing, and economical resource use in PCB defect detection.

In recent years, transformer-based deep learning methods have shown remarkable achievements. In object detection, transformers [10] have matched or surpassed CNNs in accuracy on several benchmarks; prominent examples include DETR [11] and Swin Transformer [12]. Unlike CNNs, which extract only local features within their receptive fields, transformers can capture global dependency information even in shallow layers, which is especially advantageous for recognition and detection tasks.

However, transformers suffer from high computational complexity, because the cost of self-attention grows quadratically with the number of input tokens. A common remedy is to divide the input image into patches before feeding it into the transformer. Although this reduces the computational burden, it inevitably loses local detail information. Therefore, combining CNNs and transformers has become an effective solution in many tasks across various fields: CNNs extract local detail information, transformers capture global dependency information, and the combination has demonstrated superior performance.

Given the aforementioned problems, we propose a novel PCB surface defect detection network, the Defect Detection TRansformer (DDTR). To make full use of the information in the input images, we design a novel two-way cascading feature extractor.

This dual cascaded feature extractor, the Residual Swin Transformer (ResSwinT), consists of ResNet and Swin Transformer and attends simultaneously to the local detail information and the global dependency information of images. By fusing the features of spatial multi-head self-attention (SSA) and channel multi-head self-attention (CSA), the network can focus on advantageous spatial and channel features. Extensive experiments on the PKU-Market-PCB and DeepPCB datasets show that DDTR detects difficult defect targets better and achieves higher-precision defect detection, which can help improve the yield of PCB production.

2. Related Works

2.1. PCB Defect Detection

Over the past few decades, numerous vision-based methods have been introduced for PCB defect detection. For instance, Tang et al. [13] developed a deep model that accurately detects defects from a pair of input images: a defect-free template and a test image. They incorporated a novel group pyramid pooling module to efficiently extract features at various resolutions, which were then merged by groups to predict defects of the corresponding scales on the PCB. Recognizing the complexity and diversity of PCBs, Ding et al. [14] proposed a lightweight defect detection network based on the Fast R-CNN framework. This method leveraged the inherent multi-scale, pyramidal hierarchy of deep convolutional networks to construct feature pyramids, strengthening the relationship between feature maps at different levels and providing low-level structural information for detecting tiny defects. They also employed online hard example mining during training to mitigate the challenges posed by small datasets and data imbalance. Kim et al. [15] developed an advanced PCB inspection system based on a skip-connected convolutional autoencoder trained to reconstruct defect-free images from defective ones; comparing the reconstructed image with the input image reveals the location of the defect. In recent years, object detection has progressed significantly, including the rapid development of algorithms such as YOLO. Liao et al. [16] introduced a cost-efficient PCB surface defect detection system based on the then state-of-the-art YOLOv4 framework. To overcome the constraints of visible imaging sensors, Li et al. [17] designed a multi-source image acquisition system that simultaneously captured brightness intensity, polarization, and infrared intensity.
They then developed a Multi-sensor Lightweight Detection Network that fused polarization information with brightness intensities from the visible and thermal infrared spectra for PCB defect detection.

To address the challenges posed by small defect targets and limited samples when deep learning methods are applied to PCB defect detection in real-world enterprise scenarios, this paper presents a novel approach. It uses a dual-way cascading feature extractor to extract more comprehensive and refined features from PCB images, allowing the model to capture the information relevant to defect detection.

Furthermore, the paper introduces a multi-head spatial and channel self-attention fusion algorithm. This algorithm enables the model to leverage the benefits of focusing on different sizes and channel features of PCB defects. By applying spatial and channel self-attention mechanisms, the model can selectively attend to relevant regions and channels, enhancing its ability to detect defects accurately.

These advancements in feature extraction and attention fusion contribute to overcoming the limitations commonly encountered in PCB defect detection. The proposed approach has the potential to improve the performance and robustness of deep learning models when applied to various enterprise scenarios for PCB defect detection.

2.2. Visual Transformer

The Vision Transformer (ViT) architecture, introduced by Google in 2020, has proven to be an effective deep learning approach for a wide range of visual tasks. It serves as a general-purpose backbone for various downstream tasks, including image classification [18], object detection [19], semantic segmentation [20,21], human pose estimation [22], and image fusion [23,24]. Unlike CNNs, ViT does not build in convolutional inductive biases such as locality and translation equivariance; it instead learns relationships between image regions through self-attention, at the cost of typically requiring large-scale pre-training or strong data augmentation to perform well. Additionally, ViT can be pre-trained with self-supervised learning techniques that do not require labeled data.

In ViT, an image is divided into a grid of non-overlapping patches, and each patch is flattened into a one-dimensional vector, linearly projected, and combined with a position embedding. The resulting token sequence is processed by a stack of Transformer blocks whose self-attention lets every patch attend to every other patch in parallel. After the last block, the output corresponding to a learnable class token is fed into a multi-layer perceptron head to generate class predictions. When pre-trained on large datasets, ViT achieved state-of-the-art results on image classification benchmarks such as ImageNet and CIFAR-100, and it has outperformed previous methods in multiple computer vision tasks.
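The patch-splitting step can be sketched in a few lines. The following is a minimal, illustrative pure-Python sketch, assuming an image stored as nested lists of shape H × W × C and a patch size `p` that divides both H and W; the name `patchify` is hypothetical, and the linear projection and position embedding that ViT applies afterwards are omitted.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C nested lists


def patchify(image: Image, p: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping p x p patches.

    Each patch is flattened row by row into a vector of length p * p * C;
    patches are returned in raster order (left to right, top to bottom).
    """
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("patch size must divide the image height and width")
    patches = []
    for top in range(0, h, p):
        for left in range(0, w, p):
            vec: List[float] = []
            for row in image[top:top + p]:
                for pixel in row[left:left + p]:
                    vec.extend(pixel)
            patches.append(vec)
    return patches


# Toy 4 x 4 single-channel image whose pixel value is row * 4 + col.
img = [[[float(r * 4 + c)] for c in range(4)] for r in range(4)]
tokens = patchify(img, 2)
print(len(tokens), len(tokens[0]))  # prints: 4 4
print(tokens[0])                    # prints: [0.0, 1.0, 4.0, 5.0]
```

Each of the four vectors would then be projected to the model width before entering the Transformer blocks.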

Researchers have explored and extended ViT for different applications. For instance, Smriti et al. [25] compared ViT with various CNNs and transformer-based methods on medical image classification tasks, showing that ViT achieved state-of-the-art performance and surpassed CNN-based and Data-efficient Image Transformer-based models. Zhu et al. introduced WeakTr [26], a concise and efficient framework based on plain ViT for weakly supervised semantic segmentation, which generates high-quality class activation maps and supports efficient online retraining. In addition, a saliency-guided vision transformer [27] was proposed for few-shot keypoint detection; it incorporates masked self-attention and a morphology learner to restrict attention to foreground regions and adjust the morphology of saliency maps.

In the context of PCB defect detection, the proposed Defect Detection TRansformer (DDTR) uses ResSwinT to encode global dependencies and extract comprehensive features. This provides the subsequent detection head with robust, comprehensive features for defect detection and yields notable gains in detection accuracy.

Overall, ViT has proven to be a versatile and effective architecture in computer vision tasks, and its application and extensions show promising results across various domains, including medical imaging, semantic segmentation, and keypoints detection. In the field of PCB defect detection, the DDTR model leverages the strengths of ViT to improve the accuracy and robustness of the detection process.

3. Methodology

Since the introduction of the Swin Transformer, numerous methods employing this architecture have demonstrated remarkable performance in object detection. Given its unique ability for parallel computing and managing global dependencies, the Swin Transformer is employed to extract more comprehensive object information. Furthermore, traditional CNNs can be employed to uncover edge features through shallow convolutional layers and high-level features through deeper layers. This paper proposes their combination to offer abundant semantic information for subsequent detections. Additionally, we introduce a multi-source self-attention fusion strategy to bolster the robustness and flexibility of our model.

3.1. Overall Architecture

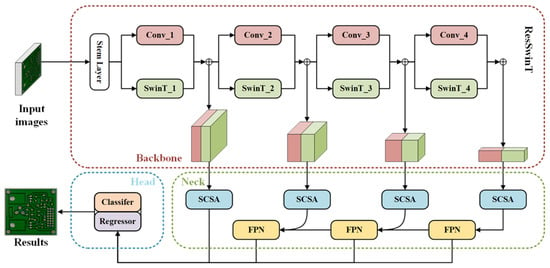

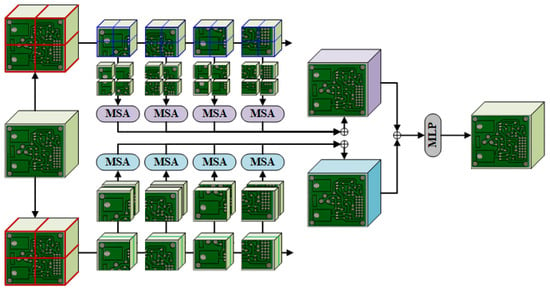

As shown in Figure 2, the structure of the proposed DDTR is similar to that of the existing object detection network Cascade R-CNN [28]. It can be divided into a backbone for feature extraction, a neck for feature enhancement, and a head for recognition and detection.

Figure 2.

The overall architecture of DDTR. First, the image is input into a dual backbone network called ResSwinT, composed of ResNet and Swin Transformer, to obtain multi-scale features. In the neck, features are enhanced by combining convolutional layers and a transformer. Because PCB defects vary in shape, DDTR introduces a spatial attention mechanism that lets the network adaptively perceive important spatial features. Additionally, the features extracted by the backbone have a high channel dimensionality, and DDTR prioritizes crucial channels through channel attention. Finally, the head of DDTR uses the same cascade heads as Cascade R-CNN to improve the accuracy of PCB defect recognition and detection.

First, the image is input into the dual backbone network ResSwinT, composed of ResNet and Swin Transformer. The multi-scale features obtained by ResSwinT are denoted $F_i$, where $i = 1, 2, 3, 4$ indexes the four stages. In the neck, features are enhanced by combining convolutional layers and a transformer. Because PCB defects vary in shape, DDTR introduces a spatial attention mechanism that lets the network adaptively perceive important spatial features. Furthermore, the features extracted by the backbone have a high channel dimensionality, so DDTR emphasizes significant channels through channel attention. Finally, the head of DDTR uses the same cascade heads as Cascade R-CNN to improve the accuracy of PCB defect recognition and detection.

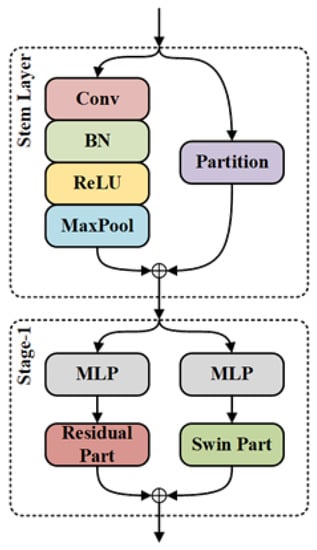

3.2. Residual Swin Transformer (ResSwinT)

While traditional single-path CNNs are computationally and memory efficient, extracting only local features prevents the model from capturing the broader contextual information in the input image, a limitation that is critical when detecting minute PCB defects. To address this, we introduce a dual backbone network called ResSwinT, illustrated in Figure 3. ResSwinT combines the residual modules of ResNet with the shifted-window self-attention of Swin Transformer to produce multi-scale features that encode both global and local information.

Figure 3.

The structure of stem layer and stage-1 in ResSwinT.

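In the initial stage of ResSwinT, the image passes through a stem layer that contains a partition and a convolutional layer. In the partition, the input image $X$ is divided into non-overlapping patches, which are then flattened. The convolutional layer applies a convolution and max-pooling with a stride of 2. The two outputs are concatenated to form the stem feature $F_0$:

$$F_0 = \mathrm{Partition}(X) \oplus \mathrm{Stem}(X),$$

where $\oplus$ represents channel concatenation, $\mathrm{Partition}(\cdot)$ denotes patch partition and flattening, and $\mathrm{Stem}(\cdot)$ denotes the convolution and max-pooling.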

The subsequent structure of ResSwinT consists of four stages, each containing a multi-layer perceptron (MLP), a residual part, and a swin part. To load the pre-trained weights of ResNet and Swin Transformer, we do not change the structure of the residual and swin parts. The input feature of the i-th stage is first passed through the MLP to match the number of channels expected by the pre-trained network. The residual part of the i-th stage contains residual layers composed of convolution, batch normalization (BN) [29], ReLU [30], and a shortcut, as shown in Figure 4. For an input $X$, each residual layer computes

$$Y = \mathrm{ReLU}\big(\mathcal{F}(X) + X\big),$$

where $\mathcal{F}(\cdot)$ represents the three convolution layers (with their BN and ReLU operations) in the residual layer. The shortcut of the residual layer mitigates the degradation problem of deep networks.

Figure 4.

The structure of stage-i in ResSwinT.

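Because the stem layer already performs partition, stage 1 uses only the MLP to adjust the channels, whereas stages 2, 3, and 4 down-sample through partition before feature extraction by swin transformer blocks. Each swin transformer block contains two transformer encoders composed of multi-head self-attention (MSA), a feed-forward (FF) network, and layer normalization (LN) [31], as shown in Figure 4. Unlike the MSA of the original transformer, the swin transformer adopts window multi-head self-attention (W-MSA). In the W-MSA of the first encoder, dependency information of the feature $X$ is computed only within local windows of size $(w, w)$, as shown in Figure 4. In the second encoder, the windows are shifted by $(\lfloor w/2 \rfloor, \lfloor w/2 \rfloor)$ (SW-MSA) to enlarge the area from which dependency information is extracted, as shown in Figure 4. With $z^{l-1}$ denoting the input of the block, the calculation process is

$$\hat{z}^{l} = \mathrm{W\text{-}MSA}\big(\mathrm{LN}(z^{l-1})\big) + z^{l-1}, \qquad z^{l} = \mathrm{FF}\big(\mathrm{LN}(\hat{z}^{l})\big) + \hat{z}^{l},$$

$$\hat{z}^{l+1} = \mathrm{SW\text{-}MSA}\big(\mathrm{LN}(z^{l})\big) + z^{l}, \qquad z^{l+1} = \mathrm{FF}\big(\mathrm{LN}(\hat{z}^{l+1})\big) + \hat{z}^{l+1}.$$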
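The window partition and the cyclic shift behind W-MSA and SW-MSA can be illustrated on a toy single-channel grid. This is a sketch under stated assumptions: the helper names `cyclic_shift` and `window_partition` are hypothetical, the shift follows the ⌊w/2⌋ convention of the original Swin Transformer, and the attention mask that Swin applies to wrapped-around tokens is omitted.

```python
from typing import List

Grid = List[List[int]]


def cyclic_shift(x: Grid, s: int) -> Grid:
    """Roll the grid up and to the left by s positions with wrap-around."""
    h, w = len(x), len(x[0])
    return [[x[(r + s) % h][(c + s) % w] for c in range(w)] for r in range(h)]


def window_partition(x: Grid, win: int) -> List[List[int]]:
    """Split an H x W grid into non-overlapping win x win windows, each flattened."""
    h, w = len(x), len(x[0])
    if h % win or w % win:
        raise ValueError("window size must divide the feature height and width")
    return [
        [x[r][c] for r in range(top, top + win) for c in range(left, left + win)]
        for top in range(0, h, win)
        for left in range(0, w, win)
    ]


feat = [[r * 4 + c for c in range(4)] for r in range(4)]  # token ids of a 4 x 4 map
win = 2
regular = window_partition(feat, win)                          # windows used by W-MSA
shifted = window_partition(cyclic_shift(feat, win // 2), win)  # windows used by SW-MSA
print(regular[0])  # prints: [0, 1, 4, 5]
print(shifted[0])  # prints: [5, 6, 9, 10], one token from each of the four regular windows
```

The first shifted window gathers tokens that sat in four different regular windows, which is how alternating the two encoders lets information cross window borders.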

By stacking swin transformer blocks in the i-th stage, information propagates across windows, so global dependency information is gradually extracted at a much lower computational cost than with global self-attention.
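To quantify the saving, the following sketch evaluates the complexity estimates reported for the original Swin Transformer [12] (softmax cost ignored): $4HWC^2 + 2(HW)^2C$ for global MSA on an $H \times W$ feature map with $C$ channels, and $4HWC^2 + 2w^2HWC$ for W-MSA with $w \times w$ windows. The example values (a 56 × 56 map, C = 96, w = 7) are Swin-T's first-stage settings for a 224 × 224 input and are assumptions for illustration, not the ResSwinT configuration.

```python
def msa_cost(height: int, width: int, channels: int) -> int:
    """Global MSA estimate from the Swin paper: 4*HW*C^2 + 2*(HW)^2*C."""
    n = height * width
    return 4 * n * channels ** 2 + 2 * n ** 2 * channels


def wmsa_cost(height: int, width: int, channels: int, window: int) -> int:
    """Window MSA estimate from the Swin paper: 4*HW*C^2 + 2*w^2*HW*C."""
    n = height * width
    return 4 * n * channels ** 2 + 2 * window ** 2 * n * channels


global_cost = msa_cost(56, 56, 96)
window_cost = wmsa_cost(56, 56, 96, 7)
print(global_cost)  # prints: 2003828736
print(window_cost)  # prints: 145108992
print(f"W-MSA is about {global_cost / window_cost:.1f}x cheaper here")
```

The quadratic $(HW)^2$ term dominates global MSA, while W-MSA grows only linearly in $HW$ for a fixed window size.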

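In summary, the i-th stage of ResSwinT computes

$$\hat{F}_i = \mathrm{MLP}(F_{i-1}; W_i), \qquad F_i^{\mathrm{res}} = \mathcal{R}_i(\hat{F}_i), \qquad F_i^{\mathrm{swin}} = \mathcal{S}_i(\hat{F}_i),$$

where $F_0$ is the output of the stem layer, $W_i$ is the weight of the MLP in the i-th stage, $F_i^{\mathrm{res}}$ is the multi-scale feature obtained by the residual part $\mathcal{R}_i$ (a stack of residual layers), and $F_i^{\mathrm{swin}}$ is the multi-scale feature extracted by the swin part $\mathcal{S}_i$ (partition for $i \geq 2$, followed by swin transformer blocks), with $i = 1, 2, 3, 4$. Then $F_i$, generated by channel concatenation ($\oplus$) of $F_i^{\mathrm{res}}$ and $F_i^{\mathrm{swin}}$, is fed into the next stage:

$$F_i = F_i^{\mathrm{res}} \oplus F_i^{\mathrm{swin}}.$$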
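The concatenation in each stage can be sanity-checked with shape bookkeeping alone. The sketch below assumes that the residual and swin parts keep the standard output widths of ResNet-50 (256, 512, 1024, 2048) and Swin-T (96, 192, 384, 768) at strides 4, 8, 16, and 32; these numbers are assumptions for illustration, not values from Table 3, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumed per-stage output widths of the unmodified pre-trained branches.
RESNET50_WIDTHS = (256, 512, 1024, 2048)
SWIN_T_WIDTHS = (96, 192, 384, 768)
STAGE_STRIDES = (4, 8, 16, 32)


def resswint_stage_shapes(
    height: int,
    width: int,
    res_widths: Sequence[int] = RESNET50_WIDTHS,
    swin_widths: Sequence[int] = SWIN_T_WIDTHS,
) -> List[Tuple[int, int, int]]:
    """(channels, H, W) of F_i = F_i^res (+) F_i^swin for each of the four stages.

    Channel concatenation adds the branch widths; it requires both branches to
    share the same spatial size, which holds because they use the same strides.
    """
    shapes = []
    for c_res, c_swin, stride in zip(res_widths, swin_widths, STAGE_STRIDES):
        shapes.append((c_res + c_swin, height // stride, width // stride))
    return shapes


for i, shape in enumerate(resswint_stage_shapes(640, 640), start=1):
    print(f"F_{i}: {shape}")
```

Under these assumptions the concatenated features are considerably wider than either branch alone, which is the channel redundancy that the neck's channel attention is meant to address.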

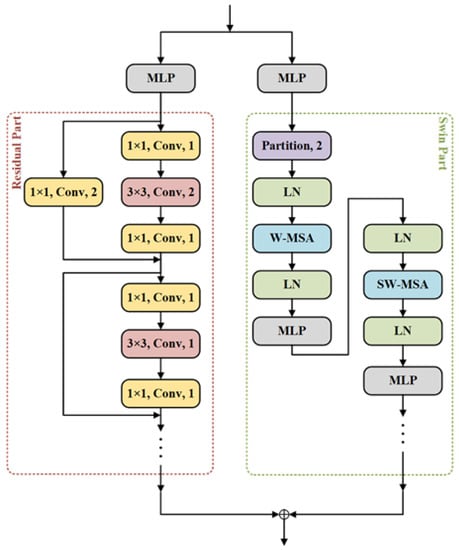

3.3. Multi-Head Spatial and Channel Self-Attention

In an object detection network, the neck connects the backbone and the head and performs feature enhancement. In recent years, multi-scale feature fusion networks such as feature pyramid networks (FPN) [32] have brought significant accuracy improvements. We propose a new multi-scale feature fusion strategy named multi-head spatial and channel self-attention (SCSA), as shown in Figure 5. SCSA includes spatial self-attention (SSA) and channel self-attention (CSA), and aims to address the difficulty of correctly identifying defect targets caused by large differences in the size, shape, and channel information of PCB defects.

Figure 5.

The structure of SCSA. The purple background module is SSA, and the blue background module is CSA.

3.3.1. SSA

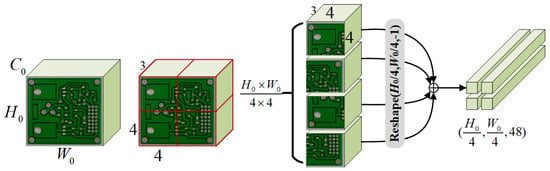

Because global spatial attention involves a large amount of computation, the input feature is first partitioned into non-overlapping regions of a fixed size, as shown in Figure 6. The feature in each region is then clipped into patches, as shown in Figure 5, and each flattened patch is embedded by an MLP. An SSA encoder, whose structure is shown in Figure 5, extracts the dependency information within each local region. With $P$ denoting the flattened patches of a region, its calculation process can be represented as

$$T_0 = P W_s, \qquad \hat{T} = \mathrm{MSA}\big(\mathrm{LN}(T_0)\big) + T_0, \qquad T = \mathrm{FF}\big(\mathrm{LN}(\hat{T})\big) + \hat{T},$$

where $W_s$ is the embedding weight.
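The partition and clipping steps of SSA can be sketched as follows. This pure-Python illustration assumes a square region size `r` that divides the feature height and width and a patch size `p` that divides `r`; both are free parameters here, the function names are hypothetical, and the subsequent MLP embedding is omitted.

```python
from typing import List

Feature = List[List[List[float]]]  # H x W x C nested lists


def partition_regions(x: Feature, r: int) -> List[Feature]:
    """Split an H x W x C feature into non-overlapping r x r regions (raster order)."""
    h, w = len(x), len(x[0])
    if h % r or w % r:
        raise ValueError("region size must divide the feature height and width")
    return [
        [row[left:left + r] for row in x[top:top + r]]
        for top in range(0, h, r)
        for left in range(0, w, r)
    ]


def clip_patches(region: Feature, p: int) -> List[List[float]]:
    """Clip a square region into p x p patches; each becomes a flat token of length p*p*C."""
    r = len(region)
    if r % p:
        raise ValueError("patch size must divide the region size")
    return [
        [v for row in region[top:top + p] for pixel in row[left:left + p] for v in pixel]
        for top in range(0, r, p)
        for left in range(0, r, p)
    ]


# Toy 8 x 8 feature with C = 2 channels holding (row, col) coordinates.
feature = [[[float(y), float(x)] for x in range(8)] for y in range(8)]
regions = partition_regions(feature, r=4)  # 4 regions of 4 x 4 positions
tokens = clip_patches(regions[0], p=2)     # 4 tokens per region, each of length 2*2*2
print(len(regions), len(tokens), len(tokens[0]))  # prints: 4 4 8
```

Attention is then computed only among the tokens of one region, so its cost depends on the region size rather than on the whole feature map.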

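The MSA is the same as the MSA in the original transformer. Given the input tokens $Z$ of MSA, the query vectors $Q$, key vectors $K$, and value vectors $V$ are generated by

$$Q = Z W_Q, \qquad K = Z W_K, \qquad V = Z W_V,$$

where $W_Q$, $W_K$, and $W_V$ are the weights of the linear layers. Each query vector is compared with all key vectors, and the normalized similarity scores serve as the weights for summing the corresponding value vectors. The attention calculation in MSA is as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d}}\right) V,$$

where $d$ is the dimension of the query and key vectors in each head. In MSA, all vectors are evenly divided among the heads, self-attention is computed independently in each head, and the head outputs are concatenated.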
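The attention formula above can be checked with a dependency-free sketch. The following pure-Python function is illustrative, not the authors' implementation: it stores matrices as row-major lists, splits the projected vectors evenly across heads as described, and omits the output projection of the original transformer; all names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_attention(z: Matrix, wq: Matrix, wk: Matrix, wv: Matrix, heads: int) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V per head, with head outputs concatenated.

    z: n x d input tokens; wq, wk, wv: d x d projection weights.
    """
    q, k, v = matmul(z, wq), matmul(z, wk), matmul(z, wv)
    d = len(q[0])
    if d % heads:
        raise ValueError("the vector dimension must split evenly across heads")
    dk = d // heads
    out: Matrix = [[] for _ in z]
    for h in range(heads):
        cols = slice(h * dk, (h + 1) * dk)  # this head's share of every vector
        qh, kh, vh = ([row[cols] for row in mat] for mat in (q, k, v))
        scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(dk) for kj in kh] for qi in qh]
        weights = [softmax(s) for s in scores]  # each row sums to 1
        for i, row in enumerate(matmul(weights, vh)):
            out[i].extend(row)
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0]]
result = multi_head_attention(tokens, identity, identity, identity, heads=2)
print(result)
```

With identity projections and one dimension per head, each output entry is a softmax-weighted average of the value entries in that head, for example $e/(e+1)$ where a token's query matches its own key.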

Figure 6.

The operation process of partition.

3.3.2. CSA

The backbone features are obtained by concatenating the features of the two branches along the channel dimension, which can introduce considerable channel redundancy. CSA computes channel self-attention on spatially embedded features, making the network focus on advantageous channel features.

Like SSA, CSA first partitions the input feature into regions, but it does not further clip each region into patches. Instead, the region feature is transposed so that each channel becomes a token, and channel self-attention is computed on the transformed feature. A CSA encoder, whose structure is shown in Figure 5, extracts the dependency information within each local region. With $X_r$ denoting the feature of a region arranged as positions × channels, the calculation process can be represented as

$$T_0 = X_r^{\top} W_c, \qquad \hat{T} = \mathrm{MSA}\big(\mathrm{LN}(T_0)\big) + T_0, \qquad T = \mathrm{FF}\big(\mathrm{LN}(\hat{T})\big) + \hat{T},$$

where $W_c$ is the embedding weight. In SSA, each patch is a token embedded along the channel dimension, so spatial self-attention is a weighted sum over all patches. In CSA, by contrast, each channel is a token embedded from its spatial values, so channel self-attention is a weighted sum over channels.
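The difference between the two token layouts can be made concrete with a minimal sketch, assuming a region stored as an N × C matrix (one row per spatial position). For brevity it uses single-head attention without learned projections or embedding weights and treats single positions rather than multi-pixel patches as SSA tokens; the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def self_attention(tokens: Matrix) -> Matrix:
    """Single-head softmax(T T^T / sqrt(d)) T; learned Q/K/V projections omitted."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * t[j] for w, t in zip(weights, tokens)) / total for j in range(d)])
    return out


def spatial_attention(region: Matrix) -> Matrix:
    """SSA layout: region is N x C (one token per position); attention mixes positions."""
    return self_attention(region)


def channel_attention(region: Matrix) -> Matrix:
    """CSA layout: transpose to C x N (one token per channel), mix channels, transpose back."""
    return transpose(self_attention(transpose(region)))


region = [[0.1, 0.5, 0.9], [0.2, 0.4, 0.8], [0.3, 0.3, 0.7], [0.4, 0.2, 0.6]]  # N = 4, C = 3
print(len(spatial_attention(region)), len(channel_attention(region)[0]))  # prints: 4 3
```

Both layouts return an N × C feature, so their outputs can be fused by addition or channel concatenation; only the axis along which the weighted sums are taken differs.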

4. Experiment Results

4.1. Datasets

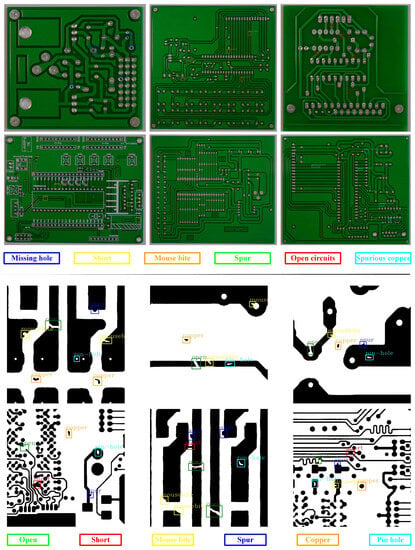

In this section, the PKU-Market-PCB [33] dataset and DeepPCB [13] dataset are used to validate the performance of our proposed DDTR model.

4.1.1. PKU-Market-PCB Dataset

The PKU-Market-PCB dataset contains 693 PCB defect images covering six defect types: missing hole, short, mouse bite, spur, open circuit, and spurious copper. Each image contains only one defect type but may contain multiple defect targets. The training set contains 541 images and the test set contains 152 images.

Because the images are too large for our hardware to train and test on directly, we cropped all images into patches. After cropping, the training set contained 8508 images and the test set contained 2897 images. More detailed information can be found in Table 1.
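Cropping large images into fixed-size tiles also requires remapping the box annotations. The sketch below is one plausible way to do this, not the authors' procedure: the tile size is a hypothetical free parameter, the last tile in each direction is shifted inward so it stays inside the image, and boxes are clipped to each tile; the helper names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def tile_origins(length: int, tile: int) -> List[int]:
    """Start offsets of tiles covering [0, length); the last tile is shifted inward."""
    if length <= tile:
        return [0]  # image smaller than one tile: a single (padded) tile
    starts = list(range(0, length - tile + 1, tile))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # extra tile flush with the image border
    return starts


def crop_boxes(boxes: List[Box], x0: int, y0: int, tile: int) -> List[Box]:
    """Clip boxes to the tile at (x0, y0) and convert them to tile coordinates."""
    kept = []
    for x1, y1, x2, y2 in boxes:
        cx1, cy1 = max(x1, x0), max(y1, y0)
        cx2, cy2 = min(x2, x0 + tile), min(y2, y0 + tile)
        if cx2 > cx1 and cy2 > cy1:  # drop boxes that do not overlap the tile
            kept.append((cx1 - x0, cy1 - y0, cx2 - x0, cy2 - y0))
    return kept


TILE = 600  # hypothetical crop size
print(tile_origins(1500, TILE))                          # prints: [0, 600, 900]
print(crop_boxes([(550, 100, 650, 160)], 600, 0, TILE))  # prints: [(0, 100, 50, 160)]
```

A defect cut by a tile border keeps only its visible part; in practice one might also discard fragments too small to be meaningful.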

Table 1.

The target number of the PKU-Market-PCB dataset.

4.1.2. DeepPCB Dataset

All images in the DeepPCB dataset were acquired with a linear-scan CCD at a resolution of approximately 48 pixels per millimeter. They were then cropped into sub-images of size 640 × 640 and aligned using template matching. To avoid illumination interference, the images were binarized with a carefully selected threshold. The dataset is manually annotated with six common PCB defect types: open, short, mouse bite, spur, copper, and pin hole. The training set contains 1000 images and the test set contains 500 images; some example images are shown in Figure 7. The number of targets in the dataset is shown in Table 2.

Figure 7.

Examples from the PKU-Market-PCB and DeepPCB datasets.

Table 2.

The target number of the DeepPCB dataset.

4.2. Evaluation Metrics

First, the confusion matrix between the ground truth and the prediction results of the test set is calculated. When the predicted category is the same as the ground truth category, and the Intersection over Union (IoU) between the predicted box and the ground truth box is not lower than the threshold, the prediction is considered correct. True positive (TP) is the number of positive samples for both the ground truth and the predicted result. False positive (FP) is defined as the number of samples with negative ground truth and positive predicted results. True negative (TN) is the number of negative samples for both ground truth and predicted results. False negative (FN) is defined as the number of samples with positive ground truth and negative predicted results.
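The matching rule above can be sketched as follows. The text does not specify the IoU threshold or the matching order, so this sketch assumes a threshold of 0.5 and a common greedy convention: predictions are processed in descending score order, and each ground truth can be matched at most once. True negatives are not counted because precision, recall, and F1 do not need them; the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Prediction = Tuple[float, str, Box]       # (score, label, box)
GroundTruth = Tuple[str, Box]             # (label, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp_fn(
    preds: List[Prediction], gts: List[GroundTruth], thr: float = 0.5
) -> Tuple[int, int, int]:
    """Greedy matching in descending score order; each ground truth is matched at most once."""
    matched = [False] * len(gts)
    tp = fp = 0
    for _, label, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, (gt_label, gt_box) in enumerate(gts):
            if matched[j] or gt_label != label:
                continue  # the category must agree and each ground truth is used once
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
    fn = matched.count(False)  # ground truths that no prediction claimed
    return tp, fp, fn


gts = [("spur", (0, 0, 10, 10)), ("short", (20, 20, 30, 30))]
preds = [
    (0.9, "spur", (1, 1, 10, 10)),    # IoU 0.81 with the spur -> TP
    (0.8, "spur", (0, 0, 10, 10)),    # spur already matched -> FP
    (0.7, "open", (20, 20, 30, 30)),  # wrong category -> FP
]
print(count_tp_fp_fn(preds, gts))  # prints: (1, 2, 1)
```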

We use the F1-score, which is commonly used in object detection, as the metric for verifying performance. It is defined as

$$F1 = \frac{2 \times P \times R}{P + R},$$

where $P$ is the precision, defined as

$$P = \frac{TP}{TP + FP},$$

and $R$ is the recall, calculated as

$$R = \frac{TP}{TP + FN}.$$
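These formulas translate directly into code. The counts in the example are made up for illustration; the sketch also checks the equivalent form $F1 = 2TP / (2TP + FP + FN)$, which follows by substituting the definitions of $P$ and $R$.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from detection counts (0.0 when a denominator is zero)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Made-up counts for illustration only.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(round(p, 4), round(r, 4), round(f1, 4))  # prints: 0.8 0.6667 0.7273
assert abs(f1 - 2 * 80 / (2 * 80 + 20 + 40)) < 1e-12  # same as 2TP / (2TP + FP + FN)
```

Because F1 is the harmonic mean of precision and recall, it stays low unless a detector keeps both false alarms and missed defects under control.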

4.3. Implemental Details

We designed two variants of ResSwinT for DDTR. ResSwinT-T, based on ResNet50 and SwinT-T, has a slightly lower computational complexity; ResSwinT-S, based on ResNet101 and SwinT-S, has a slightly higher computational complexity. The parameters of both variants are shown in Table 3. In SCSA, the input feature dimension of each head is 32 for SSA and 25 for CSA.

Table 3.

The parameters of ResSwinT.

To verify the effectiveness of DDTR, we compared it with six advanced object detection methods: (1) the one-stage methods YOLOv3, SSD [34], ID-YOLO [35], and LightNet [36]; and (2) the two-stage methods Faster R-CNN [37] and Cascade R-CNN. During training and testing, all methods use the same fixed input size. All methods are trained on an Ubuntu 18.04 server equipped with an Intel Xeon E5-2697 v3 CPU and an NVIDIA RTX 3090 GPU, using Python 3.7, PyTorch 1.13.1, and CUDA 11.7.

4.4. Experimental Results

4.4.1. Experimental Results of PKU-Market-PCB

The results on the PKU-Market-PCB dataset are shown in Table 4. All two-stage methods achieve higher accuracy than the one-stage object detection methods, and Transformer-based backbones achieve higher detection and recognition accuracy than CNN-based ones. The proposed DDTR achieves the best AP, AR, and F1-score; compared with YOLOv3, it improves the F1-score by 15.42%.

Table 4.

The indicator results of various methods on PKU-Market-PCB dataset.

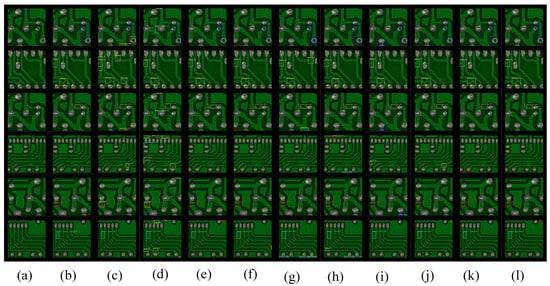

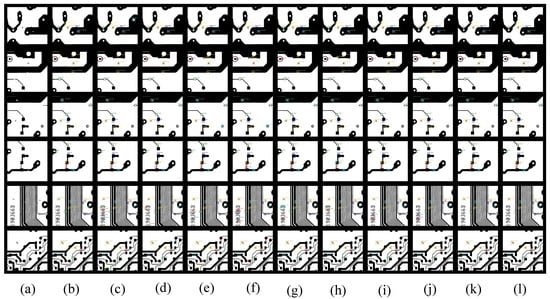

As the visualization results in Figure 8 show, the one-stage detectors SSD and YOLOv3 produce more false alarms. Lacking global dependency information, CNN-based detectors produce some overlapping detection boxes, which are alleviated when a transformer is used. The proposed DDTR produces good visualization results on the PKU-Market-PCB dataset.

Figure 8.

Some visualization results on the PKU-Market-PCB dataset. (a) Input image, (b) ground truth, (c) YOLOv3, (d) SSD, (e) Faster R-CNN_ResNet50, (f) Faster R-CNN_ResNet101, (g) Cascade R-CNN_ResNet50, (h) Cascade R-CNN_ResNet101, (i) Cascade R-CNN_SwinT-T, (j) Cascade R-CNN_SwinT-S, (k) DDTR_ResSwinT-T, (l) DDTR_ResSwinT-S.

4.4.2. Experimental Results of DeepPCB

The results on the DeepPCB dataset are shown in Table 5. Again, all two-stage methods achieve higher accuracy than the one-stage object detection methods, and Transformer-based backbones achieve higher detection and recognition accuracy than CNN-based ones. The proposed DDTR achieves the best AP, AR, and F1-score; compared with YOLOv3, it improves the F1-score by 9.04%.

Table 5.

The indicator results of various methods on the DeepPCB dataset.

As the visualization results in Figure 9 show, SSD and YOLOv3 produce more false alarms. Lacking global dependency information, CNN-based detectors produce some overlapping detection boxes, which are alleviated by using a transformer. Without attention information, all comparison methods produce a significant number of false positives in regions containing digits. The proposed DDTR shows good visualization performance on the DeepPCB dataset.

Figure 9.

Some visualization results on the DeepPCB dataset. (a) Input image, (b) ground truth, (c) YOLOv3, (d) SSD, (e) Faster R-CNN_ResNet50, (f) Faster R-CNN_ResNet101, (g) Cascade R-CNN_ResNet50, (h) Cascade R-CNN_ResNet101, (i) Cascade R-CNN_SwinT-T, (j) Cascade R-CNN_SwinT-S, (k) DDTR_ResSwinT-T, (l) DDTR_ResSwinT-S.

4.5. Ablation Experiments

We conducted ablation experiments on the proposed modules, as shown in Table 6, where the best results are highlighted in bold. We use Cascade R-CNN with a ResNet101 backbone as the baseline, which achieves an F1-score of 0.3813 on the PKU-Market-PCB dataset. Replacing ResNet101 with SwinT-S improves the F1-score by 0.89%, and using the proposed ResSwinT-S as the backbone improves it by 2.89%. On this basis, adding SSA yields a 4.44% improvement over the baseline, and adding CSA yields 4.69%. The gains from SSA and CSA are similar; because the two modules enhance the expressive ability of features along different dimensions (spatial and channel), they are complementary. When ResSwinT-S and SCSA are introduced simultaneously, fusing the SSA and CSA features by element-wise addition yields a 5.99% improvement, while fusing them by channel concatenation yields 6.19%.

Table 6.

Results of ablation experiment on PKU-Market-PCB.

5. Conclusions

First, DDTR introduces a new backbone for extracting multi-scale features, named ResSwinT. ResSwinT combines ResNet and Swin Transformer to extract local details and global dependency information, and it can load pre-trained model weights to assist training. Second, because the features extracted by ResSwinT are more complex, we designed a spatial and channel multi-head self-attention structure. Spatial multi-head self-attention encodes spatial features using channel information and applies self-attention to compute a weighted sum of the spatial features within each region. Channel multi-head self-attention encodes channel features using spatial information and applies self-attention to compute a weighted sum of the channel features within each region.

We conducted extensive experiments on the PKU-Market-PCB and DeepPCB datasets. Compared with existing one-stage and two-stage detection models, DDTR improves the F1-score by up to 15.42%, and multiple visualizations also show better detection performance. A series of ablation experiments verified that ResSwinT and SCSA each improve the accuracy of defect detection. Applied to automated defect detection in PCB production, DDTR could therefore detect PCB defects accurately and help improve production yield.

Author Contributions

All authors made significant contributions to the article. B.F. was responsible for most of the content; J.C. was mainly responsible for the English-language submission and other tasks. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62274123.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The DeepPCB dataset used in this article is available from https://github.com/tangsanli5201/DeepPCB (accessed on 1 July 2023). The PKU-Market-PCB dataset used in this article is available from https://robotics.pkusz.edu.cn/resources/dataset/ (accessed on 1 July 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, S.-H.; Perng, D.-B. Automatic optical inspection system for IC molding surface. J. Intell. Manuf. 2016, 27, 915–926.

- Gaidhane, V.H.; Hote, Y.V.; Singh, V. An efficient similarity measure approach for pcb surface defect detection. Pattern Anal. Appl. 2017, 21, 277–289.

- Kaur, B.; Kaur, G.; Kaur, A. Detection and classification of printed circuit board defects using image subtraction method. In Proceedings of the Recent Advances in Engineering and Computational Sciences (RAECS), Chandigarh, India, 6–8 March 2014; pp. 1–5.

- Malge, P.S.; Nadaf, R.S. PCB defect detection, classification and localization using mathematical morphology and image processing tools. Int. J. Comput. Appl. 2014, 87, 40–45.

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual Conference, 11–17 October 2021.

- Tang, S.; He, F.; Huang, X.; Yang, J. Online PCB Defect Detector On A New PCB Defect Dataset. arXiv 2019, arXiv:1902.06197.

- Ding, R.; Dai, L.; Li, G.; Liu, H. TDD-net: A tiny defect detection network for printed circuit boards. CAAI Trans. Intell. Technol. 2019, 4, 110–116.

- Kim, J.; Ko, J.; Choi, H.; Kim, H. Printed Circuit Board Defect Detection Using Deep Learning via A Skip-Connected Convolutional Autoencoder. Sensors 2021, 21, 4968.

- Liao, X.; Lv, S.; Li, D.; Luo, Y.; Zhu, Z.; Jiang, C. YOLOv4-MN3 for PCB Surface Defect Detection. Appl. Sci. 2021, 11, 11701.

- Li, M.; Yao, N.; Liu, S.; Li, S.; Zhao, Y.; Kong, S.G. Multisensor Image Fusion for Automated Detection of Defects in Printed Circuit Boards. IEEE Sensors J. 2021, 21, 23390–23399.

- Iqbal, A.; Sharif, M. BTS-ST: Swin transformer network for segmentation and classification of multimodality breast cancer images. Knowl.-Based Syst. 2023, 267, 110393.

- Wang, Z.; Zhang, W.; Zhang, M.L. Transformer-based Multi-Instance Learning for Weakly Supervised Object Detection. arXiv 2023, arXiv:2303.14999.

- Lin, F.; Ma, Y.; Tian, S.W. Exploring vision transformer layer choosing for semantic segmentation. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–9 June 2023; pp. 1–5.

- Ru, L.; Zheng, H.; Zhan, Y.; Du, B. Token Contrast for Weakly-Supervised Semantic Segmentation. arXiv 2023, arXiv:2303.01267.

- Xu, Y.; Zhang, J.; Zhang, Q.; Tao, D. ViTPose+: Vision Transformer Foundation Model for Generic Body Pose Estimation. arXiv 2022, arXiv:2212.04246.

- Chang, Z.; Feng, Z.; Yang, S.; Gao, Q. AFT: Adaptive Fusion Transformer for Visible and Infrared Images. IEEE Trans. Image Process. 2023, 32, 2077–2092.

- Chang, Z.; Yang, S.; Feng, Z.; Gao, Q.; Wang, S.; Cui, Y. Semantic-Relation Transformer for Visible and Infrared Fused Image Quality Assessment. Inf. Fusion 2023, 95, 454–470.

- Regmi, S.; Subedi, A.; Bagci, U.; Jha, D. Vision Transformer for Efficient Chest X-ray and Gastrointestinal Image Classification. arXiv 2023, arXiv:2304.11529.

- Zhu, L.; Li, Y.; Fang, J.; Liu, Y.; Xin, H.; Liu, W.; Wang, X. WeakTr: Exploring Plain Vision Transformer for Weakly-supervised Semantic Segmentation. arXiv 2023, arXiv:2304.01184.

- Lu, C.; Zhu, H.; Koniusz, P. From Saliency to DINO: Saliency-guided Vision Transformer for Few-shot Keypoint Detection. arXiv 2023, arXiv:2304.03140.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, June 2018.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep Sparse Rectifier Neural Networks. J. Mach. Learn. Res. 2011, 15, 315–323.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Huang, W.; Wei, P. A PCB Dataset for Defects Detection and Classification. arXiv 2019, arXiv:1901.08204.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Hao, K.; Chen, G.; Zhao, L.; Li, Z.; Liu, Y. An insulator defect detection model in aerial images based on multiscale feature pyramid network. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

- Liu, J.; Li, H.; Zuo, F.; Zhao, Z.; Lu, S. KD-LightNet: A Lightweight Network Based on Knowledge Distillation for Industrial Defect Detection. IEEE Trans. Instrum. Meas. 2023, 72, 3525713.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).