1. Introduction

Currently, with the rapid progress of deep learning algorithms and computer vision technologies, the accuracy of facial expression recognition (FER) has gradually improved. As a result, FER is now applied in various human-computer interaction systems, e.g., social robots, medical equipment, and fatigue driving monitoring. In the online learning field, facial expressions are important explicit characteristics of learners' states. Thus, improving the perception of learners' emotional states based on FER has attracted increasing attention in this field [1]. However, when applied to online learning, the FER task faces the following problems [2]: (1) Images captured under natural conditions are often of low quality. (2) FER must account for differences between usage scenarios. For example, in a natural scene with uncertain lighting and occlusion, the image parameters must be adjusted adaptively and the influence of occlusion eliminated, whereas in a laboratory environment, better classification results can be obtained because there is less environmental interference. (3) Manual labeling is easily affected by the subjectivity of labelers, which produces noisy labels and degrades classification accuracy. (4) Due to the interclass similarity and annotation ambiguity of facial expression data, the FER task is more challenging than traditional classification tasks.

Typically, traditional FER methods consist of four parts, i.e., raw image input, data preprocessing, feature engineering, and expression classification [3]. Feature engineering is the most important stage of these methods: features must be extracted manually and then fed into the classifier for learning. Because classification performance depends closely on the quality of these handcrafted features, such methods adapt poorly to new conditions, and their recognition accuracy is typically limited.

The end-to-end supervised deep learning method is the most common classification paradigm for FER, and its classification performance largely depends on a large amount of high-quality labeled data [4]. However, collecting large-scale datasets with highly accurate annotations is generally expensive and time-consuming, and training with insufficient data leads to poor generalization caused by overfitting. Thus, data must be collected and cleaned on a large scale, or the number of samples must be expanded using data augmentation techniques.

In recent years, owing to the powerful feature learning ability of deep learning, FER methods based on deep neural networks have made remarkable progress. For example, Wang et al. proposed SCN [5], which suppresses the uncertainty of facial expression data to learn robust FER features. SCN comprises self-attention importance weighting, ranking regularization, and relabeling modules. The self-attention importance weighting module learns a weight for each face image that captures the importance of the sample for training and is used for loss weighting. The ranking regularization module highlights high-certainty samples and suppresses uncertain ones, and the relabeling module attempts to identify mislabeled samples and correct their labels. Wang et al. proposed RAN to address FER under occlusion and pose variation in natural scenes [6]. RAN divides the input face image into several regions and feeds them to a backbone convolutional neural network (CNN) for feature extraction. It then uses self-attention and relation-attention modules to aggregate the facial region features in static images, and it introduces a region-biased loss to increase the weights of important regions for classification. Wen et al. proposed DAN to address the low recognition performance caused by the interclass similarity of facial expression images [7]. DAN uses a feature clustering network to maximize the class separability of the backbone facial expression features. A multi-head cross-attention network then captures multiple distinct attentions, and an attention fusion network penalizes overlapping attentions and fuses the learned features. Zhang et al. proposed EAC to handle noisy labels from a feature-relearning perspective [8]. Their method exploits erasing attention consistency and uses an imbalanced framework to prevent the model from memorizing noisy labels. Liao et al. proposed a locally improved residual network attention model (RCL-Net), which introduces local binary pattern (LBP) features in the facial expression feature extraction stage to capture texture information in the expression image, emphasizing facial feature information and improving recognition accuracy [9]. Qiu et al. proposed a local sliding window attention network (SWA-Net) for FER. Its sliding window strategy performs feature-level cropping, which preserves the integrity of local features without complex preprocessing; its local feature enhancement module mines fine-grained features with intra-class semantics through a multiscale deep network; and its adaptive local feature selection module guides the model to find the most essential local features [10].

Because of limitations in their network structures, the above methods cannot make full use of the spatial and channel information in facial images, and they lack attention consistency constraints, which limits the recognition accuracy of the models. Therefore, the proposed HDCNet first extracts facial expression-related features in the spatial and channel domains and then constrains the consistent expression of these features through a mixed domain consistency loss function, which is used to learn the network weights that optimize the classification network. Unlike EAC, which only constrains feature learning in the spatial domain, HDCNet further enforces consistency of the channel representation probability distribution in the channel domain, thereby further enhancing the contribution of label-related image regions to FER. Our primary contributions are summarized as follows:

(1) A simple and effective mixed domain consistency constraint is proposed. By extracting facial expression features in the spatial and channel domains and constraining their consistent expression with a mixed domain consistency loss function, HDCNet achieves state-of-the-art FER accuracy (90.35% on RAF-DB and 60.40% on AffectNet).

(2) In the spatial domain, the loss function is designed based on the prior assumption that spatial attention remains consistent under image transformation. In the channel domain, the loss function is designed based on the consistency of the channel representation probability distribution before and after image transformation.

(3) Extensive evaluation and ablation experiments on multiple benchmarks verify the effectiveness of mixed domain consistency, with improvements of 0.39–3.84% on RAF-DB and 0.13–1.23% on AffectNet.

The remainder of this paper is organized as follows. Section 2 introduces research on the attention mechanism, the principle of attention consistency, and applications of class activation maps. Section 3 describes the proposed method and modules, as well as the overall architecture and the design of the loss function. In Section 4, we discuss the experimental process and analyze the experimental results. Finally, the paper is concluded in Section 5.

2. Related Works

In this section, we introduce the attention mechanism, attention consistency, class activation mapping, and other technologies involved in the proposed HDCNet.

2.1. Attention Mechanism

In essence, an attention mechanism is a set of weight coefficients learned autonomously by the network and applied through dynamic weighting to emphasize regions of interest while suppressing irrelevant background regions. Attention mechanisms can be broadly classified into channel attention, spatial attention, hybrid attention, and self-attention [11].

Channel attention strengthens important features and suppresses unimportant ones by modeling the correlation between the feature maps of different channels and assigning a different weight coefficient to each channel. For example, SENet adaptively adjusts the feature responses between channels through feature recalibration [12]. Inspired by the Inception block and the SE block, SKNet considers multiscale feature representation by introducing multiple convolution kernel branches [13,14]; attending to feature maps at different scales allows the network to focus on features at the important scales. In addition, ECANet replaces the two fully connected layers that SENet uses to learn the correlations between channels, which first reduce and then restore the channel dimensionality, with a one-dimensional convolution, thereby avoiding dimensionality reduction [15].
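To make the recalibration idea concrete, the following is a minimal pure-Python sketch of SENet-style channel attention on a single C×H×W feature map stored as nested lists. The weight matrices `w1` and `w2` are hypothetical stand-ins for learned parameters, and biases are omitted; the sketch illustrates the squeeze-and-excitation pattern rather than reproducing a reference implementation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def se_recalibrate(feat, w1, w2):
    """Squeeze-and-excitation style channel attention (illustrative sketch).

    feat: C x H x W feature map as nested lists.
    w1:   (C // r) x C reduction weights (hypothetical, normally learned).
    w2:   C x (C // r) expansion weights (hypothetical, normally learned).
    Biases are omitted for brevity.
    Returns (recalibrated feature map, per-channel weights).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    # Excitation: reduce -> ReLU -> expand -> sigmoid, one weight per channel.
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]
    s = [sigmoid(sum(a * b for a, b in zip(row, hidden))) for row in w2]
    # Recalibration: every value in channel c is scaled by s[c].
    out = [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, feat)]
    return out, s


# Channel 0 is active, channel 1 is silent (reduction ratio r = 2).
feat = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
out, s = se_recalibrate(feat, w1=[[1.0, 1.0]], w2=[[1.0], [-1.0]])
```

In this example, the active channel receives the weight sigmoid(1) ≈ 0.73 and the silent channel sigmoid(-1) ≈ 0.27, so the recalibration emphasizes the first channel.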

Spatial attention aims to improve the feature expression of key regions. Essentially, it transforms the spatial information of the original image into another space using a spatial transformation module while retaining key information. It generates a weight mask for each position and weights the output accordingly, thereby highlighting specific target regions of interest while attenuating irrelevant background regions. For example, CBAM adds a spatial attention module after its channel attention module [16]. Generally, spatial attention ignores the information interaction between channels because it treats the features in every channel equally.
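The position-wise weight mask can be illustrated with a simplified, CBAM-style sketch in pure Python. CBAM fuses the channel-average and channel-max maps with a 7×7 convolution; here a hypothetical per-position linear fusion (parameters `a`, `b`, and `bias`) stands in for that convolution to keep the example short, so this is an approximation of the idea rather than CBAM itself.

```python
import math


def spatial_attention(feat, a=1.0, b=1.0, bias=0.0):
    """Simplified CBAM-style spatial attention (illustrative sketch).

    feat: C x H x W feature map as nested lists.
    a, b, bias: hypothetical fusion parameters replacing CBAM's 7x7 conv.
    Returns (reweighted feature map, H x W mask).
    """
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    mask = [[0.0] * W for _ in range(H)]
    for h in range(H):
        for w in range(W):
            column = [feat[c][h][w] for c in range(C)]
            avg_pool = sum(column) / C  # average over channels
            max_pool = max(column)      # maximum over channels
            score = a * avg_pool + b * max_pool + bias
            mask[h][w] = 1.0 / (1.0 + math.exp(-score))  # sigmoid
    # The same mask value scales every channel at (h, w): all channels
    # are treated equally at a given position.
    out = [[[feat[c][h][w] * mask[h][w] for w in range(W)] for h in range(H)]
           for c in range(C)]
    return out, mask


feat = [[[0.0, 4.0]], [[0.0, 2.0]]]  # C = 2, H = 1, W = 2
out, mask = spatial_attention(feat)
```

The background position receives a mask value of 0.5 and the strongly activated position a value close to 1, while both channels at each position share the same weight.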

Self-attention is a variant of the attention mechanism that aims to reduce dependence on external information and to exploit the inherent information of the features as much as possible for attention interaction. It first appeared in the Transformer architecture proposed by Google. Later, Wang et al. applied self-attention to computer vision and proposed the Non-Local module, which models the global context through self-attention and effectively captures long-range feature dependencies. Generally, the original feature map is mapped into three branches, i.e., Q (query), K (key), and V (value). First, the correlation weight matrix between Q and K is calculated; then, the weight matrix is normalized using the SoftMax function; finally, the normalized weights are applied to V to model global context information [17]. The dual attention mechanism proposed by DANet applies the non-local concept to the spatial and channel domains simultaneously, using spatial pixel positions and channel features, respectively, as queries to realize context modeling [18].
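The Q/K/V procedure described above can be sketched in pure Python over N positions (for example, the H×W positions of a flattened feature map). The projection matrices are hypothetical placeholders for learned weights. The Transformer additionally scales the dot products by the square root of the key dimension; that scaling is omitted here, as in the embedded-Gaussian form of the Non-Local block.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def project(matrix, vec):
    """Multiply a (d_out x d_in) matrix by a length-d_in vector."""
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]


def self_attention(tokens, wq, wk, wv):
    """Non-local style self-attention over N positions (illustrative sketch).

    tokens: N feature vectors (e.g., the H*W positions of a flattened map).
    wq, wk, wv: hypothetical projection matrices producing Q, K, and V.
    """
    q = [project(wq, t) for t in tokens]
    k = [project(wk, t) for t in tokens]
    v = [project(wv, t) for t in tokens]
    out = []
    for qi in q:
        # Correlation between query i and every key, normalized by SoftMax.
        weights = softmax([sum(a * b for a, b in zip(qi, kj)) for kj in k])
        # Weighted sum of all values: global context for position i.
        out.append([sum(wt * vj[d] for wt, vj in zip(weights, v))
                    for d in range(len(v[0]))])
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention([[1.0, 0.0], [0.0, 1.0]], identity, identity, identity)
```

Every output position is a convex combination of all value vectors, which is how the module aggregates long-range context regardless of spatial distance.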

2.2. Consistency of Attention

Generally, the plausibility of class activation mapping (CAM) heatmaps reflects the performance of CNN classifiers [19]. If the attention heatmap emphasizes the semantic regions related to the considered label, the CNN classifier tends to perform better. A straightforward way to improve the plausibility of attention heatmaps is to provide explicit supervision of label-related regions during CNN training. However, it is very difficult to label relevant regions accurately on a large number of training images, and different annotators may disagree when labeling such regions.

As discussed in [4], various techniques, e.g., data augmentation and image transformation, are employed in multi-label classification tasks to improve the performance of CNN classifiers. However, even when the training images are augmented by these transformations, CNN classifiers do not maintain attention consistency under many spatial transformations. In other words, the attention heatmap of the original image is inconsistent with the inverse-transformed heatmap of the augmented image. Therefore, that work designed an unsupervised loss function called "Attention Consistency Loss" based on the consistency of visual attention under spatial transformations, achieving more plausible visual perception and better image classification performance.

2.3. Class Activation Mapping

CAM generates heatmaps that highlight the image regions contributing to a CNN's prediction of a given class, enabling interpretability and saliency analysis of images. CAM algorithms are primarily divided into activation-based methods and gradient-based methods.

Activation-based methods obtain the heatmap of a category by computing a weighted sum of the output feature maps of a convolutional layer. The original CAM algorithm uses the weights of the FC layer that follows the global average pooling layer as the weights for summing the feature maps [19]. The ScoreCAM method obtains the weight of each channel from the target-class score produced when the input image is masked by that channel's upsampled activation map, and then performs a weighted summation of the feature maps [20]. The ssCAM method adds a spatial attention mechanism to ScoreCAM and further improves the accuracy of attention by applying a spatial attention transformation to the feature maps [21]. In addition, the AblationCAM method calculates the contribution of each channel to the final result by removing channels one at a time to obtain the heatmap [22].

Gradient-based methods primarily use backpropagation to compute the importance score of each position from the gradients of the target-category score with respect to the output feature maps of a convolutional layer. The GradCAM method performs global average pooling on the gradients of the output feature maps to obtain the weight coefficients and then computes a weighted summation of the feature maps [23]. The GradCAM++ method adds higher-order gradient information to GradCAM to obtain a more refined heatmap [24]. The LayerCAM method computes weights element-wise: each element of each feature map has its own weight coefficient, which reflects the importance of the feature map more finely than previous methods [25].
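The two families are closely related. The short derivation below, a standard observation sketched under the assumption that the last convolutional feature maps F feed directly into GAP followed by a linear FC layer with weights W (a bias term, if present, does not affect the gradient), shows that for such networks the GradCAM weights reduce to the CAM weights up to a positive factor:

```latex
\begin{aligned}
S_l &= \sum_{c=1}^{C} W_{l,c}\,\frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} F_c(h,w)
\quad\Rightarrow\quad
\frac{\partial S_l}{\partial F_c(h,w)} = \frac{W_{l,c}}{HW},\\
\alpha^{l}_{c} &= \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W}\frac{\partial S_l}{\partial F_c(h,w)}
= \frac{W_{l,c}}{HW},\\
\mathrm{GradCAM}_l(h,w) &= \mathrm{ReLU}\Big(\sum_{c=1}^{C}\alpha^{l}_{c}\,F_c(h,w)\Big)
= \frac{1}{HW}\,\mathrm{ReLU}\Big(\sum_{c=1}^{C} W_{l,c}\,F_c(h,w)\Big).
\end{aligned}
```

Here S_l is the pre-SoftMax score of class l. The final expression is the FC-weighted sum of feature maps used by CAM, scaled by 1/(HW) and passed through ReLU, so GradCAM generalizes CAM to architectures without the GAP+FC structure.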

The above CAM algorithms have achieved good performance in several tasks, e.g., image classification and object localization; however, they also have limitations. First, the original CAM requires a specific network structure, in which global average pooling is applied to the last convolutional layer directly before the FC layer; thus, it cannot be directly applied to all types of network structures. Second, gradient-based methods are susceptible to background noise, which reduces the accuracy of the heatmaps. Activation-based methods also have problems: for example, on imbalanced datasets, the trained model tends to focus on categories with many samples, which affects its generalization on test data. In addition, these algorithms only focus on the target category and do not consider the influence of other categories; thus, some important contextual information may be ignored.

3. Proposed Method

In this section, we describe the proposed HDCNet method in detail.

Most typical CNN architectures, e.g., the Inception [

14] and VGGNet [

26] networks, begin with convolutional layers, and then they perform global average pooling on the feature maps from the last convolutional layer. The pooled features are then input to the final fully connected (FC) layer for classification. The structure of the proposed HDCNet is shown in

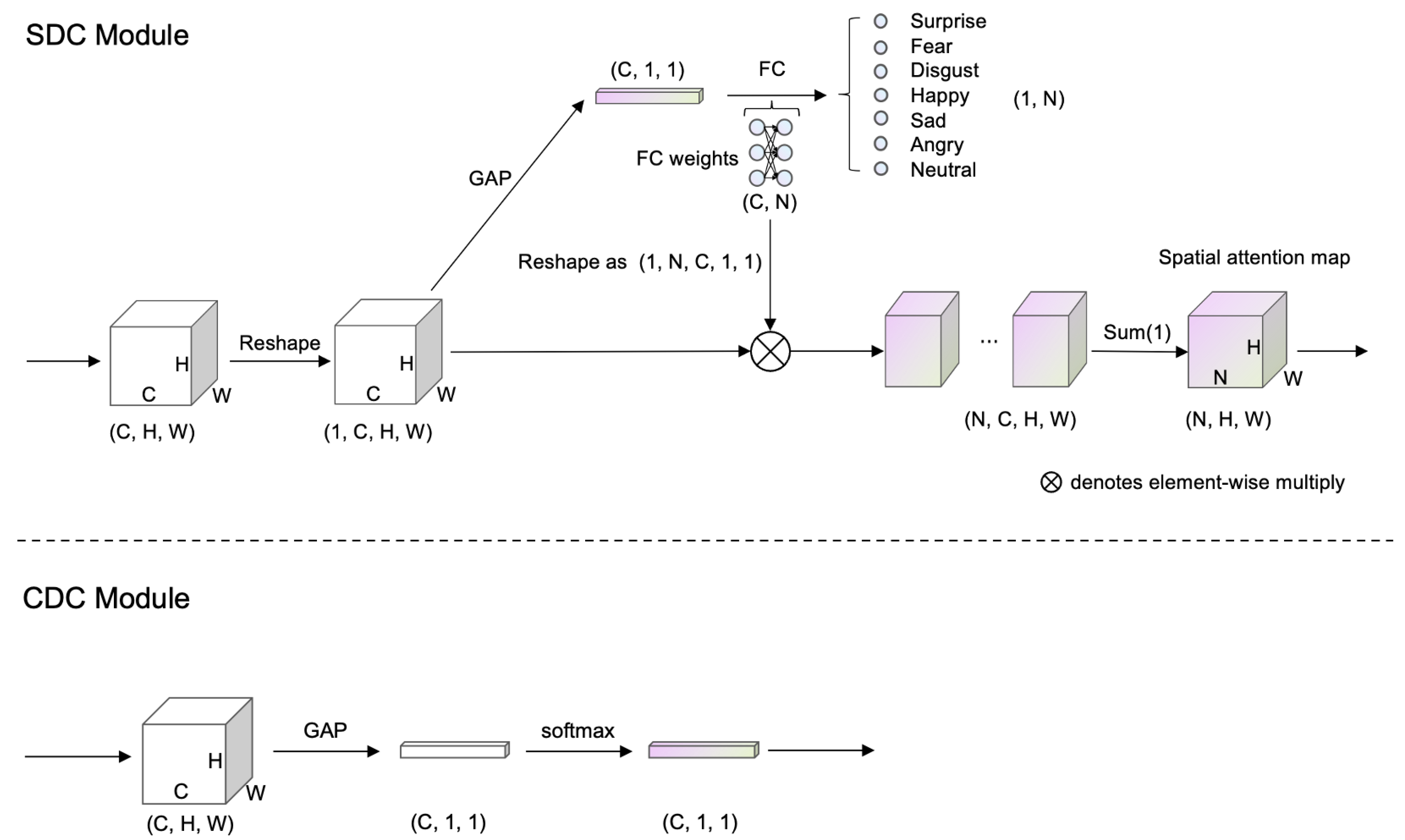

Figure 1. To learn high-quality features and realize better feature decoupling, our mixed domain consistency module is divided into two parts, i.e., the spatial domain consistency (SDC) constraint module and the channel domain consistency (CDC) constraint module, for short: SDC module and CDC module, respectively.

First, we apply data augmentation to a batch of images to obtain each image I and its horizontally flipped image I′, which satisfy $I'_{i,j,k} = I_{i,j,\,w-k+1}$, where i, j, k, and w are the channel index, height index, width index, and width of the image, respectively. The images I and I′ are input to the backbone simultaneously, and feature extraction yields the feature maps F and F′, which have many channels, low spatial resolution, and basic category-discriminative information. Following the experimental setting of EAC [8], only horizontal flipping and random erasing are applied in the data augmentation stage.
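The construction of the image pair can be sketched in pure Python as follows. The code uses 0-based indices, so the flip condition reads I′[i][j][k] = I[i][j][w - 1 - k]. The simplified random-erasing routine (a single rectangle with hypothetical `max_frac` and `fill` settings) and the choice to erase before flipping, so that I and I′ remain exact mirror images, are assumptions made for illustration.

```python
import random


def hflip(img):
    """Horizontal flip of a C x H x W image.

    0-based form of I'[i][j][k] = I[i][j][w - 1 - k].
    """
    return [[row[::-1] for row in ch] for ch in img]


def random_erase(img, rng, max_frac=0.5, fill=0.0):
    """Erase one random rectangle (simplified random erasing).

    max_frac and fill are hypothetical settings chosen for illustration.
    Returns a new image; the input is not modified.
    """
    C, H, W = len(img), len(img[0]), len(img[0][0])
    eh = rng.randint(1, max(1, int(H * max_frac)))
    ew = rng.randint(1, max(1, int(W * max_frac)))
    top = rng.randint(0, H - eh)
    left = rng.randint(0, W - ew)
    out = [[row[:] for row in ch] for ch in img]
    for c in range(C):
        for j in range(top, top + eh):
            for k in range(left, left + ew):
                out[c][j][k] = fill
    return out


def make_pair(img, rng):
    """Augment once, then flip, so that I and I' differ only by the flip."""
    erased = random_erase(img, rng)
    return erased, hflip(erased)


img = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]]  # C = 1, H = 2, W = 3
i_orig, i_flip = make_pair(img, random.Random(0))
```

Because the flip is its own inverse, applying it twice recovers the original image, which is what later allows the heatmap of I′ to be mapped back onto the coordinate frame of I.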

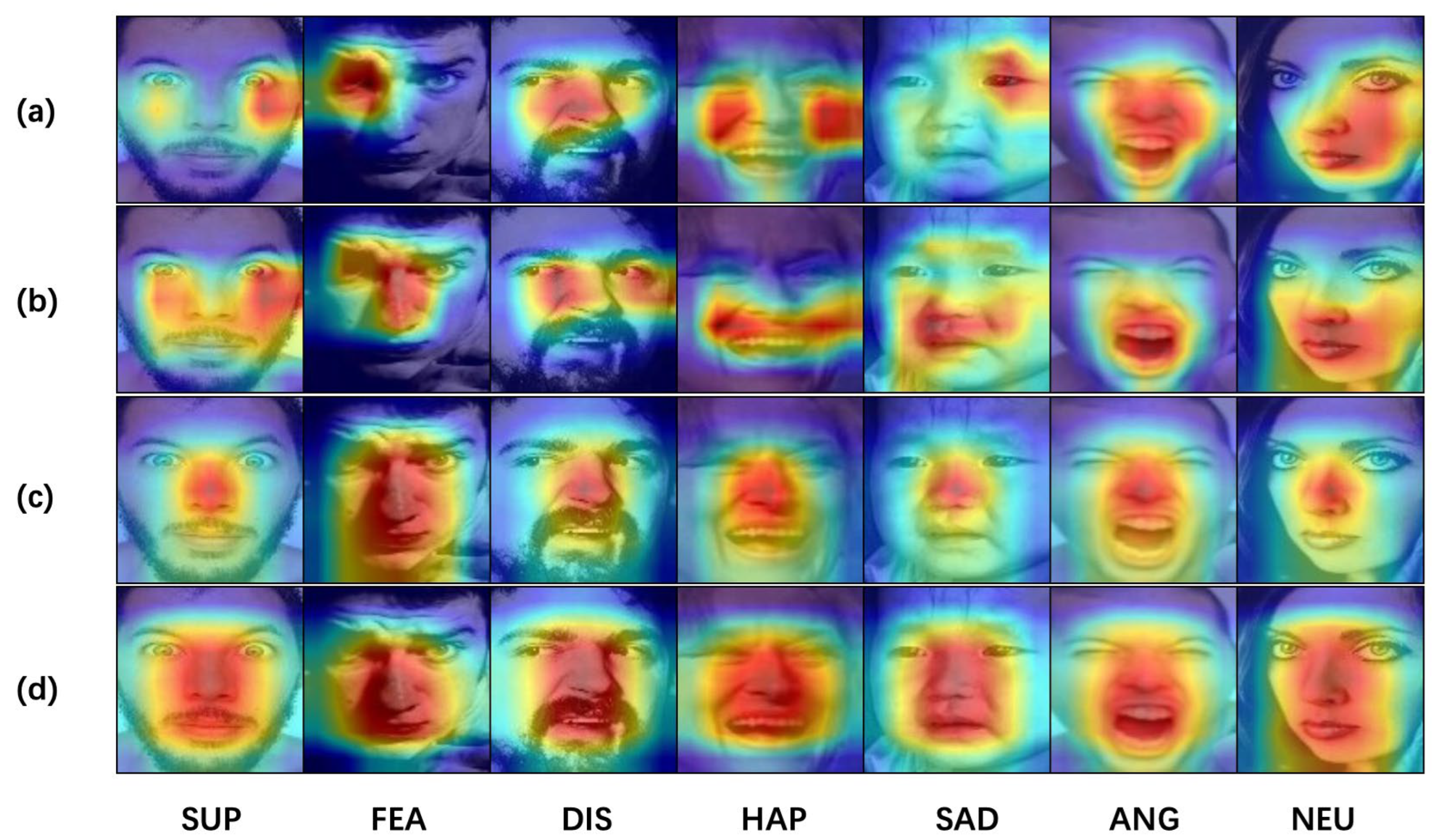

The SDC module generates the CAM heatmaps M and M′ from the feature maps F and F′. By minimizing the MSE between these attention maps, the network learns label-related features in the spatial domain.

The CDC module generates the probability distributions P and Q along the channel dimension from the feature maps F and F′. By minimizing the difference between these distributions, the network stably learns the contribution of each channel to category discrimination, thereby reducing the label-irrelevant features learned by the network.

As shown in Figure 1 and Figure 2, we simultaneously input facial expression images processed with random erasing and horizontal flipping to the ResNet50 backbone CNN. One branch outputs the features of the original image, and the other outputs the features of the horizontally flipped image. The two output feature maps are then processed by the CDC and SDC modules. Note that the CDC and SDC modules share the global average pooling (GAP) layer. Specifically, after GAP compresses the features in the spatial dimension, the CDC module applies the SoftMax function to infer the corresponding channel representation probability distribution, whereas the SDC module weights the pooled global spatial information with the FC layer to obtain the classification probabilities.

3.1. SDC Module

The GAP layer integrates global spatial information. The GAP outputs are weighted by the FC layer weights to obtain the classification probabilities, whereas CAM applies these weights to the feature maps before GAP to obtain a spatial explanation of the classification. Typically, the plausibility of the CAM heatmap reflects the performance of the CNN classifier: if the attention heatmap highlights regions that are semantically related to the considered label, the CNN demonstrates better classification performance. GAP is computed as follows:

$$y_i = \frac{1}{H \times W}\sum_{h=1}^{H}\sum_{w=1}^{W} F_i(h,w) \quad (1)$$

where H and W represent the height and width of the feature map, $F_i(h,w)$ represents the value of channel i of the feature map at position (h,w), and $y_i$ represents the mean over all pixels of channel i.

As shown in Figure 3, the regions of interest in the CAM heatmaps of an image before and after horizontal flipping do not fully overlap. Although the semantic information is unchanged by the transformation, the model's regions of interest change with it, which indicates that the model does not fully focus on label-related regions when performing classification.

The backbone outputs the feature maps $F \in \mathbb{R}^{C\times H\times W}$ (see Table 1), and GAP yields the spatial average of each channel. The weight matrix of the final FC classification layer, $\mathbf{W} \in \mathbb{R}^{L\times C}$, provides the weight of each feature map channel for each label. To compute CAM, F is reshaped to $1\times C\times H\times W$ and $\mathbf{W}$ is reshaped to $L\times C\times 1\times 1$; the two are multiplied channel-wise, and the weighted feature maps of each label are summed along the channel dimension C to obtain the CAM heatmap $M \in \mathbb{R}^{L\times H\times W}$ corresponding to each label, formalized as follows:

$$M_l(h,w) = \sum_{c=1}^{C} \mathbf{W}_{l,c}\,F_c(h,w) \quad (2)$$

where L, C, H, and W are the number of classification categories, the number of channels, and the height and width of the feature map, respectively. $M_l(h,w)$ indicates the attention heatmap of label l at spatial position (h,w), $\mathbf{W}_{l,c}$ indicates the weight of feature map channel c for label l, and $F_c(h,w)$ represents the feature map of channel c from the last convolutional layer at spatial position (h,w).
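The CAM computation described above can be sketched in pure Python with explicit loops in place of the reshape-and-broadcast formulation. The sketch assumes a bias-free FC layer and nested-list tensors; `gap` and `cam` are illustrative helper names, not part of the original method.

```python
def gap(feat):
    """Global average pooling: spatial mean of each channel of a C x H x W map."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]


def cam(feat, fc_w):
    """CAM heatmaps: M[l][h][w] = sum_c fc_w[l][c] * feat[c][h][w].

    feat: C x H x W feature map from the last convolutional layer.
    fc_w: L x C weight matrix of the final FC layer.
    Returns L x H x W heatmaps, one per label.
    """
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    return [[[sum(weights[c] * feat[c][h][w] for c in range(C))
              for w in range(W)]
             for h in range(H)]
            for weights in fc_w]


feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [0.0, 1.0]]]  # C = 2, H = W = 2
fc_w = [[1.0, 0.0], [0.5, 2.0]]                              # L = 2
maps = cam(feat, fc_w)
logits = [sum(a * b for a, b in zip(row, gap(feat))) for row in fc_w]
```

Because GAP and the FC layer are both linear, the spatial mean of each heatmap equals the corresponding bias-free class score: in this example, the mean of `maps[1]` and `logits[1]` are both 2.25. This is why CAM can be read as a spatial decomposition of the classification score.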

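We define the spatial domain consistency loss as the mean squared error (MSE) between the attention heatmap of the original image and the flipped-back attention heatmap of the horizontally flipped image. Optimizing this loss makes the spatial domain attention of the image consistent before and after the transformation, which can be expressed as follows:

$$\ell_{\mathrm{sdc}} = \frac{1}{LHW}\sum_{l=1}^{L}\sum_{h=1}^{H}\sum_{w=1}^{W}\big(M_l(h,w) - \mathrm{Flip}(M'_l)(h,w)\big)^2 \quad (3)$$

where $M'_l$ is the heatmap of label l computed from the flipped image I′, and $\mathrm{Flip}(\cdot)$ denotes the horizontal flip, which maps $M'_l$ back to the coordinate frame of I. Equation (3) represents the spatial domain consistency distance for a single facial expression image; the total spatial domain consistency loss is given in Section 3.3.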
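The per-sample consistency distance can be sketched as follows, assuming, as the inverse-transformation view of Section 2.2 suggests, that the heatmaps of the flipped image are flipped back before the MSE is taken. The helper names are hypothetical.

```python
def hflip_maps(maps):
    """Horizontally flip each H x W heatmap; the flip is its own inverse."""
    return [[row[::-1] for row in m] for m in maps]


def sdc_distance(cam_orig, cam_flipped):
    """Per-sample spatial consistency distance (MSE after flipping back).

    cam_orig:    L x H x W heatmaps M computed from image I.
    cam_flipped: L x H x W heatmaps M' computed from the flipped image I'.
    """
    back = hflip_maps(cam_flipped)
    L, H, W = len(cam_orig), len(cam_orig[0]), len(cam_orig[0][0])
    sq = sum((cam_orig[lb][h][w] - back[lb][h][w]) ** 2
             for lb in range(L) for h in range(H) for w in range(W))
    return sq / (L * H * W)


m = [[[1.0, 0.0]]]                              # L = 1, H = 1, W = 2
consistent = sdc_distance(m, [[[0.0, 1.0]]])    # attention mirrored with the image
inconsistent = sdc_distance(m, [[[1.0, 0.0]]])  # attention stuck on the same pixels
```

The distance is zero when the attention moves with the flipped content and positive when the model attends to the same absolute pixels regardless of the flip, which is the inconsistency illustrated in Figure 3.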

3.2. CDC Module

Each channel contains a specific feature response whose contribution to the final classification result is discriminative. To explore consistency in the channel domain, we therefore propose an a priori hypothesis: for a pair of feature maps F and F′ output by the final convolutional layer, the corresponding channel representation probability distributions P and Q inferred by the CDC module are consistent.

To measure the difference between two probability distributions, we first introduce the Kullback-Leibler (KL) divergence to describe the difference from P to Q:

$$D_{KL}(P \parallel Q) = \sum_{x} p(x)\log\frac{p(x)}{q(x)} \quad (4)$$

where p(x) and q(x) denote the probabilities of P and Q for the xth event, respectively.

The more similar P and Q are, the smaller the KL divergence; it equals zero if and only if P = Q. The difference from Q to P is obtained in the same manner:

$$D_{KL}(Q \parallel P) = \sum_{x} q(x)\log\frac{q(x)}{p(x)} \quad (5)$$
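A small numeric sketch makes the asymmetry concrete. It evaluates the KL divergence in nats for two hypothetical two-event distributions in both directions; `kl_divergence` is an illustrative helper name.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) = sum_x p(x) * log(p(x) / q(x)), in nats.

    Terms with p(x) = 0 contribute 0 by convention; q(x) must be positive
    wherever p(x) is positive.
    """
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)


p = [0.5, 0.5]
q = [0.9, 0.1]
forward = kl_divergence(p, q)   # difference from P to Q
backward = kl_divergence(q, p)  # difference from Q to P
```

Here `forward` ≈ 0.51 nats while `backward` ≈ 0.37 nats, so the two directions disagree even for the same pair of distributions.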

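However, the KL divergence is asymmetric, i.e., in general $D_{KL}(P \parallel Q) \neq D_{KL}(Q \parallel P)$, which may reduce training efficiency and slow convergence. To solve this problem, we use the symmetric Jensen-Shannon (JS) divergence to represent the difference between the two distributions:

$$D_{JS}(P \parallel Q) = \frac{1}{2}D_{KL}(P \parallel M) + \frac{1}{2}D_{KL}(Q \parallel M) \quad (6)$$

where $M = \frac{1}{2}(P + Q)$ represents the intermediate distribution of P and Q. Substituting M gives

$$D_{KL}(P \parallel M) = \sum_{x} p(x)\log\frac{2p(x)}{p(x)+q(x)} \quad (7)$$

$$D_{KL}(Q \parallel M) = \sum_{x} q(x)\log\frac{2q(x)}{p(x)+q(x)} \quad (8)$$

According to Equations (6)–(8), the simplified Equation (9) is obtained as follows:

$$\ell_{\mathrm{cdc}} = D_{JS}\big(P(F) \parallel P(F')\big) = \frac{1}{2}\sum_{x}\left[p(x)\log\frac{2p(x)}{p(x)+q(x)} + q(x)\log\frac{2q(x)}{p(x)+q(x)}\right] \quad (9)$$

where P = P(F) and Q = P(F′), and P(F) represents the channel feature probability distribution of the feature map F. Note that Equation (9) is the channel domain consistency constraint loss for a single sample. Refer to Section 3.3 for the total channel domain constraint loss.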
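The per-sample channel consistency term can be sketched as below. It assumes that P(F) is the SoftMax over the C channel means produced by GAP, which is one reading of the CDC module description; `channel_distribution`, `js_divergence`, and `cdc_distance` are hypothetical helper names.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def channel_distribution(feat):
    """Assumed P(F): SoftMax over the C channel means of a C x H x W map."""
    means = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    return softmax(means)


def js_divergence(p, q):
    """0.5 * sum_x [p log(2p / (p + q)) + q log(2q / (p + q))], in nats."""
    total = 0.0
    for px, qx in zip(p, q):
        if px > 0:
            total += px * math.log(2 * px / (px + qx))
        if qx > 0:
            total += qx * math.log(2 * qx / (px + qx))
    return 0.5 * total


def cdc_distance(feat, feat_flipped):
    """Per-sample channel consistency distance between F and F'."""
    return js_divergence(channel_distribution(feat),
                         channel_distribution(feat_flipped))


p, q = [0.5, 0.5], [1.0, 0.0]
f = [[[1.0, 3.0]], [[0.0, 1.0]]]  # C = 2, H = 1, W = 2
```

Under this assumption, GAP discards spatial positions, so if F′ were exactly the mirror image of F (a perfectly flip-equivariant backbone), the two distributions would coincide and the distance would be zero; the term is non-zero only when the channel responses themselves change under the flip. The JS divergence is symmetric and bounded above by log 2 nats, a value reached for distributions with disjoint support.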

3.3. Full Objective Function

We employ the cross-entropy function as the classification loss, computed from the GAP feature f and the FC layer weights $\mathbf{W}$ as follows:

$$\mathcal{L}_{\mathrm{cls}} = -\frac{1}{N}\sum_{n=1}^{N}\log\frac{e^{\mathbf{W}_{y_n} f_n}}{\sum_{l=1}^{L} e^{\mathbf{W}_{l} f_n}} \quad (10)$$

where $\mathbf{W}_{y_n}$ represents the $y_n$-th row of the FC layer weights, $y_n$ is the label of the n-th sample, and $f_n$ represents the feature obtained by passing the feature map F of the n-th sample through the GAP layer.
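The classification term can be sketched in pure Python as below, assuming a bias-free FC layer and integer labels starting at 0; `cross_entropy` is an illustrative helper name. The log-sum-exp is computed with max subtraction so that large logits do not overflow.

```python
import math


def cross_entropy(features, labels, fc_w):
    """Mean of -log SoftMax(W f_n)[y_n] over N samples (bias omitted).

    features: N GAP feature vectors f_n, each of length C.
    labels:   N integer labels y_n in [0, L).
    fc_w:     L x C FC weight matrix; row l scores label l.
    """
    total = 0.0
    for f, y in zip(features, labels):
        logits = [sum(w * x for w, x in zip(row, f)) for row in fc_w]
        m = max(logits)  # log-sum-exp with max subtraction for stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[y]
    return total / len(features)


fc_w = [[1.0, 0.0], [0.0, 1.0]]  # L = 2, C = 2
```

With an all-zero feature, both labels score equally and the loss is log 2; a feature that favors the true label, such as [2.0, 0.0] for label 0, lowers it to log(1 + e^-2) ≈ 0.127.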

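The total spatial domain consistency loss, derived from Equation (3), can be expressed as follows:

$$\mathcal{L}_{\mathrm{sdc}} = \frac{1}{NLHW}\sum_{n=1}^{N}\sum_{l=1}^{L}\sum_{h=1}^{H}\sum_{w=1}^{W}\big(M_{nl}(h,w) - \mathrm{Flip}(M'_{nl})(h,w)\big)^2 \quad (11)$$

where $M_{nl}$ represents the heatmap of the l-th category label of the n-th sample, $M'_{nl}$ is the corresponding heatmap of the horizontally flipped expression image, N is the sample size, L is the total number of classification categories, and H and W are the height and width of the heatmaps, respectively.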

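The total channel domain consistency constraint loss, derived from Equation (9), can be expressed as follows:

$$\mathcal{L}_{\mathrm{cdc}} = \frac{1}{N}\sum_{n=1}^{N} D_{JS}\big(P(F^{(n)}) \parallel P(F'^{(n)})\big) \quad (12)$$

where $F^{(n)}$ and $F'^{(n)}$ are the feature maps of the n-th sample and of its horizontally flipped version, respectively.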

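Combining Equations (10)–(12), the total objective function is calculated as follows:

$$\mathcal{L} = \mathcal{L}_{\mathrm{cls}} + \lambda_1\mathcal{L}_{\mathrm{sdc}} + \lambda_2\mathcal{L}_{\mathrm{cdc}} \quad (13)$$

where $\mathcal{L}_{\mathrm{cls}}$ represents the classification cross-entropy loss, $\mathcal{L}_{\mathrm{sdc}}$ represents the spatial domain consistency constraint loss, and $\mathcal{L}_{\mathrm{cdc}}$ represents the channel domain consistency constraint loss. In addition, $\lambda_1$ and $\lambda_2$ are the weighting hyperparameters of $\mathcal{L}_{\mathrm{sdc}}$ and $\mathcal{L}_{\mathrm{cdc}}$, respectively. For additional details, refer to Section 4.5.1, where the ablation experiments on $\lambda_1$ and $\lambda_2$ are discussed.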

5. Conclusions

In this paper, we have proposed HDCNet to address difficult problems in the FER task, e.g., uneven illumination, large facial pose changes, occlusions, and low recognition accuracy caused by noisy labels in the target dataset. HDCNet consists of two parts, i.e., the SDC and CDC modules. The SDC module guides the network to learn the label-related regions in the feature map by enforcing consistency between the regions of interest in the CAM heatmaps before and after the input image is flipped horizontally, thereby improving the model's robustness. The CDC module assists the SDC module by minimizing the difference between the channel representations before and after the horizontal flip, so that the channel representation probability distributions become consistent. These two modules cooperate to improve both the accuracy and the robustness of the model.

The proposed HDCNet was evaluated experimentally, and the results on two benchmark datasets demonstrate that it achieves state-of-the-art performance, which highlights the effectiveness and practicality of the proposed method. These results indicate that HDCNet can alleviate difficult problems in FER tasks and suggest that it is broadly applicable in practice.

Future work will explore new methods to measure channel domain consistency, improve the loss function, and make the model more robust to complex expression changes. We also plan to investigate the effectiveness of spatial domain consistency for feature layers at different depths and the effectiveness of the mixed domain consistency method on lightweight models.