Abstract

Unmanned aerial vehicle (UAV) autonomous navigation requires access to translational and rotational positions and velocities. Since there is no single sensor to measure all UAV states, it is necessary to fuse information from multiple sensors. This paper proposes a deterministic estimator to reconstruct the scale factor of the position determined by a simultaneous localization and mapping (SLAM) algorithm onboard a quadrotor UAV. The position scale factor is unknown when the SLAM algorithm relies on the information from a monocular camera. Only onboard sensor measurements can feed the estimator; thus, a deterministic observer is designed to rebuild the quadrotor translational velocity. The estimator and the observer are designed following the immersion and invariance method and use inertial and visual measurements. Lyapunov arguments prove the asymptotic convergence of the observer and estimator errors to zero. The performance of the proposed estimator and observer is validated through numerical simulations using a physics-based simulator.

1. Introduction

Nowadays, quadrotors are used in various applications, thanks to their low cost, mechanical robustness, and high maneuverability. Such applications include homeland security, forest-fire control, surveillance, sea and land exploration, human search and rescue, archaeological exploration, and volcanic activity monitoring, among many others [1,2]. Most of the abovementioned applications become impractical or even dangerous for human operators; thus, autonomous navigation, control, and guidance are required.

A quadrotor can perform autonomous navigation in unknown environments when its autopilot has access to all states. However, providing access to all quadrotor states without relying on a remote computer or sensors demands a vehicle with the capacity to process and extract information from only onboard sensors. The bottleneck to measuring all quadrotor states is that there are no out-of-the-box functional and reliable sensors to measure all states directly. For example, the quadrotor’s attitude is obtained by fusing the measurements from an inertial measurement unit (IMU). On the other hand, the Global Positioning System (GPS) is the primary sensor used for quadrotor positioning, but it presents limitations. It fails in environments where satellite communication is degraded, called GPS-denied environments, such as water bodies and indoors [3]. Furthermore, low-cost GPS does not provide enough resolution for trajectories on a centimeter scale, and the price of a GPS with higher resolution, such as differential GPS, increases drastically.

An algorithm to fuse GPS measurements with optical flow information using a Kalman filter (KF) was proposed in [4]. Shortly after, the work reported in [5] presented an improved sensor fusion algorithm based on an extended Kalman filter (EKF) that includes the measurements from an inertial navigation system (INS). Both sensor fusion algorithms improved the position estimation for low-cost GPS but not for GPS-denied environments.

Computer vision has emerged as a powerful solution for quadrotor position estimation. Visual sensors have many advantages over other sensors: they are cheap, provide color and geometric information for scene understanding, and consume less power. Many computer-vision algorithms are available for position estimation. For example, visual odometry (VO) estimates the ego-motion of a vehicle with an onboard camera. VO incrementally estimates the vehicle’s pose by examining the changes that motion induces on the input images [6]. Using a red, green, blue, depth (RGBD) camera, the method reported in [7] improved the VO algorithm by including a novel covariance estimation technique. The resulting VO-based algorithm allowed autonomous quadrotor navigation with satisfactory results. The depth camera measurement allows for determining the position scale factor.
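The incremental nature of VO can be illustrated with a minimal planar sketch in which each frame-to-frame motion estimate is composed with the current pose. The functions `compose` and `integrate` and the pose representation `(x, y, heading)` are illustrative assumptions; they do not reproduce the algorithms of [6] or [7].

```python
import math

def compose(pose, delta):
    """Compose a planar pose (x, y, heading) with a relative motion
    (dx, dy, dtheta) expressed in the current camera frame."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            th + dth)

def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain all relative motions, as an odometry pipeline would."""
    pose = start
    for d in deltas:
        pose = compose(pose, d)
    return pose

# Four identical increments: one unit forward, then a 90-degree left turn.
square = [(1.0, 0.0, math.pi / 2)] * 4
final_pose = integrate(square)  # closes the square, heading advanced by 2*pi
```

Because every increment is chained onto the previous pose, an error in a single increment propagates to all later poses, which is why odometry-type estimates drift over time.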

The SLAM algorithm estimates the vehicle’s pose and, at the same time, constructs a map of the surroundings. The most successful versions of SLAM, running in real time, are ORB-SLAM [8] and LSD-SLAM [9]. These SLAM variants rely on techniques used to calculate the camera position and construct the map, such as feature extraction, or direct methods that operate on the image intensities [10]. A comparison between SLAM algorithms for mobile robot navigation in indoor environments is reported in [11], where it is concluded that ORB-SLAM can be used to determine the robot position with an additional module to recover the scale factor. The report [12] presents quadrotor autonomous navigation using a SLAM algorithm without determining the position scale factor.

Semidirect visual odometry (SVO) is a hybrid algorithm combining feature-based and direct methods. It estimates the relative motion between two frames by minimizing photometric errors. The projection error between the location of the feature points and their predicted positions is minimized to obtain the optimal camera pose [13]. The autonomous navigation of a UAV using SVO and a recovery mechanism to reinitialize the visual map when a failure occurs are proposed in [14]. The navigation strategy includes a pose estimation scheme for temporary vehicle control and a method to correct the scene scale factor using altitude measurements.

With some differences, computer-vision algorithms can be implemented using monocular or stereoscopic cameras. Stereo cameras can capture three-dimensional images, meaning the scene’s depth is known. This ability leads to better accuracy and resolution than in the monocular camera case for position estimation purposes. The algorithms ORB-SLAM, LSD-SLAM, and SVO, among others, were first developed for monocular camera implementation and, years after, improved for multi-camera configurations as reported in [15,16,17], respectively.

Nevertheless, monocular cameras are preferred for implementation in small vehicles for several reasons: a single camera is easy to mount due to its smaller size, is lighter and cheaper, and consumes less power. Additionally, a single camera configuration is free from the burden of multi-camera calibration and requires less processing power from the CPU onboard than multi-camera configurations [18]. Only one drawback is present for monocular cameras: they cannot recover the image’s three-dimensional structure and the camera position with complete metric information; in other words, the information on the scene’s depth is unavailable. This phenomenon is known as similarity ambiguity [19]. At least one piece of metric information is required to recover the absolute scale factor. This cue may come from prior scene knowledge, such as camera height, object size, vehicle speed, stereo camera baseline, or other sensors such as LiDAR or GPS.
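The similarity ambiguity can be made concrete with a minimal sketch based on an ideal pinhole camera: if the scene and the camera position are multiplied by the same factor, the image coordinates do not change, so the images alone cannot reveal that factor. The function names, the focal length, the points, and the value of `s` are hypothetical and chosen only for illustration.

```python
def project(point, cam_pos, focal=500.0):
    """Ideal pinhole projection of a 3D point seen from a camera at cam_pos
    (camera axes aligned with the world axes, looking along +Z)."""
    X = point[0] - cam_pos[0]
    Y = point[1] - cam_pos[1]
    Z = point[2] - cam_pos[2]
    return (focal * X / Z, focal * Y / Z)

def scale(v, s):
    """Multiply every coordinate of v by the scalar s."""
    return tuple(s * c for c in v)

points = [(1.0, 0.5, 4.0), (-0.8, 0.2, 6.0), (0.3, -1.0, 5.0)]
cam = (0.2, 0.1, 0.0)
s = 3.7  # arbitrary, hypothetical scale factor

pixels = [project(p, cam) for p in points]
# Same scene and camera position, both enlarged by s.
pixels_scaled = [project(scale(p, s), scale(cam, s)) for p in points]
```

The two lists of image coordinates agree up to floating-point rounding, which is why at least one metric measurement is needed to fix the scale.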

Some methods have been proposed to deal with the similarity ambiguity problem. In [20,21], the extra piece of geometric information to determine the position measurement scale factor comes from an ultrasonic sensor and a one-dimensional laser range finder (LRF), respectively. These approaches require additional sensors onboard. Besides, the absolute scale is only calculated on the axis where the sensor is mounted, so it is assumed that the scale is the same on the other two axes, which is not always valid.

An EKF algorithm considering multirotor dynamics is proposed in [22] to estimate the scale factor online. A scale factor observability analysis supports the estimator design. However, using EKF on this approach makes the estimator nondeterministic, so stability is not formally proven.

In the field of deterministic estimators, ref. [23] presents a scale estimator based on control stability. It shows that the absolute scale and control gain have a unique linear relationship. The absolute scale can be estimated by detecting self-induced oscillations and analyzing the system stability. The problem with this approach is that an adaptive control technique must be used for an online estimation, leaving out other types of controllers.

This article presents a scale factor estimator in the Cartesian plane fused with a velocity observer; both are deterministic, based on the quadrotor dynamic model, and use only onboard sensors. The set of sensors provides the quadrotor’s attitude and angular velocity from an attitude and heading reference system (AHRS), the quadrotor’s acceleration from the IMU, and the scaled position from a SLAM algorithm based on a monocular camera. The scale factor estimator and the velocity observer are designed following the immersion and invariance methodology introduced in [24]. The distinctive contributions of this work are: the estimator and observer are designed considering the full quadrotor nonlinear model, the Coriolis forces are not neglected, the position scale factor is reconstructed in all three dimensions, and it is formally proven using Lyapunov arguments that the estimator and observer errors locally asymptotically converge to zero. Numerical simulations using Gazebo are presented to support the theoretical developments. The outcomes of this paper are based on the preliminary works reported in [25,26].

The remaining parts of the paper are arranged as follows. The sensor models used are presented in Section 2, along with the quadrotor dynamics. Section 3 outlines the fundamental contribution of this paper and describes the mathematical advancements used to create the scale factor estimator. Through numerical simulations, Section 4 illustrates the performance of the estimator. Finally, Section 5 wraps up this paper with a few closing thoughts and suggestions for future work.

2. Materials and Methods

Table 1 summarizes the notation used to introduce the quadrotor dynamic model.

Table 1.

Quadrotor dynamic model notation.

2.1. Quadrotor Dynamics

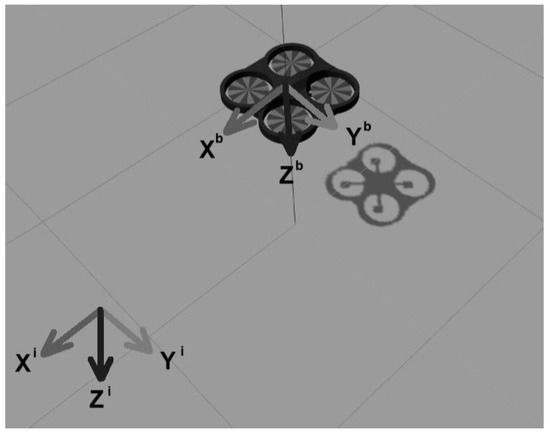

An inertial coordinate frame and a non-inertial coordinate frame (body frame) attached to the quadrotor center of gravity are needed to describe the quadrotor dynamics (see Figure 1). The following equations, expressed in mixed inertial and body coordinates, describe the translational and rotational quadrotor dynamics [26]:

with

Figure 1.

Inertial and Body coordinates.

2.2. Available Sensors

It is assumed that the quadrotor carries onboard a set of sensors that provide the following measurements.

2.2.1. Scaled Position

The vehicle carries a monocular camera facing the horizontal plane and the necessary computer power to implement a monocular-vision algorithm to determine its scaled inertial position. Therefore, the following measurement is available

where is the scaled position delivered by the monocular SLAM vision algorithm and is the dimensionless unknown scale factor on the axes , respectively.

Remark 1.

The operator represents a diagonal matrix whose elements are the elements of vector . This operator satisfies the following identities

with vectors.
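Since the operator of Remark 1 acts element-wise, its properties are easy to check numerically. The sketch below verifies one property that every diagonal operator of this kind satisfies, namely diag{a} b = diag{b} a for two vectors a and b of equal length. The helper names `diag` and `matvec` and the test vectors are hypothetical.

```python
def diag(v):
    """Return the square matrix with the entries of v on its diagonal."""
    n = len(v)
    return [[v[i] if i == j else 0.0 for j in range(n)] for i in range(n)]

def matvec(M, x):
    """Matrix-vector product for a list-of-lists matrix."""
    return [sum(M[i][j] * x[j] for j in range(len(x))) for i in range(len(M))]

a = [2.0, -1.0, 0.5]
b = [4.0, 3.0, -6.0]

lhs = matvec(diag(a), b)  # diag{a} b
rhs = matvec(diag(b), a)  # diag{b} a
```

Both products equal the element-wise product of a and b, which is what allows the roles of a measured vector and an unknown vector to be swapped in expressions of this form.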

2.2.2. Specific Acceleration

Commonly, quadrotors are equipped with an inertial measurement unit (IMU) that measures the Earth’s magnetic field intensity, angular velocity, and specific acceleration in body coordinates. According to the Accelerometer Tutorial reported in [27], the specific acceleration measured by an accelerometer mounted on a quadrotor is given by

where is the specific acceleration measured in the body axis and is the total external force acting on the quadrotor expressed in the body axis. From the quadrotor dynamics model in Equation (1), it follows that

as a result,

Hence, the specific acceleration is an available output; element-wise, it reads as

with , and the specific acceleration along the body axis.

2.2.3. Attitude and Heading Reference Systems

The device that computes the quadrotor’s attitude and rotational velocity from the IMU measurements is called the attitude and heading reference system (AHRS). Assuming that the quadrotor carries an AHRS, the following signals are available.

where , are the columns of the rotation matrix transposed,

is the unit 2-sphere.
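That the columns of the transposed rotation matrix lie on the unit 2-sphere can be checked numerically. The following sketch builds a rotation matrix from roll, pitch, and yaw angles and verifies that each column of its transpose has unit norm. The ZYX Euler convention, the function names, and the angle values are assumptions made for illustration; the property holds for any rotation matrix regardless of the convention.

```python
import math

def rotation_zyx(roll, pitch, yaw):
    """Rotation matrix Rz(yaw) * Ry(pitch) * Rx(roll) (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def transpose(M):
    return [list(col) for col in zip(*M)]

def norm(v):
    return math.sqrt(sum(c * c for c in v))

R = rotation_zyx(0.1, -0.2, 0.7)  # hypothetical attitude in radians
Rt = transpose(R)
# Column j of the transposed matrix, for j = 0, 1, 2.
columns_of_Rt = [[Rt[i][j] for i in range(3)] for j in range(3)]
norms = [norm(c) for c in columns_of_Rt]
```

Each entry of `norms` equals one up to rounding, and the columns are mutually orthogonal, as expected from the orthogonality of rotation matrices.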

2.2.4. Vertical Speed

A laser or ultrasonic range sensor measures the vertical quadrotor position, from which the vertical speed can be determined. As a result, it is assumed that the following measurement is available

Finally, note that the quadrotor translational dynamics, the first equation in (1), expressed in terms of the measured states, read as

2.3. Immersion and Invariance Observers

The following developments are based on Chapter 5 of [24]. Consider the following non-linear, deterministic, time invariant system

where and are the unmeasured and measured states, respectively. It is assumed that the vector fields and are forward complete.

Definition 1.

The dynamic system

with , is an observer for the unmeasured state η if there exists a mapping such that the manifold

has the following properties

- is positively invariant,

The construction of the observer of the form given in Definition 1 requires additional properties on the mapping , as stated in the following result.

Theorem 1.

Consider the system (13). Suppose that there exist differentiable maps such that

- A1

- For all and y the map satisfies

- A2

- The dynamic system has a (globally) asymptotically stable equilibrium at uniformly in and y.

The proof of this Theorem is presented in Appendix A. The result expressed in Theorem 1 is followed to design the velocity observer and the scale factor estimator.
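The idea behind this class of observers can be illustrated on a toy problem that is much simpler than the quadrotor model: a point mass whose position y is measured, whose acceleration a(t) is known, and whose velocity η is not measured. Taking the estimate as ξ + β(y) with β(y) = k y and choosing the observer state dynamics as in the code gives the error dynamics ż = −k z, so the estimate converges exponentially. The system, the gain `k`, the choice of β, and the forward-Euler integration are all assumptions of this sketch and not the observer designed in this paper.

```python
import math

def simulate(k=5.0, dt=0.001, t_end=3.0):
    """Reduced-order observer for y' = eta, eta' = a(t), with y and a known."""
    y, eta = 0.0, 1.0   # true position and true (unmeasured) velocity
    xi = 0.0            # observer state
    t = 0.0
    errors = []
    for _ in range(int(round(t_end / dt))):
        a = math.cos(t)              # known acceleration signal
        eta_hat = xi + k * y         # estimate: xi + beta(y), beta(y) = k * y
        errors.append(eta_hat - eta)
        xi_dot = a - k * eta_hat     # cancels k * eta in the error dynamics
        # Forward-Euler step of the plant and the observer.
        y += dt * eta
        eta += dt * a
        xi += dt * xi_dot
        t += dt
    return errors

errors = simulate()
```

The first entry of `errors` is the initial estimation error and the last entries are several orders of magnitude smaller. Note that the observer never differentiates the measured position, which is the practical benefit of defining the estimate through ξ + β(y).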

3. Observer and Estimator Design

This section discusses how the observer and the estimator that reconstruct and K, respectively, are designed from the available measurements of acceleration, scaled position, attitude, angular velocity, and vertical speed.

3.1. Observation and Estimation Problems

The observation problem is stated as follows. Assume that the outputs are measurable. Design two dynamic systems of the form

where and , such that two functions exist, and , that depend on the available information, and the following identities asymptotically hold

3.2. Velocity Observer

According to the immersion and invariance technique, the observation error is defined as follows

which reads element-wise as

where

and

Equation (18) models the distance to the manifold of Definition 1, where the velocity is equal to . This distance must asymptotically converge to zero to complete the observer design. Note that the output is not directly used since the time derivative of will require the computation of ; this is the reason why the new state is introduced.

The time derivative of is given by

Substituting , the body velocity and the time derivative of from Equations (12), (18), and (20), respectively, one has

Now, the observer state dynamic is defined in terms of the known signals, as

Consider the following Lyapunov function to define the function

it follows that

Hence, to guarantee that the time derivative of is negative-definite, the matrix must also be negative-definite. On the other hand, Equation (23) requires the matrix to be invertible. Note that selecting

with

and , and positive gains, it follows that

with and the identity matrix. As a result, is negative-definite and is invertible.

3.3. Scale Factor Estimator

In reference [25], the scale factor estimator needs the translational velocity as a measurable output, but in this work it is provided by the designed observer (27), according to (17). Note also that, in this equation, depends on states expressed in mixed inertial and body coordinates, unlike , which depends only on states expressed in body coordinates. To express all the states in inertial coordinates, must be transformed with the rotation matrix. The inertial velocity is introduced as follows

Additionally, the inertial velocity observer error is defined

with

Now, the scale factor estimation error is defined in the following form

which reads element-wise as

The derivative with respect to the time of the estimation error is

The dynamic of the scale factor estimator state is defined in terms of the known signals as follows

After substituting into the scale factor estimator error (40), it follows that

Once again, the function needs to be defined to ensure that the estimation error converges to zero with an invertible matrix. Thus, the following vector function is proposed

with

and the scale factor estimator gains.

Replacing (43) into (42), one obtains

where (3), (4) and (33) have been considered. From (31), it follows that

The following assumptions are considered to state the main result of this paper.

Assumption 1.

The following identity holds.

with the identity matrix.

Assumption 2.

There exist control inputs and such that the following quadrotor states can be upper bounded, that is

for some not-necessarily constants and . The notation stands for the Euclidean norm for a matrix or vector .

Remark 2.

Assumption 1 is a persistence of excitation condition; in this case, fulfilling this condition implies that the quadrotor must move to estimate the scale factor successfully. Assumption 2 means that a control loop allows the quadrotor to fly stably; consequently, the quadrotor dynamics is forward complete.
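The role of excitation can be illustrated with a generic scalar gradient estimator, which is not the immersion and invariance estimator of this paper. Suppose a known signal v and a measured signal w are related by w = κ v with κ unknown. The update law in the code yields the error dynamics ė = −γ v² e, so the estimate moves only while v is different from zero. The names `estimate_scale`, `kappa_true`, and `gamma`, as well as all numeric values, are hypothetical.

```python
def estimate_scale(velocity, kappa_true=0.4, gamma=2.0, dt=0.01, steps=2000):
    """Gradient estimator for kappa in w = kappa * v; velocity(t) returns v."""
    kappa_hat = 0.0
    for n in range(steps):
        v = velocity(n * dt)
        w = kappa_true * v  # simulated measurement of the scaled signal
        # Forward-Euler step of kappa_hat' = gamma * v * (w - kappa_hat * v).
        kappa_hat += dt * gamma * v * (w - kappa_hat * v)
    return kappa_hat

hover = estimate_scale(lambda t: 0.0)   # no motion: no excitation
moving = estimate_scale(lambda t: 1.0)  # constant motion: excitation present
```

With zero velocity the estimate stays at its initial value, whereas with sustained motion it converges to `kappa_true`. This mirrors the statement in Remark 2 that the vehicle must move for the scale factor to be estimated.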

The following Proposition summarizes the main result of this work.

Proposition 1.

The proof of this Proposition is reported in Appendix B.

4. Numerical Simulations

A numerical simulation study was performed on different platforms to evaluate the observer and estimator’s performance.

4.1. Matlab-Simulink

The first study was performed in Matlab-Simulink, free of sensor noise and external disturbances, so that the observer and the estimator could be evaluated in isolation. A program was designed to simulate a quadrotor in closed loop with the controller developed in [28], tracking a circular trajectory on the Cartesian plane and a sinusoidal profile along the vertical axis. To fulfill Assumption 1, the desired trajectories were , and . For the velocity observer, the initial conditions used were with a proposed and gains , and . Regarding the monocular-vision positioning algorithm for this numerical simulation, any real values for the scale factor K can be used; nevertheless, in more realistic simulations such as the ones in the next section, it is observed that the scaled position is always smaller than the real position, , so ; hence, the real values of the scale factor for this simulation were fixed at . The gains used for the estimator were , and .
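A reference of the kind described above, circular in the horizontal plane and sinusoidal in altitude, can be generated as in the following sketch. The function name `reference` and every numeric default (radius, angular frequency, mean altitude, and altitude amplitude) are hypothetical placeholders and are not the values used in this simulation.

```python
import math

def reference(t, radius=1.0, omega=0.5, z0=1.0, z_amp=0.25):
    """Circle in the horizontal plane with a sinusoidal altitude profile.
    All numeric defaults are hypothetical."""
    x = radius * math.cos(omega * t)
    y = radius * math.sin(omega * t)
    z = z0 + z_amp * math.sin(omega * t)
    return x, y, z

# Sample the reference every 0.1 s for 20 s.
samples = [reference(0.1 * k) for k in range(200)]
```

A trajectory of this type keeps the vehicle moving along all three axes, which is the practical way of keeping the signals excited during the experiment.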

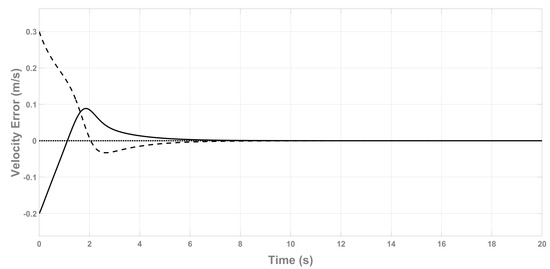

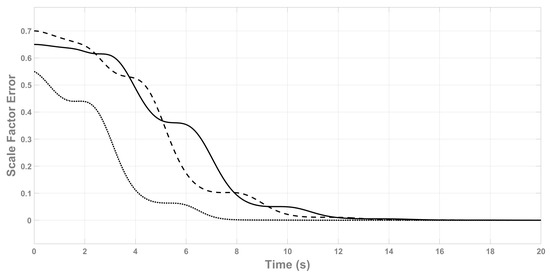

Figure 2 shows the velocity observer error for this simulation, where it can be seen that the velocity errors converge to zero. Note that the velocity error on the Z axis, , is always zero because the speed on this axis is measured; see (11).

Figure 2.

Velocity observer error . (continuous line), (dashed line), (dotted line).

Figure 3 shows the scale factor estimator error, which also confirms the convergence of the estimator. This graph also shows the cascade behavior of (46) with (24), which means that will always converge after due to the interconnection term .

Figure 3.

Scale factor estimator error . (continuous line), (dashed line), (dotted line).

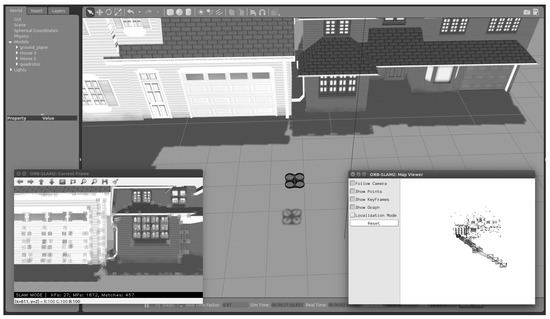

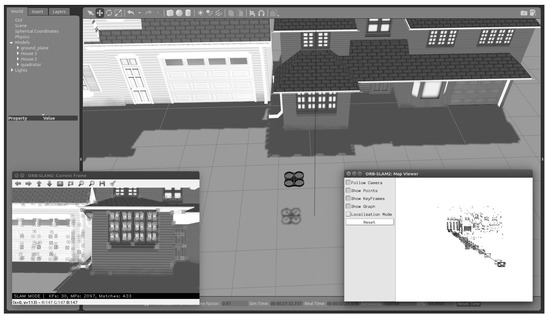

4.2. Gazebo

After confirming the proper operation of the observer and the estimator in a controlled setting, they are tested in Gazebo, a simulator that is closer to reality. Gazebo is an open-source 3D robotics simulator. It incorporates the Open Dynamics Engine (ODE) as a physics engine, OpenGL for graphics, and support code for sensor simulation and actuator control. Gazebo is tightly integrated with the Robot Operating System (ROS).

In the previous simulation, it was easy to set simulation values for the constant and the scaled position delivered by a monocular-vision algorithm. In the Gazebo simulation, such values will have to be treated more rigorously as would be performed in an actual experiment.

4.2.1. Calculation of

is a term related to the drag coefficient, a positive constant representing a combination of the profile and induced drag forces on the rotors, known in the helicopter literature as “rotor drag” [27,29]. Like any other parameter of the quadrotor, such as its weight (m), it must be measured before experiments. Due to the units of this parameter, listed in Table 1, it is known as the “mass flow rate”.

From Equation (8), it can be seen that

From any of these two equations, the constant can be measured by having access to the accelerometer and translational velocities measurements, for example

We have access to both of these measurements in Gazebo, so a circular trajectory tracking simulation was performed to measure these states. The calculated value for the quadrotor used in Gazebo is .
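The identification step can be sketched as a least-squares fit, assuming that the accelerometer reading on a body axis is proportional to the body velocity on that axis with a negative slope, that is, a = −c u, where c is a hypothetical name for the ratio to be identified. The signals below are synthetic, and the sinusoidal ripple is only a stand-in for rotor-induced noise.

```python
import math

def fit_drag_ratio(accel, vel):
    """Least-squares slope c for the assumed model accel = -c * vel."""
    num = sum(a * u for a, u in zip(accel, vel))
    den = sum(u * u for u in vel)
    if den == 0.0:
        raise ValueError("velocity must be non-zero somewhere to fit the slope")
    return -num / den

c_true = 0.35  # hypothetical value used to generate the synthetic data
vel = [math.sin(0.05 * k) for k in range(400)]
# Deterministic ripple standing in for the noise added by the rotors.
accel = [-c_true * u + 0.02 * math.sin(7.3 * k) for k, u in enumerate(vel)]
c_hat = fit_drag_ratio(accel, vel)
```

Fitting over a whole trajectory, instead of dividing one acceleration sample by one velocity sample, averages out the noise and avoids dividing by velocities close to zero.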

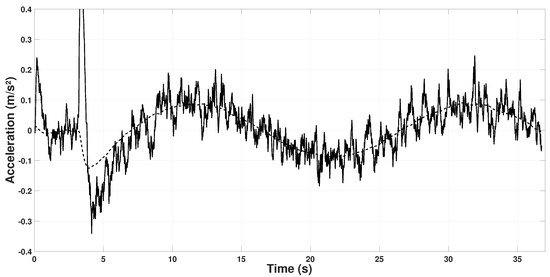

With calculated, Figure 4 shows Equation (48) evaluated on the X axis with the quadrotor following a circular trajectory, where it can be seen that the relation holds, so the calculated is correct. Note that the accelerometer readings () have noise added due to the rotors.

Figure 4.

Relation between accelerometer and on the X axis. (continuous line), (dashed line).

4.2.2. Monocular-Vision Algorithm

As mentioned before, the designed scale factor estimator works with any computer-vision algorithm that uses a monocular camera and delivers position measurements. For this simulation, we use ORB-SLAM2 [15] because of its precision and accuracy, because it is easy to install and well documented, and because it has ROS integration; hence, it is easy to add to the Gazebo environment.

4.2.3. Trajectory Tracking Control Using the Scale Factor Estimator

In the simulation, the quadrotor first flies without position control along a diagonal trajectory in the horizontal plane while ORB-SLAM2 processes the images from its onboard monocular camera. This flight provides the information required to make the identities in (17) hold. After this step, the scale factor K will be known through , so the quadrotor will be able to measure its actual position with , (2). Then, the quadrotor will follow a lemniscate trajectory autonomously (closed-loop).

The low-level controller of the quadrotor is driven by [30], which is a driver to interface with Parrot AR-Drone quadrotors through ROS. It takes translational velocities as control inputs.

Figure 5 shows the Gazebo environment with the SLAM algorithm running. The window on the left shows what the onboard camera is seeing with the features (points) detected, and the window on the right depicts the mapping construction.

Figure 5.

Gazebo environment.

The first step is to fly the quadrotor in open-loop over a diagonal trajectory from position to , after that to the position and finally back to the origin , always maintaining a constant velocity of and .

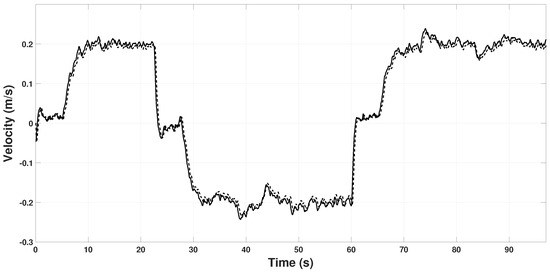

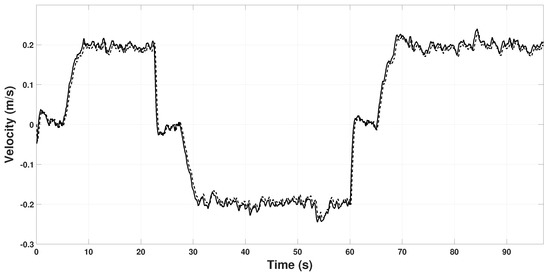

Figure 6 and Figure 7 show the real velocity and the velocity estimated by the observer. Since the real velocity and the observer share the same initial conditions, , and the gains , and are high, the observer converges immediately.

Figure 6.

Observed speed on the X axis. u (continuous line), (dashed line).

Figure 7.

Observed speed on the Y axis. v (continuous line), (dashed line).

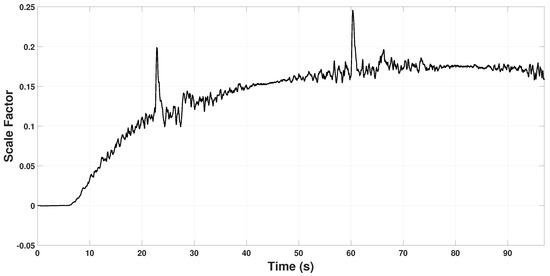

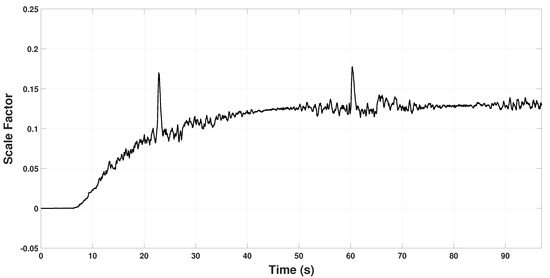

Figure 8 and Figure 9 show the scale factor estimation on the X and Y axes, respectively. Note that from time 0 s to 6 s, the estimator remains at zero because, in that period, the quadrotor takes off and hovers for some seconds, so Assumption 1 is not fulfilled. In the period 22 s to 60 s, some peaks appear because the quadrotor reaches the coordinates and , respectively. Hence, it changes velocity abruptly to change its direction of movement to reach the next point. These changes in velocity can also be seen in Figure 6 and Figure 7 in the same time periods.

Figure 8.

Estimated scale factor on the X axis.

Figure 9.

Estimated scale factor on the Y axis.

After the open-loop routine, is found to converge to and . It can also be seen in Figure 8 and Figure 9 that converges exponentially, as anticipated in (A7). From this point, knowing the scale factor K, the quadrotor will be able to measure its actual position through in (2) as follows

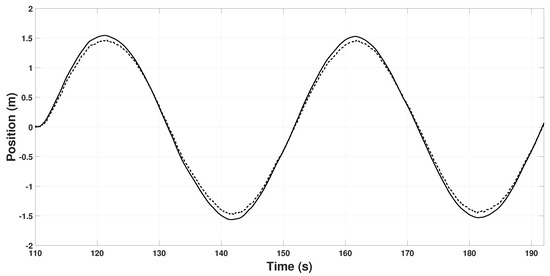

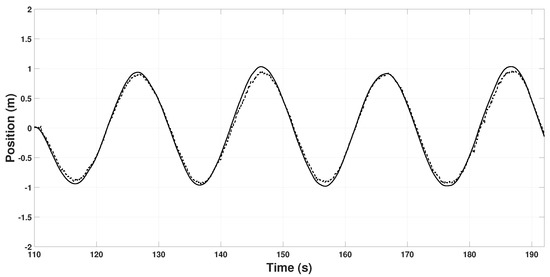

Finally, using the estimated information, the quadrotor will follow a lemniscate trajectory autonomously in closed-loop; that is, and . Figure 10 and Figure 11 show the real position and the position measured by the quadrotor on the X and Y axis, respectively, along the lemniscate trajectory.
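A figure-eight reference such as the one described above can be generated with a standard parametric curve. The sketch below uses the lemniscate of Gerono, x = a sin(ωt), y = a sin(ωt) cos(ωt). This parametrization, the names `A`, `OMEGA`, and `lemniscate`, and the numeric values are assumptions; the exact curve and parameters used in this simulation may differ.

```python
import math

A, OMEGA = 1.0, 0.2  # hypothetical size and angular frequency

def lemniscate(t, a=A, omega=OMEGA):
    """Lemniscate of Gerono: x = a*sin(w*t), y = a*sin(w*t)*cos(w*t)."""
    s = omega * t
    return a * math.sin(s), a * math.sin(s) * math.cos(s)

period = 2.0 * math.pi / OMEGA
# One full figure-eight sampled at 101 equally spaced instants.
path = [lemniscate(period * k / 100) for k in range(101)]
```

The curve starts at the origin, crosses it again at half the period, and returns to it at the end of the period, so two consecutive periods correspond to completing the figure-eight twice.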

Figure 10.

Quadrotor position on the X axis. x (continuous line), (dashed line).

Figure 11.

Quadrotor position on the Y axis. y (continuous line), (dashed line).

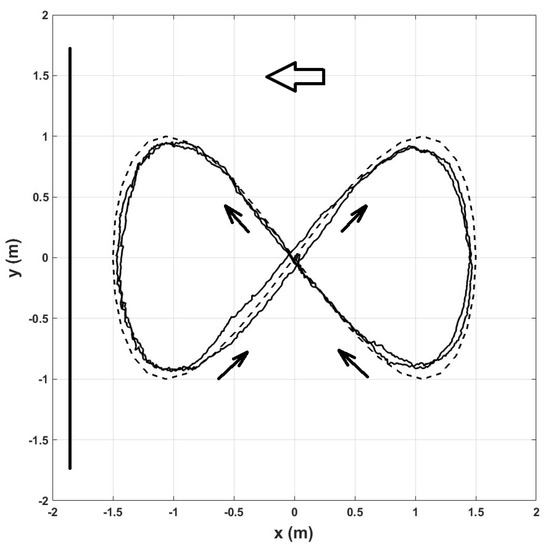

Figure 12 shows the graph of the trajectory validating the closed-loop control of the quadrotor using the proposed scale factor estimator. The quadrotor completed the whole lemniscate trajectory twice within the given simulation time. The big arrow indicates the direction the onboard camera faces throughout the trajectory, the line on the left represents the buildings’ location, and the small arrows indicate the quadrotor motion direction.

Figure 12.

Lemniscate trajectory tracking using the scale factor estimator. Real position (continuous line), desired position (dashed line).

As shown in Figure 12, the SLAM algorithm has better precision in the left part of the lemniscate because the camera is closer to the buildings, so more image features feed the algorithm. On the diagonals, the SLAM algorithm performs better when the quadrotor moves towards the buildings than when it moves away. Although the scale factor estimator recovers the actual position dimension, it does not improve the performance of the SLAM algorithm itself.

Changing the position of the house on the right in the Gazebo environment, we ran a second simulation to check if small changes in the initial conditions of the monocular-vision algorithm influence the final value of the scale factor. In this case, the house on the right is closer to the quadrotor, as shown in Figure 13. At the end of the simulation, the calculated scale factor values were and , showing that the scale factor is different even for the same environment but with minor changes in the initial conditions.

Figure 13.

Gazebo environment with different initial conditions.

5. Conclusions

This article proposed a velocity observer in cascade with a scale factor estimator. The significant contributions of this work are listed next:

- The velocity observer does not neglect the Coriolis term, offering greater accuracy in fast flights.

- The scale factor estimator allows taking advantage of all the benefits of monocular cameras, obtaining the accuracy of a stereoscopic camera without increasing the processing power.

- Lyapunov arguments prove asymptotic convergence to zero of the observer and estimator errors, and the simulations validate the correct performance and use of the proposed theory.

- It is illustrated that the scale factor is not the same in all axes, as some authors assume. It even differs between experiments in the same environment when the initial conditions change.

- The proposed approach allows for position trajectory tracking to be performed directly using the measurements of a monocular-vision positioning algorithm, removing the limitations of a GPS or a motion capture system.

In future work, experiments will be carried out with a real quadrotor in more complex environments, combined with other kinds of computer vision algorithms such as person recognition or obstacle avoidance.

Author Contributions

Conceptualization, A.G.-C. and H.R.-C.; methodology, A.G.-C. and H.R.-C.; software, A.G.-C.; validation, A.G.-C.; formal analysis, H.R.-C.; investigation, A.G.-C. and H.R.-C.; writing—original draft preparation, A.G.-C.; writing—review and editing, H.R.-C.; visualization, A.G.-C.; supervision, H.R.-C.; project administration, H.R.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The simulation files are available upon request to the first author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Appendix B. Proof of Proposition 1

Proof.

The proof is divided into the following steps. In the first step, it is shown that the velocity observer error converges exponentially to zero. Then, it is proven that the trajectories of the estimation error do not explode in finite time. Finally, it is verified that the interconnection term between the observer and estimator dynamics satisfies a linear growth condition to the estimator error.

Note that considering Equation (27), the time derivative of the Lyapunov function (26) can be written as follows

as a result, one has that converges exponentially to zero.
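For completeness, the generic comparison argument that turns a bound on the derivative of a Lyapunov function into exponential convergence is recalled next. The symbols V, z, and λ are placeholders for a quadratic Lyapunov function, an error vector, and a positive rate; they are assumptions of this sketch and are not claimed to coincide with the specific expressions in (26) and (27).

```latex
% Generic comparison argument (placeholder symbols V, z, \lambda).
\dot V(z) \le -2\lambda V(z), \quad \lambda > 0
\;\Longrightarrow\;
V(z(t)) \le V(z(0))\, e^{-2\lambda t}.
% With the quadratic choice V(z) = \tfrac{1}{2} z^\top z, this gives
\|z(t)\| \le \|z(0)\|\, e^{-\lambda t}.
```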

Now, to prove that the estimation error trajectories do not explode in finite time, consider the following Lyapunov function

whose derivative with respect to time along the estimator dynamic (46) is

thus, ( stands for the maximal eigenvalue of matrix ·.)

Hence, since the quadrotor dynamics is forward complete, the relationship in (A6) implies that the dynamic of does not blow up in finite time. Note that if the error signal is equal to zero the estimation error dynamic (46) reduces to

and the time derivative of the Lyapunov function (A4) along the trajectories of (A7) becomes

then, from Assumption A1 and selecting as a positive-definite matrix, it follows that the estimation error converges asymptotically to zero.

The last step in this proof is to prove that the interconnection term between the estimator and observer dynamics given by

grows linearly with respect to . From Assumption A2, one has

References

- Gupte, S.; Mohandas, P.I.T.; Conrad, J.M. A survey of quadrotor Unmanned Aerial Vehicles. In Proceedings of the 2012 IEEE Southeastcon, Orlando, FL, USA, 15–18 March 2012; pp. 1–6.

- Ganesan, R.; Raajini, X.M.; Nayyar, A.; Sanjeevikumar, P.; Hossain, E.; Ertas, A.H. BOLD: Bio-Inspired Optimized Leader Election for Multiple Drones. Sensors 2020, 20, 3134.

- Balamurugan, G.; Valarmathi, J.; Naidu, V.P.S. Survey on UAV navigation in GPS denied environments. In Proceedings of the 2016 International Conference on Signal Processing, Communication, Power and Embedded System (SCOPES), Paralakhemundi, India, 3–5 October 2016; pp. 198–204.

- Xie, N.; Lin, X.; Yu, Y. Position estimation and control for quadrotor using optical flow and GPS sensors. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 181–186.

- Arreola, L.; de Oca, A.M.; Flores, A.; Sanchez, J.; Flores, G. Improvement in the UAV position estimation with low-cost GPS, INS and vision-based system: Application to a quadrotor UAV. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 1248–1254.

- Scaramuzza, D.; Fraundorfer, F. Visual Odometry [Tutorial]. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- Anderson, M.L.; Brink, K.M.; Willis, A.R. Real-Time Visual Odometry Covariance Estimation for Unmanned Air Vehicle Navigation. J. Guid. Control Dyn. 2019, 42, 1272–1288.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

- Chen, Y.; Zhou, Y.; Lv, Q.; Deveerasetty, K.K. A Review of V-SLAM. In Proceedings of the 2018 IEEE International Conference on Information and Automation (ICIA), Wuyishan, China, 11–13 August 2018; pp. 603–608.

- Filipenko, M.; Afanasyev, I. Comparison of Various SLAM Systems for Mobile Robot in an Indoor Environment. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018; pp. 400–407.

- Mouaad, B.; Razika, B.Z.; Ramzi, R.H.; Karim, C. Control Design and Visual Autonomous Navigation of Quadrotor. In Proceedings of the 2019 International Conference on Advanced Electrical Engineering (ICAEE), Algiers, Algeria, 19–21 November 2019; pp. 1–7.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Lin, Y.; Wang, J.; Shi, Z.; Zhong, Y. Reinitializable and scale-consistent visual navigation for UAVs. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 5871–5876.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Engel, J.; Stückler, J.; Cremers, D. Large-scale direct SLAM with stereo cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1935–1942.

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect Visual Odometry for Monocular and Multicamera Systems. IEEE Trans. Robot. 2017, 33, 249–265.

- Giubilato, R.; Pertile, M.; Debei, S. A comparison of monocular and stereo visual FastSLAM implementations. In Proceedings of the 2016 IEEE Metrology for Aerospace (MetroAeroSpace), Florence, Italy, 22–23 June 2016.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2003.

- Esrafilian, O.; Taghirad, H.D. Autonomous flight and obstacle avoidance of a quadrotor by monocular SLAM. In Proceedings of the 2016 4th International Conference on Robotics and Mechatronics (ICROM), Tehran, Iran, 26–28 October 2016; pp. 240–245.

- Zhang, Z.; Zhao, R.; Liu, E.; Yan, K.; Ma, Y. Scale Estimation and Correction of the Monocular Simultaneous Localization and Mapping (SLAM) Based on Fusion of 1D Laser Range Finder and Vision Data. Sensors 2018, 18, 1948.

- Ludhiyani, M.; Rustagi, V.; Dasgupta, R.; Sinha, A. Multirotor dynamics based online scale estimation for monocular SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6475–6481.

- Lee, S.H.; de Croon, G. Stability-Based Scale Estimation for Monocular SLAM. IEEE Robot. Autom. Lett. 2018, 3, 780–787.

- Astolfi, A.; Karagiannis, D.; Ortega, R. Nonlinear and Adaptive Control with Applications; Springer: Berlin/Heidelberg, Germany, 2008; Volume 187.

- Nieto-Hernández, L.; Gómez-Casasola, A.A.; Rodríguez-Cortés, H. Monocular SLAM Position Scale Estimation for Quadrotor Autonomous Navigation. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 1359–1364.

- Gómez-Casasola, A.; Rodríguez-Cortés, H. Sensor Fusion for Quadrotor Autonomous Navigation. In Proceedings of the 2020 American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 5219–5224.

- Leishman, R.C.; Macdonald, J.C.; Beard, R.W.; McLain, T.W. Quadrotors and Accelerometers: State Estimation with an Improved Dynamic Model. IEEE Control Syst. Mag. 2014, 34, 28–41.

- Rodríguez-Cortés, H. Aportaciones al control de vehículos aéreos no tripulados en México. Rev. Iberoam. Autom. Inform. Ind. 2022, 19, 430–441.

- Bramwell, A.R.S.; Done, G.; Balmford, D. Bramwell’s Helicopter Dynamics, 2nd ed.; Butterworth-Heinemann: Oxford, UK, 2001.

- Monajjemi, M. Ardrone_autonomy: A ROS Driver for AR-Drone 1.0 & 2.0. 2012. Available online: https://github.com/AutonomyLab/ardrone_autonomy (accessed on 10 September 2022).

- Sepulchre, R.; Jankovic, M.; Kokotovic, P.V. Constructive Nonlinear Control; Springer: London, UK, 2012.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).