Abstract

This paper considers the trajectory modeling problem for a multi-agent system by using Gaussian processes. The Gaussian process, a typical data-driven method, is well suited to characterizing model uncertainties and perturbations in a complex environment. To address model uncertainties and noise disturbances, a distributed Gaussian process is proposed to characterize the system model through local information exchange among neighboring agents, in which a number of agents cooperate without central coordination to estimate a common Gaussian process function based on local measurements and data received from neighbors. In addition, both continuous-time and discrete-time system models are considered: we design a control Lyapunov function to learn the continuous-time model, and a distributed model predictive control-based approach is used to learn the discrete-time model. Furthermore, we apply a Kullback–Leibler average consensus fusion algorithm to fuse the local prediction results (mean and variance) of the desired Gaussian process. The performance of the proposed distributed Gaussian process is analyzed and verified on two trajectory tracking examples.

1. Introduction

Trajectory tracking is a common problem in control and robotics, and the systems that generate trajectories represent a large class of dynamical physical models. In the past few decades, various control schemes have been investigated and modeled, and most of them can be considered as a subset of computed torque control laws [1]. Generally speaking, in order to track trajectories, one needs to know the system model, such as the kinematic model, observation model, and motion model [2]. However, in many practical applications, the model information is unavailable, or the system model is dynamical and difficult to characterize. The system model often exhibits a high degree of uncertainty, nonlinearity, and dependency, which makes it difficult to model accurately. Therefore, traditional modeling methods are often unsuitable for actual dynamical environments [3]. More recently, data-driven approaches have received increasing attention in many fields, such as the control and machine learning communities [4,5]. Since data-driven methods can learn the system model with high efficiency and precision, they have become a popular choice for system modeling [6,7]. In particular, the Gaussian process (GP) is one of the most representative data-driven methods and has been successfully applied in many fields.

A research frontier in the realm of GP is the trajectory modeling issue. Owing to its capability to handle complex perturbations, uncertainties, dependencies, and nonlinearities, GP is becoming a popular choice in various systems, such as solar power forecasting [8], permanent magnet spherical motors [9], iterative learning control [10], and swarm kinematic models [11]. In particular, GP has been shown to be effective in improving the learning accuracy and effectiveness for uncertainties and dependencies in low-data regimes [12]. More recently, a non-parametric GP was proposed for modeling with quantifiable uncertainty and nonlinearity [13,14] based on an implicit variance trade-off [15,16], which bridges system modeling and data-driven methods. However, the computational burden and hardware requirements make GP impractical for big data sets, and its high cost also severely hinders its application to actual physical systems. The engineering community has acknowledged these limitations and has attempted to address them. Since the learning process can be decomposed into parts that are solved separately, it is natural to address it in a distributed manner. Accordingly, a distributed GP is urgently needed [17,18].

Generally speaking, processing is called distributed if it is carried out through a cooperative strategy among nodes without central coordination [19]. Distributed methods aim to minimize the computation and communication required by each node and to make these requirements scalable in the number of nodes [20]. Distributed methods are available for parameter estimation [14], Kalman filtering [21], control [22], optimization [23], learning [13], etc. A major division among distributed methods is whether all nodes estimate the full system state [24] or each node estimates only a subset of the state variables [25]. The challenge lies in executing the update and fusion steps in a distributed manner. Existing fusion strategies are usually designed from the perspective of state estimates and estimation error covariances. Since the values of a GP at any finite set of inputs are described by a Gaussian probability density function (PDF), a trajectory model constructed by GP requires a fusion strategy designed from the perspective of PDFs [26]. Therefore, this paper designs a novel GP fusion strategy for multi-agent systems. The strategy is as follows: after obtaining the local GP predictions, each agent performs Kullback–Leibler average consensus on these local predictions with its neighbors. The resulting distributed GP model can then be developed and applied in large-scale multi-agent systems.

1.1. Related Works

Gaussian process-based modeling and trajectory tracking have been widely investigated and applied over the past two decades. First, most works focus on the centralized GP and the multi-input–multi-output GP, developed for engineering needs in learning and control such as GP-based tracking control, state space model learning, and their applications to trajectory tracking. For example, Beckers et al. studied stable Gaussian process-based tracking control of Lagrangian systems [1]. Umlauft et al. learned stable Gaussian process state space models [27], while Mohammad et al. learned stable nonlinear dynamical systems with Gaussian mixture models [5]. In addition, Pushpak et al. designed control barrier functions for unknown nonlinear systems using Gaussian processes [28]. Umlauft et al. considered human motion tracking with stable Gaussian process state space models in ref. [29] and proposed an uncertainty-based control Lyapunov approach for control-affine systems modeled by Gaussian processes [30]. They also derived uniform error bounds of Gaussian process regression with application to safe control [31]. Although GP-based trajectory tracking and control are becoming research hotspots, these works focus mainly on a single agent and seldom involve multi-agent systems.

Second, distributed and centralized GPs are flourishing as data-driven learning algorithms for multi-agent systems. The main research results are organized as follows. (1) For models and theories, the unknown map function was modeled and characterized as a GP under a zero-mean assumption, and distributed parametric and nonparametric Gaussian regression was proposed using the Karhunen–Loève expansion in refs. [13,14]. To scale GP to large data sets, Deisenroth et al. introduced the robust Bayesian committee machine, a practical and scalable product-of-experts model for large-scale distributed GP regression [32]. To address the hyperparameter optimization problem in big data processing, Xie et al. proposed an alternative distributed GP hyperparameter optimization scheme using the efficient proximal alternating direction method of multipliers [33]. Multi-task GP was studied in ref. [34], while multi-output regression by GP was studied in ref. [35]; both are centralized approaches that cannot easily be extended to large-scale problems. GP networks combined with variational inference and distributed variational inference were shown to be flexible and effective for multi-output regression in ref. [36], with applications to nonlinear dimension reduction and regression, providing a powerful tool to address uncertainty and over-fitting. (2) For engineering applications, Nerurkar et al. [37] presented a distributed conjugate gradient algorithm for cooperative localization. Franceschelli and Gasparri [38] presented a distributed gossip-based approach to the pose estimation problem. Cunningham et al. [39] developed an approach for robot smoothing and mapping using Gaussian elimination. Distributed localization from distance measurements was studied in [40], and distributed position estimation was considered in [41]. Distributed rotation estimation algorithms were developed for various engineering applications [42,43,44]. A distributed Gauss–Seidel algorithm was studied in [45], and GP for data learning in robotic control was considered in [46]. (3) For trajectory tracking in multi-agent systems, an efficient trajectory generation algorithm was presented in ref. [47], Gaussian mixture models were used to learn stable trajectories in ref. [5], and a centralized GP for human motion tracking was studied in ref. [48]. (4) For distributed model predictive control (MPC), an overview and future research opportunities were discussed in ref. [49]. Cooperative distributed MPC was studied for nonlinear systems in [50], for tracking in ref. [51], for linear systems in ref. [52], and for event-based communication and parallel optimization in ref. [53]. Non-cooperative distributed MPC was investigated in ref. [54]. More recently, explicit distributed and localized MPC via system-level synthesis was investigated in refs. [55,56] and applied to trajectory generation for multi-agent systems in ref. [57]. In short, studies of distributed GP are scarce, especially for the trajectory modeling problem.

1.2. Contributions

More recently, GP has been widely used to model tracking systems and to track targets in real-world environments, such as quadrotor speed racing [58], trajectory tracking for wheeled mobile robots [59], 3D people tracking [60], and Simultaneous Localization and Mapping (SLAM) [61,62]. However, these applications focus on a single agent and ignore the advantages of multi-agent systems. After surveying these references, we find that the trajectory tracking problem is mainly solved by control methods rather than data-driven methods, and that existing work focuses on a single agent rather than multi-agent collaboration. In addition, existing GP-based learning algorithms are limited by their training manner. Motivated by the above discussion, we investigate a distributed GP to learn the trajectory system model in this paper. More specifically, the main contributions of the paper are fourfold. (1) Compared with GP in Lagrangian systems [1,58], this paper considers a general state-space model in both discrete time and continuous time. (2) Compared with centralized GP in the state space model [27,28,29,30], PD control and model predictive control are combined with GP to model and estimate the tracking system, which makes the estimation error globally uniformly bounded. (3) Compared with existing multiple-GP-based centralized approaches, such as collaborative GP [32,33,34,35,63,64], this paper estimates the state with a distributed GP and applies Kullback–Leibler (KL) average consensus to fuse the local training results of GPs, which differs from using the Wasserstein metric to measure GPs [65]. (4) Compared with centralized GPs that provide no performance bound [32,33,34,35,63,64] or only a Kullback–Leibler average analysis [26], this paper analyzes the probabilistic globally ultimate bound of the distributed GP.

1.3. Paper Structure

The remainder of the paper is organized as follows. Section 2 introduces some preliminaries, including notation, graph theory, Gaussian processes, Kullback–Leibler average consensus, and uniform error bounds. Section 3 formulates the problem for the considered systems. Section 4 designs the local control strategy and proposes a Kullback–Leibler (KL) average consensus to fuse the local GP predictions. Section 5 provides two tracking experiments. Finally, Section 6 concludes the paper.

2. Preliminaries

2.1. Notation

Throughout the paper, vectors and vector-valued functions are denoted with bold characters, and matrices are denoted with capital letters. $\mathrm{tr}(\cdot)$, $\log(\cdot)$, $\det(\cdot)$, $\langle\cdot,\cdot\rangle$, $\|\cdot\|$, $\oplus$, $\odot$, $\mathcal{N}(\cdot,\cdot)$, and $\mathcal{GP}(\cdot,\cdot)$ denote, respectively, the trace operation, the logarithm operation, the determinant operation, the inner product, the 2-norm of a matrix or vector, the addition operation of probability density functions (PDFs), the multiplication operation of PDFs, a Gaussian distribution, and a Gaussian process. Moreover, $\dot{x}$, $\ddot{x}$, $\hat{x}$, and $\bar{x}$ denote, respectively, the first-order derivative of $x$, the second-order derivative, the prediction, and the mean of $x$. In addition, $D_{\mathrm{KL}}(p\,\|\,q)$, $\mathbb{E}[\cdot]$, $\mathbb{V}[\cdot]$, and $I_n$ denote, respectively, the KL divergence/distance between probabilities $p$ and $q$, the expectation operation, the variance operation, and an $n\times n$ identity matrix. Finally, $\mathrm{d}$, $\partial$, and $\mathcal{O}(\cdot)$ denote, respectively, the derivative operation, the partial derivative operation, and the complexity.

2.2. Graph Theory

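A graph is defined as $\mathcal{G} = (\mathcal{V},\mathcal{E})$, where $\mathcal{V} = \{1,\dots,N\}$ is a set of nodes and $\mathcal{E}\subseteq\mathcal{V}\times\mathcal{V}$ is a set of edges. In particular, $\mathcal{G}$ is undirected iff $(i,j)\in\mathcal{E}\Leftrightarrow(j,i)\in\mathcal{E}$ for all $i,j\in\mathcal{V}$. The order of $\mathcal{G}$ is $|\mathcal{V}| = N$ and its size is $|\mathcal{E}|$. Further, let $\mathcal{N}_i = \{j\in\mathcal{V} : (j,i)\in\mathcal{E}\}$ denote the set of neighbors of node $i$.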

2.3. Gaussian Process

Definition 1.

([66]). A Gaussian process is a collection of random variables, any finite number of which have a joint Gaussian distribution.

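A Gaussian process is completely specified by its mean function and covariance function. We define the mean function and the covariance function (kernel) of a real process $f(\mathbf{x})$ as $m(\mathbf{x}) = \mathbb{E}[f(\mathbf{x})]$ and $k(\mathbf{x},\mathbf{x}') = \mathbb{E}[(f(\mathbf{x})-m(\mathbf{x}))(f(\mathbf{x}')-m(\mathbf{x}'))]$, and denote the Gaussian process as $f(\mathbf{x})\sim\mathcal{GP}(m(\mathbf{x}),k(\mathbf{x},\mathbf{x}'))$. When $\mathbf{f}$ is an $n$-dimensional map, the GP can be denoted by $\mathbf{f}(\mathbf{x})\sim\mathcal{GP}(\mathbf{m}(\mathbf{x}),\mathbf{k}(\mathbf{x},\mathbf{x}'))$.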

Then, the Gaussian process can be organized as [29]

In addition, the covariance function (kernel) measures the similarity between any two states/variables. Common kernel functions include the linear kernel, the squared-exponential (SE) kernel (also called the Gaussian kernel), the polynomial kernel, and the Matérn kernel.
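For concreteness, the kernels listed above can be written as follows. This is a minimal NumPy sketch; the function names, the hyper-parameter names (`ell`, `sf`, `c`, `p`), and the choice of the Matérn kernel with smoothness ν = 3/2 are illustrative assumptions rather than the paper's implementation.

```python
import numpy as np

def sq_dist(A, B):
    """Pairwise squared Euclidean distances between the rows of A (N x d) and B (M x d)."""
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.maximum(d2, 0.0)  # clip tiny negative values caused by round-off

def linear_kernel(A, B, c=0.0):
    return A @ B.T + c

def se_kernel(A, B, ell=1.0, sf=1.0):
    """Squared-exponential (Gaussian) kernel with length-scale ell and signal std sf."""
    return sf**2 * np.exp(-0.5 * sq_dist(A, B) / ell**2)

def poly_kernel(A, B, c=1.0, p=2):
    return (A @ B.T + c) ** p

def matern32_kernel(A, B, ell=1.0, sf=1.0):
    """Matern kernel with smoothness nu = 3/2."""
    r = np.sqrt(3.0 * sq_dist(A, B)) / ell
    return sf**2 * (1.0 + r) * np.exp(-r)

X = np.random.default_rng(0).normal(size=(5, 2))
K = se_kernel(X, X)
print(np.allclose(K, K.T), np.allclose(np.diag(K), 1.0))  # True True
```

Every kernel returns an N x M Gram matrix, so any of them can be swapped into the GP formulas without changing the surrounding code.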

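Assumption 1.

Suppose the measurement equation is $\mathbf{y} = \mathbf{f}(\mathbf{x}) + \boldsymbol{\epsilon}$, where $\mathbf{y}\in\mathbb{R}^n$ is the observed vector, $\mathbf{x}\in\mathcal{X}\subset\mathbb{R}^d$ is the state vector defined in a compact set $\mathcal{X}$, and $\boldsymbol{\epsilon}$ is the measurement noise obeying a Gaussian distribution with zero mean and variance $\sigma_n^2$ (denoted by $\boldsymbol{\epsilon}\sim\mathcal{N}(\mathbf{0},\sigma_n^2 I_n)$). In addition, $\mathbf{f}:\mathcal{X}\to\mathbb{R}^n$ is the unknown mapping function and is assumed to be a GP (denoted by $\mathbf{f}\sim\mathcal{GP}(\mathbf{0},k(\mathbf{x},\mathbf{x}'))$). Here $k(\cdot,\cdot)$ is a kernel function with respect to the hyper-parameters $\boldsymbol{\theta}$, $K(X,X)$ denotes the covariance matrix of the set $X$, and $K(X,X')$ denotes the covariance matrix between $X$ and $X'$.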

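Generally speaking, given a training set $\mathcal{D} = \{X, Y\}$ with inputs $X = [\mathbf{x}_1,\dots,\mathbf{x}_N]$ and outputs $Y = [\mathbf{y}_1,\dots,\mathbf{y}_N]^\top\in\mathbb{R}^{N\times n}$ [29], the log-likelihood can be computed by

$$\log p(Y\mid X,\boldsymbol{\theta}) = -\frac{1}{2}\sum_{j=1}^{n}\left(Y_{:,j}^\top K_y^{-1}Y_{:,j} + \log\det K_y\right) - \frac{nN}{2}\log(2\pi),\qquad K_y = K(X,X)+\sigma_n^2 I_N. \qquad (2)$$

Then, when a new input $\mathbf{x}^*$ is introduced, the posterior prediction of the Gaussian process [29] is $\mathbf{f}(\mathbf{x}^*)\mid\mathcal{D}\sim\mathcal{N}(\boldsymbol{\mu}(\mathbf{x}^*),\Sigma(\mathbf{x}^*))$, where

$$\boldsymbol{\mu}(\mathbf{x}^*) = Y^\top K_y^{-1}\mathbf{k}(\mathbf{x}^*,X),\qquad \Sigma(\mathbf{x}^*) = \left(k(\mathbf{x}^*,\mathbf{x}^*) - \mathbf{k}(\mathbf{x}^*,X)^\top K_y^{-1}\mathbf{k}(\mathbf{x}^*,X)\right)I_n, \qquad (3)$$

with $\mathbf{k}(\mathbf{x}^*,X) = [k(\mathbf{x}_1,\mathbf{x}^*),\dots,k(\mathbf{x}_N,\mathbf{x}^*)]^\top$.

To summarize, the log-likelihood (2) is maximized with gradient-based methods during training, and the posterior mean and covariance functions (3) are used for fast prediction.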
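The training and prediction steps summarized above can be sketched for a single output dimension as follows. This is a minimal NumPy sketch under stated assumptions: a zero prior mean, an SE kernel with fixed hyper-parameters, and the hypothetical helper names `se_kernel` and `gp_posterior`. A Cholesky factorization is used instead of an explicit inverse for numerical stability.

```python
import numpy as np

def se_kernel(A, B, ell=1.0, sf=1.0):
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return sf**2 * np.exp(-0.5 * np.maximum(d2, 0.0) / ell**2)

def gp_posterior(X, y, Xs, ell=1.0, sf=1.0, sn=0.1):
    """Posterior mean/variance at test inputs Xs and the log marginal likelihood (zero prior mean)."""
    N = X.shape[0]
    Ky = se_kernel(X, X, ell, sf) + sn**2 * np.eye(N)     # K(X,X) + sigma_n^2 I
    L = np.linalg.cholesky(Ky)                            # Ky = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # Ky^{-1} y
    Ks = se_kernel(X, Xs, ell, sf)                        # N x M cross-covariances k(x*, X)
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = sf**2 - np.sum(v**2, axis=0)                    # k(x*, x*) = sf^2 for the SE kernel
    # log det(Ky) = 2 * sum(log(diag(L)))
    loglik = -0.5 * y @ alpha - np.sum(np.log(np.diag(L))) - 0.5 * N * np.log(2.0 * np.pi)
    return mean, var, loglik

X = np.linspace(0.0, 5.0, 30)[:, None]
y = np.sin(X).ravel()
Xs = np.linspace(0.5, 4.5, 9)[:, None]
mean, var, loglik = gp_posterior(X, y, Xs)
```

For $n$ outputs sharing one kernel, the same factorization can be reused for each column $Y_{:,j}$, so only the triangular solves are repeated.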

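More specifically, given an arbitrary new test input $\mathbf{x}^*$ and the data set described above, the prediction $f_j(\mathbf{x}^*)$ is jointly Gaussian distributed with the training outputs:

$$\begin{bmatrix} Y_{:,j} \\ f_j(\mathbf{x}^*) \end{bmatrix}\sim\mathcal{N}\left(\mathbf{0},\begin{bmatrix} K(X,X)+\sigma_n^2 I_N & \mathbf{k}(\mathbf{x}^*,X) \\ \mathbf{k}(\mathbf{x}^*,X)^\top & k(\mathbf{x}^*,\mathbf{x}^*)\end{bmatrix}\right). \qquad (4)$$

For $j = 1,\dots,n$, the posterior distribution of $f_j(\cdot)$ at $\mathbf{x}^*$ is Gaussian with mean and variance

$$\mu(f_j(\mathbf{x}^*)\mid\mathcal{D}) = \mathbf{k}(\mathbf{x}^*,X)^\top\left(K(X,X)+\sigma_n^2 I_N\right)^{-1}Y_{:,j},\qquad \sigma^2(f_j(\mathbf{x}^*)\mid\mathcal{D}) = k(\mathbf{x}^*,\mathbf{x}^*) - \mathbf{k}(\mathbf{x}^*,X)^\top\left(K(X,X)+\sigma_n^2 I_N\right)^{-1}\mathbf{k}(\mathbf{x}^*,X). \qquad (5)$$

Furthermore, to learn the hyper-parameters $\boldsymbol{\theta}$ of a chosen kernel, we can maximize the likelihood based on Bayes' rule:

$$\boldsymbol{\theta}^* = \arg\max_{\boldsymbol{\theta}}\log p(Y\mid X,\boldsymbol{\theta}), \qquad (6)$$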

which can be solved by gradient-based approaches [29].
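As an illustration of this gradient-based hyper-parameter learning, the sketch below maximizes the log marginal likelihood over the log length-scale of an SE kernel, using the standard gradient $\partial\log p/\partial\theta = \tfrac{1}{2}\mathrm{tr}\big((\boldsymbol{\alpha}\boldsymbol{\alpha}^\top - K_y^{-1})\,\partial K_y/\partial\theta\big)$ with $K_y = K(X,X)+\sigma_n^2 I$ and $\boldsymbol{\alpha} = K_y^{-1}\mathbf{y}$. Fixing the signal and noise levels, using a single output, clipping the search range, and the simple step-size adaptation are all assumptions made to keep the example short.

```python
import numpy as np

def loglik_and_grad(log_ell, X, y, sf=1.0, sn=0.1):
    """Log marginal likelihood of a zero-mean GP and its derivative w.r.t. log(length-scale)."""
    ell = np.exp(log_ell)
    sq = np.sum(X**2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = sf**2 * np.exp(-0.5 * D2 / ell**2)
    Ky = K + sn**2 * np.eye(len(y))
    Ky_inv = np.linalg.inv(Ky)
    alpha = Ky_inv @ y
    _, logdet = np.linalg.slogdet(Ky)
    ll = -0.5 * y @ alpha - 0.5 * logdet - 0.5 * len(y) * np.log(2.0 * np.pi)
    dK = K * D2 / ell**2                                # dKy/d(log ell) for the SE kernel
    grad = 0.5 * np.trace((np.outer(alpha, alpha) - Ky_inv) @ dK)
    return ll, grad

def fit_log_lengthscale(X, y, log_ell=0.0, step=0.1, iters=100):
    """Gradient ascent on log(ell); a step is kept only if it increases the likelihood."""
    ll, g = loglik_and_grad(log_ell, X, y)
    for _ in range(iters):
        cand = float(np.clip(log_ell + step * g, -5.0, 5.0))
        cand_ll, cand_g = loglik_and_grad(cand, X, y)
        if cand_ll > ll:
            log_ell, ll, g = cand, cand_ll, cand_g
            step *= 1.2                                 # enlarge the step after a success
        else:
            step *= 0.5                                 # shrink the step after a failure
    return log_ell, ll

X = np.linspace(0.0, 5.0, 30)[:, None]
y = np.sin(X).ravel()
ll0, _ = loglik_and_grad(np.log(0.2), X, y)
log_ell, ll = fit_log_lengthscale(X, y, log_ell=np.log(0.2))
```

Optimizing in log space keeps the length-scale positive without constraints; in practice all hyper-parameters in $\boldsymbol{\theta}$ would be optimized jointly with the same trace formula.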

2.4. Kullback–Leibler Average Consensus Algorithm

This section introduces the consensus/fusion algorithm of GPs. Since the values of a Gaussian process at any finite set of inputs follow a Gaussian probability density function specified by the mean function and the covariance function, the fusion of GPs is indeed the fusion of probabilities. This raises a question: how can consensus/fusion of probabilities be achieved among multiple agents?

Before proceeding, we first introduce some definitions.

Definition 2.

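(Probability space [26]). Let

$$\mathcal{P} = \left\{p(\cdot):\mathbb{R}^n\to\mathbb{R}\;\middle|\;\int p(\mathbf{x})\,\mathrm{d}\mathbf{x} = 1,\ p(\mathbf{x})\ge 0\right\}$$

denote the set of probabilities (PDFs) over $\mathbb{R}^n$, and let $p_i(\cdot)\in\mathcal{P}$ denote the local probability/PDF of agent $i$.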

Definition 3.

(Kullback–Leibler divergence [26]). In statistics, the Kullback–Leibler divergence (also called relative entropy) is a statistical distance: a measure of how the probability distribution $p(\cdot)$ differs from the probability distribution $q(\cdot)$. For distributions $p$ and $q$ of a continuous random variable $\mathbf{x}$, it is defined as

$$D_{\mathrm{KL}}(p\,\|\,q) = \int p(\mathbf{x})\log\frac{p(\mathbf{x})}{q(\mathbf{x})}\,\mathrm{d}\mathbf{x}.$$
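For Gaussian distributions, which are the case of interest for GPs, the integral above has a well-known closed form. The following NumPy sketch (the function name `kl_gauss` is hypothetical) evaluates it and illustrates that the divergence is zero for identical distributions and not symmetric in general.

```python
import numpy as np

def kl_gauss(m0, P0, m1, P1):
    """D_KL(N(m0, P0) || N(m1, P1)) for multivariate Gaussians, in closed form."""
    m0, m1 = np.atleast_1d(m0).astype(float), np.atleast_1d(m1).astype(float)
    P0, P1 = np.atleast_2d(P0).astype(float), np.atleast_2d(P1).astype(float)
    n = m0.size
    P1_inv = np.linalg.inv(P1)
    d = m1 - m0
    _, logdet0 = np.linalg.slogdet(P0)
    _, logdet1 = np.linalg.slogdet(P1)
    return 0.5 * (np.trace(P1_inv @ P0) + d @ P1_inv @ d - n + logdet1 - logdet0)

print(kl_gauss(0.0, 1.0, 1.0, 1.0))  # 0.5
```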

Definition 4.

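(Probabilistic operation [26]). Define the addition and multiplication operators over probabilities ($p, q\in\mathcal{P}$) for a variable $\mathbf{x}$ and a real constant $\alpha > 0$ as

$$(p\oplus q)(\mathbf{x}) = \frac{p(\mathbf{x})\,q(\mathbf{x})}{\int p(\mathbf{x})\,q(\mathbf{x})\,\mathrm{d}\mathbf{x}},\qquad (\alpha\odot p)(\mathbf{x}) = \frac{[p(\mathbf{x})]^{\alpha}}{\int[p(\mathbf{x})]^{\alpha}\,\mathrm{d}\mathbf{x}}. \qquad (7)$$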

Then, we attempt to find a Kullback–Leibler average consensus/fusion algorithm over probabilities obtained by multiple agents.

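First, according to [26], the Kullback–Leibler average (KLA) is an average over probabilities. Motivated by this, we define the weighted KLA $\bar{p}$ among the probabilities $p_1,\dots,p_N$ as

$$\bar{p} = \arg\min_{p\in\mathcal{P}}\sum_{i=1}^{N}\pi_i\,D_{\mathrm{KL}}(p\,\|\,p_i), \qquad (8)$$

where $\pi_i\ge 0$ denotes the weight of agent $i$ and satisfies $\sum_{i=1}^{N}\pi_i = 1$.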
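To make the weighted KLA defined above concrete, the sketch below evaluates it for arbitrary one-dimensional PDFs on a grid. It relies on the minimizer reported in [26], the normalized weighted geometric mean $\bar{p}\propto\prod_i p_i^{\pi_i}$, and checks numerically that this PDF attains a lower weighted KL cost than the weighted arithmetic mixture. The grid, the example densities, and the helper names are assumptions for illustration.

```python
import numpy as np

x = np.linspace(-10.0, 10.0, 4001)
dx = x[1] - x[0]

def normalize(p):
    return p / (np.sum(p) * dx)

def gauss(m, s):
    return normalize(np.exp(-0.5 * ((x - m) / s) ** 2))

def kl(p, q):
    """Grid approximation of D_KL(p || q)."""
    return np.sum(p * np.log(p / q)) * dx

def weighted_kla(pdfs, weights):
    """Normalized weighted geometric mean, computed in the log domain for stability."""
    logp = sum(w * np.log(p) for w, p in zip(weights, pdfs))
    return normalize(np.exp(logp - logp.max()))

def kla_cost(p, pdfs, weights):
    return sum(w * kl(p, q) for w, q in zip(weights, pdfs))

pdfs = [gauss(-1.0, 1.0), gauss(2.0, 0.5)]
weights = [0.5, 0.5]
p_bar = weighted_kla(pdfs, weights)
mixture = normalize(sum(w * p for w, p in zip(weights, pdfs)))
print(kla_cost(p_bar, pdfs, weights) < kla_cost(mixture, pdfs, weights))  # True
```

For these two Gaussians the resulting $\bar{p}$ is again Gaussian, with mean 1.4 and variance 0.4, which anticipates the closed-form Gaussian case discussed below.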

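Then, the average consensus/fusion problem is to achieve

$$\lim_{l\to\infty}p_i^{l} = \bar{p}^{u} \qquad (9)$$

for all agents $i\in\mathcal{V}$, where $l$ is the consensus step and $\bar{p}^{u}$ represents the asymptotic KLA with uniform weights $\pi_i = 1/N$.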

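Second, we seek the solution of the average consensus problem (9). Based on [26], the solution of (8) is

$$\bar{p}(\mathbf{x}) = \bigoplus_{i=1}^{N}(\pi_i\odot p_i)(\mathbf{x}) = \frac{\prod_{i=1}^{N}[p_i(\mathbf{x})]^{\pi_i}}{\int\prod_{i=1}^{N}[p_i(\mathbf{x})]^{\pi_i}\,\mathrm{d}\mathbf{x}}, \qquad (10)$$

and setting $\pi_i = 1/N$ yields $\bar{p}^{u}$. In addition, the local consensus of agent $i$ at the $l$-th consensus step can be obtained by

$$p_i^{l+1} = \bigoplus_{j\in\mathcal{N}_i\cup\{i\}}(\pi_{i,j}\odot p_j^{l}),$$

where $\pi_{i,j}\ge 0$ is the consensus weight satisfying $\sum_{j\in\mathcal{N}_i\cup\{i\}}\pi_{i,j} = 1$, and $\pi_{i,j}$ represents the $(i,j)$-th component of the consensus matrix $\Pi$ ($\pi_{i,j} = 0$ if $j\notin\mathcal{N}_i\cup\{i\}$). Therefore, when the consensus iteration is initialized by $p_i^{0} = p_i$, we finally obtain

$$p_i^{l} = \bigoplus_{j=1}^{N}(\pi_{i,j}^{l}\odot p_j),$$

where $\pi_{i,j}^{l}$ denotes the $(i,j)$-th component of $\Pi^{l}$.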
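For Gaussian local PDFs, one step of the local consensus iteration above amounts to averaging information matrices (inverse covariances) and information vectors over neighbors. The sketch below runs this iteration for scalar Gaussians on a ring of five agents. The ring topology, the Metropolis rule used to build a doubly stochastic consensus matrix, and all numbers are assumptions chosen for illustration; with such a matrix, the local results approach the uniform-weight KLA.

```python
import numpy as np

def metropolis_weights(adj):
    """Doubly stochastic consensus matrix from a symmetric 0/1 adjacency matrix (Metropolis rule)."""
    n = adj.shape[0]
    deg = adj.sum(axis=1)
    Pi = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if adj[i, j] and i != j:
                Pi[i, j] = 1.0 / (1.0 + max(deg[i], deg[j]))
        Pi[i, i] = 1.0 - Pi[i].sum()   # self-weight makes each row sum to one
    return Pi

def kla_consensus(means, variances, Pi, steps=100):
    """Scalar Gaussian KLA consensus in information form (precision, precision * mean)."""
    omega = 1.0 / np.asarray(variances, dtype=float)
    q = omega * np.asarray(means, dtype=float)
    for _ in range(steps):
        omega, q = Pi @ omega, Pi @ q  # each agent mixes its neighbors' information
    return q / omega, 1.0 / omega

adj = np.zeros((5, 5), dtype=int)
for i in range(5):
    adj[i, (i + 1) % 5] = adj[(i + 1) % 5, i] = 1   # ring graph
Pi = metropolis_weights(adj)
means, variances = [0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 0.5, 1.0, 4.0]
m_loc, v_loc = kla_consensus(means, variances, Pi, steps=200)
```

Each agent only needs its neighbors' current information pair at every step, which is what keeps the scheme fully distributed.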

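Third, in the special Gaussian case, the local probability takes the form

$$p_i(\mathbf{x}) = \mathcal{N}(\mathbf{x};\hat{\mathbf{x}}_i,P_i) = \frac{1}{\sqrt{\det(2\pi P_i)}}\exp\left(-\frac{1}{2}(\mathbf{x}-\hat{\mathbf{x}}_i)^\top P_i^{-1}(\mathbf{x}-\hat{\mathbf{x}}_i)\right), \qquad (14)$$

where $\hat{\mathbf{x}}_i$ and $P_i$ denote the mean vector and the covariance matrix, respectively. In this case, the KLA can be obtained directly by operating on the means and the covariances instead of the probabilities. The following lemma states the KLA of Gaussian distributions.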

Lemma 1.

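([26]). Given the Gaussian distributions $p_i$ defined in (14) with corresponding weights $\pi_i$, the weighted KLA $\bar{p} = \mathcal{N}(\bar{\mathbf{x}},\bar{P})$ can be calculated by directly fusing the means and the covariance matrices as

$$\bar{P}^{-1} = \sum_{i=1}^{N}\pi_i P_i^{-1},\qquad \bar{\mathbf{x}} = \bar{P}\sum_{i=1}^{N}\pi_i P_i^{-1}\hat{\mathbf{x}}_i. \qquad (15)$$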

Lemma 1 indicates that the consensus/fusion of Gaussian probabilities can operate directly on their means and covariance matrices. Since the values of a GP at any finite set of inputs are jointly Gaussian, the KLA consensus/fusion of GPs can be obtained by fusing the mean functions and the covariance functions.
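The Gaussian KLA is the information-weighted fusion rule reported in [26]: the fused information matrix is the weighted sum of the local information matrices, and the fused mean follows from the weighted information vectors. A minimal multivariate NumPy sketch (the function name is hypothetical) is:

```python
import numpy as np

def gaussian_kla(means, covs, weights):
    """Weighted KLA of Gaussians N(m_i, P_i): weighted sum of information matrices and vectors."""
    infos = [np.linalg.inv(P) for P in covs]
    info_bar = sum(w * Om for w, Om in zip(weights, infos))
    vec_bar = sum(w * Om @ m for w, Om, m in zip(weights, infos, means))
    P_bar = np.linalg.inv(info_bar)
    return P_bar @ vec_bar, P_bar

m1, P1 = np.array([0.0, 0.0]), np.diag([1.0, 4.0])
m2, P2 = np.array([2.0, 1.0]), np.diag([1.0, 1.0])
m_bar, P_bar = gaussian_kla([m1, m2], [P1, P2], [0.5, 0.5])
```

Here the second coordinate of the fused mean (0.8) is pulled toward agent 2, whose variance in that coordinate is smaller, while the first coordinate is the plain average because both agents are equally certain there.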

2.5. Uniform Error Bounds

This section analyzes the probabilistic uniform error bounds.

Definition 5.

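(Probabilistic uniform error bound [31]). On a compact set $\mathcal{X}$, a GP with posterior mean $\mu(\cdot)$ has a uniformly bounded error if there exists a function $\eta(\cdot)$ such that $|f(\mathbf{x})-\mu(\mathbf{x})|\le\eta(\mathbf{x})$ for all $\mathbf{x}\in\mathcal{X}$. A probabilistic uniform error bound is one that holds with a probability of at least $1-\delta$ for any $\delta\in(0,1)$.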

Definition 6.

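(Lipschitz constant of the kernel [64]). The Lipschitz constant of a differentiable covariance kernel $k(\cdot,\cdot)$ is

$$L_k = \max_{\mathbf{x},\mathbf{x}'\in\mathcal{X}}\left\|\left[\frac{\partial k(\mathbf{x},\mathbf{x}')}{\partial x_1},\dots,\frac{\partial k(\mathbf{x},\mathbf{x}')}{\partial x_d}\right]^\top\right\|. \qquad (16)$$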

Next, we show that the posterior prediction (3) of the GP is continuous. Given the continuous unknown function $f$ with Lipschitz constant $L_f$ and a Lipschitz continuous kernel with Lipschitz constant $L_k$, we have the following theorem.

Theorem 1.

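([31]). Consider a GP defined by the continuous covariance kernel function $k(\cdot,\cdot)$ with Lipschitz constant $L_k$, a continuous unknown map $f$ with Lipschitz constant $L_f$, and $N$ measurements satisfying Assumption 1. Then, the posterior mean $\mu(\cdot)$ and standard deviation $\sigma(\cdot)$ of the GP conditioned on the training data set are continuous with Lipschitz constant $L_\mu$ and modulus of continuity $\omega_\sigma(\cdot)$ such that

$$L_\mu\le L_k\sqrt{N}\left\|\left(K(X,X)+\sigma_n^2 I_N\right)^{-1}\mathbf{y}\right\|, \qquad (17)$$

$$\omega_\sigma(\tau)\le\sqrt{2\tau L_k\left(1+N\left\|\left(K(X,X)+\sigma_n^2 I_N\right)^{-1}\right\|\max_{\mathbf{x},\mathbf{x}'\in\mathcal{X}}k(\mathbf{x},\mathbf{x}')\right)}. \qquad (18)$$

In addition, for $\delta\in(0,1)$ and $\tau>0$, let $\beta(\tau) = 2\log\frac{M(\tau,\mathcal{X})}{\delta}$ and $\gamma(\tau) = (L_\mu+L_f)\tau+\sqrt{\beta(\tau)}\,\omega_\sigma(\tau)$, where $M(\tau,\mathcal{X})$ is the $\tau$-covering number of $\mathcal{X}$. It follows that

$$P\left(|f(\mathbf{x})-\mu(\mathbf{x})|\le\sqrt{\beta(\tau)}\,\sigma(\mathbf{x})+\gamma(\tau),\ \forall\mathbf{x}\in\mathcal{X}\right)\ge 1-\delta. \qquad (19)$$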

Proof of Theorem 1.

The proof is given in Appendix A. □
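The quantities in Theorem 1 can be evaluated numerically. The sketch below assumes the bound takes the form reported in [31], namely $\beta(\tau) = 2\log(M(\tau,\mathcal{X})/\delta)$ and $\gamma(\tau) = (L_\mu+L_f)\tau+\sqrt{\beta(\tau)}\,\omega_\sigma(\tau)$. It further assumes a one-dimensional input set $\mathcal{X} = [a,b]$ whose $\tau$-covering number is taken as $\lceil(b-a)/(2\tau)\rceil$, an SE kernel whose Lipschitz constant is $s_f^2e^{-1/2}/\ell$, and a user-supplied $L_f$. All of these choices, and the function names, are illustrative assumptions.

```python
import math
import numpy as np

def se(A, B, ell, sf):
    return sf**2 * np.exp(-0.5 * (A[:, None] - B[None, :]) ** 2 / ell**2)

def uniform_error_bound(X, y, Xs, a, b, L_f, tau=1e-3, delta=0.01, ell=1.0, sf=1.0, sn=0.1):
    """Bound sqrt(beta) * sigma(x) + gamma at the test points Xs for 1-D inputs on [a, b]."""
    N = len(X)
    Ky_inv = np.linalg.inv(se(X, X, ell, sf) + sn**2 * np.eye(N))
    Ks = se(X, Xs, ell, sf)
    mu = Ks.T @ Ky_inv @ y
    sigma = np.sqrt(np.maximum(sf**2 - np.sum(Ks * (Ky_inv @ Ks), axis=0), 0.0))
    L_k = sf**2 * math.exp(-0.5) / ell                   # max |dk/dx| of the 1-D SE kernel
    L_mu = L_k * math.sqrt(N) * np.linalg.norm(Ky_inv @ y)
    max_k = sf**2                                        # max of the SE kernel
    omega = math.sqrt(2 * tau * L_k * (1 + N * np.linalg.norm(Ky_inv, 2) * max_k))
    M = math.ceil((b - a) / (2 * tau))                   # tau-covering number of [a, b]
    beta = 2 * math.log(M / delta)
    gamma = (L_mu + L_f) * tau + math.sqrt(beta) * omega
    return mu, math.sqrt(beta) * sigma + gamma, beta, gamma

X = np.linspace(0.0, 5.0, 20)
y = np.sin(X)
Xs = np.linspace(0.0, 5.0, 101)
mu, bound, beta, gamma = uniform_error_bound(X, y, Xs, 0.0, 5.0, L_f=1.0)
```

With these numbers the bound is much larger than the actual error of the posterior mean, which illustrates that such worst-case probabilistic bounds are typically conservative.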

Asymptotic Analysis

The asymptotic behavior of the error bound (19) in the limit $N\to\infty$ is given by the following theorem.

Theorem 2.

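([31]). Consider a GP defined by the continuous covariance kernel function $k(\cdot,\cdot)$ with Lipschitz constant $L_k$, and an infinite data stream of measurements of the continuous unknown map $f$ with Lipschitz constant $L_f$ and maximum absolute value $\bar{f}$ on $\mathcal{X}$. The first $N$ measurements inform the posterior predictions of the GP, denoted by $\mu_N(\cdot)$ and $\sigma_N(\cdot)$. If there exists an $\epsilon>0$ such that $\sigma_N(\mathbf{x})\in\mathcal{O}\left(\log(N)^{-\frac{1}{2}-\epsilon}\right)$ for all $\mathbf{x}\in\mathcal{X}$, then for any $\delta\in(0,1)$ it follows that

$$P\left(\sup_{\mathbf{x}\in\mathcal{X}}|\mu_N(\mathbf{x})-f(\mathbf{x})|\in\mathcal{O}\left(\log(N)^{-\epsilon}\right)\right)\ge 1-\delta. \qquad (20)$$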

Proof of Theorem 2.

The proof is given in Appendix B. □

3. Problem Formulation

The trajectories are generated by a continuous-time dynamical system

where in a compact set, denotes the state (location), denotes the control input, denotes the process noise with and the initial state is . We have agents/sensors connected with a network to acquire measurements (location or velocity). In particular, sensor measures

where is the observed vector of sensor at the -th step , is the measurement noise with .

Suppose that a training data set of trajectories is given. contains the state (current location) and the measurement, which is denoted by The nonlinear map function is unknown and is assumed to be a Gaussian process. In addition, the following assumption is satisfied.

Assumption 2.

Suppose the measurement is Lipschitz continuous and has a bounded RKHS (reproducing kernel Hilbert space) norm with respect to a fixed common kernel.

The objective is to find an estimate of the unknown dynamics for which the output trajectory x tracks the desired trajectory such that the tracking error vanishes over time. Since the noises and the uncertain dynamics affect the system and the control, we use multiple agents to eliminate the influence of stochastic uncertainty: given the local estimates of all agents, the goal is also to fuse them into a fused/consensus estimate such that the uncertainty also vanishes over time.

4. Control Design and Analysis

Classical control uses static feedback gains. Low feedback gains are desirable to avoid actuator saturation and to achieve good noise suppression. However, the considered unknown dynamics require a minimum feedback gain to keep the tracking error under a defined limit. After the training procedure, we use the mean function of the GP to adapt the gains. For this purpose, the uncertainty of the GP and the multiple agents are employed to scale the feedback gains.

Before proceeding, the following natural assumptions and lemmas are given.

Assumption 3.

The desired trajectory is bounded.

Lemma 2.

([67]) If there exists a positive constant such that , and there exists a function satisfying , then we have that , i.e., the trajectory of the dynamics (21) is globally ultimately bounded.

Lemma 3.

([67]) If there exists a Lyapunov function such that for all , the dynamical system is globally ultimately bounded to a set .

Next, we design the controller and the control law such that stability and high-performance tracking are achieved. The controller is designed as

where is the model estimation of nonlinear dynamics and is obtained by utilizing the posterior mean function of GP trained by the data set , and is the control law.

In addition, is designed as a Proportional–Derivative (PD) type controller

where is the filtered state with , , and the control gain .
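The closed-loop behavior of this kind of controller can be illustrated on a one-degree-of-freedom example. The sketch assumes a second-order system $\ddot{x} = f(x,\dot{x}) + u$, a filtered error $r = \dot{e}+\lambda e$ with $e = x - x_d$, and the feedback-linearizing law $u = -\hat{f} + \ddot{x}_d - \lambda\dot{e} - k_c r$, which gives $\dot{r} = f - \hat{f} - k_c r$. The dynamics, the 10% model error standing in for the GP posterior mean, and the gains are hypothetical choices, not the paper's exact controller.

```python
import numpy as np

def f_true(x, v):
    return -2.0 * np.sin(x) - 0.5 * v       # hypothetical unknown dynamics

def f_hat(x, v):
    return 0.9 * f_true(x, v)               # stand-in for the GP posterior mean (10% model error)

def simulate(lam=5.0, kc=20.0, dt=1e-3, T=10.0, x0=0.5, v0=0.0):
    """Explicit-Euler simulation of x'' = f(x, x') + u tracking x_d(t) = sin(t)."""
    x, v = x0, v0
    errs = []
    for k in range(int(round(T / dt))):
        t = k * dt
        xd, vd, ad = np.sin(t), np.cos(t), -np.sin(t)
        e, de = x - xd, v - vd
        r = de + lam * e                    # filtered tracking error
        u = -f_hat(x, v) + ad - lam * de - kc * r
        a = f_true(x, v) + u                # closed loop: r' = f - f_hat - kc * r
        x, v = x + dt * v, v + dt * a
        errs.append(e)
    return np.array(errs)

errs = simulate()
print(abs(errs[-1]) < 0.01)  # True
```

In this setting the residual tracking error is roughly bounded by $\max|f-\hat{f}|/(k_c\lambda)$, so either a more accurate model estimate or a larger gain $k_c$ shrinks the ultimate bound.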

Given the above controller, one needs to verify the effectiveness of the model estimation and the choices of the parameters and . The following theorem states the control law with guaranteed boundedness of the tracking error.

Theorem 3.

Consider the system (23), where f satisfies Assumption 2 and admits a Lipschitz constant. If Assumption 3 is satisfied, then the controller with and the control law guarantee that the tracking error is globally ultimately bounded and converges to a ball, where and are given in Theorem 1, with a probability of at least .

Proof of Theorem 3.

The proof is given in Appendix C. □

Remark 1.

From Theorem 3, it can be seen that trajectory tracking is achieved with high probability by the proposed GP-based controller. Compared with most existing results, where only uniform ultimate boundedness of the trajectory tracking errors was achieved [1,68], the proposed control law ensures high control precision in the presence of GP estimation errors.

Proof of Remark 1.

The proof is given in Appendix C. □

4.1. Consensus

The aforementioned control law focuses on one agent/sensor. Since the noises affect the measurements and the dynamical system, the proposed controller will fluctuate across agents. Furthermore, due to the uncertainty of the dynamics, the proposed controller may also differ across agents. Therefore, this section fuses the local results and drives them to a consensus: given the local GP predictions (means and variances) of all agents, the goal is to find a fused/consensus prediction such that the uncertainty and the disturbance vanish over time. Consequently, the controller (23) and the control law (24) of different agents can reach a consensus.

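More specifically, after training its local GP, node $i$ sends the result to its neighbors $j\in\mathcal{N}_i$. After collecting the training results from its neighbors, it performs the following dynamic consensus/fusion step. Given weights $\pi_{i,j}\ge 0$ satisfying $\sum_{j\in\mathcal{N}_i\cup\{i\}}\pi_{i,j} = 1$ and based on the Kullback–Leibler average consensus given in Section 2.4, the desired weighted KLA takes the Gaussian form $\mathcal{N}(\bar{\mu}_i(\mathbf{x}),\bar{\sigma}_i^2(\mathbf{x}))$, in which the fused mean function and the fused covariance function can be calculated by

$$\bar{\sigma}_i^{-2}(\mathbf{x}) = \sum_{j\in\mathcal{N}_i\cup\{i\}}\pi_{i,j}\,\sigma_j^{-2}(\mathbf{x}),\qquad \bar{\mu}_i(\mathbf{x}) = \bar{\sigma}_i^{2}(\mathbf{x})\sum_{j\in\mathcal{N}_i\cup\{i\}}\pi_{i,j}\,\sigma_j^{-2}(\mathbf{x})\,\mu_j(\mathbf{x}), \qquad (25)$$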

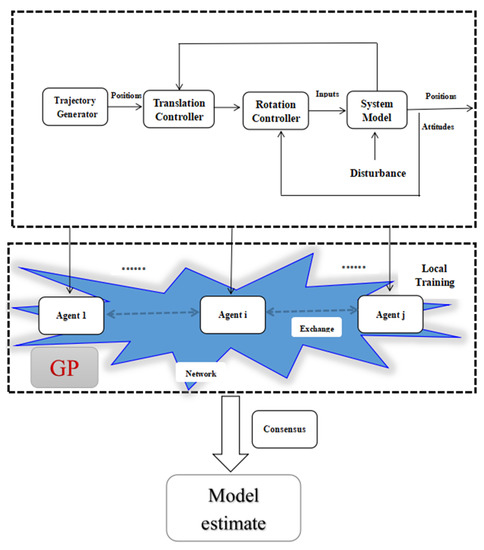

while the global/centralized fusion uses uniform weights $1/N$ over all $N$ agents. The flowchart is given in Figure 1.

Figure 1.

The flowchart of consensus/fusion.
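The fusion step is applied pointwise to the local GP predictions. In the sketch below, three hypothetical agents each train a zero-mean GP with an SE kernel on a disjoint third of noisy samples of a test function, and their predictive means and variances are fused with uniform weights 1/3. The data, kernel, and weights are assumptions for illustration; in a networked setting, the same rule would be applied with neighbor weights $\pi_{i,j}$ and iterated.

```python
import numpy as np

def se(A, B, ell=1.0):
    return np.exp(-0.5 * (A[:, None] - B[None, :]) ** 2 / ell**2)

def local_gp(X, y, Xs, sn=0.1):
    """Zero-mean GP posterior mean/variance of one agent trained on its own data."""
    Ky = se(X, X) + sn**2 * np.eye(len(X))
    Ks = se(X, Xs)
    mean = Ks.T @ np.linalg.solve(Ky, y)
    var = 1.0 - np.sum(Ks * np.linalg.solve(Ky, Ks), axis=0)
    return mean, var

def kla_fuse(means, variances, weights):
    """Pointwise KLA of Gaussian predictions: precision-weighted fusion."""
    prec = sum(w / v for w, v in zip(weights, variances))
    mean = sum(w * m / v for w, m, v in zip(weights, means, variances)) / prec
    return mean, 1.0 / prec

rng = np.random.default_rng(0)
X_all = np.sort(rng.uniform(0.0, 6.0, 60))
y_all = np.sin(X_all) + 0.1 * rng.normal(size=60)
Xs = np.linspace(0.5, 5.5, 11)
preds = [local_gp(X_all[i::3], y_all[i::3], Xs) for i in range(3)]  # 3 agents, disjoint data
m_fused, v_fused = kla_fuse([p[0] for p in preds], [p[1] for p in preds], [1 / 3] * 3)
```

Because the fused precision is a weighted average of the local precisions, an agent that is confident at a given input dominates the fused prediction there.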

After obtaining the consensus mean function, the controllers of different agents can be unified into a single controller.

Remark 2.

The main advantage of the distributed method is that each local node may receive only part of the training data, or even have missing data. Through information exchange and the consensus algorithm, neighboring nodes can make predictions faster while keeping high accuracy, which also avoids failures caused by data loss or node/sensor failure.

4.2. GP-Based Model Predictive Control for Discrete-Time System

The above discussion concerns the continuous-time system. In actual physical systems, the system usually needs to be discretized. This section designs the control strategy for the discrete-time system by using GP-based model predictive control (MPC).

First, the considered system (21) is assumed to be discrete-time and can be modeled by a GP, where the state-control tuple and the state difference are used, respectively, as the training input and the training target. Given the training data set, according to (4) and (5), we can obtain the mean function and covariance function at a new test input as follows

where , , and is defined in (4). Therefore, (26) is used to predict the next step. By using the moment matching approach [46], the mean function and covariance function of the training target at time k can be calculated by

At time , the mean and covariance functions are updated as

For more details, please refer to [46].
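The learning setup described above (state-control tuple as input, state difference as target) can be sketched as follows. This example rests on several assumptions: the scalar dynamics, the training grid, the SE kernel hyper-parameters, and the constant control sequence are hypothetical, and the multi-step prediction only propagates the posterior mean rather than performing the moment matching of [46].

```python
import numpy as np

def step_true(x, u):
    return x + 0.1 * (-np.sin(x) + u)      # hypothetical unknown discrete-time dynamics

def se(A, B, ell=1.0):
    d2 = np.sum(A**2, 1)[:, None] + np.sum(B**2, 1)[None, :] - 2.0 * A @ B.T
    return np.exp(-0.5 * np.maximum(d2, 0.0) / ell**2)

# training inputs (x_k, u_k) on a grid, targets Delta_k = x_{k+1} - x_k
xg, ug = np.meshgrid(np.linspace(-2, 2, 10), np.linspace(-1, 1, 10))
Z = np.column_stack([xg.ravel(), ug.ravel()])
D = step_true(Z[:, 0], Z[:, 1]) - Z[:, 0]
sn = 1e-2
alpha = np.linalg.solve(se(Z, Z) + sn**2 * np.eye(len(Z)), D)

def predict_delta(x, u):
    """GP posterior mean of the state difference at a single (x, u)."""
    return (se(np.array([[x, u]]), Z) @ alpha)[0]

def rollout(x0, controls, model):
    xs = [x0]
    for u in controls:
        xs.append(model(xs[-1], u))
    return np.array(xs)

controls = [0.5] * 10
x_gp = rollout(0.3, controls, lambda x, u: x + predict_delta(x, u))
x_true = rollout(0.3, controls, step_true)
```

Learning the difference $x_{k+1}-x_k$ rather than $x_{k+1}$ itself keeps the target small and smooth, which is why this parameterization is commonly used for GP dynamics models.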

Then, based on (6), we next attempt to learn the hyper-parameters . A distributed GP-based MPC scheme is presented to address this problem. First, we design the objective function as

where the cost function is

where is the desired trajectory (desired state), and are positive definite weight matrices, and is the prediction horizon and also the control horizon. According to GP in Section 2, (30) can be rewritten as

Next, to address the optimization problem (29), a gradient-based method is used. Set and . Using the chain rule, the gradient can be calculated by

where are easy to calculate. In addition,

where are easy to calculate.
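When the predicted states are Gaussian, a quadratic stage cost can be evaluated in expectation in closed form, since $\mathbb{E}[(\mathbf{x}-\mathbf{x}_d)^\top Q(\mathbf{x}-\mathbf{x}_d)] = (\boldsymbol{\mu}-\mathbf{x}_d)^\top Q(\boldsymbol{\mu}-\mathbf{x}_d)+\mathrm{tr}(Q\Sigma)$ for $\mathbf{x}\sim\mathcal{N}(\boldsymbol{\mu},\Sigma)$. The sketch below assumes a cost of the form $\sum_k\big[(\mathbf{x}_k-\mathbf{x}_{d,k})^\top Q(\mathbf{x}_k-\mathbf{x}_{d,k})+\mathbf{u}_k^\top R\mathbf{u}_k\big]$ over the horizon; this form and the function name are assumptions rather than the paper's exact cost.

```python
import numpy as np

def expected_cost(mus, Sigmas, x_des, us, Q, R):
    """Expected quadratic MPC cost for Gaussian predicted states N(mu_k, Sigma_k)."""
    J = 0.0
    for mu, S, xd, u in zip(mus, Sigmas, x_des, us):
        e = mu - xd
        J += e @ Q @ e + np.trace(Q @ S) + u @ R @ u   # mean error + uncertainty penalty + effort
    return J

Q, R = np.diag([1.0, 2.0]), np.array([[0.1]])
mus = [np.array([1.0, 0.0]), np.array([0.5, 0.5])]
Sigmas = [0.1 * np.eye(2), np.array([[0.2, 0.05], [0.05, 0.1]])]
x_des = [np.zeros(2), np.zeros(2)]
us = [np.array([1.0]), np.array([-2.0])]
J = expected_cost(mus, Sigmas, x_des, us, Q, R)
```

The trace term means that uncertain predictions are penalized even when their mean is on target, which pushes the optimizer toward regions where the GP model is well informed.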

Finally, the gradient-based algorithm is formulated as Algorithm 1.

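| Algorithm 1 Gradient-based optimization method |
|---|
| Input: learned GP and the parameters of the cost function |
| Output: optimal control |
| 1: Initialization: maximum iteration number, threshold, initial input, and optimal control; |
| 2: for each iteration up to the maximum iteration number do |
| 3: if the stopping criterion with the given threshold is met then |
| 4: set the optimal control to the current control; |
| 5: exit the loop; |
| 6: else |
| 7: calculate the gradient by (32); |
| 8: update the search step size based on [69]; |
| 9: update the control along the negative gradient with this step size; |
| 10: end if; end for |
| 11: return the optimal control. |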
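The loop in Algorithm 1 can be sketched as plain gradient descent with a backtracking (Armijo) step-size rule. The sketch is illustrative: a hypothetical scalar linear model stands in for the learned GP mean, the gradient is approximated by central finite differences instead of the chain-rule expressions above, and the Armijo rule is only one possible choice for the step-size update.

```python
import numpy as np

def rollout_cost(u, x0, x_des, model, q=1.0, r=0.1):
    """Quadratic tracking cost of a control sequence u under a one-step model."""
    x, J = x0, 0.0
    for k, uk in enumerate(u):
        x = model(x, uk)
        J += q * (x - x_des[k]) ** 2 + r * uk ** 2
    return J

def num_grad(f, u, h=1e-6):
    """Central finite-difference gradient of f at u."""
    g = np.zeros_like(u)
    for i in range(u.size):
        e = np.zeros_like(u)
        e[i] = h
        g[i] = (f(u + e) - f(u - e)) / (2 * h)
    return g

def optimize_controls(f, u0, max_iter=5000, eps=1e-6):
    u = np.array(u0, dtype=float)
    for _ in range(max_iter):
        g = num_grad(f, u)
        if np.linalg.norm(g) < eps:                           # stopping criterion (threshold eps)
            break
        step, J = 1.0, f(u)
        while f(u - step * g) > J - 1e-4 * step * (g @ g):   # Armijo backtracking
            step *= 0.5
        u = u - step * g                                      # move along the negative gradient
    return u

def model(x, u):
    return 0.9 * x + 0.5 * u          # hypothetical stand-in for the GP mean model

H, x0 = 10, 0.0
x_des = np.ones(H)

def f(u):
    return rollout_cost(u, x0, x_des, model)

u_opt = optimize_controls(f, np.zeros(H))
```

In a receding-horizon implementation, only the first element of the optimized sequence would be applied before the optimization is repeated at the next time step.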

Remark 3.

Similarly, the stochastic uncertainty caused by the noises and model perturbations can be addressed by using multiple agents. The consensus/fusion algorithm is the same as that for the continuous-time system given above, so it is not repeated here.

Remark 4.

GP has been widely applied in various real-world applications such as quadrotor tracking, 3D people tracking, localization and mapping, and control-based application models, which have attracted much attention from engineers and researchers. Regarding limitations, in our opinion, the first is that the model needs real-world data to achieve good training and application performance. The second is that the dynamics must be Gaussian or approximately Gaussian distributed.

5. Simulations

To evaluate the performance and to verify the effectiveness of the proposed algorithms, this section provides two trajectory tracking examples, where one is the trajectory tracking of a robotic manipulator and the other one is the trajectory tracking of an unmanned quadrotor. All simulations are conducted on a computer with 2.6 GHz Intel(R) Core(TM) i7-5600U CPU and MATLAB R2015b.

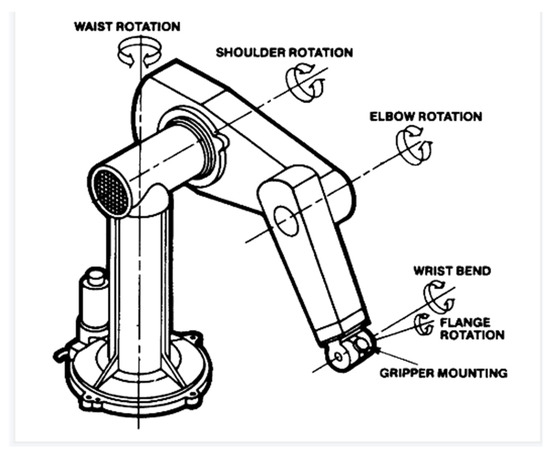

5.1. Trajectory Tracking of Robotic Manipulator

First, we consider the trajectory of a Puma 560 robot arm manipulator in three-dimensional ($x$-$y$-$z$) space with 6 degrees of freedom (DoFs), as shown in Figure 2. The Puma 560 robot was designed to have approximately the dimensions and reach of a human worker. It also has a spherical joint at the wrist, just as humans do. Roboticists use terms like waist, shoulder, elbow, and wrist when describing serial link manipulators. For the Puma, these terms correspond, respectively, to joints 1, 2, 3, and 4–6, as shown in Figure 2.

Figure 2.

The diagram of the Puma 560 robot arm manipulator (6 DoFs).

For the considered robot arm, , , and are the control torques of the motors controlling the joint angles , , . The trajectory of the robotic manipulator can be controlled by these torques. The motion can be described by the following Lagrangian system [1]

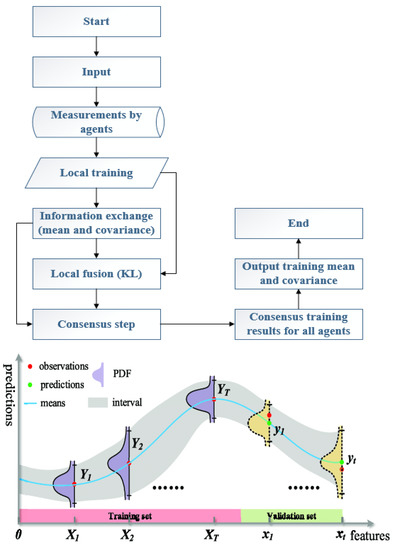

Figure 3.

The process methodology and flowchart.

The tracking error is the difference between the actual joint angle or velocity and its desired value:

The following controllers are tested. (1) Computed torque (CT) controller: . The gains for this controller are = 50 and = 40. (2) The proposed PD controller (24): the composite error is λ, a reference velocity is , and the control torque is . The gains for this controller are λ = 30 and = 20. (3) The adaptive controller: the control torque is =, where , , , are the unknown parameters and are the regressor vectors defined as in [70]. =, =, =, =, where , , , are controller gains, with = 50, = 30, = 20, = 50, and = 20.

Data. Speeds: 5 s, 10 s, 15 s, and 20 s completion times; 4 paths × 4 speeds with 16 different trajectories; 15 loads (0.2 kg…3.0 kg), various shapes and sizes; 10 agents. Training Data. One desired trajectory common to handling of all loads; one trajectory has no data for any context; sixteen unique training trajectories, one for each load. Test Data. Interpolation data sets for testing on reference trajectory and the unique trajectory for each load. Extrapolation data sets for testing on all trajectories.

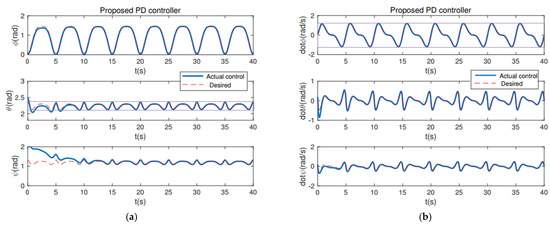

From Figure 4, it can be seen that the PD controller is able to hold the robot arm at the desired joint angles; λ = 30 and = 20 are the gains needed to hold the respective positions. Convergence is achieved in about 10 s. The first couple of runs of the robot can be used for tuning, and the robot should have good repeatability afterwards. In addition, the position and velocity plots of the computed torque controller (Figure 5) and the adaptive controller (Figure 6) show that both controllers also achieve convergence to the desired values. However, the proposed PD torque controller converges faster and uses smaller gains than the computed torque controller. The errors in Table 1 further verify its effectiveness. Therefore, the proposed PD torque controller is better suited to the considered application. In addition, the results show that the distributed GP can effectively suppress the uncertainty and disturbance caused by the system model and the noises.

Figure 4.

The proposed PD controller: (a) Position Plot; (b) Velocity Plot.

Figure 5.

The CT controller: (a) Position Plot; (b) Velocity Plot.

Figure 6.

The Adaptive controller: (a) Position Plot; (b) Velocity Plot.

Table 1.

Mean absolute error.

For further comparison with multi-agent processing methods, the following approaches are tested: (1) independent GP (IGP), in which a model is trained independently for each input [6]; (2) combined GP (CGP), in which one agent trains a GP on the data combined across inputs [34]; and (3) the proposed distributed GP with the Bayesian information criterion (BIC). Figure 7 shows the normalized mean square error (NMSE) of the training results, in both the interpolation and the extrapolation settings, versus the number of training points. Note that IGP and CGP, i.e., the existing GPs, are centralized methods, whereas the proposed GP is trained in a distributed manner. The first row of Figure 7 shows the interpolation results for the three methods. For joint 1, the proposed distributed GP achieves the best performance for any number of training points. For joints 4 and 6, the performance of the proposed GP is close to that of IGP and better than that of CGP as the number of training points increases. The second row of Figure 7 shows the extrapolation results. For joint 1, the proposed distributed GP achieves the best performance for small numbers of training points (<500); when the number of training points increases further, CGP performs better than the proposed distributed GP, although the two are very close. For joints 4 and 6, the performance of the proposed GP is again close to that of IGP and better than that of CGP as the number of training points increases. In summary, the proposed distributed GP reaches the performance of the centralized methods and is close to, and in some cases better than, the existing state-of-the-art centralized multiple combined and multi-task methods.

Figure 7.

Training results using different methods.
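The two evaluation quantities named in the comparison, NMSE and BIC, can be computed as follows. This is a minimal sketch on synthetic 1-D data, assuming the common definitions NMSE = MSE / Var(y) and BIC = -2 log L + k log n with the GP log marginal likelihood as L; the squared-exponential kernel, the hyperparameter values, and the data are illustrative assumptions, and how the paper applies BIC inside the distributed GP is not reproduced here.

```python
import numpy as np


def nmse(y_true, y_pred):
    """Normalized MSE: MSE divided by the variance of the targets, so that
    always predicting the target mean gives NMSE = 1."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2) / np.var(y_true))


def gp_log_marginal_likelihood(x, y, lengthscale, signal_var, noise_var):
    """Log marginal likelihood of a zero-mean GP with a 1-D squared-exponential
    kernel, computed via a Cholesky factorization K = L L^T."""
    sq_dist = (x[:, None] - x[None, :]) ** 2
    K = signal_var * np.exp(-0.5 * sq_dist / lengthscale**2) + noise_var * np.eye(len(x))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))  # alpha = K^{-1} y
    return float(-0.5 * y @ alpha - np.sum(np.log(np.diag(L))) - 0.5 * len(x) * np.log(2.0 * np.pi))


def bic(log_likelihood, n_params, n_samples):
    """Bayesian information criterion (lower is better): -2 log L + k log n."""
    return -2.0 * log_likelihood + n_params * np.log(n_samples)


rng = np.random.default_rng(0)
x = np.linspace(0.0, 5.0, 50)
y = np.sin(x) + 0.1 * rng.standard_normal(x.size)
# Two candidate hyperparameter settings, each with 3 hyperparameters.
bic_smooth = bic(gp_log_marginal_likelihood(x, y, 1.0, 1.0, 0.01), 3, x.size)
bic_rough = bic(gp_log_marginal_likelihood(x, y, 0.05, 1.0, 0.01), 3, x.size)
print(bic_smooth < bic_rough)
```

When the candidates have the same number of hyperparameters, the BIC ranking coincides with the ranking by marginal likelihood; the k log n term only matters when comparing models of different complexity.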

5.2. Trajectory Tracking of an Unmanned Quadrotor

This section tests the proposed distributed GP-based model predictive control (GPMPC) on an unmanned quadrotor. The trajectory of the unmanned quadrotor is generated by a discrete-time Euler–Lagrange dynamical system [2]. The goal is to track its positions (X, Y, Z) and Euler angles (roll, pitch, and yaw). For comparison with state-of-the-art controllers, the efficient MPC (EMPC) [71] and the efficient nonlinear MPC (ENMPC) [72] are also tested in the simulations. The parameters are selected as = 5 and .
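The receding-horizon principle behind the GPMPC can be illustrated with a minimal, unconstrained sketch. For illustration only, we assume a known linear double-integrator model in place of the learned GP prediction model, a quadratic tracking cost with input penalty `rho`, and a horizon of 20 steps; none of these are the paper's settings. At each step the stacked predictions Phi x0 + Gamma U are optimized by least squares, and only the first input is applied.

```python
import numpy as np


def prediction_matrices(A, B, horizon):
    """Stacked predictions [x_1; ...; x_N] = Phi @ x_0 + Gamma @ [u_0; ...; u_{N-1}]."""
    n, m = B.shape
    Phi = np.vstack([np.linalg.matrix_power(A, i + 1) for i in range(horizon)])
    Gamma = np.zeros((horizon * n, horizon * m))
    for i in range(horizon):
        for j in range(i + 1):
            Gamma[i * n:(i + 1) * n, j * m:(j + 1) * m] = np.linalg.matrix_power(A, i - j) @ B
    return Phi, Gamma


def mpc_input(A, B, x0, x_ref, horizon=20, rho=1e-2):
    """Unconstrained MPC: minimize sum ||x_k - x_ref||^2 + rho * ||u_k||^2 over the
    horizon and return only the first input (receding horizon)."""
    Phi, Gamma = prediction_matrices(A, B, horizon)
    target = np.tile(x_ref, horizon)
    H = Gamma.T @ Gamma + rho * np.eye(Gamma.shape[1])
    U = np.linalg.solve(H, Gamma.T @ (target - Phi @ x0))  # normal equations of the least-squares cost
    return U[: B.shape[1]]


dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])  # double integrator: position, velocity
B = np.array([[0.5 * dt**2], [dt]])
x = np.array([0.0, 0.0])
x_ref = np.array([1.0, 0.0])
for _ in range(300):
    u = mpc_input(A, B, x, x_ref)
    x = A @ x + B @ u
print(x)
```

In a GP-based MPC, the prediction step would use the GP posterior mean (and possibly its variance) instead of `A` and `B`, which generally turns the least-squares step into a nonlinear program.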

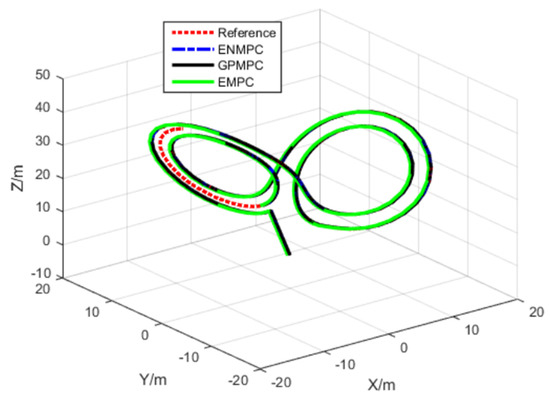

In the first scenario, the unmanned quadrotor tracks a “Lorenz” trajectory with Gaussian white noise (zero mean and unit variance), which is shown in Figure 8. To train the system model, we use the efficient MPC design proposed in [71]. One hundred seventy measurements, states, and controls are used to train the GP. The data from the rotational system lie in the range [0, 1], the angle lies in the range , and the input lies in the range . Training the GP takes 5 s with 10 agents. The resulting mean squared errors (MSEs) are small: the MSE for the positions is close to that of the stable GPMPC (GPMPC1) [73], with ; the MSE for the angles is also close to that of GPMPC1, with . This indicates that the proposed distributed GP (GPMPC2) is efficient and well trained, as illustrated in Figure 9 for different confidence levels. Note that GPMPC1 is a recent state-of-the-art method and is designed for a single agent. The closeness of the two training results therefore indicates that the proposed distributed GP achieves good training performance.

Figure 8.

Lorenz trajectory tracking.

Figure 9.

Training performance with 60%, 80%, and 90% confidence: (a) ; (b) .
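The confidence bands in Figure 9 and the MSE values can be reproduced from any GP prediction (mean and standard deviation) as sketched below. This is a minimal illustration assuming two-sided Gaussian bands mean ± z·std; the synthetic, well-calibrated predictions are our assumption and are not the quadrotor data.

```python
from statistics import NormalDist

import numpy as np


def z_value(level):
    """Two-sided Gaussian quantile z such that P(|Z| <= z) = level."""
    return NormalDist().inv_cdf(0.5 + level / 2.0)


def mse_and_coverage(y_true, mean, std, level):
    """MSE of the GP mean and the fraction of targets inside mean ± z * std."""
    y_true, mean, std = (np.asarray(a, dtype=float) for a in (y_true, mean, std))
    z = z_value(level)
    inside = np.abs(y_true - mean) <= z * std
    return float(np.mean((y_true - mean) ** 2)), float(np.mean(inside))


for level in (0.6, 0.8, 0.9):
    print(level, round(z_value(level), 4))

# Synthetic, well-calibrated predictions: targets drawn from N(mean, std^2).
rng = np.random.default_rng(0)
mean = np.zeros(100_000)
std = np.full_like(mean, 0.2)
y_true = mean + std * rng.standard_normal(mean.size)
mse, coverage = mse_and_coverage(y_true, mean, std, 0.9)
```

For a calibrated GP, the empirical coverage should be close to the nominal level; a coverage well below it signals overconfident variances.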

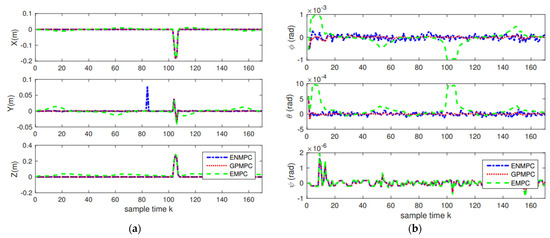

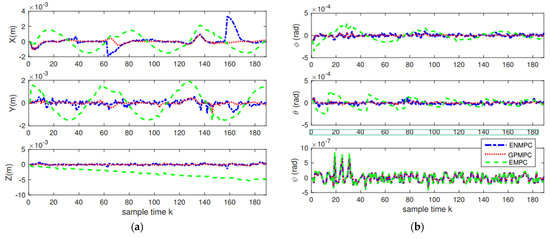

The position and attitude tracking results are shown in Figure 10, and the tracking errors are shown in Figure 11 and Table 2. Figure 10 and Figure 11 show that the proposed distributed GP learns the system model well and tracks the trajectories with high precision, close to that of the state-of-the-art controllers. This also indicates that, once training sets are available, the trajectory can be tracked without prior knowledge of the model (model-free), i.e., the proposed GP learns the system model well. In addition, introducing multiple agents suppresses the effects of model uncertainties and noise disturbances.

Figure 10.

Tracking results: (a) Positions tracking; (b) Attitudes tracking.

Figure 11.

Tracking errors: (a) Positions errors; (b) Attitudes errors.

Table 2.

Training errors and tracking errors.

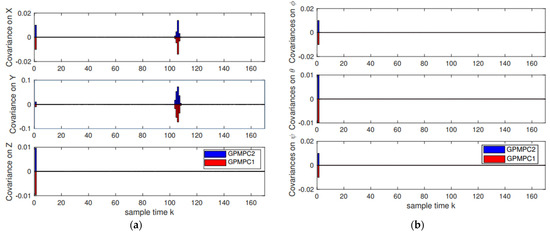

Furthermore, the position and attitude covariances of the different GP models (the stable GPMPC (GPMPC1) [73] and the proposed distributed GPMPC (GPMPC2)) are displayed in Figure 12. The results indicate that the proposed distributed GP also reaches the performance of the state-of-the-art GP model.

Figure 12.

Covariance results: (a) Positions covariance; (b) Attitudes covariance.

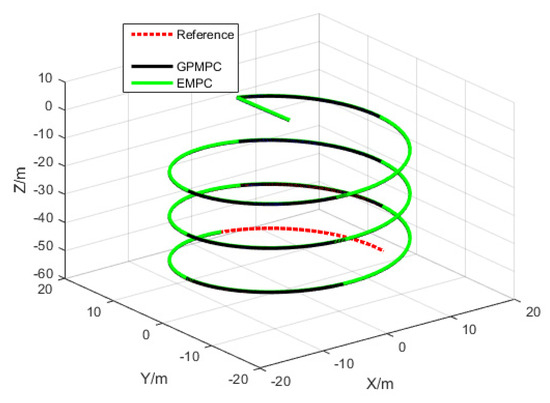

In the second scenario, the unmanned quadrotor tracks an “Elliptical” trajectory with Gaussian white noise (zero mean and unit variance), which is shown in Figure 13. The tracking performance and the tracking errors are shown in Figure 14, Figure 15, and Table 3. Figure 13, Figure 14 and Figure 15 show that the proposed distributed GP also learns the trajectory model effectively: the tracked trajectory is very close to the desired reference trajectory, and the performance is close to that of the state-of-the-art controllers. The covariance results of GPMPC1 and GPMPC2 are shown in Figure 16, which further verifies the effectiveness of the proposed distributed GP.

Figure 13.

Elliptical trajectory tracking.

Figure 14.

Tracking results: (a) Positions tracking; (b) Attitudes tracking.

Figure 15.

Tracking errors: (a) Positions errors; (b) Attitudes errors.

Table 3.

Training errors and tracking errors.

Figure 16.

Covariance results: (a) Positions covariance; (b) Attitudes covariance.

6. Conclusions

This paper used Gaussian processes to learn the trajectory model and proposed a distributed GP-based model learning strategy. For the continuous-time and discrete-time systems, we designed a GP-based PD controller and a GP-based MPC controller, respectively. To address model uncertainties and noise disturbances, a distributed multi-agent system was used to train the model. In addition, since data-driven algorithms need a large number of training sets, the distributed GP model can also address this problem by fusing the local predictions with a Kullback–Leibler average consensus fusion criterion.
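The Kullback–Leibler average consensus fusion named above can be sketched for scalar GP predictions (mean and variance) as consensus on information pairs, in the spirit of Battistelli and Chisci [26]. This is a minimal sketch under our own assumptions (a ring of 10 agents, Metropolis weights, a fixed number of iterations, synthetic local predictions), not the paper's implementation. Each agent repeatedly averages the inverse variance and the information-weighted mean with its neighbors; the ratio converges to the KL average of the local Gaussians.

```python
import numpy as np


def metropolis_weights(adjacency):
    """Symmetric, doubly stochastic consensus weights for an undirected graph."""
    A = np.array(adjacency, dtype=bool)
    np.fill_diagonal(A, False)
    degree = A.sum(axis=1)
    n = A.shape[0]
    W = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if A[i, j]:
                W[i, j] = 1.0 / (1.0 + max(degree[i], degree[j]))
        W[i, i] = 1.0 - W[i].sum()
    return W


def kla_consensus(means, variances, W, iterations=200):
    """Consensus on information pairs (1/var, mean/var); each agent's fused
    Gaussian converges to the KL average of the local Gaussians."""
    omega = 1.0 / np.asarray(variances, dtype=float)  # information (inverse variance)
    q = omega * np.asarray(means, dtype=float)         # information-weighted mean
    for _ in range(iterations):
        omega, q = W @ omega, W @ q                    # each agent averages with its neighbors
    return q / omega, 1.0 / omega


n_agents = 10
ring = np.zeros((n_agents, n_agents), dtype=bool)
for i in range(n_agents):
    ring[i, (i + 1) % n_agents] = ring[(i + 1) % n_agents, i] = True

rng = np.random.default_rng(0)
local_means = 1.0 + 0.1 * rng.standard_normal(n_agents)  # local GP predictive means
local_vars = rng.uniform(0.01, 0.05, n_agents)          # local GP predictive variances
fused_means, fused_vars = kla_consensus(local_means, local_vars, metropolis_weights(ring))

# Closed form of the KL average with uniform weights 1/n_agents.
kla_var = n_agents / np.sum(1.0 / local_vars)
kla_mean = kla_var * np.sum(local_means / local_vars) / n_agents
```

Averaging, rather than summing, the local information keeps the fused variance conservative, which avoids double counting information that neighboring agents share.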

Given training data sets, the proposed GP can handle practical model-free problems. Since the considered multi-agent system is interconnected and is used only to eliminate model uncertainties and noise disturbances, future research will focus on the efficiency of the distributed Gaussian process and the robustness of the multi-agent network. We will also focus on deploying the approach on unmanned aerial vehicles (UAVs) and on its use in UAV detection and localization. UAV racing is another challenging problem to address.

Author Contributions

Conceptualization, L.S. and D.X.; methodology, D.X.; software, D.X.; validation, D.X.; formal analysis, L.S. and D.X.; investigation, L.S. and D.X.; resources, D.X.; data curation, D.X.; writing—original draft preparation, D.X.; writing—review and editing, L.S. and D.X.; supervision, L.S.; funding acquisition, L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Shaanxi Provincial Fund under Grant 2020JM-185 and the National Natural Science Foundation of China under Grant 62171338.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Proof.

First, we derive the Lipschitz constant of and the modulus of continuity of . At two different states and , the norm of the difference of is with Then, based on the Lipschitz continuity of the kernel and the Cauchy–Schwarz inequality, we have which proves that is Lipschitz continuous.

Similarly, we have

Due to the Lipschitz continuity of kernel (also ), we have

Therefore, the modulus of continuity can be obtained by combining the above equations and taking the square root.

Finally, we prove the probabilistic uniform error bound. According to [74], for every grid with the -th number of grid points and it follows that
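The Lipschitz step of this proof can be checked numerically. The sketch below uses the posterior-mean bound in the form given by Lederer et al. [31], L_ν ≤ L_k √N ‖(K + σ_n² I)⁻¹ y‖, which follows from the triangle and Cauchy–Schwarz inequalities. The 1-D squared-exponential kernel (whose Lipschitz constant is σ_f² e^(-1/2) / ℓ), its hyperparameters, and the toy data are assumptions, and the symbols are ours rather than the paper's.

```python
import numpy as np


def se_kernel(a, b, lengthscale, signal_var):
    """1-D squared-exponential kernel matrix k(a_i, b_j)."""
    return signal_var * np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / lengthscale**2)


def fit_posterior_mean(x_train, y_train, lengthscale=0.5, signal_var=1.0, noise_var=0.01):
    """Return the GP posterior mean function and the bound
    L_nu <= L_k * sqrt(N) * ||(K + noise_var * I)^{-1} y||."""
    K = se_kernel(x_train, x_train, lengthscale, signal_var) + noise_var * np.eye(len(x_train))
    alpha = np.linalg.solve(K, y_train)
    # Lipschitz constant of x -> k(x, x') for the 1-D SE kernel: signal_var * e^{-1/2} / lengthscale
    l_k = signal_var * np.exp(-0.5) / lengthscale
    bound = l_k * np.sqrt(len(x_train)) * np.linalg.norm(alpha)

    def mean(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        return se_kernel(x, x_train, lengthscale, signal_var) @ alpha

    return mean, bound


rng = np.random.default_rng(1)
x_train = rng.uniform(-3.0, 3.0, 30)
y_train = np.sin(2.0 * x_train) + 0.1 * rng.standard_normal(30)
mean, lipschitz_bound = fit_posterior_mean(x_train, y_train)

# Empirical check: |nu(x) - nu(x')| / |x - x'| never exceeds the bound.
xa, xb = rng.uniform(-3.0, 3.0, (2, 5000))
ratios = np.abs(mean(xa) - mean(xb)) / np.abs(xa - xb)
print(ratios.max() <= lipschitz_bound)
```

The bound is typically loose by a large factor, because the Cauchy–Schwarz step replaces ‖α‖₁ with √N ‖α‖₂.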

Appendix B. Proof of Theorem 2

Proof.

According to Theorem 1, given and , it follows that

with a probability of at least . In addition, given the distance we can obtain a trivial bound Then, we can obtain that

On the one hand, Lipschitz constant is bounded by

On the other hand, since f is bounded by and is positive semi-definite, is bounded by

where the vector composed of the variables is a Gaussian disturbance with zero mean and covariance . This indicates that obeys a chi-square distribution, i.e., Note that with a probability of at least we have

Then, by using the union bounds over all and setting we can obtain that

with a probability of at least . Therefore, the Lipschitz constant of the posterior mean function has

In addition, since η_N increases logarithmically with the number of training samples , it follows that with a probability of at least .

Furthermore, based on (17) and , we can bound the modulus of continuity as

According to (37), the uniform bound holds with a probability of at least with

Note that for the error to vanish, the above expression must converge to 0 as . This indicates that decreases faster than . Therefore, we can set , and then we obtain . Furthermore, this indicates that . Therefore, there exists an such that , it follows that , which concludes the proof. □
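The chi-square step in this proof turns the squared norm of the Gaussian noise vector into a high-probability bound. The specific inequality used above is not reproduced here; as an illustrative assumption we use the Laurent–Massart tail bound, P(X ≥ D + 2√(Dx) + 2x) ≤ e^(-x) for X ~ χ²_D, with x = log(1/δ), and check it by Monte Carlo. The dimension, noise level, and δ are hypothetical.

```python
import numpy as np


def chi2_tail_bound(dof, delta):
    """Laurent–Massart: for X ~ chi^2(dof), P(X >= dof + 2*sqrt(dof*x) + 2*x) <= exp(-x).
    Choosing x = log(1/delta) gives a threshold exceeded with probability <= delta."""
    x = np.log(1.0 / delta)
    return dof + 2.0 * np.sqrt(dof * x) + 2.0 * x


def violation_rate(dof, delta, noise_std=0.3, n_samples=200_000, seed=0):
    """Monte Carlo frequency with which ||w||^2 / noise_std^2 exceeds the bound,
    for w ~ N(0, noise_std^2 I) in dof dimensions."""
    rng = np.random.default_rng(seed)
    w = noise_std * rng.standard_normal((n_samples, dof))
    scaled_sq_norms = np.sum(w**2, axis=1) / noise_std**2  # chi-square with dof degrees of freedom
    return float(np.mean(scaled_sq_norms >= chi2_tail_bound(dof, delta)))


rate = violation_rate(dof=20, delta=0.05)
print(rate, "<=", 0.05)
```

Because the threshold grows only like log(1/δ), a union bound over many grid points, as in the proof, costs just a logarithmic factor.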

Appendix C. Proof of Theorem 3

Proof.

According to Lemmas 2 and 3, and recalling that the noise w is a stationary Gaussian process, we use the following Lyapunov candidate .

, it follows that . According to Theorem 1, the model error is bounded with probability . According to Lemma 3, we can obtain the global ultimate boundedness. □

References

- Beckers, T.; Umlauft, J.; Kulic, D.; Hirche, S. Stable Gaussian process based tracking control of Lagrangian systems. In Proceedings of the 2017 IEEE 56th Annual Conference on Decision and Control (CDC), Melbourne, Australia, 12–15 December 2017; pp. 5180–5185.

- Corke, P.I.; Khatib, O. Robotics, Vision and Control: Fundamental Algorithms in MATLAB; Springer: Berlin/Heidelberg, Germany, 2011; Volume 73.

- Wang, D.; Mu, C. Adaptive-critic-based robust trajectory tracking of uncertain dynamics and its application to a spring-mass-damper system. IEEE Trans. Ind. Electron. 2018, 65, 654–663.

- Choi, J.; Jung, J.; Park, I. Area-efficient approach for generating quantized Gaussian noise. IEEE Trans. Circuits Syst. I Regul. Pap. 2016, 63, 1005–1013.

- Khansari-Zadeh, S.M.; Billard, A. Learning stable nonlinear dynamical systems with Gaussian mixture models. IEEE Trans. Robot. 2011, 27, 943–957.

- Choi, J. Data-aided sensing for Gaussian process regression in IoT systems. IEEE Internet Things J. 2021, 8, 7717–7726.

- Diaz-Rozo, J.; Bielza, C.; Larranaga, P. Clustering of data streams with dynamic Gaussian Mixture Models: An IoT application in industrial processes. IEEE Internet Things J. 2018, 5, 3533–3547.

- Sheng, H.; Xiao, J.; Cheng, Y.; Ni, Q.; Wang, S. Short-term solar power forecasting based on weighted Gaussian process regression. IEEE Trans. Ind. Electron. 2018, 65, 300–308.

- Wen, Y.; Li, G.; Wang, Q.; Guo, X.; Cao, W. Modeling and analysis of permanent magnet spherical motors by a multi-task Gaussian process method and finite element method for output torque. IEEE Trans. Ind. Electron. 2021, 68, 8540–8549.

- Jin, X. Fault tolerant nonrepetitive trajectory tracking for MIMO output constrained nonlinear systems using iterative learning control. IEEE Trans. Cybern. 2019, 49, 3180–3190.

- Fedele, G.; D’Alfonso, L. A kinematic model for swarm finite-time trajectory tracking. IEEE Trans. Cybern. 2019, 49, 3806–3815.

- Wilson, A.G.; Knowles, D.A.; Ghahramani, Z. Gaussian process regression networks. In Proceedings of the 29th International Conference on Machine Learning, Edinburgh, UK, 26 June–1 July 2012.

- Pillonetto, G.; Schenato, L.; Varagnolo, D. Distributed multi-agent Gaussian regression via finite-dimensional approximations. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2098–2111.

- Varagnolo, D.; Pillonetto, G.; Schenato, L. Distributed parametric and nonparametric regression with on-line performance bounds computation. Automatica 2012, 48, 2468–2481.

- Krivec, T.; Papa, G.; Kocijan, J. Simulation of variational Gaussian process NARX models with GPGPU. ISA Trans. 2021, 109, 141–151.

- Aman, K.; Kocijan, J. Application of Gaussian processes for black-box modelling of biosystems. ISA Trans. 2007, 46, 443–457.

- Hensman, J.; Durrande, N.; Solin, A. Variational Fourier features for Gaussian processes. J. Mach. Learn. Res. 2017, 18, 5537–5588.

- Damianou, A.C.; Titsias, M.K.; Lawrence, N.D. Variational inference for latent variables and uncertain inputs in Gaussian processes. J. Mach. Learn. Res. 2016, 17, 1425–1486.

- Meng, Z.; Lin, Z.; Ren, W. Robust cooperative tracking for multiple non-identical second-order nonlinear systems. Automatica 2013, 49, 2363–2372.

- Pu, S.; Yu, X.; Li, J. Distributed Kalman filter for linear system with complex multichannel stochastic uncertain parameter and decoupled local filters. Int. J. Adapt. Control. Signal Process. 2021, 35, 1498–1512.

- Yu, X.; Li, J. Adaptive Kalman filtering for recursive both additive noise and multiplicative noise. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 1634–1649.

- Huang, Y.; Meng, Z. Bearing-based distributed formation control of multiple vertical take-off and landing UAVs. IEEE Trans. Control. Netw. Syst. 2021, 8, 1281–1292.

- Yang, T.; Yi, X.; Wu, J.; Yuan, Y.; Wu, D.; Meng, Z.; Hong, Y.; Wang, H.; Lin, Z.; Johansson, K.H. A survey of distributed optimization. Annu. Rev. Control. 2019, 47, 278–305.

- Li, X.; Caimou, H.; Haoji, H. Distributed filter with consensus strategies for sensor networks. J. Appl. Math. 2013, 2013, 683249.

- Zhou, T. Coordinated one-step optimal distributed state prediction for a networked dynamical system. IEEE Trans. Autom. Control. 2013, 58, 2756–2771.

- Battistelli, G.; Chisci, L. Kullback-Leibler average, consensus on probability densities, and distributed state estimation with guaranteed stability. Automatica 2014, 50, 707–718.

- Umlauft, J.; Lederer, A.; Hirche, S. Learning stable Gaussian process state space models. In Proceedings of the 2017 American Control Conference (ACC), Seattle, WA, USA, 24–26 May 2017; pp. 1499–1504.

- Jagtap, P.; Pappas, G.J.; Zamani, M. Control barrier functions for unknown nonlinear systems using Gaussian processes. In Proceedings of the 2020 59th IEEE Conference on Decision and Control (CDC), Jeju Island, Korea, 14–18 December 2020; pp. 3699–3704.

- Pöhler, L.D.; Umlauft, J.; Hirche, S. Uncertainty-based Human Motion Tracking with Stable Gaussian Process State Space Models. IFAC-Pap. 2019, 51, 8–14.

- Umlauft, J.; Pöhler, L.D.; Hirche, S. An uncertainty-based control Lyapunov approach for control-affine systems modeled by Gaussian process. IEEE Control. Syst. Lett. 2018, 2, 483–488.

- Lederer, A.; Umlauft, J.; Hirche, S. Uniform error bounds for Gaussian process regression with application to safe control. Adv. Neural Inf. Process. Syst. 2019, 32, 659–669.

- Deisenroth, M.; Ng, J.W. Distributed Gaussian processes. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 1481–1490.

- Xie, A.; Yin, F.; Xu, Y.; Ai, B.; Chen, T.; Cui, S. Distributed Gaussian processes hyperparameter optimization for big data using proximal ADMM. IEEE Signal Process. Lett. 2019, 26, 1197–1201.

- Bonilla, E.V.; Chai, K.M.; Williams, C. Multi-task Gaussian process prediction. In Proceedings of the Advances in Neural Information Processing Systems 20 (NIPS 2007), Vancouver, BC, Canada, 3–5 December 2008; pp. 153–160.

- Alvarez, M.; Lawrence, N.D. Sparse convolved Gaussian processes for multi-output regression. In Proceedings of the Advances in Neural Information Processing Systems 21 (NIPS 2008), Vancouver, BC, Canada, 8 December 2009.

- Gal, Y.; van der Wilk, M.; Rasmussen, C.E. Distributed variational inference in sparse Gaussian process regression and latent variable models. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, Canada, 8–13 December 2014; pp. 3257–3265.

- Nerurkar, E.D.; Roumeliotis, S.I.; Martinelli, A. Distributed maximum a posteriori estimation for multi-robot cooperative localization. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 6 July 2009; pp. 1402–1409.

- Franceschelli, M.; Gasparri, A. On agreement problems with gossip algorithms in absence of common reference frames. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 15 July 2010; pp. 4481–4486.

- Cunningham, A.; Indelman, V.; Dellaert, F. DDF-SAM 2.0: Consistent distributed smoothing and mapping. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 5220–5227.

- Anderson, B.D.; Shames, I.; Mao, G.; Fidan, B. Formal theory of noisy sensor network localization. SIAM J. Discret. Math. 2010, 24, 684–698.

- Carron, A.; Todescato, M.; Carli, R.; Schenato, L. An asynchronous consensus-based algorithm for estimation from noisy relative measurements. IEEE Trans. Control. Netw. Syst. 2014, 1, 283–295.

- Thunberg, J.; Montijano, E.; Hu, X. Distributed attitude synchronization control. In Proceedings of the 2011 50th IEEE Conference on Decision and Control and European Control Conference, Orlando, FL, USA, 12–15 December 2011; pp. 1962–1967.

- Piovan, G.; Shames, I.; Fidan, B.; Bullo, F.; Anderson, B.D. On frame and orientation localization for relative sensing networks. Automatica 2013, 49, 206–213.

- Sarlette, A.; Sepulchre, R. Consensus optimization on manifolds. SIAM J. Control. Optim. 2009, 48, 56–76.

- Choudhary, S.; Carlone, L.; Nieto, C.; Rogers, J.; Christensen, H.I.; Dellaert, F. Distributed trajectory estimation with privacy and communication constraints: A two-stage distributed Gauss-Seidel approach. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 5261–5268.

- Deisenroth, M.P.; Fox, D.; Rasmussen, C.E. Gaussian processes for data-efficient learning in robotics and control. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 408–423.

- Robinson, D.R.; Mar, R.T.; Estabridis, K.; Hewer, G. An efficient algorithm for optimal trajectory generation for heterogeneous multi-agent systems in non-convex environments. IEEE Robot. Autom. Lett. 2018, 3, 1215–1222.

- Wang, J.M.; Fleet, D.J.; Hertzmann, A. Gaussian process dynamical models for human motion. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 283–298.

- Negenborn, R.R.; Maestre, J.M. Distributed model predictive control: An overview and roadmap of future research opportunities. IEEE Control. Syst. Mag. 2014, 34, 87–97.

- Stewart, B.T.; Wright, S.J.; Rawlings, J.B. Cooperative distributed model predictive control for nonlinear systems. J. Process Control. 2011, 21, 698–704.

- Ferramosca, A.; Limón, D.; Alvarado, I.; Camacho, E.F. Cooperative distributed MPC for tracking. Automatica 2013, 49, 906–914.

- Conte, C.; Jones, C.N.; Morari, M.; Zeilinger, M.N. Distributed synthesis and stability of cooperative distributed model predictive control for linear systems. Automatica 2016, 69, 117–125.

- Groß, D.; Stursberg, O. A cooperative distributed MPC algorithm with event-based communication and parallel optimization. IEEE Trans. Control. Netw. Syst. 2015, 3, 275–285.

- Alrifaee, B.; Heßeler, F.J.; Abel, D. Coordinated non-cooperative distributed model predictive control for decoupled systems using graphs. IFAC-Pap. 2016, 49, 216–221.

- Alonso, C.A.; Matni, N. Distributed and localized closed loop model predictive control via system level synthesis. In Proceedings of the 2020 59th IEEE Conference on Decision and Control (CDC), Jeju, Korea, 14–18 December 2020; pp. 5598–5605.

- Alonso, C.A.; Matni, N.; Anderson, J. Explicit distributed and localized model predictive control via system level synthesis. In Proceedings of the 2020 59th IEEE Conference on Decision and Control (CDC), Jeju, Korea, 14–18 December 2020; pp. 5606–5613.

- Luis, C.E.; Schoellig, A.P. Trajectory generation for multiagent point-to-point transitions via distributed model predictive control. IEEE Robot. Autom. Lett. 2019, 4, 375–382.

- Torrente, G.; Kaufmann, E.; Föhn, P.; Scaramuzza, D. Data-driven MPC for quadrotors. IEEE Robot. Autom. Lett. 2021, 6, 3769–3776.

- Liu, D.; Tang, M.; Fu, J. Robust adaptive trajectory tracking for wheeled mobile robots based on Gaussian process regression. Syst. Control. Lett. 2022, 163, 105210.

- Akbari, B.; Zhu, H. Tracking Dependent Extended Targets Using Multi-Output Spatiotemporal Gaussian Processes. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18301–18314.

- Hidalgo-Carrió, J.; Hennes, D.; Schwendner, J.; Kirchner, F. Gaussian process estimation of odometry errors for localization and mapping. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5696–5701.

- Brossard, M.; Bonnabel, S. Learning wheel odometry and IMU errors for localization. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 291–297.

- Nguyen, T.V.; Bonilla, E.V. Collaborative multi-output Gaussian processes. In Proceedings of the UAI’14: Thirtieth Conference on Uncertainty in Artificial Intelligence, Quebec City, QC, Canada, 23–27 July 2014; pp. 643–652.

- Carron, A.; Todescato, M.; Carli, R.; Schenato, L.; Pillonetto, G. Multi-agents adaptive estimation and coverage control using Gaussian regression. In Proceedings of the 2015 European Control Conference (ECC), Linz, Austria, 15–17 July 2015; pp. 2490–2495.

- Mallasto, A.; Feragen, A. Learning from uncertain curves: The 2-Wasserstein metric for Gaussian processes. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Rasmussen, C.E.; Williams, C.K. Gaussian Processes for Machine Learning. In Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 2006.

- Khalil, H.K. Nonlinear Systems, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2002.

- Umlauft, J.; Hirche, S. Feedback linearization based on Gaussian processes with event triggered online learning. IEEE Trans. Autom. Control. 2020, 65, 4154–4169.

- Zhou, B.; Gao, L.; Dai, Y.H. Gradient methods with adaptive step-sizes. Comput. Optim. Appl. 2006, 35, 69–86.

- Ivanov, S.E.; Zudilova, T.; Voitiuk, T.; Ivanova, L.N. Mathematical modeling of the dynamics of 3-DOF robot-manipulator with software control. Procedia Comput. Sci. 2020, 178, 311–319.

- Abdolhosseini, M. Model Predictive Control of an Unmanned Quadrotor Helicopter: Theory and Flight Tests. Ph.D. Thesis, Concordia University, Montreal, QC, Canada, 2012.

- Cannon, M. Efficient nonlinear model predictive control algorithms. Annu. Rev. Control. 2004, 28, 229–237.

- Beckers, T.; Kulic, D.; Hirche, S. Stable Gaussian process based tracking control of Euler-Lagrange systems. Automatica 2019, 103, 390–397.

- Srinivas, N.; Krause, A.; Kakade, S.M.; Seeger, M.W. Information-theoretic regret bounds for Gaussian process optimization in the bandit setting. IEEE Trans. Inf. Theory 2012, 58, 3250–3265.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).