Abstract

Lightning waveform plays an important role in lightning observation, location, and lightning disaster investigation. Based on a large amount of lightning waveform data provided by existing real-time very low frequency/low frequency (VLF/LF) lightning waveform acquisition equipment, an automatic and accurate lightning waveform classification method becomes extremely important. With the widespread application of deep learning in image and speech recognition, it becomes possible to use deep learning to classify lightning waveforms. In this study, 50,000 lightning waveform samples were collected. The data was divided into the following categories: positive cloud ground flash, negative cloud ground flash, cloud ground flash with ionosphere reflection signal, positive narrow bipolar event, negative narrow bipolar event, positive pre-breakdown process, negative pre-breakdown process, continuous multi-pulse cloud flash, bipolar pulse, skywave. A multi-layer one-dimensional convolutional neural network (1D-CNN) was designed to automatically extract VLF/LF lightning waveform features and distinguish lightning waveforms. The model achieved an overall accuracy of 99.11% in the lightning dataset and overall accuracy of 97.55% in a thunderstorm process. Considering its excellent performance, this model could be used in lightning sensors to assist in lightning monitoring and positioning.

1. Introduction

Lightning is one of the most common geophysical phenomena [1]. There are 30–100 lightning strikes per second worldwide. In 2013, the World Wide Lightning Location Network (WWLLN) detected 210.6 million lightning strikes through 67 sites [2]. Lightning is broadly divided into two types according to where the discharge occurs: cloud flash and cloud-to-ground flash (CG).

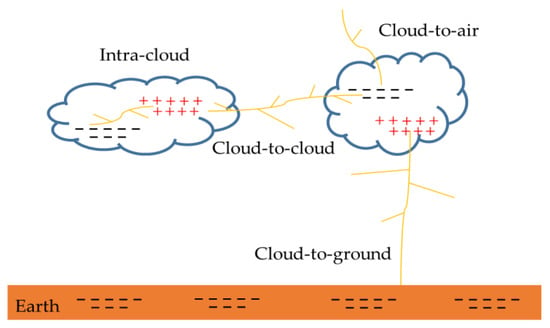

As shown in Figure 1, cloud flash includes cloud-to-cloud discharges, intra-cloud discharges, and cloud-to-air discharges. According to statistics, cloud flashes account for about 75% of global lightning events [3]. Discharges that occur from cloud to ground are called cloud-to-ground discharges. This type of discharge is the most harmful to human production and life, and can directly cause human death. In general, about 90% of cloud-to-ground flashes are negative cloud-to-ground flashes (−CG), and the remaining 10% are positive cloud-to-ground flashes (+CG) [4,5]. Narrow bipolar events (NBE) are usually generated during thunderstorms [6,7,8]. Their discharge channel is short, and their intensity is stronger than that of a cloud-to-ground flash or a normal cloud flash. Lightning’s seemingly random occurrence in space and time and the wide range of its significant time variation make it particularly difficult to study. Different discharge processes produce different electromagnetic radiation. The accurate classification of lightning is of great significance for lightning observation, lightning disaster investigation, and research on the rules of lightning activity.

Figure 1.

Different types of the lightning discharge.

The lightning process is accompanied by strong electromagnetic radiation, and the signal frequency bands cover very low frequency (VLF), low frequency (LF), high frequency (HF), and very high frequency (VHF). The main method for observing the lightning process is to use antennas to receive lightning signals in different frequency bands [9,10,11,12]. Since the 1980s, many regional lightning monitoring networks have been deployed around the world. Distinguishing cloud flashes from cloud ground flashes has been an important indicator of lightning monitoring and research. According to incomplete statistics, more than 10,000 people are killed or injured each year due to lightning strikes, and cloud-to-ground flashes are the direct cause of lightning strikes. After receiving the lightning signal, the lightning sensor will classify the signal, determine whether it is a cloud flash or cloud ground flash, and upload the classification result to the lightning location calculation server. Generally, the rise and fall time of the lightning waveform and the signal-to-noise ratio of the waveform are calculated to distinguish between cloud flash and cloud ground flash [13,14]. Due to the complexity and diversity of lightning occurrences, the statistical method is only suitable for single-pulse events or a specific thunderstorm. In addition, the classification effect of this method is not ideal in practical applications: the recognition rate of cloud ground flashes is 70% ~ 80%, and the recognition rate of cloud flashes is less than 85%, and it cannot effectively distinguish the ionosphere reflection signals [15].

From the perspective of lightning location, a cloud-to-ground flash is lightning that hits the ground directly. If the location algorithm can use the type of lightning as a known condition, location accuracy can be improved. Lightning signal classification also plays an important role in lightning warning and weather forecasting. A large number of cloud-to-ground flashes herald regional thunderstorms. Accurate identification of lightning types can improve aircraft navigation safety. In summary, we need a better way to distinguish lightning signals.

Deep learning [16] is a rapidly growing area of machine learning, which has been widely used in the fields of image recognition, speech analysis, and medical diagnosis in recent years [17,18,19,20]. Recently, Zaiwar Ali et al. [21] proposed an energy-saving deep learning shunting scheme to train a deep learning-based intelligent decision-making algorithm; Mohamed Alloghani et al. [22] applied machine learning algorithms to the clustering and prediction of vital signs; Stamatios Samaras et al. [23] described the progress of deep learning of multi-sensor information fusion in drone applications. Heena Rathore et al. [24] proposed a new deep learning strategy for classifying different attack methods of deep brain implants. The proposed method was able to effectively detect different types of simulated attack patterns, thereby notifying patients of possible attacks. The effect of deep brain stimulation was tested in patients with Parkinson’s tremor. Dengshan Li et al. [25] proposed a method for detecting rice diseases and insect pests based on deep convolutional neural networks. Ammar Mahmood et al. [26] used the deep residual function to automatically classify kelp. These works strongly demonstrated the powerful role of deep learning algorithms in various research fields. Lightning signals and ECG signals are both pulse signals with highly similar characteristics, and deep learning has already been applied to ECG signal processing. Therefore, deep learning is a promising approach to lightning classification, which is of great significance for analyzing the lightning process and investigating lightning disasters.

Novelty and Contributions

In this study, we proposed a novel method for classifying lightning signals based on a one-dimensional convolutional deep neural network. To our knowledge, this was the first attempt in the classification of lightning signals. One-dimensional convolutional neural network (1D-CNN) is able to identify patterns and learn useful information from raw input data. 1D-CNN does not require extensive data preprocessing, making it particularly suitable for analyzing lightning data. In addition, since the performance of 1D-CNN tends to improve with the increase in the amount of training data, this method can be well applied to a large number of lightning data classifications. We analyzed a large amount of lightning waveform data, and the related work is summarized as follows:

- Using the lightning waveforms and lightning location data from different regions collected by the three-dimensional lightning positioning network of the Institute of Electrical Engineering of the Chinese Academy of Sciences, a lightning waveform database was constructed for deep learning testing, training, and verification. The collected lightning signal is an electric field signal in the VLF/LF band.

- Based on the expert classification method, we classified the waveforms in the lightning waveform database. Currently, the database contains 10 types of lightning. It needs to be emphasized that we considered lightning signals transmitted from different distances, including but not limited to the basic types of lightning described earlier. This paper mainly analyzed the feasibility and effectiveness of deep learning methods in lightning signal classification.

- A lightning classification algorithm based on deep learning was proposed to replace the statistical method. The input of the algorithm is a fixed-length lightning signal, and the output is a lightning type number.

- The classification algorithm was applied to a thunderstorm process to test its performance in a practical application. Test results showed that the model recognized lightning during this thunderstorm with an accuracy of 97.55%.

The paper is organized as follows. Section 2 introduces the lightning dataset and data preprocessing method. Section 3 introduces some of the deep learning methods used for classification. Section 4 details the model structure and parameters, model evaluation, and model test results. Section 5 compares the convolutional neural network with a statistical method. It also discusses the impact of different model structures on classification performance and analyzes the real-time processing performance of the model. The conclusions and future research directions are given in Section 6.

2. Dataset and Preprocessing

2.1. Lightning Dataset

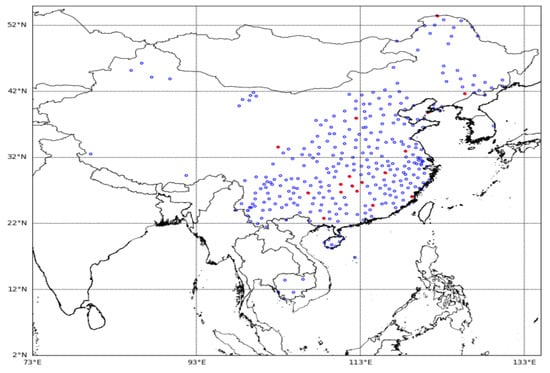

Lightning data comes from the three-dimensional lightning location system (advanced direction-time lightning detection system, ADTD) of the Institute of Electrical Engineering, Chinese Academy of Sciences. The system has 333 detection stations, and the detection region covers China and Southeast Asia. The site distribution is shown in Figure 2. The positioning error is less than 500 m. Each detection site includes a VLF/LF electric field antenna, a magnetic antenna, a GPS receiving system, and a signal processing system. The channel bandwidth of the device is 3 kHz to 400 kHz, and the GPS time synchronization accuracy is less than 20 ns.

Figure 2.

Map of lightning detection sites. The blue circle represents a regular site, and the red circle represents a waveform acquisition site.

Since 2018, we have upgraded some ADTD lightning detectors. The new detector has the functions of lightning waveform acquisition, storage, and remote file transfer. The system adopts the trigger sampling method, the sampling rate is 1MSPS (Million Samples per Second), the sampling time length is 1 ms, and the pre-trigger length is 100 μs.
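The acquisition parameters above fix the shape of every record that later reaches the classifier. The following minimal sketch works through that arithmetic; the constant and function names are illustrative and are not taken from the detector firmware.

```python
# Trigger-sampling parameters quoted in the text.
SAMPLE_RATE_HZ = 1_000_000   # 1 MSPS
RECORD_LENGTH_S = 1e-3       # 1 ms per triggered record
PRE_TRIGGER_S = 100e-6       # 100 us retained before the trigger


def to_samples(duration_s: float, rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Convert a duration in seconds to a whole number of samples."""
    return round(duration_s * rate_hz)


samples_per_record = to_samples(RECORD_LENGTH_S)                 # 1000 samples
pre_trigger_samples = to_samples(PRE_TRIGGER_S)                  # 100 samples
post_trigger_samples = samples_per_record - pre_trigger_samples  # 900 samples
sample_period_us = 1e6 / SAMPLE_RATE_HZ                          # 1.0 us per sample
```

Each record therefore holds 1000 points, with the trigger located about 100 points after the start of the record.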

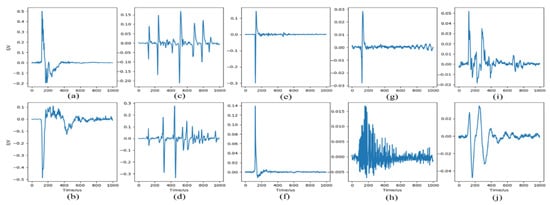

The lightning signals received by the lightning detector are divided into two groups, cloud ground flashes and cloud flashes. Cloud ground flashes include positive cloud-to-ground flash (+CG), negative cloud-to-ground flash (−CG), and cloud ground flash with ionosphere reflected signals (CG-IR). Cloud flashes include positive NBE (+NBE), negative NBE (−NBE), positive pre-breakdown (+PBP), negative pre-breakdown (−PBP), multi-pulse cloud flash (MP), bipolar pulse (NBE, positive and negative amplitudes are consistent), and far-field skywave (SW). The typical waveforms of these types are shown in Figure 3. The total number of signal samples is 50,000, with 5000 samples for each of the 10 types. Please refer to the Supplementary Materials for detailed data.

Figure 3.

Example of some typical very low frequency/low frequency (VLF/LF) electric field waveforms. (a) negative cloud-to-ground flash (−CG), (b) positive CG (+CG), (c) negative pre-breakdown (−PBP), (d) positive PBP (+PBP), (e) negative narrow bipolar event (−NBE), (f) positive NBE (+NBE), (g) NBE, (h) multi-pulse cloud flash (MP), (i) cloud ground flash with ionosphere reflected signals (CG-IR), (j) skywave (SW).

2.2. Pre-Processing

Data preprocessing is mainly divided into two parts:

- (1)

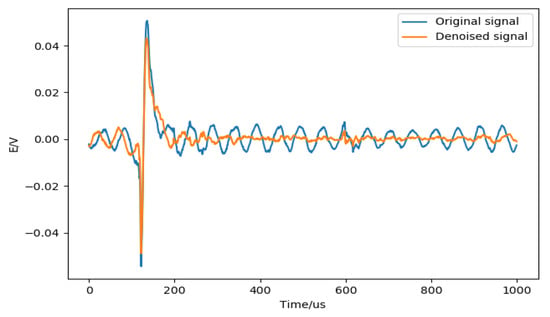

- Digital filter to reduce noise. The zero-phase digital filtering [27] method is used to remove the high-frequency noise contained in the signal. Zero-phase filtering reduces noise in the signal and preserves the lightning complex at the same time it occurs in the original. Conventional filtering reduces noise in the signal but delays the lightning complex. Figure 4 shows an example of suppressing signal noise by zero-phase digital filtering.

Figure 4. An example of zero-phase digital filtering. The blue line indicates data before filtering, and the orange line indicates data after filtering.

- (2) Data standardization (normalization) [28]. Data standardization scales the data to a specific interval according to a certain algorithm, removing the unit of the data and converting it into a dimensionless value. Standardization makes features of different dimensions numerically comparable, which can greatly improve the accuracy of the classifier and speed up convergence. The most typical form is normalization, in which the data is uniformly mapped to [0,1]. Data normalization methods include min-max normalization, log conversion, arctangent conversion, z-score normalization, and fuzzy quantization.
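The zero-phase idea in step (1) can be illustrated with forward-backward filtering: the signal is filtered once, reversed, filtered again, and reversed back, so that the delay of the first pass is cancelled by the second. The sketch below is only an illustration under stated assumptions. The first-order low-pass filter and the smoothing factor `alpha` are hypothetical stand-ins, since the filter design used in this study is not specified here.

```python
def lowpass_forward(x, alpha):
    """Single-pass first-order IIR low-pass: y[n] = alpha*x[n] + (1-alpha)*y[n-1]."""
    y = []
    prev = x[0]  # start from the first sample to avoid a start-up transient
    for value in x:
        prev = alpha * value + (1.0 - alpha) * prev
        y.append(prev)
    return y


def lowpass_zero_phase(x, alpha):
    """Forward-backward filtering: filter, reverse, filter again, reverse back."""
    forward = lowpass_forward(x, alpha)
    backward = lowpass_forward(forward[::-1], alpha)
    return backward[::-1]


def peak_index(x):
    """Index of the largest sample."""
    return max(range(len(x)), key=lambda i: x[i])


# Synthetic symmetric triangular pulse centred on sample 100 (not real data).
pulse = [max(0.0, 1.0 - abs(i - 100) / 20.0) for i in range(200)]

single_pass = lowpass_forward(pulse, alpha=0.2)    # peak is shifted later in time
zero_phase = lowpass_zero_phase(pulse, alpha=0.2)  # peak stays at sample 100
```

Comparing `peak_index(single_pass)` with `peak_index(zero_phase)` shows the delay introduced by conventional one-pass filtering and its absence in the forward-backward result.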

In this study, the z-score normalization method was used. For a sample x of length n, the calculation formula is:

$$x_i' = \frac{x_i - \mu}{\sigma}, \quad i = 1, 2, \ldots, n$$

where μ is the average of the sample data, σ is the standard deviation of the sample data, and $x_i$ is the amplitude of the ith point.
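As a small illustration of z-score normalization, the sketch below normalizes a short made-up waveform. Whether the population or the sample standard deviation was used in this study is not stated; the population form (`statistics.pstdev`) is assumed here.

```python
import statistics


def z_score(sample):
    """Return (x_i - mean) / std for every point of one waveform."""
    mu = statistics.fmean(sample)
    sigma = statistics.pstdev(sample)  # population standard deviation (assumption)
    if sigma == 0:
        raise ValueError("constant waveform cannot be z-score normalized")
    return [(x - mu) / sigma for x in sample]


waveform = [0.0, 0.5, 2.0, 0.5, 0.0, -1.0]  # illustrative amplitudes
normalized = z_score(waveform)
```

After normalization the waveform has zero mean and unit standard deviation, so waveforms recorded at very different distances and amplitudes reach the network on a comparable scale.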

3. Methods

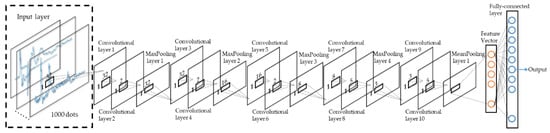

Lightning signals, like voice and ECG signals, are one-dimensional time series. This paper built a one-dimensional CNN model (as shown in Figure 5). The input of the model was waveform data after noise-reduction filtering and z-score normalization, and each sample consisted of 1000 sampling points. The sampling rate of the sensor was 1 MSPS, so each point represented a time length of 1 μs, and 1000 points corresponded to 1 ms of collected signal. The model consisted of multiple one-dimensional convolutional layers and pooling layers (maximum pooling and mean pooling). The specific parameters of the model are detailed in Section 4.1.

Figure 5.

Proposed one-dimensional convolutional neural network (1D-CNN) structure diagram composed of an input layer, ten convolutional layers, five pooling layers, one fully-connected layer, and output.

3.1. Independent Feature Branch

With the rapid development of deep learning, researchers have applied many different algorithms and network structures to different fields. Lightning signals are transient signals, and classifying them mainly requires identifying the relevant characteristics of the signal at a certain moment. Therefore, the feature extraction of the signal is crucial. This section briefly introduces the deep learning methods used in the 1D-CNN lightning signal classification model. One-dimensional convolution was used to extract the characteristics of the lightning signal; pooling down-sampled the signal to improve the calculation speed; the fully connected layer integrated all features and finally gave the probability distribution of the signal over the different types. The layers are applied in sequence, from input to output, to complete the classification of lightning signals.

3.1.1. 1-D Convolution Layer

The 1-D convolution layer performs 1-D convolution, data filling, and activation calculations. Similar to using 2-D convolution to extract image features, 1-D convolution calculations are used to extract lightning waveform features. Through the calculation of convolution kernels of different sizes, the lightning waveform characteristics of different scales are extracted. The activation function adds non-linear factors to the network, allowing the network to better solve complex problems. The operation can be formulated as:

$$x_j^{l} = f\left(\sum_{i} x_i^{l-1} * k_{ij}^{l} + b_j^{l}\right)$$

where $k_{ij}^{l}$ is a 1-D kernel (or weight) connecting $x_i^{l-1}$, the ith feature map of layer l−1, and $x_j^{l}$, the jth feature map of layer l, * represents a 1-D convolution operation, $b_j^{l}$ is the corresponding bias, and f (⋯) is the activation function, producing nonlinearity; here, the rectified linear unit (ReLU) [16] is used, f (x) = max (0, x). It is known as an efficient activation function for the deep learning structure, which can accelerate the training process [29].
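To make the convolution-plus-activation step concrete, the sketch below applies one kernel to one short input and passes the result through ReLU. It is a simplified illustration, not the layer used in the model: there is a single input channel, no padding (the "data filling" step is omitted), and the kernel values are arbitrary. As in most deep learning frameworks, the kernel is not flipped, so the operation is strictly a cross-correlation.

```python
def relu(value):
    """Rectified linear unit: f(x) = max(0, x)."""
    return max(0.0, value)


def conv1d_valid(signal, kernel, bias=0.0):
    """Slide `kernel` over `signal` without padding and apply ReLU to each output."""
    width = len(kernel)
    outputs = []
    for start in range(len(signal) - width + 1):
        window = signal[start:start + width]
        activation = sum(w * s for w, s in zip(kernel, window)) + bias
        outputs.append(relu(activation))
    return outputs


signal = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0]  # toy pulse
rising_edge_kernel = [-1.0, 0.0, 1.0]         # illustrative weights, not learned ones
features = conv1d_valid(signal, rising_edge_kernel)
# features == [1.0, 3.0, 0.0, 0.0, 0.0]: only the rising edge of the pulse responds
```

In a trained network the kernel weights are learned rather than chosen by hand, and kernels of different widths respond to pulse structures at different time scales.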

3.1.2. 1-D Pooling Layer

The pooling layer (also called down-sampling) can reduce the dimensionality of the feature extracted by the convolutional layer. On the one hand, the feature map is smaller, simplifying the computational complexity of the network [30] and avoiding over-fitting to a certain extent; on the other hand, feature compression is performed to extract the main features.

Considering the transient nature of the lightning signal and the inconsistency of the time scale, the pooling layer can effectively reduce the influence of these factors, greatly reduce the amount of data calculation, and improve the robustness of the model. Common pooling methods include maximum pooling and mean pooling. In the 1-D CNN lightning signal classification model, the maximum pooling and mean pooling are used in combination to obtain signal characteristics at different scales.
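The two pooling operations can be compared on a small feature map. This is a minimal sketch assuming non-overlapping windows; the pooling sizes and strides of the actual model are not reproduced here.

```python
def pool1d(values, size, reducer):
    """Apply `reducer` to consecutive non-overlapping windows of length `size`.

    A trailing remainder shorter than `size` is dropped.
    """
    return [reducer(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


def mean(window):
    """Arithmetic mean of one pooling window."""
    return sum(window) / len(window)


feature_map = [1.0, 3.0, 0.0, 2.0, 4.0, 4.0, 5.0]  # illustrative values
max_pooled = pool1d(feature_map, 2, max)    # [3.0, 2.0, 4.0]
mean_pooled = pool1d(feature_map, 2, mean)  # [2.0, 1.0, 4.0]
```

Maximum pooling keeps the strongest response in each window, which suits sharp pulses, while mean pooling keeps the average level; both halve the length of the feature map in this example.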

3.1.3. Fully-Connected Layer

The fully-connected layer plays the role of “classifier” in the entire convolutional neural network. It combines the features of different scales of the lightning waveform extracted by the convolutional and pooling layers and makes a decision on all the extracted features from a global perspective. The calculation can be expressed as:

$$y = f(Wx + b)$$

where x is the input feature vector, y is the output, W is a weight matrix created by the network layer, b is a bias vector, and f (⋯) is an activation function. Here, the softmax function is used to calculate the prediction probability of each category.
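The fully connected computation followed by softmax can be sketched in a few lines. The weights, biases, and the three-class output below are made up for illustration; the actual model has ten output classes and learned parameters.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense_softmax(features, weights, biases):
    """Compute softmax(W x + b) with one weight row per output class."""
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


features = [1.0, 2.0]                           # flattened feature vector (toy)
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3-class weights
biases = [0.0, 0.0, -0.5]
probabilities = dense_softmax(features, weights, biases)
predicted_class = max(range(len(probabilities)), key=probabilities.__getitem__)
```

The index of the largest probability plays the role of the lightning type number that the algorithm outputs.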

3.2. Parameter Optimization

Deep learning has derived various parameter optimization methods, which can be traced back to the stochastic gradient descent (SGD) algorithm [16]. In this paper, the Adam [31] (adaptive moment estimation) optimizer was used in place of SGD. Adam combines the advantages of AdaGrad [32] and RMSProp, using both first-order and second-order momentum.

Adam’s tuning is relatively simple, and the default parameters can handle most problems. There are only two hyperparameters, $\beta_1$ and $\beta_2$: the former controls first-order momentum, and the latter controls second-order momentum. The Adam optimizer parameters default to $\beta_1 = 0.9$ and $\beta_2 = 0.999$, the initial learning rate is 0.001, and the size of each training batch is 100.
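A single Adam update for one scalar parameter can be written out as follows. The values β1 = 0.9 and β2 = 0.999 are the commonly published Adam defaults and are assumed here, as is the small constant `eps`; the quadratic objective is a toy example and not the training loss of the model.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns the new parameter and both moment estimates."""
    m = beta1 * m + (1.0 - beta1) * grad         # first-order momentum
    v = beta2 * v + (1.0 - beta2) * grad * grad  # second-order momentum
    m_hat = m / (1.0 - beta1 ** t)               # bias correction for step t >= 1
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2.0 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t)
# After three steps w is close to 0.003: each early step moves by about lr.
```

Because the update is normalized by the second-order momentum, the early step size is close to the learning rate regardless of the gradient magnitude, which is one reason the default settings work across many problems.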

4. Result

4.1. Model Structure and Parameters

Based on the above hierarchical structure and training methods, parameters such as the size of the convolution kernel and the number of convolution layers were determined through experiments. The final model structure and parameters are shown in Table 1. Due to the wide spectrum of lightning signals, the signal characteristics appear as pulse widths of different scales in the time domain. Therefore, the kernel size of the convolution layers was designed to decrease with depth, so that the recognition scale changed from large to small and the signal was finally identified at a fine scale. The model and experiments were implemented in Keras + TensorFlow on a Windows PC with Intel Core i7 CPU (@ 2.2 GHz) and NVIDIA Quadro P600.

Table 1.

Model parameters.

4.2. Train and Evaluate

A certain number of samples were randomly drawn from the entire data set for training, and the remaining part was used for testing. In this paper, 5-fold cross-validation was used for model tuning to find hyperparameter values that optimize the generalization performance of the model. The lightning data set was divided into five equal sets; each set was used once as testing data while the remaining sets were used as training data. Because this is a multi-class classification model, the accuracy of each category was used to evaluate model performance:

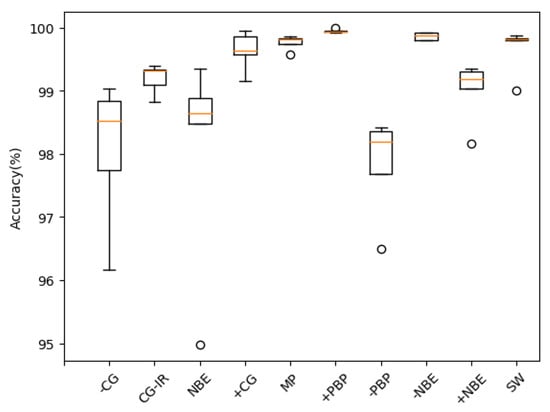

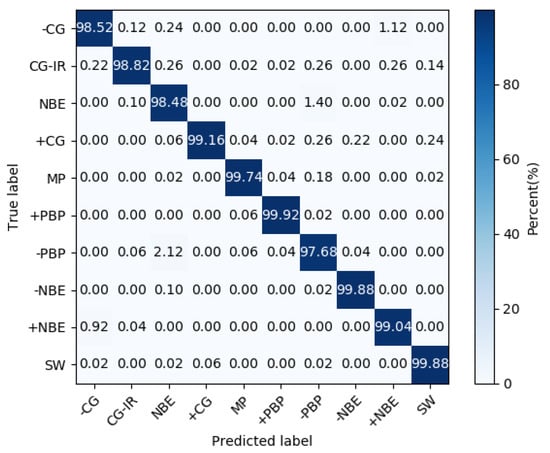

Table 2 lists the model’s recognition accuracy for different types of lightning under each fold of the cross-validation (K). Figure 6 shows the box plot of model classification accuracy. Figure 7 shows the model’s confusion matrix for different types of recognition results during five-fold cross-validation.

Table 2.

Five-fold cross-validation for different types of classification accuracy.

Figure 6.

Box plot of model classification accuracy.

Figure 7.

Confusion matrix for classification results.

Table 2 shows that the model recognized all 10 types of lightning signals well, with an overall recognition accuracy of 99.11%. The confusion matrix shows that the model still discriminated well between several types of signals that are prone to confusion (such as −CG and +NBE, NBE, and PBP). The average recognition accuracy of a single type ranged from 97.83% to 99.95%.
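Per-class and overall accuracy can both be read off a confusion matrix. The sketch below assumes that the accuracy of a class means the fraction of that class's samples that were labelled correctly, and it uses a made-up three-class matrix rather than the results reported in Table 2 or Figure 7.

```python
def per_class_accuracy(confusion):
    """Fraction of each true class (row) that was predicted correctly (diagonal)."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


def overall_accuracy(confusion):
    """Correct predictions over all samples."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Rows are true classes, columns are predicted classes (illustrative counts only).
toy_confusion = [
    [98, 2, 0],
    [1, 99, 0],
    [0, 3, 97],
]
class_accuracy = per_class_accuracy(toy_confusion)  # [0.98, 0.99, 0.97]
total_accuracy = overall_accuracy(toy_confusion)    # 0.98
```

Off-diagonal entries show which pairs of types are confused with each other, which is the information used above to discuss −CG, +NBE, NBE, and PBP.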

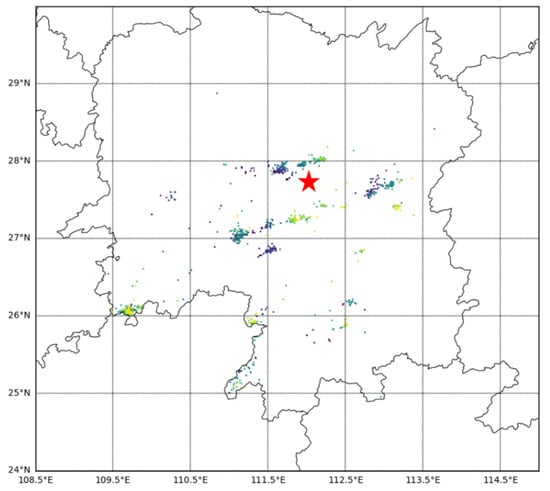

4.3. Model Test

Several thunderstorms occurred in Hunan, China, on 6 June 2019. Figure 8 shows the distribution of thunder and lightning at 13:00 on that day. The lightning waveform data was recorded by the lightning detection station located in Loudi, Hunan Province. A total of 9647 pieces of data were collected by the detection station within an hour and used for testing.

Figure 8.

Thunderstorm map. The red pentagram represents a site location.

The model classification results are shown in Table 3. Through manual inspection, the model’s recognition accuracy of various signals was given. The overall classification accuracy of the model was 97.55%. For most categories, the classification accuracy was above 97%.

Table 3.

The classification accuracy of the model on the test set.

In addition, the accuracy of +CG was significantly lower than that of the other classes. There were two contributing factors:

- (1) The +CG occurrence probability was significantly lower than that of other classes, which resulted in fewer samples in this class.

- (2) When manually verifying the classification accuracy of the model, we found a few waveforms that were significantly different from those in the data set. This also reduced the recognition accuracy for the +CG type.

Overall, the model had a good classification effect on these 10 types of lightning waveforms.

5. Discussion

5.1. Methods Comparison

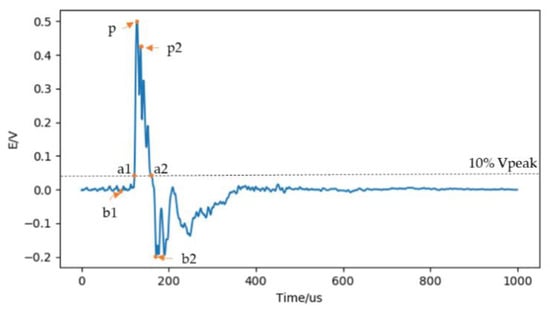

This section discusses in detail the technical advantages of convolutional neural networks over traditional statistical methods in lightning signal classification. The comparison method refers to the typical characteristics of three types of lightning signals compiled by Li Cai [33]. The three types are cloud-to-ground flash, cloud-to-cloud flash, and +NBE/−NBE. To distinguish these types of lightning, nine waveform parameters are extracted to classify the collected lightning radiation electric field waveforms.

As shown in Figure 9, they are:

Figure 9.

Schematic diagram of waveform characteristic parameter definition.

- (1) Pulse rise time. The time elapsed from the 10% peak of the waveform to the peak of the waveform.

- (2) Pulse fall time. The time elapsed from the peak of the waveform to the 10% peak of the waveform.

- (3) Pulse width. The time elapsed from the 10% peak of the rising edge to the 10% peak of the falling edge.

- (4) Forward peak-to-peak ratio. The ratio of initial negative peak to maximum peak.

- (5) Backward peak-to-peak ratio. The ratio of following negative peak to maximum peak.

- (6) Sub-peak ratio. The ratio of the maximum peak to the secondary peak.

- (7) Signal to noise ratio (SNR). The ratio of the average power of the signal within 20 before and after the peak point to the average power of the signal at other times. Here, N indexes the sample points, and P denotes the point with the largest amplitude.

- (8) Pre-SNR. The ratio of the average power of the signal within 20 before and after the peak point to the average power of the signal before the peak point.

- (9) Post-SNR. The ratio of the average power of the signal within 20 before and after the peak point to the average power of the signal after the peak point.
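Four of the nine parameters listed above (rise time, fall time, pulse width, and SNR) can be sketched as follows. This is a simplified reading of the definitions, not the extraction code of the comparison method: it handles only a positive-going pulse, treats the window of 20 around the peak as 20 samples (an assumption), and is run on a synthetic pulse.

```python
def mean_power(values):
    """Average of squared amplitudes; 0.0 for an empty list."""
    return sum(v * v for v in values) / len(values) if values else 0.0


def waveform_features(x, sample_period_us=1.0, half_window=20):
    """Rise time, fall time, pulse width (in us) and SNR of a positive pulse."""
    peak = max(range(len(x)), key=lambda i: x[i])
    threshold = 0.1 * x[peak]  # the "10% peak" level

    start = peak               # walk left while samples stay above 10%
    while start > 0 and x[start - 1] >= threshold:
        start -= 1
    end = peak                 # walk right while samples stay above 10%
    while end < len(x) - 1 and x[end + 1] >= threshold:
        end += 1

    lo = max(0, peak - half_window)
    hi = min(len(x), peak + half_window + 1)
    signal_power = mean_power(x[lo:hi])
    noise_power = mean_power(x[:lo] + x[hi:])
    snr = signal_power / noise_power if noise_power > 0 else float("inf")

    return {
        "rise_time_us": (peak - start) * sample_period_us,
        "fall_time_us": (end - peak) * sample_period_us,
        "pulse_width_us": (end - start) * sample_period_us,
        "snr": snr,
    }


# Synthetic triangular pulse on a small constant background (not real data).
pulse = [0.01 + max(0.0, 1.0 - abs(i - 100) / 10.0) for i in range(200)]
features = waveform_features(pulse)
```

Every such parameter depends on hand-chosen thresholds and windows, which is the dependence on feature definitions that the learned convolutional features are meant to avoid.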

We applied Li Cai’s statistical rules to the 50,000 classified lightning waveforms in the database. The results are shown in Table 4.

Table 4.

Results of statistical classification.

We added a category called Other Types, which represents signals whose calculated parameters match none of the three types (cloud-to-cloud flash, cloud-to-ground flash, and +NBE/−NBE). This article divides unipolar narrow pulse events into +NBE and −NBE. It should be emphasized that this paper also has a category called narrow bipolar pulse event, which is very different from Li Cai’s. Its distinguishing feature is that the positive and negative amplitudes of these signals are symmetrical.

The results showed that, whereas the one-dimensional convolutional neural network classified the waveforms in the waveform library with an accuracy of 99.11% (Table 2), the average classification accuracy using statistical methods was only 55.66%. In addition, nearly 30% of the waveforms could not be identified by statistical methods. There are several reasons. First, the statistical method is a set of statistical rules derived for specific waveforms; it depends on the samples, and the statistics of different samples differ. Second, the waveform library contains other types of waveforms, such as CG-IR and MP. The classification accuracy of these two types is particularly low, which indicates that the existing statistical rules do not consider these types of signals. Third, because narrow bipolar events and cloud flashes are similar, both having strong amplitudes, the statistical method has certain defects in distinguishing these two types of signals. Finally, the comparison shows that the one-dimensional convolutional neural network has a strong advantage in the feature extraction of lightning signals: it does not require manual extraction of waveform features and avoids the impact of different feature parameters on classification.

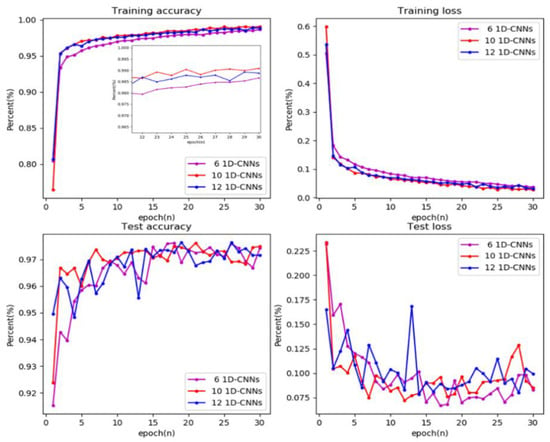

5.2. Model Structure Analysis

Different model structures lead to different experimental results. The model structure proposed in this article was selected after a large number of experiments. Because the selection of different parameters leads to too many model combinations, we selected some experimental data to illustrate the parameter selection method of the lightning classification model proposed in this paper. Figure 10 shows the training and testing results of models with different numbers of convolutional layers. Using the 10 convolutional layers proposed in this paper as a reference, the performance of models with 6 and 12 convolutional layers was compared. When all three models were trained 30 times, the classification accuracy of each model on the test data reached more than 97%. The difference was that as the number of convolutional layers increased, the model converged faster, and the classification accuracy on the training data was higher. However, the results indicated that the training accuracy with 12 convolutional layers was lower than that with 10 convolutional layers. Of course, a larger number of convolutional layers plays an important role in the case of big data. Considering that the model would be applied to lightning sensors to classify lightning signals in real time, and that the performance of the embedded processor is limited, we had to make a trade-off between the size of the model parameters and the processing speed. Based on the above considerations, a 10-layer convolutional neural network was adopted.

Figure 10.

Training and test results of models with different convolutional layers.

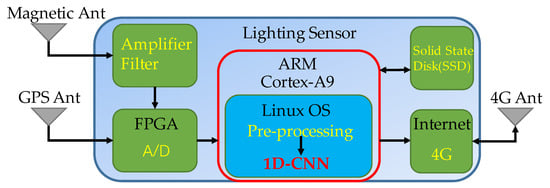

5.3. Real-Time Analysis

The method proposed in this paper will be applied to the three-dimensional lightning detection equipment deployed in China by the Institute of Electrical Engineering, Chinese Academy of Sciences, in place of the traditional waveform classification method. In order to evaluate the real-time performance of the 1D-CNN designed in this paper, we tested the model on a PC and on an embedded platform. On the PC, the model was executed on a 64-bit Windows 10 laptop (processor: Intel Core i7 CPU (@ 2.2 GHz)) using TensorFlow + Keras in Spyder, an open-source cross-platform integrated development environment for scientific programming in Python. Using the test dataset, the time required for the model to classify 50,000 lightning waveforms was measured. The average time for the model to classify a waveform in the Spyder environment was 378.2 μs.

For embedded platforms, we are currently using a Cortex-A9 processor with a CPU operating frequency of 800 MHz. An FPGA (Field-Programmable Gate Array) performs data acquisition and triggers the time labeling of lightning waveforms. The ARM processor performs tasks such as data preprocessing, waveform classification, and calculating signal arrival time. After the data analysis is completed, the data are saved to the local solid-state hard disk, and the processing results are transmitted via the network to the server located at the Institute of Electrical Engineering, Chinese Academy of Sciences. The system block diagram of the lightning sensor is shown in Figure 11. We transplanted the 1D-CNN model that had been trained on the PC to the embedded processor. The average time for this embedded system to process a waveform was 4.12 ms. This corresponds to roughly 240 waveforms per second, which exceeds the global average of 30 to 100 lightning occurrences per second and indicates that the algorithm could be applied to real-time lightning signal analysis.
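The real-time margin can be checked with a short calculation from the figures quoted in this section. The sketch uses the upper bound of 100 occurrences per second as a worst case; a single sensor would normally see far fewer events than the global total, so this is a conservative comparison rather than a measured load.

```python
PC_TIME_PER_WAVEFORM_S = 378.2e-6       # average on the PC
EMBEDDED_TIME_PER_WAVEFORM_S = 4.12e-3  # average on the Cortex-A9 platform
WORST_CASE_RATE_PER_S = 100             # upper end of the 30 to 100 per second range


def throughput(time_per_waveform_s):
    """Waveforms that can be classified per second."""
    return 1.0 / time_per_waveform_s


def utilization(time_per_waveform_s, rate_per_s):
    """Fraction of each second spent classifying at the given event rate."""
    return time_per_waveform_s * rate_per_s


pc_rate = throughput(PC_TIME_PER_WAVEFORM_S)              # about 2644 per second
embedded_rate = throughput(EMBEDDED_TIME_PER_WAVEFORM_S)  # about 243 per second
embedded_load = utilization(EMBEDDED_TIME_PER_WAVEFORM_S,
                            WORST_CASE_RATE_PER_S)        # 0.412
```

Even at 100 events per second the embedded processor would spend about 41% of its time on classification, leaving headroom for preprocessing and arrival-time calculation.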

Figure 11.

The system block diagram of the lightning sensor.

6. Conclusions

Based on a large amount of lightning waveform data, 10 types of 50,000 waveform samples were extracted to form a lightning dataset. A 1D-CNN lightning signal classification model was built using the deep learning method.

Compared with the simple classification of cloud flash and cloud ground flash based on statistical methods, the 1D-CNN model could classify lightning signals into multiple types. In the model, 1-D convolutional layers containing multiple filters (convolution kernels) extract the characteristics of lightning signals at different time scales, multiple pooling layers down-sample the lightning signals, and a fully connected layer summarizes the global information. The dataset comes from lightning signals collected at different sites, at different times, and during different lightning processes. The diversity of samples gives the model good generalization and adaptability. For the dataset of 10 lightning types, the overall recognition accuracy of the model was 99.11%. By comparison, the classification method using statistical rules achieved an accuracy of only 55.66%, far lower than that of the deep neural network model. For the selected thunderstorm process, the overall model recognition accuracy was 97.55%, and for most categories, the recognition accuracy was above 97%.

With a large amount of lightning data available, we also found additional types of lightning signals by using this model. In future research, we will try to refine the categories for these signals to provide a more comprehensive and reliable classification of lightning signals. Distinguishing between cloud flashes and cloud-to-ground flashes has long been an important indicator for lightning monitoring and research, and accurate lightning classification can significantly improve the positioning accuracy of a lightning location network. The application of deep learning to lightning signal classification proposed in this paper is therefore promising.

Supplementary Materials

The test data and 1D-CNN lightning classification model are available online at https://github.com/jqwang1993/lightning-dataset.

Author Contributions

Conceptualization: J.W., Q.H., Q.M., S.C., J.H., H.W., and F.X.; Data curation: J.W. and C.G.; Methodology: J.W., Q.H., Q.M., X.Z., and C.G.; Software: J.W. and C.G.; Writing – original draft: J.W., Q.H., Q.M., S.C., J.H., H.W., and F.X.; Writing – review and editing: J.W., Q.H., S.C., J.H., H.W., and F.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 81971702), the Fundamental Research Funds for the Central Universities, Wuhan University (Grant No. 2042018gf0045), and the Key Deployment Project of the Chinese Academy of Sciences (Grant No. KFZD-SW-432).

Acknowledgments

We thank the Institute of Electrical Engineering, Chinese Academy of Sciences, for data support. The authors would also like to thank the reviewers for their helpful feedback, which significantly improved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rakov, V.; Uman, M.; Raizer, Y. Lightning: Physics and Effects. Phys. Today 2004, 57, 63–64.

- Rodger, C.J.; Brundell, J.B.; Hutchins, M.; Holzworth, R.H. The world wide lightning location network (WWLLN): Update of status and applications. In Proceedings of the 2014 XXXIth URSI General Assembly and Scientific Symposium (URSI GASS), Beijing, China, 16–23 August 2014; pp. 1–2.

- Rakov, V.A. Lightning phenomenology and parameters important for lightning protection. In Proceedings of the IX International Symposium on Lightning Protection, Foz do Iguaçu, Brazil, 26–30 November 2007.

- Akinyemi, M.; Boyo, A.; Emetere, M.; Usikalu, M.; Olawole, O. Lightning a Fundamental of Atmospheric Electricity. IERI Procedia 2014, 9, 47–52.

- Dwyer, J.; Uman, M. The physics of lightning. Phys. Rep. 2013, 534.

- Le Vine, D.M. Sources of the strongest RF radiation from lightning. J. Geophys. Res. 1980, 85.

- Willett, J.C.; Bailey, J.C.; Krider, E.P. A class of unusual lightning electric field waveforms with very strong high-frequency radiation. J. Geophys. Res. Atmos. 1989, 94, 16255–16267.

- Rison, W.; Krehbiel, P.R.; Stock, M.G.; Edens, H.E.; Shao, X.M.; Thomas, R.J.; Stanley, M.A.; Zhang, Y. Observations of narrow bipolar events reveal how lightning is initiated in thunderstorms. Nat. Commun. 2016, 7, 10721.

- Rison, W.; Thomas, R.J.; Krehbiel, P.R.; Hamlin, T.; Harlin, J. A GPS-based three-dimensional lightning mapping system: Initial observations in central New Mexico. Geophys. Res. Lett. 1999, 26, 3573–3576.

- Thomas, R.J.; Krehbiel, P.R.; Rison, W.; Hamlin, T.; Boccippio, D.J.; Goodman, S.J.; Christian, H.J. Comparison of ground-based 3-dimensional lightning mapping observations with satellite-based LIS observations in Oklahoma. Geophys. Res. Lett. 2000, 27, 1703–1706.

- Lyu, F.; Cummer, S.A.; Solanki, R.; Weinert, J.; McTague, L.; Katko, A.; Barrett, J.; Zigoneanu, L.; Xie, Y.; Wang, W. A low-frequency near-field interferometric-TOA 3-D Lightning Mapping Array. Geophys. Res. Lett. 2014, 41, 7777–7784.

- Wu, T.; Wang, D.; Takagi, N. Lightning Mapping With an Array of Fast Antennas. Geophys. Res. Lett. 2018, 45, 3698–3705.

- Smith, D.A. The Los Alamos Sferic Array: A research tool for lightning investigations. J. Geophys. Res. 2002, 107.

- Cooray, V.; Fernando, M.; Gomes, C.; Sorenssen, T. The Fine Structure of Positive Lightning Return-Stroke Radiation Fields. IEEE Trans. Electromagn. Compat. 2004, 46, 87–95.

- Dowden, R.L.; Brundell, J.B.; Rodger, C.J. VLF lightning location by time of group arrival (TOGA) at multiple sites. J. Atmos. Sol.-Terr. Phys. 2002, 64, 817–830.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Cho, H.; Yoon, S.M. Divide and Conquer-Based 1D CNN Human Activity Recognition Using Test Data Sharpening. Sensors 2018, 18, 1055.

- Liu, W.; Zhang, M.; Zhang, Y.; Liao, Y.; Huang, Q.; Chang, S.; Wang, H.; He, J. Real-Time Multilead Convolutional Neural Network for Myocardial Infarction Detection. IEEE J. Biomed. Health Inform. 2018, 22, 1434–1444.

- Hannun, A.Y.; Rajpurkar, P.; Haghpanahi, M.; Tison, G.H.; Bourn, C.; Turakhia, M.P.; Ng, A.Y. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019, 25, 65–69.

- Liu, W.; Wang, F.; Huang, Q.; Chang, S.; Wang, H.; He, J. MFB-CBRNN: A hybrid network for MI detection using 12-lead ECGs. IEEE J. Biomed. Health Inform. 2019.

- Ali, Z.; Jiao, L.; Baker, T.; Abbas, G.; Abbas, Z.H.; Khaf, S. A Deep Learning Approach for Energy Efficient Computational Offloading in Mobile Edge Computing. IEEE Access 2019, 7, 149623–149633.

- Alloghani, M.; Baker, T.; Al-Jumeily, D.; Hussain, A.; Mustafina, J.; Aljaaf, A.J. Prospects of Machine and Deep Learning in Analysis of Vital Signs for the Improvement of Healthcare Services. In Nature-Inspired Computation in Data Mining and Machine Learning; Yang, X.-S., He, X.-S., Eds.; Springer International Publishing: Cham, Switzerland, 2020.

- Samaras, S.; Diamantidou, E.; Ataloglou, D.; Sakellariou, N.; Vafeiadis, A.; Magoulianitis, V.; Lalas, A.; Dimou, A.; Zarpalas, D.; Votis, K.; et al. Deep Learning on Multi Sensor Data for Counter UAV Applications-A Systematic Review. Sensors 2019, 19, 4837.

- Rathore, H.; Al-Ali, A.K.; Mohamed, A.; Du, X.; Guizani, M. A Novel Deep Learning Strategy for Classifying Different Attack Patterns for Deep Brain Implants. IEEE Access 2019, 7, 24154–24164.

- Li, D.; Wang, R.; Xie, C.; Liu, L.; Zhang, J.; Li, R.; Wang, F.; Zhou, M.; Liu, W. A Recognition Method for Rice Plant Diseases and Pests Video Detection Based on Deep Convolutional Neural Network. Sensors 2020, 20, 578.

- Mahmood, A.; Ospina, A.G.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F.; Hovey, R.; Fisher, R.B.; Kendrick, G.A. Automatic Hierarchical Classification of Kelps Using Deep Residual Features. Sensors 2020, 20, 447.

- Gustafsson, F. Determining the initial states in forward-backward filtering. IEEE Trans. Signal Process. 1996, 44, 988–992.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Neural Inf. Process. Syst. 2012, 25.

- Zhang, J.; Huang, Q.; Wu, H.; Liu, Y. A Shallow Network with Combined Pooling for Fast Traffic Sign Recognition. Information 2017, 8, 45.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Duchi, J.; Hazan, E.; Singer, Y. Adaptive Subgradient Methods for Online Learning and Stochastic Optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

- Cai, L. Ground-based VLF/LF Three Dimensional Total Lightning Location Technology. Ph.D. Thesis, Wuhan University, Wuhan, China, 2013.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).