Abstract

In X-ray tomography image reconstruction, one of the most successful approaches is statistical: it combines an $\ell_2$ norm for the fidelity function with a regularization function based on an $\ell_p$ norm with $p$ between 1 and 2. Among these methods, one stands out for both its results and its computational performance: the alternating minimization of an objective function with an $\ell_2$ norm for fidelity and a regularization term that uses the discrete gradient transform (DGT) as a sparsifying transform, minimized by total variation (TV). This work proposes an improvement to the reconstruction process by adding a bilateral edge-preserving (BEP) regularization term to the objective function. BEP is a noise reduction method whose purpose is to adaptively eliminate noise in the initial phase of reconstruction. The addition of BEP improves the optimization of the fidelity term and, as a consequence, improves the result of DGT minimization by total variation. For reconstructions with a limited number of projections (low-dose reconstruction), the proposed method can achieve higher peak signal-to-noise ratio (PSNR) and structural similarity index measurement (SSIM) results because it better controls the noise in the initial processing phase.

1. Introduction

X-ray computed tomography (CT) measures the attenuation of X-ray beams passing through an object, generating projections. These projections are processed to produce an image (slice) of the examined object, a step known as CT image reconstruction. The CT scan, formed by concatenating a large number of adjacent reconstructed images, has proven to be of great value in delivering rapid and accurate diagnoses in modern medicine. Although CT scanning has evolved considerably since its creation in 1972 by Godfrey Hounsfield, only recently has concern about radiation levels in radiological examinations become prominent, leading to the “as-low-as-reasonably-achievable” (ALARA) principle. The ALARA principle states that only the minimum necessary amount of radiation should be applied to the patient, and it is widely accepted in the medical CT community [1]. There are two ways to reduce the X-ray dose received by the patient during a CT scan: (1) reduce the number of projections (the quantity of X-rays emitted) or (2) reduce the power of the X-ray source during image acquisition. Both generally lead to low-quality reconstructed CT images. An open problem is therefore to devise a method that allows good-quality CT image reconstruction from low-dose acquisitions. The central theme of this work is the reconstruction of images from the CT signal when the X-ray dosage is a concern. Before discussing low-dose CT image reconstruction approaches, the next sections present the most important classical, iterative, and statistical techniques so that the reader can understand how CT image reconstruction has evolved to the current state of the art.

1.1. Classical, Iterative, and Statistical CT Image Reconstruction Approaches

The first approach to become popular, especially for its performance, was the filtered backprojection (FBP) reconstruction technique [2,3]. FBP is a classic method based on the Fourier central slice theorem and is implemented with the fast Fourier transform (FFT). Although it performs well, FBP is difficult to adapt to new CT scanner architectures. It also requires a high radiation dose in comparison to modern methods and is therefore not consistent with the ALARA principle [1].

The classical approaches, while successful, do not favor the incorporation of physical-statistical phenomena in the CT framework. For example, photon emission is a rare event and may be well described by the Poisson distribution [4]; beam behavior is best described by a response function that models the shadows cast onto detectors using a Gaussian model [5]; the beam hardening phenomenon (lines and shadows adjacent to high-density reconstructed areas) that appears due to the polyenergetic nature of X-ray emissions can be statistically corrected [6]; the loss of photons by sensors, known as photon read-out, is a Gaussian phenomenon [7]; data acquisition electronic noise and energy-dependent signals can be modeled as compound Poisson plus Gaussian noise [8]; etc. In this context, a statistical approach means adding to the mathematical model elements that describe physical-statistical phenomena present in the CT image reconstruction process. Therefore, the incorporation of detailed statistical models into CT reconstruction is not straightforward. In this sense, many solutions for the CT image reconstruction problem use some form of statistical approach [9,10,11,12,13,14,15,16,17,18,19,20]. Adaptive statistical iterative reconstruction techniques have shown significant results compared to non-adaptive techniques [17,18,21,22]. In general, although the models incorporate part of the statistical phenomena, most of these phenomena are not modeled since the practical effects are relatively insignificant and/or result in high-cost computational solutions.

An important method was proposed by Clark et al. [23], which uses rank-sparse kernel regression filtration with bilateral total variation (BTV) to map the reconstructed image into spectral and temporal contrast images. In that work, the authors strictly constrain the regularization problem while separating the temporal and spectral dimensions, resulting in a highly compressed representation and enabling substantial undersampling of the acquired signals. The method (a 5D CT data acquisition and reconstruction protocol) efficiently exploits the rank-sparse nature of spectral and temporal CT data to provide high-fidelity reconstruction results without increased radiation dose or sampling time. However, a remark should be made regarding the use of BTV, a regularization based on the $\ell_1$ norm: it often leads to piecewise constant results and hence tends to produce artificial edges in smooth areas. To mitigate this drawback of $\ell_1$ norm regularization, Charbonnier et al. [24] developed an edge-preserving regularization scheme known as bilateral edge preservation (BEP), which allows the use of an $\ell_p$ norm with $p$ between 1 and 2 and is applied in this work. Sreehari et al. [25] proposed a plug-and-play (P&P) priors framework with a maximum a posteriori (MAP) estimate approach, used to design an algorithm for electron tomographic reconstruction and sparse image interpolation that exploits the non-local redundancy in images. The power of the P&P approach is that it allows a wide array of modern denoising algorithms to be used as a prior model for tomography and image interpolation. Pirelli et al. [26] proposed the denoising CT generalized approximate message passing algorithm (DCT-GAMP), an adaptation of approximate message passing (AMP) techniques, which represent the state of the art for solving undersampled compressed sensing problems with random linear measurements. In contrast to the P&P framework, this approach uses minimum mean square error (MMSE) estimation instead of MAP, and the authors show that using MMSE favors decoupling between the noise conditioning effects and the system models.

The Bayesian statistical approach is widely applied to the reconstruction of X-ray tomographies, with some variations, [14,15,16,18,27,28], and makes it possible to insert prior knowledge into the CT system model. This approach promises two advantages. First, it provides the search with more satisfactory solutions (noiseless ones) through the limitation of the searchable set of solutions using an a priori function (known as restriction). This restriction may mean that, for example, very high variations (high frequencies) between neighboring pixels will be considered as noise (and therefore must be discarded), and moderate frequencies will be considered as edges (and therefore must be preserved). In the context of computed tomography, this means that large differences in intensity between neighboring pixels tend to be interpreted as noise, and therefore, such a solution should be disregarded [5]. Moreover, solving the CT reconstruction system, , is an inverse and ill-posed problem, and prior knowledge often ensures the stability of the solution. Second, we can adopt a simplified mathematical model for tomographic image reconstruction and compensate its inefficiency (instability, noisy reconstruction, etc.) by adding the statistical component (prior knowledge) to the objective function. However, the model simplification has its limitations and should be used restrictively [5]. As a consequence of prior knowledge introduction, more satisfactory solutions (low noise level) can be found. Maximum a posteriori (MAP) is a useful statistical framework for CT reconstruction [12,15,16,27,28] and favors the incorporation of the regularization term with prior knowledge into the model. The MAP strategy provides an objective function composed by the sum of the probability (also known as fidelity) and the regularization function that establishes the optimization restriction criteria, also known as a prior.

1.2. Signal Modeling and Error Considerations in CT Image Reconstruction

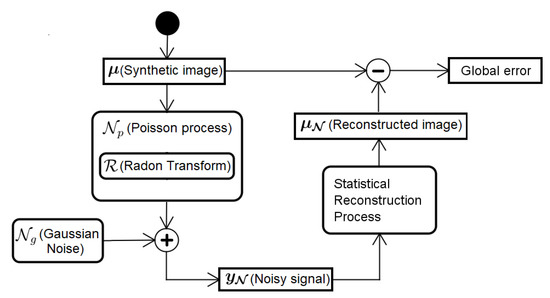

As previously discussed, the statistical approaches can reduce deficiencies caused by classical mathematical modeling without having to literally incorporate the complexity of a real-world model. However, before proposing a statistical (non-deterministic) model that results in a lower noise reconstruction, it is necessary to establish the process as a whole. As shown in Figure 1, the process begins with a synthetic image, .

Figure 1. From acquisition to reconstruction and measurement of error.

In this work, we use different synthetic images, namely the Shepp–Logan head phantom and the FORBILD head and abdomen phantom definitions [2,29]. The synthetic image is submitted to the Radon transform, generating the ideal (noise-free) signal of the CT scan. The acquisition of data in the CT equipment depends on the number of photons that reach each detector. Because this is a particle-counting process, well described by Poisson statistics [8], it appears in the model in Figure 1 as a random variable. However, because the number of photons is high, the acquisition process can be modeled as Gaussian by virtue of the central limit theorem. In addition, the Gaussian model leads to additive algorithms, whereas the Poisson model leads to multiplicative, and therefore less efficient, algorithms [24]. In this work, we assume that the signal arriving at the CT detectors is corrupted by additive Gaussian noise and, from among the dosage reduction methods presented in Section 1, we chose to emulate the low radiation dosage by reducing the number of projection angles processed. That is, we assume that each detector absorbs enough photons for the noise to be modeled as additive Gaussian, and the low dosage results from reducing the number of projections captured by the detectors (by reducing the number of scanning angles). Accordingly, even though the process is Poissonian in nature, additive Gaussian noise can be added to the process, as

where is the resulting signal that approximates a tomography signal, is the result of applying the Radon transform on the synthetic image, , and is the Gaussian additive noise.
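The additive model described above can be sketched in a few lines. This is a minimal illustration, assuming that the noise level is chosen from a target SNR in dB relative to the mean power of the noiseless projections; the function names `add_gaussian_noise` and `measured_snr_db`, and this particular SNR convention, are assumptions made for the sketch rather than details taken from the text.

```python
import math
import random


def add_gaussian_noise(projections, snr_db, rng=None):
    """Return the projections plus zero-mean Gaussian noise.

    The noise variance is set so that the ratio of mean signal power to
    noise power matches snr_db (an assumed convention).
    """
    rng = rng or random.Random()
    signal_power = sum(p * p for p in projections) / len(projections)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [p + rng.gauss(0.0, sigma) for p in projections]


def measured_snr_db(clean, noisy):
    """Empirical SNR in dB between a clean signal and its noisy version."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10.0 * math.log10(signal_power / noise_power)


# Hypothetical noiseless projection values standing in for a sinogram.
clean = [1.0 + 0.5 * math.sin(0.01 * i) for i in range(20000)]
noisy = add_gaussian_noise(clean, snr_db=32.0, rng=random.Random(0))
```

Passing a seeded `random.Random` makes a single run repeatable, which is convenient when the same test case is executed many times and averaged.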

The remainder of this work is dedicated to the reconstruction of the CT image, , from the signal, , and the reduction of the global error, i.e., the reduction of the difference between synthetic and reconstructed images. As a criterion for measuring the quality of image reconstruction, we use peak signal-to-noise ratio (PSNR) and structural similarity, known as SSIM [30].
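As a concrete reading of the PSNR criterion, the sketch below computes it for two images stored as flat lists of pixel values. It assumes intensities normalized so that the peak value is 1.0; the function name `psnr` and the `peak` parameter are illustrative choices. SSIM is omitted because it requires windowed local statistics.

```python
import math


def psnr(reference, reconstruction, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are flat sequences of pixel values; `peak` is the maximum
    possible intensity (assumed to be 1.0 for normalized phantoms).
    """
    if len(reference) != len(reconstruction):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - x) ** 2 for r, x in zip(reference, reconstruction))
    mse /= len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)
```

For example, a reconstruction that is off by 0.1 at every pixel of a normalized image has a mean squared error of 0.01 and therefore a PSNR of about 20 dB.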

1.3. The Contribution of This Work

This work proposes a MAP solution with an adaptive regularization term modeled by a bilateral edge-preserving (BEP) function [31,32] to regularize the fidelity term ($\ell_2$ norm). The result of BEP regularization is then subject to regularization by the total variation (TV) of the discrete gradient transform (DGT) function. The results of reconstruction with an adaptive $\ell_p$ norm with $p$ between 1 and 2, using BEP, are compared via structural similarity (SSIM) and peak signal-to-noise ratio (PSNR) with a simultaneous algebraic reconstruction technique (SART) reconstruction regularized via the TV minimization of the DGT function. Image reconstruction is an iterative process, and SSIM is calculated at each step, making it possible to compare the methods objectively, step by step. The assumption is that the better the reconstruction method, the higher the SSIM value associated with the reconstructed image. We also use the well-known PSNR metric to compare the image reconstruction process step by step. The aim is to determine whether SSIM and PSNR give results that are consistent with each other. Both metrics use the synthetic images (the same ones used to generate the input signal) as a reference for comparison. The rest of this work is organized as follows. In Section 2, the MAP model is developed, resulting in the objective function. In Section 3, the optimization technique for the objective function is developed. In Section 4, experiments are performed, and the results are presented and analyzed. Finally, in Section 5, we present the conclusions and final considerations.

2. Modeling the Objective Function

Most modern CT scanners use energy integration detectors whose photon counts are proportional to the total energy incident on them. Energy, in turn, is proportional to the number of X-ray photons that affect the detectors (sensors) of the tomograph. The denser the region traversed by X-ray photons, the lower the count of detected photons over integral line , , where is the maximum number of projections acquired by the CT scanner. This is known as the Beer–Lambert law, defined as

where is the number of detected photons when the beam finds no obstacle, and the exponential term is the integral of all linear attenuation coefficients on the line (with being 2-D coordinates following ), which is the path of the beam. Equation (2) assumes that every X-ray emission has the same energy level, meaning that the process is monoenergetic. This approach is adopted in many works such as [9,10,11,15,16,27,28,33,34,35], with the advantage of avoiding the beam hardening problem. Moreover, the monoenergetic approach leads to a more tractable mathematical model. However, the emission of X-rays is, by nature, polyenergetic. As a consequence, the same object reacts differently when subjected to X-rays of different energy levels, generating unwanted artifacts in the reconstructed image. These defects can be avoided, but with the adoption of complex models as in [12,13,36] and at a high computational cost. This topic is complex and still subject to change because CT scanners using monoenergetic X-ray sources are beginning to emerge [37].
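For reference, the monoenergetic Beer–Lambert relation described at the start of this section is commonly written as shown below. The symbol names ($I_i$, $I_0$, $\mu$, $L_i$, $\mathbf{r}$, $M$) are assumptions chosen for this sketch and may differ from the notation of Equation (2).

```latex
% Detected photon count along the i-th line L_i (monoenergetic case)
I_i = I_0 \exp\!\left( -\int_{L_i} \mu(\mathbf{r}) \, \mathrm{d}l \right),
\qquad i = 1, \dots, M

% Taking the negative logarithm gives the line integral used as the projection
p_i = -\ln\!\left( \frac{I_i}{I_0} \right) = \int_{L_i} \mu(\mathbf{r}) \, \mathrm{d}l
```

The second line shows why reconstruction can work with line integrals of the attenuation coefficient: the exponential is removed by a logarithm of the ratio between detected and unobstructed counts.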

To make the purpose of this work clearer, we first present a baseline solution that uses SART reconstruction regularized via TV minimization of the DGT function (SART+DGT), highlighting the relevant parts, and then present our approach. This ordering makes the value of the contribution clear. The proposed method is abbreviated as SART+BEP+DGT.

2.1. Objective Function Modeling Using Soft-threshold Filtering for CT Image Reconstruction

This method consists of modeling an objective function that adds an $\ell_2$ norm fidelity function to a DGT prior function regularized by an $\ell_1$ norm with TV minimization. Optimization of the objective function (Section 3) is performed using alternating minimization. The fidelity term is minimized by SART. The regularization (prior minimization) is performed by constructing a pseudo-inverse of the DGT and adapting a soft-threshold filtering algorithm whose convergence and efficiency have been theoretically proven in [38].

The key aspect of the modeling process is that reconstruction estimates the discrete attenuation, for each j pixel of the image, with . Thus, the integral over the line, , can be discretized as

where is the matrix representing the system geometry, is the linear attenuation coefficient vector with representing the j-th pixel, and the symbol T is the transpose of the matrix. In this model, every is defined as the normalized length of the intersection between the i-th projection beam and j-th rectangular pixel centered in . The emission of X-ray photons is a rare event, so a Poisson distribution is usually adopted to describe the probabilistic model, expressed as

where is the projection (measurement) along the i-th X-ray beam, and is the expected value. Because the X-ray beams are independent from each other, taking into account Equation (4), the joint probability of given , and observing countable events may be expressed as

Using the MAP approach, as in [9,15,16,27], we have the objective function as follows:

where is the fidelity term, with being an estimate of , and (multiplied by a factor ignored in Equation (6)) is the regularization term with norm based on DGT, defined as

where , , , with W and H being, respectively, the width and height of the matrix representing the image with pixels. By definition, TV is the sum of DGT for all pixels of the image:

with . Thus, introducing the auxiliary variable and applying the transformation

with being a diagonal matrix, the objective function in Equation (6) can be rewritten as

where is a positive adjustment parameter to balance the terms of fidelity and TV and is usually set to 1 [12,16]. The ultimate goal is to minimize the objective function , obtaining , as shown below:

where the fidelity term, , represented both in the expanded version, as in Equation (6), and in the compact version, as in Equation (10), is shown below as

and is the restriction that drives the solution according to certain criteria ( norm in this case). The optimization of , although simple, is an important concept and can be defined as follows:

Inspired by the model in Equation (10), we propose in what follows a method for CT image reconstruction using adaptive soft-threshold filtering, which means, in brief, that the proposed method is intended to balance edge preservation and noise mitigation.
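The DGT and TV quantities used in this subsection can be illustrated with a short sketch. It assumes the common isotropic form, in which the DGT at a pixel is the Euclidean norm of the differences to its lower and right neighbors, and it treats differences across the image border as zero; both choices, and the function names, are assumptions that may differ from Equations (7) and (8).

```python
import math


def dgt(image):
    """Discrete gradient transform of a 2-D image (list of rows).

    Assumed form: sqrt((f[i][j]-f[i+1][j])**2 + (f[i][j]-f[i][j+1])**2),
    with border differences set to zero.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            down = image[i + 1][j] if i + 1 < height else image[i][j]
            right = image[i][j + 1] if j + 1 < width else image[i][j]
            out[i][j] = math.hypot(image[i][j] - down, image[i][j] - right)
    return out


def total_variation(image):
    """TV as the sum of the DGT over all pixels."""
    return sum(sum(row) for row in dgt(image))
```

A constant image has zero TV, while a single bright pixel in one corner of a 2x2 image contributes one unit from each of its two neighbors, giving a TV of 2.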

2.2. Objective Function Modeling by Using Bilateral Edge Preservation for CT Image Reconstruction

Regularization using the DGT ($\ell_1$ norm) works well for the CT reconstruction problem because it searches among the solutions of the fidelity ($\ell_2$ norm) optimization for the one with the lowest TV. However, it is well known that regularization based on the $\ell_1$ norm often introduces artificial edges in smooth transition areas. Moreover, a good regularization strategy must simultaneously perform noise suppression and edge preservation. With this motivation, Charbonnier et al. [24] proposed the bilateral edge-preserving (BEP) regularization function, inspired by the bilateral total variation (BTV) regularization technique [39]. BTV regularization is defined by

where q is a positive number, and are displacements by l and m pixels in the horizontal and vertical directions, respectively, is the image in reconstruction/regularization, and , , is applied to create a spatial decay effect for the sum of terms in BTV regularization. The BEP regularization uses the same principle as BTV but with an adaptive norm (instead of the $\ell_1$ norm) defined by

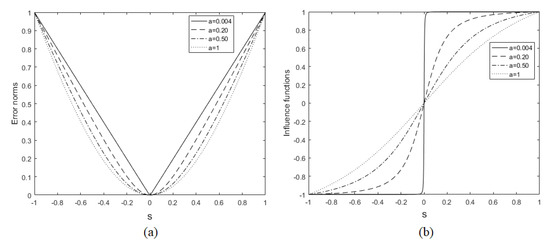

where a is a positive value and s is the difference that one wants to minimize. This function was initially proposed by [24] to preserve edges in the image regularization process. The parameter a specifies the error value at which the behavior changes from linear (growing with the error) to constant (saturated, regardless of the error). The same adaptive norm definition is also used in super-resolution problems [32]. The function is an M-estimator, since it corresponds to maximum likelihood (ML) type estimation [40], and its influence function is given by

The influence function indicates how much a particular measure contributes to the solution [32]. Figure 2a illustrates the behavior of the error norm function, and Figure 2b shows its influence function. It can be observed that as parameter a evolves from 0 to 1, the function changes its behavior from the $\ell_1$ to the $\ell_2$ norm. Thus, as mentioned by [31] and [32], Equation (15) behaves adaptively with respect to the norm that it implements.
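The adaptive behavior described above can be explored numerically. The sketch below assumes the Charbonnier-type form $\rho(s, a) = a\sqrt{a^2 + s^2} - a^2$, whose derivative is $a s / \sqrt{a^2 + s^2}$; this form appears in the edge-preserving regularization literature, but it is an assumption here and may differ in scaling from Equations (15) and (16). The function names are illustrative.

```python
import math


def bep_rho(s, a):
    """Assumed adaptive error norm: a*sqrt(a^2 + s^2) - a^2.

    Roughly quadratic for |s| much smaller than a (l2-like) and roughly
    linear, a*|s|, for |s| much larger than a (l1-like).
    """
    return a * math.sqrt(a * a + s * s) - a * a


def bep_influence(s, a):
    """Derivative of bep_rho with respect to s.

    Grows linearly for small |s| and saturates at +/- a for large |s|.
    """
    return a * s / math.sqrt(a * a + s * s)


# Influence of a small and a large difference for a hypothetical a = 0.5.
small = bep_influence(0.01, 0.5)   # close to 0.01 (linear regime)
large = bep_influence(100.0, 0.5)  # close to 0.5 (saturated regime)
```

The saturation of the influence function is what limits the weight given to large differences, so strong edges are not penalized in proportion to their magnitude.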

Therefore, combining Equations (14) and (15) for the particular case of tomography reconstruction, we propose an adaptive operator defined as

where q, , , and are the same as in Equation (14); , defined in Equation (13), is the estimated image obtained in the i-th iteration by minimization of the objective function in Equation (12); and is the j-th pixel of image , with . It is noteworthy that the term imposes an regularization norm, , on the image . Thus, we can rewrite the objective function of Equation (10), , so that a new regularization term, , is introduced between the norm minimization and TV minimization. As a consequence, the objective function proposed in this paper incorporates adaptive regularization to the objective function, and defining an auxiliary variable , we have

where is a positive adjustment parameter to balance the terms of fidelity and adaptive regularization. The other parameters are the same as in Equation (10), and , , is the norm BEP method imposed on the regularization process.

3. Objective Function Optimization

The alternating minimization technique makes it possible to optimize two or more terms of an objective function together by minimizing them in turn. For the proposed method, three stages are necessary: (1) minimizing the fidelity term with SART, (2) applying the gradient descent (GD) method to the result of the first stage, using the BEP function as a regularization term, and (3) applying DGT regularization to the previous result, minimizing TV with soft-threshold filtering. The three stages are repeated iteratively until a satisfactory result is obtained or a certain number of steps is reached. Each of the three stages is presented in sequence below.

3.1. First Stage: Minimization of the Fidelity Term with SART

The first step is to solve the optimization problem described by Equation (13). A popular solution was proposed by Ge and Ming [33], and it can be computationally expressed by the iterative equation

where , , is the i-th line of , k is the iteration index, and is an arbitrary relaxation parameter [15,41]. To simplify the notation, one can establish as a diagonal matrix with , and also as a diagonal matrix with . Then, Equation (19) can be rewritten as

where the term is usually constant and equal to 1. The method described in Equation (19) is commonly known as SART. This method produces a relatively noisy reconstruction, as can be observed in Section 4. We reinforce here that is the input of the second stage in the reconstruction process.
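A single SART update can be sketched as follows, using plain Python lists for a small dense system. The sketch assumes the standard form in which each residual is normalized by the row sum of the system matrix and each pixel update is normalized by the column sum; the function name `sart_step` and the argument names are illustrative, and a practical implementation would use sparse matrices.

```python
def sart_step(A, x, y, relax=1.0):
    """One SART iteration for a nonnegative system matrix A (list of rows).

    x is the current image estimate, y the measured projections, and
    relax the relaxation parameter (1.0 by default).
    """
    num_rows, num_cols = len(A), len(x)
    row_sums = [sum(row) for row in A]
    col_sums = [sum(A[i][j] for i in range(num_rows)) for j in range(num_cols)]

    # Residual of each projection, normalized by its row sum.
    residual = []
    for i in range(num_rows):
        forward = sum(A[i][j] * x[j] for j in range(num_cols))
        residual.append((y[i] - forward) / row_sums[i] if row_sums[i] > 0 else 0.0)

    # Backproject the residuals, normalized by the column sums.
    updated = []
    for j in range(num_cols):
        if col_sums[j] > 0:
            back = sum(A[i][j] * residual[i] for i in range(num_rows))
            updated.append(x[j] + relax * back / col_sums[j])
        else:
            updated.append(x[j])
    return updated


# Tiny consistent system with known solution [1, 2, 3].
A = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [1.0, 0.0, 1.0]]
y = [3.0, 5.0, 4.0]
x = [0.0, 0.0, 0.0]
for _ in range(200):
    x = sart_step(A, x, y)
```

On this toy system the iterates approach the exact solution; with noisy, underdetermined CT data the same update yields the relatively noisy estimate that the later stages regularize.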

3.2. Second Stage: Bilateral Edge-Preserving with a Gradient Descent Method

In the second stage, the goal is to solve the optimization problem defined by

where is a parameter that weights the contribution of the constraint (Equation (17)). The gradient descent method can be applied to solve this problem as

resulting in an optimization problem written as follows (see Appendix A for details):

where , as defined in Equation (13), is the result of first-stage minimization; is as in Equation (15) but with ; and is the same as in Equation (15) but with a constant c instead of a constant a and , where and are the same as in Equation (14). A computable matrix form was derived from Equation (23), and the result is shown below as

where is an adjustment parameter to balance the k-th value of with the gradient descent contribution, ; is also an adjustment parameter, but it balances terms inside gradient descent; ⊙ is the element-by-element product of two matrices of compatible dimensions; and I is the identity matrix. The matrix is the difference between and its version shifted by , and the operators and are defined, respectively, as

and are influence functions (as defined in Equation (16)) resulting from the application of the gradient descent method.

It is important to clarify that in Equation (22), appears with the superscript instead of k because the previous result of the gradient descent, , feeds the calculation of the current value, , and this is the manner in which gradient descent works. In contrast, Equation (24) shows with superscript k (as in ) rather than because is obtained in the same iteration step, k, as , but in a previous stage denoted by the upper mark “tilde” , while the current stage is denoted by the upper mark “hat” .

3.3. Third Stage: TV Minimization by Soft-threshold Filtering

The third stage (TV optimization, ) is to solve the problem , where D is not invertible, as proposed by Yu and Wang [15] and shown below:

with

where is a pre-established threshold; , with and , with W and H being the width and height of the reconstructed image, respectively. is the DGT matrix as defined in Equation (7).

As explained in detail by [15] and observing Equation (27), when , the values of , , and must be adjusted so that . This means that if neighboring pixels in the reconstructed image are very close in value, it is likely that they have equal (or very close) values in the real image. Then, the method smooths the region around the pixel so that they look alike. Alternately, when , the goal is to reduce and but not cancel them. In this case, the method recognizes the differences between values of neighboring pixels as too significant to be totally eliminated. Instead, the differences are just softened.
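The two regimes described above (cancel small differences, shrink large ones) are the behavior of the standard scalar soft-thresholding operator, sketched below. This is the generic operator only; it does not reproduce the pseudo-inverse construction of [15], and the function names are illustrative. The helper that sets the threshold to the mean magnitude reflects one possible reading of using the average of the DGT as the threshold.

```python
def soft_threshold(value, omega):
    """Shrink `value` toward zero by `omega`; magnitudes up to omega become 0."""
    if omega < 0:
        raise ValueError("threshold must be nonnegative")
    magnitude = abs(value) - omega
    if magnitude <= 0:
        return 0.0
    return magnitude if value > 0 else -magnitude


def mean_magnitude(values):
    """Mean absolute value, usable as a data-driven threshold."""
    return sum(abs(v) for v in values) / len(values)


# Hypothetical neighbor differences: two small ones and one large one.
differences = [0.1, -0.2, 1.5]
omega = mean_magnitude(differences)  # 0.6
filtered = [soft_threshold(d, omega) for d in differences]
```

With this threshold the two small differences are removed entirely, while the large one is reduced but preserved, which matches the smoothing and softening behavior described in the text.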

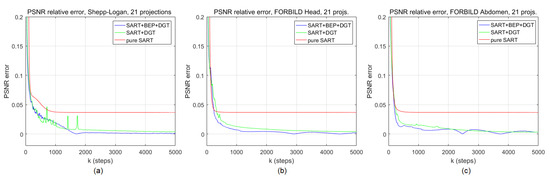

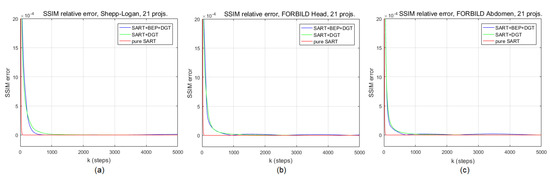

3.4. Convergence and Convexity Considerations

The model proposed in this work, represented by Equation (18), gives an important initial gain in terms of PSNR to the reconstruction of CT images, as reported in Section 4. However, it is also important to know how this model behaves over a long run, so its convergence must be investigated. We do this empirically by comparing the PSNR values between the iterations k and , with . Figure 3 presents a chart for each image used in the simulations of Section 4, corresponding to the graphs and images of Figures 5 and 6 (Shepp–Logan head phantom), Figures 7 and 8 (FORBILD head phantom), and Figures 9 and 10 (FORBILD abdomen phantom). In all cases shown in Figure 3, the SART+BEP+DGT method is consistent with respect to convergence and presents better error reduction in terms of the PSNR metric. This simulation uses the same initial values defined in Section 4. The same holds when we graphically analyze the convergence of the proposed method using the SSIM metric, as can be seen in Figure 4.

Figure 3. Peak signal-to-noise ratio (PSNR) difference along k iterations for the pure simultaneous algebraic reconstruction technique (SART), SART+discrete gradient transform (DGT), and SART+bilateral edge-preserving (BEP)+DGT reconstructions for (a) Shepp–Logan head phantom, (b) FORBILD head phantom, and (c) FORBILD abdomen phantom.

Figure 4. Structural similarity (SSIM) difference along k iterations for pure SART, SART+DGT, and SART+BEP+DGT reconstructions for (a) Shepp–Logan head phantom, (b) FORBILD head phantom, and (c) FORBILD abdomen phantom.

It is noteworthy that, according to Charbonnier et al. [24], the function described in Equation (15) is convex, and therefore, it would be possible to apply an iTV-style minimization procedure [42] to assure data consistency and regularization term improvement in each iteration step.

4. Experiments and Results

In the experiments, we used the synthetic images presented in Section 1.2, that is, Shepp–Logan head phantom, FORBILD head phantom, and FORBILD abdomen phantom. The signal from the CT equipment is simulated according to the model in Equation (1) addressing the low dosage scenario, considering a limited number of projections (meaning a limited number of scanning angles). On the image reconstruction side, we use the model , which, as discussed in Section 1.1, denotes an inverse and ill-posed problem, where () is the matrix that describes the capture system, x () is the phantom represented lexicographically, and e () is the error, whose features were presented in Section 1.2. It is worth remembering that x is the image we intend to reconstruct from the noise signal y and, in the modeling process presented in Section 2 and Section 3, we use the variable to represent it. By improving the system description, is the number of projections, where is the number of projection lines (i.e., the number of detectors) for each scan angle, and is the total number of scan angles. is the parameter whose value should be changed when the intention is to set a new dosage value, i.e., when we want to define a different (lower) number of projections, . The image has dimensions , where , (positive natural). In this work, we use , and therefore, , and , with detectors. Thus, has dimensions , which are compatible with the dimensions of y and , respectively, i.e., and . It is important to note that the dimension of A (and y) is related to the number of scan angles, , and this number of angles is what determines if the signal is of low (or regular) dosage, as discussed in Section 1, according to the ALARA principle. For a low dosage, we consider subsets of , i.e., equally spaced sets of integer values between 0 and 179 degrees named . For example, would be a possible subset, in which the angles are equally spaced at 44 degrees. 
Using this notation, is equivalent to , meaning that there are scan angles equally spaced by 1 degree (which represents a regular dosage). In the experiments with low dosage, the sets , with g in , will be used. was obtained for a parallel architecture scanner.
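The construction of the reduced angle sets and the resulting size of the system can be sketched as below. The spacing rule used here (integer step `180 // g`, starting at 0 degrees) is an assumption made for illustration and may not match the exact rule behind the example given in the text; the function names are hypothetical.

```python
def angle_subset(num_angles, span=180):
    """Return `num_angles` equally spaced integer angles in [0, span - 1].

    Assumed rule: step = span // num_angles, starting from 0 degrees.
    """
    if not 1 <= num_angles <= span:
        raise ValueError("num_angles must be between 1 and span")
    step = span // num_angles
    return [k * step for k in range(num_angles)]


def num_projection_rows(num_detectors, num_angles):
    """Rows of the system matrix: detectors per angle times scan angles."""
    return num_detectors * num_angles


regular = angle_subset(180)   # 0, 1, ..., 179 (regular dosage)
low_15 = angle_subset(15)     # 0, 12, ..., 168
low_30 = angle_subset(30)     # 0, 6, ..., 174
```

Because the number of rows scales linearly with the number of angles, going from 180 to 15 angles shrinks the measurement vector by a factor of 12 while the number of unknown pixels stays the same, which is what makes the low-dosage problem strongly underdetermined.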

By observing the objective function optimization process detailed in Section 3, and in alignment with the proposal in Section 1.3, we design a test framework that involves (1) the execution of the first stage (Section 3.1) alternating with the third stage (Section 3.3), which we will call here SART+DGT, and (2) execution of the first, second (Section 3.2), and third stages alternately and in this sequence, named SART+BEP+DGT. Simulations are shown in Table 1.

Table 1. Comparison of computed tomography (CT) reconstruction methods A (SART+BEP+DGT), B (SART+DGT), and C (pure SART) for the Shepp–Logan (SL), FORBILD abdomen (FA), and FORBILD head (FH) phantom images using PSNR and SSIM metrics for 15 and 30 projections and signal-to-noise ratios (SNR) of 32, 46, and 60 dB. Each result is the mean of 101 executions of a particular testing case. The values in bold represent the highest value among methods A, B, and C for each metric (PSNR or SSIM), number of projections (15 or 30), and number of iterations (350, 700, or 1000).

For both arrangements (SART+DGT and SART+BEP+DGT), the first stage is mandatory because it is the core of the reconstruction process: it performs the optimization of the fidelity term in Equation (13). Because this stage is always present, we also show simulations with SART only, which serve as a baseline for assessing how much the constraint terms actually contribute to the reconstruction process. The subsequent stages apply the constraint terms described in Section 3.2 and Section 3.3, that is, Equations (24) and (26), respectively. For each of these test arrangements, it was arbitrarily established that the iterator, k, ranges from 1 to L, with , approximately. Because Gaussian noise and the Poisson process are random, each experiment is performed a considerable number of times, arbitrarily set to 101 executions per experiment. Each execution produces its own SSIM and PSNR values, and each result in Table 1 (for a particular additive Gaussian noise level and number of projections) is the mean of these 101 values. We do not average pixels of the reconstructed images, only the SSIM and PSNR values of the 101 executions. Averaging over a considerable number of executions is motivated by the central limit theorem, which states that the arithmetic mean of a sufficiently large number of independent, identically distributed random variables is approximately normally distributed, regardless of the underlying distribution, provided that the variables have finite mean and variance.

Low Dosage Tests and Results

As recommended by the ALARA principle, one way to reduce the total amount of radiation applied to a patient is to decrease the number of projections in the acquisition of the CT signal. According to the signal model proposed in Equation (1), we consider the projections as individually influenced by additive Gaussian noise, and the low-dosage signal is obtained by reducing the number of scanning angles. In the batch of tests with low-dosage projections, we consider the sets of angles , with g in , where g is the number of angles in (remember that in a regular dosage, we have 180 angles, from ). In our model, these values represent a reduced amount of photon emission, which can be understood as a low radiation dosage. All low-dosage tests presented in this section are performed with signal-to-noise ratio (SNR) , 46, and 60 dB. The SART stage used , according to [15] and [41]. The DGT stage maintained , as discussed in Section 2.1, and used as a threshold, , the average of the DGT for each k iteration. The BEP stage used , (Section 3.2), , , , and (Section 2.2). All parameters were empirically set.
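The low-dosage setup can be illustrated with a short Python sketch. It rests on two assumptions that the text does not spell out: the g scanning angles are taken as equally spaced over [0°, 180°), and the SNR is defined as the ratio of mean signal power to noise variance. The helper names and the synthetic `clean` projection data are hypothetical.

```python
import math
import random

def equally_spaced_angles(g, span_deg=180.0):
    """Return g scanning angles in degrees, equally spaced over [0, span_deg) (assumed layout)."""
    return [i * span_deg / g for i in range(g)]

def add_gaussian_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise whose variance gives the requested SNR in dB (assumed definition)."""
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]

def measured_snr_db(clean, noisy):
    """Empirical SNR in dB between a clean signal and its noisy version."""
    signal_power = sum(s * s for s in clean) / len(clean)
    noise_power = sum((n - s) ** 2 for s, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)

rng = random.Random(0)
angles = equally_spaced_angles(15)
# Hypothetical projection samples standing in for a sinogram.
clean = [math.sin(0.01 * i) + 2.0 for i in range(20000)]
noisy = add_gaussian_noise(clean, snr_db=46.0, rng=rng)
print(angles[:3], round(measured_snr_db(clean, noisy), 1))
```

With 15 angles the assumed spacing is 12°, and the measured SNR of the noisy samples should sit close to the requested 46 dB.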

A batch of experiments using the set , with g in , of projections for the SART+BEP+DGT (method A), SART+DGT (method B), and pure SART (method C) methods is shown in Table 1 for the PSNR and SSIM metrics, using the FORBILD abdomen phantom (FA), FORBILD head phantom (FH), and Shepp–Logan head phantom (SL) synthetic images. For the PSNR metric with 15 and 30 projections, it is observed that for steps, the proposed method presents a higher PSNR value in general; the exceptions are the FA and FH images with an SNR of 32 dB. However, for and 1000 steps and 30 projections, the results favor the SART+DGT method according to the PSNR metric. For 15 projections, results for steps favor the proposed method in most tests performed with the PSNR metric. For the SSIM metric, the proposed method yields higher values than the SART+DGT method in many of the cases tested.

For the reconstruction of the Shepp–Logan head phantom with 15 projection angles, Figure 5 shows the evolution of the SSIM and PSNR values for the SART+BEP+DGT (proposed), SART+DGT, and pure SART methods for SNR = 60 dB. In this particular experiment, marker 1 in Figure 5a indicates the highest SSIM value, , reached by the proposed method and corresponding to the highest PSNR value, , indicated by marker 1 in Figure 5b. Marker 2 shows in Figure 5a,b, respectively, the SSIM ( ) and PSNR ( ) values obtained in step . Marker 3 in Figure 5b highlights the point at which the SART+DGT method reaches the same PSNR value as the proposed method, in step , approximately. The graphs in Figure 5 agree with Table 1.

Figure 5.

Evolution of (a) SSIM and (b) PSNR values for the reconstruction of the Shepp–Logan phantom with 15 projections for pure SART, SART+DGT, and SART+BEP+DGT.
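Markers 1 and 3 in plots of this kind can be located programmatically once the per-step metric curves are stored. The sketch below is only an illustration: the function names are hypothetical, steps are assumed to be 1-indexed, and the two short PSNR curves are made-up numbers, not values from the experiments.

```python
def peak_step(curve):
    """Return (step, value) of the maximum of a metric curve; steps are 1-indexed."""
    best_index = max(range(len(curve)), key=lambda i: curve[i])
    return best_index + 1, curve[best_index]

def first_step_reaching(curve, target):
    """Return the first 1-indexed step at which the curve reaches the target, or None."""
    for step, value in enumerate(curve, start=1):
        if value >= target:
            return step
    return None

# Hypothetical PSNR curves in dB (illustrative numbers only).
proposed = [20.0, 24.0, 26.0, 25.5, 25.0, 24.8]   # peaks early, then declines
baseline = [18.0, 21.0, 23.0, 25.0, 26.5, 27.0]   # rises more slowly

marker1_step, marker1_value = peak_step(proposed)              # analogue of marker 1
marker3_step = first_step_reaching(baseline, marker1_value)    # analogue of marker 3
print(marker1_step, marker1_value, marker3_step)
```

In this toy example the first curve peaks at step 3 and the second curve reaches that value at step 5, which is the kind of gap the markers are meant to highlight.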

Looking closely at Figure 6b,c, it is possible to note the presence of random noise (indicated by the white arrows) that manifests as small white dots in Figure 6c, while in Figure 6b this phenomenon is not easily perceived. This is because the BEP regularization used in the proposed method (Figure 6b) tends to eliminate noise faster. However, the reconstruction performed by the SART+DGT method produces a more homogeneous image, as shown in Figure 6c. This is also related to the elimination of random noise by the introduction of BEP regularization in the reconstruction process. Figure 6d presents the result of the image reconstruction using the pure SART method.

Figure 6.

(a) The original Shepp–Logan head phantom and particular reconstructions for 15 projections (b) from SART+BEP+DGT with steps, PSNR: , SSIM: , (c) from SART+DGT with steps, PSNR: , SSIM: , and (d) from pure SART with steps, PSNR: , SSIM: . All with SNR = 60 dB.

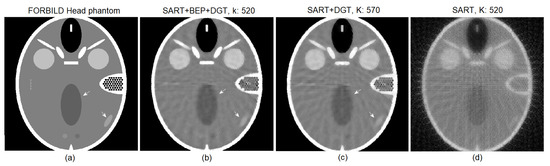

For the reconstruction of the FORBILD head phantom with 30 projections with SNR = 46 dB, shown in Figure 7, we observe that the best PSNR () obtained by the proposed method in step (Figure 7b, marker 1) is reached by the SART+DGT method in step (marker 3). Marker 2 shows, in Figure 7a,b, respectively, the SSIM () and PSNR () values obtained in step . The SSIM values remain higher for the proposed method, according to the graph of Figure 7a. We show the evolution of the pure SART method in terms of SSIM and PSNR for comparison purposes only. Observing the reconstructions shown in Figure 8b (with steps, PSNR , SSIM ) and Figure 8c (with steps, PSNR , SSIM ), the results are low in quality due to the number of projections, and the images are practically indistinguishable, except for the better contrast level of Figure 8b. The pure SART reconstruction is shown in Figure 8d.

Figure 7.

Evolution of (a) SSIM and (b) PSNR values for a particular reconstruction of the FORBILD head phantom with 30 projections using the pure SART, SART+DGT, and SART+BEP+DGT methods.

Figure 8.

(a) The original FORBILD head phantom and particular reconstructions for 30 projections (b) from SART+BEP+DGT with steps, PSNR: , SSIM: , (c) from SART+DGT with steps, PSNR: , SSIM: , and (d) from pure SART with steps, PSNR: , SSIM: . All with SNR = 46 dB.

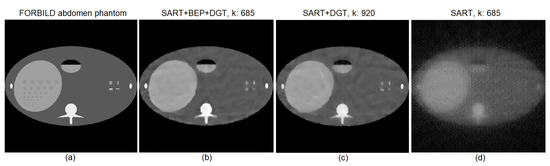

Figure 9 shows the evolution of the SSIM and PSNR values for the reconstruction with 15 angles of projection with SNR = 60 dB for the FORBILD abdomen phantom using the SART+BEP+DGT, SART+DGT, and pure SART methods. In this particular experiment, marker 1 in Figure 9a indicates the highest SSIM value, , reached by the proposed method and corresponding to the highest PSNR value, , indicated by marker 1 in Figure 9b, both obtained in step . Marker 2 shows, in Figure 9a,b, respectively, the SSIM () and PSNR () values obtained in step . Marker 3 in Figure 9b highlights the point at which the SART+DGT method reaches the same PSNR value as the proposed method, in step , approximately. The image of Figure 10b shows a tendency to eliminate the lines and bands characteristic of reconstruction with few scanning angles.

Figure 9.

Evolution of (a) SSIM and (b) PSNR values for a particular reconstruction of the FORBILD abdomen phantom with 15 projections for pure SART, SART+DGT, and SART+BEP+DGT methods.

Figure 10.

(a) The original FORBILD abdomen phantom and particular reconstructions for 15 projections (b) from SART+BEP+DGT with steps, PSNR: , SSIM: , (c) from SART+DGT with steps, PSNR: , SSIM: , and (d) pure SART with steps, PSNR: , SSIM: .

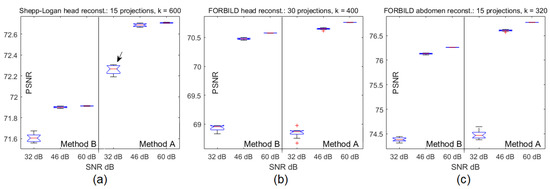

Each of the examples of reconstructed images shown in Figure 6, Figure 8 and Figure 10, and their SSIM and PSNR graphs in Figure 5, Figure 7, and Figure 9, respectively, results from a single execution picked from a set of 101 executions. Figure 11 shows box plot graphs for various situations, combining the image to be reconstructed, the SNR value in dB, the number of projections, the method used, and the step k of the execution. As an example, the reconstruction of Shepp–Logan with 15 projections, method A, and SNR = 32 dB, using the column (step) of the processing matrix, is highlighted in the graph of Figure 11a. Table 2 shows the details of each element of the box plot graphs in Figure 11, and it is straightforward to note that the standard deviation (Table 2) increases with noise (Figure 11).

Figure 11.

(a) Box plot graph for the Shepp–Logan head reconstruction with 15 projections and , (b) box plot graph for the FORBILD head phantom with 30 projections and , and (c) box plot graph for the FORBILD abdomen phantom with 15 projections and .

Table 2.

Mean, median, maximum, minimum, standard deviation, and number of outliers of box plot element in Figure 11a.
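The quantities listed for each box plot element can be computed from the per-execution metric values, as in the following Python sketch. The outlier rule (points beyond 1.5 times the interquartile range from the quartiles) is the common box plot convention and is an assumption here, since the text does not state which rule was used; the function name and the sample values are hypothetical.

```python
import statistics

def box_plot_summary(values, whisker=1.5):
    """Summarize one box plot element from per-execution metric values."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - whisker * iqr
    upper_fence = q3 + whisker * iqr
    # Assumed convention: values outside the fences count as outliers.
    outliers = [v for v in values if v < lower_fence or v > upper_fence]
    return {
        "mean": statistics.mean(values),
        "median": median,
        "maximum": max(values),
        "minimum": min(values),
        "std": statistics.stdev(values),
        "outliers": len(outliers),
    }

# Hypothetical PSNR values (dB) from a handful of executions.
psnr_runs = [24.1, 24.3, 24.2, 24.4, 24.0, 24.3, 24.2, 27.9]
summary = box_plot_summary(psnr_runs)
print(summary)
```

In the study each element would be summarized over the 101 executions of one testing case rather than over the eight illustrative values used here.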

5. Conclusions and Final Comments

The proposed method, composed of the steps (1) SART reconstruction, (2) BEP adaptive minimization, and (3) TV minimization via DGT, synthesized in Equation (18), presents, in the first steps of the processing, a more pronounced reduction in the noise level of the reconstructed image for both the SSIM and PSNR metrics, as can be seen in Section 4. It is important to emphasize that the tests were done with 15 and 30 projections, as shown in Table 1. At some point, the proposed method reaches its maximum PSNR value, and at this point the images are reasonably intelligible. From this point forward, the SART+DGT method gives higher values of PSNR and, consequently, a less noisy reconstruction. Even after the proposed method has passed its PSNR peak, its SSIM value remains higher than that of the SART+DGT method in many of the cases studied. Higher SSIM values generally correspond to images with better contrast, which is very important for artifact viewing and contour distinction in the reconstructed image. Structural similarity takes morphological features into account when evaluating reconstruction results and for this reason agrees better with human perception than the PSNR metric does. However, the main disadvantage of this method is that in a practical application, we cannot know the maximum PSNR since we do not have an original image for comparison. On the other hand, the advantage of the proposed method is that it delivers results earlier in the reconstruction process.
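To illustrate why SSIM responds to structure rather than only to pixel-wise error, the sketch below evaluates the SSIM formula of Wang et al. over a single window covering the whole image. This is a simplification made for the example: the standard metric averages the same expression over local windows, and the function name, the flat-list representation, and the dynamic range of 1.0 are assumptions, not details taken from this work.

```python
def global_ssim(x, y, dynamic_range=1.0):
    """Single-window SSIM between two equally sized flat pixel lists (simplified form)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    # Stabilizing constants with the usual choices K1 = 0.01 and K2 = 0.03.
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

ramp = [i / 9 for i in range(10)]       # hypothetical intensity ramp
inverted = [1.0 - v for v in ramp]      # same values, structure reversed
print(global_ssim(ramp, ramp), global_ssim(ramp, inverted))
```

An image compared with itself scores 1, while the inverted ramp, whose covariance with the original is negative, scores below zero even though both share the same mean and variance.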

The use of BEP minimization soon after the SART reconstruction, as explained in Section 3, is intended to promote image noise reduction in the reconstruction process, delivering a less noisy image to the later stage (of minimization by TV using DGT). In fact, the noise reduction occurs up to a certain point, and although it is not possible in a practical application to define the ideal stopping point (maximum PSNR), it may be possible to estimate this point based on the type of image and the number of projections used.

Author Contributions

The contributions of the authors can be described as follows: conceptualization, T.T.W. and E.O.T.S.; formal analysis, T.T.W. and E.O.T.S.; funding acquisition, T.T.W.; investigation, T.T.W.; methodology, T.T.W. and E.O.T.S.; project administration, E.O.T.S.; software, T.T.W.; supervision, E.O.T.S.; validation, T.T.W. and E.O.T.S.; visualization, T.T.W.; writing–original draft, T.T.W.; writing–review and editing, T.T.W. and E.O.T.S.

Acknowledgments

Thanks to PPGEE—Programa de Pós-graduação em Engenharia Elétrica—UFES for the support provided during the preparation and submission of this work.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ALARA | As-low-as-reasonably-achievable |
| AMP | Approximate message passing |
| ART | Algebraic reconstruction technique |
| BEP | Bilateral edge preserving |
| CT | Computer tomography |
| DCT-GAMP | Denoising CT generalized approximate message passing |
| DGT | Discrete gradient transform |
| FBP | Filtered backprojection |
| FFT | Fast Fourier transform |
| MAP | Maximum a posteriori |
| MMSE | Minimum mean square error |
| OS-SART | Ordered subset simultaneous algebraic reconstruction technique |
| PSNR | Peak signal-to-noise ratio |
| TV | Total variation |
| SART | Simultaneous algebraic reconstruction technique |
| SSIM | Structural similarity |
| VW-SART | Variable weighted simultaneous algebraic reconstruction technique |

Appendix A

With Equation (22) in mind and substituting Equation (15) into the core (within the braces) of Equation (23), we have

and performing the derivative in relation to results in

Assuming the same considerations and notation presented in Section 3.2, Equation (A1) can be rewritten as

and Equation (23) can be written in compact form as

and in its expanded form as

References

- Brody, A.S.; Frush, D.P.; Huda, W.; Brent, R.L. Radiation Risk to Children From Computed Tomography. Am. Acad. Pediatrics 2007, 120, 677–682.

- Shepp, L.A.; Logan, B.F. The Fourier reconstruction of a head section. IEEE Trans. Nucl. Sci. 1973, NS-21, 21–43.

- Horn, B.K.P. Fan-beam reconstruction methods. Proc. IEEE 1979, 67, 1616–1623.

- Hsieh, J. Computed Tomography—Principles, Design, Artifacts and Recent Advances. In Computed Tomography—Principles, Design, Artifacts and Recent Advances, 2nd ed.; Wiley Inter-science: Bellingham, WA, USA, 2009; Chapter 2; pp. 29–30.

- Hsieh, J. Computed Tomography—Principles, Design, Artifacts and Recent Advances, 2nd ed.; Wiley Inter-science: Bellingham, WA, USA, 2009; Chapter 3; pp. 102–112.

- Lange, K.; Bahn, M.; Little, R. A Theoretical Study of Some Maximum Likelihood Algorithms for Emission and Transmission Tomography. IEEE Trans. Med. Imaging 1987, 6, 106–114.

- Snyder, D.L.; Helstrom, C.W.; Lanterman, A.D.; Faisal, M.; White, R.L. Compensation for readout noise in CCD images. J. Opt. Soc. Am. 1995, 12, 272–283.

- Whiting, B.R. Signal statistics in x-ray computed tomography. Proc. SPIE 2002, 4682, 53–60.

- Yu, H.; Wang, G. Sart-Type Half-Threshold Filtering Approach for CT Reconstruction. Access IEEE 2014, 2, 602–613.

- Carson, R.E.; Lange, K. E-M Reconstruction Algorithms for Emission and Transmission Tomography. J. Comput. Assist. Tomogr. 1984, 8, 306–316.

- Man, B.D.; Nuyts, J.; Dupont, P.; Marchal, G.; Suetens, P. Reduction of metal streak artifacts in X-ray computed tomography using a transmission maximum a posteriori algorithm. IEEE Trans. Nucl. Sci. 2000, 47, 977–981.

- Man, B.D.; Nuyts, J.; Dupont, P.; Marchal, G.; Suetens, P. An iterative maximum-likelihood polychromatic algorithm for CT. IEEE Trans. Med. Imaging 2001, 20, 999–1008.

- Elbakri, I.A.; Fessler, J.A. Statistical Image Reconstruction for Polyenergetic X-Ray Computed Tomography. IEEE Trans. Med. Imaging 2002, 21, 89–99.

- Yu, H.; Wang, G. Compressed sensing based interior tomography. Phys. Med. Biol. 2009, 54, 2791–2805.

- Yu, H.; Wang, G. A soft-threshold filtering approach for reconstruction from a limited number of projections. Phys. Med. Biol. 2010, 1, 3905–3916.

- Xu, Q.; Mou, X.; Wang, G.; Sieren, J.; Hoffman, E.A.; Yu, H. Statistical Interior Tomography. IEEE Trans. Med. Imaging 2011, 30, 1116–1128.

- Deák, Z.; Grimm, J.M.; Treitl, M.; Geyer, L.L.; Linsenmaier, U.; Körner, M.; Reiser, M.F.; Wirth, S. Filtered Back Projection, Adaptive Statistical Iterative Reconstruction, and a Model-based Iterative Reconstruction in Abdominal CT: An Experimental Clinical Study. Radiology 2013, 266, 197–206.

- Choudhary, C.; Malik, R.; Choi, A.; Weigold, W.G.; Weissman, G. Effect of Adaptive Statistical Iterative Reconstruction on Coronary Artery Calcium Scoring. J. Am. Coll. Cardiol. 2014, 63, A1157.

- Aday, A.W.; MacRae, C.A. Genomic Medicine in Cardiovascular Fellowship Training. Circulation 2017, 136, 345–346.

- Zhu, Z.; Pang, S. Few-photon computed x-ray imaging. Appl. Phys. Lett. 2018, 113, 231109.

- Kim, J.H.; Choo, K.S.; Moon, T.Y.; Lee, J.W.; Jeon, U.B.; Kim, T.U.; Hwang, J.Y.; Yun, M.J.; Jeong, D.W.; Lim, S.J. Comparison of the image qualities of filtered back-projection, adaptive statistical iterative reconstruction, and model-based iterative reconstruction for CT venography at 80 kVp. Eur. Radiol. 2016, 26, 2055–2063.

- Zhang, G.; Tzoumas, S.; Cheng, K.; Liu, F.; Liu, J.; Luo, J.; Bai, J.; Xing, L. Generalized Adaptive Gaussian Markov Random Field for X-Ray Luminescence Computed Tomography. IEEE Trans. Biomed. Eng. 2018, 65, 2130–2133.

- Clark, D.P.; Lee, C.L.; Kirsch, D.G.; Badea, C.T. Spectrotemporal CT data acquisition and reconstruction at low dose. Med. Phys. 2015, 42, 6317–6336.

- Charbonnier, P.; Blanc-Feraud, L.; Aubert, G.; Barlaud, M. Deterministic edge-preserving regularization in computed imaging. IEEE Trans. Image Process. 1997, 6, 298–311.

- Sreehari, S.; Venkatakrishnan, S.V.; Wohlberg, B.; Buzzard, G.T.; Drummy, L.F.; Simmons, J.P.; Bouman, C.A. Plug-and-Play Priors for Bright Field Electron Tomography and Sparse Interpolation. IEEE Trans. Comput. Imag. 2016, 2, 408–423.

- Perelli, A.; Lexa, M.A.; Can, A.; Davies, M.E. Denoising Message Passing for X-ray Computed Tomography Reconstruction. arXiv 2016, arXiv:1609.04661.

- Sun, Y.; Chen, H.; Tao, J.; Lei, L. Computed tomography image reconstruction from few views via Log-norm total variation minimization. Digital Signal Process. 2019, 88, 172–181.

- Gu, P.; Jiang, C.; Ji, M.; Zhang, Q.; Ge, Y.; Liang, D.; Liu, X.; Yang, Y.; Zheng, H.; Hu, Z. Low-Dose Computed Tomography Image Super-Resolution Reconstruction via Random Forests. Sensors 2019, 19, 207.

- Yu, Z.; Noo, F.; Dennerlein, F.; Wunderlich, A.; Lauritsch, G.; Hornegger, J. Simulation tools for two-dimensional experiments in x-ray computed tomography using the FORBILD head phantom. Phys. Med. Biol. 2012, 57, N237.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zeng, X.; Yang, L. Mixed impulse and Gaussian noise removal using detail-preserving regularization. Opt. Eng. 2010, 49, 097002.

- Zeng, X.; Yang, L. A robust multiframe super-resolution algorithm based on half-quadratic estimation with modified BTV regularization. Digital Signal Process. 2013, 1, 98–109.

- Ge, W.; Ming, J. Ordered-subset simultaneous algebraic reconstruction techniques (OS-SART). J. X-Ray Sci. Technol. 2004, 12, 169–177.

- Pan, J.; Zhou, T.; Han, Y.; Jiang, M. Variable Weighted Ordered Subset Image Reconstruction Algorithm. Int. J. Biomed. Imaging 2006, 2006, 10398.

- Yu, H.; Wang, G. SART-Type Image Reconstruction from a Limited Number of Projections with the Sparsity Constraint. Int. J. Biomed. Imaging 2010, 2010, 3.

- Gu, R.; Dogandzc, A. Polychromatic X-ray CT Image Reconstruction and Mass-Attenuation Spectrum Estimation. IEEE Trans. Comput. Imag. 2016, 2, 150–165.

- Achterhold, K.; Bech, M.; Schleede, S.; Potdevin, G.; Ruth, R.; Loewen, R.; Pfeifferb, F. Monochromatic computed tomography with a compact laser-driven X-ray source. Sci. Rep. 2013, 13, 150–165.

- Daubechies, I.; Defrise, M.; Mol, C.D. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Farsiu, S.; Dirk, M.; Elad, M.; Milanfar, P. Fast and robust multiframe super resolution. IEEE Trans. Image Process. 2004, 13, 1327–1344.

- Rabie, T. Robust estimation approach for blind denoising. IEEE Trans. Image Process. 2005, 14, 1755–1765.

- Hansen, P.C.; Saxild-Hansen, M. AIR Tools—A MATLAB Package of Algebraic Iterative Reconstruction Methods. J. Comput. Appl. Math. 2012, 236, 2167–2178.

- Ritschl, L.; Bergner, F.; Fleischmann, C.; Kachelrieß, M. Improved total variation-based CT image reconstruction applied to clinical data. Phys. Med. Biol. 2011, 56, 1545–1561.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).