Abstract

The determination of RNA secondary structure (RSS) can help elucidate RNA's functional mechanisms, guide the design of RNA-based therapeutics, and advance synthetic biology applications. However, traditional methods for determining RSS, such as NMR, are typically time-consuming and labor-intensive. As a result, accurate prediction of RSS remains a fundamental yet unmet need in RNA research. Various deep learning (DL)-based methods have achieved improved accuracy over thermodynamic-based methods. However, the over-parameterized nature of DL makes these methods prone to overfitting and thus limits their generalizability. Meanwhile, the inconsistency of RSS predictions among these methods further aggravates the generalizability problem. Here, we propose TrioFold, which enhances the generalizability of RSS prediction by integrating base-pairing clues learned by both thermodynamic- and DL-based methods through ensemble learning and a convolutional block attention mechanism. TrioFold achieves higher accuracy in intra-family predictions and enhanced generalizability in inter-family and cross-RNA-type predictions. Additionally, we have developed an online webserver equipped with widely used RSS prediction algorithms and analysis tools, providing an accessible platform for the RNA research community. This study demonstrates new opportunities to improve the generalizability of RSS prediction through efficient ensemble learning of base-pairing clues from both thermodynamic- and DL-based algorithms.

1. Introduction

RNA finds extensive applications in biology, medicine, synthetic biology, and other fields, and its secondary structure (RSS) is fundamental to its diverse functions [1,2]. Characterization of RSS is therefore crucial for understanding and designing functional RNA molecules. For example, a comprehensive analysis of RSS yields essential insights into gene transcription, catalytic activity, and RNA stability under diverse conditions [3], which helps elucidate the biological functions of RNA and the mechanisms of related diseases. Accurate RSS prediction is also a key step in RNA molecule design using generative artificial intelligence (AI) algorithms [4]. Traditional experimental approaches to determining RSS rely on nuclear magnetic resonance (NMR) spectroscopy, X-ray crystallography, enzymatic probing, and other methods [5,6]. However, these methods are slow and costly. Additionally, the inherent instability of RNA molecules and the difficulty of crystallizing RNA in vitro make these experiments even more challenging. The structures of less than 0.001% of non-coding RNAs have been experimentally determined [7].

Given the time-consuming and expensive nature of experimental RSS determination, there is an urgent need for efficient and accurate RSS prediction algorithms, which have become a focal concern in RNA research. In recent years, machine learning (ML)- and deep learning (DL)-based algorithms have gained substantial attention and achieved significant advances in structural biology [8,9], and interest in ML- and DL-based RSS prediction has grown accordingly. For example, recently reported ML algorithms such as UFold [10], RNAformer [11], RNAErnie [12], and RNADiffFold [13] have introduced new RSS prediction tools with enhanced accuracy. In addition, online tools such as RNAthor [14] and RNAProbe [15] provide user-friendly platforms for integrating experimental probing results with computational RNA structure prediction, offering new insights into the field.

Although these ML/DL-based algorithms have shown improved prediction accuracy over classical thermodynamic-based methods such as RNAfold [16] and RNAstructure [17], existing methods suffer from several limitations [18]. First, the prediction accuracy of individual methods varies across RNA families. Second, the predictions of different methods for the same RNA show low concordance. This may stem from the fact that each algorithm captures only part of the RNA folding rules, each from a different perspective. The limited generalizability of current algorithms is the fundamental problem underlying both limitations. Therefore, novel methods with improved generalizability are strongly needed to advance DL-based RSS prediction.

Because different algorithms rest on different underlying principles, their predictions tend to be inconsistent. However, each type of method captures the rules of RNA folding to some extent [19]. We therefore hypothesize that an ensemble of algorithms that learn from diverse RNA folding principles will improve both prediction accuracy and generalizability.

Ensemble learning, including bagging, boosting, and stacking strategies, integrates the predictions of multiple individual models, known as base learners, to enhance overall predictive performance and generalizability. Ensemble learning can alleviate the output biases of individual base learners, thus improving model generalizability [20]. When training data are limited [21], ensemble learning mitigates overfitting in deep learning, consequently enhancing model generalizability and performance [22].
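To make the ensemble idea concrete, the sketch below combines the binary contact maps of several base learners by simple majority (hard) voting. This is a generic illustration, not TrioFold's attention-based combination; the three learners, their predicted pairs, and the 0.5 threshold are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[int]]

def contact_map(n: int, pairs: Sequence[Tuple[int, int]]) -> Matrix:
    """Build a symmetric n x n binary contact map from 0-based base pairs."""
    m = [[0] * n for _ in range(n)]
    for i, j in pairs:
        m[i][j] = m[j][i] = 1
    return m

def majority_vote(maps: Sequence[Matrix], threshold: float = 0.5) -> Matrix:
    """Keep pair (i, j) when more than `threshold` of the base learners predict it."""
    n_models = len(maps)
    n = len(maps[0])
    return [
        [1 if sum(m[i][j] for m in maps) / n_models > threshold else 0 for j in range(n)]
        for i in range(n)
    ]

# Three hypothetical base learners predicting a 10-nt structure.
learner_a = contact_map(10, [(0, 9), (1, 8)])
learner_b = contact_map(10, [(0, 9), (2, 7)])
learner_c = contact_map(10, [(1, 8)])
consensus = majority_vote([learner_a, learner_b, learner_c])
```

Pairs (0, 9) and (1, 8) receive two of three votes and survive, while (2, 7) is dropped. Plain voting does not enforce that each base pairs with at most one partner, so a practical ensemble would need an extra step to resolve conflicting pairs.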

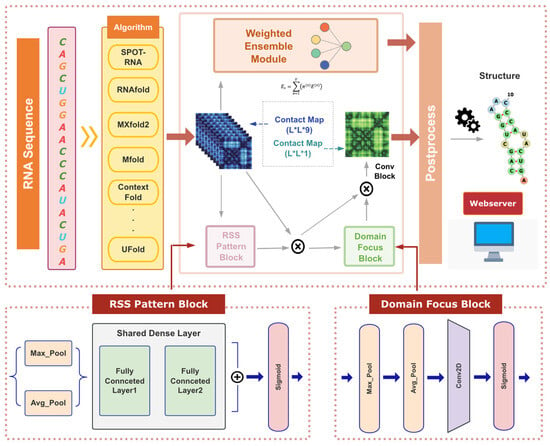

Here, we propose an end-to-end RSS prediction algorithm, TrioFold, based on ensemble deep learning and a convolutional attention mechanism [23] (Figure 1). Specifically, six representative ML models (MXfold2 [24], UFold [10], SPOT-RNA [25], EternaFold [26], CONTRAfold [27], and ContextFold [28]) and three thermodynamic-based models (Mfold [29], LinearFold [30], and RNAfold) were used as base learners to explore the impact of different ensemble strategies on RSS prediction accuracy and generalizability. First, two ensemble models were evaluated on the TestSetA and TS0 datasets, where the F1 score of TrioFold was 3–5% higher than that of the second-best model; on the other datasets, TrioFold showed robust prediction performance. Furthermore, TrioFold achieved the best performance on three datasets of unseen families, suggesting enhanced generalizability to new RNA sequences and families. Moreover, we implemented TrioFold and its base learner methods in a one-stop, user-friendly webserver so that biologists can use them without any programming. The webserver provides RSS prediction and analysis functions and is freely accessible at http://triofold.aiddlab.com/ (accessed on 16 August 2025).

Figure 1.

Network architecture of TrioFold and TrioFold-lite.

2. Results

2.1. Varied Outputs Among RSS Prediction Algorithms Despite Comparable Accuracies

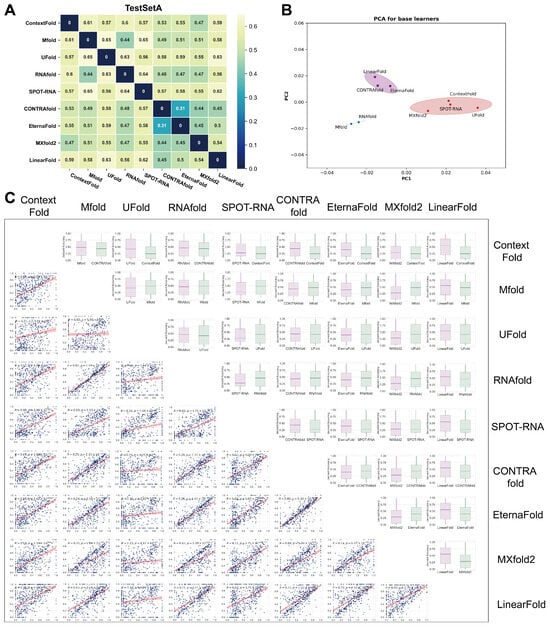

Although RSS prediction accuracy does not vary sharply across algorithms, the concordance of their predictions has rarely been investigated. We addressed this question by comparing the RSS predictions of nine commonly used algorithms. To measure the dissimilarity between two structures of the same sequence (for example, a prediction and the ground truth, or the predictions of two algorithms), we used the Jaccard distance between their base-pair sets as a metric. On the TestSetA dataset, for every pair of algorithms, each algorithm first predicted the contact maps of all samples, and the Jaccard distances between the two algorithms' contact maps were then averaged over all samples to represent the distance between the two algorithms. The pairwise Jaccard distances among the nine algorithms range from 0.3 to 0.65 (Figure 2A). The two algorithms with the greatest concordance are EternaFold and CONTRAfold (Jaccard distance = 0.31), which may be because EternaFold was developed based on CONTRAfold. To further investigate the distinctions among algorithms, we applied PCA for dimensionality reduction (see Materials and Methods). The PCA results also demonstrate discordance between algorithms (Figure 2B). Unexpectedly, this analysis indicated that the outputs of different RSS prediction algorithms are usually dissimilar. A detailed pairwise comparison showed that, in most cases, the per-sample Jaccard distances of two algorithms were only weakly correlated (Figure 2C), further supporting the diversity of RSS predictions across algorithms.
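A minimal sketch of the Jaccard distance used here, assuming each structure is represented as a set of base pairs parsed from pseudoknot-free dot-bracket notation, and that two empty structures are at distance 0 (the text does not say how this case was handled). `mean_pairwise_distances` averages the per-sequence distances for every pair of algorithms, mirroring the TestSetA procedure; all names and example structures are illustrative.

```python
from itertools import combinations
from statistics import mean
from typing import Dict, List, Set, Tuple

Pair = Tuple[int, int]

def pairs_from_dot_bracket(structure: str) -> Set[Pair]:
    """Return the (i, j) base pairs (0-based, i < j) of a '(' / ')' dot-bracket string."""
    stack: List[int] = []
    pairs: Set[Pair] = set()
    for j, ch in enumerate(structure):
        if ch == "(":
            stack.append(j)
        elif ch == ")":
            pairs.add((stack.pop(), j))
    return pairs

def jaccard_distance(a: Set[Pair], b: Set[Pair]) -> float:
    """1 - |A & B| / |A | B|; two empty structures are treated as identical."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)

def mean_pairwise_distances(predictions: Dict[str, List[str]]) -> Dict[Tuple[str, str], float]:
    """predictions maps algorithm name -> dot-bracket strings, one per sequence (same order)."""
    result = {}
    for alg_a, alg_b in combinations(sorted(predictions), 2):
        per_sequence = [
            jaccard_distance(pairs_from_dot_bracket(x), pairs_from_dot_bracket(y))
            for x, y in zip(predictions[alg_a], predictions[alg_b])
        ]
        result[(alg_a, alg_b)] = mean(per_sequence)
    return result

preds = {"A": ["((....))", "(......)"], "B": ["((....))", "........"]}
distances = mean_pairwise_distances(preds)
```

Here the two hypothetical algorithms agree on the first sequence (distance 0) and share no pairs on the second (distance 1), giving a mean distance of 0.5.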

Figure 2.

Significant differences in the output structures between different algorithms. Base learners’ concordance and prediction performance of ensemble models using different base learner combinations on TestSetA. (A) The Jaccard distance of RSS predicted by each base learner. The larger the Jaccard distance, the greater the difference in the predicted SS of the two base learners. (B) PCA results for base learners with ellipses. (C) The box plot illustrates the difference in accuracy of Jaccard distance between the two algorithms, while the scatter plot shows the correlation of Jaccard distance for all samples between algorithm pairs.

2.2. Ensemble Learning Is Efficient in Enhancing RSS Prediction Performance

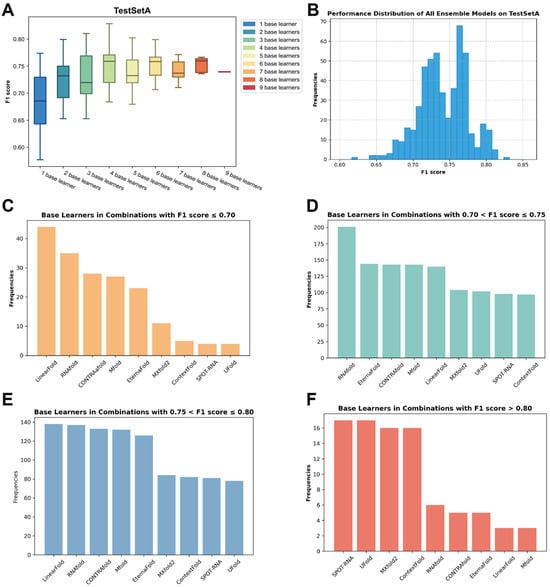

Although ensemble models can be classified as homogeneous or heterogeneous [31], the key to a successful ensemble model is the diversity of its base learners. A crucial question in ensemble methods is the optimal number of base learners to include in the final ensemble, commonly referred to as the ensemble size [32]. However, little is known about the diversity of RSS prediction base learners and their contributions to ensemble models. To determine which combination of base learners improves model performance the most, we used TestSetA to test the impact of different ensemble configurations on prediction performance. Nine base learners were selected as candidate models, and we systematically explored all possible combinations of them on TestSetA, using the F1 score as the benchmark metric. As the number of base learners increases, the performance of the ensemble model does not rise strictly monotonically but gradually converges toward a fairly stable value (Figure 3A), and the effects of different combinations vary considerably (Figure 3B). Notably, the best-performing model emerges at an ensemble size of four: the ensemble of SPOT-RNA + UFold + MXfold2 + ContextFold (TrioFold-lite). We therefore adopted the ensemble of these four algorithms in subsequent experiments.
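The exhaustive search over base-learner combinations can be sketched as follows. We assume combinations of at least two learners are considered (the text does not state whether single learners were included); `score` is a hypothetical callable standing in for building an ensemble from a combination and computing its F1 score on TestSetA. With nine candidates there are 502 such combinations, or 511 if single learners are counted, so exhaustive evaluation is tractable.

```python
from itertools import combinations
from typing import Callable, Dict, Iterator, Sequence, Tuple

BASE_LEARNERS = ("MXfold2", "UFold", "SPOT-RNA", "EternaFold", "CONTRAfold",
                 "ContextFold", "Mfold", "LinearFold", "RNAfold")

Combo = Tuple[str, ...]

def all_combinations(learners: Sequence[str], min_size: int = 2) -> Iterator[Combo]:
    """Yield every subset of `learners` with at least `min_size` members."""
    for size in range(min_size, len(learners) + 1):
        yield from combinations(learners, size)

def best_per_size(learners: Sequence[str],
                  score: Callable[[Combo], float]) -> Dict[int, Tuple[Combo, float]]:
    """Return the highest-scoring combination for each ensemble size."""
    best: Dict[int, Tuple[Combo, float]] = {}
    for combo in all_combinations(learners):
        s = score(combo)
        size = len(combo)
        if size not in best or s > best[size][1]:
            best[size] = (combo, s)
    return best

# Toy scoring function (hypothetical): fraction of members that are UFold or ContextFold.
def toy_score(combo: Combo) -> float:
    return len({"UFold", "ContextFold"} & set(combo)) / len(combo)

best = best_per_size(BASE_LEARNERS, toy_score)
```

Plotting the best (or the distribution of) scores per size from such a search is one way to produce curves like those in Figure 3A,B.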

Figure 3.

The impact of different ensemble strategies on the performance of the final model. (A) The impact of the ensemble size on the performance of the ensemble model. (B) The F1 score distribution of ensemble models based on all possible combinations on TestSetA. (C–F) The number of occurrences of each base learner in all possible combinations, grouped by F1 score range.

Furthermore, among combinations with different F1 scores, we noted variations in how frequently each base learner appeared (Table S1). Combinations with lower F1 scores more frequently included base learners such as RNAfold and Mfold, whereas combinations with higher F1 scores more frequently included algorithms such as UFold and ContextFold (Figure 3C–F). This suggests that the performance of individual base learners is a pivotal determinant of the overall performance of the ensemble model.

2.3. TrioFold Outperforms RSS Prediction Methods on Intra-Family Datasets

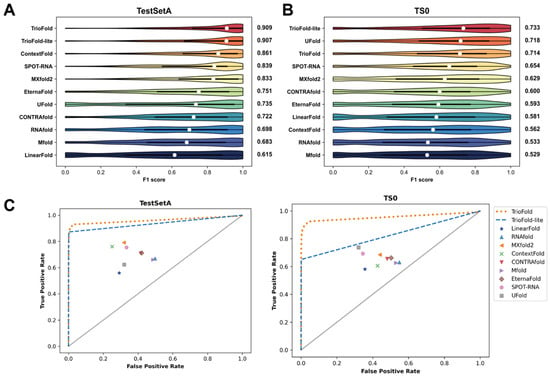

We employed two integration strategies, TrioFold and TrioFold-lite, in our investigations (see Materials and Methods for the detailed network architecture and setup). The RSS prediction performance of TrioFold and representative state-of-the-art (SOTA) methods was evaluated on two well-established benchmark datasets, TestSetA and TS0. Sequence identity analysis revealed that most sequences in TestSetA and TS0 share low similarity (<30% identity) with those in the training set (Tables S2 and S3). On TestSetA, TrioFold and TrioFold-lite achieve median F1 scores of 0.909 and 0.907, respectively, a notable improvement of 5.6% and 5.3% over the second-best model (Figure 4A). The median F1 score of TrioFold is 23.7%, 8.34%, 5.57%, and 9.12% higher than the average F1 scores of its four base learners (UFold, SPOT-RNA, ContextFold, and MXfold2, respectively), indicating that the ensemble learning mechanism of TrioFold is efficient. The median F1 score of TrioFold is also 25.9%, 33.1%, 30.2%, 47.8%, and 21.0% higher than those of the remaining methods (CONTRAfold, Mfold, RNAfold, LinearFold, and EternaFold, respectively). On TestSetA, TrioFold-lite performs similarly to TrioFold. The average computational time is reported in Table S4. On the TS0 dataset, TrioFold and TrioFold-lite achieve median F1 scores of 0.714 and 0.733, respectively; here, TrioFold-lite achieves the best performance, while TrioFold is slightly inferior to UFold. TrioFold-lite improves the F1 score by 2.1% to 38.6% over SOTA methods (Figure 4B). The ROC curves also indicate the superior performance of TrioFold on the TestSetA and TS0 datasets (Figure 4C). In addition, the performance of individual methods varies considerably between the two datasets. For instance, SPOT-RNA achieves an F1 score of 0.839 on TestSetA but only 0.654 on TS0. This discrepancy may suggest that algorithms exhibit preferences when predicting sequences from different RNA types [33]. Together, these results indicate that the ensemble learning mechanism of TrioFold efficiently enhances RSS prediction performance.
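For reference, a base-pair F1 score and a relative-improvement calculation can be sketched as below. We assume the reported percentages are relative differences (new / baseline - 1); under that assumption, the 0.909 and 0.839 values quoted above reproduce the 8.34% gap reported for SPOT-RNA. Function names are illustrative, and returning 0.0 when there are no true positives is our convention.

```python
from statistics import median
from typing import Iterable, Set, Tuple

Pair = Tuple[int, int]

def base_pair_f1(predicted: Set[Pair], reference: Set[Pair]) -> float:
    """F1 score over base pairs; returns 0.0 when there are no true positives."""
    tp = len(predicted & reference)
    if tp == 0:
        return 0.0
    precision = tp / len(predicted)
    recall = tp / len(reference)
    return 2 * precision * recall / (precision + recall)

def median_f1(samples: Iterable[Tuple[Set[Pair], Set[Pair]]]) -> float:
    """Median per-sequence F1 over (predicted, reference) pairs."""
    return median(base_pair_f1(p, r) for p, r in samples)

def relative_improvement(new: float, baseline: float) -> float:
    """Relative gain of `new` over `baseline`; 0.05 means 5% higher."""
    return new / baseline - 1.0

gain_over_spot_rna = relative_improvement(0.909, 0.839)  # about 0.0834
```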

Figure 4.

Performance comparison between TrioFold and existing methods. Distribution of F1 scores for different algorithms on TestSetA (A) and TS0 (B), respectively. (C) ROC curves on TestSetA and TS0 by various methods.

2.4. Performance Comparison Between Algorithms Across RNA Types

RNA types categorize RNA molecules by their general functional roles, whereas RNA families group sequences within these types by conserved sequence and structural features, reflecting evolutionary relationships and functional similarities. Deep learning models tend to perform well on RNA families that appear in the training set, but their prediction accuracy tends to decline sharply on sequences from families not represented in the training set.

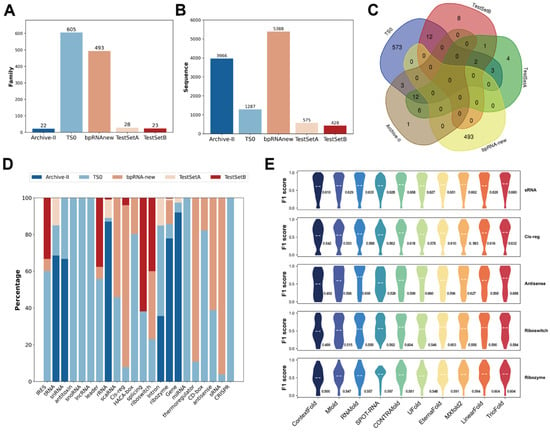

To examine the distribution of RNA families across datasets, we used Infernal 1.1.4 [34] to map RNA sequences to specific families. The distribution of RNA families across datasets is extremely uneven (Figure 5A,C), and the number of sequences also varies among datasets (Figure 5B). This imbalanced distribution of RNA types suggests that model performance may vary across RNA types.

Figure 5.

RNA SS prediction performance across different RNA clans. (A) Bar chart showing the distribution of Rfam families in selected datasets. (B) Bar chart showing the number of sequences in selected datasets. (C) Venn diagram showing the overlap of Rfam families among datasets. (D) Histogram showing the distribution of RNA types in TestSetA + TS0 + bpRNA-new + Archive-II. (E) Violin plot showing the performance on the top-5 RNA clans in bpRNA-new.

Because of the large number of RNA families, each family contains too few sequences to support statistical analysis. An Rfam clan is a collection of families that either share a common ancestor but have diverged too much to be aligned reliably, or can be aligned but have distinct functions. When the family distribution is uneven, the distribution of clans in a given dataset is also uneven (Figure 5D). To evaluate prediction performance across RNA clans, we first mapped each sequence's RNA family to its clan and then chose the bpRNA-new dataset, considering both sequence counts and clan distribution. TrioFold delivers robust and accurate predictions across various RNA clans (Figure 5E).
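The family-to-clan aggregation can be sketched as grouping per-sequence F1 scores by clan and taking the median of each group. The family and clan identifiers below are placeholders, not real Rfam accessions, and keeping clan-less families as their own group is an assumption.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Mapping, Tuple

def median_f1_by_clan(records: Iterable[Tuple[str, float]],
                      family_to_clan: Mapping[str, str]) -> Dict[str, float]:
    """records holds (family, per-sequence F1); returns the median F1 per clan."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for family, f1 in records:
        # Families without a clan are kept as their own group (an assumption).
        groups[family_to_clan.get(family, family)].append(f1)
    return {clan: median(scores) for clan, scores in groups.items()}

# Hypothetical identifiers and scores, for illustration only.
records = [("FAM_A", 0.8), ("FAM_B", 0.6), ("FAM_C", 0.9)]
clan_medians = median_f1_by_clan(records, {"FAM_A": "CLAN_1", "FAM_B": "CLAN_1"})
```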

2.5. The Generalizability of TrioFold to Predict RSS in Unseen Families

An RNA family that does not appear in the training set is called an unseen RNA family, whereas one that does appear in the training set is called an intra-family (seen) RNA family. The main challenge in DL-based RSS prediction is predicting sequences from unseen families. This challenge arises from the limited size of existing RNA datasets and the imbalanced distribution of RNA types. As a result, DL models are prone to overfitting to features specific to certain RNA types and perform suboptimally on unseen-family sequences. A key benefit of ensemble models is their ability to reduce variance across models and improve the generalizability of DL models to unknown RNA types. Here, we used TS-inter, bpRNA-new, and a PDB-derived dataset [35] (see Materials and Methods) as unseen-family datasets to assess the models' generalizability to unseen sequences. We ensured that these sequences were not included in the training set of any RNA prediction algorithm, thereby avoiding potential bias in assessing model performance. Table 1, Table 2 and Table 3 show that TrioFold achieves the best performance on all three datasets. To assess the statistical significance of these improvements, the Tables also report per-sequence paired t-tests comparing TrioFold with each baseline algorithm.
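A per-sequence paired t-test of the kind reported in Tables 1-3 can be run with SciPy's `ttest_rel`, which compares two score vectors measured on the same sequences. The F1 values below are hypothetical; `significance_label` maps p-values to the n.s./*/** notation used in the table captions.

```python
from typing import Sequence, Tuple

from scipy.stats import ttest_rel

def paired_t_test(f1_triofold: Sequence[float],
                  f1_baseline: Sequence[float]) -> Tuple[float, float]:
    """Two-sided paired t-test on per-sequence F1 scores of the same sequences."""
    result = ttest_rel(f1_triofold, f1_baseline)
    return float(result.statistic), float(result.pvalue)

def significance_label(p: float) -> str:
    """Map a p-value to the n.s. / * / ** notation of the table captions."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return "n.s."

# Hypothetical per-sequence F1 scores for four sequences.
t_stat, p_value = paired_t_test([0.90, 0.80, 0.85, 0.95], [0.70, 0.65, 0.70, 0.80])
```

A paired test suits this setting because both methods are scored on identical sequences, so per-sequence difficulty cancels out in the differences.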

Table 1.

Performance on the bpRNA-new dataset. TrioFold/TrioFold-lite’s margin of F1 score over the remaining algorithms is listed. The highest values for each metric are highlighted in bold. **: p < 0.01.

Table 2.

Performance on the PDB dataset. TrioFold/TrioFold-lite's margin of F1 score over the remaining algorithms is listed. The highest values for each metric are highlighted in bold. n.s.: not significant (p ≥ 0.05); *: p < 0.05; **: p < 0.01.

Table 3.

Performance on the TS-inter dataset. TrioFold/TrioFold-lite’s margin of F1 score over the remaining algorithms is listed. The highest values for each metric are highlighted in bold. n.s.: not significant (p ≥ 0.05); *: p < 0.05; **: p < 0.01.

Here, we compared TrioFold with both DL-based and thermodynamic-based algorithms. TS-inter comprises sequences from the TS0 dataset that have low similarity to the training sets; these sequences can therefore be regarded as unseen-family sequences. Although UFold performs strongly on the full TS0 dataset, its performance dropped drastically on the unseen sequences of TS0, reaching only a level similar to algorithms such as RNAfold and MXfold2. Additionally, we grouped the TS-inter sequences using cutoffs on the structural dissimilarity between their structures and the training-set structures (calculated using RNAforester), and recorded the performance of different models in each group (Figure S1).

To further benchmark TrioFold's generalizability, we extended our evaluation to the bpRNA-new and PDB datasets. To rule out data leakage, we used VSEARCH [36] to compare every sequence in these two datasets against the training set. The results show that 96.27% of sequences in bpRNA-new and 80.67% of those in PDB share less than 30% sequence identity with any training sequence (Tables S5 and S6), indicating that the training and test datasets are independent. We also found that, on the bpRNA-new dataset, DL-based models are inferior to thermodynamic-based methods, represented by LinearFold (Table 1). Moreover, we evaluated pseudoknot prediction on the PDB datasets. Although most base learners are restricted to pseudoknot-free structures, our model exhibited a limited but non-zero ability to capture pseudoknotted base pairs (Table S7), suggesting potential for future improvement.
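Pseudoknotted base pairs are commonly identified as pairs that cross: (i, j) and (k, m) cross when i < k < j < m. The sketch below parses extended dot-bracket notation (using (), [], {} and <> for different pair layers) and flags every pair involved in at least one crossing. This is a generic illustration; the text does not specify how pseudoknotted pairs were identified for Table S7.

```python
from typing import Dict, List, Set, Tuple

Pair = Tuple[int, int]
OPEN_TO_CLOSE = {"(": ")", "[": "]", "{": "}", "<": ">"}
CLOSE_TO_OPEN = {c: o for o, c in OPEN_TO_CLOSE.items()}

def pairs_from_extended_dot_bracket(structure: str) -> Set[Pair]:
    """Parse dot-bracket notation that uses (), [], {} and <> for different pair layers."""
    stacks: Dict[str, List[int]] = {o: [] for o in OPEN_TO_CLOSE}
    pairs: Set[Pair] = set()
    for j, ch in enumerate(structure):
        if ch in stacks:
            stacks[ch].append(j)
        elif ch in CLOSE_TO_OPEN:
            pairs.add((stacks[CLOSE_TO_OPEN[ch]].pop(), j))
    return pairs

def crossing_pairs(pairs: Set[Pair]) -> Set[Pair]:
    """Return every pair (i, j) that crosses another pair (k, m), i.e. i < k < j < m."""
    ordered = sorted(pairs)  # sorted by opening position i
    crossing: Set[Pair] = set()
    for idx, (i, j) in enumerate(ordered):
        for k, m in ordered[idx + 1:]:
            if i < k < j < m:
                crossing.update({(i, j), (k, m)})
    return crossing

# A hypothetical H-type pseudoknot: the () stem and the [] stem interleave.
pk_pairs = pairs_from_extended_dot_bracket("((..[[..))..]]")
pk_crossing = crossing_pairs(pk_pairs)
```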

In addition, we re-evaluated the concordance of RSS predictions among different algorithms, now including TrioFold and TrioFold-lite, on TestSetA, as in Figure 2A. TrioFold and TrioFold-lite achieved the best RSS prediction accuracies (Figure 4A) and exhibited the lowest Jaccard distances to the remaining algorithms (0.19–0.53 and 0.19–0.57, respectively) (Figures S2 and S3). TrioFold and TrioFold-lite showed the lowest median Jaccard distances to other algorithms (0.427 and 0.414, respectively), whereas SPOT-RNA (median 0.574, range 0.391–0.65) and UFold (median 0.569, range 0.296–0.649) had high Jaccard distances to other algorithms.

Collectively, our findings reveal that TrioFold achieves the best performance on unseen-family datasets and improves the concordance of RSS predictions with other algorithms, further demonstrating its robustness and generalizability in RSS prediction. Additionally, TrioFold and TrioFold-lite have fewer trainable parameters than the other algorithms (Table S8).

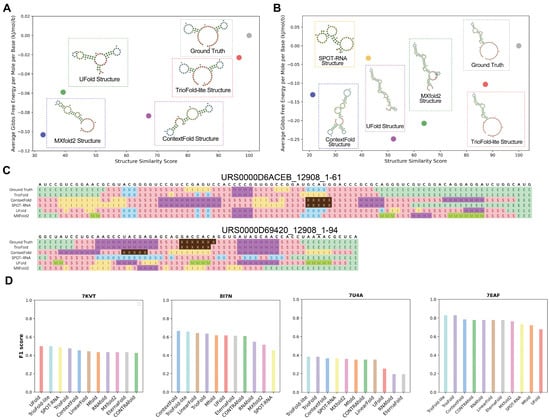

2.6. Showcase of SS Prediction on Representative RNAs from Unseen Families

To further characterize the model's generalizability to unseen families, two particularly challenging sequences from the bpRNA-new dataset (URS0000D6ACEB_12908_1-61 and URS0000D69420_12908_1-94) were selected for visualization with the Forna tool [37]. BEAGLE [38] was used to quantify the similarity between the predicted RNA SS and the ground truth. In both cases, TrioFold-lite generates an SS markedly closer to the ground truth than the other algorithms, with structure similarities of 96.721 and 87.234, respectively (Figure 6A,B), and the structure alignment shows that it predicts motifs more accurately (Figure 6C). Although the SS predicted by each base learner differs, the ensemble model can obtain results with lower generalization error by assigning different weights to different base learners, as evidenced by TrioFold-lite's predictions being closer to the true structure in both cases.

Figure 6.

Showcases with representative examples from bpRNA-new and PDB. The RSS of (A) URS0000D6ACEB_12908_1-61 and (B) URS0000D69420_12908_1-94 were predicted by TrioFold, TrioFold-lite, and four base learners. A higher structure similarity indicates greater similarity between SSs. In both cases, the SS predicted by TrioFold-lite is more consistent with the ground truth structure. (C) Structure alignment for URS0000D6ACEB_12908_1-61 and URS0000D69420_12908_1-94; motif annotations were generated by bpRNA, with different letters representing different motifs (S: stem, M: multiloop, I: internal loop, B: bulge, E: dangling end, X: external loop). (D) Bar plot of F1 scores of RSS predictions by different algorithms for four recently released RNA structures from the PDB (PDB IDs: 7KVT, 8I7N, 7U4A, and 7EAF).

Additionally, we selected four experimentally determined RNA structures released in the PDB after 1 June 2021 and ensured that none of their sequences were included in the datasets used in this study. The performance of the ensemble models was compared with that of other methods (Figure 6D). For certain sequences, all algorithms performed suboptimally. For example, for 7U4A (crystal structure of a Zika virus xrRNA1 mutant), the F1 scores of most algorithms hover around 0.35, which may be related to the complex pseudoknots in this structure. In summary, TrioFold outperformed the other methods on three of the four RNA structures (7KVT, 7U4A, and 7EAF) and ranked second on the remaining one (8I7N).

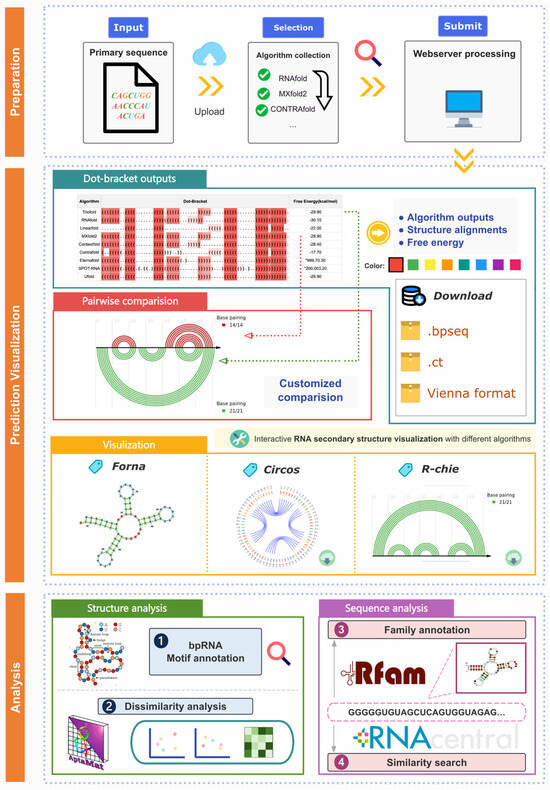

2.7. A User-Friendly Webserver for RSS Prediction and Analysis

Moreover, we provide a user-friendly web interface that integrates RNA structure prediction and analysis (Figure 7). Users can input an RNA primary sequence, and the site invokes TrioFold along with MXfold2, UFold, SPOT-RNA, EternaFold, CONTRAfold, RNAfold, LinearFold, and ContextFold to generate RSS predictions. The output includes the RSS predicted by each algorithm and their alignments. The website also features a visualization window for intuitively observing and comparing the RSS predicted by different methods.

Figure 7.

Workflow and functions of TrioFold webserver. The TrioFold webserver is a user-friendly platform that offers comprehensive RSS prediction and analysis. Additionally, the webserver provides custom RSS based on various methods, along with tools for motif annotation, dissimilarity analysis, and sequence analysis.

Building on these predictions, the website also offers an analysis module based on RNA sequence and SS. It integrates software including Forna (http://rna.tbi.univie.ac.at/forna/, accessed on 16 August 2025), Circos (https://circos.ca/, accessed on 16 August 2025) [39], e-RNA (https://www.e-rna.org/, accessed on 16 August 2025) [40], and Aptamat 1.0 [41]. Users can obtain RNA structural motif annotations and perform dissimilarity analysis using different algorithms. Additionally, information on Rfam families and similar sequences from RNAcentral is available.

3. Materials and Methods

3.1. Sequence Module

The sequence module learns sequence information through various base learners $\mathcal{B} = (B_1, \ldots, B_l)$ to obtain the input data of the structure module, where $l$ is the number of base learners. Given an RNA sequence $S = (s_1, \ldots, s_n)$, where $n$ is the sequence length, TrioFold inputs the sequence into each base learner to generate an RSS described in dot–bracket notation.

Each base learner $B_v$ produces a binary matrix $A^{(v)} \in \{0,1\}^{n \times n}$ where:

- $A^{(v)}_{ij} = 1$ if the $i$-th base and the $j$-th base form a complementary pair according to the $v$-th base learner's prediction.

- $A^{(v)}_{ij} = 0$ otherwise.

The complete output is represented as a 3-dimensional binary matrix:

$$\mathbf{F} = \left[A^{(1)}, A^{(2)}, \ldots, A^{(l)}\right] \in \{0,1\}^{l \times n \times n}$$
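The sequence module's output can be sketched as follows: each base learner's dot-bracket prediction is converted into a symmetric binary contact matrix, and the matrices are stacked into an array with one channel per base learner (number of learners × sequence length × sequence length). The sketch handles only pseudoknot-free '(' and ')' brackets, and the function names and example structures are hypothetical.

```python
from typing import List, Sequence

import numpy as np

def contact_matrix(structure: str) -> np.ndarray:
    """Convert a pseudoknot-free dot-bracket string into a symmetric n x n binary matrix."""
    n = len(structure)
    mat = np.zeros((n, n), dtype=np.int8)
    stack: List[int] = []
    for j, ch in enumerate(structure):
        if ch == "(":
            stack.append(j)
        elif ch == ")":
            i = stack.pop()
            mat[i, j] = mat[j, i] = 1
    return mat

def stack_base_learner_outputs(structures: Sequence[str]) -> np.ndarray:
    """Stack one dot-bracket prediction per base learner into an (l, n, n) array."""
    if len({len(s) for s in structures}) != 1:
        raise ValueError("all predictions must have the same length as the input sequence")
    return np.stack([contact_matrix(s) for s in structures])

# Three hypothetical base-learner predictions for an 8-nt sequence.
F = stack_base_learner_outputs(["((....))", "(......)", "........"])
```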

3.2. Structure Module

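To better integrate the strengths of the base learners and compensate for their limited generalization, we employ CBAM (Convolutional Block Attention Module) to capture important information from different learners and spatial locations. The overall ensemble process can be described as:

$$\mathbf{F}' = M_p(\mathbf{F}) \otimes \mathbf{F}, \qquad \mathbf{F}'' = M_d(\mathbf{F}') \otimes \mathbf{F}'$$

where $\mathbf{F} \in \{0,1\}^{l \times n \times n}$ is the stacked output of the sequence module, $M_p \in \mathbb{R}^{l \times 1 \times 1}$ is the 1D RSS Pattern map and $M_d \in \mathbb{R}^{1 \times n \times n}$ is the 2D Domain Focus map, as illustrated in Figure 1, and ⊗ denotes element-wise multiplication (with the attention maps broadcast along their singleton dimensions). $\mathbf{F}''$ is the final refined output.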

3.3. RSS Pattern Block

In RNA molecules, bases form specific pairs through hydrogen bonds (such as A-U, G-C, and G-U pairs), which determine the RSS. We use the channel attention module of CBAM as the RSS Pattern Block to identify and reinforce important feature channels, thereby selecting and stabilizing key base-pair relationships. The RSS Pattern Block can be defined as follows:

$$M_p(\mathbf{F}) = \sigma\Big(\mathrm{MLP}\big(\mathrm{AvgPool}(\mathbf{F})\big) + \mathrm{MLP}\big(\mathrm{MaxPool}(\mathbf{F})\big)\Big)$$

where σ denotes the sigmoid function, and the MLP weights are shared for both inputs. AvgPool and MaxPool are applied along the spatial dimensions of the feature map while preserving the channel dimension, effectively aggregating information across spatial locations.
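A minimal NumPy sketch of CBAM-style channel attention applied to stacked base-learner matrices, assuming a one-hidden-layer MLP with ReLU and, for simplicity, no bias terms; the hidden width of 2 and the random weights are hypothetical stand-ins for trained parameters. Each base learner (channel) receives a single weight in (0, 1), which rescales its entire contact matrix.

```python
import numpy as np

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(F: np.ndarray, W0: np.ndarray, W1: np.ndarray) -> np.ndarray:
    """CBAM-style channel attention for F of shape (l, n, n).

    W0 has shape (h, l) and W1 has shape (l, h); both are shared by the
    average-pooled and max-pooled descriptors. Returns weights of shape (l, 1, 1).
    """
    avg = F.mean(axis=(1, 2))  # (l,) average over all spatial positions
    mx = F.max(axis=(1, 2))    # (l,) maximum over all spatial positions

    def mlp(x: np.ndarray) -> np.ndarray:
        return W1 @ np.maximum(W0 @ x, 0.0)  # shared MLP with a ReLU hidden layer

    return sigmoid(mlp(avg) + mlp(mx)).reshape(-1, 1, 1)

rng = np.random.default_rng(0)
F = rng.integers(0, 2, size=(4, 6, 6)).astype(float)  # 4 hypothetical base learners
W0 = rng.normal(size=(2, 4))
W1 = rng.normal(size=(4, 2))
M_p = channel_attention(F, W0, W1)
refined = M_p * F  # broadcast each learner's weight over every (i, j)
```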

3.4. Domain Focus Block

Key regions of RNA secondary structures, such as hairpin loops and internal loops, are crucial for RNA function and stability. We employ the spatial attention module of CBAM to enhance the feature representations of these critical regions, enabling the model to better understand and predict RNA secondary structures. The Domain Focus Block can be defined as follows:

$$M_d(\mathbf{F}) = \sigma\Big(f^{k \times k}\big([\mathrm{AvgPool}(\mathbf{F});\, \mathrm{MaxPool}(\mathbf{F})]\big)\Big)$$

where σ denotes the sigmoid function, $f^{k \times k}$ represents a convolution operation with a filter size of $k \times k$, and $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension. This block applies AvgPool and MaxPool along the channel dimension, aggregating information across all channels to generate two spatial context descriptors.
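Similarly, a sketch of CBAM-style spatial attention: average- and max-pooling across the base-learner channels yield two n × n descriptors, which a single convolution maps to one attention map with values in (0, 1). The filter size is not recoverable from this text, so k = 3 and the random kernel below are hypothetical, and the convolution is a naive zero-padded loop written for clarity rather than speed.

```python
import numpy as np

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Zero-padded 'same' cross-correlation: x is (c, n, n), kernel is (c, k, k) with odd k."""
    _, n, _ = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding on spatial axes only
    out = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(F: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Return a (1, n, n) attention map from channel-wise average and max pooling."""
    desc = np.stack([F.mean(axis=0), F.max(axis=0)])  # (2, n, n)
    return sigmoid(conv2d_same(desc, kernel))[np.newaxis]

rng = np.random.default_rng(1)
F = rng.integers(0, 2, size=(4, 6, 6)).astype(float)  # 4 hypothetical base learners
kernel = rng.normal(size=(2, 3, 3))                    # hypothetical k = 3
M_d = spatial_attention(F, kernel)
refined = M_d * F  # the same spatial weight is applied to every learner's channel
```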

3.5. TrioFold-Lite

For TrioFold-lite, we employ a learnable ensemble network [42] that assigns weights to the matrices produced by different base learners. First, we obtain the weighted sum of the adjacency matrices through the following formula:

$$\hat{A} = \sum_{v=1}^{l} w_v A^{(v)}$$

where $A^{(v)}$ is the adjacency matrix output by the $v$-th base learner, and $w_v$ is an automatically learned weight parameter optimized by gradient descent. To constrain the values of the adjacency matrix to the range between 0 and 1, we incorporate the softmax function into the network:

$$\hat{A} = \sum_{v=1}^{l} \frac{\exp(w_v)}{\sum_{u=1}^{l} \exp(w_u)}\, A^{(v)}$$
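The TrioFold-lite combination can be sketched as a softmax-weighted sum of the base learners' binary adjacency matrices. We assume the softmax is applied to the raw learnable weights, so the normalized weights are non-negative and sum to 1 and every entry of the result lies in [0, 1]; the weights and matrices are hypothetical, and the gradient-descent training of the weights is not shown.

```python
import numpy as np

def softmax(w: np.ndarray) -> np.ndarray:
    e = np.exp(w - np.max(w))  # subtract the max for numerical stability
    return e / e.sum()

def weighted_ensemble(A: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Softmax-weighted sum of binary adjacency matrices A (l, n, n) with raw weights w (l,)."""
    alpha = softmax(w)
    return np.tensordot(alpha, A, axes=1)  # sum over v of alpha[v] * A[v] -> (n, n)

A = np.zeros((3, 4, 4))
A[:, 0, 3] = A[:, 3, 0] = 1     # all three hypothetical learners predict pair (0, 3)
A[0, 1, 2] = A[0, 2, 1] = 1     # only the first learner predicts pair (1, 2)
w = np.array([0.5, 0.0, -0.5])  # hypothetical learned weights
P = weighted_ensemble(A, w)
```

A pair predicted by every learner receives a score of 1, while a pair predicted by a single learner receives that learner's normalized weight.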

3.6. Dataset

All datasets used in this study were processed uniformly as follows: (1) CD-HIT-EST [43] was used to remove sequences with >80% similarity, improving the representativeness of the training data so that the model can learn important features; (2) invalid sequences (such as sequences with missing nucleotides) were filtered out; (3) owing to limited computational resources, RNA sequences longer than 500 nucleotides were removed from all datasets except TestSetA. The datasets used in this study are as follows:

The Archive II dataset is a benchmark dataset established by Mathews [44]. The dataset contains 3975 RNA sequences from 10 RNA families, including small subunit ribosomal RNA, large subunit ribosomal RNA, 5S ribosomal RNA, Group I self-splicing introns, RNase P RNA, signal recognition particle RNA, tRNA, and tmRNA. All RSS in this dataset were obtained by comparative sequence analysis. This benchmark dataset is one of the most widely used RSS test sets.

The TS0 dataset is derived from the benchmark dataset established by Singh et al. [25], which is mainly sourced from the bpRNA-1m 1.0 database [45]. The bpRNA database covers a wide range of RNA families, with more than 100,000 sequences from 2588 RNA families, which makes it suitable for evaluating ensemble models on unseen RNA families. In this study, we use the same train-test split as UFold: bpRNA is divided into three datasets, TR0, VL0, and TS0, and only TS0 is used for experimental evaluation.

The bpRNA-new dataset is derived from the benchmark dataset established by Sato et al. [24], which is mainly sourced from the Rfam 14.2 database [46]. It provides rich RNA family information and helps verify the model's generalization ability across RNA families.

The PDB dataset is derived from the benchmark dataset established by Singh et al. [35] and can be further divided into TS1, TS2, and TS3. It is mainly sourced from high-resolution RNA structures released in the PDB database [47]. Following the method of SPOT-RNA2, homologous sequences were removed from the PDB dataset using BLAST-N (https://blast.ncbi.nlm.nih.gov/Blast.cgi, accessed on 16 August 2025) [48] with an e-value cutoff of 10. These structures are non-redundant with the existing training, validation, and test sets.

The TestSetA and TestSetB datasets are derived from the benchmark dataset established by Rivas [49], which is mainly sourced from the Rfam 10.0 database. TestSetA retains sequences longer than 500 nt, with sequence lengths ranging from 10 to 768 nt, and mainly covers 31 RNA families, such as tRNA, SRP RNA, 5S rRNA, tmRNA, and telomerase RNA. Sequence lengths in TestSetB range from 27 to 244 nt. The RNA families in TestSetA are completely different from those in TestSetB, so the two datasets can be considered independent. For TestSetA and TestSetB, after the preprocessing steps above, sequences containing pseudoknots were removed following the method used by MXfold2.

For TrioFold, TestSetB and ArchiveII are used as the training set to avoid overfitting, and sequences that also appear in the test sets are removed. TestSetA is used as the validation set, and model performance is further evaluated on the bpRNA-new, TS0, TS-inter, and PDB datasets. To assess sequence similarity between the training and evaluation datasets, we performed sequence identity analysis using VSEARCH [36]. We trained the model for 100 epochs, kept the checkpoint from epoch 15 based on its accuracy on TestSetA, and used this checkpoint for the subsequent performance evaluation of the ensemble model.

3.7. Loss Function

RSS prediction is a typical binary classification problem, and the binary cross-entropy (BCE) loss is designed for binary classification. The formula is as follows:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $N$ represents the number of samples, $y_i$ represents the real label, and $p_i$ represents the base-pairing probability output by the model.
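As a minimal sketch of this loss, assuming labels and predicted probabilities have already been flattened into equal-length lists of contact-map entries, the function below computes the mean BCE in plain Python. The optional `pos_weight` argument scales the positive (paired) term, analogous to the `pos_weight` argument of PyTorch's `BCEWithLogitsLoss` (which, unlike this sketch, takes logits rather than probabilities); the implementation details report a positive class weight of 300. The function name and clamping constant are illustrative assumptions, not the authors' training code.

```python
import math
from typing import Sequence


def weighted_bce(labels: Sequence[float], probs: Sequence[float],
                 pos_weight: float = 1.0, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over N flattened contact-map entries.

    labels: ground-truth values y_i in {0, 1}
    probs: predicted base-pairing probabilities p_i in [0, 1]
    pos_weight: multiplier on the positive (paired) term; 1.0 gives plain BCE
    """
    if len(labels) != len(probs) or len(labels) == 0:
        raise ValueError("labels and probs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(pos_weight * y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(labels)


# Example: one paired and three unpaired entries of a contact map.
y = [1, 0, 0, 0]
p = [0.7, 0.1, 0.2, 0.05]
print(weighted_bce(y, p))                    # plain BCE
print(weighted_bce(y, p, pos_weight=300.0))  # up-weighted positive entries
```

Because unpaired entries vastly outnumber paired ones in a contact map, up-weighting the positive term keeps the rare paired entries from being drowned out in the average.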

3.8. Baselines

In our study, we classify and introduce several baseline models to provide a comprehensive comparison with TrioFold. These baselines are grouped based on their underlying methodologies:

- Machine Learning-Based Models: Algorithms such as UFold (available at https://github.com/uci-cbcl/UFold, accessed on 16 August 2025), CONTRAfold, ContextFold, MXfold2 (available at https://github.com/mxfold/mxfold2, accessed on 16 August 2025), EternaFold (available at https://github.com/eternagame/EternaFold, accessed on 16 August 2025) and SPOT-RNA (available at https://github.com/jaswindersingh2/SPOT-RNA, accessed on 16 August 2025) leverage machine learning techniques, particularly deep learning and probabilistic models, to predict RNA secondary structures. These models are trained on large RNA datasets to capture complex sequence-structure relationships, offering high accuracy for various RNA types.

- Thermodynamic and Energy-Based Models: RNAfold (available at https://www.tbi.univie.ac.at/RNA/, accessed on 16 August 2025), Mfold (available at http://www.unafold.org/mfold/software/download-mfold.php, accessed on 16 August 2025), and LinearFold (available at https://linearfold.eecs.oregonstate.edu/, accessed on 16 August 2025) rely on thermodynamic principles, predicting RNA secondary structures by minimizing the free energy of the folded structure. LinearFold, while not a machine learning method itself, can use parameters learned by CONTRAfold, a machine learning-based model, and obtains its efficiency from linear-time dynamic programming with beam search rather than from a machine learning framework. These classical approaches are widely recognized for their robustness in energy-based RNA structure prediction.

To ensure fairness and accuracy in our comparisons, each model was run with the implementation and default parameter settings described in its original paper.

3.9. Implementation Details

In this study, data pre-processing and model training were mainly performed on a server equipped with four NVIDIA GeForce RTX 3090 GPUs and an Intel(R) Xeon(R) Gold 6230R CPU @ 2.10 GHz, running Ubuntu 20.04.4 LTS with Python 3.9.16 and PyTorch 1.10.0 [50]. The model was trained with the Adam optimizer using its default parameters and a batch size of 4. Because positive (paired) and negative (unpaired) samples are highly unbalanced, the positive class weight was set to 300 to balance their contributions during training.

3.10. Post-Processing

It is widely accepted that the RSS should satisfy certain hard constraints [51]. For a given sequence $x = (x_1, \ldots, x_L)$ of length $L$, the contact map of the RSS can be defined as a binary matrix $A^* \in \{0, 1\}^{L \times L}$, where $A^*_{ij} = 1$ if the $i$-th base is paired with the $j$-th base and $A^*_{ij} = 0$ otherwise. To make the output of the ensemble model more consistent with actual RSS, the predicted structure should follow these rules: (1) only canonical base pairs plus G-U pairs are allowed; (2) the contact map must be symmetric, i.e., $A = A^{\top}$; (3) paired bases must be at least four positions apart, i.e., $A_{ij} = 0$ for all $i, j$ with $|i - j| < 4$; (4) each base can pair with at most one other base, i.e., $\sum_{j} A_{ij} \le 1$ for all $i$.
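The four constraints can be checked directly on a binary contact map. The sketch below is an illustrative validator, not part of the authors' pipeline; the function name `violated_constraints` and the constants are assumptions chosen to mirror rules (1) to (4) above, using 0-based indices.

```python
from typing import List

# Constraint (1): Watson-Crick pairs plus the G-U wobble pair.
ALLOWED_PAIRS = {("A", "U"), ("U", "A"), ("G", "C"),
                 ("C", "G"), ("G", "U"), ("U", "G")}
# Constraint (3): paired bases must satisfy |i - j| >= 4.
MIN_SEPARATION = 4


def violated_constraints(seq: str, contact: List[List[int]]) -> List[int]:
    """Return the sorted numbers (1-4) of the constraints a binary contact map violates."""
    seq = seq.upper().replace("T", "U")  # treat DNA-style input as RNA
    n = len(seq)
    if len(contact) != n or any(len(row) != n for row in contact):
        raise ValueError("contact map must be L x L for a sequence of length L")
    violated = set()
    for i in range(n):
        if sum(contact[i]) > 1:                  # (4) at most one partner per base
            violated.add(4)
        for j in range(n):
            if contact[i][j] != contact[j][i]:   # (2) symmetry
                violated.add(2)
            if contact[i][j] == 1:
                if (seq[i], seq[j]) not in ALLOWED_PAIRS:  # (1) pair type
                    violated.add(1)
                if abs(i - j) < MIN_SEPARATION:            # (3) minimum separation
                    violated.add(3)
    return sorted(violated)
```

For example, a hairpin `GGGAAACCC` with pairs (0, 8), (1, 7), and (2, 6) returns an empty list, while pairing the adjacent bases 3 and 4 (A-A) violates constraints 1 and 3.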

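To meet these constraints, we use the post-processing method of E2Efold [52]. The output matrix of the model before post-processing is denoted $\hat{U}$. A mask matrix $M$ is defined as $M_{ij} = 1$ if bases $x_i$ and $x_j$ can form a canonical or G-U pair and $|i - j| \ge 4$, and $M_{ij} = 0$ otherwise. Defining the following nonlinear transformation $T$ on a real-valued matrix $\hat{A}$ yields a matrix that satisfies constraints 1, 2, and 3:

$$T(\hat{A}) = \frac{1}{2}\left(\hat{A} \circ \hat{A} + (\hat{A} \circ \hat{A})^{\top}\right) \circ M$$

where $\circ$ denotes the element-wise product.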
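A minimal plain-Python sketch of the mask and of the symmetrizing transformation $\tfrac{1}{2}(\hat{A}\circ\hat{A} + (\hat{A}\circ\hat{A})^{\top})\circ M$ used by E2Efold, assuming the mask is 1 exactly where two bases can form a canonical or G-U pair at least four positions apart. The helper names `pair_mask` and `transform_t` are hypothetical. Because the symmetrized square is multiplied element-wise by a symmetric mask, the result is symmetric, non-negative, and zero wherever pairing is disallowed.

```python
from typing import List

Matrix = List[List[float]]

# Canonical Watson-Crick pairs plus G-U wobble pairs.
ALLOWED_PAIRS = {("A", "U"), ("U", "A"), ("G", "C"),
                 ("C", "G"), ("G", "U"), ("U", "G")}


def pair_mask(seq: str, min_sep: int = 4) -> Matrix:
    """M[i][j] = 1 if bases i and j may pair (allowed type and |i - j| >= min_sep), else 0."""
    seq = seq.upper().replace("T", "U")
    n = len(seq)
    return [[1.0 if (seq[i], seq[j]) in ALLOWED_PAIRS and abs(i - j) >= min_sep else 0.0
             for j in range(n)] for i in range(n)]


def transform_t(a_hat: Matrix, mask: Matrix) -> Matrix:
    """T(A) = 1/2 * (A o A + (A o A)^T) o M, where o is the element-wise product."""
    n = len(a_hat)
    return [[0.5 * (a_hat[i][j] ** 2 + a_hat[j][i] ** 2) * mask[i][j]
             for j in range(n)] for i in range(n)]
```

Squaring before symmetrizing keeps every entry non-negative, which is why the transformation can be applied to an unconstrained real-valued matrix during optimization.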

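To satisfy constraint 4, the problem is cast as a constrained optimization problem:

$$\max_{\hat{A}} \; \frac{1}{2}\left\langle \hat{U},\, T(\hat{A}) \right\rangle - \rho \lVert \hat{A} \rVert_1 \quad \text{s.t.} \quad T(\hat{A})\,\mathbf{1} \le \mathbf{1}$$

where $\hat{U}$ is the model output obtained in the previous step and $\rho$ is a hyperparameter that controls the sparsity of the output matrix. Optimizing the output matrix with this algorithm yields a matrix that is highly similar to $\hat{U}$ while satisfying constraint 4.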

Finally, we set a threshold $\tau$: elements of the output greater than $\tau$ are set to 1 and all others to 0, yielding the final binary contact matrix.
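Enforcing constraint 4 exactly requires the iterative optimization described above. As a much simpler stand-in (a greedy heuristic, not E2Efold's algorithm), the sketch below combines the thresholding step with a one-partner rule: it keeps the highest-scoring pairs above the threshold while never assigning a base more than one partner. It assumes a symmetric score matrix; the function name and the threshold in the usage note are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def greedy_one_partner(scores: Matrix, threshold: float) -> List[List[int]]:
    """Binarize a symmetric score matrix so every base has at most one partner.

    Greedy heuristic (not E2Efold's optimization): visit candidate pairs (i < j)
    whose score exceeds `threshold` from highest to lowest score and accept a
    pair only if neither base is already paired.
    """
    n = len(scores)
    candidates = sorted(
        ((scores[i][j], i, j) for i in range(n) for j in range(i + 1, n)
         if scores[i][j] > threshold),
        reverse=True,
    )
    paired = set()
    out = [[0] * n for _ in range(n)]
    for _, i, j in candidates:
        if i in paired or j in paired:
            continue
        out[i][j] = out[j][i] = 1  # keep the output symmetric (constraint 2)
        paired.update((i, j))
    return out
```

With a threshold of 0.5 and scores 0.9 for (0, 5), 0.7 for (0, 4), 0.6 for (1, 5), and 0.55 for (1, 4), the sketch keeps (0, 5) and then (1, 4), because bases 0 and 5 are already taken when (0, 4) and (1, 5) are visited.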

3.11. Experimental Evaluation of TrioFold

Since RSS prediction determines whether the bases at each pair of positions are paired, it can be framed as a binary classification problem, and the predictions are categorized using a confusion matrix.

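Commonly used metrics for RSS prediction include recall, precision, and the F1 score. Instead of the Matthews correlation coefficient (MCC), we adopt the interaction network fidelity (INF), which closely approximates MCC for base-pair contact maps (where true negatives vastly outnumber the other outcomes) and more directly captures the base-pairing interactions characteristic of RNA structure prediction [53]. The metrics are defined as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{INF} = \sqrt{\mathrm{Precision} \times \mathrm{Recall}}$$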

If a predicted base pair also exists in the actual structure, it is a true positive (TP); if a predicted base pair does not exist in the actual structure, it is a false positive (FP); if a base pair is neither predicted nor present in the actual structure, it is a true negative (TN); and if a base pair exists in the actual structure but is not predicted, it is a false negative (FN).
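To make these definitions concrete, here is a minimal sketch that computes precision, recall, F1, and INF from two sets of base pairs, where each pair is an `(i, j)` tuple with `i < j`. None of these metrics needs the TN count, so it is not computed. Returning 0.0 when a denominator is zero is an assumption of this example.

```python
import math
from typing import Dict, Set, Tuple

Pair = Tuple[int, int]


def pair_metrics(pred: Set[Pair], true: Set[Pair]) -> Dict[str, float]:
    """Precision, recall, F1 and INF from predicted and reference base-pair sets."""
    tp = len(pred & true)   # predicted pairs present in the reference
    fp = len(pred - true)   # predicted pairs absent from the reference
    fn = len(true - pred)   # reference pairs that were not predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    inf = math.sqrt(precision * recall)  # INF = sqrt(PPV x sensitivity)
    return {"precision": precision, "recall": recall, "f1": f1, "inf": inf}


# Two of three predicted pairs are correct and one true pair is missed.
print(pair_metrics({(0, 8), (1, 7), (3, 5)}, {(0, 8), (1, 7), (2, 6)}))
```

In this example TP = 2, FP = 1, and FN = 1, so all four metrics equal 2/3.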

To measure the prediction differences between base learners, we use the Jaccard distance as a dissimilarity metric, calculated as follows:

$$d_J(A, B) = 1 - \frac{|A \cap B|}{|A \cup B|}$$

where $A$ and $B$ are the contact maps (treated as sets of predicted base pairs) of a single RNA sequence's secondary structure, as predicted by two base learners. The greater the Jaccard distance, the greater the difference between the predictions of the two base learners.
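A minimal sketch of the Jaccard distance between two base learners' predictions for one sequence, representing each contact map by its set of `(i, j)` pairs with `i < j`. Defining the distance between two empty predictions as 0.0 is an assumption of this example.

```python
from typing import Set, Tuple

Pair = Tuple[int, int]


def jaccard_distance(a: Set[Pair], b: Set[Pair]) -> float:
    """1 - |A intersect B| / |A union B| for two sets of predicted base pairs."""
    union = a | b
    if not union:  # both learners predict no pairs: treat them as identical
        return 0.0
    return 1.0 - len(a & b) / len(union)


# One shared pair out of three distinct pairs gives a distance of 2/3.
print(jaccard_distance({(0, 8), (1, 7)}, {(0, 8), (2, 6)}))
```

Identical predictions give 0 and predictions with no pair in common give 1.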

3.12. Principal Component Analysis

For PCA, for each predicted structure we computed the element-wise squared error against the ground-truth contact map, reshaped the resulting error matrix into a one-dimensional vector, and used these vectors as features for PCA.
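The feature construction and PCA can be sketched in plain Python as below. This assumes the per-structure error is the element-wise squared error between predicted and ground-truth contact maps of the same length, and it extracts only the first principal component by power iteration on the Gram matrix of the centered features; in practice a library routine such as scikit-learn's `PCA` would normally be used. The function names are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def squared_error_features(pred_maps: List[Matrix], true_map: Matrix) -> List[List[float]]:
    """Flatten the element-wise squared error (P - T)^2 of each prediction into a 1-D vector."""
    return [[(p - t) ** 2
             for p_row, t_row in zip(pred, true_map)
             for p, t in zip(p_row, t_row)]
            for pred in pred_maps]


def first_pc_scores(features: List[List[float]], iters: int = 500, seed: int = 0) -> List[float]:
    """Scores of each row on the first principal component (sign is arbitrary).

    Power iteration on the Gram matrix of the column-centered features: if u is
    its top unit eigenvector with eigenvalue lam, the PC1 scores are u * sqrt(lam).
    """
    n, d = len(features), len(features[0])
    means = [sum(row[k] for row in features) / n for k in range(d)]
    centered = [[row[k] - means[k] for k in range(d)] for row in features]
    gram = [[sum(a * b for a, b in zip(centered[i], centered[j])) for j in range(n)]
            for i in range(n)]
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(n)]  # random positive start vector
    for _ in range(iters):
        w = [sum(gram[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # all rows identical: no variance to explain
            return [0.0] * n
        v = [x / norm for x in w]
    lam = sum(v[i] * sum(gram[i][j] * v[j] for j in range(n)) for i in range(n))
    return [x * math.sqrt(max(lam, 0.0)) for x in v]
```

Working with the n x n Gram matrix rather than the d x d covariance matrix keeps the computation small, because the number of predicted structures is usually far smaller than the number of contact-map entries.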

3.13. Webserver

A webserver was developed using HTML5, PHP, Apache, and related technologies to provide predictions from TrioFold and other widely used algorithms, including CONTRAfold, MXfold2, UFold, ContextFold, EternaFold, LinearFold, RNAfold, and SPOT-RNA. The webserver is freely available at http://triofold.aiddlab.com/ (accessed on 16 August 2025). The input sequence length is limited owing to computational resource constraints.

4. Discussion and Conclusions

Augmented by DL algorithms, RSS prediction algorithms have made huge leaps over the past several years. However, the generalizability of existing algorithms is usually unsatisfactory, which is to some extent inevitable given the data-dependent nature of machine learning algorithms [54]. Owing to the small amount and low quality of existing RNA structure data, the highly imbalanced distribution of RNA families, and limited RNA sample representation strategies, DL-based RSS prediction algorithms often suffer from overfitting. The huge number of model parameters may further lead to overfitting and poor generalizability [55]. To circumvent potential overfitting, we built our method on ensemble learning, which helps mitigate the pitfalls associated with over-parameterization. We hope this study will contribute ideas that help enhance the generalizability and performance of DL-based RSS prediction algorithms.

Collectively, in this study, we present two end-to-end algorithms, TrioFold and TrioFold-lite, which predict RSS based on ensemble learning and convolutional attention mechanisms. Our method improves the accuracy of existing RSS prediction algorithms and alleviates the overfitting problem commonly encountered in ML/DL-based models, offering insights into strategies for enhancing both generalizability and overall performance. Although we did not retrain the base learners, which may overfit when predicting certain types of RNA, our algorithm still outperformed them. Furthermore, we have established a webserver that provides RSS prediction and analysis functions. After submitting RNA sequences, users automatically obtain comprehensive information, including prediction results from various algorithms, structure visualizations, and key properties (e.g., minimum free energy) of individual RSS, all within a one-stop workflow, enabling biologists to use these tools without programming.

Limitations of the Study

Although we demonstrated that TrioFold/TrioFold-lite exhibits enhanced generalizability and accuracy in predicting both intra-family and inter-family sequences, our study still has limitations. The model takes only the primary RNA sequence as input; integrating other information, such as experimentally derived SHAPE reactivity [56] and multiple sequence alignment (MSA)-derived features, could further enhance the accuracy of RSS prediction. In addition, numerous other RSS prediction algorithms exist. Expanding the choice of base learners to incorporate additional algorithms, especially those able to predict RNA structures with pseudoknots [57], may further improve the performance of TrioFold/TrioFold-lite.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/molecules30163447/s1.

Author Contributions

X.Z., H.L. and D.H. designed and performed the experiments, analyzed the data and wrote the manuscript. H.L., Z.L. and S.W. (Shuaiqi Wang) collected and organized the datasets. J.Q., J.G. and Y.L. provided support on webserver deployment. R.Y., H.Z. and S.W. (Shaofei Wang) collected and extracted the RNA structures from the PDB database. D.J., Y.C. and X.Z. provided funding and laboratory resources for the experiments. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by grants from the National Key Research and Development Program of China (2023YFC3404000), Fudan University (DGF301009-3/001), the National Natural Science Foundation of China (32000479, 82371781 and 82073752) and Shenzhen Bay Laboratory (201901).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this study have been deposited at https://doi.org/10.5281/zenodo.12714014 (accessed on 16 August 2025). The code is available at https://github.com/sfsdfd62/TrioFold (accessed on 16 August 2025) and https://figshare.com/articles/software/TrioFold/26377156 (accessed on 16 August 2025). The Supplementary Table S1 is also available at https://doi.org/10.5281/zenodo.15765109 (accessed on 16 August 2025).

Acknowledgments

We sincerely appreciate the valuable comments from two anonymous reviewers.

Conflicts of Interest

Author Hui Zhao was employed by the company Byterna Therapeutics Ltd., Shanghai, China. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Caporali, A.; Anwar, M.; Devaux, Y.; Katare, R.; Martelli, F.; Srivastava, P.K.; Pedrazzini, T.; Emanueli, C. Non-coding RNAs as therapeutic targets and biomarkers in ischaemic heart disease. Nat. Rev. Cardiol. 2024, 21, 556–573.

- Kim, Y.-A.; Mousavi, K.; Yazdi, A.; Zwierzyna, M.; Cardinali, M.; Fox, D.; Peel, T.; Coller, J.; Aggarwal, K.; Maruggi, G. Computational design of mRNA vaccines. Vaccine 2024, 42, 1831–1840.

- Sato, K.; Hamada, M. Recent trends in RNA informatics: A review of machine learning and deep learning for RNA secondary structure prediction and RNA drug discovery. Brief. Bioinform. 2023, 24, bbad186.

- Sumi, S.; Hamada, M.; Saito, H. Deep generative design of RNA family sequences. Nat. Methods 2024, 21, 435–443.

- Shi, H.; Rangadurai, A.; Abou Assi, H.; Roy, R.; Case, D.A.; Herschlag, D.; Yesselman, J.D.; Al-Hashimi, H.M. Rapid and accurate determination of atomistic RNA dynamic ensemble models using NMR and structure prediction. Nat. Commun. 2020, 11, 5531.

- Wacker, A.; Weigand, J.E.; Akabayov, S.R.; Altincekic, N.; Bains, J.K.; Banijamali, E.; Binas, O.; Castillo-Martinez, J.; Cetiner, E.; Ceylan, B. Secondary structure determination of conserved SARS-CoV-2 RNA elements by NMR spectroscopy. Nucleic Acids Res. 2020, 48, 12415–12435.

- RNAcentral Consortium. RNAcentral 2021: Secondary structure integration, improved sequence search and new member databases. Nucleic Acids Res. 2021, 49, D212–D220.

- Kim, G.; Lee, S.; Levy Karin, E.; Kim, H.; Moriwaki, Y.; Ovchinnikov, S.; Steinegger, M.; Mirdita, M. Easy and accurate protein structure prediction using ColabFold. Nat. Protoc. 2024, 20, 620–642.

- Abramson, J.; Adler, J.; Dunger, J.; Evans, R.; Green, T.; Pritzel, A.; Ronneberger, O.; Willmore, L.; Ballard, A.J.; Bambrick, J. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature 2024, 630, 493–500.

- Fu, L.; Cao, Y.; Wu, J.; Peng, Q.; Nie, Q.; Xie, X. UFold: Fast and accurate RNA secondary structure prediction with deep learning. Nucleic Acids Res. 2022, 50, e14.

- Franke, J.K.; Runge, F.; Koeksal, R.; Backofen, R.; Hutter, F. RNAformer: A Simple Yet Effective Deep Learning Model for RNA Secondary Structure Prediction. bioRxiv 2024. bioRxiv:2024.02.12.57988.

- Wang, N.; Bian, J.; Li, Y.; Li, X.; Mumtaz, S.; Kong, L.; Xiong, H. Multi-purpose RNA language modelling with motif-aware pretraining and type-guided fine-tuning. Nat. Mach. Intell. 2024, 6, 548–557.

- Wang, Z.; Feng, Y.; Tian, Q.; Liu, Z.; Yan, P.; Li, X. RNADiffFold: Generative RNA secondary structure prediction using discrete diffusion models. Brief. Bioinform. 2025, 26, bbae618.

- Gumna, J.; Zok, T.; Figurski, K.; Pachulska-Wieczorek, K.; Szachniuk, M. RNAthor–fast, accurate normalization, visualization and statistical analysis of RNA probing data resolved by capillary electrophoresis. PLoS ONE 2020, 15, e0239287.

- Wirecki, T.K.; Merdas, K.; Bernat, A.; Boniecki, M.J.; Bujnicki, J.M.; Stefaniak, F. RNAProbe: A web server for normalization and analysis of RNA structure probing data. Nucleic Acids Res. 2020, 48, W292–W299.

- Lorenz, R.; Bernhart, S.H.; Höner zu Siederdissen, C.; Tafer, H.; Flamm, C.; Stadler, P.F.; Hofacker, I.L. ViennaRNA Package 2.0. Algorithms Mol. Biol. 2011, 6, 26.

- Bellaousov, S.; Reuter, J.S.; Seetin, M.G.; Mathews, D.H. RNAstructure: Web servers for RNA secondary structure prediction and analysis. Nucleic Acids Res. 2013, 41, W471–W474.

- Runge, F.; Franke, J.K.; Fertmann, D.; Hutter, F. Rethinking performance measures of RNA secondary structure problems. arXiv 2023, arXiv:2401.05351.

- Zhao, Q.; Zhao, Z.; Fan, X.; Yuan, Z.; Mao, Q.; Yao, Y. Review of machine learning methods for RNA secondary structure prediction. PLoS Comput. Biol. 2021, 17, e1009291.

- Cao, Y.; Geddes, T.A.; Yang, J.Y.H.; Yang, P. Ensemble deep learning in bioinformatics. Nat. Mach. Intell. 2020, 2, 500–508.

- Flamm, C.; Wielach, J.; Wolfinger, M.T.; Badelt, S.; Lorenz, R.; Hofacker, I.L. Caveats to deep learning approaches to RNA secondary structure prediction. Front. Bioinform. 2022, 2, 835422.

- Wu, H.; Levinson, D. The ensemble approach to forecasting: A review and synthesis. Transp. Res. Part C Emerg. Technol. 2021, 132, 103357.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Sato, K.; Akiyama, M.; Sakakibara, Y. RNA secondary structure prediction using deep learning with thermodynamic integration. Nat. Commun. 2021, 12, 941.

- Singh, J.; Hanson, J.; Paliwal, K.; Zhou, Y. RNA secondary structure prediction using an ensemble of two-dimensional deep neural networks and transfer learning. Nat. Commun. 2019, 10, 5407.

- Wayment-Steele, H.K.; Kladwang, W.; Strom, A.I.; Lee, J.; Treuille, A.; Becka, A.; Participants, E.; Das, R. RNA secondary structure packages evaluated and improved by high-throughput experiments. Nat. Methods 2022, 19, 1234–1242.

- Do, C.B.; Woods, D.A.; Batzoglou, S. CONTRAfold: RNA secondary structure prediction without physics-based models. Bioinformatics 2006, 22, e90–e98.

- Zakov, S.; Goldberg, Y.; Elhadad, M.; Ziv-Ukelson, M. Rich parameterization improves RNA structure prediction. J. Comput. Biol. 2011, 18, 1525–1542.

- Zuker, M. Mfold web server for nucleic acid folding and hybridization prediction. Nucleic Acids Res. 2003, 31, 3406–3415.

- Huang, L.; Zhang, H.; Deng, D.; Zhao, K.; Liu, K.; Hendrix, D.A.; Mathews, D.H. LinearFold: Linear-time approximate RNA folding by 5′-to-3′ dynamic programming and beam search. Bioinformatics 2019, 35, i295–i304.

- Ganaie, M.A.; Hu, M.; Malik, A.; Tanveer, M.; Suganthan, P. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

- Bonab, H.; Can, F. Less is more: A comprehensive framework for the number of components of ensemble classifiers. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2735–2745.

- Szikszai, M.; Wise, M.; Datta, A.; Ward, M.; Mathews, D.H. Deep learning models for RNA secondary structure prediction (probably) do not generalize across families. Bioinformatics 2022, 38, 3892–3899.

- Nawrocki, E.P.; Eddy, S.R. Infernal 1.1: 100-fold faster RNA homology searches. Bioinformatics 2013, 29, 2933–2935.

- Singh, J.; Paliwal, K.; Zhang, T.; Singh, J.; Litfin, T.; Zhou, Y. Improved RNA secondary structure and tertiary base-pairing prediction using evolutionary profile, mutational coupling and two-dimensional transfer learning. Bioinformatics 2021, 37, 2589–2600.

- Rognes, T.; Flouri, T.; Nichols, B.; Quince, C.; Mahé, F. VSEARCH: A versatile open source tool for metagenomics. PeerJ 2016, 4, e2584.

- Kerpedjiev, P.; Hammer, S.; Hofacker, I.L. Forna (force-directed RNA): Simple and effective online RNA secondary structure diagrams. Bioinformatics 2015, 31, 3377–3379.

- Mattei, E.; Pietrosanto, M.; Ferrè, F.; Helmer-Citterich, M. Web-Beagle: A web server for the alignment of RNA secondary structures. Nucleic Acids Res. 2015, 43, W493–W497.

- Krzywinski, M.; Schein, J.; Birol, I.; Connors, J.; Gascoyne, R.; Horsman, D.; Jones, S.J.; Marra, M.A. Circos: An information aesthetic for comparative genomics. Genome Res. 2009, 19, 1639–1645.

- Tsybulskyi, V.; Semenchenko, E.; Meyer, I.M. e-RNA: A collection of web-servers for the prediction and visualisation of RNA secondary structure and their functional features. Nucleic Acids Res. 2023, 51, W160–W167.

- Binet, T.; Avalle, B.; Dávila Felipe, M.; Maffucci, I. AptaMat: A matrix-based algorithm to compare single-stranded oligonucleotides secondary structures. Bioinformatics 2023, 39, btac752.

- Chen, Z.; Fu, L.; Yao, J.; Guo, W.; Plant, C.; Wang, S. Learnable graph convolutional network and feature fusion for multi-view learning. Inf. Fusion 2023, 95, 109–119.

- Fu, L.; Niu, B.; Zhu, Z.; Wu, S.; Li, W. CD-HIT: Accelerated for clustering the next-generation sequencing data. Bioinformatics 2012, 28, 3150–3152.

- Sloma, M.F.; Mathews, D.H. Exact calculation of loop formation probability identifies folding motifs in RNA secondary structures. RNA 2016, 22, 1808–1818.

- Danaee, P.; Rouches, M.; Wiley, M.; Deng, D.; Huang, L.; Hendrix, D. bpRNA: Large-scale automated annotation and analysis of RNA secondary structure. Nucleic Acids Res. 2018, 46, 5381–5394.

- Kalvari, I.; Nawrocki, E.P.; Ontiveros-Palacios, N.; Argasinska, J.; Lamkiewicz, K.; Marz, M.; Griffiths-Jones, S.; Toffano-Nioche, C.; Gautheret, D.; Weinberg, Z. Rfam 14: Expanded coverage of metagenomic, viral and microRNA families. Nucleic Acids Res. 2021, 49, D192–D200.

- Burley, S.K.; Bhikadiya, C.; Bi, C.; Bittrich, S.; Chao, H.; Chen, L.; Craig, P.A.; Crichlow, G.V.; Dalenberg, K.; Duarte, J.M. RCSB Protein Data Bank (RCSB.org): Delivery of experimentally-determined PDB structures alongside one million computed structure models of proteins from artificial intelligence/machine learning. Nucleic Acids Res. 2023, 51, D488–D508.

- Altschul, S.F.; Madden, T.L.; Schäffer, A.A.; Zhang, J.; Zhang, Z.; Miller, W.; Lipman, D.J. Gapped BLAST and PSI-BLAST: A new generation of protein database search programs. Nucleic Acids Res. 1997, 25, 3389–3402.

- Rivas, E.; Lang, R.; Eddy, S.R. A range of complex probabilistic models for RNA secondary structure prediction that includes the nearest-neighbor model and more. RNA 2012, 18, 193–212.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019); NeurIPS: San Diego, CA, USA, 2019; Volume 32, ISBN 9781713807933.

- Steeg, E.W. Neural networks, adaptive optimization, and RNA secondary structure prediction. In Artificial Intelligence and Molecular Biology; MIT Press: Cambridge, MA, USA, 1993; pp. 121–160. ISBN 0262581159.

- Chen, X.; Li, Y.; Umarov, R.; Gao, X.; Song, L. RNA secondary structure prediction by learning unrolled algorithms. arXiv 2020, arXiv:2002.05810.

- Parisien, M.; Cruz, J.A.; Westhof, E.; Major, F. New metrics for comparing and assessing discrepancies between RNA 3D structures and models. RNA 2009, 15, 1875–1885.

- Justyna, M.; Antczak, M.; Szachniuk, M. Machine learning for RNA 2D structure prediction benchmarked on experimental data. Brief. Bioinform. 2023, 24, bbad153.

- Salman, S.; Liu, X. Overfitting mechanism and avoidance in deep neural networks. arXiv 2019, arXiv:1901.06566.

- Morandi, E.; van Hemert, M.J.; Incarnato, D. SHAPE-guided RNA structure homology search and motif discovery. Nat. Commun. 2022, 13, 1722.

- Gong, T.; Ju, F.; Bu, D. Accurate prediction of RNA secondary structure including pseudoknots through solving minimum-cost flow with learned potentials. Commun. Biol. 2024, 7, 297.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).