Abstract

The inverse Gaussian (IG) distribution, as an important class of skewed continuous distributions, is widely applied in fields such as lifetime testing, financial modeling, and volatility analysis. This paper makes two primary contributions to the statistical inference of the IG distribution. First, a systematic investigation is presented, for the first time, into three types of representative points (RPs)—Monte Carlo (MC-RPs), quasi-Monte Carlo (QMC-RPs), and mean square error RPs (MSE-RPs)—as a tool for the efficient discrete approximation of the IG distribution, thereby addressing the common scenario where practical data is discrete or requires discretization. The performance of these RPs is thoroughly examined in applications such as low-order moment estimation, density function approximation, and resampling. Simulation results demonstrate that the MSE-RPs consistently outperform the other two types in terms of approximation accuracy and robustness. Second, the Harrell–Davis (HD) and three Sfakianakis–Verginis (SV1, SV2, SV3) quantile estimators are introduced to enhance the representativeness of samples from the IG distribution, thereby significantly improving the accuracy of parameter estimation. Moreover, case studies based on real-world data confirm the effectiveness and practical utility of this quantile estimator methodology.

1. Introduction

Statistical distributions hold a pivotal position in information theory, as they outline the probabilistic features of data or signals, thereby directly influencing the precision and effectiveness of information representation, transmission, compression, and reconstruction. Entropy, being the foremost metric in the realm of information theory, relies on the statistical distribution of the random variable. Numerous applications in information theory necessitate the presumption of the data’s statistical distribution. While the normal distribution is commonly adopted in most statistical analyses owing to its mathematical ease and broad applicability, real-world data often display skewness, prompting the need for more adaptable models. Brownian motion is a widely used model for stochastic processes. In 1915, Schrödinger [] described the probability distribution of the first passage time in Brownian motion. Thirty years later, in 1945, Tweedie [] gave the inverse relationship between the cumulant generating function of the first passage time distribution and that of the normal distribution, and named it the inverse Gaussian (IG) distribution. Then, in 1947, Wald [] derived the distribution as a limiting form for the sample size distribution in a sequential probability ratio test, which is why it is known in the Russian literature as the Wald distribution. The IG distribution is discussed in various books on stochastic processes and probability theory, such as Cox and Miller [] and Bartlett []. This distribution is also known as the Gaussian first passage time distribution [], and sometimes as the first passage time distribution of Brownian motion with positive drift []. The IG distribution is suitable for modeling asymmetric data due to its skewness and its relationship to Brownian motion. Folks and Chhikara [] and Chhikara and Folks [] have conducted a comprehensive examination of the mathematical and statistical properties of this distribution.

The interpretation of the inverse Gaussian random variable as a first passage time indicates its potential usefulness in examining lifetimes or the frequency of event occurrences across various fields. Chhikara and Folks [] suggested that the IG distribution is a useful model for capturing the early occurrence of events such as failures or repairs in the lifetime of industrial products. Iyengar and Patwardhan [] indicated that the IG distribution can be used to model failure times of equipment. The IG distribution has also found applications in other fields. For example, Kourogiorgas et al. [] applied the IG distribution to a new rain attenuation time series synthesizer for Earth–space links, which enables accurate evaluation of how satellite communication networks are operating. In insurance and risk analysis, Punzo [] used the IG distribution to model bodily injury claims and to analyze economic data concerning Italian households’ incomes. In traffic engineering, Krbálek [] used the IG distribution to model vehicular flow.

In statistics, for an unknown continuous statistical distribution, using an empirical distribution of random samples is a conventional approach to approximate the target distribution. Nevertheless, this method frequently results in low accuracy. Thus, to retain as much information of the target distribution as possible, the support points for the discrete approximation, also called representative points (RPs), are investigated. For a comprehensive review of RPs, one may refer to Fang and Pan []. Representative points hold significant potential for applications in statistical simulation and inference. One promising application is a new approach to simulation and resampling that integrates number-theoretic methods. Li et al. [] proposed moment-constrained mean square error representative samples (MCM-RS), which are generated from a continuous distribution via a method that minimizes the mean square error (MSE) between the sample and the original distribution while ensuring the first two sample moments match preset values. Peng et al. [] utilized representative points from a Beta distribution to weight patient origin data, thereby constructing the Patient Regional Index (PRI). This study serves as a practical application of representative points from different distributions. In the existing literature, various types of representative points corresponding to different statistical distributions have been investigated. Especially for complex distributions, the study of their representative points is indispensable. Examples include the discretization of a MixN by a fixed number of points under the minimum mean squared error criterion, MSE-RPs of Pareto distributions and their estimation, and RPs of the generalized alpha skew-t distribution with applications []. To the best of our knowledge, the representative points of the inverse Gaussian distribution have not yet been investigated, despite their potential utility.
Therefore, we investigate three types of representative points for the inverse Gaussian distribution and explore their applications in estimation of moments and density function, as well as resampling.

This paper aims to investigate the applications of RPs as well as a dedicated parameter estimation approach based on nonparametric quantile estimators for the IG distribution. The main contributions of this work are summarized as follows:

1. We establish, to the best of our knowledge, the first systematic comparative framework for three distinct types of representative points, namely Monte Carlo (MC-RPs), quasi-Monte Carlo (QMC-RPs), and mean square error RPs (MSE-RPs), specifically on the inverse Gaussian distribution. This framework provides a benchmark for evaluating discrete approximation quality in terms of moment estimation, density approximation, and resampling efficiency.

2. We introduce a novel parameter estimation methodology for the IG distribution by employing the Harrell–Davis (HD) [] and Sfakianakis–Verginis (SV1, SV2, SV3) [] quantile estimators. This constitutes the first application and comprehensive demonstration of these estimators for enhancing sample representativeness and significantly improving the accuracy of IG parameter estimation.

3. Through extensive simulations and real-world case studies, we provide a comprehensive performance analysis. Our results not only conclusively demonstrate the superiority of MSE-RPs in approximation tasks but also validate the practical utility and effectiveness of the proposed quantile-based estimation framework.

The rest of this paper is organized as follows. Section 2 introduces the fundamental properties of the inverse Gaussian distribution. Section 3 details the generation of representative points and their applications in statistical simulation. Section 4 analyzes resampling methods based on representative points for the IG distribution. Section 5 discusses parameter estimation for the IG distribution using samples enhanced by the introduced nonparametric quantile estimators. A real-data case study is presented in Section 6. Finally, Section 7 provides the conclusions and future research directions.

2. Basic Properties of the IG Distribution

Definition 1.

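A random variable $X$ is said to follow the IG distribution with parameters $\mu$ and $\lambda$, denoted as $X \sim IG(\mu, \lambda)$, if its probability density function is

$$f(x; \mu, \lambda) = \sqrt{\frac{\lambda}{2\pi x^{3}}} \exp\left\{-\frac{\lambda (x-\mu)^{2}}{2\mu^{2} x}\right\},$$

where $x > 0$, $\mu > 0$, and $\lambda > 0$. Denote its distribution function by $F(x; \mu, \lambda)$.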

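The mean of the IG distribution is $E(X) = \mu$, and the variance is $\mathrm{Var}(X) = \mu^{3}/\lambda$ []. The maximum likelihood estimates (MLEs) of $\mu$ and $\lambda$ are

$$\hat{\mu} = \bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad \hat{\lambda}^{-1} = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{1}{X_i} - \frac{1}{\bar{X}}\right),$$

where $X_1, \ldots, X_n$ is a random sample from IG$(\mu, \lambda)$. Furthermore, it is also known that $\bar{X} \sim IG(\mu, n\lambda)$, $n\lambda/\hat{\lambda} \sim \chi^{2}_{n-1}$, and $\bar{X}$ and $\hat{\lambda}$ are independent []. Folks and Chhikara [] proved that the uniformly minimum variance unbiased estimators for $\mu$ and $1/\lambda$ are

$$\tilde{\mu} = \bar{X}, \qquad \widetilde{\lambda^{-1}} = \frac{1}{n-1}\sum_{i=1}^{n}\left(\frac{1}{X_i} - \frac{1}{\bar{X}}\right).$$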
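As an illustration, the closed-form estimators of this section can be computed directly from data. The following is a minimal Python sketch, assuming the parameterization IG($\mu$, $\lambda$) with mean $\mu$ and shape $\lambda$, the MLE $\hat{\lambda} = n/V$ with $V = \sum_i (1/x_i - 1/\bar{x})$, and the unbiased estimator $V/(n-1)$ of $1/\lambda$. The function names and the three-point data vector are hypothetical and serve only as an example.

```python
def ig_mle(xs):
    """Closed-form MLEs (mu_hat, lambda_hat) for an IG(mu, lambda) sample."""
    n = len(xs)
    mu_hat = sum(xs) / n
    # V = sum(1/x_i - 1/x_bar); the MLE of 1/lambda is V / n
    v = sum(1.0 / x - 1.0 / mu_hat for x in xs)
    return mu_hat, n / v


def ig_umvue(xs):
    """Unbiased estimators of mu and 1/lambda: x_bar and V / (n - 1)."""
    n = len(xs)
    mu_tilde = sum(xs) / n
    v = sum(1.0 / x - 1.0 / mu_tilde for x in xs)
    return mu_tilde, v / (n - 1)


# Hypothetical data, for illustration only
data = [1.0, 2.0, 4.0]
mu_hat, lam_hat = ig_mle(data)
mu_tilde, inv_lam_tilde = ig_umvue(data)
print(mu_hat, lam_hat, inv_lam_tilde)
```

Both estimators depend on the data only through $\bar{x}$ and $V$, which is why they differ only in the divisor ($n$ versus $n-1$).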

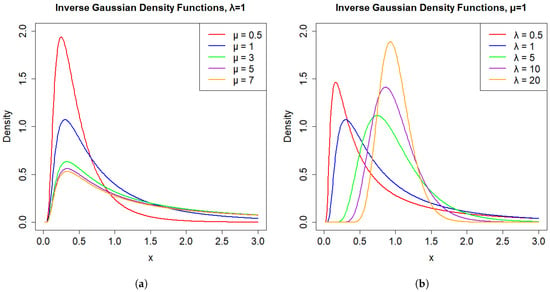

The shapes of the IG distributions with varying parameter sets are depicted in Figure 1. In Figure 1a, where $\mu$ is fixed, the distribution for lower values of $\lambda$ demonstrates a higher peak and a steep decline in probability, which suggests a sharper distribution. As $\lambda$ increases, the peak becomes less pronounced, indicating a distribution with a heavier tail. From Figure 1b, it can be seen that as $\mu$ increases, the peak value of the density curve gradually decreases, and the peak position moves along the positive direction of the x-axis. At the same time, the entire curve extends to the right, reflecting the influence of the mean of the inverse Gaussian distribution on the position and shape of the distribution. The larger $\mu$ is, the farther to the right the center of the distribution lies and the flatter the peak is.

Figure 1.

(a) Density functions of IG for fixed $\mu$ and increasing $\lambda$. (b) Density functions of IG for fixed $\lambda$ and increasing $\mu$.

3. Representative Points of IG Distribution

Three types of representative points are discussed in this section: MC-RPs, QMC-RPs, and MSE-RPs. The MSE-RPs of the IG distribution are obtained by three algorithms: the parametric k-means algorithm, the NTLBG algorithm, and the Fang–He algorithm [], which solves a system of nonlinear equations. Furthermore, we discuss applications of the representative points to moment estimation and density estimation.

3.1. Three Types of Representative Points

3.1.1. MC-RPs

Let $X \sim F(x; \theta)$ be a random vector, where $\theta$ denotes the parameters. In conventional statistics, inferences about the population are drawn using an independent and identically distributed random sample $x_1, \ldots, x_n$ from $F$. The empirical distribution is defined as

$$F_n(x) = \frac{1}{n}\sum_{i=1}^{n} I\{x_i \le x\},$$

where $I\{A\}$ is the indicator function of set $A$. This is a discrete distribution assigning probability $1/n$ to each sample point, serving as a consistent approximation to $F(x)$.

In statistical simulation, the random sample is generated computationally via Monte Carlo methods, and its points are referred to as MC-RPs. Efron [] extended this idea into the bootstrap method, where samples are drawn from the empirical distribution rather than from $F$.

Although widely used, the MC method has limited efficiency due to the slow convergence rate of the empirical distribution to $F$. This slow convergence leads to suboptimal performance in numerical integration, motivating alternative approaches.

3.1.2. QMC-RPs

Consider computing a high-dimensional integral in the canonical form:

$$I(g) = \int_{C^{s}} g(x)\,dx,$$

where $g$ is a continuous function defined on the unit cube $C^{s} = [0,1]^{s}$. Monte Carlo methods approximate $I(g)$ using random samples from the uniform distribution $U(C^{s})$, achieving a convergence rate of $O(n^{-1/2})$. Quasi-Monte Carlo (QMC) methods improve this by constructing point sets $\mathcal{P}_n = \{x_1, \ldots, x_n\}$ that are evenly dispersed over $C^{s}$, achieving a convergence rate of $O(n^{-1}(\log n)^{s})$. For the theoretical foundations and methodologies of QMC, one may consult works by Hua and Wang [] as well as Niederreiter []. In earlier research, the star discrepancy was frequently used as a metric to assess the uniformity of $\mathcal{P}_n$ within $C^{s}$. The star discrepancy is defined as

$$D(\mathcal{P}_n) = \sup_{x \in C^{s}} \left|F_n(x) - F(x)\right|,$$

where $F(x)$ represents the cumulative distribution function (cdf) of $U(C^{s})$ and $F_n(x)$ is the empirical distribution corresponding to $\mathcal{P}_n$. An optimal set $\mathcal{P}_n$ minimizes $D(\mathcal{P}_n)$. In such a case, the points in $\mathcal{P}_n$ are termed QMC-RPs, which serve as support points of $F_n(x)$ with each point having an equal probability of $1/n$.

The optimality of the point set has a profound theoretical foundation. As demonstrated in Examples 1.1 and 1.3 of the seminal work by Fang and Wang [], this set achieves the lowest star-discrepancy, establishing its optimality under a prominent criterion in quasi-Monte Carlo methods for any continuous distribution. Meanwhile, from the perspective of statistical distance minimization, Barbiero and Hitaj [] presented the following fundamental result, proving that the same point set is also optimal under the Cramér–von Mises criterion.

Theorem 1.

Let be a strictly increasing and continuous cumulative distribution function, and k be a positive integer. Consider the set of all discrete distributions with k support points, with cumulative distribution function .

Then, for any , the unique optimal discrete distribution that minimizes the Cramér–von Mises distance family

is given by the following:

Support Points (Quantization Points): $x_i = F^{-1}(u_i)$, where $u_i = \frac{2i-1}{2k}$, for $i = 1, \ldots, k$.

Probabilities (Weights): $p_i = \frac{1}{k}$, for $i = 1, \ldots, k$ (i.e., a discrete uniform distribution).

In this paper, we construct the QMC-RPs for the IG distribution by applying the above theoretical result. Specifically, the support points are generated via the inverse transformation method using the optimal point set

$$\left\{F^{-1}\left(\frac{2i-1}{2k}\right),\ i = 1, \ldots, k\right\}$$

with corresponding probability $1/k$ [16, 32]. Here, $F^{-1}$ is the inverse function of $F$, and the set of points $\{(2i-1)/(2k),\ i = 1, \ldots, k\}$ is uniformly scattered on the interval $(0, 1)$ and, as established, optimal in both the star-discrepancy and Cramér–von Mises senses.
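The inverse transformation step can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the paper: it assumes the IG($\mu$, $\lambda$) parameterization with mean $\mu$ and shape $\lambda$, uses the standard closed-form IG cdf in terms of the normal cdf, and inverts it by plain bisection. The helper names `norm_cdf`, `ig_cdf`, `ig_ppf`, and `qmc_rps` are hypothetical.

```python
import math


def norm_cdf(t):
    """Standard normal cdf via the complementary error function."""
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def ig_cdf(x, mu, lam):
    """Cdf of IG(mu, lam); numerically adequate for moderate lam / mu."""
    if x <= 0.0:
        return 0.0
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def ig_ppf(u, mu, lam, tol=1e-12):
    """Quantile function by bisection on the cdf, for 0 < u < 1."""
    lo, hi = 0.0, mu
    while ig_cdf(hi, mu, lam) < u:  # expand until the quantile is bracketed
        hi *= 2.0
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if ig_cdf(mid, mu, lam) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def qmc_rps(k, mu, lam):
    """QMC-RPs: F^{-1}((2i - 1) / (2k)), i = 1..k, each with probability 1/k."""
    points = [ig_ppf((2 * i - 1) / (2.0 * k), mu, lam) for i in range(1, k + 1)]
    probs = [1.0 / k] * k
    return points, probs


points, probs = qmc_rps(5, mu=1.0, lam=1.0)
print(points)
```

Bisection is chosen here only for transparency; any reliable root finder or a library quantile function would serve the same purpose.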

3.1.3. MSE-RPs

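MSE-RPs, also known as principal points [], are representative points designed to minimize the mean square error between the distribution and its discrete approximation. MSE-RPs were independently proposed by Cox [], Fang and He [], and many others. For a random variable $X \sim F(x)$ with density $f(x)$, MSE-RPs are constructed as follows:

Take $-\infty < x_1 < x_2 < \cdots < x_k < \infty$ and define a stepwise function

$$Q_k(x) = x_i, \quad a_i < x \le a_{i+1}, \quad i = 1, \ldots, k,$$

where $a_1 = -\infty$, $a_{k+1} = \infty$, and $a_i = (x_{i-1} + x_i)/2$ for $i = 2, \ldots, k$. Define the mean square error (MSE) to measure the bias between $F(x)$ and its discrete approximation as follows:

$$\mathrm{MSE}(x_1, \ldots, x_k) = \int_{-\infty}^{\infty} \left(x - Q_k(x)\right)^{2} f(x)\,dx = \sum_{i=1}^{k} \int_{a_i}^{a_{i+1}} (x - x_i)^{2} f(x)\,dx.$$

The points $x_1^{*}, \ldots, x_k^{*}$ at which the MSE attains its minimum are the MSE-RPs of $X$. The probability of each representative point is given by

$$p_i = \int_{a_i^{*}}^{a_{i+1}^{*}} f(x)\,dx, \quad i = 1, \ldots, k,$$

where $a_1^{*} = -\infty$, $a_{k+1}^{*} = \infty$, and $a_i^{*} = (x_{i-1}^{*} + x_i^{*})/2$ for $i = 2, \ldots, k$.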
In this paper, we use the following three main different approaches to generate MSE-RPs:

(a) NTLBG algorithm: Combines QMC methods with the k-means-based LBG algorithm for univariate distributions [].

(b) Parametric k-means algorithm: An iterative method (Lloyd’s algorithm) for finding RPs of continuous univariate distributions [,].

(c) Fang–He algorithm: Solves a system of nonlinear equations to find highly accurate MSE-RPs, though computationally intensive for large k [].

For convenience, we use PKM-RPs, NTLBG-RPs, and FH-RPs to denote the RPs of IG($\mu$, $\lambda$) obtained from the parametric k-means algorithm, the NTLBG algorithm, and the Fang–He algorithm [], respectively.
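To make approach (b) concrete, the following Python sketch runs a Lloyd-type iteration for the IG distribution: cell boundaries are midpoints of neighbouring points, and each point is replaced by the conditional mean of its cell. It is a simplified illustration under stated assumptions, not the authors' PKM implementation. It assumes the IG($\mu$, $\lambda$) parameterization with mean $\mu$, starts from an arbitrary equally spaced grid, and evaluates the partial mean $E[X; X \le t]$ through the identity $E[X; X \le t] = \mu\,(1 - F(\mu^{2}/t))$, which follows from the fact that $\mu^{2}/X$ has density $(x/\mu) f(x)$. All function names are hypothetical.

```python
import math


def norm_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def ig_cdf(x, mu, lam):
    """Cdf of IG(mu, lam), with the limits at 0 and infinity handled explicitly."""
    if x <= 0.0:
        return 0.0
    if math.isinf(x):
        return 1.0
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def ig_partial_mean(t, mu, lam):
    """E[X; X <= t] = mu * (1 - F(mu^2 / t))."""
    if t <= 0.0:
        return 0.0
    if math.isinf(t):
        return mu
    return mu * (1.0 - ig_cdf(mu * mu / t, mu, lam))


def lloyd_mse_rps(k, mu, lam, max_iter=5000, tol=1e-12):
    """Lloyd iteration: midpoints as boundaries, conditional means as points."""
    x = [3.0 * mu * (i + 0.5) / k for i in range(k)]  # crude ascending start
    probs = [1.0 / k] * k
    for _ in range(max_iter):
        a = [0.0] + [0.5 * (x[i] + x[i + 1]) for i in range(k - 1)] + [math.inf]
        cdf = [ig_cdf(t, mu, lam) for t in a]
        pm = [ig_partial_mean(t, mu, lam) for t in a]
        probs = [cdf[i + 1] - cdf[i] for i in range(k)]
        new_x = [(pm[i + 1] - pm[i]) / probs[i] for i in range(k)]
        shift = max(abs(u - v) for u, v in zip(new_x, x))
        x = new_x
        if shift < tol:
            break
    return x, probs


rps, rp_probs = lloyd_mse_rps(5, mu=1.0, lam=1.0)
print(rps, rp_probs)
```

Because every point is the conditional mean of its cell, the discrete approximation reproduces the mean $\mu$ exactly, while its variance is smaller than $\mu^{3}/\lambda$.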

3.2. Lower Moments Estimation Based on RPs of IG Distribution

In this subsection, we consider estimation of the mean, variance, skewness, and kurtosis of the IG distribution. The QMC-RPs and the MSE-RPs (PKM-RPs, FH-RPs, and NTLBG-RPs) introduced in the previous subsection are used for simulation. To save space in the main text, the numerical values of these representative points under different sizes () are provided in Appendix A.

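We have obtained the mean and variance of $X \sim IG(\mu, \lambda)$ in Section 2, $E(X) = \mu$ and $\mathrm{Var}(X) = \mu^{3}/\lambda$. Denote the skewness and (excess) kurtosis of $X$ by

$$\mathrm{Sk}(X) = \frac{E(X - \mu)^{3}}{[\mathrm{Var}(X)]^{3/2}}, \qquad \mathrm{Ku}(X) = \frac{E(X - \mu)^{4}}{[\mathrm{Var}(X)]^{2}} - 3.$$

It can be concluded that

$$\mathrm{Sk}(X) = 3\sqrt{\mu/\lambda}, \qquad \mathrm{Ku}(X) = 15\mu/\lambda.$$

Consider a group of representative points $x_1, \ldots, x_k$ from IG$(\mu, \lambda)$, where $x_i$ is taken with probability $p_i$, $i = 1, \ldots, k$. Then the above statistics are estimated by

$$\hat{\mu} = \sum_{i=1}^{k} p_i x_i, \qquad \hat{\sigma}^{2} = \sum_{i=1}^{k} p_i (x_i - \hat{\mu})^{2}, \qquad \widehat{\mathrm{Sk}} = \frac{1}{\hat{\sigma}^{3}}\sum_{i=1}^{k} p_i (x_i - \hat{\mu})^{3}, \qquad \widehat{\mathrm{Ku}} = \frac{1}{\hat{\sigma}^{4}}\sum_{i=1}^{k} p_i (x_i - \hat{\mu})^{4} - 3.$$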
In the following comparisons, we employ MC-RPs, QMC-RPs, FH-RPs, PKM-RPs, and NTLBG-RPs from IG(1, 1) and consider the sample size . Therefore, the corresponding mean, variance, skewness, and kurtosis are 1, 1, 3, and 15. For each statistic (mean, variance, skewness, and kurtosis) there are five estimators and five corresponding biases; Table 1 shows numerical results. It is evident that MC-RPs consist of random samples with size n. For the sake of fair comparisons, we generate 10 samples each with size n, and then take the average of the estimated statistics as the result of MC-RPs (10).
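The moment estimates from a set of representative points are plain weighted sums, as the following Python sketch shows for QMC-RPs of IG(1, 1). It is an illustration under stated assumptions: the kurtosis is taken as excess kurtosis (so that the reference values 1, 1, 3, and 15 apply), the QMC-RPs are computed by bisection on the closed-form cdf, and the size $k = 30$ and all function names are choices made here for the example rather than values taken from Table 1.

```python
import math


def norm_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def ig_cdf(x, mu, lam):
    if x <= 0.0:
        return 0.0
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def ig_ppf(u, mu, lam, tol=1e-12):
    lo, hi = 0.0, mu
    while ig_cdf(hi, mu, lam) < u:
        hi *= 2.0
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if ig_cdf(mid, mu, lam) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def rp_moments(points, probs):
    """Mean, variance, skewness, and excess kurtosis of a discrete distribution."""
    m = sum(p * x for x, p in zip(points, probs))
    var = sum(p * (x - m) ** 2 for x, p in zip(points, probs))
    skew = sum(p * (x - m) ** 3 for x, p in zip(points, probs)) / var ** 1.5
    kurt = sum(p * (x - m) ** 4 for x, p in zip(points, probs)) / var ** 2 - 3.0
    return m, var, skew, kurt


k = 30  # assumed size, for illustration
points = [ig_ppf((2 * i - 1) / (2.0 * k), 1.0, 1.0) for i in range(1, k + 1)]
probs = [1.0 / k] * k
m, var, skew, kurt = rp_moments(points, probs)

# Biases relative to the exact values 1, 1, 3, 15 of IG(1, 1)
print(m - 1.0, var - 1.0, skew - 3.0, kurt - 15.0)
```

Equally weighted quantile points truncate the right tail, so the higher moments are typically underestimated; this is the effect that the MSE-RPs are designed to reduce.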

Table 1.

Estimation bias of variance, kurtosis, mean, and skewness.

The RP methods with the smallest absolute bias are marked in boldface in Table 1. From these results we make the following observations: (1) the estimators based on FH-RPs and PKM-RPs are more accurate than those based on MC-RPs (10), QMC-RPs, and NTLBG-RPs; (2) FH-RPs and PKM-RPs appear to perform identically in estimating these four statistics, but only because the bias values in Table 1 are rounded to four decimal places. If the bias values are rounded to eight decimal places, it can be observed that FH-RPs perform better than PKM-RPs.

3.3. Density Estimation via RPs of IG Distribution

In this section, we estimate the density function of the inverse Gaussian distribution IG(1, 1), choose $n = 30$ as the size of the input data, and compare the four RP methods (QMC-RPs, FH-RPs, PKM-RPs, and NTLBG-RPs). For density estimation based on MC-RPs, the inherent randomness of the Monte Carlo method makes the fitted density curve vary significantly from run to run. Therefore, the results of MC-RPs-based density estimation are not presented here.

Rosenblatt [] and Parzen [] provide a way to estimate the density function based on a set of samples, $x_1, \ldots, x_n$. The estimate function

$$\hat{f}_h(x) = \frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right)$$

is called the kernel density estimate, where $K(\cdot)$ is the kernel function, $h$ is the bandwidth, and $h > 0$. The most popular kernel is the standard normal density function; therefore, we choose it as the kernel. In this section, we use a set of representative points $x_1, \ldots, x_k$ with related probabilities $p_1, \ldots, p_k$ to replace the n i.i.d. samples. In this case, (6) becomes

$$\hat{f}_h(x) = \frac{1}{h}\sum_{i=1}^{k} p_i K\left(\frac{x - x_i}{h}\right),$$

and the choice of the bandwidth is very important.
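A weighted kernel density estimate of this form is straightforward to evaluate. The sketch below is a minimal Python illustration with a standard normal kernel; the four support points, their probabilities, and the bandwidth are hypothetical values chosen only to show the computation, not RPs from the paper.

```python
import math


def weighted_kde(x, points, probs, h):
    """Weighted Gaussian kernel density estimate at x with bandwidth h."""
    c = 1.0 / (h * math.sqrt(2.0 * math.pi))
    return sum(p * c * math.exp(-0.5 * ((x - xi) / h) ** 2)
               for xi, p in zip(points, probs))


# Hypothetical representative points and probabilities, for illustration only
points = [0.3, 0.7, 1.2, 2.5]
probs = [0.3, 0.3, 0.3, 0.1]
h = 0.3

grid = [-5.0 + 0.01 * i for i in range(1501)]
density = [weighted_kde(x, points, probs, h) for x in grid]
total_mass = 0.01 * sum(density)  # Riemann-sum check that the estimate integrates to 1
print(total_mass)
```

Since the probabilities sum to one, the estimate is itself a density: a mixture of normal densities centred at the representative points.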

In our experiment, we divide the range of x into two parts and, in each part, choose h to minimize the L2-distance between the estimated density and the IG(1, 1) density. Now, (7) becomes

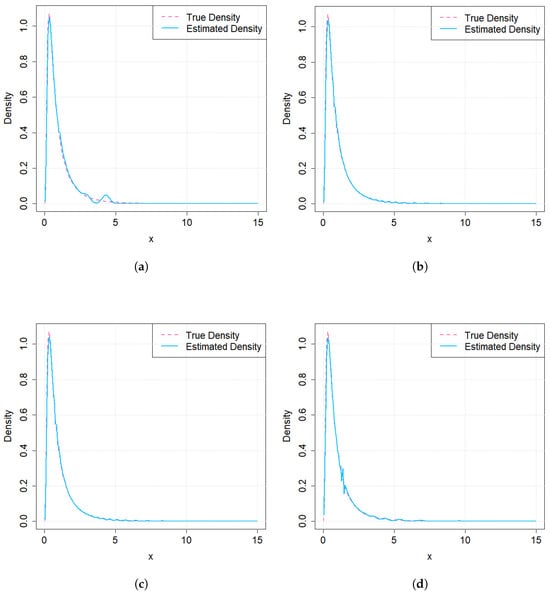

The four density estimators are shown in Figure 2. From Figure 2, it can be observed that FH-RPs and PKM-RPs have similar performance, and their performance is better than that of QMC-RPs and NTLBG-RPs.

Figure 2.

Kernel density estimations from 4 kinds of RPs, when sample size $n = 30$. (a) QMC-RPs. (b) FH-RPs. (c) PKM-RPs. (d) NTLBG-RPs.

The recommended h and L2-distance between each density estimator and IG(1, 1) in each zone are given in Table 2.

Table 2.

Recommended h and L2-distance under n = 30.

4. Resampling Based on RPs of IG Distribution

In this section, we use 4 kinds of RPs (QMC-RPs, FH-RPs, PKM-RPs, and NTLBG-RPs) which have been introduced in Section 3 to form four different populations by resampling. Specifically, N denotes the number of representative points used for each distribution, while n represents the sample size generated in each resampling procedure. We then employ the samples obtained via resampling for statistical inference. The steps of resampling used in this paper are as follows:

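Step 1. Generate a random number $U$, i.e., $U \sim U(0, 1)$; the latter is the uniform distribution on (0, 1).

Step 2. Define a random variable Y by

$$Y = x_i \quad \text{if } \sum_{j=1}^{i-1} p_j < U \le \sum_{j=1}^{i} p_j, \quad i = 1, \ldots, N,$$

where $x_1 < \cdots < x_N$ are the representative points and $p_1, \ldots, p_N$ are their probabilities.

Step 3. Repeat the above two steps n times, and we have a sample of Y, $y_1, \ldots, y_n$. Calculate the given statistic T.

Step 4. Repeat the above three steps 1000 times, and we obtain a sample of T, $T_1, \ldots, T_{1000}$.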

Step 5. Use the mean of a sample of T to infer the statistic of the population.
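Steps 1 to 5 can be sketched in Python as follows. This is a minimal illustration, assuming that Step 2 selects the representative point whose cumulative-probability interval contains U; the three support points and their probabilities are hypothetical and are not RPs from the paper, and the function names are chosen here for the example.

```python
import bisect
import random
from itertools import accumulate


def resample(points, probs, n, rng):
    """Draw n values from the discrete distribution on the representative points."""
    cum = list(accumulate(probs))
    sample = []
    for _ in range(n):
        u = rng.random()                    # Step 1: U ~ U(0, 1)
        i = bisect.bisect_left(cum, u)      # Step 2: smallest i with cum[i] >= U
        sample.append(points[min(i, len(points) - 1)])
    return sample                           # Step 3: a sample of size n


def resampling_estimate(points, probs, n, statistic, reps=1000, seed=0):
    """Steps 4 and 5: repeat the resampling and average the statistic."""
    rng = random.Random(seed)
    values = [statistic(resample(points, probs, n, rng)) for _ in range(reps)]
    return sum(values) / reps


def sample_mean(ys):
    return sum(ys) / len(ys)


# Hypothetical representative points and probabilities, for illustration only
points = [0.5, 1.0, 2.0]
probs = [0.5, 0.3, 0.2]
estimate = resampling_estimate(points, probs, n=50, statistic=sample_mean)
print(estimate)
```

Any other statistic T, such as the sample variance, skewness, or kurtosis, can be passed in place of `sample_mean`.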

Now we apply the four RP methods for estimation of four statistics of : mean, variance, skewness, and kurtosis. Table 3, Table 4 and Table 5 show estimation biases for the above four statistics, where QMC-RPs, FH-RPs, PKM-RPs, and NTLBG-RPs are employed involving the following cases: sample size , and representative points

Table 3.

Estimation bias for by resampling.

Table 4.

Estimation bias for by resampling.

Table 5.

Estimation bias for by resampling.

The results in Table 3, Table 4 and Table 5 reveal distinct performance patterns among the four methods. NTLBG-RPs demonstrate superior accuracy in mean and variance estimation, achieving near-zero biases across all configurations. FH-RPs and PKM-RPs excel in higher-moment characterization, particularly for kurtosis and skewness estimation. QMC-RPs provide reasonable mean estimates but show significant limitations in capturing higher-order moments. This dichotomy suggests a trade-off between accuracy in the low-order moments (mean and variance) and the characterization of tail behavior. The choice of method should therefore align with the requirements of the application, prioritizing either the center of the distribution or its tail properties.

5. MLE via Quantile Estimators of IG Distribution

Let be a set of n i.i.d samples from IG distribution with pdf . When , then the log-likelihood function is defined as

When , the pdf is

and the log-likelihood function is defined as

The goal of MLE is to find the model parameters that can maximize the log-likelihood function over the parameter space , that is,

The sequential number theoretic optimization algorithm (SNTO), introduced by Fang and Wang [], represents a broadly applicable optimization technique. Subsequently, this SNTO algorithm can be employed to determine the numerical solutions through the maximization of Equations (19) and (21).
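For the two-parameter case, the objective being maximized can be written down and checked numerically. The Python sketch below assumes the IG($\mu$, $\lambda$) density with mean $\mu$ and shape $\lambda$ and maximizes the log-likelihood with a plain shrinking grid search. That search is only a stand-in that shares the contract-the-domain idea; it does not use number-theoretic nets and is not the SNTO algorithm of Fang and Wang. The data vector, the search box, and all function names are hypothetical.

```python
import math


def ig_loglik(xs, mu, lam):
    """Log-likelihood of an IG(mu, lam) sample."""
    n = len(xs)
    return (0.5 * n * math.log(lam / (2.0 * math.pi))
            - 1.5 * sum(math.log(x) for x in xs)
            - lam / (2.0 * mu * mu) * sum((x - mu) ** 2 / x for x in xs))


def shrinking_grid_max(f, lo, hi, grid=21, rounds=30, eps=1e-8):
    """Maximize f over a 2-D box by repeated grid search on a contracting box."""
    lo, hi = list(lo), list(hi)
    best = (lo[0], lo[1])
    for _ in range(rounds):
        best_val = -math.inf
        for i in range(grid):
            for j in range(grid):
                p = (lo[0] + (hi[0] - lo[0]) * i / (grid - 1),
                     lo[1] + (hi[1] - lo[1]) * j / (grid - 1))
                val = f(p)
                if val > best_val:
                    best_val, best = val, p
        for d in range(2):  # halve the box around the current best point
            half = 0.25 * (hi[d] - lo[d])
            lo[d] = max(eps, best[d] - half)
            hi[d] = best[d] + half
    return best


# Hypothetical data, for illustration only
data = [0.4, 0.8, 1.1, 1.9, 3.2]
mu_num, lam_num = shrinking_grid_max(
    lambda p: ig_loglik(data, p[0], p[1]), lo=(0.01, 0.01), hi=(10.0, 10.0))

# Closed-form MLEs for comparison
n = len(data)
mu_cf = sum(data) / n
lam_cf = n / sum(1.0 / x - 1.0 / mu_cf for x in data)
print(mu_num, lam_num, mu_cf, lam_cf)
```

In the two-parameter case the numerical maximizer should agree with the closed-form MLEs; the numerical route becomes necessary for the three-parameter model, where no closed form is available.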

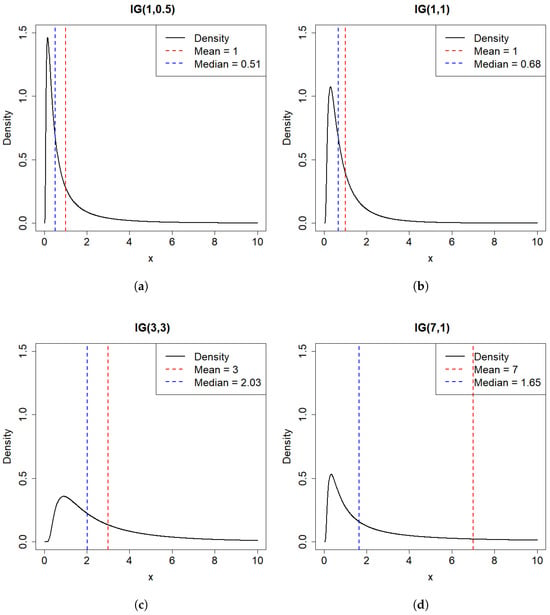

In this part, we study parameter estimation of four two-parameter Inverse Gaussian distributions (IG(1,1), IG(1,0.5), IG(7,1), IG(3,3)) and one three-parameter Inverse Gaussian distribution IG(1,0.5,1). Some results for IG(1,0.5), IG(7,1), IG(3,3) are displayed in the Appendix A to save space. The density plots corresponding to these four two-parameter distributions are shown in Figure 3. The three-parameter inverse Gaussian distribution is derived from the two-parameter inverse Gaussian distribution by adding a location shift parameter, which does not alter the shape of the distribution. Therefore, it need not be presented separately.

Figure 3.

(a) Density plot of IG(1, 0.5). (b) Density plot of IG(1, 1). (c) Density plot of IG(7, 1). (d) Density plot of IG(3, 3).

5.1. Two Nonparametric Quantile Estimators

5.1.1. HD Estimator

The Harrell–Davis quantile estimator [] consists of a linear combination of the order statistics, admitting a jackknife variance estimator. The Harrell–Davis quantile estimator offers a significant gain in efficiency, with emphasis on small-sample results. Let $x = (X_1, \ldots, X_n)$ be a random sample of size n from an inverse Gaussian distribution. Denote $X_{(i)}$ as the i-th smallest value in $x$ (the i-th order statistic) and $Q_p$ as the p-th population quantile. The quantile estimator based on the random sample is proposed to be

$$\hat{Q}_p = \sum_{i=1}^{n} W_{n,i} X_{(i)},$$

where

$$W_{n,i} = I_{i/n}\{p(n+1), (1-p)(n+1)\} - I_{(i-1)/n}\{p(n+1), (1-p)(n+1)\},$$

and $I_x(a, b)$ denotes the incomplete beta function.
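The HD estimator can be sketched in Python as a weighted sum of the sorted sample, with weights given by increments of the regularized incomplete beta function with parameters $p(n+1)$ and $(1-p)(n+1)$. The sketch below is a minimal illustration: the incomplete beta function is evaluated with a standard continued-fraction expansion so that the example needs only the standard library (a library routine such as `scipy.special.betainc` could be substituted), and the function names and data are hypothetical.

```python
import math


def _betacf(a, b, x, max_iter=200, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    bt = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                  + a * math.log(x) + b * math.log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return bt * _betacf(a, b, x) / a
    return 1.0 - bt * _betacf(b, a, 1.0 - x) / b


def hd_quantile(xs, p):
    """Harrell-Davis estimate of the p-th quantile."""
    xs = sorted(xs)
    n = len(xs)
    a, b = p * (n + 1), (1.0 - p) * (n + 1)
    cdf = [betai(a, b, i / n) for i in range(n + 1)]
    return sum((cdf[i] - cdf[i - 1]) * xs[i - 1] for i in range(1, n + 1))


# Hypothetical data, for illustration only
data = [0.31, 0.52, 0.77, 1.04, 1.48, 2.95]
print([hd_quantile(data, p) for p in (0.25, 0.5, 0.75)])
```

Unlike a single order statistic, the estimate uses every observation, which is the source of its efficiency gain in small samples.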

5.1.2. SV Estimators

The SV estimators, as proposed by Sfakianakis and Verginis [], offer alternative methods for quantile estimation with advantages in small sample sizes and extreme quantiles. Let be a random sample of size n from an inverse Gaussian distribution. The q-th quantile of the population, , is one of the . Define the random variables as

where . The Bernoulli distribution is a discrete distribution with two possible outcomes: 1 (success) with probability q and 0 (failure) with probability . In this context, q represents the probability of success for each trial. Then, their sum has a binomial distribution with probability q, supposing are independent. So, , where . Let the random variable , where is a point estimator of conditioned on the event , . An estimator of is obtained by calculating .

Three different definitions of are examined to derive three distinct quantile estimators, denoted as , , and . Table 6 shows construction formulas of three quantile estimators and gives assumption of or of these estimators.

Table 6.

Definitions of three quantile estimators.

Substitute the above equations into and find the expectation . After simplification, the following three SV estimators are obtained:

In the next section, these four quantile estimators are applied to generate QMC-data that approximate the inverse Gaussian distribution, and their effects in revising the data are compared.

5.2. Estimation Accuracy Measures

In this subsection, six methods are considered for parameter estimation: (1) the Plain method, which is the traditional maximum likelihood estimation (MLE) based on random samples with SNTO optimization; (2) the HD method, which uses QMC data (HD-quantiles) combined with SNTO optimization; (3) the SV1, SV2, and SV3 methods, which are based on QMC data (SV-quantiles) with different SV quantile constructions, also using SNTO optimization; and (4) the MLE-analytic formulas method, a traditional MLE approach based on analytical formulas without SNTO optimization.

There are many metrics to assess estimation precision. The following four accuracy measures, labeled (a) through (d), are examined in this study. Across 100 Monte Carlo iterations, the averages of these accuracy measures (a)–(d) are compiled in tables titled “average accuracy measures” for assessment purposes. The true distribution is represented by $F$ or $f$, while the estimated distributions are denoted as $\hat{F}$ or $\hat{f}$.

(a) The L2-distance between the cumulative distribution function (c.d.f.) (F) of the underlying distribution and its estimated distribution is considered. The L2-Distance (L2.cdf) for comparing two c.d.fs is expressed as:

(b) The L2-distance between the probability density function (f) of the underlying distribution and its estimated distribution is considered. The L2-Distance (L2.pdf) for comparing two density functions is given by

(c) The Kullback–Leibler divergence (KL), also known as relative entropy, serves as an indicator of the disparity between two probability distributions. The KL divergence from to is formulated as

(d) The absolute bias index (ABI) is employed to quantify the overall bias in parameter estimation. Let and represent the estimated values of and for the inverse Gaussian distribution, where and . The ABI is defined as
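Measures (a) to (c) can be approximated by numerical integration. The Python sketch below is an illustration under explicit assumptions: the L2-distance is taken as the square root of the integrated squared difference, the KL divergence as $\int f \log(f/\hat{f})\,dx$ with $f$ the true density, and the integrals are truncated to $(0, 60)$ and evaluated by the trapezoidal rule. The exact definitions and normalizations used in the paper may differ; the "estimated" parameters and all function names are hypothetical.

```python
import math


def norm_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))


def ig_pdf(x, mu, lam):
    if x <= 0.0:
        return 0.0
    return (math.sqrt(lam / (2.0 * math.pi * x ** 3))
            * math.exp(-lam * (x - mu) ** 2 / (2.0 * mu * mu * x)))


def ig_cdf(x, mu, lam):
    if x <= 0.0:
        return 0.0
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def trapezoid(g, a, b, m=20000):
    """Composite trapezoidal rule with m subintervals."""
    h = (b - a) / m
    return h * (0.5 * (g(a) + g(b)) + sum(g(a + i * h) for i in range(1, m)))


def l2_distance(g1, g2, a=0.0, b=60.0):
    """Assumed form: square root of the integrated squared difference."""
    return math.sqrt(trapezoid(lambda x: (g1(x) - g2(x)) ** 2, a, b))


def kl_divergence(f, g, a=0.0, b=60.0):
    """Assumed form: integral of f * log(f / g)."""
    def integrand(x):
        fx, gx = f(x), g(x)
        return fx * math.log(fx / gx) if fx > 0.0 and gx > 0.0 else 0.0
    return trapezoid(integrand, a, b)


# True model IG(1, 1) and a hypothetical estimate IG(1.1, 0.9)
true_pdf = lambda x: ig_pdf(x, 1.0, 1.0)
est_pdf = lambda x: ig_pdf(x, 1.1, 0.9)
true_cdf = lambda x: ig_cdf(x, 1.0, 1.0)
est_cdf = lambda x: ig_cdf(x, 1.1, 0.9)

l2_pdf = l2_distance(true_pdf, est_pdf)
l2_cdf = l2_distance(true_cdf, est_cdf)
kl = kl_divergence(true_pdf, est_pdf)
print(l2_cdf, l2_pdf, kl)
```

All three quantities vanish when the estimated distribution coincides with the true one and grow as the estimate moves away from it.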

5.2.1. MLE of the Two-Parameter IG Distribution

Five MLE-based methods are compared based on the sample size of . Table 7 presents the average accuracy measures corresponding to L2-distances, KL-divergence, and ABI. For each metric, the optimal performance across different distributions and sample sizes is emphasized in bold. This bold-highlighting convention is consistently applied in the remaining tables.

Table 7.

MLE estimations—average accuracy measures ().

The comparative analysis of Table 7 shows that the Plain method most often attains the best L2.cdf (bold in 9/12 cases for IG(1, 1), IG(1, 0.5), IG(7, 1), and IG(3, 3)) and ABI (7/12 bold values), demonstrating robustness for cumulative distribution accuracy, while the HD method dominates L2.pdf (bold in 9/12 cases) and KL divergence (7/12 bold values), indicating superior probability density estimation. The QSV variants show mixed results: they are occasionally competitive but generally underperform. Performance improves with sample size (), particularly for HD and Plain, where the error metrics decrease monotonically. Notably, IG(3, 3) exhibits distinct behavior, with dominance shared between Plain and HD while the QSV methods struggle, highlighting distribution-dependent efficacy. Overall, HD proves optimal for density-focused tasks (L2.pdf/KL) across most inverse Gaussian parameterizations, whereas the Plain method suits cumulative metrics (L2.cdf/ABI), with the QSV methods lacking consistent advantages.

To better interpret the results presented in Table 7, we study the frequency of different rankings for the five methods across various metrics. The ranking of these methods is determined based on 100 Monte Carlo simulations, where each simulation records the relative performance of the methods across the specified accuracy metrics. The tables present the frequency of each method achieving ranks 1 through 5, providing a comprehensive assessment of their effectiveness. This analysis aims to identify the most robust method for parameter estimation under varying sample sizes ().

Table 8 presents the ranking distribution of the five methods (Plain, HD, QSV1, QSV2, QSV3) across four accuracy measures (L2.cdf, L2.pdf, KL, ABI) based on 100 Monte Carlo simulations for sample sizes () of IG(1, 1). The results for IG(1, 0.5), IG(7, 1), and IG(3, 3) are similar to those for IG(1, 1); to save space, they are not presented in the main text but are included in Appendix A.

Table 8.

The rank of 5 methods in 4 accuracy measures (IG(1, 1)).

In Table 8, the Plain method consistently ranks third or lower across all measures and sample sizes, indicating stable but suboptimal performance. HD performs moderately, often ranking second or third, with occasional first-place rankings, suggesting reliability but limited excellence. QSV1 and QSV2 frequently achieve first-place rankings, particularly in KL and ABI for larger n, but also show higher variability, with notable fifth-place occurrences, indicating sensitivity to specific conditions. QSV3 exhibits balanced performance, with frequent first- and second-place rankings, especially in L2.pdf and ABI at (), suggesting robustness across diverse measures. As n increases, QSV2 and QSV3 generally improve in top rankings, while Plain and HD remain consistent but less competitive.

The analysis of Table 9 and Table 10 (with additional results for IG(1, 0.5) and IG(7, 1) provided in Appendix A, Table A4 and Table A5) reveals distinct performance patterns across the inverse Gaussian distributions. A consistent pattern emerges for the IG(1, 1) and IG(7, 1) distributions (see Table 9 and Table A5), where the QSV3 method consistently outperforms other contenders in density-related metrics (L2.pdf and KL divergence), achieving the lowest errors across nearly all sample sizes. This makes QSV3 the recommended choice for density-focused tasks. For parameter estimation under these distributions, the Plain method provides the most accurate estimates of , especially at smaller sample sizes. However, a different dynamic is observed for the IG(3, 3) distribution (Table 10). Here, the dominance shifts, with Plain and HD methods sharing the lead. Notably, the HD method demonstrates exceptional performance at the largest sample size (), achieving optimal values in the majority of metrics. This indicates that for distributions with certain parameter configurations, traditional discretization methods can be highly effective for parameter estimation at larger n.

Table 9.

Estimation results on IG(1, 1), N = 100.

Table 10.

Estimation results on IG(3, 3), .

Overall, the choice of optimal discretization method is context-dependent. We recommend QSV3 for applications prioritizing density estimation, Plain for cumulative distribution metrics and estimation at small n, and HD for parameter estimation tasks when dealing with larger samples from certain distributions. The Analytic formulas method occasionally excels in specific scenarios but lacks consistency, while QSV1 and QSV2 exhibit limited advantages in this study.

5.2.2. MLE of the Three-Parameter IG Distribution

In this subsection, parameter estimation for the three-parameter inverse Gaussian distribution is presented. We know that obtaining an analytical MLE solution for the three-parameter inverse Gaussian distribution is challenging due to the multimodal nature of its log-likelihood function, particularly the complexity in estimating , which renders direct solutions to the derivative equations impractical. To overcome the limitations of analytical solutions, numerical optimization methods such as SNTO can be used. We employed the SNTO algorithm on a random sample for parameter estimation in the optimization step, comparing its performance with the traditional MLE method for estimating and based on analytical expressions at a given .

Table 11 presents parameter estimation results for the IG(1, 0.5, 1) distribution for five methods (Plain, HD, QSV1, QSV2, QSV3), three sample sizes, and four accuracy measures (L2.pdf, L2.cdf, KL, ABI). QSV3 performs best at the two smaller sample sizes, achieving the lowest errors in L2.pdf, L2.cdf, and KL, which indicates its effectiveness for smaller samples. At the largest sample size, HD achieves the lowest errors across all measures, suggesting its strength with larger samples. Plain shows consistent but moderate performance, often excelling in ABI, while QSV1 and QSV2 are more variable and rank lower across measures. The results highlight the robustness of QSV3 at lower n and the dominance of HD as the sample size increases.

Table 11.

Estimation results on IG(1, 0.5, 1), .

6. Case Study

In this section, we use the inverse Gaussian distribution to model a data set from engineering planning and design. The data set is taken from Example 7 of Folks and Chhikara (1978) [].

The data set gives 25 runoff amounts at Jug Bridge, Maryland, as shown in Table 12.

Table 12.

Data for example.

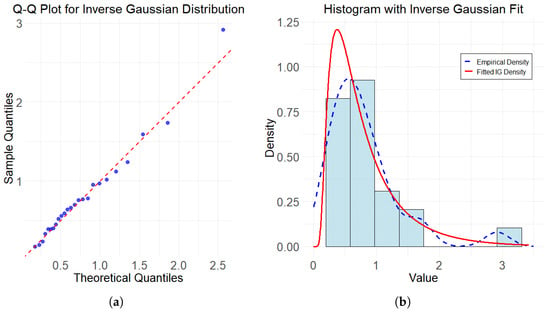

Figure 4 shows the histogram and Q-Q plot of the data set. The fitted IG density, the empirical density, and the Q-Q plot all suggest that the data follow an inverse Gaussian distribution. Because graphical assessment alone has limitations, a K-S test is also conducted to guard against misspecifying the distribution.

Figure 4.

(a) Q-Q Plot for inverse Gaussian distribution. (b) Histogram with inverse Gaussian fit.

The K-S test for this example gives a p-value of 0.9976, so it is reasonable to assume that the data set follows an inverse Gaussian distribution. We compare the fitting performance of the five methods using the K-S test and three performance indicators: the bias, the sum of squares due to error (SSE), and the coefficient of determination (R²).
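
A goodness-of-fit check of this kind can be sketched as follows. The sketch fits the two-parameter IG distribution by its closed-form MLE, computes the K-S statistic against the fitted CDF, and then evaluates an SSE and R² between the fitted CDF and the plotting positions (i − 0.5)/n. The plotting-position convention, the exact form of SSE and R², the function names, and the sample are assumptions for illustration; the study's definitions may differ, and the runoff data themselves are not reproduced here.

```python
import math


def norm_cdf(z):
    """Standard normal CDF, written via erfc for accuracy in the tails."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def ig_cdf(x, mu, lam):
    """CDF of IG(mu, lam) at x > 0 (may overflow if lam / mu is very large)."""
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def fit_summary(x):
    """Closed-form IG MLE, K-S statistic, and SSE / R^2 of the fitted CDF."""
    xs = sorted(x)
    n = len(xs)
    mu = sum(xs) / n
    lam = n / sum(1.0 / xi - 1.0 / mu for xi in xs)
    fitted = [ig_cdf(xi, mu, lam) for xi in xs]
    # Largest gap between the empirical step function and the fitted CDF.
    ks = max(max((i + 1) / n - fitted[i], fitted[i] - i / n) for i in range(n))
    emp = [(i + 0.5) / n for i in range(n)]       # assumed plotting positions
    sse = sum((e - f) ** 2 for e, f in zip(emp, fitted))
    sst = sum((e - 0.5) ** 2 for e in emp)        # the mean of emp is 0.5
    return {"mu": mu, "lam": lam, "ks": ks, "sse": sse, "r2": 1.0 - sse / sst}


# Hypothetical positive sample, used only to show the call pattern.
summary = fit_summary([0.6, 0.9, 1.1, 1.4, 1.8, 2.3, 3.0, 4.2])
```

A p-value for the K-S statistic would additionally require its null distribution, which is omitted here.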

Table 13 reports, for each method, the MLE parameter estimates, the maximized likelihood value, the K-S goodness-of-fit results, and the performance indicators. For each measure, the best-performing method is marked in bold. Among the revised methods considered, QSV1-MLE gives the best fit.

Table 13.

Estimation results for the real data.

7. Conclusions

This paper investigates statistical simulation and parameter estimation for the IG distribution. It compares five RP methods for improving inference accuracy and applies QMC-data revisions with the HD and SV quantile estimators to MLE. MSE-RPs prove superior in moment and density estimation and effective in resampling, which underscores their potential for improving inference precision. Combining QMC-data revisions with the HD and SV quantile estimators, particularly QSV1 and QSV3, improves MLE performance across various sample sizes and parameter settings, as confirmed on the real runoff data set. These findings suggest that MSE-RPs, combined with tailored quantile approaches, provide a reliable framework for handling skewed distributions, with implications for fields such as lifetime analysis, satellite communications, and risk modeling, and for the accuracy of entropy-based evaluations in practical settings.

Author Contributions

Conceptualization and supervision, K.-T.F.; writing—review and editing, X.-L.P.; methodology and software, W.-W.H.; software and writing—original draft preparation, W.-W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the BNBU research grant UICR0200023-25 and in part by Guangdong Provincial Key Laboratory of IRADS (2022B1212010006).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in the case study were obtained from Reference []. Further details about the data sources can be found in the cited references. No new data were created in this study.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

Table A1.

The rank of 5 methods in 4 accuracy measures (IG(1, 0.5)).

| n | Method | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | L2.cdf | | | | | L2.pdf | | | | |
| 30 | Plain | 5 | 30 | 62 | 3 | 0 | 12 | 44 | 12 | 31 | 1 |
| 30 | HD | 9 | 39 | 12 | 40 | 0 | 4 | 24 | 67 | 5 | 0 |
| 30 | QSV1 | 13 | 15 | 17 | 22 | 33 | 16 | 12 | 9 | 35 | 28 |
| 30 | QSV2 | 39 | 5 | 1 | 7 | 48 | 26 | 11 | 9 | 24 | 30 |
| 30 | QSV3 | 34 | 11 | 8 | 28 | 19 | 42 | 9 | 3 | 5 | 41 |
| | | KL | | | | | ABI | | | | |
| 30 | Plain | 9 | 42 | 36 | 13 | 0 | 15 | 44 | 16 | 25 | 0 |
| 30 | HD | 2 | 36 | 44 | 16 | 2 | 6 | 25 | 38 | 28 | 3 |
| 30 | QSV1 | 18 | 5 | 6 | 29 | 42 | 18 | 5 | 28 | 15 | 34 |
| 30 | QSV2 | 33 | 7 | 5 | 25 | 30 | 23 | 8 | 5 | 23 | 41 |
| 30 | QSV3 | 38 | 10 | 9 | 17 | 26 | 38 | 18 | 13 | 9 | 22 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 50 | Plain | 10 | 30 | 53 | 7 | 0 | 11 | 40 | 12 | 37 | 0 |
| 50 | HD | 13 | 38 | 12 | 36 | 1 | 5 | 25 | 68 | 2 | 0 |
| 50 | QSV1 | 21 | 19 | 20 | 19 | 21 | 15 | 16 | 16 | 41 | 12 |
| 50 | QSV2 | 31 | 1 | 3 | 9 | 56 | 31 | 13 | 3 | 16 | 37 |
| 50 | QSV3 | 25 | 12 | 12 | 29 | 22 | 38 | 6 | 1 | 4 | 51 |
| | | KL | | | | | ABI | | | | |
| 50 | Plain | 10 | 44 | 32 | 13 | 1 | 8 | 51 | 17 | 23 | 1 |
| 50 | HD | 9 | 39 | 37 | 14 | 1 | 10 | 28 | 29 | 32 | 1 |
| 50 | QSV1 | 24 | 6 | 12 | 31 | 27 | 26 | 4 | 32 | 17 | 21 |
| 50 | QSV2 | 26 | 5 | 7 | 21 | 41 | 25 | 3 | 4 | 17 | 51 |
| 50 | QSV3 | 31 | 6 | 12 | 21 | 30 | 31 | 14 | 18 | 11 | 26 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 100 | Plain | 2 | 21 | 73 | 4 | 0 | 2 | 32 | 32 | 34 | 0 |
| 100 | HD | 10 | 42 | 4 | 44 | 0 | 3 | 38 | 48 | 11 | 0 |
| 100 | QSV1 | 25 | 14 | 13 | 15 | 33 | 14 | 20 | 17 | 32 | 17 |
| 100 | QSV2 | 38 | 5 | 1 | 6 | 50 | 35 | 7 | 1 | 19 | 38 |
| 100 | QSV3 | 25 | 18 | 9 | 31 | 17 | 46 | 3 | 2 | 4 | 45 |
| | | KL | | | | | ABI | | | | |
| 100 | Plain | 1 | 31 | 47 | 21 | 0 | 5 | 37 | 24 | 34 | 0 |
| 100 | HD | 7 | 43 | 26 | 24 | 0 | 12 | 34 | 19 | 35 | 0 |
| 100 | QSV1 | 24 | 13 | 7 | 25 | 31 | 18 | 15 | 33 | 11 | 23 |
| 100 | QSV2 | 36 | 5 | 4 | 11 | 44 | 29 | 7 | 7 | 5 | 52 |
| 100 | QSV3 | 32 | 8 | 16 | 19 | 25 | 36 | 7 | 17 | 15 | 25 |

Table A2.

The rank of 5 methods in 4 accuracy measures (IG(7, 1)).

| n | Method | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | L2.cdf | | | | | L2.pdf | | | | |
| 30 | Plain | 18 | 33 | 8 | 37 | 4 | 19 | 33 | 6 | 36 | 6 |
| 30 | HD | 8 | 18 | 62 | 12 | 0 | 5 | 21 | 65 | 6 | 3 |
| 30 | QSV1 | 13 | 29 | 17 | 29 | 12 | 15 | 29 | 12 | 30 | 14 |
| 30 | QSV2 | 29 | 6 | 10 | 15 | 40 | 29 | 6 | 12 | 19 | 34 |
| 30 | QSV3 | 42 | 10 | 6 | 5 | 37 | 41 | 11 | 5 | 5 | 38 |
| | | KL | | | | | ABI | | | | |
| 30 | Plain | 18 | 34 | 6 | 36 | 6 | 18 | 32 | 9 | 37 | 4 |
| 30 | HD | 8 | 18 | 64 | 6 | 4 | 9 | 17 | 60 | 14 | 0 |
| 30 | QSV1 | 13 | 31 | 10 | 29 | 17 | 14 | 31 | 10 | 27 | 18 |
| 30 | QSV2 | 30 | 7 | 14 | 19 | 30 | 28 | 9 | 17 | 13 | 33 |
| 30 | QSV3 | 39 | 12 | 4 | 8 | 37 | 39 | 10 | 6 | 7 | 38 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 50 | Plain | 13 | 36 | 5 | 44 | 2 | 13 | 37 | 3 | 45 | 2 |
| 50 | HD | 5 | 18 | 66 | 11 | 0 | 3 | 17 | 75 | 5 | 0 |
| 50 | QSV1 | 13 | 27 | 21 | 22 | 17 | 12 | 32 | 9 | 30 | 17 |
| 50 | QSV2 | 37 | 10 | 5 | 20 | 28 | 38 | 8 | 6 | 19 | 29 |
| 50 | QSV3 | 32 | 10 | 4 | 3 | 51 | 34 | 9 | 5 | 1 | 51 |
| | | KL | | | | | ABI | | | | |
| 50 | Plain | 14 | 34 | 4 | 45 | 3 | 13 | 35 | 9 | 40 | 3 |
| 50 | HD | 4 | 19 | 74 | 3 | 0 | 7 | 20 | 63 | 9 | 1 |
| 50 | QSV1 | 13 | 29 | 10 | 29 | 19 | 16 | 22 | 16 | 27 | 19 |
| 50 | QSV2 | 36 | 12 | 5 | 20 | 27 | 32 | 14 | 8 | 16 | 30 |
| 50 | QSV3 | 33 | 8 | 6 | 3 | 50 | 32 | 9 | 7 | 6 | 46 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 100 | Plain | 2 | 37 | 19 | 42 | 0 | 4 | 42 | 11 | 43 | 0 |
| 100 | HD | 9 | 17 | 59 | 14 | 1 | 4 | 12 | 76 | 8 | 0 |
| 100 | QSV1 | 13 | 30 | 10 | 29 | 18 | 22 | 28 | 4 | 31 | 15 |
| 100 | QSV2 | 35 | 9 | 9 | 15 | 32 | 30 | 15 | 6 | 14 | 35 |
| 100 | QSV3 | 41 | 8 | 2 | 1 | 48 | 40 | 4 | 2 | 5 | 49 |
| | | KL | | | | | ABI | | | | |
| 100 | Plain | 3 | 42 | 18 | 37 | 0 | 3 | 35 | 26 | 36 | 0 |
| 100 | HD | 3 | 20 | 68 | 9 | 0 | 7 | 25 | 52 | 12 | 4 |
| 100 | QSV1 | 21 | 29 | 2 | 27 | 21 | 23 | 22 | 7 | 25 | 23 |
| 100 | QSV2 | 34 | 7 | 7 | 24 | 28 | 31 | 12 | 7 | 23 | 27 |
| 100 | QSV3 | 39 | 3 | 4 | 4 | 50 | 36 | 7 | 7 | 5 | 45 |

Table A3.

The rank of 5 methods in 4 accuracy measures (IG(3, 3)).

| n | Method | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 | Rank1 | Rank2 | Rank3 | Rank4 | Rank5 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | L2.cdf | | | | | L2.pdf | | | | |
| 30 | Plain | 16 | 19 | 54 | 11 | 0 | 14 | 25 | 38 | 22 | 1 |
| 30 | HD | 13 | 27 | 21 | 35 | 4 | 12 | 29 | 31 | 22 | 6 |
| 30 | QSV1 | 30 | 20 | 10 | 15 | 25 | 32 | 19 | 10 | 18 | 21 |
| 30 | QSV2 | 30 | 7 | 4 | 12 | 47 | 22 | 19 | 12 | 12 | 35 |
| 30 | QSV3 | 15 | 35 | 15 | 26 | 9 | 20 | 22 | 11 | 23 | 24 |
| | | KL | | | | | ABI | | | | |
| 30 | Plain | 13 | 25 | 47 | 14 | 1 | 13 | 27 | 41 | 18 | 1 |
| 30 | HD | 14 | 29 | 21 | 30 | 6 | 13 | 26 | 29 | 24 | 8 |
| 30 | QSV1 | 31 | 16 | 15 | 10 | 28 | 34 | 16 | 10 | 13 | 27 |
| 30 | QSV2 | 26 | 9 | 8 | 19 | 38 | 22 | 11 | 9 | 19 | 39 |
| 30 | QSV3 | 20 | 30 | 10 | 29 | 11 | 21 | 33 | 11 | 23 | 12 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 50 | Plain | 6 | 13 | 72 | 9 | 0 | 11 | 19 | 43 | 27 | 0 |
| 50 | HD | 18 | 34 | 10 | 36 | 2 | 10 | 35 | 34 | 19 | 2 |
| 50 | QSV1 | 32 | 16 | 9 | 13 | 30 | 27 | 18 | 14 | 23 | 18 |
| 50 | QSV2 | 35 | 9 | 4 | 5 | 47 | 36 | 15 | 6 | 11 | 32 |
| 50 | QSV3 | 13 | 27 | 9 | 35 | 16 | 20 | 12 | 7 | 18 | 43 |
| | | KL | | | | | ABI | | | | |
| 50 | Plain | 7 | 28 | 53 | 12 | 0 | 9 | 25 | 40 | 25 | 1 |
| 50 | HD | 11 | 40 | 17 | 29 | 3 | 13 | 25 | 29 | 31 | 2 |
| 50 | QSV1 | 29 | 12 | 10 | 18 | 31 | 31 | 16 | 11 | 13 | 29 |
| 50 | QSV2 | 37 | 7 | 8 | 11 | 37 | 30 | 13 | 11 | 10 | 36 |
| 50 | QSV3 | 20 | 12 | 14 | 30 | 24 | 22 | 19 | 12 | 19 | 28 |
| | | L2.cdf | | | | | L2.pdf | | | | |
| 100 | Plain | 3 | 18 | 75 | 3 | 1 | 3 | 31 | 49 | 16 | 1 |
| 100 | HD | 9 | 40 | 7 | 43 | 1 | 5 | 37 | 28 | 29 | 1 |
| 100 | QSV1 | 33 | 11 | 6 | 15 | 35 | 23 | 21 | 8 | 25 | 23 |
| 100 | QSV2 | 40 | 2 | 3 | 4 | 51 | 36 | 4 | 5 | 10 | 45 |
| 100 | QSV3 | 15 | 31 | 8 | 34 | 12 | 33 | 9 | 9 | 19 | 30 |
| | | KL | | | | | ABI | | | | |
| 100 | Plain | 2 | 29 | 52 | 17 | 0 | 8 | 36 | 28 | 28 | 0 |
| 100 | HD | 7 | 39 | 20 | 34 | 0 | 7 | 23 | 40 | 30 | 0 |
| 100 | QSV1 | 34 | 10 | 2 | 13 | 41 | 31 | 21 | 7 | 9 | 32 |
| 100 | QSV2 | 33 | 6 | 7 | 13 | 41 | 30 | 7 | 10 | 13 | 40 |
| 100 | QSV3 | 24 | 18 | 18 | 22 | 18 | 24 | 16 | 14 | 19 | 27 |

Table A4.

Estimation results on IG(1, 0.5), .

| n | Method | L2.pdf | L2.cdf | KL | ABI | $\hat{\mu}$ | $\hat{\lambda}$ |
|---|---|---|---|---|---|---|---|
| 30 | Plain | 0.07335 | 0.02055 | 0.00462 | 0.07528 | 0.99117 | 0.57086 |
| 30 | HD | 0.09692 | 0.03336 | 0.00737 | 0.10874 | 1.04214 | 0.58768 |
| 30 | QSV1 | 0.12600 | 0.03741 | 0.01631 | 0.15858 | 0.95335 | 0.63526 |
| 30 | QSV2 | 0.13636 | 0.06959 | 0.01630 | 0.18828 | 1.15247 | 0.61204 |
| 30 | QSV3 | 0.02076 | 0.01404 | 0.00086 | 0.04045 | 0.96127 | 0.52109 |
| 30 | Analytic formulas | 0.06303 | 0.01826 | 0.00368 | 0.07274 | 0.97843 | 0.56195 |
| 50 | Plain | 0.03534 | 0.00983 | 0.00105 | 0.03612 | 0.99346 | 0.53285 |
| 50 | HD | 0.04659 | 0.01997 | 0.00164 | 0.05583 | 1.03875 | 0.53646 |
| 50 | QSV1 | 0.06421 | 0.02064 | 0.00429 | 0.08323 | 0.96340 | 0.56493 |
| 50 | QSV2 | 0.07791 | 0.05360 | 0.00632 | 0.11825 | 1.13863 | 0.54893 |
| 50 | QSV3 | 0.01822 | 0.01106 | 0.00030 | 0.02424 | 0.97320 | 0.48916 |
| 50 | Analytic formulas | 0.05150 | 0.01489 | 0.00242 | 0.05874 | 0.98228 | 0.54989 |
| 100 | Plain | 0.01899 | 0.00528 | 0.00030 | 0.01933 | 0.99604 | 0.51735 |
| 100 | HD | 0.02247 | 0.01172 | 0.00041 | 0.02889 | 1.02662 | 0.51558 |
| 100 | QSV1 | 0.03241 | 0.01169 | 0.00116 | 0.04467 | 0.97479 | 0.53207 |
| 100 | QSV2 | 0.04274 | 0.03316 | 0.00220 | 0.06649 | 1.08829 | 0.52234 |
| 100 | QSV3 | 0.00897 | 0.00572 | 0.00008 | 0.01226 | 0.98588 | 0.49481 |
| 100 | Analytic formulas | 0.01556 | 0.00627 | 0.00029 | 0.02278 | 0.98500 | 0.51527 |

The best performance within each measure is highlighted in bold.

Table A5.

Estimation results on IG(7, 1), .

| n | Method | L2.pdf | L2.cdf | KL | ABI | $\hat{\mu}$ | $\hat{\lambda}$ |
|---|---|---|---|---|---|---|---|
| 30 | Plain | 0.02825 | 0.03129 | 0.00166 | 0.04631 | 6.93597 | 1.08347 |
| 30 | HD | 0.04269 | 0.04957 | 0.00380 | 0.06611 | 7.02712 | 1.12835 |
| 30 | QSV1 | 0.05288 | 0.06005 | 0.00610 | 0.08786 | 6.92041 | 1.16435 |
| 30 | QSV2 | 0.05545 | 0.06837 | 0.00651 | 0.09580 | 7.15371 | 1.16965 |
| 30 | QSV3 | 0.00079 | 0.00834 | 0.00002 | 0.00855 | 6.89497 | 1.00209 |
| 30 | Analytic formulas | 0.04011 | 0.04516 | 0.00359 | 0.07640 | 6.79364 | 1.12331 |
| 50 | Plain | 0.01586 | 0.01745 | 0.00052 | 0.02738 | 6.93861 | 1.04598 |
| 50 | HD | 0.02339 | 0.02638 | 0.00110 | 0.03413 | 6.99633 | 1.06773 |
| 50 | QSV1 | 0.03187 | 0.03539 | 0.00213 | 0.05279 | 6.92572 | 1.09496 |
| 50 | QSV2 | 0.03388 | 0.04260 | 0.00230 | 0.05917 | 7.14069 | 1.09824 |
| 50 | QSV3 | 0.01103 | 0.01484 | 0.00022 | 0.02016 | 6.91901 | 0.97126 |
| 50 | Analytic formulas | 0.03340 | 0.03711 | 0.00235 | 0.05578 | 6.91821 | 1.09988 |
| 100 | Plain | 0.00949 | 0.01163 | 0.00020 | 0.02062 | 6.90626 | 1.02784 |
| 100 | HD | 0.01157 | 0.01287 | 0.00026 | 0.01685 | 6.99344 | 1.03277 |
| 100 | QSV1 | 0.01740 | 0.01949 | 0.00065 | 0.03298 | 6.89592 | 1.05109 |
| 100 | QSV2 | 0.01859 | 0.02741 | 0.00067 | 0.03783 | 7.17362 | 1.05086 |
| 100 | QSV3 | 0.00579 | 0.01180 | 0.00007 | 0.01496 | 6.89049 | 0.98572 |
| 100 | Analytic formulas | 0.01116 | 0.01402 | 0.00235 | 0.01919 | 7.05329 | 1.03077 |

The best performance within each measure is highlighted in bold.

Table A6.

The points of RPs from .

| Number of points | category | RP1 | RP2 | RP3 | RP4 | RP5 | RP6 | RP7 | RP8 |
|---|---|---|---|---|---|---|---|---|---|
| 5 | QMC-RPs | 0.237625 | 0.429742 | 0.675841 | 1.085120 | 2.143034 | | | |
| 5 | FH-RPs | 0.412524 | 1.077368 | 2.061354 | 3.616510 | 6.523829 | | | |
| 5 | PKM-RPs | 0.412523 | 1.077366 | 2.061348 | 3.616501 | 6.523817 | | | |
| 5 | NTLBG-RPs | 0.410067 | 1.066664 | 2.035673 | 3.564004 | 6.421223 | | | |
| 10 | QMC-RPs | 0.184113 | 0.285325 | 0.379723 | 0.483171 | 0.604832 | 0.756110 | 0.956109 | 1.244060 |
| 10 | FH-RPs | 0.282780 | 0.583336 | 0.940531 | 1.377229 | 1.918490 | 2.601214 | 3.486682 | 4.689563 |
| 10 | PKM-RPs | 0.282778 | 0.583331 | 0.940523 | 1.377216 | 1.918472 | 2.601190 | 3.486653 | 4.689529 |
| 10 | NTLBG-RPs | 0.261260 | 0.518738 | 0.820360 | 1.189870 | 1.653057 | 2.248230 | 3.034748 | 4.122999 |
| 15 | QMC-RPs | 0.162420 | 0.237625 | 0.300876 | 0.363621 | 0.429742 | 0.501927 | 0.582866 | 0.675841 |
| 15 | FH-RPs | 0.230289 | 0.428358 | 0.643997 | 0.888024 | 1.167374 | 1.489236 | 1.862458 | 2.298770 |
| 15 | PKM-RPs | 0.230287 | 0.428352 | 0.643986 | 0.888008 | 1.167351 | 1.489207 | 1.862421 | 2.298726 |
| 15 | NTLBG-RPs | 0.177701 | 0.297268 | 0.420456 | 0.558135 | 0.718321 | 0.909491 | 1.142247 | 1.429964 |
| 20 | QMC-RPs | 0.149804 | 0.212175 | 0.261786 | 0.308643 | 0.355654 | 0.404373 | 0.455963 | 0.511506 |
| 20 | FH-RPs | 0.200694 | 0.350971 | 0.506274 | 0.674670 | 0.859939 | 1.065068 | 1.293080 | 1.547407 |
| 20 | PKM-RPs | 0.200685 | 0.350957 | 0.506254 | 0.674643 | 0.859904 | 1.065025 | 1.293027 | 1.547344 |
| 20 | NTLBG-RPs | 0.137958 | 0.209947 | 0.277620 | 0.347437 | 0.422782 | 0.506585 | 0.601923 | 0.712768 |
| 25 | QMC-RPs | 0.141253 | 0.195791 | 0.237625 | 0.275954 | 0.313307 | 0.350897 | 0.389506 | 0.429742 |
| 25 | FH-RPs | 0.181246 | 0.303859 | 0.426099 | 0.554903 | 0.693050 | 0.842349 | 1.004352 | 1.180611 |
| 25 | PKM-RPs | 0.181242 | 0.303849 | 0.426083 | 0.554879 | 0.693018 | 0.842308 | 1.004300 | 1.180549 |
| 25 | NTLBG-RPs | 0.120763 | 0.175902 | 0.224958 | 0.272780 | 0.321183 | 0.371487 | 0.424970 | 0.482953 |
| 28 | QMC-RPs | 0.137262 | 0.188371 | 0.226930 | 0.261786 | 0.295327 | 0.328667 | 0.362480 | 0.397263 |
| 28 | FH-RPs | 0.172376 | 0.283337 | 0.392099 | 0.505183 | 0.625066 | 0.753237 | 0.890873 | 1.039072 |
| 28 | PKM-RPs | 0.172371 | 0.283326 | 0.392081 | 0.505156 | 0.625031 | 0.753192 | 0.890818 | 1.039005 |
| 28 | NTLBG-RPs | 0.115454 | 0.165381 | 0.208911 | 0.250482 | 0.291565 | 0.333181 | 0.376107 | 0.421139 |
| 30 | QMC-RPs | 0.134937 | 0.184113 | 0.220862 | 0.253827 | 0.285325 | 0.316417 | 0.347735 | 0.379723 |
| 30 | FH-RPs | 0.167278 | 0.271810 | 0.373259 | 0.477921 | 0.588133 | 0.705240 | 0.830258 | 0.964093 |
| 30 | PKM-RPs | 0.167272 | 0.271798 | 0.373239 | 0.477893 | 0.588096 | 0.705193 | 0.830200 | 0.964023 |
| 30 | NTLBG-RPs | 0.112544 | 0.160032 | 0.200761 | 0.239180 | 0.277045 | 0.314999 | 0.353415 | 0.393126 |

| Number of points | category | RP9 | RP10 | RP11 | RP12 | RP13 | RP14 | RP15 | RP16 |
|---|---|---|---|---|---|---|---|---|---|
| 10 | QMC-RPs | 1.725360 | 2.922076 | | | | | | |
| 10 | FH-RPs | 6.466201 | 9.623196 | | | | | | |
| 10 | PKM-RPs | 6.466161 | 9.623159 | | | | | | |
| 10 | NTLBG-RPs | 5.750920 | 8.653409 | | | | | | |
| 15 | QMC-RPs | 0.785355 | 0.918136 | 1.085120 | 1.305995 | 1.621860 | 2.143034 | 3.411364 | |
| 15 | FH-RPs | 2.814540 | 3.433731 | 4.193535 | 5.156277 | 6.438620 | 8.301048 | 11.562225 | |
| 15 | PKM-RPs | 2.814488 | 3.433673 | 4.193470 | 5.156207 | 6.438548 | 8.300979 | 11.562190 | |
| 15 | NTLBG-RPs | 1.790194 | 2.248018 | 2.841775 | 3.632491 | 4.711291 | 6.288362 | 9.129651 | |
| 20 | QMC-RPs | 0.572167 | 0.639315 | 0.714672 | 0.800508 | 0.899950 | 1.017518 | 1.160114 | 1.339036 |
| 20 | FH-RPs | 1.832177 | 2.152538 | 2.515086 | 2.928504 | 3.404570 | 3.959822 | 4.618516 | 5.418287 |
| 20 | PKM-RPs | 1.832103 | 2.152453 | 2.514989 | 2.928395 | 3.404449 | 3.959688 | 4.618370 | 5.418128 |
| 20 | NTLBG-RPs | 0.844104 | 1.001872 | 1.193483 | 1.429018 | 1.721482 | 2.089041 | 2.555589 | 3.153091 |
| 25 | QMC-RPs | 0.472161 | 0.517322 | 0.565834 | 0.618395 | 0.675841 | 0.739201 | 0.809779 | 0.889279 |
| 25 | FH-RPs | 1.372816 | 1.582888 | 1.813084 | 2.066105 | 2.345241 | 2.654576 | 2.999263 | 3.385945 |
| 25 | PKM-RPs | 1.372742 | 1.582803 | 1.812987 | 2.065994 | 2.345118 | 2.654439 | 2.999113 | 3.385782 |
| 25 | NTLBG-RPs | 0.547016 | 0.618788 | 0.700777 | 0.795894 | 0.907606 | 1.040801 | 1.201331 | 1.396686 |
| 28 | QMC-RPs | 0.433433 | 0.471381 | 0.511506 | 0.554238 | 0.600059 | 0.649532 | 0.703326 | 0.762262 |
| 28 | FH-RPs | 1.198965 | 1.371784 | 1.558920 | 1.761974 | 1.982824 | 2.223707 | 2.487319 | 2.776958 |
| 28 | PKM-RPs | 1.198887 | 1.371694 | 1.558816 | 1.761856 | 1.982693 | 2.223561 | 2.487158 | 2.776784 |
| 28 | NTLBG-RPs | 0.469147 | 0.521023 | 0.577773 | 0.640895 | 0.712346 | 0.794621 | 0.890395 | 1.003197 |
| 30 | QMC-RPs | 0.412743 | 0.447120 | 0.483171 | 0.521227 | 0.561647 | 0.604832 | 0.651251 | 0.701455 |
| 30 | FH-RPs | 1.107649 | 1.261883 | 1.427848 | 1.606732 | 1.799900 | 2.008942 | 2.235732 | 2.482506 |
| 30 | PKM-RPs | 1.107567 | 1.261788 | 1.427739 | 1.606609 | 1.799763 | 2.008790 | 2.235565 | 2.482323 |
| 30 | NTLBG-RPs | 0.434701 | 0.478539 | 0.525587 | 0.576829 | 0.633202 | 0.696344 | 0.768272 | 0.850992 |

| Number of points | category | RP17 | RP18 | RP19 | RP20 | RP21 | RP22 | RP23 | RP24 |
|---|---|---|---|---|---|---|---|---|---|
| 20 | QMC-RPs | 1.574661 | 1.909444 | 2.456955 | 3.771838 | | | | |
| 20 | FH-RPs | 6.422164 | 7.748047 | 9.658992 | 12.981382 | | | | |
| 20 | PKM-RPs | 6.421995 | 7.747864 | 9.658797 | 12.981110 | | | | |
| 20 | NTLBG-RPs | 3.934887 | 4.993269 | 6.539831 | 9.328942 | | | | |
| 25 | QMC-RPs | 0.979995 | 1.085120 | 1.209288 | 1.359587 | 1.547621 | 1.794286 | 2.143034 | 2.709786 |
| 25 | FH-RPs | 3.823382 | 4.323461 | 4.902871 | 5.586080 | 6.411034 | 7.441252 | 8.795492 | 10.738640 |
| 25 | PKM-RPs | 3.823206 | 4.323273 | 4.902671 | 5.585868 | 6.410811 | 7.441019 | 8.795250 | 10.738390 |
| 25 | NTLBG-RPs | 1.636442 | 1.932897 | 2.303347 | 2.772290 | 3.376042 | 4.167427 | 5.223196 | 6.759912 |
| 28 | QMC-RPs | 0.827366 | 0.899950 | 0.981735 | 1.075041 | 1.183105 | 1.310626 | 1.464787 | 1.657343 |
| 28 | FH-RPs | 3.096724 | 3.451793 | 3.848838 | 4.296661 | 4.807203 | 5.397224 | 6.091291 | 6.927496 |
| 28 | PKM-RPs | 3.096535 | 3.451589 | 3.848621 | 4.296431 | 4.806960 | 5.396969 | 6.091024 | 6.927220 |
| 28 | NTLBG-RPs | 1.138059 | 1.300997 | 1.498690 | 1.740975 | 2.041688 | 2.416649 | 2.887119 | 3.486758 |
| 30 | QMC-RPs | 0.756110 | 0.816036 | 0.882262 | 0.956109 | 1.039311 | 1.134207 | 1.244060 | 1.373608 |
| 30 | FH-RPs | 2.751966 | 3.047418 | 3.372972 | 3.733821 | 4.136655 | 4.590302 | 5.106733 | 5.702749 |
| 30 | PKM-RPs | 2.751767 | 3.047204 | 3.372743 | 3.733576 | 4.136396 | 4.590028 | 5.106445 | 5.702449 |
| 30 | NTLBG-RPs | 0.947489 | 1.061775 | 1.198136 | 1.362230 | 1.561387 | 1.805856 | 2.107466 | 2.482508 |

| Number of points | category | RP25 | RP26 | RP27 | RP28 | RP29 | RP30 | RP31 | RP32 |
|---|---|---|---|---|---|---|---|---|---|
| 25 | QMC-RPs | 4.058467 | | | | | | | |
| 25 | FH-RPs | 14.102428 | | | | | | | |
| 25 | PKM-RPs | 14.102179 | | | | | | | |
| 25 | NTLBG-RPs | 9.558364 | | | | | | | |
| 28 | QMC-RPs | 1.909444 | 2.265027 | 2.841156 | 4.206263 | | | | |
| 28 | FH-RPs | 7.969590 | 9.336769 | 11.294769 | 14.677905 | | | | |
| 28 | PKM-RPs | 7.969308 | 9.336459 | 11.294434 | 14.677578 | | | | |
| 28 | NTLBG-RPs | 4.267639 | 5.324128 | 6.847529 | 9.558364 | | | | |
| 30 | QMC-RPs | 1.530093 | 1.725360 | 1.980711 | 2.340368 | 2.922076 | 4.296947 | | |
| 30 | FH-RPs | 6.402978 | 7.245596 | 8.294494 | 9.669086 | 11.635645 | 15.030035 | | |
| 30 | PKM-RPs | 6.402665 | 7.245272 | 8.294160 | 9.668744 | 11.635300 | 15.029713 | | |
| 30 | NTLBG-RPs | 2.954570 | 3.555009 | 4.328302 | 5.355569 | 6.847529 | 9.558364 | | |

Table A7.

The corresponding probabilities of RPs from .

| Number of points | category | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 |
|---|---|---|---|---|---|---|---|---|---|
| 5 | QMC-RPs | 0.200000 | 0.200000 | 0.200000 | 0.200000 | 0.200000 | | | |
| 5 | FH-RPs | 0.543429 | 0.280605 | 0.122293 | 0.044281 | 0.009393 | | | |
| 5 | PKM-RPs | 0.543427 | 0.280605 | 0.122294 | 0.044281 | 0.009393 | | | |
| 5 | NTLBG-RPs | 0.539400 | 0.281200 | 0.123800 | 0.045600 | 0.010000 | | | |
| 10 | QMC-RPs | 0.100000 | 0.100000 | 0.100000 | 0.100000 | 0.100000 | 0.100000 | 0.100000 | 0.100000 |
| 10 | FH-RPs | 0.303210 | 0.250172 | 0.171229 | 0.113110 | 0.072568 | 0.044597 | 0.025567 | 0.013023 |
| 10 | PKM-RPs | 0.303207 | 0.250171 | 0.171229 | 0.113111 | 0.072568 | 0.044598 | 0.025567 | 0.013024 |
| 10 | NTLBG-RPs | 0.259600 | 0.235000 | 0.174400 | 0.124400 | 0.085800 | 0.056800 | 0.034600 | 0.019000 |
| 15 | QMC-RPs | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 |
| 15 | FH-RPs | 0.197129 | 0.198779 | 0.159822 | 0.123196 | 0.093408 | 0.069955 | 0.051655 | 0.037432 |
| 15 | PKM-RPs | 0.197125 | 0.198777 | 0.159821 | 0.123197 | 0.093409 | 0.069956 | 0.051656 | 0.037433 |
| 15 | NTLBG-RPs | 0.099000 | 0.127800 | 0.126600 | 0.118600 | 0.108000 | 0.096200 | 0.083600 | 0.070200 |
| 20 | QMC-RPs | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 |
| 20 | FH-RPs | 0.139870 | 0.159043 | 0.140258 | 0.117302 | 0.096240 | 0.078195 | 0.063079 | 0.050521 |
| 20 | PKM-RPs | 0.139858 | 0.159039 | 0.140256 | 0.117302 | 0.096241 | 0.078196 | 0.063081 | 0.050523 |
| 20 | NTLBG-RPs | 0.041600 | 0.064000 | 0.072600 | 0.076000 | 0.077400 | 0.077400 | 0.076600 | 0.075200 |
| 25 | QMC-RPs | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 |
| 25 | FH-RPs | 0.105020 | 0.129728 | 0.121821 | 0.107483 | 0.092647 | 0.078981 | 0.066896 | 0.056387 |
| 25 | PKM-RPs | 0.105013 | 0.129722 | 0.121816 | 0.107481 | 0.092646 | 0.078981 | 0.066897 | 0.056389 |
| 25 | NTLBG-RPs | 0.024000 | 0.040000 | 0.047200 | 0.051000 | 0.052400 | 0.053600 | 0.054000 | 0.054800 |
| 28 | QMC-RPs | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 |
| 28 | FH-RPs | 0.090203 | 0.115859 | 0.112045 | 0.101330 | 0.089310 | 0.077762 | 0.067244 | 0.057882 |
| 28 | PKM-RPs | 0.090195 | 0.115852 | 0.112040 | 0.101326 | 0.089309 | 0.077761 | 0.067245 | 0.057884 |
| 28 | NTLBG-RPs | 0.019600 | 0.032800 | 0.039600 | 0.042600 | 0.044200 | 0.044800 | 0.045200 | 0.045400 |
| 30 | QMC-RPs | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 |
| 30 | FH-RPs | 0.082054 | 0.107826 | 0.106082 | 0.097321 | 0.086883 | 0.076561 | 0.066981 | 0.058328 |
| 30 | PKM-RPs | 0.082046 | 0.107817 | 0.106076 | 0.097317 | 0.086881 | 0.076561 | 0.066981 | 0.058329 |
| 30 | NTLBG-RPs | 0.017400 | 0.029600 | 0.035400 | 0.038600 | 0.040400 | 0.040800 | 0.040800 | 0.041200 |

| Number of points | category | P9 | P10 | P11 | P12 | P13 | P14 | P15 | P16 |
|---|---|---|---|---|---|---|---|---|---|
| 10 | QMC-RPs | 0.100000 | 0.100000 | | | | | | |
| 10 | FH-RPs | 0.005301 | 0.001224 | | | | | | |
| 10 | PKM-RPs | 0.005301 | 0.001224 | | | | | | |
| 10 | NTLBG-RPs | 0.008200 | 0.002200 | | | | | | |
| 15 | QMC-RPs | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | 0.066667 | |
| 15 | FH-RPs | 0.026432 | 0.018001 | 0.011639 | 0.006965 | 0.003678 | 0.001544 | 0.000366 | |
| 15 | PKM-RPs | 0.026433 | 0.018002 | 0.011640 | 0.006965 | 0.003679 | 0.001544 | 0.000366 | |
| 15 | NTLBG-RPs | 0.056600 | 0.043400 | 0.031200 | 0.020400 | 0.011400 | 0.005400 | 0.001600 | |
| 20 | QMC-RPs | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 | 0.050000 |
| 20 | FH-RPs | 0.040125 | 0.031535 | 0.024455 | 0.018643 | 0.013901 | 0.010068 | 0.007012 | 0.004625 |
| 20 | PKM-RPs | 0.040127 | 0.031537 | 0.024457 | 0.018645 | 0.013902 | 0.010069 | 0.007013 | 0.004626 |
| 20 | NTLBG-RPs | 0.072800 | 0.069000 | 0.063800 | 0.057400 | 0.049600 | 0.041200 | 0.032000 | 0.023200 |
| 25 | QMC-RPs | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 |
| 25 | FH-RPs | 0.047317 | 0.039514 | 0.032814 | 0.027069 | 0.022149 | 0.017945 | 0.014364 | 0.011327 |
| 25 | PKM-RPs | 0.047319 | 0.039516 | 0.032817 | 0.027071 | 0.022151 | 0.017947 | 0.014365 | 0.011328 |
| 25 | NTLBG-RPs | 0.055200 | 0.055600 | 0.056200 | 0.056200 | 0.055800 | 0.054800 | 0.052600 | 0.049400 |
| 28 | QMC-RPs | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 |
| 28 | FH-RPs | 0.049638 | 0.042416 | 0.036105 | 0.030598 | 0.025797 | 0.021618 | 0.017984 | 0.014831 |
| 28 | PKM-RPs | 0.049640 | 0.042418 | 0.036107 | 0.030600 | 0.025800 | 0.021620 | 0.017986 | 0.014833 |
| 28 | NTLBG-RPs | 0.045800 | 0.046000 | 0.046400 | 0.047000 | 0.047800 | 0.048600 | 0.048800 | 0.048800 |
| 30 | QMC-RPs | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 |
| 30 | FH-RPs | 0.050614 | 0.043782 | 0.037752 | 0.032439 | 0.027763 | 0.023651 | 0.020039 | 0.016869 |
| 30 | PKM-RPs | 0.050616 | 0.043785 | 0.037755 | 0.032442 | 0.027765 | 0.023653 | 0.020041 | 0.016872 |
| 30 | NTLBG-RPs | 0.041000 | 0.041000 | 0.041400 | 0.041800 | 0.042200 | 0.043200 | 0.044000 | 0.044600 |

| Number of points | category | P17 | P18 | P19 | P20 | P21 | P22 | P23 | P24 |
|---|---|---|---|---|---|---|---|---|---|
| 20 | QMC-RPs | 0.050000 | 0.050000 | 0.050000 | 0.050000 | | | | |
| 20 | FH-RPs | 0.002817 | 0.001512 | 0.000644 | 0.000154 | | | | |
| 20 | PKM-RPs | 0.002818 | 0.001512 | 0.000644 | 0.000154 | | | | |
| 20 | NTLBG-RPs | 0.015400 | 0.009000 | 0.004400 | 0.001400 | | | | |
| 25 | QMC-RPs | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 | 0.040000 |
| 25 | FH-RPs | 0.008767 | 0.006629 | 0.004862 | 0.003426 | 0.002284 | 0.001405 | 0.000761 | 0.000327 |
| 25 | PKM-RPs | 0.008769 | 0.006629 | 0.004863 | 0.003426 | 0.002284 | 0.001405 | 0.000761 | 0.000327 |
| 25 | NTLBG-RPs | 0.044800 | 0.039200 | 0.032800 | 0.026000 | 0.019200 | 0.012800 | 0.007400 | 0.003800 |
| 28 | QMC-RPs | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 | 0.035714 |
| 28 | FH-RPs | 0.012104 | 0.009754 | 0.007739 | 0.006025 | 0.004579 | 0.003375 | 0.002389 | 0.001600 |
| 28 | PKM-RPs | 0.012105 | 0.009755 | 0.007740 | 0.006026 | 0.004580 | 0.003376 | 0.002390 | 0.001600 |
| 28 | NTLBG-RPs | 0.048200 | 0.046600 | 0.043600 | 0.040000 | 0.035200 | 0.029400 | 0.023200 | 0.017200 |
| 30 | QMC-RPs | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 |
| 30 | FH-RPs | 0.014094 | 0.011670 | 0.009559 | 0.007730 | 0.006153 | 0.004805 | 0.003662 | 0.002706 |
| 30 | PKM-RPs | 0.014096 | 0.011672 | 0.009561 | 0.007731 | 0.006154 | 0.004806 | 0.003663 | 0.002707 |
| 30 | NTLBG-RPs | 0.045200 | 0.045400 | 0.044600 | 0.043200 | 0.040600 | 0.037400 | 0.032600 | 0.027600 |

| Number of points | category | P25 | P26 | P27 | P28 | P29 | P30 | P31 | P32 |
|---|---|---|---|---|---|---|---|---|---|
| 25 | QMC-RPs | 0.040000 | | | | | | | |
| 25 | FH-RPs | 0.000079 | | | | | | | |
| 25 | PKM-RPs | 0.000079 | | | | | | | |
| 25 | NTLBG-RPs | 0.001200 | | | | | | | |
| 28 | QMC-RPs | 0.035714 | 0.035714 | 0.035714 | 0.035714 | | | | |
| 28 | FH-RPs | 0.000988 | 0.000537 | 0.000231 | 0.000056 | | | | |
| 28 | PKM-RPs | 0.000988 | 0.000537 | 0.000231 | 0.000056 | | | | |
| 28 | NTLBG-RPs | 0.011600 | 0.007000 | 0.003400 | 0.001200 | | | | |
| 30 | QMC-RPs | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | 0.033333 | | |
| 30 | FH-RPs | 0.001920 | 0.001289 | 0.000798 | 0.000434 | 0.000188 | 0.000046 | | |
| 30 | PKM-RPs | 0.001921 | 0.001289 | 0.000798 | 0.000435 | 0.000188 | 0.000046 | | |
| 30 | NTLBG-RPs | 0.021800 | 0.016200 | 0.010800 | 0.006600 | 0.003400 | 0.001200 | | |
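
The equal probabilities listed for the QMC-RPs are consistent with taking the k points as quantiles at the levels (2i − 1)/(2k), i = 1, ..., k, each with weight 1/k. The following minimal sketch reproduces points of this form under the assumption that the underlying distribution is IG(1, 1), whose median (about 0.6758) agrees with the tabulated middle QMC point for five points. The function names and the bisection-based quantile routine are illustrative choices, not taken from the paper.

```python
import math


def norm_cdf(z):
    """Standard normal CDF, written via erfc for accuracy in the tails."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def ig_cdf(x, mu, lam):
    """CDF of IG(mu, lam) at x > 0."""
    r = math.sqrt(lam / x)
    return (norm_cdf(r * (x / mu - 1.0))
            + math.exp(2.0 * lam / mu) * norm_cdf(-r * (x / mu + 1.0)))


def ig_quantile(p, mu, lam, tol=1e-10):
    """Quantile of IG(mu, lam) by bisection on the CDF, for 0 < p < 1."""
    lo, hi = 0.0, 1.0
    while ig_cdf(hi, mu, lam) < p:       # expand until the bracket contains p
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ig_cdf(mid, mu, lam) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def qmc_rps(k, mu, lam):
    """Equal-weight points at the quantile levels (2i - 1) / (2k)."""
    points = [ig_quantile((2 * i - 1) / (2 * k), mu, lam) for i in range(1, k + 1)]
    weights = [1.0 / k] * k
    return points, weights


points, weights = qmc_rps(5, 1.0, 1.0)
```

The FH, PKM, and NTLBG points are not reproduced by this construction, since they come from iterative algorithms with unequal probabilities.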

References

- Schrödinger, E. Zur Theorie der Fall- und Steigversuche an Teilchen mit Brownscher Bewegung. Z. Phys. Chem. 1915, 16, 289–295. [Google Scholar]

- Tweedie, K.C.M. Inverse Statistical Variates. Nature 1945, 155, 453. [Google Scholar] [CrossRef]

- Wald, A. Sequential Analysis; Courier Corporation: Chelmsford, MA, USA, 2004. [Google Scholar]

- Cox, D.R.; Miller, H.D. The Theory of Stochastic Processes; Methuen: London, UK, 1965. [Google Scholar]

- Bartlett, M.S. An Introduction to Stochastic Processes; Cambridge University Press: London, UK, 1966. [Google Scholar]

- Moran, P.A.P. An Introduction to Probability Theory; Clarendon Press: Oxford, UK, 1968. [Google Scholar]

- Wasan, M.T.; Roy, L.K. Tables of Inverse Gaussian Percentage Points. Technometrics 1969, 11, 591–604. [Google Scholar] [CrossRef]

- Folks, J.L.; Chhikara, R.S. The Inverse Gaussian Distribution and Its Statistical Application—A Review. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 1978, 40, 263–275. [Google Scholar] [CrossRef]

- Chhikara, R.S.; Folks, J.L. Estimation of Inverse Gaussian Distribution Function. J. Am. Stat. Assoc. 1974, 69, 250–254. [Google Scholar] [CrossRef]

- Chhikara, R.S.; Folks, J.L. The Inverse Gaussian Distribution as a Lifetime Model. Technometrics 1977, 19, 461–468. [Google Scholar] [CrossRef]

- Iyengar, S.; Patwardhan, G. Recent Developments in the Inverse Gaussian Distribution. In Handbook of Statistics; Elsevier: Amsterdam, The Netherlands, 1988; Volume 7, pp. 479–490. [Google Scholar]

- Kourogiorgas, C.; Panagopoulos, A.D.; Livieratos, S.N.; Chatzarakis, G.E. Rain Attenuation Time Series Synthesizer Based on Inverse Gaussian Distribution. Electron. Lett. 2015, 51, 2162–2164.

- Punzo, A. A New Look at the Inverse Gaussian Distribution with Applications to Insurance and Economic Data. J. Appl. Stat. 2019, 46, 1260–1287.

- Krbálek, M.; Hobza, T.; Patočka, M.; Krbálková, M.; Apeltauer, J.; Groverová, N. Statistical Aspects of Gap-Acceptance Theory for Unsignalized Intersection Capacity. Phys. A 2022, 594, 127043.

- Fang, K.T.; Pan, J. A Review of Representative Points of Statistical Distributions and Their Applications. Mathematics 2023, 11, 2930.

- Li, X.; He, P.; Huang, M.; Peng, X. A new class of moment-constrained mean square error representative samples for continuous distributions. J. Stat. Comput. Simul. 2025, 95, 2175–2203.

- Peng, X.; Huang, M.; Li, X.; Zhou, T.; Lin, G.; Wang, X. Patient regional index: A new way to rank clinical specialties based on outpatient clinics big data. BMC Med. Res. Methodol. 2024, 24, 192.

- Fang, K.T.; Ye, H.; Zhou, Y. Representative Points of Statistical Distributions and Their Applications in Statistical Inference; CRC Press: New York, NY, USA, 2025.

- Harrell, F.E.; Davis, C.E. A new distribution-free quantile estimator. Biometrika 1982, 69, 635–640.

- Sfakianakis, M.E.; Verginis, D.G. A new family of nonparametric quantile estimators. Commun. Stat. Simul. Comput. 2008, 37, 337–345.

- Wald, A. Sequential Analysis; John Wiley & Sons: New York, NY, USA, 1947.

- Tweedie, M.C.K. Statistical Properties of Inverse Gaussian Distributions. II. Ann. Math. Stat. 1957, 28, 696–705.

- Fang, K.T.; He, S.D. The problem of selecting a given number of representative points in a normal population and a generalized Mills’ ratio. Acta Math. Appl. Sin. 1984, 7, 293–306.

- Efron, B. Bootstrap methods: Another look at the jackknife. Ann. Stat. 1979, 7, 1–26.

- Hua, L.K.; Wang, Y. Applications of Number Theory to Numerical Analysis; Springer: Berlin, Germany; Science Press: Beijing, China, 1981.

- Niederreiter, H. Random Number Generation and Quasi-Monte Carlo Methods; SIAM: Philadelphia, PA, USA, 1992.

- Fang, K.T.; Wang, Y. Number-Theoretic Methods in Statistics; Chapman and Hall: London, UK, 1994.

- Barbiero, A.; Hitaj, A. Discrete approximations of continuous probability distributions obtained by minimizing Cramér–von Mises-type distances. Stat. Pap. 2022, 64, 1–29.

- Flury, B.A. Principal points. Biometrika 1990, 77, 33–41.

- Cox, D.R. Note on grouping. J. Am. Stat. Assoc. 1957, 52, 543–547.

- Linde, Y.; Buzo, A.; Gray, R. An algorithm for vector quantizer design. IEEE Trans. Commun. 1980, 28, 84–95.

- Lloyd, S.P. Least squares quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

- Tarpey, T. A parametric k-means algorithm. Comput. Stat. 2007, 22, 71–89.

- Rosenblatt, M. Remarks on some nonparametric estimates of a density function. Ann. Math. Stat. 1956, 27, 832–837.

- Parzen, E. On estimation of a probability density function and mode. Ann. Math. Stat. 1962, 33, 1065–1076.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).