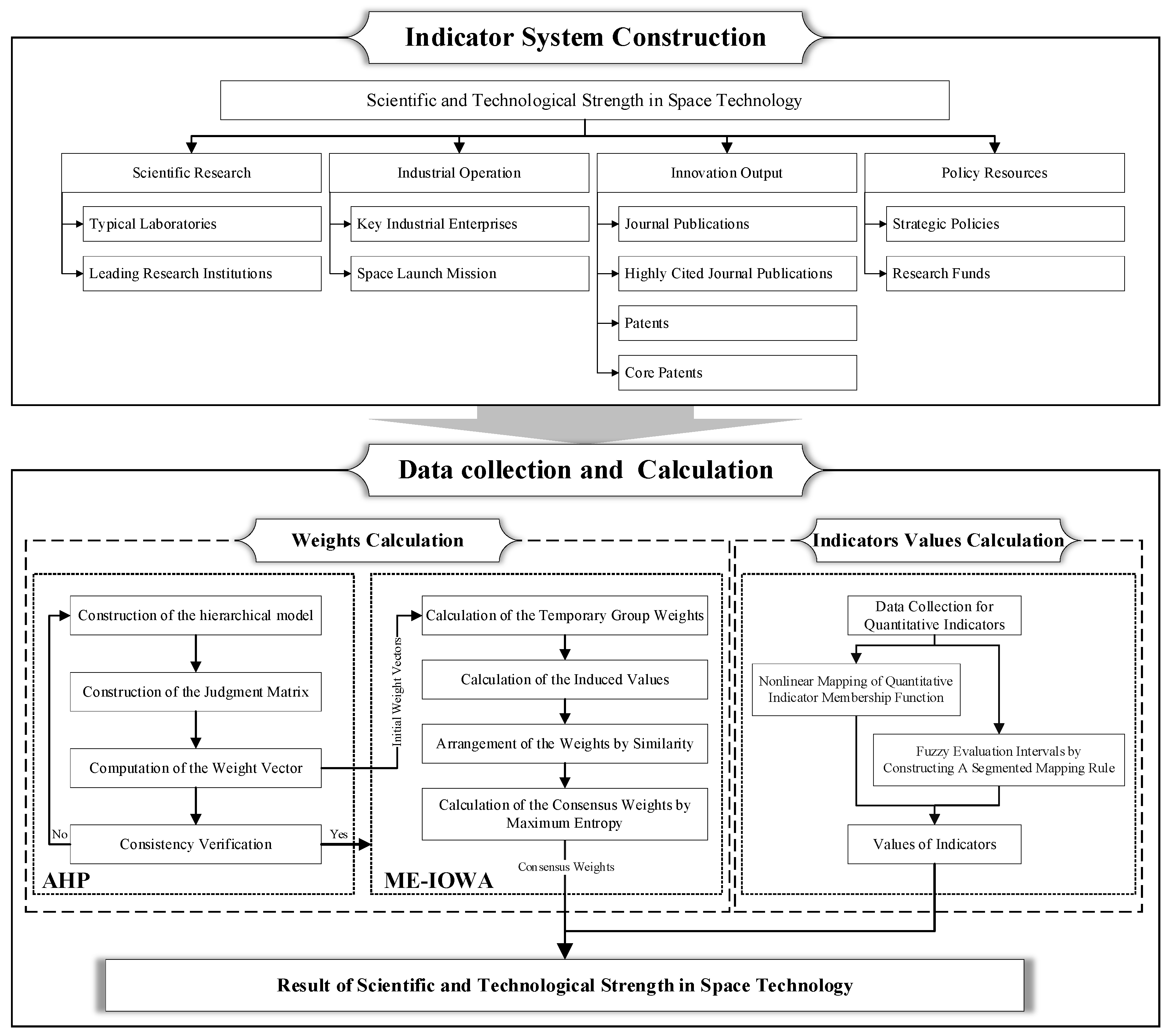

Calculating indicator weights is a core step in a multi-indicator evaluation system, because the weight coefficient directly reflects the relative importance of each indicator in the evaluation context. More important indicators should receive larger weight coefficients. This is crucial for the scientific soundness and reliability of the final evaluation results. To determine the indicator weights accurately, this section combines two methods with complementary strengths. First, the AHP model converts each expert's qualitative assessments of indicator importance into that expert's quantitative weight coefficients. Second, practical multi-indicator evaluation problems are complex and experts differ in background, so the maximal-entropy induced ordered weighted averaging (ME-IOWA) method is introduced to adjust the experts' weights toward a consensus. This combination integrates the opinions of multiple experts and automatically weakens the impact of outlier judgments, which improves the robustness of the final consensus weights.

2.2.1. AHP-Based Expert Weighting

The weight reflects the importance of a factor or indicator in a given context [29]. In this work, we employed the AHP model to determine the weight of each indicator from expert qualitative assessments. AHP was developed by Thomas L. Saaty in the late 1970s and early 1980s [30] and has since been widely applied across many fields [31,32,33]. It derives indicator weights from experts' pairwise comparisons of importance rather than from directly assigned values, which reduces subjective bias, and it includes a consistency check on those comparisons.

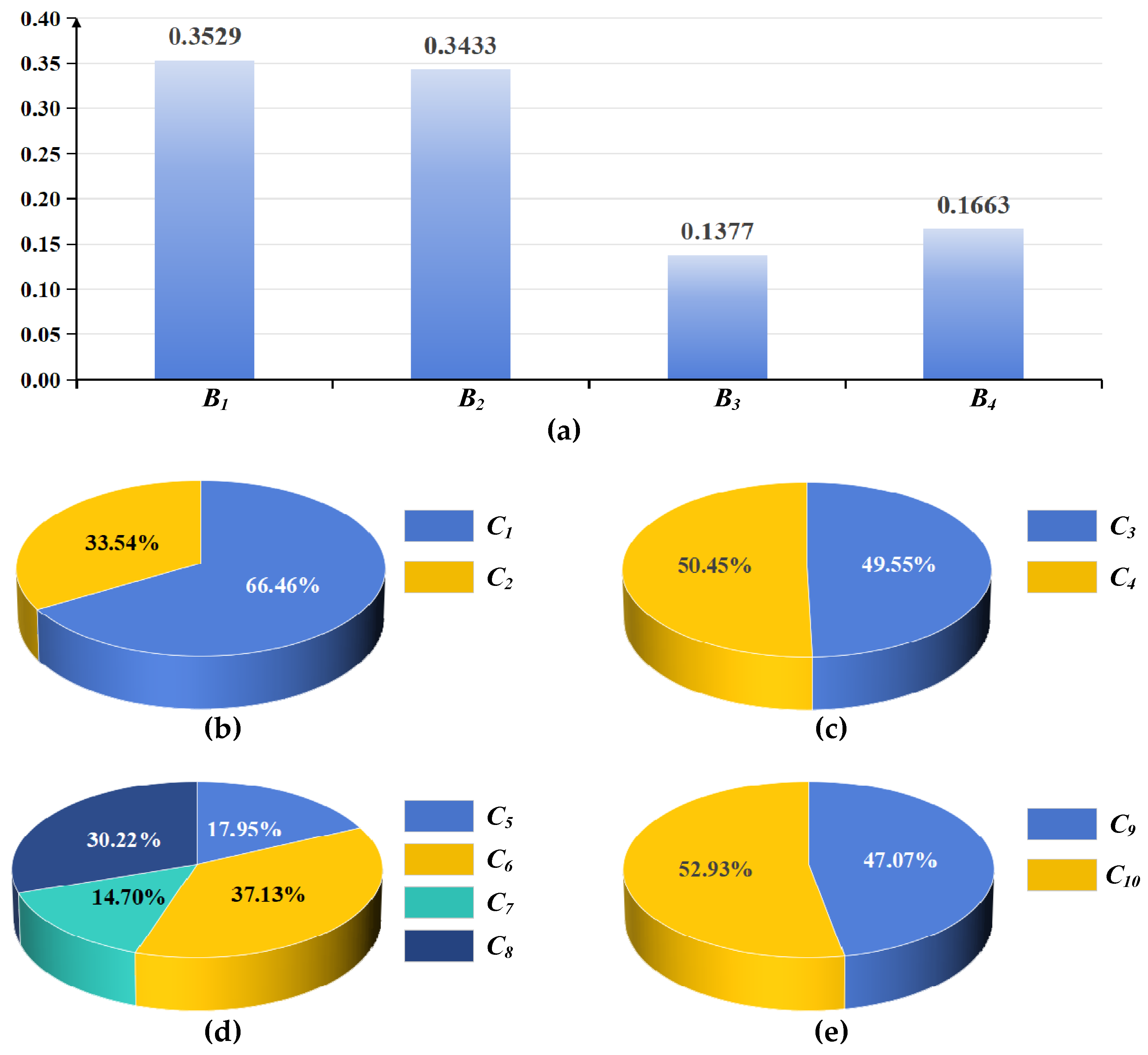

Based on the AHP model, the STSST is designated as the target layer A. Scientific research, industrial operation, innovation output, and policy resources form the criterion layer B. The individual indicators, such as typical laboratories, leading research institutions, key industrial enterprises, journal publications, highly cited journal publications, patents, and core patents, form the indicator layer C. Together, these layers constitute the hierarchical model of the quantitative evaluation indicator system for STSST.

A judgment matrix compares the relative importance of all factors in the current layer with respect to one factor in the layer above. Based on the established hierarchical structure model, the judgment matrix $A$-$B_i$ ($i = 1, 2, 3, 4$) was constructed. The relative importance ratings among the criteria were obtained through a combination of questionnaire surveys and expert consultations, using pairwise comparisons on the 1–9 scale.

$b_{ij}$ denotes the quantitative value of the degree to which criterion $B_i$ is more important than criterion $B_j$ ($j = 1, 2, 3, 4$), as shown in Table 2.

The judgment matrix $A$-$B_i$ is the criterion-layer judgment matrix for the target layer A. It quantifies the relative importance of each criterion-layer factor (Scientific Research $B_1$, Industrial Operation $B_2$, Innovation Output $B_3$, and Policy Resources $B_4$) with respect to the target layer STSST. Its rows and columns are the four criterion-layer factors, as shown in Table 3. Specifically:

The diagonal elements ($b_{11} = b_{22} = \dots = 1$) indicate that each factor is equally important to itself, which follows from the reciprocity rule of the judgment matrix ($b_{ii} = 1/b_{ii}$ implies $b_{ii} = 1$);

The off-diagonal elements satisfy $b_{ij} = 1/b_{ji}$ (e.g., $b_{12} = 1/b_{21}$) and quantify the importance of the row factor relative to the column factor.

This matrix serves as a key quantitative basis for subsequently calculating the weight of each criterion-layer factor.
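The construction rules above can be illustrated with a short Python sketch. It fills a positive reciprocal judgment matrix from the upper-triangle ratings only, then verifies the diagonal and reciprocity rules. The function names and the example ratings are hypothetical and are not this study's survey data. Exact fractions are used so that $b_{ij} b_{ji} = 1$ can be checked without rounding error.

```python
from fractions import Fraction

# Admissible ratings on Saaty's 1-9 scale: 1..9 and their reciprocals.
SCALE = {Fraction(k) for k in range(1, 10)} | {Fraction(1, k) for k in range(1, 10)}


def build_judgment_matrix(n, upper):
    """Fill an n x n positive reciprocal matrix from upper-triangle ratings.

    `upper` maps 0-based pairs (i, j) with i < j to b_ij. The diagonal is 1
    and each lower-triangle entry is the reciprocal b_ji = 1 / b_ij.
    """
    expected = {(i, j) for i in range(n) for j in range(i + 1, n)}
    if set(upper) != expected:
        raise ValueError("ratings must cover every pair (i, j) with i < j exactly once")
    b = [[Fraction(1)] * n for _ in range(n)]
    for (i, j), rating in upper.items():
        value = Fraction(rating)
        if value not in SCALE:
            raise ValueError(f"b[{i}][{j}] = {value} is not on the 1-9 scale")
        b[i][j] = value
        b[j][i] = 1 / value
    return b


def is_positive_reciprocal(b):
    """Check b_ii = 1 and b_ij * b_ji = 1 with all entries positive."""
    n = len(b)
    reciprocal = all(
        b[i][j] > 0 and b[i][j] * b[j][i] == 1 for i in range(n) for j in range(n)
    )
    return reciprocal and all(b[i][i] == 1 for i in range(n))


# Hypothetical ratings for B1..B4 (not the study's survey data).
ratings = {(0, 1): 2, (0, 2): 3, (0, 3): 5, (1, 2): 2, (1, 3): 3, (2, 3): 2}
matrix_ab = build_judgment_matrix(4, ratings)
```

Entering only the upper triangle guarantees reciprocity by construction, so experts cannot give contradictory values for $b_{ij}$ and $b_{ji}$.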

Similarly, the indicator-layer judgment matrices $B_1$-$C_i$ ($i = 1, 2$), $B_2$-$C_i$ ($i = 3, 4$), $B_3$-$C_i$ ($i = 5, 6, 7, 8$), and $B_4$-$C_i$ ($i = 9, 10$) can be constructed.

Based on the constructed judgment matrix $A$-$B_i$, we calculate its maximum eigenvalue $\lambda_{\max}$ and the corresponding eigenvector $W_A = (\omega_{A1}, \omega_{A2}, \omega_{A3}, \omega_{A4})^T$. Here $\omega_{Ai}$ is the weight of criterion $B_i$ with respect to the overall target A, the quantitative evaluation indicator system for STSST. The eigenvector is approximated in three steps. Each column of the judgment matrix is normalized, the normalized columns are summed row by row, and the resulting row sums are normalized once more to give the weight vector of the judgment matrix. Writing $\mathbf{B} = (b_{ij})_{4 \times 4}$ for the judgment matrix $A$-$B_i$ ($i = 1, 2, 3, 4$), its maximum eigenvalue $\lambda_A$ is then estimated as:

$$\lambda_A = \frac{1}{4}\sum_{i=1}^{4}\frac{(\mathbf{B}W_A)_i}{\omega_{Ai}}$$

Similarly, the maximum eigenvalues $\lambda_{B1}$, $\lambda_{B2}$, $\lambda_{B3}$, and $\lambda_{B4}$ are calculated for the judgment matrices $B_1$-$C_i$ ($i = 1, 2$), $B_2$-$C_i$ ($i = 3, 4$), $B_3$-$C_i$ ($i = 5, 6, 7, 8$), and $B_4$-$C_i$ ($i = 9, 10$).
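The column-normalization procedure described above can be sketched in Python. It is paired here with the common estimate $\lambda_{\max} \approx \frac{1}{n}\sum_i (\mathbf{B}w)_i / w_i$. This is an illustrative implementation that assumes the judgment matrix is a list of rows, and the function names are hypothetical. The same functions apply to $A$-$B_i$ and to each $B_k$-$C_i$ matrix.

```python
def ahp_weights(b):
    """Approximate the AHP priority vector with the column-normalization (sum) method."""
    n = len(b)
    col_sums = [sum(b[i][j] for i in range(n)) for j in range(n)]
    # Normalize each column, then add up each row of the normalized matrix.
    row_sums = [sum(b[i][j] / col_sums[j] for j in range(n)) for i in range(n)]
    # Normalize the row sums once more so that the weights add up to 1.
    total = sum(row_sums)
    return [r / total for r in row_sums]


def estimate_lambda_max(b, w):
    """Estimate lambda_max as the average of (B w)_i / w_i over all rows."""
    n = len(b)
    bw = [sum(b[i][j] * w[j] for j in range(n)) for i in range(n)]
    return sum(bw[i] / w[i] for i in range(n)) / n


# Hypothetical A-B judgment matrix (rows/columns: B1..B4), not survey data.
b_ab = [
    [1, 2, 3, 5],
    [1 / 2, 1, 2, 3],
    [1 / 3, 1 / 2, 1, 2],
    [1 / 5, 1 / 3, 1 / 2, 1],
]
w_a = ahp_weights(b_ab)
lambda_a = estimate_lambda_max(b_ab, w_a)
```

For a perfectly consistent matrix, $b_{ij} = w_i / w_j$, and the sum method returns $w$ exactly with $\lambda_{\max} = n$. For mildly inconsistent matrices, it is a close approximation to the principal eigenvector.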

In the AHP process, a consistent matrix represents the ideal state of a judgment matrix. Its entries satisfy $b_{ij} b_{jk} = b_{ik}$ for all $i, j, k$, which reflects fully rational and mutually consistent judgments by the decision-maker. In practice, constructing a fully consistent judgment matrix is generally impractical because of the complexity of real-world scenarios and the inherent uncertainty of human judgment. Therefore, the degree of consistency is evaluated by measuring how far the actual judgment matrix deviates from a consistent matrix, so that the deviation remains within an acceptable range.

Theorem 1. If matrix A is a consistent matrix, its maximum eigenvalue λmax = n, where n denotes the order of matrix A. All other eigenvalues of A are 0.

Theorem 2. An n-order positive reciprocal matrix is a consistent matrix if and only if its maximum eigenvalue λmax = n. Moreover, if the positive reciprocal matrix is inconsistent, its maximum eigenvalue must satisfy λmax > n.
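Theorems 1 and 2 can be checked numerically. The sketch below uses an illustrative pure-Python power iteration, not the procedure of this study, to compute the principal eigenvalue of a positive matrix. It also tests the consistency condition $b_{ij} b_{jk} = b_{ik}$ directly. A consistent matrix is built as $b_{ij} = w_i / w_j$ from a hypothetical weight vector, and a second, hypothetical matrix violates the condition.

```python
def is_consistent(b, rel_tol=1e-9):
    """True if b_ij * b_jk == b_ik (within rel_tol) for all i, j, k."""
    n = len(b)
    return all(
        abs(b[i][j] * b[j][k] - b[i][k]) <= rel_tol * b[i][k]
        for i in range(n)
        for j in range(n)
        for k in range(n)
    )


def principal_eigenvalue(b, max_iter=1000, tol=1e-12):
    """Power iteration for the Perron (largest) eigenvalue of a positive matrix.

    The iterate is kept normalized to sum 1, so the sum of B @ v converges
    to lambda_max and v converges to the normalized principal eigenvector.
    """
    n = len(b)
    v = [1.0 / n] * n
    lam = 0.0
    for _ in range(max_iter):
        u = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        s = sum(u)
        u = [x / s for x in u]
        if abs(s - lam) < tol:
            return s, u
        lam, v = s, u
    return lam, v


w_true = [0.4, 0.3, 0.2, 0.1]
consistent = [[wi / wj for wj in w_true] for wi in w_true]
inconsistent = [[1, 2, 3, 5], [1 / 2, 1, 2, 3], [1 / 3, 1 / 2, 1, 2], [1 / 5, 1 / 3, 1 / 2, 1]]
```

The consistent matrix yields $\lambda_{\max} = 4$. The inconsistent one (for example, $b_{12} b_{23} = 4 \neq b_{13} = 3$) yields a value slightly above 4, as Theorem 2 predicts.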

Based on the above theorems, once $\lambda_{\max}$ has been calculated, the consistency index $CI$ is defined as:

$$CI = \frac{\lambda_{\max} - n}{n - 1}$$

When $CI = 0$, the judgments are perfectly consistent, and a larger $CI$ indicates greater inconsistency. The $CI$ of randomly filled matrices depends on the matrix order. For this reason, we also use the random consistency index $RI$ developed by Thomas L. Saaty, as shown in Table 4. $RI$ is obtained by randomly generating 1000 positive reciprocal matrices and averaging their consistency indices.

The ratio of $CI$ to $RI$ is used as the criterion for assessing consistency:

$$CR = \frac{CI}{RI}$$

$CR$, the consistency ratio, measures the consistency of the judgment matrix. If $CR < 0.1$, the judgment matrix passes the consistency check. If $CR \ge 0.1$, it fails and must be revised to improve its consistency. Thus, the check for the judgment matrix $A$-$B_i$ ($i = 1, 2, 3, 4$) succeeds if its consistency ratio $CR_A$ is less than 0.1. The same criterion applies to $B_1$-$C_i$ ($i = 1, 2$), $B_2$-$C_i$ ($i = 3, 4$), $B_3$-$C_i$ ($i = 5, 6, 7, 8$), and $B_4$-$C_i$ ($i = 9, 10$). For the second-order matrices among these, $RI = 0$ and $CR$ is undefined. However, any $2 \times 2$ positive reciprocal matrix is perfectly consistent, so these matrices pass automatically. Once the consistency check is passed, the corresponding weights can be confirmed.
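A minimal sketch of the consistency check follows, assuming $\lambda_{\max}$ has already been computed. The RI values below are Saaty's commonly cited table for orders 1 to 10. They are an assumption here and should be replaced with the values in Table 4 if those differ. Orders 1 and 2 have $RI = 0$, so the sketch reports $CR$ as undefined and treats such matrices as consistent.

```python
# Saaty's commonly cited random index values (assumed; substitute Table 4).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def consistency_check(lambda_max, n, threshold=0.1):
    """Return (CI, CR, passed) for an n x n judgment matrix.

    CI = (lambda_max - n) / (n - 1) and CR = CI / RI. For n <= 2, RI is 0,
    CR is undefined (returned as None), and the matrix is always consistent.
    """
    if n <= 2:
        return 0.0, None, True
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n]
    return ci, cr, cr < threshold
```

For the fourth-order matrix $A$-$B_i$, for example, an estimated $\lambda_A = 4.1$ gives $CI \approx 0.033$ and $CR \approx 0.037$, which passes the 0.1 threshold.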

2.2.2. ME-IOWA Consensus Adjustment

As practical multi-indicator evaluation problems grow more complex, relying on a single expert can compromise the accuracy of the final weights. Moreover, experts differ in background experience and research focus, so integrating multiple opinions is beneficial. Therefore, a team of several experts is usually formed to evaluate such decision-making problems. This study introduces the IOWA method [34], proposed by Ronald R. Yager as an extension of the Ordered Weighted Averaging (OWA) operator. First, the cosine similarity between each expert's weight vector and the group mean is used as the induced value, and the expert weight vectors are reordered by similarity. Then, the position weights are determined by the ME method and used to aggregate the reordered vectors. In this way, multiple judgments are integrated while the impact of outliers is automatically weakened, improving the robustness of the consensus weights.

Step 1: Calculation of the temporary group weight vector

The temporary group weight vector is calculated by aggregating the individual weight vectors provided by all experts. Specifically, assume that $n$ experts take part in the evaluation. Each expert $k$ ($k = 1, 2, \dots, n$) provides a weight vector $W_k = (\omega_{k,1}, \omega_{k,2}, \dots, \omega_{k,m})^T$ over $m$ evaluation indicators. The temporary group weight vector $\bar{W}$ is the arithmetic mean of the individual weight vectors across experts:

$$\bar{W} = \frac{1}{n}\sum_{k=1}^{n} W_k$$

This temporary group weight serves as an initial reference for subsequent consensus adjustment, reflecting the aggregated tendency of multi-expert judgments on indicator importance.
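As a minimal sketch, the temporary group weight vector is the component-wise mean of the expert vectors. The function name and sample vectors below are hypothetical. Each inner list holds one expert's weights over the same $m$ indicators.

```python
def temporary_group_weights(expert_weights):
    """Component-wise arithmetic mean of the n experts' m-dimensional weight vectors."""
    n = len(expert_weights)
    if n == 0:
        raise ValueError("at least one expert weight vector is required")
    m = len(expert_weights[0])
    if any(len(w) != m for w in expert_weights):
        raise ValueError("every expert must weight the same m indicators")
    return [sum(w[i] for w in expert_weights) / n for i in range(m)]


# Hypothetical weight vectors of three experts over m = 3 indicators.
experts = [[0.5, 0.3, 0.2], [0.3, 0.3, 0.4], [0.4, 0.3, 0.3]]
w_bar = temporary_group_weights(experts)
```

Because every expert vector sums to 1, the mean vector also sums to 1, so it can be compared with the individual vectors directly.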

Step 2: Calculation of the cosine similarity

In our method, the cosine similarity $s$ is introduced to measure how closely each expert's weight vector agrees with the temporary group weight vector. Cosine similarity is a widely used metric in vector space models. A value closer to 1 indicates greater similarity between two vectors, and because all weights are non-negative, $s$ lies in $[0, 1]$ here. Cosine similarity is chosen to quantify the “distance” between individual expert vectors and the mean vector because it measures the directional alignment of vectors rather than differences in their magnitude. This property suits expert weight consensus measurement, since the core of weight assignment lies in the relative importance of the indicators (direction) rather than in the absolute weight values (magnitude). The approach is supported by existing literature. For instance, Ren et al. integrated cosine similarity into normal cloud multi-criteria group decision-making [35] and used it to measure the degree of consensus among experts' decision opinions. Mathematically, the cosine similarity $s_k$ between the $k$-th expert's weight vector $W_k$ and the temporary group weight vector $\bar{W}$ is defined as:

$$s_k = \frac{W_k \cdot \bar{W}}{\lVert W_k \rVert \, \lVert \bar{W} \rVert} = \frac{\sum_{i=1}^{m} \omega_{k,i}\,\bar{\omega}_i}{\sqrt{\sum_{i=1}^{m} \omega_{k,i}^2}\,\sqrt{\sum_{i=1}^{m} \bar{\omega}_i^2}}$$

Here, $\bar{\omega}_i$ is the $i$-th element of $\bar{W}$. $\lVert W_k \rVert$ and $\lVert \bar{W} \rVert$ denote the Euclidean (L2) norms of $W_k$ and $\bar{W}$, that is, the square root of the sum of the squared elements of each $m$-dimensional vector.

By calculating the cosine similarity s for each expert’s weight vector, we obtain a set of induced values that reflect the degree of alignment between individual expert judgments and the initial group consensus. These induced values will then be used in the subsequent step to reorder the expert weight vectors, which is a crucial part of the consensus adjustment process in the IOWA method.
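The induced values can be computed with a few lines of Python. The following is a sketch: the helper names and the three hypothetical expert vectors are illustrative only. `induced_values` recomputes the mean vector internally so the block runs on its own.

```python
import math


def cosine_similarity(u, v):
    """s = (u . v) / (||u|| * ||v||) with Euclidean (L2) norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def induced_values(expert_weights):
    """Cosine similarity of each expert vector to the experts' mean vector."""
    n, m = len(expert_weights), len(expert_weights[0])
    mean = [sum(w[i] for w in expert_weights) / n for i in range(m)]
    return [cosine_similarity(w, mean) for w in expert_weights]


# Hypothetical expert vectors; the third deviates most from the group.
experts = [[0.5, 0.3, 0.2], [0.45, 0.35, 0.2], [0.1, 0.2, 0.7]]
s = induced_values(experts)
```

The third expert, who ranks the indicators very differently, receives the lowest induced value.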

Step 3: Reordering of the expert weight vectors

The expert weight vectors are reordered according to the cosine similarity values $\{s_1, s_2, \dots, s_n\}$ calculated in Step 2. The core idea is that the more similar an expert's weight vector is to the temporary group weight vector, the earlier it appears in the reordered sequence. This matters because, in the subsequent OWA aggregation with an orness level above 0.5, earlier positions receive larger weight coefficients. Expert judgments with higher similarity (and thus higher consistency with the group consensus) therefore have a greater influence on the final consensus weights.

Specifically, the reordering process is implemented as follows:

First, the expert weight vectors are sorted in descending order of their corresponding cosine similarity values s. That is, the weight vector with the highest s is placed at the first position p1, the one with the second-highest s is placed at p2, and so on, until the weight vector with the lowest s is placed at pn.

Ties in similarity can occur (for example, if experts 1 and 6 both have a similarity of 0.9000). In that case the relative order of the tied weight vectors would otherwise be arbitrary. To keep the process deterministic, tied weight vectors are ordered by the experts' original listing order (i.e., their order of appearance in the expert group).

Through this reordering, the weight vectors are arranged in a sequence where those reflecting judgments more consistent with the group consensus are prioritized. This sequence {p1, p2, …, pn} will then be used in the next step to calculate the final consensus weight, leveraging the ordered structure to appropriately weight the expert judgments.
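The reordering rule, including the tie-breaking convention, maps directly onto a stable sort. In this sketch the function name and the data are hypothetical, and expert indices are 0-based. Python's `sorted` is documented to remain stable when `reverse=True`, so tied experts keep their listing order without any extra key.

```python
def reorder_by_similarity(expert_weights, similarities):
    """Sort expert vectors by descending cosine similarity.

    Python's sort is stable, and stays stable with reverse=True, so experts
    with tied similarities keep their original listing order.
    Returns the expert indices for positions p1..pn and the reordered vectors.
    """
    order = sorted(range(len(expert_weights)), key=lambda k: similarities[k], reverse=True)
    return order, [expert_weights[k] for k in order]


# Hypothetical induced values; experts 0 and 3 are tied at 0.9000.
sims = [0.9000, 0.9500, 0.8000, 0.9000]
vectors = [[0.4, 0.6], [0.5, 0.5], [0.9, 0.1], [0.6, 0.4]]
order, reordered = reorder_by_similarity(vectors, sims)
```

Here expert 1 takes position $p_1$. The tied experts 0 and 3 follow in their listing order, and expert 2 ends up at $p_4$.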

Step 4: Calculation of the consensus weights by ME

In this step, the rank-based ME method is adopted to determine the position weight vector $W_p = (\omega_{p1}, \omega_{p2}, \dots, \omega_{pn})^T$. This vector is then used to aggregate the reordered expert weight vectors $\{p_1, p_2, \dots, p_n\}$ into the final consensus weight vector. The core logic is to assign larger position weights to expert weight vectors that agree more closely with the group consensus (i.e., those ranked earlier in Step 3), with the weights decreasing down the ranking. This design gives judgments that are closer to the group opinion a greater influence on the final consensus, while still incorporating information from all experts.
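This aggregation can be stated compactly in the standard IOWA form. Here $W_{(j)}$ denotes the expert weight vector placed at position $p_j$, a notation introduced only for illustration. The final consensus weight vector $W^{*}$ is then:

$$W^{*} = \sum_{j=1}^{n}\omega_{pj}\,W_{(j)}, \qquad \sum_{j=1}^{n}\omega_{pj} = 1$$

Since the position weights and each expert vector sum to 1, $W^{*}$ also sums to 1 and needs no further normalization.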

The degree of orness associated with $W_p$ is defined as:

$$\text{orness}(W_p) = \frac{1}{n-1}\sum_{j=1}^{n}(n-j)\,\omega_{pj} = \alpha$$

where $\alpha \in [0, 1]$ is a measure introduced by Yager that characterizes the decision-making attitude of the aggregation: $\alpha = 1$ places all weight on the first position and $\alpha = 0$ on the last. The second characterizing measure introduced by Yager is the dispersion of the aggregation, defined for $W_p$ as:

$$\text{disp}(W_p) = -\sum_{j=1}^{n}\omega_{pj}\ln\omega_{pj}$$
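Both of Yager's measures are straightforward to compute. The following Python sketch implements them for a position-weight vector given as a list ordered from $p_1$ to $p_n$, with $n \ge 2$. The function names are illustrative.

```python
import math


def orness(w):
    """Yager's orness: (1 / (n - 1)) * sum_j (n - j) * w_j for j = 1..n (needs n >= 2)."""
    n = len(w)
    return sum((n - j) * w[j - 1] for j in range(1, n + 1)) / (n - 1)


def dispersion(w):
    """Yager's dispersion (entropy): -sum_j w_j ln w_j, taking 0 * ln 0 = 0."""
    return -sum(x * math.log(x) for x in w if x > 0)
```

The uniform vector has orness 0.5 and the largest possible dispersion, $\ln n$. A vector concentrated on the first position has orness 1 and dispersion 0.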

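The ME principle is integrated with the IOWA operator to obtain the $W_p$ that attains maximal entropy (dispersion) at a preset level of orness. The corresponding mathematical program is:

$$\max\; -\sum_{j=1}^{n}\omega_{pj}\ln\omega_{pj}\quad \text{s.t.}\quad \frac{1}{n-1}\sum_{j=1}^{n}(n-j)\,\omega_{pj} = \alpha,\quad \sum_{j=1}^{n}\omega_{pj} = 1,\quad 0 \le \omega_{pj} \le 1$$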

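Applying the Lagrange multiplier method to this program yields a polynomial equation, which is the key tool for determining the $W_p$ that satisfies the maximal entropy criterion. For $n \ge 3$ and $0 < \alpha < 1$, $W_p$ can be obtained by:

$$\omega_{pj} = \sqrt[n-1]{\omega_{p1}^{\,n-j}\,\omega_{pn}^{\,j-1}},\quad j = 1, 2, \dots, n$$

and:

$$\omega_{pn} = \frac{\left((n-1)\alpha - n\right)\omega_{p1} + 1}{(n-1)\alpha + 1 - n\,\omega_{p1}}$$

then $\omega_{p1}$ is the solution of:

$$\omega_{p1}\left[(n-1)\alpha + 1 - n\,\omega_{p1}\right]^{n} = \left((n-1)\alpha\right)^{n-1}\left[\left((n-1)\alpha - n\right)\omega_{p1} + 1\right]$$

The trivial root $\omega_{p1} = 1/n$ satisfies this equation for every $\alpha$ but gives the required weights only when $\alpha = 0.5$. For $\alpha = 1$, $W_p = (1, 0, \dots, 0)^T$.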

Under this approach, the position weights $W_p$ are calculated for different values of the orness parameter ($\alpha = 0.5, 0.6, 0.7, 0.8, 0.9, 1.0$). Table 5 lists the maximal-entropy position weights for $n = 10$.
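The sketch below computes maximal-entropy position weights numerically. It does not solve the polynomial in $\omega_{p1}$ directly. Instead, it uses the equivalent fact that the ME solution has geometric form, $\omega_{pj} \propto q^{\,j-1}$, because stationarity of the Lagrangian makes $\ln\omega_{pj}$ linear in $j$. It then finds the ratio $q$ by bisection so that the orness equals $\alpha$. This is an illustrative implementation, not the procedure used to produce Table 5, and the function name is hypothetical.

```python
def me_owa_weights(n, alpha, iterations=200):
    """Maximal-entropy OWA position weights for n positions at orness alpha.

    Uses the geometric form w_j = q**(j - 1) / sum_k q**(k - 1) of the ME
    solution and bisects on q; orness falls monotonically as q grows, and
    q = 1 (uniform weights) corresponds to alpha = 0.5.
    """
    if n < 1 or not 0.0 <= alpha <= 1.0:
        raise ValueError("need n >= 1 and 0 <= alpha <= 1")
    if n == 1:
        return [1.0]
    if alpha == 1.0:
        return [1.0] + [0.0] * (n - 1)
    if alpha == 0.0:
        return [0.0] * (n - 1) + [1.0]
    if alpha == 0.5:
        return [1.0 / n] * n

    def geometric(q):
        raw = [q ** j for j in range(n)]
        total = sum(raw)
        return [x / total for x in raw]

    def orness(w):
        return sum((n - j) * w[j - 1] for j in range(1, n + 1)) / (n - 1)

    if alpha > 0.5:
        lo, hi = 0.0, 1.0          # front-loaded weights: 0 < q < 1
    else:
        lo, hi = 1.0, 2.0          # back-loaded weights: q > 1
        while orness(geometric(hi)) > alpha:
            lo, hi = hi, 2.0 * hi  # expand until the bracket contains alpha
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if orness(geometric(mid)) > alpha:
            lo = mid               # still too front-loaded: raise q
        else:
            hi = mid
    return geometric((lo + hi) / 2.0)


# Position weights for n = 10 experts at the orness levels considered in the text.
table = {a: me_owa_weights(10, a) for a in (0.5, 0.6, 0.7, 0.8, 0.9, 1.0)}
```

The resulting weights satisfy the closed-form relations for $\omega_{pn}$ and $\omega_{p1}$ given above. Bisection on $q$ sidesteps the spurious root $\omega_{p1} = 1/n$ of the polynomial.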

When $\alpha = 0.5$, the maximal-entropy position weights are uniform ($\omega_{pj} = 1/n$). Every expert's input then carries equal importance, and the distinction between higher- and lower-ranked experts disappears. As $\alpha$ increases, the weight of the top-ranked position (held by the expert most consistent with the group) becomes dominant. That expert's scoring then carries much greater weight, while lower-ranked experts are largely sidelined, and at $\alpha = 1$ only the top-ranked expert counts. An intermediate $\alpha$ therefore prioritizes experts with consistent judgments to ensure reliable weights, while retaining non-negligible weights for less consistent experts so that valuable niche insights are not lost [36].
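The steps above can be chained into one sketch: mean vector, cosine similarities, stable descending reordering, and position-weighted aggregation. The position weights are passed in as an argument (for example, one column of Table 5). The expert vectors and position weights in the example are hypothetical and are not ME values. They only illustrate that an outlying expert lands in the last position and therefore receives the smallest position weight.

```python
import math


def consensus_weights(expert_weights, position_weights):
    """IOWA-style consensus: reorder experts by cosine similarity, then aggregate.

    expert_weights: n lists, each an expert's weights over the same m indicators.
    position_weights: n non-negative weights for positions p1..pn, summing to 1.
    Returns (order, consensus) where order lists expert indices for p1..pn.
    """
    n, m = len(expert_weights), len(expert_weights[0])
    if len(position_weights) != n:
        raise ValueError("need one position weight per expert")

    def cosine(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

    # Step 1: temporary group weight vector (component-wise mean).
    mean = [sum(w[i] for w in expert_weights) / n for i in range(m)]
    # Step 2: cosine similarity of each expert to the mean (induced values).
    sims = [cosine(w, mean) for w in expert_weights]
    # Step 3: descending similarity; the stable sort keeps ties in listing order.
    order = sorted(range(n), key=lambda k: sims[k], reverse=True)
    # Step 4: weight the expert at position p_j by position_weights[j].
    consensus = [
        sum(position_weights[j] * expert_weights[order[j]][i] for j in range(n))
        for i in range(m)
    ]
    return order, consensus


# Hypothetical data: experts 0-2 broadly agree, expert 3 is an outlier.
experts = [
    [0.40, 0.35, 0.25],
    [0.45, 0.30, 0.25],
    [0.42, 0.33, 0.25],
    [0.10, 0.20, 0.70],
]
positions = [0.4, 0.3, 0.2, 0.1]  # hypothetical decreasing position weights
order, w_star = consensus_weights(experts, positions)
```

With these inputs, the outlier's heavy weighting of the third indicator is damped. That indicator's consensus weight is 0.295, compared with 0.3625 under a plain arithmetic mean.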