Abstract

We study the identification of causal effects, motivated by two improvements to identifiability that can be attained if one knows that some variables in a causal graph are functionally determined by their parents (without needing to know the specific functions). First, an unidentifiable causal effect may become identifiable when certain variables are functional. Secondly, certain functional variables can be excluded from being observed without affecting the identifiability of a causal effect, which may significantly reduce the number of needed variables in observational data. Our results are largely based on an elimination procedure that removes functional variables from a causal graph while preserving key properties in the resulting causal graph, including the identifiability of causal effects. Our treatment of functional dependencies in this context mandates a formal, systematic, and general treatment of positivity assumptions, which are prevalent in the literature on causal effect identifiability and which interact with functional dependencies, leading to another contribution of the presented work.

1. Introduction

A causal effect measures the impact of an intervention on some events of interest and is exemplified by the question, “What is the probability that a patient would recover had they taken a drug?”. This type of question, also known as an interventional query, belongs to the second rung of Pearl’s causal hierarchy [1], so it ultimately requires experimental studies if it is to be estimated from data. However, it is well known that such interventional queries can sometimes be answered based on observational queries (first rung of the causal hierarchy), which can be estimated from observational data. This becomes very significant when experimental studies are either not available, expensive to conduct, or would entail ethical concerns. Hence, a key question in causal inference asks when and how a causal effect can be estimated from available observational data, assuming a causal graph is provided [2].

More precisely, given a set of treatment variables ($\mathbf{X}$) and a set of outcome variables ($\mathbf{Y}$), the causal effect of $\mathbf{x}$ on $\mathbf{Y}$, denoted as $\Pr(\mathbf{Y} \mid do(\mathbf{x}))$ or $\Pr_{\mathbf{x}}(\mathbf{Y})$, is the marginal probability on $\mathbf{Y}$ when an intervention sets the states of variables ($\mathbf{X}$) to $\mathbf{x}$. The problem of identifying a causal effect studies whether $\Pr_{\mathbf{x}}(\mathbf{Y})$ can be uniquely determined from a causal graph and a distribution ($\Pr(\mathbf{V})$) over some variables ($\mathbf{V}$) in the causal graph [2], where $\Pr(\mathbf{V})$ is typically estimated from observational data. The causal effect is guaranteed to be identifiable if $\mathbf{V}$ corresponds to all variables in the causal graph (with some positivity assumptions), that is, if all variables in the causal graph are observed. When some variables are hidden (unobserved), it is possible that different parameterizations of the causal graph will induce the same distribution ($\Pr(\mathbf{V})$) but different values for the causal effect ($\Pr_{\mathbf{x}}(\mathbf{Y})$), which leads to unidentifiability. In the past few decades, a significant amount of effort has been devoted to studying the identifiability of causal effects (see, e.g., [2,3,4,5,6,7]). Some early works include the back-door criterion [2,8] and the front-door criterion [2,3]. These criteria are sound but incomplete, as they may fail to identify certain causal effects that are, indeed, identifiable. Complete identification methods include the do-calculus method [2], the identification algorithm presented in [9], and the ID algorithm proposed in [10]. These methods require some positivity assumptions (constraints) on the observational distribution ($\Pr(\mathbf{V})$) and can derive an identifying formula that computes the causal effect based on $\Pr(\mathbf{V})$ when the causal effect is identifiable. Some recent works take a different approach by first estimating the parameters of a causal graph to obtain a fully specified causal model, which is then used to estimate causal effects through inference [11,12,13,14]. Further works focus on the efficiency of estimating causal effects from finite data, e.g., [15,16,17,18].

One main challenge of these algorithms is that they try to identify causal effects from limited information in the form of a causal graph and data on observed variables (). This becomes a problem when only a small number of variables () is observed, since alone may not provide enough information for deciding the values of causal effects. Such scenarios happen when the collection of data on some variables is infeasible, e.g., if these variables (such as gender and age) involve confidential information. A recent line of work mitigates this problem by studying the impact of additional information on identifiability beyond causal graphs and observational data. For example, Tikka et al. [19] showed that certain unidentifiable causal effects can become identifiable given information about context-specific independence. Our work in this paper follows the same direction, as we consider the problem of causal effect identification in the presence of a particular type of qualitative knowledge called functional dependencies [20]. We say there is a functional dependency between a variable (X) and its parents () in the causal graph if the distribution () is deterministic but we do not know the distribution itself (i.e., the specific values of ). In this case, we also say that variable X is functional. Previous works have shown that functional dependencies can be exploited to improve the efficiency of Bayesian network inference [13,21,22,23,24]. We complement these works by showing that functional dependencies can also be exploited to improve the identifiability of causal effects, especially in the presence of hidden variables. In particular, we show that some unidentifiable causal effects may become identifiable, given such functional dependencies; propose techniques for testing identifiability in this context; and highlight other implications of such dependencies on the practice of identifiability.

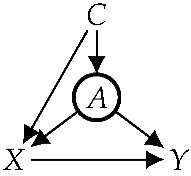

Consider the following motivational example where we are interested in how the enforcement of speed limits may affect car accidents. The driving age (A) is functionally determined by country (C), driving age and country are causes of speed (X), and speed and driving age are causes of accidents (Y). The DAG on the right captures the causal relations among these variables, where variable A is circled to indicate it is functional. Furthermore, suppose that variables are observed. According to classical cause–effect identification methods (e.g., do-calculus and ID algorithm), the causal effect of X on Y is unidentifiable in this case. However, if we take into account that variable A is a function of C, which restricts the class of distributions under consideration, then the causal effect of X on Y becomes identifiable. This exemplifies the improvements to identifiability pursued in this paper.

Consider a causal graph (G) and a distribution ($\Pr(\mathbf{V})$) over the observed variables ($\mathbf{V}$) in G. To check the identifiability of a causal effect, it is standard to first apply the projection operation proposed in [25,26], which constructs another causal graph ($G'$) with $\mathbf{V}$ as its non-root variables, followed by the application of an identification algorithm to $G'$, like the ID algorithm [10]. We call this two-stage procedure project-ID. One restriction of project-ID is that it is applicable only under some positivity constraints (assumptions), such as strict positivity ($\Pr(\mathbf{V}) > 0$), which preclude some events from having a zero probability. Nevertheless, these positivity constraints are not always satisfiable in practice and may contradict functional dependencies. For example, if Y is a function of X, then the positivity constraint ($\Pr(X, Y) > 0$) never holds. To systematically treat this interaction between positivity constraints and functional dependencies, we formulate the notion of constrained identifiability, which takes positivity constraints as an input (in addition to the causal graph (G) and distribution ($\Pr(\mathbf{V})$)). We also formulate the notion of functional identifiability, which further takes functional dependencies as an input. This allows us to explicitly treat the interactions between positivity constraints and functional dependencies, which is needed for the combination of classical methods like project-ID with the results we present in this paper.

The paper is structured as follows. We start with some technical preliminaries in Section 2. We formally define positivity constraints and functional dependencies in Section 3, where we also introduce the problems of constrained and functional identifiability. Section 4 introduces two primitive operations, functional elimination and functional projection, which are needed for later treatments. Section 5 presents our core results on functional identifiability and how they can be combined with existing identifiability algorithms. We conduct experiments to evaluate the effectiveness of functional dependencies on cause–effect identifiability in Section 6. Finally, we close with concluding remarks in Section 7. Proofs of all results are included in Appendix C. This paper is an extended version of [27].

2. Technical Preliminaries

We consider discrete variables in this work. Single variables are denoted by uppercase letters (e.g., X), and their states are denoted by lowercase letters (e.g., x). Sets of variables are denoted by bold uppercase letters (e.g., $\mathbf{X}$), and their instantiations (sets of values) are denoted by bold lowercase letters (e.g., $\mathbf{x}$).

2.1. Causal Bayesian Networks and Interventions

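A Causal Bayesian Network (CBN) is a pair $\langle G, \mathcal{F} \rangle$, where G is a causal graph in the form of a directed acyclic graph (DAG) and $\mathcal{F}$ is a set of conditional probability tables (CPTs). We have one CPT for each variable (X) with parents $\mathbf{P}$ in G, denoted as $f_X(X, \mathbf{P})$, which specifies the conditional probability distributions ($\Pr(X \mid \mathbf{P})$). It follows that every CPT ($f_X(X, \mathbf{P})$) satisfies the following properties: $f_X(x, \mathbf{p}) \in [0, 1]$ for all instantiations ($x, \mathbf{p}$) and $\sum_x f_X(x, \mathbf{p}) = 1$ for each instantiation ($\mathbf{p}$). For simplicity, we also denote $f_X(X, \mathbf{P})$ as $f_X$.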

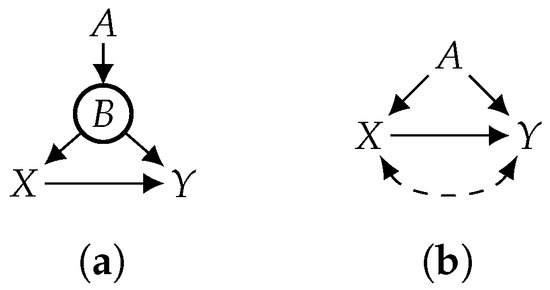

A CBN induces a joint distribution over its variables (), which is exactly the product of its CPTs, i.e., . In the CBN shown in Figure 1a, for example, . Applying a treatment () to the joint distribution yields a new distribution called the interventional distribution, denoted as . One way to compute the interventional distribution is to consider the mutilated CBN that is constructed from the original CBN as follows: Remove from G all edges that point to variables in ; then, replace the CPT in for each with a CPT ( where if x is consistent with and otherwise). Figure 1a depicts a causal graph (G), and Figure 1b depicts the mutilated causal graph () under a treatment (). The interventional distribution () is the distribution induced by the mutilated CBN , where corresponds to the causal effect (, also notated by ). In this example, the causal effect of on Y can be computed by .
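The construction of the mutilated CBN can be sketched in a few lines of Python. The following is only an illustration under stated assumptions: the three-variable graph (A → X, A → Y, X → Y), its CPT values, and the helper names `joint`, `mutilate`, and `marginal` are hypothetical and do not correspond to Figure 1a.

```python
from itertools import product

# Hypothetical binary CBN (NOT Figure 1a): A -> X, A -> Y, X -> Y.
parents = {"A": [], "X": ["A"], "Y": ["A", "X"]}
# cpts[V][parent values] = probability that V = 1 (illustrative numbers).
cpts = {
    "A": {(): 0.3},
    "X": {(0,): 0.2, (1,): 0.7},
    "Y": {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.4, (1, 1): 0.9},
}

def joint(parents, cpts):
    """Return (variable order, joint distribution) as the product of all CPTs."""
    order = list(parents)
    dist = {}
    for values in product([0, 1], repeat=len(order)):
        world = dict(zip(order, values))
        p = 1.0
        for v in order:
            p1 = cpts[v][tuple(world[u] for u in parents[v])]
            p *= p1 if world[v] == 1 else 1.0 - p1
        dist[values] = p
    return order, dist

def mutilate(parents, cpts, treatment):
    """Remove edges into treated variables and replace their CPTs by point masses."""
    new_parents = {v: ([] if v in treatment else list(ps))
                   for v, ps in parents.items()}
    new_cpts = dict(cpts)
    for v, x in treatment.items():
        new_cpts[v] = {(): 1.0 if x == 1 else 0.0}
    return new_parents, new_cpts

def marginal(order, dist, var, value):
    """Probability that `var` takes `value` under the joint `dist`."""
    i = order.index(var)
    return sum(p for vals, p in dist.items() if vals[i] == value)

order, pr = joint(parents, cpts)
m_parents, m_cpts = mutilate(parents, cpts, {"X": 1})
_, pr_do = joint(m_parents, m_cpts)
effect = marginal(order, pr_do, "Y", 1)  # causal effect of X=1 on Y=1
```

In this toy network the interventional value differs from the observational conditional probability of Y = 1 given X = 1, because A influences both X and Y.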

Figure 1.

Mutilated and projected graphs of a causal graph. Hidden variables are circled. A bidirected edge ($X \leftrightarrow Y$) is a compact notation for $X \leftarrow H \rightarrow Y$, where H is an auxiliary hidden variable. (a) Causal graph; (b) mutilated graph; (c) projected graph.

2.2. Identifying Causal Effects

A key question in causal inference is to check whether a causal effect can be (uniquely) computed given the causal graph (G) and a distribution () over a subset () of its variables. If the answer is yes, we say that the causal effect is identifiable, given G and . Otherwise, the causal effect is unidentifiable. Variables () are said to be observed, and the remaining variables are said to be hidden, where is usually estimated from observational data. We start with the general definition of identifiability (not necessarily for causal effects) from [2] (Ch. 3.2.4), with a slight rephrasing.

Definition 1

(Identifiability [2]). Let $Q(M)$ be any computable quantity of a model (M). We say that Q is identifiable in a class of models if, for any pair of models ($M^1$ and $M^2$) from this class, $Q(M^1) = Q(M^2)$ whenever $\Pr_{M^1}(\mathbf{V}) = \Pr_{M^2}(\mathbf{V})$, where $\mathbf{V}$ represents the observed variables.

In the context of causal effects, the problem of identifiability is to check whether every pair of fully specified CBNs ($M^1$ and $M^2$ in Definition 1) that induces the same distribution ($\Pr(\mathbf{V})$) also produces the same value for the causal effect. Equivalently, to show that a causal effect is unidentifiable, it suffices to find two CBNs that induce the same distribution ($\Pr(\mathbf{V})$) yet different causal effects. Note that Definition 1 does not restrict the considered models ($M^1$ and $M^2$) based on the properties of the distributions ($\Pr_{M^1}$ and $\Pr_{M^2}$). However, in the literature on identifying causal effects, it is quite common to only consider CBNs (models) that induce distributions that satisfy some positivity constraints, such as $\Pr(\mathbf{V}) > 0$. We examine such constraints more carefully in Section 3, as they may contradict functional dependencies, which we introduce later.
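To make the "two CBNs" argument concrete, the sketch below builds two parameterizations of a hypothetical graph U → X, U → Y, X → Y in which U is hidden. Both induce the same distribution over the observed variables X and Y but disagree on the causal effect of X on Y. The graph, the parameter values, and the helper `observed_and_effect` are illustrative assumptions; note also that both models violate strict positivity, which Definition 1 permits since it places no restriction on the induced distributions.

```python
from itertools import product

def observed_and_effect(pr_u1, x_given_u, y_given_ux):
    """Graph U -> X, U -> Y, X -> Y with U hidden; all variables binary.

    x_given_u[u] and y_given_ux[(u, x)] give the probability of value 1.
    Returns the observed distribution Pr(X, Y) and Pr(Y=1 | do(X=1)).
    """
    def bern(p1, v):
        return p1 if v == 1 else 1.0 - p1

    pr_xy = {}
    for u, x, y in product([0, 1], repeat=3):
        p = bern(pr_u1, u) * bern(x_given_u[u], x) * bern(y_given_ux[(u, x)], y)
        pr_xy[(x, y)] = pr_xy.get((x, y), 0.0) + p
    # Under do(X=1), U keeps its distribution and Y is read off with x = 1.
    effect = sum(bern(pr_u1, u) * y_given_ux[(u, 1)] for u in [0, 1])
    return pr_xy, effect

x_copies_u = {0: 0.0, 1: 1.0}
# Model 1: Y copies U and ignores X.
y_copies_u = {(u, x): float(u) for u in [0, 1] for x in [0, 1]}
# Model 2: Y copies X and ignores U.
y_copies_x = {(u, x): float(x) for u in [0, 1] for x in [0, 1]}

pr1, effect1 = observed_and_effect(0.5, x_copies_u, y_copies_u)
pr2, effect2 = observed_and_effect(0.5, x_copies_u, y_copies_x)
```

Here `pr1` and `pr2` coincide, while `effect1` and `effect2` do not, so this pair of models witnesses unidentifiability in the sense of Definition 1.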

It is well known that under some positivity constraints (e.g., ), the identifiability of causal effects can be efficiently tested using what we call the project-ID algorithm. Given a causal graph (G), project-ID first applies the projection operation proposed in [25,26,28] to yield a new causal graph () whose hidden variables are all roots, each with exactly two children. These properties are needed by the ID algorithm [10], which is then applied to to yield an identifying formula if the causal effect is identifiable and resulting in an outcome of FAIL otherwise. Consider the causal effect () in Figure 1a, where hidden variables are the non-root variables (). We first project the causal graph (G) in Figure 1a onto its observed variables to yield the causal graph () in Figure 1c (all hidden variables in are auxiliary and roots). We then run the ID algorithm on , which returns the following (simplified) identifying formula: . Hence, the causal effect () is identifiable and can be computed using the above formula. Moreover, all quantities in the formula can be obtained from the distribution () over observed variables, which can be estimated from observational data. More details on the projection operation and the ID algorithm can be found in Appendix A.

3. Constrained and Functional Identifiability

As mentioned earlier, Definition 1 of identifiability [2] (Ch. 3.2.4) does not restrict the pair of considered models ($M^1$ and $M^2$). However, it is common in the literature on cause–effect identifiability to only consider CBNs with distributions ($\Pr(\mathbf{V})$) that satisfy some positivity constraints. Strict positivity ($\Pr(\mathbf{V}) > 0$) is, perhaps, the most widely used constraint [2,9,28]; that is, in Definition 1, we only consider CBNs $M^1$ and $M^2$ that induce distributions $\Pr^1$ and $\Pr^2$ satisfying $\Pr^1(\mathbf{V}) > 0$ and $\Pr^2(\mathbf{V}) > 0$, respectively. Weaker and somewhat intricate positivity constraints were employed by the ID algorithm in [10], as discussed in Appendix A, but we apply this algorithm only under strict positivity to keep things simple (see [29,30] for a recent discussion of positivity constraints).

Positivity constraints are motivated by two considerations: technical convenience and the fact that most causal effects would be unidentifiable without some positivity constraints (more on this later). Given the multiplicity of positivity constraints considered in the literature and the subtle interaction between positivity constraints and functional dependencies (which are the main focus of this work), we next provide a systematic treatment of identifiability under positivity constraints.

3.1. Positivity Constraints

We first formalize the notion of a positivity constraint, then define the notion of constrained identifiability, which takes a set of positivity constraints as input (in addition to the causal graph (G) and distribution ()).

Definition 2.

A positivity constraint on is an inequality of the form , where and , that is, for all instantiations (), if , then .

When , the positivity constraint is defined on a marginal distribution (). To illustrate, the positivity constraint, in Figure 1a specifies the constraint whereby if for every instantiation (). We may impose multiple positivity constraints on a set of variables (). We use to denote the set of positivity constraints imposed on and to denote all the variables mentioned by . Consider the constraints expressed as ; then, . The weakest set of positivity constraints is (no positivity constraints, as in Definition 1), and the strongest positivity constraint is (strict positivity).
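A positivity constraint can be checked mechanically against a given joint distribution. The sketch below assumes the reading of Definition 2 in which a constraint on a set $\mathbf{S}$ given a set $\mathbf{Z}$ requires $\Pr(\mathbf{s}, \mathbf{z}) > 0$ whenever $\Pr(\mathbf{z}) > 0$; the function names, the dictionary representation of the joint, and the binary domain are all hypothetical choices made for illustration.

```python
from itertools import product

def marginal(joint, names, subset):
    """Marginalize a joint {assignment tuple: probability} onto `subset`."""
    idx = [names.index(v) for v in subset]
    out = {}
    for values, p in joint.items():
        key = tuple(values[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def satisfies_positivity(joint, names, s_vars, z_vars, domain=(0, 1)):
    """Check the constraint on s_vars given z_vars (assumed reading of Def. 2).

    The constraint holds if Pr(s, z) > 0 for every (s, z) with Pr(z) > 0.
    With z_vars empty, this reduces to positivity of the marginal on s_vars.
    """
    pr_z = marginal(joint, names, z_vars)
    pr_sz = marginal(joint, names, s_vars + z_vars)
    for s in product(domain, repeat=len(s_vars)):
        for z in product(domain, repeat=len(z_vars)):
            if pr_z.get(z, 0.0) > 0 and pr_sz.get(s + z, 0.0) <= 0:
                return False
    return True

# Hypothetical joint over (X, Y) in which Y is a copy of X.
names = ["X", "Y"]
joint_xy = {(0, 0): 0.5, (1, 1): 0.5}

x_positive = satisfies_positivity(joint_xy, names, ["X"], [])
y_given_x_positive = satisfies_positivity(joint_xy, names, ["Y"], ["X"])
```

In this example the marginal constraint on X holds, whereas the constraint on Y given X fails because Y is a deterministic function of X, which is the kind of conflict between positivity and functional dependencies discussed in the Introduction.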

We next provide a definition of identifiability for the causal effect of treatments () on outcomes () in which positivity constraints are an input to the identifiability problem. We call it constrained identifiability, in contrast to the (unconstrained) identifiability of Definition 1.

Definition 3.

We call $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}} \rangle$ an identifiability tuple, where G is a causal graph (DAG), $\mathbf{V}$ is its set of observed variables, and $\mathbf{C}_{\mathbf{V}}$ is a set of positivity constraints.

Definition 4

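(Constrained Identifiability). Let $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}} \rangle$ be an identifiability tuple. The causal effect of $\mathbf{X}$ on $\mathbf{Y}$ is said to be identifiable with respect to $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}} \rangle$ if $\Pr^1_{\mathbf{x}}(\mathbf{y}) = \Pr^2_{\mathbf{x}}(\mathbf{y})$ for any pair of distributions ($\Pr^1$ and $\Pr^2$) that are induced by G and that satisfy $\Pr^1(\mathbf{V}) = \Pr^2(\mathbf{V})$, as well as the positivity constraints ($\mathbf{C}_{\mathbf{V}}$).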

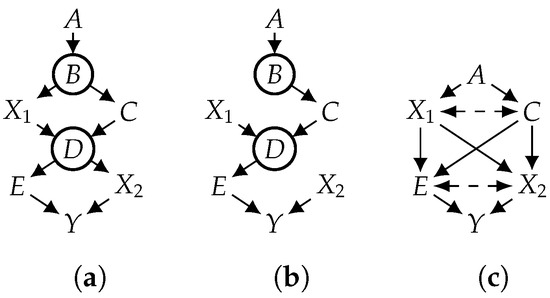

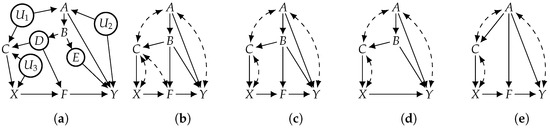

For simplicity, we say “identifiability” to mean “constrained identifiability” in the rest of this paper. We next show that without some positivity constraints, most causal effects would not be identifiable. We first define a notion called first ancestor on a causal graph as follows. We say that a treatment () is a first ancestor of some outcome () if X is an ancestor of Y in causal graph G and that there exists a directed path from X to Y that is not intercepted by . Consider the causal graph in Figure 2a with hidden variable U; treatment is a first ancestor of outcome , and outcome does not have any first ancestor. A first ancestor must exist if some treatment variable is an ancestor of some outcome variable. The following result states a criterion under which a causal effect is never identifiable.

Figure 2.

Examples for positivity.

Proposition 1.

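The causal effect of $\mathbf{X}$ on $\mathbf{Y}$ is not identifiable with respect to an identifiability tuple $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}} \rangle$ if some $X \in \mathbf{X}$ is a first ancestor of some $Y \in \mathbf{Y}$ and $\mathbf{C}_{\mathbf{V}}$ does not imply $\Pr(X) > 0$.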

Hence, identifiability is not possible without some positivity constraints if at least one treatment variable is an ancestor of some outcome variable (which is common). According to Proposition 1, the causal effect of on is not identifiable in Figure 2a if the considered distributions do not satisfy , as is a first ancestor of .

As positivity constraints become stronger, more causal effects become identifiable, since the set of considered models becomes smaller; that is, a causal effect that is unidentifiable under one set of positivity constraints may become identifiable under a second set if the second implies the first. Consider the causal graph in Figure 2b, in which all variables are observed (). Without positivity constraints ($\mathbf{C}_{\mathbf{V}} = \emptyset$), the causal effect of X on Y is not identifiable. However, it becomes identifiable given strict positivity ($\Pr(\mathbf{V}) > 0$), leading to an identifying formula expressed as . This causal effect is also identifiable under a weaker positivity constraint. In this example, the positivity assumption () is sufficient to make the identifying formula well defined because in the formula is equal to zero when and is computable when (the conditional probability is well defined if ). This is an example where strict positivity may be assumed for technical convenience only, as it may facilitate the application of some identifiability techniques like do-calculus [2].

3.2. Functional Dependencies

A variable (X) in a causal graph is said to functionally depend on its parents ($\mathbf{P}$) if its distribution is deterministic ($\Pr(x \mid \mathbf{p}) \in \{0, 1\}$) for every instantiation ($x, \mathbf{p}$). Variable X is also said to be functional in this case. In this work, we assume qualitative functional dependencies: we do not know the distribution ($\Pr(X \mid \mathbf{P})$); we only know that it is deterministic. We assume that root variables cannot be functional, as such variables can be removed from the causal graph.

The table on the right shows two variables (B and C) that both have A as their parent. Variable C is functional, but variable B is not. The CPT for variable C is called a functional CPT in this case. Functional CPTs are also known as (causal) mechanisms and are expressed using structural equations in structural causal models (SCMs) [31,32,33]. By definition, in an SCM, every non-root variable is assumed to be functional (when noise variables are represented explicitly in the causal graph).

| A | B | C | $\Pr(B \mid A)$ | $\Pr(C \mid A)$ |
|---|---|---|---|---|
| 0 | 0 | 0 | 0.2 | 0 |
| 0 | 1 | 1 | 0.8 | 1 |
| 1 | 0 | 0 | 0.6 | 1 |
| 1 | 1 | 1 | 0.4 | 0 |
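Whether a given CPT is functional can be tested directly from its entries. The following minimal sketch encodes the two CPTs from the table above; the dictionary layout and the name `is_functional` are assumptions made for illustration. In the setting of this paper the CPT values themselves are unknown, so such a check only applies to a fully specified model.

```python
def is_functional(cpt, tol=1e-12):
    """Return True if every entry of the CPT is (numerically) 0 or 1.

    `cpt` maps each parent instantiation to {child value: probability}.
    """
    return all(
        all(p <= tol or abs(p - 1.0) <= tol for p in row.values())
        for row in cpt.values()
    )

# CPTs for B and C given their single parent A, taken from the table above.
cpt_b = {(0,): {0: 0.2, 1: 0.8}, (1,): {0: 0.6, 1: 0.4}}
cpt_c = {(0,): {0: 0.0, 1: 1.0}, (1,): {0: 1.0, 1: 0.0}}

b_functional = is_functional(cpt_b)
c_functional = is_functional(cpt_c)
```

As stated in the text, the check classifies C as functional and B as non-functional.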

Qualitative functional dependencies are a longstanding concept. For example, they are common in relational databases (see, e.g., [34,35]), and their relevance to probabilistic reasoning was previously brought up in [20] (Ch. 3). One example of a (qualitative) functional dependency is that different countries have different driving ages, so we know that “driving age” functionally depends on “country”, even though we may not know the specific driving age for each country. Another example is that a “Letter grade” for a class is functionally dependent on the student’s “weighted average”, even though we may not know the scheme for converting a weighted average to a letter grade.

In this work, we assume that we are given a causal graph (G) in which some variables () have been designated as functional. The presence of functional variables further restricts the set of distributions (Pr) that we consider when checking identifiability. This leads to a more refined problem that we call functional identifiability (F-identifiability), which depends on four elements.

Definition 5.

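We call $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}}, \mathbf{W} \rangle$ an F-identifiability tuple when G is a DAG, $\mathbf{V}$ is its set of observed variables, $\mathbf{C}_{\mathbf{V}}$ is a set of positivity constraints, and $\mathbf{W}$ is a set of functional variables in G.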

Definition 6

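(F-Identifiability). Let $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}}, \mathbf{W} \rangle$ be an F-identifiability tuple. The causal effect of $\mathbf{X}$ on $\mathbf{Y}$ is F-identifiable with respect to $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}}, \mathbf{W} \rangle$ if $\Pr^1_{\mathbf{x}}(\mathbf{y}) = \Pr^2_{\mathbf{x}}(\mathbf{y})$ for any pair of distributions ($\Pr^1$ and $\Pr^2$) that are induced by G, that satisfy $\Pr^1(\mathbf{V}) = \Pr^2(\mathbf{V})$ and the positivity constraints ($\mathbf{C}_{\mathbf{V}}$), and in which variables ($\mathbf{W}$) functionally depend on their parents.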

Both and represent constraints on the models (CBNs) we consider when checking identifiability, and these two types of constraints may contradict each other. We next define two notions that characterize some important interactions between positivity constraints and functional variables.

Definition 7.

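Let $\langle G, \mathbf{V}, \mathbf{C}_{\mathbf{V}}, \mathbf{W} \rangle$ be an F-identifiability tuple. Then, $\mathbf{C}_{\mathbf{V}}$ and $\mathbf{W}$ are consistent if there exists a parameterization for G that induces a distribution satisfying $\mathbf{C}_{\mathbf{V}}$ and in which variables ($\mathbf{W}$) functionally depend on their parents. Moreover, $\mathbf{C}_{\mathbf{V}}$ and $\mathbf{W}$ are separable if $\mathbf{W} \cap vars(\mathbf{C}_{\mathbf{V}}) = \emptyset$.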

If is inconsistent with , then the set of distributions (Pr) considered in Definition 6 is empty; hence, the causal effect is not well defined (and trivially identifiable according to Definition 6). As such, one would usually want to ensure such consistency. Here are some examples of positivity constraints that are always consistent with a set of functional variables (): positivity for each treatment variable, i.e., ; positivity for the set of non-functional treatments, i.e., ; and positivity for all non-functional variables, i.e., . It turns out that all these examples are special cases of the following condition. For a functional variable (), let be variables that intercept all directed paths from non-functional variables to W (such a may not be unique). If none of the positivity constraints in mentions both W and , then and are guaranteed to be consistent (see Proposition A4 in Appendix C).

Separability is a stronger condition, and it intuitively implies that the positivity constraints do not rule out any possible functions for the variables in . We need such a condition for one of the results we present later. Some examples of positivity constraints that are separable from are and Studying the interactions between positivity constraints and functional variables, as we do in this section, will prove helpful later when utilizing existing identifiability algorithms (which require positivity constraints) for the testing of functional identifiability.

4. Functional Elimination and Projection

Our approach for testing identifiability under functional dependencies is based on the elimination of functional variables from the causal graph, followed by the invocation of the project-ID algorithm on the resulting graph. This can be subtle, though, since the described process does not work for every functional variable, as we discuss in the next section. Moreover, one needs to handle the interaction between positivity constraints and functional variables carefully. However, the first step is to formalize the process of eliminating a functional variable and to study the associated guarantees.

Eliminating variables from a probabilistic model is a well-studied operation also known as marginalization (see, e.g., [36,37,38]). When eliminating variable X from a model that represents distribution $\Pr(\mathbf{Z})$, the goal is to obtain a model that represents the marginal distribution ($\Pr(\mathbf{Y})$, where $\mathbf{Y} = \mathbf{Z} \setminus \{X\}$). Elimination can also be applied to a DAG (G) that represents conditional independencies ($\mathcal{I}$), leading to a new DAG ($G'$) that represents independencies ($\mathcal{I}'$) that are implied by $\mathcal{I}$. In fact, the projection operation we discussed earlier [25,26] can be understood in these terms. We next propose an operation that eliminates functional variables from a DAG and that comes with stronger guarantees compared to earlier elimination operations as far as preserving independencies.

Definition 8.

The functional elimination of a variable (X) from a DAG (G) yields a new DAG attained by adding an edge from each parent of X to each child of X, then removing X from G.
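Definition 8 translates directly into code. The sketch below stores a DAG as a dictionary from each node to its set of parents; the representation, the function name `functional_eliminate`, and the small example graph are assumptions made for illustration (the graph is not the one in Figure 3a).

```python
def functional_eliminate(parents, x):
    """Functionally eliminate node `x` from a DAG given as {node: set of parents}.

    Each child of x inherits all parents of x, after which x is removed.
    """
    x_parents = parents[x]
    result = {}
    for node, ps in parents.items():
        if node == x:
            continue
        if x in ps:
            # Replace the edge x -> node by edges from every parent of x.
            result[node] = (ps - {x}) | x_parents
        else:
            result[node] = set(ps)
    return result

# Hypothetical DAG: A -> C, B -> D, C -> D, C -> G, D -> H.
dag = {
    "A": set(), "B": set(),
    "C": {"A"}, "D": {"B", "C"},
    "G": {"C"}, "H": {"D"},
}

c_then_d = functional_eliminate(functional_eliminate(dag, "C"), "D")
d_then_c = functional_eliminate(functional_eliminate(dag, "D"), "C")
```

On this example both elimination orders produce the same DAG, in which G has parent A and H has parents A and B.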

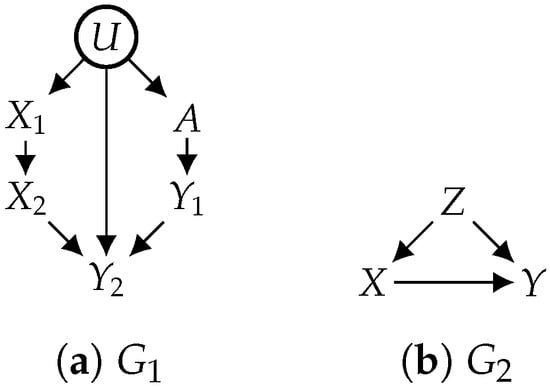

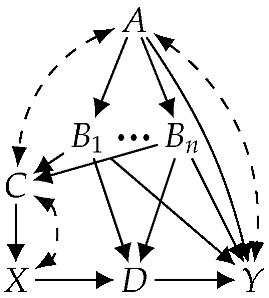

Appendix B extends this definition to causal Bayesian networks (i.e., updating both CPTs and the causal graph). For convenience, we sometimes say “elimination” to mean “functional elimination” when the context is clear. From the viewpoint of independence relations, functional elimination is not sound if the eliminated variable is not functional. In particular, the DAG ($G'$) that results from this elimination process may satisfy independencies (identified by d-separation) that do not hold in the original DAG (G). As we show later, however, every independence implied by $G'$ must be implied by G if the eliminated variable is functional. In the context of SCMs, functional elimination may be interpreted as replacing the eliminated variable (X) with its function in all structural equations that contain X. Functional elimination applies in broader contexts than SCMs, though. Eliminating multiple functional variables in any order yields the same DAG (see Proposition A3 in Appendix B). For example, eliminating variables $\{C, D\}$ from the DAG in Figure 3a yields the DAG in Figure 3c whether we use the order $C, D$ or the order $D, C$.

Figure 3.

Contrasting projection with functional projection. C and D are functional. Hidden variables are circled. (a) DAG; (b) proj. (a) on A, B, G, H, I; (c) eliminate C, D from (a); (d) proj. (c) on A, B, G, H, I.

Functional elimination preserves independencies that hold in the original DAG and that are not preserved by other elimination methods, including projection, as defined in [25,26]. These independencies are captured using the notion of D-separation [39,40], which is more refined than the classical notion of d-separation [41,42] (uppercase D versus lowercase d). The original definition of D-separation can be found in [40]. We provide a simpler definition next, stated as Proposition 2, as the equivalence between the two definitions is not immediate.

Proposition 2.

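Let $\mathbf{X}, \mathbf{Y}, \mathbf{Z}$ be disjoint variable sets and $\mathbf{W}$ be a set of functional variables in DAG G. Then, $\mathbf{X}$ and $\mathbf{Y}$ are D-separated by $\mathbf{Z}$ in $\langle G, \mathbf{W} \rangle$ iff $\mathbf{X}$ and $\mathbf{Y}$ are d-separated by $\mathbf{Z}'$ in G, where $\mathbf{Z}'$ is obtained as follows. Initially, $\mathbf{Z}' = \mathbf{Z}$. The following step is then repeated until $\mathbf{Z}'$ stops changing: every variable in $\mathbf{W}$ whose parents are all in $\mathbf{Z}'$ is added to $\mathbf{Z}'$.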
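The characterization in Proposition 2 suggests a simple test: close the conditioning set under functional determination, then run an ordinary d-separation test. The sketch below assumes this reading of the proposition and implements d-separation with the standard moralized ancestral graph criterion; all function names and the four-node example graph are hypothetical and unrelated to Figure 3a.

```python
def ancestors(parents, nodes):
    """All nodes in `nodes` together with their ancestors."""
    seen, stack = set(nodes), list(nodes)
    while stack:
        for p in parents[stack.pop()]:
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return seen

def d_separated(parents, xs, ys, zs):
    """Classical d-separation via the moralized ancestral graph."""
    keep = ancestors(parents, set(xs) | set(ys) | set(zs))
    adj = {v: set() for v in keep}
    for v in keep:
        ps = list(parents[v])
        for p in ps:                      # undirected parent-child edges
            adj[v].add(p)
            adj[p].add(v)
        for i, p in enumerate(ps):        # "marry" co-parents
            for q in ps[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)
    blocked = set(zs)
    seen = set(xs) - blocked
    stack = list(seen)
    while stack:                          # search that avoids blocked nodes
        for n in adj[stack.pop()]:
            if n not in blocked and n not in seen:
                seen.add(n)
                stack.append(n)
    return not (seen & set(ys))

def functional_closure(parents, zs, functional):
    """Add functional variables whose parents all lie in the growing set."""
    closed = set(zs)
    changed = True
    while changed:
        changed = False
        for w in functional:
            if w not in closed and parents[w] <= closed:
                closed.add(w)
                changed = True
    return closed

def big_d_separated(parents, xs, ys, zs, functional):
    """D-separation as d-separation by the functional closure of zs."""
    return d_separated(parents, xs, ys, functional_closure(parents, zs, functional))

# Hypothetical DAG: A -> C, C -> G, C -> I, with C functional.
dag = {"A": set(), "C": {"A"}, "G": {"C"}, "I": {"C"}}
d_sep = d_separated(dag, {"G"}, {"I"}, {"A"})
big_d_sep = big_d_separated(dag, {"G"}, {"I"}, {"A"}, {"C"})
```

In this toy graph G and I are not d-separated by A, since the path through C is open, but they are D-separated by A once C is known to be a function of A.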

To illustrate the difference between d-separation and D-separation, consider, again, the DAG in Figure 3a and assume that variables C and D are functional. Variables G and I are not d-separated by A, but they are D-separated by A, that is, there are distributions that are induced by the DAG in Figure 3a and in which G and I are not independent given A. However, G and I are independent given A in every induced distribution in which variables C and D are functionally determined by their parents. Functional elimination preserves D-separation in the following sense.

Theorem 1.

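Consider a DAG (G) with functional variables ($\mathbf{W}$). Let $G'$ be the result of functionally eliminating variables $\mathbf{W}' \subseteq \mathbf{W}$ from G. For any disjoint sets ($\mathbf{X}$, $\mathbf{Y}$, and $\mathbf{Z}$) in $G'$, $\mathbf{X}$ and $\mathbf{Y}$ are D-separated by $\mathbf{Z}$ in $\langle G, \mathbf{W} \rangle$ iff $\mathbf{X}$ and $\mathbf{Y}$ are D-separated by $\mathbf{Z}$ in $\langle G', \mathbf{W} \setminus \mathbf{W}' \rangle$.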

The above result is stated with respect to eliminating a subset of the functional variables. If we eliminate all functional variables, then D-separation is reduced to d-separation. For example, variables G and I are D-separated by A in Figure 3c and in Figure 3a as suggested by Theorem 1. In fact, G and I are also d-separated by A in Figure 3c, since we eliminated all functional variables. We now have the following stronger result.

Corollary 1.

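Consider a DAG (G) with functional variables ($\mathbf{W}$). Let $G'$ be the result of functionally eliminating all variables ($\mathbf{W}$) from G. For any disjoint sets ($\mathbf{X}$, $\mathbf{Y}$, and $\mathbf{Z}$) in $G'$, $\mathbf{X}$ and $\mathbf{Y}$ are d-separated by $\mathbf{Z}$ in $G'$ iff $\mathbf{X}$ and $\mathbf{Y}$ are D-separated by $\mathbf{Z}$ in $\langle G, \mathbf{W} \rangle$.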

We now define the operation of functional projection, which augments the original projection operation proposed in [25,26] in the presence of functional dependencies.

Definition 9.

Let G be a DAG, $\mathbf{V}$ be its observed variables, and $\mathbf{W}$ be its hidden functional variables ($\mathbf{W} \cap \mathbf{V} = \emptyset$). The functional projection of G on $\mathbf{V}$ is a DAG obtained by functionally eliminating variables ($\mathbf{W}$) from G, then projecting the resulting DAG on variables ($\mathbf{V}$).

We now contrast functional projection and classical projection using the causal graph in Figure 3a, assuming that the observed variables are $\mathbf{V} = \{A, B, G, H, I\}$ and the functional variables are $\mathbf{W} = \{C, D\}$. Applying classical projection to this causal graph yields the causal graph in Figure 3b. To apply functional projection, we first functionally eliminate C and D from Figure 3a, which yields Figure 3c; then, we project Figure 3c on variables ($\mathbf{V}$), which yields the causal graph in Figure 3d. So we now need to contrast Figure 3b (classical projection) with Figure 3d (functional projection). The latter is a strict subset of the former, as it is missing two bidirected edges. One implication of this is that variables G and I are not d-separated by A in Figure 3b because they are not d-separated in Figure 3a. However, they are D-separated in Figure 3a; hence, they are d-separated in Figure 3d. So functional projection yields a DAG that exhibits more independencies. Again, this is because G and I are D-separated by A in the original DAG, a fact that is not visible to the projection but is visible to (and exploitable by) the functional projection.

An important corollary of functional projection is the following.

Corollary 2.

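Let G be a DAG; $\mathbf{V}$ be its observed variables; $\mathbf{W}$ be its functional variables, which are all hidden; and $G'$ be the result of functionally projecting G on $\mathbf{V}$. For any disjoint sets ($\mathbf{X}$, $\mathbf{Y}$, and $\mathbf{Z}$) in $\mathbf{V}$, $\mathbf{X}$ and $\mathbf{Y}$ are d-separated by $\mathbf{Z}$ in $G'$ iff $\mathbf{X}$ and $\mathbf{Y}$ are D-separated by $\mathbf{Z}$ in $\langle G, \mathbf{W} \rangle$.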

In other words, classical projection preserves d-separation, but functional projection preserves D-separation, which subsumes d-separation. Corollary 2 is a bit more subtle and powerful than it may first seem. First, it concerns D-separations based on hidden functional variables, not all functional variables. Secondly, it shows that such D-separations in G appear as classical d-separations in $G'$, which allows us to feed $G'$ into existing identifiability algorithms, as we show later. This is a key enabler of some results we present next on the testing of functional identifiability.

5. Causal Identification with Functional Dependencies

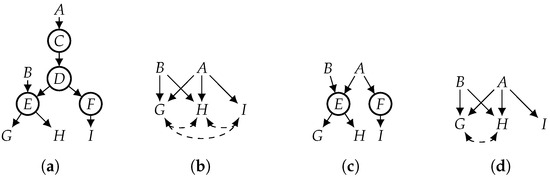

Consider the causal graph (G) in Figure 4a and let be its observed variables. According to Definition 4 of identifiability, the causal effect of X on Y is not identifiable with respect to , where . We can show this by projecting the causal graph (G) on the observed variables (), which yields the causal graph () in Figure 4b, then applying the ID algorithm to , which returns FAIL. Suppose now that the hidden variable (B) is known to be functional. According to Definition 6 of F-identifiability, this additional knowledge reduces the number of considered models, so it actually renders the causal effect identifiable—the identifying formula is , as we show later. Hence, an unidentifiable causal effect becomes identifiable in light of knowledge that some variable is functional, even without knowing the structural equations for this variable.

Figure 4.

B is functional. (a) DAG; (b) projection.

The question now is how to algorithmically test F-identifiability. We propose two techniques for this purpose, the first of which is geared towards exploiting existing algorithms for classical identifiability. This technique is based on the elimination of functional variables from the causal graph while preserving F-identifiability, with the goal of getting to a point where F-identifiability becomes equivalent to classical identifiability. If we reach this point, we can use existing algorithms for classical identifiability, like the ID algorithm, to test F-identifiability. This can be subtle, though, since hidden functional variables behave differently from observed ones. We start with the following result.

Theorem 2.

Let be an F-identifiability tuple. If is the result of functionally eliminating the hidden functional variables from G, then the causal effect of on is F-identifiable with respect to iff it is F-identifiable with respect to .

An immediate corollary of this theorem is that if all functional variables are hidden, then we can reduce the question of F-identifiability to identifiability, since , so F-identifiability with respect to collapses into identifiability with respect to .

Corollary 3.

Let be an F-identifiability tuple, where and are all hidden. If is the result of functionally projecting G on variables (), then the causal effect of on is F-identifiable with respect to iff it is identifiable with respect to (We require the positivity constraint , as we suspect the projection operation in [26] requires it even though that was not made explicit in the paper; if not, then can be empty in Corollary 3).

This corollary suggests a method for using the ID algorithm, which is popular for testing identifiability, to establish F-identifiability by coupling ID with functional projection instead of classical projection. Consider the causal graph (G) in Figure 5a with observed variables of . The causal effect of X on Y is not identifiable under ; projecting G on observed variables () yields the causal graph () in Figure 5b, and the ID algorithm produces FAIL on . Suppose now that the hidden variables () are functional. To test whether the causal effect is F-identifiable using Corollary 3, we functionally project G on the observed variables (), which yields the causal graph () in Figure 5c. Applying the ID algorithm to produces the following identifying formula: ; therefore, is F-identifiable.

Figure 5.

Variables , and Y are observed. Variables D and E are functional (and hidden). (a) Causal graph; (b) proj. of (a); (c) F-proj. of (a); (d) F-elim. F; (e) F-elim. B.

We stress, again, that Corollary 3 and the corresponding F-identifiability algorithm apply only when all functional variables are hidden. We now treat the case when some of the functional variables are observed. The subtlety here is that, unlike hidden functional variables, eliminating an observed functional variable does not always preserve F-identifiability. However, the following result identifies conditions that guarantee the preservation of F-identifiability based on the notion of separability in Definition 7. If all observed functional variables satisfy these conditions, we can, again, reduce F-identifiability into identifiability, so we can exploit existing methods for identifiability like the ID algorithm and do-calculus.

Theorem 3.

Let be an F-identifiability tuple. Let be a set of observed functional variables that are neither treatments nor outcomes, are separable from , and have observed parents. If is the result of functionally eliminating variables () from G, then the causal effect of on is F-identifiable with respect to iff it is F-identifiable with respect to .

Intuitively, the theorem allows us to remove observed functional variables from a causal graph if they satisfy the given conditions. We now have the following important corollary of Theorems 2 and 3, which subsumes Corollary 3.

Corollary 4.

Let be an F-identifiability tuple, where and every variable in satisfies the conditions of Theorem 3. If is the result of functionally projecting G on , then the causal effect of on is F-identifiable with respect to iff it is identifiable with respect to .

Consider, again, the causal effect of X on Y in graph G of Figure 5a with observed variables of . Suppose now that the observed variable (F) is also functional (in addition to hidden functional variables D and E) and assume . Using Corollary 4, we can functionally project G on A, B, C, X, and Y to yield the causal graph () in Figure 5d, which reduces F-identifiability on G to classical identifiability on . Since strict positivity holds in , we can apply any existing identifiability algorithm and conclude that the causal effect is not identifiable. For another scenario, suppose that the observed variable (B) (instead of F) is functional and we have . Again, using Corollary 4, we functionally project G onto A, C, F, X, and Y to yield the causal graph () in Figure 5e, which reduces F-identifiability on G to classical identifiability on . If we apply the ID algorithm to , we obtain the following identifying formula (which we denote as Equation (A1)): . In both scenarios presented above, we were able to test F-identifiability using an existing algorithm for identifiability.

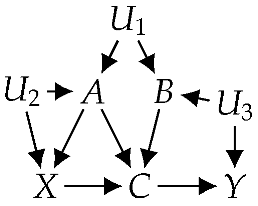

Corollary 4 (and Theorem 3) has yet another key application: it can help us pinpoint observations that are not essential for identifiability. To illustrate, consider the second scenario presented above, where the observed variable (B) is functional in the causal graph (G) of Figure 5a. The fact that Corollary 4 allowed us to eliminate variable B from G implies that observation of this variable is not needed to render the causal effect F-identifiable and, hence, is not needed to compute the causal effect. This can be seen by examining the identifying formula (Equation (A1)), which does not contain variable B. This can be further generalized to the causal graph on the right with functional variables (), where we assume . According to Corollary 4, we can functionally project the graph onto while preserving F-identifiability. Moreover, applying the ID algorithm (or do-calculus) to G yields an identifying formula for over only . That is, in this example, we only need to observe a constant number (five) of variables to render the causal effect F-identifiable, even though the number of observed variables in the original graph is unbounded. This application of Corollary 4 can be quite significant in practice, especially when some variables are expensive to measure (observe) or when they may raise privacy concerns (see, e.g., [43,44]).

Theorems 2 and 3 are more far-reaching than what the above discussion may suggest. In particular, even if we cannot eliminate every (observed) functional variable using these theorems, we may still be able to reduce F-identifiability to identifiability due to the following result.

Theorem 4.

Let be an F-identifiability tuple. If every functional variable has at least one hidden parent, then a causal effect of on is F-identifiable with respect to iff it is identifiable with respect to .

That is, if we still have functional variables in the causal graph after applying Theorems 2 and 3 and if each such variable has at least one hidden parent, then F-identifiability is equivalent to identifiability. Consider, again, the causal effect of X on Y in G of Figure 5a with observed variables of . Now, suppose that the observed variables (A, B, C, X, and Y) are also functional (in addition to hidden functional variables D and E) and assume . We can reduce F-identifiability to classical identifiability by combining Theorems 3 and 4. In particular, according to Theorem 3, we first reduce F-identifiability on G to F-identifiability on in Figure 5e by functionally eliminating D, E, and B. Since all the remaining functional variables (A, C, X, and Y) have a hidden parent, we can further reduce F-identifiability to identifiability on according to Theorem 4, then apply existing algorithms (e.g., ID and do-calculus) to conclude that the causal effect is identifiable.

The method we have presented thus far for the testing of F-identifiability is based on the elimination of functional variables from the causal graph, followed by the application of existing tools for causal effect identification, such as the project-ID algorithm and do-calculus. This F-identifiability method is complete if every observed functional variable either satisfies the conditions of Theorem 3 or has at least one hidden parent that is not functional.

We next present another technique for reducing F-identifiability to identifiability. This method is more general and much more direct than the previous one, but it does not allow us to fully exploit some existing tools, like the ID algorithm, due to the positivity assumptions they make. The new method involves pretending that some of the hidden functional variables are actually observed, inspired by Proposition 2, which reduces D-separation to d-separation using a similar technique.

Theorem 5.

Let be an F-identifiability tuple, where . A causal effect of on is F-identifiable with respect to iff it is identifiable with respect to , where is obtained as follows. Initially, . Then, a functional variable from is added to if its parents are in . This is repeated until stops changing.
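The iterative construction in Theorem 5 amounts to a fixed-point computation over the observed set. The Python fragment below is a minimal sketch under stated assumptions: the DAG is given as a dictionary from each variable to its set of parents, and the names `extend_observed`, `observed`, and `functional` are hypothetical. It illustrates only how the extended set of "pretend-observed" variables is built, not the identifiability test itself.

```python
def extend_observed(parents, observed, functional):
    """Grow the observed set with functional variables whose parents
    are all already in the set, until nothing more can be added."""
    extended = set(observed)
    changed = True
    while changed:
        changed = False
        for w in functional:
            # A functional variable is added once all of its parents are in
            # the current set (vacuously true if it has no parents).
            if w not in extended and parents[w] <= extended:
                extended.add(w)
                changed = True
    return extended


# Hypothetical toy DAG: A -> D -> E, and F has parents A and H (H is hidden).
toy_parents = {"A": set(), "H": set(), "D": {"A"}, "E": {"D"}, "F": {"A", "H"}}
extended = extend_observed(toy_parents, observed={"A"}, functional={"D", "E", "F"})
```

In this toy example, D is added because its only parent A is observed, E follows once D is in the set, and F is never added because its parent H remains hidden.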

Consider the causal effect of X on Y in graph G of Figure 5a and suppose the observed variables are ; the functional variables are D, E, and F; and we have . According to Theorem 5, the causal effect of X on Y is F-identifiable iff it is identifiable in G while pretending that variables , B, C, D, E, F, X, are all observed. In this case, the causal effect is not identifiable, but we cannot obtain this answer by applying an identifiability algorithm that requires positivity constraints that are stronger than . If we have stronger positivity constraints that imply then only the if part of Theorem 5 holds, assuming and are consistent. That is, confirming identifiability with respect to confirms F-identifiability with respect to , but if identifiability is not confirmed, then F-identifiability may still hold. This suggests that to fully exploit the power of Theorem 5, one would need a new class of identifiability algorithms that can operate under the weakest possible positivity constraints.

6. Experiments

We next report on a simple experiment to empirically demonstrate how knowledge of functional dependencies can aid the identifiability of causal effects. We randomly generated 50 causal graphs (DAGs) with variables using the Erdős–Rényi method [45], where every edge in the causal graphs appears with a probability of and every variable has, at most, 6 parents. We then randomly picked observed variables, treatment variables, outcome variables, and functional variables from the causal graphs.
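The graph-generation step can be sketched as follows. This Python fragment is only an illustration under stated assumptions, not the code used for the reported experiment: it draws a random variable order, includes each forward edge independently with probability `p`, and enforces the parent cap by subsampling, which is one of several possible ways to do so. The function name `random_dag` and all parameter values in the usage line are hypothetical.

```python
import random


def random_dag(n, p, max_parents=6, seed=None):
    """Sample a DAG over variables 0..n-1 in the Erdos-Renyi style.

    Returns (parents, order), where `parents` maps each variable to its
    set of parents and `order` is the topological order used for sampling.
    """
    rng = random.Random(seed)
    order = list(range(n))
    rng.shuffle(order)  # random topological order
    parents = {v: set() for v in order}
    for j, child in enumerate(order):
        # Each earlier variable becomes a parent with probability p.
        candidates = [order[i] for i in range(j) if rng.random() < p]
        # Assumed way of enforcing the cap: keep a random subset.
        if len(candidates) > max_parents:
            candidates = rng.sample(candidates, max_parents)
        parents[child] = set(candidates)
    return parents, order


dag, topo = random_dag(n=30, p=0.3, seed=0)  # illustrative values only
```

Because edges only run from earlier to later variables in the sampled order, the result is acyclic by construction. Observed, treatment, outcome, and functional variables could then be drawn from the variables with `rng.sample`.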

For each combination of N and W, Table 1 records the number of causal effects (out of 50) that are (1) unidentifiable (uid); and (2) unidentifiable but F-identifiable (uid-fid). The table also records the average number of observed variables after applying Theorems 2–4 (#obs); these are the observed variables passed to the project-ID algorithm (We assume that strict positivity holds for the remaining observed variables after applying Theorems 2–4).

Table 1.

Numbers of causal effects that are unidentifiable (uid) and that are unidentifiable but F-identifiable (uid-fid) and average number of observed variables passed to project-ID (#obs) for causal graphs with various numbers of variables (N) and functional ones (W).

The following patterns are clear. First, more unidentifiable causal effects become F-identifiable when more variables exhibit functional dependencies. This observation demonstrates that knowledge of functional dependencies can greatly improve the identifiability of causal effects. Secondly, the number of observed variables required by the project-ID algorithm becomes smaller when there are more functional variables, implying that we only need to collect data on a smaller set of variables to estimate the (identifiable) causal effects. Again, this is because more observed functional variables can be functionally eliminated from the causal graphs by Theorem 3.

7. Conclusions

We studied the identification of causal effects in the presence of a particular type of knowledge called functional dependencies. This augments earlier works that considered other types of knowledge, such as context-specific independence. Our contributions include the formalization of the notion of functional identifiability; the introduction of an operation for eliminating functional variables from a causal graph that comes with stronger guarantees compared to earlier elimination methods; and the employment (under some conditions) of existing algorithms, such as the ID algorithm, for the testing of functional identifiability and to obtain identifying formulas. We further provided a complete reduction of functional identifiability to classical identifiability under very weak positivity constraints and showed how our results can be used to reduce the number of variables needed in observational data. Last but not least, we proposed a more general definition of identifiability based on a broader class of positivity assumptions, which opens the door to uncovering causal identification algorithms that operate under weaker positivity assumptions.

Author Contributions

Conceptualization, Y.C. and A.D.; Formal analysis, Y.C. and A.D.; Funding acquisition, A.D.; Investigation, Y.C. and A.D.; Methodology, Y.C. and A.D.; Project administration, A.D.; Resources, Y.C. and A.D.; Supervision, A.D.; Validation, Y.C. and A.D.; Visualization, Y.C. and A.D.; Writing—original draft, Y.C. and A.D.; Writing—review and editing, Y.C. and A.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by ONR grant N000142212501.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. More on Projection and the ID Algorithm

As mentioned in the main paper, the project-ID algorithm involves two steps: the projection operation and the ID algorithm. We review more technical details of each step in this section.

Appendix A.1. Projection

The projection [25,26,28] of G onto constructs a new DAG () over variables () as follows. Initially, DAG contains variables () but no edges. Then, for every pair of variables (), an edge is added from X to Y to if X is a parent of Y in G or if there exists a directed path from X to Y in G such that none of the internal nodes on the path is in . Furthermore, a bidirected edge () is added between every pair of variables (X and Y) in if there exists a divergent path (A divergent path between X and Y is a path in the form of ) between X and Y in G such that none of the internal nodes on the path is in . For example, the projection of the DAG in Figure 1a onto yields Figure 1c. A bidirected edge () is a compact notation for , where H is an auxiliary hidden variable. Hence, the projected DAG in Figure 1c can be interpreted as a classical DAG but with additional, hidden root variables.

The projection operation is guaranteed to produce a DAG () in which hidden variables are all roots and each has exactly two children. Graphs that satisfy this property are called semi-Markovian and can be fed as inputs to the ID algorithm for testing of identifiability [10]. Moreover, projection preserves some properties of G, such as d-separation [25] among , which guarantees that identifiability is preserved when working with instead of G [26].
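The two edge rules of the projection can be sketched in code. The Python fragment below is a minimal illustration under stated assumptions, not a reference implementation: the DAG is a dictionary from each variable to its set of parents (every variable must appear as a key), and the names `project` and `observed_reach` are hypothetical. It uses the fact that a divergent path with hidden internal nodes exists between two observed variables exactly when some hidden variable reaches both of them through hidden variables only.

```python
from itertools import combinations


def project(parents, observed):
    """Return (directed, bidirected) edge sets of the projection on `observed`."""
    children = {v: set() for v in parents}
    for v, ps in parents.items():
        for p in ps:
            children[p].add(v)

    def observed_reach(start):
        # Observed variables reachable from `start` along directed paths
        # whose internal nodes are all hidden.
        found, seen, stack = set(), set(), [start]
        while stack:
            node = stack.pop()
            for c in children[node]:
                if c in observed:
                    found.add(c)
                elif c not in seen:
                    seen.add(c)
                    stack.append(c)
        return found

    directed = {(x, y) for x in observed for y in observed_reach(x)}
    bidirected = set()
    for h in parents:
        if h not in observed:
            # Any two observed variables reached from a hidden variable are
            # joined by a divergent path with hidden internal nodes.
            for x, y in combinations(sorted(observed_reach(h)), 2):
                bidirected.add(frozenset((x, y)))
    return directed, bidirected


# Hypothetical toy DAG: U -> X, U -> Y, X -> M -> Y, with U and M hidden.
toy = {"U": set(), "X": {"U"}, "M": {"X"}, "Y": {"M", "U"}}
directed, bidirected = project(toy, observed={"X", "Y"})
```

In this toy graph, the directed edge from X to Y comes from the path through the hidden variable M, and the bidirected edge between X and Y comes from the hidden common parent U.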

Appendix A.2. ID Algorithm

After obtaining a projected causal graph, we can apply the ID algorithm for identification of causal effects [10,46]. The algorithm returns either an identifying formula if the causal effect is identifiable or FAIL otherwise. The algorithm is sound, since each line of the algorithm can be proven with basic probability rules and do-calculus. The algorithm is also complete, since a causal graph must contain a hedge, a graphical structure that induces the unidentifiability, if the algorithm returns FAIL. However, the algorithm is only sound and complete under certain positivity constraints, which are weaker but more subtle than strict positivity ().

The positivity constraints required by ID can be summarized as follows ( represents the treatment variables): (1) , where , and (2) for all quantities considered by the ID algorithm. The second constraint depends on a particular run of the ID algorithm and can be interpreted as follows. First, if the ID algorithm returns FAIL, then the causal effect is not identifiable, even under the strict positivity constraint of . However, if the ID algorithm returns “identifiable”, then the causal effect is identifiable under the above constraints, which are now well defined given a particular run of the ID algorithm. We illustrate with an example next.

Consider the causal graph on the right, which contains observed variables . Suppose we are interested in the causal effect of X on Y. Applying the ID algorithm returns the following identifying formula: . The positivity constraint extracted from this run of the algorithm is for all of a, b, c, and x, that is, we can only safely declare the causal effect identifiable based on the ID algorithm if this positivity constraint is satisfied.

Appendix B. Functional Elimination for CBNs

The functional elimination in Definition 8 removes functional variables from a DAG (G) and yields another DAG () for the remaining variables. We showed in the main paper that the functional elimination preserves the D-separations. Here, we extend the notion of functional elimination to causal Bayesian networks (CBNs), which contain not only a causal graph (DAG) but also CPTs. We show that (extended) functional elimination preserves the marginal distribution of the remaining variables, that is, given any CBN with causal graph G, we can construct another CBN with causal graph such that the two CBNs induce the same distribution of the variables in , where is the result of eliminating functional variables from G. Moreover, we show that the functional elimination operation further preserves the causal effects, which makes it applicable to causal identification. This extended version of functional elimination and the corresponding results are used in the proofs in Appendix C.

Recall that a CBN contains a causal graph (G) and a set of CPTs (). We first extend the definition of functional elimination (Definition 8) from DAGs to CBNs.

Definition A1.

The functional elimination of a functional variable (X) from a CBN yields another CBN obtained as follows. The DAG () is obtained from G according to Definition 8. For each child (C) of X, its CPT in is , where and are the corresponding CPTs in .
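The effect of this operation on a child's CPT can be illustrated numerically. The Python fragment below is a minimal sketch, assuming the new CPT is the product of the CPT of X and the CPT of the child, summed over the states of X (the form that Proposition A1 shows to be a valid CPT). The dictionary layout and the names `eliminate_from_child`, `f_x`, and `f_c` are hypothetical, and the numbers are made up for illustration.

```python
def eliminate_from_child(f_x, f_c):
    """Combine the CPT of a functional variable X with the CPT of a child C.

    f_x[p][x]      : Pr(x | p), where p is an instantiation of X's parents.
    f_c[(x, q)][c] : Pr(c | x, q), where q instantiates C's other parents.
    Returns g with g[(p, q)][c] = sum over x of f_x[p][x] * f_c[(x, q)][c].
    """
    new_cpt = {}
    for p, dist_x in f_x.items():
        for (x, q), dist_c in f_c.items():
            weight = dist_x.get(x, 0.0)  # missing entries count as zero
            row = new_cpt.setdefault((p, q), {})
            for c, prob in dist_c.items():
                row[c] = row.get(c, 0.0) + weight * prob
    return new_cpt


# Hypothetical binary example: X is the negation of its single parent P.
f_x = {0: {1: 1.0}, 1: {0: 1.0}}
f_c = {
    (0, 0): {0: 0.9, 1: 0.1},
    (0, 1): {0: 0.4, 1: 0.6},
    (1, 0): {0: 0.2, 1: 0.8},
    (1, 1): {0: 0.5, 1: 0.5},
}
g = eliminate_from_child(f_x, f_c)
```

Because X is functional, each row of the new table simply copies the row of the child's original CPT selected by the value that X takes under the given parent instantiation, so every row still sums to one.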

We first show that the new CPTs produced by Definition A1 are well defined.

Proposition A1.

Let and be the CPTs for variables X and Y in a CBN; then, is a valid CPT for Y.

The next proposition shows that functional elimination preserves the functional dependencies.

Proposition A2.

Let be the CBN resulting from functionally eliminating a functional variable from a CBN (). Then, each variable (from ) is functional in if it is functional in .

The next proposition shows that the order of functional elimination does not matter.

Proposition A3.

Let be a CBN and and be two variable orders over a set of functional variables (). Then, functionally eliminating from according to and yields the same CBN.

The next result shows that eliminating functional variables preserves the marginal distribution.

Theorem A1.

Consider a CBN () that induces Pr. Let be the result of functionally eliminating a set of functional variables () from , which induces . Then, .

One key property of functional elimination is that it preserves the interventional distribution over the remaining variables. This property allows us to eliminate functional variables from a causal graph and estimate the causal effects in the resulting graph.

Theorem A2.

Let be the CBN over variables () resulting from functionally eliminating a set of functional variables () from a CBN (). Then, and attain the same for any .

Appendix C. Proofs

The proofs of the results are ordered slightly differently from the order in which they appear in the main body of the paper.

Proof of Proposition 1.

Our goal is to construct two different parameterizations ( and ) that induce the same but different . This is done by first creating a parameterization () that contains strictly positive CPTs for all variables, then constructing and based on .

Let be the directed path from to Y, denoted as , which does not contain any treatment variables other than X. Let be the parents of X in G. For each node M on the path, let be the parents of M, except for the parent that lies on . Moreover, for each variable (M) on , we only modify the conditional probability for a single state () of M, where is the treated state of X. Let be an arbitrarily small constant (close to 0). Next, we show the modifications for the CPTs in .

For every variable () that has parent Q on the path , we assign

We assign the same CPTs for X and all variables () but a different CPT for Z in .

The two parameterizations ( and ) induce the same , where if and otherwise. Next, we show that the parameterization satisfies each positivity constraint () as long as it does not imply . We first show that implies . This is because and there must exist some instantiation () where and by constraint. This implies and, therefore, . Hence, does not contain such a constraint () where . Suppose ; then, if and only if . Moreover, since , whenever , it is guaranteed that when , which implies . Finally, suppose ; then, . Hence, the positivity constraint is satisfied by both parameterizations. By construction, and induce different values for the causal effect (), since the probability of under treatment differs under the two parameterizations. □

Proposition A4.

Let G be a causal graph and be its observed variables. A set of functional variables () is consistent with positivity constraints () if no single constraint in mentions both and a set () that intercepts all directed paths from non-functional variables to W.

Proof of Proposition A4.

We construct a parameterization () and show that the distribution (Pr) induced by satisfies , which ensures consistency. The states of each variable (V) are represented in the form of , where and () are both binary indicators (0 or 1). Specifically, each corresponds to a “functional descendant path” of V defined as follows: A functional descendant path of V is a directed path that starts with V, and all variables on the path (excluding V) are functional. Suppose V does not have any functional descendant path; then, the states of V are simply represented as .

Next, we show how to assign CPTs for each variable in the causal graph (G) based on whether the variable is functional. For each non-functional variable, we assign a uniform distribution. For each functional variable (W) whose parents are and whose functional descendant paths are , we assign the CPT () as follows:

where denotes the index assigned to the path (which contains a single edge) in the state of and denotes the indicator in the state of for functional descendant path that contains functional descendant path , i.e., .

For simplicity, we call the set of variables () that satisfies the condition in the proposition a “functional ancestor set” of W. We show that for each positivity constraint in the form of . Let be a subset of functional variables. Since does not contain any functional ancestor set of W for each , it follows that there exist directed paths from a set of non-functional variables () to W that are unblocked by and contain only functional variables (excluding ). We can further assume that is chosen such that the set forms a valid functional ancestor set for W. Next, we show that for any state (w) of W and instantiation () of , there exists at least one instantiation () of such that .

Let denote the set of all directed paths from to W that do not contain (except for the first node on the path). Let be the paths that start with a variable in and be other paths that start with a variable in . Moreover, for any path (), let be the binary indicator (e.g., ) for in the state of (first variable in ). Since the value assignments for are independent for different s, we can always find some instantiation () such that the following equality holds given w and :

We next assign values for other path indicators of such that the indicators for the functional descendant paths in state w are set correctly. In particular, for each functional descendant path () of W, let be the set of functional descendant paths of that do not contain (except for the first node on the path) and that contain as a sub-path. Let be the paths that start with a variable in and be other paths that start with a variable in . Again, since all the indicators for paths in are independent, we can assign the indicators for such that

Finally, we combine the cases for each individual by creating the following set: . Since all the functional descendant paths we considered for different Ws are disjoint, we can always find an assignment () for that is consistent with the functional dependencies (does not produce any zero probabilities). Consequently, there must exist some full instantiation () compatible with , , and such that , which implies . □

Proof of Proposition A1.

Suppose Y is not a child of X in the CBN; then, , which is guaranteed to be a CPT for Y. Suppose Y is a child of X. Let denote the parents of X and denote the parents of Y, excluding X. The new factor () is defined over . Consider each instantiation ( and ); then, . Hence, g is a CPT for Y. □

Proof of Proposition A2.

Let X be the functional variable that is functionally eliminated. By definition, the elimination only affects the CPTs for the children of X. Hence, any functional variable that is not a child of X remains functional. For each child (C) of X that is functional, the new CPT only contains values that are either 0 or 1, since both and are functional. □

Proof of Proposition A3.

First, note that can always be obtained from by a sequence of “transpositions”, where each transposition swaps two adjacent variables in the first sequence. Let be an elimination order, and let be the elimination order resulting from swapping and from the , i.e.,

We show that functional elimination according to and yields the same CBN, which can be applied inductively to conclude that elimination according to and yields the same CBN. Since and agree on the elimination order up to X, they yield the same CBN before eliminating variables . It therefore suffices to show that eliminating X and Y from this CBN in either order yields the same CBN. Let be the CBN before eliminating variables . Suppose X and Y do not belong to the same family (which contains a variable and its parents); then, the eliminations of X and Y are independent, and the order of elimination does not matter. Suppose X and Y belong to the same family; then, they are either parent and child or co-parents (X and Y are co-parents if they share a child).

Without loss of generality, suppose X is a parent of Y. Eliminating and eliminating yield the same causal graph that is defined as follows. Each child (C) of Y has parents , and any other child C of X has parents . We next consider the CPTs. For each common child (C) of X and Y, its CPT resulting from eliminating is , and the CPT resulting from eliminating is . Since X is a parent of Y, we have and

Next, we consider the case when C is a child of Y but not a child of X. The CPT for C resulting from eliminating is , and the CPT resulting from eliminating is . Again, since X is a parent of Y, we have and

Finally, we consider the case when C is a child of X but not a child of Y. Regardless of the order of X and Y, the CPT for C resulting from eliminating X and Y is .

Next, we consider the case when X and Y are co-parents. Regardless of the order of X and Y, the causal graph resulting from the elimination satisfies the following properties: (1) for each common child (C) of X and Y, the parents for C are ; (2) the parents of each C that is a child of X but not a child of Y are ; and (3) the parents of each C that is a child of Y but not a child of X are . Next, we consider the CPTs. The CPT for each common child C of X and Y resulting from eliminating is , and the CPT resulting from eliminating is . Since X and Y are not parent and child, we have , , and

For each C that is a child of X but not a child of Y, regardless of the order of X and Y, the CPT for C resulting from eliminating variables X and Y is . A similar result holds for each C that is a child of Y but not a child of X. □

Proof of Theorem A1.

It suffices to show that when we eliminate a single variable (X). Let denote the set of CPTs for . Since is a functional CPT for X, we can replicate in , which yields a new CPT set (replication) () that induces the same distribution as (see details in ([21], Theorem 4)). Specifically, we pair the CPT for each child (C) of X with an extra copy of , denoted as , which yields a list of pairs (, where are the children of X). Functionally eliminating X from yields ([21], Corollary 1)

where represent the CPTs in that do not contain X and each is the CPT for child in . □

- Proof of Theorem A2

Lemma A1.

Consider a CBN and its mutilated CBN under . Let W be a functional variable not in and let and be the results of functionally eliminating W from and , respectively. Then, is the mutilated CBN for .

Proof.

First, observe that the children of W in G and can only differ by the variables in . Let be the children of W in both G and , and let be the children of W in G but not in . According to the definition of mutilated CBN, W has the same set of parents and CPT in and . Similarly, each child () has the same set of parents and CPT in and . Hence, eliminating W yields the same set of parents and CPT for each in and . Next, we consider the set of parents and CPT for each child (). Since W is not a parent of C in , variable C has the same set of parents and CPT in . Exactly the same set of parents (empty) and CPT are assigned to C in the mutilated CBN for . □

Proof of Theorem A2.

Consider a CBN and its mutilated CBN . Let Pr and be the distributions induced by and over variables , respectively. According to Lemma A1, we can eliminate each inductively from and and obtain and its mutilated CBN . According to Theorem A1, the distribution induced by is exactly . □

Proof of Proposition 2.

First, note that the extended set () contains and all variables that are functionally determined by . Consider any path () between some and . We show that is blocked by iff it is blocked by according to the definition presented in [40]. We first show the if part. Suppose there is a convergent valve (see [38] (Ch. 4) for more details on convergent, divergent, and sequential valves) for a variable W that is closed when conditioned on ; then, the valve is still closed when conditioned on unless the parents of W are in . However, the path () is blocked in the latter case, since the parents of W must have sequential/divergent valves. Suppose there is a sequential/divergent valve that is closed when conditioned on according to [40]; then, W must be in , since it is functionally determined by . Hence, the valve is also closed when conditioned on .

Next, we show the only–if part. Suppose a convergent valve for variable W is closed when conditioned on ; then, none of is a descendant of W, since is a superset of . Suppose a sequential/divergent valve for variable W is closed when conditioned on ; then, W is functionally determined by by the construction of . Thus, the valve is closed as described in [40]. □

Proof of Theorem 1.

By induction, it suffices to show that and are D-separated by in iff they are D-separated by in , where is the result of functionally eliminating a single variable () from and . We first show the contrapositive of the if part. Suppose and are not D-separated by in ; owing to the completeness of D-separation, there exists a parameterization () on G such that . If we eliminate T from the CBN , we obtain another CBN , where is the parameterization for . According to Theorem A1, the marginal probabilities are preserved for the variables in , which include . Hence, and and are not D-separated by in .

Next consider the contrapositive of the only–if part. Suppose and are not D-separated by in ; then, there exists a parameterization () of such that owing to the completeness of D-separation. We construct a parameterization () for G such that is the parameterization of , which results from eliminating T from the CBN . This is sufficient to show that and are not D-separated by in , since the marginals are preserved by Theorem A1.

- Construction Method: Let and denote the parents and children of T in G, respectively. Our construction assumes that the cardinality of T is the number of instantiations for its parents (), that is, there is a one-to-one correspondence between the states of T and the instantiations of , and we use to denote the instantiation () corresponding to state t. The functional CPT for T is assigned as if and otherwise for each instantiation () of . Now, consider each child () that has parents (excluding T) and T in G. It immediately follows from Definition 8 that C has parents in . Next, we construct the CPT () in based on its CPT () in . Consider each parent instantiation , where t is a state of T and is an instantiation of . If is consistent with , is assigned for each state (c) (For clarity, we use the notation | to separate a variable and its parents in a CPT). Otherwise, any functional distribution is assigned for . This construction ensures that the constructed CPT () for T is functional and that the functional dependencies among other variables are preserved. In particular, for each child (C) of T, the constructed CPT () is functional iff is functional. This construction method is reused later in other proofs.

Now, we just need to show that CBN is the result of eliminating T from the (constructed) CBN . In particular, we need to check that the CPT for each child () in is correctly computed from the constructed CPTs in . For each instantiation and state (c) of C,

where is the state of T such that . □
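The construction method and the final check of this proof can be illustrated with a minimal Python sketch on a toy graph. Everything named here is an assumption made for illustration: T has two binary parents A and B, its single child C has parents B and T (so the parents of C overlap with those of T), and the helpers `build_cpts_with_T` and `eliminate_T` are hypothetical. Functional elimination is taken to replace the CPT of C with the sum over T of the product of the two CPTs, which is our reading of Definition 8.

```python
import itertools

def build_cpts_with_T(f_elim, n_a, n_b, n_c):
    """Build CPTs for T and C in the larger graph (hypothetical helper).

    f_elim[(a, b, c)] is the CPT of C given parents (A, B) after T is eliminated.
    Returns f_T[(a, b, t)] for T given (A, B) and f_C[(b, t, c)] for C given (B, T).
    """
    # One state of T per instantiation of its parents: state t <-> (a_t, b_t).
    states = list(itertools.product(range(n_a), range(n_b)))
    f_T = {(a, b, t): 1.0 if states[t] == (a, b) else 0.0
           for a in range(n_a) for b in range(n_b) for t in range(len(states))}
    f_C = {}
    for t, (a_t, b_t) in enumerate(states):
        for b in range(n_b):
            for c in range(n_c):
                if b == b_t:
                    # (b, t) agrees with the instantiation that t stands for.
                    f_C[(b, t, c)] = f_elim[(a_t, b, c)]
                else:
                    # Inconsistent pair: any functional distribution will do.
                    f_C[(b, t, c)] = 1.0 if c == 0 else 0.0
    return f_T, f_C

def eliminate_T(f_T, f_C, n_a, n_b, n_c):
    """Sum T out of the product f_T * f_C, giving a CPT for C over (A, B)."""
    n_t = n_a * n_b
    return {(a, b, c): sum(f_T[(a, b, t)] * f_C[(b, t, c)] for t in range(n_t))
            for a in range(n_a) for b in range(n_b) for c in range(n_c)}

# Example CPT of C given (A, B), with binary A, B, and C (made-up numbers).
f_elim = {(0, 0, 0): 0.2, (0, 0, 1): 0.8,
          (0, 1, 0): 0.5, (0, 1, 1): 0.5,
          (1, 0, 0): 0.9, (1, 0, 1): 0.1,
          (1, 1, 0): 1.0, (1, 1, 1): 0.0}
f_T, f_C = build_cpts_with_T(f_elim, 2, 2, 2)
recovered = eliminate_T(f_T, f_C, 2, 2, 2)
```

Because the constructed CPT for T places all of its mass on the single state matching the parent instantiation, the sum over T selects exactly one row of the constructed CPT for C, so `recovered` agrees with `f_elim` entry by entry.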

Proof of Theorem 2.

We prove the theorem by induction. It suffices to show the following statement: For each causal graph (G) with observed variables () and functional variables (), the causal effect () is F-identifiable with respect to iff it is F-identifiable with respect to , where is the result of functionally eliminating some hidden functional variable () and .

We first show the contrapositive of the if part. Suppose is not F-identifiable with respect to ; then, there exist two CBNs ( and ) that induce distributions () such that but . Let and be the results of eliminating from and , respectively; the two CBNs attain the same marginal distribution on but different causal effects according to Theorems A1 and A2. Hence, is not F-identifiable with respect to either.

Next, we show the contrapositive of the only–if part. Suppose is not F-identifiable with respect to ; then, there exist two CBNs ( and ) that induce distributions () such that but . We can obtain and by, again, considering the construction method from the proof of Theorem 1, where we assign a one-to-one mapping for T and adopt the CPTs from and for the children of T. This way, and become the results of eliminating T from the constructed and , respectively. Since , according to Theorem A1 and according to Theorem A2. Hence, is not F-identifiable with respect to either. □

Proof of Theorem 3.

Since we only functionally eliminate variables that have observed parents, it is guaranteed that each has observed parents when it is eliminated. By induction, it suffices to show that is F-identifiable with respect to iff it is F-identifiable with respect to , where is the result of eliminating a single functional variable () with observed parents from G, , and .

We first show the contrapositive of the if part. Suppose is not F-identifiable with respect to ; then, there exist two CBNs ( and ) that induce distributions , where but . Let and be the results of eliminating Z from and , respectively; the two CBNs induce the same marginal distribution () according to Theorem A1 but different causal effects () according to Theorem A2. Hence, is not F-identifiable with respect to .

We now consider the contrapositive of the only–if part. Suppose is not F-identifiable with respect to ; then, there exist two CBNs ( and ) that induce distributions () such that but . We, again, consider the construction method from the proof of Theorem 1, which produces two CBNs ( and ). Moreover, and are the results of eliminating Z from and , respectively. It is guaranteed that the two constructed CBNs produce different causal effects () according to Theorem A2. We need to show that and induce the same distribution over variables of . Consider any instantiation of . Since and assign the same one-to-one mapping () between Z and its parents in G, it is guaranteed that the probabilities are , except for the single state () where , where is the parent instantiation of Z, consistent with . By construction, for every instantiation () of hidden variables (). Similarly, for every instantiation . It then follows that . This means is not F-identifiable with respect to either. □

- Proof of Theorem 4

Lemma A2.

Let G be a causal graph, be its observed variables, and be its functional variables. Let Z be a non-descendant of that has at least one hidden parent; then, a causal effect is F-identifiable with respect to iff it is F-identifiable with respect to .

Proof.

Let denote the set expressed as . The only–if part holds immediately due to the fact that every distribution that can possibly be induced from can also be induced from . Next, we consider the contrapositive of the if part. Suppose a causal effect is not F-identifiable with respect to ; then, there exist two CBNs ( and ) that induce distributions () such that but . Next, we construct and , which constitute an example of unidentifiability with respect to . In particular, the CPTs for Z need to be functional in the constructed CBNs.

Without losing generality, we show the construction of from , which involves two steps (the construction of from follows the same procedure). Let be the parents of Z. The first step constructs a CBN based on the known method that transforms any (non-functional) CPT into a functional CPT. This is done by adding an auxiliary hidden root parent (U) for Z whose states correspond to the possible functions between and Z. The CPTs for U and Z are assigned accordingly such that , where and are the constructed CPTs in (Each state (u) of U corresponds to a function (), where is mapped to some state of Z for each instantiation (). Thus, variable U has states, since there is a total of possible functions from to Z. For each instantiation , the functional CPT for Z is defined as if and otherwise. The CPT for U is assigned as ). It follows that and induce the same distribution over , since . The causal effect is also preserved, since the summing out of U is independent of other CPTs in the mutilated CBN for .
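The first step above can be illustrated with a minimal Python sketch. The helper names (`functionalize`, `marginalize_U`) and the flattening of the parents of Z into a single index are assumptions made for illustration. The CPT for U is taken to be the product, over parent instantiations, of the original CPT entries selected by the corresponding function; this is one standard choice and is assumed here rather than taken from the text.

```python
import itertools
import math

def functionalize(cpt):
    """Turn a CPT for Z into a functional CPT plus a root variable U.

    cpt[p][z] = Pr(Z = z | P = p), with the parents of Z flattened to one index p.
    Returns (funcs, f_U, f_Z): funcs[u][p] is the state of Z that function u
    assigns to parent instantiation p, f_U[u] is the prior of U, and
    f_Z(z, p, u) is the functional CPT of Z given (P, U).
    """
    n_p, n_z = len(cpt), len(cpt[0])
    # One state of U per function from parent instantiations to states of Z.
    funcs = list(itertools.product(range(n_z), repeat=n_p))
    # Assumed prior: product of the original entries picked out by the function.
    f_U = [math.prod(cpt[p][g[p]] for p in range(n_p)) for g in funcs]

    def f_Z(z, p, u):
        return 1.0 if funcs[u][p] == z else 0.0

    return funcs, f_U, f_Z

def marginalize_U(f_U, f_Z, n_p, n_z):
    """Sum U out of f_U * f_Z to get back a CPT for Z given P."""
    return [[sum(f_U[u] * f_Z(z, p, u) for u in range(len(f_U)))
             for z in range(n_z)] for p in range(n_p)]

# Example: binary Z with three parent instantiations (made-up numbers).
cpt = [[0.2, 0.8], [0.5, 0.5], [0.9, 0.1]]
funcs, f_U, f_Z = functionalize(cpt)
restored = marginalize_U(f_U, f_Z, n_p=3, n_z=2)
```

In this example U has 2^3 = 8 states, and summing U out of the two constructed CPTs returns the original CPT for Z up to floating-point error, which is the property the first step relies on.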

Our second step involves converting the CBN (constructed in the first step) into the CBN over the original graph (G). Let T be a hidden parent of Z in G. We merge the auxiliary parents (U and T) into a new variable () and substitute it for T in G, i.e., has the same parents and children as T. is constructed as the Cartesian product of U and T. Each state of is represented as a pair , where u is a state of U and t is a state of T. We are ready to assign new CPTs for and its children. For each parent instantiation () of and each state () of , we assign the CPT for in as . Next, consider each child (C) of that has parents () (excluding ). For each instantiation () of and each state of , we assign the CPT for C in as for each state (c). Note that is functional iff is functional. Hence, the CPTs for are all functional in .

We need to show that preserves the distribution on and the causal effect from . Let be the hidden variables in and be the hidden variables in . The distribution on is preserved, since there is a one-to-one correspondence between each instantiation in and each instantiation in , where the two instantiations agree on and are assigned with the same probability, i.e., . Hence, for every instantiation (). The preservation of the causal effect can be shown similarly but on the mutilated CBNs. Thus, preserves both the distribution on and the causal effect from . Similarly, we can construct , which preserves the distribution on and the causal effect from . The two CBNs ( and ) constitute an example of unidentifiability with respect to . □

Proof of Theorem 4.

We prove the theorem by induction. We first order all the functional variables in a bottom-up order. Let denote the ith functional variable in the order and denote the functional variables that are ordered before (including ). It follows that we can go over each in the order and show that a causal effect is F-identifiable with respect to iff it is F-identifiable with respect to according to Lemma A2. Since F-identifiability with respect to is equivalent to identifiability with respect to , we conclude that the causal effect is F-identifiable with respect to iff it is identifiable with respect to . □

- Proof of Theorem 5

The proof of the theorem is organized as follows. We start with a lemma (Lemma A3) that allows us to modify the CPT of a variable when the marginal probability over its parents contains zero entries. We then show a main lemma (Lemma A4) that allows us to reduce F-identifiability to identifiability when all functional variables are observed or have a hidden parent. Finally, we prove the theorem based on the main lemma and previous theorems.

Lemma A3.

Consider two CBNs that have the same causal graph and induce distributions and . Suppose the CPTs of the two CBNs only differ by . Then, iff for all instantiations () of .

Proof.

Let and denote the CPTs for Y in the first and second CBN, respectively. Let be the ancestors of variables in (including ). If we eliminate all variables other than , then we obtain the factor set expressed as for the first CBN and that expressed as for the second CBN. Since all CPTs are the same for variables in , it is guaranteed that . If we further eliminate variables other than from and , we obtain marginal distributions of and , where denotes the projection operation that sums out variables other than from a factor. Hence, , which concludes the proof. □

Lemma A4.

If a causal effect is F-identifiable with respect to but is not identifiable with respect to , then there must exist at least one functional variable that is hidden and whose parents are all observed.

Proof.

The lemma is the same as saying that if every functional variable is observed or has a hidden parent, then F-identifiability is equivalent to identifiability. We go over each functional variable () in a bottom-up order () and prove the following inductive statement: A causal effect () is F-identifiable with respect to iff it is F-identifiable with respect to , where is a subset of variables in that are ordered before (and including ) in . Note that and F-identifiability with respect to collapses into identifiability with respect to .

The if part follows from the definitions of identifiability and F-identifiability. Next, we consider the contrapositive of the only–if part. Let Z be the functional variable in that is considered in the current inductive step. Let and be the two CBNs inducing distributions and , which constitute the unidentifiability, i.e., and . Our goal is to construct two CBNs ( and ) that induce distributions and and contain functional CPTs for Z such that and . Suppose Z has a hidden parent; we directly employ Lemma A2 to construct the two CBNs. Next, we consider the case when Z is observed and has observed parents. By default, we use to to denote the CPTs for Z in and .

The following three steps are considered to construct an instance of unidentifiability.

- First Step: We construct and by modifying the CPTs for Z. Let be the parents of Z in G. For each instantiation () of where , we modify entries and for CPTs and in and as follows. Since , there exists an instantiation () such that . Without losing generality, assume . Since is computed as the marginal probability of in the mutilated CBN for , it can be expressed in the form of a network polynomial as shown in [38,47]. If we treat the CPT entries of as unknown, then we can write as follows: where are constants and are the states of variable Z. Similarly, we can write as follows:

Let be the maximum value among and be the minimum value among ; our construction method assigns and . By construction, it is guaranteed that , where and denote the causal effects under the updated CPTs and , respectively. We repeat the above procedure for all , where , which yields the new CBNs ( and ) in which and are functional whenever . Next, we show that and (with the updated and ) constitute an example of unidentifiability. , since for the particular instantiation (). We are left to show that the distributions of and induced by and are the same over the observed variables (). Consider each instantiation () of and of , where is consistent with . If , then according to Lemma A3; thus, . Otherwise, , since none of the CPT entries consistent with was modified.
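The max/min assignment in this step rests on a simple fact: an expression that is linear in the entries of one CPT row attains its extreme values when that row is a functional (0/1) distribution. The following Python sketch illustrates this on made-up coefficients. It assumes the causal effect has the form of a constant plus a weighted sum of the row's entries, and that the first CBN is the one with the larger causal effect; the names `functional_extreme` and `effect` are hypothetical.

```python
def functional_extreme(coeffs, maximize):
    """Return a 0/1 distribution placing all mass on the largest (or smallest) coefficient."""
    pick = max if maximize else min
    best = pick(range(len(coeffs)), key=lambda i: coeffs[i])
    return [1.0 if i == best else 0.0 for i in range(len(coeffs))]

def effect(const, coeffs, theta):
    """Evaluate const + sum_i coeffs[i] * theta[i], linear in the CPT row theta."""
    return const + sum(a * t for a, t in zip(coeffs, theta))

# Made-up coefficients and CPT rows for the two CBNs (illustration only).
alpha0, alphas, theta1 = 0.10, [0.3, 0.5, 0.2], [0.2, 0.3, 0.5]
beta0, betas, theta2 = 0.05, [0.10, 0.40, 0.25], [0.5, 0.2, 0.3]

old1 = effect(alpha0, alphas, theta1)   # larger causal effect before the update
old2 = effect(beta0, betas, theta2)     # smaller causal effect before the update

new_theta1 = functional_extreme(alphas, maximize=True)   # push the first effect up
new_theta2 = functional_extreme(betas, maximize=False)   # push the second effect down
new1 = effect(alpha0, alphas, new_theta1)
new2 = effect(beta0, betas, new_theta2)
```

Since the first effect can only increase and the second can only decrease, the strict gap between them survives the update while both rows become functional.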