Abstract

Multi-hop networks have become popular network topologies in various emerging Internet of Things (IoT) applications. Batched network coding (BNC) is a solution for reliable communication in such networks with packet loss. By grouping packets into small batches and restricting recoding to the packets belonging to the same batch, BNC has much smaller computational and storage requirements at intermediate nodes than a direct application of random linear network coding. In this paper, we discuss a practical recoding scheme called blockwise adaptive recoding (BAR), which learns the latest channel knowledge from short observations so that it can adapt to fluctuations in channel conditions. Due to the low computational power of remote IoT devices, we focus on practical concerns such as how to implement efficient BAR algorithms. We also design and investigate feedback schemes for BAR under imperfect feedback systems. Our numerical evaluations show that BAR achieves a significant throughput gain for small batch sizes compared with existing baseline recoding schemes. More importantly, this gain is insensitive to inaccurate channel knowledge. This encouraging result suggests that BAR is suitable for practical use, as the exact channel model and its parameters may be unknown and may change from time to time.

1. Introduction

Noise, interference and congestion are common causes of packet loss in network communications. Usually, a packet has to travel through multiple hops before it arrives at the destination node. Traditionally, the intermediate nodes apply the store-and-forward strategy. To maintain reliable communication, retransmission is a common practice: a feedback mechanism lets a network node report that a packet has been lost. However, due to the delay and bandwidth consumption of the feedback packets, retransmission schemes come at the cost of degraded system performance.

In the era of the Internet of Things (IoT), there is a diversity of devices and network topologies. Embedded devices and microcomputers have been heavily deployed due to their mobility, lightweight design, and low power consumption. Multi-hop wireless networks have become a common network topology, which highlights the issue of reliable transmission, as wireless links are more vulnerable to packet loss. The packet loss at each link accumulates, so the chance of successfully receiving a packet at the destination drops exponentially with the number of hops. Fountain codes, such as those in [1,2,3], can recover the lost packets without feedback because of their ratelessness property. However, the throughput still degrades quickly if there is packet loss at each network link, unless link-by-link feedback and retransmission are adopted.

1.1. Network Coding-Based Approaches

Random linear network coding (RLNC) [4,5], a simple realization of network coding [6,7,8], can achieve the capacity of multi-hop networks with packet loss even without the need for feedback [9,10]. Unfortunately, a direct application of RLNC induces an enormous overhead for the coefficient vectors, as well as high computational and storage costs in network coding operations at intermediate nodes, where the intermediate nodes are usually routers or embedded devices with low computational power and storage space.

Generation-based RLNC was proposed in [11] to resolve these issues. The input packets of the file are partitioned into multiple subsets called the generations, and RLNC is applied to each generation independently. This approach, however, cannot achieve an optimal theoretical rate. Practical concerns and solutions have been further investigated to improve this type of RLNC, such as decoding delay and complexity [12,13,14,15,16,17,18,19,20], packet size [21,22,23,24,25], and coefficient overhead [26,27,28].

Instead of partitioning into disjoint subsets, overlapped subsets were investigated in [29,30,31,32]. To further reduce the computational costs, the use of RLNC was restricted to small subsets of coded packets generated from the input packets in [33,34,35,36,37,38]. Example codes used for generating the coded packets include LDPC and fountain codes. This combination of coding theory and network coding is another variant of RLNC called batched network coding (BNC). BATS codes [38,39], a class of BNC, have a close-to-optimal achievable rate where the achievable rate is upper bounded by the expectation of the rank distribution of the batch transfer matrices that model the end-to-end network operations (packet erasures, network coding operations, etc.) on the batches [40]. This hints that the network coding operations, also known as recoding, have an impact on the throughput of BNC.

1.2. Challenges of Recoding in Practice

Baseline recoding is the simplest recoding scheme which generates the same number of recoded packets for every batch. Due to its simple and deterministic structure, baseline recoding appears in many BNC designs and analyses, such as [41,42,43,44,45,46]. However, the throughput of baseline recoding is not optimal with finite batch sizes [47]. The idea of adaptive recoding, aiming to outperform baseline recoding by generating different numbers of recoded packets for different batches, was proposed in [47] without truly optimizing the numbers. Two adaptive recoding optimization models for independent packet loss channels were then formulated independently in [48,49]. A unified adaptive recoding framework was proposed in [50], subsuming both optimization models and supporting other channel models under certain conditions.

Although adaptive recoding can be applied in a distributed manner with local network information, obtaining accurate local information is a challenge when deploying adaptive recoding in real-world scenarios. Adaptive recoding requires two pieces of information: the distribution of the information remaining in the received batches, and the channel condition of the outgoing link.

The first piece of information may change over time if the channel condition of the incoming link varies. One reason for the variation is that the link quality can be affected by interference from users of other networks around the network node. We proposed a simple way to adapt to this variation in [49]: grouping a few batches into a block and observing the rank distribution of the received batches in this block. This approach was later called blockwise adaptive recoding (BAR) in [51,52].

The second piece of information may also vary from time to time. In some scenarios, such as deep-space [53,54,55] and underwater communications [56,57,58], feedback is expensive or unavailable; therefore, a feedbackless network is preferred. Without feedback, we cannot update our knowledge of the channel condition of the outgoing link. We may assume an unchanged channel condition and measure information such as the packet loss rate of the channel beforehand; however, this measurement can be inaccurate due to observational errors or precision limits.

1.3. Contributions

In this paper, we focus on the practical design of applying BAR in real-world applications. Specifically, we answer the following questions in this paper:

- How does the block size affect the throughput?

- Is BAR sensitive to an inaccurate channel condition?

- How can one calculate the components of BAR and solve the optimization efficiently?

- How can one make use of link-by-link feedback if it is available?

The first question is related to the trade-off between throughput and latency: a larger block induces a longer delay but gives a higher throughput. We show by numerical evaluations that a small block size can already give a significant throughput gain compared with baseline recoding.

For the second question, we demonstrate that BAR, when designed with an independent packet loss model, still performs very well on channels with dependent packet loss. We also show that BAR is insensitive to an inaccurate packet loss rate. This is an encouraging result, as it suggests that applying BAR in real-world applications is feasible.

The third question is important in practice, as BAR is supposed to run at network nodes, which are usually routers or IoT devices with limited computational power that may also need to handle a huge amount of network traffic. Furthermore, whenever we update the knowledge of the incoming link from a short observation, we need to recalculate the components of BAR and solve the optimization problem again. In light of this, we want to reduce the number of computations to improve the reaction time and relieve congestion. We answer this question by proposing an on-demand dynamic programming approach to build the components, together with some implementation techniques that speed up the algorithm for BAR.

Lastly, for the fourth question, we consider both a perfect feedback system (e.g., the feedback passes through a side-channel with no packet loss) and a lossy feedback system (e.g., the feedback uses the reverse direction of the lossy channel for data transmission). We investigate a few ways to estimate the packet loss rate and show that the throughput can be further boosted by using feedback. Furthermore, a rough estimation is sufficient to keep up with the variation in the channel condition. In other words, unless another application requires a more accurate estimation of the packet loss rate, we may use an estimator with low computational cost, e.g., the maximum likelihood estimator.

1.4. Paper Organization and Nomenclature

The paper is organized as follows. We first formulate BAR in Section 2. Then, we discuss the implementation techniques for solving BAR efficiently and evaluate the throughput of different block sizes in Section 3. In Section 4, we demonstrate that BAR is insensitive to inaccurate channel models and investigate the use of feedback mechanisms. Lastly, we conclude the paper in Section 5.

Some specific terminology and notations appear frequently throughout the paper. We summarize some of the important terminology and frequently used notations in Table 1 and Table 2, respectively.

Table 1.

Terminology used for batched network coding (BNC).

Table 2.

Frequently used notations in this paper.

2. Blockwise Adaptive Recoding

In this section, we briefly introduce BNC and then formulate BAR.

2.1. Network Model

As some intermediate nodes may be hardware-implemented routers or not easily reachable for an upgrade, it is not required to deploy a BNC recoder at every intermediate node. The nodes that do not deploy a recoder are transparent to the BNC network as no network coding operations are performed. In the following text, we only consider intermediate nodes that have deployed BNC recoders.

It is not practical to assume that every intermediate node knows the information of the whole network; thus, a distributed scheme that only requires local information, e.g., the statistics of the incoming batches and the channel condition of the outgoing link, is desirable. In a general network, there may be more than one possible outgoing link to reach the destination. We can assign one recoder or one management unit to each outgoing link at an intermediate node [59,60]. In this case, we need a constraint to limit the number of recoded packets of certain batches sent via each outgoing link; the details are discussed in Section 2.4. In other words, we consider each route from the source to the destination separately as a line network.

Line networks are the fundamental building blocks of a general network: a recoding scheme for line networks can be extended to general unicast networks and certain multicast networks [38,48]. A line network is a sequence of network nodes where the network links only exist between two neighbouring nodes. An example of a line network is illustrated in Figure 1. In this paper, we only consider line networks in our numerical evaluations.

Figure 1.

A three-hop line network. Network links only exist between two neighbouring nodes.

2.2. Batched Network Coding

Suppose we want to send a file from a source node to a destination node through a multi-hop network. The file is divided into multiple input packets, where each packet is regarded as a vector over a fixed finite field. A BNC has three main components: the encoder, the recoder and the decoder.

The encoder of a BNC is applied at the source node to generate batches from the input packets, where each batch consists of a small number of coded packets. Recently, a reinforcement learning approach to optimize the generation of batches was proposed in [61]. Nevertheless, batches are commonly generated using the traditional approach as follows. To generate a batch, the encoder samples a predefined degree distribution to obtain a degree, where the degree is the number of input packets that constitute the batch. Depending on the application, there are various ways to formulate the degree distribution [62,63,64,65]. According to the degree, a set of packets is chosen randomly from the input packets. The size of the input packets may be obtained via certain optimizations, such as in [66], to minimize the overhead. Each packet in the batch is formed by taking random linear combinations on the chosen set of packets. The encoder generates M packets per batch, where M is known as the batch size.

Each packet in a batch has a coefficient vector attached to it. Two packets in a batch are linearly independent of each other if and only if their coefficient vectors are linearly independent. Immediately after a batch is generated, the packets within it are linearly independent of each other; this is accomplished by suitably choosing the initial coefficient vectors [59,67].

A recoder is applied at each intermediate node, performing network coding operations on the received batches to generate recoded packets. This procedure is known as recoding. Some packets of a batch may be lost when they pass through a network link. Each recoded packet of a batch is formed by taking a random linear combination of the received packets in a given batch. The number of recoded packets depends on the recoding scheme. For example, baseline recoding generates the same number of recoded packets for every batch. Optionally, we can also apply a recoder at the source node so that we can have more than M packets per batch at the beginning. After recoding, the recoded packets are sent to the next network node.
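To make the roles of the coefficient vectors and of recoding concrete, the following Python sketch works over GF(2), where addition is XOR, instead of the larger fields commonly used in practice; this choice is an assumption made only to keep the arithmetic short. Packets are modelled as (coefficient vector, payload) pairs of Python integers. The encoder attaches the unit vector e_k to the k-th packet of a batch so that the M packets start out linearly independent, and the recoder forms random linear combinations of whichever packets of the batch were received. The names `encode_batch`, `recode` and `gf2_rank`, as well as the batch size, are hypothetical, and the degree is passed in rather than sampled from a degree distribution.

```python
import random

M = 4  # batch size (hypothetical value for this sketch)


def gf2_rank(vectors):
    """Rank over GF(2) of bit-vectors stored as Python ints (Gaussian elimination)."""
    pivots = {}  # leading-bit position -> basis vector with that leading bit
    for v in vectors:
        while v:
            lead = v.bit_length() - 1
            if lead not in pivots:
                pivots[lead] = v
                break
            v ^= pivots[lead]  # clears the leading bit; only lower bits change
    return len(pivots)


def encode_batch(inputs, degree, rng):
    """Encode one batch of M packets from `degree` randomly chosen input packets.

    The k-th packet gets the unit coefficient vector e_k (bit k), so the M
    packets of a fresh batch are linearly independent by construction.
    """
    chosen = rng.sample(inputs, degree)
    batch = []
    for k in range(M):
        payload = 0
        for x in chosen:
            if rng.getrandbits(1):  # random GF(2) coefficient
                payload ^= x
        batch.append((1 << k, payload))
    return batch


def recode(received, n_out, rng):
    """Generate n_out recoded packets, each a random GF(2) combination of the
    received packets of one batch; coefficient vectors are combined alongside."""
    out = []
    for _ in range(n_out):
        coef, payload = 0, 0
        for c, y in received:
            if rng.getrandbits(1):
                coef ^= c
                payload ^= y
        out.append((coef, payload))
    return out
```

The rank of a batch at a node is then `gf2_rank` of the coefficient vectors of its received packets. Over such a small field, a recoded packet is often linearly dependent on earlier ones (with n received packets whose coefficient vectors are independent, a random GF(2) combination is the zero vector with probability 2^-n), which is one reason larger fields are preferred.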

At the destination node, a decoder is applied to recover the input packets. Depending on the specific BNC, we can use different decoding algorithms, such as Gaussian elimination, belief propagation and inactivation [68,69].

2.3. Expected Rank Functions

The rank of a batch at a network node is defined as the number of linearly independent packets remaining in the batch, which measures the amount of information carried by the batch. Adaptive recoding aims to maximize the sum of the expected values of the rank distributions of the batches arriving at the next network node. For simplicity, we call this expected value the expected rank.

For batch b, we denote its rank by $r_b$ and the number of recoded packets to be generated by $t_b$. The expectation of the rank of b at the next network node, denoted as $E(r_b, t_b)$, is known as the expected rank function. We have

$$E(r, t) = \sum_{i=0}^{t} \Pr(X_t = i) \sum_{j=0}^{\min(i, r)} j \, \zeta_j^{i,r}, \qquad (1)$$

where $X_t$ is the random variable of the number of packets of a batch received by the next network node when we send t packets for this batch at the current node, and $\zeta_j^{i,r}$ is the probability that a batch of rank r at the current node with i received packets at the next network node has rank j at the next network node. The exact formulation of $\zeta_j^{i,r}$ can be found in [38], which is $\zeta_j^{i,r} = \frac{\zeta_j^{i} \zeta_j^{r}}{\zeta_j^{j} q^{(i-j)(r-j)}}$, where q is the field size for the linear algebra operations and $\zeta_j^{m} = \prod_{k=0}^{j-1} (1 - q^{-m+k})$. It is convenient to use $q = 2^8$ in practice, as each symbol in this field can be represented by 1 byte. For a sufficiently large field size, say $q = 2^8$, $\zeta_j^{i,r}$ is very close to 1 if $j = \min(i, r)$, and very close to 0 otherwise. That is, we can approximate $\zeta_j^{i,r}$ by $\delta_{j, \min(i, r)}$, where δ is the Kronecker delta. This approximation has also been used in the literature, see, e.g., [45,70,71,72,73,74,75,76].
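As a numerical sanity check of the closed form $\zeta_j^{i,r} = \zeta_j^{i}\zeta_j^{r} / (\zeta_j^{j} q^{(i-j)(r-j)})$ with $\zeta_j^{m} = \prod_{k=0}^{j-1}(1 - q^{-m+k})$, the following Python sketch evaluates it in floating point. This expression equals the fraction of $i \times r$ matrices over GF(q) that have rank j, i.e., it assumes the received packets are uniformly random linear combinations. Hence the values over $j = 0, \ldots, \min(i, r)$ should sum to 1, and for $q = 2^8$ nearly all of the mass should sit at $j = \min(i, r)$, which is what justifies the Kronecker delta approximation. The function names are hypothetical.

```python
from math import prod


def zeta_m(j: int, m: int, q: int) -> float:
    """zeta_j^m = prod_{k=0}^{j-1} (1 - q^(k - m)); the empty product (j = 0) is 1."""
    return prod(1.0 - float(q) ** (k - m) for k in range(j))


def zeta(j: int, i: int, r: int, q: int) -> float:
    """Probability that a batch of rank r has rank j at the next node after it
    receives i uniformly random linear combinations over GF(q)."""
    if j < 0 or j > min(i, r):
        return 0.0
    num = zeta_m(j, i, q) * zeta_m(j, r, q)
    # q^(-(i-j)(r-j)) uses a negative exponent to avoid float overflow for large q
    return num / zeta_m(j, j, q) * float(q) ** (-(i - j) * (r - j))
```

For example, with $q = 2^8$ and $i = r = 16$, the probability of full rank is above 0.99, while the probability of a rank deficiency of one is below 0.01.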

Besides generating all recoded packets by taking random linear combinations, systematic recoding [39,47,59,67], which treats the received packets as recoded packets, can be applied to save computational time. Systematic recoding achieves a performance nearly indistinguishable from that of generating all recoded packets by random linear combinations [39]. Therefore, (1) can also be used to approximate the expected rank functions for systematic recoding accurately.

For the independent packet loss model with packet loss rate p, we have $X_t \sim \mathrm{B}(t, 1-p)$, a binomial distribution. If $p = 0$, then a store-and-forward technique can guarantee the maximal expected rank. If $p = 1$, then no matter how many packets we transmit, the next network node receives no packets. Thus, we assume $0 < p < 1$ in this paper. It is easy to prove that the results in this paper are also valid for $p = 0$ or 1 when we define $0^0 = 1$, a convention in combinatorics under which $p^0$ and $(1-p)^0$ are well-defined with the correct interpretation. In the remaining text, we assume $\zeta_j^{i,r} = \delta_{j, \min(i, r)}$. That is, for the independent packet loss model, we have

$$E(r, t) = \sum_{i=0}^{t} \binom{t}{i} (1-p)^{i} p^{t-i} \min(i, r). \qquad (2)$$

A demonstration of the accuracy of the approximation can be found in Appendix A.
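For the independent packet loss model, the approximated expected rank function $E(r,t) = \sum_{i=0}^{t} \binom{t}{i} (1-p)^i p^{t-i} \min(i, r)$ can be evaluated directly from the binomial probability masses. The Python sketch below (the name `expected_rank` is hypothetical) does exactly that; it is slow compared with a lookup table but convenient as a reference when testing faster implementations. Python evaluates `0.0 ** 0` to `1.0`, which matches the convention $0^0 = 1$ for the boundary cases $p = 0$ and $p = 1$.

```python
from math import comb


def expected_rank(r: int, t: int, p: float) -> float:
    """E(r, t) for the independent packet loss model with loss rate p, where
    zeta_j^{i,r} is approximated by the Kronecker delta: sum_i Pr(X_t = i) min(i, r)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) * min(i, r)
               for i in range(t + 1))
```

The returned values exhibit the properties used later: $E(r, t) = t(1-p)$ whenever $t \le r$, $E(r, t) \le r$, and $E(r, \cdot)$ is non-decreasing and concave in t.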

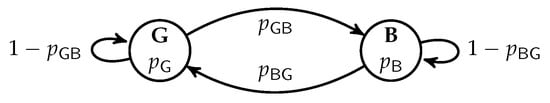

We also consider the expected rank functions for burst packet loss channels modelled by Gilbert–Elliott (GE) models [77,78], which have also been used in other BNC literature such as [52,55,70]. A GE model is a two-state Markov chain, as illustrated in Figure 2. In each state, there is an independent event that decides whether a packet is lost or not. We define $f(i, s, t) = \Pr(X_t = i, S_t = s)$, where $S_t$ is the random variable of the state of the GE model after sending t packets of a batch. By exploiting the structure of the GE model, f can be computed by dynamic programming. Then, we have

$$E(r, t) = \sum_{s} \sum_{i=0}^{t} f(i, s, t) \min(i, r). \qquad (3)$$

Figure 2.

A Gilbert–Elliott (GE) model. In each state, there is an independent event to decide whether a packet is lost or not.
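The dynamic programming over a GE model can be sketched in Python as follows. The conventions are assumptions for illustration and not necessarily those used in the paper: the chain makes one transition before each packet, the packet is then lost with the loss probability of the new state, and `init` is the state distribution before the first packet. The table `f[i][s]` holds $\Pr(X_t = i, S_t = s)$, and the expected rank uses the same approximation $\zeta_j^{i,r} \approx \delta_{j, \min(i, r)}$ as in the independent loss case. The function names are hypothetical.

```python
def ge_distribution(t, trans, loss, init):
    """Return f with f[i][s] = Pr(X_t = i, S_t = s) for a Gilbert-Elliott channel.

    trans[s][s2]: probability of moving from state s to s2 before a packet is sent;
    loss[s]: packet loss probability in state s; init[s]: state distribution
    before the first packet. These conventions are assumptions of this sketch.
    """
    n = len(init)
    f = [list(init)]  # t = 0: no packet has been received yet
    for _ in range(t):
        g = [[0.0] * n for _ in range(len(f) + 1)]
        for i, row in enumerate(f):
            for s, mass in enumerate(row):
                for s2 in range(n):
                    m = mass * trans[s][s2]
                    g[i][s2] += m * loss[s2]            # packet lost
                    g[i + 1][s2] += m * (1 - loss[s2])  # packet received
        f = g
    return f


def ge_expected_rank(r, t, trans, loss, init):
    """E(r, t) = sum over i and s of Pr(X_t = i, S_t = s) * min(i, r)."""
    f = ge_distribution(t, trans, loss, init)
    return sum(mass * min(i, r) for i, row in enumerate(f) for mass in row)
```

Each step touches every entry of the table for every state transition, so a query costs $O(t^2)$ operations for a two-state model, compared with $O(t)$ for the binomial sum of the independent loss model. With the example chain `trans = [[0.9, 0.1], [0.3, 0.7]]`, the distribution `init = [0.75, 0.25]` is stationary, so every packet is lost with the same marginal probability and $E(r, t)$ equals t times the average delivery probability whenever $t \le r$, which makes a convenient sanity check.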

Computing (3) clearly takes more steps than computing (2). A natural question is therefore whether, for burst packet loss channels, the throughput gap between adaptive recoding with (2) and with (3) is small. We demonstrate in Section 4.2 that the gap is indeed small, so (2) can be used while still achieving a good throughput. Hence, our investigation mainly focuses on (2).

In the rest of this paper, we refer to as unless otherwise specified. From [50], we know that when the loss pattern follows a stationary stochastic process, the expected rank function is a non-negative, monotonically increasing concave function with respect to t, which is valid for arbitrary field sizes. Further, for all r. However, we need to calculate the values of or its supergradients to apply adaptive recoding in practice. To cope with this issue, we first investigate the recursive formula for .

We define the probability mass function of the binomial distribution by

For integers and , we define

When , the function is the partial sum of the probability masses of a binomial distribution . The case where is used in the approximation scheme in Section 3 and is discussed in that section.

The regularized incomplete beta function, defined as ([79], Equation 8.17.2), can be used to express the partial sum of the probability masses of a binomial distribution. When , we can apply ([79], Equation 8.17.5) and obtain

Implementations of the regularized incomplete beta function are available for different languages, for example, the GNU Scientific Library [80] for C and C++, and the built-in function betainc in MATLAB. However, most available implementations accept non-negative real parameters and evaluate different queries independently. This is too general for our application, as we only need to query integer points efficiently. In other words, this formula may be sufficient for prototyping or simulation, but it is not efficient enough for real-time deployment on devices with limited computational power. Nevertheless, this formula is useful for proving the following properties:

Lemma 1.

Assume . Let Λ be an index set.

- (a)

- for ;

- (b)

- where the equality holds if and only if or ;

- (c)

- where the equality holds if and only if ;

- (d)

- where the equality holds if and only if ;

- (e)

- for all and any non-negative integer s;

- (f)

- for all and any non-negative integer s such that .

Proof.

See Appendix B. □

With the notation of , we can now write the recursive formula for .

Lemma 2.

, where t and r are non-negative integers.

Proof.

Let be independent and identically distributed Bernoulli random variables, where for all i. When , the i-th packet is received by the next hop.

When we transmit one more packet at the current node, indicates whether this packet is received by the next network node or not. If , i.e., the packet is lost, then the expected rank will not change. If , then the packet is linearly independent from all the already received packets at the next network node if the number of received packets at the next network node is less than r. That is, the rank of this batch at the next network node increases by 1 if . Therefore, the increment of is . Note that . As are all independent and identically distributed, we have . □

The formula shown in Lemma 2 can be interpreted as follows: a newly received packet is linearly independent of all the already received packets with a probability that tends to 1, unless the rank has already reached r. This can also be interpreted as with a probability that tends to 1. The above lemma can be rewritten in a more useful form, as stated below.

Lemma 3.

Let t and r be non-negative integers.

- (a)

- if ;

- (b)

- .

Proof.

See Appendix C. □
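The proof of Lemma 2 shows that sending one more packet increases the expected rank by $(1-p)\Pr(X_t \le r-1)$, the probability that the packet arrives while fewer than r packets have been received. Accumulating these increments gives a simple way to fill a lookup table of $E(r, t)$ for all $0 \le r \le M$ and $0 \le t \le T$, updating the binomial probability masses of $X_t$ one packet at a time. The Python sketch below only illustrates this recursion (the function name and the bound T are hypothetical); it is not the on-demand construction discussed in Section 3.4.

```python
def expected_rank_table(M, T, p):
    """Return table[r][t] = E(r, t) for 0 <= r <= M and 0 <= t <= T under the
    independent loss model, using E(r, t + 1) = E(r, t) + (1 - p) * Pr(X_t <= r - 1)."""
    table = [[0.0] * (T + 1) for _ in range(M + 1)]  # E(r, 0) = 0
    pmf = [1.0]  # X_0 = 0 with probability 1
    for t in range(T):
        # cdf[r] = Pr(X_t <= r - 1); cdf[0] = 0 since a rank-0 batch cannot gain rank
        cdf = [0.0] * (M + 1)
        acc = 0.0
        for r in range(1, M + 1):
            if r - 1 < len(pmf):
                acc += pmf[r - 1]
            cdf[r] = acc
        for r in range(M + 1):
            table[r][t + 1] = table[r][t] + (1 - p) * cdf[r]
        # Binomial pmf of X_{t+1} from that of X_t: lost w.p. p, received w.p. 1 - p
        nxt = [0.0] * (len(pmf) + 1)
        for i, mass in enumerate(pmf):
            nxt[i] += mass * p
            nxt[i + 1] += mass * (1 - p)
        pmf = nxt
    return table
```

The table costs $O(T^2 + MT)$ operations and avoids evaluating each $E(r, t)$ from scratch. For $r = 1$, the recursion telescopes to $E(1, t) = 1 - p^t$, the probability that at least one packet arrives.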

2.4. Blockwise Adaptive Recoding

The idea of adaptive recoding was presented in [47], and then independently formulated in [48,49]. The former formulation imposes an artificial upper bound on the number of recoded packets and then applies a probabilistic approach to avoid integer programming. The latter investigates the properties of the integer programming problem and proposes efficient algorithms to tackle this problem directly. These two formulations were unified in [50] as a general recoding framework for BNC. This framework requires the distribution of the ranks of the incoming batches, also called the incoming rank distribution. This distribution, however, is not known in advance and can change continually due to environmental factors. A rank distribution inference approach was proposed in [81], but its long solving time hinders its application in real-time scenarios.

A more direct way to obtain up-to-date statistics is to use the ranks of the few latest batches, a trade-off between a latency of a few batches and the throughput of the whole transmission. This approach was proposed in [49], and later called BAR in [51,52]. In other words, BAR is a recoding scheme which groups batches into blocks and jointly optimizes the number of recoded packets for each batch in the block.

We first describe the adaptive recoding framework and its relation to BAR. We fix an intermediate network node. Let be the incoming rank distribution, the number of recoded packets to be sent for a batch of rank r, and the average number of recoded packets to be sent per batch. The value of is a non-negative real number that is interpreted as follows. Let be the fractional part of . There is an chance to transmit recoded packets, and a chance to transmit packets. That is, the fraction is the probability of transmitting one more packet. Similarly, is defined as the linear interpolation by . The framework maximizes the expected rank of the batches at the next node, which is the optimization problem:

For BAR, the incoming rank distribution is obtained from the recently received few batches. Let a block be a set of batches. We assume that the blocks at a network node are mutually disjoint. Suppose the node receives a block . For each batch , let and be the rank of b and the number of recoded packets to be generated for b, respectively. Let be the number of recoded packets to be transmitted for the block . The batches of the same rank are considered individually with the notations and , and the total number of packets to be transmitted for a block is finite; therefore, we assume for each is a non-negative integer, and is a positive integer. By dividing both the objective and the constraint of the framework by , we obtain the simplest formulation of BAR:

To support scenarios with multiple outgoing links for the same batch, e.g., load balancing, we may impose an upper bound on the number of recoded packets per batch. Let be a non-negative integer that represents the maximum number of recoded packets allowed to be transmitted for the batch b. This value may depend on the rank of b at the node. Subsequently, we can formulate the following optimization problem based on (8):

Note that we must have . In the case where this inequality does not hold, we can include more batches in the block to resolve this issue. When is sufficiently large for all , (9) degenerates into (8).
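For very small blocks, (9) can be solved by exhaustive search, which is handy as a reference when testing faster solvers. The Python sketch below assumes the independent packet loss model with the Kronecker delta approximation; `ranks[b]` is the rank of batch b, `t_max[b]` its upper bound on recoded packets, and `t_block` the number of recoded packets for the whole block (all names are hypothetical). It enumerates every allocation within the bounds, keeps those that use exactly `t_block` packets, and returns the best one, or `(None, None)` when the bounds leave no feasible allocation.

```python
from itertools import product
from math import comb


def expected_rank(r, t, p):
    """E(r, t) under the independent loss model, with zeta approximated by a Kronecker delta."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) * min(i, r) for i in range(t + 1))


def solve_bar_bruteforce(ranks, t_block, t_max, p):
    """Exhaustively maximize sum_b E(ranks[b], t[b]) subject to sum(t) == t_block
    and 0 <= t[b] <= t_max[b]. Returns (allocation, value) or (None, None)."""
    best_t, best_val = None, None
    for t in product(*(range(m + 1) for m in t_max)):
        if sum(t) != t_block:
            continue
        val = sum(expected_rank(r, tb, p) for r, tb in zip(ranks, t))
        if best_val is None or val > best_val:
            best_t, best_val = list(t), val
    return best_t, best_val
```

The running time grows exponentially with the block size, so this is only a testing aid. For instance, with ranks 1 and 4, a budget of 6 packets and p = 0.2, the optimum sends 1 and 5 recoded packets; capping the second batch at 4 packets shifts the optimum to 2 and 4.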

The above optimization only depends on the local knowledge at the node. The batch rank can be known from the coefficient vectors of the received packets of batch b. As a remark, the value of can affect the stability of the packet buffer. For a general network transmission scenario with multiple transmission sessions, the value of can be determined by optimizing the utility of certain local network transmissions [82,83].

Though we do not discuss such optimizations in this paper, we consider solving BAR with a general value of .

On the other hand, note that the solution to (9) may not be unique; we only need one solution for recoding purposes. In general, (9) is a non-linear integer programming problem. A linear programming variant of (9) can be formulated by using a technique in [81]. However, such a formulation has a huge number of constraints and requires the values of for all and all possible t to be calculated beforehand. We defer the discussion of this formulation to Appendix H.

A network node keeps receiving packets until it has received enough batches to form a block $\mathcal{B}$. A packet buffer is used to store the received packets. Then, the node solves (9) to obtain the number of recoded packets for each batch in the block, i.e., $t_b$ for every $b \in \mathcal{B}$. The node then generates and transmits $t_b$ recoded packets for every batch $b \in \mathcal{B}$. Meanwhile, the network node continues to receive new packets. After all the recoded packets for the block are transmitted, the node drops the block from its packet buffer and repeats the procedure for the next block.

We do not specify the transmission order of the packets. Within the same block, the ordering of packets can be shuffled to combat burst loss, e.g., [43,44,52]. Such shuffling reduces the burst error length experienced by each batch, so that the packet loss events are more “independent” of each other. On the other hand, we do not specify the rate control mechanism, as it should be a separate module in the system. The effect of rate control can be reflected in BAR by choosing suitable expected rank functions, e.g., by modifying the parameters of the GE model. BAR is only responsible for deciding the number of recoded packets per batch.
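One simple way to shuffle the packets of a block, shown here as an assumption rather than the scheme of [43,44,52], is a round-robin interleaver: once BAR has fixed the number of recoded packets of every batch, the node cycles through the batches and sends one packet of each in turn. A burst of consecutive losses is then spread over several batches instead of hitting a single batch. The function name `interleave` is hypothetical.

```python
def interleave(num_packets):
    """Round-robin transmission order for one block.

    num_packets[b] is the number of recoded packets of batch b (as decided by BAR).
    Returns (batch index, packet index) pairs, cycling over the batches that still
    have packets to send, so consecutive packets belong to different batches
    whenever possible.
    """
    order = []
    for k in range(max(num_packets, default=0)):
        for b, n in enumerate(num_packets):
            if k < n:
                order.append((b, k))
    return order
```

For instance, `interleave([2, 3, 1])` yields `[(0, 0), (1, 0), (2, 0), (0, 1), (1, 1), (1, 2)]`: only at the tail, where a single batch still has packets left, do consecutive packets come from the same batch.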

The size of a block depends on the application. For example, if an interleaver is applied to L batches, we can group the L batches as a block. When a block contains only one batch, the only solution assigns all the recoded packets of the block to that batch, which degenerates into baseline recoding. Therefore, we need a block size of at least 2 to benefit from the throughput enhancement of BAR. Intuitively, it is better to optimize (9) with a larger block size. However, the block size affects the transmission latency as well as the computational and storage burdens at the network nodes. Note that we cannot determine the exact rank of each batch in a block until the previous network node finishes sending all the packets of this block. That is, we need to wait for the previous network node to send the packets of all the batches in a block before we can solve the optimization problem. Numerical evaluations in Section 3.5 show that already has an obvious advantage over , and it may not be necessary to use a block size larger than eight.

3. Implementation Techniques for Blockwise Adaptive Recoding

In this paper, we focus on the implementation and performance of BAR. Due to the non-linear integer programming structure of (9), we need to exploit certain properties of the model to solve it efficiently. The authors of [49] proposed greedy algorithms to solve (9), which were then generalized in [50] to solve (7). The greedy algorithms in [50] have a potential issue when certain probability masses in the incoming rank distribution are too small, as they may take too many iterations to find a feasible solution. The number of iterations is in the order of , depending on the solution to (7). That is, we cannot establish a bound on the time complexity, as the incoming rank distribution can be arbitrary.

For BAR, we do not have this issue because the total number of recoded packets in a block is fixed.

In this section, we first discuss the greedy algorithm to solve (9) in Section 3.1. Then, we propose an approximation scheme in Section 3.2, and discuss its application to speed up the solver for practical implementations in Section 3.3. The algorithms in Section 3.1 and Section 3.3 are similar to those in [50], but they are modified to optimize BAR. Note that the algorithms in [50] are generalized from [49], so the correctness of the aforementioned modified algorithms is inherited directly from the generalized proofs in [50]. For the approximation scheme in Section 3.2, which did not appear in [50], a more detailed discussion is provided in this section.

The algorithms in this section frequently query and compare the values of for different and . We suppose a lookup table is constructed so that the queries can be performed in $O(1)$ time. The table is reusable if the packet loss rate of the outgoing link is unchanged. We only consider the subset of the domain because

- the maximum rank of a batch is M;

- any cannot exceed as .

The case will be used by our approximation scheme so we keep it in the lookup table. We can build the table on-demand by dynamic programming, discussed in Section 3.4.

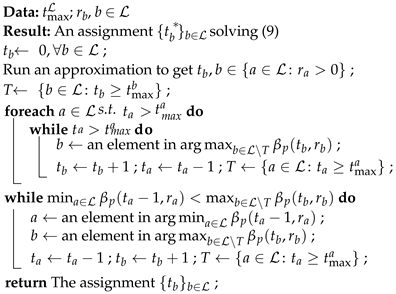

3.1. Greedy Algorithm

We first discuss the case . This condition means that the value of is so small that the node has just enough, or not even enough, time to forward the received linearly independent packets. It is trivial that every satisfying and is a solution to (9), because every such recoded packet gains to the expected rank by Lemma 3(a), where this gain is maximal according to the definition of .

For , we can initialize by for every as every such recoded packet gains the maximal value to the expected rank. After this, the algorithm chooses the batch that can gain the most expected rank by sending one more recoded packet, and assigns one more recoded packet to it. The correctness is due to the concavity of the expected rank functions.

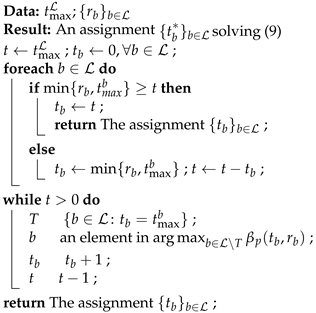

The above initialization eliminates most of the iterations of the algorithm, as in practice the difference between the number of recoded packets and the rank of a batch is usually small. Algorithm 1 is the improved greedy algorithm. Unlike the version in [50], the complexity of Algorithm 1 does not depend on the solution.
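A minimal Python sketch of the greedy procedure described above, under the independent packet loss model, follows; it is not necessarily identical to Algorithm 1, and all names are hypothetical. It starts each batch at the smaller of its rank and its per-batch upper bound, since every packet up to the rank gains the maximal $1-p$. If that already meets the budget, it trims the allocation; otherwise it repeatedly gives one more packet to the batch with the largest marginal gain $E(r_b, t_b + 1) - E(r_b, t_b)$. For brevity, the gains are recomputed from the binomial sum, whereas a practical solver would read them from the lookup table.

```python
import heapq
from math import comb


def expected_rank(r, t, p):
    """E(r, t) under the independent loss model, with zeta approximated by a Kronecker delta."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) * min(i, r) for i in range(t + 1))


def solve_bar_greedy(ranks, t_block, t_max, p):
    """Allocate t_block recoded packets to the batches of one block.

    ranks[b] is the rank of batch b and t_max[b] its upper bound on recoded packets.
    """
    if sum(t_max) < t_block:
        raise ValueError("infeasible: the upper bounds sum to less than t_block")
    # Packets up to the rank each gain the maximal 1 - p, so start there.
    t = [min(r, m) for r, m in zip(ranks, t_max)]
    surplus = sum(t) - t_block
    if surplus >= 0:
        # Budget does not exceed these ranks: any trimmed allocation is optimal.
        for b in range(len(t)):
            cut = min(t[b], surplus)
            t[b] -= cut
            surplus -= cut
        return t
    # Max-heap of marginal gains, stored negated because heapq is a min-heap.
    heap = [(-(expected_rank(r, tb + 1, p) - expected_rank(r, tb, p)), b)
            for b, (r, tb) in enumerate(zip(ranks, t)) if tb < t_max[b]]
    heapq.heapify(heap)
    for _ in range(-surplus):
        _, b = heap[0]  # batch with the largest marginal gain
        t[b] += 1
        if t[b] < t_max[b]:
            gain = expected_rank(ranks[b], t[b] + 1, p) - expected_rank(ranks[b], t[b], p)
            heapq.heapreplace(heap, (-gain, b))  # by concavity the gain can only shrink
        else:
            heapq.heappop(heap)  # batch reached its upper bound
    return t
```

`heapq.heapreplace` pops the top entry and pushes the updated one in a single $O(\log |\mathcal{B}|)$ step. The heap never runs empty inside the loop, because the remaining capacity of the batches in the heap always covers the remaining budget.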

Algorithm 1: Solver for BAR.

Theorem 1.

Algorithm 1 can be run in time.

Proof.

In total, there are iterations in the while loop. The query of can be implemented by using a binary heap. The initialization of the heap, i.e., heapify, takes time, which can be performed outside the loop. Each query in the loop takes time. The update from into , if , takes time. For , we may remove the entry from the heap, which has the same time complexity as the update above. As by assumption, the algorithm never queries an empty heap. Therefore, the overall time complexity is . □

In the algorithm, we assume that a lookup table for is precomputed. The table can be reused unless the outgoing channel condition is updated. We discuss an efficient way to construct the lookup table in Section 3.4, and show in Section 4 that measurement or prediction errors in the loss probability of the outgoing channel have an insignificant effect.

Since the query is run repeatedly and an update follows every query, a binary heap as described in the proof can be used in a real implementation. Note that by Lemma 1(b), , so the update is a decrease-key operation in a max-heap. Consequently, a Fibonacci heap [84] brings no benefit here: its amortized constant-time key update only applies when a key moves towards the root (decrease-key in a min-heap or, equivalently, increase-key in a max-heap).
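The following Python sketch shows the greedy procedure with a binary heap, as described in the proof of Theorem 1. It assumes, following the proof of Lemma 1(d), that the marginal gain (written `delta(t, r, p)` here; our notation) is the binomial partial sum Pr[Binomial(t, 1 - p) <= r - 1], and it computes each value directly instead of reading a precomputed lookup table. Python's `heapq` is a min-heap, so keys are negated. All function and parameter names are hypothetical.

```python
import heapq
from math import comb


def delta(t: int, r: int, p: float) -> float:
    """Assumed marginal gain: Pr[Binomial(t, 1 - p) <= r - 1] (equals 1 when t < r)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def objective(t: list[int], ranks: list[int], p: float) -> float:
    """Sum of marginal gains, assumed proportional to the total expected rank."""
    return sum(delta(i, r, p) for tb, r in zip(t, ranks) for i in range(tb))


def greedy_bar(ranks: list[int], t_max: int, p: float) -> list[int]:
    """Allocate t_max recoded packets among the batches of one (non-empty) block."""
    if t_max <= sum(ranks):
        # Too few slots: any allocation with t_b <= r_b is optimal, so fill in order.
        t, left = [], t_max
        for r in ranks:
            t.append(min(r, left))
            left -= t[-1]
        return t
    # Packets up to the rank of a batch have the maximal gain, so start from t_b = r_b.
    t = list(ranks)
    heap = [(-delta(r, r, p), b) for b, r in enumerate(ranks)]
    heapq.heapify(heap)                      # O(|L|) initialization, outside the loop
    for _ in range(t_max - sum(ranks)):      # one heap query and one update per slot
        _, b = heap[0]                       # batch with the largest marginal gain
        t[b] += 1
        # The key can only drop (Lemma 1(b)): a decrease-key in max-heap terms.
        heapq.heapreplace(heap, (-delta(t[b], ranks[b], p), b))
    return t
```

For example, `greedy_bar([3, 1, 0, 2], 10, 0.2)` spreads the four slots left after the ranks are covered over the batches with the largest gains; the rank-0 batch never receives a slot because its gain is always 0.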

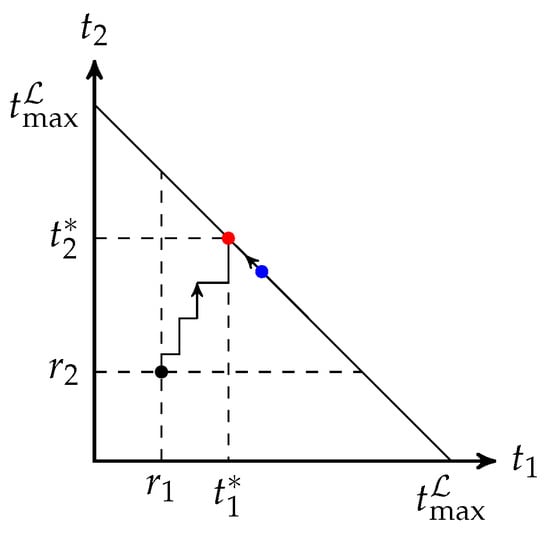

3.2. Equal Opportunity Approximation Scheme

Algorithm 1 increases step by step. From a geometric perspective, the algorithm finds a path from the interior of a compact convex polytope, which models the feasible solutions, to the facet . If we have a method that moves a non-optimal feasible point on towards an optimal point, together with a fast and accurate approximation to (8) or (9), then we can combine them to solve (9) faster than by using Algorithm 1 directly. This idea is illustrated in Figure 3. A generalized tuning scheme based on the algorithm in [49] can be found in [50]. However, [50] does not propose an approximation scheme.

Figure 3.

This figure illustrates the idea of modifying the output of an approximation scheme with two batches, where , and . The red and blue dots represent the optimal and approximate solutions on the facet , respectively. Algorithm 1 starts the search from an interior point , while a modification approach starts the search from the blue dot.

We first give an approximation scheme in this subsection. The approximation is based on an observation about the solution of (8), which does not impose an upper bound on : a batch of higher rank should have more recoded packets transmitted than a batch of lower rank. Unless most are too small, the approximation for (8) is also a good approximation for (9).

Theorem 2.

Proof.

See Appendix D. □

As we cannot generate any linearly independent packets for a batch of rank 0, we have . Therefore, we can exclude batches of rank 0 from before we start the approximation. We define . When , we have for all . A simple way to obtain an approximation is to assign following the guidelines given in Theorem 2:

- for all ;

- for all .

where . In the case where ℓ is not an integer, we can round it up for batches with higher ranks and round it down for those with lower ranks.

The above rules allocate the unassigned packets to batches equally after packets have been assigned to each batch b. Thus, we call this approach the equal opportunity approximation scheme. The steps of this scheme are summarized in Algorithm 2.

Note that this approximation does not require the packet loss rate p. Hence, even when p is unknown, we can still apply this approximation to outperform baseline recoding.
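A Python sketch of the equal opportunity rules follows. It is written from the description above and the case analysis in the proof below, not transcribed from Algorithm 2, whose listing is not reproduced here. It assumes a non-empty block, and it uses sorting for the round-up step, which costs O(|L| log |L|) instead of the linear-time selection discussed next. The function name is hypothetical.

```python
def equal_opportunity(ranks: list[int], t_max: int) -> list[int]:
    """Equal opportunity approximation; the loss rate p is not an input."""
    t = [0] * len(ranks)
    active = [b for b, r in enumerate(ranks) if r > 0]   # rank-0 batches get nothing
    if not active:
        # Whole block lost: every feasible allocation is optimal.
        t[0] = t_max
        return t
    if t_max <= sum(ranks):
        # Forward at most r_b packets per batch, which is optimal in this case.
        left = t_max
        for b in active:
            t[b] = min(ranks[b], left)
            left -= t[b]
        return t
    # Give every active batch r_b packets, then share the remaining slots equally.
    ell, extra = divmod(t_max - sum(ranks), len(active))
    for b in active:
        t[b] = ranks[b] + ell
    # Non-integer share: round up for the `extra` batches with the highest ranks.
    for b in sorted(active, key=lambda i: ranks[i], reverse=True)[:extra]:
        t[b] += 1
    return t
```

Because the sketch never reads p, it can be run before any estimate of the loss rate is available.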

Algorithm 2: Equal opportunity approximation scheme.

Proof.

It is easy to see that Algorithm 2 outputs which satisfies . That is, the output is a feasible solution of (8). Note that , so the assignments and the branches before the last for loop take time in total. The variable L after the first foreach loop equals . Adding one to the number of recoded packets for batches with the highest ranks can be performed in time. Therefore, the overall running time is .

If , i.e., the whole block is lost, then any feasible is a solution, and the optimal objective value is 0. If , then the algorithm terminates with an output satisfying for all , which is an optimal solution. □

For the step that adds 1 to the number of recoded packets of the batches with the highest ranks, worst-case linear time can be achieved by introselect [85], which runs quickselect [86] with a random pivot but switches to the median-of-medians [87] pivot strategy when the selection does not make sufficient progress. We use the selection algorithm to find the r-th largest element and make use of its intermediate steps. In each iteration, one of the following three cases occurs. If the algorithm decides to search the part larger than the pivot, then the discarded part contains none of the largest r elements. If the part smaller than the pivot is selected, then the discarded part belongs to the largest r elements. If the pivot is exactly the r-th largest element, then the part larger than the pivot, together with the pivot, belongs to the largest r elements.
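The selection step can be sketched in Python as below. This is plain randomized quickselect with a three-way partition (to cope with the many equal ranks), not full introselect: the median-of-medians fallback is omitted, so the linear bound holds only in expectation. The comments mark the three cases listed above; the function name is hypothetical.

```python
import random


def top_r_indices(values, r, rng=None):
    """Indices of the r largest values (ties broken arbitrarily) via randomized quickselect."""
    rng = rng or random.Random()
    if r <= 0:
        return []
    if r >= len(values):
        return list(range(len(values)))
    chosen, candidates = [], list(range(len(values)))
    while True:
        pivot = values[rng.choice(candidates)]
        greater = [i for i in candidates if values[i] > pivot]
        equal = [i for i in candidates if values[i] == pivot]
        if len(greater) >= r:
            # Search above the pivot: the discarded part holds none of the top r.
            candidates = greater
        elif len(greater) + len(equal) >= r:
            # The pivot value is the r-th largest: larger part plus enough pivot copies.
            return chosen + greater + equal[: r - len(greater)]
        else:
            # Search below the pivot: the discarded larger part and pivot copies are all top r.
            chosen += greater + equal
            r -= len(greater) + len(equal)
            candidates = [i for i in candidates if values[i] < pivot]
```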

In practice, the batch size M is small, so a counting technique offers an efficient alternative for finding these r batches with the highest ranks in time. The technique uses part of the counting sort algorithm [88]. We first compute a histogram of the number of times each rank occurs, which takes time for initialization and time to scan the block. Then, we scan the histogram from the highest rank downwards, accumulating the frequencies, and eliminate the part where the accumulated count exceeds . This takes time. Lastly, we scan the ranks of the batches again in time. If the rank of a batch is still included in the modified histogram, we add 1 to the corresponding and subtract 1 from the corresponding frequency in the histogram.
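The counting technique can be sketched in Python as follows. It returns a 0/1 mark per batch indicating which batches receive the extra recoded packet; the function name and the explicit parameter M (ranks assumed to lie in 0..M) are ours.

```python
def top_r_by_counting(ranks: list[int], r: int, M: int) -> list[int]:
    """Mark the r batches with the highest ranks using a rank histogram."""
    hist = [0] * (M + 1)                 # O(M) initialization
    for rank in ranks:                   # O(|L|) scan of the block
        hist[rank] += 1
    remaining = r
    for rank in range(M, -1, -1):        # O(M): keep only the top-r part of the histogram
        keep = min(hist[rank], remaining)
        hist[rank] = keep
        remaining -= keep
    bump = [0] * len(ranks)
    for b, rank in enumerate(ranks):     # second O(|L|) scan
        if hist[rank] > 0:               # this rank is still in the modified histogram
            bump[b] = 1
            hist[rank] -= 1
    return bump
```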

Algorithm 2 is a -approximation algorithm, although this relative performance guarantee is not tight in general. Nevertheless, it suggests that the smaller the packet loss rate p, the more accurate the output of the algorithm. We defer this discussion to Appendix E.

3.3. Speed-Up via Approximation

In this subsection, we discuss how to correct an approximate solution into an optimal solution of (9). Algorithm 3 is a greedy algorithm that takes any feasible solution of (8) as its starting point. The foreach loop removes the excess recoded packets and assigns each released slot to another batch, following the iterations of Algorithm 1. This can be regarded as mimicking a replacement of with the smallest possible value for each batch b that violates the constraint . After this, the intermediate solution is a feasible solution to (9). Then, the last loop increases the objective by reassigning slots among the batches.

Algorithm 3: Solver for BAR via approximation.

Note that the algorithm may query for . If , then it has access to the value . Recall that we defined , the upper bound of by (10). Therefore, these values act as barriers to prevent outputting a negative number of recoded packets.

Theorem 4.

Proof.

The assignments before the foreach loop take time. There are a total of iterations in the loops. The queries for the minimum and maximum values can be implemented using a min-heap and a max-heap, respectively. As in Algorithm 1, we can use binary heaps, which take initialization time, query time, and update time. Each iteration contains at most two heap queries and four heap updates. The update of the set T can be performed implicitly by setting to 0 during the heap updates for . The overall time complexity is then . □

In the last while loop, we need to query the minimum of and the maximum of . Clearly, we need to decrease the key to in the max-heap and increase the key to in the min-heap. However, we can omit the updates for batches a and b in the max-heap and min-heap, respectively, which reduces the four heap updates to two. We defer this discussion to Appendix G.
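The Python sketch below follows the two phases described above, under the assumption that the marginal gain is the binomial partial sum Pr[Binomial(t, 1 - p) <= r - 1] (our reading of the proof of Lemma 1(d)). The list `ub` is a hypothetical stand-in for the per-batch upper bounds of (9), whose exact form is not reproduced here, and is assumed to satisfy sum(ub) >= sum(t). For brevity, the minimum and maximum are found by linear scans instead of the min-heap and max-heap used in the proof of Theorem 4, so each step costs O(|L|) rather than O(log |L|).

```python
from math import comb


def delta(t: int, r: int, p: float) -> float:
    """Assumed marginal gain: Pr[Binomial(t, 1 - p) <= r - 1]."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def refine(t: list[int], ranks: list[int], ub: list[int], p: float) -> list[int]:
    """Correct a feasible allocation of (8) into an optimal one under t_b <= ub_b."""
    t, n = list(t), len(t)

    def best_receiver() -> int:
        # Batch below its bound that gains the most from one more packet.
        open_batches = [a for a in range(n) if t[a] < ub[a]]
        return max(open_batches, key=lambda a: delta(t[a], ranks[a], p))

    # Phase 1: release the packets above each bound and reassign them greedily.
    for b in range(n):
        while t[b] > ub[b]:
            t[b] -= 1
            t[best_receiver()] += 1

    # Phase 2: move one slot at a time while the gain exceeds the loss.
    while True:
        givers = [b for b in range(n) if t[b] > 0]
        takers = [a for a in range(n) if t[a] < ub[a]]
        if not givers or not takers:
            return t
        a = max(takers, key=lambda i: delta(t[i], ranks[i], p))
        b = min(givers, key=lambda i: delta(t[i] - 1, ranks[i], p))
        if delta(t[a], ranks[a], p) <= delta(t[b] - 1, ranks[b], p):
            return t   # no single move improves the concave objective, so t is optimal
        t[a] += 1
        t[b] -= 1
```

Each move strictly increases the objective, so the loop terminates; concavity of the per-batch objectives is what makes this local stopping rule globally optimal.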

3.4. Construction of the Lookup Table

In the above algorithms, we assume that we have a lookup table for the function so that we can query its values quickly. In this subsection, we propose an on-demand approach to construct a lookup table by dynamic programming.

Since , we have

Furthermore, it is easy to see that

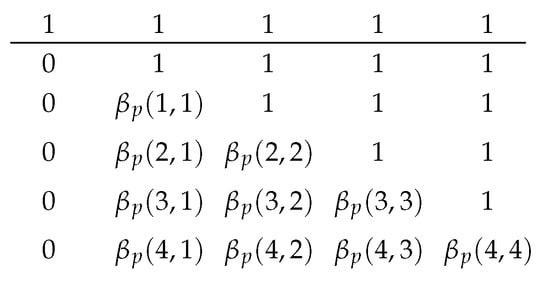

A tabular form of is illustrated in Figure 4 after introducing the boundaries of 0s and 1s.

Figure 4.

The tabular appearance of the function after introducing the boundaries of 0s and 1s. The rows and columns correspond to and , respectively. The row above the line is .

As this is a dynamic programming approach, we need the following recursive relations:

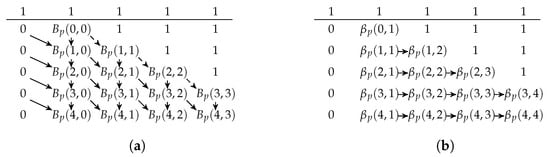

where (13) is stated in Lemma 1(a), and (14) and (15) follow from the definitions of and , respectively. The boundary conditions are , , and for . The table can be built in place in two stages. The first stage fills in at the position of the table. The second stage completes the table by using (15). Figure 5 illustrates the two stages, where the arrows represent the recursive relations (13)–(15). As , the corresponding entries can be substituted in directly.
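The sketch below builds the table row by row. It assumes that recursion (13) reads Δ_{t+1,r} = pΔ_{t,r} + (1 - p)Δ_{t,r-1} (our notation), which is what the binomial-coefficient recursion gives if the tabulated quantity is the binomial partial sum from the proof of Lemma 1(d), with boundaries Δ_{0,r} = 1 for r >= 1 and Δ_{t,0} = 0. It is a plain two-dimensional version for clarity, not the in-place two-stage layout of Figure 5; `delta_direct` is included only to cross-check the recursion.

```python
from math import comb


def build_delta_table(p: float, rows: int, M: int) -> list[list[float]]:
    """Return table[t][r] for 0 <= t < rows (rows >= 1) and 0 <= r <= M."""
    table = [[0.0] * (M + 1) for _ in range(rows)]
    table[0] = [0.0] + [1.0] * M          # t = 0: boundary of 1s for every r >= 1
    for t in range(1, rows):
        prev, row = table[t - 1], table[t]
        # row[0] stays 0.0: a rank-0 batch never gains rank
        for r in range(1, M + 1):
            row[r] = p * prev[r] + (1 - p) * prev[r - 1]
    return table


def delta_direct(t: int, r: int, p: float) -> float:
    """Binomial partial sum Pr[Binomial(t, 1 - p) <= r - 1], for cross-checking."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))
```

Each entry is computed from two entries of the previous row in O(1) time, matching the linear time and space cost stated below.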

Figure 5.

The figure illustrates the two stages of the table generation. The indices start from . The first row has the index , which is the row above the line. Compared to Figure 4, can be substituted in directly without using the relation (15). (a) The first stage of the table generation. The paddings of 1s and 0s are generated first. The solid and dashed arrows represent (13) and (14), respectively. (b) The second stage of the table generation. The paddings of 1s and 0s are kept. The arrows represent the recursive relation (15) applied to the function in place.

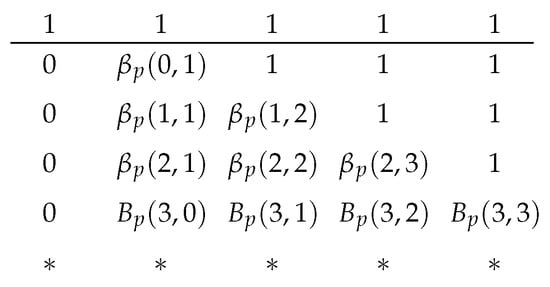

We can compute the values in the table on demand. Suppose that we have in an iteration of Algorithm 1, so that we need the values of for . Let be an element in . The table has columns. By the criteria for selecting b in Algorithm 1 and by Lemmas 1(c) and (d), we have . From Figure 5, we know that we have to calculate all rows of for . Furthermore, the recursive relations on a row depend only on the previous row; thus, we need to prepare the values of in the next row so that they are available for computing in the next row. As an example, Figure 6 illustrates the values we have prepared when .
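Because each row depends only on the previous one, the table can be extended lazily. The hypothetical class below materializes row t only when a query first needs it, so a block whose batches need few extra packets never pays for deep rows. It assumes the recursion Δ_{t+1,r} = pΔ_{t,r} + (1 - p)Δ_{t,r-1} with boundaries Δ_{0,r} = 1 (r >= 1) and Δ_{t,0} = 0, which is our reading of (13)–(15) rather than a transcription of them.

```python
class LazyDeltaTable:
    """On-demand table of the assumed values Delta_{t,r} for 0 <= r <= M."""

    def __init__(self, p: float, M: int):
        self.p, self.M = p, M
        self.rows = [[0.0] + [1.0] * M]      # row t = 0: column r = 0 is 0, the rest are 1s

    def _extend(self) -> None:
        """Append row t + 1, computed from row t only."""
        prev = self.rows[-1]
        row = [0.0] * (self.M + 1)
        for r in range(1, self.M + 1):
            row[r] = self.p * prev[r] + (1 - self.p) * prev[r - 1]
        self.rows.append(row)

    def get(self, t: int, r: int) -> float:
        while len(self.rows) <= t:           # build only the rows that are still missing
            self._extend()
        return self.rows[t][r]
```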

Figure 6.

The values prepared when . The asterisks represent the values that are not yet initialized.

Each entry in the table is modified at most twice during the two stages, and each assignment takes time. Therefore, the time and space complexities for building the table are both , where R is the number of rows we want to construct. When restricted by the block size, we know that . The worst case, which is unlikely to occur, is that we receive only one rank-M batch for the whole block. In this case, the complexity is .

Note that we can use fixed-point numbers instead of floating-point numbers for a more efficient table construction. Furthermore, the exact numerical values in the table are not important as long as the relative order of every pair of values in the table is preserved.

3.5. Throughput Evaluations

We now evaluate the performance of BAR in a feedbackless multi-hop network. Note that baseline recoding is a special case of BAR with block size 1. Our main goal here is to show the throughput gain of BAR for different block sizes. In the evaluation, all (recoded) packets of a batch are sent before those of the next batch.

Let be the incoming rank distribution of batches arriving at a network node. The normalized throughput at a network node is defined as the average rank of the received batches divided by the batch size, i.e., . In our evaluations in this subsection, we set for every block . That is, the source node transmits M packets per batch. We assume that every link in the line network has independent packet loss with the same packet loss rate p. In this topology, we set a sufficiently large for every batch, say, .
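To illustrate the throughput metric, the sketch below computes the normalized throughput from a rank distribution h (h[r] is the probability that a received batch has rank r) and propagates h over one link under baseline recoding. The hop model is our own simplifying assumption, not taken from this section: every batch sends the same number t of recoded packets, each packet is lost independently with probability p, and the field is large enough that a batch of rank r delivers rank min(r, k) when k of its packets arrive. The function names are hypothetical.

```python
from math import comb


def normalized_throughput(h: list[float], M: int) -> float:
    """Average rank of the received batches divided by the batch size M."""
    return sum(r * hr for r, hr in enumerate(h)) / M


def br_hop(h: list[float], t: int, p: float) -> list[float]:
    """Rank distribution after one link when every batch sends t recoded packets.

    Assumes independent loss with rate p and a large field, so a batch of rank r
    delivers rank min(r, k) when k of its t packets arrive.
    """
    M = len(h) - 1
    out = [0.0] * (M + 1)
    for r, hr in enumerate(h):
        for k in range(t + 1):
            out[min(r, k)] += hr * comb(t, k) * (1 - p) ** k * p ** (t - k)
    return out
```

Starting from `[0.0] * M + [1.0]` (the source sends M packets of a rank-M batch) and applying `br_hop` repeatedly with t = M gives the hop-by-hop throughput of this simplified baseline model; after the first link it equals 1 - p.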

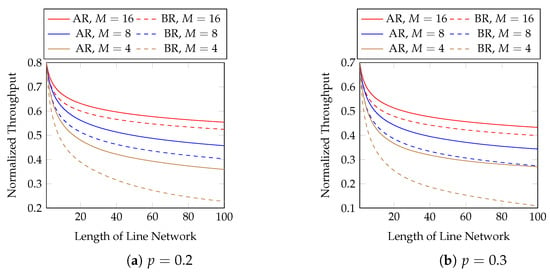

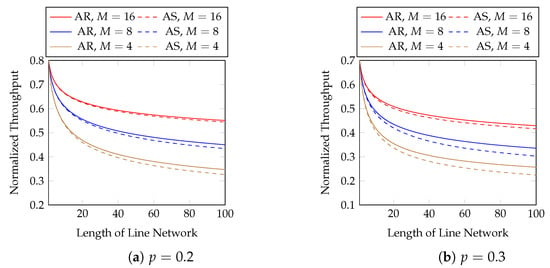

We first evaluate the normalized throughput with different batch sizes and packet loss rates. Figure 7 compares adaptive recoding (AR) and baseline recoding (BR) when the rank distribution of the batches arriving at each network node is known before the node applies BAR. In other words, Figure 7 shows the best possible throughput of AR. We examine the effect of block sizes later. We observe that

- AR has a higher throughput than BR under the same setting;

- the difference in throughput between AR and BR is larger when the batch size is smaller, the packet loss probability is larger, or the line network is longer.

Figure 7.

Adaptive recoding (AR) vs. baseline recoding (BR) in line networks of different lengths, batch sizes and packet loss rates.

In terms of throughput, the percentage gains of AR over BR using and are 23.3% and 33.7% at the 20th and 40th hops, respectively. They become 43.8% and , respectively, when .

Although Figure 7 shows that BNC with AR maintains a good throughput when the line network is long, many applications use much shorter line networks. For practical purposes, we zoom into the first 10 hops in Figure 8.

Figure 8.

The first 10 hops in Figure 7.

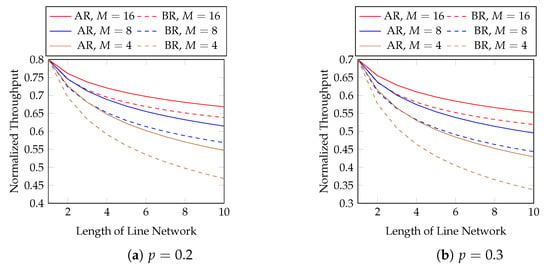

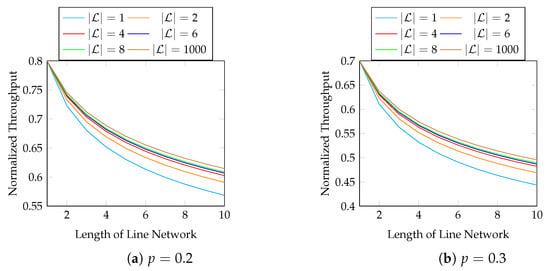

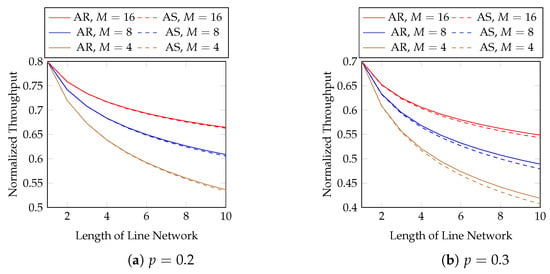

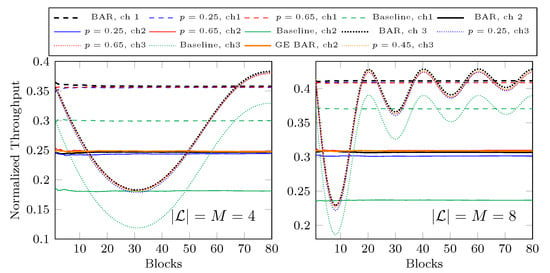

Now, we consider the effect of different block sizes. Figure 9 shows the normalized throughput for different and p with . Figure 10 zooms into the first 10 hops of Figure 9. We observe that

- a larger results in a better throughput;

- using already gives a much larger throughput than using ;

- using gives little extra gain in terms of throughput.

Figure 9.

The effect of different block sizes with .

Figure 10.

The first 10 hops in Figure 9.

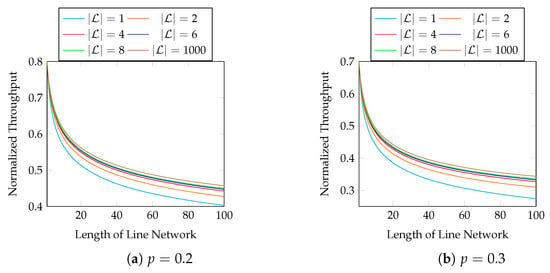

Next, we show the performance of the equal opportunity approximation scheme. Figure 11 compares the normalized throughput achieved by Algorithm 2 (AS) with the true optimal throughput (AR). We use the best possible throughput of AR here, i.e., the same setting as in Figure 7. Figure 12 zooms into the first 10 hops of Figure 11. We observe that

- the approximation is close to the optimal solution;

- the gap in the normalized throughput is smaller when the batch size is larger, the packet loss probability is smaller, or the line network is shorter.

Figure 11.

Approximation vs. optimal AR in line networks of different lengths, batch sizes and packet loss rates.

Figure 12.

The first 10 hops in Figure 11.

4. Impact of Inaccurate Channel Models

In this section, we first demonstrate that the throughput of BAR is insensitive to inaccurate channel models and packet loss rates. Then, we investigate the feedback design and show that although feedback can enhance the throughput, the benefit is insignificant. In other words, BAR works very well without feedback.

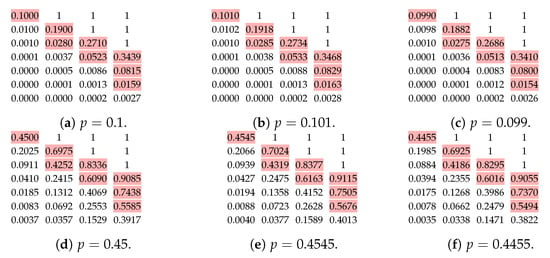

4.1. Sensitivity of

Our algorithms depend only on the order of the values of ; therefore, the optimal for an incorrect p may be the same as that for the correct p. As shown in Figure 4, the boundaries of 0s and 1s are unaffected by . That is, we only need to investigate the stability of for . In Figure 13, we list the values of , rounded to four decimal places, for , and , and for their 1% relative changes. The order of the values is mostly unchanged when we slightly change p.
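The ordering check can be reproduced with the sketch below, which lists the (t, r) cells holding the k largest values strictly below 1 and compares the lists for p and for p increased by 1%. It assumes the binomial-sum form Pr[Binomial(t, 1 - p) <= r - 1] of the tabulated values (our reading), and the parameters in the usage are illustrative choices of ours, not those of Figure 13. The function names are hypothetical.

```python
from math import comb


def delta(t: int, r: int, p: float) -> float:
    """Assumed value Pr[Binomial(t, 1 - p) <= r - 1]; equals 1 whenever t < r."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def top_cells(p: float, M: int, rows: int, k: int = 8) -> list[tuple[int, int]]:
    """(t, r) cells of the k largest values below 1, in decreasing order of value."""
    cells = [(t, r) for t in range(rows) for r in range(1, M + 1) if t >= r]
    cells.sort(key=lambda c: delta(c[0], c[1], p), reverse=True)
    return cells[:k]


def order_unchanged(p: float, M: int, rows: int, rel_change: float = 0.01, k: int = 8) -> bool:
    """True if a relative change of p leaves the top-k ordering intact."""
    return top_cells(p, M, rows, k) == top_cells(p * (1 + rel_change), M, rows, k)
```

With M = 4, 12 rows and p = 0.1, the eight largest values are well separated (from about 0.344 down to about 0.016), so a 1% change of p does not reorder them.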

Figure 13.

The values of for and with different p. The coloured numbers are the largest eight values smaller than 1.

We can also use the condition number [89] to verify the stability. Roughly speaking, the relative change in the function output is approximately equal to the condition number times the relative change in the function input. A small condition number of means that the effect of an inaccurate p is small. As shown in Figure 13, the values of drop quickly as t increases. In terms of the throughput, which is proportional to the sum of these values, we can tolerate a larger relative change, i.e., a larger condition number, when is small. We calculate the condition numbers of in Figure 14 by the formula stated in Theorem 5.

Figure 14.

The condition numbers of for and .

Theorem 5.

Let and . The condition number of with respect to p is , or equivalently, .

Proof.

See Appendix F. □
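A short numerical cross-check of such condition numbers is sketched below. It uses the standard relative condition number κ(p) = |p f'(p) / f(p)| and, assuming f(p) = Pr[Binomial(t, 1 - p) <= r - 1], the derivative t·C(t-1, r-1)·p^(t-r)·(1 - p)^(r-1) for 1 <= r <= t. This closed form is our own derivation from the binomial tail, not a transcription of Theorem 5, and a central finite difference is included to confirm it.

```python
from math import comb


def delta(t: int, r: int, p: float) -> float:
    """Assumed f(p) = Pr[Binomial(t, 1 - p) <= r - 1]."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def condition_number(t: int, r: int, p: float) -> float:
    """kappa(p) = |p * f'(p) / f(p)|; f is the constant 1 when t < r, so kappa is 0."""
    if r < 1:
        raise ValueError("r must be positive")
    if t < r:
        return 0.0
    derivative = t * comb(t - 1, r - 1) * p ** (t - r) * (1 - p) ** (r - 1)
    return p * derivative / delta(t, r, p)


def condition_number_fd(t: int, r: int, p: float, h: float = 1e-6) -> float:
    """Same quantity via a central finite difference, for checking."""
    derivative = (delta(t, r, p + h) - delta(t, r, p - h)) / (2 * h)
    return abs(p * derivative / delta(t, r, p))
```

For t = r = 1 the value is simply p, so the condition number is exactly 1: a 1% error in p causes a 1% error in that entry.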

4.2. Impact of Inaccurate Channel Models

To demonstrate the impact of an inaccurate channel model, we consider three different channels to present our observations.

- ch1: independent packet loss with constant loss rate .

- ch2: burst packet loss modelled by the GE model illustrated in Figure 2 with the parameters used in [70], namely , .

- ch3: independent packet loss with varying loss rates , where c is the number of transmitted batches.

All three channels have the same average packet loss rate of . The formula for ch3 is for demonstration purposes only.
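For readers who want to reproduce a bursty channel such as ch2, the sketch below simulates a two-state Gilbert–Elliott model and compares the empirical loss rate with the stationary average. The parameter values in the usage are hypothetical placeholders, not the parameters of [70], and the state is updated after every packet, which is one common convention among several.

```python
import random


def ge_losses(n: int, p_gb: float, p_bg: float, loss_good: float, loss_bad: float,
              rng: random.Random) -> list[bool]:
    """Simulate n transmissions over a Gilbert-Elliott channel; True marks a lost packet."""
    bad = rng.random() < p_gb / (p_gb + p_bg)   # initial state from the stationary law
    lost = []
    for _ in range(n):
        lost.append(rng.random() < (loss_bad if bad else loss_good))
        # Markov state transition after each packet.
        bad = (rng.random() >= p_bg) if bad else (rng.random() < p_gb)
    return lost


def ge_average_loss(p_gb: float, p_bg: float, loss_good: float, loss_bad: float) -> float:
    """Stationary loss rate: pi_good * loss_good + pi_bad * loss_bad."""
    pi_bad = p_gb / (p_gb + p_bg)
    return (1 - pi_bad) * loss_good + pi_bad * loss_bad
```

With the placeholder values p_gb = 0.1, p_bg = 0.4, loss_good = 0 and loss_bad = 0.8, the average loss rate is 0.16, but the losses arrive in bursts while the chain stays in the bad state.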

We now demonstrate the impact of inaccurate p on the throughput. We consider a line network where all the links use the same channel (ch1, ch2, or ch3). In this topology, we set a sufficiently large for every batch, say, . Similar to the previous evaluation, all (recoded) packets of a batch are sent before sending those of another batch. Furthermore, we set for every block .

In Figure 15, we plot the normalized throughput of the first 80 received blocks at the fourth hop where or 8. We use BAR with (2) for each network even though ch2 is a bursty channel. The black curves labelled BAR show the throughput of BAR when the loss rate is known. For ch1 and ch2, this loss rate p is a constant of . The red and blue curves show the throughput of BAR when we guess and , respectively, which is from the average loss rate of . As there is no feedback, we do not change our guess of p for these curves. The throughput is very close to that of the corresponding black curves, which suggests that, in terms of throughput, BAR is not sensitive to p. Even with a wild guess of p, BAR still outperforms BR, as illustrated by the green curves. For ch2, we also plot the orange curve labelled GE BAR, which is the throughput achieved by BAR with (3). The gap between the throughput achieved by BAR with (2) and that achieved with (3) is very small. To summarize:

- We can use BAR with (2) for bursty channels with an insignificant loss in throughput.

- BAR with an inaccurate constant p can achieve a throughput close to that achieved with the exact real-time loss rate.

- BAR achieves a significant throughput gain over BR even with inaccurate channel models.

Figure 15.

Throughput with inaccurate channel conditions.

4.3. Feedback Design

Although an inaccurate p can give an acceptable throughput, we can further enhance the throughput by adapting to the varying p. To achieve this goal, we need feedback.

We adopt a simple feedback strategy in which the next node returns the number of received packets of the batches so that the current node can estimate p. Although the next node does not know the number of lost packets per batch, it knows the number of received packets per batch, while the current node knows how many packets it has sent. Therefore, the current node does not need to add any overhead to its transmitted packets.

To estimate p, we have to know the number of packets lost during a certain time frame. If the time frame is too short, the estimate is too sensitive, so the estimated p changes rapidly and unpredictably. If the time frame is too long, it captures too much outdated information about the channel, so the estimated p changes too slowly and may fail to follow the real loss rate. Recall that the block size is not large, as we want to keep the delay small. We use a block as the atomic unit of the time frame. The next node gives feedback on the number of received packets per block, and the current node uses the feedback of the blocks in the time frame to estimate p. We update the estimate of p for each received feedback. In this way, the estimated p is constant within each block, so we can apply BAR with (2).

If the feedback is sent via a reliable side channel, then we can assume that the current node always receives the feedback. However, if the feedback is sent via an unreliable channel, e.g., the reverse direction of the channel that carries the data packets, then we need to consider feedback loss. Let be the set of blocks in a time frame whose feedback has been received. We handle feedback loss by taking the total number of packets transmitted for the blocks in as the total number of packets transmitted during the time frame. In this way, we can also start the estimation before a node has sent enough blocks to fill up a time frame. If no feedback is received for any block in a time frame, then we reuse the previously estimated p for BAR.

At the beginning of the transmission, there is no feedback yet, so we have no information to estimate p. To outperform BR without knowledge of p, we can use the approximation of BAR given by Algorithm 2. Once we have received at least one feedback message, we can start estimating p.
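The feedback handling above can be sketched as a sliding window over the last W blocks. In this hypothetical class, each block contributes the number of packets the current node sent and, if its feedback arrived, the number the next node received; blocks with lost feedback are left out of both totals, and the previous estimate is kept when the window holds no feedback at all. An estimate of `None` stands for the start of the transmission, when Algorithm 2 would be used instead. The sketch uses the MLE (the fraction of lost packets) and ignores feedback delay; the estimators of Section 4.4 could be substituted.

```python
from collections import deque


class WindowedLossEstimator:
    """MLE of the loss rate over the last W blocks, tolerating lost feedback."""

    def __init__(self, W: int):
        self.window = deque(maxlen=W)   # (packets sent, packets received or None)
        self.p_hat = None               # None until the first feedback arrives

    def on_block(self, sent: int, received):
        """Record one block; `received` is None when its feedback was lost."""
        self.window.append((sent, received))
        acked = [(n, x) for n, x in self.window if x is not None]
        n_total = sum(n for n, _ in acked)
        if n_total > 0:
            x_total = sum(x for _, x in acked)
            self.p_hat = (n_total - x_total) / n_total
        # Otherwise keep the previous estimate (None means: fall back to Algorithm 2).
        return self.p_hat
```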

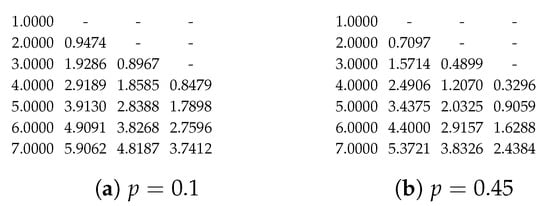

4.4. Estimators

Let x and n be the total number of packets received by the current node and the total number of packets transmitted by the previous node, respectively, in a time frame for observation. That is, the number of packets lost in the time frame is . We introduce three types of estimators for our numerical evaluations.

(1) Maximum likelihood estimator (MLE): The MLE, denoted by , estimates p by maximizing the likelihood function. is a well-known result that can be obtained via derivative tests. This form coincides with the sample average, so by the law of large numbers, when if p does not change over time.

(2) Minimax estimator: The minimax estimator achieves the smallest maximum risk among all estimators. With the popular mean squared error (MSE) as the risk function, it is a Bayes estimator with respect to the least favourable prior distribution. As studied in [90,91], such a prior distribution is a beta distribution . The minimax estimator of p, denoted by , is the posterior mean, which is , or equivalently, .

(3) Weighted Bayesian update: Suppose the prior distribution is , where the hyperparameters can be interpreted as a pseudo-observation having a successes and b failures. Given a sample of s successes and f failures from a binomial distribution, the posterior distribution is . To fade out the old samples captured by the hyperparameters, we introduce a scaling factor and let the posterior distribution be . This factor can also prevent the hyperparameters from growing indefinitely. The estimation of p, denoted by , is the posterior mean with and , which is . To prevent a bias when there are insufficient samples, we select a non-informative prior as the initial hyperparameters. Specifically, we use the Jeffreys prior, which is .
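The three estimators can be sketched as follows, with x packets received out of n transmitted, so that n - x packets are lost. The minimax form relies on the classical result that the least favourable prior for a binomial proportion under MSE is Beta(√n/2, √n/2). The weighted update is our reading of the description above (fade both hyperparameters by λ, then add the new counts), starting from the Jeffreys prior Beta(1/2, 1/2). The rule λ^W = 0.1 in `fading_factor` mirrors the 10% cut-off described in the evaluation below, but its exact form is our assumption. All names are hypothetical.

```python
import math


def mle(x: int, n: int) -> float:
    """Loss-rate MLE: fraction of the n transmitted packets that were not received."""
    return (n - x) / n


def minimax(x: int, n: int) -> float:
    """Posterior mean under the Beta(sqrt(n)/2, sqrt(n)/2) prior (minimax for MSE)."""
    return (n - x + math.sqrt(n) / 2) / (n + math.sqrt(n))


class WeightedBayes:
    """Beta posterior whose hyperparameters are faded by lam before each update."""

    def __init__(self, lam: float, a: float = 0.5, b: float = 0.5):  # Jeffreys prior
        self.lam, self.a, self.b = lam, a, b

    def update(self, lost: int, received: int) -> float:
        # "Successes" are lost packets, since the quantity estimated is the loss rate.
        self.a = self.lam * self.a + lost
        self.b = self.lam * self.b + received
        return self.a / (self.a + self.b)     # posterior mean


def fading_factor(W: int) -> float:
    """Assumed rule: an observation's weight drops to 10% after W updates."""
    return 0.1 ** (1 / W)
```

With λ = 1 the weighted update reduces to an ordinary Bayesian update; with λ < 1 recent observations dominate, which is why this estimator reacts fastest to a changing p.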

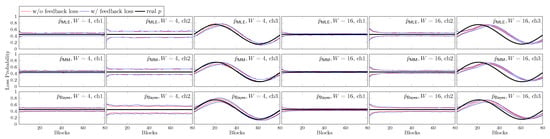

We first show the estimates of p by different schemes in Figure 16. We use BAR with (2) and . The size of the time frame is W blocks. For and , all observations in the time frame have the same weight. For , the weight of each observation decreases exponentially over time. We consider an observation to be out of the time frame once its weight has been scaled down to 10% of its original value. That is, we define the scaling factor by . In each subplot, the black curve is the real-time p. The red and blue curves are the estimates without and with feedback loss, respectively. In each case, the two curves are the 25th and 75th percentiles over 1000 runs.

Figure 16.

The 25th and 75th percentiles of the estimates of p by different schemes where in 1000 runs.

We can see that a larger W responds more slowly to the change in p in ch3. Among the estimators, has the fastest response, as its observations in the time frame are not equally weighted. Furthermore, although ch1 and ch2 have the same average loss rate, the estimate has a larger variance when the channel is bursty.

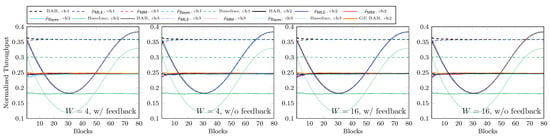

4.5. Throughput Evaluations

As discussed in Section 4.2, the guessed p values have an insignificant impact on the throughput. We now show the throughput achieved by the estimation schemes in Figure 17. The parameters for the networks and BAR are the same as in Section 4.2. As we do not guess p wildly here, it is no surprise that we achieve nearly the same throughput as when we know the real p for ch1 and ch2. Looking closely at Figure 15, we can see that for ch3 there is a small gap between the throughput of BAR with the real-time p and that of BAR with a constant p. Although the estimate may not be accurate at all times, we can now adapt to the changes in p and achieve a throughput nearly the same as when the real-time p is known. Moreover, the plots in Figure 17 are basically the same whether or not the feedback is lost.

Figure 17.

Throughput with estimated p via feedback where .

5. Conclusions

In this paper, we proposed BAR, which can adapt to variations in the incoming channel condition. From a practical perspective, we discussed how to calculate the components of BAR and how to solve BAR efficiently. We also investigated the impact of an inaccurate channel model on the throughput achieved by BAR. Our evaluations showed that

- BAR is insensitive to the channel model: BAR with a guessed loss rate still outperforms BR.

- For bursty channels, the throughput achieved by BAR with an independent loss model is nearly identical to that achieved with the real channel model. That is, we can use the independent loss model for BAR in practice and apply the techniques in this paper to reduce the computational costs of BAR.

- Feedback can slightly enhance the throughput for channels with a dynamic loss rate. This suggests that BAR works very well without feedback. Moreover, feedback loss barely affects the throughput of BAR, so we can send the feedback through a lossy channel without retransmission. Unless an accurate estimate of the loss rate is needed by other applications, we can use the MLE with a small time frame for BAR to reduce the computational time.

These encouraging results suggest that BAR is suitable to be deployed in real-world applications.

One drawback of our proposed scheme is that it changes the default behaviour of some intermediate network nodes, which can be a practical problem in existing networks. In fact, this is a common issue for all network coding schemes. Some routers have hard-wired circuits to efficiently handle heavy traffic, so it is infeasible to deploy other schemes on them without replacing the hardware. For these heavily loaded nodes, one may consider dedicated hardware to speed up the network coding operations, e.g., [92,93], which incurs extra deployment costs. Moreover, the protocol for BNC has not been standardized yet, so two parties may adopt BAR with incompatible protocols, which restricts the application of BNC in public networks. However, building a consensus on the protocol is not easy, because there are still many research directions for improving the performance of BNC, so the protocol design is subject to change in the near future.

6. Patents

The algorithms in Section 3 are variants of those that can be found in the U.S. patent 10,425,192 granted on 24 September 2019 [94]. The linear programming-based algorithm for BAR in Appendix H can be found in the U.S. patent 11,452,003 granted on 20 September 2022 [95].

Author Contributions

Conceptualization, H.H.F.Y. and S.Y.; methodology, H.H.F.Y.; software, H.H.F.Y. and L.M.L.Y.; validation, H.H.F.Y., Q.Z. and K.H.N.; formal analysis, H.H.F.Y.; investigation, H.H.F.Y., S.Y. and Q.Z.; writing—original draft preparation, H.H.F.Y.; writing—review and editing, H.H.F.Y., S.Y. and Q.Z.; visualization, H.H.F.Y.; supervision, H.H.F.Y. and S.Y.; project administration, H.H.F.Y.; funding acquisition, S.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by NSFC under Grants 12141108 and 62171399.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Part of the work of Hoover H. F. Yin, Shanghao Yang and Qiaoqiao Zhou was conducted when they were with the Institute of Network Coding, The Chinese University of Hong Kong, Shatin, New Territories, Hong Kong. The work of Lily M. L. Yung was performed when she was with the Department of Computer Science and Engineering, The Chinese University of Hong Kong, Shatin, New Territories, Hong Kong. The work of Ka Hei Ng was performed when he was with the Department of Physics, The Chinese University of Hong Kong, Shatin, New Territories, Hong Kong. Some results in this paper were included in the thesis of Hoover H. F. Yin [96].

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| BAR | Blockwise adaptive recoding |
| IoT | Internet of Things |
| BNC | Batched network coding |
| RLNC | Random linear network coding |
| LDPC | Low-density parity-check code |
| BATS code | Batched sparse code |
| GE model | Gilbert–Elliott model |
| MLE | Maximum likelihood estimator |
| MSE | Mean squared error |

Appendix A. Accuracy of the Approximation

We demonstrate the accuracy of the approximation

by showing the percentage error of the expected rank function, rounded to five decimal places, when , and in Table A1. That is, the table shows the values

for different r and t.

From the table, we can see that only three pairs of have percentage errors larger than 0.1%, and they occur when . For all the other cases, the percentage errors are less than 0.1%. Therefore, such an approximation is accurate enough for practical applications.

Table A1.

Percentage error when approximating expected rank functions.


| t | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.39216 | 0.00153 | 0.00001 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |

| 2 | 0.13140 | 0.15741 | 0.00061 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |

| 3 | 0.03841 | 0.08032 | 0.08397 | 0.00033 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 4 | 0.01025 | 0.03091 | 0.05791 | 0.05042 | 0.00020 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 5 | 0.00258 | 0.01024 | 0.02761 | 0.04398 | 0.03229 | 0.00013 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 6 | 0.00062 | 0.00308 | 0.01091 | 0.02502 | 0.03416 | 0.02155 | 0.00008 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 7 | 0.00015 | 0.00087 | 0.00382 | 0.01147 | 0.02258 | 0.02685 | 0.01479 | 0.00006 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 8 | 0.00003 | 0.00023 | 0.00122 | 0.00457 | 0.01178 | 0.02023 | 0.02123 | 0.01036 | 0.00004 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 9 | 0.00001 | 0.00006 | 0.00037 | 0.00165 | 0.00526 | 0.01182 | 0.01798 | 0.01686 | 0.00738 | 0.00003 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 10 | 0.00000 | 0.00002 | 0.00011 | 0.00055 | 0.00210 | 0.00585 | 0.01163 | 0.01585 | 0.01342 | 0.00532 | 0.00002 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 11 | 0.00000 | 0.00000 | 0.00003 | 0.00017 | 0.00077 | 0.00257 | 0.00632 | 0.01125 | 0.01388 | 0.01070 | 0.00387 | 0.00002 | 0.00000 | 0.00000 | 0.00000 | 0.00000 |
| 12 | 0.00000 | 0.00000 | 0.00001 | 0.00005 | 0.00027 | 0.00103 | 0.00302 | 0.00665 | 0.01072 | 0.01208 | 0.00854 | 0.00284 | 0.00001 | 0.00000 | 0.00000 | 0.00000 |
| 13 | 0.00000 | 0.00000 | 0.00000 | 0.00002 | 0.00009 | 0.00038 | 0.00131 | 0.00344 | 0.00685 | 0.01009 | 0.01046 | 0.00682 | 0.00210 | 0.00001 | 0.00000 | 0.00000 |
| 14 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00003 | 0.00013 | 0.00052 | 0.00160 | 0.00381 | 0.00693 | 0.00940 | 0.00901 | 0.00545 | 0.00156 | 0.00001 | 0.00000 |
| 15 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00001 | 0.00004 | 0.00020 | 0.00069 | 0.00190 | 0.00412 | 0.00689 | 0.00866 | 0.00773 | 0.00436 | 0.00117 | 0.00000 |
| 16 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00001 | 0.00007 | 0.00028 | 0.00087 | 0.00219 | 0.00436 | 0.00677 | 0.00792 | 0.00660 | 0.00349 | 0.00088 |
| 17 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00002 | 0.00010 | 0.00037 | 0.00106 | 0.00246 | 0.00454 | 0.00656 | 0.00718 | 0.00562 | 0.00279 |
| 18 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00001 | 0.00004 | 0.00015 | 0.00048 | 0.00126 | 0.00271 | 0.00466 | 0.00629 | 0.00647 | 0.00476 |
| 19 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00001 | 0.00006 | 0.00020 | 0.00060 | 0.00147 | 0.00293 | 0.00471 | 0.00598 | 0.00579 |
| 20 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00002 | 0.00008 | 0.00027 | 0.00073 | 0.00167 | 0.00311 | 0.00470 | 0.00563 |

Appendix B. Proof of Lemma 1

We have the following properties when a and b are positive integers and ([79], Equations 8.17.20 and 8.17.21):

Proof of Lemma 1(a)

It is trivial for . For , recall the recursive formula of binomial coefficients ([79], Equation 1.2.7):

$$\binom{n}{k}+\binom{n}{k+1}=\binom{n+1}{k+1}.$$

Applying the formula, we have

□

Proof of Lemma 1(b).

Case I: . By (10) and (12), , and the equality holds if and only if .

Case II: . By (6) and (A1),

Case III: . By (11), the equality always holds. □

Proof of Lemma 1(c).

Case I: . By (12), the equality always holds.

Case II: . By (6) and (A2),

Case III: . By (10) and (11), . □
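Case II of Lemma 1(b) and Case II of Lemma 1(c) rely on (A1) and (A2), which come from recurrences of the regularized incomplete beta function I_x(a, b) cited from [79]. The Python sketch below numerically checks two standard recurrences of this kind for positive integers a and b, namely I_x(a, b) = I_x(a + 1, b) + x^a (1 - x)^b / (a B(a, b)) and I_x(a, b) = I_x(a, b + 1) - x^a (1 - x)^b / (b B(a, b)). We assume, without reproducing (A1) and (A2), that these are the properties invoked. For integer arguments, I_x(a, b) is evaluated through the identity I_x(a, b) = Pr(Binomial(a + b - 1, x) >= a); the function names are hypothetical.

```python
from math import comb, factorial


def beta_fn(a: int, b: int) -> float:
    """Complete beta function B(a, b) for positive integers a, b."""
    return factorial(a - 1) * factorial(b - 1) / factorial(a + b - 1)


def reg_inc_beta(x: float, a: int, b: int) -> float:
    """Regularized incomplete beta I_x(a, b) for positive integers a, b,
    computed as P(Binomial(a + b - 1, x) >= a)."""
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))


def check_recurrences(x: float, a: int, b: int, tol: float = 1e-12) -> bool:
    """Check both recurrences at a single point (x, a, b)."""
    term = x**a * (1 - x) ** b / beta_fn(a, b)
    shift_a = reg_inc_beta(x, a + 1, b) + term / a  # raise a by one
    shift_b = reg_inc_beta(x, a, b + 1) - term / b  # raise b by one
    value = reg_inc_beta(x, a, b)
    return abs(value - shift_a) < tol and abs(value - shift_b) < tol


print(all(check_recurrences(x, a, b)
          for x in (0.1, 0.3, 0.7) for a in range(1, 8) for b in range(1, 8)))
```

A grid check like this only guards against typos in the formulas; it does not replace the analytic identities.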

Proof of Lemma 1(d).

Case I: . By definition, .

Case II: . Recall that is the partial sum of the probability mass of the binomial distribution . By summing one more term, i.e., , the partial sum must be larger than or equal to . Note that when , so the equality holds if and only if and if by (12). □
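The monotonicity and partial-sum arguments above can be spot-checked numerically. The Python sketch below assumes, consistent with the proof of Lemma 1(d), that β_p(t, r) is the binomial partial sum Pr(X <= r - 1) with X ~ Binomial(t, 1 - p); the helpers `pmf`, `beta`, and `check_properties` are hypothetical. It checks that β_p(t, r) lies in [0, 1], is non-increasing in t (Lemma 1(b)), grows by exactly one probability-mass term when r increases by one, and equals 1 when t < r.

```python
from math import comb


def pmf(p: float, t: int, i: int) -> float:
    """P(X = i) for X ~ Binomial(t, 1 - p); zero outside 0..t."""
    return comb(t, i) * (1 - p) ** i * p ** (t - i) if 0 <= i <= t else 0.0


def beta(p: float, t: int, r: int) -> float:
    """Hypothetical beta_p(t, r) = P(X <= r - 1), X ~ Binomial(t, 1 - p)."""
    return sum(pmf(p, t, i) for i in range(min(r, t + 1)))


def check_properties(p: float, t_max: int = 15, r_max: int = 8, tol: float = 1e-12) -> bool:
    for t in range(t_max):
        for r in range(r_max):
            b = beta(p, t, r)
            assert -tol <= b <= 1 + tol                               # a probability
            assert beta(p, t + 1, r) <= b + tol                       # non-increasing in t
            assert abs(beta(p, t, r + 1) - (b + pmf(p, t, r))) < tol  # one more term
            if t < r:
                assert abs(b - 1) < tol                               # whole pmf summed
    return True


print(check_properties(0.1), check_properties(0.35), check_properties(0.8))
```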

Proof of Lemma 1(e) and (f).

By applying Lemma 1(b) inductively, we have

for all where are non-negative integers such that . By (10),

for all . Combining (A3) and (A4), the proof is complete. □

Appendix C. Proof of Lemma 3

By Lemma 3(a), we have . If , we have

which proves Lemma 3(a).

For Lemma 3(b), note that we have the initial condition

We can evaluate Lemma 2 recursively and obtain the first equality in Lemma 3(b).

By Lemma 3(a), we can show that when , we have

This implies that when , we have

When , the summation term in (A6) equals 0. Therefore, combining (A5) and (A6) gives
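To make the telescoping structure of Lemma 3(b) concrete, the sketch below uses a simplified channel model that is an assumption for illustration: each of the t recoded packets is lost independently with probability p, and the rank at the next hop equals min(X, r), where X is the number of received packets (every received packet is innovative until rank r is reached, as for a very large field). Under this model, one extra packet raises the expected rank by (1 - p) β_p(t, r), so the expected rank, written here as a hypothetical E(r, t), is the sum of these increments for j = 0, ..., t - 1. The code compares that sum with a direct evaluation of E[min(X, r)].

```python
from math import comb


def beta(p: float, t: int, r: int) -> float:
    """Hypothetical beta_p(t, r) = P(X <= r - 1), X ~ Binomial(t, 1 - p)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def expected_rank_direct(p: float, t: int, r: int) -> float:
    """E[min(X, r)]: received packets X ~ Binomial(t, 1 - p), capped at rank r."""
    return sum(min(i, r) * comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(t + 1))


def expected_rank_telescoped(p: float, t: int, r: int) -> float:
    """Sum of the increments (1 - p) * beta_p(j, r) for j = 0, ..., t - 1."""
    return sum((1 - p) * beta(p, j, r) for j in range(t))


print(expected_rank_direct(0.2, 6, 3), expected_rank_telescoped(0.2, 6, 3))
```

The identity holds because min(X + Z, r) - min(X, r) = Z when X <= r - 1 and 0 otherwise, where Z is the indicator that the extra packet is received.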

Appendix D. Proof of Theorem 2

Suppose for some , i.e.,

We define

for all . We consider the difference of

where

- (A8) follows from Lemma 3(b);

- (A9) follows from Lemma 1(d) together with (A7).

The above result contradicts the assumption that solves (8). Hence, for all .

Next, we suppose for some , i.e.,

We define

for all . We then consider the difference of

where

- (A11) follows from Lemma 3(a);

- (A12) follows from (A10) and Lemma 1(d);

- (A13) follows from Lemma 1(c) together with (A10).

This contradicts and solves (8). Therefore, we have for all .

Combining the two cases, the proof is complete.

Appendix E. Performance Guarantee and Bounded Error of Algorithm 2

We start the discussion with the following theorem.

Theorem A1.

Let SOL and OPT be the objective values of the solution given by Algorithm 2 and of the optimal solution of (8), respectively. Then

where and .

Proof.

We first show that the algorithm has a relative performance guarantee factor of . As stated in Theorem 3, when , the algorithm guarantees an optimal solution. Therefore, we only consider . Let be the approximation given by the algorithm.

Note that linear combinations of r linearly independent vectors cannot yield more than r linearly independent vectors. Therefore, the expected rank of a batch at the next hop is non-negative and no larger than the rank of the batch at the current hop. That is,

This gives the following bound on the optimal value:

We consider the exact formula of the approximation:

where

- (A16) follows from Lemma 3(b);

- (A17) holds because for all , which follows from (10);

- (A18) follows from inequality (A15).

Lastly, we bound the error. Let be a solution to (8). We write where for all . Note that the constraint of (8), i.e., , implies that

On the other hand, it is easy to see that the approximation must give either or . That is, we have . By Lemma 3(b), we have

We consider the difference between OPT and SOL:

where

- (A21) writes OPT in its exact form given by Lemma 3(b) and substitutes the lower bound on SOL from (A20);

- the condition in the summation of (A22) can be removed, as we have if ;

- (A23) follows from (A19) and the fact, shown in (10), that the extra terms are non-negative.

This completes the proof. □
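The bound used at the start of this proof rests on a rank argument: however many recoded packets are generated from a batch of rank r, they span at most r dimensions. A small illustration over GF(2) follows; the binary field, the bitmask encoding of packets, and the helpers `gf2_rank` and `recode` are assumptions for illustration, not the coding setup of this paper.

```python
import random


def gf2_rank(vectors):
    """Rank over GF(2) of vectors stored as int bitmasks."""
    basis = []  # each element has a leading bit that no other element has
    for v in vectors:
        for b in basis:
            v = min(v, v ^ b)  # clears b's leading bit from v if it is set
        if v:
            basis.append(v)
    return len(basis)


def recode(batch, t, rng):
    """Return t random GF(2) linear combinations of the packets in batch."""
    coded = []
    for _ in range(t):
        v = 0
        for pkt in batch:
            if rng.random() < 0.5:  # coefficient 1 with probability 1/2
                v ^= pkt
        coded.append(v)
    return coded


rng = random.Random(7)
batch = [0b1011, 0b0110, 0b0001]  # three independent packets, so rank r = 3
print(gf2_rank(batch), gf2_rank(recode(batch, 10, rng)))
```

Whatever the random coefficients, the second number never exceeds the first, which is the fact behind the upper bound on the expected rank at the next hop.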

For the relative performance guarantee factor of to be tight, both equalities in (A17) and (A18) must hold. First, by (10), we know that is always non-negative. The equality in (A17) holds if and only if for all . The sum equals 0 only when

- and according to (11); or

- which forms an empty sum.

The equality in (A18) holds if and only if . Note that (A14) shows that is upper bounded by . This implies that we need for all . When , we can apply Lemma 3(a) to obtain , which equals if and only if , as we assumed in this paper. By Lemma 2, is monotonically increasing in t for all . Therefore, when we need , which implies that . In this case, the approximation also gives for some , so the equality in (A17) does not hold.

That is, we have only when for all . In this case, we have . In practice, the probability of having for all is very small. Therefore, the bound is not tight in most cases, but it still guarantees that the approximation is good when the packet loss probability is small.

Appendix F. Proof of Theorem 5

Let be the incomplete beta function. We have the beta function .

From (6), we have . By direct calculation, the condition number is

where the absolute value can be dropped because both the numerator and the denominator are non-negative. The first form of the condition number is obtained by substituting into (A24).
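The sketch below rests on two assumptions that are not spelled out here: that (6) identifies β_p(t, r) with the regularized incomplete beta function I_p(t - r + 1, r) for t >= r, and that the condition number of Theorem 5 is the relative condition number κ(p) = |p f'(p) / f(p)| of f(p) = I_p(a, b) with respect to the loss probability p. With the standard derivative d/dp I_p(a, b) = p^(a-1) (1 - p)^(b-1) / B(a, b), this gives κ(p) = p^a (1 - p)^(b-1) / (B(a, b) I_p(a, b)), whose numerator and denominator are both non-negative. The code checks the first identity and compares the closed form with a central finite difference; all names are hypothetical.

```python
from math import comb, factorial


def reg_inc_beta(x, a, b):
    """I_x(a, b) for positive integers a, b, via P(Binomial(a + b - 1, x) >= a)."""
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))


def beta_fn(a, b):
    """Complete beta function B(a, b) for positive integers a, b."""
    return factorial(a - 1) * factorial(b - 1) / factorial(a + b - 1)


def beta_binomial(p, t, r):
    """Hypothetical beta_p(t, r) = P(X <= r - 1), X ~ Binomial(t, 1 - p)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def cond_closed_form(x, a, b):
    """Relative condition number x * f'(x) / f(x) of f(x) = I_x(a, b)."""
    return x**a * (1 - x) ** (b - 1) / (beta_fn(a, b) * reg_inc_beta(x, a, b))


def cond_finite_difference(x, a, b, h=1e-6):
    """Same quantity from a central difference, as an independent cross-check."""
    slope = (reg_inc_beta(x + h, a, b) - reg_inc_beta(x - h, a, b)) / (2 * h)
    return abs(x * slope / reg_inc_beta(x, a, b))


print(cond_closed_form(0.2, 3, 2), cond_finite_difference(0.2, 3, 2))
```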

Appendix G. Corrupted Heaps

In this appendix, we explain why we can omit two of the heap updates in Algorithm 3.

We first give some mathematical characterizations of the optimal solutions of BAR, and then introduce our lazy evaluation technique on a modified heap, which we call the corrupted heap.

To simplify the notations, we redefine as

in this appendix. In other words, is now a function of b. When , is the smallest possible value in the image of . Therefore, the algorithms in this paper will not assign more recoded packets to the batch b.

Appendix G.1. Optimality Properties of BAR

First, we introduce the following theorem, which states a condition for non-optimality (or, by contraposition, for optimality).

Theorem A2.

Proof.

We first prove the sufficient condition. If does not solve (9), then there exists another configuration that gives a higher objective value. Since , there exist distinct such that and . Note that , so we must have . We define

where

Using the fact that gives a larger objective value together with Lemma 3(b), we have

Now, we fix and such that

We have

where

- (A27) and (A29) follow from Lemma 1(b);

- (A28) is the inequality in (A26).

Applying (A25), we have

which proves the sufficient condition.

Now we consider the necessary condition, where we have

for some distinct and . Let

for all . Then, we consider the following:

where

- (A31) and (A33) follow from Lemma 3(a);

- (A32) follows from (A30).

This means that is not an optimal solution of (9). □

Note that we assume ; otherwise, more batches should be included in the block.

We define a multiset that collects the value of for all integers , i.e.,

By Lemma 3(b), we have . Since is concave in t, is monotonically decreasing in t (as also stated in Lemma 1(b)).

This implies the following lemma.

Lemma A1.

equals the sum of the largest t elements in .

Proof.

By Lemma 3(b), . By Lemma 1(b), is monotonically decreasing in t. By (10) and (12), we have for all positive integers t. Therefore, is the sum of the largest t elements in . □

We fix a block and define a multiset

If two batches have the same rank, i.e., , then for all t, we have . Since this is a multiset, duplicate values are kept. The following lemma connects (A34) and .

Lemma A2.

The optimal value of (A34) is the sum of the largest k elements in Ω.

Proof.

Let solve (A34), and suppose that the optimal value is not the sum of the largest k elements in . Since Lemma A1 states that equals the sum of the largest elements in for all , there must exist two distinct batches with and such that .

Setting , Theorem A2 implies that is not an optimal solution of (A34), which is a contradiction. □
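Because each batch's marginal gains are non-increasing in t (Lemma 1(b)), the sum of the k largest elements of Ω in Lemma A2 can be collected greedily with a max-heap, one recoded packet at a time. A minimal Python sketch, assuming β_p(t, r) is the binomial partial sum Pr(X <= r - 1) with X ~ Binomial(t, 1 - p) and that the objective of (A34) is the total expected rank, i.e., the sum over batches of (1 - p) β_p(j, r_b) for j < t_b. The names `beta` and `greedy_allocation` are hypothetical, and this is not claimed to be Algorithm 2 or Algorithm 3 of the paper.

```python
import heapq
from math import comb


def beta(p, t, r):
    """Hypothetical beta_p(t, r) = P(X <= r - 1), X ~ Binomial(t, 1 - p)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def greedy_allocation(ranks, k, p):
    """Give k recoded packets to the batches of one block, one at a time,
    always to the batch whose next marginal gain (1 - p) * beta is largest."""
    t = [0] * len(ranks)
    # heapq is a min-heap, so gains are stored negated
    heap = [(-(1 - p) * beta(p, 0, r), b) for b, r in enumerate(ranks)]
    heapq.heapify(heap)
    total = 0.0
    for _ in range(k):
        neg_gain, b = heapq.heappop(heap)
        total += -neg_gain  # this gain is one of the k largest elements
        t[b] += 1
        heapq.heappush(heap, (-(1 - p) * beta(p, t[b], ranks[b]), b))
    return t, total


ranks, k, p = [3, 1, 4, 4, 2], 10, 0.2
alloc, value = greedy_allocation(ranks, k, p)
print(alloc, value)
```

Each of the k steps costs O(log B) heap work for B batches, plus the cost of evaluating β.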

We define a multiset as the collection of the largest elements in . By Lemma A2, is the optimal value of (9). For any non-optimal solution , we define a multiset

where the value is the number of elements in which are also contained in .

Appendix G.2. Lazy Evaluations

We consider an iteration in the last while loop in Algorithm 3. Suppose we choose to increase by 1 and decrease by 1.

Lemma A3.

If batch a is selected by the max-heap or batch b is selected by the min-heap in any future iteration, then the optimal solution is reached.

Proof.

Suppose batch a with key is selected by the max-heap in a future iteration. Note that was once the smallest element in for some k. Therefore, at the current state where , every element in must be no smaller than . Equivalently, we have for all . By Theorem A2, the optimal solution is reached. The min-heap counterpart can be proved in a similar fashion. □

Suppose we omit the update of a batch's key in a heap. We then call the key of that batch a corrupted key, or say that the key of the batch is corrupted. A key that is not corrupted is called an uncorrupted key, and a heap that contains corrupted keys is called a corrupted heap. In other words, the key of a batch is corrupted in a corrupted max-heap if and only if the same batch was once the minimum of the corresponding original min-heap, and vice versa. Note that no guaranteed maximum fraction of corrupted keys is given as an input, and the carpooling technique is not used. Hence, the heap here is not a soft heap [97].

Lemma A4.

If the root of a corrupted heap is a corrupted key, then the optimal solution is reached.

Proof.

We only consider a corrupted max-heap in the proof. We can use similar arguments to show that a corrupted min-heap also works.

In a future iteration, suppose batch a is selected by the corrupted max-heap. We consider the real maximum in the original max-heap. There are three cases.

Case I: batch a is also the root of the original max-heap. Since the key of a is corrupted, batch a was once selected by the corresponding min-heap. By Lemma A3, the optimal solution is reached.

Case II: the root of the original max-heap is batch where the key of is also corrupted. Similar to Case I, batch was once selected by the corresponding min-heap, and we can apply Lemma A3 to finish this case.

Case III: the root of the original max-heap is batch where the key of is not corrupted. In this case, the uncorrupted key of is also in the corrupted max-heap. Note that the corrupted key of a is no larger than the actual key of a in the original max-heap. This means that the key of a, and the corrupted key of a have the same value. It is therefore equivalent to letting the original max-heap select batch a, as every element in must be no smaller than the key of , where represents the state of the current iteration. The problem then reduces to Case I.

Combining the three cases, the proof is completed. □

Theorem A3.

The updates for batch a in the max-heap and batch b in the min-heap can be omitted.

Proof.

When we omit the updates, the heap itself becomes a corrupted heap. We have to make sure that when a batch with a corrupted key is selected, the termination condition of the algorithm is also met.

We can express the key of batch in a corrupted max-heap and min-heap by and , respectively, where are non-negative integers. When or is 0, the key is uncorrupted in the corresponding corrupted heap. By Lemma 1(b), we have

That is, the root of the corrupted max-heap is no larger than the root of the original max-heap. Similarly, the root of the corrupted min-heap is no smaller than the root of the original min-heap. Mathematically, we have

Suppose a corrupted key is selected. By Lemma A4, the optimal solution is reached. Therefore, applying the contrapositive of Theorem A2, we obtain

for all . We can omit the condition because by (10) and (12), we have . The inequality (A37) is equivalent to

Combining this inequality with (A35) and (A36) shows that when a corrupted key is selected, we have

which is the termination condition in Algorithm 3 after replacing the heaps with corrupted heaps.

We have just shown that once a corrupted key is selected, the termination condition is reached. In other words, every selection made before a corrupted key is selected must be an uncorrupted key, so the computations inside the iterations are unaffected. If an uncorrupted key that also satisfies the termination condition is selected, then no corrupted key is touched, and the corrupted heap still acts as a normal heap at this point.

This proves the correctness of the algorithm when corrupted heaps are used. Moreover, we do not need to mark which keys are corrupted; that is, we can simply omit the mentioned heap updates on a normal heap. □

Since the algorithm works without marking which keys are corrupted, the omitted updates act as lazy evaluations. As Algorithm 3 maintains two heaps, this reduces the number of heap updates per iteration from four to two.
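The lazy evaluation of Theorem A3 can be sketched in Python under the same assumptions as before (binomial partial-sum β and total expected rank as the objective). The function `lazy_rebalance` is a hypothetical exchange loop in the spirit of the last while loop of Algorithm 3, not a transcription of it. It starts from any feasible allocation, takes the receiver b from the root of a max-heap of next gains and the donor a from the root of a min-heap of last gains, and stops once the max-heap root is no larger than the min-heap root. Only the two roots are refreshed per iteration; the entries of a in the max-heap and of b in the min-heap are left corrupted. Exact `Fraction` arithmetic is used so that ties and the monotonicity of β are not disturbed by rounding.

```python
import heapq
from fractions import Fraction
from math import comb, inf


def beta(p, t, r):
    """Hypothetical beta_p(t, r) = P(X <= r - 1), X ~ Binomial(t, 1 - p)."""
    return sum(comb(t, i) * (1 - p) ** i * p ** (t - i) for i in range(min(r, t + 1)))


def lazy_rebalance(ranks, t, p):
    """Move recoded packets between batches until no exchange helps.
    t is a feasible allocation (non-negative ints) and is updated in place."""
    def gain(b, s):  # marginal gain of the (s + 1)-th packet of batch b
        return (1 - p) * beta(p, s, ranks[b])

    def last_gain(b):  # gain of the last packet of b; inf means nothing to remove
        return gain(b, t[b] - 1) if t[b] > 0 else inf

    max_heap = [(-gain(b, t[b]), b) for b in range(len(t))]  # negated keys
    min_heap = [(last_gain(b), b) for b in range(len(t))]
    heapq.heapify(max_heap)
    heapq.heapify(min_heap)
    while True:
        neg_best, b = max_heap[0]  # receiver: largest next gain
        worst, a = min_heap[0]     # donor: smallest last gain
        if -neg_best <= worst:     # no exchange improves the objective
            return t
        t[b] += 1
        t[a] -= 1
        heapq.heapreplace(max_heap, (-gain(b, t[b]), b))  # refresh b in the max-heap
        heapq.heapreplace(min_heap, (last_gain(a), a))    # refresh a in the min-heap
        # lazy: the key of a in max_heap and of b in min_heap stay stale


P = Fraction(1, 5)
RANKS = [3, 1, 4, 4, 2]
alloc = lazy_rebalance(RANKS, [10, 0, 0, 0, 0], P)
print(alloc)
```

In this sketch, the two updates that are kept always touch a heap root, which `heapq.heapreplace` handles directly; the two omitted ones would have required changing keys at arbitrary positions, i.e., an indexed heap with decrease-key.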

Appendix H. Linear Programming Formulation of BAR