Abstract

We investigated the impact of nonequilibrium conditions on the transmission and recovery of information through noisy channels. By measuring the recoverability of messages from an information source, we demonstrate that the ability to recover information is connected to the nonequilibrium behavior of the information flow, particularly in terms of sequential information transfer. We discovered that the mathematical equivalence of information recoverability and entropy production characterizes the dissipative nature of information transfer. Our findings show that both entropy production (or recoverability) and mutual information increase monotonically with the nonequilibrium strength of information dynamics. These results suggest that the nonequilibrium dissipation cost can enhance the recoverability of noisy messages and improve the quality of information transfer. Finally, we propose a simple model to test our conclusions and find that the numerical results support our findings.

1. Introduction

The transfer of information is a crucial topic in physics, psychology, and human society, making it an important area of study for both science and technology [1,2,3,4,5]. Specifically, information theory has proven to be essential in biology, with Shannon’s pioneering work being applied to many biological systems across various scales [6,7,8]. A central challenge is to understand how organisms can extract and represent useful information optimally from noisy channels, given physical constraints. However, the issues related to information transformation are not limited to biological systems and should be examined across a wide range of systems in physics, chemistry, and engineering. It is, therefore, essential to consider information transfer in a more fundamental way.

During the transmission of information, messages are frequently conveyed through noisy channels, where the noise can alter the useful information carried by the messages. Consequently, information recovery becomes a critical issue in practice [9,10,11]. Although there has been considerable research on the performance analysis of specific information recovery techniques in various fields [12,13,14,15,16], there is still a lack of a general theory on the recoverability of useful information. The quantification of recoverability should depend on the information source and the channel’s characteristics, irrespective of the specific information recovery methodologies, such as the maximum likelihood [17,18,19] or maximum a posteriori probability [20,21,22].

In [23], a classical measurement model was developed to investigate nonequilibrium behavior resulting from energy and information exchange between the environment and system. The study revealed that the Markov dynamics for sequential measurements is governed by the information driving force, which can be decomposed into two parts: the equilibrium component, which preserves time reversibility, and the nonequilibrium component, which violates time reversibility. In this work, we considered the information dynamics of noisy channels based on this nonequilibrium setup. From the perspective of nonequilibrium dynamics and thermodynamics, the information source and channel, along with their complexity, are not isolated events, but rather should be regarded as open systems [24,25]. The environment, which can exchange energy and information with these systems, can significantly influence the information transfer and its recoverability. Due to the complexity and environmental impacts, often manifesting as the stochasticity and randomness of noisy received messages, sequential information transfer should be viewed as a nonequilibrium process, leading to nonequilibrium behavior in the corresponding information dynamics. Intuitively, there must be an underlying relationship between the nonequilibrium nature and information recoverability.

This study aimed to quantify the recoverability of messages transmitted through a channel. With appropriate physical settings, we show that the randomness of the information transfer can arise from the interactions between the information receiver and the information source and from the influences of the environmental noise. This randomness can be characterized by the steady-state distributions or transition probabilities of the received messages given by the receiver under different potential profiles exerted by the source, which are identified as the information driving force of the measurement dynamics [12,23]. In the sequential information transfer, the source messages appear randomly in time sequences. As a result, the receiver changes its interactions with the source messages over time. This leads to switching among the potential profiles and among the corresponding steady-state distributions of the received messages. Nonequilibrium behavior can then arise when the receiver switches its potential profiles in the sequential information transfer. We quantify the nonequilibrium of the information transfer by the nonequilibrium strength, given by the difference between the information driving forces (based on the steady-state transition probabilities) of the information transfer dynamics.

The main novel contributions of this work are three-fold. First, we introduce a new metric, called recoverability, to quantify the ability to recover the source messages through noisy channels. Recoverability is measured by the averaged log ratio of channel transition probabilities. Second, we demonstrate the mathematical equivalence between recoverability and entropy production [4,26,27,28,29] in nonequilibrium information dynamics. This reveals the intrinsic connection between information recoverability and thermodynamic dissipation costs. Finally, we prove that both recoverability and mutual information [30] monotonically increase with the nonequilibrium strength. This elucidates how driving a system further from equilibrium can boost information transfer quality. To our knowledge, these quantitative relationships between recoverability, entropy production, and nonequilibrium strengths have not been fully established in prior work. Our framework and analysis provide novel theoretical insights into the nonequilibrium physics of information transmission through noisy channels.

This new approach is not specific to particular models, and our conclusions are generalizable for characterizing information transfer. To further support our findings, we propose a simple information transfer model, which yields numerical results consistent with our conclusions. Lastly, we discuss the potential applications of our work in biophysics.

2. Information Dynamics

2.1. Physical Settings

Information dynamics naturally arises from information transfer, information processing, and sequential physical measurements. As discussed in the Introduction, the Markovian sequential measurement model can be applied to the information dynamics. Let us first introduce the physical settings of sequential information transfer.

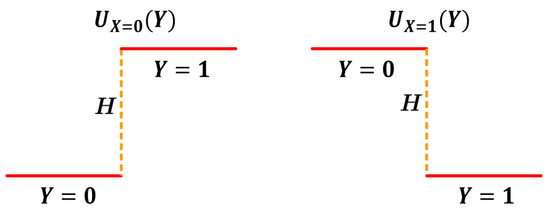

In information transfer, a receiver gains information from a source through physical interaction. The information source randomly selects a message X from a set of n different messages, according to an a priori distribution on . The interaction with the source causes the receiver to feel a potential U. The profile of U is determined by the message X, which is denoted by . The potential profile contains n distinguishable wells with different locations, corresponding to the n possible messages of the source. The well with the lowest depth is unique, and its location changes as the potential profile is switched among different messages. The location of the lowest well represents the position of the current message. An illustration for the potential profile is given in Figure 1. The receiver is immersed in an environment with reciprocal temperature .

Figure 1.

An illustration of the potential profiles in an information transfer. There are two “flat” wells in the potential: a higher well with height and a lower well with height 0. The location of the lower well depends on the source message or . The left figure shows that the lower well is located at the left half of the area when . The right figure shows that the lower well is located at the right half when . When the receiver is found at the left half of the area, the received message is ; the right half corresponds to . The locations of the lower well represent the correctly received message.

To obtain a message at time , the receiver interacts with the source for a period of time . The receiver’s position in the potential U changes much more quickly than the source message changes. This allows the receiver to quickly reach equilibrium with the environment while the source message remains fixed during this period. The position of the receiver in the potential at steady state, denoted by , is viewed as the received message. If is found at the lowest well in , the received message is correct (). However, environmental noise can drive the receiver out of the lowest well and across the potential barriers. In this case, the receiver is found at another well located at , and this is a wrongly received message. The information transition probability, given by the conditional probability of Y when the source’s message is X, can be determined by the following canonical distribution:

where denotes the free energy decided by the profile . The information transition probability distribution in Equation (1) quantifies the classical uncertainty of the information transfer channels, and it is influenced by the interaction between the receiver and the source, which is characterized by the potential , and the environmental noise, which is characterized by the reciprocal temperature .
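The canonical form described above can be illustrated with a minimal sketch. It assumes the standard Boltzmann form q(y|x) = exp(-β[U(y|x) - F(x)]) with F(x) = -(1/β) log Σ_y exp(-βU(y|x)); the symbol names, the two-well heights, and the values of β are illustrative assumptions and are not taken from the paper.

```python
import math

def transition_probabilities(potential, beta):
    """Canonical distribution over receiver positions for one potential profile.

    potential: list of well heights U(y|x) for a fixed source message x.
    beta: reciprocal temperature of the environment.
    Returns (q, F) with q[y] = exp(-beta * (U(y|x) - F)).
    """
    weights = [math.exp(-beta * u) for u in potential]
    partition = sum(weights)
    free_energy = -math.log(partition) / beta
    probs = [w / partition for w in weights]
    return probs, free_energy

# Hypothetical two-well profiles in the spirit of Figure 1:
# the lower well has height 0, the higher well has an assumed height of 1.5.
HEIGHT = 1.5
profiles = {"x1": [0.0, HEIGHT], "x2": [HEIGHT, 0.0]}

for beta in (0.5, 2.0):
    for x, potential in profiles.items():
        q, f = transition_probabilities(potential, beta)
        print(f"beta={beta} source={x} q={[round(v, 3) for v in q]} F={f:.3f}")
```

In this sketch a larger β (weaker noise) concentrates the receiver in the lower well, so the message is received correctly more often.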

In practice, the source often transmits messages randomly in time. These messages then form a time sequence, such as . The corresponding time sequence of the received messages is obtained as , where each message is obtained from via the interaction described above. For the sake of simplicity, we assumed that the source selects message independently of the previous message . The received message is then only determined by the message according to the transition probability , and it is independent of both and . This means that the received message is not influenced by the previous received message or the previous source message. If the a priori distribution of the source messages is time-invariant, then the probability distribution of the received message is stationary, which is given by the following probability identity:

2.2. Markovian Dynamics of Sequential Information Transfer

Since the time sequences of the source messages are assumed to be stationary and due to the ergodicity, we can formulate the sequential information transfer as a coarse-grained Markov process of the received messages within one receiving period.

When a message has been received corresponding to the source message , the source transmits the next message , which interacts with the receiver immediately. Consequently, the potential profile is changed from to promptly. Meanwhile, the receiver position in the potential remains at the previous temporarily, and is recognized as the initial position under the new potential . The conditional joint probability of and under the previous source message in this potential-switching event is given by . Here, represents the transition probability from to and is given by , because has been assumed to be independent of the previous .

Then, the initial probability of the receiver position in the new potential profile , denoted by (it can depend on the previous source message in general cases), is given as follows: . This means that is equal to the equilibrium distribution, which is conditioned only on the previous source message.

During the message-receiving period, the source message remains unchanged within this period. The transition rates of the receiver between two possible positions s and in the potential can be represented by . The Markov dynamics of the receiver position under in continuous time can be given by the following master equation , where is the distribution of the receiver position at time and G is the transition matrix composed of the transition rates r.

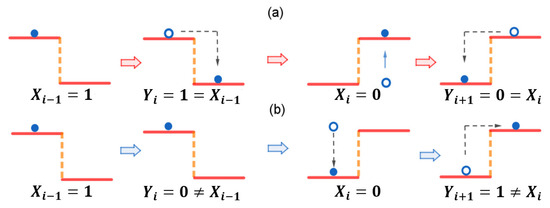

Given the initial distribution , the solution of this master equation is given by . Since the receiver interacts with a single environmental heat bath, the transition rates r between two positions satisfy the local detailed balance condition, . After a sufficiently long message-receiving period, the final distribution reaches the equilibrium distribution, i.e., . Here, we take to be long enough to reach equilibrium. At the end of the period, we take the position as the new received message. An illustration of the sequential information transfer is given in Figure 2.
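The relaxation within one receiving period can be sketched numerically. The example below is only an illustration under stated assumptions: the three-well potentials, β = 1, and the particular rate choice r(s'|s) = exp(-β[U(s') - U(s)]/2) are hypothetical (the rate form is one of many that satisfy local detailed balance), and the master equation is integrated with a simple Euler scheme rather than a matrix exponential.

```python
import math

def boltzmann(potential, beta):
    """Equilibrium distribution proportional to exp(-beta * U)."""
    weights = [math.exp(-beta * u) for u in potential]
    z = sum(weights)
    return [w / z for w in weights]

def relax(potential, beta, p0, total_time=30.0, dt=0.002):
    """Euler integration of dP(s)/dt = sum_s' [r(s|s') P(s') - r(s'|s) P(s)]."""
    n = len(potential)
    # Assumed rates: rate[a][b] is the jump rate from position b to position a.
    # They satisfy rate[a][b] / rate[b][a] = exp(-beta * (U[a] - U[b])).
    rate = [[math.exp(-0.5 * beta * (potential[a] - potential[b])) if a != b else 0.0
             for b in range(n)] for a in range(n)]
    p = list(p0)
    for _ in range(int(total_time / dt)):
        flow = [sum(rate[a][b] * p[b] - rate[b][a] * p[a] for b in range(n))
                for a in range(n)]
        p = [p[a] + dt * flow[a] for a in range(n)]
    return p

beta = 1.0
old_profile = [2.0, 0.0, 1.0]   # hypothetical potential under the previous message
new_profile = [0.0, 2.0, 1.0]   # hypothetical potential under the new message

start = boltzmann(old_profile, beta)      # receiver starts in the old equilibrium
final = relax(new_profile, beta, start)   # and relaxes under the new potential
target = boltzmann(new_profile, beta)
print([round(v, 4) for v in final], [round(v, 4) for v in target])
```

The initial distribution depends only on the previous source message, while the final distribution depends only on the new one, which is the coarse-grained picture used in the text.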

Figure 2.

An illustration of a sequential information transfer. The time sequence of the source messages is given by . The sequence of the received message is given by . (a) The receiver receives correct messages as , where all the received messages appear at the correct locations of the lower wells in the potential profiles. (b) The receiver obtains the wrong messages as , where all the received messages appear at the locations of the higher wells in the potential profiles.

According to the continuous-time dynamics, the matrix is recognized as the transition probability matrix for the information transfer dynamics at a coarse-grained level. The transition probabilities can be given by , which is recognized as the information driving force [4,29] from the initial position to the final one within a period.

From the description above, we obtain the master equation of this coarse-grained Markov process in discrete time as follows:

where

3. Information Recoverability

3.1. Decision Rules of Information Recovery

In this section, we will discuss two decision rules used to recover information. Due to the stochasticity of the noisy channel, two different source messages and can be both transformed into the same received message by the channel, according to the information driving forces and (see Equations (1) and (3)), respectively. If one extracts the original message from the received message , a decision rule should be employed to justify that originated from rather than another message .

There are mainly two kinds of decision rules frequently used for recovering the source messages. The first kind is the maximum likelihood rule, that is choosing the message such that

The maximum likelihood rule is suitable for the cases where the a priori distribution is uniform or is not important for the information transfer.

The second kind of decision rule is the so-called maximum a posteriori probability. This means selecting the message such that

where the a posteriori probability follows the Bayes equation as

If the a priori distribution is not uniform and carries the significant information of the message, then the maximum a posteriori probability rule is more appropriate than the maximum likelihood rule for the message recovery.

The connection of these two decision rules is that the log ratio r in Equation (5) carries additional information about the a priori distribution compared to the log ratio l in Equation (4) as follows:

where is the log ratio of the a priori probabilities. When the message is transmitted and the log ratio l satisfies Equation (4) or r satisfies Equation (5), then can be recovered correctly. Otherwise, if another message maximizes the likelihood or the a posteriori probability, then rather than the original message can be chosen by the decision rules incorrectly.
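The two decision rules and the relation between their log ratios can be sketched as follows. The channel and prior values are hypothetical and chosen so that the two rules disagree; the dictionary layout and function names are illustrative assumptions.

```python
import math

def ml_decode(y, channel):
    """Maximum likelihood: pick the source message x that maximizes q(y|x)."""
    return max(channel, key=lambda x: channel[x][y])

def map_decode(y, channel, prior):
    """Maximum a posteriori: pick x maximizing p(x|y), proportional to q(y|x) p(x)."""
    return max(channel, key=lambda x: channel[x][y] * prior[x])

# Hypothetical transition probabilities q(y|x) and a non-uniform prior p(x).
channel = {"x1": {"y1": 0.7, "y2": 0.3}, "x2": {"y1": 0.4, "y2": 0.6}}
prior = {"x1": 0.2, "x2": 0.8}

print(ml_decode("y1", channel))          # likelihood favors x1
print(map_decode("y1", channel, prior))  # the prior shifts the decision to x2

# Log ratios for the pair (x1, x2) at the received message y1.
l = math.log(channel["x1"]["y1"] / channel["x2"]["y1"])
evidence = sum(channel[x]["y1"] * prior[x] for x in channel)
posterior = {x: channel[x]["y1"] * prior[x] / evidence for x in channel}
r = math.log(posterior["x1"] / posterior["x2"])
print(abs(r - (l + math.log(prior["x1"] / prior["x2"]))) < 1e-12)
```

With a uniform prior the extra term vanishes and the two rules coincide.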

3.2. Information Recoverability of Noisy Channels

The decision rules can be used to justify the transferred information. However, the ability of the noisy channels to recover the transferred information is still unclear. We need a new physical quantity to quantify the recoverability of the source messages. Obviously, this quantity should be independent of the decision rules. In this subsection, we will discuss the definition of information recoverability for the noisy channels.

For illustration, we first consider the maximum likelihood decision rule. One should be aware that the information driving force or transition probability in Equation (1) is the key quantity that determines the performance of the decision rules, if the a priori distribution is fixed. From the perspective of information theory, the log ratio l in Equation (4) is a fundamental entity to quantify the recoverability of the source messages, because l merely depends on the information driving force . Intuitively, while the a priori distribution is fixed, the inequality indicates the situation that is completely unrecoverable. On the other hand, as increases in the positive regime, the a posteriori distribution (see Equation (6)) conditioned on tends to be more concentrated on than , and hence, becomes more recoverable. On the contrary, as decreases, then becomes less recoverable when is received.

To address our idea more clearly, we rewrite in the following form:

with

Here, and quantify the stochastic mutual information of the messages and contained in the received message , respectively. When is transmitted and is received, is the useful information for recovering . On the other hand, quantifies the noise-induced error, which introduces a spurious correlation between and . The negative sign in represents the part of the useful information from the correct source message that is reduced by the error. Then, the log ratio l quantifies the remaining useful information of while the error information reduces the useful information . The larger l becomes, the more useful information of the source message is preserved in the received message , and becomes more recoverable.

With this consideration, we used the average of the log ratio l to describe the overall recoverability of the source messages, i.e., we can properly define information recoverability R for the maximum likelihood decision rule as follows:

where the average is taken over the ensembles of , , and .

Now, let us discuss the information recoverability for the maximum a posteriori probability decision rule. It can be verified that the average of the log ratio of the a posteriori probabilities r in the maximum a posteriori probability rule (see Equation (5)) is equal to the recoverability R, i.e., , with the log ratio of the a priori probabilities (see Equation (7)) vanishing in the average. This implies that the entity R can be used as a new rationale for the characterization of the recoverability, which should not depend on concrete decision rules.
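The averaging argument can be checked numerically with a short sketch. It assumes that the ensemble average is taken with weights p(x) q(y|x) p(x'), so that R = Σ p(x) p(x') q(y|x) log[q(y|x)/q(y|x')]; the prior and channel values below are hypothetical, and all channel entries are assumed strictly positive so that the logarithms are finite.

```python
import math

def recoverability(prior, channel):
    """Average log ratio of transition probabilities over x, x', and y."""
    n_y = len(channel[0])
    return sum(
        px * pxp * channel[x][y] * math.log(channel[x][y] / channel[xp][y])
        for x, px in enumerate(prior)
        for xp, pxp in enumerate(prior)
        for y in range(n_y)
    )

def marginal_of(prior, channel):
    """Stationary distribution of received messages, p(y) = sum_x q(y|x) p(x)."""
    return [sum(px * channel[x][y] for x, px in enumerate(prior))
            for y in range(len(channel[0]))]

def mutual_information(prior, channel):
    """I = D(p(x, y) || p(x) p(y)), in nats."""
    p_y = marginal_of(prior, channel)
    return sum(px * channel[x][y] * math.log(channel[x][y] / p_y[y])
               for x, px in enumerate(prior) for y in range(len(p_y)))

def error_information(prior, channel):
    """Averaged error information, D(p(x) p(y) || p(x, y)), in nats."""
    p_y = marginal_of(prior, channel)
    return sum(px * p_y[y] * math.log(p_y[y] / channel[x][y])
               for x, px in enumerate(prior) for y in range(len(p_y)))

def mean_prior_log_ratio(prior):
    """Average of log[p(x)/p(x')] over independent x and x'; antisymmetric, so zero."""
    return sum(px * pxp * math.log(px / pxp) for px in prior for pxp in prior)

prior = [0.3, 0.7]                       # hypothetical a priori distribution
channel = [[0.8, 0.2], [0.3, 0.7]]       # hypothetical q(y|x), rows indexed by x

R = recoverability(prior, channel)
print(R, mutual_information(prior, channel) + error_information(prior, channel))
print(mean_prior_log_ratio(prior))
```

Because the prior log ratio averages to zero, the averaged log ratio is the same for both decision rules in this sketch.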

3.3. Information Transfer Rate Enhanced by Recoverability

In this subsection, we derive the novel result that the information transfer rate increases monotonically with recoverability, formally proving that enhancing the ability to recover messages also boosts the rate of reliable information transmission through noisy channels.

The relationship between the information transfer rate and the recoverability can be given by Equations (8) and (9) straightforwardly:

with

Here, I is recognized as the mutual information between the time sequences of the messages and ; is the averaged error information of the information dynamics with the meaning of interpreted in Equations (8) and (9). Since I and can be written in terms of the relative entropies as and , both I and are nonnegative. Thus, by Equation (11), the recoverability R is nonnegative.

The mutual information I quantifies the useful information of the source messages contained in the received messages and characterizes the rate of the information transfer. It is also called the information transfer rate. Equation (11) implies that the information transfer rate I can be given as a function of the recoverability:

We can verify that I is a monotonically increasing function with respect to R with a fixed a priori distribution . This is because both the mutual information I and the recoverability R are convex functions of the information driving force when is fixed (see [31] and Appendix A of this paper). They achieve the same minimum value of 0 at the points , where no source messages can be distinguished from each other. Then, I and R are both monotonically increasing functions in the same directions as . Therefore, I is a monotonically increasing function of R.

From the convexity of the recoverability, one can easily obtain the following two observations. The first observation is that, if the source and received messages and are independent of each other, then all the source messages become unrecoverable. Otherwise, R increases monotonically while each absolute difference between two information driving forces increases (see Appendix A). Here, the difference d is defined as

On the other hand, the information transfer rate I is also a convex function over all the possible information driving forces [32]. The proof of the convexity of I can be given by applying the log sum inequality. The unique minimum of I is provided by zero, and this minimum information transfer rate can be achieved if and only if the messages and are independent of each other, i.e., for all . Thus, both the information transfer rate I and the recoverability R increase monotonically as each absolute difference increases. This indicates that the information transfer can be enhanced by the recoverability monotonically due to Equation (13). The increase in the information transfer rate also implies the improvement of the information recovery, with smaller upper bounds of the error probabilities of the decision rules in Equations (4) and (5) [33].
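The joint monotonic growth of I and R can be illustrated on a simple case. The sketch below assumes a binary symmetric channel with flip probability ε and a uniform prior, neither of which comes from the paper; the gap |q(y|x1) - q(y|x2)| = 1 - 2ε plays the role of the absolute difference between the two information driving forces.

```python
import math

def info_and_recoverability(eps):
    """Return (I, R) in nats for a binary symmetric channel with a uniform prior."""
    prior = [0.5, 0.5]
    channel = [[1.0 - eps, eps], [eps, 1.0 - eps]]
    p_y = [sum(prior[x] * channel[x][y] for x in range(2)) for y in range(2)]
    info = sum(prior[x] * channel[x][y] * math.log(channel[x][y] / p_y[y])
               for x in range(2) for y in range(2))
    rec = sum(prior[x] * prior[xp] * channel[x][y]
              * math.log(channel[x][y] / channel[xp][y])
              for x in range(2) for xp in range(2) for y in range(2))
    return info, rec

# Sweep from the indistinguishable point (eps = 0.5) toward a nearly noiseless channel.
flip_probs = [0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
results = [info_and_recoverability(eps) for eps in flip_probs]
for eps, (info, rec) in zip(flip_probs, results):
    print(f"gap={1 - 2 * eps:.2f}  I={info:.4f}  R={rec:.4f}")
```

In this family both quantities vanish at ε = 0.5 and grow together as the gap widens, consistent with I being an increasing function of R.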

4. Nonequilibrium Information Dynamics

In this section, we will develop the model of nonequilibrium information dynamics by introducing the time-reverse sequence of the sequential information transfer process. We will show that the recoverability is closely related to the nonequilibrium behavior of the Markovian information dynamics described in Equation (3). As we can see from the following discussions, the difference d given in Equation (14) works as the nonequilibrium information driving force of the information dynamics. The recoverability R can be shown as the thermodynamic dissipation cost or the entropy production of the information dynamics, driven by the nonequilibrium information driving force d.

The nonequilibrium behavior of the Markovian information dynamics (Equation (3)) can be seen intuitively from the following fact: When the source message is changed from to , the profile of the potential is changed from to accordingly. Since is different from if , the corresponding equilibrium distribution of the receiver message , which is conditioning on , can differ from the previous equilibrium distribution , which is conditioning on . This indicates that the receiver is driven out of equilibrium in the new potential at the beginning of the message-receiving period. Then, the difference between two information driving forces under different potential profiles at the same received message, where the potential profile is switched, can reflect the degree of this nonequilibrium. With this consideration, we decompose the information driving force in Equation (3) into two parts [4,29]:

with

In Equation (15), if the nonequilibrium strength d vanishes at every source and received message, then the information transfer process (Equation (3)) is at the equilibrium state, and the information driving force degenerates to its equilibrium strength m. In this situation, the new potential is equal to the previous potential plus a constant a, i.e., , and the receiver stays at the same equilibrium state under as that under . Then, the nonequilibriumness in the information transfer vanishes, and the vanishing nonequilibrium strength at every source and received message is called the equilibrium point of the information transfer. Consequently, the equilibrium point makes any two different source messages and indistinguishable from each other by the receiver (see the Decision Rules of Information Recovery Section). Clearly, this leads to invalid information transfer. On the other hand, a non-vanishing nonequilibrium strength indicates not only that the information transfer is a nonequilibrium process, but also that some source message X can be distinguished from the others by the receiver.

In addition, the nonequilibrium strength d has the same form as Equation (14), where we can regard the source message in Equation (14) as in Equation (16) because the source selects the messages in an i.i.d. manner. This d guarantees the convexity of the information recoverability (Equation (10), Appendix A of this paper). This means that the information recoverability is a non-decreasing function of the absolute nonequilibrium strength. Consequently, driving the information system further away from the equilibrium state gives rise to better recoverability. This leads to the connection between the nonequilibrium thermodynamics and the recoverability.

5. Nonequilibrium Information Thermodynamics

The nonequilibrium behavior of a system can give rise to a thermodynamic dissipation cost in the form of energy, matter, or information, which can be quantified by the entropy production [31,34,35]. In this section, we will discuss the entropy production of the nonequilibrium information dynamics. It is shown that the entropy production can be used to characterize the averaged dissipative information during the nonequilibrium process.

The entropy production can be written in terms of the work performed on the receiver. From the perspective of stochastic thermodynamics [18], for receiving an incoming message , a stochastic work should be performed on the receiver to change the potential profile from to . Meanwhile, the free energy change (the free energy is given in Equation (1)) quantifies the work to change the potential profile within an equilibrium information transfer. The difference between w and quantifies the energy dissipation within a nonequilibrium information transfer, which is recognized as the stochastic entropy production when receiving one single message. This stochastic entropy production can be shown as the logarithmic ratio between two transition probabilities at the same received message, as follows:

where

On the other hand, the receiver can be regarded as a finite-state information storage. It stores the information from the source message, which may be corrupted by environmental noise. However, due to its finite memory, the receiver must erase the stored information of the source message when a new source message is coming. According to the theory of information thermodynamics [1,2,3,4,5], the work w is needed to erase the information of and to write the new information of in the receiver.

The information of the source message stored in the receiver is quantified by the stochastic mutual information between the previous received message and , (see Equation (9)). On the other hand, not all the work is used to erase the information. Due to environmental noise, a part of the work introduces error information, which shows the spurious correlation between and the incoming source message , quantified by the negative stochastic mutual information (see Equation (9)). Then, it can be shown that the stochastic entropy production in Equation (17) can be rewritten as the sum of the useful information i and the error information :

where

Equation (18) indicates that the stochastic entropy production not only quantifies the work dissipation in one single information transfer, but also characterizes the detailed recoverability of each received message, as shown in Equation (8).
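The chain of identities behind Equations (17) and (18) can be written out as a short derivation. This is a sketch under the assumption that the transition probability has the canonical form q(y|x) = exp{-β[U(y|x) - F(x)]}; the symbols σ, q, p, U, F, and β are notational assumptions, since the original notation is not fully recoverable here.

```latex
\begin{align}
w &= U(y_{t-1}\,|\,x_t) - U(y_{t-1}\,|\,x_{t-1}), \qquad
\Delta F = F(x_t) - F(x_{t-1}), \\
\sigma &= \beta\,(w - \Delta F)
        = -\beta\left[U(y_{t-1}|x_{t-1}) - F(x_{t-1})\right]
          + \beta\left[U(y_{t-1}|x_t) - F(x_t)\right] \\
       &= \log\frac{q(y_{t-1}|x_{t-1})}{q(y_{t-1}|x_t)}
        = \log\frac{q(y_{t-1}|x_{t-1})}{p(y_{t-1})}
          - \log\frac{q(y_{t-1}|x_t)}{p(y_{t-1})}.
\end{align}
```

The first term in the last line is the stochastic mutual information between the previous source message and the previous received message, and the second term, with its negative sign, is the error information associated with the incoming source message.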

The average of the stochastic entropy production is the key characterization of a nonequilibrium process in thermodynamics, which is given as follows:

where

The first equality in Equation (19) expresses the entropy production in terms of the averaged energy or work dissipation (see Equation (17)). Since the averaged free energy change vanishes in this case, the averaged work W is completely converted into heat and dissipated into the environment. Since the averaged entropy production is shown as the relative entropy (the third equality in Equation (19)), is always nonnegative, which is equivalently shown as the work bound . This is the thermodynamic second law for the information transfer. Here, the equal sign in the second law implies that the information transfer process is at the equilibrium point (see Equation (16) and the discussion below it). Otherwise, if , then the measurement is at a nonequilibrium point.

On the other hand, the second equality in Equation (19) establishes the bridge between the nonequilibrium energy dissipation and the information transfer. The entropy production is taken as the sum of the useful information, quantified by the mutual information I between the source and received information, and the error information . This expression shows that the entropy production works as the recoverability given in Equation (10):

This equation shows a novel result that recoverability is equivalent to entropy production. This means that information retrieval is linked to thermodynamic costs. In other words, improving the recoverability means increasing the nonequilibrium dissipation cost.

In addition, since both I and are relative entropies of the forms and , both I and are nonnegative. It is seen that the error information works as the interference, which reduces the recoverability of information transfer and enlarges the energy dissipation. For this reason, the work bound in the second law can be given more tightly by the acquired information , shown as

This lower bound indicates that, for valid information transfer , we should perform a positive work of at least to erase the previous transmitted information stored in the receiver. Here, Equation (21) is the so-called generalized Landauer principle [3,4], which gives the minimum work or the minimum entropy production estimation for valid information erasing and transfer in this model on the average level. Since both the entropy production and the mutual information are monotonically increasing functions of the nonequilibrium strength, the entropy production or the work performed on the receiver is a monotonically increasing function of the valid information transfer. This indicates that the more useful information of one source message is transferred, the more energy or work dissipation is needed.
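The equivalence between the averaged work and the recoverability, together with the Landauer-type bound, can be checked with a small numerical sketch. The potentials U(y|x), the prior, and β below are hypothetical values; the averages assume the weights p(x_prev) q(y|x_prev) p(x_new) and the canonical form of the transition probabilities.

```python
import math

def canonical(potential_row, beta):
    """q(y|x) proportional to exp(-beta * U(y|x)) for one source message x."""
    weights = [math.exp(-beta * u) for u in potential_row]
    z = sum(weights)
    return [w / z for w in weights]

beta = 1.2
prior = [0.4, 0.6]                                  # hypothetical p(x)
potential = [[0.0, 2.0, 1.0], [1.5, 0.0, 2.5]]      # hypothetical U(y|x), rows indexed by x
channel = [canonical(row, beta) for row in potential]
n_x, n_y = len(prior), len(potential[0])
p_y = [sum(prior[x] * channel[x][y] for x in range(n_x)) for y in range(n_y)]

# Averaged work of switching the potential from the previous profile to the new one.
avg_work = sum(prior[a] * prior[b] * channel[a][y] * (potential[b][y] - potential[a][y])
               for a in range(n_x) for b in range(n_x) for y in range(n_y))

# Recoverability (average log ratio) and mutual information, both in nats.
rec = sum(prior[a] * prior[b] * channel[a][y] * math.log(channel[a][y] / channel[b][y])
          for a in range(n_x) for b in range(n_x) for y in range(n_y))
info = sum(prior[a] * channel[a][y] * math.log(channel[a][y] / p_y[y])
           for a in range(n_x) for y in range(n_y))

print(beta * avg_work, rec)      # entropy production versus recoverability
print(avg_work, info / beta)     # averaged work versus the information bound
```

The averaged free energy change drops out because the previous and new source messages are drawn from the same distribution, so β times the averaged work matches R in this sketch.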

6. Numerical Results

In this section, we will present a simple example that can test our previous conclusions numerically.

First, we set the source messages to be and the possible received messages . The a priori distribution is given by the following column vector:

The information driving force or the transition probabilities are given by the following matrix:

where follows a given potential, which is shown in Equation (1), and the labels of the columns and rows of represent the indices of the source and received messages, respectively. We then evaluated the recoverability R along a stochastic time sequence of the source and received messages, . This time sequence Z is generated by the given probabilities in Equations (22) and (23). Following the Markov nature, the probabilities of Z can be given by . If the time t is long enough, then the sequence Z will enter the typical set of the joint sequences of the source and received messages [31]. This means that all the time averages of Z will converge to the typical statistics of the joint sequences of the source and received messages. However, if we generate another time sequence , where the sequence of the received messages is the same as that in the typical sequence Z, but with the original sequence of the source messages being randomly shuffled, we then obtain a non-typical sequence with probability . The recoverability R in the information transfer can be evaluated by the time average of the log ratio of the probabilities to :

On the other hand, we can evaluate the average of the stochastic entropy production in Equation (17) along the same time sequence Z as follows:

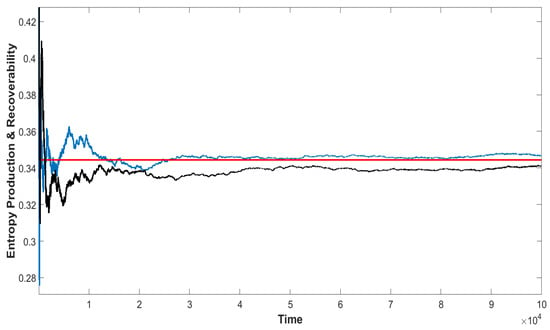

In the long time limit, the entropy production converges to the same theoretical value as the recoverability (Equation (10)). The related results are shown in Figure 3, which verifies that the thermodynamic dissipation cost works as the recoverability.

Figure 3.

Equivalence of the entropy production and the recoverability. Black line, the time average of the stochastic entropy production. Blue line, the time average of the stochastic recoverability. Red line, the theoretical value. Both the entropy production and the recoverability converge to the theoretical value with time.
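The shuffling procedure described above can be sketched numerically. The following is a minimal illustration, not the simulation used for Figure 3. It assumes an i.i.d. source and a memoryless channel, so that the joint sequence probability factorizes and the log ratio of the typical to the shuffled sequence reduces to a sum of per-step log ratios of transition probabilities. The closed form used for the theoretical value, the function names, and the example values of `p` and `q` are assumptions made for illustration. As in the text, the columns of `q` index source messages and the rows index received messages.

```python
import numpy as np

def theoretical_recoverability(p, q):
    """Assumed closed form: R = sum_{s,s',x} p(s) p(s') q(x|s) log[q(x|s) / q(x|s')].

    p : shape (n_src,), a priori distribution of the source messages.
    q : shape (n_rec, n_src), q[x, s] = probability of receiving x given source s.
    """
    joint = q * p[None, :]            # joint[x, s] = p(s) q(x|s)
    px = joint.sum(axis=1)            # marginal of the received messages
    log_q = np.log(q)
    return float(np.sum(joint * log_q) - np.sum(np.outer(px, p) * log_q))

def simulated_recoverability(p, q, t=200_000, seed=0):
    """Running time average of the log ratio between a typical joint sequence
    and a copy whose source messages were randomly shuffled."""
    rng = np.random.default_rng(seed)
    n_rec, n_src = q.shape
    s = rng.choice(n_src, size=t, p=p)                 # i.i.d. source messages
    cdf = np.cumsum(q, axis=0)                         # per-source cumulative distribution
    u = rng.random(t)
    x = (u[None, :] >= cdf[:, s]).sum(axis=0)          # inverse-CDF sampling of received messages
    x = np.minimum(x, n_rec - 1)                       # guard against round-off in the last bin
    s_shuffled = rng.permutation(s)                    # same received sequence, shuffled sources
    log_ratio = np.log(q[x, s]) - np.log(q[x, s_shuffled])
    return np.cumsum(log_ratio) / np.arange(1, t + 1)  # running time average

if __name__ == "__main__":
    p = np.array([0.3, 0.7])                           # hypothetical a priori distribution
    q = np.array([[0.6, 0.1],
                  [0.3, 0.2],
                  [0.1, 0.7]])                         # hypothetical 3x2 channel, columns sum to 1
    running = simulated_recoverability(p, q)
    print("time average:", running[-1])
    print("theory      :", theoretical_recoverability(p, q))
```

Plotting the returned running average against time would give a curve analogous to the blue line in Figure 3, under the assumptions stated above.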

We demonstrate that both the mutual information I (Equation (12)) and the recoverability or the entropy production (Equation (19)) are convex functions of the nonequilibrium strength d (Equations (14) and (16), Appendix A of this paper). Due to the i.i.d. assumption in the Physical Settings Section, we can drop the time indices in the information driving force . Then, the nonequilibrium decomposition of in Equation (15) becomes

with

where m and d are recognized as the equilibrium and nonequilibrium strengths, respectively.

By substituting Equation (24) into the expressions of I (Equation (12)) and R (Equation (19)), respectively, we have that

Here, we note that the total number of source messages need not be equal to that of the received messages in practice. Under this consideration, we set the source messages to be (2 messages) and the possible received messages to be (3 messages). Then, the information driving force becomes a matrix, which can be given by

According to the nonequilibrium decomposition given in Equation (24), for this case, we have the equilibrium and nonequilibrium strengths as follows:

Due to the nonnegativity and the normalization of the conditional probabilities, i.e., and , the constraints on m and d can be given as follows:

By combining these constraints, we can obtain the inequality constraints on d:

According to Equation (26), we have the explicit forms of the mutual information and the recoverability for this case as follows:

where the a priori probabilities and . Without loss of generality, we set for the numerical calculations. Then, we can obtain the inequality constraints on d given in Equation (28) more explicitly:

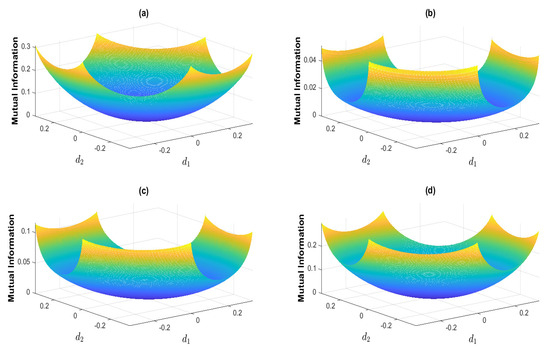

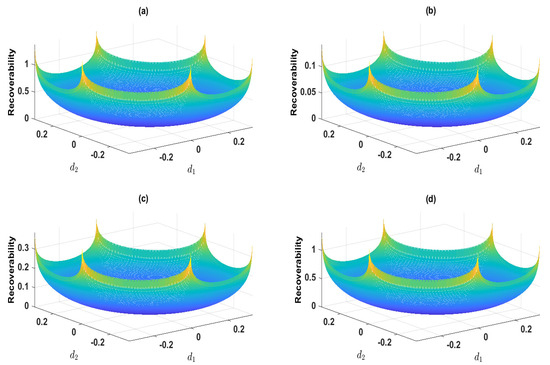

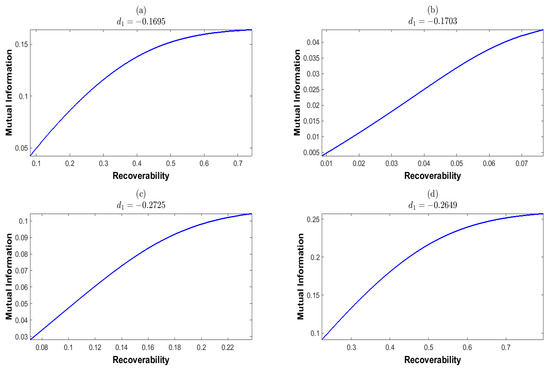

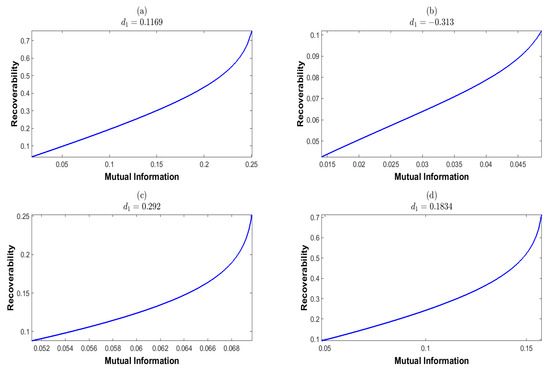

Here, we should note the identity , which is due to the equality constraint on d in Equation (27). This yields the last inequality for . We then randomly select the a priori probability p, which is shown in Table 1. We plot the mutual information I and the recoverability R as functions of the nonequilibrium strengths , which are shown in Figure 4 and Figure 5, respectively. These results show that I and R are both convex for . We next plot I as a function of R and R as a function of I, which are shown in Figure 6 and Figure 7, respectively. These results show that the recoverability and the mutual information are monotonically increasing functions of each other. This indicates that increasing the information recoverability can enhance the information transfer rate, while increasing the information transfer rate enhances the information recoverability.

Table 1.

The a priori probabilities used for numerical illustrations.

Figure 4.

The mutual information as a function of the nonequilibrium strengths . The mutual information is calculated corresponding to the a priori probabilities given in Table 1.

Figure 5.

The recoverability as a function of the nonequilibrium strengths . Each recoverability is calculated corresponding to the a priori probability shown in Table 1.

Figure 6.

The mutual information as a function of the recoverability at fixed . The mutual information and the recoverability are calculated corresponding to the a priori probabilities given in Table 1.

Figure 7.

The recoverability as a function of the mutual information at fixed . The mutual information and the recoverability are calculated corresponding to the a priori probabilities given in Table 1.
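The dependence of I and R on the nonequilibrium strength in the two-source, three-message case can be explored with a short sketch. It assumes that the decomposition takes the two-source form q(x|s1) = m(x) + d(x) and q(x|s2) = m(x) - d(x), with the components of d summing to zero, and it assumes the closed forms of I and R given in the code comments. The values of `p`, `m`, and the sweep direction are hypothetical and are not the ones listed in Table 1.

```python
import numpy as np

def mutual_information(p, q):
    """I = sum_{s,x} p(s) q(x|s) log[q(x|s) / p(x)], with q[x, s] strictly positive."""
    joint = q * p[None, :]
    px = joint.sum(axis=1)
    return float(np.sum(joint * np.log(q / px[:, None])))

def recoverability(p, q):
    """Assumed form: R = sum_{s,s',x} p(s) p(s') q(x|s) log[q(x|s) / q(x|s')]."""
    joint = q * p[None, :]
    px = joint.sum(axis=1)
    log_q = np.log(q)
    return float(np.sum(joint * log_q) - np.sum(np.outer(px, p) * log_q))

def sweep_nonequilibrium_strength(p, m, direction, scales):
    """Evaluate I and R along d = scale * direction at fixed equilibrium part m.

    direction must sum to zero so that both columns of q stay normalized.
    """
    i_vals, r_vals = [], []
    for c in scales:
        d = c * direction
        q = np.column_stack([m + d, m - d])   # columns: source messages; rows: received messages
        i_vals.append(mutual_information(p, q))
        r_vals.append(recoverability(p, q))
    return np.array(i_vals), np.array(r_vals)

if __name__ == "__main__":
    p = np.array([0.3, 0.7])                  # hypothetical a priori probabilities
    m = np.full(3, 1.0 / 3.0)                 # hypothetical equilibrium part
    direction = np.array([1.0, -0.5, -0.5])   # sums to zero
    scales = np.linspace(-0.3, 0.3, 61)       # keeps every entry of q positive
    i_vals, r_vals = sweep_nonequilibrium_strength(p, m, direction, scales)
    for c, i_val, r_val in zip(scales[::10], i_vals[::10], r_vals[::10]):
        print(f"scale={c:+.2f}  I={i_val:.5f}  R={r_val:.5f}")
```

In this sketch both quantities vanish at d = 0, where the two columns of q coincide, and grow as the scale moves away from zero in either direction, which is the qualitative behavior reported in Figures 4 to 7.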

7. Conclusions

In this study, we investigated the nonequilibrium effects on the information recovery. By considering the information dynamics of the sequential information transfer (Equation (3)), we can quantify the recoverability of the source messages as the averaged log ratio of the transition probabilities of the channel (Equations (10) and (19)). We see that the difference between the transition probabilities works as the nonequilibrium strength behind the information dynamics (Equation (15)). The recoverability can be shown as the thermodynamic dissipation cost or the entropy production of the information dynamics (Equations (19) and (20)), driven by the nonequilibrium information driving force. This shows that the dissipation cost is essential for the information recoverability. The recoverability increases monotonically as the nonequilibrium information driving force or the nonequilibrium strength increases. On the other hand, as a function of the recoverability (Equation (19)), the mutual information also increases monotonically as the nonequilibrium strength increases. This demonstrates that the nonequilibrium cost can boost the information transfer from the thermodynamic perspective. In a similar spirit, increasing the information transfer rate can improve the information recoverability. The numerical results support our conclusions.

Finally, we discuss some examples to which the model and conclusions of the present work may potentially be applied. As is well known, information transfer plays an important role in biology. For example, in biological sensory adaptation, which is an important regulatory function possessed by many living systems, organisms continuously monitor time-varying environments while simultaneously adjusting themselves to maintain their sensitivity and fitness in response [36]. In this process, the information is transferred from the stochastic environments to the sensory neurons in the brain through objects that can be treated as noisy channels. Our model provides a simplified version of this process. The recoverability determines the accuracy of the response of the living system to the environment. A similar process also happens at the cellular level, where cells sense information from the environment and transmit it through signal transduction cascades to transcription factors in order to survive in a time-varying environment. As a response, a suitable gene expression is then initiated [37]. Similarly, in gene regulation, the time-varying transcription factor profiles are converted into distinct gene expression patterns through specific promoter activation and transcription dynamics [38,39,40,41]. In this situation, and in Equation (3) can represent the input and the output states at a fixed time. In fact, because the transcription factor profiles are time-varying, the input signals and the corresponding output signals compose the complete trajectories of the input and output processes on a considered time interval . In [42,43], it was shown that the mutual information can be used to quantify the cumulative amount of information exchanged along these trajectories. In the present work, we introduced the joint time sequence Z to describe the trajectories of the input and output processes. We believe that the model discussed in the present work can be applied to the above-mentioned situations. We will address the issues of the nonequilibrium recoverability in these examples in the near future.

Author Contributions

Design, J.W. and Q.Z.; Modeling and Simulations, Q.Z.; Methodology, J.W.; Writing–Original Draft, Q.Z.; Writing–Review & Editing, R.L. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

Q.Z. acknowledges support from the National Natural Science Foundation (12234019).

Data Availability Statement

Data available upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Here, we prove the convexity of the recoverability in Equation (10).

Proof.

Select two different information driving forces and . Then, the convex combination is also an information driving force, which is due to the nonnegativity and the normalization of the probability distributions. Then, we have

The log sum inequality was applied in Equation (A1) [31]. Thus, R is convex with respect to with the unique minimum point if and only if for all and . Note that, in this paper, we chose the conventional rule that the convex function is a synonym for the concave-up function with a positive second derivative. This completes the proof.

Since both the mutual information I and the recoverability R are convex functions of the transition probabilities , both of them are also convex functions of the affine transformation of , i.e.,

This means that both I and R are convex functions of the difference d for every fixed m. □
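The convexity statement can also be checked numerically on random examples. The sketch below is only a sanity check under an assumed closed form of R (given in the code comment), not a substitute for the proof. It draws random pairs of information driving forces, forms their convex combination, and records the largest amount by which R of the mixture exceeds the corresponding mixture of R values. The helper names and the sampling choices are hypothetical.

```python
import numpy as np

def recoverability(p, q):
    """Assumed form: R = sum_{s,s',x} p(s) p(s') q(x|s) log[q(x|s) / q(x|s')]."""
    joint = q * p[None, :]
    px = joint.sum(axis=1)
    log_q = np.log(q)
    return float(np.sum(joint * log_q) - np.sum(np.outer(px, p) * log_q))

def random_channel(rng, n_rec, n_src):
    """Random strictly positive transition matrix whose columns sum to one."""
    q = rng.random((n_rec, n_src)) + 0.05
    return q / q.sum(axis=0, keepdims=True)

def max_convexity_violation(trials=200, n_rec=3, n_src=2, seed=1):
    """Largest value of R(mix) - [lam R(q1) + (1 - lam) R(q2)] over random trials.

    A convex R gives a result that is non-positive up to round-off.
    """
    rng = np.random.default_rng(seed)
    worst = -np.inf
    for _ in range(trials):
        p = rng.dirichlet(np.ones(n_src))
        q1 = random_channel(rng, n_rec, n_src)
        q2 = random_channel(rng, n_rec, n_src)
        lam = rng.random()
        mix = lam * q1 + (1.0 - lam) * q2     # convex combination is again a valid channel
        gap = recoverability(p, mix) - (
            lam * recoverability(p, q1) + (1.0 - lam) * recoverability(p, q2)
        )
        worst = max(worst, gap)
    return worst

if __name__ == "__main__":
    print("largest violation found:", max_convexity_violation())
```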

References

- Koski, J.V.; Kutvonen, A.; Khaymovich, I.M.; Ala-Nissila, T.; Pekola, J.P. On-Chip Maxwell’s Demon as an Information-Powered Refrigerator. Phys. Rev. Lett. 2015, 115, 260602.

- McGrath, T.; Jones, N.S.; Ten Wolde, P.R.; Ouldridge, T.E. Biochemical Machines for the Interconversion of Mutual Information and Work. Phys. Rev. Lett. 2017, 118, 028101.

- Sagawa, T.; Ueda, M. Fluctuation theorem with information exchange: Role of correlations in stochastic thermodynamics. Phys. Rev. Lett. 2012, 109, 180602.

- Horowitz, J.M.; Esposito, M. Thermodynamics with Continuous Information Flow. Phys. Rev. X 2014, 4, 031015.

- Parrondo, J.M.R.; Horowitz, J.M.; Sagawa, T. Thermodynamics of information. Nat. Phys. 2015, 11, 131–139.

- Strong, S.P.; Koberle, R.; Van Steveninck, R.R.D.R.; Bialek, W. Entropy and Information in Neural Spike Trains. Phys. Rev. Lett. 1998, 80, 197.

- Tkačik, G.; Bialek, W. Information processing in living systems. arXiv 2016, arXiv:1412.8752.

- Petkova, M.D.; Tkačik, G.; Bialek, W.; Wieschaus, E.F.; Gregor, T. Optimal decoding of information from a genetic network. arXiv 2016, arXiv:1612.08084.

- Mark, B.L.; Ephraim, Y. Explicit Causal Recursive Estimators for Continuous-Time Bivariate Markov Chains. IEEE Trans. Signal Process. 2014, 62, 2709–2718.

- Ephraim, Y.; Mark, B.L. Bivariate Markov Processes and Their Estimation. Found. Trends Signal Process. 2013, 6, 1–95.

- Hartich, D.; Barato, A.C.; Seifert, U. Stochastic thermodynamics of bipartite systems: Transfer entropy inequalities and a Maxwell’s demon interpretation. J. Stat. Mech. 2014, 2014, P02016.

- Zeng, Q.; Wang, J. Information Landscape and Flux, Mutual Information Rate Decomposition and Connections to Entropy Production. Entropy 2017, 19, 678.

- Wang, J.; Xu, L.; Wang, E.K. Potential landscape and flux framework of nonequilibrium networks: Robustness, dissipation, and coherence of biochemical oscillations. Proc. Natl. Acad. Sci. USA 2008, 105, 12271–12276.

- Wang, J. Landscape and flux theory of non-equilibrium dynamical systems with application to biology. Adv. Phys. 2015, 64, 1–137.

- Li, C.; Wang, E.; Wang, J. Potential flux landscapes determine the global stability of a Lorenz chaotic attractor under intrinsic fluctuations. J. Chem. Phys. 2012, 136, 194108.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley: New York, NY, USA, 2003.

- Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 2012, 75, 126001.

- Jacod, J.; Protter, P. Probability Essentials; Springer: Berlin/Heidelberg, Germany, 2000.

- Wu, S.J.; Chu, M.T. Markov chains with memory, tensor formulation, and the dynamics of power iteration. Appl. Math. Comput. 2017, 303, 226–239.

- Gray, R.; Kieffer, J. Mutual information rate, distortion, and quantization in metric spaces. IEEE Trans. Inf. Theory 1980, 26, 412–422.

- Maes, C.; Redig, F.; van Moffaert, A. On the definition of entropy production, via examples. J. Math. Phys. 2000, 41, 1528–1554.

- Zeng, Q.; Wang, J. Nonequilibrium Enhanced Classical Measurement and Estimation. J. Stat. Phys. 2022, 189, 10.

- Yan, L.; Ge, X. Entropy-Based Energy Dissipation Analysis of Mobile Communication Systems. arXiv 2023, arXiv:2304.06988.

- Tasnim, F.; Freitas, N.; Wolpert, D.H. The fundamental thermodynamic costs of communication. arXiv 2023, arXiv:2302.04320.

- Ball, F.; Yeo, G.F. Lumpability and Marginalisability for Continuous-Time Markov Chains. J. Appl. Probab. 1993, 30, 518–528.

- Mandal, D.; Jarzynski, C. Work and information processing in a solvable model of Maxwell’s demon. Proc. Natl. Acad. Sci. USA 2012, 109, 11641–11645.

- Barato, A.C.; Seifert, U. Unifying three perspectives on information processing in stochastic thermodynamics. Phys. Rev. Lett. 2014, 112, 219–233.

- Gallager, R.G. Information Theory and Reliable Communication; Wiley: New York, NY, USA, 1968.

- Gaspard, P. Time-reversed dynamical entropy and irreversibility in Markovian random processes. J. Stat. Phys. 2004, 117, 599–615.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2006; ISBN 13 978-0-471-24195-9.

- Verdu, S.; Han, T.S. A general formula for channel capacity. IEEE Trans. Inf. Theory 1994, 40, 1147–1157.

- Barato, A.C.; Hartich, D.; Seifert, U. Rate of Mutual Information Between Coarse-Grained Non-Markovian Variables. J. Stat. Phys. 2013, 153, 460–478.

- Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, MA, USA, 2004.

- Holliday, T.; Goldsmith, A.; Glynn, P. Capacity of finite state channels based on Lyapunov exponents of random matrices. IEEE Trans. Inf. Theory 2006, 52, 3509–3532.

- Lan, G.; Sartori, P.; Neumann, S.; Sourjik, V.; Tu, Y. The energy–speed–accuracy trade-off in sensory adaptation. Nat. Phys. 2012, 8, 422–428.

- Elowitz, M.B.; Levine, A.J.; Siggia, E.D.; Swain, P.S. Stochastic Gene Expression in a Single Cell. Science 2002, 297, 1183–1186.

- Detwiler, P.B.; Ramanathan, S.; Sengupta, A.; Shraiman, B.I. Engineering aspects of enzymatic signal transduction: Photoreceptors in the retina. Biophys. J. 2000, 79, 2801–2817.

- Tkacik, G.; Callan, C.G., Jr.; Bialek, W. Information flow and optimization in transcriptional control. Proc. Natl. Acad. Sci. USA 2008, 105, 265–270.

- Tkacik, G.; Callan, C.G., Jr.; Bialek, W. Information capacity of genetic regulatory elements. Phys. Rev. E 2008, 78, 011910.

- Ziv, E.; Nemenman, I.; Wiggins, C.H. Optimal signal processing in small stochastic biochemical networks. PLoS ONE 2007, 2, e1077.

- Moor, A.L.; Zechner, C. Dynamic Information Transfer in Stochastic Biochemical Networks. arXiv 2022, arXiv:2208.04162v1.

- Tostevin, F.; Ten Wolde, P.R. Mutual Information between Input and Output Trajectories of Biochemical Networks. Phys. Rev. Lett. 2009, 102, 218101.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).