Abstract

Telecom fraud detection is of great significance in online social networks, yet the massive, redundant, incomplete, and uncertain information in these networks makes it a challenging task. This paper therefore uses entropy functions to measure attribute correlation, optimizes data quality accordingly, and then addresses telecommunication fraud detection with incomplete information. First, to filter out redundancy and noise, we propose an attribute reduction algorithm based on the max-correlation and max-independence rate (MCIR) to improve data quality. Then, we design a rough-gain anomaly detection algorithm (MCIR-RGAD) that uses maximal consistent blocks to handle missing data. Finally, experimental results on authentic telecommunication fraud data and UCI data show that the MCIR-RGAD algorithm reduces computation time, improves data quality, and processes incomplete data effectively.

1. Introduction

The digital age has dramatically facilitated many aspects of our lives, but cybersecurity issues threaten the benefits of technology. Because unsafe information and illegitimate users are nearly indistinguishable from regular information and users, cybersecurity threats [1], especially online fraud, telecommunications fraud [2], online social network fraud [3], credit card fraud [4], bank fraud [5], and fraudulent credit applications [6], have become a knotty governance problem.

Fraud detection [7] is a kind of anomaly detection and is usually tackled as a classification problem, screening out abnormal items with traditional machine learning methods [8,9] or deep learning methods [10,11,12]. Compared with traditional machine learning models, deep learning models suffer from poor interpretability and offer little guidance for parameter tuning, and their computation time grows with model complexity. Traditional machine learning is therefore still widely studied and applied because of its strong interpretability and fast computation. Traditional outlier detection methods are mainly distribution-based [13], distance-based [14], density-based [15], or clustering-based [16]. However, these approaches rely heavily on the relevance of features to the classification task. When the feature space is large, invalid, irrelevant, redundant, or noisy attributes in the data inevitably degrade model performance. As the saying goes, “Data and features determine the upper limit of machine learning, and models and algorithms only approach this upper limit”. Therefore, in practice, the performance of traditional machine learning models is largely limited by the data, mainly in four respects. First, data are complex, usually containing multi-dimensional, multi-level, and multi-granularity information, which makes their application and processing complex and diverse. Second, heterogeneous data [17] often mix several kinds of information, such as numerical and categorical values, which makes them difficult to process effectively. Third, the uncertainty [18], redundancy [19], and inconsistency [20] of data complicate the classification task. Fourth, the information contained in missing data [21] is hard to use effectively.

To solve the above problems in telecom fraud, mine fraudulent behavior, and avoid unnecessary economic losses, a large body of telecom fraud research has emerged. Traditional telecom fraud detection methods typically rely on compiling blacklists of fraudulent numbers to discover and detect fraudulent users. However, fraud strategies have evolved, and these methods are no longer adequate. Therefore, behavioral interaction-based [22], topology-based [23], and content-based [24] approaches have arisen to mine information valuable for fraud detection from multiple network domains of telecommunication data (SMS data, user data, call data, and app Internet data). Meanwhile, because labeled data are rare and expensive, unsupervised methods [25,26] are used for fraud mining. However, these studies do not consider fraud from the perspective of the uncertainty of the data itself, although the incompleteness of data and the relevance of attributes play a critical role in effective fraud detection. Information theory and rough set theory, as effective means of measuring uncertainty, provide new ideas for solving the telecommunication fraud problem.

In recent years, with the intensive study of rough set theory [27], outlier detection methods based on rough sets and information theory have received extensive attention; they provide theoretical support for discovering important information and classifying complex objects. These methods are highly interpretable and can handle unlabeled, heterogeneous, redundant, incomplete, or uncertain data. Attribute reduction [19,20,21,28,29,30,31,32], or feature selection, simplifies data, reduces data dimensionality, and improves classification ability by filtering out irrelevant or redundant features, which also helps avoid overfitting. However, vanilla attribute reduction algorithms [33] of classical rough set theory can only learn from data divided by the strict indistinguishable relation. This equivalence relation is too strict to handle incomplete, ordered, mixed, and dynamic data, and these algorithms have poor fault tolerance. To overcome this limitation, variants of rough set theory, for example, those based on attribute importance [19,20], the positive region, the tolerance relation [28], the maximal consistent block [21], the discernibility matrix [29], and incremental updating [30], have proved effective in incomplete information systems [34], ordered information systems [35], mixed-valued information systems [14], and dynamic information systems [36]. Generally speaking, discernibility matrix-based methods are time-consuming and infeasible for large-scale datasets, whereas attribute importance-based methods have low time complexity. Moreover, the tolerance relation is a weakened form of the indistinguishable relation and can effectively handle incomplete information. A maximal consistent block is a maximal set of objects under the tolerance relation, so it contains neither redundant or irrelevant information nor information loss. In contrast to tolerance classes, the maximal consistent block therefore expresses the objects' information under a covering more accurately and achieves higher accuracy.

After weighing the applicability of these variants, this paper introduces, for the first time, the maximal consistent block to handle the uncertainty, incompleteness, and redundancy of data in the telecom fraud detection problem. Guided and inspired by previous research, this paper proposes an anomaly detection method (MCIR-RGAD) based on attribute correlation and the maximal consistent block. The main contributions of this paper are summarized as follows:

- From the perspective of improving data quality with entropy functions under rough set theory, we analyze how attribute correlation and independence affect attribute importance, and design a max-correlation and max-independence rate attribute reduction algorithm (MCIR) to eliminate the redundancy and noise contained in the data.

- From the perspective of handling incomplete data, a rough gain anomaly detection algorithm (RGAD) is constructed based on maximal consistent blocks and information gain; it makes effective use of missing data and provides an effective solution for incomplete data processing and feature information measurement.

- The effectiveness of the MCIR-RGAD algorithm is verified on UCI datasets and an authentic telecom fraud dataset. The results show that, compared with eight other attribute reduction algorithms, the MCIR-RGAD algorithm reduces time complexity and effectively uses the information contained in missing data to improve model performance.

2. Preliminaries

2.1. Rough Set Theory

Rough set theory is an effective way to tackle and utilize incomplete datasets. The information contained in datasets can be represented as an information system.

A decision information system is a tuple $DIS = (U, A, V, f)$, where $U$ is a nonempty finite set of objects known as the universe. The attribute set $A = C \cup D$ is composed of the condition attribute set $C$ and the decision attribute set $D$, where $C \cap D = \emptyset$. The information function $f: U \times A \to V$ maps each object and attribute to an information value, i.e., $f(x, a) \in V_a$ for every $a \in A$, where $V = \bigcup_{a \in A} V_a$. Normally, a decision information system is abbreviated as $DIS = (U, C \cup D)$.

Definition 1

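(Indistinguishable Relation [37]). Given an information system $DIS = (U, C \cup D)$ and an attribute subset $B \subseteq C$, an equivalence relation on the set $U$ is called the indistinguishable relation $IND(B)$ if it satisfies

$$IND(B) = \{(x, y) \in U \times U : f(x, a) = f(y, a),\ \forall a \in B\},$$

where $[x]_{IND(B)} = \{y \in U : (x, y) \in IND(B)\}$ is the equivalence class of $x$. The set family $U/IND(B) = \{[x]_{IND(B)} : x \in U\}$ is a partition of $U$ with respect to $B$, i.e., $\bigcup_{x \in U} [x]_{IND(B)} = U$ and any two distinct classes are disjoint. Normally, $U/IND(B)$ and $[x]_{IND(B)}$ are abbreviated as $U/B$ and $[x]_B$, respectively.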
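As a minimal sketch, assuming the information system is stored as a Python list of dicts (one dict per object $x_i$, keyed by hypothetical attribute names `a1`, `a2`), the partition $U/B$ of Definition 1 can be computed by grouping objects on their value tuple over $B$:

```python
from collections import defaultdict

def ind_partition(table, attrs):
    """Return U/IND(B) as a list of equivalence classes (sets of object indices).

    table: list of dicts, one per object x_i, mapping attribute name -> value.
    attrs: the attribute subset B.
    """
    classes = defaultdict(set)
    for i, row in enumerate(table):
        # Objects with identical values on every attribute of B get the same key.
        key = tuple(row[a] for a in attrs)
        classes[key].add(i)
    return list(classes.values())

# Hypothetical complete table with condition attributes "a1" and "a2".
U = [
    {"a1": "short", "a2": "home"},
    {"a1": "short", "a2": "home"},
    {"a1": "long", "a2": "home"},
    {"a1": "long", "a2": "away"},
]
print(sorted(sorted(c) for c in ind_partition(U, ["a1", "a2"])))  # [[0, 1], [2], [3]]
print(sorted(sorted(c) for c in ind_partition(U, ["a2"])))        # [[0, 1, 2], [3]]
```

Dropping an attribute from $B$ can only merge classes, which is why the partition under `["a2"]` is coarser.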

In an incomplete information system, the indistinguishable relation cannot effectively divide objects with incomplete information, so the tolerance relation is introduced as follows.

Definition 2

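(Tolerance Relation [37]). Given an incomplete information system $DIS = (U, C \cup D)$ and an attribute subset $B \subseteq C$, the binary tolerance relation of incomplete information on $U$ is defined as

$$T(B) = \{(x, y) \in U \times U : f(x, a) = f(y, a) \lor f(x, a) = * \lor f(y, a) = *,\ \forall a \in B\},$$

where * means the incomplete information. The tolerance class of $x$ is $T_B(x) = \{y \in U : (x, y) \in T(B)\}$. Because $T(B)$ is reflexive and symmetric but not necessarily transitive, tolerance classes may overlap. Denote by $U/T(B) = \{T_B(x) : x \in U\}$, or simply $U/B$ when no confusion arises, the family of all tolerance classes of $T(B)$.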
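The tolerance classes $T_B(x_i)$ of Definition 2 can be sketched in the same style. The table, attribute names, and helper names below are hypothetical; with 0-based indices, the example also shows that $T(B)$ is not transitive: $x_0$ tolerates $x_1$ and $x_1$ tolerates $x_2$, yet $x_0$ does not tolerate $x_2$.

```python
MISSING = "*"  # marker for incomplete information, as in Definition 2

def tolerant(x, y, attrs):
    """(x, y) in T(B): on every a in B the values agree or at least one is missing."""
    return all(x[a] == y[a] or MISSING in (x[a], y[a]) for a in attrs)

def tolerance_classes(table, attrs):
    """Return the tolerance class T_B(x_i) of every object as a set of indices."""
    n = len(table)
    return [{j for j in range(n) if tolerant(table[i], table[j], attrs)}
            for i in range(n)]

# Hypothetical incomplete table; "*" marks a missing value.
U = [
    {"a1": "short", "a2": "home"},
    {"a1": "*", "a2": "home"},
    {"a1": "long", "a2": "*"},
    {"a1": "long", "a2": "away"},
]
T = tolerance_classes(U, ["a1", "a2"])
# T == [{0, 1}, {0, 1, 2}, {1, 2, 3}, {2, 3}]; the classes overlap, unlike a partition.
```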

Definition 3

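(Maximal Consistent Block [31]). Given an incomplete information system $DIS = (U, C \cup D)$ and an attribute subset $B \subseteq C$, a set $Y \subseteq U$ is said to be a maximal consistent block of attribute set $B$ if $Y$ satisfies

- (i) $\forall x, y \in Y$, $(x, y) \in T(B)$; a set satisfying (i) is called a consistent block;

- (ii) $\forall z \in U \setminus Y$, $\exists y \in Y$ s.t. $(y, z) \notin T(B)$.

Let $\mathcal{C}_B$ denote the set of all maximal consistent blocks with respect to $B$. The set of all maximal consistent blocks (MCBs) containing $x$ is denoted by $\mathcal{C}_B(x) = \{Y \in \mathcal{C}_B : x \in Y\}$.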
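Condition (i) says that a consistent block is a clique of the graph whose edges are the tolerant pairs of $T(B)$, and condition (ii) says that the clique cannot be extended, so maximal consistent blocks are exactly the maximal cliques of that graph. A minimal sketch under that observation follows; it uses the basic Bron-Kerbosch enumeration, which is our choice for illustration and not necessarily the procedure used in [31] or in this paper. The table and helper names are hypothetical.

```python
MISSING = "*"

def tolerant(x, y, attrs):
    """(x, y) in T(B): values agree or at least one is missing, for every a in B."""
    return all(x[a] == y[a] or MISSING in (x[a], y[a]) for a in attrs)

def maximal_consistent_blocks(table, attrs):
    """Enumerate all maximal consistent blocks as maximal cliques of the tolerance graph."""
    n = len(table)
    nbrs = {i: {j for j in range(n) if j != i and tolerant(table[i], table[j], attrs)}
            for i in range(n)}
    blocks = []

    def expand(r, p, x):
        # r: current consistent block; p: objects that could still extend it;
        # x: already-explored objects that could also extend it.
        if not p and not x:
            blocks.append(frozenset(r))  # condition (ii) holds: r is maximal
            return
        for v in list(p):
            expand(r | {v}, p & nbrs[v], x & nbrs[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(range(n)), set())
    return blocks

def blocks_of(blocks, i):
    """C_B(x_i): the maximal consistent blocks that contain object x_i."""
    return [Y for Y in blocks if i in Y]

# Hypothetical incomplete table; "*" marks a missing value.
U = [
    {"a1": "short", "a2": "home"},
    {"a1": "*", "a2": "home"},
    {"a1": "long", "a2": "*"},
    {"a1": "long", "a2": "away"},
]
mcb = maximal_consistent_blocks(U, ["a1", "a2"])
# set(mcb) == {frozenset({0, 1}), frozenset({1, 2}), frozenset({2, 3})}
```

On a complete table the tolerance graph is a disjoint union of cliques, so the maximal consistent blocks coincide with the equivalence classes of Definition 1.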

Example 1.

Consider descriptions of several users of the telecom network in Table 1. It is an incomplete decision information system , , where , with -Duration, -Place, -Platform, and * means the incomplete information.

Table 1.

An incomplete information system about the telecom communication heterogeneous data.

According to the tolerance relation in Definition 2, it follows that , where , , , .

By the concept of the maximal consistent block in Definition 3, the maximal consistent block of attribute set is .

Definition 4

(Information Granularity [37]). Given an incomplete information system , , is an attribute subset, and the information granularity of attribute is defined as

where and mean the number of the indistinguishable relation set and set , respectively.

Remark 1.

Given an incomplete information system , , , conditional granularity, mutual information granularity, and joint granularity of attribute set and are defined as [28,38] , , , where means the division of knowledge under attribute and attribute .

2.2. Information Theory

Information entropy measures system uncertainty from an information-theoretic perspective. The magnitude of entropy reflects the degree of disorder or uncertainty of the system through the distribution of data information.

Definition 5

(Information Entropy [37]). Given an incomplete information system , , is an attribute subset, and the information entropy is defined as

where means the element number of object set .

Remark 2.

Following [37], the information entropy is also called a granulation measure. For a complete information system, Equation (4) is equivalently defined as

where , , and , . The form of Equation is consistent with the basic definition of information entropy , where , . Therefore, we can understand the change of information entropy from the relationship between sets by a Venn diagram. In the information theory of rough set theory, the finer the partition, the bigger the entropy.
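The exact expressions of Definitions 4 and 5 are not reproduced above. One common choice in the incomplete-information literature, assumed here for illustration only, is the knowledge granulation $GK(B) = \frac{1}{|U|^2}\sum_{i=1}^{|U|} |T_B(x_i)|$ and the complementary entropy $E(B) = \frac{1}{|U|}\sum_{i=1}^{|U|}\left(1 - \frac{|T_B(x_i)|}{|U|}\right) = 1 - GK(B)$, where $T_B(x_i)$ is the set of objects tolerant with $x_i$ under Definition 2. The sketch below computes both with exact fractions and checks the behavior stated in Remark 2: refining the knowledge by adding an attribute cannot raise the granularity or lower the entropy (in this hypothetical example it changes both strictly).

```python
from fractions import Fraction

MISSING = "*"

def tolerant(x, y, attrs):
    """(x, y) in T(B): values agree or at least one is missing, for every a in B."""
    return all(x[a] == y[a] or MISSING in (x[a], y[a]) for a in attrs)

def class_sizes(table, attrs):
    """|T_B(x_i)| for every object x_i."""
    return [sum(tolerant(x, y, attrs) for y in table) for x in table]

def granularity(table, attrs):
    """Assumed form GK(B) = (1/|U|^2) * sum_i |T_B(x_i)|."""
    n = len(table)
    return Fraction(sum(class_sizes(table, attrs)), n * n)

def entropy(table, attrs):
    """Assumed complementary form E(B) = (1/|U|) * sum_i (1 - |T_B(x_i)|/|U|)."""
    n = len(table)
    return sum(1 - Fraction(s, n) for s in class_sizes(table, attrs)) / n

# Hypothetical incomplete table; "*" marks a missing value.
U = [
    {"a1": "short", "a2": "home"},
    {"a1": "*", "a2": "home"},
    {"a1": "long", "a2": "*"},
    {"a1": "long", "a2": "away"},
]
for B in (["a2"], ["a1", "a2"]):
    print(B, granularity(U, B), entropy(U, B))
# ['a2'] 3/4 1/4
# ['a1', 'a2'] 5/8 3/8
```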

Remark 3.

In a complete information system , condition entropy , mutual information , and joint entropy of attribute B and D are defined as [39] , , .

Theorem 1

(Entropy Measure). Given an incomplete information system , , , conditional entropy, mutual information, and joint entropy of attribute and are defined as

where .

Proof of Theorem 1

The specific proof of Theorem 1 can be found in Appendix A. □

3. Max-Correlation and Max-Independence Rate-Rough Gain Anomaly Detection Algorithm (MCIR-RGAD)

In the information age of Industry 4.0, the volume of data with a large number of attributes has proliferated. However, not all attributes are relevant to the classification task. In cyberspace, data may be correlated, repetitive, or similar, bringing no new or valuable information to the anomaly detection task while incurring unnecessary time costs. Moreover, attributes irrelevant to the anomaly detection task may act as noise: they not only fail to help model learning but may even degrade detection performance. In addition, data may inevitably be lost during collection, processing, and storage. The lost data may itself contain hidden anomaly information, and simple subjective imputation or deletion wastes the information it carries. As a result, the attributes in the data are usually not fully exploited.

The entropy function of information theory, as a quantitative paradigm for measuring uncertainty, can effectively measure the correlation between attributes. In addition, missing data carry a certain degree of uncertainty, and this uncertainty may also contain valuable information. Therefore, this paper uses the mutual information function to identify important attributes that are highly relevant to the classification task and have low redundancy. Further, the maximal consistent blocks of rough set theory are used to process the missing data, mining information useful for anomaly detection from the uncertainty of incomplete data to improve detection performance.

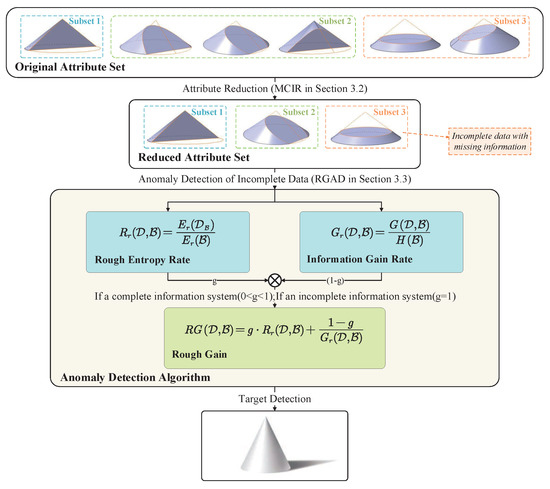

This section is organized in three parts. Section 3.1 theoretically discusses the relationship between attribute correlation and redundancy in the incomplete information system. Section 3.2 then presents an attribute reduction algorithm (MCIR) based on correlation and independence information. Further, Section 3.3 designs a rough gain anomaly detection algorithm (RGAD) based on the maximal consistent block to handle incompleteness in authentic telecom fraud detection. Figure 1 shows the framework of the proposed methodology.

Figure 1.

The framework of the proposed methodology.

3.1. Relationship of Correlation and Redundancy

To date, many criteria that consider the correlation or redundancy of new classification information have been proposed, such as JMI, CMIM, CIFE, ICAP, ICI, and MCI, as summarized in [19]. These criteria are as follows.

(① + ② + ③),

②③②,

② + ③②,

① + ③,

② + ③ + (① + ③),

where , ①, ②, and ③.

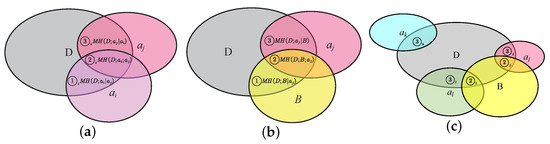

In Figure 2a, ① manifests the relevant information of selected attribute , ② means the redundant information between the attributes , , and , and ③ represents the relevant information of candidate attribute.

Figure 2.

The relationship of relevance and independence in the complete information system: (a) is a variable, is fixed; (b) is a variable, B is fixed; (c) candidate attribute selection.

In the literature, criterion functions frequently compare correlation and redundancy between each candidate attribute and each attribute of the selected attribute set . However, this pairwise comparison involves many redundant information computations. Therefore, this paper regards the selected attribute reduction set as a whole and studies the correlation and redundancy between the candidate attribute and the attribute reduction set , as shown in Figure 2b.

For the convenience of formulation, we set ①, ②, and ③ denoted as ① , ② , and ③ , respectively. In Figure 2b, ① manifests the relevant information of selected attribute , ② means the redundant information between the attributes , , and , and ③ represents the relevant and independent information of candidate attribute .

Theorem 2

(③ ≜ ① + ② + ③). Given an incomplete information system , , has been selected, and is a candidate attribute, then the correlation and redundancy relationship between the attribute , , and satisfies

Proof of Theorem 2

According to the definition of symbols ①, ②, and ③, we deduce

(① +② + ③) − ③ .

In the decision information system, the attribute set has been selected, and is certain, so the division of knowledge is definite. Then, is a constant. There is a nonnegative constant , such that

Hence, Equation (7) holds, i.e., ③ ≜ ① + ② + ③. □

Theorem 2 shows that the correlation of the newly selected attribute is consistent with that of the attribute reduction set , and the two have the same effect in classification detection.
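The argument behind Theorem 2 can be checked numerically under an assumption: read the regions of Figure 2b as Shannon quantities on a complete table, with ③ $= I(a; D \mid B)$ and ① + ② $= I(B; D)$, so that ① + ② + ③ $= I(B \cup \{a\}; D)$ by the chain rule. Since $I(B; D)$ does not depend on the candidate $a$, maximizing ③ and maximizing ① + ② + ③ rank candidates identically. The sketch uses plain Shannon entropy on a small hypothetical table, whereas the paper works with rough-set entropy measures on incomplete systems.

```python
from collections import Counter
from math import log2

def H(rows, cols):
    """Shannon entropy (bits) of the joint distribution of the given columns."""
    n = len(rows)
    counts = Counter(tuple(r[c] for c in cols) for r in rows)
    return -sum(k / n * log2(k / n) for k in counts.values())

def mi(rows, xs, ys):
    """I(X;Y) = H(X) + H(Y) - H(X,Y)."""
    return H(rows, xs) + H(rows, ys) - H(rows, xs + ys)

def cmi(rows, xs, ys, zs):
    """I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)."""
    return H(rows, xs + zs) + H(rows, ys + zs) - H(rows, xs + ys + zs) - H(rows, zs)

# Hypothetical complete table: "b" is already selected (B), "a" and "c" are
# candidate attributes, and "d" is the decision attribute D.
rows = [
    {"b": 0, "a": 0, "c": 0, "d": 0},
    {"b": 0, "a": 1, "c": 0, "d": 1},
    {"b": 1, "a": 0, "c": 1, "d": 1},
    {"b": 1, "a": 1, "c": 1, "d": 1},
    {"b": 0, "a": 0, "c": 1, "d": 0},
    {"b": 1, "a": 1, "c": 0, "d": 1},
]
B, D = ["b"], ["d"]
for cand in ("a", "c"):
    total = mi(rows, B + [cand], D)    # regions 1 + 2 + 3
    region3 = cmi(rows, [cand], D, B)  # region 3
    # total - region3 equals I(B;D) (regions 1 + 2) for every candidate.
    print(cand, round(total, 4), round(region3, 4), round(total - region3, 4))
```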

Theorem 3

(① + ③ ≜ ③ − ②). Given an incomplete information system , , suppose attribute set has been selected, and is a candidate attribute, then the correlation and redundancy relationship between the attribute , , and satisfies

Proof of Theorem 3

According to the definition of symbols ①, ②, and ③, we have that (① + ③) − (③ − ②)∣.

Based on Equation (8) in Theorem 2, ③ ① + ② + ③ holds, hence

=∣(① + ③) − (③ − ②)∣

=∣(① + ③) − [(① + ② + ③ −) − ②]∣

=∣(① + ③) − (① + ③ −)∣ =

Hence, Theorem 3 is proved, i.e., ① + ③ ≜ ③ − ②. □

Theorem 3 shows that considering only the correlation between the new attribute and the selected attribute set is equivalent to considering both the correlation and the redundancy of the new attributes .

3.2. Max-Correlation and Max-Independence Rate Algorithm (MCIR)

In light of the above analysis and inspired by the literature [19], the max-correlation and max-independence rate algorithm (MCIR) is introduced as follows.

Definition 6

(MCIR). Given an incomplete information system , , , suppose and , then the max-correlation and max-independence rate function is presented as

where , i.e.③②}.

The principle of the MCIR algorithm is to maximize the correlation and independence of new classification information and to minimize its redundancy with the already selected attributes. In rough set theory, information entropy is defined from the division of objects by their attribute information: the finer the division, the greater the entropy. Therefore, when increasing correlation, the system tends to select attributes that carry more new information.

The attribute reduction algorithm based on max-correlation and max-independence rate is shown in Algorithm 1.

Algorithm 1: Max-Correlation and Max-Independence Rate (MCIR)

Input: an information system. Output: an attribute reduction set.
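The body of Algorithm 1 and the exact rate function of Definition 6 are not reproduced here. As a simplified stand-in, assuming Shannon entropy on complete discrete data, the sketch below performs greedy forward selection: at each step it adds the candidate with the largest conditional mutual information $I(a; D \mid B)$ (region ③ in Figure 2b) and stops when no candidate adds more than a small tolerance `eps`. The function name `greedy_select`, the tolerance, and the stopping rule are hypothetical.

```python
from collections import Counter
from math import log2

def H(rows, cols):
    """Shannon entropy (bits) of the joint distribution of the given columns."""
    n = len(rows)
    counts = Counter(tuple(r[c] for c in cols) for r in rows)
    return -sum(k / n * log2(k / n) for k in counts.values())

def cmi(rows, xs, ys, zs):
    """I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)."""
    return H(rows, xs + zs) + H(rows, ys + zs) - H(rows, xs + ys + zs) - H(rows, zs)

def greedy_select(rows, candidates, decision, eps=1e-9):
    """Hypothetical MCIR stand-in: forward selection by I(a; D | B)."""
    selected, remaining = [], list(candidates)
    while remaining:
        # New relevant information each candidate brings beyond the selected set B.
        scores = {a: cmi(rows, [a], decision, selected) for a in remaining}
        best = max(remaining, key=scores.get)
        if scores[best] <= eps:
            break  # every remaining attribute is redundant or irrelevant given B
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical table: "x" determines "d", "y" duplicates "x", "z" is noise.
x = [0, 0, 1, 1, 0, 1]
z = [0, 1, 0, 1, 1, 0]
rows = [{"x": xi, "y": xi, "z": zi, "d": xi} for xi, zi in zip(x, z)]
print(greedy_select(rows, ["x", "y", "z"], ["d"]))  # ['x']
```

The duplicate `y` is rejected because, once `x` is selected, it contributes no information about `d` beyond `x`; this mirrors the redundancy filtering that MCIR targets.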

The relative importance of correlation and redundancy between attributes varies with the data obtained in different scenarios. In other words, in an incomplete information system, when the effect of correlation is far greater than that of redundancy, it is more effective to add new information related to the decision attribute. When similar, redundant, and repetitive information introduces noise that affects detection and classification, it is necessary to increase correlation and reduce redundancy.

From the relationship of relevance and independence of the MCIR algorithm in Definition 6, Figure 2c satisfies ③ ③③, ② ②. It shows that the order of attribute importance is , i.e., attribute is better than attribute , and attribute is better than attribute , which can be sorted correctly by the MCIR algorithm.

3.3. Rough Gain Anomaly Detection Algorithm with Max-Correlation and Max-Independence Rate (MCIR-RGAD)

An anomaly detection algorithm (MCIR-RGAD) is designed that uses maximal consistent blocks to horizontally supplement the reduced data; anomaly detection is then carried out on the complemented data. Inspired by the design of information gain in decision trees, the main idea of the MCIR-RGAD algorithm is to construct a correlation function that measures the classification ability of attributes.

The decision tree, one of the basic classification methods of machine learning, performs classification according to the characteristics of the data. It classifies quickly and is highly interpretable and readable. Generally, decision tree learning consists of feature selection, tree generation, and tree pruning. To improve learning efficiency, kernel functions such as information gain, information gain rate, or the Gini coefficient are used to select important features, and the tree is then constructed recursively based on the kernel function. To avoid overfitting, the tree is pruned to balance model complexity against fitting accuracy on the training data.
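For reference, the three classical kernel functions named above can be sketched for a single categorical split in their standard ID3 (information gain), C4.5 (gain ratio, i.e., information gain rate), and CART (weighted Gini impurity) forms. The function names and the toy labels are illustrative only.

```python
from collections import Counter, defaultdict
from math import log2

def entropy(labels):
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())

def gini(labels):
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())

def split(values, labels):
    """Group decision labels by attribute value."""
    groups = defaultdict(list)
    for v, y in zip(values, labels):
        groups[v].append(y)
    return list(groups.values())

def info_gain(values, labels):
    n = len(labels)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in split(values, labels))

def gain_ratio(values, labels):
    n = len(labels)
    split_info = -sum(len(g) / n * log2(len(g) / n) for g in split(values, labels))
    return info_gain(values, labels) / split_info if split_info > 0 else 0.0

def gini_index(values, labels):
    """Weighted Gini impurity after the split (smaller is better)."""
    n = len(labels)
    return sum(len(g) / n * gini(g) for g in split(values, labels))

labels = ["fraud", "fraud", "normal", "normal"]
print(info_gain(["high", "high", "low", "low"], labels))   # 1.0
print(gain_ratio(["high", "high", "low", "low"], labels))  # 1.0
print(gini_index(["high", "high", "low", "low"], labels))  # 0.0
```

Information gain and gain ratio are maximized, while the Gini index is minimized, which is why a kernel function can come with either orientation.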

Both attribute reduction and decision trees work by finding significant features that can classify the decision attribute in information systems. Attribute reduction algorithms can effectively find relevant classification features and achieve effective feature selection. In addition, in an incomplete information system, the object sets given by maximal consistent blocks may intersect, so they cover $U$ rather than partition it; completeness is therefore not satisfied, and the information gain can take negative values when used for decision learning. Therefore, this paper designs an improved algorithm (MCIR-RGAD) to solve the anomaly detection problem in incomplete systems. Moreover, because similar, redundant, repeated, or invalid features are already filtered out by attribute reduction, this paper does not apply decision tree pruning.

Missing data are frequently handled simply by deleting the affected rows or by filling in zero, one, or the previous value. However, explicit deletion or subjective filling destroys the original data, so the missing information cannot be effectively utilized and processed. In an incomplete information system, knowledge can instead be divided according to the compatibility between available and missing information. This division not only retains the existing data information but is also more objective. The kernel function, rough gain, is defined below.

Definition 7

([40] Rough Entropy). Given an incomplete information system , , , rough entropy is defined as

where rough entropy satisfies .
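Since the formula of Definition 7 is not shown above, the sketch below assumes one form of rough entropy used in the literature for incomplete information systems, $E_r(B) = -\sum_{i=1}^{|U|} \frac{1}{|U|}\log_2 \frac{1}{|T_B(x_i)|}$, built on the tolerance classes $T_B(x_i)$ of Definition 2; the form in [40] may differ (for example, by using maximal consistent blocks). Under this form, $0 \le E_r(B) \le \log_2 |U|$: the lower bound is reached when every tolerance class is a singleton and the upper bound when every class equals $U$. The table is hypothetical.

```python
from math import log2

MISSING = "*"

def tolerant(x, y, attrs):
    """(x, y) in T(B): values agree or at least one is missing, for every a in B."""
    return all(x[a] == y[a] or MISSING in (x[a], y[a]) for a in attrs)

def rough_entropy(table, attrs):
    """Assumed form E_r(B) = -sum_i (1/|U|) * log2(1/|T_B(x_i)|)."""
    n = len(table)
    sizes = [sum(tolerant(x, y, attrs) for y in table) for x in table]
    return -sum(log2(1 / s) for s in sizes) / n

# Hypothetical incomplete table; "*" marks a missing value.
U = [
    {"a1": "short", "a2": "home"},
    {"a1": "*", "a2": "home"},
    {"a1": "long", "a2": "*"},
    {"a1": "long", "a2": "away"},
]
print(round(rough_entropy(U, ["a1", "a2"]), 4))  # tolerance class sizes 2, 3, 3, 2
```

Unlike the complementary entropy, this rough entropy decreases as the knowledge becomes finer, so smaller values indicate more discriminative attributes.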

Inspired by the literature [40], this paper presents a generalized form of the definition of rough entropy for decision making in information division as shown in Definition 8.

Definition 8

(Decision Rough Entropy). Given an incomplete information system , , , the maximal consistent block of attributes and are , , then the decision rough entropy is defined as

Definition 9

(Rough Gain). Given an incomplete information system , , , the maximal consistent block of attributes and are , , then the rough gain is defined as

where is a positive constant, is the rough entropy rate, is decision rough entropy, is rough entropy of attribute , is the information gain rate, is the information gain, , and .

Therefore, this paper selects features with the MCIR algorithm and then combines the advantages of information gain and rough entropy to handle missing data. On this basis, we design the MCIR-RGAD anomaly detection algorithm, shown in Algorithm 2.

Essentially, the MCIR-RGAD algorithm replaces the information gain function of the decision tree with the rough gain function in Definition 9. Contrary to the information gain, a smaller rough gain indicates a better attribute, and the other parts are consistent with the decision tree. Therefore, consistent with the decision tree model, the time complexity of this model is .

Algorithm 2: MCIR-RGAD algorithm

Input: an information system, an attribute reduction set, and a threshold. Output: a decision tree T.
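Because Algorithm 2 changes only the split criterion of a decision tree, its overall structure can be sketched as an ID3-style recursion with a pluggable criterion for which smaller is better. This sketch assumes complete categorical data and uses the conditional entropy $H(D \mid a)$ as a placeholder criterion (minimizing it is equivalent to maximizing information gain); a rough-gain function following Definition 9 would be passed as `criterion` but is not implemented here. The threshold input and the handling of missing values are omitted, and all names are hypothetical.

```python
from collections import Counter
from math import log2

def cond_entropy(rows, attr, target):
    """Placeholder criterion H(target | attr); smaller means a better split."""
    n = len(rows)
    groups = {}
    for r in rows:
        groups.setdefault(r[attr], []).append(r[target])
    total = 0.0
    for labels in groups.values():
        m = len(labels)
        total += m / n * -sum(c / m * log2(c / m) for c in Counter(labels).values())
    return total

def build_tree(rows, attrs, target, criterion=cond_entropy):
    """ID3-style recursion; a node is (attribute, {value: subtree}), a leaf is a label."""
    labels = [r[target] for r in rows]
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not attrs:
        return majority  # pure node or no attributes left
    best = min(attrs, key=lambda a: criterion(rows, a, target))  # smaller score wins
    rest = [a for a in attrs if a != best]
    branches = {}
    for value in {r[best] for r in rows}:
        subset = [r for r in rows if r[best] == value]
        branches[value] = build_tree(subset, rest, target, criterion)
    return (best, branches)

def predict(tree, row, default=None):
    """Follow branches until a leaf; unseen attribute values return `default`."""
    while isinstance(tree, tuple):
        attr, branches = tree
        if row[attr] not in branches:
            return default
        tree = branches[row[attr]]
    return tree

# Hypothetical training data: "calls" separates the classes, "night" does not.
rows = [
    {"calls": "high", "night": "yes", "fraud": 1},
    {"calls": "high", "night": "no", "fraud": 1},
    {"calls": "low", "night": "yes", "fraud": 0},
    {"calls": "low", "night": "no", "fraud": 0},
]
tree = build_tree(rows, ["calls", "night"], "fraud")
print(tree[0])                                          # calls
print(predict(tree, {"calls": "high", "night": "no"}))  # 1
```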

4. Experimental Analysis

The UCI Machine Learning Repository datasets (https://archive.ics.uci.edu/ml/index.php accessed on 12 April 2022) and the Sichuan telecom fraud phone datasets (https://aistudio.baidu.com/aistudio/datasetdetail/40690 accessed on 12 April 2022) are used in this section to verify the effectiveness of the proposed method. The MCIR-RGAD algorithms are implemented in Python using Visual Studio Code and were run on a remote server with an NVIDIA GeForce RTX 3090 GPU and 48 RAM.

The Sichuan telecom fraud phone dataset consists of four datasets, namely call data (VOC), short message service data (SMS), user information data (USER), and Internet behavior data (APP). The Union dataset integrates these four datasets by user phone number and contains the attributes of all four (VOC, APP, SMS, and USER). The details of the datasets are described in Table 2.

Table 2.

Description of datasets.

The goal of this paper is to efficiently detect fraudulent users among regular users using important attributes selected by correlation and independence, from the perspective of data uncertainty and incompleteness. Next, we discuss the effectiveness of the proposed method from three aspects: incompleteness of data (Definition 3), the MCIR attribute reduction algorithm (Section 3.2), and the MCIR-RGAD anomaly detection classifier (Section 3.3).

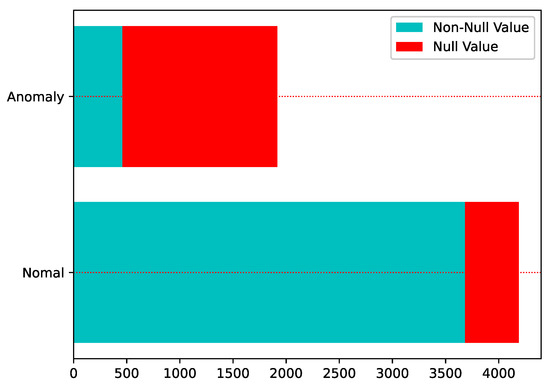

4.1. Incompleteness of Data

Data loss during recording, storage, or transmission is very likely. Incomplete information is usually handled by deleting it directly or by filling it with zero, one, or the mean value; however, such simple handling loses information. As Figure 3 shows, in the Sichuan telecom fraud dataset most users with null values (red parts) are abnormal, so if these records are deleted or simply imputed, the abnormal information they carry is not used effectively. Therefore, from the perspective of improving data quality, this paper uses the idea of maximal consistent blocks in rough set theory to handle incomplete data and achieve effective information mining.

Figure 3.

Incomplete data among telecom fraud users.

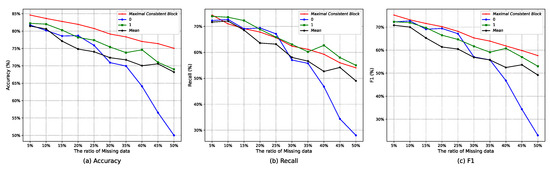

Table 3 and Figure 4 further illustrate the effectiveness of the maximal consistent block in handling incomplete data. They compare approaches to null values on the authentic incomplete telecom fraud data and on incomplete data constructed artificially by random deletion (10 missing ratios: 5%, 10%, …, 50%). In terms of accuracy (Figure 4a), recall (Figure 4b), F1 (Figure 4c), and the number of correct predictions, the maximal consistent block (MCB) effectively utilizes incomplete information and avoids unnecessary information loss.
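The artificially incomplete datasets can be generated along these lines. This is a sketch under assumptions, since the exact deletion procedure is not described: rows are dicts, only condition attributes are masked, cells are sampled uniformly without replacement, and the function name `mask_randomly`, the toy table, and the seed are hypothetical.

```python
import random

MISSING = "*"

def mask_randomly(table, attrs, ratio, seed=0):
    """Return a copy of `table` with round(ratio * #cells) cells of `attrs` set to MISSING.

    Cells are sampled uniformly without replacement; attributes outside `attrs`
    (e.g. the decision attribute) are never touched.
    """
    rng = random.Random(seed)
    cells = [(i, a) for i in range(len(table)) for a in attrs]
    masked = [dict(row) for row in table]  # copy so the input stays complete
    for i, a in rng.sample(cells, round(ratio * len(cells))):
        masked[i][a] = MISSING
    return masked

ratios = [round(0.05 * k, 2) for k in range(1, 11)]  # 0.05, 0.10, ..., 0.50
table = [{"a1": i % 3, "a2": i % 2, "d": i % 2} for i in range(20)]
incomplete = {r: mask_randomly(table, ["a1", "a2"], r) for r in ratios}
```

Fixing the seed makes each missing ratio reproducible, so different null-handling methods can be compared on identical incomplete copies.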

Table 3.

Incomplete information processing of authentic telecom fraud data.

Figure 4.

Performance comparison of randomly deleting missing data of the Union dataset under the RGAD algorithm.

4.2. Attribute Reduction under MCIR Algorithm

This paper proposes the MCIR attribute reduction algorithm, which uses the entropy function to measure the correlation and independence of attributes from the perspective of rough set theory. The algorithm reduces computation time while preserving accuracy on the telecom fraud detection problem. The main idea is to reduce computation time by keeping only the subset of attributes that are most relevant to fraudulent users and most independent (least redundant).

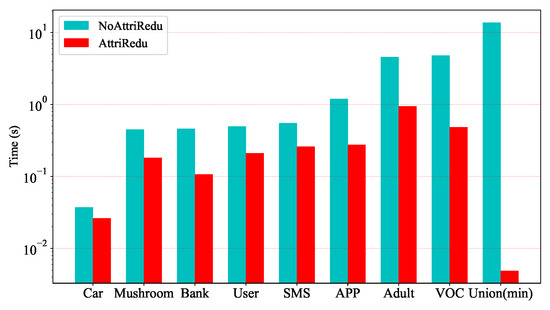

Experiments on UCI and telecom fraud data show that computation time can be significantly reduced by selecting important attributes. Figure 5 and Figure 6 further illustrate that the MCIR algorithm not only effectively reduces computation time but also eliminates the adverse effects of noise, improves data quality, and maintains or even improves detection accuracy.

Figure 5.

Comparison of the computation time before and after the MCIR-RGAD algorithm.

Figure 6.

Comparison of the classification accuracy before and after the MCIR-RGAD algorithm.

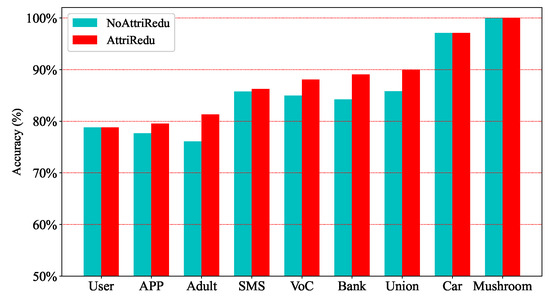

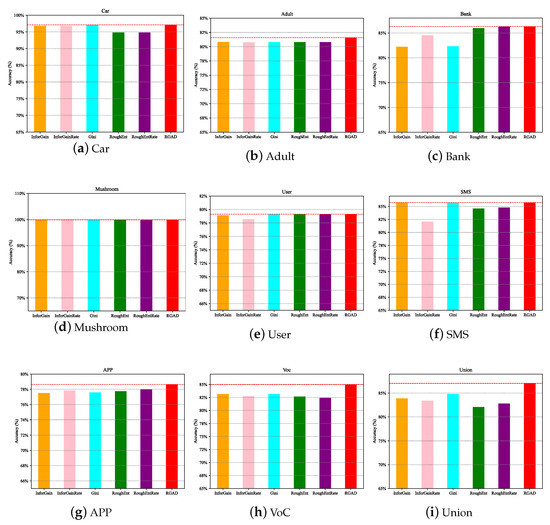

Generally, datasets can be roughly divided into four types according to how accuracy changes with the number of selected attributes: non-redundant and noise-free datasets (Figure 7a, Car, approximately a strictly monotonically increasing function), non-redundant and noisy datasets (Figure 7b, Adult, approximately a concave function), redundant and noisy datasets (Figure 7c, Bank, approximately a non-increasing function), and redundant and noise-free datasets (Figure 7d, Mushroom, approximately a non-decreasing function). Redundancy refers to the similarity, repetition, and correlation among attributes in the data; noise refers to the interference and misleading effects of certain attributes on the classification task. Specifically, a non-redundant and noise-free dataset needs no attribute reduction, since every feature dimension carries important information. For the other types, redundant and noisy attributes must be removed. In addition, Figure 7 shows that, compared with other attribute reduction algorithms ([41], [42], , [43], , , ③, ① + ② + ③ [44], ① + ③ [19], ③–② [45]), the MCIR algorithm (red dotted line) achieves better accuracy with fewer attributes. Because MCIR removes as many redundant or noisy attributes as possible and optimizes the data through dimensionality reduction, the reduced data are better suited to anomaly detection, so the model can maintain or even improve detection accuracy while reducing time complexity.

Figure 7.

Performance comparison of different attribute reduction algorithms under the MCIR-RGAD algorithm.

Therefore, during data processing, the MCIR algorithm can use a subset of important attributes to shorten computation time and effectively improve the detection accuracy of the model (Figure 7, the black dotted line).

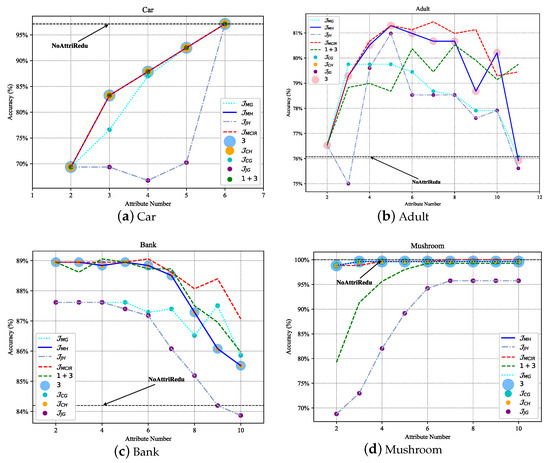

Next, we discuss feature selection on the telecom fraud dataset under the MCIR algorithm. Figure 8 shows the correlations among attributes as heatmaps; Figure 8a,b show the correlations before and after attribute reduction, respectively. Without attribute reduction, the data contain much redundant information (dark patches). The MCIR attribute reduction algorithm, constructed from the perspective of attribute uncertainty and correlation, reduces the degree of information redundancy in the data while also reducing the data volume.

Figure 8.

Attribute correlations in the Union dataset.

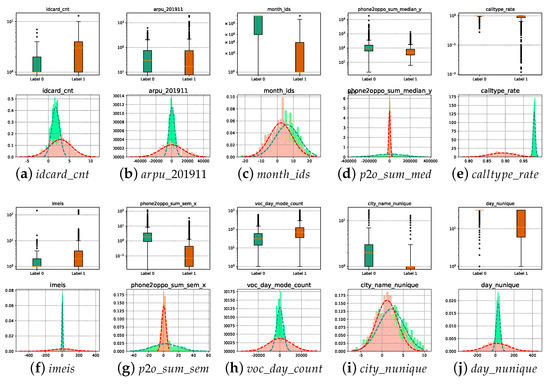

Further, the box plots and probability distribution plots in Figure 9 show the difference in statistical distribution between normal and abnormal users. The important attributes selected by the MCIR-RGAD algorithm effectively highlight the difference between abnormal and normal users, so fraudulent users can be filtered out using these attributes. Compared with the 85.84% detection performance on the original Union dataset with 84 attributes (Table 4), the detection performance with the 10 attributes retained by MCIR rises to 89.96%, indicating that the MCIR model effectively selects important attributes. To visualize how the selected attributes distinguish normal from fraudulent users, Figure 9 depicts the box plots and statistical distributions of these 10 attributes in the telecom fraud dataset. The distributions of normal and fraudulent users differ substantially on all 10 attributes: in both mean and variance for (a, f, e), mainly in variance with similar means for (b, d, j, g, h), and mainly in mean with similar variances for (c, i). The larger the difference between the mean and variance distributions of normal and fraudulent users on the selected attributes, the more effectively the method distinguishes fraudulent users.

Figure 9.

Classification of selected attributes under the MCIR-RGAD algorithm.

Table 4.

Comparison of accuracy, computational time, and robustness of attribute reduction in the MCIR-RGAD algorithm.

4.3. Anomaly Detection under MCIR-RGAD Algorithm

The MCIR algorithm removes redundant and noisy attributes from the original data to improve data quality. Then, to perform effective anomaly detection on incomplete data with missing values, this paper designs the MCIR-RGAD algorithm based on maximal consistent blocks, which provides an effective way to process and exploit incomplete data.
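Under the usual tolerance relation for incomplete information systems, a missing value is treated as matching any value; a consistent block is a set of objects that are pairwise tolerant, and the maximal consistent blocks are exactly the maximal cliques of the tolerance graph. The sketch below, assuming missing values are encoded as `None`, enumerates them with the Bron–Kerbosch algorithm. It illustrates the concept only and is not the RGAD implementation.

```python
from typing import FrozenSet, List, Sequence, Set

MISSING = None  # assumption: a missing attribute value is encoded as None


def tolerant(u: Sequence, v: Sequence) -> bool:
    """Tolerance relation: every attribute value matches or is missing in either object."""
    return all(a == b or a is MISSING or b is MISSING for a, b in zip(u, v))


def maximal_consistent_blocks(table: Sequence[Sequence]) -> List[FrozenSet[int]]:
    """Maximal sets of pairwise-tolerant objects = maximal cliques of the tolerance graph."""
    n = len(table)
    adj = {i: {j for j in range(n) if j != i and tolerant(table[i], table[j])}
           for i in range(n)}
    blocks: List[FrozenSet[int]] = []

    def bron_kerbosch(r: Set[int], p: Set[int], x: Set[int]) -> None:
        if not p and not x:            # r cannot be extended, so it is maximal
            blocks.append(frozenset(r))
            return
        for v in list(p):
            bron_kerbosch(r | {v}, p & adj[v], x & adj[v])
            p.remove(v)
            x.add(v)

    bron_kerbosch(set(), set(range(n)), set())
    return blocks


# Four objects described by two attributes; None marks a missing value.
table = [(1, 0), (1, None), (None, 1), (2, 1)]
print(sorted(sorted(b) for b in maximal_consistent_blocks(table)))
```

Note that an object with missing values (here objects 1 and 2) can belong to several blocks at once, which is what distinguishes maximal consistent blocks from the equivalence classes of complete data.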

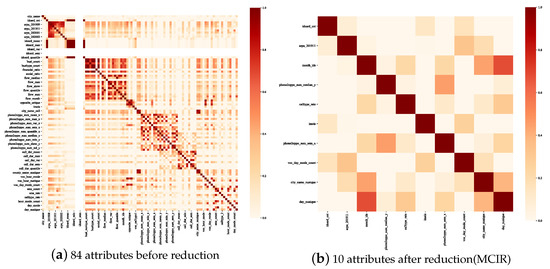

For decision-tree anomaly detection, this paper compares six kernel functions (splitting criteria) for classification: Information Gain, Information Gain Rate, Gini Coefficient, Rough Entropy, Rough Entropy Rate, and Rough Gain. As shown in Figure 10, the rough gain anomaly detection algorithm (RGAD), whose kernel function integrates rough entropy and information gain, performs better than the other criteria.
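For reference, several of the criteria compared in Figure 10 can be written in a few lines. The sketch below assumes categorical attributes and computes the standard information gain, gain ratio, and Gini gain, plus one common form of rough entropy for a complete partition, $E_r = -\sum_i \frac{|X_i|}{|U|}\log_2\frac{1}{|X_i|}$. The paper's Rough Gain criterion is not reproduced here, and all function names are illustrative.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def entropy(values: Sequence[Hashable]) -> float:
    """Shannon entropy (bits) of a discrete sequence."""
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in Counter(values).values())


def gini(values: Sequence[Hashable]) -> float:
    """Gini impurity of a discrete sequence."""
    n = len(values)
    return 1.0 - sum((c / n) ** 2 for c in Counter(values).values())


def split(attr: Sequence[Hashable], labels: Sequence[Hashable]) -> Dict[Hashable, List[Hashable]]:
    """Group class labels by attribute value."""
    groups: Dict[Hashable, List[Hashable]] = defaultdict(list)
    for a, y in zip(attr, labels):
        groups[a].append(y)
    return groups


def information_gain(attr, labels) -> float:
    n = len(labels)
    cond = sum(len(g) / n * entropy(g) for g in split(attr, labels).values())
    return entropy(labels) - cond


def gain_ratio(attr, labels) -> float:
    split_info = entropy(attr)  # entropy of the attribute's own partition
    return information_gain(attr, labels) / split_info if split_info > 0 else 0.0


def gini_gain(attr, labels) -> float:
    n = len(labels)
    return gini(labels) - sum(len(g) / n * gini(g) for g in split(attr, labels).values())


def rough_entropy(attr) -> float:
    """E_r = -sum(|X_i|/|U| * log2(1/|X_i|)) over the blocks X_i induced by attr."""
    n = len(attr)
    return sum(c / n * math.log2(c) for c in Counter(attr).values())


labels = [0, 0, 1, 1]
informative = ["a", "a", "b", "b"]
useless = ["x", "y", "x", "y"]
print(information_gain(informative, labels), information_gain(useless, labels))
```

Rough entropy is 0 for the finest partition (all objects distinguishable) and grows as blocks get coarser, so it measures the roughness of the knowledge an attribute provides rather than its class purity.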

Figure 10.

Performance comparison of six classification detection algorithms.

Tables 5 and 6 report the performance and computation time of nine attribute reduction algorithms. Compared with the other algorithms, the proposed MCIR-RGAD algorithm achieves effective classification detection in less computation time.

Table 5.

Comparison of classification accuracies of attribute reduction from set view and information view.

Table 6.

Comparison of classification computation time of attribute reduction from set view and information view.

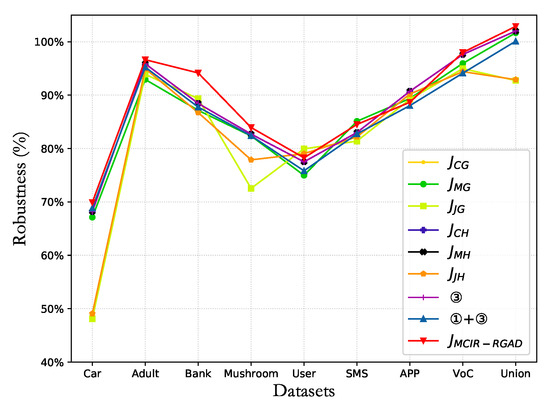

To measure the trade-off between the detection performance and the computation time of an algorithm, this paper designs the robustness metric in Definition 10. Because computation time and performance matter to different degrees in different application scenarios, the metric uses a linear weight parameter k to balance the importance of time against that of performance. The telecom fraud problem in this paper places more emphasis on model accuracy; hence, the hyperparameter weight in the robustness metric is set as .

Definition 10

(Performance Robustness).

where is the degree of performance retention, is the degree of time optimization, , , , and are the performance and time before and after attribute reduction, respectively, and is a weight parameter of time, which means the importance of time cost.
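To make the trade-off concrete, the sketch below assumes one simple linear form that is consistent with the description above: performance retention $P'/P$ and time optimization $(T - T')/T$, combined as $(1-k)\,P'/P + k\,(T - T')/T$. This form is a hypothetical illustration, not necessarily the exact expression of Definition 10, and the function name is illustrative.

```python
def robustness(p_before: float, p_after: float, t_before: float, t_after: float, k: float) -> float:
    """HYPOTHETICAL linear robustness score (not necessarily Definition 10):
    (1 - k) * performance retention + k * time optimization."""
    if not 0.0 <= k <= 1.0:
        raise ValueError("k must lie in [0, 1]")
    retention = p_after / p_before                # > 1 if reduction improves performance
    time_gain = (t_before - t_after) / t_before   # fraction of computation time saved
    return (1 - k) * retention + k * time_gain


print(robustness(0.8, 0.8, 10.0, 5.0, k=0.5))
```

Under this assumed form, a smaller k emphasizes accuracy, which matches the paper's stated priority for the telecom fraud problem.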

Table 4 lists the number of attributes retained after attribute reduction for each dataset, together with the changes in performance and computation time of the MCIR-RGAD algorithm before and after reduction. Note that the performance and computation time of the different algorithms are compared using reduced attribute sets of the same size.

A robustness value that reflects less computation time and higher performance indicates a better classifier. The accuracies and computation times in Tables 4 and 5, together with Figure 11, show the robustness of the different attribute reduction algorithms. Compared with the other algorithms, MCIR-RGAD is highly robust: when the attribute sets are reduced to the same size, MCIR-RGAD preserves classification accuracy while shortening the computation time.

Figure 11.

Comparison of the robustness in different datasets.

4.4. Statistical Test Analysis

Two nonparametric statistical tests, the Friedman test and the Nemenyi post hoc test, are used to further assess whether the performance differences between the proposed method and the comparison methods are statistically significant. The differences are evaluated at a significance level of $\alpha = 0.05$.

4.4.1. Friedman Test

The Friedman test determines whether the performance of the compared algorithms differs significantly. Suppose we compare K algorithms on N datasets. The null hypothesis of the Friedman test is that there is no significant difference between the models. First, the models are ranked on each dataset according to their accuracies in Table 5. Then, the average rank of each model is computed as $R_j = \frac{1}{N}\sum_{i=1}^{N} r_i^j$, where $r_i^j$ is the rank of the j-th algorithm on the i-th dataset. Table 7 gives the performance rankings of the nine algorithms on the nine datasets. When algorithms perform equally, they share the average of the rank positions they occupy. For example, if seven algorithms (among them ③ and ①+③) perform equally on the Car dataset in Table 5, each of them is assigned the average of the seven rank positions they jointly occupy.
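The ranking step, including the tie rule, can be sketched as follows. The sketch assumes that higher accuracy is better (rank 1) and that accuracies are given as a dataset-by-algorithm table; the function names are illustrative.

```python
from typing import List


def rank_row(scores: List[float]) -> List[float]:
    """Rank algorithms on one dataset: the best (highest) score gets rank 1,
    and tied scores share the average of the positions they occupy."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        avg = (pos + 1 + end + 1) / 2          # average of 1-based positions pos..end
        for idx in order[pos:end + 1]:
            ranks[idx] = avg
        pos = end + 1
    return ranks


def average_ranks(acc: List[List[float]]) -> List[float]:
    """acc[i][j] = accuracy of algorithm j on dataset i; returns R_j for each j."""
    per_dataset = [rank_row(row) for row in acc]
    return [sum(r[j] for r in per_dataset) / len(acc) for j in range(len(acc[0]))]


print(rank_row([0.9, 0.8, 0.9, 0.7]))
```

For instance, if seven of nine algorithms tie for the top score on a dataset, each receives rank (1 + 7) / 2 = 4.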

Table 7.

Ranking on 9 datasets for 9 algorithms.

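When K and N are large enough, the Friedman statistic

$$\chi_F^2 = \frac{12N}{K(K+1)}\left[\sum_{j=1}^{K} R_j^2 - \frac{K(K+1)^2}{4}\right]$$

follows a $\chi^2$ distribution with $K-1$ degrees of freedom. Because the original Friedman test is overly conservative, the statistic

$$F_F = \frac{(N-1)\,\chi_F^2}{N(K-1) - \chi_F^2}$$

is commonly used instead; it follows an F-distribution with $K-1$ and $(K-1)(N-1)$ degrees of freedom, i.e., $F_F \sim F\big(K-1, (K-1)(N-1)\big)$.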

This paper compares nine algorithms on nine datasets, so $F_F$ follows an F-distribution with 8 and 64 degrees of freedom. In the Friedman test, if the p-value is less than the significance level $\alpha$, or equivalently if $F_F$ is greater than the critical value of the F-distribution at $\alpha$, the null hypothesis is rejected and at least two algorithms are considered significantly different. Computing $F_F$ from the average ranks and consulting the F-distribution table shows that $F_F$ exceeds the critical value. Therefore, the null hypothesis is rejected at the 95% confidence level, indicating significant differences among the algorithms. A pairwise comparison of the benchmark algorithms is then performed using the Nemenyi post hoc test.
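The two Friedman statistics above can be computed directly from the average ranks $R_j$. The sketch below implements the formulas as written (without a tie correction) and returns the degrees of freedom of $F_F$; the function name and the small example are illustrative.

```python
from typing import List, Tuple


def friedman_statistics(avg_ranks: List[float], n_datasets: int) -> Tuple[float, float, Tuple[int, int]]:
    """Friedman chi-square and the corrected F_F statistic from average ranks R_j."""
    k, n = len(avg_ranks), n_datasets
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4)
    denom = n * (k - 1) - chi2          # zero only when every dataset ranks identically
    f_f = (n - 1) * chi2 / denom if denom > 0 else float("inf")
    return chi2, f_f, (k - 1, (k - 1) * (n - 1))


# Three algorithms on four datasets with average ranks 1.25, 2.0, 2.75.
chi2, f_f, dof = friedman_statistics([1.25, 2.0, 2.75], n_datasets=4)
print(chi2, f_f, dof)
```

If SciPy is available, `scipy.stats.f.sf(f_f, *dof)` gives the corresponding p-value; for nine algorithms on nine datasets, `dof` is (8, 64).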

4.4.2. Nemenyi Post Hoc Test

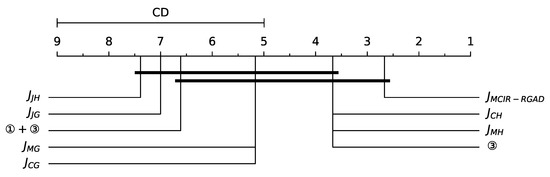

In the Nemenyi post hoc test, two models are considered significantly different if the difference between their average ranks is greater than or equal to the critical difference $CD = q_\alpha\sqrt{\frac{K(K+1)}{6N}}$, where the critical value $q_\alpha$ is based on the Studentized range (Tukey) distribution. Looking up $q_\alpha$ for nine algorithms at $\alpha = 0.05$ and substituting $K = N = 9$ yields the critical difference. Figure 12 shows that the MCIR-RGAD model ranks best and is significantly different from two of the compared algorithms; the equivalence groups among the remaining algorithms, and the resulting performance ordering involving ③ and ①+③, can also be read from Figure 12.
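The critical difference can be computed as in the sketch below. It assumes the commonly tabulated two-tailed value $q_{0.05} = 3.102$ for nine algorithms (the Studentized range statistic divided by $\sqrt{2}$); other values of K would need the corresponding table entries. The names are illustrative.

```python
import math

# Assumed critical value q_0.05 of the two-tailed Nemenyi test for K = 9 algorithms,
# from the standard table (Studentized range statistic divided by sqrt(2)).
Q_ALPHA_005 = {9: 3.102}


def nemenyi_cd(k: int, n: int, q_table: dict = Q_ALPHA_005) -> float:
    """Critical difference CD = q_alpha * sqrt(K (K + 1) / (6 N))."""
    return q_table[k] * math.sqrt(k * (k + 1) / (6 * n))


def significantly_different(rank_a: float, rank_b: float, cd: float) -> bool:
    """Two models differ significantly if their average ranks are at least CD apart."""
    return abs(rank_a - rank_b) >= cd


cd = nemenyi_cd(9, 9)
print(round(cd, 3))
```

With this assumed table value and K = N = 9, CD is roughly 4.0, so two average ranks must be about four positions apart before the corresponding models are declared significantly different.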

Figure 12.

Average ranks diagram comparing the benchmark methods in terms of accuracy.

5. Conclusions

Obtaining anomaly classification information from big data with uncertainty, redundancy, and incompleteness is crucial but time-consuming. In this paper, a new attribute reduction algorithm (MCIR) is proposed based on the correlation and independence of the data. Furthermore, since attribute reduction and decision trees are consistent in how they select features, this paper combines their advantages and constructs an anomaly detection algorithm, RGAD, that handles incomplete data based on maximal consistent blocks. The proposed MCIR-RGAD algorithm significantly reduces computation time while maintaining or improving accuracy. Therefore, for anomaly detection problems, this paper provides an effective solution for optimizing data quality and processing incomplete data.

In the future, we plan to extend this work to unsupervised learning by exploiting the structural information among objects, using the concept of neighborhood information systems in rough set theory. The extended work will optimize data quality and reduce time complexity through attribute reduction, improve the detection performance of classification tasks through structural information, and make the most of the valuable information in incomplete mixed data (both categorical and numerical). We expect this to provide an effective solution for applying information theory and rough set theory to anomaly detection problems.

Author Contributions

Conceptualization, software, R.L. and B.W.; methodology, H.C.; validation, R.L., B.W. and X.H.; formal analysis, K.W.; writing—original draft preparation, R.L.; writing—review and editing, S.L. and H.C.; visualization, X.H.; supervision, project administration, funding acquisition, H.C. and S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Major Scientific and Technological Special Project of Henan Province under Grant 221100210700.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this research are publicly available at https://archive.ics.uci.edu/ml/index.php (accessed on 12 April 2022) and https://aistudio.baidu.com/aistudio/datasetdetail/40690 (accessed on 12 April 2022). The code for this research will be made available on request.

Acknowledgments

We thank the Sichuan Provincial Data Center and Sichuan Mobile for providing the data, without which this work would not have been possible. This work was supported by the Major Scientific and Technological Special Project of Henan Province under Grant 221100210700. The authors also gratefully thank the associate editor and the reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 1

For a complete information system, we have that , , . Suppose that , , , and , , in set , where , and represent the number of elements in the set , and , respectively. and , , then , , = = = .

(i) Proof of .

In an incomplete information system, , , and , .

Then, we have that

According to and , then

Hence,

Therefore,

This completes the proof (i).

Parts (ii) and (iii) follow readily from the relationship of and ; hence, their proofs are omitted here. □

References

- Ahmed, I.M.; Kashmoola, M.Y. CCF Based System Framework In Federated Learning Against Data Poisoning Attacks. J. Appl. Sci. Eng. 2022, 26, 973–981.

- Lin, H.; Liu, G.N.; Wu, J.J.; Zuo, Y.; Wan, X.; Li, H. Fraud detection in dynamic interaction network. IEEE Trans. Knowl. Data Eng. 2019, 32, 1936–1950.

- Shehnepoor, S.; Salehi, M.; Farahbakhsh, R. NetSpam: A network-based spam detection framework for reviews in online social media. IEEE Trans. Inf. Forensics Secur. 2017, 12, 1585–1595.

- Dal-Pozzolo, A.; Caelen, O.; Le-Borgne, Y.A. Learned lessons in credit card fraud detection from a practitioner perspective. Expert Syst. Appl. 2014, 41, 4915–4928.

- Repousis, S.; Lois, P.; Veli, V. An investigation of the fraud risk and fraud scheme methods in Greek commercial banks. J. Money Laund. Control. 2019, 22, 53–61.

- Tsang, S.; Koh, Y.S.; Dobbie, G.; Alam, S. SPAN: Finding collaborative frauds in online auctions. Knowl. Based Syst. 2014, 71, 389–408.

- Pourhabibi, T.; Ong, K.; Kam, B.H. Fraud detection: A systematic literature review of graph-based anomaly detection approaches. Decis. Support Syst. 2020, 133, 113303.

- Zhao, Q.; Chen, K.; Li, T. Detecting telecommunication fraud by understanding the contents of a call. Cybersecurity 2018, 1, 1–12.

- Yang, J.; Yang, T.; Shi, C. Research on fault identification method based on multi-resolution permutation entropy and ABC-SVM. J. Appl. Sci. Eng. 2021, 25, 733–742.

- Jurgovsky, J.; Granitzer, M.; Ziegler, K. Sequence classification for credit-card fraud detection. Expert Syst. Appl. 2018, 100, 234–245.

- Wang, X.W.; Yin, S.L.; Li, H. A Network Intrusion Detection Method Based on Deep Multi-scale Convolutional Neural Network. Int. J. Wireless Inf. Netw. 2020, 27, 503–517.

- Fiore, U.; De-Santis, A.; Perla, F. Using generative adversarial networks for improving classification effectiveness in credit card fraud detection. Inf. Sci. 2019, 479, 448–455.

- Barnett, V.; Lewis, T. Outliers in Statistical Data; John Wiley and Sons: Hoboken, NJ, USA, 1994.

- Wang, Y.; Li, Y. Outlier detection based on weighted neighbourhood information network for mixed-valued datasets. Inf. Sci. 2021, 564, 396–415.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying Density-Based Local Outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 15–18 May 2000; pp. 93–104.

- Ali, D.; Omer, K. Efficient density and cluster based incremental outlier detection in data streams. Inf. Sci. 2022, 607, 901–920.

- Li, Z.; Qu, L.; Zhang, G. Attribute selection for heterogeneous data based on information entropy. Int. J. Gen. Syst. 2021, 50, 548–566.

- Salehi, F.; Keyvanpour, M.R.; Sharifi, A. SMKFC-ER: Semi-supervised multiple kernel fuzzy clustering based on entropy and relative entropy. Inf. Sci. 2021, 547, 667–688.

- Wang, J.; Wei, J.; Yang, Z. Feature selection by maximizing independent classification information. IEEE Trans. Knowl. Data Eng. 2017, 29, 828–841.

- Thuy, N.N.; Wongthanavasu, S. On reduction of attributes in inconsistent decision tables based on information entropies and stripped quotient sets. Expert Syst. Appl. 2019, 137, 308–323.

- Clark, P.G.; Gao, C.; Grzymala-Busse, J.W.; Mroczek, T. Characteristic sets and generalized maximal consistent blocks in mining incomplete data. Inf. Sci. 2018, 453, 66–79.

- Liu, G.N.; Guo, J.; Zuo, Y.; Wu, J.J.; Guo, R.Y. Fraud detection via behavioral sequence embedding. Knowl. Inf. Syst. 2020, 62, 2685–2708.

- Hu, X.X.; Chen, H.C.; Liu, S.X.; Jiang, H.C.; Chu, G.H.; Li, R. BTG: A Bridge to Graph machine learning in telecommunications fraud detection. Future Gener. Comput. Syst. 2022, 137, 274–287.

- Emmanuel, O.; Rose, O.A.; Mohammed, U.A.; Ezekiel, R.A.; Bashir, T.; Salihu, S. Detecting Telecoms Fraud in a Cloud-Base Environment by Analyzing the Content of a Phone Conversation. Asian J. Res. Comput. Sci. 2022, 4, 115–131.

- Viktoras, C.; Andrej, B.; Rima, K.; Olegas, V. Outlier Analysis for Telecom Fraud Detection. Dig. Bus. Int. Syst. 2022, 1598, 219–231.

- Mollaoğlu, A.; Baltaoğlu, G.; Çakır, E.; Aktas, M.S. Fraud Detection on Streaming Customer Behavior Data with Unsupervised Learning Methods. In Proceedings of the 2021 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Kuala Lumpur, Malaysia, 12–13 June 2021.

- Zhong, Y.; Zhang, X.Y.; Shan, F. Hybrid data-driven outlier detection based on neighborhood information entropy and its developmental measures. Expert Syst. Appl. 2018, 112, 243–257.

- Qian, Y.; Liang, J.; Wu, W.Z. Information granularity in fuzzy binary GrC model. IEEE Trans. Fuzzy Syst. 2010, 19, 253–264.

- Feng, Q.R.; Zhou, Y. Soft discernibility matrix and its applications in decision making. Appl. Soft Comput. 2014, 24, 749–756.

- Shu, W.; Qian, W. An incremental approach to attribute reduction from dynamic incomplete decision systems in rough set theory. Data Knowl. Eng. 2015, 100, 116–132.

- Sun, Y.; Mi, J.; Chen, J. A new fuzzy multi-attribute group decision-making method with generalized maximal consistent block and its application in emergency management. Knowl. Based Syst. 2021, 215, 106594.

- Zhao, X.; Zhang, J.; Qin, X. LOMA: A local outlier mining algorithm based on attribute relevance analysis. Expert Syst. Appl. 2017, 84, 272–280.

- Liang, B.H.; Liu, Y.; Shen, C.Y. Attribute Reduction Algorithm Based on Indistinguishable Degree. In Proceedings of the 2018 IEEE 3rd International Conference on Cloud Computing and Big Data Analysis (ICCCBDA), Chengdu, China, 20–22 April 2018.

- Luo, C.; Li, T.; Huang, Y. Updating three-way decisions in incomplete multi-scale information systems. Inf. Sci. 2019, 476, 274–289.

- Du, W.S.; Hu, B.Q. Attribute reduction in ordered decision tables via evidence theory. Inf. Sci. 2016, 364, 91–110.

- Lang, G.; Cai, M.; Fujita, H. Related families-based attribute reduction of dynamic covering decision information systems. Knowl. Based Syst. 2018, 162, 161–173.

- Liang, J.; Shi, Z.; Li, D. Information entropy, rough entropy and knowledge granulation in incomplete information systems. Int. J. Gen. Syst. 2006, 35, 641–654.

- Liu, X. Research on Uncertainty Measurement and Attribute Reduction in Generalized Fuzzy Information Systems. Ph.D. Thesis, Hunan Normal University, Changsha, China, 2022.

- Dai, J.; Liu, Q. Semi-supervised attribute reduction for interval data based on misclassification cost. Int. J. Mach. Learn. Cybern. 2022, 13, 1739–1750.

- Sun, L.; Xu, J.C.; Yun, T. Feature selection using rough entropy-based uncertainty measures in incomplete decision systems. Knowl. Based Syst. 2012, 36, 206–216.

- Gao, C.; Zhou, J.; Miao, D.Q.; Yue, X.D.; Wan, J. Granular-conditional-entropy-based attribute reduction for partially labeled data with proxy labels. Inf. Sci. 2021, 580, 111–128.

- Wang, Y.B.; Chen, X.J.; Dong, K. Attribute reduction via local conditional entropy. Int. J. Mach. Learn. Cybern. 2019, 10, 3619–3634.

- Qu, L.D.; He, J.L.; Zhang, G.Q.; Xie, N.X. Entropy measure for a fuzzy relation and its application in attribute reduction for heterogeneous data. Appl. Soft Comput. 2022, 118, 108455.

- Yang, H.; Moody, J. Data visualization and feature selection: New algorithms for nongaussian data. Adv. Neural Inf. Process. Syst. 1999, 12, 687–693.

- Jakulin, A. Machine Learning Based on Attribute Interactions. Ph.D. Thesis, University of Ljubljana, Ljubljana, Slovenia, 12 May 2006.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).