Abstract

This paper investigates the status updating policy for information freshness in Internet of things (IoT) systems, where the channel quality is fed back to the sensor at the beginning of each time slot. Based on the channel quality, we aim to strike a balance between the information freshness and the update cost by minimizing the weighted sum of the age of information (AoI) and the energy consumption. The optimal status updating problem is formulated as a Markov decision process (MDP), and the structure of the optimal updating policy is investigated. We prove that, given the channel quality, the optimal policy is of a threshold type with respect to the AoI. In particular, the sensor remains idle when the AoI is smaller than the threshold, while the sensor transmits the update packet when the AoI is greater than the threshold. Moreover, the threshold is proven to be a non-increasing function of the channel state. A numerical algorithm for efficiently computing the optimal thresholds is proposed for a special case where the channel is quantized into two states. Simulation results show that our proposed policy outperforms two baseline policies, namely the zero-wait policy and the periodic policy.

1. Introduction

Recently, the Internet of things (IoT) has been widely used in fields such as industrial manufacturing, environment monitoring, and home automation. In these applications, the sensors generate and transmit new status updates to the destination, where the freshness of the status updates is crucial for the destination to track the state of the environment and to make decisions. Thus, a new information freshness metric, namely the age of information (AoI), was proposed in [1] to measure the freshness of updates from the perspective of the receiver. Two widely used metrics are the average peak AoI [2] and the average AoI [3]. In general, the smaller the AoI is, the fresher the received updates are.

AoI was originally investigated in [1] for updating the status in vehicular networks. Considering the impact of the queueing system, the authors in [4] investigated the system performance under the M/M/1 and M/M/1/2 queueing systems with a first-come-first-served (FCFS) policy. Furthermore, the work of [5] studied how to keep the updates fresh by analyzing some general update policies, such as the zero-wait policy. The authors of [6] considered the optimal scheduling problem for a more general cost, namely the weighted sum of the transmission cost and the tracking inaccuracy of the information source. However, these works assumed that the communication channel is error-free. In practice, status updates are delivered through an erroneous wireless channel, which suffers from fading, interference, and noise. Therefore, the received updates may not be decoded correctly, which induces information aging and wastes energy.

Several works have considered the erroneous channel [7,8]. The authors in [9] considered multiple communication channels and investigated the optimal coding and decoding schemes. A channel with an independent and identical packet error rate over time was considered in [10,11]. The work of [12] considered the impact of fading channels on packet transmission. A Markov channel was investigated in [13], where a threshold policy was proven to be optimal, and a simulation-based approach was proposed to compute the corresponding threshold. However, how channel quality information should be exploited to improve information freshness remains to be investigated.

Channel quality indicator (CQI) feedback is commonly used in wireless communication systems [14]. In block fading channels, the channel quality, generally reported by the terminal, is highly relevant to the packet error rate (PER) [15], also known as the block error rate (BLER). A received packet is likely to fail decoding when the channel condition is poor. However, a transmitter with channel quality information is able to stay idle during deep fading, thereby saving energy. Channel quantization was also considered in [12,13], where the channel was quantized into multiple states. However, the decision making did not depend on the channel state in [12], while [13] did not consider the freshness of information. These observations motivate us to introduce channel quality information into the design of the updating policy.

In this paper, a status update system with channel quality feedback is considered. In particular, the channel condition is quantized into multiple states, and the destination feeds the channel quality back to the sensor before the sensor updates the status. Our problem is to investigate the channel quality-based optimal status update policy, which minimizes the weighted sum of the AoI and the energy consumption. Our key contributions are summarized as follows:

- An average cost Markov decision process (MDP) is formulated to model this problem. Because the MDP has a countably infinite state space and an unbounded cost, which makes the analysis difficult, the discounted version of the original problem is investigated first, and the existence of a stationary and deterministic optimal policy for the original problem is then proven. Furthermore, by showing the monotonicity of the value function, we prove that the optimal policy has a threshold structure with respect to the AoI for each channel state. We also prove that the threshold is a non-increasing function of the channel state.

- By utilizing the threshold structure, a structure-aware policy iteration algorithm is proposed to efficiently obtain the optimal updating policy. In addition, for the special case of two channel states, a numerical algorithm that directly computes the thresholds by non-linear fractional programming is derived. Simulation results reveal the effects of system parameters and show that our proposed policy performs better than the zero-wait policy and the periodic policy.

The rest of this paper is organized as follows. In Section 2, the system model is presented and the optimal updating problem is formulated. In Section 3, the optimal updating policy is proven to be of a threshold structure, and a threshold-based policy iteration algorithm is proposed to find the optimal policy. Section 4 presents the simulation results. Finally, we summarize our conclusions in Section 5.

2. System Model and Problem Formulation

2.1. System Description

In this paper, we consider a status update system that consists of a sensor and a destination, as shown in Figure 1. Time is divided into slots. Without loss of generality, we assume that each time slot has an equal length, which is normalized to unity. At the beginning of each slot, the destination feeds the CQI back to the sensor. It is worth noting that the PER differs across CQIs. Based on the CQI, the sensor decides in each time slot whether to generate and transmit a new update to the destination via a wireless channel or to stay idle to save energy. These updates are crucial for the destination to estimate the state of the surrounding environment of the sensor and to make timely decisions. Let $a_t$, which takes values from the action set $\mathcal{A} = \{0, 1\}$, denote the action that the sensor performs in slot t, where $a_t = 1$ means that the sensor generates and transmits a new update to the destination, and $a_t = 0$ represents that the sensor is idle. If the sensor transmits an update packet in slot t, an acknowledgment will be fed back at the end of this time slot. In particular, an ACK is fed back when the destination successfully receives the update packet, and a NACK otherwise.

Figure 1.

System model.

2.2. Channel Model

Suppose that the wireless channel is a block fading channel where the channel gain remains constant in each slot and varies independently over different slots. Let denote the channel gain in slot t which takes value from . We quantize the channel gain into levels which are denoted as . The quantization levels are arranged in an increasing order where and . Hence, the channel is said to be in state i if the channel gain belongs to the interval . We denote by the state of the channel in slot t, where . With the aid of CQI fed back from the destination, the sensor has knowledge of the channel state at the beginning of each time slot.

Let denote the distribution of the channel gain. Then, the probability of the channel being in state i is

We assume that the signal-to-noise ratio (SNR) per information bit during the transmission remains constant. Then, the PER depends only on the channel gain. In particular, the PER for channel state i is given by

where is the PER of a packet with respect to the channel gain. The success probability of a packet transmitted over channel state i is . According to [15], the success probability is a non-decreasing function of the channel state.
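As a minimal sketch of the quantization step, the snippet below computes the probability of each channel state from a set of quantization boundaries. The exponential gain distribution (as for the power gain under Rayleigh fading), the helper names, and the boundary values are illustrative assumptions, not the settings used in this paper.

```python
import math

def exp_cdf(z, mean=1.0):
    """CDF of an exponentially distributed channel gain (assumed model)."""
    if math.isinf(z):
        return 1.0
    return 1.0 - math.exp(-z / mean)

def state_probabilities(boundaries, cdf):
    """Probability that the gain falls in [boundaries[i], boundaries[i+1])."""
    return [cdf(hi) - cdf(lo) for lo, hi in zip(boundaries[:-1], boundaries[1:])]

# Hypothetical boundaries: three states that together cover [0, inf).
boundaries = [0.0, 0.5, 1.5, math.inf]
probs = state_probabilities(boundaries, exp_cdf)
print(probs)
```

Because the boundaries cover the whole range of the gain, the state probabilities sum to one for any valid distribution.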

2.3. Age of Information

This paper uses the AoI as the freshness metric, which is defined as the time elapsed since the generation time of the latest update packet that is successfully received by the destination [1]. Let be the generation time of the ith successfully received update packet. Then, the AoI in time slot t, , is defined as

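In particular, if an update packet is successfully received, the AoI decreases to one. Otherwise, the AoI increases by one. Altogether, writing $\Delta_t$ for the AoI in slot t, the evolution of the AoI is expressed by

$$\Delta_{t+1} = \begin{cases} 1, & \text{if an update is transmitted in slot } t \text{ and an ACK is fed back}, \\ \Delta_t + 1, & \text{otherwise}. \end{cases} \tag{4}$$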

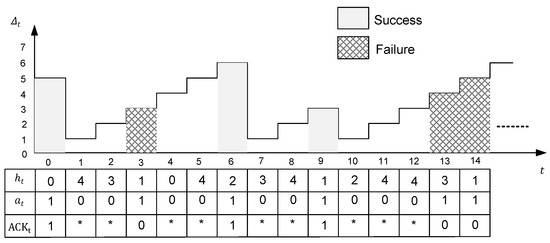

An example of the AoI evolution is shown in Figure 2, where the gray rectangle represents a successful reception of an update packet, and the mesh rectangle represents a transmission failure.

Figure 2.

An example of the AoI evolution with the channel state , the action , and the acknowledgment . The asterisk stands for no acknowledgment from destination when the sensor keeps idle.
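The AoI evolution described above can be traced with a few lines of code. This is only an illustrative sketch: the function names and the sample action and acknowledgment sequences are hypothetical and do not reproduce the data of Figure 2.

```python
def next_aoi(aoi, transmitted, acked):
    """AoI drops to 1 after a successful transmission, otherwise grows by 1."""
    return 1 if (transmitted and acked) else aoi + 1

def aoi_trace(actions, acks, initial_aoi=1):
    """AoI at the start of each slot, given per-slot actions and ACK flags."""
    trace = [initial_aoi]
    for action, acked in zip(actions, acks):
        trace.append(next_aoi(trace[-1], action == 1, acked))
    return trace

# Hypothetical sequences: 1 = transmit, 0 = idle; the ACK flag is ignored when idle.
actions = [0, 1, 1, 0, 1]
acks = [False, False, True, False, True]
print(aoi_trace(actions, acks))  # [1, 2, 3, 1, 2, 1]
```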

2.4. Problem Formulation

The objective of this paper is to find an optimal updating policy that minimizes the long-term average of the weighted sum of AoI and energy consumption. A policy can be represented by the sequence of actions, i.e., . Let be a set of stationary and deterministic policies. Then, the optimal updating problem is given by

where is the energy consumption, and is the weighting factor.

3. Optimal Updating Policy

This section investigates the optimal updating policy for the problem formulated in the previous section. The problem is first formulated as an infinite horizon average cost MDP, and the existence of a stationary and deterministic policy that minimizes the average cost is proven. Then, the non-decreasing property of the value function is derived. Based on this property, we prove that the optimal update policy is of a threshold structure with respect to AoI, and the optimal threshold is a non-increasing function of the channel state. Aiming to reduce the computational complexity, a structure-aware policy iteration algorithm is proposed to find the optimal policy. Moreover, non-linear fractional programming is employed to directly compute the optimal thresholds in a special case where the channel is quantized into two states.

3.1. MDP Formulation

A Markov decision process (MDP) is typically used for optimal decision problems in which the system is characterized by the evolution of its state and a cost is incurred at each stage. The optimization problem in (5) can be formulated as an infinite horizon average cost MDP, as elaborated below.

- States: The state of the MDP in slot t is defined as , which takes values in . Hence, the state space is countable and infinite.

- Actions: The set of actions chosen in slot t is .

- Transition Probability: Let be the transition probability that the state in slot t transits to in slot after taking action . According to the evolution of AoI in (4), the transition probability is given by

- Cost: The instantaneous cost at state given action in slot t is

In general, an MDP with infinitely many states and an unbounded cost is not guaranteed to have a stationary and deterministic policy that attains the minimum average cost. Fortunately, we can prove the existence of such a policy in the next subsection.
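The MDP components listed above can be sketched in code as follows. This is a hedged illustration: the state is taken to be the pair (AoI, channel state), the channel state is drawn independently in each slot as in Section 2.2, and the per-stage cost is assumed to have the form AoI plus weighting factor times energy times action. The names `p_state`, `p_success`, `omega`, and `energy` are placeholders introduced here.

```python
def transition_probs(state, action, p_state, p_success):
    """Return {next_state: probability} for state = (aoi, channel)."""
    aoi, channel = state
    probs = {}
    for nxt, p_nxt in enumerate(p_state):
        if action == 1:
            ok = p_success[channel]
            probs[(1, nxt)] = ok * p_nxt                  # update delivered
            probs[(aoi + 1, nxt)] = (1.0 - ok) * p_nxt    # transmission failed
        else:
            probs[(aoi + 1, nxt)] = p_nxt                 # sensor stayed idle
    return probs

def stage_cost(state, action, omega, energy):
    """Assumed per-stage cost: current AoI plus weighted energy if transmitting."""
    aoi, _ = state
    return aoi + omega * energy * action

# Hypothetical two-state channel.
p_state = [0.4, 0.6]
p_success = [0.3, 0.9]
tx = transition_probs((3, 1), 1, p_state, p_success)
idle = transition_probs((3, 1), 0, p_state, p_success)
```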

3.2. The Existence of Stationary and Deterministic Policy

For a rigorous analysis, this subsection proves the existence of a stationary and deterministic optimal policy. Following [16], we first analyze the discounted cost problem associated with the original MDP. The expected discounted cost with respect to discount factor and initial state under a policy is given by

where is the decision made in state under policy , and is the discount factor. We first verify that is finite for any policy and all .

Lemma 1.

Given , for any policy π and all , we have

Proof.

By definition, the instantaneous cost in state given action is

Therefore, holds. Combined with the fact that the AoI increases, at most, linearly at each slot for any policy, we have

which completes the proof. □

Let denote the minimum expected discounted cost. By Lemma 1, holds for every and .

According to [16] (Proposition 1), we have

which implies that satisfies the Bellman equation. can be solved via a value iteration algorithm. In particular, we define , and for all , we have

where

is related to the right-hand-side (RHS) of the discounted cost optimality equation. Then, for every and .
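To make the value iteration concrete, the following sketch runs it on a truncated state space and lets one check numerically that the resulting value function is non-decreasing in the AoI. The truncation of the AoI at `max_aoi`, the assumed cost form (AoI plus `omega * energy` when transmitting), and all parameter values are assumptions made for illustration. The paper works with the untruncated, countably infinite state space.

```python
def discounted_value_iteration(p_state, p_success, omega, energy, alpha,
                               max_aoi=40, iters=300):
    """Value iteration for the discounted problem with the AoI capped at max_aoi.

    Returns V with V[d][i] approximating the minimum discounted cost from
    AoI d (1..max_aoi) and channel state i. Row 0 is unused.
    """
    n = len(p_state)
    V = [[0.0] * n for _ in range(max_aoi + 1)]
    for _ in range(iters):
        # Expected value over the next channel state (i.i.d. across slots).
        EV = [sum(p * v for p, v in zip(p_state, row)) for row in V]
        new_V = [[0.0] * n for _ in range(max_aoi + 1)]
        for d in range(1, max_aoi + 1):
            nxt = min(d + 1, max_aoi)  # truncation of the AoI
            for i in range(n):
                q_idle = d + alpha * EV[nxt]
                q_tx = d + omega * energy + alpha * (
                    p_success[i] * EV[1] + (1.0 - p_success[i]) * EV[nxt])
                new_V[d][i] = min(q_idle, q_tx)
        V = new_V
    return V

# Hypothetical parameters.
V = discounted_value_iteration([0.4, 0.6], [0.3, 0.9],
                               omega=2.0, energy=1.0, alpha=0.9)
```

In this truncated model the monotonicity in the AoI follows by the same induction over iterations that the lemma below uses, since both state-action values are non-decreasing in the AoI whenever the previous iterate is.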

Now, we can use the value iteration algorithm to establish the monotonic properties of

Lemma 2.

For all Δ and i, we have

and for all and i, we have

Proof.

See Appendix A. □

Based on Lemmas 1 and 2, we are ready to show that the MDP has a stationary and deterministic optimal policy in the following theorem.

Theorem 1.

For the MDP in (5), there exists a stationary and deterministic optimal policy that minimizes the long-term average cost. Moreover, there exists a finite constant for all states , where λ is independent of the initial state, and a value function , such that

holds for all .

Proof.

See Appendix B. □

3.3. Structural Analysis

According to Theorem 1, the optimal policy for the average cost problem satisfies the following equation

where

Similar to Lemma 2, the monotonic property of the value function is given in the following lemma.

Lemma 3.

Given the channel state i, for any , we have

Proof.

The proof follows the same procedure as that of Lemma 2, except that the value iteration algorithm is based on Equation (17). □

Moreover, based on Lemma 3, the property of the increment of the value function is established in the following lemma.

Lemma 4.

Given the channel state i, for any , we have

Proof.

We first examine the relation between the state-action value functions, i.e., and . Specifically, based on Lemma 3, we have

and

Since , we complete the proof. □

Our main result is presented in the following theorem.

Theorem 2.

For any given channel state i, there exists a threshold , such that when , the optimal action is to generate and transmit a new update, i.e., , and when , the optimal action is to remain idle, i.e., . Moreover, the optimal threshold is a non-increasing function of channel state i, i.e., holds for all and .

Proof.

See Appendix C. □

According to Theorem 2, the sensor will not update the status until the AoI exceeds the threshold. Moreover, if the channel condition is not good, i.e., channel state i is small, the sensor will wait for a longer time before it samples and transmits the status update packet so as to reduce the energy consumption because of a higher probability of transmission failure.
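A threshold policy of this kind needs only one number per channel state. The sketch below shows one possible representation. It assumes that the sensor transmits when the AoI is at least the threshold, which is consistent with a threshold of 1 reducing to the zero-wait policy. The threshold values are hypothetical and are not results from this paper.

```python
def threshold_action(aoi, channel, thresholds):
    """Return 1 (transmit) if the AoI has reached the threshold, else 0 (idle)."""
    return 1 if aoi >= thresholds[channel] else 0

def is_non_increasing(thresholds):
    """Check the ordering property: better channel states have smaller thresholds."""
    return all(a >= b for a, b in zip(thresholds, thresholds[1:]))

# Hypothetical thresholds for five channel states (state 0 is the worst).
thresholds = [6, 4, 3, 2, 2]
print(threshold_action(3, 0, thresholds))  # idle in the worst state
print(threshold_action(3, 2, thresholds))  # transmit in a better state
```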

Based on the threshold structure, we can reduce the computational complexity of the policy iteration algorithm to find the optimal policy. The details of the algorithm are presented in Algorithm 1.

Algorithm 1. Policy iteration algorithm (PIA) based on the threshold structure.
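For readers who want to experiment with the threshold structure, the following sketch approximates the average cost optimal policy and reads off one threshold per channel state. It is not Algorithm 1: it uses plain relative value iteration on a truncated AoI range instead of the structure-aware policy iteration, and the cost form, the truncation level, and all parameter values are assumptions.

```python
def relative_value_iteration(p_state, p_success, omega, energy,
                             max_aoi=100, iters=500):
    """Relative value iteration with the AoI capped at max_aoi.

    Returns (h, policy) where h[d][i] is the relative value and policy[d][i]
    is the greedy action at AoI d and channel state i. Row 0 is unused.
    """
    n = len(p_state)
    h = [[0.0] * n for _ in range(max_aoi + 1)]
    policy = [[0] * n for _ in range(max_aoi + 1)]
    for _ in range(iters):
        eh = [sum(p * v for p, v in zip(p_state, row)) for row in h]
        new_h = [[0.0] * n for _ in range(max_aoi + 1)]
        for d in range(1, max_aoi + 1):
            nxt = min(d + 1, max_aoi)
            q_idle = d + eh[nxt]
            for i in range(n):
                # Expected reduction in future cost obtained by transmitting.
                gain = p_success[i] * (eh[nxt] - eh[1])
                transmit = gain >= omega * energy
                policy[d][i] = 1 if transmit else 0
                new_h[d][i] = q_idle + (omega * energy - gain if transmit else 0.0)
        ref = new_h[1][0]  # reference state (AoI 1, channel state 0)
        h = [[v - ref for v in row] for row in new_h]
    return h, policy

def extract_thresholds(policy):
    """Smallest AoI at which transmitting is chosen, per channel state."""
    n = len(policy[1])
    result = []
    for i in range(n):
        hits = [d for d in range(1, len(policy)) if policy[d][i] == 1]
        result.append(hits[0] if hits else None)
    return result

# Hypothetical two-state channel.
h, policy = relative_value_iteration([0.5, 0.5], [0.3, 0.9], omega=2.0, energy=1.0)
found = extract_thresholds(policy)
print(found)
```

With a success probability that increases with the channel state, the extracted thresholds come out non-increasing in the channel state, in line with Theorem 2.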

3.4. Computing the Thresholds for a Special Case

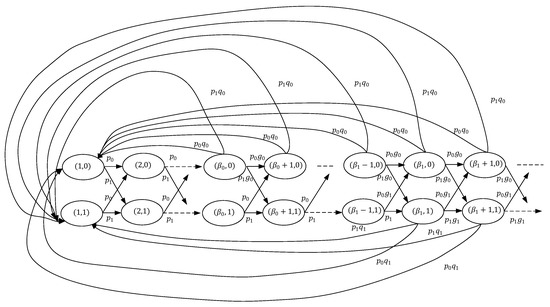

In the above section, we have proven that the optimal policy has a threshold structure. Given the thresholds , a Markov chain can be induced by the threshold policy. A special Markov chain is depicted in Figure 3, where the channel has two states. By leveraging the Markov chain, we first derive the average cost of the special case, which is summarized in the following theorem.

Figure 3.

An illustration of established Markov chain with two channel states.

Theorem 3.

Let be the steady state probability of state of the corresponding Markov chain with two states and be the threshold with respect to the channel state, respectively. The steady state probability is given by

where , , , and satisfies the following equation:

The average cost then is given by

where

and

Proof.

See Appendix D. □
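The average cost of a given threshold policy can also be cross-checked numerically. The sketch below does not implement the closed-form expressions of Theorem 3. It relies only on the fact that, with a channel state drawn independently in each slot, the AoI alone forms a Markov chain that either resets to 1 or grows by one, so its stationary weights follow from a product of non-reset probabilities. The function name and parameters are hypothetical.

```python
def threshold_policy_average(thresholds, p_state, p_success,
                             tol=1e-15, max_aoi=100000):
    """Return (average AoI, transmissions per slot) under a threshold policy."""
    weights = []   # unnormalized stationary weight of AoI d = 1, 2, ...
    tx_prob = []   # probability of transmitting when the AoI equals d
    w, d = 1.0, 1
    while w > tol and d <= max_aoi:
        p_tx = sum(p for p, b in zip(p_state, thresholds) if d >= b)
        p_reset = sum(p * s for p, s, b in zip(p_state, p_success, thresholds)
                      if d >= b)
        weights.append(w)
        tx_prob.append(p_tx)
        w *= 1.0 - p_reset   # weight of AoI d + 1 is weight of d times P(no reset)
        d += 1
    total = sum(weights)
    avg_aoi = sum((k + 1) * wk for k, wk in enumerate(weights)) / total
    tx_rate = sum(wk * pt for wk, pt in zip(weights, tx_prob)) / total
    return avg_aoi, tx_rate

# Hypothetical example: thresholds 3 and 2 for channel states 0 and 1.
avg_aoi, tx_rate = threshold_policy_average([3, 2], [0.5, 0.5], [0.3, 0.9])
average_cost = avg_aoi + 2.0 * 1.0 * tx_rate  # assumed weighting factor 2 and energy 1
```

Note that the resulting cost is a ratio of two sums over the AoI, which is the kind of fractional form that the numerical method in the remainder of this subsection exploits.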

Therefore, the average cost is available in closed form as a function of the thresholds. The optimal thresholds can be obtained numerically by a linear search or a gradient descent algorithm. However, computing the gradient directly requires a large amount of computation until convergence. We therefore propose an algorithm based on nonlinear fractional programming (NLP) [17], which obtains the numerical solution efficiently.

Let . We can rewrite the cost function as a fractional form, where the numerator is denoted as , and the denominator term is . The solution to an NLP problem with the form in the following

is related to the optimization problem (31)

where the following assumption should also be satisfied:

Define the function with variable q as

According to [17], is a strictly monotonic decreasing function and is convex over . Furthermore, we have if, and only if,

Then, the algorithm can be described by two steps. The first step is to solve a convex optimization problem with a one dimensional parameter by a bisection method. The second step is to solve a high dimensional optimization problem by a gradient descent method.

According to [17], a bisection method can be used to find the optimal , under the assumption that the value of the function can be obtained exactly for a given q. In practice, we use a gradient descent algorithm to obtain a numerical solution of , since a global search may not run in polynomial time. As a reparameterization, we replace the threshold variables with the decrements between consecutive thresholds, i.e., . To summarize, the numerical method for computing the optimal thresholds is given in Algorithm 2.

Algorithm 2. Numerical computation of the optimal thresholds.
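The two-step idea can be illustrated on a toy fractional problem. The sketch below applies bisection to the parametric function F(q), defined as the minimum over x of N(x) - q D(x), whose root is the minimum of the ratio N(x)/D(x) [17]. It is not Algorithm 2: the inner minimization is an exhaustive search over a small candidate set rather than gradient descent, the symbols N and D are introduced here for illustration, and the toy numerator and denominator are made up. Both are assumed to be strictly positive.

```python
def fractional_min_bisection(numerator, denominator, candidates, tol=1e-9):
    """Minimize numerator(x) / denominator(x) over candidates via bisection on q.

    Assumes numerator(x) > 0 and denominator(x) > 0 for every candidate, so
    that F(0) > 0 and F is strictly decreasing in q.
    """
    def F(q):
        return min(numerator(x) - q * denominator(x) for x in candidates)

    lo = 0.0                                    # F(lo) > 0
    x0 = candidates[0]
    hi = numerator(x0) / denominator(x0)        # F(hi) <= 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) > 0:
            lo = mid
        else:
            hi = mid
    best = min(candidates, key=lambda x: numerator(x) - hi * denominator(x))
    return hi, best

# Toy problem: minimize (x^2 + 4) / x over x in {1, ..., 10}.
q_star, x_star = fractional_min_bisection(lambda x: x * x + 4,
                                          lambda x: x,
                                          list(range(1, 11)))
print(q_star, x_star)
```

For the toy problem the ratio equals x + 4/x, which is minimized at x = 2 with value 4, so the bisection should return a value of q close to 4.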

4. Simulation Results and Discussions

In this section, the simulation results are presented to investigate the impacts of the system parameters. We also compare the optimal policy with the zero-wait policy and periodic policy, where the zero-wait policy immediately generates an update at each time slot and the periodic policy keeps a constant interval between two updates.
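A comparison of this kind can be reproduced in spirit with a short Monte Carlo simulation. The sketch below is not the simulation code behind the figures: the policy interfaces, the cost form (average AoI plus weighting factor times energy times transmission rate), and every parameter value are assumptions chosen for illustration.

```python
import random

def simulate(policy, p_state, p_success, horizon, seed=0):
    """Return (average AoI, transmissions per slot) over `horizon` slots."""
    rng = random.Random(seed)
    channels = list(range(len(p_state)))
    aoi, aoi_sum, tx_count = 1, 0, 0
    for t in range(horizon):
        channel = rng.choices(channels, weights=p_state)[0]  # i.i.d. channel state
        action = policy(t, aoi, channel)
        aoi_sum += aoi
        tx_count += action
        if action == 1 and rng.random() < p_success[channel]:
            aoi = 1          # update delivered
        else:
            aoi += 1         # idle or failed transmission
    return aoi_sum / horizon, tx_count / horizon

def zero_wait(t, aoi, channel):
    """Transmit in every slot."""
    return 1

def periodic(period):
    """Transmit once every `period` slots."""
    return lambda t, aoi, channel: 1 if t % period == 0 else 0

def threshold(thresholds):
    """Transmit when the AoI reaches the threshold of the current channel state."""
    return lambda t, aoi, channel: 1 if aoi >= thresholds[channel] else 0

def average_cost(policy, p_state, p_success, omega, energy, horizon=100_000):
    avg_aoi, tx_rate = simulate(policy, p_state, p_success, horizon)
    return avg_aoi + omega * energy * tx_rate

# Hypothetical setting with two channel states.
for name, pol in [("zero-wait", zero_wait), ("periodic", periodic(5)),
                  ("threshold", threshold([3, 2]))]:
    print(name, average_cost(pol, [0.5, 0.5], [0.3, 0.9], omega=2.0, energy=1.0))
```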

Figure 4 depicts the optimal policy for different AoI and channel states, where the number of channel states is 5. It can be seen that, for each channel state, the optimal policy has a threshold structure with respect to the AoI. In particular, when the AoI is small, it is not beneficial for the sensor to generate and transmit a new update because the energy consumption dominates the total cost. We can also see that the threshold is non-increasing with the channel state. In other words, if the channel condition is better, the threshold is smaller. This is because the success probability of packet transmission increases with the channel state.

Figure 4.

Optimal policy for different AoI and channel states ().

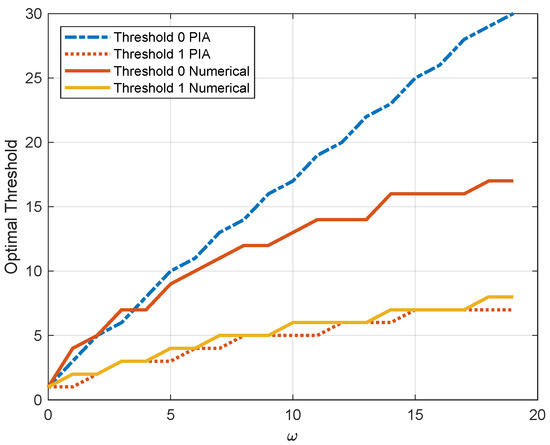

Figure 5 illustrates the thresholds for the MDP with two channel states with respect to the weighting factor , in which the two dashed lines are obtained by the PIA and the two solid lines are obtained by the proposed numerical algorithm. Both thresholds grow as the weighting factor increases: since the energy consumption then carries more weight, it is not efficient to update when the AoI is small. Conversely, when the weighting factor decreases, the AoI dominates and the thresholds decline. In particular, both thresholds equal 1 when . In this case, the optimal policy reduces to the zero-wait policy. We can also see that the threshold for channel state 1 obtained by the numerical algorithm is close to the optimal solution. In contrast, the threshold for channel state 0 gradually deviates from the optimal value.

Figure 5.

Optimal thresholds for two different channel states versus ().

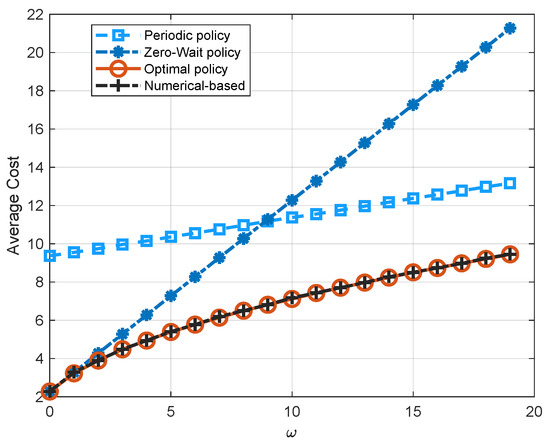

Figure 6 compares the performance of four policies, i.e., the zero-wait policy, the periodic policy, the numerical-based policy, and the optimal policy, with respect to the weighting factor . The optimal policy has the lowest average cost. As shown in Figure 6, the zero-wait policy has the same performance as the optimal policy when . As the weighting factor increases, the average cost of all policies increases. However, the cost of the zero-wait policy grows faster than that of the periodic policy and the optimal policy because the zero-wait policy transmits in every slot. Although the thresholds obtained by the PIA and the numerical algorithm are not exactly the same, as shown in Figure 5, the performance of the numerical-based algorithm coincides with that of the optimal policy. This is because the threshold for channel state 1 appears in the quadratic term of the cost function, while the threshold for channel state 0 appears in the negative exponential term of the cost function. As a result, the threshold for channel state 1 has a much more significant effect on the system performance.

Figure 6.

Comparison of the zero-wait policy, the periodic policy with period being 5, the numerical-based policy, and the optimal policy with respect to the weighting factor ().

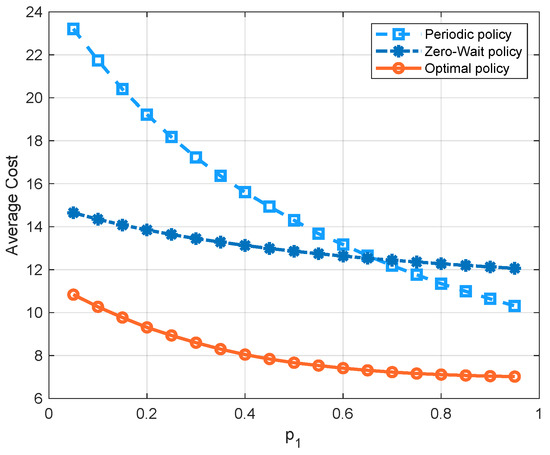

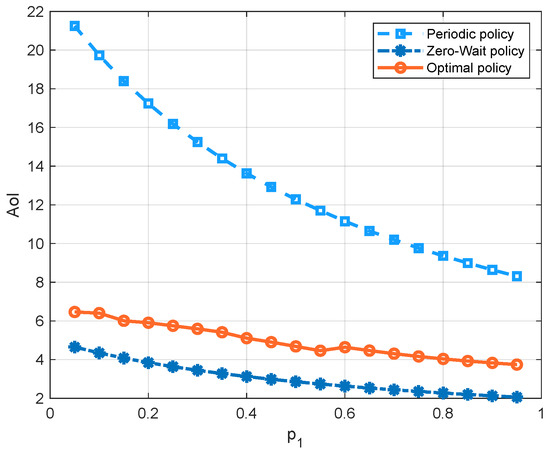

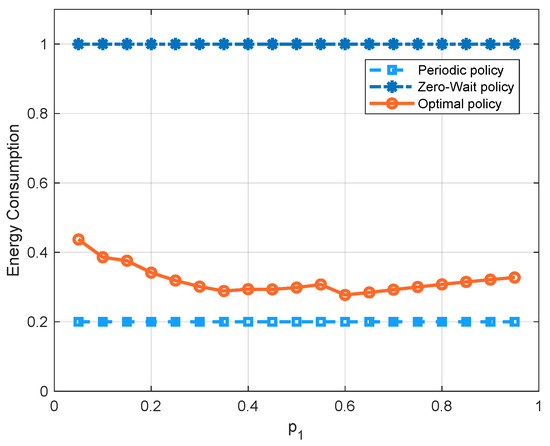

Figure 7 compares the three policies with respect to the probability of the channel being in state 1. Since the channel is more likely to be in good condition as this probability increases, the average cost of all three policies decreases. We can see that, in the regime of , the optimal policy has the lowest average cost, because it achieves a good balance between the AoI and the energy consumption. We can also see that the cost of the periodic policy is initially greater than that of the zero-wait policy and later smaller. To explain these curves, we plot the AoI term and the energy consumption term separately in Figure 8 and Figure 9, respectively. We see that the update cost of the zero-wait policy is smaller than that of the periodic policy, but the AoI of the zero-wait policy decreases less with this probability than that of the periodic policy.

Figure 7.

Comparison of the zero-wait policy, the periodic policy with period being 5, and the optimal policy with respect to ().

Figure 8.

AoI comparison of the zero-wait policy, the periodic policy with period being 5, and the optimal policy with respect to ().

Figure 9.

Energy consumption comparison of the zero-wait policy, the periodic policy with period being 5, and the optimal policy with respect to ().

5. Conclusions

In this paper, we have studied the optimal updating policy in an IoT system, where the channel gain is quantized into multiple states and the channel state is fed back to the sensor before the decision making. The status update problem has been formulated as an MDP to minimize the long-term average of the weighted sum of the AoI and the energy consumption. By investigating the properties of the value function, it is proven that the optimal policy has a threshold structure with respect to AoI for any given channel state. We have also proven that the threshold is a non-increasing function of the channel state. Simulation results show the impacts of system parameters on the optimal thresholds and the average cost. Through comparisons, we have also shown that our proposed policy outperforms the zero-wait policy and the periodic policy. In future research, we will consider time-varying channel models to guide the design of realistic IoT systems.

Author Contributions

Conceptualization, F.P., X.C., and X.W.; methodology, F.P. and X.W.; software, F.P.; validation, F.P., X.C., and X.W.; formal analysis, F.P. and X.W.; investigation, X.W.; writing—original draft preparation, F.P.; writing—review and editing, X.W. and X.C.; visualization, F.P. and X.W.; supervision, X.C. and X.W.; project administration, X.C.; funding acquisition, X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the State’s Key Project of Research and Development Plan under Grants (2019YFE0196400), in part by Guangdong R&D Project in Key Areas under Grant (2019B010158001), in part by Guangdong Basic and Applied Basic Research Foundation under Grant (2021A1515012631), in part by Key Laboratory of Modern Measurement & Control Technology, Ministry of Education, Beijing Information Science & Technology University (KF20201123202).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Lemma 2

The proof proceeds by induction on the iterations of the value iteration algorithm. First, we initialize , for which both Equations (15) and (16) hold for all .

Appendix A.1. Proof of Equation (16)

When ,

and

hold due to , and we have .

Suppose that holds for . Considering the case of ,

and

hold for all i according to . Therefore, we have . Since , we have .

Appendix A.2. Proof of Equation (15)

By the definition of function , we have

and

Therefore,

and

hold for all i, where step is due to Equation (16). Hence, we have . This completes the whole proof.

Appendix B. Proof of Theorem 1

Theorem 1 can be proven by verifying the conditions given in [16]. The conditions are listed as follows:

- (1): For every state and discount factor , the discounted value function is finite.

- (2): There exists a non-negative value L such that for all and , where , and is a reference state.

- (3): There exists a non-negative value , such that for every and . For every , there exists an action such that .

- (4): The inequality holds for all and a.

By Lemma 1, holds for every and . Hence, condition (1) holds. According to Lemma 2, by letting and , we have , which verifies condition (2).

Before verifying condition (3), a lemma is given as follows:

Lemma A1.

Let denote the reference state, and define the first time that an initial state reaches as . Then, the expected cost under the always-transmitting policy , i.e., the policy under which the sensor generates and transmits a new update in each slot, is

where holds for all .

Proof.

Since for all t, the probability that the state returns to from after exactly K slots is given by

Then, the expected return cost from to is expressed as

where step is due to the fact that . □

Consider a mixed policy , which performs the always-transmitting policy from the initial state until it enters the reference state and thereafter performs the optimal policy that minimizes the discounted cost. Then, we have

which implies that . Hence, let and ; condition (3) is verified.

On the other hand, holds for all . The states that transit from are finite. Thus, the weighted sum of finite is also finite, i.e., holds for all and a, which verifies condition (4). This completes the whole verification.

Appendix C. Proof of Theorem 2

Based on the definition of , we can obtain the difference between the state-action value function as follows:

where is due to the property of the value function given in Lemma 4. We then discuss the difference between the state-action value function in two cases.

Case 1: .

In this case, holds for any and i. Therefore, the optimal policy is to update at each slot regardless of the channel state. In other words, the optimal thresholds are all equal to 1.

Case 2: .

We note that, given i, increases linearly with . Hence, there exists a positive integer , such that is the minimum value that satisfies . Therefore, if , holds. This implies that there must exist a threshold satisfying . If , we have .

Altogether, the optimal policy has a threshold structure for . Then, we examine the non-increasing property of the thresholds. Firstly, we show that the difference between the state-action value function is monotonic with respect to the channel state by fixing the AoI. Assuming that and , it is easy to obtain that

Since when , we have according to (A14). This implies that the optimal threshold corresponding to channel state j is no greater than , i.e., . This completes the whole proof.

Appendix D. Proof of Theorem 3

Assume that is the steady probability of state in a Markov chain. The steady state probability satisfies the following global balance equation [18], i.e.,

Let . We prove Equation (A23) by discussing three cases via mathematical induction.

Case 1:

Based on Equation (A15), we have

Assuming that holds for all , we examine . We have

which completes this segment of the proof.

Case 2:

Similarly, we have

where . Assuming that holds for all , we examine . We have

which completes this segment of the proof.

Case 3:

Following the above discussion, we have

where . Assuming that holds for all , we examine . We have

Altogether, we obtain the steady state probability with respect to an unknown parameter . According to the fact that , we formulate an equation:

where the expression of is obtained.

The average cost of a Markov chain is given by

Furthermore, the first term is given by

where

and

Furthermore, the sum of last two terms is given by

This completes the proof.

References

1. Kaul, S.; Yates, R.; Gruteser, M. Real-time status: How often should one update? In Proceedings of the IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 2731–2735.

2. Abd-Elmagid, M.A.; Dhillon, H.S. Average peak age-of-information minimization in UAV-assisted IoT networks. IEEE Trans. Veh. Technol. 2018, 68, 2003–2008.

3. Farazi, S.; Klein, A.G.; Brown, D.R. Average age of information for status update systems with an energy harvesting server. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Honolulu, HI, USA, 15–19 April 2018; pp. 112–117.

4. Costa, M.; Codreanu, M.; Ephremides, A. On the age of information in status update systems with packet management. IEEE Trans. Inf. Theory 2016, 62, 1897–1910.

5. Sun, Y.; Uysal-Biyikoglu, E.; Yates, R.D.; Koksal, C.E.; Shroff, N.B. Update or wait: How to keep your data fresh. IEEE Trans. Inf. Theory 2017, 63, 7492–7508.

6. Yun, J.; Joo, C.; Eryilmaz, A. Optimal real-time monitoring of an information source under communication costs. In Proceedings of the 2018 IEEE Conference on Decision and Control (CDC), Miami, FL, USA, 17–19 December 2018; pp. 4767–4772.

7. Imer, O.C.; Basar, T. Optimal estimation with limited measurements. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 15 December 2005; pp. 1029–1034.

8. Rabi, M.; Moustakides, G.V.; Baras, J.S. Adaptive sampling for linear state estimation. SIAM J. Control Optim. 2009, 50, 672–702.

9. Gao, X.; Akyol, E.; Başar, T. On remote estimation with multiple communication channels. In Proceedings of the 2016 American Control Conference (ACC), Boston, MA, USA, 6–8 July 2016; pp. 5425–5430.

10. Chakravorty, J.; Mahajan, A. Remote-state estimation with packet drop. IFAC-PapersOnLine 2016, 49, 7–12.

11. Lipsa, G.M.; Martins, N.C. Optimal state estimation in the presence of communication costs and packet drops. In Proceedings of the 2009 47th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 30 September–2 October 2009; pp. 160–169.

12. Huang, H.; Qiao, D.; Gursoy, M.C. Age-energy tradeoff in fading channels with packet-based transmissions. In Proceedings of the IEEE INFOCOM 2020-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Toronto, ON, Canada, 6–9 July 2020; pp. 323–328.

13. Chakravorty, J.; Mahajan, A. Remote estimation over a packet-drop channel with Markovian state. IEEE Trans. Autom. Control 2019, 65, 2016–2031.

14. Chen, X.; Yi, H.; Luo, H.; Yu, H.; Wang, H. A novel CQI calculation scheme in LTE\LTE-A systems. In Proceedings of the 2011 International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 9–11 November 2011; pp. 1–5.

15. Toyserkani, A.T.; Strom, E.G.; Svensson, A. An analytical approximation to the block error rate in Nakagami-m non-selective block fading channels. IEEE Trans. Wirel. Commun. 2010, 9, 1543–1546.

16. Sennott, L.I. Average cost optimal stationary policies in infinite state Markov decision processes with unbounded costs. Oper. Res. 1989, 37, 626–633.

17. Dinkelbach, W. On nonlinear fractional programming. Manag. Sci. 1967, 13, 492–498.

18. Chandry, K.M. The Analysis and Solutions for General Queueing Networks; Citeseer: Princeton, NJ, USA, 1974.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).