Abstract

With the rapid growth of the Internet, the curse of dimensionality caused by massive multi-label data has attracted extensive attention. Feature selection plays an indispensable role in dimensionality reduction processing. Many researchers have focused on this subject based on information theory. Here, to evaluate feature relevance, a novel feature relevance term (FR) that employs three incremental information terms to comprehensively consider three key aspects (candidate features, selected features, and label correlations) is designed. A thorough examination of the three key aspects of FR outlined above is more favorable to capturing the optimal features. Moreover, we employ label-related feature redundancy as the label-related feature redundancy term (LR) to reduce unnecessary redundancy. Therefore, a designed multi-label feature selection method that integrates FR with LR is proposed, namely, Feature Selection combining three types of Conditional Relevance (TCRFS). Numerous experiments indicate that TCRFS outperforms the other 6 state-of-the-art multi-label approaches on 13 multi-label benchmark data sets from 4 domains.

1. Introduction

In recent years, multi-label learning [1,2,3,4] has been increasingly popular in applications such as text categorization [5], image annotation [6], protein function prediction [7], etc. Additionally, feature selection is of great significance to solving industrial application problems. Some researchers monitor the wind speed in the wake region to detect wind farm faults based on feature selection [8]. In signal processing applications, feature selection is effective for chatter vibration diagnosis in CNC machines [9]. Feature selection is adopted to classify the cutting stabilities based on the selected features [10]. The most crucial thing in diverse multi-label applications is to classify each sample and its corresponding label accurately. Multi-label learning, such as traditional classification approaches, is vulnerable to dimensional catastrophes. The number of features in text multi-label data is frequently in the tens of thousands, which means that there are a lot of redundant or irrelevant features [11,12]. It can easily lead to the “curse of dimensionality”, which dramatically increases the model complexity and computation time [13]. Feature selection is the process of selecting a set of feature subsets with distinguishing features from the original data set according to specific evaluation criteria. Redundant or irrelevant features can be eliminated to improve model accuracy and reduce feature dimensions, feature space, and running time [14,15]. Simultaneously, the selected features are more conducive to model understanding and data analysis.

In traditional machine learning problems, feature selection approaches include wrapper, embedded, and filter approaches [16,17,18,19]. Among them, wrapper feature selection approaches use the classifier performance to weigh the pros and cons of a feature subset, which has high computational complexity and a large memory footprint [20,21]. The processes of feature selection and learner training are combined in embedded approaches [22,23]. Feature selection is automatically conducted during the learner training procedure when the two are completed in the same optimization procedure. Filter feature selection approaches weigh the pros and cons of feature subsets using specific evaluation criteria [24,25]. It is independent of the classifier, and the calculation is fast and straight. As a result, the filter feature selection approaches are generally used for feature selection.

There are also the above-mentioned three feature selection approaches in multi-label feature selection, with filter feature selection being the most popular. Information theory is a standard mathematical tool for filter feature selection [26]. Based on information theory, this paper mainly focuses on three key aspects that affect feature relevance: candidate features, selected features, and label correlations. The method proposed in this paper examines the amount of information shared between the selected feature subset and the total label set to evaluate feature relevance and denotes it as for the time being. Once any candidate feature is selected in the current selected feature subset, the current selected feature subset will be updated at this point, and will be altered accordingly. Moreover, the original label correlations in the total label set also affect due to some new candidate features being added to the current selected feature subset. Hence, three incremental information terms which combine candidate features, selected features, and label correlations to evaluate feature relevance are used to design a novel feature relevance term. Furthermore, we employ label-related feature redundancy as the feature redundancy term to reduce unnecessary redundancy. Table 1 provides three abbreviations and their corresponding meanings we mentioned. We explain them in detail in Section 4.

Table 1.

Abbreviations meaning statistics.

The major contributions of this paper are as follows:

- Analyze and discuss the indispensability of the three key aspects (candidate features, selected features and label correlations) for feature relevance evaluation;

- Three incremental information terms taking three key aspects into account are used to express three types of conditional relevance. Then, FR combining the three incremental information terms is designed;

- A designed multi-label feature selection method that integrates FR with LR is proposed, namely TCRFS;

- TCRFS is compared to 6 state-of-the-art multi-label feature selection methods on 13 benchmark multi-label data sets using 4 evaluation criteria and certified the efficacy in numerous experiments.

The rest of this paper is structured as follows. Section 2 introduces the preliminary theoretical knowledge of this paper: information theory and the four evaluation criteria used in our experiments. Related works are reviewed in Section 3. Section 4 combines three types of conditional relevance to design FR and proposes TCRFS, which integrates FR with LR. The efficacy of TCRFS is proven by comparing it with 6 multi-label methods on 13 benchmark data sets applying 4 evaluation criteria in Section 5. Section 6 concludes our work in this paper.

2. Preliminaries

2.1. Information Theory for Multi-Label Feature Selection

Information theory is a popular and effective means to tackle the problem of multi-label feature selection [27,28,29]. It is used to measure the correlation between random variables [30] and its fundamentals are covered in this subsection.

Assume that the selected feature subset , the label set . To convey feature relevance, we typically employ , which is mutual information between the selected feature subset and the total label set. Mutual information is a measure in information theory. It can be seen as the amount of information contained in one random variable about another random variable. Assume two discrete random variables , , then the mutual information between X and Y can be represented as . Its expansion formula is as follows:

where denotes the information entropy of X, and denotes the conditional entropy of X given Y. Information entropy is a concept used to measure the amount of information in information theory. is defined as:

where represents the probability distribution of , and the base of the logarithm is 2. The conditional entropy is defined as the mathematical expectation of Y for the entropy of the conditional probability distribution of X under the given condition Y:

where and represent the joint probability distribution of and the conditional probability distribution of given , respectively. can also be represented as follows:

where is another measure in information theory, namely, the joint entropy. Its definition is as follows:

According to Equation (4), combining the relationship between the three different measures of the amount information, the mutual information can also be alternatively written as follows:

It is common in multi-label feature selection to have more than two random variables, assuming another discrete random variable . The conditional mutual information , which expresses the expected value of mutual information of two discrete random variables X and Y given the value of the third discrete variable Z. It is represented as follows:

where is the joint mutual information and is the interaction information. Their expansion formulas are as follows:

2.2. Evaluation Criteria for Multi-Label Feature Selection

In our experiments, we employ four distinct evaluation criteria to confirm the efficacy of TCRFS. The four evaluation criteria are essentially separated into two categories: label-based evaluation criteria and example-based evaluation criteria [31]. The label-based evaluation criteria include Macro- and Micro- [32]. The higher the value of these two indicators, the better the classification effect. Macro- actually calculates the -score of q categories first and then averages it as follows:

where , , and represent true positives, false positives, and false negatives in i-th category, respectively. Micro- calculates the confusion matrix of each category, and adds the confusion matrix to obtain a multi-category confusion matrix and then calculates the -score as follows:

The example-based evaluation criteria include the Hamming Loss (HL) and Zero One Loss (ZOL) [33]. The lower the value of these two indicators, the better the classification effect. HL is a metric for the number of times a label is misclassified. That is, a label belonging to a sample is not predicted, and a label not belonging to the sample is projected to belong to the sample. Suppose that is a label test set and is a set of class labels corresponding to , where is the label space with q categories. The definition of HL is as follows:

where ⊕ means the XOR operation. denotes the predicted label set corresponding to . The other example-based criterion ZOL is defined as follows:

If the predicted label subset and the true label subset match, the ZOL score is 1 (i.e., ), but if there is no error, the score is 0 (i.e., ).

3. Related Work

There have been a lot of multi-label learning algorithms proposed so far. These multi-label learning algorithms can be divided into problem transform and algorithm adaptation [34,35]. Problem transform is the conversion of multi-label learning into traditional single-label learning, such as Binary Relevance (BR) [36], Pruned Problem Transformation (PPT) [37], and Label Power (LP) [38]. BR treats the prediction of each label as an independent single classification issue and trains an individual classifier for each label with all of the training data [33]. However, it ignores the relationships between the labels, so it is possible to end up with imbalanced data. PPT removes the labels with a low frequency by considering the label set with a predetermined minimum number of occurrences. However, this irreversible conversion will result in the loss of class information [39].

In contrast to problem transform, algorithm adaptation directly enhances the existing single-label data learning algorithms to adapt to multi-label data processing. Algorithm adaption improves the issues caused by problem transformation. Cai et al. [40] propose Robust and Pragmatic Multi-class Feature Selection (RALM-FS) based on an augmented Lagrangian method, where there is just one -norm loss term in RALM-FS, with an apparent -norm equality constraint. Lee and Kim [41] propose the D2F method that makes use of interactive information based on mutual information. It is capable of measuring multiple variable dependencies by default, and its definition is as follows:

where and are regarded as the feature relevance term and the feature redundancy term, respectively. The feature relevance of D2F only considers the candidate features in feature relevance, which ignores selected features and label correlations. Lee and Kim [42] propose the Pairwise Multi-label Utility (PMU), which is derived from as follows:

where is to measure the feature relevance and is to measure the feature redundancy. Afterward, Lee and Kim [43] propose multi-label feature selection based on a scalable criterion for large SCLS. SCLS uses a scalable relevance evaluation approach to assess conditional relevance more correctly:

In fact, the scalable relevance in SCLS considers both candidate features and selected features but ignores label correlations. Liu et al. [44] propose feature selection for multi-label learning with streaming label (FSSL) in which label-specific features are learned for each newly received label, and then label-specific features are fused for all currently received labels. Lin et al. [45] apply a multi-label feature selection method based on fuzzy mutual information (MUCO) to the redundancy and correlation analysis strategies. The next feature that enters S can be selected by the following:

where denotes the fuzzy mutual information.

When we try to add a new candidate feature to the current selected feature subset S, the feature , the selected features in S, and label correlations in the total label set will all impact feature relevance. To this end, FR is devised by merging the three types of conditional relevance. Therefore, a designed multi-label feature selection method TCRFS that integrates FR with LR is proposed.

4. TCRFS: Feature Selection Combining Three Types of Conditional Relevance

According to the past multi-label feature selection methods, they do not take into account all the three key aspects of influencing feature relevance. That is, the key aspects that influence feature relevance are not comprehensively examined. Here, we utilize three incremental information terms to depict three types of conditional relevance that consider candidate features, selected features, and label correlations comprehensively. The reasons for our consideration are as follows.

4.1. The Three Key Aspects of Feature Relevance We Consider

4.1.1. Candidate Features

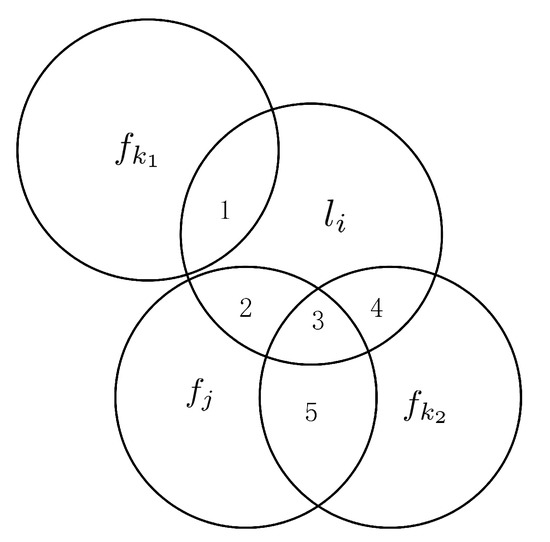

We evaluate each candidate feature according to specific criteria. When a candidate feature attempts to enter the current selected feature subset S as a new selected feature to generate a new selected feature subset, it will affect the amount of information provided by the current selected feature subset to the label set. The influence of candidate features is represented by a Venn diagram, as shown in Figure 1.

Figure 1.

The relationship between features and labels in the Venn diagram.

In Figure 1, we assume that and are two candidate features, is a selected feature in S, and is a label in the total label set L. is irrelevant to , and is redundant with . The amount of information provided by to is mutual information , that is, the area . If is selected, then the amount of information provided by to will be , which corresponds to the area . If is selected, then the amount of information provided by to will be , which corresponds to the area since the area is less than the area , . Therefore, the higher the label-related redundancy between the candidate feature and the selected feature in the current selected feature subset, the greater the amount of information between and is reduced. In other words, the label-related redundancy between the candidate feature and the selected features should be kept to a minimum. From this point of view, takes precedence over .

4.1.2. Selected Features

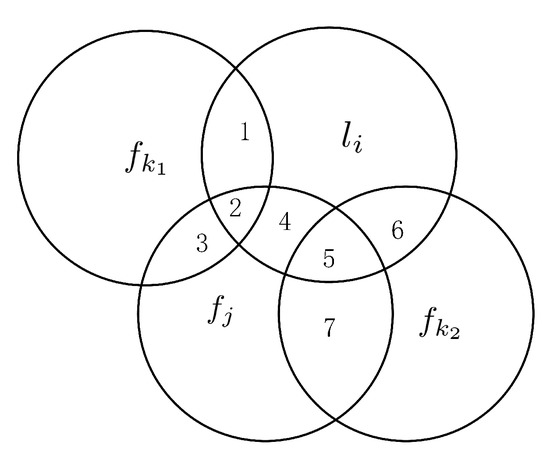

The influence of selected features is represented by a Venn diagram as shown in Figure 2.

Figure 2.

The relationship between features and labels in the Venn diagram.

As shown in Figure 2, and are both redundant with . Without considering selected features, the information that and shared with the label are and , respectively. The area denotes , and the area denotes . We assume that the area is less than the area , the area is less than the area , but the area is larger than . With the selected features taken into account, the information shared by and is (i.e., the area ), and the information shared by and is (i.e., the area ): , but . There are two causes for this situation, the first is that the amount of information provided to by itself is insufficient, and the second is that the label-related redundancy between and is excessive. Now, in the hypothesis, replace the condition that area is larger than the area to the area is less than the area , and we obtain the following result: but . Therefore, considering the influence of the selected features on feature relevance is necessary.

4.1.3. Label Correlations

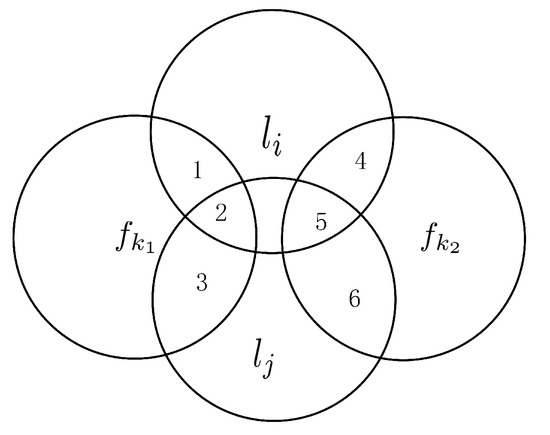

It has no influence on the amount of information between candidate features and each label if the labels are independent. The influence of label correlations is represented by a Venn diagram as shown in Figure 3.

Figure 3.

The relationship between features and labels in the Venn diagram.

In Figure 3, and are two redundant labels, that is, there exists a correlation between and . Without the consideration of label correlations, the amount of information provided to by is (the area ) and the amount of information provided to by is (the area ). Then, while taking label correlations into consideration, the amount of information provided to by is (the area ) and the amount of information provided to by is (the area ). Now, provide the first hypothesis: the area is larger than the area , the area is larger than the area , but the area is less than the area . Hence, but . The second hypothesis modifies the last condition in the first hypothesis: the area is larger than the area . Hence, and . We call the area and the area feature-related label redundancy. Therefore, the original amount of information between candidate features and labels and the feature-related label redundancy can affect the selection of features. Merely using the accumulation of mutual information as the feature relevance will cause the redundant recalculation of feature-related label redundancy.

According to the three key aspects of feature relevance described above, they are indispensable. As a result, we devise FR as the feature relevance term of TCRFS.

4.2. Evaluation Function of TCRFS

4.2.1. Definitions of FR and LR

Regarding the feature relevance evaluation, we distinguish the importance of features based on the closeness of the relationship between features and labels. According to Section 4.1, candidate features, selected features, and label correlations are three key aspects on evaluating feature relevance. In order to be able to perform better in multi-label classification, we utilize three types of conditional relevance (, and ) to represent the feature relevance term in the proposed method. By using three incremental information terms to summarize the three key aspects of feature relevance, FR is devised. The three incremental information terms represent the three respective types of conditional relevance.

Definition 1.

(FR). Suppose that and are the total feature set and the total label set, respectively. Let S be the selected feature set excluding candidate features, that is, . FR is depicted as follows:

where denotes the conditional relevance taking candidate features into account while evaluating feature relevance, denotes the conditional relevance taking selected features into account while evaluating feature relevance, and denotes the conditional relevance taking label correlations into account while evaluating feature relevance. The comprehensive evaluation of the above-mentioned three key aspects of feature relevance is more conducive to capturing the optimal features. Furthermore, FR can be expanded as follows:

where and are considered to be two constants in feature selection.

Definition 2.

(LR). In the initial analysis of the three key aspects of feature relevance, it is mentioned that the label-related feature redundancy is repeatedly calculated in the previous methods, which will impact on capturing the optimal features. Here, LR is devised as follows:

As indicated in Table 2, we have compiled a list of feature relevance terms and feature redundancy terms for TCRFS and the contrasted methods based on information theory.

Table 2.

Feature relevance terms and feature redundancy terms of multi-label feature selection methods.

4.2.2. Proposed Method

We design FR and LR to analyze and discuss feature relevance and feature redundancy, respectively, in Section 4.2.1. Subsequently, TCRFS, a designed multi-label feature selection method that integrates FR with LR, is suggested. The definition of TCRFS is as follows:

where and represent the number of the total label set and the number of the selected subset, respectively, and their inversions are and , respectively. The feature relevance term and the feature redundancy term can be balanced using the two balance parameters and . According to Formula (19), Formula (21) can be rewritten as follows:

where is regarded as the new feature relevance term and is regarded as the new feature redundancy term. The pseudo-code of TCRFS (Algorithm 1) is as follows:

| Algorithm 1. TCRFS. |

|

First, in lines 1–5, the selected feature subset S and the number of selected features k in the proposed method are initialized. To capture the initial feature, we calculate the incremental information to capture the first feature (lines 6–12). Then, until the procedure is complete, calculate and capture the following feature (lines 13–20).

4.3. Time Complexity

Time complexity is also one of the criteria for evaluating the pros and cons of methods. The time complexity of each contrasted method and TCRFS has been computed here. Assume that there are n, p, and q instances, features, and labels, respectively. The computational complexity of mutual information and conditional mutual information is for all instances that have to be visited for probability. Each iteration of RALM-FS requires . Assume that k denotes the number of selected features. The time complexity of TCRFS is as three incremental information terms and one label-related feature redundancy term are calculated. Similarly, D2F, PMU, and SCLS have time complexities of , , and , respectively. FSSL has a time complexity of . The time complexity of MUCO is since it constructs a fuzzy matrix and incremental search.

5. Experimental Evaluation

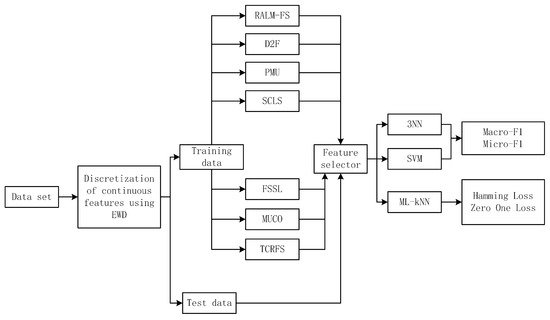

Against the demonstrated efficacy of TCRFS, we compare it to 6 advanced multi-label feature selection approaches (RALM-FS [40], D2F [41], PMU [42], SCLS [43], FSSL [44], and MUCO [45]), on 13 benchmark data sets in this section. As a result, we have conducted numerous experiments based on four different criteria using three classifiers, which are Support Vector Machine (SVM), 3-Nearest Neighbor (3NN), and Multi-Label k-Nearest Neighbor (ML-kNN) [46,47]. The 13 multi-label benchmark data sets utilized in the experiments are depicted first. Following that, the findings of the experiments are discussed and examined. Four evaluation metrics that we employed in the experiments have been offered in Section 2.2. The approximate experimental framework is depicted in Figure 4.

Figure 4.

The experimental framework.

5.1. Multi-Label Data Sets

A total of 13 multi-label benchmark data sets from 4 different domains have been depicted in Table 3, which are collected on the Mulan repository [48]. Among them, the Birds data set classifies the birds in Audio [49], the Emotions data set is gathered for Music [38], the Genbase and Yeast data sets are primarily concerned with the Biology category [34], and the remaining 9 data sets are categorized as Text. The 13 data sets we chose have an abundant number of instances, which are split into two parts: training set and test set [48]. Ueda and Saito [50] attempted to classify real Web pages linked from the “yahoo.com” domain, which is composed of 14 top-level categories, each of which is split into many second-level subcategories. They tested 11 of the 14 independent text classification problems by focusing on the second-level categories. For each problem, the training set includes 2000 documents and the test set includes 3000 documents, such as the Arts and Health data sets, and so on [51]. The number of labels and the number of features both vary substantially. Previous research demonstrates that maintaining 10% of the features results in no loss, while retaining 1% of the features results in a slight loss dependent on document frequency [3]. For example, the Arts and Social data sets have more than 20,000 features and 50,000 features, respectively, and they retain about 2% of the features with the highest document frequency. The continuous features of 13 data sets are discretized into equal intervals with 3 bins as indicated in the literature [38,52].

Table 3.

The depiction of data sets in our experiments.

5.2. The Theoretical Justification of TCRFS on an Artificial Data Set

To further justify the indispensability of the three key aspects (candidate features, selected features, and label correlations) for feature relevance evaluation. We employ an artificial data set to compare the classification performance of five information-theoretical-based methods (D2F, PMU, SCLS, MUCO, and TCRFS) that use distinct feature relevance terms. With respect to the feature relevance terms, D2F and PMU employ the amount of information between candidate features and labels, SCLS employs a scalable relevance evaluation, which takes feature redundancy into account in feature relevance, MUCO employs fuzzy mutual information, and TCRFS comprehensively considers the three types of conditional relevance we mentioned to design FR. Table 4 and Table 5 display the training set and the test set, respectively.

Table 4.

Training set.

Table 5.

Test set.

Table 6 shows the experimental results and the feature ranking of each approach on the artificial data set. As shown in Table 6, the first feature selected by TCRFS is . Different from D2F and PMU, is regarded as the least essential feature. In TCRFS, feature rankings , and are higher than the feature ranking of SCLS, whereas MUCO selects as the first feature. TCRFS achieves the best classification performance overall. Therefore, TCRFS, which considers three key aspects (candidate features, selected features, and label correlations), is justified.

Table 6.

Experimental results on the artificial data set.

5.3. Analysis and Discussion of the Experimental Findings

The experiments that run on a 3.70 GHz Intel Core i9-10900K processor with 32 GB of main memory are performed on four different evaluation criteria regarding three classifiers. Python is used to create the proposed method [53]. Hamming Loss is conducted on the ML-kNN (k = 10) classifier, and Macro- and Micro- measures are conducted on the SVM and 3NN classifiers. The number of selected features on the 12 data sets is set to {1%, 2%,..., 20%} of the total number of features when using a step size of 1, whereas the number of selected features on the Medical data set is set to {1%, 2%,..., 17%}. Table 7, Table 8, Table 9, Table 10, Table 11 and Table 12 present the classification performance of 6 contrasted approaches and TCRFS on 13 data sets. The average classification results and standard deviations are used to express the classification performance. The average classification results of each method on all data sets are represented in the row “Average”. The data of the best-performing classification results in Table 7, Table 8, Table 9, Table 10, Table 11 and Table 12 are bolded.

Table 7.

Classification performance of each method regarding Macro- on SVM classifier (mean ± std).

Table 8.

Classification performance of each method regarding Micro- on SVM classifier (mean ± std).

Table 9.

Classification performance of each method regarding Macro- on 3NN classifier (mean ± std).

Table 10.

Classification performance of each method regarding Micro- on 3NN classifier (mean ± std).

Table 11.

Classification performance of each method regarding HL on ML-kNN classifier (mean ± std).

Table 12.

Classification performance of each method regarding ZOL on ML-kNN classifier (mean ± std).

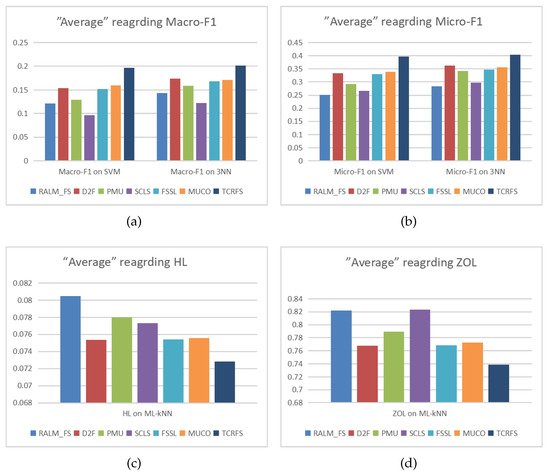

Observing Table 7 and Table 8, TCRFS delivers the optimum classification performance on SVM classifier regarding Macro- and Micro- measures, since the higher the values of the two measures, the more superior the classification performance. In Table 9, except for the Yeast data set, TCRFS beats 6 other contrasted approaches on 12 data sets using 3NN classifier for Macro-. TCRFS surpasses the other 6 contrasted approaches on 11 data sets using the 3NN classifier for Micro- in Table 10. According to the properties of the HL and ZOL measures, the lower values of the two measures mean the more excellent classification performance. In Table 11 and Table 12, TCRFS can exhibit the best system performance on 11 data sets on the ML-kNN classifier for the HL and ZOL criteria. In some cases, comprehensive consideration of the three key aspects to assess feature relevance does not achieve the best classification effect. The classification results of D2F takes the first position on the Yeast data set regarding Macro- on the 3NN classifier. PMU and RALM-FS possess the optimal classification performance on the Yeast data set and the Education data sets, respectively. In terms of HL (Table 11), RALM-FS and SCLS surpass other approaches on the Birds and Emotions data sets, respectively. In terms of ZOL (Table 12), FSSL and D2F surpass other approaches on the Birds and Emotions data sets, respectively. Despite the fact that D2F, PMU, RALM-FS, SCLS and FSSL have the greatest system performance on individual data sets, the overall optimal classification performance is still TCRFS. The average values of each method for different evaluation criteria are illustrated in Figure 5. The abscissa and different colored bars represent different feature selection methods, while the ordinate represents the average value.

Figure 5.

The average values of each method for (a) Macro-, (b) Micro-, (c) HL, (d) ZOL.

Observing the trend of the bar graphs in Figure 5a,b, Macro- results and Micro- results achieved on the SVM classifier and 3NN classifier have reached similar classification performance. The average results of TCRFS in terms of Macro- are roughly 0.2 or above, and the average results of TCRFS in terms of Micro- are roughly 0.4 or above, which are clearly greater than the average results of other approaches. The average result of TCRFS is less than 0.074 in Figure 5c and less than 0.74 in Figure 5d, which are clearly less than the average results of other approaches. Intuitively, TCRFS clearly presents the most excellent average values in terms of the four evaluation criteria. In order to further observe the classification performance of the seven methods on the data sets, we draw Figure 6, Figure 7, Figure 8 and Figure 9.

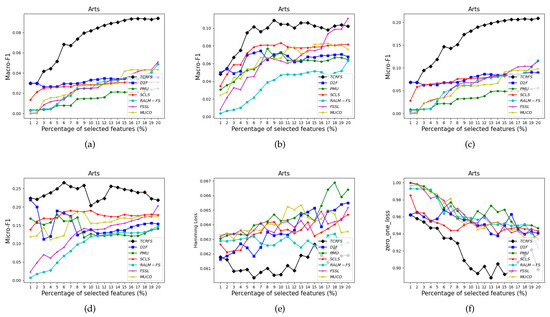

Figure 6.

The classification performance of seven methods on Arts data set for (a) Macro- using SVM, (b) Macro- using 3NN, (c) Micro- using SVM, (d) Micro- using 3NN, (e) HL using ML-kNN, (f) ZOL using ML-kNN.

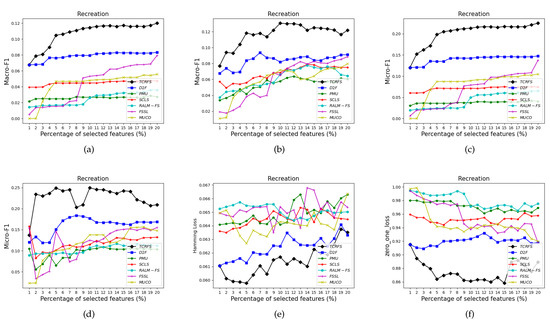

Figure 7.

The classification performance of seven methods on Recreation data set for (a) Macro- using SVM, (b) Macro- using 3NN, (c) Micro- using SVM, (d) Micro- using 3NN, (e) HL using ML-kNN, (f) ZOL using ML-kNN.

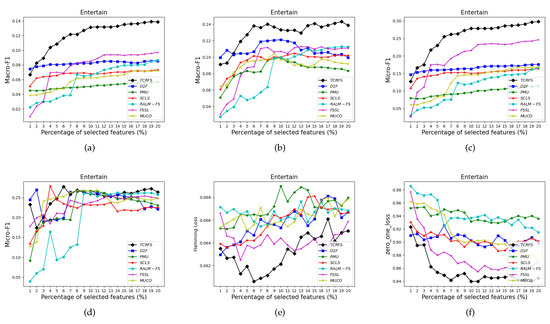

Figure 8.

The classification performance of seven methods on Entertain data set for (a) Macro- using SVM, (b) Macro- using 3NN, (c) Micro- using SVM, (d) Micro- using 3NN, (e) HL using ML-kNN, (f) ZOL using ML-kNN.

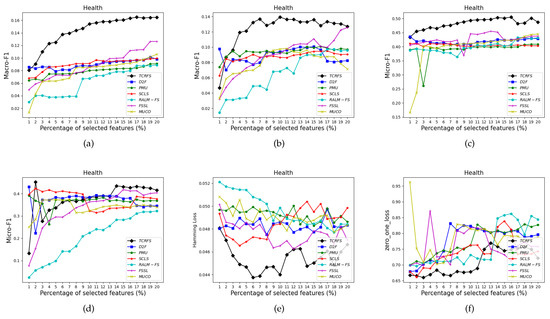

Figure 9.

The classification performance of seven methods on Health data set for (a) Macro- using SVM, (b) Macro- using 3NN, (c) Micro- using SVM, (d) Micro- using 3NN, (e) HL using ML-kNN, (f) ZOL using ML-kNN.

Figure 6, Figure 7, Figure 8 and Figure 9 indicate that TCRFS delivers superior classification performance on the Arts, Recreation, Entertain, and Health data sets regarding the four evaluation criteria. As shown in Figure 6, the classification performance of our method is significantly better than the other six contrasted methods. On the Recreation data set (Figure 7), the classification performance of the method is not constantly improved by increasing the number of selected features. TCRFS, for example, may obtain the most significant classification results regarding the ZOL measure when the number of selected features is set at 8% or 11% of the total number of features. On the Entertain data set (Figure 8), TCRFS is clearly in the lead regarding Macro- when the percentage of the selected features is larger than one. In terms of HL and ZOL, TCRFS also possesses significant advantages among the seven methods. The proposed method can obtain the optimum classification performance for each metric when the percentage of the selected features is set to 6%. In Figure 9, our method outperforms the other six contrasted methods on the Health data set utilizing the four metrics. Although in most cases the performance of feature selection methods improves as the number of selected features increases, as the number of features increases to a certain number, the improvement in the classification performance tends to be flat. When the percentage of the number of features increases to about 16% on the Arts data set (Figure 6a–d) and the percentage of the number of features increases to about 19% on the Entertain data (Figure 8a–d), the classification performance has reached a relatively high level. That is to say, an optimal feature subset is to select a smaller number of features to achieve a better classification performance. However, some methods appear to have the same classification performance as TCRFS in Figure 8d and Figure 9e, but TCRFS is superior on average, and they are not as excellent as TCRFS overall. As a consequence, it is critical to consider the three types of conditional relevance for multi-label feature selection.

We create the final feature subset by starting from an empty feature subset and adding a feature after each calculation of the proposed method. According to the TCRFS evaluation function, the score of each candidate feature is calculated and sorted. Due to TCRFS using three incremental information terms as the evaluation criteria for feature relevance, the incremental information of the remaining candidate features will change after each time the selection operation of candidate features is completed. It needs to be recalculated and scored. Therefore, while achieving better classification performance, more time is consumed.

6. Conclusions

In this paper, a TCRFS that combines FR and LR is proposed to capture the optimal selected feature subset. FR fuses three incremental information terms that take three key aspects into consideration to convey three types of conditional relevance. Then, TCRFS is compared with 1 embedded approach (RALM-FS) and 5 information-theoretical-based approaches (D2F, PMU, SCLS, FSSL, and MUCO) on 13 multi-label benchmark data sets to demonstrate its efficacy. The classification performance of seven multi-label feature selection methods is evaluated through four multi-label metrics (Macro-, Micro-, Hamming Loss, and Zero One Loss) for three classifiers (SVM, 3NN, and ML-kNN). Finally, the classification results verify that TCRFS outperforms the other six contrasted approaches. Therefore, candidate features, selected features, and label correlations are critical for feature relevance evaluation, and they can aid in the selection of a more suitable subset of selected features. Our current research is based on a fixed label set for multi-label feature selection. In our future research, we intend to explore multi-label feature selection integrating information theory with the stream label problem.

Author Contributions

Conceptualization, L.G.; methodology, L.G.; software, P.Z. and L.G.; validation, Y.W. and Y.L.; formal analysis, L.G.; investigation, L.G.; resources, Y.W.; data curation, L.H.; writing—original draft preparation, L.G.; writing—review and editing, L.G.; visualization, L.G. and Y.W.; supervision, Y.W.; project administration, L.H.; funding acquisition, L.H. All authors have read and approved the final manuscript.

Funding

This work was supported in part by the National Key Research and Development Plan of China under Grant 2017YFA0604500, in part by the Key Scientific and Technological Research and Development Plan of Jilin Province of China under Grant 20180201103GX, and in part by the Project of Jilin Province Development and Reform Commission under Grant 2019FGWTZC001.

Data Availability Statement

The multi-label data sets used in the experiment are from Mulan Library http://mulan.sourceforge.net/datasets-mlc.html, accessed on 24 November 2021.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, Z.H.; Zhang, M.L. Multi-label Learning. 2017. Available online: https://cs.nju.edu.cn/zhouzh/zhouzh.files/publication/EncyMLDM2017.pdf (accessed on 26 November 2021).

- Kashef, S.; Nezamabadi-pour, H. A label-specific multi-label feature selection algorithm based on the Pareto dominance concept. Pattern Recognit. 2019, 88, 654–667. [Google Scholar] [CrossRef]

- Zhang, M.L.; Wu, L. Lift: Multi-label learning with label-specific features. IEEE PAMI 2014, 37, 107–120. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.L.; Li, Y.K.; Liu, X.Y.; Geng, X. Binary relevance for multi-label learning: An overview. Front. Comput. Sci. 2018, 12, 191–202. [Google Scholar] [CrossRef]

- Al-Salemi, B.; Ayob, M.; Noah, S.A.M. Feature ranking for enhancing boosting-based multi-label text categorization. Expert Syst. Appl. 2018, 113, 531–543. [Google Scholar] [CrossRef]

- Yu, Y.; Pedrycz, W.; Miao, D. Neighborhood rough sets based multi-label classification for automatic image annotation. Int. J. Approx. Reason. 2013, 54, 1373–1387. [Google Scholar] [CrossRef]

- Yu, G.; Rangwala, H.; Domeniconi, C.; Zhang, G.; Yu, Z. Protein function prediction with incomplete annotations. IEEE/ACM Trans. Comput. Biol. Bioinform. 2013, 11, 579–591. [Google Scholar] [CrossRef][Green Version]

- Tran, M.Q.; Li, Y.C.; Lan, C.Y.; Liu, M.K. Wind Farm Fault Detection by Monitoring Wind Speed in the Wake Region. Energies 2020, 13, 6559. [Google Scholar] [CrossRef]

- Tran, M.Q.; Elsisi, M.; Liu, M.K. Effective feature selection with fuzzy entropy and similarity classifier for chatter vibration diagnosis. Measurement 2021, 184, 109962. [Google Scholar] [CrossRef]

- Tran, M.Q.; Liu, M.K.; Elsisi, M. Effective multi-sensor data fusion for chatter detection in milling process. ISA Trans. 2021. Available online: https://www.sciencedirect.com/science/article/abs/pii/S0019057821003724 (accessed on 26 November 2021). [CrossRef]

- Gao, W.; Hu, L.; Zhang, P.; Wang, F. Feature selection by integrating two groups of feature evaluation criteria. Expert Syst. Appl. 2018, 110, 11–19. [Google Scholar] [CrossRef]

- Huang, J.; Li, G.; Huang, Q.; Wu, X. Learning label specific features for multi-label classification. In Proceedings of the 2015 IEEE International Conference on Data Mining, Atlantic City, NJ, USA, 14–17 November 2015; pp. 181–190. [Google Scholar] [CrossRef]

- Zhang, P.; Gao, W.; Liu, G. Feature selection considering weighted relevancy. Appl. Intell. 2018, 48, 4615–4625. [Google Scholar] [CrossRef]

- Gao, W.; Hu, L.; Zhang, P. Class-specific mutual information variation for feature selection. Pattern Recognit. 2018, 79, 328–339. [Google Scholar] [CrossRef]

- Zhang, P.; Gao, W. Feature selection considering Uncertainty Change Ratio of the class label. Appl. Soft 2020, 95, 106537. [Google Scholar] [CrossRef]

- Liu, H.; Sun, J.; Liu, L.; Zhang, H. Feature selection with dynamic mutual information. Pattern Recognit. 2009, 42, 1330–1339. [Google Scholar] [CrossRef]

- Vergara, J.R.; Estévez, P.A. A review of feature selection methods based on mutual information. Neural. Comput. 2014, 24, 175–186. [Google Scholar] [CrossRef]

- Hancer, E.; Xue, B.; Zhang, M. Differential evolution for filter feature selection based on information theory and feature ranking. Knowl. Based Syst. 2018, 140, 103–119. [Google Scholar] [CrossRef]

- Brezočnik, L.; Fister, I.; Podgorelec, V. Swarm intelligence algorithms for feature selection: A review. Appl. Sci. 2018, 8, 1521. [Google Scholar] [CrossRef]

- Zhu, P.; Xu, Q.; Hu, Q.; Zhang, C.; Zhao, H. Multi-label feature selection with missing labels. Pattern Recognit. 2018, 74, 488–502. [Google Scholar] [CrossRef]

- Kohavi, R.; John, G.H. Wrappers for feature subset selection. Appl. Intell. 1997, 97, 273–324. [Google Scholar] [CrossRef]

- Paniri, M.; Dowlatshahi, M.B.; Nezamabadi-pour, H. MLACO: A multi-label feature selection algorithm based on ant colony optimization. Knowl. Based Syst. 2020, 192, 105285. [Google Scholar] [CrossRef]

- Blum, A.L.; Langley, P. Selection of relevant features and examples in machine learning. Appl. Intell. 1997, 97, 245–271. [Google Scholar] [CrossRef]

- Cherrington, M.; Thabtah, F.; Lu, J.; Xu, Q. Feature selection: Filter methods performance challenges. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Li, F.; Miao, D.; Pedrycz, W. Granular multi-label feature selection based on mutual information. Pattern Recognit. 2017, 67, 410–423. [Google Scholar] [CrossRef]

- Zhang, Z.; Li, S.; Li, Z.; Chen, H. Multi-label feature selection algorithm based on information entropy. Comput. Sci. 2013, 50, 1177. [Google Scholar]

- Wang, J.; Wei, J.M.; Yang, Z.; Wang, S.Q. Feature selection by maximizing independent classification information. IEEE Trans. Knowl. Data Eng. 2017, 29, 828–841. [Google Scholar] [CrossRef]

- Lin, Y.; Hu, Q.; Liu, J.; Duan, J. Multi-label feature selection based on max-dependency and min-redundancy. Neurocomputing 2015, 168, 92–103. [Google Scholar] [CrossRef]

- Ramírez-Gallego, S.; Mouriño-Talín, H.; Martínez-Rego, D.; Bolón-Canedo, V.; Benítez, J.M.; Alonso-Betanzos, A.; Herrera, F. An information theory-based feature selection framework for big data under apache spark. IEEE Trans. Syst. 2017, 48, 1441–1453. [Google Scholar] [CrossRef]

- Song, X.F.; Zhang, Y.; Guo, Y.N.; Sun, X.Y.; Wang, Y.L. Variable-size cooperative coevolutionary particle swarm optimization for feature selection on high-dimensional data. IEEE Trans. Evol. Comput. 2020, 24, 882–895. [Google Scholar] [CrossRef]

- Zhang, M.L.; Zhou, Z.H. A review on multi-label learning algorithms. IEEE Trans. Knowl. Data Eng. 2013, 26, 1819–1837. [Google Scholar] [CrossRef]

- Hu, L.; Li, Y.; Gao, W.; Zhang, P.; Hu, J. Multi-label feature selection with shared common mode. Pattern Recognit. 2020, 104, 107344. [Google Scholar] [CrossRef]

- Zhang, P.; Gao, W.; Hu, J.; Li, Y. Multi-Label Feature Selection Based on High-Order Label Correlation Assumption. Entropy 2020, 22, 797. [Google Scholar] [CrossRef]

- Zhang, P.; Gao, W. Feature relevance term variation for multi-label feature selection. Appl. Intell. 2021, 51, 5095–5110. [Google Scholar] [CrossRef]

- Xu, S.; Yang, X.; Yu, H.; Yu, D.J.; Yang, J.; Tsang, E.C. Multi-label learning with label-specific feature reduction. Knowl. Based Syst. 2016, 104, 52–61. [Google Scholar] [CrossRef]

- Boutell, M.R.; Luo, J.; Shen, X.; Brown, C.M. Learning multi-label scene classification. Pattern Recognit. 2004, 37, 1757–1771. [Google Scholar] [CrossRef]

- Read, J. A pruned problem transformation method for multi-label classification. In New Zealand Computer Science Research Student Conference (NZCSRS 2008); Citeseer: Princeton, NJ, USA, 2008; Volume 143150, p. 41. [Google Scholar]

- Trohidis, K.; Tsoumakas, G.; Kalliris, G.; Vlahavas, I.P. Multi-label classification of music into emotions. In Proceedings of the ISMIR, Philadelphia, PA, USA, 14–18 September 2008; Volume 8, pp. 325–330. [Google Scholar]

- Lee, J.; Kim, D.W. Memetic feature selection algorithm for multi-label classification. Inf. Sci. 2015, 293, 80–96. [Google Scholar] [CrossRef]

- Cai, X.; Nie, F.; Huang, H. Exact top-k feature selection via ℓ2,0-norm constraint. In Proceedings of the Twenty-Third International Joint Conference on Artificial Intelligence, Beijing, China, 3–9 August 2013. [Google Scholar]

- Lee, J.; Kim, D.W. Mutual information-based multi-label feature selection using interaction information. Expert Syst. Appl. 2015, 42, 2013–2025. [Google Scholar] [CrossRef]

- Lee, J.; Kim, D.W. Feature selection for multi-label classification using multivariate mutual information. Pattern Recognit. Lett. 2013, 34, 349–357. [Google Scholar] [CrossRef]

- Lee, J.; Kim, D.W. SCLS: Multi-label feature selection based on scalable criterion for large label set. Pattern Recognit. 2017, 66, 342–352. [Google Scholar] [CrossRef]

- Liu, J.; Li, Y.; Weng, W.; Zhang, J.; Chen, B.; Wu, S. Feature selection for multi-label learning with streaming label. Neurocomputing 2020, 387, 268–278. [Google Scholar] [CrossRef]

- Lin, Y.; Hu, Q.; Liu, J.; Li, J.; Wu, X. Streaming feature selection for multilabel learning based on fuzzy mutual information. IEEE Trans. Fuzzy Syst. 2017, 25, 1491–1507. [Google Scholar] [CrossRef]

- Kong, D.; Fujimaki, R.; Liu, J.; Nie, F.; Ding, C. Exclusive Feature Learning on Arbitrary Structures via ℓ1,2-norm. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1655–1663. [Google Scholar]

- Zhang, M.L.; Zhou, Z.H. ML-KNN: A lazy learning approach to multi-label learning. Pattern Recognit. 2007, 40, 2038–2048. [Google Scholar] [CrossRef]

- Tsoumakas, G.; Spyromitros-Xioufis, E.; Vilcek, J.; Vlahavas, I. Mulan: A java library for multi-label learning. J. Mach. Learn Res. 2011, 12, 2411–2414. [Google Scholar]

- Zhang, P.; Gao, W.; Hu, J.; Li, Y. Multi-label feature selection based on the division of label topics. Inf. Sci. 2021, 553, 129–153. [Google Scholar] [CrossRef]

- Ueda, N.; Saito, K. Parametric mixture models for multi-labeled text. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2003; pp. 737–744. [Google Scholar]

- Zhang, Y.; Zhou, Z.H. Multilabel dimensionality reduction via dependence maximization. ACM Trans. Knowl. Discov. Data 2010, 4, 1–21. [Google Scholar] [CrossRef]

- Doquire, G.; Verleysen, M. Feature selection for multi-label classification problems. In International Work-Conference on Artificial Neural Networks; Springer: Berlin/Heidelberger, Germany, 2011; pp. 9–16. [Google Scholar]

- Szymański, P.; Kajdanowicz, T. A scikit-based Python environment for performing multi-label classification. arXiv 2017, arXiv:1702.01460. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).