Abstract

Deep Gaussian Processes (DGPs) were proposed as an expressive Bayesian model capable of a mathematically grounded estimation of uncertainty. The expressivity of DGPs results not only from the compositional character but also from the distribution propagation within the hierarchy. Recently, it was pointed out that the hierarchical structure of DGPs is well suited to modeling multi-fidelity regression, in which one is provided with sparse high-precision observations and plentiful low-fidelity observations. We propose the conditional DGP model, in which the latent GPs are directly supported by the fixed lower-fidelity data. The moment matching method is then applied to approximate the marginal prior of the conditional DGP with a GP. The obtained effective kernels are implicit functions of the lower-fidelity data, manifesting the expressivity contributed by distribution propagation within the hierarchy. The hyperparameters are learned by optimizing the approximate marginal likelihood. Experiments with synthetic and high-dimensional data show performance comparable to other multi-fidelity regression methods, variational inference, and multi-output GPs. We conclude that, with the low-fidelity data and the hierarchical DGP structure, the effective kernel encodes the inductive bias for the true function while allowing compositional freedom.

1. Introduction

Multi-fidelity regression refers to a category of learning tasks in which a sparse set of precise data is given to infer the underlying function, but a larger amount of less precise or noisy observations is also provided. Multi-fidelity tasks frequently occur in various fields of science because precise measurement is often costly while approximate measurements are more affordable (see [1,2] for example). The assumption that the more precise function is a function of the less precise one [1,3] is shared by some hierarchical learning algorithms (e.g., one-shot learning in [4], meta learning [5], and continual learning [6]). Thus, one can view the plentiful low fidelity data as a source of prior knowledge so that the function can be efficiently learned with sparse data.

In Gaussian Process (GP) regression [7] domain experts can encode their knowledge into the combinations of covariance functions [8,9], building an expressive learning model. However, construction of an appropriate kernel becomes less clear when building a prior for the precise function in the context of multi-fidelity regression because the uncertainty, both epistemic and aleatoric, in the low fidelity function prior learned by the plentiful data should be taken into account. It is desirable to fuse the low fidelity data to an effective kernel as a prior, taking advantage of marginal likelihood being able to avoid overfitting, and then perform the GP regression as if only the sparse precise observations are given.

Deep Gaussian Process (DGP) [10] and the similar models [11,12] are expressive models with a hierarchical composition of GPs. As pointed out in [3], a hierarchical structure is particularly suitable for fusing data of different fidelity into one learning model. Although full Bayesian inference is promising in obtaining expressiveness while avoiding overfitting, exact inference is not tractable and approximate solutions such as the variational approach [13,14,15] are employed. Ironically, the major difficulty in inference comes from marginalization of the latent GPs in Bayesian learning, which, on the flip side, is also why overfitting can be prevented.

We propose a conditional DGP model in which the intermediate GPs are supported by the lower fidelity data. We also define the corresponding marginal prior distribution which is obtained by marginalizing all GPs except the exposed one. For some families of kernel compositions, we previously developed an analytical method in calculating exact covariance in the marginal prior [16]. As such, the method is applied here so the marginal prior is approximated as a GP prior with an effective kernel. The high fidelity data are then connected to the exposed GP, and the hyperparameters throughout the hierarchy are optimized via the marginal likelihood. Our model, therefore, captures the expressiveness embedded in hierarchical composition, retains the Bayesian character hinted in the marginal prior, but loses the non-Gaussian aspect of DGP. From the analytical expressions, one can partially understand the propagation of uncertainty in latent GPs as it is responsible for the non-stationary aspect of effective kernels. Moreover, the compositional freedom, i.e., different compositions may result in the same target function, in a DGP model [17,18] can be shown to be intact in our approach.

The paper is organized as follows. In Section 2, we review the literature on multi-fidelity regression models and deep kernels. Background on GPs, DGPs, and the moment matching method is introduced in Section 3. The conditional DGP model defined as a marginal prior and the exact covariance associated with two families of kernel compositions are discussed in Section 4. The method of hyperparameter learning is given in Section 5, and simulations of synthetic and high dimensional multi-fidelity regression in a variety of situations are presented in Section 6. A brief discussion and the conclusion appear in Section 7 and Section 8, respectively.

2. Related Work

Assuming autoregressive relations between data of different fidelity, Kennedy and O’Hagan [1] proposed the AR1 model for multi-fidelity regression tasks. Le Gratiet and Garnier [19] improved computational efficiency with a recursive multi-fidelity model. Deep-MF [20] mapped the input space to the latent space and followed the work in Kennedy and O’Hagan [1]. NARGP [21] stacked a sequence of GPs in which the posterior mean of the low-fidelity function is passed to the input of the next GP while the associated uncertainty is not. GPAR [22] uses a similar conditional structure between functions of interest. MF-DGP in [3] exploited the DGP structure for multi-fidelity regression tasks and used the approximate variational inference in [13]. Multi-output GPs [23,24] regard the observations from different data sets as realizations of a vector-valued function; [25] modeled the multi-output GP using a general relation between multiple target functions and multiple hidden functions. Alignment learning [26,27] is an application of warped GPs [11,12] to time series data. We model multi-fidelity regression as kernel learning, effectively taking the space of functions representing the low fidelity data into account.

As for general studies of deep and non-stationary kernels, Williams [28] and Cho and Saul [29] used the basis of error functions and Heaviside polynomial functions to obtain the arc-sine and arc-cosine kernel functions, respectively, of neural networks. Duvenaud et al. [30] employed the analogy between neural networks and GPs, and constructed the deep kernel for DGP. Dunlop et al. [31] analyzed a variety of non-stationary kernel compositions in DGP, and Shen et al. [32] provided an insight from the Wigner transformation of general two-input functions. Wilson et al. [33] proposed a general recipe for constructing deep kernels with neural networks. Daniely et al. [34] computed the deep kernel from the perspective of two correlated random variables. Mairal et al. [35] and Van der Wilk et al. [36] studied deep kernels in convolutional models. The moment matching method [16] allows obtaining the effective kernel encoding the uncertainty in learning the lower fidelity function.

3. Background

3.1. Gaussian Process and Deep Gaussian Process

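Gaussian Process (GP) [7] is a popular Bayesian learning model in which the prior over a continuous function is modeled as a Gaussian. Denoted by $f \sim \mathcal{GP}(\mu, k)$, the collection of any finite function values $f(\mathbf{x}_{1:N})$ with $\mathbf{x} \in \mathbb{R}^d$ has the mean $\mathbb{E}[f(\mathbf{x}_i)] = \mu(\mathbf{x}_i)$ and covariance $\mathbb{E}\{[f(\mathbf{x}_i) - \mu(\mathbf{x}_i)][f(\mathbf{x}_j) - \mu(\mathbf{x}_j)]\} = k(\mathbf{x}_i, \mathbf{x}_j)$. Thus, a continuous and deterministic mean function $\mu$ and a positive definite kernel function $k$ fully specify the stochastic process. It is common to consider the zero-mean case and write down the prior distribution, $p(\mathbf{f}|\mathbf{X}) = \mathcal{N}(\mathbf{0}, K)$, with covariance matrix K. In the setting of a regression task with input and output of data $\{\mathbf{X}, \mathbf{y}\}$, the hyperparameters in the mean and kernel functions can be learned by optimizing the marginal likelihood, $p(\mathbf{y}|\mathbf{X}) = \int d\mathbf{f}\, p(\mathbf{y}|\mathbf{f})\, p(\mathbf{f}|\mathbf{X})$.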
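As an illustration of the marginal likelihood objective described above, the following minimal sketch evaluates the log marginal likelihood of a zero-mean GP with an SE kernel and Gaussian observation noise. The function names (`se_kernel`, `log_marginal_likelihood`), the toy data, and the hyperparameter values are assumptions made for illustration only and are not taken from the paper.

```python
import numpy as np

def se_kernel(xa, xb, variance=1.0, lengthscale=1.0):
    """Squared exponential kernel between two 1-D input arrays."""
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * diff**2 / lengthscale**2)

def log_marginal_likelihood(x, y, variance, lengthscale, noise):
    """log N(y | 0, K + noise * I), evaluated with a Cholesky factorization."""
    n = x.shape[0]
    K = se_kernel(x, x, variance, lengthscale) + noise * np.eye(n)
    L = np.linalg.cholesky(K)
    # alpha = (K + noise * I)^{-1} y via two triangular solves
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return (-0.5 * y @ alpha
            - np.sum(np.log(np.diag(L)))      # equals -0.5 * log det
            - 0.5 * n * np.log(2.0 * np.pi))

# Hypothetical toy data: eight noiseless samples of a sine wave.
x = np.linspace(0.0, 1.0, 8)
y = np.sin(2.0 * np.pi * x)
lml = log_marginal_likelihood(x, y, variance=1.0, lengthscale=0.3, noise=1e-4)
```

In practice this quantity would be maximized over the kernel hyperparameters with a gradient-based optimizer.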

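Deep Gaussian Process (DGP) was proposed in [10] as a hierarchical composition of GPs for superior expressivity. From a generative view, the distribution over the composite function is a serial product of Gaussian conditional distributions,

$$p(\mathbf{F}_L, \mathbf{F}_{L-1}, \ldots, \mathbf{F}_1 | \mathbf{X}) = p(\mathbf{F}_L | \mathbf{F}_{L-1})\, p(\mathbf{F}_{L-1} | \mathbf{F}_{L-2}) \cdots p(\mathbf{F}_1 | \mathbf{X}), \tag{1}$$

in which the capital bold-face symbol $\mathbf{F}_i$ stands for a multi-output GP in the $i$th layer and the independent components have $f_i \sim \mathcal{GP}(\mu_i, k_i)$. The above is the general DGP, and the width in each layer is denoted by $H_i$. In such notation, the zeroth layer represents the collection of inputs $\mathbf{X}$. Here, we shall consider the DGP with $L = 2$ and $H_1 = H_2 = 1$ and the three-layer counterpart.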

The intractability of exact inference is a result of the fact that the random variables $\mathbf{F}_i$ for $0 < i < L$ appear in the covariance matrix K. In a full Bayesian inference, these random variables are marginalized in order to estimate the evidence associated with the data.

3.2. Multi-Fidelity Deep Gaussian Process

The multi-fidelity model in [1] considered the regression task for a data set consisting of observations measured with both high and low precision. For simplicity, the more precise observations are denoted by $\{\mathbf{X}, \mathbf{y}\}$ and those with low precision by $\{\mathbf{X}_1, \mathbf{y}_1\}$. The main assumption made in [1] is that the less precise observations come from a function $f_1(\mathbf{x})$ modeled by a GP with zero mean and kernel k, while the more precise ones come from the combination $f(\mathbf{x}) = \alpha f_1(\mathbf{x}) + h(\mathbf{x})$. With the residual function h being a GP with kernel $k_h$, one can jointly model the two subsets with the covariance within precise observations $\alpha^2 K_{\mathbf{X}\mathbf{X}} + K^{(h)}_{\mathbf{X}\mathbf{X}}$, within the less precise ones $K_{\mathbf{X}_1\mathbf{X}_1}$, and the mutual covariance $\alpha K_{\mathbf{X}\mathbf{X}_1}$. Here, $K_{\mathbf{X}\mathbf{X}_1}$ refers to the covariance between the two inputs at $\mathbf{X}$ and $\mathbf{X}_1$.
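The joint covariance implied by the linear (AR1) relation can be sketched as a block matrix. The sketch below assumes the high fidelity function is a scaled copy of the low fidelity GP plus an independent residual GP; the names `alpha`, `k_lo`, `k_res`, and `ar1_joint_covariance`, as well as the inputs and kernel settings, are hypothetical and chosen only for illustration.

```python
import numpy as np

def se_kernel(xa, xb, variance=1.0, lengthscale=1.0):
    """Squared exponential kernel between two 1-D input arrays."""
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * diff**2 / lengthscale**2)

def ar1_joint_covariance(x_hi, x_lo, alpha, k_lo, k_res):
    """Covariance of [f(x_hi), f1(x_lo)] when f = alpha * f1 + h."""
    K_hh = alpha**2 * k_lo(x_hi, x_hi) + k_res(x_hi, x_hi)  # precise block
    K_hl = alpha * k_lo(x_hi, x_lo)                         # mutual block
    K_ll = k_lo(x_lo, x_lo)                                 # less precise block
    return np.block([[K_hh, K_hl], [K_hl.T, K_ll]])

# Hypothetical inputs and kernels.
x_hi = np.linspace(0.0, 1.0, 4)
x_lo = np.linspace(0.0, 1.0, 9)
k_lo = lambda a, b: se_kernel(a, b, variance=1.0, lengthscale=0.2)
k_res = lambda a, b: se_kernel(a, b, variance=0.1, lengthscale=0.5)
K_joint = ar1_joint_covariance(x_hi, x_lo, alpha=2.0, k_lo=k_lo, k_res=k_res)
```

Because the blocks are derived from one joint Gaussian model, the assembled matrix is symmetric and positive semi-definite, so it can be used directly as the prior covariance of the stacked observations.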

The work in [3] generalized the above linear relationship between the more and less precise functions to a nonlinear one, in which the more precise function is a nonlinear function of the less precise one. The hierarchical structure in DGP is suitable for such nonlinear modeling. The variational inference scheme [13] was employed to evaluate the evidence lower bounds (ELBOs) for the data with all levels of precision, and the sum over all ELBOs is the objective for learning the hyperparameters and inducing points.

3.3. Covariance in Marginal Prior of DGP

The variational inference, e.g., [13], starts with connecting the joint distribution $p(\mathbf{f}, \mathbf{f}_1|\mathbf{X})$ with data $\mathbf{y}$, followed by applying Jensen’s inequality along with an approximate posterior in evaluating the ELBO. In contrast, we proposed in [16] that the marginal prior for the DGP,

$$p(\mathbf{f}|\mathbf{X}) = \int d\mathbf{f}_1\, p(\mathbf{f}|\mathbf{f}_1)\, p(\mathbf{f}_1|\mathbf{X}), \tag{2}$$

with the bold-face symbols representing the set of function values, $\mathbf{f} = f(f_1(\mathbf{x}_{1:N}))$, $\mathbf{f}_1 = f_1(\mathbf{x}_{1:N})$, and $\mathbf{X} = \mathbf{x}_{1:N}$, can be approximated as a GP, i.e., $q(\mathbf{f}|\mathbf{X}) = \mathcal{N}(\mathbf{0}, K_{\mathrm{eff}})$ in the zero-mean case. The matching of covariance in p and q leads to the closed form of the effective covariance function for certain families of kernel compositions. The SE[SC] composition, i.e., the squared exponential and squared cosine kernels being used in the GPs for $f$ and $f_1$, respectively, is an example. With the intractable marginalization over the intermediate $f_1$ being taken care of in the moment matching approximation, one can evaluate the approximate marginal likelihood for the data set $\{\mathbf{X}, \mathbf{y}\}$,

$$p(\mathbf{y}|\mathbf{X}) \approx \int d\mathbf{f}\, p(\mathbf{y}|\mathbf{f})\, q(\mathbf{f}|\mathbf{X}). \tag{3}$$

In the following, we shall develop along the line of [16] the approximate inference for the multi-fidelity data consisting of precise observations $\{\mathbf{X}, \mathbf{y}\}$ and less precise observations $\{\mathbf{X}_1, \mathbf{y}_1\}$ with the L-layer width-1 DGP models. The effective kernels shall encode the knowledge built on these less precise data, which allows modeling the precise function even with a sparse data set.

4. Conditional DGP and Multi-Fidelity Kernel Learning

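In the simplest case, we are given two subsets of data, $\{\mathbf{X}, \mathbf{y}\}$ with high precision and $\{\mathbf{X}_1, \mathbf{y}_1\}$ with low precision. We can start with defining the conditional DGP in terms of the marginal prior,

$$p(\mathbf{f}|\mathbf{X}, \mathbf{X}_1, \mathbf{y}_1) = \int d\mathbf{f}_1\, p(\mathbf{f}|\mathbf{f}_1)\, p(\mathbf{f}_1|\mathbf{X}, \mathbf{X}_1, \mathbf{y}_1), \tag{4}$$

where the Gaussian distribution $p(\mathbf{f}_1|\mathbf{X}, \mathbf{X}_1, \mathbf{y}_1) = \mathcal{N}(\mathbf{m}, C)$ has the conditional mean in the vector form,

$$\mathbf{m} = K_{\mathbf{X}\mathbf{X}_1} K_{\mathbf{X}_1\mathbf{X}_1}^{-1} \mathbf{y}_1, \tag{5}$$

and the conditional covariance in the matrix form,

$$C = K_{\mathbf{X}\mathbf{X}} - K_{\mathbf{X}\mathbf{X}_1} K_{\mathbf{X}_1\mathbf{X}_1}^{-1} K_{\mathbf{X}_1\mathbf{X}}. \tag{6}$$

The matrix $K_{\mathbf{X}\mathbf{X}_1}$ registers the covariance among the inputs in $\mathbf{X}$ and $\mathbf{X}_1$, and likewise for $K_{\mathbf{X}\mathbf{X}}$ and $K_{\mathbf{X}_1\mathbf{X}_1}$. Thus, the set of function values $\mathbf{f}_1$ associated with the N inputs in $\mathbf{X}$ is supported by the low fidelity data.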
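The conditional mean and covariance that support the intermediate GP are the standard Gaussian conditioning formulas, and a minimal sketch of them is given here. The helper name `gp_conditional`, the optional `noise` term added to the low fidelity covariance for numerical stability, and the toy data are assumptions for illustration; the outputs are named `m` and `C` to match Algorithm 1.

```python
import numpy as np

def se_kernel(xa, xb, variance=1.0, lengthscale=1.0):
    """Squared exponential kernel between two 1-D input arrays."""
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * diff**2 / lengthscale**2)

def gp_conditional(x_new, x_lo, y_lo, kernel, noise=1e-8):
    """Mean m and covariance C of f1(x_new) given low fidelity data."""
    K_ll = kernel(x_lo, x_lo) + noise * np.eye(len(x_lo))
    K_nl = kernel(x_new, x_lo)
    L = np.linalg.cholesky(K_ll)
    A = np.linalg.solve(L, K_nl.T)          # L^{-1} K_{lo,new}
    m = A.T @ np.linalg.solve(L, y_lo)      # K_nl K_ll^{-1} y_lo
    C = kernel(x_new, x_new) - A.T @ A      # K_nn - K_nl K_ll^{-1} K_ln
    return m, C

# Hypothetical low fidelity data and query inputs.
kernel = lambda a, b: se_kernel(a, b, variance=1.0, lengthscale=0.2)
x_lo = np.linspace(0.0, 1.0, 6)
y_lo = np.sin(2.0 * np.pi * x_lo)
x_new = np.linspace(0.0, 1.0, 5)
m, C = gp_conditional(x_new, x_lo, y_lo, kernel)
```

When a query input coincides with a low fidelity input and the noise is negligible, the conditional mean reproduces the observed value and the conditional variance shrinks towards zero, which is the sense in which the intermediate GP is supported by the data.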

The data with high precision are then associated with the function . Following the previous discussion, we may write down the true evidence for the precise data conditioned on the less precise ones shown below,

To proceed with the moment matching approximation of the true evidence in Equation (7), one needs to find the effective kernel in the approximate distribution and replace the true distribution in Equation (4) with the approximate distribution,

Therefore, the learning in the conditional DGP includes the hyperparameters in the exposed GP and those in the intermediate GP. Standard gradient descent is applied to the above approximate marginal likelihood. One can customize the kernel in the GPy [37] framework and implement the corresponding gradient components for the optimization.

4.1. Analytic Effective Kernels

Here, we consider the conditional DGP with two-layer and width-1 hierarchy, focusing on the cases where the exposed GP for in Equation (4) uses the squared exponential (SE) kernel or the squared cosine (SC) kernel. We also follow the notation in [16] so that the composition denoted by represents that is the kernel used in the exposed GP and used in the intermediate GP. For example, SE[SC] means is SE while is SC. Following [16], the exact covariance in the marginal prior Equation (4) is calculated,

Thus, when the exposed GP has the kernel in the exponential family, the above integral is tractable and the analytic can be implemented as a customized kernel. The following two lemmas from [16] are useful for the present case with a nonzero conditional mean and a conditional covariance in .

Lemma 1.

(Lemma 2 in [16]) For a vector of Gaussian random variables , the expectation of exponential quadratic form with has the following closed form,

The n-dimensional matrix appearing in the quadratic form Q is symmetric.

Lemma 2.

(Lemma 3 in [16]) With the same Gaussian vector in Lemma 1, the expectation value of the exponential inner product between and a constant vector reads,

where the transpose operation on column vector is denoted by the superscript.

We shall emphasize that our previous work [16] did not discuss the cases when the intermediate GP for is conditioned on the low precision data . Thus, the conditional mean and the non-stationary conditional covariance were not considered in [16].

Lemma 3.

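The covariance in the marginal prior with an SE kernel in the exposed GP can be calculated analytically. With the Gaussian conditional distribution, $p(\mathbf{f}_1|\mathbf{X}, \mathbf{X}_1, \mathbf{y}_1)$, supported by the low fidelity data, the effective kernel reads,

$$k_{\mathrm{eff}}(\mathbf{x}_i, \mathbf{x}_j) = \frac{\sigma^2}{\sqrt{1 + \delta_{ij}^2/\ell^2}} \exp\left[-\frac{(m_i - m_j)^2}{2(\ell^2 + \delta_{ij}^2)}\right], \tag{12}$$

where $m_i$ is the conditional mean of $f_1(\mathbf{x}_i)$. The positive parameter $\delta_{ij}^2 = c_{ii} + c_{jj} - 2c_{ij}$ is defined with the conditional covariance $c_{ij} = \mathrm{cov}[f_1(\mathbf{x}_i), f_1(\mathbf{x}_j)]$, and the length scale $\ell$ in the exposed GP dictates how the uncertainty in $f_1$ affects the function composition.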

Proof.

For SE[ ] composition, one can represent the kernel as an exponential quadratic form with with . is set for ease of notation. Now is a bivariate Gaussian variable with mean and covariance matrix . To calculate the expectation value in Equation (12), we need to compute the following 2-by-2 matrix and one can show can be reduced to

The seemingly complicated matrix in fact is reducible as one can show that , which leads to the exponential term in the kernel. With the determinant and restoring back the length scale , the kernel in Equation (12) is reproduced. □
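The reduction described in the proof can be checked numerically. The sketch below assumes that Lemma 1 is the standard Gaussian integral $\mathbb{E}[e^{-\mathbf{x}^T A \mathbf{x}/2}] = |I + CA|^{-1/2} \exp[-\tfrac{1}{2}\mathbf{m}^T A (I + CA)^{-1} \mathbf{m}]$ for $\mathbf{x} \sim \mathcal{N}(\mathbf{m}, C)$, and that the SE kernel corresponds to the rank-one matrix $A = \ell^{-2}\begin{pmatrix} 1 & -1 \\ -1 & 1 \end{pmatrix}$. The symbols `m`, `C`, `ell` and both function names are illustrative assumptions, and unit signal variance is used.

```python
import numpy as np

def lemma1_expectation(m, C, A):
    """E[exp(-x^T A x / 2)] for x ~ N(m, C) and symmetric A (assumed form)."""
    M = np.eye(len(m)) + C @ A
    quad = m @ A @ np.linalg.solve(M, m)    # m^T A (I + C A)^{-1} m
    return np.exp(-0.5 * quad) / np.sqrt(np.linalg.det(M))

def effective_se_pair(m, C, lengthscale):
    """Reduced closed form for one pair of inputs (unit signal variance)."""
    delta2 = C[0, 0] + C[1, 1] - 2.0 * C[0, 1]
    scale = lengthscale**2 + delta2
    return lengthscale / np.sqrt(scale) * np.exp(-0.5 * (m[0] - m[1])**2 / scale)

# Hypothetical bivariate conditional mean and covariance of f1 at two inputs.
m = np.array([0.3, -0.5])
C = np.array([[0.4, 0.1], [0.1, 0.2]])
ell = 0.7
A = np.array([[1.0, -1.0], [-1.0, 1.0]]) / ell**2

general = lemma1_expectation(m, C, A)
reduced = effective_se_pair(m, C, ell)
```

The two values agree because the determinant of $I + CA$ collapses to $1 + \delta^2/\ell^2$ and $A(I + CA)^{-1}$ collapses to a rank-one matrix with prefactor $1/(\ell^2 + \delta^2)$, where $\delta^2 = c_{11} + c_{22} - 2c_{12}$.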

A few observations are in order. First, we can rewrite $\delta_{ij}^2 = (1, -1)\begin{pmatrix} c_{ii} & c_{ij} \\ c_{ji} & c_{jj} \end{pmatrix}(1, -1)^T$, which guarantees the positiveness of $\delta_{ij}^2$ as the two-by-two sub-block of the covariance matrix is positive-definite. Secondly, there are deterministic and probabilistic aspects of the kernel in Equation (12). When the length scale $\ell$ is very large, the term $\delta_{ij}^2$ encoding the uncertainty in $f_1$ becomes irrelevant and the kernel is approximately an SE kernel with the input transformed via the conditional mean in Equation (5), which is reminiscent of the deep kernel proposed in [33] where a GP is stacked on the output of a DNN. The kernel used in [21] similarly considers the conditional mean of $f_1$ as a deterministic transformation while the uncertainty is ignored. On the other hand, when $\ell$ and $\delta_{ij}$ are comparable, the (epistemic) uncertainty in $f_1$ shaped by the supports $\{\mathbf{X}_1, \mathbf{y}_1\}$ is relevant. The effective kernel then represents the covariance in the ensemble of GPs, each of which receives the inputs transformed by one $f_1$ sampled from the intermediate GP. Thirdly, we shall stress that the appearance of $\delta_{ij}^2$ is a signature of marginalization over the latent function in deep probabilistic models. A similar squared distance also appeared in [30], where the effectively deep kernel was derived from a recursive inner product in the space defined by neural network feature functions.
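The deterministic and probabilistic regimes discussed above can be made concrete with a small sketch that builds the full effective kernel matrix from a conditional mean vector `m` and covariance matrix `C`. The function name `effective_se_kernel` and all numerical values are assumptions for illustration; the formula is the SE expectation under a Gaussian difference with mean $m_i - m_j$ and variance $c_{ii} + c_{jj} - 2c_{ij}$.

```python
import numpy as np

def effective_se_kernel(m, C, variance=1.0, lengthscale=1.0):
    """Effective SE kernel matrix from conditional mean m and covariance C."""
    d = np.diag(C)
    delta2 = d[:, None] + d[None, :] - 2.0 * C
    delta2 = np.maximum(delta2, 0.0)            # guard against round-off
    mean_diff2 = (m[:, None] - m[None, :])**2
    scale = lengthscale**2 + delta2
    return variance * lengthscale / np.sqrt(scale) * np.exp(-0.5 * mean_diff2 / scale)

# Hypothetical conditional mean and covariance at three inputs.
m = np.array([0.0, 0.5, 1.0])
B = np.array([[0.3, 0.0], [0.1, 0.2], [0.0, 0.4]])
C = B @ B.T

# With no uncertainty in f1 the kernel is an SE kernel on the warped input m.
K_deterministic = effective_se_kernel(m, np.zeros((3, 3)), variance=2.0, lengthscale=0.5)
# With uncertainty, off-diagonal entries pick up the delta2-dependent factors.
K_uncertain = effective_se_kernel(m, C, variance=2.0, lengthscale=0.5)
# With a very large length scale the uncertainty term becomes irrelevant.
K_large_ell = effective_se_kernel(m, C, variance=1.0, lengthscale=100.0)
```

Since the diagonal has zero $\delta^2$, the prior variance stays equal to the signal variance, while the covariance between distinct inputs is modified by both the warped distance and the uncertainty in the intermediate function.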

In the following lemma, we consider the composition where the kernel in the outer layer is the squared cosine (SC) kernel, which is a special case of the spectral mixture kernel [38].

Lemma 4.

The covariance in f of the marginal prior with SC kernel used in the exposed GP is given below,

where has been defined in the previous lemma.

The product of cosine and exponential kernels is similar in form to the deep spectral mixture kernel [33]. In our case the cosine function has the warped input given by the conditional mean, but the exponential function has the input $\delta_{ij}^2$ due to the conditional covariance in the intermediate GP.
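The cosine-times-exponential structure can be verified with a short numerical sketch. It assumes, purely for illustration, that the squared cosine kernel is written as $k_{SC}(d) = \tfrac{\sigma^2}{2}[1 + \cos(d/\ell)]$ with $d$ the difference of the warped inputs; the exact convention in the paper may differ. Under that assumption, averaging over $d \sim \mathcal{N}(\mu, \delta^2)$ gives $\tfrac{\sigma^2}{2}[1 + \cos(\mu/\ell)\, e^{-\delta^2/(2\ell^2)}]$, which is compared here with Gauss-Hermite quadrature.

```python
import numpy as np

def sc_kernel(d, variance=1.0, lengthscale=1.0):
    """Assumed squared cosine kernel as a function of the input difference d."""
    return 0.5 * variance * (1.0 + np.cos(d / lengthscale))

def effective_sc(mean_diff, delta2, variance=1.0, lengthscale=1.0):
    """Closed-form Gaussian average of sc_kernel over d ~ N(mean_diff, delta2)."""
    damping = np.exp(-0.5 * delta2 / lengthscale**2)
    return 0.5 * variance * (1.0 + np.cos(mean_diff / lengthscale) * damping)

# Gauss-Hermite rule: integral of exp(-t^2) g(t) dt ~ sum_i w_i g(t_i).
nodes, weights = np.polynomial.hermite.hermgauss(60)

# Hypothetical mean difference, variance of the difference, and length scale.
mean_diff, delta2, ell = 0.7, 0.3, 0.5
d_values = mean_diff + np.sqrt(2.0 * delta2) * nodes
quadrature = np.sum(weights * sc_kernel(d_values, 1.0, ell)) / np.sqrt(np.pi)
closed_form = effective_sc(mean_diff, delta2, 1.0, ell)
```

The exponential factor depends only on the conditional covariance, so larger uncertainty in the intermediate function damps the periodic correlation towards its constant offset.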

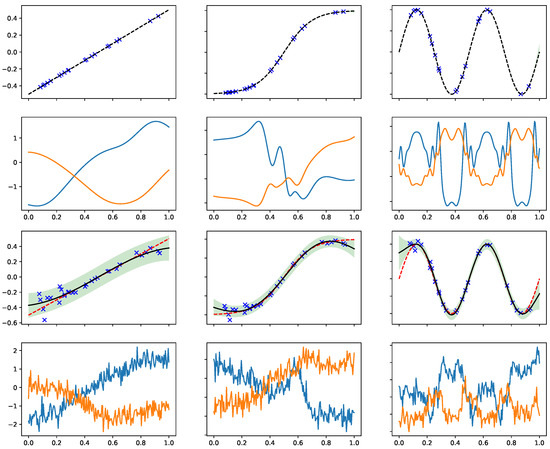

4.2. Samples from the Marginal Prior

Now we study the samples from the approximate marginal prior with the effective kernel in Equation (12). We shall vary the low fidelity data to see how they affect the inductive bias for the target function. See the illustration in Figure 1. The top row displays the low-fidelity functions $f_1$, which are obtained by a standard GP regression. Here, the low-fidelity data are noiseless observations of three simple functions (linear on the left, hyperbolic tangent in the middle, and sine on the right). The conditional covariance and conditional mean are then fed into the effective kernel in Equation (12), so that we can sample functions from the prior carrying the effective kernel. The samples are displayed in the second row.

Figure 1.

Sampling random functions from the approximate marginal prior which carries the effective kernel in Equation (12). The low fidelity data , marked by the cross symbols, and the low fidelity function and the uncertainty are shown in the top (noiseless) and the third (noisy) rows. Top row: the uncertainty in is negligible so is nearly a deterministic function, so the effective kernels are basically kernels with warped input. The corresponding samples from q are shown in the second row. Third row: the noise in generates the samples in bottom row which carry additional high-frequency signals due to the non-stationary in Equation (12).

In such cases, it can be seen that is nearly a deterministic function (top row) given the sufficient amount of noiseless observations in . In fact, the left panel in the second row is equivalent to the samples from a SE kernel as is the identity function. Moving to the second column, one can see the effect of nonlinear warping generates additional kinks in the target functions. The case on the third column with periodic warping results in periodic patterns to the sampled functions.

Next, we shall see the effect of uncertainty in (third row) on the sampled functions (bottom row). The increased uncertainty (shown by shadow region) in generates the weak and high frequency signal in the target function due to the non-stationary in Equation (12). We stress that these weak signals are not white noise. The noise in the low fidelity data even reverses the sign of sampled functions, i.e., comparing the second against the bottom rows in the third column. Consequently, the expressivity of the effective kernel gets a contribution from the uncertainty in learning the low fidelity functions.
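The sampling procedure described in this subsection can be sketched end to end in a few lines. Everything specific below is an assumption for illustration: the sine low fidelity function, the noise levels, the fixed hyperparameters, and the helper names. The sketch conditions an intermediate GP on low fidelity data, builds the effective SE kernel from the resulting mean and covariance, and draws zero-mean Gaussian samples from it.

```python
import numpy as np

def se_kernel(xa, xb, variance=1.0, lengthscale=1.0):
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * diff**2 / lengthscale**2)

def gp_conditional(x_new, x_lo, y_lo, lengthscale, noise):
    """Conditional mean and covariance of f1 at x_new given (x_lo, y_lo)."""
    K_ll = se_kernel(x_lo, x_lo, 1.0, lengthscale) + noise * np.eye(len(x_lo))
    K_nl = se_kernel(x_new, x_lo, 1.0, lengthscale)
    m = K_nl @ np.linalg.solve(K_ll, y_lo)
    C = se_kernel(x_new, x_new, 1.0, lengthscale) - K_nl @ np.linalg.solve(K_ll, K_nl.T)
    return m, C

def effective_se_kernel(m, C, variance=1.0, lengthscale=1.0):
    d = np.diag(C)
    delta2 = np.maximum(d[:, None] + d[None, :] - 2.0 * C, 0.0)
    scale = lengthscale**2 + delta2
    return variance * lengthscale / np.sqrt(scale) * np.exp(
        -0.5 * (m[:, None] - m[None, :])**2 / scale)

def sample_prior(x, x_lo, y_lo, noise, n_samples, seed):
    """Draw functions from the GP prior carrying the effective kernel."""
    m, C = gp_conditional(x, x_lo, y_lo, lengthscale=0.2, noise=noise)
    K = effective_se_kernel(m, C, variance=1.0, lengthscale=1.0)
    L = np.linalg.cholesky(K + 1e-6 * np.eye(len(x)))   # jitter for stability
    rng = np.random.default_rng(seed)
    return K, L @ rng.standard_normal((len(x), n_samples))

x = np.linspace(0.0, 1.0, 50)
x_lo = np.linspace(0.0, 1.0, 12)
y_clean = np.sin(2.0 * np.pi * x_lo)                    # hypothetical f1
y_noisy = y_clean + 0.3 * np.random.default_rng(0).standard_normal(len(x_lo))

K_clean, draws_clean = sample_prior(x, x_lo, y_clean, noise=1e-4, n_samples=3, seed=1)
K_noisy, draws_noisy = sample_prior(x, x_lo, y_noisy, noise=0.09, n_samples=3, seed=1)
```

Plotting the columns of `draws_clean` and `draws_noisy` against `x` gives a qualitative counterpart of the second and bottom rows of Figure 1 for this particular toy setting.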

5. Method

Since we approximate the marginal prior for the conditional DGP with a GP, the corresponding approximate marginal likelihood should be the objective for jointly learning the hyperparameters including those in the exposed GP and the intermediate GPs. From the analytical expression for the effective kernel, e.g., Equation (12), the gradient components include the explicit derivatives and as well as those implicit derivatives and which can be computed via chain rule.
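To make the gradient components concrete, the sketch below differentiates one entry of the SE-type effective kernel, written as $k = \sigma^2 \ell\, (\ell^2 + \delta^2)^{-1/2} \exp[-\mu^2 / (2(\ell^2 + \delta^2))]$ with $\mu$ the difference of conditional means and $\delta^2$ the variance of that difference. The derivative with respect to $\ell$ is an explicit one, while the derivative with respect to $\delta^2$ is the factor that enters the implicit derivatives through the chain rule. The function names and test values are assumptions for illustration.

```python
import numpy as np

def k_eff(mean_diff, delta2, variance, lengthscale):
    """One entry of the effective SE kernel."""
    scale = lengthscale**2 + delta2
    return variance * lengthscale / np.sqrt(scale) * np.exp(-0.5 * mean_diff**2 / scale)

def dk_dlengthscale(mean_diff, delta2, variance, lengthscale):
    """Explicit derivative with respect to the exposed length scale."""
    scale = lengthscale**2 + delta2
    dlog = 1.0 / lengthscale - lengthscale / scale + mean_diff**2 * lengthscale / scale**2
    return k_eff(mean_diff, delta2, variance, lengthscale) * dlog

def dk_ddelta2(mean_diff, delta2, variance, lengthscale):
    """Derivative with respect to delta2, used in the chain rule."""
    scale = lengthscale**2 + delta2
    dlog = -0.5 / scale + 0.5 * mean_diff**2 / scale**2
    return k_eff(mean_diff, delta2, variance, lengthscale) * dlog

# Hypothetical values and a central finite-difference comparison.
mu, d2, var, ell, h = 0.8, 0.3, 1.5, 0.6, 1e-6
fd_ell = (k_eff(mu, d2, var, ell + h) - k_eff(mu, d2, var, ell - h)) / (2.0 * h)
fd_d2 = (k_eff(mu, d2 + h, var, ell) - k_eff(mu, d2 - h, var, ell)) / (2.0 * h)
```

The derivative with respect to the intermediate-layer hyperparameters then follows by multiplying `dk_ddelta2` (and the analogous derivative with respect to the mean difference) by the derivatives of the conditional covariance and mean.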

With the data consisting of observations of different fidelity, an alternative method can learn the hyperparameters associated with each layer of the hierarchy sequentially. See Algorithm 1 for details. The low fidelity data are fed into the first GP regression model for inferring and the hyperparameters and . The conditional mean and conditional covariance in are then sent to the effective kernel. The second GP using the effective kernel is to infer the high fidelity function f with the marginal likelihood for the high fidelity data being the objective. Optimization of the second model results in the hyperparameters and in the second layer. Learning in the three-layer hierarchy can be generalized from the two-layer hierarchy. In the Appendix A, a comparison of regression using the two methods is shown.

| Algorithm 1 A learning algorithm for conditional DGP multi-fidelity regression |

| Input: two sources of data, low-fidelity data (X1, y1) and high-fidelity data (X, y), kernel k1 for function f1, and the test input x*. |

| 1. k1 = Kernel(var = σ1², lengthscale = ℓ1) {Initialize the kernel for inferring f1} |

| 2. model1 = Regression(kernel = k1, data = X1 and y1) {Initialize regression model for f1} |

| 3. model1.optimize() |

| 4. m, C = model1.predict(input = X, x*, full-cov = true) {Output pred. mean and post. cov. of f1} |

| 5. keff = EffectiveKernel(var = σ², lengthscale = ℓ, m, C) {Initialize the effective kernel in Equation (12) for SE[ ] and Equation (14) for SC[ ].} |

| 6. model2 = Regression(kernel = keff, data = X, y) {Initialize regression model for f} |

| 7. model2.optimize() |

| 8. μ*, σ*² = model2.predict(input = x*) |

| Output: Optimal hyper-parameters {σ1², ℓ1, σ², ℓ} and predictive mean μ* and variance σ*² at x*. |
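The sequential procedure of Algorithm 1 can be sketched in plain NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the two `optimize()` steps are omitted and the hyperparameters are fixed by hand, only the SE[SE] composition is covered, and the toy functions (a sine as the low fidelity function and its square as the target) are hypothetical.

```python
import numpy as np

def se_kernel(xa, xb, variance, lengthscale):
    diff = xa[:, None] - xb[None, :]
    return variance * np.exp(-0.5 * diff**2 / lengthscale**2)

def gp_posterior(K_train, K_cross, K_test, y, noise):
    """Posterior mean and covariance; K_cross has shape (n_test, n_train)."""
    A = K_train + noise * np.eye(len(y))
    mean = K_cross @ np.linalg.solve(A, y)
    cov = K_test - K_cross @ np.linalg.solve(A, K_cross.T)
    return mean, cov

def effective_se_kernel(m, C, variance, lengthscale):
    d = np.diag(C)
    delta2 = np.maximum(d[:, None] + d[None, :] - 2.0 * C, 0.0)
    scale = lengthscale**2 + delta2
    return variance * lengthscale / np.sqrt(scale) * np.exp(
        -0.5 * (m[:, None] - m[None, :])**2 / scale)

def conditional_dgp_predict(x_lo, y_lo, x_hi, y_hi, x_star, hyp):
    # Steps 1-4: infer f1 jointly at the high fidelity and test inputs.
    x_all = np.concatenate([x_hi, x_star])
    k1 = lambda a, b: se_kernel(a, b, hyp["var1"], hyp["len1"])
    m, C = gp_posterior(k1(x_lo, x_lo), k1(x_all, x_lo), k1(x_all, x_all),
                        y_lo, hyp["noise1"])
    # Step 5: effective kernel over all inputs, built from m and C.
    K = effective_se_kernel(m, C, hyp["var"], hyp["len"])
    n = len(x_hi)
    # Steps 6-8: GP regression on the high fidelity data with the effective kernel.
    mean, cov = gp_posterior(K[:n, :n], K[n:, :n], K[n:, n:], y_hi, hyp["noise"])
    return mean, np.diag(cov)

# Hypothetical toy problem and fixed hyperparameters.
x_lo = np.linspace(0.0, 1.0, 30)
y_lo = np.sin(2.0 * np.pi * x_lo)
x_hi = np.linspace(0.05, 0.95, 8)
y_hi = np.sin(2.0 * np.pi * x_hi)**2
x_star = np.linspace(0.0, 1.0, 40)
hyp = {"var1": 1.0, "len1": 0.2, "noise1": 1e-4, "var": 1.0, "len": 1.0, "noise": 1e-4}
pred_mean, pred_var = conditional_dgp_predict(x_lo, y_lo, x_hi, y_hi, x_star, hyp)
```

Predicting the intermediate function jointly at the training and test inputs matters here, because the effective kernel needs the cross-covariance of the intermediate function between those two sets of inputs.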

6. Results

In this section, we shall present the results of multi-fidelity regression given low fidelity data and high fidelity and use the 2-layer conditional DGP model. The cases where there are three levels of fidelity can be generalized with the 3-layer counterpart. The toy demonstrations in Section 6.1 focus on data sets in which the target function is a composite, . The low fidelity data are observations of while the high fidelity are those of . The aspect of compositional freedom is discussed in Section 6.2, and the same target function shall be inferred with the same high fidelity data but the low fidelity data now result from a variety of functions. In Section 6.3, we switch to the case where the low fidelity data are also observations of the target function f but with large noise. In Section 6.4, we compare our model with the work in [3] on the data set with high dimensional inputs.

6.1. Synthetic Two-Fidelity Function Regression

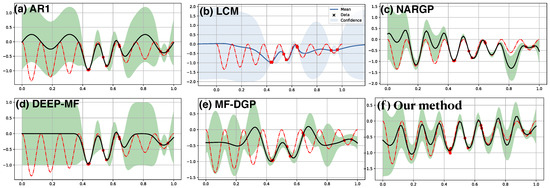

The first example in Figure 2 consists of 10 random observations of the target function (red dashed line) along with 30 observations of the low fidelity function (not shown). The 30 observations of the low fidelity function, which has a period of 0.25, are more than sufficient to reconstruct it with high confidence. In contrast, the 10 observations of f alone (shown in red dots) are insufficient to reconstruct f if a GP with SE kernel is used. The above figures demonstrate the results from a set of multi-source nonparametric regression methods which incorporate the learning of the low fidelity function into the target regression of f. Our result with the SE[SE] kernel [panel (f)] and NARGP [panel (c)] successfully capture the periodic pattern inherited from the low fidelity function, but the target function is fully covered in the confidence region in our prediction only. On the other hand, in the input space away from the target observations, AR1 [panel (a)] and MF-DGP [panel (e)] manage to capture only part of the oscillation. Predictions in LCM [panel (b)] and DEEP-MF [panel (d)] are reasonable near the target observations but fail to capture the oscillation away from these observations.

Figure 2.

Multi-fidelity regression with 30 observations (not shown) of low fidelity and 10 observations (red dots) from the target function, (shown in red dashed line). Only the target prediction (solid dark) and associated uncertainty (shaded) are shown. Top row: (a) AR1, (b) LCM, (c) NARGP. Bottom row: (d) DEEP-MF, (e) MF-DGP, (f) our model with SE[SE] kernel.

Figure 3 demonstrates another example of multi-fidelity regression on the nonlinear composite function. The low fidelity function is also periodic, , and the target is exponential function, . The 15 observations of f (red dashed line) are marked by the red dots. The exponential nature in the mapping might make the reconstruction more challenging than the previous case, which may lead to less satisfying results from LCM [panel (b)]. NARGP [panel (c)] and MF-DGP [panel (e)] have similar predictions which mismatch some of the observations, but the target function is mostly covered by the uncertainty estimation. Our model with SE[SE] kernel [panel (f)], on the other hand, has predictions consistent with all the target observations, and the target function is fully covered by the uncertainty region. Qualitatively similar results are also obtained from AR1 [panel (a)] and DEEP-MF [panel (d)].

Figure 3.

Multi-fidelity regression on the low-level true function, , with 30 observations and high-level one, , with 15 observations. Top row: (a) AR1, (b) LCM, and (c) NARGP. Bottom row: (d) DEEP-MF, (e) MF-DGP, and (f) Our method with SE[SE] kernel.

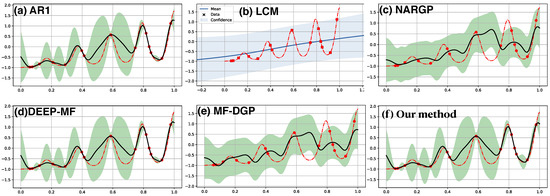

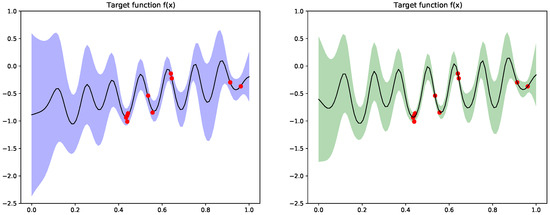

6.2. Compositional Freedom and Varying Low-Fidelity Data

Given the good learning results in the previous subsection, one may wonder about the effects of having a different low fidelity data set on inferring the high fidelity function. Here, we consider the same high fidelity data from the target function in Figure 2, but the low fidelity data are observations of four different low fidelity functions. Figure 4 displays the results. Plots in the top rows represent the low fidelity function, while the bottom rows show the inferred target function given the high fidelity data (red dots). It can be seen in the leftmost column in panel (a) that the linear low fidelity function is not a probable one, as the true target function (red dashed line) in the bottom is outside the predictive confidence. Similarly, in the second plot in (a), a hyperbolic tangent low fidelity function is not likely to account for the true target function. In the third plot, a periodic low fidelity function is more likely to account for the true target function than the first two cases, but the rightmost plot leads to a predictive mean very close to the true target function.

Figure 4.

Demonstration of compositional freedom and effects of uncertainty in low fidelity function on the target function inference. Given the same high fidelity observations of target function, four different sets of observations of , , , and are employed as low fidelity data in inferring the target function. In panel (a), the low fidelity data are noiseless observations of the four functions. The true target function is partially outside the model confidence for the first two cases. In panel (b), the low fidelity data are noisy observations of the same four functions. Now the first three cases result in the inferred function outside the model confidence. The effect of uncertainty in low fidelity is most dramatic when comparing the third subplots in (a,b).

Next, the low fidelity data become the noisy observations of the same four functions. As shown in panel (b), the increased variance in also results in raising the variance in f, especially comparing the first two cases in (a) against those in (b). A dramatic difference can be found in comparing the third plot in (a) against that in (b). In (b), the presence of noise in the low fidelity data slightly raises the uncertainty in , but the ensuing inference in f generates the prediction which fails to contain most of the true target function within its model confidence. Thus, the likelihood that is the probable low fidelity function is greatly reduced by the noise in the observation. Lastly, the noise in observing as the low fidelity data does not significantly change the inferred target function.

Therefore, the inductive bias associated with the target function is indeed controllable by the intermediate function distribution conditioned on the lower fidelity data. This observation motivates DGP learning from common single-fidelity regression data with the intermediate GPs conditioned on some optimizable hyperdata [39]. These hyperdata constrain the space of intermediate functions, and the uncertainty therein contributes to the expressiveness of the model.

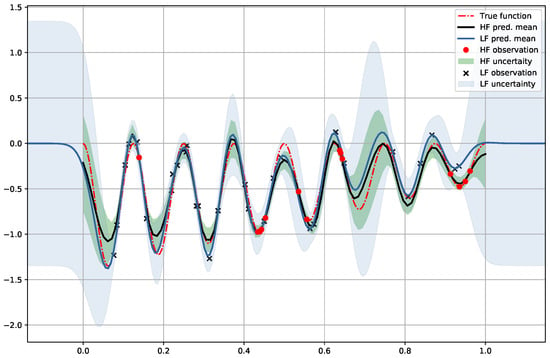

6.3. Denoising Regression

Here we continue considering the inference of the same target function, but now the low fidelity data set becomes the noisy observations of the target function. See Figure 5 for illustration. Now we have 15 observations of f with noise level of 0.001 (red dots) as high fidelity data and 30 observations of the same function with noise level of 0.1 (dark cross symbol) as the low fidelity data. Next, we follow the same procedure in inferring $f_1$ with the low fidelity data, and then use the conditional mean and covariance in constructing the effective kernel for inferring the target function f with the high fidelity data. Unlike the previous cases, the relation between f and $f_1$ here is not clear. However, the structure of DGP can be viewed as the intermediate GP emitting infinitely many samples of $f_1$ into the exposed GP. Qualitatively, one can expect that the actual prediction for f is the average over the GP models with different warping $f_1$. Consequently, we may expect that the variance in predicting f is reduced.

Figure 5.

Denoising regression with 30 high-noise and 15 low-noise observations from the target function (red dashed line). The uncertainty is reduced in the GP learning with the SE[SE] kernels.

Indeed, as shown in Figure 5, the predictive variance using a GP with the low fidelity (high noise) observations only is marked by the light-blue region around the predictive mean (light-blue solid line). When the statistical information in is transferred to the effective kernel, the new prediction and model confidence possess much tighter uncertainty (marked by the light-green shaded region) around the improved predictive mean (dark solid line) even in the region away from the low-noise observations.

6.4. Multi-Fidelity Data with High Dimensional Input

The work in [3] along with their public code in emukit [40] assembles a set of multi-fidelity regression data sets in which the input is of high dimension. Here we demonstrate the simulation results on these data (see [3] for details). The simulation is performed using the effective kernels with compositions: SE[SE] and SC[SE] for the Borehole (two-fidelity) regression data set, SE[SE[SE]] and SC[SC[SE]] for the Branin (three-fidelity) regression data set. The data are obtained from deploying the modules in [40]. Algorithm 1 is followed to obtain the results here. The generalization performance is measured in terms of mean negative log likelihood (MNLL). Table 1 displays the results using the same random seed from MF-DGP and our methods. We also include the simulation of standard GP regression with the high fidelity data only. It is seen that the knowledge about the low fidelity function is significant for predicting the high-level simulation (compared with vanilla GP) and that the informative kernels have better performance in these cases.
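For reference, a common way to compute the MNLL is to average the negative log density of each held-out target under an independent Gaussian predictive distribution. The sketch below uses that convention; whether the reported numbers use exactly this form (for example, with or without observation noise in the predictive variance) is an assumption, and the function name and sample values are hypothetical.

```python
import numpy as np

def mean_negative_log_likelihood(y_true, pred_mean, pred_var):
    """Average of -log N(y_true | pred_mean, pred_var) over test points."""
    y_true = np.asarray(y_true, dtype=float)
    pred_mean = np.asarray(pred_mean, dtype=float)
    pred_var = np.asarray(pred_var, dtype=float)
    nll = 0.5 * np.log(2.0 * np.pi * pred_var) + 0.5 * (y_true - pred_mean)**2 / pred_var
    return np.mean(nll)

# Hypothetical predictions at three test points.
y_true = np.array([0.1, -0.4, 1.2])
pred_mean = np.array([0.0, -0.5, 1.0])
pred_var = np.array([0.05, 0.02, 0.10])
mnll = mean_negative_log_likelihood(y_true, pred_mean, pred_var)
```

Lower values are better: the metric rewards accurate means and penalizes both overconfident and needlessly wide predictive variances.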

Table 1.

MNLL results of multi-fidelity regression.

7. Discussion

In this paper, we propose a novel kernel learning method which is able to fuse data of low fidelity into a prior for the high fidelity function. Our approach addresses two limitations of prior research on GPs: the need to choose or design a kernel [8,9] and the lack of explicit dependence on the observations in the prediction (in the Student-t process [41] the latter is possible). We resolve the limitations associated with reliance on designing kernels by introducing new data-dependent kernels together with effective approximate inference. Our results show that the method is effective, and we show that our moment-matching approximation retains some multi-scale, multi-frequency, and non-stationary correlations that are characteristic of deep kernels, e.g., [33]. The compositional freedom [18] pertaining to hierarchical learning is also manifested in our approach.

8. Conclusions

Central to the allure of Bayesian methods, including Gaussian Processes, is the ability to calibrate model uncertainty through marginalization over hidden variables. The power and promise of DGPs lie in allowing rich composition of functions while maintaining the Bayesian character of inference over unobserved functions. Modeling multi-fidelity data with the hierarchical DGP exploits this expressive power and accounts for the effects of uncertainty propagation. Whereas most approaches rely on variational approximations for inference and Monte Carlo sampling in the prediction stage, our approach uses a moment-based approximation in which the marginal prior of the DGP is analytically approximated with a GP. For both kinds of approximation, the full implications are unknown. Continued research is required to understand the strengths and limitations of each approach.

Author Contributions

Conceptualization, C.-K.L. and P.S.; methodology, C.-K.L.; software, C.-K.L.; validation, C.-K.L. and P.S.; formal analysis, C.-K.L.; investigation, C.-K.L.; resources, C.-K.L.; data curation, C.-K.L.; writing—original draft preparation, C.-K.L.; writing—review and editing, C.-K.L. and P.S.; visualization, C.-K.L.; supervision, P.S.; project administration, C.-K.L.; funding acquisition, P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Air Force Research Laboratory and DARPA under agreement number FA8750-17-2-0146.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the authors of [22] for helpful correspondence.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| DGP | Deep Gaussian Process |
| SE | Squared Exponential |
| SC | Squared Cosine |

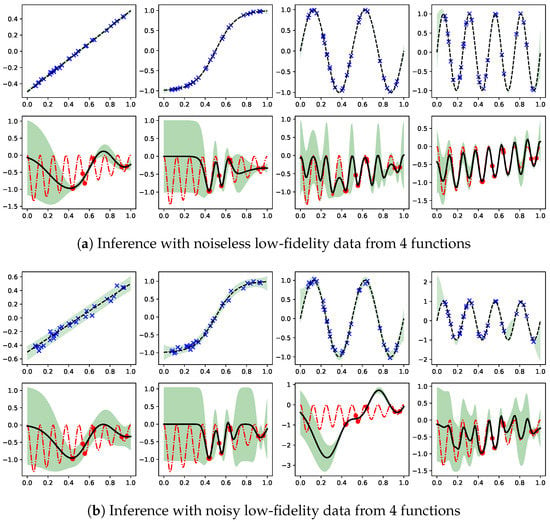

Appendix A

Figure A1 shows two results of multi-fidelity regression on the same data. The left panel is obtained by jointly learning the hyperparameters in all layers with standard gradient descent on the approximate marginal likelihood, while the right panel is obtained by learning the hyperparameters sequentially with Algorithm 1. The right panel yields the higher log marginal likelihood.

Figure A1.

Comparison between the joint learning (left) and the sequential learning with Algorithm 1 (right). The same 10 training data are shown by the red dots. The joint learning algorithm results in a log marginal likelihood while the alternative one . The hyperparameters are (left) and (right).
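The quantity compared in Figure A1 is the log marginal likelihood of the training targets under the approximating GP. A minimal sketch of that computation is given below, assuming a zero-mean GP whose kernel matrix `K` has already been evaluated on the training inputs and Gaussian observation noise of variance `noise_var`. The function name and arguments are illustrative, and NumPy is assumed to be available.

```python
import numpy as np


def gp_log_marginal_likelihood(K, y, noise_var=1e-6):
    """log p(y) for y ~ N(0, K + noise_var * I), via a Cholesky factorization."""
    K = np.asarray(K, dtype=float)
    y = np.asarray(y, dtype=float).ravel()
    n = y.shape[0]
    # Lower-triangular factor L with L @ L.T = K + noise_var * I.
    L = np.linalg.cholesky(K + noise_var * np.eye(n))
    # alpha = (K + noise_var * I)^{-1} y, obtained from two solves with L.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    data_fit = -0.5 * y @ alpha
    # The sum of log-diagonal entries of L equals half the log determinant.
    complexity = -np.sum(np.log(np.diag(L)))
    return data_fit + complexity - 0.5 * n * np.log(2.0 * np.pi)
```

Evaluating this function with the kernel matrices produced by two different hyperparameter settings gives a direct comparison of the kind reported in the caption, with the larger value indicating the better fit under the model.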

References

- Kennedy, M.C.; O’Hagan, A. Predicting the output from a complex computer code when fast approximations are available. Biometrika 2000, 87, 1–13.

- Wang, Z.; Xing, W.; Kirby, R.; Zhe, S. Multi-Fidelity High-Order Gaussian Processes for Physical Simulation. In Proceedings of the International Conference on Artificial Intelligence and Statistics, San Diego, CA, USA, 13 April 2021; pp. 847–855.

- Cutajar, K.; Pullin, M.; Damianou, A.; Lawrence, N.; González, J. Deep Gaussian processes for multi-fidelity modeling. arXiv 2019, arXiv:1903.07320.

- Salakhutdinov, R.; Tenenbaum, J.; Torralba, A. One-shot learning with a hierarchical nonparametric Bayesian model. In Proceedings of the ICML Workshop on Unsupervised and Transfer Learning, Bellevue, WA, USA, 2 July 2012; pp. 195–206.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6 August 2017; pp. 1126–1135.

- Titsias, M.K.; Schwarz, J.; Matthews, A.G.d.G.; Pascanu, R.; Teh, Y.W. Functional Regularisation for Continual Learning with Gaussian Processes. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Rasmussen, C.E.; Williams, C.K.I. Gaussian Processes for Machine Learning; MIT Press: Cambridge, MA, USA, 2006.

- Duvenaud, D.; Lloyd, J.; Grosse, R.; Tenenbaum, J.; Ghahramani, Z. Structure discovery in nonparametric regression through compositional kernel search. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 17–19 June 2013; pp. 1166–1174.

- Sun, S.; Zhang, G.; Wang, C.; Zeng, W.; Li, J.; Grosse, R. Differentiable compositional kernel learning for Gaussian processes. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4828–4837.

- Damianou, A.; Lawrence, N. Deep Gaussian processes. In Proceedings of the Artificial Intelligence and Statistics, Scottsdale, AZ, USA, 29 April–1 May 2013; pp. 207–215.

- Snelson, E.; Ghahramani, Z.; Rasmussen, C.E. Warped Gaussian processes. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 13–18 December 2004; pp. 337–344.

- Lázaro-Gredilla, M. Bayesian warped Gaussian processes. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1619–1627.

- Salimbeni, H.; Deisenroth, M. Doubly stochastic variational inference for deep Gaussian processes. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Salimbeni, H.; Dutordoir, V.; Hensman, J.; Deisenroth, M.P. Deep Gaussian Processes with Importance-Weighted Variational Inference. arXiv 2019, arXiv:1905.05435.

- Yu, H.; Chen, Y.; Low, B.K.H.; Jaillet, P.; Dai, Z. Implicit Posterior Variational Inference for Deep Gaussian Processes. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 14502–14513.

- Lu, C.K.; Yang, S.C.H.; Hao, X.; Shafto, P. Interpretable deep Gaussian processes with moments. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 26–28 August 2020; pp. 613–623.

- Havasi, M.; Hernández-Lobato, J.M.; Murillo-Fuentes, J.J. Inference in deep Gaussian processes using stochastic gradient Hamiltonian Monte Carlo. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 7506–7516.

- Ustyuzhaninov, I.; Kazlauskaite, I.; Kaiser, M.; Bodin, E.; Campbell, N.; Ek, C.H. Compositional uncertainty in deep Gaussian processes. In Proceedings of the Conference on Uncertainty in Artificial Intelligence, Toronto, ON, Canada, 3 August 2020; pp. 480–489.

- Le Gratiet, L.; Garnier, J. Recursive co-kriging model for design of computer experiments with multiple levels of fidelity. Int. J. Uncertain. Quantif. 2014, 4, 365–386.

- Raissi, M.; Karniadakis, G. Deep multi-fidelity Gaussian processes. arXiv 2016, arXiv:1604.07484.

- Perdikaris, P.; Raissi, M.; Damianou, A.; Lawrence, N.; Karniadakis, G.E. Nonlinear information fusion algorithms for data-efficient multi-fidelity modelling. Proc. R. Soc. Math. Phys. Eng. Sci. 2017, 473, 20160751.

- Requeima, J.; Tebbutt, W.; Bruinsma, W.; Turner, R.E. The Gaussian process autoregressive regression model (GPAR). In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 1860–1869.

- Alvarez, M.A.; Rosasco, L.; Lawrence, N.D. Kernels for vector-valued functions: A review. arXiv 2011, arXiv:1106.6251.

- Parra, G.; Tobar, F. Spectral mixture kernels for multi-output Gaussian processes. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6684–6693.

- Bruinsma, W.; Perim, E.; Tebbutt, W.; Hosking, S.; Solin, A.; Turner, R. Scalable Exact Inference in Multi-Output Gaussian Processes. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 1190–1201.

- Kaiser, M.; Otte, C.; Runkler, T.; Ek, C.H. Bayesian alignments of warped multi-output Gaussian processes. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 6995–7004.

- Kazlauskaite, I.; Ek, C.H.; Campbell, N. Gaussian Process Latent Variable Alignment Learning. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 748–757.

- Williams, C.K. Computing with infinite networks. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 2–4 December 1997; pp. 295–301.

- Cho, Y.; Saul, L.K. Kernel methods for deep learning. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–8 December 2009; pp. 342–350.

- Duvenaud, D.; Rippel, O.; Adams, R.; Ghahramani, Z. Avoiding pathologies in very deep networks. In Proceedings of the Artificial Intelligence and Statistics, Reykjavik, Iceland, 22–25 April 2014; pp. 202–210.

- Dunlop, M.M.; Girolami, M.A.; Stuart, A.M.; Teckentrup, A.L. How deep are deep Gaussian processes? J. Mach. Learn. Res. 2018, 19, 2100–2145.

- Shen, Z.; Heinonen, M.; Kaski, S. Learning spectrograms with convolutional spectral kernels. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 26–28 August 2020; pp. 3826–3836.

- Wilson, A.G.; Hu, Z.; Salakhutdinov, R.; Xing, E.P. Deep kernel learning. In Proceedings of the Artificial Intelligence and Statistics, Cadiz, Spain, 9–11 May 2016; pp. 370–378.

- Daniely, A.; Frostig, R.; Singer, Y. Toward Deeper Understanding of Neural Networks: The Power of Initialization and a Dual View on Expressivity. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

- Mairal, J.; Koniusz, P.; Harchaoui, Z.; Schmid, C. Convolutional kernel networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2627–2635.

- Van der Wilk, M.; Rasmussen, C.E.; Hensman, J. Convolutional Gaussian processes. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 2849–2858.

- GPy. GPy: A Gaussian Process Framework in Python. 2012. Available online: http://github.com/SheffieldML/GPy (accessed on 1 October 2021).

- Wilson, A.; Adams, R. Gaussian process kernels for pattern discovery and extrapolation. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1067–1075.

- Lu, C.K.; Shafto, P. Conditional Deep Gaussian Processes: Empirical Bayes Hyperdata Learning. Entropy 2021, 23, 1387.

- Paleyes, A.; Pullin, M.; Mahsereci, M.; Lawrence, N.; González, J. Emulation of physical processes with Emukit. In Proceedings of the Second Workshop on Machine Learning and the Physical Sciences, Vancouver, BC, Canada, 8–14 December 2019.

- Shah, A.; Wilson, A.; Ghahramani, Z. Student-t processes as alternatives to Gaussian processes. In Proceedings of the Artificial Intelligence and Statistics, Reykjavik, Iceland, 22–25 April 2014; pp. 877–885.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).