Abstract

In this paper, we study the asymptotic optimality of a low-complexity coding strategy for Gaussian vector sources. Specifically, we study the convergence speed of the rate of such a coding strategy when it is used to encode the most relevant vector sources, namely wide sense stationary (WSS), moving average (MA), and autoregressive (AR) vector sources. We also study how the coding strategy considered performs when it is used to encode perturbed versions of those relevant sources. More precisely, we give a sufficient condition for such perturbed versions so that the convergence speed of the rate remains unaltered.

1. Introduction

In [1], Kolmogorov gave a formula for the rate distortion function (RDF) of Gaussian vectors and for the RDF of Gaussian wide sense stationary (WSS) sources. In [2], Pearl presented an upper bound for the RDF of finite-length data blocks of any Gaussian WSS source and proved that such a bound tends to the RDF of the source when the length of the data block grows. However, he did not propose a coding strategy to achieve his bound for a given block length. In [3], we presented a tighter upper bound for the RDF of finite-length data blocks of any Gaussian WSS source, and we proposed a low-complexity coding strategy to achieve our bound. Obviously, since such a bound is tighter than the one given by Pearl, it also tends to the RDF of the source when the length of the data block grows. In [4], we generalized our low-complexity coding strategy to encode (compress) finite-length data blocks of any Gaussian vector source. Moreover, in [4], we also gave a sufficient condition for the vector source in order to make such a coding strategy asymptotically optimal. We recall that a coding strategy is asymptotically optimal if its rate tends to the RDF of the source as the length of the data block grows. Such a sufficient condition requires the Gaussian vector source to be asymptotically WSS (AWSS). The definition of the AWSS process was first introduced in [5], Section 6, and extended to vector processes in [6], Definition 7.1. However, the convergence speed of the rate of the coding strategy considered (i.e., how fast the rate of the coding strategy tends to the RDF of the AWSS vector source) was not studied in [4].

In this paper, we present a less restrictive sufficient condition for the vector source to make the coding strategy considered asymptotically optimal. Moreover, we study the convergence speed of the rate of such a coding strategy when it is used to encode the most relevant vector sources, namely, WSS, moving average (MA), and autoregressive (AR) vector sources. We also study how the coding strategy considered performs when it is used to encode perturbed versions of those relevant sources. Specifically, we give a sufficient condition for such perturbed versions so that the convergence speed of the rate remains unaltered.

The study of the convergence speed in any information-theoretic problem is not an easy task. To study the aforementioned convergence speed, we first need to derive new mathematical results on block Toeplitz matrices and new mathematical results on the correlation matrices of the WSS, MA, and AR vector processes. These new mathematical results are useful not only to study the convergence speed in the information-theoretic problem considered, but also in other problems. In fact, as an example, in Appendix H, we use such mathematical results to study the convergence speed in a statistical signal processing problem on filtering WSS vector processes.

The paper is organized as follows. In Section 2, we give several new mathematical results on block Toeplitz matrices. In Section 3, using the results obtained in Section 2, we give several new mathematical results on the correlation matrices of WSS, MA, and AR vector processes. In Section 4, we recall the low-complexity coding strategy presented in [4], and using the results obtained in Section 3, we study the asymptotic optimality of such a coding strategy when it is used to encode WSS, MA, and AR vector sources. In Section 4, we also study how the coding strategy considered performs when it is used to encode perturbed versions of those sources. Finally, in Section 5, some conclusions are presented.

2. Several New Results on Block Toeplitz Matrices

In this section, we present new results on the product of block Toeplitz matrices, on the inverse of a block Toeplitz matrix, and on block circulant matrices. These results will be used in Section 3. We begin by introducing some notation.

2.1. Notation

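In this paper, $\mathbb{N}$, $\mathbb{Z}$, $\mathbb{R}$, and $\mathbb{C}$ denote the set of natural numbers (that is, the set of positive integers), the set of integer numbers, the set of real numbers, and the set of complex numbers, respectively. $\mathbb{C}^{m\times n}$ is the set of all $m\times n$ complex matrices. $I_n$ stands for the $n\times n$ identity matrix. $0_{m\times n}$ denotes the $m\times n$ zero matrix. $V_n$ is the $n\times n$ Fourier unitary matrix, i.e.,

$$[V_n]_{j,k}=\frac{1}{\sqrt{n}}\,e^{-\frac{2\pi(j-1)(k-1)}{n}\mathrm{i}},\qquad j,k\in\{1,\ldots,n\},$$

with $\mathrm{i}$ being the imaginary unit. We denote by $\lambda_1(A),\ldots,\lambda_n(A)$ the eigenvalues of an $n\times n$ Hermitian matrix A arranged in decreasing order. ∗ denotes the conjugate transpose. ⊗ is the Kronecker product. $\|\cdot\|_2$ and $\|\cdot\|_F$ are the spectral norm and the Frobenius norm, respectively.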
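As a quick numerical check of this notation, the following minimal sketch builds the Fourier unitary matrix with NumPy and verifies that it is unitary. It assumes the usual convention $[V_n]_{j,k}=e^{-2\pi\mathrm{i}(j-1)(k-1)/n}/\sqrt{n}$; the function name `fourier_unitary` and the size `n = 8` are illustrative choices, not taken from the paper.

```python
import numpy as np

def fourier_unitary(n: int) -> np.ndarray:
    """Return the n x n Fourier unitary matrix (assumed sign convention: exp(-2*pi*i*j*k/n))."""
    idx = np.arange(n)  # zero-based indices play the role of j-1 and k-1
    return np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)

n = 8  # illustrative size
V = fourier_unitary(n)

# V^* V should be the identity (np.linalg.norm defaults to the Frobenius norm for matrices).
unitarity_error = np.linalg.norm(V.conj().T @ V - np.eye(n))
spectral_norm = np.linalg.norm(V, 2)        # largest singular value
frobenius_norm = np.linalg.norm(V, "fro")   # square root of the sum of squared moduli
print(unitarity_error, spectral_norm, frobenius_norm)
```

For any unitary matrix the spectral norm equals 1 and the Frobenius norm equals $\sqrt{n}$, which is what the last two values should report up to rounding.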

If and for all , then is the block diagonal matrix whose blocks are given by:

where is the Kronecker delta. We also denote by the matrix .

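If $n\in\mathbb{N}$ and $F:\mathbb{R}\to\mathbb{C}^{M\times N}$ is a continuous $2\pi$-periodic function, $T_n(F)$ stands for the $n\times n$ block Toeplitz matrix generated by F whose $M\times N$ blocks are given by:

$$T_n(F)=\left(F_{j-k}\right)_{j,k=1}^{n},$$

where $\{F_k\}_{k\in\mathbb{Z}}$ is the sequence of Fourier coefficients of F, that is,

$$F_k=\frac{1}{2\pi}\int_0^{2\pi}e^{-k\omega\mathrm{i}}F(\omega)\,d\omega,\qquad k\in\mathbb{Z}.$$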

We denote by the block circulant matrix with blocks defined as:

If , then is the block circulant matrix with the blocks given by:

We denote by the block circulant matrix with the blocks defined as .
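The relation between the block Toeplitz matrix and the block circulant matrix generated by the same function can be illustrated numerically. The sketch below is written under stated assumptions: the generating function is a trigonometric polynomial $F(\omega)=\sum_k e^{k\omega\mathrm{i}}A_k$ stored as a dictionary of coefficients, the block Toeplitz matrix has $(j,k)$ block $F_{j-k}$, and the block circulant matrix is taken to be $(V_n\otimes I_M)\,\mathrm{diag}_k\big(F(2\pi(k-1)/n)\big)\,(V_n\otimes I_N)^*$. The helper names and the coefficient values are hypothetical.

```python
import numpy as np

def symbol(coeffs, omega):
    """Evaluate F(omega) = sum_k exp(i*k*omega) * A_k for a dict {k: A_k}."""
    return sum(np.exp(1j * k * omega) * A for k, A in coeffs.items())

def block_toeplitz(coeffs, n):
    """n x n block Toeplitz matrix whose (j, k) block is the Fourier coefficient F_{j-k}."""
    M, N = next(iter(coeffs.values())).shape
    T = np.zeros((n * M, n * N), dtype=complex)
    for j in range(n):
        for k in range(n):
            if (j - k) in coeffs:  # coefficients outside the dict are zero blocks
                T[j*M:(j+1)*M, k*N:(k+1)*N] = coeffs[j - k]
    return T

def block_circulant(coeffs, n):
    """(V_n kron I_M) diag(F(2*pi*k/n)) (V_n kron I_N)^*, k = 0..n-1 (assumed definition)."""
    M, N = next(iter(coeffs.values())).shape
    idx = np.arange(n)
    V = np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)
    D = np.zeros((n * M, n * N), dtype=complex)
    for k in range(n):
        D[k*M:(k+1)*M, k*N:(k+1)*N] = symbol(coeffs, 2 * np.pi * k / n)
    return np.kron(V, np.eye(M)) @ D @ np.kron(V, np.eye(N)).conj().T

# Hypothetical 2 x 2 trigonometric polynomial of degree 1.
coeffs = {0: np.array([[2.0, 0.5], [0.5, 1.0]]),
          1: np.array([[0.5, 0.2], [0.0, 0.3]]),
          -1: np.array([[0.5, 0.0], [0.2, 0.3]])}
n = 6
T = block_toeplitz(coeffs, n)
C = block_circulant(coeffs, n)
diff = T - C  # nonzero only in the top-right and bottom-left corner blocks
```

With a polynomial of degree 1 and `n = 6`, the two matrices agree everywhere except in the two corner blocks, where the circulant wraps the coefficients $F_1$ and $F_{-1}$ around.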

If is Hermitian for all (or equivalently, is Hermitian for all ; see, e.g., [6], Theorem 4.4), then denotes . We recall that (see [7], Proposition 3):

2.2. Product of Block Toeplitz Matrices

We begin this subsection with a result on the entries of the block Toeplitz matrices generated by the product of two functions, which is a direct consequence of the Parseval theorem.

Lemma 1.

Consider two continuous -periodic functions and . Let and be the sequences of Fourier coefficients of F and G, respectively. Then:

for all and .

Proof.

See Appendix A. □

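We can now give a result on the product of two block Toeplitz matrices when one of them is generated by a trigonometric polynomial. We recall that an $M\times N$ trigonometric polynomial of degree $p\in\mathbb{N}\cup\{0\}$ is a function $F:\mathbb{R}\to\mathbb{C}^{M\times N}$ of the form:

$$F(\omega)=\sum_{k=-p}^{p}e^{k\omega\mathrm{i}}A_k,\qquad \omega\in\mathbb{R},\tag{2}$$

where $A_k\in\mathbb{C}^{M\times N}$ with $-p\le k\le p$. It can be easily proven (see, e.g., [6], Example 4.3) that the sequence of the Fourier coefficients of the continuous $2\pi$-periodic function F in Equation (2) is given by:

$$F_k=\begin{cases}A_k & \text{if } |k|\le p,\\ 0_{M\times N} & \text{if } |k|>p.\end{cases}$$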

Lemma 2.

Let F, G, , and be as in Lemma 1.

- 1. If F is a trigonometric polynomial of degree p, then: and: for all and .

- 2. If G is a trigonometric polynomial of degree q, then: and: for all and .

- 3. If F is a trigonometric polynomial of degree p and G is a trigonometric polynomial of degree q, then: and: for all , where are given by: and: for all and .

Proof.

See Appendix B. □

2.3. Inverse of a Block Toeplitz Matrix

Lemma 3.

Let be a trigonometric polynomial of degree p.

- 1. If is invertible for all and is stable (i.e., is invertible for all and is bounded), then: for all .

- 2. If is positive definite for all , then: for all .

Proof.

See Appendix C. □

2.4. Block Circulant Matrices

Lemma 4.

Consider . Then:

and:

Moreover, if is an block circulant matrix with blocks, then:

and:

Proof.

See Appendix D. □

Lemma 5.

Let be a trigonometric polynomial of degree p. Then:

for all . Furthermore,

Proof.

See Appendix E. □

3. Several New Results on the Correlation Matrices of Certain Random Vector Processes

Let be a (complex) random N-dimensional vector process, that is, is a (complex) random N-dimensional (column) vector for all . In this section, we study the boundedness of the sequence when is a WSS, MA, or AR vector process, where:

and E denotes expectation.

3.1. WSS Vector Processes

In this subsection, we review the concept of the WSS vector process, and we prove that the sequence is bounded when is a WSS vector process whose power spectral density (PSD) is a trigonometric polynomial.

Definition 1.

Let $X:\mathbb{R}\to\mathbb{C}^{N\times N}$ be continuous and $2\pi$-periodic. A random N-dimensional vector process is said to be WSS with PSD X if it has constant mean (i.e., for all ) and .

Lemma 6.

If is a WSS vector process whose PSD is a trigonometric polynomial, then is bounded.

Proof.

This is a direct consequence of Lemma 5. □
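The boundedness stated in Lemma 6 can be observed numerically. The sketch below assumes that the sequence in question is the Frobenius distance between the block Toeplitz correlation matrix $T_n(X)$ and the block circulant matrix obtained by keeping only the diagonal $N\times N$ blocks of $(V_n\otimes I_N)^*T_n(X)(V_n\otimes I_N)$; this reading, the helper names, and the coefficients of the PSD are assumptions made for illustration only.

```python
import numpy as np

def block_toeplitz(coeffs, n):
    """n x n block Toeplitz matrix with (j, k) block F_{j-k}; missing coefficients are zero."""
    N = coeffs[0].shape[0]
    T = np.zeros((n * N, n * N), dtype=complex)
    for j in range(n):
        for k in range(n):
            if (j - k) in coeffs:
                T[j*N:(j+1)*N, k*N:(k+1)*N] = coeffs[j - k]
    return T

def circulant_part(A, n):
    """Keep the diagonal N x N blocks of (V_n kron I_N)^* A (V_n kron I_N) and transform back."""
    N = A.shape[0] // n
    idx = np.arange(n)
    V = np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)
    U = np.kron(V, np.eye(N))
    B = U.conj().T @ A @ U
    D = np.zeros_like(B)
    for k in range(n):
        s = slice(k * N, (k + 1) * N)
        D[s, s] = B[s, s]
    return U @ D @ U.conj().T

# Hypothetical PSD: a 2 x 2 trigonometric polynomial of degree 1 with X_{-1} = X_1^T.
X = {0: np.array([[2.0, 0.5], [0.5, 1.0]]),
     1: np.array([[0.5, 0.2], [0.0, 0.3]]),
     -1: np.array([[0.5, 0.0], [0.2, 0.3]])}

distances = {}
for n in (8, 16, 32, 64):
    T = block_toeplitz(X, n)
    distances[n] = np.linalg.norm(T - circulant_part(T, n), "fro")
    print(n, distances[n])
```

Although the matrices grow from 16 to 128 rows, the printed distances stay below a fixed constant instead of growing with n, which is the behaviour the lemma describes.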

3.2. VMA Processes

In this subsection, we review the concept of the MA vector (VMA) process, and we prove that the sequence is bounded when is a VMA process of finite order.

Definition 2.

A zero-mean random N-dimensional vector process is said to be a VMA process if:

where for all and is a zero-mean WSS N-dimensional vector process whose PSD is an $N\times N$ positive semidefinite matrix Λ. If there exists such that for all , then is called a VMA process of (finite) order q or a VMA(q) process.
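To make Definition 2 concrete, the following sketch computes the exact correlation matrix of the first n samples of a VMA(q) process. It assumes the model $x_k=w_k+\sum_{j=1}^{q}G_j\,w_{k-j}$ with $w_k=0$ for $k\le 0$ and white noise of correlation Λ, and it stacks the samples in increasing time order; the indexing of the filter taps, the function name `vma_correlation`, and the numerical values are illustrative assumptions.

```python
import numpy as np

def vma_correlation(G, Lam, n):
    """Correlation matrix of (x_1; ...; x_n) for x_k = w_k + sum_j G[j-1] @ w_{k-j}.

    Assumed model: w_k = 0 for k <= 0, E(w_k w_k^T) = Lam, and w_j, w_k uncorrelated for j != k.
    """
    N = Lam.shape[0]
    taps = [np.eye(N)] + list(G)          # tap 0 is the identity
    T = np.zeros((n * N, n * N))          # block lower-triangular Toeplitz filter matrix
    for k in range(n):
        for j, Gj in enumerate(taps):
            if k - j >= 0:
                T[k*N:(k+1)*N, (k-j)*N:(k-j+1)*N] = Gj
    # x = T w, hence E(x x^T) = T (I_n kron Lam) T^T.
    return T @ np.kron(np.eye(n), Lam) @ T.T

# Hypothetical VMA(1) parameters.
G1 = np.array([[0.5, 0.2], [0.0, 0.3]])
Lam = np.array([[2.0, 0.5], [0.5, 1.0]])
R = vma_correlation([G1], Lam, n=5)
```

The result is block banded: blocks more than q positions away from the diagonal vanish, and apart from the first diagonal block (affected by the start-up at $k=1$) the matrix is block Toeplitz.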

Lemma 7.

If is a VMA(q) process as in Definition 2, then is bounded.

Proof.

See Appendix F. □

3.3. VAR Processes

In this subsection, we review the concept of the AR vector (VAR) process, and we study the boundedness of the sequence when is a VAR process of finite order.

Definition 3.

A zero-mean random N-dimensional vector process is said to be a VAR process if:

where for all and is a zero-mean WSS N-dimensional vector process whose PSD is an $N\times N$ positive definite matrix Λ. If there exists such that for all , then is called a VAR process of (finite) order p or a VAR(p) process.
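Definition 3 can be illustrated in the same way. The sketch below assumes the recursion $x_k=w_k-\sum_{j=1}^{p}F_j\,x_{k-j}$ with $x_k=0$ for $k\le 0$ and white noise of correlation Λ, so that the stacked samples satisfy $Tx=w$ for a block lower-triangular Toeplitz matrix T. The sign convention, the indexing of the coefficients, the function name `var_correlation`, and the numerical values are assumptions made for illustration.

```python
import numpy as np

def var_correlation(F, Lam, n):
    """Correlation matrix of (x_1; ...; x_n) for x_k = w_k - sum_j F[j-1] @ x_{k-j}.

    Assumed model: x_k = 0 for k <= 0, E(w_k w_k^T) = Lam, and w_j, w_k uncorrelated for j != k.
    """
    N = Lam.shape[0]
    taps = [np.eye(N)] + list(F)
    T = np.zeros((n * N, n * N))          # T x = w, with T block lower-triangular Toeplitz
    for k in range(n):
        for j, Fj in enumerate(taps):
            if k - j >= 0:
                T[k*N:(k+1)*N, (k-j)*N:(k-j+1)*N] = Fj
    T_inv = np.linalg.solve(T, np.eye(n * N))   # T has identity diagonal blocks, so it is invertible
    # x = T^{-1} w, hence E(x x^T) = T^{-1} (I_n kron Lam) T^{-T}.
    return T_inv @ np.kron(np.eye(n), Lam) @ T_inv.T

# Hypothetical VAR(1) parameters.
F1 = np.array([[-0.5, 0.1], [0.2, -0.4]])
Lam = np.array([[1.0, 0.3], [0.3, 2.0]])
R = var_correlation([F1], Lam, n=4)
```

Unlike the VMA case, the inverse of the banded matrix T is generally full, so the correlation matrix of a VAR process is not banded.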

Lemma 8.

Let be a VAR(p) process as in Definition 3. Suppose that is invertible for all and is bounded. Then, is bounded.

Proof.

See Appendix G. □

4. On the Asymptotic Optimality of a Low-Complexity Coding Strategy for Gaussian Vector Sources

4.1. Low-Complexity Coding Strategy Considered

In [1], Kolmogorov gave a formula for the RDF of a real zero-mean Gaussian N-dimensional vector with a positive definite correlation matrix , namely,

where ⊤ stands for the transpose, denotes the trace, and is a real number satisfying:

We recall that can be thought of as the minimum rate (measured in nats) at which can be encoded (compressed) in order to be able to recover it with a mean squared error (MSE) per dimension no larger than a given distortion D, that is:

where denotes the estimation of .
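Kolmogorov's formula is commonly written in reverse water-filling form: with $\lambda_1,\ldots,\lambda_m$ the eigenvalues of the correlation matrix, the rate is $\frac{1}{m}\sum_k\max\{0,\frac{1}{2}\ln(\lambda_k/\theta)\}$, where θ solves $\frac{1}{m}\sum_k\min\{\theta,\lambda_k\}=D$. The sketch below evaluates this form numerically; the function name `gaussian_rdf`, the bisection search for θ, and the example eigenvalues are illustrative choices rather than the paper's procedure.

```python
import numpy as np

def gaussian_rdf(eigenvalues, D):
    """RDF (nats per dimension) of a zero-mean Gaussian vector via reverse water-filling.

    `eigenvalues` are the positive eigenvalues of the correlation matrix and
    D > 0 is the allowed MSE per dimension.
    """
    lam = np.asarray(eigenvalues, dtype=float)
    if D <= 0:
        raise ValueError("the distortion D must be positive")
    if D >= lam.mean():
        return 0.0  # the distortion budget covers the whole variance: nothing to encode
    # Bisection for the water level theta with mean(min(theta, lam)) = D.
    lo, hi = 0.0, lam.max()
    for _ in range(200):
        theta = 0.5 * (lo + hi)
        if np.minimum(theta, lam).mean() < D:
            lo = theta
        else:
            hi = theta
    theta = 0.5 * (lo + hi)
    return float(np.mean(np.maximum(0.0, 0.5 * np.log(lam / theta))))

rate_small_D = gaussian_rdf([4.0, 1.0], 0.5)   # D below the smallest eigenvalue: theta = D
rate_large_D = gaussian_rdf([4.0, 1.0], 1.5)   # theta = 2: the weaker component is not encoded
```

When D does not exceed the smallest eigenvalue, the water level equals D and the rate reduces to $\frac{1}{2m}\ln\big(\det/D^m\big)$, which for the first call gives $\ln 2$ nats.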

If , an optimal coding strategy to achieve is to encode separately with for all , where with U being a real orthogonal eigenvector matrix of (see [8], Corollary 1). Observe that in order to obtain U, we need to know the correlation matrix . This coding strategy also requires an optimal coding method for real Gaussian random variables.

In [4], Theorem 3, we gave a low-complexity coding strategy for any Gaussian N-dimensional vector source . According to that strategy, to encode a finite-length data block of such a source, we first compute the block discrete Fourier transform (DFT) of :

and then, we encode separately (i.e., if n is even, we encode separately, and if n is odd, we encode separately) with:

and:

where denotes the smallest integer greater than or equal to and:

with and being the real part and the imaginary part of a complex number, respectively.
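The first step of the strategy, the block DFT, can be computed with an FFT along the block index. The sketch below assumes that the transform is $y=(V_n^*\otimes I_N)\,x$ with the sign convention $[V_n]_{j,k}=e^{-2\pi\mathrm{i}(j-1)(k-1)/n}/\sqrt{n}$, and that the n blocks are stored as the rows of an array; the function name `block_dft`, the block ordering, and the random test data are assumptions.

```python
import numpy as np

def block_dft(x_blocks: np.ndarray) -> np.ndarray:
    """Apply (V_n^* kron I_N) to n stacked N-dimensional blocks given as rows of an (n, N) array."""
    # V_n^* carries a positive sign in the exponent, which matches NumPy's inverse FFT;
    # norm="ortho" supplies the 1/sqrt(n) scaling of the unitary transform.
    return np.fft.ifft(x_blocks, axis=0, norm="ortho")

rng = np.random.default_rng(0)
n, N = 8, 3
x = rng.standard_normal((n, N))            # real data block, one N-dimensional vector per row
y_fast = block_dft(x)

# Reference computation with the explicit Kronecker-structured matrix.
idx = np.arange(n)
V = np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)
y_direct = (np.kron(V.conj().T, np.eye(N)) @ x.reshape(-1)).reshape(n, N)
max_gap = np.max(np.abs(y_fast - y_direct))

# For real input, the transformed blocks come in complex-conjugate pairs.
conjugate_symmetric = np.allclose(y_fast[1:], np.conj(y_fast[1:][::-1]))
```

The conjugate symmetry for real sources is the reason only about half of the transformed blocks need to be encoded, with real and imaginary parts handled separately.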

As our coding strategy requires the computation of the block DFT, its computational complexity is $\mathcal{O}(nN\log n)$ whenever the fast Fourier transform (FFT) algorithm is used. We recall that the computational complexity of the optimal coding strategy for is $\mathcal{O}(n^2N^2)$ since it requires the computation of , where is a real orthogonal eigenvector matrix of . Observe that such an eigenvector matrix also needs to be computed, which further increases the complexity. Hence, the main advantage of our coding strategy is that it notably reduces the computational complexity of coding . Moreover, our coding strategy does not require the knowledge of . It only requires the knowledge of , with .

We finish this subsection by reviewing a result that provides an upper bound for the distance between and the rate of our coding strategy (see [4], Theorem 3).

Theorem 1.

Consider . Let be a random N-dimensional vector for all . Suppose that is a real zero-mean Gaussian vector with a positive definite correlation matrix (or equivalently, ). Let be the random vector given by Equation (14). If , then:

where:

4.2. On the Asymptotic Optimality of the Low-Complexity Coding Strategy Considered

In this subsection, we study the asymptotic optimality of our coding strategy for Gaussian vector sources. We begin by presenting a new result that provides a sufficient condition for the source to make such a coding strategy asymptotically optimal.

Theorem 2.

Let be a real zero-mean Gaussian N-dimensional vector process. Suppose that and . If , then:

Hence, if is convergent, then:

Proof.

From Equation (15), we have:

and therefore, Theorem 2 is proven. □

We recall that is the RDF of the source .

In [4], Theorem 4, we gave a more restrictive sufficient condition for the source to make the coding strategy considered asymptotically optimal. Specifically, in [4], Theorem 4, we proved that Equation (16) holds if is AWSS. However, the convergence speed of the rate of the coding strategy considered (i.e., how fast the rate of the coding strategy tends to the RDF of the AWSS vector source) was not studied in [4]. We now study the convergence speed of the rate of such a coding strategy when it is used to encode the most relevant vector sources, namely WSS vector sources, VMA sources, and VAR sources. It should be mentioned that this convergence speed depends on the sequence whose boundedness is studied in Section 3 for these three types of vector sources.

Theorem 3.

Let be a real zero-mean Gaussian WSS N-dimensional vector process whose PSD X is a trigonometric polynomial. Suppose that (or equivalently, for all ). If , there exists such that:

Proof.

Theorem 4.

Let be a VMA(q) process as in Definition 2. Suppose that and is bounded with for all . If is real and Gaussian, and , there exists such that:

Proof.

Since for all , from Equation (A3), we have:

Hence, . Theorem 1 and Lemma 7 prove Theorem 4. □

Theorem 5.

Let be a VAR(p) process as in Definition 3. Suppose that is invertible for all and is bounded. If is real and Gaussian and , there exists such that:

Proof.

As for all , applying Equation (A4) and [6], Theorem 4.3, yields:

Thus, . Theorem 1 and Lemma 8 prove Theorem 5. □

4.3. On How the Low-Complexity Coding Strategy Considered Performs under Perturbations

In this subsection, we study how the low-complexity coding strategy considered performs when it is used to encode a perturbed version, , of a WSS, MA, or AR vector source . Observe that if is bounded, from Equation (9), we conclude that our coding strategy can also be used to optimally encode , and the convergence speed of the rate remains unaltered.

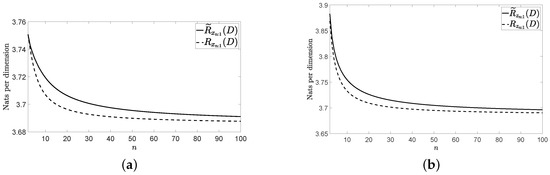

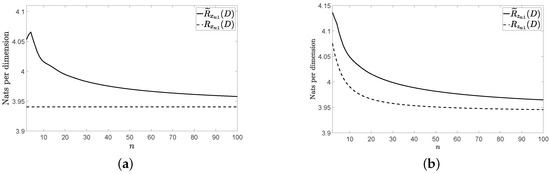

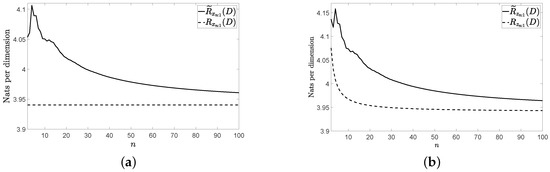

We now present three numerical examples that show how the coding strategy considered performs in the presence of a perturbation. In all of them, and:

Obviously, is bounded since for all . The three vector sources considered in our numerical examples are the zero-mean WSS vector source in [9], Section 4, the VMA(1) source in [10], Example 2.1, and the VAR(1) source in [10], Example 2.3. In [9], Section 4, the Fourier coefficients of the PSD X are:

and with . In [10], Example 2.1, and are given by:

and:

respectively. In [10], Example 2.3, and are given by Equations (18) and (19), respectively.

Figures 1a, 2a, and 3a show and for the three vector sources considered by assuming that they are Gaussian. Figures 1b, 2b, and 3b show and for these three vector sources. In Figures 1, 2, and 3, and . The figures show that the rate of the low-complexity coding strategy considered tends to the RDF of the source even in the presence of a perturbation.

Figure 1.

Rates for the considered wide sense stationary (WSS) vector source: (a) without perturbation and (b) with perturbation.

Figure 2.

Rates for the considered VMA(1) source: (a) without perturbation and (b) with perturbation.

Figure 3.

Rates for the considered VAR(1) source: (a) without perturbation and (b) with perturbation.

5. Conclusions

In [4], we proposed a low-complexity coding strategy to encode finite-length data blocks of any Gaussian vector source. In this paper, we proved that the convergence speed of the rate of our coding strategy is $\mathcal{O}(1/n)$ when it is used to encode the most relevant vector sources, namely WSS, MA, and AR vector sources. This means that the rate of our coding strategy will be close to the RDF of the source even if the length n of the data blocks is relatively small. Therefore, we conclude that our coding strategy is not only low-complexity and asymptotically optimal, but also low-latency. These three features make our coding strategy very useful in practical coding applications.

Author Contributions

Authors are listed in order of their degree of involvement in the work, with the most active contributors listed first. J.G.-G. conceived the research question. All authors were involved in the research and wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Spanish Ministry of Science and Innovation through the ADELE project (PID2019-104958RB-C44).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Lemma 1

Proof.

Fix and . As and are continuous and -periodic, is also continuous and -periodic. Applying the Parseval theorem (see, e.g., [11], p. 191) yields:

for all and . □

Appendix B. Proof of Lemma 2

Proof.

(1) Fix . As with , from Lemma 1, we have:

and consequently, Equation (3) holds for all . Applying Equation (3), the Schwarz inequality (see, e.g., [11], p. 15), the Parseval theorem for continuous matrix-valued functions (see, e.g., [6], p. 208), and the well-known formula for the partial sums of the arithmetic series yields:

and therefore, Equation (4) is proven.

(2) Fix . As with , from Lemma 1, we obtain:

and hence, Equation (5) holds for all . Since G is a trigonometric polynomial of degree q, is also a trigonometric polynomial of degree q, where for all . Applying [6], Lemma 4.2, and Equation (4) yields:

and thus, Equation (6) is proven.

(3) Fix . As with and with , from Lemma 1, we obtain:

for all . Observe that:

for all and . □

Appendix C. Proof of Lemma 3

Proof.

(1) Since is continuous and -periodic, is also continuous and -periodic, where for all . As:

for all , Equation (4) proves Assertion 1 of Lemma 3.

(2) Since is positive definite for all (or equivalently, is Hermitian and for all ), is invertible for all (or equivalently, is non-zero for all ), is Hermitian, and for all (see Equation (1)). As is positive definite for all , is also positive definite for all . Therefore,

for all . Assertion 2 of Lemma 3 can now be obtained from Assertion 1 of Lemma 3. □

Appendix D. Proof of Lemma 4

Proof.

Consider . As is unitary, is also unitary for all . Consequently, since the Frobenius norm is unitarily invariant, we have

and:

If is an block circulant matrix with blocks, then (see, e.g., [6], Lemma 5.1, or [12], Lemma 3) there exist such that:

Therefore,

and combining Equations (9) and (10), we obtain Equation (11). □
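The unitary-invariance argument used in this proof can be checked numerically. The sketch below builds a block circulant matrix as $(V_n\otimes I_M)\,\mathrm{diag}(A_1,\ldots,A_n)\,(V_n\otimes I_N)^*$ from arbitrary blocks and compares its Frobenius norm with that of the block diagonal factor; the sizes, the random blocks, and the helper `block` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n, M, N = 6, 2, 3                         # illustrative sizes

idx = np.arange(n)
V = np.exp(-2j * np.pi * np.outer(idx, idx) / n) / np.sqrt(n)   # Fourier unitary matrix

# Arbitrary complex M x N blocks A_1, ..., A_n placed on a block diagonal.
blocks = rng.standard_normal((n, M, N)) + 1j * rng.standard_normal((n, M, N))
D = np.zeros((n * M, n * N), dtype=complex)
for k in range(n):
    D[k*M:(k+1)*M, k*N:(k+1)*N] = blocks[k]

# Multiplying by the unitary matrices V_n kron I_M and (V_n kron I_N)^* keeps the Frobenius norm.
C = np.kron(V, np.eye(M)) @ D @ np.kron(V, np.eye(N)).conj().T
norm_C = np.linalg.norm(C, "fro")
norm_blocks = np.sqrt(sum(np.linalg.norm(B, "fro") ** 2 for B in blocks))

def block(A, j, k):
    """Return the (j, k) block of size M x N (zero-based block indices)."""
    return A[j*M:(j+1)*M, k*N:(k+1)*N]

# Block circulant structure: the (j, k) block depends only on (j - k) mod n.
is_block_circulant = all(
    np.allclose(block(C, j, k), block(C, (j + 1) % n, (k + 1) % n))
    for j in range(n) for k in range(n)
)
print(norm_C, norm_blocks, is_block_circulant)
```

The two norms coincide up to rounding, so the Frobenius norm of a block circulant matrix can be read directly from its n diagonal blocks in the Fourier domain.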

Appendix E. Proof of Lemma 5

Appendix F. Proof of Lemma 7

Proof.

From Equation (12), we obtain:

with for all . Therefore, applying [6], Lemma 4.2, yields:

Hence, using Equation (9), we have:

for all . Thus, to finish the proof, we only need to show that and are bounded. As and are trigonometric polynomials, from Equation (7), we obtain that is bounded. Since is a trigonometric polynomial, applying Lemma 5, we conclude that is bounded. □

Appendix G. Proof of Lemma 8

Proof.

As is positive definite, if and , then:

whenever , or equivalently, . Since is positive definite for all , we have:

From Equation (13), we obtain:

Consequently,

Therefore, as for all , we have:

Hence, applying Equation (11) and [6], Lemma 4.2, yields:

for all . Thus, to finish the proof, we only need to show that , , , , , and are bounded. From [6], Theorem 4.3, we obtain that and are bounded. Applying [6], Lemma 5.2, we have that is bounded. Since and are trigonometric polynomials, from Equation (7), we obtain that is bounded. As is a trigonometric polynomial, applying Lemma 5, we have that is bounded. Since is positive definite for all , is also positive definite for all , and consequently, from Equation (8), we conclude that is bounded. □

Appendix H. A Statistical Signal Processing Application on Filtering WSS Vector Processes

Consider a zero-mean WSS M-dimensional vector process . Let Y be the PSD of a zero-mean WSS N-dimensional vector process with . Assume that those two processes are jointly WSS with joint PSD Z, that is, is a continuous $2\pi$-periodic function satisfying that .

For every , if is an estimation of from of the form:

with , the MSE per sample is:

and the minimum MSE (MMSE) is given by , where is the Wiener filter, i.e.,

In [13], Equation (6), it was shown that there is no difference in the MSE per sample for large enough n if we substitute the optimal filter by , where , that is,

Obviously, the computational complexity of the operation (A5) is notably reduced when applying this substitution and the FFT algorithm is used. Specifically, the computational complexity is reduced from to .

We here study the convergence speed of the sequence (i.e., how fast this sequence tends to zero) by assuming that Y and Z are trigonometric polynomials. Applying [13], p. 11, and Lemma 5, we conclude that there exists such that:

where and . Therefore,

Equation (A6) was proven in [2] for the case .

References

- Kolmogorov, A.N. On the Shannon theory of information transmission in the case of continuous signals. IRE Trans. Inf. Theory 1956, IT-2, 102–108.

- Pearl, J. On coding and filtering stationary signals by discrete Fourier transforms. IEEE Trans. Inf. Theory 1973, 19, 229–232.

- Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M.; Insausti, X. Upper bounds for the rate distortion function of finite-length data blocks of Gaussian WSS sources. Entropy 2017, 19, 554.

- Zárraga-Rodríguez, M.; Gutiérrez-Gutiérrez, J.; Insausti, X. A low-complexity and asymptotically optimal coding strategy for Gaussian vectors sources. Entropy 2019, 21, 965.

- Gray, R.M. Toeplitz and circulant matrices: A review. Found. Trends Commun. Inf. Theory 2006, 2, 155–239.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Block Toeplitz matrices: Asymptotic results and applications. Found. Trends Commun. Inf. Theory 2011, 8, 179–257.

- Gutiérrez-Gutiérrez, J. A modified version of the Pisarenko method to estimate the power spectral density of any asymptotically wide sense stationary vector process. Appl. Math. Comput. 2019, 362, 124526.

- Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M.; Villar-Rosety, F.M.; Insausti, X. Rate-distortion function upper bounds for Gaussian vectors and their applications in coding AR sources. Entropy 2018, 20, 399.

- Gutiérrez-Gutiérrez, J.; Iglesias, I.; Podhorski, A. Geometric MMSE for one-sided and two-sided vector linear predictors: From the finite-length case to the infinite-length case. Signal Process. 2011, 91, 2237–2245.

- Reinsel, G.C. Elements of Multivariate Time Series Analysis; Springer: Berlin/Heidelberg, Germany, 1993.

- Rudin, W. Principles of Mathematical Analysis; McGraw-Hill: New York, NY, USA, 1976.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Asymptotically equivalent sequences of matrices and multivariate ARMA processes. IEEE Trans. Inf. Theory 2011, 57, 5444–5454.

- Gutiérrez-Gutiérrez, J.; Zárraga-Rodríguez, M.; Insausti, X.; Hogstad, B.O. On the complexity reduction of coding WSS vector processes by using a sequence of block circulant matrices. Entropy 2017, 19, 95.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).