Abstract

In this paper, we obtain an integral formula for the rate distortion function (RDF) of any Gaussian asymptotically wide sense stationary (AWSS) vector process. Applying this result, we also obtain an integral formula for the RDF of Gaussian moving average (MA) vector processes and of Gaussian autoregressive MA (ARMA) AWSS vector processes.

1. Introduction

The present paper focuses on the derivation of a closed-form expression for the rate distortion function (RDF) of a wide class of vector processes. As stated in [1,2], there exist very few journal papers in the literature that present closed-form expressions for the RDF of non-stationary processes, and just one of them deals with non-stationary vector processes [3]. In the present paper, we obtain an integral formula for the RDF of any real Gaussian asymptotically wide sense stationary (AWSS) vector process. This new formula generalizes the one given in 1956 by Kolmogorov [4] for real Gaussian stationary processes and the one given in 1971 by Toms and Berger [3] for real Gaussian autoregressive (AR) AWSS vector processes of finite order. Applying this new formula, we also obtain an integral formula for the RDF of real Gaussian moving average (MA) vector processes of infinite order and for the RDF of real Gaussian ARMA AWSS vector processes of infinite order. AR, MA and ARMA vector processes are frequently used to model multivariate time series (see, e.g., [5]).

The definition of the AWSS process was first given by Gray (see [6,7]), and it is based on his concept of asymptotically equivalent sequences of matrices [8]. The integral formulas given in the present paper are obtained by using some recent results on such sequences of matrices [9,10,11,12].

The paper is organized as follows. In Section 2, we set up notation, and we review the concepts of AWSS, MA and ARMA vector processes and the Kolmogorov formula for the RDF of a real Gaussian vector. In Section 3, we obtain an integral formula for the RDF of any Gaussian AWSS vector process. In Section 4, we obtain an integral formula for the RDF of Gaussian MA vector processes and of Gaussian ARMA AWSS vector processes. We finish the paper with a numerical example where the RDF of a Gaussian AWSS vector process is computed.

2. Preliminaries

2.1. Notation

In this paper, $\mathbb{N}$, $\mathbb{Z}$, $\mathbb{R}$ and $\mathbb{C}$ denote the set of natural numbers (i.e., the set of positive integers), the set of integer numbers, the set of (finite) real numbers and the set of (finite) complex numbers, respectively. If $m,n\in\mathbb{N}$, then $\mathbb{C}^{m\times n}$, $0_{m\times n}$ and $I_n$ are the set of all $m\times n$ complex matrices, the $m\times n$ zero matrix and the $n\times n$ identity matrix, respectively. The symbols ⊤ and ∗ denote transpose and conjugate transpose, respectively. E stands for expectation; i is the imaginary unit; $\operatorname{tr}$ denotes trace; $\delta$ stands for the Kronecker delta; and $\lambda_k(A)$, $k\in\{1,\ldots,n\}$, are the eigenvalues of a Hermitian $n\times n$ matrix A arranged in decreasing order.

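Let $A_n$ and $B_n$ be $nN\times nN$ matrices for all $n\in\mathbb{N}$. We write $\{A_n\}\sim\{B_n\}$ if the sequences $\{A_n\}$ and $\{B_n\}$ are asymptotically equivalent (see ([9], p. 5673)), that is:

$$\exists M\in[0,\infty) \text{ such that } \|A_n\|_2,\ \|B_n\|_2\le M \quad \forall n\in\mathbb{N},$$

and:

$$\lim_{n\to\infty}\frac{\|A_n-B_n\|_F}{\sqrt{n}}=0,$$

where $\|\cdot\|_2$ and $\|\cdot\|_F$ denote the spectral norm and the Frobenius norm, respectively. The original definition of asymptotically equivalent sequences of matrices, where $N=1$, was given by Gray (see ([6], Section 2.3) or [8]).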
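The two conditions above can be checked numerically for concrete sequences. The following Python/NumPy sketch computes $\|A_n\|_2$, $\|B_n\|_2$ and $\|A_n-B_n\|_F/\sqrt{n}$ for a hypothetical pair of sequences with $N=2$ that differ only in their first $N\times N$ block. The helper `equivalence_stats` and the matrices `C` and `P` are illustrative assumptions that do not come from the text. A finite computation can suggest asymptotic equivalence, but it cannot prove it.

```python
import numpy as np

def equivalence_stats(A, B, n):
    """Return (||A||_2, ||B||_2, ||A - B||_F / sqrt(n)) for nN x nN matrices A, B."""
    norm_a = np.linalg.norm(A, 2)                 # spectral norm (largest singular value)
    norm_b = np.linalg.norm(B, 2)
    dist = np.linalg.norm(A - B, "fro") / np.sqrt(n)
    return norm_a, norm_b, dist

# Hypothetical example with N = 2: A_n = I_n (kron) C, and B_n equals A_n
# except for a fixed perturbation P added to its first 2 x 2 block.
C = np.array([[2.0, 0.5], [0.5, 1.0]])
P = np.array([[0.3, 0.0], [0.0, -0.2]])

results = {}
for n in (25, 100, 400):
    A = np.kron(np.eye(n), C)
    B = A.copy()
    B[:2, :2] += P
    results[n] = equivalence_stats(A, B, n)
    print(n, results[n])
```

Both spectral norms stay bounded. Because $\|A_n-B_n\|_F=\|P\|_F$ for every n, the normalized distance decays like $1/\sqrt{n}$.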

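Let $\{x_n\}$ be a random N-dimensional vector process, i.e., $x_n$ is a random (column) vector of dimension N for all $n\in\mathbb{N}$. We denote by $x_{n:1}$ the random vector of dimension $nN$ given by:

$$x_{n:1}:=\left(x_n^\top, x_{n-1}^\top,\ldots,x_1^\top\right)^\top.$$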

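Consider a matrix-valued function of a real variable $X:\mathbb{R}\to\mathbb{C}^{N\times N}$, which is continuous and $2\pi$-periodic. For every $n\in\mathbb{N}$, we denote by $T_n(X)$ the $n\times n$ block Toeplitz matrix with $N\times N$ blocks given by:

$$T_n(X):=\left(X_{j-k}\right)_{j,k=1}^{n},$$

where $\{X_k\}_{k\in\mathbb{Z}}$ is the sequence of Fourier coefficients of X:

$$X_k=\frac{1}{2\pi}\int_0^{2\pi}e^{-k\omega\mathrm{i}}X(\omega)\,d\omega,\quad k\in\mathbb{Z}.$$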
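In practice, the Fourier coefficients $X_k$ and the matrix $T_n(X)$ can be approximated by sampling $X$ on a uniform grid. The sketch below makes two assumptions: that `X` is a Python callable returning an $N\times N$ array, and that a rectangle-rule approximation of the integral is acceptable. The rule is exact for trigonometric polynomials of degree below $M/2$. The function names and the example symbol are hypothetical.

```python
import numpy as np

def fourier_coefficients(X, M=256):
    """Approximate X_k = (1/2pi) int_0^{2pi} e^{-k w i} X(w) dw on M grid points.

    Returns a function k -> X_k. The rectangle rule used here is exact for
    trigonometric polynomials of degree below M/2.
    """
    w = 2 * np.pi * np.arange(M) / M
    samples = np.array([X(wm) for wm in w])          # shape (M, N, N)
    coeffs = np.fft.fft(samples, axis=0) / M          # entry k mod M approximates X_k
    return lambda k: coeffs[k % M]

def block_toeplitz(coeff, n):
    """T_n(X) = (X_{j-k})_{j,k=1}^n built from a function k -> X_k."""
    N = coeff(0).shape[0]
    T = np.zeros((n * N, n * N), dtype=complex)
    for j in range(n):
        for k in range(n):
            T[j * N:(j + 1) * N, k * N:(k + 1) * N] = coeff(j - k)
    return T

# Hypothetical Hermitian symbol X(w) = X0 + X1 e^{wi} + X1^T e^{-wi} (N = 2).
X0 = np.array([[2.0, 0.5], [0.5, 1.0]])
X1 = np.array([[0.3, 0.0], [0.1, 0.2]])
X = lambda w: X0 + X1 * np.exp(1j * w) + X1.T * np.exp(-1j * w)

coeff = fourier_coefficients(X)
T3 = block_toeplitz(coeff, 3)
```

Because $X(\omega)$ is Hermitian here, $X_{-k}=X_k^*$, so $T_n(X)$ is Hermitian too.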

2.2. AWSS Vector Processes

We first review the well-known concept of the WSS vector process.

Definition 1.

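Let $X:\mathbb{R}\to\mathbb{C}^{N\times N}$, and suppose that it is continuous and $2\pi$-periodic. A random N-dimensional vector process $\{x_n\}$ is said to be WSS (or weakly stationary) with power spectral density (PSD) X if it has constant mean (i.e., $E(x_{n_1})=E(x_{n_2})$ for all $n_1,n_2\in\mathbb{N}$) and $E(x_{n:1}x_{n:1}^*)=T_n(X)$ for all $n\in\mathbb{N}$.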

We now review the definition of the AWSS vector process given in ([11], Definition 7.1).

Definition 2.

Let $X:\mathbb{R}\to\mathbb{C}^{N\times N}$, and suppose that it is continuous and $2\pi$-periodic. A random N-dimensional vector process $\{x_n\}$ is said to be AWSS with asymptotic PSD (APSD) X if it has constant mean and $\{E(x_{n:1}x_{n:1}^*)\}\sim\{T_n(X)\}$.

Definition 2 was first introduced by Gray for the case $N=1$ (see, e.g., ([6], p. 225)).

2.3. MA and ARMA Vector Processes

We first review the concept of a real zero-mean MA vector process (of infinite order).

Definition 3.

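A real zero-mean random N-dimensional vector process $\{x_n\}$ is said to be MA if:

$$x_n=w_n+\sum_{j=1}^{n-1}G_{-j}w_{n-j},\quad n\in\mathbb{N},\tag{1}$$

where $G_{-j}$, $j\in\mathbb{N}$, are real $N\times N$ matrices, $\{w_n\}$ is a real zero-mean random N-dimensional vector process and $E(w_{n_1}w_{n_2}^\top)=\delta_{n_1,n_2}\Lambda$ for all $n_1,n_2\in\mathbb{N}$ with Λ being an $N\times N$ positive definite matrix.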

The MA vector process in Equation (1) is of finite order if there exists $q\in\mathbb{N}$ such that $G_{-j}=0_{N\times N}$ for all $j>q$. In this case, $\{x_n\}$ is called an MA($q$) vector process (see, e.g., ([5], Section 2.1)).
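If we unroll Equation (1) for $m=1,\ldots,n$, we obtain the stacked relation $x_{n:1}=T_n(G)\,w_{n:1}$. Here $G_0=I_N$, $G_k=0_{N\times N}$ for $k\in\mathbb{N}$, and $T_n(G)$ is block upper triangular, with $I_N$ on the diagonal and $G_{-l}$ on the $l$-th block superdiagonal. The sketch below checks this numerically for a hypothetical MA(1) process. The coefficient matrix, Λ, the helper names and the choice of Gaussian $w_n$ are assumptions made only for illustration.

```python
import numpy as np

def simulate_ma(G_neg, Lam, n, rng):
    """Samples x_1..x_n of Eq. (1) with G_{-j} = G_neg[j-1] and G_{-j} = 0 beyond len(G_neg)."""
    N = Lam.shape[0]
    w = rng.standard_normal((n, N)) @ np.linalg.cholesky(Lam).T   # rows w_1..w_n, covariance Lam
    x = w.copy()
    for m in range(n):                       # row m holds x_{m+1}
        for j, Gj in enumerate(G_neg, start=1):
            if m - j >= 0:
                x[m] += Gj @ w[m - j]
    return x, w

def stack(v):
    """(v_n^T, ..., v_1^T)^T from an array whose rows are v_1, ..., v_n."""
    return v[::-1].reshape(-1)

def upper_block_toeplitz(C_neg, n, N):
    """I_N on the block diagonal and C_neg[l-1] on block superdiagonal l."""
    T = np.eye(n * N)
    for l, Cl in enumerate(C_neg, start=1):
        T += np.kron(np.eye(n, k=l), Cl)
    return T

rng = np.random.default_rng(0)
G_neg = [np.array([[0.5, 0.2], [0.0, -0.4]])]      # hypothetical G_{-1}
Lam = np.array([[1.0, 0.3], [0.3, 2.0]])          # hypothetical Lambda
x, w = simulate_ma(G_neg, Lam, 6, rng)
```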

Secondly, we review the concept of a real zero-mean ARMA vector process (of infinite order).

Definition 4.

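A real zero-mean random N-dimensional vector process $\{x_n\}$ is said to be ARMA if:

$$x_n=w_n+\sum_{j=1}^{n-1}G_{-j}w_{n-j}-\sum_{j=1}^{n-1}F_{-j}x_{n-j},\quad n\in\mathbb{N},\tag{2}$$

where $F_{-j}$ and $G_{-j}$, $j\in\mathbb{N}$, are real $N\times N$ matrices, $\{w_n\}$ is a real zero-mean random N-dimensional vector process and $E(w_{n_1}w_{n_2}^\top)=\delta_{n_1,n_2}\Lambda$ for all $n_1,n_2\in\mathbb{N}$ with Λ being an $N\times N$ positive definite matrix.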

The ARMA vector process in Equation (2) is of finite order if there exist $p,q\in\mathbb{N}$ such that $F_{-j}=0_{N\times N}$ for all $j>p$ and $G_{-j}=0_{N\times N}$ for all $j>q$. In this case, $\{x_n\}$ is called an ARMA($p,q$) vector process (see, e.g., ([5], Section 1.2.2)).
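Equation (2) can likewise be stacked as $T_n(F)\,x_{n:1}=T_n(G)\,w_{n:1}$, where $F_0=G_0=I_N$ and $F_k=G_k=0_{N\times N}$ for $k\in\mathbb{N}$. Since $T_n(F)$ is block upper triangular with identity diagonal blocks, it is invertible, and so $x_{n:1}=T_n(F)^{-1}T_n(G)\,w_{n:1}$. The sketch below checks this for a hypothetical ARMA(1,1) process. All parameter values and helper names are illustrative assumptions.

```python
import numpy as np

def simulate_arma(F_neg, G_neg, Lam, n, rng):
    """Samples x_1..x_n of Eq. (2) (finite order): F_neg[j-1] = F_{-j}, G_neg[j-1] = G_{-j}."""
    N = Lam.shape[0]
    w = rng.standard_normal((n, N)) @ np.linalg.cholesky(Lam).T   # rows w_1..w_n
    x = np.zeros((n, N))
    for m in range(n):                       # row m holds x_{m+1}
        x[m] = w[m]
        for j, Gj in enumerate(G_neg, start=1):
            if m - j >= 0:
                x[m] += Gj @ w[m - j]
        for j, Fj in enumerate(F_neg, start=1):
            if m - j >= 0:
                x[m] -= Fj @ x[m - j]
    return x, w

def stack(v):
    return v[::-1].reshape(-1)               # (v_n^T, ..., v_1^T)^T

def upper_block_toeplitz(C_neg, n, N):
    T = np.eye(n * N)                        # I_N on the block diagonal
    for l, Cl in enumerate(C_neg, start=1):
        T += np.kron(np.eye(n, k=l), Cl)
    return T

rng = np.random.default_rng(1)
F_neg = [np.array([[-0.5, 0.1], [0.0, -0.3]])]   # hypothetical F_{-1}
G_neg = [np.array([[0.5, 0.2], [0.0, -0.4]])]    # hypothetical G_{-1}
Lam = np.array([[1.0, 0.3], [0.3, 2.0]])
n = 6
x, w = simulate_arma(F_neg, G_neg, Lam, n, rng)
TF = upper_block_toeplitz(F_neg, n, 2)
TG = upper_block_toeplitz(G_neg, n, 2)
```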

2.4. RDF of Gaussian Vectors

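Let $\{x_n\}$ be a real zero-mean Gaussian N-dimensional vector process such that $E(x_{n:1}x_{n:1}^\top)$ is positive definite for all $n\in\mathbb{N}$. For every $n\in\mathbb{N}$, we know from [4] that the RDF of the real zero-mean Gaussian vector $x_{n:1}$ is given by:

$$R_n(D)=\frac{1}{nN}\sum_{k=1}^{nN}\max\left(0,\frac{1}{2}\ln\frac{\lambda_k\left(E(x_{n:1}x_{n:1}^\top)\right)}{\theta_n}\right)\tag{3}$$

with $D\in\left(0,\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}\right]$, and where $\theta_n$ is the real number satisfying:

$$D=\frac{1}{nN}\sum_{k=1}^{nN}\min\left(\theta_n,\lambda_k\left(E(x_{n:1}x_{n:1}^\top)\right)\right).$$

The RDF of the real zero-mean Gaussian vector process $\{x_n\}$ is given by:

$$R(D)=\lim_{n\to\infty}R_n(D)$$

whenever this limit exists.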
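Equation (3) and the equation for $\theta_n$ form the classical reverse water-filling solution. Eigen-components whose variance exceeds $\theta_n$ are reproduced with distortion $\theta_n$, and the remaining ones are not coded at all. The minimal pure-Python sketch below assumes the eigenvalues of $E(x_{n:1}x_{n:1}^\top)$ are already available; the function name is hypothetical. It solves for $\theta_n$ by bisection and returns $R_n(D)$ in nats per scalar component.

```python
import math

def reverse_waterfill(eigs, D, iters=200):
    """Kolmogorov's formula: R (nats per component) and theta for a Gaussian vector.

    eigs: eigenvalues of the (positive definite) covariance matrix.
    D: distortion per component, with 0 < D <= mean(eigs).
    theta solves D = mean(min(theta, lambda_k)); found by bisection, since
    the right-hand side is nondecreasing in theta.
    """
    m = len(eigs)
    if not 0 < D <= sum(eigs) / m:
        raise ValueError("D must lie in (0, tr/m]")
    lo, hi = 0.0, max(eigs)
    for _ in range(iters):
        theta = 0.5 * (lo + hi)
        if sum(min(theta, lam) for lam in eigs) / m < D:
            lo = theta
        else:
            hi = theta
    theta = hi
    rate = sum(max(0.0, 0.5 * math.log(lam / theta)) for lam in eigs) / m
    return rate, theta

# Covariance with eigenvalues 4 and 1 (so nN = 2), distortion D = 0.5 per component
rate, theta = reverse_waterfill([4.0, 1.0], 0.5)
print(rate, theta)   # theta is (up to rounding) 0.5 and rate is ln 2, about 0.693 nats
```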

3. Integral Formula for the RDF of Gaussian AWSS Vector Processes

Theorem 1.

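Let $\{x_n\}$ be a real zero-mean Gaussian AWSS N-dimensional vector process with APSD X. Suppose that $E(x_{n:1}x_{n:1}^\top)$ is positive definite for all $n\in\mathbb{N}$ and that $X(\omega)$ is positive definite for all $\omega\in\mathbb{R}$. If $D\in\left(0,\frac{\operatorname{tr}(X_0)}{N}\right)$, then:

$$R(D)=\frac{1}{4\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\max\left(0,\ln\frac{\lambda_k(X(\omega))}{\theta}\right)d\omega\tag{4}$$

is the operational RDF of $\{x_n\}$, where θ is the real number satisfying:

$$D=\frac{1}{2\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\min\left(\theta,\lambda_k(X(\omega))\right)d\omega.\tag{5}$$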

Proof.

See Appendix A. ☐
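Equations (4) and (5) can be evaluated numerically by sampling the APSD on a uniform grid over $[0,2\pi)$ and replacing both integrals by averages. The sketch below assumes that `X` is a Python callable returning the Hermitian $N\times N$ matrix $X(\omega)$. The name `integral_rdf` and the default grid size are hypothetical choices. The sketch solves Equation (5) for θ by bisection, which works because the right-hand side of (5) is nondecreasing in θ.

```python
import numpy as np

def integral_rdf(X, D, M=4096, iters=200):
    """Evaluate Eqs. (4)-(5) for a Hermitian positive definite APSD X(w).

    The integrals over [0, 2pi] are replaced by averages over M equispaced
    points (rectangle rule). Returns (R, theta), R in nats per component.
    """
    w = 2 * np.pi * np.arange(M) / M
    lam = np.array([np.linalg.eigvalsh(X(wm)) for wm in w])   # shape (M, N)
    if not 0 < D < lam.mean():                 # lam.mean() approximates tr(X_0)/N
        raise ValueError("D must lie in (0, tr(X_0)/N)")
    lo, hi = 0.0, lam.max()
    for _ in range(iters):                     # Eq. (5): bisection on theta
        theta = 0.5 * (lo + hi)
        if np.minimum(theta, lam).mean() < D:
            lo = theta
        else:
            hi = theta
    rate = np.maximum(0.0, 0.5 * np.log(lam / hi)).mean()    # Eq. (4)
    return rate, hi

# Hypothetical scalar example (N = 1): X(w) = 1.25 + cos(w), D = 0.5
rate, theta = integral_rdf(lambda w: np.array([[1.25 + np.cos(w)]]), 0.5)
print(rate, theta)
```

The factor $1/(4\pi N)$ in (4) combined with the natural logarithm is the same as averaging $\max(0,\tfrac12\ln(\lambda_k/\theta))$ over ω and k, which is what the last line of the function computes.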

Corollary 1.

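Let $\{x_n\}$ be a real zero-mean Gaussian WSS N-dimensional vector process with PSD X. Suppose that $X(\omega)$ is positive definite for all $\omega\in\mathbb{R}$. If $D\in\left(0,\frac{\operatorname{tr}(X_0)}{N}\right)$, then:

$$R(D)=\frac{1}{4\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\max\left(0,\ln\frac{\lambda_k(X(\omega))}{\theta}\right)d\omega,\tag{6}$$

where θ is the real number satisfying:

$$D=\frac{1}{2\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\min\left(\theta,\lambda_k(X(\omega))\right)d\omega.$$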

Proof.

See Appendix B. ☐

The integral formula given in Equation (6) was presented by Kafedziski in ([13], Equation (20)). However, his proof was not complete. Kafedziski pointed out that ([13], Equation (20)) can be proven directly by applying the Szegö theorem for block Toeplitz matrices ([14], Theorem 3). That theorem cannot be applied, however, because the parameter $\theta_n$ that appears in the expression of $R_n(D)$ in ([13], Equation (7)) depends on n, as it does in Equation (3). It should also be mentioned that the set of WSS vector processes that he considered was smaller: he only considered WSS vector processes whose PSD is in the Wiener class. A function $X:\mathbb{R}\to\mathbb{C}^{N\times N}$ is said to be in the Wiener class if it is continuous and $2\pi$-periodic, and it satisfies $\sum_{k=-\infty}^{\infty}\left|[X_k]_{r,s}\right|<\infty$ for all $r,s\in\{1,\ldots,N\}$, where $[X_k]_{r,s}$ is the $(r,s)$ entry of $X_k$ (see, e.g., ([11], Appendix B)).
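The following sketch illustrates Corollary 1 and the dependence of $\theta_n$ on n that was discussed above. It uses a hypothetical scalar ($N=1$) WSS process with PSD $X(\omega)=1.25+\cos\omega=|1+0.5e^{\omega\mathrm{i}}|^2$, whose only nonzero Fourier coefficients are $X_0=1.25$ and $X_1=X_{-1}=0.5$. For each n, it computes $R_n(D)$ (with its own $\theta_n$) from the eigenvalues of $E(x_{n:1}x_{n:1}^\top)=T_n(X)$. It then compares the result with Equation (6), which is evaluated on a fine grid. The example, helper names and tolerances are assumptions chosen for illustration.

```python
import numpy as np

def waterfill(lam, D, iters=200):
    """Solve D = mean(min(theta, lam)) by bisection; return (R, theta), R in nats."""
    lam = np.asarray(lam, dtype=float).ravel()
    lo, hi = 0.0, lam.max()
    for _ in range(iters):
        theta = 0.5 * (lo + hi)
        if np.minimum(theta, lam).mean() < D:
            lo = theta
        else:
            hi = theta
    return np.maximum(0.0, 0.5 * np.log(lam / hi)).mean(), hi

D = 0.5

def finite_n_rdf(n):
    """R_n(D) and theta_n from Eq. (3), using E(x_{n:1} x_{n:1}^T) = T_n(X)."""
    T = 1.25 * np.eye(n) + 0.5 * (np.eye(n, k=1) + np.eye(n, k=-1))   # T_n(X), N = 1
    return waterfill(np.linalg.eigvalsh(T), D)

grid = 2 * np.pi * np.arange(8192) / 8192
R_int, theta_int = waterfill(1.25 + np.cos(grid), D)    # Eq. (6) on a fine grid
for n in (10, 100, 400):
    R_n, theta_n = finite_n_rdf(n)
    print(n, R_n, theta_n, "| integral:", R_int, theta_int)
```

As n grows, both $\theta_n$ and $R_n(D)$ approach the values given by the integral formula. This convergence is what the proof in Appendix A establishes in general.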

4. Applications

4.1. Integral Formula for the RDF of Gaussian MA Vector Processes

Theorem 2.

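Let $\{x_n\}$ be as in Definition 3. Assume that $\{G_k\}_{k\in\mathbb{Z}}$, with $G_0=I_N$ and $G_k=0_{N\times N}$ for all $k\in\mathbb{N}$, is the sequence of Fourier coefficients of a function $G:\mathbb{R}\to\mathbb{C}^{N\times N}$, which is continuous and $2\pi$-periodic. Then:

1. $\{x_n\}$ is AWSS with APSD $X(\omega)=G(\omega)\Lambda G(\omega)^*$ for all $\omega\in\mathbb{R}$.

2. If $\{x_n\}$ is Gaussian, $\det(G(\omega))\neq 0$ for all $\omega\in\mathbb{R}$, and $D\in\left(0,\frac{\operatorname{tr}(X_0)}{N}\right)$, then:
$$R(D)=\frac{1}{4\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\max\left(0,\ln\frac{\lambda_k\left(G(\omega)\Lambda G(\omega)^*\right)}{\theta}\right)d\omega,$$
where θ is the real number satisfying:
$$D=\frac{1}{2\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\min\left(\theta,\lambda_k\left(G(\omega)\Lambda G(\omega)^*\right)\right)d\omega.$$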

Proof.

See Appendix C. ☐

4.2. Integral Formula for the RDF of Gaussian ARMA AWSS Vector Processes

Theorem 3.

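Let $\{x_n\}$ be as in Definition 4. Assume that $\{G_k\}_{k\in\mathbb{Z}}$, with $G_0=I_N$ and $G_k=0_{N\times N}$ for all $k\in\mathbb{N}$, is the sequence of Fourier coefficients of a function $G:\mathbb{R}\to\mathbb{C}^{N\times N}$, which is continuous and $2\pi$-periodic. Suppose that $\{F_k\}_{k\in\mathbb{Z}}$, with $F_0=I_N$ and $F_k=0_{N\times N}$ for all $k\in\mathbb{N}$, is the sequence of Fourier coefficients of a function $F:\mathbb{R}\to\mathbb{C}^{N\times N}$, which is continuous and $2\pi$-periodic. Assume that $\{\|T_n(F)^{-1}\|_2\}$ is bounded and $\det(F(\omega))\neq 0$ for all $\omega\in\mathbb{R}$. Then:

1. $\{x_n\}$ is AWSS with APSD $X(\omega)=F(\omega)^{-1}G(\omega)\Lambda G(\omega)^*\left(F(\omega)^{-1}\right)^*$ for all $\omega\in\mathbb{R}$.

2. If $\{x_n\}$ is Gaussian, $\det(G(\omega))\neq 0$ for all $\omega\in\mathbb{R}$, and $D\in\left(0,\frac{\operatorname{tr}(X_0)}{N}\right)$, then:
$$R(D)=\frac{1}{4\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\max\left(0,\ln\frac{\lambda_k\left(F(\omega)^{-1}G(\omega)\Lambda G(\omega)^*\left(F(\omega)^{-1}\right)^*\right)}{\theta}\right)d\omega,$$
where θ is the real number satisfying:
$$D=\frac{1}{2\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\min\left(\theta,\lambda_k\left(F(\omega)^{-1}G(\omega)\Lambda G(\omega)^*\left(F(\omega)^{-1}\right)^*\right)\right)d\omega.$$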

Proof.

See Appendix D. ☐
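A similar check works for part 1 of Theorem 3. The sketch uses a hypothetical ARMA process with $N=2$ in which only $F_{-1}$ is nonzero and $G(\omega)=I_N$, so that $F(\omega)=I_N+F_{-1}e^{-\omega\mathrm{i}}$. The chosen $F_{-1}$ has $\|F_{-1}\|_2<1$, which keeps $\{\|T_n(F)^{-1}\|_2\}$ bounded (Neumann series), and $\det(F(\omega))\neq 0$. The parameter values and helper names are illustrative assumptions.

```python
import numpy as np

# Hypothetical ARMA example with N = 2: only F_{-1} is nonzero and G(w) = I_N.
N, M = 2, 2048
F1 = np.array([[-0.5, 0.1], [0.0, -0.3]])   # F_{-1}; F_0 = I_N
Lam = np.array([[1.0, 0.3], [0.3, 2.0]])

def X(w):
    Finv = np.linalg.inv(np.eye(N) + F1 * np.exp(-1j * w))   # F(w)^{-1}
    return Finv @ Lam @ Finv.conj().T                          # APSD of Theorem 3, part 1

grid = 2 * np.pi * np.arange(M) / M
coeffs = np.fft.fft(np.array([X(w) for w in grid]), axis=0) / M   # coeffs[k % M] ~ X_k

def T_X(n):
    """T_n(X) = (X_{j-k})_{j,k} from the sampled Fourier coefficients."""
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % M
    return coeffs[idx].transpose(0, 2, 1, 3).reshape(n * N, n * N)

def correlation(n):
    """E(x_{n:1} x_{n:1}^T) with x_{n:1} = T_n(F)^{-1} w_{n:1}."""
    TF = np.eye(n * N) + np.kron(np.eye(n, k=1), F1)
    TF_inv = np.linalg.inv(TF)
    return TF_inv @ np.kron(np.eye(n), Lam) @ TF_inv.T

dist = {}
for n in (20, 80, 200):
    dist[n] = np.linalg.norm(correlation(n) - T_X(n), "fro") / np.sqrt(n)
    print(n, dist[n])
```

The mismatch between the two matrices comes from the non-stationary start $x_1=w_1$ and decays geometrically away from that corner. As a result, $\|E(x_{n:1}x_{n:1}^\top)-T_n(X)\|_F$ converges to a constant and the normalized distance behaves like $1/\sqrt{n}$.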

5. Numerical Example

We finish the paper with a numerical example where the RDF of a Gaussian AWSS vector process is computed. Specifically, we compute the RDF of the MA vector process considered in ([5], Example 2.1), by assuming that it is Gaussian.

Let be as in Definition 3 with ,

for all , and:

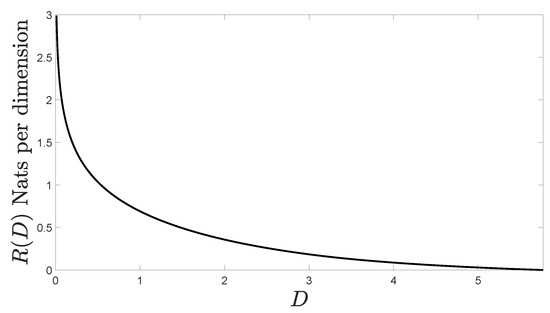

Assume that $\{x_n\}$ is Gaussian. Figure 1 shows the RDF $R(D)$ of $\{x_n\}$, which we have computed using Theorem 2.

Figure 1.

Rate Distortion Function (RDF) of the Gaussian MA vector process considered.
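The sketch below shows how a curve of the kind displayed in Figure 1 can be traced with Theorem 2. It samples $G(\omega)\Lambda G(\omega)^*$ on a grid, solves for θ at each distortion level, and evaluates the integral formula. The parameter values are hypothetical placeholders, not those of ([5], Example 2.1), so the resulting curve is not Figure 1.

```python
import numpy as np

# Hypothetical bivariate MA(1) parameters (NOT the values of ([5], Example 2.1)).
G1 = np.array([[0.5, 0.2], [0.0, -0.4]])      # G_{-1}
Lam = np.array([[1.0, 0.3], [0.3, 2.0]])
M = 2048
w = 2 * np.pi * np.arange(M) / M
Gw = np.eye(2) + G1 * np.exp(-1j * w)[:, None, None]            # G(w) on the grid, shape (M, 2, 2)
Xw = Gw @ Lam @ np.conj(np.transpose(Gw, (0, 2, 1)))            # G(w) Lambda G(w)^*
lam = np.linalg.eigvalsh(Xw)                                    # eigenvalues, shape (M, 2)

def rdf(D, iters=200):
    """Theorem 2, part 2, with the integrals replaced by grid averages."""
    lo, hi = 0.0, lam.max()
    for _ in range(iters):
        theta = 0.5 * (lo + hi)
        lo, hi = (theta, hi) if np.minimum(theta, lam).mean() < D else (lo, theta)
    return np.maximum(0.0, 0.5 * np.log(lam / hi)).mean()

D_max = lam.mean()                        # approximates tr(X_0)/N
Ds = np.linspace(0.05, 0.99, 20) * D_max
Rs = np.array([rdf(D) for D in Ds])       # plotting Rs against Ds gives an R(D) curve
```

As expected from the formula, the computed values decrease strictly as D grows towards $\operatorname{tr}(X_0)/N$.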

Author Contributions

Authors are listed in order of their degree of involvement in the work, with the most active contributors listed first. J.G.-G. conceived the research question. All authors proved the main results. J.G.-G. and X.I. performed the simulations. All authors wrote the paper. All authors have read and approved the final manuscript.

Funding

This work was supported in part by the Spanish Ministry of Economy and Competitiveness through the CARMEN project (TEC2016-75067-C4-3-R).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Proof.

We divide the proof into six steps.

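Step 1: We show that there exists $n_0\in\mathbb{N}$ such that $R_n(D)$ in Equation (3) exists for all $n\ge n_0$, or equivalently, such that $D\le\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}$ for all $n\ge n_0$.

Since $\{E(x_{n:1}x_{n:1}^\top)\}\sim\{T_n(X)\}$, applying ([11], Theorem 6.6) yields:

$$\lim_{n\to\infty}\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}=\lim_{n\to\infty}\frac{1}{nN}\sum_{k=1}^{nN}\lambda_k\left(E(x_{n:1}x_{n:1}^\top)\right)=\frac{1}{2\pi N}\int_0^{2\pi}\sum_{k=1}^{N}\lambda_k(X(\omega))\,d\omega=\frac{\operatorname{tr}(X_0)}{N}.\tag{A1}$$

Consequently, as $D<\frac{\operatorname{tr}(X_0)}{N}$, there exists $n_0\in\mathbb{N}$ such that:

$$\left|\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}-\frac{\operatorname{tr}(X_0)}{N}\right|<\frac{\operatorname{tr}(X_0)}{N}-D\quad\forall n\ge n_0.$$

Therefore, since:

$$\frac{\operatorname{tr}(X_0)}{N}-\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}\le\left|\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}-\frac{\operatorname{tr}(X_0)}{N}\right|,$$

we obtain:

$$D<\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}\quad\forall n\ge n_0.\tag{A2}$$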

Step 2: We prove that the sequence of real numbers is bounded.

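From Equation (A2), we have $0<\theta_n<\lambda_1\left(E(x_{n:1}x_{n:1}^\top)\right)$ for all $n\ge n_0$. Indeed, if $\theta_n\le 0$, the equation defining $\theta_n$ would give $D\le 0$, and if $\theta_n\ge\lambda_1(E(x_{n:1}x_{n:1}^\top))$, it would give $D=\frac{\operatorname{tr}(E(x_{n:1}x_{n:1}^\top))}{nN}$. As $\{E(x_{n:1}x_{n:1}^\top)\}\sim\{T_n(X)\}$, there exists $M\in[0,\infty)$ such that $\|E(x_{n:1}x_{n:1}^\top)\|_2\le M$ for all $n\in\mathbb{N}$. Thus,

$$0<\theta_n<\lambda_1\left(E(x_{n:1}x_{n:1}^\top)\right)=\left\|E(x_{n:1}x_{n:1}^\top)\right\|_2\le M\quad\forall n\ge n_0.$$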

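Step 3: We show that if $\{\theta_{n_k}\}$ is a convergent subsequence of $\{\theta_n\}$, then $\lim_{k\to\infty}\theta_{n_k}=\theta$.

We denote by $\hat{\theta}$ the limit of $\{\theta_{n_k}\}$. We need to prove that $\hat{\theta}=\theta$.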

Since for all , we have . Let be the sequence of real numbers such that and for all . Obviously, and for all . As and for all , applying ([12], Lemma 1) yields . From ([11], Lemma 4.2) we obtain . Hence, applying ([11], Theorem 6.6) yields:

Thus, $\hat{\theta}$ is a real number satisfying Equation (5). Since $D\in\left(0,\frac{\operatorname{tr}(X_0)}{N}\right)$, there exists a unique real number satisfying Equation (5), and consequently, $\hat{\theta}=\theta$.

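Step 4: We prove that $\lim_{n\to\infty}\theta_n=\theta$. From Steps 2 and 3, we have $\liminf_{n\to\infty}\theta_n=\limsup_{n\to\infty}\theta_n=\theta$, because the lower and upper limits of a bounded sequence are limits of convergent subsequences. Consequently, the sequence of real numbers $\{\theta_n\}$ is convergent, and its limit is θ (see, e.g., ([15], p. 57)).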

Step 5: We show that Equation (4) holds.

Let be the sequence of positive numbers defined in Step 3 for the case in which , that is, if and if . From ([11], Theorem 6.6), we obtain:

Step 6: We prove that Equation (4) is the operational RDF of . Following the same arguments that Gray used in [16] for Gaussian AR AWSS one-dimensional vector processes, to prove the negative (converse) coding theorem and the positive (achievability) coding theorem, we only need to show that the sequence defined in ([17], p. 490), is bounded. Hence, Equation (A1) finishes the proof. ☐

Appendix B. Proof of Corollary 1

Proof.

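Since $X(\omega)$ is positive definite for all $\omega\in\mathbb{R}$, from ([11], Theorem 4.4) and ([18], Corollary VI.1.6), we have:

$$0<\min_{\omega\in[0,2\pi]}\lambda_N(X(\omega))\le\lambda_{nN}(T_n(X))=\lambda_{nN}\left(E(x_{n:1}x_{n:1}^\top)\right)$$

for all $n\in\mathbb{N}$, and consequently, $E(x_{n:1}x_{n:1}^\top)$ is positive definite for all $n\in\mathbb{N}$. Combining ([11], Lemma 3.3) and ([11], Theorem 4.3) yields $\{E(x_{n:1}x_{n:1}^\top)\}=\{T_n(X)\}\sim\{T_n(X)\}$. The proof finishes by applying Theorem 1. ☐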

Appendix C. Proof of Theorem 2

Proof.

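(1) From Equation (1), we have:

$$\begin{pmatrix}x_n\\ x_{n-1}\\ \vdots\\ x_1\end{pmatrix}=\begin{pmatrix}I_N & G_{-1} & \cdots & G_{-(n-1)}\\ 0_{N\times N} & I_N & \cdots & G_{-(n-2)}\\ \vdots & \vdots & \ddots & \vdots\\ 0_{N\times N} & 0_{N\times N} & \cdots & I_N\end{pmatrix}\begin{pmatrix}w_n\\ w_{n-1}\\ \vdots\\ w_1\end{pmatrix},$$

or more compactly,

$$x_{n:1}=T_n(G)\,w_{n:1}$$

for all $n\in\mathbb{N}$. Consequently,

$$E(x_{n:1}x_{n:1}^\top)=T_n(G)\,E(w_{n:1}w_{n:1}^\top)\,T_n(G)^\top,$$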

and applying ([11], Lemma 4.2), yields:

where , . Combining ([11], Lemma 3.3) and ([11], Theorem 4.3), we obtain . Moreover, applying ([10], Theorem 3) yields . Hence, from ([10], Lemma 2) and ([10], Theorem 3), we have:

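Thus, as the relation ∼ is transitive (see ([11], Lemma 3.1)), $\{x_n\}$ is AWSS with APSD X.

(2) First, we prove that $X(\omega)$ is positive definite for all $\omega\in\mathbb{R}$. Fix $\omega\in\mathbb{R}$, and consider $v\in\mathbb{C}^{N\times 1}$. Since Λ is positive definite, we have:

$$v^*X(\omega)v=\left(G(\omega)^*v\right)^*\Lambda\left(G(\omega)^*v\right)>0$$

whenever $G(\omega)^*v\neq 0_{N\times 1}$. As $\det(G(\omega))\neq 0$, $G(\omega)^*v=0_{N\times 1}$ if and only if $v=0_{N\times 1}$, and consequently, $X(\omega)$ is positive definite.

Secondly, we prove that $E(x_{n:1}x_{n:1}^\top)$ is positive definite for all $n\in\mathbb{N}$. To do that, we only need to show that $\det(E(x_{n:1}x_{n:1}^\top))\neq 0$ for all $n\in\mathbb{N}$: since $E(x_{n:1}x_{n:1}^\top)$ is a correlation matrix, it is positive semidefinite. We have $x_{n:1}=T_n(G)\,w_{n:1}$, where $T_n(G)$ is block upper triangular with all its diagonal blocks equal to $I_N$, and $E(w_{n:1}w_{n:1}^\top)$ is block diagonal with all its diagonal blocks equal to Λ. Hence:

$$\det\left(E(x_{n:1}x_{n:1}^\top)\right)=\det(T_n(G))\,\det\left(E(w_{n:1}w_{n:1}^\top)\right)\,\det\left(T_n(G)^\top\right)=(\det\Lambda)^n\neq 0$$

for all $n\in\mathbb{N}$.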

The result now follows from Theorem 1. ☐

Appendix D. Proof of Theorem 3

Proof.

(1) From Equation (2), we have:

or more compactly,

for all . Consequently,

and applying Equation (A3) yields:

Since for all , we obtain:

From Equation (A4) and the fact that the relation ∼ is transitive (see ([11], Lemma 3.1)), we have . Combining ([11], Lemma 3.3) and ([11], Theorem 4.3) yields . Therefore, applying ([10], Lemma 2) and ([10], Theorem 3), we obtain:

Using ([11], Lemma 3.1) yields , and applying ([10], Lemma 2), ([11], Lemma 4.2), and ([10], Theorem 3), we have:

If and , then,

whenever . As , if and only if , hence is positive definite for all , and applying ([11], Theorem 4.4) and ([18], Corollary VI.1.6), yields:

Thus,

for all . Observe that is also bounded, because:

Moreover, from ([10], Theorem 3), we obtain . Consequently, applying Lemma A1 (see Appendix E) and ([11], Theorem 6.4), yields:

Therefore, from Equation (A6), ([10], Lemma 2), and ([10], Theorem 3), we have:

Hence, applying Equation (A7), ([10], Lemma 2), and ([10], Theorem 3), we deduce that:

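(2) First, we prove that $X(\omega)$ is positive definite for all $\omega\in\mathbb{R}$. Fix $\omega\in\mathbb{R}$, and consider $v\in\mathbb{C}^{N\times 1}$. Since $G(\omega)\Lambda G(\omega)^*$ is positive definite (see the proof of Theorem 2), we have:

$$v^*X(\omega)v=\left(\left(F(\omega)^{-1}\right)^*v\right)^*G(\omega)\Lambda G(\omega)^*\left(\left(F(\omega)^{-1}\right)^*v\right)>0$$

whenever $\left(F(\omega)^{-1}\right)^*v\neq 0_{N\times 1}$. As $\left(F(\omega)^{-1}\right)^*v=0_{N\times 1}$ if and only if $v=0_{N\times 1}$, $X(\omega)$ is positive definite.

Secondly, we prove that $E(x_{n:1}x_{n:1}^\top)$ is positive definite for all $n\in\mathbb{N}$, or equivalently, that $\det(E(x_{n:1}x_{n:1}^\top))\neq 0$ for all $n\in\mathbb{N}$. Applying Equation (A5) yields: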

The result now follows from Theorem 1. ☐

Appendix E. A Property of Asymptotically Equivalent Sequences of Invertible Matrices

Lemma A1.

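Let $A_n$ and $B_n$ be $nN\times nN$ invertible matrices for all $n\in\mathbb{N}$. Suppose that $\{A_n\}\sim\{B_n\}$ and that $\{\|A_n^{-1}\|_2\}$ and $\{\|B_n^{-1}\|_2\}$ are bounded. Then, $\{A_n^{-1}\}\sim\{B_n^{-1}\}$.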

Proof.

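If $M\in[0,\infty)$ is such that $\|A_n^{-1}\|_2,\ \|B_n^{-1}\|_2\le M$ for all $n\in\mathbb{N}$, then:

$$0\le\frac{\|A_n^{-1}-B_n^{-1}\|_F}{\sqrt{n}}=\frac{\|A_n^{-1}(B_n-A_n)B_n^{-1}\|_F}{\sqrt{n}}\le\|A_n^{-1}\|_2\,\|B_n^{-1}\|_2\,\frac{\|A_n-B_n\|_F}{\sqrt{n}}\le M^2\,\frac{\|A_n-B_n\|_F}{\sqrt{n}}\xrightarrow[n\to\infty]{}0.$$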

☐

This result was presented in ([6], Theorem 1) for the case $N=1$.
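The chain of inequalities in the proof of Lemma A1 can be checked numerically. The sketch below uses a hypothetical scalar ($N=1$) pair: $A_n=T_n(X)$ with $X(\omega)=1.25+\cos\omega$, and $B_n$ equal to $A_n$ except for its $(1,1)$ entry. Both matrices have all eigenvalues above $0.25$, so $\{\|A_n^{-1}\|_2\}$ and $\{\|B_n^{-1}\|_2\}$ are bounded by 4, while $\|A_n-B_n\|_F=0.5$ for every n. The example and function name are illustrative assumptions.

```python
import numpy as np

def inverse_distance(n):
    """Return (||A^{-1} - B^{-1}||_F / sqrt(n), bound / sqrt(n)) for the example pair."""
    A = 1.25 * np.eye(n) + 0.5 * (np.eye(n, k=1) + np.eye(n, k=-1))   # T_n(1.25 + cos w)
    B = A.copy()
    B[0, 0] += 0.5                                  # ||A - B||_F = 0.5 for every n
    A_inv, B_inv = np.linalg.inv(A), np.linalg.inv(B)
    dist = np.linalg.norm(A_inv - B_inv, "fro")
    # ||A^{-1}(B - A)B^{-1}||_F <= ||A^{-1}||_2 ||B - A||_F ||B^{-1}||_2
    bound = np.linalg.norm(A_inv, 2) * np.linalg.norm(A - B, "fro") * np.linalg.norm(B_inv, 2)
    return dist / np.sqrt(n), bound / np.sqrt(n)

for n in (10, 100, 400):
    print(n, inverse_distance(n))
```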

References

- Hammerich, E. Waterfilling theorems for linear time-varying channels and related nonstationary sources. IEEE Trans. Inf. Theory 2016, 62, 6904–6916.

- Kipnis, A.; Goldsmith, A.J.; Eldar, Y.C. The distortion rate function of cyclostationary Gaussian processes. IEEE Trans. Inf. Theory 2018, 64, 3810–3824.

- Toms, W.; Berger, T. Information rates of stochastically driven dynamic systems. IEEE Trans. Inf. Theory 1971, 17, 113–114.

- Kolmogorov, A.N. On the Shannon theory of information transmission in the case of continuous signals. IRE Trans. Inf. Theory 1956, 2, 102–108.

- Reinsel, G.C. Elements of Multivariate Time Series Analysis; Springer: Berlin, Germany, 1993.

- Gray, R.M. Toeplitz and circulant matrices: A review. Found. Trends Commun. Inf. Theory 2006, 2, 155–239.

- Ephraim, Y.; Lev-Ari, H.; Gray, R.M. Asymptotic minimum discrimination information measure for asymptotically weakly stationary processes. IEEE Trans. Inf. Theory 1988, 34, 1033–1040.

- Gray, R.M. On the asymptotic eigenvalue distribution of Toeplitz matrices. IEEE Trans. Inf. Theory 1972, 18, 725–730.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Asymptotically equivalent sequences of matrices and Hermitian block Toeplitz matrices with continuous symbols: Applications to MIMO systems. IEEE Trans. Inf. Theory 2008, 54, 5671–5680.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Asymptotically equivalent sequences of matrices and multivariate ARMA processes. IEEE Trans. Inf. Theory 2011, 57, 5444–5454.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M. Block Toeplitz matrices: Asymptotic results and applications. Found. Trends Commun. Inf. Theory 2011, 8, 179–257.

- Gutiérrez-Gutiérrez, J.; Crespo, P.M.; Zárraga-Rodríguez, M.; Hogstad, B.O. Asymptotically equivalent sequences of matrices and capacity of a discrete-time Gaussian MIMO channel with memory. IEEE Trans. Inf. Theory 2017, 63, 6000–6003.

- Kafedziski, V. Rate distortion of stationary and nonstationary vector Gaussian sources. In Proceedings of the IEEE/SP 13th Workshop on Statistical Signal Processing, Bordeaux, France, 17–20 July 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 1054–1059.

- Gazzah, H.; Regalia, P.A.; Delmas, J.P. Asymptotic eigenvalue distribution of block Toeplitz matrices and application to blind SIMO channel identification. IEEE Trans. Inf. Theory 2001, 47, 1243–1251.

- Rudin, W. Principles of Mathematical Analysis; McGraw-Hill: New York, NY, USA, 1976.

- Gray, R.M. Information rates of autoregressive processes. IEEE Trans. Inf. Theory 1970, 16, 412–421.

- Gallager, R.G. Information Theory and Reliable Communication; John Wiley & Sons: Hoboken, NJ, USA, 1968.

- Bhatia, R. Matrix Analysis; Springer: Berlin, Germany, 1997.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).