Abstract

Relative error estimation has recently been used in regression analysis. A crucial issue of the existing relative error estimation procedures is that they are sensitive to outliers. To address this issue, we employ the γ-likelihood function, which is constructed through the γ-cross entropy while keeping the original statistical model in use. The estimating equation has a redescending property, a desirable property in robust statistics, for a broad class of noise distributions. To find a minimizer of the negative γ-likelihood function, a majorize-minimization (MM) algorithm is constructed. The proposed algorithm is guaranteed to decrease the negative γ-likelihood function at each iteration. We also derive the asymptotic normality of the corresponding estimator together with a simple consistent estimator of the asymptotic covariance matrix, so that we can readily construct approximate confidence sets. A Monte Carlo simulation is conducted to investigate the effectiveness of the proposed procedure. A real data analysis illustrates the usefulness of our proposed procedure.

1. Introduction

In regression analysis, many analysts use (penalized) least squares estimation, which aims at minimizing the mean squared prediction error []. However, the relative (percentage) error is often more useful or more appropriate than the mean squared error. For example, in econometrics, prediction performance for stock prices measured in different units should be compared by relative error; we refer to [,] among others. Additionally, the prediction error of photovoltaic power production or electricity consumption is evaluated not only by the mean squared error but also by the relative error (see, e.g., []). We refer to [] regarding the usefulness and importance of the relative error.

In relative error estimation, we minimize a loss function based on the relative error. An advantage of using such a loss function is that it is scale-free, or unit-free. Recently, several researchers have proposed various loss functions based on the relative error [,,,,,]. Some of these procedures have been extended to the nonparametric model [] and the random effect model []. Relative error estimation via regularization, including the least absolute shrinkage and selection operator (lasso; []) and the group lasso [], has also been proposed by several authors [,,] to allow for the analysis of high-dimensional data.

In practice, a response variable can turn out to be extremely large or close to zero. For example, the electricity consumption of a company may be low during holidays and high on exceptionally hot days. Such responses may often be regarded as outliers, to which the relative error estimator is sensitive because the loss function diverges as the response tends to infinity or to zero. Therefore, a relative error estimation procedure that is robust against outliers is needed. Recently, Chen et al. [] discussed the robustness of various relative error estimation procedures by investigating the corresponding distributions, and concluded that the distribution associated with the least product relative error estimation (LPRE) proposed by [] has heavier tails than the others, implying that the LPRE might be more robust than the others in practical applications. However, our numerical experiments show that the LPRE is not as robust as expected, so the robustification of the LPRE remains to be investigated from both theoretical and practical viewpoints.

To achieve a relative error estimation that is robust against outliers, this paper employs the γ-likelihood function for regression analysis by Kawashima and Fujisawa [], which is constructed by the γ-cross entropy []. The estimating equation is shown to have a redescending property, a desirable property in the robust statistics literature []. To find a minimizer of the negative γ-likelihood function, we construct a majorize-minimization (MM) algorithm. The loss function of our algorithm at each iteration is shown to be convex, although the original negative γ-likelihood function is nonconvex. Our algorithm is guaranteed to decrease the objective function at each iteration. Moreover, we derive the asymptotic normality of the corresponding estimator together with a simple consistent estimator of the asymptotic covariance matrix, which enables us to straightforwardly construct approximate confidence sets. A Monte Carlo simulation is conducted to investigate the performance of our proposed procedure. An analysis of electricity consumption data is presented to illustrate the usefulness of our procedure. The supplementary material includes our R package rree (robust relative error estimation), which implements our algorithm, along with a sample program of the rree function.

The remainder of this paper is organized as follows. Section 2 reviews several relative error estimation procedures. In Section 3, we propose a relative error estimation procedure that is robust against outliers via the γ-likelihood function. Section 4 presents theoretical properties: the redescending property of our method and the asymptotic distribution of the estimator, the proof of the latter being deferred to Appendix A. In Section 5, the MM algorithm is constructed to find the minimizer of the negative γ-likelihood function. Section 6 investigates the effectiveness of our proposed procedure via Monte Carlo simulations. Section 7 presents the analysis of electricity consumption data. Finally, concluding remarks are given in Section 8.

2. Relative Error Estimation

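Suppose that $x_i = (x_{i1}, \ldots, x_{ip})^\top$ $(i = 1, \ldots, n)$ are predictors and $y = (y_1, \ldots, y_n)^\top$ is a vector of positive responses. Consider the multiplicative regression model

$$y_i = \exp(x_i^\top \beta)\,\varepsilon_i, \qquad i = 1, \ldots, n, \tag{1}$$

where $\beta = (\beta_1, \ldots, \beta_p)^\top$ is a p-dimensional coefficient vector, and $\varepsilon_i$ are positive random variables. Predictors may be random and serially dependent, while we often set $x_{i1} = 1$, that is, incorporate the intercept in the exponent. The parameter space $\mathcal{B} \subset \mathbb{R}^p$ of $\beta$ is a bounded convex domain. We implicitly assume that the model is correctly specified, so that there exists a true parameter $\beta_0 \in \mathcal{B}$. We want to estimate $\beta_0$ from a sample $\{(x_i, y_i)\}$, $i = 1, \ldots, n$.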

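We first remark that, when the intercept is included in the exponent ($x_{i1} = 1$), model (1) is scale-free with respect to the variables $\varepsilon_i$, which is essentially different from the linear regression model $y_i = x_i^\top\beta + \varepsilon_i$. Specifically, multiplying $\varepsilon_i$ by a positive constant $\sigma$ results in a translation of the intercept in the exponent:

$$y_i = \exp(x_i^\top\beta)\,\sigma\varepsilon_i = \exp\{(\beta_1 + \log\sigma) + x_{i2}\beta_2 + \cdots + x_{ip}\beta_p\}\,\varepsilon_i,$$

so that the change from $\varepsilon_i$ to $\sigma\varepsilon_i$ is equivalent to that from $\beta_1$ to $\beta_1 + \log\sigma$. See Remark 1 on the distribution of $\varepsilon_i$.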

To provide a simple expression of the loss functions based on the relative error, we write

Chen et al. [,] pointed out that the loss criterion for relative error may depend on and / or . These authors also proposed general relative error (GRE) criteria, defined as

where . Most of the loss functions based on the relative error are included in the GRE. Park and Stefanski [] considered a loss function . It may highly depend on a small because it includes terms, and then the estimator can be numerically unstable. Consistency and asymptotic normality may not be established under general regularity conditions []. The loss functions based on [] and (least absolute relative error estimation, []) can have desirable asymptotic properties [,]. However, the minimization of the loss function can be challenging, in particular for high-dimensional data, when the function is nonsmooth or nonconvex.

In practice, the following two criteria would be useful:

- Least product relative error estimation (LPRE) Chen et al. [] proposed the LPRE given by . The LPRE tries to minimize the product , not necessarily both terms at once.

- Least squared-sum relative error estimation (LSRE) Chen et al. [] considered the LSRE given by . The LSRE aims to minimize both and through the sum of squares .

The loss functions of LPRE and LSRE are smooth and convex, and also possess desirable asymptotic properties []. The above-described GRE criteria and their properties are summarized in Table 1. In particular, the “convexity” in the case of holds when , , and is positive definite, since the Hessian matrix of the corresponding is a.s.
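To make the two criteria concrete, the following Python sketch evaluates the LPRE and LSRE losses under model (1). It assumes the usual GRE convention that the two relative errors are $|y_i - t_i|/y_i$ and $|y_i - t_i|/t_i$ with $t_i = \exp(x_i^\top\beta)$; the function names are hypothetical and are not taken from the rree package.

```python
import numpy as np


def relative_errors(beta, X, y):
    """Return the two relative errors |y - t| / y and |y - t| / t, with t = exp(X @ beta)."""
    t = np.exp(X @ beta)
    a = np.abs(y - t) / y  # error relative to the observed response
    b = np.abs(y - t) / t  # error relative to the fitted value
    return a, b


def lpre_loss(beta, X, y):
    """Least product relative error: sum of a_i * b_i."""
    a, b = relative_errors(beta, X, y)
    return np.sum(a * b)


def lsre_loss(beta, X, y):
    """Least squared-sum relative error: sum of a_i^2 + b_i^2."""
    a, b = relative_errors(beta, X, y)
    return np.sum(a**2 + b**2)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = np.column_stack([np.ones(50), rng.normal(size=50)])
    y = np.exp(X @ np.array([0.5, 1.0])) * rng.lognormal(sigma=0.3, size=50)
    beta = np.array([0.4, 0.9])
    t = np.exp(X @ beta)
    # The LPRE summand simplifies to y/t + t/y - 2, so both printed values agree.
    print(lpre_loss(beta, X, y), np.sum(y / t + t / y - 2.0))
    print(lsre_loss(beta, X, y))
```

Because each LPRE summand equals $y_i/t_i + t_i/y_i - 2$, a sum of two exponentials in $x_i^\top\beta$ minus a constant, its smoothness and convexity in $\beta$ can be read off directly.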

Table 1.

Several examples of general relative error (GRE) criteria and their properties. “Likelihood” in the second column means the existence of a likelihood function that corresponds to the loss function. The properties of “Convexity” and “Smoothness” in the last two columns respectively indicate those with respect to of the corresponding loss function.

Although not essential, we assume that the variables in Equation (1) are i.i.d. with common density function h. As in Chen et al. [], we consider the following class of h associated with g:

where

and is a normalizing constant () and denotes the indicator function of set . Furthermore, we assume the symmetry property , , from which it follows that . The latter property is necessary for a score function to be associated with the gradient of a GRE loss function, hence being a martingale with respect to a suitable filtration, which often entails estimation efficiency. Indeed, the asymmetry of (i.e., ) may produce a substantial bias in the estimation []. The entire set of our regularity conditions will be given in Section 4.1. The conditions therein concerning g are easily verified for both LPRE and LSRE.

In this paper, we implicitly suppose that denote “time” indices. As usual, in order to deal with cases of non-random and random predictors in a unified manner, we employ the partial-likelihood framework. Specifically, in the expression of the joint density (with the obvious notation for the densities)

we ignore the first product and only look at the second one , which is defined as the partial likelihood. We further assume that the ith-stage noise is independent of , so that, in view of Equation (1), we have

The density function of response y given is

From Equation (3), we see that the maximum likelihood estimator (MLE) based on the error distribution in Equation (5) is obtained by the minimization of Equation (2). For example, the density functions of LPRE and LSRE are

where denotes the modified Bessel function of the third kind with index :

and is a constant term. Constant terms are numerically computed as and . Density (6) is a special case of the generalized inverse Gaussian distribution (see, e.g., []).
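The LPRE noise density can be checked numerically. The sketch below assumes that Equation (6) takes the form $h(\varepsilon) = \varepsilon^{-1}\exp(-\varepsilon - \varepsilon^{-1})/\{2K_0(2)\}$ on $\varepsilon > 0$, which is the generalized inverse Gaussian density with $p = 0$ and $b = 2$ in SciPy's `geninvgauss` parameterization. It checks normalization, the distributional symmetry between $\varepsilon$ and $1/\varepsilon$, and that the negative log-likelihood of $y_i$ differs from the LPRE summand only by terms free of $\beta$. Function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import kv
from scipy.stats import geninvgauss

NORM = 2.0 * kv(0, 2.0)  # assumed normalizing constant 2 K_0(2)


def log_h_lpre(eps):
    """Log of the assumed LPRE noise density, evaluated in log space to avoid underflow."""
    eps = np.asarray(eps, dtype=float)
    return -np.log(eps) - eps - 1.0 / eps - np.log(NORM)


def h_lpre(eps):
    """Assumed LPRE noise density eps^{-1} exp(-eps - 1/eps) / (2 K_0(2)) for eps > 0."""
    return np.exp(log_h_lpre(eps))


def neg_loglik_terms(beta, X, y):
    """-log f(y_i | x_i) with f(y | x) = h(y / t) / t and t = exp(x'beta)."""
    eta = X @ beta
    return -(log_h_lpre(y * np.exp(-eta)) - eta)


if __name__ == "__main__":
    total, _ = quad(h_lpre, 0.0, np.inf)
    print("integral of h:", total)
    grid = np.linspace(0.2, 5.0, 5)
    print(np.allclose(h_lpre(grid), geninvgauss.pdf(grid, 0.0, 2.0)))
```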

Remark 1.

We assume that the noise density h is fully specified in the sense that, given g, the density h does not involve any unknown quantity. However, this assumption is not essential. For example, for the LPRE defined by Equation (6), we could naturally incorporate one more parameter σ into h, the resulting form of being

Then, we can verify that the distributional equivalence holds whatever the value of σ is. In particular, the estimation of the parameter σ makes statistical sense and, indeed, it is possible to deduce the asymptotic normality of the joint maximum-(partial-)likelihood estimator of $(\beta, \sigma)$. In this paper, we do not pay attention to such a possible additional parameter, but instead regard it (whenever it exists) as a nuisance parameter, as with the noise variance in the least-squares estimation of a linear regression model.

3. Robust Estimation via -Likelihood

In practice, outliers are often observed. For example, electricity consumption data can contain outliers on extremely hot days. The estimation methods via GRE criteria, including LPRE and LSRE, are not robust against outliers, because the corresponding density functions are generally not heavy-tailed. Therefore, a relative error estimation method that is robust against outliers is needed. To achieve this, we consider minimizing the negative γ-(partial-)likelihood function based on the γ-cross entropy [].

We now define the negative γ-(partial-)likelihood function by

where γ is a parameter that controls the degree of robustness; the case γ = 0 corresponds to the negative log-likelihood function, and robustness is enhanced as γ increases. On the other hand, an overly large γ can decrease the efficiency of the estimator []. In practice, the value of γ may be selected by cross-validation based on the γ-cross entropy (see, e.g., [,]). We refer to Kawashima and Fujisawa [] for more recent observations comparing the γ-divergences of Fujisawa and Eguchi [] and Kawashima and Fujisawa [].
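To make the objective concrete, the sketch below evaluates a negative γ-likelihood for the LPRE model. Since the defining display is not reproduced here, the code assumes the regression form of Kawashima and Fujisawa, $-\gamma^{-1}\log\{n^{-1}\sum_i f(y_i \mid x_i)^\gamma\} + (1+\gamma)^{-1}\log\{n^{-1}\sum_i \int f(y \mid x_i)^{1+\gamma}\,dy\}$, together with an assumed LPRE density $h(\varepsilon) = \varepsilon^{-1}\exp(-\varepsilon-\varepsilon^{-1})/\{2K_0(2)\}$. For model (1), $\int f(y \mid x_i)^{1+\gamma}dy = t_i^{-\gamma}\int h(\varepsilon)^{1+\gamma}d\varepsilon$ with $t_i = \exp(x_i^\top\beta)$, so only one numerical integral is needed. The function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import kv, logsumexp

LOG_NORM = np.log(2.0 * kv(0, 2.0))  # log of the assumed normalizing constant 2 K_0(2)


def log_h(eps):
    """Log of the assumed LPRE noise density."""
    eps = np.asarray(eps, dtype=float)
    return -np.log(eps) - eps - 1.0 / eps - LOG_NORM


def neg_gamma_loglik(beta, X, y, gamma):
    """Assumed Kawashima-Fujisawa negative gamma-likelihood for model (1) with LPRE noise."""
    eta = X @ beta                 # log t_i
    eps = y * np.exp(-eta)         # eps_i = y_i / t_i
    log_f = log_h(eps) - eta       # log f(y_i | x_i) = log h(eps_i) - log t_i
    n = y.size
    # C_gamma = integral of h(e)^{1 + gamma} over (0, inf), computed numerically.
    c_gamma, _ = quad(lambda e: np.exp((1.0 + gamma) * log_h(e)), 0.0, np.inf)
    term1 = -(logsumexp(gamma * log_f) - np.log(n)) / gamma
    term2 = (logsumexp(-gamma * eta) - np.log(n) + np.log(c_gamma)) / (1.0 + gamma)
    return term1 + term2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = np.column_stack([np.ones(100), rng.normal(size=100)])
    y = np.exp(X @ np.array([0.3, 0.6])) * rng.lognormal(sigma=0.5, size=100)
    for g in (0.01, 0.1, 0.5):
        print(g, neg_gamma_loglik(np.array([0.3, 0.6]), X, y, g))
```

As γ tends to zero, the first term tends to the average negative log-likelihood and the second term vanishes, which is the sense in which γ = 0 recovers maximum likelihood.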

There are several likelihood functions that yield robust estimation. Examples include the $L_q$-likelihood [] and the likelihood based on the density power divergence [], referred to as the β-likelihood. It can be shown that the γ-likelihood, the $L_q$-likelihood, and the β-likelihood are closely related. The negative β-likelihood function and the negative $L_q$-likelihood function are, respectively, expressed as

The difference between the γ-likelihood and the β-likelihood is just the presence of the logarithm on . Furthermore, substituting into Equation (9) gives us

Therefore, minimizing the negative $L_q$-likelihood function is equivalent to minimizing the negative β-likelihood function without the second term on the right-hand side of Equation (8). Note that the γ-likelihood has the redescending property, a desirable property in the robust statistics literature, as shown in Section 4.2. Moreover, it is known that the γ-divergence is essentially the unique divergence that is robust against heavy contamination (see [] for details). On the other hand, we have not shown whether the β-likelihood and/or the $L_q$-likelihood have the redescending property.
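For reference, the relation can be written out in our own notation. This sketch assumes the standard forms of these objectives, which we expect (but cannot confirm from this text) to coincide with Equations (8) and (9) up to notation; here $f_i = f(y_i \mid x_i)$, and the density-power tuning exponent is written $\alpha$ to avoid a clash with the coefficient vector $\beta$.

$$
\begin{aligned}
\ell_{\beta\text{-lik}} &= -\frac{1}{\alpha}\,\frac{1}{n}\sum_{i=1}^{n} f_i^{\alpha}
  + \frac{1}{1+\alpha}\,\frac{1}{n}\sum_{i=1}^{n}\int f(y \mid x_i)^{1+\alpha}\,dy, \\
\ell_{L_q} &= -\frac{1}{n}\sum_{i=1}^{n}\frac{f_i^{1-q}-1}{1-q}
  \;\overset{q = 1-\alpha}{=}\; -\frac{1}{\alpha}\,\frac{1}{n}\sum_{i=1}^{n} f_i^{\alpha} + \frac{1}{\alpha}, \\
\ell_{\gamma} &= -\frac{1}{\gamma}\log\Bigl(\frac{1}{n}\sum_{i=1}^{n} f_i^{\gamma}\Bigr)
  + \frac{1}{1+\gamma}\log\Bigl(\frac{1}{n}\sum_{i=1}^{n}\int f(y \mid x_i)^{1+\gamma}\,dy\Bigr).
\end{aligned}
$$

With $q = 1-\alpha$, $\ell_{L_q}$ equals the first term of $\ell_{\beta\text{-lik}}$ plus a constant, while $\ell_\gamma$ (with $\gamma = \alpha$) applies a logarithm to each averaged term of $\ell_{\beta\text{-lik}}$. The form of $\ell_\gamma$ shown is the Kawashima and Fujisawa regression form, assumed here.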

4. Theoretical Properties

4.1. Technical Assumptions

Let $\xrightarrow{p}$ denote convergence in probability.

Assumption A1 (Stability of the predictor).

There exists a probability measure on the state space of the predictors and positive constants such that

and that

where the limit is finite for any measurable η satisfying that

Assumption A2 (Noise structure).

The a.s. positive i.i.d. random variables have a common positive density h of the form (3):

for which the following conditions hold.

- Function is three times continuously differentiable on and satisfies that

- There exist constants , and such thatfor every .

- There exist constants such that

Here and in the sequel, for a variable a, we denote by the kth-order partial differentiation with respect to a.

Assumption 1 is necessary to identify the large-sample stochastic limits of several key quantities in the proofs: without it, we would not be able to deduce an explicit asymptotic normality result. Assumption 2 holds in many cases, including the LPRE and the LSRE (i.e., and ), while excluding and . The smoothness condition on h on is not essential and could be weakened in light of M-estimation theory ([], Chapter 5). Under these assumptions, we can deduce the following statements.

- h is three times continuously differentiable on , and for each ,

- For each and (recall that the value of is given),where

The verifications are straightforward and hence omitted.

Finally, we impose the following assumption:

Assumption A3 (Identifiability).

We have if

where denotes the Lebesgue measure on .

4.2. Redescending Property

The estimating function based on the negative γ-likelihood function is given by

In our model, we regard not only excessively large values of $y_i$ but also excessively small ones as outliers: the estimating equation is said to have the redescending property if

for each . The redescending property is known to be desirable in the robust statistics literature []. Here, we show that the proposed procedure has the redescending property.

The estimating equation based on the negative γ-likelihood function is

where

We have the expression
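The redescending behaviour can also be seen numerically. Assuming the LPRE density $h(\varepsilon) = \varepsilon^{-1}\exp(-\varepsilon-\varepsilon^{-1})/\{2K_0(2)\}$ and the Kawashima and Fujisawa form of the γ-likelihood, a direct calculation (ours, not reproduced from the displays above) shows that the $y_i$-dependent part of the $i$th summand of the estimating function is proportional to $\psi_\gamma(\varepsilon_i) = h(\varepsilon_i)^{\gamma}(\varepsilon_i - \varepsilon_i^{-1})$ with $\varepsilon_i = y_i\exp(-x_i^\top\beta)$. The sketch evaluates this hypothetical helper `psi`: for $\gamma = 0$ it is the unbounded maximum likelihood score, while for $\gamma > 0$ it vanishes as $\varepsilon_i \to 0$ or $\varepsilon_i \to \infty$.

```python
import numpy as np
from scipy.special import kv

LOG_NORM = np.log(2.0 * kv(0, 2.0))  # log of the assumed normalizing constant 2 K_0(2)


def log_h(eps):
    """Log of the assumed LPRE noise density, computed in log space to avoid underflow."""
    eps = np.asarray(eps, dtype=float)
    return -np.log(eps) - eps - 1.0 / eps - LOG_NORM


def psi(eps, gamma):
    """y-dependent factor h(eps)^gamma * (eps - 1/eps) of one estimating-function summand."""
    eps = np.asarray(eps, dtype=float)
    return np.exp(gamma * log_h(eps)) * (eps - 1.0 / eps)


if __name__ == "__main__":
    grid = np.array([1e-3, 1e-1, 1.0, 10.0, 1e3])
    for g in (0.0, 0.1, 0.5):
        print(g, psi(grid, g))
```

The down-weighting factor $h(\varepsilon_i)^\gamma$ decays exponentially at both ends, which is why both near-zero and extremely large responses lose their influence.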

4.3. Asymptotic Distribution

Recall Equation (10) for the definition of and let

where , , and ; Assumptions 1 and 2 ensure that all these quantities are finite for each . Moreover,

We assume that the density h and the tuning parameter γ are given a priori; hence, we can (numerically) compute the constants , , and . In the following, we often omit “” from the notation.

Let $\xrightarrow{\mathcal{L}}$ denote convergence in distribution.

Theorem 1.

Under Assumptions 1–3, we have

The proof of Theorem 1 will be given in Appendix A. Note that, for , we have , , and , which in particular entails and . Then, both and tend to the Fisher information matrix

as , so that the asymptotic distribution becomes , the usual one of the MLE.
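Theorem 1 yields Wald-type confidence sets in the usual way. The sketch below assumes the theorem takes the form $\sqrt{n}(\hat\beta - \beta_0) \to N_p(0, \Sigma)$ and that `sigma_hat` is the consistent estimator of $\Sigma$ described in the text (its formula in Equation (16) is not reproduced here). It returns componentwise intervals $\hat\beta_j \pm z_{1-\alpha/2}\sqrt{\hat\Sigma_{jj}/n}$ and the Wald statistic defining a confidence ellipsoid; both function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def wald_intervals(beta_hat, sigma_hat, n, level=0.95):
    """Componentwise intervals beta_j +/- z * sqrt(Sigma_jj / n) from asymptotic normality."""
    beta_hat = np.asarray(beta_hat, dtype=float)
    z = norm.ppf(0.5 + level / 2.0)  # standard normal quantile, about 1.96 for level 0.95
    se = np.sqrt(np.diag(sigma_hat) / n)
    return np.column_stack([beta_hat - z * se, beta_hat + z * se])


def wald_statistic(beta_hat, beta_null, sigma_hat, n):
    """n (b - b0)' Sigma^{-1} (b - b0); approximately chi-square with p d.f. under the null."""
    diff = np.asarray(beta_hat, dtype=float) - np.asarray(beta_null, dtype=float)
    return n * diff @ np.linalg.solve(sigma_hat, diff)
```

An approximate confidence ellipsoid is then the set of $b$ with `wald_statistic(beta_hat, b, sigma_hat, n)` at most the $(1-\alpha)$ quantile of the chi-square distribution with $p$ degrees of freedom.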

We also note that, although we omit the details, we could deduce a density-power divergence (also known as the β-divergence []) counterpart to Theorem 1 in a similar manner but at a slightly lower computational cost; in that case, we consider the objective function defined by Equation (8) instead of the γ-(partial-)likelihood (7). See Basu et al. [] and Jones et al. [] for details of the density-power divergence.

5. Algorithm

Even if the GRE criterion in Equation (2) is a convex function, the negative γ-likelihood function is nonconvex in general. Therefore, it is difficult to find a global minimum. Here, we derive an MM (majorize-minimization) algorithm to obtain a local minimum. The MM algorithm monotonically decreases the objective function at each iteration. We refer to Hunter and Lange [] for a concise account of the MM algorithm.

Let be the value of the parameter at the tth iteration. The negative γ-likelihood function in Equation (11) consists of two nonconvex functions, and . The majorization functions of , say (), are constructed so that the optimization of is much easier than that of . The majorization functions must satisfy the following inequalities:

Here, we construct majorization functions for .

5.1. Majorization Function for

Let

Obviously, and . Applying Jensen’s inequality to , we obtain the inequality

Substituting Equations (20) and (21) into Equation (22) gives

where . Denoting

we observe that Equation (23) satisfies Equations (18) and (19). It can be shown that is a convex function if the original relative error loss function is convex. In particular, the majorization functions based on LPRE and LSRE are both convex.
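A minimal sketch of how Jensen's inequality yields a convex surrogate, written in our own notation. It assumes the first term of the objective is $-\gamma^{-1}\log\sum_i f_i(\beta)^\gamma$ with $f_i(\beta) = f(y_i \mid x_i;\beta)$, so it may differ in form from Equations (20) to (23).

$$
\begin{aligned}
w_i^{(t)} &= \frac{f_i(\beta^{(t)})^{\gamma}}{\sum_{j=1}^{n} f_j(\beta^{(t)})^{\gamma}}, \\
-\frac{1}{\gamma}\log\sum_{i=1}^{n} f_i(\beta)^{\gamma}
 &= -\frac{1}{\gamma}\log\sum_{i=1}^{n} w_i^{(t)}\,\frac{f_i(\beta)^{\gamma}}{w_i^{(t)}}
 \;\le\; -\sum_{i=1}^{n} w_i^{(t)}\log f_i(\beta) + \frac{1}{\gamma}\sum_{i=1}^{n} w_i^{(t)}\log w_i^{(t)},
\end{aligned}
$$

with equality at $\beta = \beta^{(t)}$. Under the assumed LPRE density $h(\varepsilon) = \varepsilon^{-1}\exp(-\varepsilon-\varepsilon^{-1})/\{2K_0(2)\}$, one has $-\log f_i(\beta) = y_i e^{-x_i^\top\beta} + y_i^{-1}e^{x_i^\top\beta} + \log y_i + \log\{2K_0(2)\}$, so up to constants the surrogate is the weighted LPRE loss $\sum_i w_i^{(t)}(y_i/t_i + t_i/y_i - 2)$ with $t_i = \exp(x_i^\top\beta)$, which is convex in $\beta$.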

5.2. Majorization Function for

Let . We view as a function of . Let

By taking the derivative of with respect to , we have

where . Note that for any .

The Taylor expansion of at is expressed as

where and is an n-dimensional vector located between and . We define an matrix as follows:
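One standard way to majorize the second term, sketched in our own notation, is Böhning's bound on the Hessian of the log-sum-exp function; the matrix used in the paper may differ. The sketch assumes the second term equals $q(\beta) = (1+\gamma)^{-1}\log\sum_i \exp(-\gamma x_i^\top\beta)$ up to a constant, which holds for model (1) because $\int f(y \mid x_i;\beta)^{1+\gamma}dy = t_i^{-\gamma}\int h(\varepsilon)^{1+\gamma}d\varepsilon$.

$$
\begin{aligned}
\pi_i(\beta) &= \frac{e^{-\gamma x_i^\top\beta}}{\sum_{j=1}^{n} e^{-\gamma x_j^\top\beta}}, \qquad
\nabla q(\beta) = -\frac{\gamma}{1+\gamma}X^\top\pi(\beta), \\
\nabla^2 q(\beta) &= \frac{\gamma^2}{1+\gamma}X^\top\{\mathrm{diag}(\pi) - \pi\pi^\top\}X
 \;\preceq\; \frac{\gamma^2}{2(1+\gamma)}X^\top\Bigl(I_n - \tfrac{1}{n}\mathbf{1}\mathbf{1}^\top\Bigr)X =: M, \\
q(\beta) &\le q(\beta^{(t)}) + \nabla q(\beta^{(t)})^\top(\beta-\beta^{(t)}) + \tfrac{1}{2}(\beta-\beta^{(t)})^\top M(\beta-\beta^{(t)}).
\end{aligned}
$$

The inequality $\mathrm{diag}(\pi) - \pi\pi^\top \preceq \tfrac12(I_n - n^{-1}\mathbf{1}\mathbf{1}^\top)$ holds for any probability vector $\pi$, so $M$ does not depend on $t$ and needs to be computed only once.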

5.3. MM Algorithm for Robust Relative Error Estimation

In Sections 5.1 and 5.2, we have constructed the majorization functions for both and . The MM algorithm based on these majorization functions is detailed in Algorithm 1. The majorization function is convex if the original relative error loss function is convex. In particular, the majorization functions of LPRE and LSRE are both convex.

Algorithm 1. Algorithm of robust relative error estimation.
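Because the steps of Algorithm 1 are not reproduced here, the following Python sketch shows one way to implement such an MM iteration for the LPRE case. It assumes the objective $-\gamma^{-1}\log\sum_i f(y_i \mid x_i)^\gamma + (1+\gamma)^{-1}\log\sum_i \exp(-\gamma x_i^\top\beta)$ (additive constants dropped), a Jensen surrogate for the first term (a weighted LPRE loss) and a Böhning-type quadratic bound for the second, and it solves each convex subproblem with SciPy's BFGS. It is not the rree implementation, and all names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp
from scipy.stats import geninvgauss


def objective(beta, X, y, gamma):
    """Assumed negative gamma-likelihood for LPRE noise, up to an additive constant."""
    eta = X @ beta
    r = y * np.exp(-eta)                 # eps_i = y_i / t_i
    log_f = -np.log(y) - r - 1.0 / r     # log f(y_i | x_i) without the constant -log(2 K_0(2))
    return -logsumexp(gamma * log_f) / gamma + logsumexp(-gamma * eta) / (1.0 + gamma)


def mm_fit(X, y, gamma, beta0, n_iter=200, tol=1e-10):
    """MM iterations; returns the estimate and the objective value after each iteration."""
    beta = np.asarray(beta0, dtype=float).copy()
    Xc = X - X.mean(axis=0)
    # Boehning-type bound: X'(diag(pi) - pi pi')X <= X'(I - 11'/n)X / 2 = Xc'Xc / 2
    M = gamma**2 / (2.0 * (1.0 + gamma)) * (Xc.T @ Xc)
    history = [objective(beta, X, y, gamma)]
    for _ in range(n_iter):
        beta_t = beta.copy()
        eta = X @ beta_t
        r = y * np.exp(-eta)
        log_f = -np.log(y) - r - 1.0 / r
        w = np.exp(gamma * log_f - logsumexp(gamma * log_f))  # Jensen weights, sum to one
        pi = np.exp(-gamma * eta - logsumexp(-gamma * eta))    # softmax weights of the second term
        g2 = -gamma / (1.0 + gamma) * (X.T @ pi)              # gradient of the second term at beta_t

        def surrogate(b):
            rb = y * np.exp(-(X @ b))
            d = b - beta_t
            value = w @ (rb + 1.0 / rb) + g2 @ d + 0.5 * d @ M @ d  # weighted LPRE + quadratic bound
            grad = X.T @ (w * (1.0 / rb - rb)) + g2 + M @ d
            return value, grad

        beta = minimize(surrogate, beta_t, jac=True, method="BFGS").x
        history.append(objective(beta, X, y, gamma))
        if history[-2] - history[-1] < tol:
            break
    return beta, np.array(history)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 500
    X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
    beta_true = np.array([0.5, 1.0, -0.5])
    y = np.exp(X @ beta_true) * geninvgauss.rvs(0.0, 2.0, size=n, random_state=rng)
    y[:25] *= 1e-4  # hypothetical near-zero outliers
    beta_hat, hist = mm_fit(X, y, gamma=0.1, beta0=np.zeros(3))
    print(beta_hat, hist[0], hist[-1])
```

Since each BFGS run starts at $\beta^{(t)}$ and only accepts surrogate-decreasing steps, the objective cannot increase from one MM iteration to the next.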

Remark 2.

Instead of the MM algorithm, one can directly use a quasi-Newton method, such as the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm, to minimize the negative γ-likelihood function. In our experience, the BFGS algorithm is faster than the MM algorithm but is more sensitive to the initial value. The strengths of the BFGS and MM algorithms can be combined by the following hybrid algorithm:

- We first conduct the MM algorithm with a small number of iterations.

- Then, the BFGS algorithm is conducted. We use the estimate obtained by the MM algorithm as an initial value of the BFGS algorithm.

The stability of the MM algorithm is investigated through the real data analysis in Section 7.
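The hybrid strategy can be sketched as follows. This Python version uses SciPy's BFGS (`scipy.optimize.minimize`) in place of R's optim, and it assumes the same LPRE-based objective as in a standard Kawashima and Fujisawa formulation, with the MM step built from a Jensen surrogate and a Böhning-type quadratic bound. The number of MM steps `n_mm` and all function names are hypothetical choices for illustration.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp
from scipy.stats import geninvgauss


def obj_grad(beta, X, y, gamma):
    """Assumed negative gamma-likelihood for LPRE noise (constants dropped) and its gradient."""
    eta = X @ beta
    r = y * np.exp(-eta)
    log_f = -np.log(y) - r - 1.0 / r
    w = np.exp(gamma * log_f - logsumexp(gamma * log_f))
    pi = np.exp(-gamma * eta - logsumexp(-gamma * eta))
    value = -logsumexp(gamma * log_f) / gamma + logsumexp(-gamma * eta) / (1.0 + gamma)
    grad = X.T @ (w * (1.0 / r - r)) - gamma / (1.0 + gamma) * (X.T @ pi)
    return value, grad


def mm_step(beta_t, X, y, gamma, M):
    """One MM update: minimize a weighted-LPRE plus quadratic surrogate, warm-started at beta_t."""
    eta = X @ beta_t
    r = y * np.exp(-eta)
    log_f = -np.log(y) - r - 1.0 / r
    w = np.exp(gamma * log_f - logsumexp(gamma * log_f))
    g2 = -gamma / (1.0 + gamma) * (X.T @ np.exp(-gamma * eta - logsumexp(-gamma * eta)))

    def surrogate(b):
        rb = y * np.exp(-(X @ b))
        d = b - beta_t
        return (w @ (rb + 1.0 / rb) + g2 @ d + 0.5 * d @ M @ d,
                X.T @ (w * (1.0 / rb - rb)) + g2 + M @ d)

    return minimize(surrogate, beta_t, jac=True, method="BFGS").x


def hybrid_fit(X, y, gamma, beta0, n_mm=5):
    """A few MM steps for a stable start, then BFGS on the objective itself."""
    Xc = X - X.mean(axis=0)
    M = gamma**2 / (2.0 * (1.0 + gamma)) * (Xc.T @ Xc)
    beta = np.asarray(beta0, dtype=float)
    for _ in range(n_mm):
        beta = mm_step(beta, X, y, gamma, M)
    return minimize(obj_grad, beta, args=(X, y, gamma), jac=True, method="BFGS").x


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = np.column_stack([np.ones(300), rng.normal(size=(300, 2))])
    y = np.exp(X @ np.array([0.2, 0.8, -0.4])) * geninvgauss.rvs(0.0, 2.0, size=300, random_state=rng)
    print(hybrid_fit(X, y, gamma=0.1, beta0=np.zeros(3)))
```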

Remark 3.

To deal with high-dimensional data, regularization methods such as the lasso [], the elastic net [], and the smoothly clipped absolute deviation (SCAD) [] are often used. In robust relative error estimation, the loss function based on the lasso is expressed as

where is a regularization parameter. However, the loss function in Equation (29) is non-convex and non-differentiable. Instead of directly minimizing the non-convex loss function in Equation (29), we may use the MM algorithm; the following convex loss function is minimized at each iteration:

The minimization of Equation (30) can be realized by the alternating direction method of multipliers algorithm [] or the coordinate descent algorithm with quadratic approximation of [].
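The remark above names ADMM or coordinate descent for the convex subproblem. As a simpler stand-in, the sketch below uses a different technique, proximal gradient descent (ISTA) with backtracking, to minimize a smooth convex function plus an $\ell_1$ penalty on selected coefficients. The smooth part is passed in as a function returning its value and gradient (for instance, a convex MM surrogate); leaving the intercept unpenalized is our assumption, since Equation (30) is not reproduced here. All names are hypothetical.

```python
import numpy as np


def soft_threshold(z, thr):
    """Proximal operator of thr * |z|, applied elementwise."""
    return np.sign(z) * np.maximum(np.abs(z) - thr, 0.0)


def prox_grad_lasso(smooth, beta0, lam, penalize, step0=1.0, shrink=0.5, n_iter=1000, tol=1e-10):
    """Minimize smooth(b) + lam * sum_{j in penalize} |b_j| by ISTA with backtracking."""
    beta = np.asarray(beta0, dtype=float).copy()
    mask = np.asarray(penalize, dtype=bool)
    for _ in range(n_iter):
        f, g = smooth(beta)
        step = step0
        while True:
            z = beta - step * g
            cand = np.where(mask, soft_threshold(z, step * lam), z)
            d = cand - beta
            f_new, _ = smooth(cand)
            # Accept once the quadratic upper model at beta dominates the smooth part at cand.
            if f_new <= f + g @ d + (d @ d) / (2.0 * step):
                break
            step *= shrink
        beta = cand
        if np.linalg.norm(d) < tol:
            break
    return beta


def lpre_smooth(X, y):
    """Average unweighted LPRE loss and its gradient, used here only as an example smooth part."""
    def fg(b):
        r = y * np.exp(-(X @ b))
        return np.mean(r + 1.0 / r - 2.0), X.T @ (1.0 / r - r) / len(y)
    return fg


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = np.column_stack([np.ones(200), rng.normal(size=(200, 4))])
    y = np.exp(X @ np.array([0.3, 0.8, 0.0, 0.0, -0.5])) * rng.lognormal(sigma=0.3, size=200)
    print(prox_grad_lasso(lpre_smooth(X, y), np.zeros(5), lam=0.05, penalize=[False, True, True, True, True]))
```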

6. Monte Carlo Simulation

6.1. Setting

We consider the following two simulation models:

The number of observations is set to be . For each model, we generate T=10,000 datasets of predictors () according to . Here, we consider the case of and . Responses are generated from the mixture distribution

where is a density function corresponding to the LPRE defined as Equation (6), is a density function of distribution of outliers, and () is an outlier ratio. The outlier ratio is set to be and in this simulation. We assume that follows a log-normal distribution (pdf: ) with . When , the outliers take extremely large values. On the other hand, when , the data values of outliers are nearly zero.
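The contamination scheme can be sketched as follows. The coefficient vectors, the predictor covariance and the log-normal parameters of the two simulation models are not reproduced in this text, so everything below is hypothetical: an intercept plus Gaussian covariates with correlation $\rho^{|j-k|}$, LPRE noise drawn as a generalized inverse Gaussian variable (SciPy `geninvgauss` with $p = 0$, $b = 2$, which we assume matches Equation (6)), and outliers that replace the response with a log-normal draw independent of the predictors.

```python
import numpy as np
from scipy.stats import geninvgauss


def simulate_contaminated(beta, n, outlier_ratio, mu_out, sigma_out, rho=0.0, seed=None):
    """Draw (X, y) from model (1) with LPRE noise, then replace a fraction of responses by outliers."""
    rng = np.random.default_rng(seed)
    beta = np.asarray(beta, dtype=float)
    idx = np.arange(beta.size - 1)
    cov = rho ** np.abs(idx[:, None] - idx[None, :])  # hypothetical corr(x_j, x_k) = rho^|j-k|
    Z = rng.multivariate_normal(np.zeros(idx.size), cov, size=n)
    X = np.column_stack([np.ones(n), Z])
    eps = geninvgauss.rvs(0.0, 2.0, size=n, random_state=rng)  # assumed LPRE noise
    y = np.exp(X @ beta) * eps
    is_out = rng.random(n) < outlier_ratio  # Bernoulli contamination indicators
    y[is_out] = rng.lognormal(mean=mu_out, sigma=sigma_out, size=int(is_out.sum()))
    return X, y, is_out


if __name__ == "__main__":
    X, y, is_out = simulate_contaminated([0.5, 1.0, -1.0], n=200, outlier_ratio=0.1,
                                         mu_out=-5.0, sigma_out=1.0, rho=0.5, seed=0)
    print(is_out.mean(), y[is_out][:5])
```

A large positive `mu_out` produces extremely large outliers, and a large negative one produces near-zero outliers, mirroring the two cases described above.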

6.2. Investigation of Relative Prediction Error and Mean Squared Error of the Estimator

To investigate the performance of our proposed procedure, we use the relative prediction error (RPE) and the mean squared error (MSE) for the tth dataset, defined as

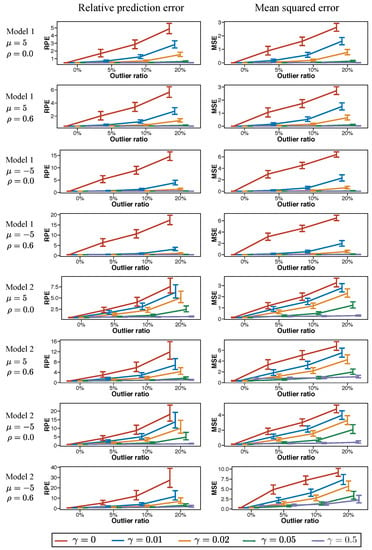

respectively, where is an estimator obtained from the dataset , and is an observation from . Here, follows a distribution of and is independent of . Figure 1 shows the median and error bar of {RPE(1), ⋯, RPE(T)} and {MSE(1), ⋯, MSE(T)}. The error bars are delineated by the 25th and 75th percentiles.

Figure 1.

Median and error bar of relative prediction error (RPE) in Equation (31) and mean squared error (MSE) of in Equation (32) when parameters of the log-normal distribution (distribution of outliers) are . The error bars are delineated by 25th and 75th percentiles.

We observe the following tendencies from the results in Figure 1:

- As the outlier ratio increases, the performance becomes worse in all cases. Interestingly, the length of the error bar of RPE increases as the outlier ratio increases.

- The proposed method becomes more robust against outliers as the value of γ increases. However, an overly large γ, such as , leads to extremely poor RPE and MSE because most observations are regarded as outliers. Therefore, a moderate value of γ generally results in better estimation accuracy than the MLE.

- The cases of , where the predictors are correlated, are worse than those of . In particular, when , the RPE of becomes large when the outlier ratio is large. However, increasing γ leads to uniformly better estimation performance.

- The results for different simulation models with the same value of γ are generally different, which implies that the appropriate value of γ may change according to the data-generating mechanism.

6.3. Investigation of Asymptotic Distribution

The asymptotic distribution is derived under the assumption that the true distribution of follows , that is, . However, we expect that, when is sufficiently large and is moderate, the asymptotic distribution may approximate the true distribution well, a point emphasized by Fujisawa and Eguchi ([], Theorem 5.1) in the case of i.i.d. data. We investigate whether the asymptotic distribution given by Equation (16) works appropriately when outliers exist.

The asymptotic covariance matrix in Equation (16) depends on , , and . For the LPRE, simple calculations provide

The Bessel function of the third kind, , can be computed numerically, and then we obtain the values of , , and .

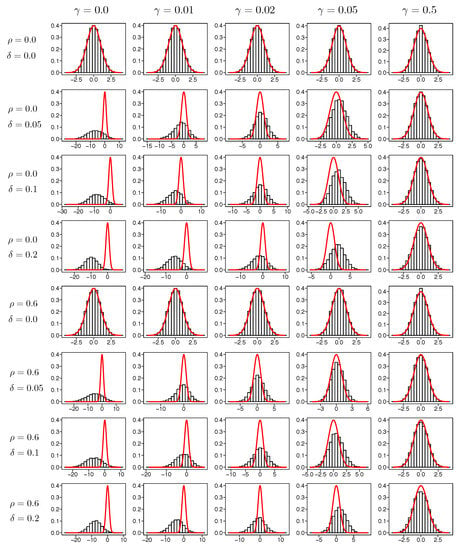

We expect that the histogram of obtained by the simulation would approximate the density function of the standard normal distribution when there are no (or a few) outliers. When there exists a significant number of outliers, the asymptotic distribution of may not be but is expected to be close to for large . Figure 2 shows the histograms of T=10,000 samples of along with the density function of the standard normal distribution for in Model 1.

Figure 2.

Histograms of T = 10,000 samples of along with the density function of the standard normal distribution for in Model 1.

When there are no outliers, the distribution of is close to the standard normal distribution whatever value of γ is selected. When the outlier ratio is large, the histogram of is far from the density function of for small γ. However, when the value of γ is large, the histogram of is close to the density function of , which implies that the asymptotic distribution in Equation (16) appropriately approximates the distribution of the estimators even when outliers exist. We observe that the asymptotic distributions for the other s show a similar tendency to that for .

7. Real Data Analysis

We apply the proposed method to electricity consumption data from the UCI (University of California, Irvine) Machine Learning Repository []. The dataset consists of the electricity consumption of 370 households from January 2011 to December 2014, recorded in kWh at 15-minute intervals. We consider the problem of predicting the electricity consumption of the next day from past electricity consumption. Day-ahead prediction of electricity consumption is needed when electricity is traded on markets such as the European Power Exchange (EPEX) day-ahead market (https://www.epexspot.com/en/market-data/dayaheadauction) and the Japan Power Exchange (JEPX) day-ahead market (http://www.jepx.org/english/index.html). In the JEPX market, when the predicted electricity consumption $\hat{y}$ is smaller than the actual electricity consumption $y$, the shortfall $y - \hat{y}$ is charged at the “imbalance price”, which is usually higher than the ordinary price. For details, please refer to Sioshansi and Pfaffenberger [].

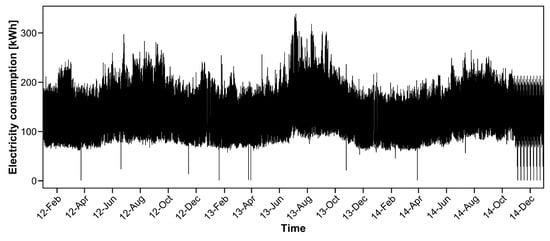

To investigate the effectiveness of the proposed procedure, we choose one household that includes small positive values of electricity consumption. The consumption data for 25 December 2014 were deleted because the corresponding data values are zero. We predict the electricity consumption from January 2012 to December 2014 (the data in 2011 are used only for estimating the parameter). The actual electricity consumption data from January 2012 to December 2014 are depicted in Figure 3.

Figure 3.

Electricity consumption from January 2012 to December 2014 for one of the 370 households.

Figure 3 shows that several data values are close to zero. In particular, from October to December 2014, there are several spikes that reach nearly zero values. In this case, the estimation accuracy of the ordinary GRE criteria is poor, as shown in our numerical simulation in the previous section.

We assume the multiplicative regression model in Equation (1) to predict electricity consumption. Let denote the electricity consumption at t (). The number of observations is 146,160. Here, 96 is the number of measurements in one day because electricity demand is expressed in 15-minute intervals. We define as , where . In our model, the electricity consumption at t is explained by the electricity consumption of the past q days for the same period. We set for data analysis and use past days of observations to estimate the model.
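The exact definition of the predictors is not reproduced in this text. As a hedged illustration only, the sketch below builds a design matrix in which the consumption at time t is explained by the consumption at the same 15-minute slot on each of the previous q days, that is, lags of 96, 192, ..., 96q steps. The log transform, the intercept column and the function name `lagged_design` are our assumptions.

```python
import numpy as np


def lagged_design(series, q, period=96, transform=np.log):
    """Design matrix with an intercept and transform(series[t - k * period]) for k = 1, ..., q.

    Rows correspond to t = q * period, ..., len(series) - 1; the response is series[t].
    """
    series = np.asarray(series, dtype=float)
    rows = np.arange(q * period, series.size)
    lags = [transform(series[rows - k * period]) for k in range(1, q + 1)]
    X = np.column_stack([np.ones(rows.size)] + lags)
    return X, series[rows]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    consumption = rng.lognormal(size=96 * 10)  # synthetic stand-in for ten days of 15-minute data
    X, y = lagged_design(consumption, q=3)
    print(X.shape, y.shape)
```

Weekday and weekend models, as used in the analysis, could then be fitted on the corresponding subsets of rows.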

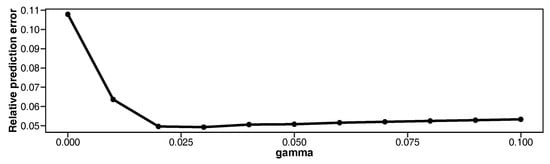

The model parameters are estimated by robust LPRE. The values of γ are set to a regular sequence from 0 to 0.1 in increments of 0.01. To minimize the negative γ-likelihood function, we apply our proposed MM algorithm. As the electricity consumption pattern on weekdays is known to be completely different from that on weekends, we make predictions for weekdays and weekends separately. The results for the relative prediction error are depicted in Figure 4.

Figure 4.

Relative prediction error for various values of γ for household electricity consumption data.

The relative prediction error is large when γ = 0 (i.e., ordinary LPRE estimation). The minimum value of the relative prediction error is 0.049, and the corresponding value of γ is . When γ is set too large, efficiency decreases and the relative prediction error may increase.

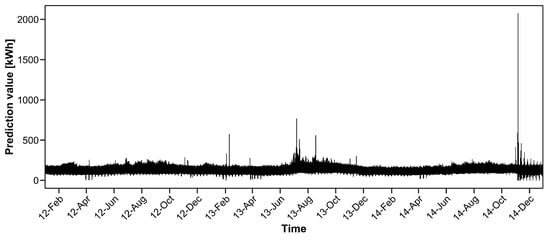

Figure 5 shows the predicted values when γ = 0. We observe several extremely large predicted values (e.g., 8 July 2013 and 6 November 2014), because the estimated model parameters are heavily affected by the nearly zero values of electricity consumption.

Figure 5.

Prediction value based on least product relative error (LPRE) loss for household electricity consumption data.

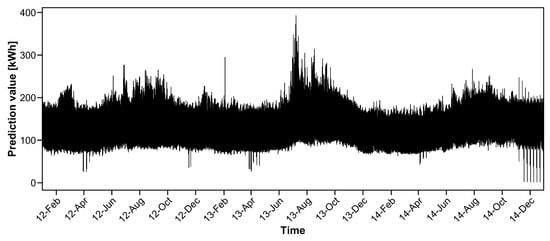

Figure 6 shows the predicted values when . Extremely large predicted values are not observed, and the predicted values are similar to the actual electricity demand in Figure 3. This suggests that our proposed procedure is robust against outliers.

Figure 6.

Prediction value based on the proposed method with for household electricity consumption data.

Additionally, we apply the Yule–Walker method, one of the most popular estimation procedures for the autoregressive (AR) model. Note that the Yule–Walker method does not regard a small positive value of as an outlier, so we do not need to consider a robust AR model for this dataset. The relative prediction error of the Yule–Walker method is 0.123, which is larger than that of our proposed method (0.049).

Furthermore, to investigate the stability of the MM algorithm described in Section 5, we also apply the BFGS method to obtain the minimizer of the negative γ-likelihood function. The optim function in R is used to implement the BFGS method. With the BFGS method, the relative prediction errors diverge when . This suggests that the MM algorithm is more stable than the BFGS algorithm for this dataset.

8. Discussion

We proposed a relative error estimation procedure that is robust against outliers. The proposed procedure is based on the γ-likelihood function, which is constructed by the γ-cross entropy []. We showed that the proposed method has the redescending property, a desirable property in the robust statistics literature. The asymptotic normality of the corresponding estimator, together with a simple consistent estimator of the asymptotic covariance matrix, was derived, which allows the construction of approximate confidence sets. Besides the theoretical results, we constructed an efficient algorithm in which a convex loss function is minimized at each iteration. The proposed algorithm monotonically decreases the objective function at each iteration.

Our simulation results showed that the proposed method performed better than the ordinary relative error estimation procedures in terms of prediction accuracy. Furthermore, with an appropriate value of γ, the asymptotic distribution of the estimator yielded a good approximation even when outliers existed. The proposed method was applied to electricity consumption data, which included small positive values. Although the ordinary LPRE was sensitive to small positive values, our method was able to appropriately eliminate the negative effect of these values.

In practice, variable selection is one of the most important topics in regression analysis. The ordinary AIC (Akaike information criterion, Akaike []) cannot be directly applied to our proposed method because the AIC aims at minimizing the Kullback–Leibler divergence, whereas our method aims at minimizing the γ-divergence. As a future research topic, it would be interesting to derive a model selection criterion for evaluating a model estimated by the γ-likelihood method.

High-dimensional data analysis is also an important topic in statistics. In particular, the sparse estimation, such as the lasso [], is a standard tool to deal with high-dimensional data. As shown in Remark 3, our method may be extended to regularization. An important point in the regularization procedure is the selection of a regularization parameter. Hao et al. [] suggested using the BIC (Bayesian information criterion)-type criterion of Wang et al. [,] for the ordinary LPRE estimator. It would also be interesting to consider the problem of regularization parameter selection in high-dimensional robust relative error estimation.

In regression analysis, we may formulate two types of γ-likelihood functions: Fujisawa and Eguchi’s formulation [] and Kawashima and Fujisawa’s formulation []. It has been reported [] that the two formulations perform differently when the outlier ratio depends on the explanatory variable. In the multiplicative regression model in Equation (1), the responses depend more strongly on the explanatory variables than in the ordinary linear regression model, because $y_i$ depends on $x_i$ through the exponential function $\exp(x_i^\top\beta)$. As a result, a comparison of the above two formulations of the γ-likelihood function would be important from both theoretical and practical points of view.

Supplementary Materials

The following are available online at http://www.mdpi.com/1099-4300/20/9/632/s1.

Author Contributions

K.H. proposed the algorithm, made the R package rree, conducted the simulation study, and analyzed the real data; H.M. derived the asymptotics; K.H. and H.M. wrote the paper.

Funding

This research was funded by the Japan Society for the Promotion of Science KAKENHI 15K15949, and the Center of Innovation Program (COI) from Japan Science and Technology Agency (JST), Japan (K.H.), and JST CREST Grant Number JPMJCR14D7 (H.M.).

Acknowledgments

The authors would like to thank anonymous reviewers for the constructive and helpful comments that improved the quality of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

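All asymptotics are taken as $n\to\infty$. We write $a_n \lesssim b_n$ if there exists a universal constant $C>0$ such that $a_n \le C b_n$ for every $n$ large enough. For any random functions $X_n$ and $X_0$ on $\Theta$, we write $X_n(\theta) \xrightarrow{p} X_0(\theta)$ uniformly if $\sup_{\theta\in\Theta}|X_n(\theta)-X_0(\theta)| \xrightarrow{p} 0$; below, we simply write $\sup_\theta$ for $\sup_{\theta\in\Theta}$.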

First, we state a preliminary lemma, which will be repeatedly used in the sequel.

Lemma A1.

Let and be vector-valued measurable functions satisfying that

for some and such that

Then,

Proof.

For the first term, let us recall the Sobolev inequality ([], Section 10.2):

for . The summands of trivially form a martingale difference array with respect to the filtration , : since we are assuming that the conditional distribution of given equals that given (Sections 2 and 3), each summand of equals . Hence, by Burkholder’s inequality for martingales, we obtain, for ,

We can take the same route for the summands of to conclude that . These estimates combined with Equation (A3) then lead to the conclusion that

As for the other term, we have for each and also

The latter implies the tightness of the family of continuous random functions on the compact set , thereby entailing that . The proof is complete. □

Appendix A.1. Consistency

Let for brevity and

By means of Lemma A1, we have

Since , we see that taking the logarithm preserves the uniformity of the convergence in probability: for the γ-likelihood function (7), it holds that

The limit equals the γ-cross entropy from to . We have , with equality if and only if (see [], Theorem 1). By Equation (4), the latter condition is equivalent to , which in turn implies by Assumption 3. This, combined with Equation (A4) and the argmin theorem (cf. [], Chapter 5), yields the consistency . Note that Assumption 3 is not needed if is a.s. convex, which is generally not the case for .
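The key step above is that the γ-cross entropy $d_\gamma(g,f) = -\gamma^{-1}\log\int g f^{\gamma}\,dy + (1+\gamma)^{-1}\log\int f^{1+\gamma}\,dy$ is minimized over $f$ at $f=g$. As a quick sanity check, here is a minimal Python sketch that assumes a standard normal $g$ and normal candidates $f$, approximating both integrals with a midpoint rule; the densities and function names are hypothetical choices for illustration only.

```python
import math


def normal_pdf(y, mu=0.0, s=1.0):
    """Density of N(mu, s^2)."""
    return math.exp(-0.5 * ((y - mu) / s) ** 2) / (s * math.sqrt(2.0 * math.pi))


def gamma_cross_entropy(g, f, gamma, lo=-15.0, hi=15.0, m=20000):
    """d_gamma(g, f) = -(1/gamma) log int g f^gamma + 1/(1+gamma) log int f^(1+gamma).

    Both integrals are approximated by the midpoint rule on [lo, hi].
    """
    h = (hi - lo) / m
    grid = [lo + (k + 0.5) * h for k in range(m)]
    cross = h * sum(g(y) * f(y) ** gamma for y in grid)
    power = h * sum(f(y) ** (1.0 + gamma) for y in grid)
    return -math.log(cross) / gamma + math.log(power) / (1.0 + gamma)


if __name__ == "__main__":
    gamma = 0.5
    g = normal_pdf  # "true" density: N(0, 1)
    base = gamma_cross_entropy(g, g, gamma)
    for mu, s in [(0.5, 1.0), (-1.0, 1.0), (0.0, 2.0), (0.0, 0.5)]:
        d = gamma_cross_entropy(g, lambda y: normal_pdf(y, mu, s), gamma)
        print(f"mu={mu:+.1f}, s={s:.1f}: excess = {d - base:.6f}")  # positive unless f = g
```

For $f=N(\mu,1)$ the power term does not depend on $\mu$, and the excess over $d_\gamma(g,g)$ has the closed form $\mu^2/\{2(1+\gamma)\}$, which the quadrature reproduces.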

Appendix A.2. Asymptotic Normality

First, we note that Assumption 2 ensures that, for every , there corresponds a function such that

This estimate enables us to interchange the order of and the -Lebesgue integration; this interchange is used repeatedly below without mention.

Let , and

Then, the γ-likelihood equation is equivalent to

By the consistency of , we have ; hence as well, since is open. Therefore, virtually defining to be if has no root, we may and do proceed as if a.s. In view of the Taylor expansion

to conclude Equation (16), it suffices to show that (recall the definitions (14) and (15))

First, we prove Equation (A5). By direct computations and Lemma A1, we see that

The sequence is an -martingale-difference array. It is easy to verify the Lyapunov condition:

Hence, the martingale central limit theorem yields Equation (A5) once we show the following convergence of the quadratic characteristic:

This follows upon observation that

where we invoked the expression (4) for the last equality.

Next, we show Equation (A6). Under the present regularity condition, we can deduce that

It therefore suffices to verify that . This follows from a direct computation of , combined with applications of Lemma A1 (note that and have the same limit in probability):

Appendix A.3. Consistent Estimator of the Asymptotic Covariance Matrix

Thanks to the stability assumptions on the sequence , we have

Moreover, for given in Assumption 1, we have

These observations are enough to conclude Equation (17).
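Appendix A.3 only outlines why the plug-in covariance estimator is consistent. To illustrate the generic sandwich form $J^{-1}KJ^{-1}$ that such estimators take, here is a minimal Python sketch for a deliberately simple case that is our own assumption, not the setting of Theorem 1: a scalar normal location model with known unit variance. In that case $\int f^{1+\gamma}\,dy$ does not depend on the location $\mu$, so the γ-likelihood equation reduces to $\sum_i w_i(y_i-\mu)=0$ with redescending weights $w_i=\exp\{-\gamma(y_i-\mu)^2/2\}$. The root is found by an iteratively reweighted mean rather than the MM algorithm of the paper, and the function names are hypothetical.

```python
import math
import random


def gamma_location(ys, gamma, tol=1e-10, max_iter=500):
    """Root of sum_i w_i (y_i - mu) = 0 with w_i = exp(-gamma (y_i - mu)^2 / 2).

    Solved by an iteratively reweighted mean started at the sample median.
    """
    mu = sorted(ys)[len(ys) // 2]
    for _ in range(max_iter):
        w = [math.exp(-0.5 * gamma * (y - mu) ** 2) for y in ys]
        new_mu = sum(wi * y for wi, y in zip(w, ys)) / sum(w)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu


def sandwich_ci(ys, mu, gamma, z=1.959963984540054):
    """Plug-in sandwich variance K / (n J^2) and a Wald-type 95% interval."""
    n = len(ys)
    r = [y - mu for y in ys]
    w = [math.exp(-0.5 * gamma * ri ** 2) for ri in r]
    # J: sample mean of d psi / d mu, where psi(y, mu) = w (y - mu)
    J = sum(-wi * (1.0 - gamma * ri ** 2) for wi, ri in zip(w, r)) / n
    # K: sample mean of psi^2
    K = sum((wi * ri) ** 2 for wi, ri in zip(w, r)) / n
    se = math.sqrt(K / (n * J ** 2))
    return mu - z * se, mu + z * se


if __name__ == "__main__":
    rng = random.Random(1)
    ys = [rng.gauss(0.0, 1.0) for _ in range(190)]
    ys += [rng.gauss(10.0, 1.0) for _ in range(10)]  # 5% gross outliers
    mu_hat = gamma_location(ys, gamma=0.5)
    print(f"mean = {sum(ys) / len(ys):.3f}, gamma-estimate = {mu_hat:.3f}")
    print("approx. 95% CI:", sandwich_ci(ys, mu_hat, gamma=0.5))
```

Observations far from the bulk receive weights close to zero, so the estimate stays near the center of the clean data while the sample mean is pulled toward the outliers; the interval uses the sample analogues of $J$ and $K$ evaluated at the estimate.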

References

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning, 2nd ed.; Springer Series in Statistics; Springer: New York, NY, USA, 2009.

- Park, H.; Stefanski, L.A. Relative-error prediction. Stat. Probab. Lett. 1998, 40, 227–236.

- Ye, J. Price Models and the Value Relevance of Accounting Information. SSRN Electronic Journal 2007. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1003067 (accessed on 20 August 2018).

- Van der Meer, D.W.; Widén, J.; Munkhammar, J. Review on probabilistic forecasting of photovoltaic power production and electricity consumption. Renew. Sust. Energ. Rev. 2018, 81, 1484–1512.

- Mount, J. Relative error distributions, without the heavy tail theatrics. 2016. Available online: http://www.win-vector.com/blog/2016/09/relative-error-distributions-without-the-heavy-tail-theatrics/ (accessed on 20 August 2018).

- Chen, K.; Guo, S.; Lin, Y.; Ying, Z. Least Absolute Relative Error Estimation. J. Am. Stat. Assoc. 2010, 105, 1104–1112.

- Li, Z.; Lin, Y.; Zhou, G.; Zhou, W. Empirical likelihood for least absolute relative error regression. TEST 2013, 23, 86–99.

- Chen, K.; Lin, Y.; Wang, Z.; Ying, Z. Least product relative error estimation. J. Multivariate Anal. 2016, 144, 91–98.

- Ding, H.; Wang, Z.; Wu, Y. A relative error-based estimation with an increasing number of parameters. Commun. Stat. Theory Methods 2017, 47, 196–209.

- Demongeot, J.; Hamie, A.; Laksaci, A.; Rachdi, M. Relative-error prediction in nonparametric functional statistics: Theory and practice. J. Multivariate Anal. 2016, 146, 261–268.

- Wang, Z.; Chen, Z.; Chen, Z. H-relative error estimation for multiplicative regression model with random effect. Comput. Stat. 2018, 33, 623–638.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Series B Methodol. 1996, 58, 267–288.

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Series B Stat. Methodol. 2006, 68, 49–67.

- Hao, M.; Lin, Y.; Zhao, X. A relative error-based approach for variable selection. Comput. Stat. Data Anal. 2016, 103, 250–262.

- Liu, X.; Lin, Y.; Wang, Z. Group variable selection for relative error regression. J. Stat. Plan. Inference 2016, 175, 40–50.

- Xia, X.; Liu, Z.; Yang, H. Regularized estimation for the least absolute relative error models with a diverging number of covariates. Comput. Stat. Data Anal. 2016, 96, 104–119.

- Kawashima, T.; Fujisawa, H. Robust and Sparse Regression via γ-Divergence. Entropy 2017, 19, 608.

- Fujisawa, H.; Eguchi, S. Robust parameter estimation with a small bias against heavy contamination. J. Multivariate Anal. 2008, 99, 2053–2081.

- Maronna, R.; Martin, D.; Yohai, V. Robust Statistics; John Wiley & Sons: Chichester, UK, 2006.

- Koudou, A.E.; Ley, C. Characterizations of GIG laws: A survey. Probab. Surv. 2014, 11, 161–176.

- Jones, M.C.; Hjort, N.L.; Harris, I.R.; Basu, A. A comparison of related density-based minimum divergence estimators. Biometrika 2001, 88, 865–873.

- Kawashima, T.; Fujisawa, H. On Difference between Two Types of γ-divergence for Regression. 2018. Available online: https://arxiv.org/abs/1805.06144 (accessed on 20 August 2018).

- Ferrari, D.; Yang, Y. Maximum Lq-likelihood estimation. Ann. Stat. 2010, 38, 753–783.

- Basu, A.; Harris, I.R.; Hjort, N.L.; Jones, M.C. Robust and efficient estimation by minimising a density power divergence. Biometrika 1998, 85, 549–559.

- Van der Vaart, A.W. Asymptotic Statistics; Vol. 3, Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 1998.

- Eguchi, S.; Kano, Y. Robustifing maximum likelihood estimation by psi-divergence. ISM Research Memorandam. 2001. Available online: https://www.researchgate.net/profile/Shinto Eguchi/publication/228561230Robustifing maximum likelihood estimation by psi-divergence/links545d65910cf2c1a63bfa63e6.pdf (accessed on 20 August 2018).

- Hunter, D.R.; Lange, K. A tutorial on MM algorithms. Am. Stat. 2004, 58, 30–37.

- Böhning, D. Multinomial logistic regression algorithm. Ann. Inst. Stat. Math. 1992, 44, 197–200.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Series B Stat. Methodol. 2005, 67, 301–320.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Friedman, J.; Hastie, T.; Tibshirani, R. Regularization Paths for Generalized Linear Models via Coordinate Descent. J. Stat. Softw. 2010, 33, 1.

- Dheeru, D.; Karra Taniskidou, E. UCI Machine Learning Repository. 2017. Available online: https://archive.ics.uci.edu/ml/datasets/ElectricityLoadDiagrams20112014 (accessed on 20 August 2018).

- Sioshansi, F.P.; Pfaffenberger, W. Electricity Market Reform: An International Perspective; Elsevier: Oxford, UK, 2006.

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Automat. Contr. 1974, 19, 716–723.

- Wang, H.; Li, R.; Tsai, C.L. Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika 2007, 94, 553–568.

- Wang, H.; Li, B.; Leng, C. Shrinkage tuning parameter selection with a diverging number of parameters. J. R. Stat. Soc. Series B Stat. Methodol. 2009, 71, 671–683.

- Friedman, A. Stochastic Differential Equations and Applications; Dover Publications: New York, NY, USA, 2006.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).