Abstract

In this paper, the noise-enhanced detection problem is investigated for binary hypothesis testing. The optimal additive noise is determined by minimizing the weighted sum of the type I and II error probabilities, a criterion proposed by DeGroot and Schervish (2011), under constraints on the type I and II error probabilities. The optimal additive noise is derived for a generic composite hypothesis-testing formulation. Sufficient conditions are also derived to determine whether additive noise can or cannot improve the detectability of a given detector. In addition, further results are obtained by exploiting the structure of simple binary hypothesis testing, and an algorithm is developed to find the corresponding optimal noise. Finally, numerical examples are given to verify the theoretical results, and proofs of the main theorems are presented in the Appendix.

1. Introduction

In the binary hypothesis testing problem, there are usually a null hypothesis $H_0$ and an alternative hypothesis $H_1$, and the objective of testing is to determine which of them is true based on the observation data and a decision rule. Because of the presence of noise, the decision cannot always be correct. In general, two kinds of erroneous decision may occur in signal detection: a type I error, which rejects a true null hypothesis, and a type II error, which accepts a false null hypothesis [1].

In classical statistical theory, the Neyman–Pearson criterion is usually applied to obtain a decision rule that minimizes the type II error probability $\beta$ subject to a constraint on the type I error probability $\alpha$. However, this minimum does not always correspond to the best decision. For instance, in Example 1 of [2], a binary hypothesis test is designed to determine the mean of normally distributed data: the mean equals −1 under $H_0$ and 1 under $H_1$. With the type I error probability fixed at 0.05, the type II error probability decreases from 0.0091 to 0.00000026 when the data size increases from 20 to 100, whereas the rejection region of the null hypothesis changes from (0.1, +∞) to (−0.51, +∞). In this case, more information leads to a worse decision, even though a smaller type II error probability is achieved for the fixed type I error probability: a sample mean of −0.5, for example, is much closer to −1 than to 1, yet it leads to rejecting $H_0$. Similarly, the decision rule that minimizes the type I error probability for a fixed type II error probability may not perform well. Therefore, simply minimizing one of the two error probabilities may not be appropriate in practice. Ideally, a decision criterion would minimize both error probabilities simultaneously, but this is almost never possible in practical applications.

To obtain a decision that balances the type I and II error probabilities, DeGroot and Schervish [1] proposed a criterion that minimizes a weighted sum of the type I and II error probabilities, i.e., $a\alpha(\phi)+b\beta(\phi)$, where $\phi$ represents the decision rule, $a$ and $b$ are the weight coefficients corresponding to $\alpha(\phi)$ and $\beta(\phi)$, respectively, and $a,b>0$. DeGroot also provided the optimal decision procedure that minimizes this weighted sum. The decision rule is as follows. If $af_0(\mathbf{x})<bf_1(\mathbf{x})$, the null hypothesis is rejected, where $f_0(\mathbf{x})$ and $f_1(\mathbf{x})$ are the probability density functions (pdfs) of the observation $\mathbf{x}$ under $H_0$ and $H_1$, respectively. If $af_0(\mathbf{x})>bf_1(\mathbf{x})$, the alternative hypothesis is rejected. If $af_0(\mathbf{x})=bf_1(\mathbf{x})$, either hypothesis may be rejected. The optimal detector therefore depends on the distribution of the observation: once the distribution changes, the detector must be adjusted accordingly. When the detector is fixed, however, this weighted-sum rule cannot be applied directly. In such a case, it is important to find an alternative way to minimize the weighted sum of the type I and II error probabilities without changing the detector. The theory of stochastic resonance (SR) provides a means to address this problem.
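As an illustration of DeGroot's rule, the sketch below assumes (hypothetically) a single scalar observation that is N(−1, σ²) under $H_0$ and N(1, σ²) under $H_1$. For this model $f_1(x)/f_0(x)=\exp(2x/\sigma^2)$, so the rule "reject $H_0$ if $af_0(x)<bf_1(x)$" reduces to the threshold test $x>\tfrac{\sigma^2}{2}\ln(a/b)$. The function names are illustrative, not from the paper; a grid scan confirms that no other threshold yields a smaller weighted sum.

```python
import math
from typing import Tuple


def norm_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def error_probs(t: float, sigma: float) -> Tuple[float, float]:
    """Type I and II error probabilities of the test 'reject H0 if x > t'.

    Assumed model (illustrative): x ~ N(-1, sigma^2) under H0, x ~ N(1, sigma^2) under H1.
    """
    alpha = 1.0 - norm_cdf((t + 1.0) / sigma)  # reject H0 although H0 is true
    beta = norm_cdf((t - 1.0) / sigma)         # keep H0 although H1 is true
    return alpha, beta


def weighted_sum(t: float, a: float, b: float, sigma: float) -> float:
    alpha, beta = error_probs(t, sigma)
    return a * alpha + b * beta


def degroot_threshold(a: float, b: float, sigma: float) -> float:
    """Threshold equivalent to 'reject H0 if a*f0(x) < b*f1(x)' for this model.

    f1(x)/f0(x) = exp(2x/sigma^2), so the rule is x > sigma^2 * ln(a/b) / 2.
    """
    return 0.5 * sigma ** 2 * math.log(a / b)


if __name__ == "__main__":
    a, b, sigma = 0.7, 0.3, 1.0
    t_star = degroot_threshold(a, b, sigma)
    best = weighted_sum(t_star, a, b, sigma)
    grid = [-3.0 + 0.01 * k for k in range(601)]
    print(f"t* = {t_star:.4f}, weighted sum = {best:.4f}")
    print("no grid threshold does better:",
          all(best <= weighted_sum(t, a, b, sigma) + 1e-12 for t in grid))
```

With $a=b$ the threshold sits midway between the two means, and unequal weights shift it towards the mean of the hypothesis with the smaller weight.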

SR, first discovered by Benzi et al. [3] in 1981, is a phenomenon in which, under certain conditions, noise enhances the output of a nonlinear system. SR in signal detection is also called noise-enhanced detection. Recent studies indicate that system output performance can be improved significantly by adding noise to the system input or by increasing the background noise level [4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22]. The improvements achieved via noise can be measured as increased signal-to-noise ratio (SNR) [7,8,9,10], mutual information (MI) [11,12], or detection probability [13,14,15,16], or as decreased Bayes risk [17,18]. For example, the SNR gain of a parallel uncoupled array of bistable oscillators, operating on a mixture of a sinusoidal signal and Gaussian white noise, is maximized via extra array noise [8]. In addition, owing to the added array noise, the performance of a finite array closely approaches that of an infinite array. In [11], the throughput MI of threshold neurons is increased by increasing the intensity of faint input noise. The optimal additive noise that maximizes the detection probability under a constraint on the false-alarm probability is studied in [13], where sufficient conditions for improvability and non-improvability are derived. In [17], the effects of additive independent noise on the performance of suboptimal detectors are investigated under the restricted Bayes criterion, where the minimum noise-modified Bayes risk is explored under constraints on the conditional risks. These results suggest that suitable noise may also decrease the weighted sum of the type I and II error probabilities for a fixed detector.

In the absence of constraints, the additive noise that minimizes the weighted sum is clearly a constant vector, since the noise-modified weighted sum is an average of the weighted sums obtained with constant vectors; however, the corresponding type I or II error probability may then exceed an acceptable level and lead to a poor decision. To avoid this problem, constraints are imposed on the type I and II error probabilities, respectively, to maintain a balance. The aim of this work is to find, for a fixed detector, the optimal additive noise that minimizes the weighted sum of the type I and II error probabilities subject to constraints on both error probabilities. The work can also be extended to applications such as energy detection in sensor networks [23,24] and independent Bernoulli trials [25]. The main contributions of this paper are summarized as follows:

- Formulation of the optimization problem that minimizes the noise-modified weighted sum of type I and II error probabilities under constraints on the two error probabilities.

- Derivation of the optimal noise that minimizes the weighted sum, together with sufficient conditions for improvability and non-improvability, for a general composite hypothesis testing problem.

- Analysis of the characteristics of the optimal additive noise that minimizes the weighted sum for a simple hypothesis testing problem, and development of an algorithm to solve the corresponding optimization problem.

- Numerical results that verify the theoretical results and demonstrate the improved performance of the noise-enhanced detector.

The remainder of this paper is organized as follows. In Section 2, a noise-modified composite hypothesis testing problem is first formulated for minimizing the weighted sum of type I and II error probabilities under constraints on both error probabilities. Sufficient conditions for improvability and non-improvability are then given, and the optimal additive noise is derived. In Section 3, additional theoretical results are derived for a simple hypothesis testing problem. Numerical results are presented in Section 4, and conclusions are drawn in Section 5.

Notation: Lower-case bold letters denote vectors, with $x_i$ denoting the i-th element of $\mathbf{x}$; $\theta$ denotes the value of parameter $\Theta$; $p_\theta(\mathbf{x})$ denotes the pdf of $\mathbf{x}$ for a given parameter value $\theta$; $\Lambda_i$ denotes the set of all possible parameter values of $\Theta$ under $H_i$; $\delta(\cdot)$ denotes the Dirac function; $\cap$, $\cup$ and $\emptyset$ denote intersection, union and the null set, respectively; $*$, $(\cdot)^T$, $\int$, $E\{\cdot\}$, $\min$, $\max$ and $\arg$ denote the convolution, transpose, integral, expectation, minimum, maximum and argument operators, respectively; $\inf$ and $\sup$ denote the infimum and supremum operators, respectively; $\sum$ denotes summation; $\nabla$ and $\nabla^2$ denote the gradient and Hessian operators, respectively.

2. Noise Enhanced Composite Hypothesis Testing

2.1. Problem Formulation

Consider the following binary composite hypothesis testing problem:

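where $\mathbf{x}$ is the observation vector, $H_0$ and $H_1$ are the null and alternative hypotheses, respectively, $\theta$ denotes the value of parameter $\Theta$, and $p_\theta(\mathbf{x})$ represents the pdf of $\mathbf{x}$ for a given parameter value $\theta$. The parameter $\Theta$ has multiple possible values under each hypothesis, and the pdfs of the parameter values under $H_0$ and $H_1$ are denoted by $w_0(\theta)$ and $w_1(\theta)$, respectively. In addition, $\Lambda_0$ and $\Lambda_1$ denote the respective sets of all possible values of $\Theta$ under $H_0$ and $H_1$. The two sets are disjoint, $\Lambda_0\cap\Lambda_1=\emptyset$, and their union forms the parameter space $\Lambda$, i.e., $\Lambda=\Lambda_0\cup\Lambda_1$.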

Without loss of generality, a decision rule (detector) is considered as:

where $\Gamma_0$ and $\Gamma_1$ form the observation space $\Gamma$. That is, the detector chooses $H_1$ if $\mathbf{x}\in\Gamma_1$ and chooses $H_0$ if $\mathbf{x}\in\Gamma_0$.

To investigate the performance of the detector achieved via additive noise, a noise-modified observation $\mathbf{y}$ is obtained by adding independent additive noise $\mathbf{n}$ to the original observation $\mathbf{x}$, i.e., $\mathbf{y}=\mathbf{x}+\mathbf{n}$. For a given parameter value $\theta$, the pdf of $\mathbf{y}$ is the convolution of the pdfs of $\mathbf{x}$ and $\mathbf{n}$:

where $p_{\mathbf{n}}(\cdot)$ denotes the pdf of $\mathbf{n}$. For a fixed detector, the noise-modified type I and II error probabilities of the detector for given parameter values are expressed as:

Correspondingly, the average noise modified type I and II error probabilities are calculated by:

From (6) and (7), the weighted sum of the two types of average error probabilities is obtained as:

where $a$ and $b$ are the weights assigned to the type I and II error probabilities, which can be predefined according to the actual situation. For example, if the prior probabilities are known, $a$ and $b$ equal the prior probabilities of $H_0$ and $H_1$, respectively. Alternatively, the values of $a$ and $b$ can be chosen based on the desired decision results.

In this work, the aim is to find the optimal independent additive noise, which minimizes the weighted sum of the average error probabilities under the constraints on the maximum type I and II error probabilities for different parameter values. The optimization problem can be formulated as below:

subject to

where $\alpha_0$ and $\beta_0$ are the upper limits for the type I and II error probabilities, respectively.
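For reference, the problem in (9) and (10) can be written out explicitly as sketched below. The notation is assumed for illustration: $\Gamma_0$ and $\Gamma_1$ are the decision regions of $H_0$ and $H_1$, $w_0$ and $w_1$ the parameter pdfs, $a$ and $b$ the weights, and $\alpha_0$ and $\beta_0$ the upper limits; the symbols in the displayed equations may differ.

```latex
\begin{aligned}
p^{\mathbf y}_{\theta}(\mathbf y) &= \int p_{\theta}(\mathbf y-\mathbf n)\,p_{\mathbf n}(\mathbf n)\,d\mathbf n,\\
\alpha^{\mathbf y}(\theta_0) &= \int_{\Gamma_1} p^{\mathbf y}_{\theta_0}(\mathbf y)\,d\mathbf y, \qquad
\beta^{\mathbf y}(\theta_1) = \int_{\Gamma_0} p^{\mathbf y}_{\theta_1}(\mathbf y)\,d\mathbf y,\\
E^{\mathbf y} &= a\int_{\Lambda_0}\alpha^{\mathbf y}(\theta_0)\,w_0(\theta_0)\,d\theta_0
  + b\int_{\Lambda_1}\beta^{\mathbf y}(\theta_1)\,w_1(\theta_1)\,d\theta_1,\\
\min_{p_{\mathbf n}}\ E^{\mathbf y}\quad &\text{s.t.}\quad
\max_{\theta_0\in\Lambda_0}\alpha^{\mathbf y}(\theta_0)\le\alpha_0,\qquad
\max_{\theta_1\in\Lambda_1}\beta^{\mathbf y}(\theta_1)\le\beta_0 .
\end{aligned}
```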

In order to explicitly express the optimization problem described in (9) and (10), substituting (3) into (4) produces:

where

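It should be noted that $F_{\theta_0}(\mathbf{c})$ can be viewed as the type I error probability obtained by adding a constant vector $\mathbf{c}$ to $\mathbf{x}$ for $\theta_0\in\Lambda_0$. Therefore, $F_{\theta_0}(\mathbf{0})$ denotes the type I error probability for the original observation $\mathbf{x}$.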
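Because the type I error probability after adding a constant vector plays the role of a building block, the noise-modified type I error probability is simply its average over the noise pdf. The scalar sketch below checks this numerically under hypothetical assumptions: $x\sim N(0,1)$ under $H_0$, a detector that decides $H_1$ when $y>1$, and a two-valued additive noise. The Monte Carlo estimate should match the weighted average up to sampling error.

```python
import math
import random


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


GAMMA = 1.0  # hypothetical detector threshold: decide H1 when y > GAMMA


def type1_const_shift(c: float) -> float:
    """Type I error when the constant c is added to x ~ N(0, 1) (assumed H0 model)."""
    return 1.0 - norm_cdf(GAMMA - c)


# Hypothetical discrete additive noise: P(n = -0.5) = 0.6, P(n = 0.8) = 0.4.
noise_values = [-0.5, 0.8]
noise_probs = [0.6, 0.4]

# Noise-modified type I error as the noise-weighted average of the constant-shift errors.
pfa_average = sum(p * type1_const_shift(v) for v, p in zip(noise_values, noise_probs))

# Direct Monte Carlo simulation of y = x + n under H0.
rng = random.Random(0)
trials = 200_000
hits = 0
for _ in range(trials):
    y = rng.gauss(0.0, 1.0) + rng.choices(noise_values, weights=noise_probs)[0]
    hits += y > GAMMA
pfa_mc = hits / trials

print(f"average of constant-shift errors: {pfa_average:.4f}, Monte Carlo: {pfa_mc:.4f}")
```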

Similarly, $\beta^{\mathbf{y}}(\theta_1)$ in (5) can be expressed as:

where

$G_{\theta_1}(\mathbf{c})$ can be treated as the type II error probability obtained by adding a constant vector $\mathbf{c}$ to $\mathbf{x}$ for $\theta_1\in\Lambda_1$, and $G_{\theta_1}(\mathbf{0})$ is the original type II error probability without added noise.

With (11) and (13), (8) becomes:

where

Accordingly, $E(\mathbf{c})$ is the weighted sum of the two types of average error probabilities achieved by adding a constant vector $\mathbf{c}$ to the original observation $\mathbf{x}$, and $E(\mathbf{0})$ denotes the weighted sum of the average type I and II error probabilities for the original observation $\mathbf{x}$.
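The substitutions behind (11)–(16) amount to exchanging the order of integration, which Tonelli's theorem permits because every integrand is nonnegative. A sketch in assumed notation ($\Gamma_0$, $\Gamma_1$ the decision regions; $w_0$, $w_1$ the parameter pdfs; $a$, $b$ the weights; $F_{\theta_0}$, $G_{\theta_1}$, $E$ the constant-shift functions; $\mathbf c$ the integration variable for the noise value):

```latex
\begin{aligned}
\alpha^{\mathbf y}(\theta_0)
 &= \int_{\Gamma_1}\!\int p_{\theta_0}(\mathbf y-\mathbf c)\,p_{\mathbf n}(\mathbf c)\,d\mathbf c\,d\mathbf y
  = \int p_{\mathbf n}(\mathbf c)\,F_{\theta_0}(\mathbf c)\,d\mathbf c,
 &F_{\theta_0}(\mathbf c)&=\int_{\Gamma_1} p_{\theta_0}(\mathbf y-\mathbf c)\,d\mathbf y,\\
\beta^{\mathbf y}(\theta_1)
 &= \int p_{\mathbf n}(\mathbf c)\,G_{\theta_1}(\mathbf c)\,d\mathbf c,
 &G_{\theta_1}(\mathbf c)&=\int_{\Gamma_0} p_{\theta_1}(\mathbf y-\mathbf c)\,d\mathbf y,\\
E^{\mathbf y}
 &= \int p_{\mathbf n}(\mathbf c)\,E(\mathbf c)\,d\mathbf c,
 &E(\mathbf c)&= a\!\int_{\Lambda_0}\!F_{\theta_0}(\mathbf c)\,w_0(\theta_0)\,d\theta_0
 + b\!\int_{\Lambda_1}\!G_{\theta_1}(\mathbf c)\,w_1(\theta_1)\,d\theta_1 .
\end{aligned}
```

Each noise-modified error probability is therefore linear in $p_{\mathbf n}$, and the original detector corresponds to $p_{\mathbf n}(\mathbf c)=\delta(\mathbf c)$, which recovers $E(\mathbf 0)$.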

Combining (11), (13) and (15), the optimization problem in (9) and (10) becomes:

subject to

2.2. Sufficient Conditions for Improvability and Non-improvability

In practice, solving the optimization problem in (17) and (18) requires a search over all possible noises, which is computationally demanding. It is therefore worthwhile to determine in advance whether or not the detector can be improved by adding noise. From (17) and (18), a detector is considered improvable if there exists a noise that simultaneously decreases the weighted sum of the average error probabilities below its original value and keeps the maximum type I and II error probabilities within their upper limits; otherwise, the detector is considered non-improvable.
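The improvability definition can be checked directly for any candidate noise with finite support. The sketch below is a hypothetical helper, not the paper's procedure: it assumes finite $\Lambda_0$ and $\Lambda_1$ and a scalar observation, averages the constant-shift error functions over the noise as in (11) and (13), and tests the three conditions. A True result for some noise proves improvability; False for one noise proves nothing.

```python
import math
from typing import Callable, Sequence

ShiftFn = Callable[[float], float]  # error probability as a function of a constant shift c


def noise_improves(
    F: Sequence[ShiftFn],
    G: Sequence[ShiftFn],
    w0: Sequence[float],
    w1: Sequence[float],
    a: float,
    b: float,
    alpha0: float,
    beta0: float,
    values: Sequence[float],
    probs: Sequence[float],
) -> bool:
    """Check the three improvability conditions for a finite-support noise.

    F[k](c) / G[k](c): type I / II error for the k-th parameter value under H0 / H1
    after adding the constant c; w0 / w1: probabilities of those parameter values.
    """
    def avg(fn: ShiftFn) -> float:
        # Noise-modified error = average of the constant-shift error over the noise.
        return sum(p * fn(v) for v, p in zip(values, probs))

    alpha_y = [avg(f) for f in F]
    beta_y = [avg(g) for g in G]
    e_y = a * sum(w * x for w, x in zip(w0, alpha_y)) + b * sum(w * x for w, x in zip(w1, beta_y))
    e_x = a * sum(w * f(0.0) for w, f in zip(w0, F)) + b * sum(w * g(0.0) for w, g in zip(w1, G))
    return e_y < e_x and max(alpha_y) <= alpha0 and max(beta_y) <= beta0


def q_func(z: float) -> float:
    """Gaussian tail probability Q(z) = P(N(0, 1) > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Hypothetical simple test: x ~ N(0,1) under H0, x ~ N(2,1) under H1, decide H1 if y > 1.
F_gauss = [lambda c: q_func(1.0 - c)]
G_gauss = [lambda c: 1.0 - q_func(1.0 - 2.0 - c)]
limits = (F_gauss[0](0.0), G_gauss[0](0.0))  # keep both errors at their original values
print(noise_improves(F_gauss, G_gauss, [1.0], [1.0], 0.5, 0.5, *limits,
                     values=[-0.5, 0.5], probs=[0.5, 0.5]))
```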

Sufficient conditions for non-improvability can be obtained from the properties of the constant-shift error probabilities and weighted sum defined in (12), (14) and (16); they are given in Theorem 1.

Theorem 1.

If there exists () such that () implies for any , where represents the convex set of all possible additive noises, and if ( ) and are convex functions over , then the detector is non-improvable.

The proof is provided in Appendix A.

Under the conditions in Theorem 1, the detector cannot be improved, and it is unnecessary to solve the optimization problem in (17) and (18). In other words, if the conditions in Theorem 1 are satisfied, no additive noise can decrease the weighted sum while satisfying both error-probability constraints. However, a detector that does not satisfy the conditions in Theorem 1 may still be non-improvable, so sufficient conditions for improvability are also needed.

Sufficient conditions for improvability are discussed next. Suppose that the functions defined in (12), (14) and (16) are twice continuously differentiable around the origin, i.e., for small constant shifts. To facilitate the subsequent analysis, six auxiliary functions are defined based on the first and second partial derivatives of these functions with respect to the elements of the constant vector. The first three auxiliary functions, given in (19)–(21), are the weighted sums of the first partial derivatives of these three functions with a coefficient vector $\mathbf{z}$. Specifically:

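where $\mathbf{z}$ is an $N$-dimensional column vector, $\mathbf{z}^T$ is its transpose, and $z_i$ and $c_i$ are the i-th elements of $\mathbf{z}$ and of the constant vector $\mathbf{c}$, respectively. In addition, $\nabla$ denotes the gradient operator, so each gradient in (19)–(21) is an $N$-dimensional column vector whose i-th element is the corresponding first partial derivative with respect to $c_i$, $i=1,\dots,N$. The last three auxiliary functions, given in (22)–(24), are the weighted sums of the second partial derivatives of the same three functions based on a coefficient matrix, i.e.,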

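where $\nabla^2$ denotes the Hessian operator, so each Hessian in (22)–(24) is an $N\times N$ matrix whose $(i,j)$-th element is the corresponding second partial derivative with respect to $c_i$ and $c_j$, $i,j=1,\dots,N$.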
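When the error functions are available only numerically, the auxiliary quantities can be estimated by finite differences along $\mathbf z$. The sketch below assumes, for illustration only, that the coefficient matrix is $\mathbf z\mathbf z^T$, so that the second-order auxiliary functions take the form $\mathbf z^T\nabla^2(\cdot)\,\mathbf z$; the function names are hypothetical. It is checked on a quadratic whose gradient and Hessian are known in closed form.

```python
from typing import Callable, List, Sequence

Vec = Sequence[float]


def _step(c: Vec, z: Vec, h: float) -> List[float]:
    """Return c + h * z."""
    return [ci + h * zi for ci, zi in zip(c, z)]


def first_order_aux(F: Callable[[Vec], float], c: Vec, z: Vec, h: float = 1e-4) -> float:
    """Central-difference estimate of z^T grad F(c) = sum_i z_i dF/dc_i."""
    return (F(_step(c, z, h)) - F(_step(c, z, -h))) / (2.0 * h)


def second_order_aux(F: Callable[[Vec], float], c: Vec, z: Vec, h: float = 1e-3) -> float:
    """Central-difference estimate of z^T (Hessian of F at c) z (assumes coefficient matrix z z^T)."""
    return (F(_step(c, z, h)) - 2.0 * F(list(c)) + F(_step(c, z, -h))) / h ** 2


# Usage on a quadratic: gradient (2c0 + 3c1, 3c0 + 4c1), Hessian [[2, 3], [3, 4]].
def quad(c: Vec) -> float:
    return c[0] ** 2 + 3.0 * c[0] * c[1] + 2.0 * c[1] ** 2


c0, z = [0.5, 1.0], [1.0, 1.0]
print(first_order_aux(quad, c0, z), second_order_aux(quad, c0, z))
```

In practice `F` would be one of the constant-shift error functions evaluated at the origin, and the sign pattern of these directional quantities is what conditions of the Theorem 2 type examine.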

Based on the definitions in (19)–(24), Theorem 2 presents the sufficient conditions for improvability.

Theorem 2.

Suppose that and are the sets of all possible values of that maximize and , respectively, and . The detector is improvable, if there exists a -dimensional column vector that satisfies one of the following conditions for all and :

- (1)

- , , ;

- (2)

- , , ;

- (3)

- , , .

The proof is presented in Appendix B.

Theorem 2 indicates that, under condition (1), (2) or (3), there always exist noises that decrease the weighted sum of the average error probabilities while satisfying the constraints on the type I and II error probabilities. Alternative sufficient conditions for improvability can also be obtained by defining the following two functions:

where and are, respectively, the minimum weighted sum of the two types of average error probabilities and the maximum type II error probability for a given maximum type I error probability, both obtained by adding a constant vector. If there is a such that and , the detector is improvable; in that case, there exists a constant vector that satisfies , and simultaneously. However, in most cases the solution of the optimization problem in (17) and (18) is not a constant vector. A more practical sufficient condition for improvability is given in Theorem 3.
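The constant-vector route to improvability can be tested by a direct search: if some constant shift lowers the weighted sum while keeping both errors within their limits, the detector is improvable. The sketch below is a hypothetical scalar illustration with a Gaussian simple test and relaxed limits $\alpha_0=\beta_0=0.2$ chosen only for the example; a `None` result means no grid point qualifies, not that the detector is non-improvable.

```python
import math
from typing import Callable, Iterable, Optional


def q_func(z: float) -> float:
    """Gaussian tail probability Q(z) = P(N(0, 1) > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def best_constant_shift(
    F: Callable[[float], float],
    G: Callable[[float], float],
    a: float,
    b: float,
    alpha0: float,
    beta0: float,
    grid: Iterable[float],
) -> Optional[float]:
    """Grid constant c minimising E(c) = a F(c) + b G(c) subject to
    F(c) <= alpha0, G(c) <= beta0 and E(c) < E(0); None if no grid point qualifies."""
    best_c, best_e = None, a * F(0.0) + b * G(0.0)
    for c in grid:
        e = a * F(c) + b * G(c)
        if F(c) <= alpha0 and G(c) <= beta0 and e < best_e:
            best_c, best_e = c, e
    return best_c


# Hypothetical example: x ~ N(0,1) under H0, x ~ N(2,1) under H1, decide H1 if y > 1.
def F(c: float) -> float:
    return q_func(1.0 - c)          # type I error after adding the constant c


def G(c: float) -> float:
    return 1.0 - q_func(-1.0 - c)   # type II error: P(2 + x + c <= 1)


grid = [k / 100.0 for k in range(-300, 301)]
c_star = best_constant_shift(F, G, a=0.3, b=0.7, alpha0=0.2, beta0=0.2, grid=grid)
print("improving constant shift:", c_star)
```

With the limits set to the original error probabilities instead, only $c=0$ satisfies both constraints in this monotone example, so no constant shift helps.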

Theorem 3.

Let and be the respective maximum type I and II error probabilities without adding any noise, and suppose that , and . If and are second-order continuously differentiable around , and and hold at the same time, then the detector is improvable.

The proof is given in Appendix C.

Additionally, the following functions and are defined:

A result similar to Theorem 3 is given in Corollary 1.

Corollary 1.

The detector is improvable, if and hold, where and are second-order continuously differentiable around , and .

The proof is similar to that of Theorem 3 and it is omitted here.

2.3. Optimal Additive Noise

In general, it is difficult to solve the optimization problem in (17) and (18) directly, because it requires a search over all possible additive noises. To reduce the computational complexity, Parzen window density estimation can be used to obtain an approximate solution. Specifically, the pdf of the optimal additive noise can be approximated by:

where and , while represents the window function that satisfies for any and for . The window function can be, for example, a cosine, rectangular, or Gaussian window. With (29), the optimization problem reduces to finding the parameter values of each window function, for which global optimization algorithms such as particle swarm optimization (PSO), the ant colony algorithm (ACA), and the genetic algorithm (GA) can be applied [26,27,28].
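A minimal sketch of the Parzen-window parameterization, assuming a scalar noise and a Gaussian window (one of the windows listed above). The weights, centers and width are hypothetical placeholders for the quantities a global optimizer such as PSO or GA would tune; the Riemann sum only confirms that the approximation is a valid pdf.

```python
import math
from typing import Sequence


def gaussian_window(u: float, h: float) -> float:
    """Gaussian window of width h: non-negative and integrates to one."""
    return math.exp(-0.5 * (u / h) ** 2) / (h * math.sqrt(2.0 * math.pi))


def parzen_noise_pdf(n: float, weights: Sequence[float], centers: Sequence[float], h: float) -> float:
    """Approximate noise pdf: sum_l weights[l] * window(n - centers[l]).

    The weights must be non-negative and sum to one for the result to be a pdf.
    """
    return sum(wl * gaussian_window(n - cl, h) for wl, cl in zip(weights, centers))


# Hypothetical parameters; an optimizer would search over (weights, centers, h).
weights = [0.3, 0.7]
centers = [-1.0, 0.5]
h = 0.2

# Riemann-sum check that the approximation integrates to (about) one on [-5, 5].
step = 0.001
total = sum(parzen_noise_pdf(-5.0 + k * step, weights, centers, h) * step for k in range(10_001))
print(f"integral = {total:.6f}")
```

As the width shrinks, each window approaches a Dirac function, so this parameterization contains the finite randomizations of constant vectors discussed next as a limiting case.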

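If the numbers of parameter values in $\Lambda_0$ and $\Lambda_1$ are finite, the optimal additive noise for (17) and (18) is a randomization of no more than $K_0+K_1$ constant vectors. In this case, $\Lambda_0$ and $\Lambda_1$ can be expressed as $\Lambda_0=\{\theta_{01},\dots,\theta_{0K_0}\}$ and $\Lambda_1=\{\theta_{11},\dots,\theta_{1K_1}\}$, where $K_0$ and $K_1$ are finite positive integers. Theorem 4 states this result.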

Theorem 4.

Suppose that each component in the optimal additive noise is finite, namely for , where and are two finite values. If and are continuous functions, the pdf of the optimal additive noise for the optimization problem in (17) and (18) can be expressed as:

where and .

The proof is similar to those of Theorem 4 in [17] and Theorem 3 in [13] and is omitted here. In some special cases, the optimal additive noise can be obtained directly from the characteristics of (). For example, let () and (). If () and (), the optimal additive noise is a constant vector with pdf of . In addition, equality of () holds if ().

3. Noise Enhanced Simple Hypothesis Testing

In this section, the noise-enhanced simple binary hypothesis testing problem is considered, which is a special case of the optimization problem in (9) and (10). Therefore, the conclusions obtained in Section 2 also apply here. In addition, the specific structure of the simple binary hypothesis testing problem yields some further results.

3.1. Problem Formulation

When $\Lambda_0=\{\theta_0\}$ and $\Lambda_1=\{\theta_1\}$, the composite binary hypothesis testing problem described in (1) reduces to a simple binary hypothesis testing problem. In this case, the parameter takes the value $\theta_i$ with probability 1 under $H_i$, $i=0,1$. Therefore, the corresponding noise-modified type I and II error probabilities can be rewritten as:

where $p_0(\cdot)$ and $p_1(\cdot)$ represent the pdfs of $\mathbf{x}$ under $H_0$ and $H_1$, respectively, and $F(\mathbf{c})$ and $G(\mathbf{c})$ are:

Correspondingly, the weighted sum of noise modified type I and II error probabilities is calculated by:

where

As a result, the optimization problem in (9) and (10) becomes:

subject to

Based on the definitions in (33) and (34), $F(\mathbf{c})$ and $G(\mathbf{c})$ are the noise-modified type I and II error probabilities obtained by adding a constant vector $\mathbf{c}$, and $F(\mathbf{0})$ and $G(\mathbf{0})$ are the original type I and II error probabilities, respectively.

3.2. Algorithm for the Optimal Additive Noise

According to Theorem 4 in Section 2.3, the optimal additive noise for the optimization problem in (37) and (38) is a randomization of at most two constant vectors, with pdf $p_{\mathbf{n}}(\mathbf{n})=\lambda\delta(\mathbf{n}-\mathbf{c}_1)+(1-\lambda)\delta(\mathbf{n}-\mathbf{c}_2)$. To find $\lambda$, $\mathbf{c}_1$ and $\mathbf{c}_2$, we first divide the constant vectors into four disjoint sets according to how $F(\mathbf{c})$ compares with $\alpha_0$ and how $G(\mathbf{c})$ compares with $\beta_0$. Specifically, the four disjoint sets are , , , and . Then, we calculate the minimum of $E(\mathbf{c})$ and denote the set of all minimizing values of $\mathbf{c}$ by . It should be noted that is the optimal additive noise that minimizes the weighted sum without constraints.

It is obvious that , and do not exist if all the elements of belong to . In other words, if , there is no additive noise that satisfies under the constraints of and . Therefore, if the detector is improvable, the elements of must come from , and/or . Theorem 5 is now provided to find the values of , and .

Theorem 5.

Let and .

- (1)

- If , then and such that .

- (2)

- If and are true, then we have , , , and .

- (3)

- If , then is obtained when , and the corresponding achieves the minimum and .

- (4)

- If , then is achieved when , and the corresponding and reaches the minimum.

The corresponding proofs are provided in Appendix D.

From parts (3) and (4) of Theorem 5, under the constraints on and , the solution of the optimization problem in (37) and (38) is identical to the additive noise that minimizes () when (). In such cases, the optimal solution can be obtained easily by referring to the algorithm provided in [14].

4. Numerical Results

In this section, a binary hypothesis testing problem is studied to verify the theoretical analysis, and it is:

where is an observation, is a constant or random variable, and is the background noise with pdf . From (39), the pdf of under is , and the pdf of under for a given parameter value is denoted by , where represents the pdf of . A noise-modified observation is obtained by adding independent additive noise to the observation , i.e., . If the additive noise is a constant vector, the pdf of under is calculated as , and the pdf of under for is . In addition, a linear-quadratic detector is used here, given by:

where , and are detector parameters, and γ denotes the detection threshold. In the numerical examples, and , where and are the original type I and II error probabilities, respectively.

4.1. Rayleigh Distribution Background Noise

Suppose that s is a constant; then the problem in (39) is a simple binary hypothesis testing problem. Here, we set and , and the detector becomes

It is assumed that the background noise follows a mixture of zero-mean Rayleigh distributions such that , where for , , and

In the simulations, all Rayleigh components are assumed to have the same variance, i.e., for . In addition, the parameters are specified as , , , , and for . From (33) and (34), the noise-modified type I and II error probabilities obtained by adding a constant vector are calculated as:

where , when ; , when . Accordingly, and . Letting and , the noise-modified weighted sum of the two types of error probabilities obtained by adding a constant vector is .
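The constant-shift error probabilities for a Rayleigh-mixture background noise can be evaluated in closed form from the Rayleigh CDF. The sketch below uses hypothetical values throughout and should not be read as the paper's setup: it assumes $x=w$ under $H_0$ and $x=s+w$ under $H_1$, a detector that decides $H_1$ when $y>\gamma$ with $\gamma=s/2$, and two equally weighted components, each a Rayleigh variable with common σ shifted to zero mean, one of them mirrored. It then locates the unconstrained best constant shift on a grid.

```python
import math
from typing import Sequence

SQRT_HALF_PI = math.sqrt(math.pi / 2.0)


def zero_mean_rayleigh_cdf(t: float, sigma: float, sign: int) -> float:
    """CDF of sign * (R - E[R]) with R ~ Rayleigh(sigma); the component has zero mean."""
    mu = sigma * SQRT_HALF_PI                     # E[R] for a Rayleigh variable
    if sign > 0:                                  # w = R - mu:  P(R <= t + mu)
        r = t + mu
        return 0.0 if r <= 0.0 else 1.0 - math.exp(-r * r / (2.0 * sigma ** 2))
    r = mu - t                                    # w = mu - R:  P(R >= mu - t)
    return 1.0 if r <= 0.0 else math.exp(-r * r / (2.0 * sigma ** 2))


def noise_cdf(t: float, weights: Sequence[float], signs: Sequence[int], sigma: float) -> float:
    return sum(v * zero_mean_rayleigh_cdf(t, sigma, sg) for v, sg in zip(weights, signs))


# Hypothetical parameters (not the values used for the figures).
WEIGHTS, SIGNS, SIGMA = [0.5, 0.5], [1, -1], 1.0
S = 3.0
GAMMA = S / 2.0            # decide H1 when y > GAMMA
A, B = 0.5, 0.5            # weights of the type I and II error probabilities


def type1(c: float) -> float:
    """Type I error P(w + c > GAMMA) under the assumed H0: x = w."""
    return 1.0 - noise_cdf(GAMMA - c, WEIGHTS, SIGNS, SIGMA)


def type2(c: float) -> float:
    """Type II error P(S + w + c <= GAMMA) under the assumed H1: x = S + w."""
    return noise_cdf(GAMMA - S - c, WEIGHTS, SIGNS, SIGMA)


grid = [k / 100.0 for k in range(-300, 301)]
e_grid = [A * type1(c) + B * type2(c) for c in grid]
k_best = min(range(len(grid)), key=e_grid.__getitem__)
print(f"E(0) = {A * type1(0.0) + B * type2(0.0):.4f}, "
      f"unconstrained best c = {grid[k_best]:.2f}, E = {e_grid[k_best]:.4f}")
```

The unconstrained minimizer found this way is a single constant vector, which is exactly the kind of noise that may push one of the two error probabilities above its original value.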

From Section 3.2, the pdf of the optimal additive noise that minimizes the weighted sum of type I and II error probabilities is denoted by , under the two constraints that and . Moreover, the optimal additive noise in the unconstrained case is a constant vector.

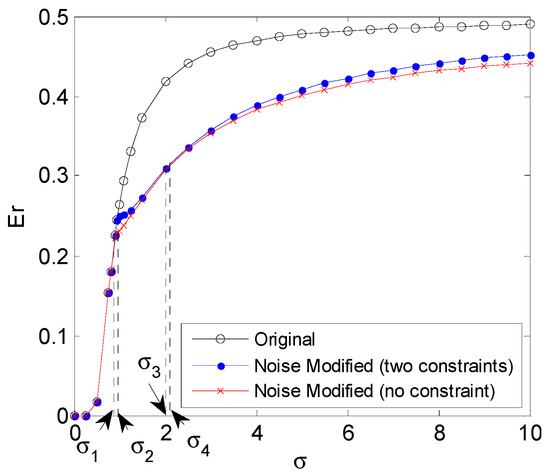

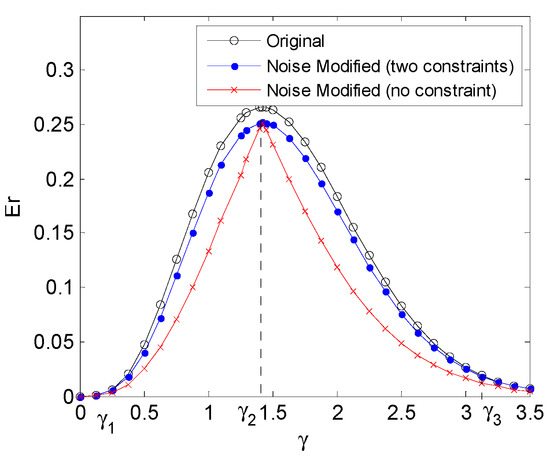

Figure 1 plots the minimum noise-modified weighted sums of type I and II error probabilities obtained with no constraint and with the two constraints that and , together with the original weighted sum without added noise, for different values of σ when s = 3 and γ = s/2. When , no noise decreases the weighted sum. As σ increases, noise begins to improve the detection performance. Specifically, when , the weighted sum can be decreased by adding a constant vector in the unconstrained case. When , the weighted sum can also be decreased by adding noise under the two constraints. The noise-modified weighted sum obtained without constraints is less than or equal to that obtained under the two constraints, and the difference between them first decreases to zero for and then gradually increases when . In addition, once σ exceeds a certain value, no noise can decrease the weighted sum in either case.

Figure 1.

The minimum noise-modified weighted sums of the type I and II error probabilities obtained with no constraint and with two constraints, and the original weighted sum, for different σ when s = 3 and γ = s/2.

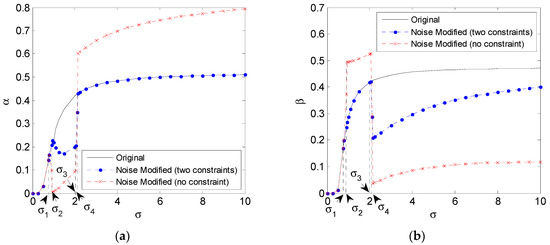

Figure 2 shows the type I and II error probabilities corresponding to the weighted sums in Figure 1. Figures 1 and 2 show that, in the unconstrained case, one of the noise-modified type I and II error probabilities is worse than its original value. Therefore, although the noise-modified weighted sum obtained without constraints is smaller than that obtained under the two constraints, the corresponding noise is not actually suitable for adding to the observation. Furthermore, when the minimum noise-modified weighted sum is obtained under the two constraints, the corresponding type II error probability equals the original one and the type I error probability reaches its minimum for . Conversely, when , the corresponding type I error probability equals the original one and the type II error probability reaches its minimum. These results are consistent with parts (3) and (4) of Theorem 5. In particular, for , the minimum noise-modified weighted sum obtained without constraints equals that obtained under the two constraints, and the corresponding type I and II error probabilities are the same, which also agrees with part (2) of Theorem 5. To further illustrate the results in Figures 1 and 2, Table 1 lists the optimal additive noises for the two cases.

Figure 2.

The type I (a) and II (b) error probabilities corresponding to the weighted sum in Figure 1.

Table 1.

The optimal additive noises that minimize the weighted sum under two constraints and no constraint for various σ where s = 3 and γ = s/2.

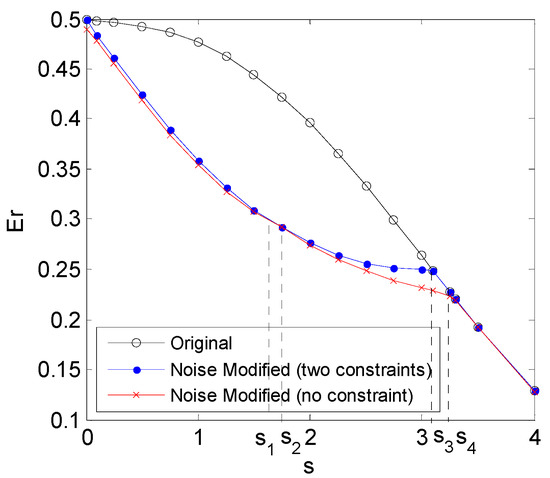

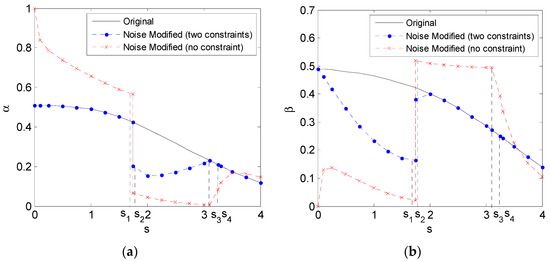

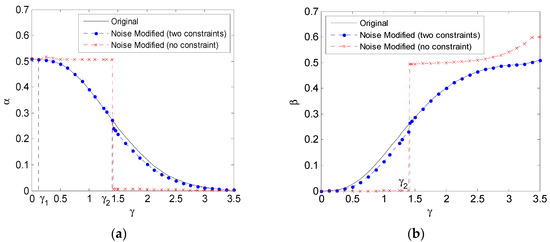

Figure 3 depicts the minimum noise-modified weighted sums of the type I and II error probabilities versus s for the unconstrained and two-constraint cases, together with the original weighted sum, when σ = 1 and γ = s/2. The corresponding type I and II error probabilities are depicted in Figure 4a,b, respectively. As seen in Figure 3, the improvement in the weighted sum obtained by adding noise first increases and then decreases as s increases, and finally all curves converge to the same value. The difference between the cases with and without constraints is very small in most cases. In a small interval of s, i.e., , the difference even decreases to zero. On the other hand, the noise-modified type I error probability obtained without constraints is significantly greater than the original one for , while the corresponding type II error probability is less than that obtained under the two constraints. The situation is reversed for . When , no noise decreases the weighted sum under the two constraints, while the weighted sum can still be decreased by adding a constant vector in the unconstrained case. When , the weighted sum cannot be decreased by adding any noise in either case. Furthermore, Table 2 lists the optimal additive noises that minimize the weighted sum with no constraint and with two constraints.

Figure 3.

The minimum noise-modified weighted sums of the type I and II error probabilities obtained with no constraint and with two constraints, and the original weighted sum, for different s when σ = 1 and γ = s/2.

Figure 4.

The type I and II error probabilities corresponding to the weighted sum in Figure 3 are shown in (a) and (b), respectively.

Table 2.

The optimal additive noises that minimize the weighted sum under two constraints and no constraint for various s where σ = 1 and γ = s/2.

Figure 5 shows the minimum noise-modified weighted sums of type I and II error probabilities versus for the unconstrained and two-constraint cases, together with the original weighted sum, when σ = 1 and s = 3. The corresponding type I and II error probabilities are depicted in Figure 6a,b, respectively. As illustrated in Figure 5, when is close to zero, the original weighted sum approaches zero, and no additive noise can decrease it. For the case of two constraints, the improvement in the weighted sum first increases for and then decreases for , and no improvement can be obtained when . On the other hand, the minimum noise-modified weighted sum obtained without constraints is smaller than that obtained under the two constraints for , and the difference between them first increases and then decreases for both and . When , a constant vector that decreases the weighted sum still exists, but it may not be a suitable noise in practice, given the type II error probability depicted in Figure 6b. To further study the results in Figures 5 and 6, Table 3 lists the optimal additive noises that minimize the weighted sum with no constraint and with two constraints.

Figure 5.

The minimum noise modified weighted sums of the type I and II error probabilities obtained under no constraint and two constraints, and the original weighted sum for different when σ = 1 and s = 3.

Figure 6.

The type I and II error probabilities corresponding to the weighted sum in Figure 5 are shown in (a) and (b), respectively.

Table 3.

The optimal additive noises that minimize the weighted sum under two constraints and no constraint for various where σ = 1 and s = 3.

4.2. Gaussian Mixture Background Noise

Suppose that is a random variable with the following pdf:

Therefore, we have and . In the simulations, we set , , and , and the detector is expressed as:

Moreover, we assume that is a zero-mean symmetric Gaussian mixture noise with pdf of , where , and:

Let , and set the mean values of the symmetric Gaussian components to [0.05 0.52 −0.52 −0.05] with corresponding weights [0.35 0.15 0.15 0.35]. In addition, all Gaussian components have the same variance, i.e., for . According to (12) and (14), the noise-modified type I error probability obtained by adding a constant vector to is calculated by:

and the corresponding type II error probabilities for and are respectively calculated as:

where . Accordingly:

Therefore, the original type I and type II error probabilities for and are , and , respectively.

Due to the symmetry property of , one obtains . In this case, the original average type II error probability is . The noise modified weighted sum of type I and average type II error probabilities corresponding to the constant vector is expressed by . The values of and are still specified as and , respectively. From Theorem 4 in Section 2.3, the optimal additive noise that minimizes the weighted sum is a randomization with a pdf of , where for , and .
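The symmetry argument can be checked numerically with the mixture means and weights given above. Several ingredients of this sketch are assumptions, not the paper's detector: it assumes $x=w$ under $H_0$ and $x=\pm s+w$ under $H_1$ with $P(+s)=\rho$, a symmetric hypothetical detector that decides $H_1$ when $|y|>t$ with a hypothetical threshold $t$, and equal hypothetical weights for the two error types. With σ = 0.08, s = 1 and ρ = 0.6 as in Figure 8, the type II error probabilities for $s$ and $-s$ coincide when no noise is added.

```python
import math

MEANS = [0.05, 0.52, -0.52, -0.05]   # component means from the text
WEIGHTS = [0.35, 0.15, 0.15, 0.35]   # component weights from the text


def mix_cdf(t: float, sigma: float) -> float:
    """CDF of the symmetric Gaussian-mixture background noise."""
    return sum(
        v * 0.5 * (1.0 + math.erf((t - m) / (sigma * math.sqrt(2.0))))
        for v, m in zip(WEIGHTS, MEANS)
    )


def type1(c: float, sigma: float, t: float) -> float:
    """P(|w + c| > t): type I error of the hypothetical detector |y| > t."""
    return 1.0 - (mix_cdf(t - c, sigma) - mix_cdf(-t - c, sigma))


def type2(theta: float, c: float, sigma: float, t: float) -> float:
    """P(|theta + w + c| <= t): type II error for signal value theta (assumed model)."""
    return mix_cdf(t - theta - c, sigma) - mix_cdf(-t - theta - c, sigma)


def weighted_sum(c: float, sigma: float, t: float, s: float, rho: float, a: float, b: float) -> float:
    """a * type I + b * average type II, assuming P(theta = s) = rho, P(theta = -s) = 1 - rho."""
    avg_type2 = rho * type2(s, c, sigma, t) + (1.0 - rho) * type2(-s, c, sigma, t)
    return a * type1(c, sigma, t) + b * avg_type2


SIGMA, S, RHO, T = 0.08, 1.0, 0.6, 0.5   # T (detector threshold) is hypothetical
beta_pos = type2(S, 0.0, SIGMA, T)
beta_neg = type2(-S, 0.0, SIGMA, T)
print(f"beta_s(0) = {beta_pos:.6f}, beta_-s(0) = {beta_neg:.6f}")
print(f"E(0) = {weighted_sum(0.0, SIGMA, T, S, RHO, 0.5, 0.5):.6f}")
```

Once a nonzero constant is added, the two type II error probabilities generally differ, which is why the constraint must be enforced for each parameter value separately.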

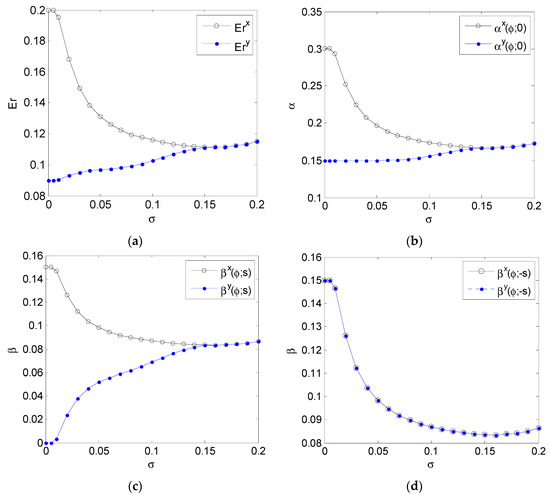

Figure 7 shows the detection performance of the original detector and of the noise-enhanced detector that minimizes the weighted sum of the type I and average type II error probabilities under the constraints that and , for different values of σ, where s = 1 and ρ = 0.6. The minimum achievable noise-modified weighted sum is plotted in Figure 7a, and the corresponding type I error probability and type II error probabilities for s and −s are depicted in Figure 7b–d, respectively.

Figure 7.

The weighted sums, type I error probabilities, and type II error probabilities for s and −s of the original detector and the noise-enhanced detector for different σ where s = 1 and ρ = 0.6 are shown in (a), (b), (c) and (d), respectively.

From Figure 7, the original weighted sum, type I error probability, and type II error probabilities for s and −s increase as σ decreases towards zero. In Figure 7a, when σ is close to zero, the weighted sum can be decreased significantly. As σ increases, the improvement obtained by adding noise gradually decreases to zero; in other words, noise-enhanced detection cannot occur once σ exceeds a certain value. In Figure 7b, the noise-modified type I error probability stays at 0.1500 for and then increases gradually until it equals the original type I error probability. Moreover, the noise-modified type II error probability for s corresponding to the minimum weighted sum increases from zero to that of the original detector, as shown in Figure 7c, while the type II error probability for −s of the noise-enhanced detector always equals that of the original detector. In fact, the type II error probability for −s also reaches its minimum under the constraints that and in this example. Table 4 lists the optimal additive noises that minimize the weighted sum for different values of σ to explain the results in Figure 7. It should be noted that the optimal noise is not unique.

Table 4.

The optimal additive noises that minimize the weighted sum under two constraints for various σ where s = 1 and ρ = 0.6.

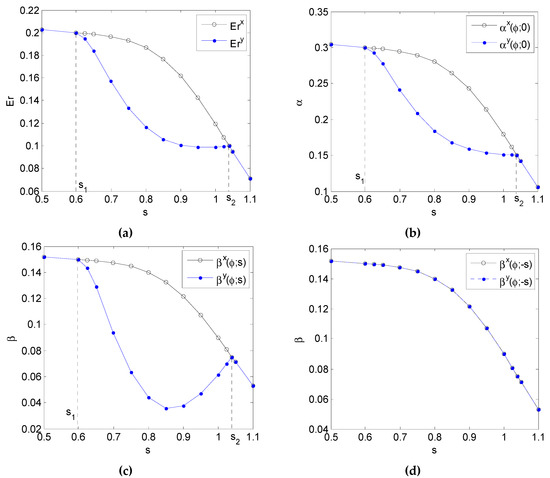

Figure 8a shows the weighted sums of type I and average type II error probabilities of the original detector and the noise enhanced detector versus s, where σ = 0.08 and ρ = 0.6. The corresponding type I error probability and type II error probabilities for and are depicted in Figure 8b–d, respectively. From Figure 8a, the weighted sum cannot be decreased under the constraints on the different error probabilities for and . Conversely, for , there exists additive noise that satisfies the constraints and reduces the weighted sum, and the corresponding improvement first increases and then decreases as s increases. Comparing Figure 8b with Figure 8a, the noise modified type I error probability changes in a similar way to the noise modified weighted sum. In Figure 8c, the noise modified type II error probability for first decreases to its minimum and then increases as s increases, while the type II error probability for of the noise modified detector is always equal to that of the original detector, as shown in Figure 8d. To further illustrate the results in Figure 8, Table 5 shows the optimal noises that minimize the weighted sum under the two constraints.

Figure 8.

The weighted sums, type I error probabilities, and type II error probabilities for and −s of the original detector and the noise enhanced detector for different s where σ = 0.08 and ρ = 0.6 shown in (a), (b), (c) and (d), respectively.

Table 5.

The optimal additive noises that minimize the weighted sum under two constraints for various s where σ = 0.08 and ρ = 0.6.

5. Conclusions

In this paper, a noise-enhanced detection problem has been investigated for general composite hypothesis testing. The weighted sum of the average type I and II error probabilities has been minimized by adding independent noise, subject to constraints on the type I and II error probabilities. Sufficient conditions for the improvability of the weighted sum are provided, and a simple algorithm to search for the optimal noise is developed. Additional theoretical results are then derived from the specific structure of the binary simple hypothesis testing problem. The studies on different noise distributions confirm the theoretical analysis that the optimal additive noise indeed minimizes the weighted sum under certain conditions. It should be noted that the theoretical results can also be extended to a broad class of noise enhanced optimization problems under two inequality constraints, such as the minimization of the Bayes risk under different constraints on the conditional risks for a binary hypothesis testing problem.

Acknowledgments

This research is partly supported by the Basic and Advanced Research Project in Chongqing (Grant No. cstc2016jcyjA0134, No. cstc2016jcyjA0043), the National Natural Science Foundation of China (Grant No. 61501072, No. 41404027, No. 61571069, No. 61675036, No. 61471073) and the Project No. 106112017CDJQJ168817 supported by the Fundamental Research Funds for the Central Universities.

Author Contributions

Shujun Liu raised the idea of the framework to solve noise enhanced detection problems. Ting Yang and Shujun Liu contributed to the drafting of the manuscript, interpretation of the results, and some experimental design, and checked the manuscript. Kui Zhang contributed to developing the algorithm for finding the corresponding optimal noise, and Ting Yang contributed to the proofs of the theorems developed in this paper. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

Proof.

Due to the convexity of and according to Jensen’s inequality, the type I error probability in (4) is calculated as:

The contradiction method is utilized to prove this theorem. Suppose that the detector can be improved by adding noise. The improvability means that for any , and then from (A1). Since , implies based on the assumption in Theorem 1, (15) can be recalculated as:

where the first inequality holds according to the convexity of . From (A1) and (A2), the inequality cannot be achieved by adding any noise under the conditions presented in Theorem 1. Therefore, the detector is nonimprovable, which contradicts the assumption. Similarly, the alternative conditions for nonimprovability stated in the parentheses can also be proved. □
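Jensen's inequality, as used in this proof, needs only convexity: for any noise pdf, the average of a convex function is at least its value at the noise mean. The Python sketch below checks this for a discrete noise pdf. The functions `convex_g` and `concave_g` are hypothetical stand-ins rather than the paper's error-probability functions; the concave case shows that the bound can fail once convexity is dropped.

```python
def expectation(g, comps):
    """E[g(N)] for a discrete noise pdf given as (probability, value) pairs."""
    return sum(p * g(n) for p, n in comps)

def jensen_holds(g, comps, tol=1e-12):
    """Check E[g(N)] >= g(E[N]) up to a small floating-point tolerance."""
    mean = sum(p * n for p, n in comps)
    return expectation(g, comps) >= g(mean) - tol

def convex_g(n):
    return (n - 0.3) ** 2 + 0.1   # hypothetical convex function

def concave_g(n):
    return -n * n                 # hypothetical concave function

comps = [(0.5, -1.0), (0.5, 1.0)]  # symmetric two-point noise with mean 0
print(jensen_holds(convex_g, comps), jensen_holds(concave_g, comps))  # True False
```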

Appendix B. Proof of Theorem 2

Proof.

According to the definitions in (9) and (10), improvability of a detector means that there exists at least one pdf that satisfies three conditions, i.e., , for any and for any . Suppose that the noise pdf consists of infinitesimal noise components, i.e., . The three conditions can be rewritten as follows:

Since , , is infinitesimal, , and can be expressed approximately with Taylor series expansion as , and , where (,) and (,) are the gradient and the Hessian matrix of (,) around , respectively. Therefore, (A3)–(A5) are rewritten as:

Let be expressed by , where is a -dimensional real vector and is an infinitesimal real value, . Accordingly, one obtains:

Based on the definitions given in (19)–(24), (A9)–(A11) are simplified as:

where . When for and for , the right-hand side of (A13) approaches plus infinity for . Similarly, when for and for , the right-hand side of (A14) also approaches plus infinity for . Therefore, we only need to consider the cases of and . In doing so, (A12)–(A14) become:

It is obvious that can be set as any real value by choosing appropriate and . As a result, (A15)–(A17) can be satisfied by selecting a suitable value of under each condition in Theorem 2. That is:

- (1) Inequalities (A15)–(A17) can be satisfied by setting as a sufficiently large positive number, if , , hold.

- (2) Inequalities (A15)–(A17) can be satisfied by setting as a sufficiently large negative number, if , , hold.

- (3) Inequalities (A15)–(A17) can be satisfied by setting as zero, if , , hold. □
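The second-order Taylor approximation used in this proof can be checked numerically. For a noise pdf whose components are all small, the average of a smooth function is close to its value at the unperturbed point, plus the gradient term, plus one half of the Hessian quadratic form. In the Python sketch below, the Gaussian-shaped function `f`, the evaluation point, and the three-component noise pdf are hypothetical; they are not the paper's error-probability functions.

```python
import math

def f(x: float, y: float) -> float:
    """Hypothetical smooth stand-in for a constant-noise error probability."""
    return math.exp(-(x * x + y * y))

def grad_hess(x: float, y: float):
    """Analytic gradient and Hessian entries (hxx, hxy, hyy) of f at (x, y)."""
    v = f(x, y)
    grad = (-2.0 * x * v, -2.0 * y * v)
    hess = ((4.0 * x * x - 2.0) * v, 4.0 * x * y * v, (4.0 * y * y - 2.0) * v)
    return grad, hess

def expected_exact(x, y, comps):
    """E[f(x + n1, y + n2)] for a discrete noise pdf of (prob, (n1, n2)) pairs."""
    return sum(p * f(x + a, y + b) for p, (a, b) in comps)

def expected_taylor(x, y, comps):
    """Average of f + grad.n + 0.5 * n^T H n over the noise components."""
    (gx, gy), (hxx, hxy, hyy) = grad_hess(x, y)
    total = f(x, y)  # valid because the probabilities sum to one
    for p, (a, b) in comps:
        linear = gx * a + gy * b
        quadratic = 0.5 * (hxx * a * a + 2.0 * hxy * a * b + hyy * b * b)
        total += p * (linear + quadratic)
    return total

eps = 0.01  # scale of the "infinitesimal" noise components
comps = [(0.5, (eps, -eps)), (0.3, (-eps, 0.5 * eps)), (0.2, (0.0, 1.5 * eps))]
exact = expected_exact(0.3, -0.2, comps)
approx = expected_taylor(0.3, -0.2, comps)
print(abs(exact - approx))  # remaining error is third order in eps
```

Keeping only the gradient term leaves an error of the order of eps squared, which is why the Hessian term cannot be dropped when the mean of the noise is small.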

Appendix C. Proof of Theorem 3

Proof.

Since and are second-order continuously differentiable around , there exists a such that and for . If one adds a noise with pdf , where and , to the original observation , the maximum values of corresponding noise modified type I and II error probabilities are:

In addition:

One obtains because according to the definition of . As a result, the detector is improvable. □

Appendix D. Proof of Theorem 5

Proof.

Part (1): If , any satisfies the constraints of and based on the definition of and according to the definition of .

Part (2): If and simultaneously, there exists and such that based on the definition of . In order to meet the constraints that and , the noise components , and should satisfy the following two inequalities:

Consequently, and according to the definitions of and . If , the noise with pdf can minimize and satisfy the two inequalities, and .

Part (3): If , the optimal additive noise is not a constant vector, i.e., . Therefore, one of and belongs to and the second one comes from or . In addition, , and should also satisfy the two constraints in (A21) and (A22).

First, suppose that and ; then (A21) holds based on the definitions of and . We therefore need only consider the constraint in (A22), which implies . It is true that and according to the definition of . If , we have , which contradicts the definition of . Hence, and the minimum of is obtained when .

Next, suppose that and . The two inequalities in (A21) and (A22) require that . If , the minimum of is obtained when . In this case, there exists a noise with pdf that satisfies and simultaneously, where and . Therefore, since , which contradicts the definition of . As a result, and the minimum of is obtained when .

When , one obtains . In other words, the minimum of is obtained when achieves the minimum and . Accordingly, one obtains .

Part (4): The proof of Part (4) is similar to that of Part (3) and it is omitted here. □
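When one constraint is to be met with equality by a two-point noise pdf λδ(n − n1) + (1 − λ)δ(n − n2), the weight λ follows from a single linear equation. The reason is that the noise-modified error probability is λ times its value at n1 plus (1 − λ) times its value at n2. The short Python helper below solves λ·f(n1) + (1 − λ)·f(n2) = bound for λ. The notation n1, n2, λ, `f_n1`, `f_n2`, and `bound` is ours, and the numbers in the usage line are hypothetical, chosen only to illustrate the arithmetic.

```python
def mixing_weight(f_n1: float, f_n2: float, bound: float) -> float:
    """Return lam in [0, 1] with lam*f_n1 + (1 - lam)*f_n2 == bound."""
    if f_n1 == f_n2:
        raise ValueError("the two constant-noise values must differ")
    lam = (bound - f_n2) / (f_n1 - f_n2)
    if not 0.0 <= lam <= 1.0:
        raise ValueError("bound must lie between f_n1 and f_n2")
    return lam

lam = mixing_weight(0.05, 0.30, 0.15)  # hypothetical error values and bound
print(round(lam, 6))  # 0.6
```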

References

- DeGroot, M.H.; Schervish, M.J. Probability and Statistics, 4th ed.; Addison-Wesley: Boston, MA, USA, 2011.

- Pericchi, L.; Pereira, C. Adaptative significance levels using optimal decision rules: Balancing by weighting the error probabilities. Braz. J. Probab. Stat. 2016, 30, 70–90.

- Benzi, R.; Sutera, A.; Vulpiani, A. The mechanism of stochastic resonance. J. Phys. A Math. 1981, 14, 453–457.

- Patel, A.; Kosko, B. Noise benefits in quantizer-array correlation detection and watermark decoding. IEEE Trans. Signal Process. 2011, 59, 488–505.

- Han, D.; Li, P.; An, S.; Shi, P. Multi-frequency weak signal detection based on wavelet transform and parameter compensation band-pass multi-stable stochastic resonance. Mech. Syst. Signal Process. 2016, 70–71, 995–1010.

- Addesso, P.; Pierro, V.; Filatrella, G. Interplay between detection strategies and stochastic resonance properties. Commun. Nonlinear Sci. Numer. Simul. 2016, 30, 15–31.

- Gingl, Z.; Makra, P.; Vajtai, R. High signal-to-noise ratio gain by stochastic resonance in a double well. Fluct. Noise Lett. 2001, 1, L181–L188.

- Makra, P.; Gingl, Z. Signal-to-noise ratio gain in non-dynamical and dynamical bistable stochastic resonators. Fluct. Noise Lett. 2002, 2, L147–L155.

- Makra, P.; Gingl, Z.; Fulei, T. Signal-to-noise ratio gain in stochastic resonators driven by coloured noises. Phys. Lett. A 2003, 317, 228–232.

- Duan, F.; Chapeau-Blondeau, F.; Abbott, D. Noise-enhanced SNR gain in parallel array of bistable oscillators. Electron. Lett. 2006, 42, 1008–1009.

- Mitaim, S.; Kosko, B. Adaptive stochastic resonance in noisy neurons based on mutual information. IEEE Trans. Neural Netw. 2004, 15, 1526–1540.

- Patel, A.; Kosko, B. Mutual-Information Noise Benefits in Brownian Models of Continuous and Spiking Neurons. In Proceedings of the 2006 International Joint Conference on Neural Networks, Vancouver, BC, Canada, 16–21 July 2006; pp. 1368–1375.

- Chen, H.; Varshney, P.K.; Kay, S.M.; Michels, J.H. Theory of the stochastic resonance effect in signal detection: Part I – fixed detectors. IEEE Trans. Signal Process. 2007, 55, 3172–3184.

- Patel, A.; Kosko, B. Optimal noise benefits in Neyman–Pearson and inequality constrained signal detection. IEEE Trans. Signal Process. 2009, 57, 1655–1669.

- Bayram, S.; Gezici, S. Stochastic resonance in binary composite hypothesis-testing problems in the Neyman–Pearson framework. Digit. Signal Process. 2012, 22, 391–406.

- Bayram, S.; Gultekin, S.; Gezici, S. Noise enhanced hypothesis-testing according to restricted Neyman–Pearson criterion. Digit. Signal Process. 2014, 25, 17–27.

- Bayram, S.; Gezici, S.; Poor, H.V. Noise enhanced hypothesis-testing in the restricted Bayesian framework. IEEE Trans. Signal Process. 2010, 58, 3972–3989.

- Bayram, S.; Gezici, S. Noise enhanced M-ary composite hypothesis-testing in the presence of partial prior information. IEEE Trans. Signal Process. 2011, 59, 1292–1297.

- Chen, H.; Varshney, L.R.; Varshney, P.K. Noise-enhanced information systems. Proc. IEEE 2014, 102, 1607–1621.

- Weber, J.F.; Waldman, S.D. Stochastic Resonance is a Method to Improve the Biosynthetic Response of Chondrocytes to Mechanical Stimulation. J. Orthop. Res. 2015, 34, 231–239.

- Duan, F.; Chapeau-Blondeau, F.; Abbott, D. Non-Gaussian noise benefits for coherent detection of narrow band weak signal. Phys. Lett. A 2014, 378, 1820–1824.

- Lu, Z.; Chen, L.; Brennan, M.J.; Yang, T.; Ding, H.; Liu, Z. Stochastic resonance in a nonlinear mechanical vibration isolation system. J. Sound Vib. 2016, 370, 221–229.

- Rossi, P.S.; Ciuonzo, D.; Ekman, T.; Dong, H. Energy Detection for MIMO Decision Fusion in Underwater Sensor Networks. IEEE Sens. J. 2015, 15, 1630–1640.

- Rossi, P.S.; Ciuonzo, D.; Kansanen, K.; Ekman, T. Performance Analysis of Energy Detection for MIMO Decision Fusion in Wireless Sensor Networks Over Arbitrary Fading Channels. IEEE Trans. Wirel. Commun. 2016, 15, 7794–7806.

- Ciuonzo, D.; de Maio, A.; Rossi, P.S. A Systematic Framework for Composite Hypothesis Testing of Independent Bernoulli Trials. IEEE Signal Process. Lett. 2015, 22, 1249–1253.

- Parsopoulos, K.E.; Vrahatis, M.N. Particle Swarm Optimization Method for Constrained Optimization Problems; IOS Press: Amsterdam, The Netherlands, 2002; pp. 214–220.

- Hu, X.; Eberhart, R. Solving constrained nonlinear optimization problems with particle swarm optimization. In Proceedings of the Sixth World Multiconference on Systemics, Cybernetics and Informatics, Orlando, FL, USA, 14–18 July 2002.

- Price, K.V.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer: New York, NY, USA, 2005.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).