Abstract

Kidney disease poses a significant global health challenge, affecting millions and straining healthcare systems due to limited nephrology resources. This paper examines the transformative potential of Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs) in addressing critical challenges in kidney care. GenAI supports research and early interventions through the generation of synthetic medical data. LLMs enhance clinical decision-making by analyzing medical texts and electronic health records, while LVMs improve diagnostic accuracy through advanced medical image analysis. Together, these technologies show promise for advancing patient education, risk stratification, disease diagnosis, and personalized treatment strategies. This paper highlights key advancements in GenAI, LLMs, and LVMs from 2018 to 2024, focusing on their applications in kidney care and presenting common use cases. It also discusses their limitations, including knowledge cutoffs, hallucinations, contextual understanding challenges, data representation biases, computational demands, and ethical concerns. By providing a comprehensive analysis, this paper outlines a roadmap for integrating these AI advancements into nephrology, emphasizing the need for further research and real-world validation to fully realize their transformative potential.

1. Introduction

Kidneys are vital, bean-shaped organs that filter waste from the blood for excretion as urine, maintain electrolyte balance, regulate blood pressure, and produce hormones that control red blood cell production and calcium metabolism [1,2]. Disruption of kidney function can lead to severe health complications, including chronic kidney disease (CKD) and end-stage renal disease (ESRD).

CKD affects over 800 million people worldwide, approximately 10% of the global population [3]. Its incidence continues to rise, driven by factors such as diabetes, hypertension, and population aging. CKD is the 12th leading cause of death worldwide, and CKD and ESRD together account for nearly 2.5 million deaths annually [4].

As kidney disease cases rise globally, the demand for nephrologists and timely treatment increases. A major challenge in managing kidney disease is the shortage of nephrologists, particularly in developing countries [5]. The 2021 Global Kidney Health Atlas reported that 80% of countries fail to meet the World Health Assembly’s goal for adequate nephrology care, leading to delays in diagnosis and treatment [6]. This highlights the need for emerging technologies, such as Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs), which offer promising solutions to address these challenges.

GenAI can create synthetic medical data for research and drug discovery. LLMs excel in processing medical texts and analyzing patient records, supporting clinical decision-making and diagnosis. LVMs enhance the analysis of medical images, improving diagnostic accuracy, especially in kidney disease detection. These technologies can address diagnostic challenges, enable earlier detection, and support personalized treatment. By analyzing medical literature and electronic health records (EHRs), LLMs offer insights and treatment suggestions, while GenAI simulates treatment responses and creates predictive models for early intervention.

The objective of this paper is to explore the current state of Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs), and their applications in kidney care from 2018 to 2024. The year 2018 marks a pivotal point in AI development, with the introduction of OpenAI’s Generative Pre-trained Transformer (GPT) models, which have significantly advanced natural language processing capabilities. While several research papers have discussed the individual applications of GenAI, LLMs, and LVMs in healthcare, to the best of our knowledge, no study has comprehensively examined all three technologies together. This paper aims to fill that gap by analyzing the combined potential of these AI technologies in addressing the limitations posed by the shortage of nephrologists and the growing global demand for kidney care. By presenting a unified exploration of these three advanced technologies, this article seeks to offer a broader perspective on their collective impact on improving kidney care practices. The main research objectives of this paper are:

- Understand Key Technologies: Provide an overview of GenAI, LLMs, and LVMs as of 2024. This includes explaining the core principles of each technology, their advancements over recent years, and their current capabilities.

- Application in Kidney Care: Review current applications of these technologies to improve diagnostics, treatment, and patient management in kidney care.

- Present Common Use Cases: Highlight use cases where these technologies can be effectively implemented.

- Address Limitations and Future Directions: Analyze current limitations of these technologies and propose future research areas for advancing their applications in the field.

The paper is structured as follows: Section 2 outlines the research methodology. Section 3 provides background on GenAI, LLMs, and LVMs along with their advancements. Section 4 explores recent applications of GenAI, LLMs, and LVMs in kidney care. Section 5 focuses on technology-centered discussions. Section 6 illustrates related use cases. Section 7 discusses the limitations of these technologies, and Section 8 concludes the paper with future work.

2. Research Methodology

This survey explores the applications of GenAI, LLMs, and LVMs in kidney care. Relevant publications were sourced from databases such as PubMed, IEEE Xplore, Scopus, Google Scholar, ACM Digital Library, and ScienceDirect using targeted keywords, including "Generative AI in kidney care", "LLMs for kidney diagnostics", "AI in nephrology", "Kidney transplant prediction using ChatGPT", "LLM in chronic kidney disease management", "NLP in nephrology", "LVM for patient education", "GenAI in kidney imaging analysis", "LLMs for kidney function prediction", "Generative models for medical data", and "AI-assisted kidney diagnosis using LLM and LVM". These keywords facilitated a comprehensive review of the literature to assess the role of AI in advancing kidney disease diagnosis, treatment, and management.

Note on Terminology: The keyword renal was excluded from the search strategy to align with current nomenclature recommendations favoring the term kidney [7]. This shift, supported by initiatives such as those from Kidney Disease: Improving Global Outcomes (KDIGO), aims to enhance clarity and patient understanding by using more widely recognized terminology [8].

Data Retrieval and Screening: As of December 2024, a total of 50 publications were retrieved through keyword searches. The initial screening involved removing duplicate records across databases using metadata matching based on titles, authors, DOI, and publication year, which resulted in 25 unique articles. These were then assessed for relevance based on predefined inclusion and exclusion criteria. Following a full-text review, 17 articles were selected for inclusion in the final synthesis.
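The duplicate-removal step can be illustrated with a short Python sketch. The record fields (`title`, `authors`, `year`, `doi`), the rule of treating two records as duplicates when they share a DOI or share a normalized title, first author, and year, and all sample values are assumptions for illustration, not the exact procedure used in this review.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase and collapse punctuation/whitespace so near-identical titles compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def metadata_key(rec: dict) -> tuple:
    """Fallback key: normalized title + first author + publication year (assumed fields)."""
    first_author = str(rec.get("authors") or "").split(";")[0].strip().lower()
    return (normalize_title(str(rec.get("title", ""))), first_author, str(rec.get("year", "")))


def deduplicate(records: list) -> list:
    """Keep the first occurrence of each article, matching on DOI or on the metadata key."""
    seen_dois, seen_meta, unique = set(), set(), []
    for rec in records:
        doi = str(rec.get("doi") or "").strip().lower()  # DOIs are case-insensitive
        meta = metadata_key(rec)
        if (doi and doi in seen_dois) or meta in seen_meta:
            continue  # already retrieved from another database
        if doi:
            seen_dois.add(doi)
        seen_meta.add(meta)
        unique.append(rec)
    return unique
```

Matching on either key lets a record that lacks a DOI in one database still be recognized as a duplicate of the same record exported with a DOI from another.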

- Inclusion Criteria:

  - Articles in English, published between January 2020 and December 2024, discussing the application of GenAI, LLMs, and LVMs in kidney care, including peer-reviewed journal articles, conference papers, and reputable preprints.

- Exclusion Criteria:

  - Non-English articles.
  - Publications without full-text access.
  - Studies not specifically focused on the application of GenAI, LLMs, and LVMs in kidney care.
  - Opinion pieces, editorials, and commentaries lacking empirical data.

Synthesis of Findings: The findings were synthesized to provide an overview of the current applications of GenAI, LLMs, and LVMs in kidney care, highlighting key use cases, limitations, and potential directions for future research.

3. Background

GenAI, LLMs, and LVMs have evolved significantly over the past few years, with key advancements in technology, algorithms, and applications. This section presents a detailed history of their development up to the present. The inclusion of solutions, techniques, and models in the background is guided by a technological progression framework. Developments from 2018 to 2024 were selected based on their significant contributions to the evolution of Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs), particularly those that marked turning points in capability, architecture, or adoption. Priority was given to foundational models, landmark architectures, and innovations. This chronological approach enables a coherent narrative of advancement, contextualizing the current state of the field and establishing the basis for their applicability in nephrology-related tasks.

3.1. The Era of Pretrained Language Models (2018–2020)

From 2018 to 2020, pretrained language models, particularly Generative Pretrained Transformer (GPT) and Bidirectional Encoder Representations from Transformers (BERT), transformed natural language processing and laid the groundwork for modern generative AI. These models, based on transformer architectures, enabled significant improvements in natural language processing (NLP) tasks such as text generation, translation, and summarization, setting the stage for future advancements [9].

3.1.1. Generative Pretrained Transformer (GPT)

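Developed by OpenAI in 2018, GPT uses unidirectional (left-to-right) pretraining [10]. It is trained through autoregressive language modeling, where each word is predicted based on the preceding ones:

$$\mathcal{L}_{\text{AR}}(\theta) = -\sum_{t=1}^{T} \log P_\theta(w_t \mid w_1, \dots, w_{t-1})$$

where $w_t$ represents the word predicted at position $t$, $T$ is the sequence length, and $\theta$ denotes the model parameters. This design enables strong performance in text generation and continuation tasks.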
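The autoregressive objective described above can be made concrete with a minimal NumPy sketch that computes the negative log-likelihood of one sequence. It assumes the per-position scores (logits) have already been produced by a causally masked model, so that row $t$ depends only on the tokens before position $t$; the function name is illustrative.

```python
import numpy as np


def autoregressive_nll(logits: np.ndarray, tokens: np.ndarray) -> float:
    """Return -sum_t log P(w_t | w_<t) for one sequence.

    logits: (T, vocab_size) scores; row t is assumed to depend only on tokens[:t].
    tokens: (T,) integer ids of the words that actually occurred.
    """
    # Numerically stable log-softmax over the vocabulary.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick the log-probability the model assigned to each observed token.
    return float(-log_probs[np.arange(len(tokens)), tokens].sum())
```

With uniform logits over a 4-word vocabulary, each of three tokens costs $\log 4$, so the sequence loss is $3 \log 4$; a model that puts almost all probability on the observed tokens drives the loss toward zero.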

3.1.2. Bidirectional Encoder Representations from Transformers (BERT)

Introduced by Google in 2018, BERT utilizes bidirectional pretraining, considering both left and right context for a deeper understanding of language [11]. BERT’s pretraining includes:

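- Masked Language Modeling (MLM): Predicts randomly masked words from their context:

  $$\mathcal{L}_{\text{MLM}} = -\sum_{i \in \mathcal{M}} \log P_\theta(w_i \mid \tilde{\mathbf{w}})$$

  where $\mathcal{M}$ is the set of masked positions and $\tilde{\mathbf{w}}$ is the input sequence with those positions masked.

- Next Sentence Prediction (NSP): Determines if the second sentence logically follows the first:

  $$\mathcal{L}_{\text{NSP}} = -\log P_\theta(y \mid S_A, S_B)$$

  where $S_A$ and $S_B$ are the two sentences and $y \in \{\text{IsNext}, \text{NotNext}\}$.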
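The masking step behind MLM can be sketched in a few lines of NumPy. The sketch follows the recipe described in the BERT paper (select about 15% of positions; replace 80% of them with [MASK], 10% with a random token, and leave 10% unchanged). The function name, the `-100` "ignore" label, and the `mask_id` argument are illustrative assumptions; real tokenizers define their own special-token ids.

```python
import numpy as np


def mask_tokens(tokens, vocab_size, mask_id, rng, mask_prob=0.15):
    """BERT-style MLM corruption (illustrative sketch).

    Returns (inputs, labels); labels are -100 at positions that carry no loss.
    """
    tokens = np.asarray(tokens)
    inputs = tokens.copy()
    labels = np.full_like(tokens, -100)
    selected = rng.random(tokens.shape) < mask_prob      # ~15% become prediction targets
    labels[selected] = tokens[selected]
    roll = rng.random(tokens.shape)
    to_mask = selected & (roll < 0.8)                    # 80% of targets -> [MASK]
    to_random = selected & (roll >= 0.8) & (roll < 0.9)  # 10% of targets -> random token
    inputs[to_mask] = mask_id
    inputs[to_random] = rng.integers(0, vocab_size, size=int(to_random.sum()))
    return inputs, labels                                # remaining 10% keep the original token
```

Keeping some targets unchanged or randomly replaced means the model cannot rely on the [MASK] symbol alone to know where to predict, which narrows the gap between pretraining and fine-tuning inputs.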

3.1.3. XLNet

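Introduced in 2019 by Google and Carnegie Mellon University, XLNet combines the strengths of BERT's bidirectional context with autoregressive modeling (like GPT) [12]. It addresses the limitations of BERT's masked language modeling, such as artificial [MASK] tokens that never appear during fine-tuning, by using permutation language modeling: during training, the model considers many possible factorization orders of the tokens, so each token is eventually predicted from context on both sides. This approach captures long-range dependencies more effectively.

XLNet's pretraining task maximizes the expected log-likelihood of the sequence over all factorization orders (permutations) $\mathbf{z}$ of the positions $1, \dots, T$:

$$\max_\theta \; \mathbb{E}_{\mathbf{z} \sim \mathcal{Z}_T}\left[\sum_{t=1}^{T} \log p_\theta\big(x_{z_t} \mid \mathbf{x}_{\mathbf{z}_{<t}}\big)\right]$$

where $\mathcal{Z}_T$ is the set of all permutations of length $T$, $z_t$ is the $t$-th element of a permutation, and $\mathbf{z}_{<t}$ denotes its first $t-1$ elements. The tokens themselves are not reordered; only the order in which they are predicted changes.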
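A sampled factorization order can be turned into an attention mask, which is how permutation language modeling is realized without reordering the input. The NumPy sketch below is a simplification under stated assumptions: it omits XLNet's two-stream attention and partial prediction, and the function name is illustrative.

```python
import numpy as np


def permutation_mask(order):
    """Return mask[i, j] = True if token i may attend to token j.

    `order` is a factorization order (a permutation of positions). Token i
    may only see tokens predicted earlier in that order, regardless of where
    they sit in the original sentence.
    """
    order = np.asarray(order)
    n = len(order)
    rank = np.empty(n, dtype=int)
    rank[order] = np.arange(n)              # rank[i] = step at which token i is predicted
    return rank[None, :] < rank[:, None]    # mask[i, j] = rank[j] < rank[i]
```

For example, with `order = rng.permutation(5)` from `rng = np.random.default_rng(0)`, each training step sees a different mask, so across many steps a token is conditioned on context from both its left and its right.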

3.1.4. Text-to-Text Transfer Transformer (T5)

Introduced by Google in 2019, T5 reformulated all NLP tasks into a text-to-text format, where every task, from translation to summarization to question answering, is treated as a text generation problem [13]. This unified architecture allows T5 to handle a wide range of tasks with a single model.

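T5 is pretrained using a span-corruption denoising objective: random spans of the input text are each replaced by a unique sentinel token, and the model is tasked with outputting the missing spans, each preceded by its sentinel. The loss function for this task is:

$$\mathcal{L}_{\text{span}} = -\sum_{i=1}^{|\mathbf{y}|} \log P_\theta(y_i \mid y_{<i}, \tilde{\mathbf{x}})$$

where $\tilde{\mathbf{x}}$ is the corrupted input and $y_i$ are the tokens of the target sequence containing the missing spans; in this way, the model learns to recover the original text from its corrupted version.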
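The input/target pair produced by span corruption can be illustrated with a small Python sketch, reproducing the example sentence from the T5 paper. The `<extra_id_k>` sentinel names follow the convention of the publicly released T5 checkpoints; the function name is illustrative, and the spans are passed in explicitly here, whereas real pretraining samples them at random.

```python
from typing import List, Sequence, Tuple


def span_corrupt(words: Sequence[str], spans: List[Tuple[int, int]]) -> Tuple[str, str]:
    """Replace each [start, end) span with a sentinel; the target lists the dropped spans.

    Spans are assumed sorted and non-overlapping.
    """
    inputs, targets = [], []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(words[cursor:start])   # keep the text before the span
        inputs.append(sentinel)              # one sentinel stands in for the whole span
        targets.append(sentinel)
        targets.extend(words[start:end])     # the model must regenerate these words
        cursor = end
    inputs.extend(words[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel closes the target
    return " ".join(inputs), " ".join(targets)
```

Calling `span_corrupt("Thank you for inviting me to your party last week.".split(), [(2, 4), (8, 9)])` yields the input `Thank you <extra_id_0> me to your party <extra_id_1> week.` and the target `<extra_id_0> for inviting <extra_id_1> last <extra_id_2>`. Because the target contains only the dropped spans, it is much shorter than the full text.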

3.1.5. GPT-3

Building on GPT-2's success with 1.5 billion parameters [14], GPT-3, released in 2020 with 175 billion parameters, further scaled the model, enabling it to perform few-shot learning [15]. Given only a handful of examples in the prompt, GPT-3 can generate coherent text and perform a wide variety of tasks without retraining. It uses the same autoregressive language modeling objective as GPT:

$$\mathcal{L}_{\text{AR}}(\theta) = -\sum_{t=1}^{T} \log P_\theta(w_t \mid w_1, \dots, w_{t-1})$$

Table 1 provides a comparison of text-based models—GPT, BERT, XLNet, T5, and GPT-3, highlighting their architectural differences, strengths, best use cases, and limitations.

Table 1.

Comparison between text-based models: GPT, BERT, XLNet, T5, and GPT-3.

3.2. Multimodal Models: Text, Image, and Video (2021–2023)

Between 2021 and 2023, multimodal models like DALL·E and CLIP expanded generative AI to process text, images, and video, enabling applications such as image captioning, text-to-image generation, and video analysis.

3.2.1. DALL·E

Released in 2021, DALL·E extends GPT-3's transformer-based architecture to generate images from text descriptions [16]. It was trained on a large dataset of text-image pairs, enabling it to learn the relationship between textual prompts and visual features. The model pairs a discrete variational autoencoder (dVAE), which compresses each image into a grid of discrete image tokens, with a transformer that autoregressively models the text tokens followed by the image tokens. At generation time, a text prompt T is passed through the transformer, which produces a sequence of image tokens; the dVAE decoder then maps these tokens to the corresponding image I:

$$I = \mathrm{Decoder}\big(\mathrm{Transformer}(T)\big)$$

where T represents the input text and the decoder generates the final image I. This unified approach allows DALL·E to generate coherent and imaginative images.

3.2.2. Contrastive Language-Image Pretraining (CLIP)

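Released in 2021 by OpenAI, CLIP connects text and images and was trained on a large dataset of text-image pairs [17]. Unlike DALL·E, which generates images from text, CLIP matches images to textual descriptions. It learns the relationship between text and the corresponding visual representations, making it highly effective for multimodal tasks. CLIP uses contrastive learning to pull the embeddings of matching images and texts together in a shared latent space while pushing non-matching pairs apart. The contrastive loss function is:

$$\mathcal{L} = -\frac{1}{2N}\sum_{i=1}^{N}\left[\log\frac{\exp(\mathrm{sim}(\mathbf{v}_i,\mathbf{t}_i)/\tau)}{\sum_{j=1}^{N}\exp(\mathrm{sim}(\mathbf{v}_i,\mathbf{t}_j)/\tau)} + \log\frac{\exp(\mathrm{sim}(\mathbf{v}_i,\mathbf{t}_i)/\tau)}{\sum_{j=1}^{N}\exp(\mathrm{sim}(\mathbf{v}_j,\mathbf{t}_i)/\tau)}\right]$$

where $\mathbf{v}_i$ and $\mathbf{t}_i$ are the embeddings of image $i$ and text $i$, $\mathrm{sim}(\cdot,\cdot)$ is cosine similarity, $\tau$ is a temperature parameter, and N is the number of pairs in a batch. The first term scores each image against all texts and the second scores each text against all images; together they encourage the model to maximize similarity for matching image-text pairs and minimize it for non-matching pairs.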
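The symmetric contrastive loss can be computed for one batch with a short NumPy sketch. Row $i$ of the image and text embedding matrices is assumed to be a matching pair; the temperature of 0.07 is only a placeholder (CLIP learns $\tau$ during training), and the function names are illustrative.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def clip_loss(img_emb: np.ndarray, txt_emb: np.ndarray, tau: float = 0.07) -> float:
    """Symmetric InfoNCE loss over N matched image-text pairs (row i <-> row i)."""
    v = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    t = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    logits = v @ t.T / tau                  # cosine similarities scaled by 1/tau
    idx = np.arange(len(v))
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image vs. all texts
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each text vs. all images
    return float((loss_i2t + loss_t2i) / 2)
```

Perfectly aligned embeddings give a loss close to zero, while shuffling the texts so that pairs no longer match makes the loss large.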

The architecture uses an image encoder (a ResNet or a vision transformer) and a transformer text encoder [9]. Both encoders project their respective data into a shared feature space for direct comparison. Additionally, CLIP's zero-shot learning capability allows it to perform tasks such as image classification without task-specific fine-tuning, by comparing an image with text descriptions of the candidate classes.

3.2.3. Neural Visual World Creation (NUWA)

Released in 2021, NUWA is a multimodal, pretrained, transformer-based model designed for tasks such as text-to-image, text-to-video, and image animation [18]. It uses a 3D transformer encoder-decoder to capture spatial and temporal patterns in the data. A key feature of NUWA is its 3D Nearby Attention (3DNA) mechanism, which restricts each position's attention to a local neighborhood along the height, width, and time axes, improving the model's ability to handle spatial and temporal relationships in visual data. This attention mechanism enhances computational efficiency while preserving high-quality visual outputs, allowing the model to scale effectively for complex tasks. Given an input T, such as text or an image, the model generates a latent representation through a 3D transformer, and the decoder reconstructs the output O, which can be an image or video:

$$O = \mathrm{Decoder}\big(\mathrm{3DTransformer}(T)\big)$$

This architecture enables NUWA to efficiently generate high-quality visual outputs.
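The locality constraint behind 3D nearby attention can be sketched as a boolean mask over a flattened (time, height, width) grid of visual tokens. This NumPy sketch is a simplification under stated assumptions: it omits the causal constraint used during autoregressive generation and NUWA's separate handling of conditioning inputs, and the function name and default extents are illustrative.

```python
import numpy as np


def nearby_mask_3d(T: int, H: int, W: int, extent=(1, 1, 1)) -> np.ndarray:
    """Boolean (T*H*W, T*H*W) mask.

    Query (t, h, w) may attend to key (t', h', w') only if
    |t - t'| <= e_t, |h - h'| <= e_h and |w - w'| <= e_w.
    """
    t, h, w = np.meshgrid(np.arange(T), np.arange(H), np.arange(W), indexing="ij")
    coords = np.stack([t.ravel(), h.ravel(), w.ravel()], axis=1)  # (T*H*W, 3), row-major order
    diff = np.abs(coords[:, None, :] - coords[None, :, :])        # pairwise offsets per axis
    return (diff <= np.asarray(extent)).all(axis=-1)
```

With extents of 1, a token attends to at most 3 × 3 × 3 = 27 neighbors instead of every token in the video, which is where the computational savings come from as resolution and length grow.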

3.2.4. CogView2

Released in 2022, CogView2 is an advanced version of the original CogView model (2021), designed for text-to-image generation [19,20]. It employs a transformer-based architecture that integrates the Cross-Modal Language Model (CogLM). Using a masked autoregressive approach, CogView2 generates high-quality images from textual descriptions. Pretrained on large text-image pairs, it is capable of generating detailed and diverse visual content, with improved efficiency and scalability thanks to an advanced masking strategy.

Given an input text prompt T, the model generates a latent representation (a sequence of image tokens), which is then decoded into the corresponding image I:

$$I = \mathrm{Decoder}\big(\mathrm{CogLM}(T)\big)$$

CogView2’s hierarchical design first generates low-resolution images, which are progressively refined through an iterative super-resolution module using local parallel autoregressive generation. This approach enables faster image generation, with CogView2 being up to 10 times faster than CogView at producing images of similar resolution, while offering improved quality. Additionally, CogView2 supports interactive, text-guided editing, allowing users to modify images based on new textual input.

3.2.5. Imagen

Released in 2022, Imagen is a text-to-image model developed by Google that combines a frozen, pretrained T5 text encoder with a cascade of diffusion models [21]. The T5 encoder first converts a text prompt T into a sequence of embeddings; a base diffusion model then generates a 64×64 image conditioned on these embeddings, and two super-resolution diffusion models upsample it to 256×256 and then 1024×1024 pixels, each iteratively improving image quality. Imagen focuses on generating high-resolution, photorealistic images and outperforms previous models like CogView in terms of image fidelity and realism. This cascaded design allows Imagen to scale to detailed, high-quality visual content from natural language descriptions.

3.2.6. DALL·E 2

Released in 2022, DALL·E 2 by OpenAI improves upon the original DALL·E by generating higher-quality images and introducing inpainting capabilities [22]. Unlike CLIP, which matches images to text, DALL·E 2 generates images directly from textual descriptions and allows for editing existing images by modifying specific areas with new text prompts.

DALL·E 2 uses a two-part model for image generation. The first part, a prior, maps the CLIP text embedding of the input caption to a corresponding CLIP image embedding, capturing the relationship between text and image. The second part, a diffusion decoder, generates a high-resolution image conditioned on that image embedding. The diffusion process generates more photorealistic images than the original DALL·E.

3.2.7. Stable Diffusion

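Stable Diffusion (2022) by Stability AI is a text-to-image model that generates detailed images from textual descriptions [23]. It is a latent diffusion model: an autoencoder compresses images into a lower-dimensional latent space, and the diffusion process runs in that space rather than on pixels, which improves efficiency and scalability. The model progressively refines images from noise using a denoising score-matching approach. The forward diffusion process gradually adds Gaussian noise:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\big)$$

where $\beta_t$ is the noise variance at step $t$. Given a noisy (latent) image $x_t$ at time step $t$, the model learns to recover the clean image $x_0$ by reversing this process, minimizing the loss:

$$\mathcal{L} = \mathbb{E}_{q}\Big[\sum_{t>1} D_{\mathrm{KL}}\big(q(x_{t-1} \mid x_t, x_0)\ \|\ p_\theta(x_{t-1} \mid x_t)\big)\Big]$$

where $p_\theta$ is the model's predicted distribution and $q$ is the transition probability of the forward process.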
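The forward process has a closed form, $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$ with $\bar{\alpha}_t = \prod_{s \le t}(1-\beta_s)$, which makes training cheap: pick a random step, noise the clean sample in one shot, and ask the network to predict the noise. The NumPy sketch below uses the DDPM linear schedule and the simplified noise-prediction loss from DDPM (a reweighted form of the KL term above); `eps_model` is a hypothetical stand-in for the denoising network, and in Stable Diffusion `x0` would be the autoencoder latent rather than pixels.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """DDPM-style linear noise schedule (values assumed for illustration)."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bar, noise):
    """Closed-form forward process: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise


def denoising_loss(eps_model, x0, alpha_bar, rng):
    """Simplified objective ||eps - eps_theta(x_t, t)||^2 at one random time step."""
    t = int(rng.integers(len(alpha_bar)))
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, alpha_bar, noise)
    return float(np.mean((noise - eps_model(x_t, t)) ** 2))
```

For example, `alpha_bar = np.cumprod(1.0 - linear_beta_schedule())` starts just below 1 (almost no noise) and ends near 0 (almost pure noise); a model that always predicts zero noise scores a loss of about 1, the variance of the Gaussian noise it fails to predict.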

3.2.8. Make-A-Video

Make-A-Video (2022) is a text-to-video model developed by Meta, capable of generating short video clips from textual descriptions [24]. It uses a transformer-based architecture for text encoding and a spatiotemporal generative model to capture video dynamics. It uses a text-to-image (T2I) model fine-tuned with temporal components for video generation.

The model generates temporally consistent video frames by progressively refining its output. The architecture employs pseudo-3D convolutional layers and temporal attention mechanisms to learn how motion unfolds over time, ensuring smooth transitions between frames. The training objective combines a per-frame term with a temporal-coherence term, which can be written as:

$$\mathcal{L} = \sum_{t} D_{\mathrm{KL}}\big(q(x_t \mid x_{t-1})\ \|\ p_\theta(x_t)\big) + \lambda \sum_{t} \lVert x_t - x_{t-1} \rVert^2$$

where $p_\theta(x_t)$ is the model's predicted distribution for frame $t$, $q(x_t \mid x_{t-1})$ is the transition probability conditioned on the previous frame, and the second term, weighted by $\lambda$, penalizes any temporal discontinuities between consecutive frames.

This architecture allows Make-A-Video to generate high-quality videos from text, with an added capability of frame interpolation for smooth video generation at higher frame rates.

3.2.9. MidJourney

MidJourney (2022) is a text-to-image model focused on creating artistic and stylized images from text prompts [25]. Its architecture has not been published in detail; it is reported to use a transformer-based architecture with latent diffusion and attention mechanisms for efficient generation of high-quality, surreal, and creative images.

Primarily accessed through Discord, MidJourney allows users to generate images by submitting text prompts, with control over parameters such as aspect ratio and artistic style.

3.2.10. DreamFusion

DreamFusion (2022) is a text-to-3D model developed by Google that generates 3D models from textual descriptions [26]. It utilizes a Neural Radiance Field (NeRF) model and a pretrained 2D diffusion model (such as Imagen) to guide the creation of 3D objects.

The model employs Score Distillation Sampling (SDS) to optimize the 3D model. Starting with a random initialization, the model is progressively refined using 2D renderings of the model at different viewpoints. These renderings are generated from a NeRF-based representation of the scene. The text prompt is encoded by the 2D diffusion model (Imagen), which guides the model’s generation by conditioning the 3D structure on the textual description.
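For reference, the SDS update can be written as a worked equation (notation lightly adapted from the DreamFusion paper). A view $x = g(\theta)$ is rendered from the NeRF with parameters $\theta$ at a random camera, noised to $x_t = \alpha_t x + \sigma_t \epsilon$, and the frozen text-conditioned diffusion model's noise prediction $\hat{\epsilon}_\phi(x_t; y, t)$ supplies the gradient:

$$\nabla_\theta \mathcal{L}_{\text{SDS}} = \mathbb{E}_{t,\epsilon}\!\left[w(t)\,\big(\hat{\epsilon}_\phi(x_t; y, t) - \epsilon\big)\,\frac{\partial x}{\partial \theta}\right]$$

where $y$ is the text embedding and $w(t)$ is a time-dependent weighting. The diffusion network's own Jacobian is deliberately omitted, so only the NeRF parameters are updated and the 2D model is never fine-tuned.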

DreamFusion promotes 3D consistency because every view is rendered from the same NeRF: images rendered from different viewpoints depict the same underlying object, which keeps the generated 3D model visually coherent across angles. This process is repeated over many iterations, adjusting the NeRF parameters by gradient descent so that the rendered 2D views increasingly match what the text-conditioned diffusion model expects for the prompt.

By combining 2D diffusion models with NeRF optimization, DreamFusion is capable of generating photorealistic 3D models directly from text, without requiring a 3D training dataset.

3.2.11. Make-A-Scene

Make-A-Scene (2022) is an interactive text-to-image model developed by Meta, designed to allow users to specify the layout and placement of elements in the generated image [27]. It leverages a transformer-based architecture to integrate both textual descriptions and layout information for creative image generation.

The model utilizes a shared embedding space where both text inputs and spatial layout information are represented. Text is encoded using a text encoder (likely based on transformers), while the layout is encoded through a spatial encoder that processes the positioning and arrangement of elements in the scene. These encoded representations are then fused into a unified vector, which is decoded to generate the final image.

Table 2 provides a comparison between DALL·E, CLIP, NUWA, CogView2, Imagen, DALL·E 2, Stable Diffusion, Make-A-Video, MidJourney, DreamFusion, and Make-A-Scene, highlighting their architectural differences, strengths, best use cases, and limitations.

Table 2.

Comparison of DALL·E, CLIP, NUWA, CogView2, Imagen, DALL·E 2, Stable Diffusion, Make-A-Video, MidJourney, DreamFusion, and Make-A-Scene.

3.2.12. Large Language Model Meta AI (Llama)

Llama (2023) is a family of transformer-based LLMs developed by Meta, designed for high efficiency in language modeling tasks [28]. The models use a decoder-only transformer with multi-head self-attention and feed-forward layers, with modifications such as pre-normalization (RMSNorm), SwiGLU activations, and rotary positional embeddings, and are trained on a large, diverse dataset to capture a wide range of linguistic patterns. The original release ranged from 7 billion to 65 billion parameters, and later versions scaled up to 405 billion parameters in Llama 3.1, improving capacity for text generation and understanding. The models are pretrained using a causal language modeling task, in which they predict the next token in a sequence. Optimized with techniques such as mixed-precision training and the AdamW optimizer, Llama offers high performance while maintaining efficiency. Llama 3.1 (2024) further improves model scaling and fine-tuning strategies, making it a competitive open-weight alternative to proprietary LLMs.

3.2.13. Bard

Bard (2023), now Gemini, is a conversational text-to-text language model developed by Google [29,30]. It was initially powered by LaMDA (Language Model for Dialogue Applications) [31] and subsequently by PaLM 2 (Pathways Language Model 2) [32], before moving to Gemini, a multimodal model family that handles text, images, audio, and video. LaMDA contributed natural dialogue, while PaLM 2 enhanced language understanding. Like the other models in this section, Bard uses a transformer-based architecture with attention mechanisms, trained by gradient descent to minimize a language modeling loss.

3.2.14. Phenaki

Phenaki (2023) is a text-to-video model developed by Google to generate long, coherent videos from textual descriptions [33]. It uses C-ViViT, a causal variant of the Video Vision Transformer (ViViT), to encode videos into tokens for spatiotemporal processing, and is trained on a large dataset of text-video pairs.

The model employs a masked bidirectional transformer approach, learning to predict missing video tokens by conditioning on the surrounding context. Text is encoded using the T5X model to guide video generation. Phenaki ensures temporal coherence by generating frames progressively, maintaining smooth transitions over time.

3.2.15. GPT-4

GPT-4 (2023) is a multimodal model developed by OpenAI that accepts both text and image inputs and produces text outputs [34]. It is transformer-based and was trained on large datasets of text and images.

OpenAI has not disclosed GPT-4's architecture in detail. A common design for such models uses a shared embedding space in which both text and image inputs are represented: text is encoded by a transformer-based encoder, images by a vision model (e.g., a CNN or vision transformer), and the resulting representations are fused into a unified vector that is decoded to generate text outputs.

GPT-4 ensures effective multimodal understanding by learning the relationships between the textual and visual data during training, enabling it to generate contextually relevant and coherent text responses based on both modalities.

3.2.16. Mistral

Mistral (2023) is a family of open-weight, transformer-based models from Mistral AI, spanning dense and sparse designs that optimize large language model efficiency [35]. Mistral 7B is a dense model of about 7.3 billion parameters that uses grouped-query attention and sliding-window attention for faster inference. Mixtral 8x7B employs a sparse Mixture of Experts (MoE) architecture, in which a router activates only 2 of 8 expert feed-forward blocks for each token; it therefore has about 46.7 billion parameters in total but uses only about 12.9 billion per token, yielding significant computational savings while maintaining performance. Together, these design choices improve computational efficiency, reduce latency, and provide high-quality text generation with modest resources.

Table 3 provides a comparison between Llama, Bard/Gemini, Phenaki, GPT-4, and Mistral, highlighting their architectural differences, strengths, and limitations.

Table 3.

Comparison of Llama, Bard/Gemini, Phenaki, GPT-4, and Mistral.

3.3. 2024—Advanced Video and Multimodal Systems

In 2024, advances in video and multimodal systems enhanced GenAI’s ability to process and generate content across text, image, and video, enabling new applications in healthcare systems.

3.3.1. SORA

SORA (2024) is a text-to-video generation model developed by OpenAI that combines a transformer backbone with diffusion (a diffusion transformer) to generate videos from textual descriptions [36]. The input text T is encoded by a transformer-based text encoder, and visual inputs such as images I or videos are compressed by an encoder into a lower-dimensional latent representation z, which is later decoded into video frames. SORA operates on spacetime latent patches: each patch $p_{x,y,t}$ spans a region of space (appearance) and an interval of time (motion), where $x$ and $y$ are the spatial coordinates and $t$ the temporal coordinate. The latent representation is refined through a denoising diffusion process that iteratively reduces noise to produce clearer frames $x_t$. Temporal consistency is maintained through motion modeling and frame-to-frame coherence, ensuring smooth transitions between video frames; such a coherence term can be expressed as:

$$\mathcal{L}_{\text{temporal}} = \sum_{t} \lVert x_{t+1} - x_t \rVert^2$$

where $x_t$ is the frame at time $t$ and $x_{t+1}$ represents the next frame in the sequence.
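Spacetime patching itself is a reshape: a video tensor is cut into blocks that span a few frames and a small spatial window, and each block is flattened into one token for the transformer. The NumPy sketch below works on a raw (frames, height, width, channels) array for clarity; OpenAI's report describes applying patching to a compressed latent video instead, and the function name and patch sizes are illustrative assumptions.

```python
import numpy as np


def spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) array into flattened patches of pt frames x ph x pw pixels.

    Returns (num_patches, pt * ph * pw * C); dimensions must be divisible by the patch size.
    """
    T, H, W, C = video.shape
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # patch-grid axes first, within-patch axes last
    return x.reshape(-1, pt * ph * pw * C)  # one row (token) per spacetime patch
```

A 4-frame, 8×8, 3-channel clip with 2×4×4 patches yields 8 tokens of 96 values each. Because each token covers several frames, appearance and short-range motion are modeled together rather than frame by frame.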

3.3.2. Gemini 1.5

Gemini 1.5, released in 2024, extends the capabilities of Gemini, which was introduced in 2023 [37]. Gemini is a natively multimodal model family from Google DeepMind, built on transformer decoders and trained with the Pathways framework to scale efficiently across tasks. It uses attention mechanisms to integrate text, image, audio, and video inputs. The attention mechanism is defined as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where Q, K, and V are the query, key, and value matrices, respectively, and $d_k$ is the dimensionality of the key vectors.
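The attention formula translates almost line for line into NumPy. This single-head sketch adds an optional boolean mask (True = may attend), as used for causal decoding; the function name is illustrative, and each query is assumed to be allowed at least one key.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V for one attention head."""
    d_k = Q.shape[-1]
    scores = Q @ K.swapaxes(-1, -2) / np.sqrt(d_k)     # query-key similarities
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)       # blocked keys get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)     # each row sums to 1
    return weights @ V                                 # weighted average of the values
```

With all-zero queries and keys, every weight is equal and each output row is the mean of the value rows; adding a lower-triangular causal mask makes the first query see only the first value. The $\sqrt{d_k}$ scaling keeps the softmax from saturating as the key dimension grows.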

Gemini 1.5 improves on Gemini mainly by introducing a sparse Mixture-of-Experts (MoE) architecture and a much longer context window. MoE activates different subsets of the model's parameters depending on the input, thereby improving efficiency. The MoE output is expressed as:

$$y = \sum_{i=1}^{N} g_i(x)\, E_i(x)$$

where $g_i(x)$ are the gating weights for the expert functions $E_i(x)$ and N is the number of experts; in a sparse MoE, most $g_i(x)$ are zero for any given input. Additionally, Gemini 1.5 enhances multimodal integration, extends context windows, and supports the processing of up to 1 million tokens.
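A sparse MoE layer for a single token can be sketched as follows. The top-k routing with a softmax renormalized over the selected experts follows the publicly described Mixtral design; Gemini 1.5's exact routing is not public, so this is an illustrative assumption. `gate_w` (the router weights) and the expert callables are hypothetical.

```python
import numpy as np


def moe_forward(x, gate_w, experts, k=2):
    """y = sum_i g_i(x) * E_i(x), where g is nonzero only for the top-k experts."""
    logits = x @ gate_w                     # one router score per expert
    top = np.argsort(logits)[-k:]           # indices of the k highest-scoring experts
    g = np.exp(logits[top] - logits[top].max())
    g /= g.sum()                            # softmax over the selected experts only
    # Only the chosen experts are evaluated; the rest cost nothing for this token.
    return sum(w * experts[i](x) for w, i in zip(g, top))
```

For instance, with four experts that scale the input by 1, 2, 3, and 4, a router that scores the last two experts equally and the others far lower returns 3.5 times the input for k = 2, because each selected expert receives half the weight.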

3.3.3. Big Sleep

Big Sleep (2024) is an AI framework developed by Google Project Zero and Google DeepMind for vulnerability research [38]. It integrates large language models (LLMs) such as Gemini 1.5 Pro with tools including a code browser, a Python 3.8 fuzz testing tool, a debugger, and a reporter to automate security analysis. The system uses a multimodal architecture to analyze codebases, detect vulnerabilities, and perform variant analysis by comparing code changes with known vulnerabilities. Big Sleep's key features include variant and root-cause analysis, proactive defense by identifying vulnerabilities before they are exploited, and automated reporting. The system processes input code with machine learning models, analyzing patterns to detect security issues.

At a high level, Big Sleep's analysis can be represented as a learned mapping from code to findings:

$$y = f(x; \theta)$$

where x is the input code, $\theta$ represents the model parameters, and y is the output identifying potential vulnerabilities. Big Sleep demonstrated its effectiveness by finding a previously unknown, exploitable stack buffer underflow in SQLite, illustrating its value for real-world cybersecurity.

3.3.4. GPT-4o and Variants (2024)

In 2024, OpenAI released GPT-4o, GPT-4o Mini, and o1, each with advancements in multimodal capabilities and problem-solving.

GPT-4o is a multimodal model built on a transformer-based architecture [39]; OpenAI has not published its full design, though it is reported to use MoE activation and dense attention layers. It processes text, images, audio, and video, and is trained with unsupervised pretraining followed by reinforcement learning from human feedback (RLHF) to improve its outputs.

GPT-4o Mini is a smaller, resource-efficient version, maintaining core capabilities while optimizing for lower computational costs [40].

o1 focuses on complex problem-solving, using iterative reasoning and exploration techniques for tasks such as competitive programming and mathematics [41].

These models are based on large-scale datasets, multimodal data integration, and sophisticated training methods to improve accuracy and versatility.

Table 4 provides a comparison between SORA, Gemini 1.5, Big Sleep, and ChatGPT, highlighting their architectural differences, strengths, and limitations.

Table 4.

Comparison of SORA, Gemini 1.5, Big Sleep, and ChatGPT.

3.4. Improvements in GenAI, LLMs, and LVMs

Between 2018 and 2024, numerous advancements have been made in GenAI, LLMs, and LVMs. These improvements have significantly enhanced the models’ ability to generate more relevant, accurate, and knowledge-rich responses. They address key limitations of earlier models, particularly in terms of factual accuracy and domain-specific knowledge. Below is an overview of the key changes introduced during this period.

3.4.1. Prompt Engineering

Prompt engineering is a technique for optimizing AI model outputs by refining the inputs, without changing the model's weights [15]. It uses strategies such as few-shot prompting (including a small number of worked examples in the prompt), zero-shot instructions, and supplying relevant context to guide models. Typically applied to transformer-based models such as GPT, it shapes the output through the instructions and context contained in the prompt tokens. Prompt engineering helps models grasp context, intent, and constraints, improving performance in tasks such as text generation, code synthesis, and complex queries. Because zero-shot and few-shot prompting require no retraining, they are efficient ways to adapt a general model to a new task.
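Few-shot prompting amounts to assembling an instruction, a few worked examples, and the new query into a single text input. The Python sketch below builds such a prompt for a kidney-care example, mapping an eGFR value to its KDIGO GFR category (G2 is 60 to 89, G3b is 30 to 44 mL/min/1.73 m²). The function name and the Input/Output layout are illustrative choices, and no model call is shown; the resulting string would be sent to an LLM.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble instruction + worked examples + new query into one prompt string."""
    lines = [instruction, ""]
    for question, answer in examples:
        lines += [f"Input: {question}", f"Output: {answer}", ""]  # one demonstration
    lines += [f"Input: {query}", "Output:"]  # the model continues from here
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the KDIGO GFR category.",
    [("eGFR 72 mL/min/1.73 m2", "G2"), ("eGFR 38 mL/min/1.73 m2", "G3b")],
    "eGFR 12 mL/min/1.73 m2",
)
```

Ending the prompt with an open "Output:" line exploits the autoregressive objective: the most likely continuation is an answer in the same format as the examples.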

3.4.2. Retrieval-Augmented Generation (RAG)

RAG is a hybrid approach that combines retrieval-based methods with generative models to improve the factual accuracy and contextual relevance of responses [42]. Its retrieval component, often based on dense vector search or BM25 (Best Matching 25, a term-based ranking function), retrieves the documents in an external knowledge base that are most relevant to a given query. These documents are then used to condition a generative model so that it produces more informed and contextually accurate outputs. RAG gives models access to a broader knowledge pool, improving performance on tasks that require up-to-date or domain-specific information and helping to mitigate hallucinations.
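The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration under stated assumptions: a toy in-memory knowledge base, a bag-of-words cosine similarity standing in for a real retriever (BM25 or dense vectors), and a generation step that only assembles the augmented prompt, because calling an actual generative model is outside the sketch. The function names and documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by bag-of-words cosine similarity (stand-in retriever)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Condition generation on retrieved passages by placing them before the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


knowledge_base = [
    "Chronic kidney disease is staged using eGFR and albuminuria.",
    "Kidney stones are often visible on CT scans.",
    "The renal artery carries blood into the kidney.",
]
prompt = build_rag_prompt("How is chronic kidney disease staged?", knowledge_base, k=1)
print(prompt)  # this string would be passed to the generative model
```

Instructing the model to answer only from the supplied context is one common way of steering it toward the retrieved evidence rather than its parametric memory.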

3.4.3. Dense Retrieval and Fusion-in-Decoder (FiD)

Dense retrieval and Fusion-in-Decoder (FiD) build on RAG [43,44]. In dense retrieval, the query and documents are represented as vectors, and the most relevant documents are retrieved by semantic similarity (typically an inner product) between the query vector and the document vectors. FiD then combines the retrieved evidence in a sequence-to-sequence transformer: each retrieved passage is encoded together with the query, independently of the other passages, and the resulting encoder representations are concatenated so that the decoder attends to all of them jointly. This architecture improves contextual coherence and factuality in tasks such as question answering and document generation.
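A minimal sketch of both ideas follows, assuming the passages and query have already been embedded by some encoder; the three-dimensional vectors are made up for illustration rather than produced by a trained model such as DPR. Dense retrieval reduces to a maximum inner-product search, and FiD pairs the query with each retrieved passage separately. The `question: ... context: ...` template and the function names are assumptions modeled loosely on the FiD setup.

```python
from typing import List

import numpy as np


def dense_retrieve(query_vec: np.ndarray, passage_vecs: np.ndarray, k: int) -> List[int]:
    """Return the indices of the k passages with the highest inner-product score."""
    scores = passage_vecs @ query_vec              # one similarity score per passage
    return [int(i) for i in np.argsort(-scores)[:k]]


def fid_encoder_inputs(question: str, passages: List[str]) -> List[str]:
    """Build FiD-style encoder inputs: one (question, passage) string per passage.

    Each string would be encoded independently; a seq2seq decoder (e.g., T5)
    then attends over the concatenation of all encoder outputs.
    """
    return [f"question: {question} context: {p}" for p in passages]


# Toy corpus with made-up 3-d embeddings (a real system would use a trained encoder).
passages = [
    "AKI is defined by a rise in serum creatinine or a fall in urine output.",
    "CKD is staged using eGFR and albuminuria.",
    "The ureter carries urine from the kidney to the bladder.",
]
passage_vecs = np.array([
    [0.9, 0.1, 0.0],
    [0.1, 0.8, 0.2],
    [0.0, 0.2, 0.9],
])
query = "How is acute kidney injury defined?"
query_vec = np.array([0.8, 0.2, 0.1])

top = dense_retrieve(query_vec, passage_vecs, k=2)
inputs = fid_encoder_inputs(query, [passages[i] for i in top])
for encoder_input in inputs:
    print(encoder_input)
```

Encoding passages independently keeps encoder cost linear in the number of passages, while fusion in the decoder still lets the answer draw on all of them.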

3.4.4. Sparse Retrieval Models

Sparse retrieval models use keyword-based methods such as BM25 to retrieve documents by matching terms in the query with those in the documents [45]. They rank documents using term frequency (TF) and inverse document frequency (IDF) rather than dense embeddings, and they are usually served from an inverted index that maps each term to the documents containing it, which keeps search fast over large collections. FAISS (Facebook AI Similarity Search), by contrast, is designed for dense vectors and is typically paired with dense retrieval models. Sparse models are simpler, faster, and more interpretable, but less effective than dense retrieval models at capturing semantic meaning. They are well suited to tasks prioritizing high recall and fast retrieval.
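The BM25 ranking function is compact enough to write out directly. The sketch below scores tokenized documents against a query using the common defaults k1 = 1.2 and b = 0.75 and the non-negative IDF variant used by Lucene; these parameter choices, the whitespace tokenizer, and the toy documents are assumptions, and a production system would consult an inverted index instead of scanning every document.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: List[str], docs: List[List[str]],
                k1: float = 1.2, b: float = 0.75) -> List[float]:
    """Score each tokenized document against a tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: the number of documents that contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Lucene-style IDF, always non-negative.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) with document-length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "dialysis removes waste when kidneys fail".split(),
    "kidney stones can cause severe pain".split(),
    "hypertension can damage kidney blood vessels".split(),
]
query = "kidney stones pain".split()
scores = bm25_scores(query, docs)
best = max(range(len(docs)), key=lambda i: scores[i])
print(scores, best)
```

Note that "kidneys" in the first document does not match "kidney" in the query: exact term matching is what makes sparse retrieval fast and interpretable, and also why it misses paraphrases that dense retrieval can capture.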

3.4.5. Vector Databases

Vector databases store and search high-dimensional vectors for tasks such as semantic search and recommendation systems [46]. Using approximate nearest neighbor (ANN) search, as implemented in libraries such as FAISS, they quickly retrieve the stored vectors most similar to a query vector. Integrating vector databases with LLMs and LVMs enables fast retrieval of relevant data from large datasets, improving generative model performance. In RAG pipelines, these databases provide real-time access to external knowledge, which can reduce hallucinations and improve factual accuracy.
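To illustrate how ANN search trades exactness for speed, the toy index below implements the inverted-file (IVF) idea that underlies some FAISS index types: vectors are bucketed by their nearest k-means centroid, and a query scans only the `nprobe` closest buckets instead of the whole collection. The class `TinyIVFIndex`, its methods, and the random data are hypothetical; this is a teaching sketch, not the FAISS API and not a production vector database.

```python
from typing import Dict, List, Tuple

import numpy as np


class TinyIVFIndex:
    """Toy inverted-file index for approximate nearest-neighbor search (L2 distance)."""

    def __init__(self, nlist: int, seed: int = 0):
        self.nlist = nlist                      # number of coarse clusters (buckets)
        self.rng = np.random.default_rng(seed)
        self.centroids = np.empty((0, 0))
        self.buckets: Dict[int, List[Tuple[int, np.ndarray]]] = {}
        self.ntotal = 0                         # running id counter for added vectors

    def _closest_centroids(self, x: np.ndarray, n: int) -> np.ndarray:
        # Squared L2 distance from every row of x to every centroid.
        d = ((x[:, None, :] - self.centroids[None, :, :]) ** 2).sum(axis=-1)
        return np.argsort(d, axis=1)[:, :n]

    def train(self, data: np.ndarray, iters: int = 10) -> None:
        """Place the centroids with a few rounds of plain k-means."""
        start = self.rng.choice(len(data), size=self.nlist, replace=False)
        self.centroids = data[start].astype(float)
        for _ in range(iters):
            assign = self._closest_centroids(data, 1)[:, 0]
            for c in range(self.nlist):
                members = data[assign == c]
                if len(members):                # keep the old centroid if a bucket is empty
                    self.centroids[c] = members.mean(axis=0)

    def add(self, data: np.ndarray) -> None:
        assign = self._closest_centroids(data, 1)[:, 0]
        for vec, c in zip(data, assign):
            self.buckets.setdefault(int(c), []).append((self.ntotal, vec))
            self.ntotal += 1

    def search(self, query: np.ndarray, k: int = 1, nprobe: int = 1) -> List[Tuple[int, float]]:
        """Scan only the nprobe nearest buckets; return (id, squared distance) pairs."""
        probes = self._closest_centroids(query[None, :], nprobe)[0]
        candidates = [item for c in probes for item in self.buckets.get(int(c), [])]
        scored = sorted((float(((vec - query) ** 2).sum()), vid) for vid, vec in candidates)
        return [(vid, dist) for dist, vid in scored[:k]]


rng = np.random.default_rng(42)
vectors = rng.normal(size=(500, 16))            # stand-ins for document embeddings
index = TinyIVFIndex(nlist=8)
index.train(vectors)
index.add(vectors)
print(index.search(vectors[123], k=3, nprobe=2))
```

Raising `nprobe` toward `nlist` makes the search exhaustive and exact; smaller values scan fewer vectors at the risk of missing a true neighbor that landed in an unprobed bucket.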

3.4.6. Contrastive Learning

Contrastive learning is a technique in which models learn representations by pulling similar data points (positive pairs) closer together and pushing dissimilar ones (negative pairs) apart [47,48,49]. Using loss functions such as InfoNCE (Information Noise-Contrastive Estimation), it enhances LLMs, LVMs, and GenAI by improving semantic understanding and capturing meaningful relationships. The method benefits tasks such as semantic search, image captioning, and multimodal alignment, enabling models to learn efficiently from unstructured data, improving accuracy, and potentially reducing biases. Contrastive learning also supports generative tasks by learning high-quality, aligned representations, as in CLIP [17], whose image-text embeddings guide image generation in DALL·E 2 [22].
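For reference, InfoNCE over a batch of N paired embeddings (for example, image and caption embeddings in a CLIP-style setup) treats the matching pair as the positive and the other N - 1 items as negatives. The NumPy sketch below computes it from cosine similarities scaled by a temperature. The temperature of 0.07 and the symmetric averaging over both directions follow common CLIP-style practice but are assumptions here, the embeddings are random stand-ins, and real training would compute this inside an autodiff framework to obtain gradients.

```python
import numpy as np


def info_nce(queries: np.ndarray, keys: np.ndarray, temperature: float = 0.07) -> float:
    """InfoNCE: row i of `queries` should match row i of `keys`; other rows are negatives."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    logits = (q @ k.T) / temperature                   # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)        # numerical stability
    log_softmax = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (the matching pairs) as the target class.
    return float(-np.mean(np.diag(log_softmax)))


def symmetric_info_nce(a: np.ndarray, b: np.ndarray, temperature: float = 0.07) -> float:
    """CLIP-style loss: average of the a->b and b->a directions."""
    return 0.5 * (info_nce(a, b, temperature) + info_nce(b, a, temperature))


rng = np.random.default_rng(0)
image_emb = rng.normal(size=(16, 32))
text_emb = image_emb + 0.05 * rng.normal(size=(16, 32))  # well-aligned pairs
mismatched = np.roll(text_emb, shift=1, axis=0)          # every pair is wrong
aligned = symmetric_info_nce(image_emb, text_emb)
misaligned = symmetric_info_nce(image_emb, mismatched)
print(aligned, misaligned)
```

A low loss on aligned pairs and a high loss on shuffled pairs is exactly the signal that pulls positives together and pushes negatives apart during training.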

4. Recent Applications of GenAI, LLMs, and LVMs in Kidney Care

Recent advancements in Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs) have significantly impacted various aspects of healthcare, enhancing efficiency and patient outcomes. GenAI can automate routine tasks such as data entry and appointment scheduling, allowing clinicians to focus more on direct patient care. LLMs such as ChatGPT have shown potential for drafting medical documents and assisting with diagnostics, thereby streamlining clinical workflows. LVMs contribute to diagnostics and personalized medicine by analyzing medical images, aiding early disease detection and treatment planning. Together, these technologies are moving healthcare delivery toward greater efficiency and patient-centered care. This section highlights recent research applying GenAI, LLMs, and LVMs in kidney care, covering new approaches to diagnosis, treatment, and patient management in nephrology.

4.1. 2018–2023: Early Developments

Between 2018 and 2023, advancements in GenAI, LLMs, and LVMs reshaped nephrology by enhancing diagnostic accuracy, patient education, and clinical workflows [50,51,52,53,54,55,56]. Domain-specific adaptations, such as AKI-BERT and MD-BERT-LGBM, improved early disease prediction by integrating textual and structured data, achieving high accuracy in acute kidney injury (AKI) and CKD prediction. Generative models like ChatGPT demonstrated utility in dietary support for CKD patients and patient education in nephrology and urology, although inconsistencies and limitations in depth and citation accuracy necessitated human oversight. The integration of AI-powered chatbots in kidney transplant care showed promise in improving treatment adherence and personalized patient education, despite challenges in bias, interpretability, and EHR system compatibility. These efforts underscore the growing potential of AI in nephrology while highlighting the need for continued innovation to address limitations and enhance clinical reliability. Table 5 summarizes the early developments in nephrology from 2018 to 2023.

Table 5.

Summary of early developments in nephrology from 2018 to 2023.

4.2. 2024: Recent Breakthroughs

In 2024, the application of GenAI, LLMs, and advanced multimodal frameworks marked significant progress in nephrology, expanding their utility in diagnosis, prediction, education, and clinical support [57,58,59,60,61,62,63,64,65,66,67]. Breakthroughs included the development of HERBERT for CKD risk stratification, multimodal models for AKI and continuous renal replacement therapy (CRRT) prediction, and innovative uses of AI-powered tools for patient education, renal cancer diagnosis, and visual communication. These advancements showcased enhanced integration of structured and unstructured data, improved prediction accuracies, and personalized education materials tailored for diverse audiences. Despite notable achievements in improving healthcare delivery and engagement, challenges such as data limitations, computational demands, and the need for human oversight persist, emphasizing the importance of iterative refinement and validation in clinical settings. Table 6 provides a summary of these works.

Table 6.

Summary of Recent Work in 2024.

5. Discussion

Recent studies have identified several limitations in nephrology care; however, ongoing research has proposed various strategies to address these gaps. Emerging methods emphasize hybrid models that integrate structured electronic health record (EHR) data with unstructured clinical narratives. Additionally, lightweight architectures suited for low-resource settings and human-in-the-loop frameworks for clinical validation have been introduced to enhance accuracy, efficiency, and safety. Thus, this section serves a dual purpose: (1) It analyzes how recent solutions and methods have been developed to overcome the limitations identified in previous work, as discussed in Section 4. (2) It outlines practical applications of these advancements, which are further elaborated in Section 6.

By 2024, LLMs such as ChatGPT have proven valuable in kidney care applications, including patient education, chronic kidney disease (CKD) risk stratification, renal cell carcinoma (RCC) diagnosis, medical training, and predicting postoperative complications [68]. For example, ChatGPT has enhanced CKD dietary planning and health literacy, though challenges like limited clinical depth persist. HERBERT, a domain-specific LLM, has improved CKD risk stratification by handling temporal data, but requires broader validation across diverse populations.

Advanced LLMs, including GPT-4, Gemini, Llama, and Mistral, offer untapped potential in personalized medicine, such as symptom monitoring and tailored treatment. They could integrate with EHRs to analyze large datasets and support real-time decisions, but their adoption in nephrology remains limited. Likewise, techniques such as retrieval-augmented generation (RAG), dense retrieval, Fusion-in-Decoder (FiD), and vector databases remain largely unexplored in nephrology despite their ability to improve contextual accuracy.

Similarly, LVMs like DALL-E, Stable Diffusion, MidJourney, and DreamFusion, which have transformed image generation in other domains, have seen minimal application in nephrology. Studies in 2024 have only begun to explore AI-generated visuals for patient education and presentations, such as using Microsoft Copilot with DALL-E, focusing primarily on text-to-image generation. This approach neglects the integration of LVMs with medical imaging data for diagnostic purposes. The absence of generative AI applications for creating synthetic nephrology datasets further limits advancements. Synthetic data could address critical gaps in training datasets, particularly for underrepresented populations, while aiding model development for CKD progression, RCC detection, and postoperative care. Moreover, there is limited exploration of multimodal AI for combining structured, unstructured, and imaging data, which could support a comprehensive understanding of nephrology-specific challenges. Such advancements could enable the generation of 3D anatomical structures of kidneys, enhancing both diagnostic and educational applications.

Additionally, multimodal frameworks such as CLIP and Make-A-Scene, along with text-to-video models such as SORA and Phenaki, remain unexplored in nephrology-specific use cases. These frameworks could be transformative for applications such as surgical video analysis, treatment simulations, and patient education through dynamic visual content. They also hold potential for automating video-based training for medical professionals, enhancing procedural accuracy, and simulating complex kidney-related conditions for research and diagnostic purposes. Despite their capabilities, their integration with nephrology workflows and datasets is still lacking, limiting their impact on clinical practice and patient outcomes.

Addressing these gaps could transform nephrology by leveraging synthetic datasets, integrating multimodal AI, and validating technologies across diverse populations. Thoughtful application of generative AI could enhance diagnostic accuracy, personalized treatment, and education, reducing disparities and improving outcomes globally.

6. Use Case

This section highlights various use cases demonstrating the application of LLMs and LVMs in kidney care. These applications include generating imaging data through text-to-image prompts, with or without RAG integration, creating 2D and 3D images to enhance patient health literacy and support educational initiatives, utilizing text-to-video methods to produce medical videos that visualize anatomical structures, and employing text-to-text models for generating text-based responses to common kidney-related questions.

6.1. Text-to-Image (2D)

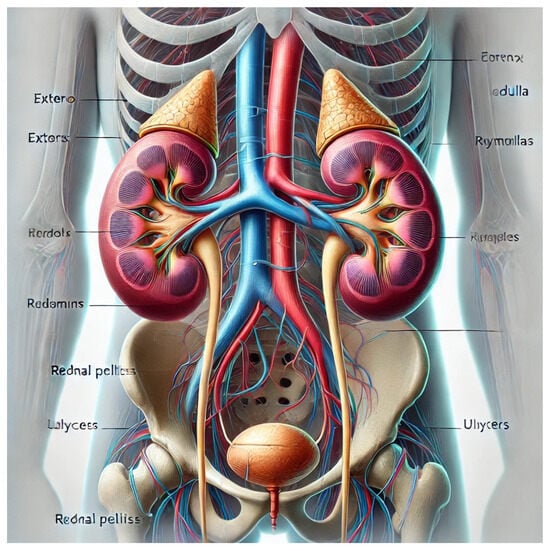

Prompt 1: Create an image of the human kidney.

Response: DALL·E generated a detailed illustration of a human kidney, as shown in Figure 1.

Figure 1.

Generated image based on a general request from Prompt 1.

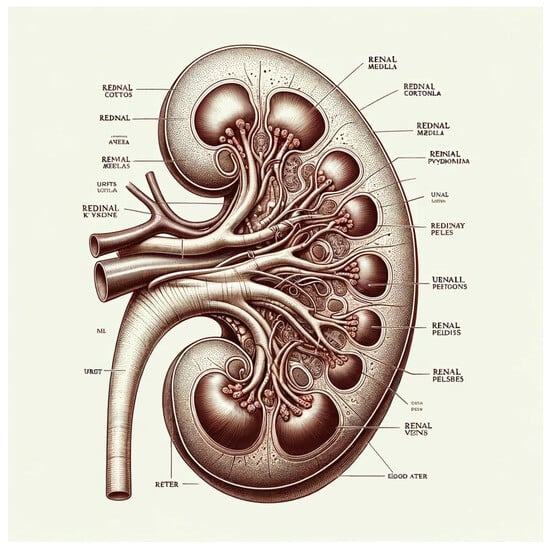

Prompt 2: Create an image of the human kidney as shown in [69].

Response: DALL·E generated a detailed illustration of a human kidney, as shown in Figure 2.

Figure 2.

Generated image based on an external reference (RAG) from Prompt 2.

6.2. Text-to-Image (3D)

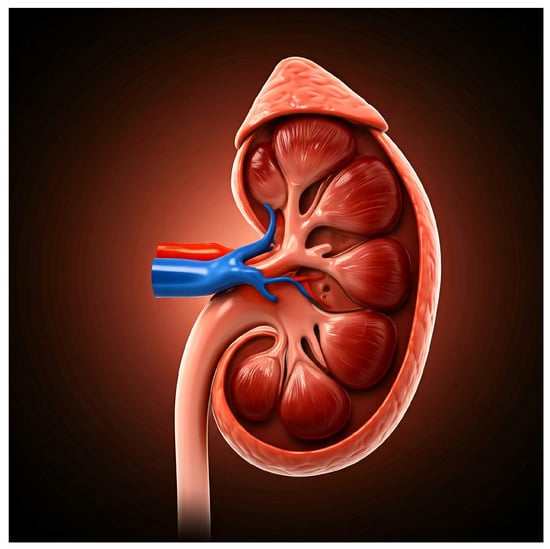

Prompt 1: Create an 3D image of the human kidney.

Response: Gemini 2.0 Flash generated a 3D illustration of a human kidney, as shown in Figure 3.

Figure 3.

Generated 3D image based on a general request from Prompt 1.

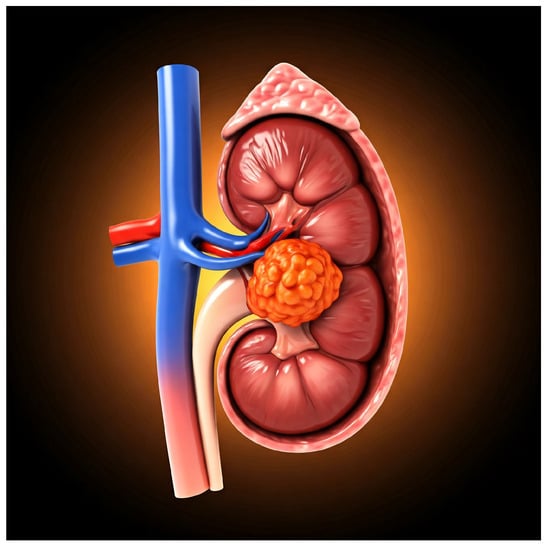

Prompt 2: Create an 3D image of the tumor kidney.

Response: Gemini 2.0 Flash generated a 3D illustration of a kidney with a tumor, as shown in Figure 4.

Figure 4.

Generated 3D image based on Prompt 2.

6.3. Text-to-Text

Table 7 shows ChatGPT's responses to text-based prompts related to kidney health. Each response illustrates ChatGPT's ability to deliver medically relevant and concise information. The prompts focus on common questions concerning kidney function and associated symptoms.

Table 7.

ChatGPT responses to text-based prompts related to kidney health.

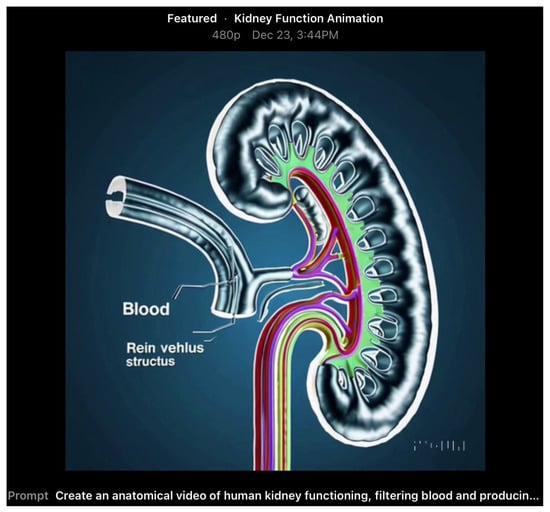

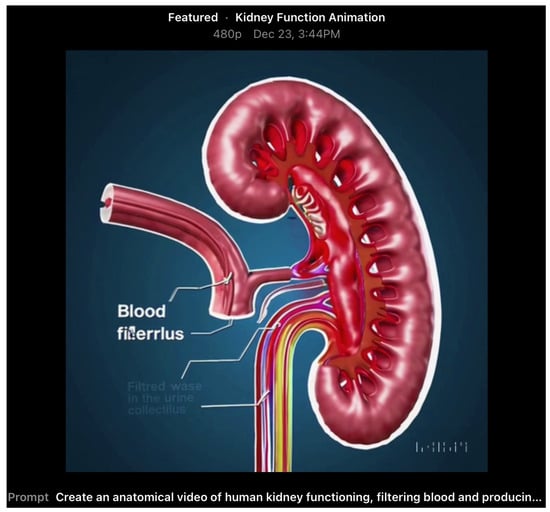

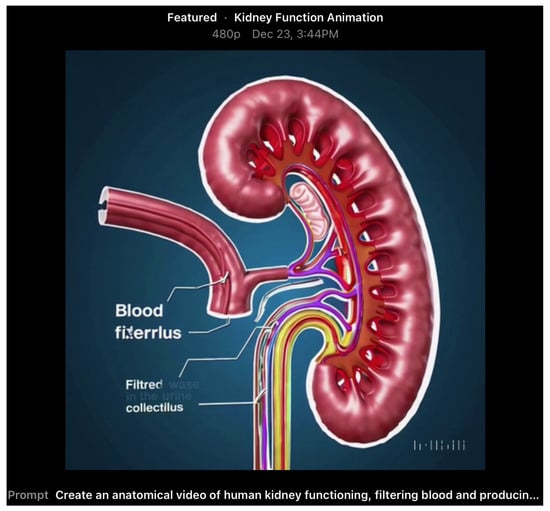

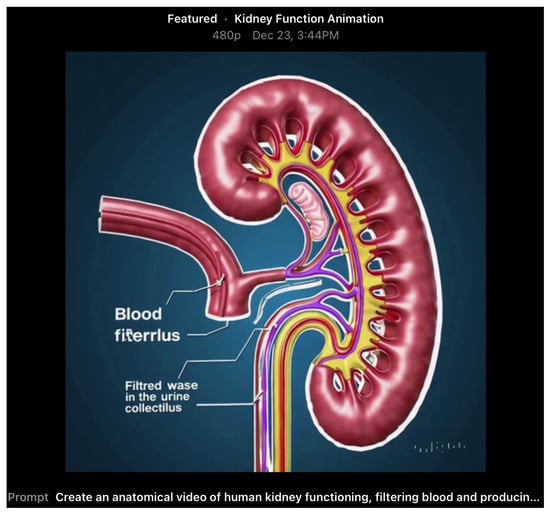

6.4. Text-to-Video

Prompt 1: Generate an anatomical video depicting human kidney function, including blood filtration, urine production, and urine flow into the collecting ducts.

Response: SORA generated a video; representative frames are shown in Figure 5, Figure 6, Figure 7 and Figure 8.

Figure 5.

Frame 1: Illustrates blood entering the kidney via the renal artery.

Figure 6.

Frame 2: Focuses on the blood filtration process in the glomerulus.

Figure 7.

Frame 3: Demonstrates the filtration of waste in the form of urine.

Figure 8.

Frame 4: Urine flow into the ureter.

DALL·E 2, Gemini 2.0 Flash, SORA, and ChatGPT-4.0 show significant potential for educational purposes. However, review by medical experts remains essential, because their outputs commonly contain spelling mistakes and factual inaccuracies that must be corrected.

7. Challenges of GenAI, LLMs, and LVMs in Kidney Healthcare

GenAI, LLMs, and LVMs are reshaping healthcare, yet substantial limitations hinder their full integration, particularly in kidney care. These challenges span technical, ethical, and demographic dimensions, affecting reliability, equity, and practicality.

A key challenge is the knowledge cutoff in most LLMs. Trained on static datasets, they cannot access real-time updates, leaving them unable to incorporate the latest medical research or guidelines. In nephrology, where protocols and diagnostics evolve rapidly, this can lead to outdated or incomplete recommendations. Furthermore, hallucination—where models produce incorrect or fabricated information—poses risks, as misleading insights on CKD management, biomarkers, or RCC diagnosis could lead to inappropriate clinical decisions and diminished trust.

LLMs and LVMs also struggle with contextual understanding, which is critical in kidney care because clinical decisions often require synthesizing lab results, comorbidities, and lifestyle factors. While these models handle individual data points, integrating them into actionable insights remains a challenge. Their outputs can be overly simplistic or irrelevant, and their lack of interpretability (they operate as black boxes) reduces clinician trust, particularly in high-stakes scenarios.

Bias and demographic representation issues further complicate adoption. Training datasets often lack diversity, leading to racial, gender, and socioeconomic biases. In nephrology, this may result in less accurate predictions for minority groups or rare disease presentations, exacerbating health inequities. Similarly, inadequate representation of anatomical and disease diversity leads to generalized predictions that may miss diagnoses or recommend inappropriate treatments, especially for underrepresented conditions.

Regulatory and ethical challenges, such as ensuring compliance with the Health Insurance Portability and Accountability Act (HIPAA), present additional barriers. The use of patient data in training raises concerns about privacy, security, and consent. Moreover, the potential misuse of AI-generated outputs, including falsified records or inaccurate recommendations, underscores the need for stringent oversight to align with medical and ethical standards.

Deployment demands also limit accessibility. The significant computational resources required by LLMs and LVMs make them unattainable for under-resourced facilities, widening care disparities. In kidney care, this could deepen the divide between well-funded institutions and underserved regions.

Finally, integrating GenAI into existing healthcare workflows remains underdeveloped. Legacy systems often lack compatibility with advanced AI tools. Effective kidney care applications, such as CKD risk stratification and complication prediction, require seamless integration with electronic health records (EHRs) and clinical systems, a capability not yet fully realized. Addressing these limitations is essential for unlocking the full potential of generative AI in kidney healthcare.

8. Conclusions and Future Work

The integration of Generative AI (GenAI), Large Language Models (LLMs), and Large Vision Models (LVMs) in kidney care has seen significant advancements, contributing to risk stratification, disease diagnosis, patient education, postoperative complication prediction, treatment optimization, and continuous monitoring. However, challenges remain in integrating multimodal AI, generating synthetic datasets to address demographic underrepresentation, and overcoming issues related to interpretability, bias, and ethical compliance. Emerging tools and frameworks, including retrieval-augmented generation (RAG) and multimodal AI models such as ChatGPT, Gemini, and SORA, offer promising solutions for improving contextual accuracy, synthesizing structured and unstructured data, and expanding applications in nephrology diagnostics and education.

The rapid evolution of AI highlights the growing sophistication of these models. OpenAI’s announcement of o3, a successor to o1 focused on advanced reasoning, and Google’s release of Gemini 2.0 Flash with enhanced multimodal capabilities underscore the push toward improved problem-solving, real-time decision-making, and multimodal adaptability. Additionally, NVIDIA’s advancements in long-context AI are aimed at reducing hallucinations and enhancing logical reasoning, which could address limitations in nephrology-focused AI applications. We will incorporate DeepSeek in future reviews as more data becomes available.

Future research should prioritize integrating these advancements into clinical workflows while ensuring seamless compatibility with electronic health records (EHRs) and accessibility in under-resourced healthcare settings. AI-powered text generation, detection systems, and multimodal frameworks should be leveraged to develop precise, equitable, and scalable kidney care solutions. Addressing ethical, computational, and regulatory challenges will be critical to fostering widespread adoption and ultimately transforming diagnostics, personalized treatment, and medical education on a global scale.

Author Contributions

Conceptualization, F.N., D.B. and D.K.S.; Methodology, F.N.; Writing—original draft, F.N.; Writing—review & editing, F.N., D.B. and D.K.S.; Funding acquisition, F.N., D.B. and D.K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by Kent State University’s Open Access APC Support Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are presented in the main text.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nair, M. The renal system. In Fundamentals of Anatomy and Physiology: For Nursing and Healthcare Students; Wiley-Blackwell: Hoboken, NJ, USA, 2016.

- Neha, F. Kidney Localization and Stone Segmentation from a CT Scan Image. In Proceedings of the 2023 7th International Conference on Computing, Communication, Control And Automation (ICCUBEA), Pune, India, 18–19 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Kovesdy, C.P. Epidemiology of chronic kidney disease: An update 2022. Kidney Int. Suppl. 2022, 12, 7–11.

- Luyckx, V.A.; Tonelli, M.; Stanifer, J.W. The global burden of kidney disease and the sustainable development goals. Bull. World Health Organ. 2018, 96, 414.

- Osman, M.A.; Alrukhaimi, M.; Ashuntantang, G.E.; Bellorin-Font, E.; Gharbi, M.B.; Braam, B.; Courtney, M.; Feehally, J.; Harris, D.C.; Jha, V.; et al. Global nephrology workforce: Gaps and opportunities toward a sustainable kidney care system. Kidney Int. Suppl. 2018, 8, 52–63.

- Bello, A.K.; McIsaac, M.; Okpechi, I.G.; Johnson, D.W.; Jha, V.; Harris, D.C.; Saad, S.; Zaidi, D.; Osman, M.A.; Ye, F.; et al. International Society of Nephrology Global Kidney Health Atlas: Structures, organization, and services for the management of kidney failure in North America and the Caribbean. Kidney Int. Suppl. 2021, 11, e66–e76.

- Levey, A.S.; Eckardt, K.U.; Dorman, N.M.; Christiansen, S.L.; Cheung, M.; Jadoul, M.; Winkelmayer, W.C. Nomenclature for kidney function and disease—Executive summary and glossary from a Kidney Disease: Improving Global Outcomes (KDIGO) consensus conference. Eur. Heart J. 2020, 41, 4592–4598.

- Seaborg, E. What’s in a Name, and Who’s the Audience? “Kidney” vs. “Renal”. Kidney News 2021, 13, 24–25.

- Fnu, N.; Bansal, A. Understanding the architecture of vision transformer and its variants: A review. In Proceedings of the 2024 1st International Conference on Innovative Engineering Sciences and Technological Research (ICIESTR), Muscat, Oman, 14–15 May 2024; pp. 1–6.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving language understanding by generative pre-training. OpenAI Technical Report, 2018.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. arXiv 2019, arXiv:1906.08237.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-shot text-to-image generation. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8821–8831.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- Wu, C.; Liang, J.; Ji, L.; Yang, F.; Fang, Y.; Jiang, D.; Duan, N. NÜWA: Visual synthesis pre-training for neural visual world creation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 720–736.

- Ding, M.; Yang, Z.; Hong, W.; Zheng, W.; Zhou, C.; Yin, D.; Lin, J.; Zou, X.; Shao, Z.; Yang, H.; et al. CogView: Mastering text-to-image generation via transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 19822–19835.

- Ding, M.; Zheng, W.; Hong, W.; Tang, J. CogView2: Faster and better text-to-image generation via hierarchical transformers. Adv. Neural Inf. Process. Syst. 2022, 35, 16890–16902.

- Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.L.; Ghasemipour, K.; Gontijo Lopes, R.; Karagol Ayan, B.; Salimans, T.; et al. Photorealistic text-to-image diffusion models with deep language understanding. Adv. Neural Inf. Process. Syst. 2022, 35, 36479–36494.

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with CLIP latents. arXiv 2022, arXiv:2204.06125.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Singer, U.; Polyak, A.; Hayes, T.; Yin, X.; An, J.; Zhang, S.; Hu, Q.; Yang, H.; Ashual, O.; Gafni, O.; et al. Make-A-Video: Text-to-video generation without text-video data. arXiv 2022, arXiv:2209.14792.

- MidJourney Team. MidJourney Explore: Top Images. Available online: https://www.midjourney.com/explore?tab=top (accessed on 22 August 2024).

- Poole, B.; Jain, A.; Barron, J.T.; Mildenhall, B. DreamFusion: Text-to-3D using 2D diffusion. arXiv 2022, arXiv:2209.14988.

- Gafni, O.; Polyak, A.; Ashual, O.; Sheynin, S.; Parikh, D.; Taigman, Y. Make-A-Scene: Scene-based text-to-image generation with human priors. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 89–106.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Google. Bard: A Generative AI Text-to-Text Model Powered by LaMDA and PaLM 2. 2023. Available online: https://bard.google.com (accessed on 22 December 2024).

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

- Thoppilan, R.; De Freitas, D.; Hall, J.; Shazeer, N.; Kulshreshtha, A.; Cheng, H.T.; Jin, A.; Bos, T.; Baker, L.; Du, Y.; et al. LaMDA: Language models for dialog applications. arXiv 2022, arXiv:2201.08239.

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, E.; Bailey, P.; Chen, Z.; et al. PaLM 2 technical report. arXiv 2023, arXiv:2305.10403.

- Villegas, R.; Babaeizadeh, M.; Kindermans, P.J.; Moraldo, H.; Zhang, H.; Saffar, M.T.; Castro, S.; Kunze, J.; Erhan, D. Phenaki: Variable length video generation from open domain textual descriptions. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Mistral AI. Introducing Mistral: Advancing Open-Weight Transformer Models. 2023. Available online: https://www.mistral.ai (accessed on 22 December 2024).

- OpenAI. Sora: A Text-to-Video Generation Model Using Transformer-Based Architecture and Pre-Trained Diffusion Models. 2024. Available online: https://openai.com (accessed on 22 December 2024).

- Team, G.; Georgiev, P.; Lei, V.I.; Burnell, R.; Bai, L.; Gulati, A.; Tanzer, G.; Vincent, D.; Pan, Z.; Wang, S.; et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv 2024, arXiv:2403.05530.

- Big Sleep Team. From Naptime to Big Sleep: Using Large Language Models To Catch Vulnerabilities In Real-World Code. 2024. Available online: https://googleprojectzero.blogspot.com/2024/10/from-naptime-to-big-sleep.html (accessed on 22 December 2024).

- OpenAI. Hello GPT-4o. 2024. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 1 December 2024).

- OpenAI. GPT-4o Mini: Advancing Cost-Efficient Intelligence. 2024. Available online: https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence (accessed on 1 December 2024).

- OpenAI. Introducing OpenAI o1: A Model Designed for Enhanced Reasoning. Available online: https://openai.com/index/introducing-openai-o1-preview (accessed on 1 December 2024).

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474. [Google Scholar]

- Karpukhin, V.; Oğuz, B.; Min, S.; Lewis, P.; Wu, L.; Edunov, S.; Chen, D.; Yih, W.t. Dense passage retrieval for open-domain question answering. arXiv 2020, arXiv:2004.04906. [Google Scholar]

- Izacard, G.; Grave, E. Leveraging passage retrieval with generative models for open domain question answering. arXiv 2020, arXiv:2007.01282. [Google Scholar]

- Schütze, H.; Manning, C.D.; Raghavan, P. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008; Volume 39. [Google Scholar]

- Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607. [Google Scholar]

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738. [Google Scholar]

- Gao, T.; Yao, X.; Chen, D. Simcse: Simple contrastive learning of sentence embeddings. arXiv 2021, arXiv:2104.08821. [Google Scholar]

- Mao, C.; Yao, L.; Luo, Y. A Pre-trained Clinical Language Model for Acute Kidney Injury. In Proceedings of the 2020 IEEE International Conference on Healthcare Informatics (ICHI), Oldenburg, Germany, 30 November–3 December 2020; pp. 1–2. [Google Scholar] [CrossRef]

- Ma, D.; Li, X.; Mou, S.; Cheng, Z.; Yan, X.; Lu, Y.; Yan, R.; Cao, S. Prediction of chronic kidney disease risk using multimodal data. In Proceedings of the 2021 5th International Conference on Compute and Data Analysis, Sanya, China, 2–4 February 2021; pp. 20–25. [Google Scholar]

- Qarajeh, A.; Tangpanithandee, S.; Thongprayoon, C.; Suppadungsuk, S.; Krisanapan, P.; Aiumtrakul, N.; Garcia Valencia, O.A.; Miao, J.; Qureshi, F.; Cheungpasitporn, W. AI-Powered Renal Diet Support: Performance of ChatGPT, Bard AI, and Bing Chat. Clin. Pract. 2023, 13, 1160–1172. [Google Scholar] [CrossRef]

- Aiumtrakul, N.; Thongprayoon, C.; Suppadungsuk, S.; Krisanapan, P.; Miao, J.; Qureshi, F.; Cheungpasitporn, W. Navigating the landscape of personalized medicine: The relevance of ChatGPT, BingChat, and Bard AI in nephrology literature searches. J. Pers. Med. 2023, 13, 1457. [Google Scholar] [CrossRef]

- Suppadungsuk, S.; Thongprayoon, C.; Krisanapan, P.; Tangpanithandee, S.; Garcia Valencia, O.; Miao, J.; Mekraksakit, P.; Kashani, K.; Cheungpasitporn, W. Examining the validity of ChatGPT in identifying relevant nephrology literature: Findings and implications. J. Clin. Med. 2023, 12, 5550. [Google Scholar] [CrossRef] [PubMed]

- Garcia Valencia, O.A.; Thongprayoon, C.; Jadlowiec, C.C.; Mao, S.A.; Miao, J.; Cheungpasitporn, W. Enhancing kidney transplant care through the integration of chatbot. Healthcare 2023, 11, 2518.

- Szczesniewski, J.J.; Tellez Fouz, C.; Ramos Alba, A.; Diaz Goizueta, F.J.; García Tello, A.; Llanes González, L. ChatGPT and most frequent urological diseases: Analysing the quality of information and potential risks for patients. World J. Urol. 2023, 41, 3149–3153.

- Moore, A.; Orset, B.; Yassaee, A.; Irving, B.; Morelli, D. HEalthRecordBERT (HERBERT): Leveraging Transformers on Electronic Health Records for Chronic Kidney Disease Risk Stratification. ACM Trans. Comput. Healthc. 2024, 5, 1–18.

- Tan, Y.; Dede, M.; Mohanty, V.; Dou, J.; Hill, H.; Bernstam, E.; Chen, K. Forecasting Acute Kidney Injury and Resource Utilization in ICU patients using longitudinal, multimodal models. J. Biomed. Inform. 2024, 154, 104648.

- Liang, R.; Zhao, A.; Peng, L.; Xu, X.; Zhong, J.; Wu, F.; Yi, F.; Zhang, S.; Wu, S.; Hou, J. Enhanced artificial intelligence strategies in renal oncology: Iterative optimization and comparative analysis of GPT 3.5 versus 4.0. Ann. Surg. Oncol. 2024, 31, 3887–3893.

- Talyshinskii, A.; Juliebø-Jones, P.; Hameed, B.Z.; Naik, N.; Adhikari, K.; Zhanbyrbekuly, U.; Tzelves, L.; Somani, B.K. ChatGPT as a Clinical Decision Maker for Urolithiasis: Compliance with the Current European Association of Urology Guidelines. Eur. Urol. Open Sci. 2024, 69, 51–62.

- Bersano, J. Exploring the role of Microsoft’s Copilot in visual communication: Current use and considerations through science communicators’ lens. Virus 2024, 6, 11.

- Safadi, M.F.; Zayegh, O.; Hawoot, Z. Advancing Innovation in Medical Presentations: A Guide for Medical Educators to Use Images Generated With Artificial Intelligence. Cureus 2024, 16, e74978.

- Goparaju, N. Picture This: Text-to-Image Models Transforming Pediatric Emergency Medicine. Ann. Emerg. Med. 2024, 84, 651–657.

- Lin, S.Y.; Jiang, C.C.; Law, K.M.; Yeh, P.C.; Kuo, H.L.; Ju, S.W.; Kao, C.H. Comparative Analysis of Generative AI in Clinical Nephrology: Assessing ChatGPT-4, Gemini Pro, and Bard in Patient Interaction and Renal Biopsy Interpretation. SSRN 2024. Available online: https://ssrn.com/abstract=4711596 (accessed on 18 February 2025).

- Hsueh, J.Y.; Nethala, D.; Singh, S.; Linehan, W.M.; Ball, M.W. Investigating the clinical reasoning abilities of large language model GPT-4: An analysis of postoperative complications from renal surgeries. Urol. Oncol. Semin. Orig. Investig. 2024, 42, 292.e1–292.e7.

- Sexton, D.J.; Judge, C. Assessments of Generative Artificial Intelligence as Clinical Decision Support Ought to be Incorporated Into Randomized Controlled Trials of Electronic Alerts for Acute Kidney Injury. Mayo Clin. Proc. Digit. Health 2024, 2, 606–610.

- Halawani, A.; Almehmadi, S.G.; Alhubaishy, B.A.; Alnefaie, Z.A.; Hasan, M.N. Empowering patients: How accurate and readable are large language models in renal cancer education. Front. Oncol. 2024, 14, 1457516.

- Neha, F.; Bhati, D.; Shukla, D.K.; Amiruzzaman, M. ChatGPT: Transforming healthcare with AI. AI 2024, 5, 2618–2650.

- Wikimedia Commons. The Kidney. 2024. Available online: https://commons.wikimedia.org/wiki/File:2610_The_Kidney.jpg (accessed on 23 November 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).