1. Introduction

ANSYS, a commercially available finite element software package, can simulate the mechanical properties of ordinary concrete and is used to analyze concrete elements [1]. It enables engineers to model the mechanical behavior of concrete and other materials at a very fine level of detail. The simulation process in ANSYS involves modeling the concrete structure as a three-dimensional (3D) network of interconnected finite elements. Loads are then applied to the model to simulate the mechanical behavior of the concrete, taking into account the material properties. The finite element method (FEM) is widely used to calculate structural properties, stresses, strains, and deformations of structures. The rapid and continuous advancement in computational power has significantly expanded the scope and scale of numerical simulation techniques, enabling the analysis of increasingly complex physical systems with higher efficiency and resolution and, depending on the underlying models, potentially greater accuracy.

The uncertainty in a system can be present in multiple aspects, such as loading conditions or geometric and material properties. The deterministic approach assumes exact, fixed values for inputs like material properties, boundary conditions, and loading. On the other hand, the probabilistic approach [2,3] is a method used to account for uncertainty and variability in input data, model parameters, or environmental conditions when analyzing or designing systems. Instead of assuming that all parameters are fixed (as in a deterministic approach), the probabilistic approach treats key inputs as random variables with known or definable probability distributions. These distributions can assume any mathematically definable form. A common example is the normal (or Gaussian) distribution. This approach allows for the prediction not just of a single outcome, but a range of possible outcomes, each with a quantifiable likelihood [4]. By integrating the probabilistic approach into finite element analysis, the simulation is transformed from a single-point deterministic prediction into a statistical evaluation of the likelihood of various outcomes. This is typically achieved through techniques such as the Monte Carlo method [5,6,7], in which the model is executed repeatedly using input parameters that are randomly sampled from predefined probability distributions. This process results in a range of possible outputs rather than a single solution, allowing for the assessment of variability, the identification of influential parameters, and the quantification of uncertainty in the system’s response.
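The repeated-execution idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `toy_model` is a hypothetical analytical stand-in for a finite element solve (nominal stress as force over area), and the means and standard deviations are invented for the example rather than taken from the study.

```python
import random
import statistics


def toy_model(dx_mm, dy_mm, force_n):
    """Hypothetical stand-in for an FE solve: nominal stress in N/mm^2."""
    return force_n / (dx_mm * dy_mm)


def monte_carlo(model, inputs, trials, seed=0):
    """Run `model` once per trial with inputs drawn from normal distributions.

    `inputs` is a list of (mean, standard deviation) pairs, one per argument.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    outputs = []
    for _ in range(trials):
        sample = [rng.gauss(mean, sd) for mean, sd in inputs]
        outputs.append(model(*sample))
    return outputs


# Assumed (illustrative) input distributions: two side lengths and a force.
inputs = [(150.0, 0.2), (150.0, 0.2), (800.0, 8.0)]
outputs = monte_carlo(toy_model, inputs, trials=404)

mean_out = statistics.mean(outputs)
sd_out = statistics.stdev(outputs)
print(f"output mean = {mean_out:.5f} N/mm^2, sd = {sd_out:.5f} N/mm^2")
```

The list `outputs` plays the role of the output distribution: it can be summarized by its mean and standard deviation or plotted as a histogram.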

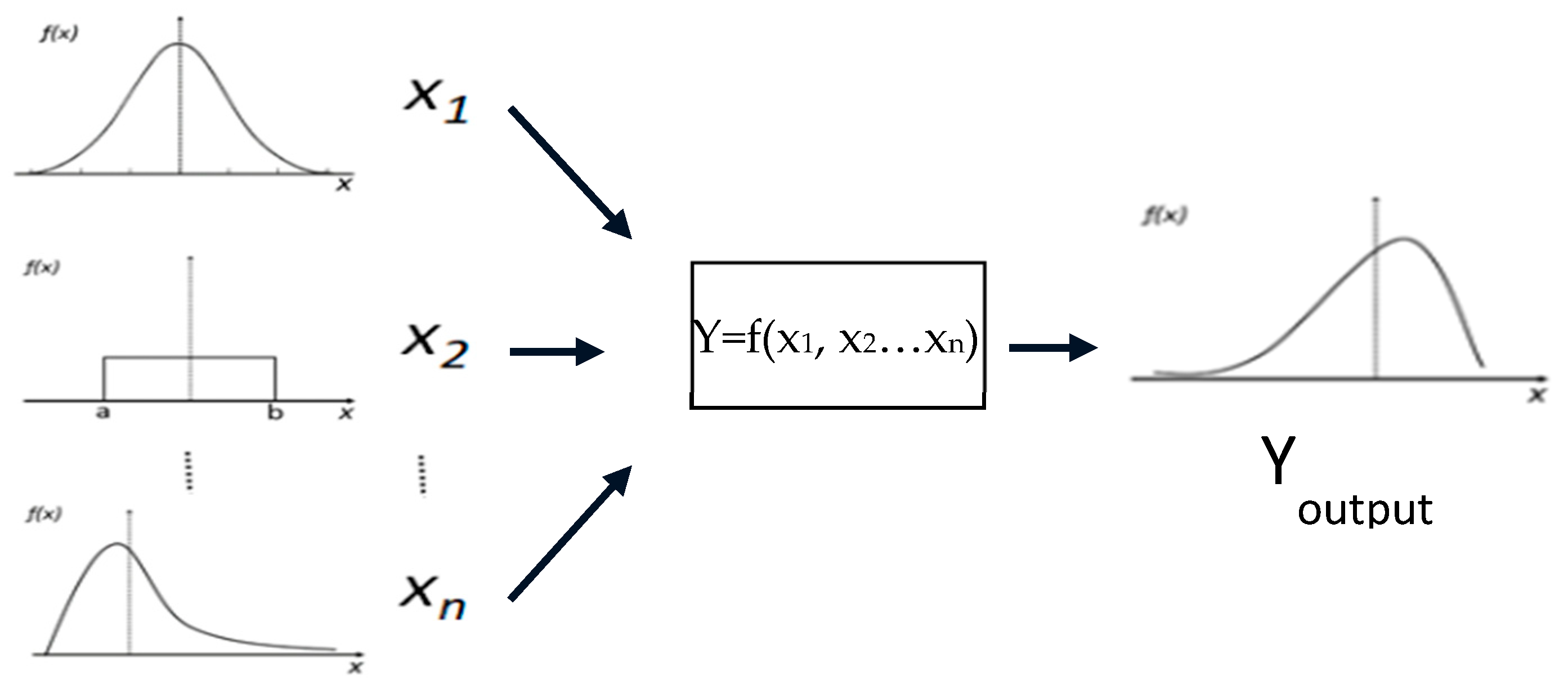

Figure 1 illustrates the propagation of distributions for N = 3 independent input quantities.

JCGM 101:2008 [8], titled “Evaluation of Measurement Data—Supplement 1 to the ‘Guide to the Expression of Uncertainty in Measurement’—Propagation of Distributions Using a Monte Carlo Method”, is an internationally recognized document published by the Joint Committee for Guides in Metrology (JCGM). Generally, it provides comprehensive guidance on how to evaluate and propagate uncertainty when the input quantities of a measurement or computational model are represented by probability distributions. This supplement promotes the use of the Monte Carlo method as a practical and powerful tool for numerically simulating the propagation of uncertainty through complex models.

When engineers use the results of a simulation, their goal is often to compare the outcome either to an experimental result or to the outcome of another computational model. In both cases, understanding and quantifying the uncertainty associated with the simulation is crucial. If a simulation is to be compared with an experiment, it is not sufficient to simply match the mean or nominal values; a decision rule needs to be selected. Without accounting for uncertainty, any apparent conclusion of agreement or disagreement could be misleading. Uncertainty intervals provide context for whether the results are statistically consistent or significantly different. Without uncertainty quantification, simulation–experiment comparisons lack scientific rigor. Similarly, when comparing two different models, each with its own assumptions, simplifications, and input data, uncertainty quantification allows engineers to determine whether the models produce results that are meaningfully distinct or within acceptable agreement. This is particularly important for model validation, quality control, or selecting a model for use in a safety-critical or high-precision application.

In this study, a simple finite element model of a concrete cube was developed in ANSYS 2025 R2 to simulate the mechanical response under load. Recognizing that real-world variability—such as geometric tolerances of the specimen or variability in the magnitude and misalignments of applied forces—can significantly affect test outcomes, these input parameters were modeled as random variables with assigned probability distributions. To propagate this uncertainty through the model and obtain distributions of output quantities (such as maximum deformation, strain, and stress), the Monte Carlo method was employed in the way that is explicitly proposed by JCGM 101:2008.

In destructive testing, estimating the uncertainty of the measurement is typically achieved through repeated tests on multiple specimens. However, this approach inevitably introduces the effect of material heterogeneity, as each specimen may differ in composition, geometry, or internal structure. This variation can obscure the influence of specific factors on the test outcome. By assigning probability distributions to input parameters, it becomes possible to evaluate their individual and combined effects on the output without the confounding influence of specimen-to-specimen variability. Beyond identifying which factors contribute most to the result, this method enables the calculation of confidence intervals for key mechanical quantities. These intervals can be used as the basis for decision-making, including comparing simulation outcomes with experimental data from a single physical specimen, assessing the consistency between two different models, and defining control limits for quality assurance in laboratory testing procedures. In this way, the Monte Carlo-based approach, as introduced in JCGM 101:2008, provides a more robust alternative [5,6,7,8,9]. Not only does it quantify uncertainty in a traceable and standardized manner, but it also enhances the interpretability and applicability of simulation results in engineering practice.

In a previous study [10], a methodology was presented for combining the results of compressive strength testing of concrete specimens with two non-destructive testing methods, providing additional results on strength determination based on totally different measurement mechanisms. The approach developed in the present work aims to further enhance the reliability of the destructive testing method. By incorporating numerical simulations and uncertainty quantification through Monte Carlo analysis, this method provides deeper insight into the variability and sensitivity of key parameters affecting the outcome of compressive strength tests. As a result, it strengthens the interpretive value of destructive tests by complementing their single-value results with a probabilistic framework. This integration supports more robust conformity assessments and contributes to improving the overall quality control procedures in accordance with modern standards.

The significance of this study is that (i) it presents a method for obtaining a decision rule when comparing the model results with the results of an experiment on the real specimen or when comparing the results of two different models, and (ii) it proposes a method to enhance the reliability of destructive testing. The uncertainty quantification is essential to engineers who want to validate any model.

2. Method—Input Parameters and Randomization Procedure

A simple example of FEM application (a 3D model of a concrete cube with dimensions 150 × 150 × 150 mm) was chosen for the determination of maximum total deformation, maximum principal elastic strain, and maximum principal stress. It should be noted that this introduces an ideal, perfectly shaped specimen that is impossible to manufacture in the real world.

Figure 2a shows one side of this specimen, with the deviations from the ideal cube exaggerated enough to be visible. In this figure, the red line represents a cross section of the real surface of the specimen, the blue line is the same cross section but for a perfectly flat and perpendicular side of the specimen that could be considered as representative of the real side on average, and the gray line is the cross section of the perfectly flat and perpendicular side in the plane at the nominal (150 mm) dimension of the specimen. The same figure illustrates several significant sources of error arising from deviations from the ideal model: (a) lack of perpendicularity of the surface, shown as the angle θ of the real side (red line) relative to the plane in which the representative and the nominal sides lie; (b) roughness of the surface (non-linearity of the red line); and (c) offset of the average plane of the specimen side (blue line) relative to the nominal plane of the specimen (gray line). These deviations occur naturally and are to be expected, owing to imperfections in the construction of the molds and deviations in the molding or specimen curing process.

When attempting to evaluate the real characteristics of a cube specimen, it is natural to measure the dimensions of each side, e.g., by using a properly calibrated caliper. As shown in Figure 2a, a good approach is to make at least three measurements, one in the middle and two more near the extremities of each side of the specimen (i.e., the points shown by the green arrows in the case of the side parallel to axis x). This way, a mean of the side dimension (variable dx′) and a measure of dispersion, in the form of a calculated standard deviation sdx′, are obtained. Assuming a normal (Gaussian) distribution of the variable dx′, this side dimension is correctly represented by a confidence interval at the 95% level, expressed as dx′ ± 1.96·sdx′. Of course, this interval is biased relative to the nominal (i.e., desired) value dx, with a corresponding systematic error equal to dx − dx′.
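As a small worked illustration of this summary, the following Python sketch computes the mean, the sample standard deviation, the interval mean ± 1.96·sd, and the systematic error relative to the nominal value. The three caliper readings are hypothetical values chosen for the example, and the function name `side_summary` is an assumption, not part of the study.

```python
import statistics


def side_summary(readings_mm, nominal_mm=150.0, k=1.96):
    """Summarize caliper readings of one side of the cube."""
    mean = statistics.mean(readings_mm)
    sd = statistics.stdev(readings_mm)  # sample standard deviation (n - 1)
    return {
        "mean": mean,
        "sd": sd,
        "interval": (mean - k * sd, mean + k * sd),
        "systematic_error": nominal_mm - mean,  # nominal minus measured mean
    }


# Hypothetical readings: middle of the side and near its two extremities.
readings = [150.12, 150.05, 150.19]
summary = side_summary(readings)
print(summary)
```

For these illustrative readings the mean is 150.12 mm and the standard deviation is 0.07 mm, so the nominal value of 150 mm lies 0.12 mm below the measured mean.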

One side of the cube was chosen as fixed, and a force, nominally chosen to be equal to F, was applied perpendicular to the opposite side.

Figure 2b shows how the lack of flatness of the surface of the specimen, but also the lack of alignment with the moving part of a uniaxial compressive machine, affects the vector of the applied force. The nominal testing condition corresponds to a totally perpendicular compressive force, equal to F, evenly distributed through the entire side of the specimen. Again, the real test conditions deviate from this, producing many significant errors: (a) error in defining the scale of the applied force, which is expected even for successfully calibrated uniaxial compressive machines, and (b) error due to deviation from the desired situation of perfectly flat specimen surface and perfectly aligned axis of the moving part of the compressive machine relative to the perpendicular axis of the specimen.

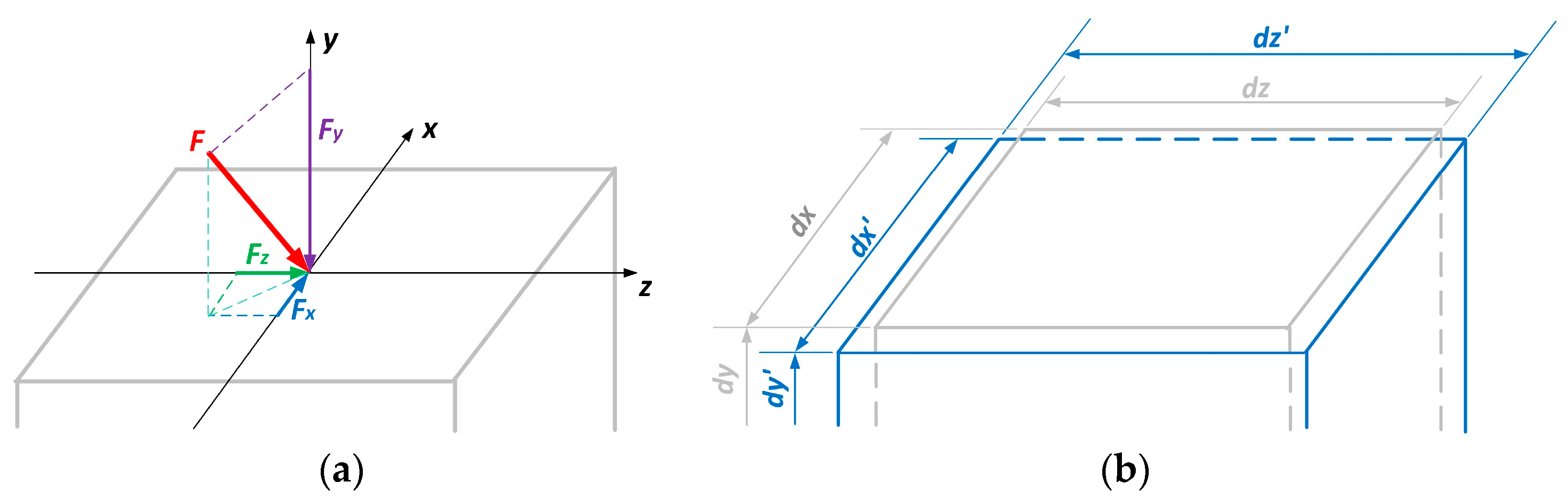

Parameters P1–P3 represent the three Cartesian dimensions (dx, dy, dz) of the cube (Figure 3b), specified nominally as 150 mm. While molds are designed for these nominal values, manufacturing tolerances and surface irregularities result in deviations. The actual dimensions (dx′, dy′, dz′) are best represented as a mean ± standard deviation, assuming a normal distribution. In practice, up to 10 caliper measurements per specimen provide a small but sufficient dataset for estimating these values.

Parameters P4–P6 denote the Cartesian components of the applied force F, which is the resultant force due to the compression of the upper surface of the specimen, referenced from the center of the specimen’s upper face (Figure 3a). Although the intended loading is perpendicular to this face, imperfections in machine alignment and specimen flatness (Figure 2b) introduce small components along the horizontal axes. Calibration data provide the mean and standard deviation for the vertical component, while the horizontal components are estimated from reasonable engineering assumptions.

Random numbers were generated in Microsoft Excel using the formula =NORM.INV(RAND(), m, sd), where RAND() produces a uniformly distributed value between 0 and 1, and NORM.INV converts it into a normally distributed variate with specified mean (m) and standard deviation (sd). Large sets of values for each input parameter (P1–P6) were generated according to selected means and standard deviations. Values for input parameter P6, however, were not generated directly with the randomization function. Instead, the randomization function was applied to the force F, which is directly related to the setup of the compressive machine. The force components on the two horizontal axes arise from the lack of flatness of the upper surface of the specimen and from its misalignment with the moving part of the compressive machine. The component of force Fz on the perpendicular axis z, i.e., parameter P6, was then calculated as (Figure 3a):
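The equation itself is not reproduced in this text. As a hedged sketch only, the Python code below mirrors the Excel formula =NORM.INV(RAND(), m, sd) and then derives the perpendicular component under the assumption that F is the magnitude of the resultant force and that the lateral components lie along x and y, so that Fz = sqrt(F² − Fx² − Fy²). This relation, the function names, and all numerical values are assumptions made for illustration and may differ from the authors' expression.

```python
import math
import random
from statistics import NormalDist


def norm_inv_rand(mean, sd, rng):
    """Python analogue of Excel's =NORM.INV(RAND(), mean, sd)."""
    u = rng.random()   # uniform on [0, 1)
    while u == 0.0:    # inv_cdf is only defined for 0 < u < 1
        u = rng.random()
    return NormalDist(mean, sd).inv_cdf(u)


def sample_force(f_mean, f_sd, lateral_sd, rng):
    """Draw one (F, Fx, Fy, Fz) set; Fz follows from the assumed relation."""
    f = norm_inv_rand(f_mean, f_sd, rng)
    fx = norm_inv_rand(0.0, lateral_sd, rng)
    fy = norm_inv_rand(0.0, lateral_sd, rng)
    # ASSUMPTION: F is the magnitude of the resultant force vector.
    fz = math.sqrt(f * f - fx * fx - fy * fy)
    return f, fx, fy, fz


rng = random.Random(42)  # seeded for reproducibility
# Illustrative values only: F ~ N(800, 8) N, lateral components ~ N(0, 5) N.
f, fx, fy, fz = sample_force(800.0, 8.0, 5.0, rng)
print(f"F = {f:.2f} N, Fx = {fx:.2f} N, Fy = {fy:.2f} N, Fz = {fz:.2f} N")
```

Deriving Fz from F in this way keeps the magnitude of the force vector equal to the randomized F, which is the quantity tied to the machine calibration.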

To validate Excel’s random number generation, the simulated datasets were subjected to normality testing (Table 1).

For each dataset, the first 5000 values were tested to verify consistency with a normal distribution, using the Shapiro–Wilk (S–W), Kolmogorov–Smirnov (K–S), and Anderson–Darling (A–D) tests. The S–W test, recommended for small to moderate samples (n ≈ 3–5000), compares ordered sample values with expected normal order statistics, with W values near 1 indicating normality. At the upper range (n = 5000), results were corroborated by the K–S test, which compares the empirical and theoretical cumulative distribution functions, using the maximum vertical distance (D) as the test statistic. To match the study population (n = 404), the first 404 values from each dataset were also tested. At this sample size, both S–W and K–S tests are appropriate, with the former generally more sensitive to deviations in symmetric distributions. The A–D test, a modification of the K–S procedure, emphasizes deviations in the distribution tails, with its statistic (A²) measuring the integrated squared difference between empirical and theoretical distributions, providing greater power for detecting outliers and extreme-value departures from normality across all sample sizes.
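To make the two distance-based statistics concrete, the sketch below computes D (K–S) and A² (A–D) for a sample against a fully specified normal distribution, using only the Python standard library. It is a minimal illustration, not the software used in the study: the sample is generated here with an assumed mean of 150 and standard deviation of 0.5, and no p-values are computed. For a fully specified distribution, the asymptotic 5% critical value of D is approximately 1.36/√n.

```python
import math
import random
from statistics import NormalDist


def ks_statistic(values, dist):
    """Maximum vertical distance D between the empirical and theoretical CDFs."""
    xs = sorted(values)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        cdf = dist.cdf(x)
        # The empirical CDF jumps from (i - 1)/n to i/n at x.
        d = max(d, i / n - cdf, cdf - (i - 1) / n)
    return d


def ad_statistic(values, dist):
    """Anderson-Darling A^2, which weights the distribution tails more heavily."""
    xs = sorted(values)
    n = len(xs)
    total = 0.0
    for i in range(1, n + 1):
        lower = math.log(dist.cdf(xs[i - 1]))
        upper = math.log(1.0 - dist.cdf(xs[n - i]))
        total += (2 * i - 1) * (lower + upper)
    return -n - total / n


rng = random.Random(1)
target = NormalDist(150.0, 0.5)                      # assumed mean and sd
sample = [rng.gauss(150.0, 0.5) for _ in range(404)]

d_stat = ks_statistic(sample, target)
a2_stat = ad_statistic(sample, target)
print(f"D = {d_stat:.4f} (approx. 5% critical value {1.36 / math.sqrt(404):.4f})")
print(f"A^2 = {a2_stat:.4f}")
```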

3. Results

The method was used to determine the distribution of the output parameters by repeatedly solving the model with the first 404 generated sets of randomized values. A set of 404 values was thus obtained for each output parameter. Each of the three output parameter distributions (P7, P8, P9) was then plotted as a histogram, showing the range and shape of the output distribution (Figure 4).

Figure 4 illustrates a probabilistic analysis workflow in which input distributions of geometric dimensions and force components are propagated through a computational model, resulting in output distributions. This figure is similar to Figure 1, but the distributions shown are the actual results of the applied method. The three histograms of the output parameters represent the distributions of the response quantities obtained from the model. The statistical characteristics of the resulting distributions are presented in detail in Table 2.

Initially, the values of the output parameters were tested against the normal distribution (Table 2). Normality was not rejected for parameters P8 and P9, meaning that the values m ± 1.96·sd correspond to a 95% confidence interval. For P8, the 95% confidence interval is (4.0 ± 0.2) × 10⁻⁶ mm⁻¹, and for P9 it is (3.6 ± 0.1) × 10⁴ Pa. These two intervals may be used in a decision rule for comparison with specified limits. They can also be used to compare the values produced by two different simulations of two different, but similar, specimens.
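One simple way such an interval could serve as a decision rule is sketched below in Python. The P9 interval is the one reported above; the experimental values, the second interval, and the function names are hypothetical and serve only to show the logic of an interval-membership check and an interval-overlap check.

```python
import statistics


def interval_95(values, k=1.96):
    """Return (mean - k*sd, mean + k*sd) for normally distributed outputs."""
    m = statistics.mean(values)
    sd = statistics.stdev(values)
    return (m - k * sd, m + k * sd)


def within(value, interval):
    """True if a single result lies inside the interval."""
    low, high = interval
    return low <= value <= high


def overlap(a, b):
    """True if two intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Reported 95% interval for P9: (3.6 +/- 0.1) x 10^4 Pa.
p9_interval = (3.5e4, 3.7e4)

# Hypothetical experimental results, for illustration only.
print(within(3.55e4, p9_interval))   # consistent with the model
print(within(3.90e4, p9_interval))   # not consistent with the model

# Hypothetical interval from a second model of a similar specimen.
other_model = (3.65e4, 3.80e4)
print(overlap(p9_interval, other_model))
```

The helper `interval_95` shows how such an interval would be derived from a list of Monte Carlo outputs when normality has not been rejected.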

The normality test was not successful for the distribution of values of the output parameter P7. An attempt was therefore made to test whether this distribution follows the t-distribution, which, like the normal distribution, is symmetric and centered. A χ² test was performed, leading to the conclusion that the P7 data do not follow the t-distribution.

This process could be repeated for other known symmetric distributions until a χ² test identifies the actual distribution of the values of the output parameter P7. Nevertheless, given the symmetric and centered outcome for parameter P7 (Figure 4), assigning a triangular distribution would be adequate for establishing a decision rule.
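If a symmetric triangular distribution is assigned, a central 95% interval follows in closed form. For a distribution centered at c with half-width w, the probability of falling within c ± k is 1 − (1 − k/w)², so a coverage of p gives k = w·(1 − √(1 − p)), roughly 0.776·w for p = 0.95. The Python sketch below applies this; taking the limits of the triangle from the range of the simulated values is an assumption made here for illustration, and the numbers are not results from the study.

```python
import math


def triangular_coverage_interval(low, high, p=0.95):
    """Central interval with coverage p for a symmetric triangular
    distribution on [low, high]."""
    center = (low + high) / 2.0
    half_width = (high - low) / 2.0
    k = half_width * (1.0 - math.sqrt(1.0 - p))
    return center - k, center + k


# Illustrative limits only (e.g., taken from the range of the simulated values).
lower, upper = triangular_coverage_interval(0.0, 2.0)
print(f"95% interval: [{lower:.4f}, {upper:.4f}]")
```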

The method analyzed in this study is presented so that it can be applied to any finite element solution of a material strength problem to produce a criterion for verifying experimental results, based on the estimated 95% confidence interval for the strength result.

The confidence intervals estimated based on the methodology presented for the model results through the Monte Carlo method can be used:

- As the basis for a decision rule when comparing the model results with the results of an experiment on the real specimen.

- As the basis for a decision rule when comparing the results of two different models.

- To calculate the limits of quality control charts of a laboratory performing the testing.