1. Introduction

The paper revisits a well-known concept, the reduced chi-square, and introduces and formalizes a novel interpretation of the ratio between fitting and propagation errors. This interpretation supports the use of the reduced chi-square, and of the criterion of its deviation from one, as a measure of the goodness of fitting.

The chi-square sums the squares of the residuals between a given dataset and a model intended to fit this dataset [1]. Given the dataset of $N$ observations, $\{(x_i, y_i)\}_{i=1}^{N}$, the respective modeled values $\{\hat{y}_i\}_{i=1}^{N}$ are determined as $\hat{y}_i = f(x_i)$ from the statistical model $y = f(x)$, which is expected to best describe the data. Often, the statistics of the observational data are unknown; thus, a general fitting method is employed to adjust the modeled values to the data by minimizing their total deviation, $\Phi(\{y_i - \hat{y}_i\}_{i=1}^{N})$, defined through a norm $\Phi$. For the Euclidean norm, the sum of the squared deviations is used, given by $\sum_{i=1}^{N} (y_i - \hat{y}_i)^2$.

A mono-parametric fitting model, $f(x; p)$, depends on one single parameter, $p$, e.g., the simple case of fitting a constant, $f(x; p) = p$. A bi-parametric fitting model, $f(x; p_1, p_2)$, depends on two independent parameters, $p_1$ and $p_2$, e.g., the well-known least-squares method of a linear fit, $f(x; p_1, p_2) = p_1 + p_2\,x$. In general, we consider a multi-parametric fitting model, $f(x; \vec{p})$, that depends on $M$ independent parameters, $\vec{p} = (p_1, p_2, \ldots, p_M)$. In all cases, the fitting method involves finding the optimal values of the parameters that minimize the sum of the squares [2,3]. The chi-square is a similar sum to be minimized, which also includes the data uncertainties.

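Observational data are typically accompanied by the respective uncertainties, $\{\sigma_i\}_{i=1}^{N}$, which are used for normalizing the deviation of the data from the fitted model, $[y_i - f(x_i; \vec{p})]/\sigma_i$. These ratios are summed up to construct the chi-square, using the Euclidean norm $L_2$, that is, by adding the squares,

$$\chi^2(\vec{p}) = \sum_{i=1}^{N} \left[\frac{y_i - f(x_i; \vec{p})}{\sigma_i}\right]^2. \tag{1}$$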

There are four straightforward generalizations of the chi-square. These are described and formulated as follows:

- (1)

When a non-Euclidean norm, $L_q$, is used to sum the vector components $[y_i - f(x_i; \vec{p})]/\sigma_i$, one minimizes the chi-q (instead of the chi-square), defined by [4,5,6]

$$\chi_q(\vec{p}) = \sum_{i=1}^{N} \left|\frac{y_i - f(x_i; \vec{p})}{\sigma_i}\right|^{q}. \tag{2}$$

The optimization involves finding, aside from the optimal values of the fitting parameters, the optimal value of $q$ that characterizes the non-Euclidean norm, $L_q$.

- (2)

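When the observations of the independent variable $x$ have significant uncertainties, $\{\sigma_{x_i}\}_{i=1}^{N}$, the optimization involves minimizing a chi-square that also accounts for these uncertainties [7]; a common form is the effective-variance chi-square,

$$\chi^2(\vec{p}) = \sum_{i=1}^{N} \frac{[y_i - f(x_i; \vec{p})]^2}{\sigma_i^2 + [\partial f(x_i; \vec{p})/\partial x]^2\, \sigma_{x_i}^2}. \tag{3}$$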

- (3)

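When the vector components are characterized by correlations, a non-diagonal covariance matrix, $\mathbf{C} = (C_{ij})$, exists, whose inverse matrix is involved in the construction of the chi-square [8], i.e.,

$$\chi^2(\vec{p}) = \sum_{i=1}^{N}\sum_{j=1}^{N} [y_i - f(x_i; \vec{p})]\,(\mathbf{C}^{-1})_{ij}\,[y_j - f(x_j; \vec{p})]. \tag{4}$$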

- (4)

The relation between degrees of freedom and information is significant in statistics and information theory. Degrees of freedom represent the number of independent values in a dataset that can vary while satisfying statistical constraints; therefore, this number indicates the amount of information available to estimate parameters. Furthermore, when the uncertainties of the observations vary significantly, a potential statistical bias exists in their use as statistical weights (expressed as inverse variances). This bias is reduced through the effective statistical degrees of freedom, $N_e$ (replacing the number of data points, $N$), given by [9]

$$N_e = \frac{\left(\sum_{i=1}^{N} \sigma_i^{-2}\right)^2}{\sum_{i=1}^{N} \sigma_i^{-4}}. \tag{5}$$

This reduces to the number of observations, $N$, when all the uncertainties are equal, $\sigma_i = \sigma$. Then, the effective independent degrees of freedom are given by $N_e - M$, instead of $N - M$, where $M$ is the number of independent parameters to be optimized by the fitting. The effective independent degrees of freedom are used for formulating the reduced chi-square.
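The chi-square of Equation (1) and the generalizations listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the chi-q is assumed to take the $L_q$ form $\sum_i |(y_i - f_i)/\sigma_i|^q$, and the effective number of data points is assumed to follow the inverse-variance (Kish-type) form $N_e = (\sum_i \sigma_i^{-2})^2/\sum_i \sigma_i^{-4}$, which reduces to $N$ for equal uncertainties.

```python
import numpy as np

def chi_square(y, f, sigma):
    """Standard chi-square: sum of squared, uncertainty-normalized residuals."""
    r = (np.asarray(y, float) - np.asarray(f, float)) / np.asarray(sigma, float)
    return float(np.sum(r**2))

def chi_q(y, f, sigma, q):
    """Chi-q for a non-Euclidean L_q norm (assumed form); q = 2 recovers the chi-square."""
    r = (np.asarray(y, float) - np.asarray(f, float)) / np.asarray(sigma, float)
    return float(np.sum(np.abs(r)**q))

def chi_square_cov(y, f, cov):
    """Chi-square with correlated deviations, using the inverse covariance matrix."""
    d = np.asarray(y, float) - np.asarray(f, float)
    # Solve cov @ z = d rather than forming the inverse explicitly.
    return float(d @ np.linalg.solve(np.asarray(cov, float), d))

def effective_dof(sigma):
    """Effective number of data points for inverse-variance weights.

    Assumption: Kish-type form (sum w)^2 / sum w^2 with w = 1/sigma^2.
    """
    w = 1.0 / np.asarray(sigma, float)**2
    return float(np.sum(w)**2 / np.sum(w**2))

# Hypothetical data compared with a constant model f = 1.
y = np.array([1.2, 0.8, 1.1, 0.9])
f = np.ones_like(y)
sigma = np.array([0.1, 0.2, 0.1, 0.2])
print(chi_square(y, f, sigma), chi_q(y, f, sigma, 2), chi_square_cov(y, f, np.diag(sigma**2)))
print(effective_dof(sigma), effective_dof(np.full(4, 0.3)))
```

With $q = 2$, or with a diagonal covariance matrix of variances $\sigma_i^2$, the generalizations reduce to the standard chi-square, and equal uncertainties give $N_e = N$; these limits are a quick consistency check of the formulas.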

All four of the above scenarios are well studied and used in statistical analyses (e.g., see the cited references). In this paper, we focus on the standard form of Equation (1), in order to demonstrate the statistical meaning of the chi-square in characterizing the goodness of fitting.

The statistical model is expressed by a function, $f$, of the independent variable $x$ and of a number of independent parameters, $\vec{p} = (p_1, \ldots, p_M)$, in order to describe the observations $\{y_i\}_{i=1}^{N}$ over $\{x_i\}_{i=1}^{N}$, so that $y_i = f(x_i; \vec{p}) + e_i$, where $e_i$ is the error term that follows a probability distribution (typically assumed to be a normal distribution). A linearly parameterized model refers to a linear relationship of the model's function with the involved parameter(s), not with the variable $x$; e.g., a model function such as $f(x; p_1, p_2) = p_1 + p_2\,x^2$ is linearly parameterized, while a function such as $f(x; p) = e^{p x}$ is nonlinearly parameterized.

In general, the linearly parameterized model function can be written as

$$f(x; \vec{p}) = \sum_{n=1}^{M} p_n\, g_n(x), \tag{6}$$

which involves arbitrary functions of the variable $x$, $\{g_n(x)\}_{n=1}^{M}$.

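Minimization of the constructed chi-square,

$$\chi^2(\vec{p}) = \sum_{i=1}^{N} \sigma_i^{-2} \left[y_i - \sum_{n=1}^{M} p_n\, g_n(x_i)\right]^2, \tag{7}$$

involves finding the optimal values of the fitting parameters, $\vec{p}^* = (p_1^*, \ldots, p_M^*)$, so that

$$\left.\frac{\partial \chi^2(\vec{p})}{\partial p_n}\right|_{\vec{p} = \vec{p}^*} = 0, \quad n = 1, \ldots, M. \tag{8}$$

The minimum value of the chi-square, $\chi^2_{\min} \equiv \chi^2(\vec{p}^*)$, equals the sum of the squared normalized residuals and can provide a measure of the goodness of the fitting. More precisely, this measure is given by the minimum reduced chi-square, that is, the minimum chi-square divided by the independent degrees of freedom, $N - M$,

$$\chi^2_{\rm red} = \frac{\chi^2_{\min}}{N - M}. \tag{9}$$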
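For a linearly parameterized model, the minimization conditions are linear in the parameters (the normal equations), so the optimal parameters, the minimum chi-square, and the reduced chi-square can be computed directly. The sketch below assumes inverse-variance weights $1/\sigma_i^2$ and a user-supplied list of basis functions $g_n(x)$; the function name `fit_linear` and the data are hypothetical.

```python
import numpy as np

def fit_linear(x, y, sigma, basis):
    """Weighted least squares for f(x; p) = sum_n p_n * g_n(x).

    Returns the optimal parameters p*, the minimum chi-square, and the
    reduced chi-square chi2_min / (N - M).
    """
    x, y, sigma = (np.asarray(a, float) for a in (x, y, sigma))
    A = np.column_stack([g(x) for g in basis])  # design matrix, A[i, n] = g_n(x_i)
    w = 1.0 / sigma**2                          # inverse-variance weights
    # Normal equations (A^T W A) p = A^T W y, i.e., d(chi^2)/dp_n = 0 for every n.
    p = np.linalg.solve(A.T @ (w[:, None] * A), A.T @ (w * y))
    chi2_min = float(np.sum(w * (y - A @ p)**2))
    N, M = A.shape
    return p, chi2_min, chi2_min / (N - M)

# Hypothetical data: a straight line f(x) = p1 + p2 * x with small scatter.
x = np.arange(8.0)
y = 2.0 + 0.5 * x + np.array([0.1, -0.1, 0.05, -0.05, 0.1, -0.1, 0.05, -0.05])
sigma = np.full(8, 0.1)
basis = [np.ones_like, lambda t: t]  # g_1(x) = 1, g_2(x) = x
p, chi2_min, chi2_red = fit_linear(x, y, sigma, basis)
print(p, chi2_min, chi2_red)
```

Solving the normal equations with `np.linalg.solve` is adequate for a few well-conditioned basis functions; for ill-conditioned design matrices, a QR-based solver such as `np.linalg.lstsq` applied to the weighted system would be the more robust choice.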

How can the chi-square be used to characterize the goodness of a fitting method? The first thought is that the smaller the minimum chi-square value, the better the fitting. Ideally, the fitting is perfect when the sum of the squared residuals vanishes for some value of the fitting parameters, $\vec{p}^*$; when this occurs, all the vector components of the data-model deviation (fitting residuals) are zero, $y_i - f_i^* = 0$ (where we use the abbreviation $f_i^* \equiv f(x_i; \vec{p}^*)$). Such a fitting would be meaningful if the observation uncertainties were negligible, $\sigma_i \to 0$. On the contrary, when these uncertainties are finite and not negligible, such a perfect fit becomes meaningless; indeed, what is the point of having residuals between the observations and the model tending to zero, $|y_i - f_i^*| \to 0$, if the uncertainties of these observations are significantly larger than these deviations, i.e., $\sigma_i \gg |y_i - f_i^*|$?

The fit, once optimized, is considered good when the respective residuals are neither larger, $|y_i - f_i^*| \gg \sigma_i$ (underfitting), nor smaller, $|y_i - f_i^*| \ll \sigma_i$ (overfitting), than the observation uncertainties, but rather when they have similar values, $|y_i - f_i^*| \approx \sigma_i$. Ideally, each minimized data-model deviation would equal the respective observation uncertainty, $|y_i - f_i^*| = \sigma_i$, rather than zero, in order for the fitting to be considered meaningful. In this case, summing over all the points gives a best chi-square value equal to the number of points, $\chi^2_{\min} = N$, while the average of all the vector components equals unity, $\chi^2_{\min}/N = 1$. This average approximates the reduced chi-square (for $N \gg M$), whose optimal value is thus ~1.
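The idealized case above can be written out as a short worked equation. Writing $f_i^* \equiv f(x_i; \vec{p}^*)$ and assuming every optimized deviation equals its uncertainty, each term of the sum contributes exactly one, so that

$$\chi^2_{\min} = \sum_{i=1}^{N}\left(\frac{y_i - f_i^*}{\sigma_i}\right)^2 = \sum_{i=1}^{N} 1 = N, \qquad \chi^2_{\rm red} = \frac{\chi^2_{\min}}{N - M} = \frac{N}{N - M} = 1 + \frac{M}{N - M} \;\to\; 1 \quad (N \gg M).$$

For instance, eight points fitted by a constant ($N = 8$, $M = 1$) give $\chi^2_{\rm red} = 8/7 \approx 1.14$ in this idealized case.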

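In a more detailed derivation, the reduced chi-square is exactly involved in this interpretation. In particular, let the observation uncertainties be equal, $\sigma_i = \sigma$; then, the minimum chi-square is $\chi^2_{\min} = \sigma^{-2}\sum_{i=1}^{N} (y_i - f_i^*)^2$, and its reduced value is $\chi^2_{\rm red} = \chi^2_{\min}/(N - M)$. The averaged squared residuals, that is, the variance between the data, $\{y_i\}$, and the model values, $\{f_i^*\}$, are determined by $s^2 = (N - M)^{-1}\sum_{i=1}^{N} (y_i - f_i^*)^2$; hence,

$$\chi^2_{\rm red} = \frac{s^2}{\sigma^2}. \tag{10}$$

Therefore, the standard deviation between data and model, $s$, is minimized to its smallest meaningful value, that is, the standard deviation of the observation values, $\sigma$, only when $\chi^2_{\rm red}$ is equal to unity, i.e., $s = \sigma$. This result is fundamental: it is interwoven with the definition of the chi-square, rather than being a simple property of it.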
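Equation (10) can be checked numerically. The sketch below assumes equal uncertainties $\sigma_i = \sigma$, a straight-line model, and synthetic Gaussian data (all hypothetical); it compares the reduced chi-square with the ratio of the data-model variance, computed with $N - M$ degrees of freedom, to $\sigma^2$.

```python
import numpy as np

# Assumption: synthetic straight-line data with a common, known uncertainty sigma.
rng = np.random.default_rng(0)
N, M, sigma = 50, 2, 0.3
x = np.linspace(0.0, 1.0, N)
y = 1.0 + 2.0 * x + rng.normal(0.0, sigma, N)

A = np.column_stack([np.ones(N), x])
p, *_ = np.linalg.lstsq(A, y, rcond=None)  # equal weights: ordinary least squares
res = y - A @ p

chi2_red = np.sum((res / sigma)**2) / (N - M)  # reduced chi-square
s2 = np.sum(res**2) / (N - M)                  # variance between data and model
print(chi2_red, s2 / sigma**2)                 # equal up to rounding
```

Because the scatter of the synthetic data matches the assumed $\sigma$, the reduced chi-square lands near one, i.e., $s \approx \sigma$.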

The concept and interpretation of the chi-square is interwoven with two types of errors characterizing the fitting parameters. The first, the fitting error, comes from the regression, caused by the nonzero deviation between the data and modeled values,

. The second, the propagation error, comes from the existence of the observation data uncertainties, that is, the nonzero value of

. Equation (10) provides insights that the chi-square expresses the ratio between the two types of errors, the fitting over the propagation error. We demonstrate this relation between the errors in

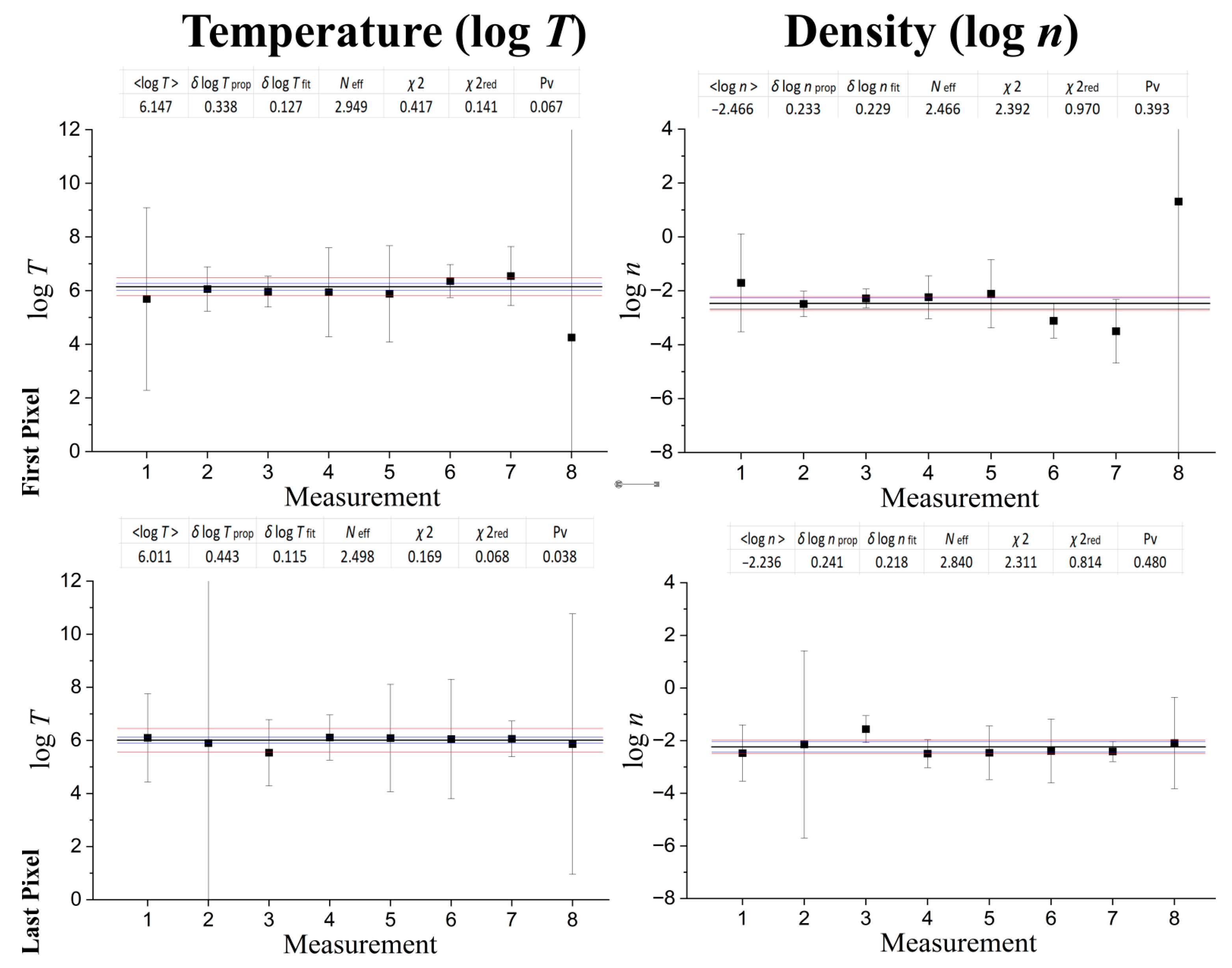

Figure 1.

In particular,

Figure 1 shows the fitting of eight data values with a constant fitting model (a special case of a linear model is shown in detail in

Section 2.1; see: [

10,

11,

12]). This fitting is performed to test the statistical hypothesis that the data values are well represented by a constant. The characterization of the fitting depends on the accompanying observational uncertainties. Too large uncertainties (

Figure 1a) lead to small value of the reduced chi-square (

); if the fitting model is the right choice, then, this result indicates that there is an error overestimation characterizing the observational data. On the other hand, too small uncertainties (

Figure 1c) lead to a large value of the reduced chi-square (

); again, if the fitting model is the right choice, then, this outcome indicates that there is an error underestimation of the observational data. The optimal fitting goodness would be for observation uncertainties similar to the data variation (

Figure 1b), meaning that the two types of errors are about the same, and the reduced chi-square is about one (

).
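The three regimes of Figure 1 can be mimicked with a small sketch. It assumes eight hypothetical data values with a common uncertainty $\sigma$ and a constant model, for which the optimal constant is the mean. As assumed definitions for this equal-uncertainty case, the fitting error is the standard error of the mean computed from the data scatter alone, and the propagation error is $\sigma/\sqrt{N}$, obtained by propagating $\sigma$ through the mean.

```python
import numpy as np

y = np.array([4.8, 5.3, 5.1, 4.6, 5.4, 4.9, 5.2, 4.7])  # hypothetical data values
N, M = len(y), 1
p = y.mean()  # optimal constant for equal uncertainties

# Assumption: fitting error from the data-model scatter only (no sigma involved).
fit_err = np.sqrt(np.sum((y - p)**2) / (N - M) / N)

results = {}
for sigma in (1.0, 0.3, 0.05):  # too large, comparable, too small
    prop_err = sigma / np.sqrt(N)  # propagated from the observation uncertainty
    chi2_red = np.sum(((y - p) / sigma)**2) / (N - M)
    results[sigma] = (chi2_red, (fit_err / prop_err)**2)
    print(f"sigma={sigma}: chi2_red={chi2_red:.2f}, (fit/prop)^2={(fit_err / prop_err)**2:.2f}")
```

In each case the squared error ratio coincides with the reduced chi-square, which falls well below one, near one, and well above one, respectively.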

The statistical behavior of chi-squared values derived from model residuals has been thoroughly understood for over a century, thanks to the foundational work of R.A. Fisher [13,14]. Fisher not only performed the necessary algebraic derivations but also rigorously established the associated probability distributions. Building on this classical framework, the current study introduces a fresh algebraic and intuitive perspective on reduced chi-squared statistics.

The purpose of this paper is to present an alternative perspective on the statistical meaning of the reduced chi-square as a measure of the goodness of fitting methods, and to demonstrate it with several examples and applications. In this perspective, the reduced chi-square is given by the (squared) ratio between the two types of errors, the fitting over the propagation error, which holds for any linearly parameterized fitting. We show this characterization of the chi-square as follows. We first work with the traditional examples of the mono-parametric fitting of a constant and the bi-parametric fitting of a linear model; then, we provide the proof for the general case of any linearly multi-parameterized model. We also present a counterexample, showing that this characterization of the chi-square is not generally true for nonlinearly parameterized fitting. Furthermore, we focus on the nature of the two types of errors and show their independent origins. We also examine how the observation uncertainties affect the formulations of the fitting and propagation errors and of the reduced chi-square. The developments of this paper regarding the chi-square formulation and characterization are applied to the plasma protons of the heliosheath; we find that the acceptable characterization of the fitting goodness is given by about one order of magnitude of the reduced chi-square around its optimal unity value.

The paper is organized as follows: In Section 2, we show both the cases of linearly and nonlinearly parameterized fitting models. We start with a linearly mono-parametric model, then work with a bi-parametric model, and end up with the general case of a multi-parametric fitting model with an arbitrary number of independent parameters. In the same section, we show that the reduced chi-square cannot be used for nonlinearly parameterized fitting models; we show this separately for nonlinearly mono-parameterized and multi-parameterized models. In Section 3, we provide an example application from the plasma protons in the heliosphere. Finally, in Section 4, we summarize the conclusions and findings of this paper.

3. Discussion: Application in the Outer Heliosphere

We use the reduced chi-square to characterize the goodness of the fitting involved in the method that determines the thermodynamic parameters of the inner heliosheath, that is, the distant outer shell of our heliosphere. In addition, we use these datasets to compare two measures related to the estimated chi-square: the reduced chi-square and the respective p-value.

The Interstellar Boundary Explorer (IBEX) mission observes energetic neutral atom (ENA) emissions, constructing images of the whole sky every six months [15]. The persistence of these maps and their origins have been studied in detail [16,17]. Using these datasets, Livadiotis et al. [10] derived the sky maps of the temperature, $T$, density, $n$, and other thermodynamic quantities that characterize the plasma protons in the inner heliosheath (see also [18,19,20]). These authors also showed the negative correlation between the values of temperature and density, which originates from the thermodynamic processes of the plasma.

The sky maps of temperature and density consist of 1800 pixels, each with angular dimensions of 6° × 6°, while each map's grid in longitude and latitude is divided into 60 × 30 pixels. The method used by [11] derives eight (statistically independent) measurements of the logarithms of the temperature and density of each pixel. This is based on fitting kappa distributions to the plasma protons, that is, the distribution functions of particle velocities linked to the thermodynamics of space plasma particle populations [21,22,23,24,25,26,27,28,29,30]. Once these eight values (of temperature or of density) are well fitted by a constant, the respective pixel is assigned this value of the thermodynamic parameter.

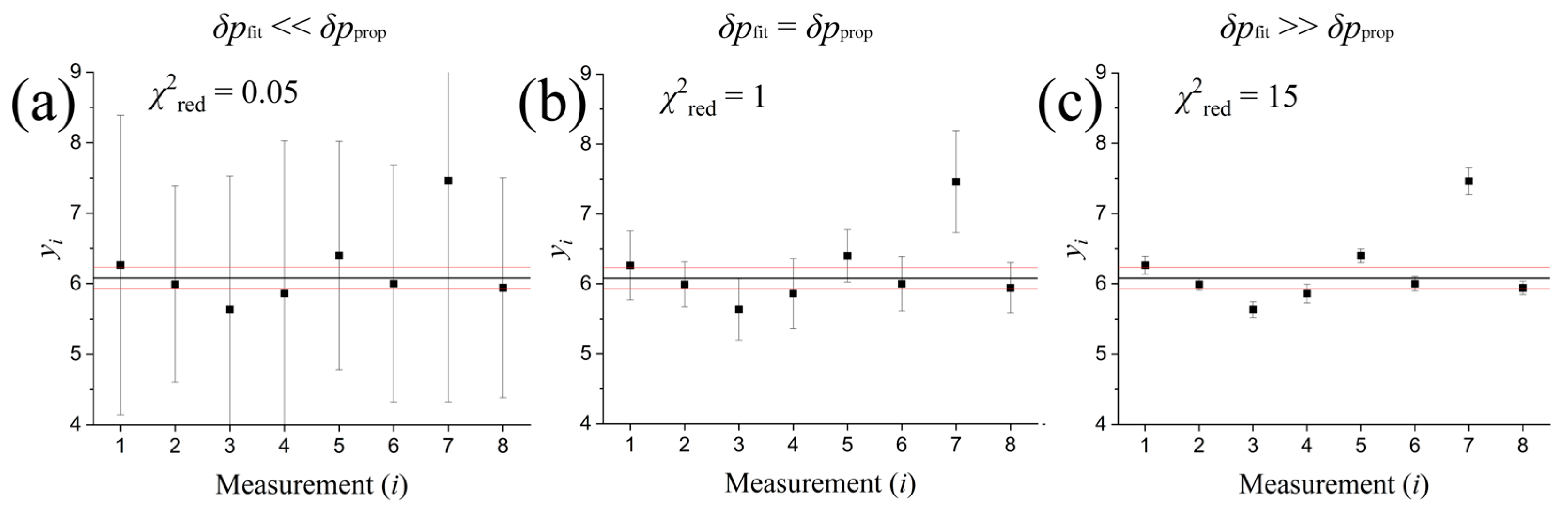

Figure 2 shows two examples of these eight measurements of temperature and density values. As shown in Section 2, the optimal value of the fitted constant is the weighted mean. Both types of errors are plotted together with the weighted mean.

Figure 2.

Examples of the eight measurements of temperature and density values for two pixels of the sky maps that characterize the plasma protons in the inner heliosheath. The weighted average (black) is plotted together with the ± deviations caused by the fitting errors (blue) and the propagation errors (red). Tables show statistical details for each fit.


The two errors were calculated as follows:

and

for some small value of $\varepsilon$ (as $\varepsilon$ tends to zero, the resultant errors become independent of $\varepsilon$).
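Because the optimal constant is the weighted mean, both errors can also be evaluated numerically. The following sketch is one possible scheme, not necessarily the exact formulas used above: the propagation error is obtained by perturbing each data value by a small $\varepsilon$ and propagating $\sigma_i$ through the resulting finite-difference derivatives, while the fitting error is assumed to be the weighted scatter of the data around the mean per unit total weight. The data values and the helper name `weighted_mean` are hypothetical.

```python
import numpy as np

def weighted_mean(y, sigma):
    """Optimal constant for inverse-variance weights (hypothetical helper)."""
    w = 1.0 / sigma**2
    return np.sum(w * y) / np.sum(w)

y = np.array([3.9, 4.3, 4.1, 3.7, 4.4, 4.0, 4.2, 3.8])  # hypothetical log-values
sigma = np.array([0.2, 0.3, 0.25, 0.2, 0.35, 0.3, 0.2, 0.25])
N, M, eps = len(y), 1, 1e-6
p = weighted_mean(y, sigma)

# Propagation error: sqrt(sum_i (dp/dy_i)^2 sigma_i^2), derivatives by finite differences.
grad = np.array([(weighted_mean(y + eps * np.eye(N)[i], sigma) - p) / eps for i in range(N)])
prop_err = np.sqrt(np.sum((grad * sigma)**2))

# Assumption: fitting error from the weighted residual variance per degree of freedom.
w = 1.0 / sigma**2
fit_err = np.sqrt(np.sum(w * (y - p)**2) / (N - M) / np.sum(w))

chi2_red = np.sum(((y - p) / sigma)**2) / (N - M)
print(p, fit_err, prop_err, (fit_err / prop_err)**2, chi2_red)
```

Since the weighted mean is linear in the data, the finite-difference derivatives are exact up to rounding, the propagation error reduces to $(\sum_i \sigma_i^{-2})^{-1/2}$, and the squared error ratio equals the reduced chi-square.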

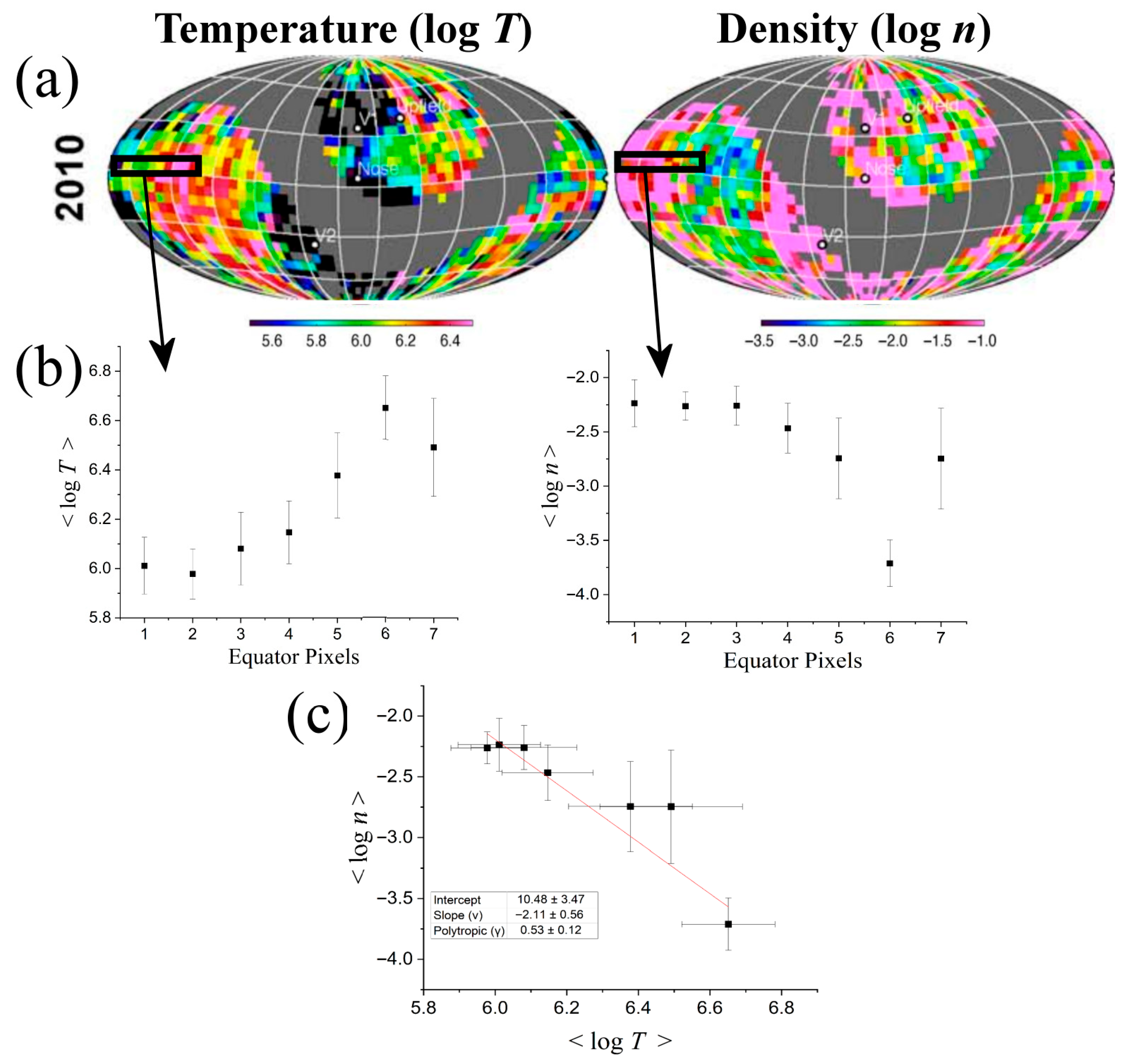

We repeat the above fitting procedure for eight neighboring pixels in the sky (taken from the annual maps of year 2010; see details in [10]). Figure 3a shows these neighboring pixels in the sky maps of temperature and density. Then, in Figure 3b, we plot the derived temperature, density, and respective uncertainties (corresponding to the larger of the two error types). Finally, in Figure 3c, we show their mutual relationship by plotting the density against the temperature (logarithmic values). This is described by a negative correlation, originating from a polytropic process with a sub-isothermal polytropic index, $\gamma$ (i.e., $\gamma < 1$). A polytropic process is described by a power-law relation between temperature and density (i.e., $T \propto n^{\gamma - 1}$), or between thermal pressure and density (i.e., $P \propto n^{\gamma}$), along a streamline of the flow. In particular, we find a slope $\nu = d\ln n / d\ln T$ (that is, the exponent in $n \propto T^{\nu}$) around $\nu \sim -2$, but it is characterized by better statistics when excluding the last point, leading to $\nu \sim -1$ (see also [10,30]).
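The link between the fitted slope and the polytropic index can be sketched as follows, assuming the slope refers to $n \propto T^{\nu}$. Since $T \propto n^{\gamma - 1}$, the relation $\ln n = \nu \ln T + {\rm const}$ has slope $\nu = 1/(\gamma - 1)$, i.e., $\gamma = 1 + 1/\nu$; thus $\nu \sim -1$ corresponds to $\gamma \sim 0$ and $\nu \sim -2$ to $\gamma \sim 0.5$, both sub-isothermal. The data below are hypothetical and only illustrate a weighted fit of the logarithms; they are not the IBEX values.

```python
import numpy as np

def polytropic_index(nu):
    """gamma from the slope nu of ln(n) vs ln(T).

    Assumption: n ∝ T**nu together with T ∝ n**(gamma - 1), so gamma = 1 + 1/nu.
    """
    return 1.0 + 1.0 / nu

# Hypothetical (ln T, ln n) pairs with uncertainties in ln n, for illustration only.
lnT = np.array([0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7])
lnn = -1.0 * lnT + np.array([0.02, -0.01, 0.0, 0.01, -0.02, 0.01, 0.0, -0.01])
sig = np.full_like(lnn, 0.02)

# Weighted straight-line fit; np.polyfit expects weights 1/sigma (not 1/sigma**2).
nu, c = np.polyfit(lnT, lnn, 1, w=1.0 / sig)
print(nu, polytropic_index(nu), polytropic_index(-2.0))
```

Because $\gamma = 1 + 1/\nu$ is steep near small $|\nu|$, a modest change in the fitted slope (for example, from excluding one point) can shift the inferred polytropic index appreciably.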

Using the p-value method for evaluating the goodness of fitting [13], we compare the estimated chi-square value, $\chi^2_{\rm est}$, with any possible chi-square value larger than the estimated one, $\chi^2 \geq \chi^2_{\rm est}$, by deriving the exact probability $P(\chi^2 \geq \chi^2_{\rm est})$; this determines the p-value. The p-value is obtained by integrating the chi-square distribution, $\rho(\chi^2; D)$, that is, the distribution of all possible $\chi^2$ values (parameterized by the degrees of freedom $D$). Therefore, the probability of having a chi-square value, $\chi^2$, larger than the estimated one, $\chi^2_{\rm est}$, is given by the complementary cumulative chi-square distribution. The respective probability, the p-value, equals

$$P_{\rm v} = \int_{\chi^2_{\rm est}}^{\infty} \rho(\chi^2; D)\, d\chi^2.$$
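The p-value integral above is the survival function (complementary cumulative distribution) of the chi-square distribution, so it can be evaluated with `scipy.stats.chi2.sf`. A minimal sketch; the helper name `p_value` is hypothetical, and the example assumes eight values fitted by a constant, so $D = 7$.

```python
from scipy.stats import chi2

def p_value(chi2_est, dof):
    """P(chi^2 >= chi2_est) for a chi-square distribution with dof degrees of freedom."""
    return chi2.sf(chi2_est, dof)

# Assumption: D = 7 (eight values, one fitted constant); chi2_est = chi2_red * D.
D = 7
for chi2_red in (0.2, 1.0, 3.0):
    print(chi2_red, p_value(chi2_red * D, D))
```

Using `sf` rather than `1 - cdf` avoids loss of precision for very small p-values.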

Conventionally, the larger the p-value, the better the goodness of the fitting. However, a p-value larger than 0.5 corresponds to $\chi^2_{\rm est}$ below the median of the chi-square distribution, which lies slightly below its mean, $D$; hence, it implies $\chi^2_{\rm est} < D$, i.e., $\chi^2_{\rm red} < 1$. Larger p-values, up to $P_{\rm v} = 1$, correspond to smaller chi-squares, down to $\chi^2_{\rm est} \sim 0$. Thus, an increasing p-value above the threshold of 0.5 cannot lead to a better fitting; rather, it leads to a worse fit, similar to a decreasing $\chi^2_{\rm red} < 1$. On the other hand, a fit with a p-value smaller than the significance level of ~0.05 is typically rejected. Using these limits of the p-value, we find the respective limits of the reduced chi-square.
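The statement that $P_{\rm v} > 0.5$ implies $\chi^2_{\rm red} < 1$ rests on the median of the chi-square distribution lying below its mean $D$. This can be checked with SciPy; the sampled values of $D$ are arbitrary choices for illustration.

```python
from scipy.stats import chi2

# The p-value equals 0.5 at the median of the chi-square distribution,
# which lies slightly below its mean D; hence P_v > 0.5 implies chi2_red < 1.
for D in (1, 3, 7, 30):
    med = chi2.median(D)
    print(D, med, med / D, chi2.sf(med, D))
```

The ratio of median to $D$ approaches one as $D$ grows, so the threshold $P_{\rm v} = 0.5$ and the condition $\chi^2_{\rm red} = 1$ nearly coincide for many degrees of freedom.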

In Figure 4, we demonstrate the relationship between the reduced chi-square, $\chi^2_{\rm red}$, and the respective p-value, $P_{\rm v}$. We plot 16 pairs of values ($\chi^2_{\rm red}$, $P_{\rm v}$), one pair for each of the 16 fits performed (temperature and density fits, for each of the eight pixels). The deduced relationship between the two measures can be used to deduce the extreme acceptable values of the reduced chi-square; indeed, the best reduced chi-square value that characterizes the goodness of a fitting is one, but how far from it can a lower goodness still be accepted? The correspondence with the acceptable p-value of 0.05 provides an answer to this question.

In Figure 4a, we plot all ($\chi^2_{\rm red}$, $P_{\rm v}$) pairs and observe a linear behavior between the two measures, which is well described for the low chi-square values and the corresponding p-values. Nevertheless, in order to also capture the relationship for all the points, including those with larger chi-square values, in Figure 4b we plot the values of $1 - 2P_{\rm v}$ as a function of modified chi-square values, which range in the interval [0, 1] (similarly for both axes). We find that the excluded p-values ($P_{\rm v} < 0.05$) correspond to reduced chi-square values below a lower limit or above an upper limit around unity (of course, these limits are not universal, but correspond to the examined datasets).
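For comparison, the theoretical chi-square distribution alone gives reference limits. The sketch below assumes $D = 7$ (eight values fitted by a constant) and treats both tails symmetrically through $|1 - 2P_{\rm v}|$, so that $P_{\rm v} < 0.05$ and $P_{\rm v} > 0.95$ both give $|1 - 2P_{\rm v}| > 0.9$. This symmetric treatment is an assumption for illustration, and the resulting limits are not the empirical values derived from the 16 fits.

```python
from scipy.stats import chi2

# Assumption: D = 7 and a symmetric two-tail criterion |1 - 2 P_v| > 0.9.
D = 7
upper = chi2.isf(0.05, D) / D  # chi2_red above which P_v < 0.05
lower = chi2.isf(0.95, D) / D  # chi2_red below which P_v > 0.95
print(f"{lower:.2f} < chi2_red < {upper:.2f}")
```

For $D = 7$ this gives reference limits of about 0.31 and 2.0, an interval spanning somewhat less than one order of magnitude around unity; the limits widen as $D$ decreases.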

4. Conclusions

The paper showed the relationship between the two types of errors that characterize the optimal fitting parameters, the fitting and propagation errors. While the fitting error estimates the uncertainty originating from the variation in the observational data around the model, the propagation error comes from the raw uncertainties of the observational data. The squared ratio of the two errors determines the value of the reduced chi-square.

Large deviations between the propagation and fitting errors lead to a reduced chi-square significantly different from one, corresponding to low fitting goodness. Specifically, large uncertainties lead to small values of the reduced chi-square ($\chi^2_{\rm red} \ll 1$), indicating a possible error overestimation; small uncertainties lead to large values of the reduced chi-square ($\chi^2_{\rm red} \gg 1$), indicating an error underestimation of the observation data. The optimal fitting goodness is obtained for observation uncertainties similar to the data variation, namely, when the two types of errors are about the same and the reduced chi-square is about one ($\chi^2_{\rm red} \approx 1$).

The relationship between the two types of errors and the chi-square was shown for mono-parametric, bi-parametric, and, in general, multi-parametric statistical models. In the multi-parametric case, it is interesting that the ratio is universal, that is, independent of the respective parameter: the squared ratio of the fitting error to the propagation error of the parameter $p_n$ equals the reduced chi-square for any $n = 1, \ldots, M$, as shown in Equation (68).
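The parameter independence of the error ratio can be illustrated for a straight-line fit with unequal uncertainties. In this sketch (hypothetical data), the propagation errors are obtained by propagating the $\sigma_i$ through the linear estimator $\vec{p}^* = (A^{T}WA)^{-1}A^{T}W\vec{y}$, and the fitting errors are assumed to be the weighted residual variance per degree of freedom times the diagonal of $(A^{T}WA)^{-1}$; under these assumed definitions, the squared ratio equals $\chi^2_{\rm red}$ for both parameters.

```python
import numpy as np

# Hypothetical straight-line data with unequal uncertainties.
x = np.arange(10.0)
y = 1.0 + 0.3 * x + np.array([0.2, -0.1, 0.15, -0.2, 0.05, 0.1, -0.15, 0.2, -0.05, -0.1])
sigma = np.linspace(0.1, 0.3, 10)
A = np.column_stack([np.ones_like(x), x])
W = np.diag(1.0 / sigma**2)
N, M = A.shape

L = np.linalg.solve(A.T @ W @ A, A.T @ W)  # p* = L @ y (linear estimator)
p = L @ y
res = y - A @ p

# Propagation errors: dp_n/dy_i = L[n, i], combined with the data uncertainties.
prop_err = np.sqrt(np.sum((L * sigma)**2, axis=1))
# Assumption: fitting errors from the weighted residual variance per degree of freedom.
s2w = (res @ W @ res) / (N - M)
fit_err = np.sqrt(s2w * np.diag(np.linalg.inv(A.T @ W @ A)))

chi2_red = np.sum((res / sigma)**2) / (N - M)
print((fit_err / prop_err)**2, chi2_red)  # both ratios equal chi2_red
```

The propagated covariance $L\,W^{-1}L^{T}$ simplifies to $(A^{T}WA)^{-1}$, which is why the same factor $\chi^2_{\rm red}$ appears for every parameter.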

In addition to this theoretical development, we presented a counterexample of this characterization of the chi-square, showing that it is not generally true for a nonlinearly parameterized fitting. Some alternative approaches for assessing nonlinear fits include bootstrap inference [31], Bayesian frameworks [32,33], and the technique of averaging the parameter's optimal values derived from solving by pairs [11].

Finally, the chi-square characterization of the fitting goodness was applied to the datasets of temperature and density of the plasma protons in the inner heliosheath, the outer shell of our heliosphere. A previously applied method derived eight measurements of the logarithms of temperature and density for each pixel of the sky map [10]. Once these eight values (of temperature or of density) are well fitted by a constant, the respective pixel is assigned this value of the thermodynamic parameter. We determined the two types of errors of this analysis; the respective reduced chi-square values were compared with the respective p-values for completeness.