Abstract

Coastal litter is a severe environmental issue affecting marine ecosystems and coastal communities in Thailand, and plastic pollution is one of its most urgent components. Every year, millions of tons of plastic waste enter the ocean, where items such as bottles, cans, and plastic packaging can take hundreds of years to degrade, threatening marine life through ingestion, entanglement, and habitat destruction. To address this issue, we deploy drones equipped with high-resolution cameras and sensors to capture detailed coastal imagery for assessing litter distribution. This study presents an AI-driven coastal litter detection system built on edge computing and 5G communication networks. An AI edge server running YOLOv8 and a recurrent neural network (RNN) processes the drone's video to detect and classify litter types such as bottles, cans, and plastics in real time, while high-speed 5G links carry the video stream from the drone to the server for efficient monitoring. We evaluated detection performance at flight altitudes of 5 m, 7 m, and 10 m above ground in terms of accuracy, precision, recall, and F1-score. The system performs best at 5 m, with a ground sampling distance (GSD) of 0.98 cm/pixel, yielding F1-scores of 98% for cans, 96% for plastics, and 95% for bottles. This approach enables real-time monitoring of coastal areas, contributing to marine ecosystem conservation and environmental sustainability.

1. Introduction

The marine pollution problem is a pressing environmental issue characterized by the contamination of Thailand’s oceans and coastal areas by various pollutants []. Addressing the marine pollution problem requires concerted efforts at the local, national, and global levels. Strategies to mitigate marine pollution include improving waste management practices, reducing plastic consumption and production, implementing pollution control measures, promoting sustainable fisheries and aquaculture, and raising public awareness about the importance of protecting marine ecosystems. Collaboration among governments, industries, communities, and environmental organizations is essential to effectively address this complex and multifaceted environmental challenge.

One of the most common forms of marine pollution is coastal litter, which comes from various sources, including land-based activities, maritime industries, recreational activities, stormwater runoff, and improper waste disposal. Common items found as coastal litter include plastic bottles, bags, fishing gear, cigarette butts, food packaging, and industrial waste []. Coastal litter poses significant threats to marine life, with seabirds, turtles, marine mammals, and fish being particularly vulnerable. Animals can become entangled in or ingest litter, leading to injury, suffocation, starvation, and death. Microplastics, small particles resulting from the breakdown of larger plastic items, can be mistaken for food and ingested by marine organisms, potentially causing toxicity and disrupting ecosystems. Coastal litter also degrades the natural beauty of coastal areas, diminishes their recreational value, and harms coastal ecosystems. Littered beaches and shorelines can deter tourists, hurt local economies that depend on tourism, and damage fragile coastal habitats such as coral reefs, mangroves, and dune systems [].

Managing coastal litter requires technology to survey it, assess risks, and predict litter volumes in advance. Remote sensing and aerial mapping technologies are viable options [,,], typically using drones with high-resolution sensors or cameras to detect coastal litter. The literature from 2020 to 2023 has pursued several directions for managing coastal litter, including remote sensing [,,,,,,], aerial mapping [,,,,,,], AI edge computing [,,,,,,,,,], and 5G communication networks [,,,,,,]. In this context, AI refers to computer systems trained on labeled image datasets to recognize different types of coastal litter and to make accurate decisions algorithmically. The core of AI technology is machine learning; machine learning models built from many stacked neural network layers are referred to as deep learning. AI has started to be integrated with drones for coastal litter detection. However, drone-based real-time litter detection is still limited by data transmission delays, AI system compatibility, and real-time processing capability. Real-time detection requires rapid transmission of high-resolution video from the drone to an AI processing unit, typically over a network such as 5G.

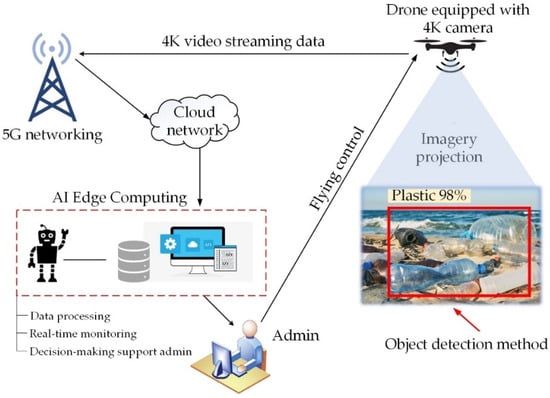

This paper presents a conceptual framework for drone-based coastal litter detection that leverages AI edge computing and 5G communication networks, as shown in Figure 1. The drones carry 4K high-resolution cameras, and AI edge computing models at the ground station process their video to distinguish between different types of coastal litter and count the number of items. The system achieves a detection accuracy exceeding 90% and operates in real time, with data transmitted over a 5G communication network.

Figure 1.

The conceptual framework of real-time drone coastal litter imagery detection.

The main contributions of this paper are as follows:

- We combined the YOLOv8 detector with a recurrent neural network (RNN) algorithm to build and validate AI edge computing models.

- We implemented the Anaconda platform, incorporating tools such as OpenCV, YOLOv8, TensorFlow, LabelImg, PyCharm, and OBS, to support the development of AI edge computing and enable data integration with a 5G communication network.

- Flight altitudes and GSD values were considered key parameters for evaluating potential drone platforms capable of detecting bottles, cans, and plastics.

- Finally, we presented evaluation metrics to assess the drone’s capabilities, including accuracy, precision, recall, and F1-Score.
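For reference, the four metrics follow their standard definitions from true-positive (TP), false-positive (FP), false-negative (FN), and true-negative (TN) counts: precision = TP/(TP + FP), recall = TP/(TP + FN), F1 = 2PR/(P + R), and accuracy = (TP + TN)/(TP + FP + FN + TN). The sketch below is illustrative only; the counts are hypothetical, and treating TN as 0 for object detection is an assumption, since how accuracy was computed is not specified in this section.

```python
def detection_metrics(tp: int, fp: int, fn: int, tn: int = 0) -> dict:
    """Accuracy, precision, recall and F1 from confusion counts.

    Object detectors rarely produce a meaningful true-negative count, so tn
    defaults to 0, in which case accuracy reduces to TP / (TP + FP + FN).
    """
    predicted = tp + fp  # everything the detector reported
    actual = tp + fn     # everything that was really on the beach
    precision = tp / predicted if predicted else 0.0
    recall = tp / actual if actual else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for one test image: 12 correct boxes,
# 1 false alarm, 1 missed item.
print(detection_metrics(tp=12, fp=1, fn=1))
```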

2. Related Works

2.1. Remote Sensing

Techniques for detecting marine litter using remote sensing have been extensively reviewed in []. These studies categorize methods based on platforms (e.g., satellites, aircraft, drones), sensors, spectral and spatial resolution, and litter size. The majority of research utilizes satellite-based multispectral and hyperspectral optical sensors to address marine litter. Aircraft, with high spatial resolution (3 m) and optical sensor ranges of 400–2500 nm, are identified as the most effective solution for detecting marine litter in the sea. While satellites and aircraft are well-suited for covering large areas, drones are more applicable for localized monitoring, particularly on beaches. Future research, as noted in [], emphasizes the potential for drone applications in sea surface marine litter detection.

In [], several remote sensing techniques were reviewed for detecting floating marine litter. While satellite imaging offers large-scale coverage, its effectiveness is often constrained by environmental factors such as sunlight variability, cloud cover, and shadows. These challenges make low-altitude platforms like aircraft and drones more effective, as they provide greater proximity and control over image clarity and resolution.

UAVs have been increasingly utilized for monitoring marine plastic litter in coastal areas []. Since 2011, various studies have proposed UAVs and autonomous underwater vehicles (AUVs) as tools for detecting floating and beached debris. UAVs are recognized as a cost-effective solution for monitoring coastal plastic pollution, while AUVs hold promise for revolutionizing floating debris detection.

Studies in [] highlighted the efficiency of UAVs for mapping and monitoring marine litter, including both beached and floating debris. UAV-based surveys for beached locations have seen more advanced development compared to those for floating debris. These surveys are more effective than traditional techniques, offering integration with other drone-based studies and significantly improving the understanding of litter dynamics in marine environments.

Thermal infrared sensing, as presented in [], has shown promise for monitoring macro-plastic litter floating on the water surface. UAVs equipped with thermal sensors performed better during nighttime operations than in daylight, enabling improved detection of floating plastics at sea.

Machine learning (ML) algorithms have further enhanced marine litter detection. In [], K-means clustering was combined with the Light Gradient Boosting Machine (LightGBM) to distinguish floating objects from shallow water. The combined approach detected floating objects offshore with improved accuracy during daytime operations.

In [], unmanned aerial systems (UAS) equipped with high-resolution RGB cameras were reviewed for floating litter surveys in coastal areas. UAS imagery was processed using three distinct methods: (i) manual visual inspection and annotation, (ii) automated pixel-based detection through color analysis, and (iii) ML-based object detection using convolutional neural networks (CNNs). The results showcased the scalability of ML-based approaches for assessing floating litter contamination.

Advanced remote sensing methods leveraging satellites, UAVs, aircraft, and UASs are employed for monitoring coastal litter, encompassing both beached and floating debris. These platforms utilize sensors such as multispectral, thermal infrared, and RGB cameras, while ML algorithms play a critical role in automating detection and processing. The growing adoption of UAVs and UASs underscores their transformative potential for localized, efficient, and scalable marine litter monitoring.

2.2. Aerial Mapping

In [], aerial drones were used to assess beach litter, focusing on distinguishing litter from non-litter items. The study found that the optimal drone operating height for maximum resolution was 5 m, suitable for detecting litter items no smaller than 10 cm. Flying at heights between 5 m and 10 m on sunny days provided the best balance between resolution and coverage.

The work in [] explored the classification of litter types using UAS images. Litter was categorized by size and color, with plastics accounting for 60% of detected litter, while undefined items made up 20%. Other materials, such as wood and textiles, were less than 10%. White-colored items were the most commonly identified at 30%. The study highlighted the benefits of litter mapping, such as defining target areas before drone flights and improving survey accuracy. A flight altitude of 10 m on sunny days was recommended to optimize image resolution.

In [], the accuracy of marine litter detection by non-expert citizens (students) and experts was compared using drone images. The study demonstrated that citizen science programs, when paired with appropriate training and tools, could effectively support drone-based litter detection. Conducted at the Arno River estuary within a Marine Protected Area (MPA), the study used drones flying at 15 m for 10 min intervals. Plastics, primarily white and larger than 5 cm, were the most frequently detected litter type.

The use of multispectral imaging in macro-litter mapping was presented in []. Multispectral drone photos supported the automated categorization of standard litter materials and improved the detection of semi-buried objects. Bands such as blue, green, red, red edge (RedEdge), and near-infrared (NIR) enhanced litter categorization, outperforming manual detection by expert operators.

The potential for standardizing drone-based beach litter surveys was discussed in []. An analysis of approximately 15 studies revealed challenges in proposing unified flight parameters due to variations in coastal environments, equipment, and survey objectives. Drones demonstrated strong capabilities for grid mapping, facilitating the classification of litter density and type. This method is a viable alternative to traditional visual surveys, offering a new approach to understanding litter dynamics and supporting the development of coastal litter models over time.
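Grid mapping of this kind reduces to binning each detected item into a cell and dividing the count by the cell area. The sketch below assumes detections have already been georeferenced into a local metric (x, y) frame; the function name, the 2 m cell size, and the sample points are hypothetical and are not taken from the reviewed studies.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def litter_density_grid(points: Iterable[Tuple[float, float]],
                        cell_size_m: float) -> Dict[Tuple[int, int], float]:
    """Bin item positions into square cells and return items per square metre.

    points: (x, y) positions in metres in a local ground frame.
    Keys are (column, row) cell indices; empty cells are omitted.
    """
    counts = Counter(
        (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        for x, y in points
    )
    cell_area = cell_size_m ** 2
    return {cell: n / cell_area for cell, n in counts.items()}


# Four hypothetical detections mapped onto a 2 m x 2 m grid.
items = [(0.5, 0.5), (1.5, 0.2), (1.9, 1.9), (3.2, 0.1)]
print(litter_density_grid(items, cell_size_m=2.0))
```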

Anthropogenic marine litter detection through drone mapping and ground truthing was discussed in []. In [], the authors emphasized the importance of selecting appropriate flight altitudes and camera setups. A GSD between 0.5 cm/pixel and 1.25 cm/pixel was recommended, with lower GSD values enabling detailed litter classification and higher values suitable for coarser surveys.

Aerial mapping using drones has emerged as a transformative approach for detecting and classifying marine and beach litter. Optimal flight altitudes between 5 m and 10 m enhance detection accuracy and resolution. Multispectral imaging improves categorization, especially for semi-buried objects. Selecting suitable GSD values and flight parameters is critical for balancing detection detail with survey efficiency. Standardizing drone-based surveys remains challenging but essential for consistent and scalable environmental monitoring.

2.3. AI Edge Computing

In [], a multi-class neural network was developed to automatically detect various types of plastic litter on beaches, including bottles, fishing ropes, octopus pots, and fragments. The system achieved an average F-score of 49%, with per-category scores ranging from 66% for octopus pots and 56% for fishing ropes down to 39% for plastic fragments and 34% for plastic bottles. These results were obtained with a drone flying at an altitude of 30 m with a GSD of 0.9 cm/pixel. The study demonstrated that multi-class neural networks can improve litter categorization compared with traditional manual screening methods.

In [], five convolutional neural networks (CNNs)—VGG16, VGG19, DenseNet121, DenseNet169, and DenseNet201—were tested for automatically detecting and mapping marine litter in coastal areas. High-resolution UAS images from diverse beach environments were used to train and evaluate the models. Among these, VGG19 demonstrated optimal performance in simpler scenarios, achieving an F-score of 0.77 and lower error metrics, such as MAE (1.39) and RMSE (1.92). The study also explored integrating citizen science data with automated detection to improve results.

In [], the authors presented a CNN-based approach for semantic segmentation and recognition of marine litter using drones at various elevations. Drone images were captured at flying heights of 10 m, 15 m, 20 m, and 45 m to assess performance in beach litter monitoring. Results indicated that a 10 m flight altitude was optimal for the new dataset of beach litter objects, yielding a precision of 0.83 and an F-score of 0.96. For altitudes above 10 m, the authors noted that detection performance depends on image resolution, with a 1000 × 1000 pixel input size proving suboptimal. In a related study on pixel-level image classification [], an input size of 1200 × 1600 pixels was ideal for detecting beach litter using semantic segmentation methods.

At present, deep learning models are central to drone-based marine litter detection. Tools such as YOLOv5 [] and CNN algorithms [] are among the most powerful methods for detecting beach litter from drone imagery because of their accuracy and highly automated feature extraction. In [], the authors used YOLOv5 to detect litter objects in previously recorded drone survey footage rather than in real time. The validated model achieved a precision of 0.695 and an F1-score of 0.235 at a flight altitude of 60 m above sea level. The success of YOLO in deep learning has led researchers to propose new models based on it for many classical applications [,]. Since its release in 2023, YOLOv8 has offered higher detection accuracy and faster inference than YOLOv5 and YOLOv7. In [], YOLOv8 was adapted as a small-object detector for UAV photography scenarios, and the results showed that it can precisely detect small objects in UAV scenes. Although UAV-YOLOv8 is well suited to UAV object detection, real-time monitoring still requires a communication network, so cellular or Wi-Fi networks are crucial for drone real-time monitoring applications.

2.4. Fifth-Generation Communication Networks

Real-time object detection using UAV remote sensing requires three key components: an AI model, edge computing, and reliable communication links. As presented in [], traditional data streaming often relies on cloud networks and edge computing servers. However, uploading data to the cloud can face bandwidth limitations, leading to delays. To overcome these challenges, 5G communication modules have been proposed, as they offer reliable bandwidth and improved data transfer rates, enabling faster and more efficient processing.

In [], the integration of 5G components with AI modules was explored to enhance UAV-based search and rescue missions. Deep neural networks (DNNs) were used for object detection, with 5G providing high-speed data streaming. The flexibility of 5G enables robust links for real-time video streaming and supports ultra-reliable low-latency communication (URLLC) for UAV control. Simulations showed that the AI model achieved video transmission at 30 FPS at 17.7 Mbps, underscoring the synergy between AI and 5G for real-time operations.

In [], researchers addressed the limitations of onboard edge computing, which can lead to longer computation times and inefficient UAV power usage. They proposed offloading deep learning tasks, such as segmentation and object detection, to 5G-enabled edge servers or ground stations. Using the MobileNetV2 model for segmentation and YOLOv5 for object detection, the system filtered noise on the edge server. Results showed that with a 10 MHz bandwidth allocation, MobileNetV2 achieved a signal-to-noise ratio (SNR) of 20 dB for segmentation, while YOLOv5 achieved an SNR of 10 dB for object detection. These findings highlight the reliability of 5G for task offloading and noise reduction during real-time UAV operations.
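The offloading trade-off can be made concrete with a simple latency comparison: offloading pays off when uplink transfer plus edge inference (plus returning the results) is faster than running the model onboard. The sketch below ignores energy, queuing, and radio variability; only the 17.7 Mbps at 30 FPS figure comes from the text above, while the function name, uplink rate, and inference times are hypothetical.

```python
from typing import Tuple


def offload_decision(frame_bits: float, uplink_bps: float,
                     edge_infer_s: float, onboard_infer_s: float,
                     result_return_s: float = 0.0) -> Tuple[bool, float]:
    """Return (offload?, offloaded latency in seconds) for one frame.

    Offloaded latency = uplink transfer + edge inference + result return.
    Offloading wins when that total is below the onboard inference time.
    """
    offload_s = frame_bits / uplink_bps + edge_infer_s + result_return_s
    return offload_s < onboard_infer_s, offload_s


# One frame of a 17.7 Mbit/s, 30 FPS stream is about 590 kbit.
frame_bits = 17.7e6 / 30
# Uplink rate and both inference times below are hypothetical.
choice, latency = offload_decision(frame_bits, uplink_bps=50e6,
                                   edge_infer_s=0.010, onboard_infer_s=0.120)
print(choice, round(latency, 4))
```

With a slow uplink the same comparison flips in favour of onboard processing, which is why the available bandwidth is the deciding factor.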

The implementation of 5G networks represents a transformative advancement for drone-based environmental monitoring, particularly in addressing coastal marine pollution. As noted in [,], 5G high-speed data transmission and seamless integration with AI models significantly enhance drone capabilities for litter detection. This integration supports the broader adoption of drones as a service (DaaS) and paves the way for future advancements toward 6G technologies. While the benefits of 5G integration are substantial, challenges remain in ensuring reliable data collection and processing across diverse environmental conditions. Overcoming these obstacles is essential to fully leveraging the potential of 5G networks for real-time coastal monitoring. Successfully addressing these challenges will allow 5G to play a pivotal role in global efforts to combat coastal pollution and preserve marine ecosystems.

In [], the authors addressed the challenge of minimizing the age of information (AoI) in UAV-aided mobile edge computing networks (MECN), where UAVs provide communication, computation, data collection, and control services. However, a LoRaWAN link to the edge computing server offers insufficient bandwidth, because LoRa is designed primarily for low data rates, typically up to a few kilobits per second. This makes it unsuitable for transmitting the high-resolution images or video that real-time litter detection with AI models requires. The limited data rate cannot sustain continuous video feeds or the high-frequency image capture needed by object detection models such as YOLOv8. 5G technology, which supports high-speed, low-latency data transmission, is therefore far more appropriate for such applications, as it can handle the continuous video streaming and complex data processing inherent in real-time AI-driven monitoring systems.
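A quick calculation shows the size of the gap. The nominal LoRa physical-layer bit rate is commonly given as Rb = SF × (BW / 2^SF) × 4 / (4 + CR) for spreading factor SF, bandwidth BW, and coding-rate index CR. The sketch compares that rate at 125 kHz with the 17.7 Mbps video stream reported in the 5G study above; it ignores protocol overhead and regional duty-cycle limits, both of which would widen the gap further.

```python
def lora_bitrate_bps(sf: int, bandwidth_hz: float, cr: int) -> float:
    """Nominal LoRa PHY bit rate, Rb = SF * (BW / 2**SF) * 4 / (4 + CR).

    sf: spreading factor (7-12); cr: coding-rate index 1-4 (4/5 ... 4/8).
    """
    return sf * (bandwidth_hz / 2 ** sf) * 4 / (4 + cr)


video_bps = 17.7e6  # 30 FPS stream rate reported in the 5G/UAV study above
sf7_rate = lora_bitrate_bps(sf=7, bandwidth_hz=125e3, cr=1)
sf12_rate = lora_bitrate_bps(sf=12, bandwidth_hz=125e3, cr=1)
print(f"LoRa at 125 kHz: SF7 {sf7_rate:.0f} bit/s, SF12 {sf12_rate:.0f} bit/s")
print(f"The video stream needs roughly {video_bps / sf7_rate:.0f} times the SF7 rate")
```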

This paper presents a novel, integrated system for real-time coastal litter detection, leveraging cutting-edge AI edge computing, UAV technology, and 5G communication networks. The unique contributions of this work distinguish it from the related works reviewed. While previous studies have explored UAV-based litter detection and AI modeling, this paper uniquely combines AI edge computing and 5G communication networks to enable real-time detection. This approach eliminates the latency and bandwidth challenges commonly encountered in cloud-based solutions.

3. Methodology

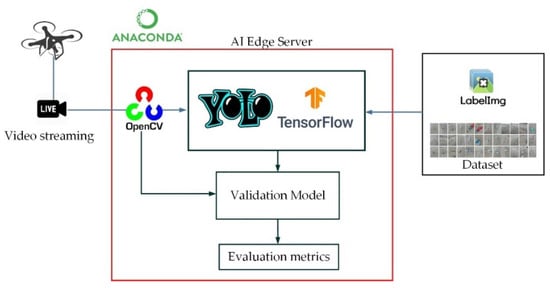

The AI edge computing framework was developed using Python 3.7 on the Anaconda platform. First, OpenCV 4.4.0 is installed to enable video input streaming. Next, YOLOv8 and LabelImg are installed for training and testing. Once the training data are obtained, the objects of interest are isolated (annotated) in each image of the dataset. TensorFlow is then installed as the library used for model validation. PyCharm is used to evaluate performance metrics, and the real-time video monitoring is displayed on a desktop application using Open Broadcaster Software (OBS) 30.2.3. The framework of real-time drone coastal litter imagery detection using AI edge server processing is illustrated in Figure 2.
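The processing chain described above (video input, detection, counting, display) can be sketched in a library-agnostic way. The detector is passed in as a function so that the sketch runs without OpenCV or YOLOv8 installed; the detection record format, the 0.5 confidence threshold, and the names `count_litter` and `fake_detect` are hypothetical and do not come from the authors' code.

```python
from collections import Counter
from typing import Callable, Iterable, List, Tuple

# Hypothetical detection record: (class_name, confidence, (x1, y1, x2, y2)).
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def count_litter(frames: Iterable[object],
                 detect: Callable[[object], List[Detection]],
                 conf_threshold: float = 0.5) -> List[Counter]:
    """Run a detector on every frame and return per-frame class counts.

    `detect` stands in for the YOLOv8 model on the edge server. In a live
    setup the frames would be read with OpenCV and the counts overlaid on
    the stream that OBS displays; both are left out so the sketch runs alone.
    """
    per_frame = []
    for frame in frames:
        kept = [d for d in detect(frame) if d[1] >= conf_threshold]
        per_frame.append(Counter(name for name, _, _ in kept))
    return per_frame


def fake_detect(frame: object) -> List[Detection]:
    """Hypothetical stand-in detector returning fixed boxes."""
    return [("bottle", 0.91, (10, 10, 40, 80)),
            ("can", 0.88, (60, 20, 80, 45)),
            ("bottle", 0.30, (90, 90, 120, 150))]  # low confidence, dropped


print(count_litter(range(2), fake_detect))
```

Keeping the detector behind a plain function boundary makes it easy to swap in a real model wrapper later without touching the counting logic.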

Figure 2.

The framework of AI edge computing.

3.1. YOLOv8

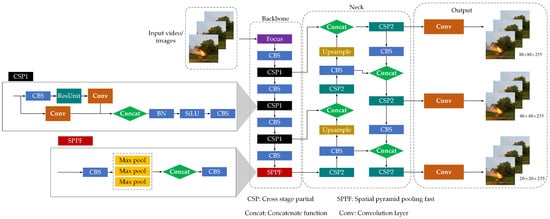

YOLOv8 is a state-of-the-art object detection method that predicts at three detection scales, which suits coastal litter imagery containing items of different sizes. Here, the video stream acquired by the drone is read with OpenCV and fed into YOLOv8 as input. YOLOv8 is an optimized object detector that addresses issues related to the loss function, attention mechanism, and multiscale feature fusion. Its network architecture consists primarily of a backbone, a neck, and an output (head), as illustrated in Figure 3.
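In the standard Ultralytics YOLOv8 configuration, the three detection scales correspond to feature maps downsampled by strides of 8, 16, and 32. The sketch below counts the resulting grid cells, each of which yields one candidate prediction in the anchor-free head; it assumes a square input and the default 640-pixel size, since the input size used in this work is not stated here. The function names are hypothetical.

```python
from typing import List, Sequence


def head_grid_sizes(img_size: int = 640,
                    strides: Sequence[int] = (8, 16, 32)) -> List[int]:
    """Side length of the square feature map at each detection scale."""
    return [img_size // s for s in strides]


def candidate_predictions(img_size: int = 640,
                          strides: Sequence[int] = (8, 16, 32)) -> int:
    """Anchor-free head: one box/class prediction per grid cell per scale."""
    return sum(g * g for g in head_grid_sizes(img_size, strides))


for size in (640, 1280):
    print(size, head_grid_sizes(size), candidate_predictions(size))
```

The stride-8 map contributes most of the candidates, which is why it matters most for small items such as caps and cans.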

Figure 3.

The network architecture of the YOLOv8 model.

3.1.1. Backbone

The backbone of YOLOv8 extracts and processes visual features from input images efficiently, making it suitable for real-time object detection. It follows a convolutional neural network (CNN) structure optimized for speed and accuracy. Its key components are the CBS module (convolution, batch normalization, and SiLU activation); the C2f module, which replaces the cross-stage partial (CSP) C3 module of YOLOv5; and the spatial pyramid pooling fast (SPPF) module, which pools the deepest feature map at several effective receptive-field sizes and concatenates the results to aggregate multi-scale context without changing the spatial size. Overall, the backbone balances computational efficiency against detection accuracy, making it well suited to real-time object detection applications.
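The SPPF idea can be illustrated on a single-channel map: one k × k max pool with stride 1 is applied three times in a row, and the input plus the three pooled maps are concatenated. Chaining pools this way reproduces the 5, 9, and 13 window sizes of the older SPP block at lower cost. The sketch uses plain Python lists instead of tensors and omits the 1 × 1 convolutions before and after the pooling; k = 5 follows the usual YOLOv8 setting and is an assumption here.

```python
from typing import List

Grid = List[List[float]]


def max_pool_same(x: Grid, k: int) -> Grid:
    """k x k max pooling, stride 1, output the same size as the input.

    Clipping the window at the border is equivalent to padding with -inf.
    """
    h, w, r = len(x), len(x[0]), k // 2
    return [
        [
            max(x[a][b]
                for a in range(max(0, i - r), min(h, i + r + 1))
                for b in range(max(0, j - r), min(w, j + r + 1)))
            for j in range(w)
        ]
        for i in range(h)
    ]


def sppf_branches(x: Grid, k: int = 5) -> List[Grid]:
    """Core of SPPF: three chained k x k pools, kept alongside the input.

    In YOLOv8 these four maps are concatenated along the channel axis;
    the surrounding 1x1 convolutions are omitted here.
    """
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return [x, y1, y2, y3]


x = [[float((i * 7 + j * 13) % 17) for j in range(12)] for i in range(12)]
branches = sppf_branches(x)
# Two chained 5x5 pools see a 9x9 window; three see 13x13 (SPP's 5/9/13).
print(branches[2] == max_pool_same(x, 9), branches[3] == max_pool_same(x, 13))
```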

3.1.2. Neck

The neck stage in the YOLOv8 architecture plays a crucial role in enhancing the feature maps produced by the backbone. The neck typically consists of layers such as feature pyramid networks (FPN) or concatenate functions (concat), which merge features from multiple backbone network levels. This multi-scale feature fusion helps detect objects of varying sizes by combining fine-grained details from lower layers with high-level semantic information from deeper layers. The refined feature maps produced by the neck stage are then passed to the output stage, where the final object detection predictions, including bounding boxes and class probabilities, are made.
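One top-down fusion step of the neck can be sketched as nearest-neighbour upsampling of a coarse, deep feature map followed by channel-wise concatenation with a finer, shallower one. This is a toy illustration on nested lists with made-up channel counts; the learned C2f blocks that YOLOv8 applies after each concatenation, and the bottom-up path, are omitted, and the function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def upsample_nearest_2x(x: Grid) -> Grid:
    """Nearest-neighbour 2x upsampling: each value fills a 2 x 2 block."""
    out: Grid = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def top_down_fuse(deep: List[Grid], shallow: List[Grid]) -> List[Grid]:
    """Upsample deep (coarse) channels and concatenate them with shallow ones."""
    up = [upsample_nearest_2x(c) for c in deep]
    if (len(up[0]), len(up[0][0])) != (len(shallow[0]), len(shallow[0][0])):
        raise ValueError("spatial sizes must match after upsampling")
    return up + shallow  # channel-wise concatenation, like torch.cat(dim=1)


deep = [[[1.0, 2.0], [3.0, 4.0]]]                            # 1 channel, 2 x 2
shallow = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]  # 2 channels, 4 x 4
fused = top_down_fuse(deep, shallow)
print(len(fused), fused[0])
```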

3.1.3. Output

The output stage of YOLOv8 is usually called the head, and it is where the final object detection predictions are made. It takes the refined feature maps from the neck and outputs bounding box coordinates and class probabilities for each candidate object in the image. Unlike YOLOv5, YOLOv8 uses an anchor-free, decoupled head without a separate objectness score; overlapping candidates are then filtered by non-maximum suppression. The head is designed to be efficient and precise, ensuring that YOLOv8 can perform real-time object detection accurately.
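Because the head produces many overlapping candidate boxes, they are normally filtered with non-maximum suppression (NMS) before objects are counted. The sketch below is a minimal, class-agnostic greedy NMS in plain Python; YOLOv8's own post-processing is implemented differently (vectorized and, by default, per class), and the 0.7 IoU threshold and sample boxes are illustrative choices.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.7) -> List[int]:
    """Greedy NMS: keep the best box, drop others overlapping it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.90, 0.80, 0.70]
print(nms(boxes, scores))  # the second box overlaps the first with IoU 0.81
```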

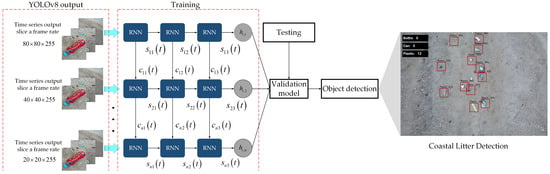

3.2. The RNN Algorithm

To mitigate gradient errors in the backpropagation neural network (BPNN), a recurrent neural network (RNN) is applied to control the time-series outputs by slicing the frame rate for YOLOv8 and YOLOv5. Figure 4 illustrates the network architecture of a classifier using the RNN algorithm. The output expressions of the RNN layers are given by:

$$h_t = \sigma\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right)$$

$$y_t = \mathrm{FC}(h_t) = W_{hy}\, h_t + b_y$$

where $\sigma$ represents a Sigmoid function, $\mathrm{FC}(\cdot)$ denotes the operation of a fully connected layer, and $b_h$ and $b_y$ are bias vectors. The weight matrices $W_{xh}$, $W_{hh}$, and $W_{hy}$ represent the connections from the input layer to the hidden layer, from the previous hidden layer to the next hidden layer, and from the current hidden layer to the output layer, respectively. Here, $h_t$ and $h_{t-1}$ denote the current and previous hidden layers, respectively, and $x_t$ is the input at time step $t$.

Figure 4.

The network of a classifier using the RNN algorithm.

The RNN memory cell predicts the output time series from YOLOv8, allowing specific segments of the detection sequence to be observed. With RNN layers deployed, the AI edge computing model can track coastal litter detections continuously and efficiently.
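A minimal forward pass of an Elman-style RNN makes the recurrence concrete: the hidden state h_t = σ(W_xh x_t + W_hh h_{t-1} + b_h) mixes the current input with the previous state, and a fully connected read-out y_t = W_hy h_t + b_y produces each output. This is a sketch under assumptions: the weights are untrained and hypothetical, the inputs are hypothetical per-frame counts of bottles, cans, and plastics, and the exact way the authors feed YOLOv8 outputs into their RNN is not specified here.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def elman_rnn(xs: Sequence[Sequence[float]], W_xh: Matrix, W_hh: Matrix,
              b_h: Sequence[float], W_hy: Matrix,
              b_y: Sequence[float]) -> List[List[float]]:
    """Elman RNN forward pass; returns the output vector at every time step.

    h_t = sigmoid(W_xh x_t + W_hh h_{t-1} + b_h)
    y_t = W_hy h_t + b_y          (fully connected read-out)
    """
    h = [0.0] * len(b_h)  # h_0 = 0
    ys = []
    for x in xs:
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [sigmoid(p) for p in pre]
        ys.append([a + c for a, c in zip(matvec(W_hy, h), b_y)])
    return ys


# Hypothetical input: per-frame (bottle, can, plastic) counts from a detector.
frames = [[3.0, 1.0, 0.0], [2.0, 1.0, 1.0], [3.0, 0.0, 1.0]]
# Hypothetical, untrained weights: 3 inputs -> 2 hidden units -> 1 output.
W_xh = [[0.05, 0.05, 0.05], [-0.05, 0.02, 0.10]]
W_hh = [[0.50, 0.00], [0.00, 0.50]]
print(elman_rnn(frames, W_xh, W_hh, [0.0, 0.0], [[1.0, -1.0]], [0.0]))
```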

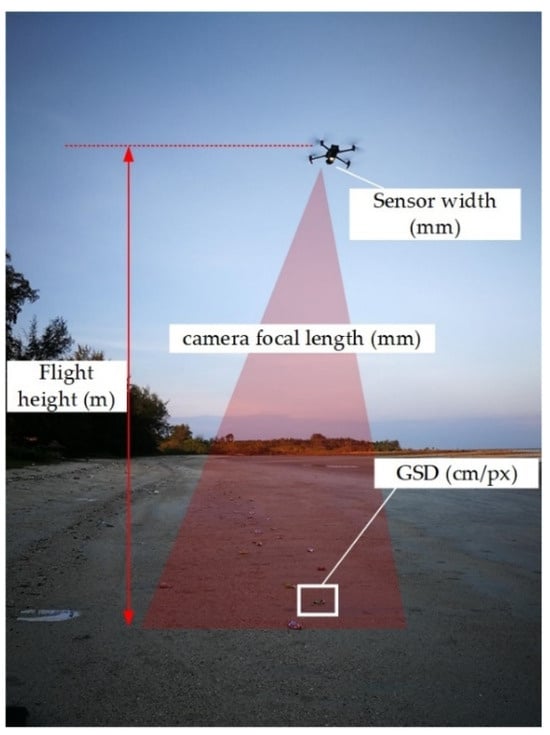

3.3. Ground Sampling Distance

The ground sampling distance (GSD) is determined by the drone flight altitude and camera properties. Suitable flight altitudes and image resolutions for drone-based litter monitoring on coasts and rivers were described in []. During drone-based coastal litter detection, the camera gimbal is commonly set to −90 degrees so that the camera points straight down and captures images perpendicular to the ground. The flight altitude and camera properties then determine the pixel spatial resolution of the drone images, as shown in Figure 5. The GSD is therefore given by

$$\mathrm{GSD} = \frac{H \times S_w \times 100}{F \times I_w}$$

where $H$ is the flight altitude (m), $S_w$ is the sensor width (mm), $I_w$ is the image width (pixels), $F$ is the camera focal length (mm), and the factor 100 converts the result to cm/pixel.

Figure 5.

Ground sampling distance (GSD) of drone coastal litter monitoring.
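The GSD relation, GSD = (H × S_w × 100) / (F × I_w), translates directly into code. The camera parameters in the example are hypothetical values for a generic 1-inch sensor and are not the camera used in this study; the sketch mainly shows that, for a fixed camera, GSD grows linearly with altitude, so flying at 10 m gives twice the GSD of flying at 5 m.

```python
def gsd_cm_per_px(altitude_m: float, sensor_width_mm: float,
                  image_width_px: int, focal_length_mm: float) -> float:
    """GSD = (H * Sw * 100) / (F * imW) in cm/pixel (the 100 converts m to cm)."""
    return altitude_m * sensor_width_mm * 100.0 / (focal_length_mm * image_width_px)


# Hypothetical camera, NOT the one used in the experiments:
# 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
for h in (5.0, 7.0, 10.0):
    print(f"{h:>4.0f} m -> {gsd_cm_per_px(h, 13.2, 5472, 8.8):.3f} cm/pixel")
```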

The GSD defines the image resolution and the minimum detectable size of litter items. The smallest detectable object size can be expressed as

$$S_{\min} = n \times \mathrm{GSD}$$

where $S_{\min}$ (cm) is the minimum detectable object size, $n$ (pixels) is a constant indicating the number of pixels needed to represent an object, and the GSD (cm/pixel) is the ground distance spanned by a single pixel.

In practice, the parameter $n$ is not defined a priori and generally varies between 2 and 5 pixels. Consequently, the suitable GSD range is between 0.5 cm/pixel and 1.25 cm/pixel, with $n$ = 5 pixels providing better detection of litter items than the other values [].
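The relation S_min = n × GSD also runs in reverse: dividing a target item size by n gives the coarsest usable GSD. For example, an item of about 2.5 cm spans 5 pixels at 0.5 cm/pixel and 2 pixels at 1.25 cm/pixel, which matches the recommended range above. Applied to the 0.98 cm/pixel GSD reported for the 5 m flights, n = 5 gives a minimum detectable size of about 4.9 cm. The function names below are hypothetical.

```python
from typing import Tuple


def min_detectable_size_cm(gsd_cm_per_px: float, n_px: int) -> float:
    """Smallest resolvable item: S_min = n * GSD."""
    return n_px * gsd_cm_per_px


def gsd_range_for_item(item_cm: float, n_min: int = 2,
                       n_max: int = 5) -> Tuple[float, float]:
    """GSD bounds at which the item spans between n_max and n_min pixels."""
    return item_cm / n_max, item_cm / n_min


print(gsd_range_for_item(2.5))
print(min_detectable_size_cm(0.98, 5), min_detectable_size_cm(0.98, 2))
```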

4. Experimental Setup

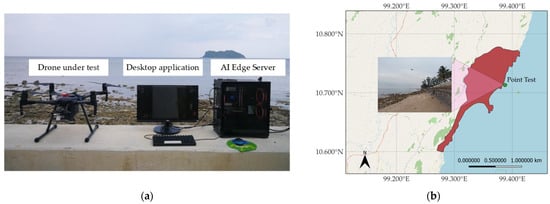

Figure 6 illustrates real-time drone coastal litter detection. The DJI Matrice 200 series was used, with a flight time of 25 min, and it was equipped with a 4K camera and a 5G transmission module for video streaming. Figure 7a presents the equipment used in the experimental setup, including the drone, desktop application, and AI edge server computing. Figure 7b displays the location map of KMITL Prince of Chumphon, Pathio District, Chumphon, Thailand.

Figure 6.

Real-time drone coastal litter detection.

Figure 7.

Experimental tools and location: (a) Real-time drone coastal litter detection equipment; (b) Location map of point test.

The accuracy of AI edge computing depends heavily on the datasets used for training and testing. The datasets were divided into three categories of litter images and videos: cans, bottles, and plastics. Figure 8 shows sample images collected from drone videos and photos at 2048 × 3072 pixels. The dataset consisted of 1289 photos and 13 videos of cans, 1029 photos and 12 videos of bottles, and 1324 photos and 14 videos of plastics, as listed in Table 1. The data were split into 80% for training and 20% for testing. A learning rate of 0.001 was used, which lets the model learn efficiently without overshooting the optimal weights; it was fine-tuned over several trial runs to ensure stable convergence and high detection accuracy. A batch size of 16 was selected, providing sufficient data per iteration while keeping GPU memory usage manageable and speeding up training without compromising accuracy. The model was trained for 200 epochs, a count chosen to let the model generalize well on the training and testing datasets and achieve high accuracy without overfitting.
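The split and hyperparameters above can be written down as a short, reproducible configuration. The sketch assumes the 80/20 split was made per photo within each class (the text does not say) and uses integer arithmetic so that any remainder goes to the test set. The dictionary keys follow Ultralytics training argument names (`epochs`, `batch`, `lr0`, `optimizer`); the optimizer is not stated in the paper, and SGD is an assumption made because recent Ultralytics versions ignore `lr0` when `optimizer` is left on "auto".

```python
import random
from typing import List, Sequence, Tuple


def split_80_20(items: Sequence, seed: int = 0) -> Tuple[List, List]:
    """Shuffle deterministically and split into 80 % train / 20 % test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 4 // 5  # integer floor, remainder goes to test
    return shuffled[:cut], shuffled[cut:]


photos = {"cans": 1289, "bottles": 1029, "plastics": 1324}  # counts from the text
splits = {cls: split_80_20(range(n)) for cls, n in photos.items()}

# Hyperparameters from the text, keyed with Ultralytics-style argument names.
# "optimizer" is an assumption: the paper does not state which was used.
train_args = {"epochs": 200, "batch": 16, "lr0": 0.001, "optimizer": "SGD"}

for cls, (train, test) in splits.items():
    print(cls, len(train), len(test))
```

Because consecutive frames from one video are nearly identical, splitting by video rather than by frame would keep near-duplicate images out of both sets at once.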

Figure 8.

Samples of the coastal litter items dataset for training: cans, bottles, and plastics.

Table 1.

Number of dataset categories.

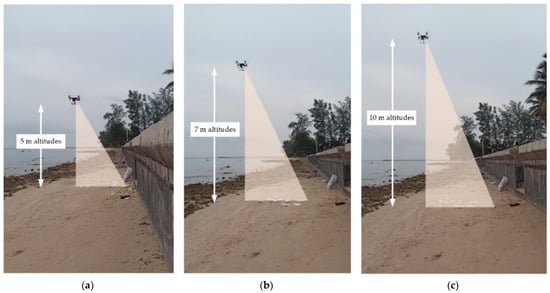

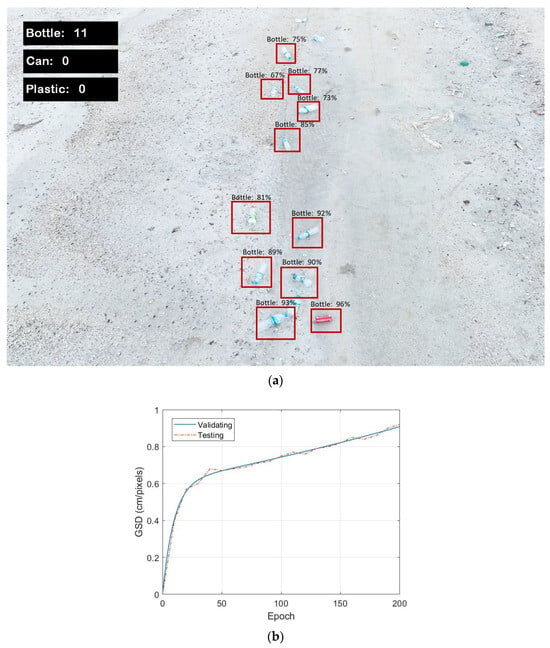

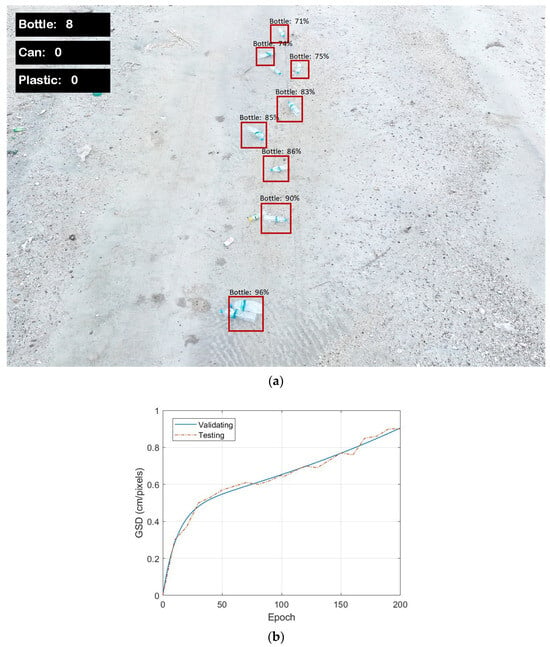

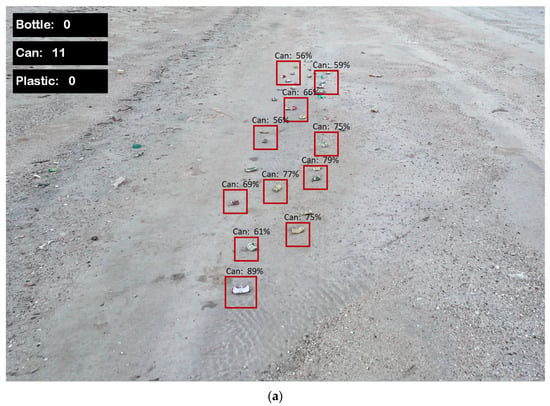

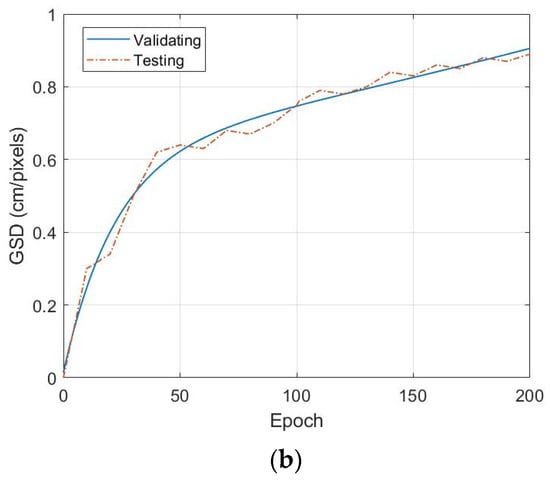

Figure 9a–c illustrates the experimental setup for real-time drone-based coastal litter detection at altitudes of 5 m, 7 m, and 10 m, respectively. Initially, bottle litter detection was performed as the UAV hovered at 5 m, gradually increasing to 10 m. The results, including real-time video monitoring and the performance of GSD versus epochs, are shown in Figure 10, Figure 11 and Figure 12. Table 2 provides the specifications of the AI edge server and drone equipment.

Figure 9.

Experimental setup: (a) Drone flight at 5 m altitude; (b) Drone flight at 7 m altitude; (c) Drone flight at 10 m altitude.

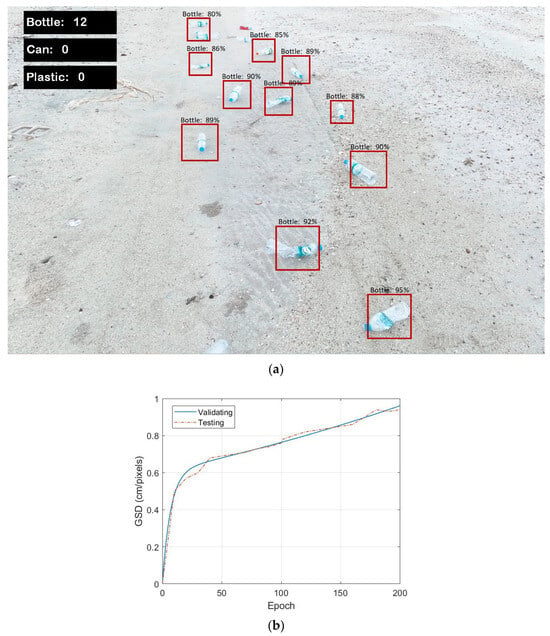

Figure 10.

Bottle litter detection at 5 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

Figure 11.

Bottle litter detection at 7 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

Figure 12.

Bottle litter detection at 10 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

Table 2.

The specifications of the AI edge server and drone equipment.

5. Results and Discussion

5.1. Experimental Results

This section presents the experimental results of real-time drone coastal litter detection by investigating bottle, can, and plastic litter.

Figure 10a shows the real-time video monitoring of bottle litter detection at an altitude of 5 m. The monitoring, displayed via the OBS interface, shows 12 bottles counted by the testing model, with each detection's confidence percentage displayed inside its bounding box. Figure 10b illustrates the relationship between ground sampling distance (GSD) and epochs. The GSD ranges from 0 to 1 cm/pixel as the number of epochs increases up to 200. The blue line represents the validation model curve and the red line the testing model curve; the two curves are similar. Notably, a breakpoint occurs at 30 epochs, where the GSD is approximately 0.6 cm/pixel. As the number of epochs increases, the GSD rises, reaching 0.96 cm/pixel at 200 epochs.

Figure 11a presents the real-time video monitoring from the OBS screenshot of bottle litter detection at an altitude of 7 m. The AI edge computing system successfully identifies 11 bottles, with an optimal GSD of 0.96 cm/pixel for the testing curve, as shown in Figure 11b. At an altitude of 10 m, the AI edge computing system detects 8 bottles as shown in Figure 12a. The breakpoint in the GSD curve occurs at 0.50 cm/pixel and 30 Epochs, as shown in Figure 12b. However, the GSD remains optimal at 0.96 cm/pixel at the maximum number of Epochs.

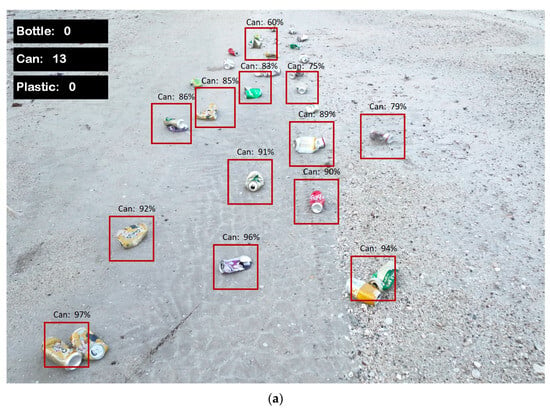

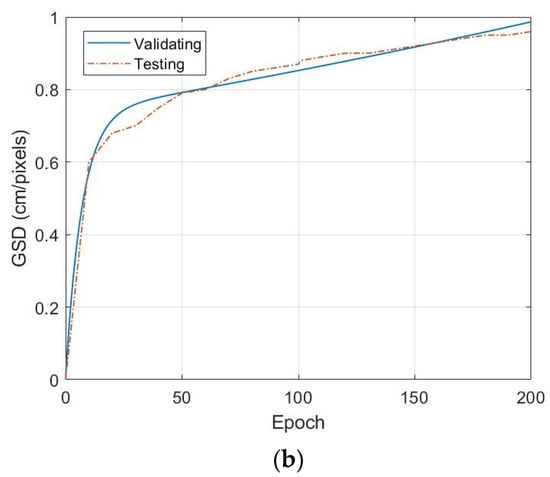

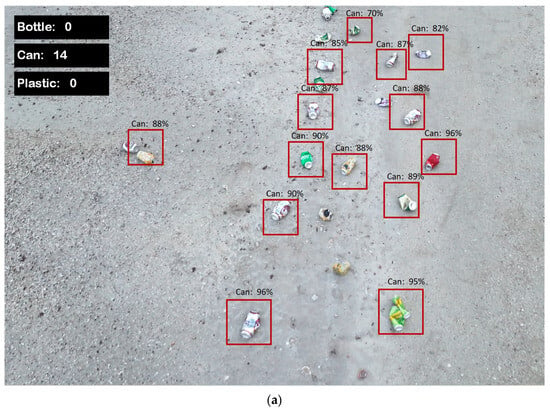

Figure 13a shows the real-time video monitoring from the OBS screenshot of can litter detection at an altitude of 5 m. The AI edge computing system identifies 13 cans on the beach, each marked with a bounding box. Detection accuracy depends on both the GSD and the number of epochs. As shown in Figure 13b, the testing model curve indicates that the GSD is optimal at 0.98 cm/pixel at the maximum number of epochs. The performance of AI edge computing is strongly influenced by image resolution and the clarity of the video stream. Notably, can litter is detected more accurately than bottle litter owing to the improved GSD clarity.

Figure 13.

Can litter detection at 5 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

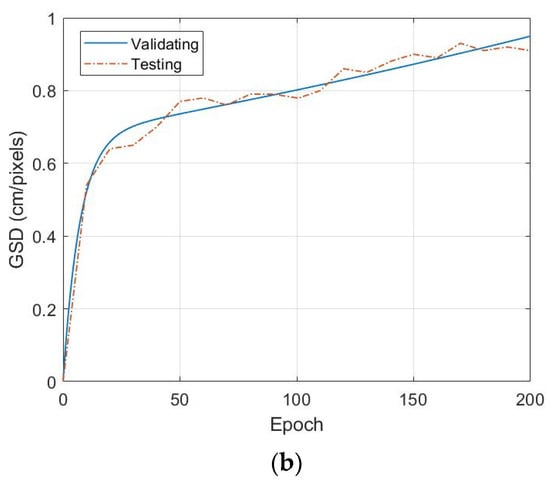

Figure 14a presents the real-time video monitoring from the OBS live stream at an altitude of 7 m, where AI edge computing detects 14 cans on the beach. The AI edge computing system, which uses YOLOv8 and an RNN, validates the GSD and thereby improves the precision of detection by the drone camera along the coastline. Figure 14b displays the GSD for both the validation and testing models; the testing GSD reaches an optimal value of 0.96 cm/pixel at the end of testing.

Figure 14.

Can litter detection at 7 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

Figure 15a shows the real-time video monitoring from the OBS live stream for can litter detection at an altitude of 10 m, where AI edge computing identifies 11 cans on the beach. Figure 15b displays the GSD, which reaches an optimal value of 0.91 cm/pixel after 200 Epochs. In this case, the breakpoint of the GSD curve is slightly higher, likely due to the drone’s altitude and camera focus. Nevertheless, the AI edge server still detects litter on the beach successfully.

Figure 15.

Can litter detection at 10 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

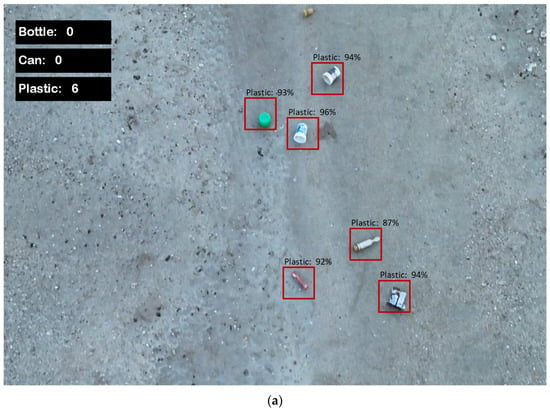

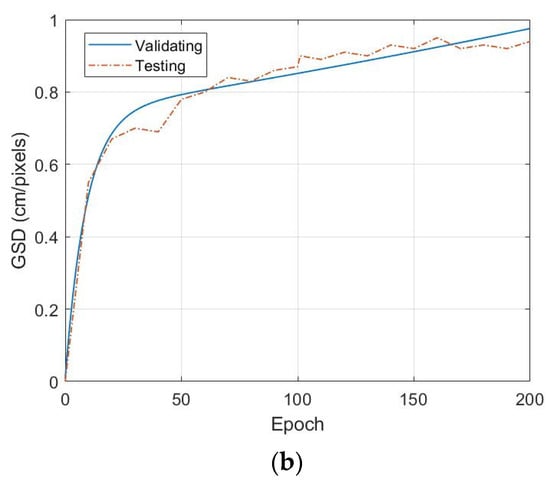

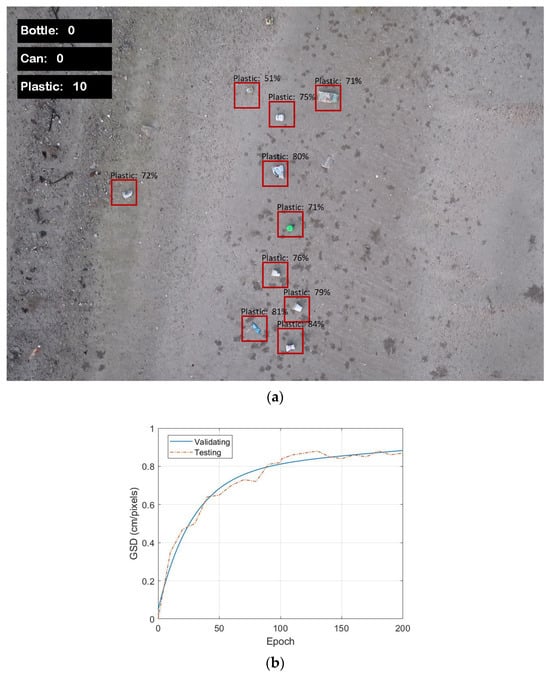

For plastic litter detection, Figure 16a presents the real-time video monitoring from the OBS live stream at an altitude of 5 m. Various types of plastic were detected, including food packaging, foam plastics, and caps. The drone’s camera and AI edge computing system identified six pieces of plastic on the beach. Figure 16b shows the GSD results; the testing GSD reaches an optimal value of 0.96 cm/pixel at the end of the test.

Figure 16.

Plastic litter detection at 5 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

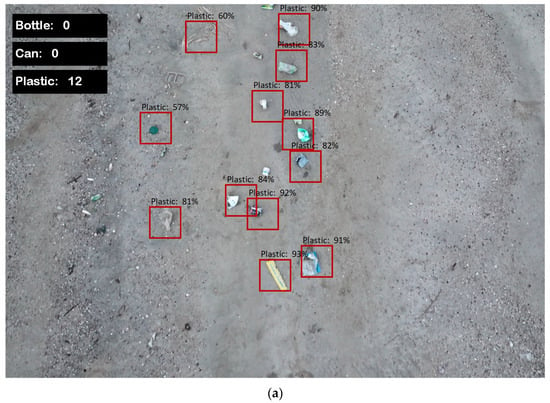

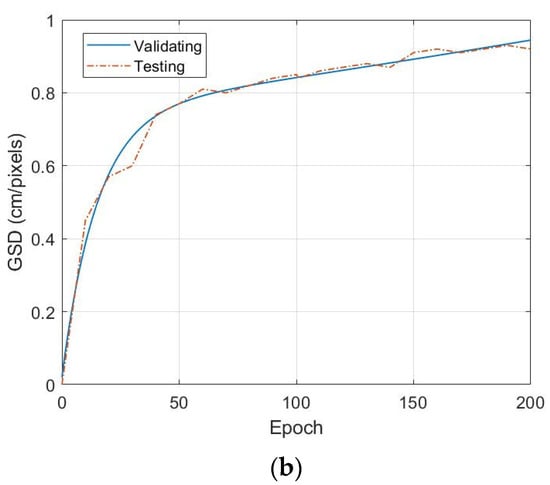

At altitudes of 7 m and 10 m, the real-time video monitoring from the OBS live stream is shown in Figure 17a and Figure 18a, respectively. Figure 17a indicates that AI edge computing detected approximately 12 pieces of plastic litter, while Figure 18a shows 10 pieces detected. Figure 17b presents the GSD with an optimal value of 0.94 cm/pixel, and Figure 18b shows an optimal GSD of 0.92 cm/pixel.

Figure 17.

Plastic litter detection at 7 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

Figure 18.

Plastic litter detection at 10 m altitude: (a) Real-time video monitoring via OBS; (b) GSD vs. Epoch performance.

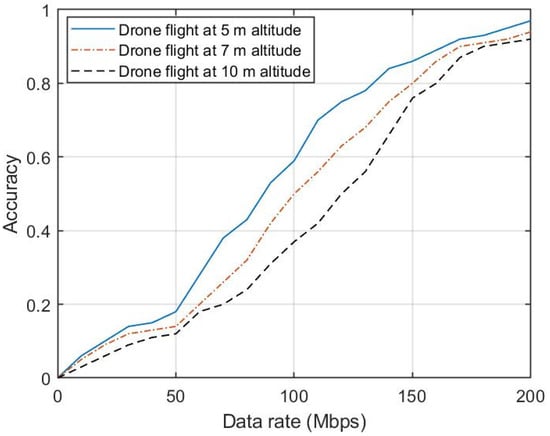

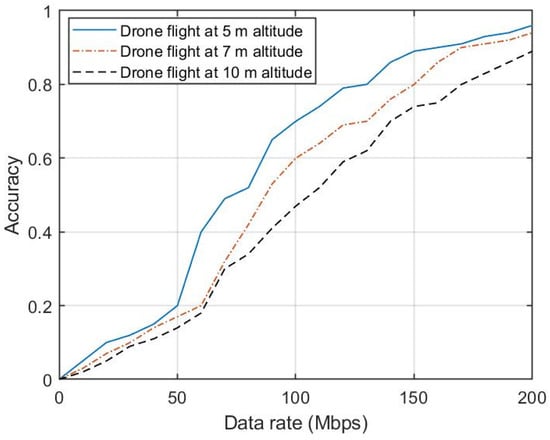

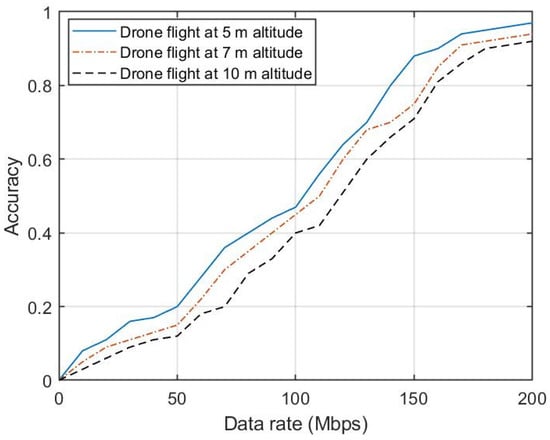

In practice, the performance of AI edge computing is closely linked to the 5G transmission network. Figure 19, Figure 20 and Figure 21 illustrate the relationship between real-time monitoring accuracy and data transmission rate (Mbps), with the 5G network under test supporting data rates of up to 200 Mbps.

Figure 19.

Accuracy vs. data rate for bottle litter detection speed test.

Figure 20.

Accuracy vs. data rate for can litter detection speed test.

Figure 21.

Accuracy vs. data rate for plastic litter detection speed test.

The drone operated at flight altitudes of 5 m, 7 m, and 10 m in the experiment. We analyzed the relationship between data rate and detection accuracy for bottles, cans, and plastics on the beach. Figure 19 shows the accuracy versus data rate for bottle detection, with the blue curve representing the 5 m altitude, the red dashed line 7 m, and the black dashed line 10 m. The highest accuracy was observed at 5 m, likely due to better camera focus and optimal GSD values. Although altitude affected the achieved data rate, the accuracy of AI edge computing remained robust across all altitudes. Figure 20 and Figure 21 show similar trends for can and plastic detection, respectively, with strong accuracy across all altitudes and data rates. These results highlight the importance of the 5G transmission network in supporting AI edge computing for real-time drone-based coastal litter detection in realistic environments.

5.2. Discussion

The experimental results demonstrate the effectiveness of AI edge computing, integrated with 5G communication, for real-time drone-based coastal litter detection. Across varying altitudes (5 m, 7 m, and 10 m), the system consistently identified bottles, cans, and plastics, with varying degrees of accuracy influenced by altitude, GSD, and data transmission rates.

The altitude at which the drone operates has a significant impact on detection accuracy. The results show that at lower altitudes, particularly 5 m, detection accuracy is highest for all types of litter (bottles, cans, and plastics). This can be attributed to the improved camera focus and more favorable GSD values at lower heights, as seen in Figure 19, Figure 20 and Figure 21. The blue curve (5 m) in each graph shows the highest accuracy, with a gradual decline as the altitude increases to 7 m and 10 m. Despite this decline, AI edge computing maintained robust performance, suggesting that the system remains effective at higher altitudes where the GSD is less favorable. Although the highest accuracy is achieved at lower altitudes, the downwash generated by the drone’s propellers can affect detection at such heights. In practice, operating at higher altitudes, such as 7 m or 10 m, provides a more stable solution, mitigating the effects of propeller turbulence while maintaining sufficient accuracy.

We observed that a height of 5 m provided the highest detection accuracy owing to the finer GSD and improved image clarity, which are especially advantageous for detecting smaller or partially obscured litter items. However, we acknowledge that such a low operating height may be impractical in certain scenarios, such as surveys of extensive coastal areas or environments where the UAV’s proximity to the ground could cause turbulence or disturb wildlife.

To address these concerns, we also evaluated the system’s performance at higher altitudes (e.g., 7 m and 10 m). While there was a slight reduction in accuracy due to the coarser GSD, the AI edge computing system demonstrated robust adaptability, maintaining reliable detection of larger objects and overall performance. This suggests that the system can be tuned for different operational heights depending on the context.
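For a nadir-pointing camera, the standard pinhole-camera relation gives GSD = (sensor width × flight altitude) / (focal length × image width in pixels), so the ground footprint of each pixel grows linearly with altitude. The sketch below evaluates this relation at the three flight altitudes used here. The camera parameters (13.2 mm sensor width, 8.8 mm focal length, 1920-pixel frame width) are hypothetical placeholders, not the specifications of the drone used in this study, so the printed values are illustrative only and do not correspond to the GSD values reported in this work.

```python
def ground_sampling_distance_cm(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Nadir GSD in cm/pixel from the pinhole-camera relation.

    sensor_width_mm / focal_length_mm is dimensionless, altitude_m * 100 converts
    metres to centimetres, and dividing by the pixel count gives cm per pixel.
    """
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


# Hypothetical camera parameters (assumptions, not taken from this study).
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 8.8
IMAGE_WIDTH_PX = 1920  # width of a 1080p video frame

if __name__ == "__main__":
    for h in (5, 7, 10):
        gsd = ground_sampling_distance_cm(h, SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX)
        print(f"{h:>2} m -> {gsd:.3f} cm/pixel")
```

Because the relation is linear, doubling the altitude doubles the ground distance covered by one pixel, which is why small items lose detail at 10 m.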

Operating at low altitudes, such as 5 m or 7 m, offers several benefits for drone-based coastal surveying, particularly in enhancing detection accuracy and detail capture. At lower altitudes, drones can achieve finer GSD, which improves the clarity and resolution of captured images. This precision is especially valuable for detecting partially obscured litter items, such as plastics and bottles, which might be missed at higher altitudes. Low-altitude surveying also reduces exposure to ambient wind, which is generally weaker closer to the ground, allowing for more stable data collection. Additionally, proximity to the ground helps mitigate issues caused by variable lighting and reflections from water surfaces, which are common challenges in coastal environments. As a result, low-altitude surveying enhances the overall effectiveness and reliability of real-time litter detection, contributing to more accurate and actionable environmental monitoring outcomes.

The GSD played a critical role in the precision of litter detection. The results show that optimal GSD values of 0.96 to 0.98 cm/pixel were achieved for bottle and can detection, with the highest value (0.98 cm/pixel) for cans at 5 m, as shown in Figure 10, Figure 11, Figure 12, Figure 13, Figure 14 and Figure 15. As the number of Epochs increased, the GSD improved until it reached its optimal value at the maximum of 200 Epochs. At higher altitudes, the GSD decreased slightly, but the AI edge computing system still demonstrated strong accuracy, particularly for larger objects such as cans. These findings indicate that, while GSD affects resolution and detection precision, the AI models based on YOLOv8 and RNN were robust enough to handle small variations in GSD.

The 5G communication network was crucial in enabling real-time data processing and transmission. The experiments, which tested the drone at data rates of up to 200 Mbps, showed a strong correlation between data rate and detection accuracy. Higher data rates allowed for faster, more reliable video transmission, which, in turn, improved the overall accuracy of the AI edge computing system. This is particularly important in realistic operational environments where data transfer speeds may fluctuate. The consistency of detection performance across altitudes further emphasizes the reliability of the 5G transmission network in maintaining high-quality real-time monitoring.
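One way to reason about why a higher data rate can improve detection is the bit budget available to the video encoder for each frame: at a fixed frame rate and resolution, fewer bits per frame force stronger compression, which tends to blur the edges of small items such as caps and cans. The sketch below computes bits per frame and bits per pixel for several link rates. The 30 fps frame rate, the 1920 × 1080 resolution, and the assumption that the whole link rate is available to the video stream are illustrative simplifications, not encoder settings reported in this study.

```python
def bits_per_frame(data_rate_mbps, fps):
    """Average number of bits the encoder can spend on each frame."""
    return data_rate_mbps * 1_000_000 / fps


def bits_per_pixel(data_rate_mbps, fps, width_px, height_px):
    """Average compressed bits available per pixel of each frame."""
    return bits_per_frame(data_rate_mbps, fps) / (width_px * height_px)


if __name__ == "__main__":
    # Assumed stream settings (hypothetical): 1080p at 30 fps.
    for rate in (25, 50, 100, 200):
        bpp = bits_per_pixel(rate, 30, 1920, 1080)
        print(f"{rate:>3} Mbps -> {bpp:.3f} bits/pixel")
```

Under these assumptions, raising the rate from 25 Mbps to 200 Mbps gives the encoder eight times as many bits per frame, which is one plausible mechanism for the accuracy trend in Figure 19, Figure 20 and Figure 21.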

The detection accuracy varied slightly between litter types. Bottles and cans were detected with higher accuracy than plastics, particularly at higher altitudes. This difference may be explained by the size and shape of the objects: bottles and cans have more uniform shapes, which makes them easier for the AI models to distinguish. Plastics, such as food packaging and foam, may have less defined boundaries, making detection more challenging, especially as the effective image resolution decreases at higher altitudes. Despite this, the system still achieved strong accuracy for all types of litter, demonstrating its versatility in handling different materials.

Integrating AI edge computing with 5G communication networks proved essential for real-time drone-based litter detection. AI edge computing enabled efficient processing on the edge server, reducing latency and enabling real-time decision-making. The 5G network ensured that the system maintained a high data rate and low latency, which is critical for real-time applications such as coastal litter detection. The successful detection of litter at multiple altitudes and across different materials demonstrates the scalability and adaptability of the system. Moreover, the consistent results across various tests validate the robustness of the YOLOv8+RNN model in real-time scenarios.
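The latency benefit of edge processing can be reasoned about with a simple additive budget: the time to push a compressed frame over the uplink, plus the network round-trip time, plus the inference time on the edge server. The sketch below encodes that budget. The `LinkAndEdge` fields and all numeric values are hypothetical assumptions for illustration; queueing, encoding, and decoding delays are ignored, and the model does not reproduce any measurement from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkAndEdge:
    """Hypothetical link and edge-server parameters."""
    uplink_mbps: float   # available uplink data rate
    base_rtt_ms: float   # network round-trip time excluding payload transfer
    inference_ms: float  # per-frame processing time on the edge server


def frame_latency_ms(frame_kilobytes, cfg):
    """Additive end-to-end latency estimate for one frame, in milliseconds.

    frame_kilobytes * 8 gives kilobits, and kilobits / Mbps gives milliseconds.
    """
    transmit_ms = frame_kilobytes * 8.0 / cfg.uplink_mbps
    return transmit_ms + cfg.base_rtt_ms + cfg.inference_ms


if __name__ == "__main__":
    fast = LinkAndEdge(uplink_mbps=200, base_rtt_ms=10, inference_ms=20)
    slow = LinkAndEdge(uplink_mbps=50, base_rtt_ms=10, inference_ms=20)
    for name, cfg in (("200 Mbps", fast), ("50 Mbps", slow)):
        print(f"{name}: {frame_latency_ms(100, cfg):.1f} ms for a 100 kB frame")
```

The budget makes explicit that a faster uplink only shortens the transmission term; inference time on the edge server is unaffected by the link.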

Integrating drones, AI, and 5G provides a powerful solution for real-time coastal litter detection and monitoring. By leveraging the mobility of drones, the analytical power of AI, and the high-speed data transmission of 5G, this system offers a scalable and adaptable approach for coastal monitoring applications. However, overcoming challenges related to infrastructure, power consumption, and operational costs will be essential to realizing the full potential of this integrated technology framework.

While the proposed model achieved high accuracy in detecting specific litter types, its ability to distinguish waste from non-waste items (e.g., stones) remains an area for improvement. Expanding the dataset, refining feature extraction techniques, and leveraging multimodal inputs (e.g., multispectral imaging) are proposed strategies to enhance classification accuracy.

5.3. Evaluation Metrics

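To summarize the accuracy of real-time monitoring, the evaluation metrics, namely accuracy, precision, recall, and F1-score, were obtained as

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP and TN represent the true positives and true negatives, and FP and FN represent the false positives and false negatives, respectively.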
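The four metrics can be computed directly from the confusion counts. The following minimal Python sketch implements the standard definitions of accuracy, precision, recall, and F1-score. The `ConfusionCounts` type and the convention of returning 0.0 when a denominator is zero are assumptions made for illustration, and the counts in the usage lines are hypothetical rather than the values behind Table 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # true positives
    tn: int  # true negatives
    fp: int  # false positives
    fn: int  # false negatives


def _safe_div(num, den):
    """Divide, returning 0.0 for an empty denominator (assumed convention)."""
    return num / den if den else 0.0


def evaluation_metrics(c):
    """Accuracy, precision, recall, and F1-score from confusion counts."""
    accuracy = _safe_div(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)
    precision = _safe_div(c.tp, c.tp + c.fp)
    recall = _safe_div(c.tp, c.tp + c.fn)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    print(evaluation_metrics(ConfusionCounts(tp=90, tn=5, fp=3, fn=2)))
```

Because F1 is the harmonic mean of precision and recall, it equals 2TP / (2TP + FP + FN), so it does not depend on TN, which is often ill-defined in object detection.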

The results of the accuracy assessment of the experimental tests using these evaluation metrics are summarized in Table 3.

Table 3.

The evaluation metrics of the real-time drone coastal litter detection.

5.4. Comparison Between the Proposed YOLOv8+RNN and the Existing Models

This study evaluates YOLOv8+RNN in the context of drone-based coastal litter detection, focusing on its suitability for real-time, resource-constrained applications. Various advanced existing models instead incorporate neural attention mechanisms to enhance accuracy.

In this subsection, we compare YOLOv8+RNN with existing attention-based models. Attention-based object detection models for drones, such as DETR [], EfficientDet [], and Swin Transformer [], use neural attention mechanisms to improve detection accuracy by focusing on relevant regions of an image. These models can handle complex scenes, variable object scales, and dense object distributions. However, their computational demands often result in slower processing, which limits their suitability for real-time applications.

The proposed YOLOv8+RNN architecture is optimized for real-time processing, achieving a balance of speed and accuracy that allows it to detect objects in rapid succession, even with limited computational resources. This makes YOLOv8+RNN particularly well-suited for applications requiring real-time data transmission and immediate response, such as coastal litter detection via drones.

Table 4 compares YOLOv8+RNN with existing models such as DETR, EfficientDet, and Swin Transformer across key metrics: accuracy, inference speed, computational demands, and suitability for real-time applications.

Table 4.

Comparison between YOLOv8+RNN and the existing models for real-time drone coastal litter detection.

Given the constraints of drone-based coastal litter detection, including the need for real-time data analysis and limited onboard computing power, the efficiency and speed of the proposed YOLOv8+RNN were decisive factors. Although attention-based models such as DETR and Swin Transformer offer high accuracy, their computational requirements make them less viable for real-time, edge-based applications. YOLOv8+RNN, with its optimized structure, enables effective detection while balancing speed and resource efficiency.

The comparison shows that the existing models also offer precise detection capabilities. Nevertheless, the balance between inference speed (frames per second, FPS) and accuracy achieved by the proposed YOLOv8+RNN makes it the most suitable choice for real-time, drone-based coastal litter detection in this work.
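Inference speed in FPS is typically measured by timing a detector over a sequence of frames after a short warm-up. The sketch below shows one way to do this with Python's `time.perf_counter`. Here `detect` stands for any per-frame inference callable (for example, a YOLOv8+RNN pipeline or an attention-based model) and is a hypothetical placeholder, as is the dummy detector in the usage lines. Measured FPS depends strongly on hardware, input resolution, and batch size, so the sketch does not reproduce the figures in Table 4.

```python
import time
from typing import Callable, Sequence


def measure_fps(detect: Callable[[object], object], frames: Sequence[object], warmup: int = 5) -> float:
    """Average frames per second of `detect` over `frames`, after warm-up calls."""
    if not frames:
        raise ValueError("frames must not be empty")
    # Warm-up calls absorb one-off costs (lazy initialisation, caches) before timing.
    for frame in frames[:warmup]:
        detect(frame)
    start = time.perf_counter()
    for frame in frames:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


if __name__ == "__main__":
    def dummy_detect(frame):
        time.sleep(0.005)  # hypothetical stand-in for model inference
        return []

    print(f"{measure_fps(dummy_detect, list(range(50))):.1f} FPS")
```

Timing every model with the same harness, on the same hardware and frames, is what makes FPS figures comparable across architectures.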

6. Conclusions

This paper presented a novel approach for real-time coastal litter detection using drone-enabled AI edge computing integrated with 5G communication networks. Our experimental results demonstrated the system’s capability to accurately detect several litter types, namely bottles, cans, and plastics, at flight altitudes of 5 m, 7 m, and 10 m. By deploying AI edge servers equipped with YOLOv8+RNN models, we achieved efficient, high-accuracy data processing, while the 5G network provided reliable high-speed data rates of up to 200 Mbps, which are crucial for seamless real-time monitoring. The performance of the proposed model was influenced by key factors, including altitude, GSD, and data rate. Lower altitudes, particularly 5 m, yielded the highest detection accuracy due to enhanced camera focus and optimal GSD values. Even at higher altitudes, however, the system maintained robust accuracy and a consistently strong F1-score, showcasing the reliability and adaptability of the AI edge computing framework. The 5G network proved essential for stable data transmission, supporting the model’s effectiveness in dynamic environments. This study highlights the potential of AI-powered, automated coastal litter detection to address the urgent issue of marine pollution, offering a high-precision tool for environmental monitoring.

Future work could extend the drone system to smaller or more complex litter types and apply it in more diverse marine settings, including coastal environments that contain non-litter objects.

Author Contributions

Conceptualization, S.D. and P.P.; methodology, S.D.; software, S.D.; validation, S.D. and P.P.; investigation, S.D.; writing—original draft preparation, S.D.; writing—review and editing, S.D. and P.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by King Mongkut’s Institute of Technology Ladkrabang and the NSRF under the Fundamental Fund (grant number: RE-KRIS/FF66/59).

Data Availability Statement

The data presented in this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kungskulniti, N.; Charoenca, N.; Hamann, S.L.; Pitayarangsarit, S.; Mock, J. Cigarette waste in popular beaches in Thailand: High densities that demand environmental action. Int. J. Environ. Res. Public Health 2018, 15, 630.

- Akkajit, P.; Tipmanee, D.; Cherdsukjai, P.; Suteerasak, T.; Thongnonghin, S. Occurrence and distribution of microplastics in beach sediments along Phuket coastline. Mar. Pollut. Bull. 2021, 169, 112496.

- Rangel-Buitrago, N.; Williams, A.T.; Neal, W.J.; Gracia, A.; Micallef, A. Litter in coastal and marine environments. Mar. Pollut. Bull. 2022, 177, 113546.

- Escobar-Sánchez, G.; Markfort, G.; Berghald, M.; Ritzenhofen, L.; Schernewski, G. Aerial and underwater drones for marine litter monitoring in shallow coastal waters: Factors influencing item detection and cost-efficiency. Environ. Monit. Assess. 2022, 194, 863.

- Bao, Z.C.; Sha, J.M.; Li, X.M.; Hanchiso, T.; Shifaw, E. Monitoring of beach litter by automatic interpretation of unmanned aerial vehicle images using the segmentation threshold method. Mar. Pollut. Bull. 2018, 137, 388–398.

- Martin, C.; Parkes, S.; Zhang, Q.; Zhang, X.; McCabe, M.F.; Duarte, C.M. Use of unmanned aerial vehicles for efficient beach litter monitoring. Mar. Pollut. Bull. 2018, 131, 662–673.

- Salgado-Hernanz, P.M.; Bauzà, J.; Alomar, C.; Compa, M.; Romero, L.; Deudero, S. Assessment of marine litter through remote sensing: Recent approaches and future goals. Mar. Pollut. Bull. 2021, 168, 112347.

- Topouzelis, K.; Papageorgiou, D.; Suaria, G.; Aliani, S. Floating marine litter detection algorithms and techniques using optical remote sensing data: A review. Mar. Pollut. Bull. 2021, 170, 112675.

- Veettil, B.K.; Quan, N.H.; Hauser, L.T.; Van, D.D.; Quang, N.X. Coastal and marine plastic litter monitoring using remote sensing: A review. Estuar. Coast. Shelf Sci. 2022, 279, 108160.

- Andriolo, U.; Garcia-Garin, O.; Vighi, M.; Borrell, A.; Gonçalves, G. Beached and floating litter surveys by unmanned aerial vehicles: Operational analogies and differences. Remote Sens. 2022, 14, 1336.

- Goddijn-Murphy, L.; Williamson, B.J.; McIlvenny, J.; Corradi, P. Using a UAV thermal infrared camera for monitoring floating marine plastic litter. Remote Sens. 2022, 14, 3179.

- Taggio, N.; Aiello, A.; Ceriola, G.; Kremezi, M.; Kristollari, V.; Kolokoussis, P.; Karathanassi, V.; Barbone, E. A combination of machine learning algorithms for marine plastic litter detection exploiting hyperspectral PRISMA data. Remote Sens. 2022, 14, 3606.

- Almeida, S.; Radeta, M.; Kataoka, T.; Canning-Clode, J.; Pessanha Pais, M.; Freitas, R.; Monteiro, J.G. Designing unmanned aerial survey monitoring program to assess floating litter contamination. Remote Sens. 2023, 15, 84.

- Lo, H.S.; Wong, L.C.; Kwok, S.H.; Lee, Y.K.; Po, B.H.K.; Wong, C.Y.; Tam, N.F.Y.; Cheung, S.G. Field test of beach litter assessment by commercial aerial drone. Mar. Pollut. Bull. 2020, 151, 110823.

- Andriolo, U.; Gonçalves, G.; Rangel-Buitrago, N.; Paterni, M.; Bessa, F.; Gonçalves, L.M.; Sobral, P.; Bini, M.; Duarte, D.; Fontán-Bouzas, Á.; et al. Drones for litter mapping: An inter-operator concordance test in marking beached items on aerial images. Mar. Pollut. Bull. 2021, 169, 112542.

- Merlino, S.; Paterni, M.; Locritani, M.; Andriolo, U.; Gonçalves, G.; Massetti, L. Citizen science for marine litter detection and classification on unmanned aerial vehicle images. Water 2021, 13, 3349.

- Gonçalves, G.; Andriolo, U. Operational use of multispectral images for macro-litter mapping and categorization by unmanned aerial vehicle. Mar. Pollut. Bull. 2022, 176, 113431.

- Gonçalves, G.; Andriolo, U.; Gonçalves, L.M.; Sobral, P.; Bessa, F. Beach litter survey by drones: Mini-review and discussion of a potential standardization. Environ. Pollut. 2022, 315, 120370.

- Taddia, Y.; Corbau, C.; Buoninsegni, J.; Simeoni, U.; Pellegrinelli, A. UAV approach for detecting plastic marine debris on the beach: A case study in the Po River Delta (Italy). Drones 2021, 5, 140.

- Andriolo, U.; Topouzelis, K.; van Emmerik, T.H.; Papakonstantinou, A.; Monteiro, J.G.; Isobe, A.; Hidaka, M.; Kako, S.I.; Kataoka, T.; Gonçalves, G. Drones for litter monitoring on coasts and rivers: Suitable flight altitude and image resolution. Mar. Pollut. Bull. 2023, 195, 115521.

- Pinto, L.; Andriolo, U.; Gonçalves, G. Detecting stranded macro-litter categories on drone orthophoto by a multi-class Neural Network. Mar. Pollut. Bull. 2021, 169, 112594.

- Papakonstantinou, A.; Batsaris, M.; Spondylidis, S.; Topouzelis, K. A citizen science unmanned aerial system data acquisition protocol and deep learning techniques for the automatic detection and mapping of marine litter concentrations in the coastal zone. Drones 2021, 5, 6.

- Scarrica, V.M.; Aucelli, P.P.; Cagnazzo, C.; Casolaro, A.; Fiore, P.; La Salandra, M.; Rizzo, A.; Scardino, G.; Scicchitano, G.; Staiano, A. A novel beach litter analysis system based on UAV images and Convolutional Neural Networks. Ecol. Inform. 2022, 72, 101875.

- Hidaka, M.; Matsuoka, D.; Sugiyama, D.; Murakami, K.; Kako, S.I. Pixel-level image classification for detecting beach litter using a deep learning approach. Mar. Pollut. Bull. 2022, 175, 113371.

- Pfeiffer, R.; Valentino, G.; Farrugia, R.A.; Colica, E.; D’Amico, S.; Calleja, S. Detecting beach litter in drone images using deep learning. In Proceedings of the IEEE International Workshop on Metrology for the Sea; Learning to Measure Sea Health Parameters (MetroSea), Milazzo, Italy, 3–5 October 2022.

- Wu, T.W.; Zhang, H.; Peng, W.; Lü, F.; He, P.J. Applications of convolutional neural networks for intelligent waste identification and recycling: A review. Resour. Conserv. Recycl. 2023, 190, 106813.

- Pfeiffer, R.; Valentino, G.; D’Amico, S.; Piroddi, L.; Galone, L.; Calleja, S.; Farrugia, R.A.; Colica, E. Use of UAVs and deep learning for beach litter monitoring. Electronics 2022, 12, 198.

- Gupta, C.; Gill, N.S.; Gulia, P.; Yadav, S.; Chatterjee, J.M. A novel finetuned YOLOv8 model for real-time underwater trash detection. J. Real-Time Image Process. 2024, 21, 48.

- Gui, S.; Song, S.; Qin, R.; Tang, Y. Remote sensing object detection in the deep learning Era—A review. Remote Sens. 2024, 16, 327.

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A small-object-detection model based on improved YOLOv8 for UAV aerial photography scenarios. Sensors 2023, 23, 7190.

- Cao, Z.; Kooistra, L.; Wang, W.; Guo, L.; Valente, J. Real-time object detection based on UAV remote sensing: A systematic literature review. Drones 2023, 7, 620.

- Lins, S.; Cardoso, K.V.; Both, C.B.; Mendes, L.; De Rezende, J.F.; Silveira, A.; Linder, N.; Klautau, A. Artificial intelligence for enhanced mobility and 5G connectivity in UAV-based critical missions. IEEE Access 2021, 9, 111792–111801.

- Ozer, S.; Ilhan, H.E.; Ozkanoglu, M.A.; Cirpan, H.A. Offloading deep learning powered vision tasks from UAV to 5G edge server with denoising. IEEE Trans. Veh. Technol. 2023, 72, 8035–8048.

- Garg, T.; Gupta, S.; Obaidat, M.S.; Raj, M. Drones as a service (DaaS) for 5G networks and blockchain-assisted IoT-based smart city infrastructure. Clust. Comput. 2024, 27, 8725–8788.

- Geraci, G.; Garcia-Rodriguez, A.; Azari, M.M.; Lozano, A.; Mezzavilla, M.; Chatzinotas, S.; Chen, Y.; Rangan, S.; Di Renzo, M. What will the future of UAV cellular communications be? A flight from 5G to 6G. IEEE Commun. Surv. Tutor. 2022, 24, 1304–1335.

- Wang, W.; Srivastava, G.; Lin, J.C.W.; Yang, Y.; Alazab, M.; Gadekallu, T.R. Data freshness optimization under CAA in the UAV-aided MECN: A potential game perspective. IEEE Trans. Intell. Transp. Syst. 2023, 24, 12912–12921.

- Kong, Y.; Shang, X.; Jia, S. Drone-DETR: Efficient small object detection for remote sensing image using enhanced RT-DETR model. Sensors 2024, 24, 5496.

- Anusha, N.; Swapna, T. Deep learning-based aerial object detection for unmanned aerial vehicles. In Proceedings of the International Conference on Sustainable Communication Networks and Application (ICSCNA), Theni, India, 15–17 November 2023.

- Xu, W.; Zhang, C.; Wang, Q.; Dai, P. FEA-Swin: Foreground enhancement attention swin transformer network for accurate UAV-based dense object detection. Sensors 2022, 22, 6993.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).