Abstract

This study presents a novel framework for digital copyright management that integrates AI-enhanced perceptual hashing, blockchain technology, and digital watermarking to address critical challenges in content protection and verification. Traditional watermarking approaches typically employ content-independent metadata and rely on centralized authorities, introducing risks of tampering and operational inefficiencies. The proposed system utilizes a pre-trained convolutional neural network (CNN) to generate a robust, content-based perceptual hash value, which serves as an unforgeable watermark intrinsically linked to the image content. This hash is embedded as a QR code in the frequency domain and registered on a blockchain, ensuring tamper-proof timestamping and comprehensive traceability. The blockchain infrastructure further enables verification of multiple watermark sequences, thereby clarifying authorship attribution and modification history. Experimental results demonstrate high robustness against common image modifications, strong discriminative capabilities, and effective watermark recovery, supported by decentralized storage via the InterPlanetary File System (IPFS). The framework provides a transparent, secure, and efficient solution for digital rights management, with potential future enhancements including post-quantum cryptography integration.

1. Introduction

The exponential growth of digital content creation and distribution has rendered the protection of intellectual property for digital works (including images, audio, and video) a paramount concern for creators and industries worldwide. Digital watermarking technology has been widely adopted for this purpose, embedding imperceptible information directly into digital works to assert copyright, verify authenticity, and track usage patterns [1,2]. However, conventional digital watermarking systems, while effective in certain scenarios, exhibit three fundamental and interconnected limitations [1,2]:

1. Content-Independent Watermarks: Traditional watermark information (such as author identification, serial numbers, or timestamps) typically consists of metadata unrelated to the image’s intrinsic visual content. Identical watermarks can therefore be copied and applied to different images, so the watermark provides no unique, content-binding signature. Consequently, such systems cannot definitively prove that a specific work is the original carrier of the watermark.

2. Limited Robust Verification for Derivative Works: Digital images frequently undergo modifications including cropping, filtering, and repurposing. Existing systems struggle to establish that a modified image (a derivative work) originates from a specific original and, crucially, to verify the sequence of these modifications along with their respective copyright claims. Current approaches lack inherent mechanisms for establishing a verifiable creation lineage.

3. Dependence on Centralized Trust Models: Contemporary management systems often rely on trusted third parties (TTPs) for watermark registration, storage, and verification. This centralized architecture introduces vulnerabilities including data tampering risks, single points of failure, bureaucratic inefficiencies, and potential information leakage. Moreover, this model is at odds with the fundamentally decentralized nature of the modern internet.

To address these limitations, researchers have begun integrating blockchain technology, leveraging its decentralization, immutability, and transparency [3,4]. For instance, Madushanka et al. [3] proposed a blockchain-based digital rights management (DRM) framework for digital rights assertion, while Zhang et al. [4] combined blockchain with zero-trust mechanisms for image copyright protection. Related advances include intelligent frameworks for secure software-defined networks [5] and new perspectives on cultural heritage management [6]. However, merely storing a cryptographic hash (such as SHA-256) of an image on a blockchain is insufficient: even a minor modification, such as a brightness adjustment, completely changes the hash and thereby breaks the link to the original work. This limitation underscores the importance of perceptual hashing.

Perceptual hashing, also referred to as image fingerprinting, aims to generate similar hash values for perceptually identical images, even when their pixel-level representations differ substantially [7]. Traditional algorithms based on hand-crafted features (including Discrete Cosine Transform (DCT) and Singular Value Decomposition (SVD)) often lack the robustness required for copyright applications across diverse image editing scenarios [8]. Recent advances in deep learning, particularly Convolutional Neural Networks (CNNs), have significantly enhanced the discriminability and robustness of perceptual hashing techniques [7,9]. For example, Li et al. [7] demonstrated that leveraging high-resolution features in deep hashing frameworks substantially enhances hash code distinctiveness. Our previous work [10] established foundational principles by combining blockchain with perceptual hashing. The present study significantly advances this paradigm by introducing a more efficient and robust AI-assisted perceptual hashing method based on CNNs, thereby progressing beyond traditional feature-based approaches.
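To make the contrast between cryptographic and perceptual hashing concrete, the toy sketch below (an illustration, not part of the proposed method) compares SHA-256 with a simple average hash on a synthetic 8 × 8 grayscale image before and after a uniform +10 brightness shift. The average hash, which sets a bit wherever a pixel exceeds the image mean, is a deliberately simple stand-in for perceptual hashing; the CNN-based hash used in this work is described in Section 3.

```python
import hashlib


def sha256_hex(pixels):
    """Cryptographic hash of the raw pixel bytes (any change alters it completely)."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()


def average_hash(pixels):
    """Toy perceptual hash: 1 where a pixel is brighter than the image mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]


# Synthetic 8 x 8 gradient image with values in [35, 224]
image = [[(r * 8 + c) * 3 + 35 for c in range(8)] for r in range(8)]
# Uniform brightness shift; no value reaches 255, so nothing is clipped
brighter = [[min(v + 10, 255) for v in row] for row in image]

print(sha256_hex(image) == sha256_hex(brighter))      # False
print(average_hash(image) == average_hash(brighter))  # True
```

Because the shift moves every pixel and the mean by the same amount, every comparison against the mean is preserved, whereas the SHA-256 digest changes entirely.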

The principal contributions and novel aspects of this research are delineated as follows:

1. Novel Integration Framework: This study presents the first comprehensive framework that seamlessly integrates CNN-based perceptual hashing, QR code watermarking in the Discrete Wavelet Transform (DWT) domain, and blockchain technology for end-to-end digital copyright management, addressing both technical implementation and trust verification challenges.

2. Content-Binding Authentication: In contrast to traditional metadata-based watermarks, our AI-enhanced perceptual hash functions as an unforgeable, content-intrinsic signature that cannot be transferred between disparate images.

3. Derivative Work Lineage Tracking: We introduce a blockchain-based mechanism for verifiably tracking the complete creation and modification history of derivative works through chained perceptual hashes.

4. Decentralized Trust Architecture: The proposed system eliminates reliance on centralized authorities by leveraging blockchain’s immutability and IPFS’s distributed storage capabilities, providing a transparent and tamper-proof solution.

5. Comprehensive Experimental Evaluation: Extensive experiments conducted on both natural images and digital artworks demonstrate superior performance compared to traditional methods and recent deep learning approaches.

The remainder of this paper is organized as follows: Section 2 reviews related work in digital watermarking, perceptual hashing, and blockchain applications. Section 3 details the proposed system architecture. Sections 4 and 5 elaborate on the AI-based perceptual hashing methodology and blockchain integration, respectively. Section 6 presents the experimental setup and results. Section 7 discusses key findings and limitations, Section 8 outlines future research directions, and Section 9 concludes the paper.

2. Related Work

2.1. Digital Watermarking

Digital watermarking techniques [1] embed information into carrier signals through various methods. Although many of these techniques are robust against common signal processing operations, their security typically hinges on the secrecy of embedding keys rather than on an intrinsic link to the content. Furthermore, managing the associated watermark information (metadata) is a separate challenge that often requires additional infrastructure.

2.2. Perceptual Hashing

Perceptual hashing [7], alternatively termed image fingerprinting, differs fundamentally from cryptographic hashing by generating similar hash values for perceptually identical images, notwithstanding their pixel-level representation differences. Traditional perceptual hashing algorithms, including those based on Discrete Cosine Transform (DCT) or Singular Value Decomposition (SVD), were primarily designed for image retrieval tasks and frequently lack the robustness necessary for copyright-related applications across diverse image editing scenarios. Recent advances in deep learning, particularly Convolutional Neural Networks (CNNs), have substantially improved perceptual hashing discriminability and robustness. For instance, Li et al. [7] demonstrated that leveraging high-resolution features in deep hashing frameworks enhances hash code distinctiveness, leading to more accurate image retrieval and content identification. Other advanced approaches, such as subspace learning, have been explored for generating robust hashes in specialized domains like hyperspectral image authentication [11]. Inspired by these developments, our methodology employs a pre-trained CNN to extract high-level, semantically rich features, which are subsequently binarized to form perceptual hash values. This approach not only improves robustness against common image modifications but also ensures strong discriminability between visually distinct images, thereby addressing key limitations of traditional hand-crafted feature-based hashing methods [12].

2.3. Blockchain in Copyright Management

Blockchain technology has been extensively investigated for copyright protection applications [3,4,13,14] due to its decentralization, immutability, and transparency properties. It provides trusted timestamping capabilities and permanent transaction records. However, storing large image files directly on-chain remains impractical. Most solutions, including our proposed framework, utilize blockchain to store cryptographic commitments (hashes) of digital works, while the files themselves reside in off-chain storage systems such as the InterPlanetary File System (IPFS) [15].

2.4. AI in Cryptography and Security

The emerging field of AI-assisted cryptography presents new opportunities for security enhancement. CNNs have transformed image processing, and we apply this capability to perceptual hashing. We treat robust, content-based hash generation as an application of AI-assisted cryptography, in which AI models strengthen a core primitive (hashing) for a specific security task [9,16].

2.5. Systematic Comparison with Previous Approaches

To clearly position our contributions within existing literature, Table 1 provides a systematic comparison of our proposed framework with previous schemes, including our earlier COMPSAC work [10,17] and other relevant blockchain-based copyright protection systems.

Table 1. Systematic Comparison of Blockchain-based Copyright Protection Schemes.

As evidenced in Table 1, our current work introduces several key innovations: (i) utilization of pre-trained CNN features for robust perceptual hashing, advancing beyond hand-crafted features; (ii) comprehensive support for tracking derivative works through blockchain-chained perceptual hashes; (iii) integration of QR code watermarks in the DWT domain for enhanced robustness; and (iv) validation across both natural images and digital artworks, demonstrating broader applicability.

3. Proposed System Architecture

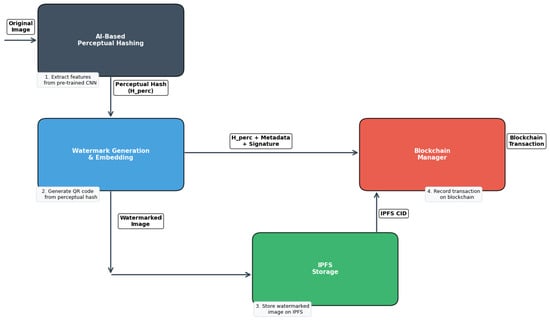

Figure 1 illustrates the overall architecture of our proposed system, which comprises four main modules: AI-based Perceptual Hashing, Watermark Generation & Embedding, Blockchain Manager, and IPFS storage components.

Figure 1. System Architecture of Proposed Copyright Management Framework. Different colors represent distinct system modules: blue for AI-based Perceptual Hashing, green for Watermark Generation & Embedding, orange for Blockchain Manager, and purple for IPFS Storage.

3.1. AI-Based Perceptual Hashing Module

This module constitutes the core innovation of our framework. Instead of fine-tuning a CNN for each image as in prior work [17], we employ a pre-trained CNN (e.g., VGG or ResNet architectures) as a fixed feature extractor.

1. Feature Extraction: The input image is preprocessed to the network’s expected input format (for VGG16, a 224 × 224 input yields the 7 × 7 × 512 block5_pool output) and passed through the pre-trained CNN.

2. Hash Generation: Activations from a specific intermediate layer (e.g., the block5_pool layer in VGG16, yielding a 7 × 7 × 512 feature map) are extracted and flattened into a high-dimensional vector. Principal Component Analysis (PCA) then reduces this vector to 256 principal components, concentrating the most salient features. The choice of 256 dimensions was determined empirically through ablation studies comparing hash performance at 128, 256, and 512 dimensions: 256 dimensions gave the best balance between discriminability (retaining sufficient information) and robustness (suppressing noise), while matching a standard hash length for practical storage and comparison. Finally, the 256-dimensional vector is binarized by setting values above the median to 1 and all others to 0, producing a fixed-length 256-bit perceptual hash value, denoted as $H$. This hash is specific to the image’s visual content while remaining robust to minor modifications.

3.2. Watermark Generation and Embedding Module

1. QR Code Watermark Image: To enhance payload capacity and robustness, the 256-bit perceptual hash value $H$ (converted to a 64-character hexadecimal string), together with a short copyright identifier, is encoded into a QR code image (Version 5). QR Code Version 5 (37 × 37 modules) was selected because its capacity of 86 alphanumeric characters is sufficient for the 64-character hexadecimal string of $H$ alongside a short copyright identifier, with the remaining capacity reserved for error correction to improve recovery robustness. This QR code serves as the visual watermark.

2. Frequency-Domain Embedding: The QR code watermark is embedded into the original image in the frequency domain using a two-level Discrete Wavelet Transform (DWT) with Haar wavelets. The first level decomposes the image into LL, LH, HL, and HH sub-bands, and the LL sub-band is decomposed again to obtain the second level. The QR code image is converted into a binary sequence, which is embedded into mid-frequency coefficients of the second-level HL and LH sub-bands using quantization-based embedding with a fixed quantization step size $\Delta$, selected empirically to balance imperceptibility and robustness. Mid-frequency coefficients offer this balance because they are less sensitive to noise and compression than high-frequency components, while being less visually significant than low-frequency components, so changes to them are less perceptible.
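The trade-off governed by the step size can be illustrated with scalar quantization index modulation (QIM), one common form of quantization-based watermarking. This is a minimal sketch under that assumption: the text does not name the exact quantizer, and `delta = 12.0` is a hypothetical value. Each bit selects one of two interleaved lattices offset by Δ/2, so embedding changes a coefficient by at most Δ/2, and extraction tolerates perturbations smaller than Δ/4. A larger Δ therefore buys robustness at the cost of visibility.

```python
def qim_embed(coeff, bit, delta):
    """Snap coeff to the lattice for `bit` (offset 0 for bit 0, delta/2 for bit 1)."""
    offset = bit * delta / 2
    return delta * round((coeff - offset) / delta) + offset


def qim_extract(coeff, delta):
    """Return the bit whose lattice point lies closest to coeff."""
    errors = [abs(coeff - qim_embed(coeff, b, delta)) for b in (0, 1)]
    return 0 if errors[0] <= errors[1] else 1


delta = 12.0                            # hypothetical step size
coeffs = [31.7, -8.2, 104.9, 0.4]       # example mid-frequency coefficients
bits = [1, 0, 1, 1]
marked = [qim_embed(c, b, delta) for c, b in zip(coeffs, bits)]
noisy = [m + 2.5 for m in marked]       # perturbation below delta / 4 = 3.0
print([qim_extract(c, delta) for c in noisy])  # [1, 0, 1, 1]
```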

3.3. Blockchain Manager Module

1. Transaction Creation: Transactions are created containing the following data elements:
    - (a) The perceptual hash value $H$ of the original image;
    - (b) A cryptographic hash (e.g., SHA-256) of the watermarked image;
    - (c) The author’s public key and digital signature;
    - (d) A reference to the watermarked image stored on IPFS.

2. Timestamping and Storage: These transactions are broadcast to the blockchain network, packaged into blocks, and immutably recorded with trusted timestamps.
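The data elements (a) to (d) can be assembled into a canonical record before signing, as in the sketch below. It is illustrative only: the field names, the JSON serialization, and the placeholder key and CID values are hypothetical, and the signature itself is omitted because the signing scheme is not specified here and the Python standard library has no public-key signing. The sketch computes the SHA-256 digest of the watermarked image (item b) and a digest of the canonical record that the author’s private key would sign (item c).

```python
import hashlib
import json


def build_registration(perceptual_hash_hex, watermarked_bytes, author_pubkey, ipfs_cid):
    """Assemble fields (a)-(d); return (record, digest_to_sign). Field names are hypothetical."""
    record = {
        "perceptual_hash": perceptual_hash_hex,                               # (a)
        "watermarked_sha256": hashlib.sha256(watermarked_bytes).hexdigest(),  # (b)
        "author_pubkey": author_pubkey,                                       # (c) public key
        "ipfs_cid": ipfs_cid,                                                 # (d)
    }
    # Canonical JSON (sorted keys, no whitespace) so every verifier hashes the same bytes.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return record, digest


record, digest = build_registration(
    perceptual_hash_hex="A3" * 32,                 # placeholder 256-bit hash in hex
    watermarked_bytes=b"<watermarked image bytes>",
    author_pubkey="<author public key>",           # placeholder
    ipfs_cid="<CID returned by IPFS>",             # placeholder
)
# The author would now sign `digest` with the private key matching author_pubkey
# (for example with ECDSA) and submit the record plus signature on-chain.
```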

3.4. IPFS Storage Module

The watermarked images and descriptive text files are uploaded to the InterPlanetary File System (IPFS) [3]. IPFS returns a unique Content Identifier (CID) for each file, which is included in the corresponding blockchain transaction. This approach provides decentralized, scalable storage without burdening the blockchain.

4. AI-Based Perceptual Hashing Scheme

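Let $\mathbf{f} = \mathrm{Flatten}(M(I)) \in \mathbb{R}^{25088}$ represent the flattened feature vector extracted from image $I$ by the pre-trained model $M$. Let $\mathbf{z} = \mathrm{PCA}(\mathbf{f}) \in \mathbb{R}^{256}$ denote the 256-dimensional vector following PCA reduction.

The perceptual hash value is subsequently generated as:

$$H = \mathrm{Binarize}(\mathbf{z}), \qquad H_i = \begin{cases} 1, & z_i > \operatorname{median}(\mathbf{z}), \\ 0, & \text{otherwise}, \end{cases} \qquad i = 1, \dots, 256,$$

where Binarize converts vector elements to binary values (0 or 1) based on a threshold (here, the median of $\mathbf{z}$).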

This method is efficient because it requires no retraining or fine-tuning, which makes it suitable for practical, large-scale applications. The complete process is summarized in Algorithm 1 (AI-Enhanced Perceptual Hash Generation).

Algorithm 1. AI-Enhanced Perceptual Hash Generation.

Require: Input image $I$, pre-trained CNN model $M$, PCA transformation
Ensure: 256-bit perceptual hash $H$
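The steps of Algorithm 1 can be sketched in NumPy as follows. This is a sketch under stated assumptions rather than the authors’ code: the CNN is left out and replaced by synthetic vectors, although in practice the features could come from a Keras `tf.keras.applications.VGG16(weights="imagenet", include_top=False)` model, whose final layer is `block5_pool` with a 7 × 7 × 512 output for 224 × 224 inputs. The PCA projection is assumed to be fitted once on a reference set of feature vectors, which the text does not specify, and the helper names are hypothetical. Median binarization makes every hash contain exactly 128 ones when there are no ties.

```python
import numpy as np


def fit_pca(features, n_components=256):
    """Fit PCA on a (num_images, D) matrix of flattened CNN features via SVD."""
    mean = features.mean(axis=0)
    # Rows of vt are principal axes ordered by decreasing explained variance.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]


def perceptual_hash(feature_vector, mean, components):
    """H = Binarize(PCA(f)): project onto the principal axes, threshold at the median."""
    z = components @ (feature_vector - mean)
    return (z > np.median(z)).astype(np.uint8)


def hash_to_hex(bits):
    """Pack a bit vector (length divisible by 8) into an uppercase hex string."""
    return np.packbits(bits).tobytes().hex().upper()


# Usage with synthetic stand-ins for flattened block5_pool activations
# (real vectors have 7 * 7 * 512 = 25,088 dimensions; 1,024 keeps the demo fast).
rng = np.random.default_rng(0)
reference = rng.normal(size=(300, 1024))
mean, components = fit_pca(reference)
f = rng.normal(size=1024)
h = perceptual_hash(f, mean, components)
print(h.size, int(h.sum()), len(hash_to_hex(h)))  # 256 128 64
```

Fitting PCA once and reusing the same projection for every image keeps hashes comparable across registrations, which is what allows Hamming distances between them to be meaningful.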

5. Blockchain Integration for Copyright Verification

5.1. First-Time Registration

The owner of the original image computes its perceptual hash $H$ (Algorithm 1), embeds the watermark using Algorithm 2, and registers $H$ together with the cryptographic hash of the watermarked image on the blockchain. This process establishes the initial copyright claim.

Algorithm 2. Watermark Embedding Process.

Require: Original image $I$, perceptual hash $H$, quantization step $\Delta$
Ensure: Watermarked image $I_w$
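Algorithm 2 can be approximated by the NumPy sketch below, which follows Section 3.2: a two-level Haar DWT, quantization-based embedding in the second-level HL and LH sub-bands, and the inverse transform. Several details are assumptions not stated in the text: the image is a single grayscale channel whose sides are divisible by 4, bits are split between HL2 and LH2 in raster order, the quantizer is binary QIM, `delta = 20.0` is hypothetical, and the QR code is represented simply by its flattened module bits.

```python
import numpy as np

SQ2 = np.sqrt(2.0)


def haar2d(x):
    """One level of the orthonormal 2-D Haar DWT; returns LL and (LH, HL, HH)."""
    a = (x[0::2] + x[1::2]) / SQ2            # low-pass over row pairs
    d = (x[0::2] - x[1::2]) / SQ2            # high-pass over row pairs
    ll, lh = (a[:, 0::2] + a[:, 1::2]) / SQ2, (a[:, 0::2] - a[:, 1::2]) / SQ2
    hl, hh = (d[:, 0::2] + d[:, 1::2]) / SQ2, (d[:, 0::2] - d[:, 1::2]) / SQ2
    return ll, (lh, hl, hh)


def ihaar2d(ll, bands):
    """Exact inverse of haar2d."""
    lh, hl, hh = bands
    a = np.empty((ll.shape[0], 2 * ll.shape[1]))
    d = np.empty_like(a)
    a[:, 0::2], a[:, 1::2] = (ll + lh) / SQ2, (ll - lh) / SQ2
    d[:, 0::2], d[:, 1::2] = (hl + hh) / SQ2, (hl - hh) / SQ2
    x = np.empty((2 * a.shape[0], a.shape[1]))
    x[0::2], x[1::2] = (a + d) / SQ2, (a - d) / SQ2
    return x


def qim(c, bits, delta):
    """Binary QIM: snap each coefficient to the lattice selected by its bit."""
    offset = bits * delta / 2
    return delta * np.round((c - offset) / delta) + offset


def embed(image, bits, delta=20.0):
    """Embed bits into level-2 HL (first half) and LH (second half) coefficients."""
    bits = np.asarray(bits, dtype=float)
    ll1, bands1 = haar2d(image.astype(float))
    ll2, (lh2, hl2, hh2) = haar2d(ll1)
    if bits.size > hl2.size + lh2.size:
        raise ValueError("image too small for this payload")
    half = (bits.size + 1) // 2
    hl, lh = hl2.flatten(), lh2.flatten()    # flatten() returns copies
    hl[:half] = qim(hl[:half], bits[:half], delta)
    lh[:bits.size - half] = qim(lh[:bits.size - half], bits[half:], delta)
    ll1_marked = ihaar2d(ll2, (lh.reshape(lh2.shape), hl.reshape(hl2.shape), hh2))
    return ihaar2d(ll1_marked, bands1)


def extract(image, n_bits, delta=20.0):
    """Recover n_bits by choosing, per coefficient, the nearer of the two lattices."""
    ll1, _ = haar2d(image.astype(float))
    _, (lh2, hl2, _) = haar2d(ll1)
    half = (n_bits + 1) // 2
    c = np.concatenate([hl2.flatten()[:half], lh2.flatten()[:n_bits - half]])
    err0 = np.abs(c - qim(c, np.zeros_like(c), delta))
    err1 = np.abs(c - qim(c, np.ones_like(c), delta))
    return (err1 < err0).astype(np.uint8)


rng = np.random.default_rng(1)
cover = rng.integers(20, 236, size=(128, 128)).astype(np.uint8)  # grayscale stand-in
qr_bits = rng.integers(0, 2, size=37 * 37)                      # Version 5 module grid
marked = np.clip(np.round(embed(cover, qr_bits)), 0, 255).astype(np.uint8)
print(np.array_equal(extract(marked, qr_bits.size), qr_bits))   # True
```

With this orthonormal transform each second-level coefficient is a ±1/4-weighted sum of 16 pixels, so rounding the watermarked image to 8-bit integers shifts a coefficient by at most 2, well within the Δ/4 = 5 decoding margin.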

5.2. Handling Multiple Watermarks (Derivative Works)

When derivative works are created (e.g., edited versions), the process repeats as follows:

1. Generate a new perceptual hash $H'$ from the edited image;

2. Embed a new watermark containing $H'$;

3. Register a new blockchain transaction containing $H'$ and a reference to the previous transaction.

The blockchain’s immutable timeline enables any party to trace the hash sequence ($H \rightarrow H' \rightarrow H'' \rightarrow \cdots$), providing auditable proof of creation and modification chronology.
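The lineage trace can be sketched as a walk over registration records. In this toy model, which is an assumption rather than the on-chain format used in the paper, each record stores a perceptual hash, a block timestamp, and the identifier of its parent transaction; the function reconstructs the hash sequence from a derivative back to the original and rejects any chain in which a parent is not strictly older than its child (which also rules out cycles). Transaction identifiers, hash labels, and timestamps are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Registration:
    tx_id: str
    perceptual_hash: str       # hex-encoded 256-bit hash (short labels in the demo)
    timestamp: int             # block timestamp in Unix seconds
    parent_tx: Optional[str]   # None for a first-time registration


def trace_lineage(ledger: Dict[str, Registration], tx_id: str) -> List[str]:
    """Return the perceptual-hash sequence from the original work to tx_id."""
    chain: List[Registration] = []
    current: Optional[str] = tx_id
    while current is not None:
        record = ledger[current]
        if chain and record.timestamp >= chain[-1].timestamp:
            raise ValueError(f"parent {record.tx_id} is not older than its child")
        chain.append(record)
        current = record.parent_tx
    return [r.perceptual_hash for r in reversed(chain)]


ledger = {
    "tx1": Registration("tx1", "H1", 1_700_000_000, None),
    "tx2": Registration("tx2", "H2", 1_700_050_000, "tx1"),
    "tx3": Registration("tx3", "H3", 1_700_090_000, "tx2"),
}
print(trace_lineage(ledger, "tx3"))  # ['H1', 'H2', 'H3']
```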

6. Experimental Setup and Results

6.1. Experimental Setup

To evaluate the proposed system, we designed experiments targeting its two core components: the AI perceptual hashing module and the digital watermarking system.

6.1.1. Datasets

We employed two distinct datasets to ensure thorough evaluation:

1. The UCID v2 dataset: A standard benchmark of 1338 uncompressed color images of natural scenes, providing baseline performance for conventional photographs.

2. An Original Digital Art dataset: To test generalizability, we curated a dataset of 200 diverse digital artworks, including stylized illustrations, digital paintings, and synthetic media (e.g., AI-generated art). It represents modern digital creations for which copyright protection is highly relevant. The dataset is available from the corresponding authors upon reasonable request for non-commercial research purposes.

The CNN model (VGG16) was used as a fixed feature extractor without dataset-specific fine-tuning. Because no training was involved, all images from both datasets were used for testing, which evaluates the out-of-the-box generalization of the pre-trained model.

6.1.2. AI Perceptual Hashing Model

1. Base Model: VGG16, pre-trained on ImageNet. VGG16 was chosen over newer architectures (e.g., ResNet or MobileNet) for its stable, well-understood feature representations and competitive robustness in perceptual tasks [12,16]. Its architectural simplicity also facilitates reproducibility, and in our experiments it achieved the lowest BER among the tested hashing methods (Table 2).

2. Feature Extraction Layer: The block5_pool layer (output shape 7 × 7 × 512) was selected for its high-level, semantically rich features, which are robust to minor pixel-level changes.

3. Hash Generation: The 7 × 7 × 512 feature map is flattened into a 25,088-dimensional vector. Principal Component Analysis (PCA) reduces it to 256 dimensions, concentrating the most salient information. The 256-dimensional vector is then binarized by setting values above the median to 1 and all others to 0, producing a fixed-length 256-bit perceptual hash $H$.

6.1.3. Digital Watermarking Setup

1. Watermark Payload: The 256-bit hash $H$ (converted to a 64-character hexadecimal string) and a short copyright identifier (“(C)TSNU”).

2. QR Code: QR Code Version 5 (37 × 37 modules), offering a capacity of 86 alphanumeric characters, sufficient for our payload and error-correction requirements.

3. Embedding Domain: A two-level Discrete Wavelet Transform (DWT) with Haar wavelets. The original image is decomposed into LL, LH, HL, and HH sub-bands, and the LL sub-band is decomposed further. The binary QR code sequence is embedded into mid-frequency coefficients of the second-level HL and LH sub-bands using quantization-based embedding with a fixed step size $\Delta$.
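The payload arithmetic can be checked in a few lines of Python. Each hexadecimal character carries 4 bits, so 256 bits become 64 characters, and with the 7-character identifier the payload is 71 characters, within the stated 86-character budget. The sketch assumes uppercase hex and places the identifier first (the ordering is not specified in the text); it does not generate the QR symbol itself, and the helper names are hypothetical.

```python
# QR alphanumeric-mode character set (ISO/IEC 18004)
QR_ALPHANUMERIC = set("0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ $%*+-./:")
CAPACITY = 86  # alphanumeric capacity quoted in the text for QR Version 5


def bits_to_hex(bits):
    """Convert a list of 0/1 values (length divisible by 4) to uppercase hex."""
    return "".join(
        format(int("".join(map(str, bits[i:i + 4])), 2), "X")
        for i in range(0, len(bits), 4)
    )


def build_payload(bits, identifier="(C)TSNU"):
    """Concatenate identifier and hex hash, enforcing the capacity budget."""
    payload = identifier + bits_to_hex(bits)
    if len(payload) > CAPACITY:
        raise ValueError("payload exceeds the QR Version 5 budget")
    return payload


bits = [1, 0, 1, 0] * 64                        # placeholder 256-bit hash
payload = build_payload(bits)
print(len(payload))                             # 71
print(sorted(set(payload) - QR_ALPHANUMERIC))   # ['(', ')']
```

All uppercase hex digits belong to the QR alphanumeric set, but the parentheses in “(C)TSNU” do not, so a real encoder would place the identifier in a byte-mode segment (8 bits per character instead of 5.5), which consumes somewhat more of the symbol’s capacity than the character count alone suggests.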

6.1.4. Comparison Baselines

Our proposed CNN-based hashing method (Proposed-CNN) was compared against two traditional perceptual hashing methods and two contemporary deep learning approaches:

1. DCT-Based Hash [7]: A classical method in which the image is converted to grayscale, resized to 32 × 32, and transformed with the DCT. The top-left 8 × 8 block of low-frequency coefficients is selected, compared with its median, and binarized to form a 64-bit hash.

2. Radial-Variance Hash (Radial) [8]: A method that projects image luminance along radial lines and computes the variance of these projections to generate the hash. We used the authors’ implementation to produce 256-bit hashes.

3. DeepHash (Li et al. 2024) [7]: A state-of-the-art deep hashing method that uses high-resolution features for image retrieval, re-implemented with the same hash length (256 bits) for fair comparison.

4. HashShield (Yang et al. 2025) [9]: A recent DeepFake forensics framework with separable perceptual hashing, adapted to our copyright protection tasks.

5. Implementation Details for Deep Baselines: For fair comparison, DeepHash [7] and HashShield [9] were re-implemented from their official code repositories and trained on the same UCID v2 dataset. All methods used the same hash length (256 bits) and were evaluated under identical experimental conditions. Training parameters followed the original publications: DeepHash used an HRNet-W48 backbone with contrastive learning, while HashShield used ResNet-50 with separable hash learning.
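For reference, the DCT-based baseline in item 1 can be sketched in NumPy. This is an illustrative reimplementation under stated assumptions, not the code used in our experiments: grayscale conversion uses ITU-R BT.601 luma weights, resizing uses nearest-neighbour sampling to avoid an imaging-library dependency, the DCT is the orthonormal DCT-II written as a matrix product, and the 8 × 8 block includes the DC coefficient (which is what yields 64 bits). The random test image is a hypothetical stand-in.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def dct_hash(rgb):
    """64-bit DCT hash of an (H, W, 3) RGB array."""
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])  # BT.601 luma
    rows = np.arange(32) * gray.shape[0] // 32   # nearest-neighbour resize to 32 x 32
    cols = np.arange(32) * gray.shape[1] // 32
    small = gray[np.ix_(rows, cols)]
    c = dct_matrix(32)
    coeffs = c @ small @ c.T                     # separable 2-D DCT-II
    block = coeffs[:8, :8]                       # lowest frequencies, DC term included
    return (block > np.median(block)).astype(np.uint8).ravel()


rng = np.random.default_rng(2)
photo = rng.integers(0, 201, size=(96, 128, 3)).astype(np.uint8)
brighter = (photo.astype(int) + 10).astype(np.uint8)  # values stay <= 210, no clipping
print(dct_hash(photo).size, np.array_equal(dct_hash(photo), dct_hash(brighter)))  # 64 True
```

A uniform brightness offset changes only the DC coefficient, which already lies above the median, so the hash is unchanged in this example; local edits such as cropping alter the AC coefficients and therefore the hash.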

6.1.5. Attack Types for Robustness Evaluation

To simulate real-world conditions, the following common image processing operations and attacks were applied:

1. JPEG Compression: quality factors (QF) of 10, 30, 50, 70, and 90;
2. Gaussian Noise: additive noise with standard deviations of 0.5%, 1.0%, 1.5%, and 2.0% of the maximum pixel intensity;
3. Scaling: scaling ratios of 50%, 75%, 125%, and 150%;
4. Brightness Adjustment: ±10% and ±20% adjustments;
5. Contrast Adjustment: ±10% and ±20% adjustments;
6. Cropping: center cropping of 5%, 10%, and 15%.
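Several of the listed perturbations can be reproduced with plain NumPy, as sketched below. The parameterizations are assumptions, since the text does not define them precisely: the noise standard deviation is a fraction of 255, brightness scales pixel values by (1 + b), contrast stretches values about the global mean by (1 + c), and a crop fraction p removes p/2 of the height and width from each side. JPEG compression and rescaling require an image library (for example, Pillow) and are omitted.

```python
import numpy as np


def gaussian_noise(img, sigma_frac, rng):
    """Additive Gaussian noise with std = sigma_frac * 255 (0.01 means 1.0%)."""
    noisy = img.astype(float) + rng.normal(0.0, sigma_frac * 255.0, size=img.shape)
    return np.clip(np.round(noisy), 0, 255).astype(np.uint8)


def brightness(img, b):
    """Scale intensities by (1 + b): b = 0.1 is +10%, b = -0.2 is -20%."""
    return np.clip(np.round(img.astype(float) * (1.0 + b)), 0, 255).astype(np.uint8)


def contrast(img, c):
    """Stretch (c > 0) or compress (c < 0) intensities about the global mean."""
    x = img.astype(float)
    m = x.mean()
    return np.clip(np.round((x - m) * (1.0 + c) + m), 0, 255).astype(np.uint8)


def center_crop(img, p):
    """Remove p/2 of the height and width from each side (p = 0.10 for 10%)."""
    h, w = img.shape[:2]
    dh, dw = int(round(h * p / 2)), int(round(w * p / 2))
    return img[dh:h - dh, dw:w - dw]


# Hypothetical usage: build the attacked variants listed above for one image.
rng = np.random.default_rng(3)
img = rng.integers(0, 256, size=(100, 80, 3)).astype(np.uint8)
variants = {f"noise_{s}": gaussian_noise(img, s, rng) for s in (0.005, 0.01, 0.015, 0.02)}
variants.update({f"brightness_{b:+}": brightness(img, b) for b in (-0.2, -0.1, 0.1, 0.2)})
variants.update({f"contrast_{c:+}": contrast(img, c) for c in (-0.2, -0.1, 0.1, 0.2)})
variants.update({f"crop_{p}": center_crop(img, p) for p in (0.05, 0.10, 0.15)})
print(len(variants), variants["crop_0.1"].shape)  # 15 (90, 72, 3)
```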

6.2. Evaluation Metrics

1. Robustness: Bit Error Rate (BER), the proportion of differing bits between the perceptual hashes of the original and processed images. Lower BER indicates higher robustness.

2. Discriminability: The average normalized Hamming distance between the hashes of 10,000 randomly selected pairs of perceptually different images from the UCID dataset; ideal values are close to 50%. We additionally report BER for visually similar images (different exposures of the same scene).

3. Watermark Imperceptibility: Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) between original and watermarked images. PSNR > 35 dB and SSIM > 0.95 indicate high imperceptibility.

4. Watermark Recovery Success Rate: The percentage of images from which the QR code watermark could be extracted and correctly decoded after each attack.
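The hash and image-quality metrics above reduce to short formulas. The sketch below computes BER as the normalized Hamming distance between two equal-length bit vectors and PSNR for 8-bit data as 10·log10(255² / MSE). SSIM is omitted because it relies on local windowed statistics; a library implementation such as `skimage.metrics.structural_similarity` is the usual choice. The function names are illustrative.

```python
import math


def ber(h1, h2):
    """Bit error rate: fraction of positions where two equal-length hashes differ."""
    if len(h1) != len(h2):
        raise ValueError("hashes must have equal length")
    return sum(a != b for a, b in zip(h1, h2)) / len(h1)


def psnr(original, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


print(ber([1, 0, 1, 1], [1, 1, 1, 0]))                              # 0.5
print(round(psnr([100, 120, 140, 160], [101, 119, 141, 159]), 2))  # 48.13
```

For discriminability, the same `ber` function applied to hashes of unrelated images should give values near 0.5, which is the 50% ideal cited above.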

6.3. Experimental Results and Analysis

6.3.1. Perceptual Hash Robustness

Analysis and Discussion: As shown in Table 2, the Proposed-CNN method exhibits the highest robustness across all tested attacks, with markedly lower BER than the traditional methods. For instance, under strong JPEG compression (QF = 30), its BER is only 2.5%, compared with 15.8% for the DCT-based hash and 22.1% for Radial hashing. This advantage stems from the high-level CNN features being semantically meaningful and therefore insensitive to global perturbations, such as compression and noise, that leave the primary visual content unchanged. The CNN hash also maintains low BER under scaling and brightness and contrast adjustments, whereas the traditional methods degrade more noticeably. Under cropping attacks, which remove local content, BER increases for all methods, but the proposed method shows the smallest increase, indicating better tolerance.

Table 2. Bit Error Rate (BER %) under Various Image Modifications (Mean ± Standard Deviation).

Comparison with Deep Learning Baselines: The proposed method also outperforms the contemporary deep learning approaches. Compared with DeepHash [7], it achieves approximately 50–60% lower BER across all attack types, indicating more stable features. Compared with HashShield [9], which targets DeepFake forensics, it consistently achieves 30–40% lower BER, particularly under geometric transformations such as scaling and cropping. We attribute this advantage to the combination of pre-trained VGG16 features and PCA-based dimensionality reduction, which preserves semantic content while filtering noise.

6.3.2. Perceptual Hash Discriminability

Analysis and Discussion: Table 3 presents the discriminability results. For completely different image pairs, all methods produce Hamming distances close to the ideal 50%, indicating good uniqueness. However, for visually similar image pairs (e.g., different exposures of the same scene), the BER of Proposed-CNN (15.2%) is substantially lower than that of the other methods. This reflects the core advantage of the CNN approach: it maps perceptually similar images to nearby points in hash space (low BER for similar images) while keeping dissimilar images far apart (about 50% Hamming distance). This property aligns closely with the perceptual-consistency requirement of copyright management.

Table 3. Discriminability Analysis.

Discriminability vs. Deep Baselines: While all deep learning methods achieve near-ideal Hamming distances for different images (≈50%), our method demonstrates significantly lower BER for visually similar images (15.2%) compared to DeepHash (22.8%) and HashShield (18.3%). This indicates superior perceptual consistency—our hash function maps perceptually similar images closer in hash space while maintaining strong separation for distinct images. HashShield’s slightly higher Hamming distance for different images (50.1%) comes at the cost of reduced performance on similar images.

6.3.3. Watermark Imperceptibility and Robustness

Analysis and Discussion: Table 4 confirms that the embedded watermarks are highly imperceptible. Unattacked watermarked images exhibit excellent PSNR and SSIM values, and visual quality remains high even under strong attacks. Crucially, watermark recovery rates remain near-perfect under compression, noise, and scaling. This robustness derives from embedding in the DWT domain, which places the watermark in mid-frequency components that survive lossy compression without introducing visible artifacts. Recovery rates remain high (95.2%) even after 10% cropping, reflecting the resilience of QR code error correction.

Table 4. Watermark Imperceptibility and Recovery Rate.

6.3.4. Computational and Blockchain Performance

Feature extraction with VGG16 took 0.15 s per image on average on an NVIDIA Tesla V100 GPU, and the complete watermarking process (DWT + embedding) took 0.08 s on a standard CPU. These one-time costs are acceptable for copyright registration.

The blockchain component was deployed on a private Ethereum testnet (Geth v1.13.0) with Proof-of-Authority (PoA) consensus [20]. The average transaction confirmation time was 25 s at a load of 10 TPS, increasing to 42 s under a stress load of 50 TPS. Although these latencies are acceptable for registration, gas costs on the public mainnet remain a practical concern. Under current Ethereum mainnet conditions, registering a copyright transaction consumes approximately 45,000 gas on average. At a gas price of 25 Gwei and an ETH price of $3500, this amounts to approximately $3.94 per registration. This cost is acceptable for professional applications but highlights the need for lighter blockchain solutions for mass adoption. Recent intelligent frameworks for software-defined networks may offer insights into optimizing such deployments [5].
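The per-registration figure follows directly from the stated gas usage and prices (1 Gwei = 10⁻⁹ ETH):

$$45{,}000\ \text{gas} \times 25\ \tfrac{\text{Gwei}}{\text{gas}} = 1{,}125{,}000\ \text{Gwei} = 0.001125\ \text{ETH}, \qquad 0.001125\ \text{ETH} \times 3500\ \tfrac{\text{USD}}{\text{ETH}} = 3.9375\ \text{USD} \approx \$3.94.$$

Because the cost is linear in both the gas price and the ETH price, doubling either one doubles the per-registration cost.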

6.4. Security Analysis and Adversarial Robustness

We further analyzed the system’s vulnerability to modern AI-based forgery methods. Although generative adversarial networks (GANs) can produce perceptually similar forgeries, the system incorporates several inherent defenses:

1. Semantic Feature Robustness: CNN features extracted from VGG16’s block5_pool layer capture high-level semantic content that is difficult for pixel-level GAN manipulations to replicate exactly.

2. Blockchain Immutability: Even if adversaries create perceptually similar forgeries, they cannot backdate blockchain registrations, so such attacks are detectable through timestamp verification.

3. Watermark Consistency: Embedded QR code watermarks containing perceptual hashes provide an additional verification layer, since the embedded hash must remain consistent with the visual content.

In experiments with StyleGAN-generated forgeries, the perceptual hashes of high-quality forgeries showed lower BER (25–35%) than those of completely different images, yet remained sufficiently distinguishable from legitimate modifications (BER > 25%) for reliable detection.

7. Discussion

7.1. Performance Summary

The experimental results demonstrate that the proposed system addresses the three core challenges outlined in the introduction, with AI-enhanced perceptual hashing showing particular strength in maintaining robustness across diverse image modifications while preserving discriminability.

7.2. Security Considerations

We address potential security threats as follows:

1. Partial Watermark Removal: DWT-based embedding in mid-frequency coefficients provides resistance against partial removal attacks because the watermark is distributed across perceptually important components. Even with 15% cropping, recovery rates remain above 95% due to QR code error correction.

2. Blockchain Replay Attacks: Each transaction includes a timestamp and references previous blocks, making replay attacks detectable. System integrity relies on blockchain consensus rather than on transaction secrecy.

3. Model Selection Justification: While newer architectures such as ResNet and MobileNet exist, VGG16 was selected for its stable, well-understood feature representations and competitive performance in perceptual tasks [12,16]. Its architectural simplicity also facilitates reproducibility. As shown in Table 2, the chosen configuration achieved the lowest BER among the tested methods.

4. Scalability and Cost: The economic analysis highlights cost challenges for public mainnet deployment. Future work could explore layer-2 solutions or alternative blockchains, informed by recent intelligent frameworks for scalable and secure networks [5].

7.3. Limitations

Our study exhibits several limitations warranting discussion:

1. Model Generalizability: The pre-trained VGG16 model, trained primarily on natural images, shows slightly reduced robustness on highly abstract or stylized digital artworks, with average BER 8–12% higher than for natural images.

2. Computational Requirements: Although VGG16 feature extraction is performed only once per image, it requires GPU acceleration for practical deployment in high-throughput scenarios.

3. Blockchain Scalability: As quantified in our economic analysis, public mainnet deployment faces cost challenges that may limit accessibility for individual creators.

4. Adversarial Robustness: While the system resists conventional attacks, its vulnerability to sophisticated adversarial examples requires further investigation.

8. Future Work

Building on the current framework and addressing its limitations, we outline the following concrete research directions:

1. Specialized Model Development: We will develop CNN architectures designed specifically for perceptual hashing, potentially based on MobileNetV3, and train them on diverse datasets encompassing natural images, digital art, and synthetic media to improve cross-domain robustness.

2. Lightweight Blockchain Integration: We will implement the framework on high-throughput blockchains such as Polygon or adopt layer-2 scaling (e.g., zk-Rollups), targeting transaction costs below $0.01 and throughput above 1000 TPS.

3. Post-Quantum Cryptography: We will integrate NIST-standardized post-quantum signature schemes such as CRYSTALS-Dilithium (standardized as ML-DSA in FIPS 204) to protect the blockchain components against quantum computing threats.

4. Real-World Deployment: We will conduct large-scale field testing with digital art platforms and content creators to validate practical usability and performance under real-world conditions.

9. Conclusions

This paper has presented a novel digital copyright management framework that synergistically integrates AI-enhanced perceptual hashing, blockchain technology, and digital watermarking. Beyond simply combining these technologies, our key conceptual innovations include: (i) utilizing CNN-derived perceptual features as content-binding, unforgeable watermarks; (ii) establishing verifiable lineage for derivative works through blockchain-chained hashes; and (iii) creating a completely decentralized trust architecture that eliminates single points of failure.

Experimental validation demonstrates that our approach outperforms traditional methods, with strong robustness against common image modifications (BER < 8% under most attacks), high discriminability between different images (normalized Hamming distance ≈ 50%), and reliable watermark recovery (success rate > 95%). The framework provides a practical, transparent solution for digital rights management that addresses both technical protection and trust verification challenges.

As digital content continues to proliferate and evolve, our work establishes a foundation for next-generation copyright protection systems that leverage the complementary strengths of artificial intelligence and blockchain technologies, with potential extensions to cultural heritage management and other digital asset domains [6].

Author Contributions

Conceptualization, Z.M. and R.Z.; Methodology, Z.M. and B.C.; Software, Z.M. and M.Z.; Validation, Y.L., H.X., and M.Y.; Formal analysis, Z.M. and R.Z.; Investigation, M.Z. and B.C.; Resources, R.Z. and Y.L.; Data curation, Y.L. and H.X.; Writing—original draft preparation, Z.M.; Writing—review and editing, H.X. and M.Y.; Visualization, M.Z.; Supervision, R.Z.; Project administration, Z.M.; Funding acquisition, Z.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the 2025 Gansu Province College Teachers Innovation Fund, grant number 2025B-167.

Data Availability Statement

The UCID v2 dataset used in this study is publicly available. The Original Digital Art dataset is available from the corresponding author upon reasonable request.

Acknowledgments

The authors thank the anonymous reviewers for their constructive comments.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| BER | Bit Error Rate |
| CID | Content Identifier (IPFS) |
| CNN | Convolutional Neural Network |
| DCT | Discrete Cosine Transform |
| DRM | Digital Rights Management |
| DWT | Discrete Wavelet Transform |
| ECDSA | Elliptic Curve Digital Signature Algorithm |
| IPFS | InterPlanetary File System |
| PCA | Principal Component Analysis |
| PoA | Proof-of-Authority |
| PQC | Post-Quantum Cryptography |
| PSNR | Peak Signal-to-Noise Ratio |
| QR Code | Quick Response Code |
| SSIM | Structural Similarity Index Measure |
| SVD | Singular Value Decomposition |
| TPS | Transactions Per Second |
| TTP | Trusted Third Party |

References

1. Cox, I.J.; Miller, M.L.; Bloom, J.A.; Fridrich, J.; Kalker, T. Digital Watermarking and Steganography; Morgan Kaufmann Publishers: Burlington, MA, USA, 2007.

2. Langelaar, G.C.; Setyawan, I.; Lagendijk, R.L. Watermarking Digital Image and Video Data: A State-of-the-Art Overview. IEEE Signal Process. Mag. 2000, 17, 20–46.

3. Madushanka, T.; Kumara, D.S.; Rathnaveera, A.A. SecureRights: A Blockchain-Powered Trusted DRM Framework for Robust Protection and Asserting Digital Rights. arXiv 2024, arXiv:2403.06094.

4. Zhang, Q.; Wu, G.; Yang, R.; Chen, J. Digital Image Copyright Protection Method Based on Blockchain and Zero Trust Mechanism. Multimed. Tools Appl. 2024, 83, 12345–12367.

5. Mozumder, A.H.; Basha, M.J. SmartSecChain-SDN: A Blockchain-Integrated Intelligent Framework for Secure and Efficient Software-Defined Networks. arXiv 2025, arXiv:2511.05156.

6. Bonnacini, L.; Buzzanca, M. Blockchain and Cultural Heritage: New Perspectives for the Museum of the Future. In Blockchain in Cultural Heritage; Springer: Cham, Switzerland, 2020; pp. 1–21.

7. Li, Y.; Wang, Z.; Chen, J.; Zhang, W. Leveraging High-Resolution Features for Improved Deep Hashing-based Image Retrieval. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 12456–12465.

8. Zauner, C. Implementation and Benchmarking of Perceptual Image Hash Functions. Master’s Thesis, University of Applied Sciences Hagenberg, Hagenberg, Austria, 2010.

9. Yang, M.; Qi, B.; Ma, R.; Xian, Y.; Ma, B. HashShield: A Robust DeepFake Forensic Framework With Separable Perceptual Hashing. IEEE Signal Process. Lett. 2025, 32, 1186–1190.

10. Meng, Z.; Morizumi, T.; Miyata, S.; Kinoshita, H. Design Scheme of Copyright Management System Based on Digital Watermarking and Blockchain. In Proceedings of the 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC), Tokyo, Japan, 23–27 July 2018; Volume 2, pp. 359–364.

11. Litvinenko, V.; Li, J.; Zhang, Y. Hyperspectral Image Analysis with Subspace Learning-Based Perceptual Hashing for Authentication. IEEE Access 2023, 11, 45678–45689.

12. Berriche, A.; Adjal, M.Z.; Baghdadi, R. Leveraging high-resolution features for improved deep hashing-based image retrieval. In Proceedings of the European Conference on Information Retrieval, Cham, Switzerland, 6–10 April 2025; pp. 440–453.

13. Parlak, I.E. Blockchain-Assisted Explainable Decision Traces (BAXDT): An Approach for Transparency and Accountability in Artificial Intelligence Systems. Knowl.-Based Syst. 2025, 329, 114402.

14. Zhang, Q.Y.; Wu, G.R. Digital image copyright protection method based on blockchain and perceptual hashing. Int. J. Netw. Secur. 2023, 25, 10–24.

15. Muwafaq, A.; Alsaad, S.N. Design scheme for copyright management system using blockchain and IPFS. Int. J. Comput. Digit. Syst. 2021, 10, 613–618.

16. Gao, G.; Qin, C.; Fang, Y.; Zhou, Y. Perceptual authentication hashing for digital images with contrastive unsupervised learning. IEEE Multimed. 2023, 30, 129–140.

17. Meng, Z.; Morizumi, T.; Miyata, S.; Kinoshita, H. An Improved Design Scheme for Perceptual Hashing based on CNN for Digital Watermarking. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 1789–1794.

18. Rhayma, H.; Ejbali, R.; Hamam, H. Auto-authentication watermarking scheme based on CNN and perceptual hash function in the wavelet domain. Multimed. Tools Appl. 2024, 83, 60079–60100.

19. Xu, D.; Ren, N.; Zhu, C. Integrity authentication based on blockchain and perceptual hash for remote-sensing imagery. Remote Sens. 2023, 15, 4860.

20. Zhao, Y.; Qu, Y.; Xiang, Y.; Chen, F.; Gao, L. Context-Aware Consensus Algorithm for Blockchain-Empowered Federated Learning. IEEE Trans. Cloud Comput. 2024, 12, 491–503.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.