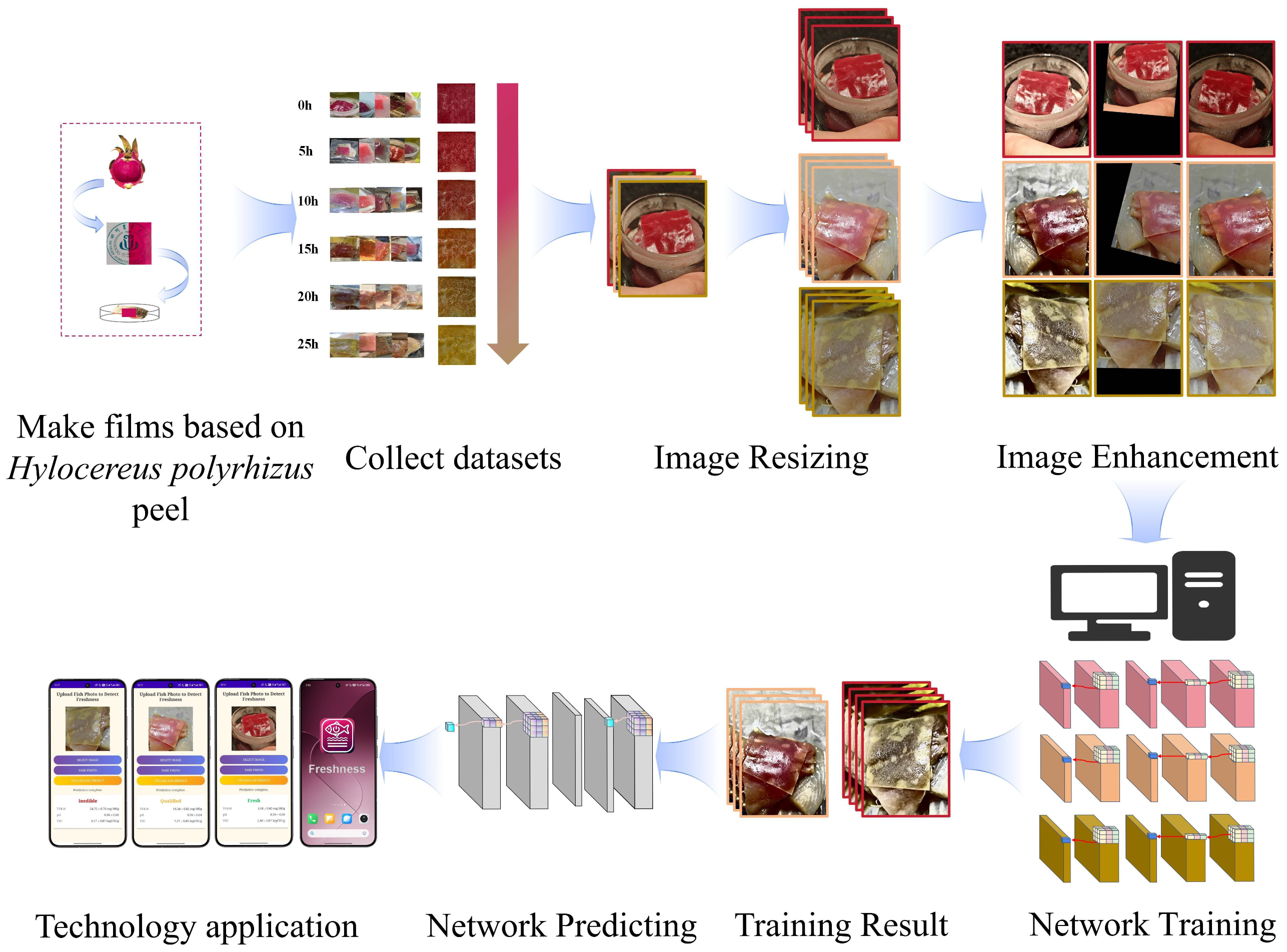

A Smartphone-Based Non-Destructive Multimodal Deep Learning Approach Using pH-Sensitive Pitaya Peel Films for Real-Time Fish Freshness Detection

Abstract

1. Introduction

- (1) Objective Quantification: Smartphone cameras, when coupled with sophisticated image-processing algorithms and deep learning models as proposed in this work, can objectively capture and quantify subtle color changes that might be indicative of early-stage spoilage, often beyond the consistent discernment capabilities of the human eye. This leads to more precise and reproducible freshness assessments.

- (2) Data Logging and Traceability: As demonstrated by the application developed in this study (Section 4.6), smartphones can automatically record detection results, including timestamps, images, and associated chemical data, thereby creating a valuable digital log for quality assurance, supply chain management, and traceability.

- (3) Standardization: A smartphone-based detection system can enforce a standardized protocol for image acquisition and analysis, minimizing variability introduced by different operators or viewing conditions.

- (4) Enhanced Accessibility and Portability: The widespread availability, low cost, and inherent portability of smartphones make them ideal tools for convenient on-site and real-time freshness monitoring by a broad range of users, from consumers to food industry professionals.

- (5) Connectivity and Advanced Analytics Potential: Smartphone-derived data can be easily shared or integrated with cloud platforms for remote monitoring, trend analysis, or integration into larger food safety management systems.

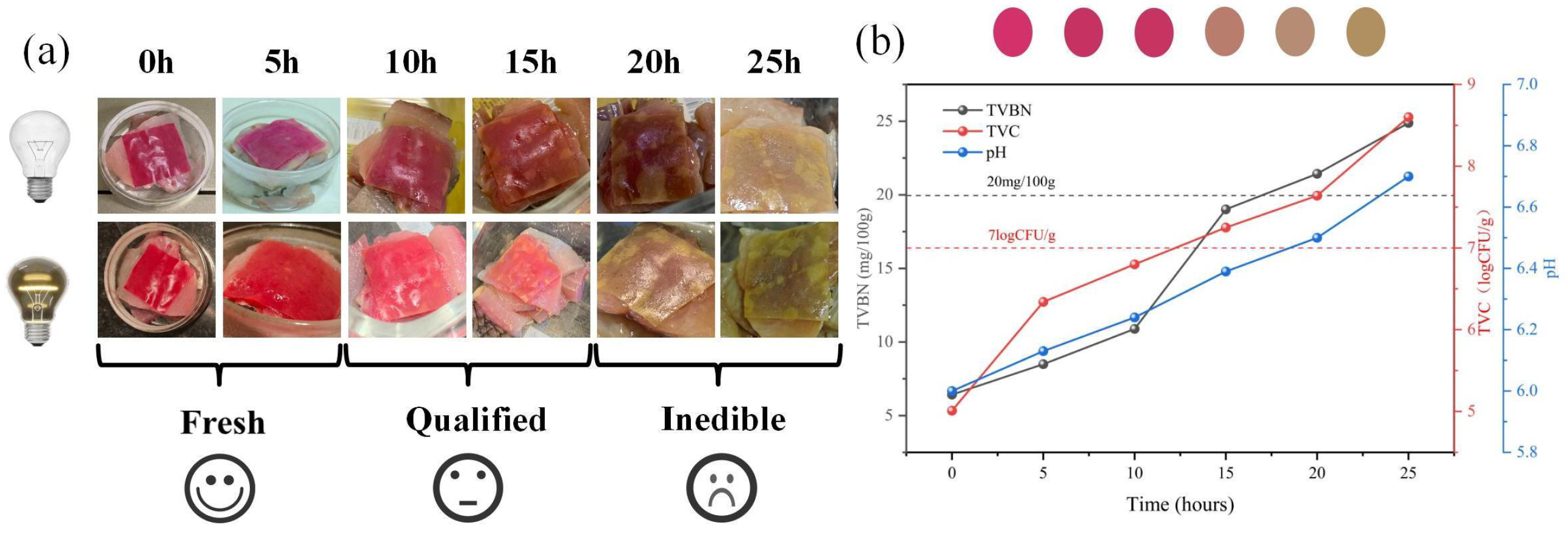

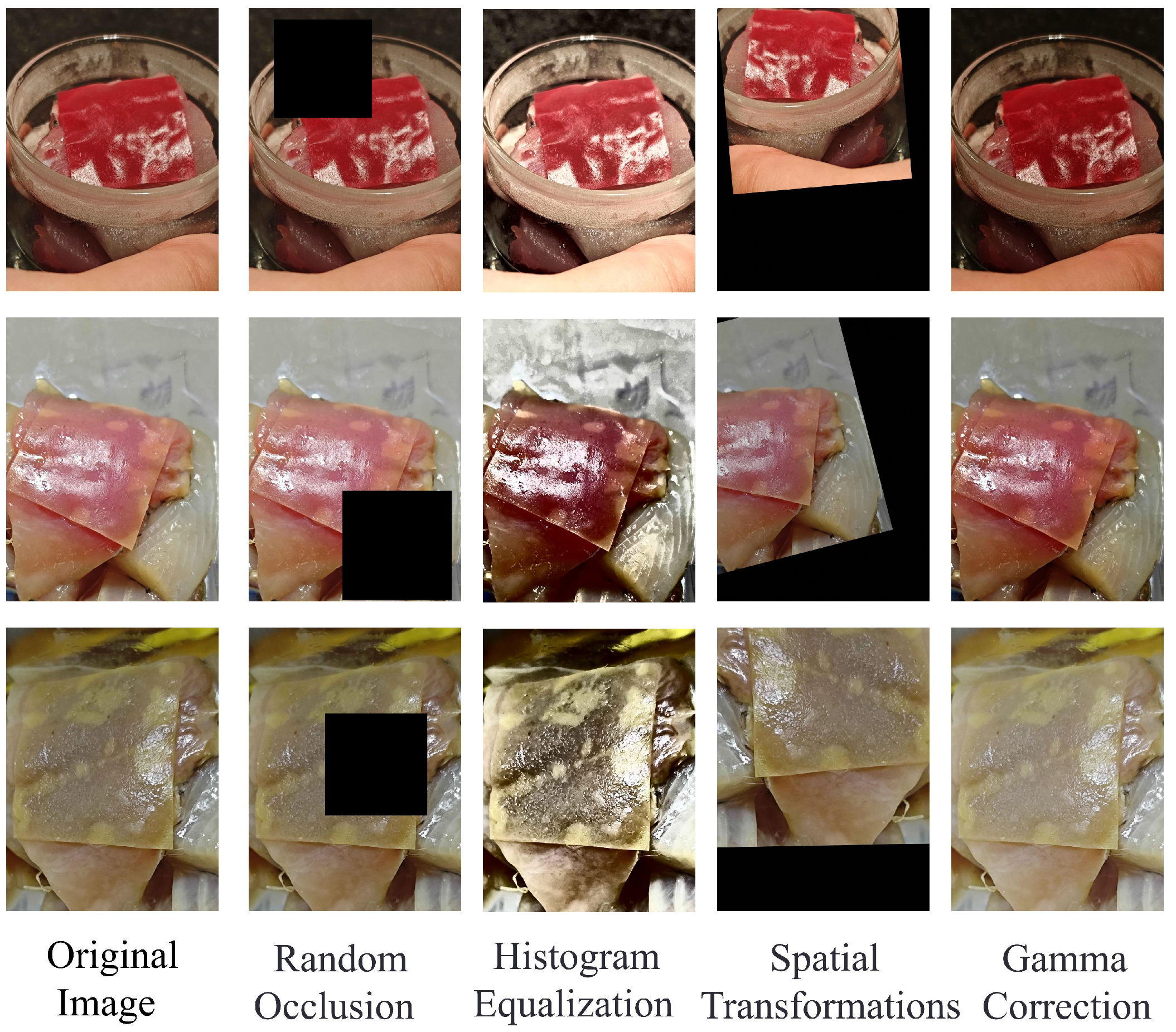

2. Materials and Data Preprocessing

2.1. Preparation of Pitaya Peel pH Indicator Films

2.2. Performance Characterization of the HPH-Treated Indicator Film

2.3. Experimental Setup for Freshness Detection

3. Methods

3.1. Overall Model Architecture Design

- (1)

- An image feature extraction branch, responsible for processing visual cues from the pH indicator film.

- (2)

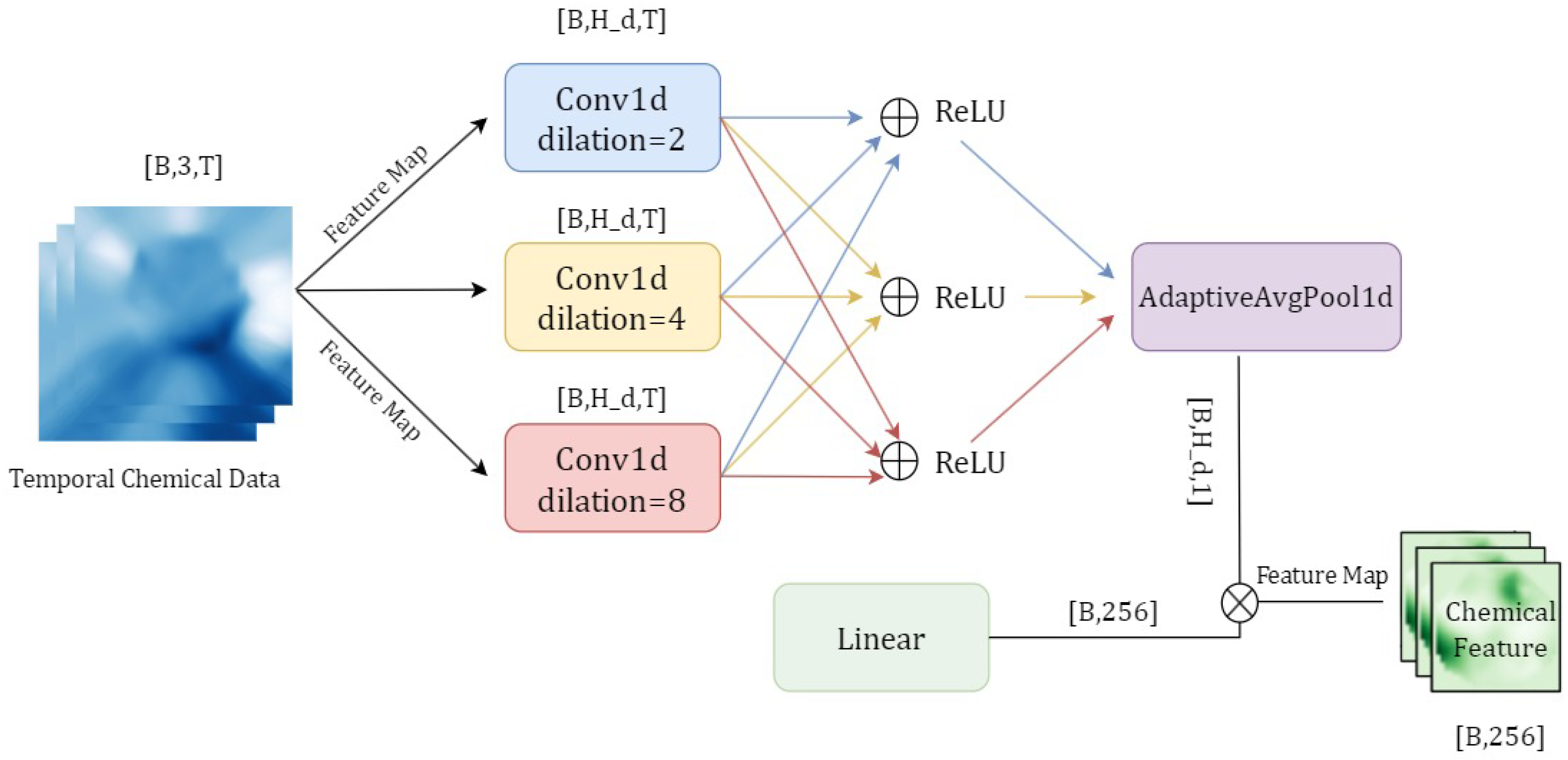

- A chemical data-processing branch, specifically designed to model the temporal dynamics of sequentially collected chemical indicators (pH, TVB-N, TVC) using a Temporal Convolutional Network (TCN).

- (3)

- A Context-Aware Gated Fusion (CAG-Fusion) module, tasked with adaptively integrating the features from these distinct modalities.
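To make the role of the fusion module more concrete, the following is a minimal sketch of a gated fusion step in plain Python. It borrows only the dimensions listed in the architecture table (two 256-dimensional feature vectors, a cosine similarity scalar, and a gating MLP of shape 513→64→1 with ReLU and sigmoid). The convex-combination form `g * f_img + (1 - g) * f_chem`, the random weight initialization, and all function names are assumptions made for illustration; they are not taken from the authors' implementation.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-8)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def init_linear(n_in, n_out, rng):
    """Hypothetical random initialization, standing in for trained weights."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def gated_fusion(f_img, f_chem, params):
    """Fuse two feature vectors with a scalar gate g in (0, 1).

    Assumed form: fused = g * f_img + (1 - g) * f_chem.
    """
    s = cosine_similarity(f_img, f_chem)
    gate_in = f_img + f_chem + [s]            # 256 + 256 + 1 = 513 inputs
    (w1, b1), (w2, b2) = params
    hidden = [max(0.0, h) for h in linear(gate_in, w1, b1)]   # ReLU
    g = sigmoid(linear(hidden, w2, b2)[0])                    # scalar gate
    fused = [g * i + (1.0 - g) * c for i, c in zip(f_img, f_chem)]
    return fused, g, s


rng = random.Random(0)
dim = 256
params = (init_linear(2 * dim + 1, 64, rng), init_linear(64, 1, rng))
f_img = [rng.gauss(0.0, 1.0) for _ in range(dim)]    # stand-in image features
f_chem = [rng.gauss(0.0, 1.0) for _ in range(dim)]   # stand-in chemical features
fused, g, s = gated_fusion(f_img, f_chem, params)
print(len(fused), round(g, 3), round(s, 3))
```

Because the gate is a single scalar in this sketch, every fused component lies between the corresponding image and chemical components; a per-channel gate would be an equally plausible design.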

3.2. Multi-Scale Attention Enhancement Module

- A local detail branch uses a 3 × 3 convolution with a dilation rate of 6 (effective receptive field: 13 × 13 pixels) to detect early-stage color spots (approx. 1.2 mm diameter). Its output is given by Equation (1).

- A global trend branch uses a 3 × 3 convolution with a dilation rate of 12 (effective receptive field: 25 × 25 pixels) to capture the overall hue shift in advanced spoilage. Its output is given by Equation (2).
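The span covered by each dilated branch follows from the standard relation k_eff = k + (k − 1)(d − 1) for a kernel of size k and dilation rate d. The short sketch below evaluates it for the two dilation rates, assuming the 3 × 3 kernels listed in the architecture table; the function name is hypothetical, and the span is measured on the feature map the convolution operates on.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Side length covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# Dilation rates of the two branches; the 3 x 3 kernel is taken from the architecture table.
for name, dilation in (("local detail", 6), ("global trend", 12)):
    k_eff = effective_kernel_size(3, dilation)
    print(f"{name}: dilation={dilation}, spans {k_eff} x {k_eff}")
```

Doubling the dilation rate roughly doubles the covered span while the number of weights per kernel stays at nine, which is why the two branches can target different spatial scales at the same parameter cost.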

3.3. Chemical Temporal Encoder

3.3.1. Pseudo-Temporal Sequence Construction

3.3.2. Dilated Temporal Convolution

3.3.3. Hierarchical Architecture

3.3.4. Multi-Scale Fusion

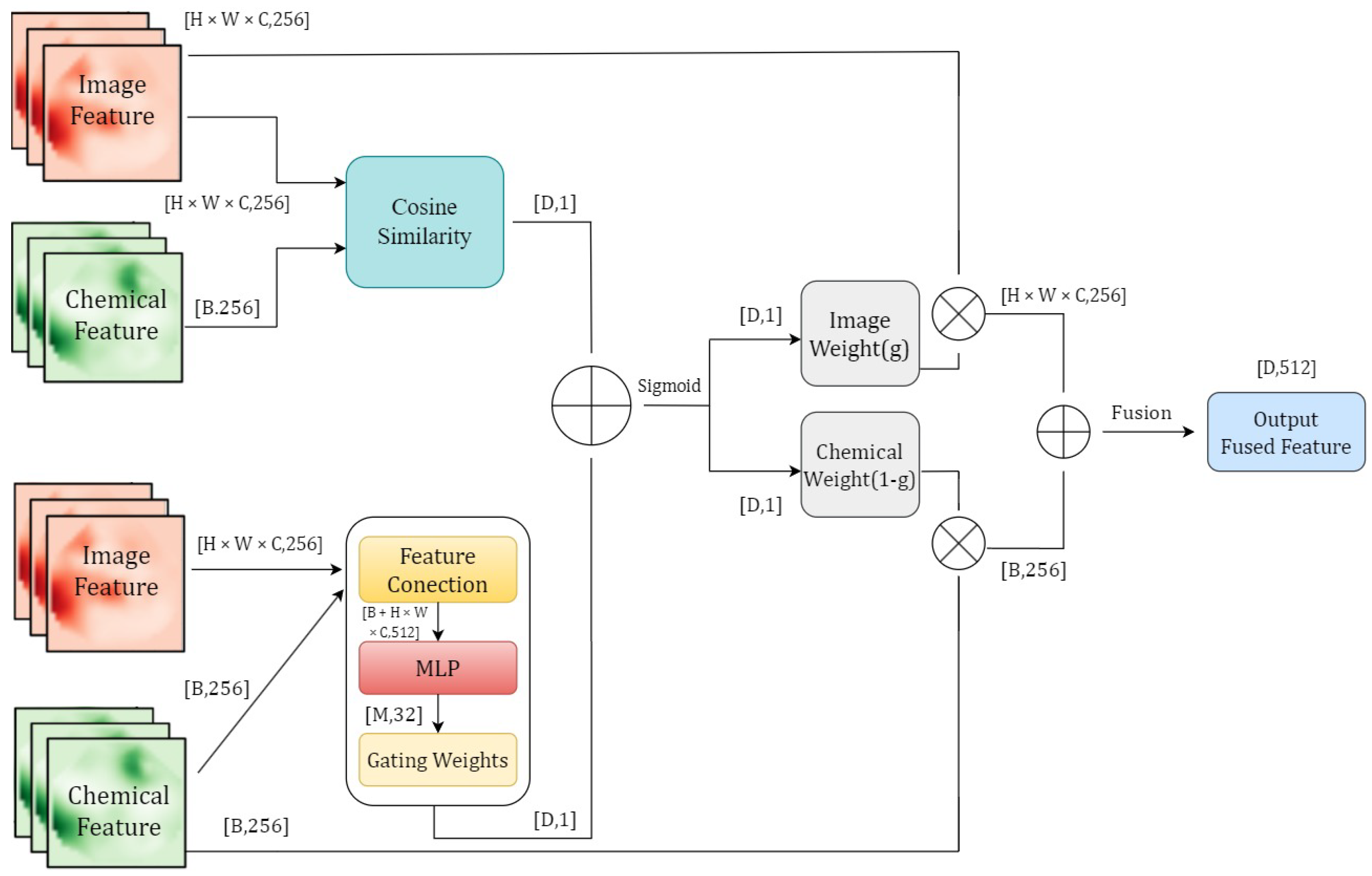

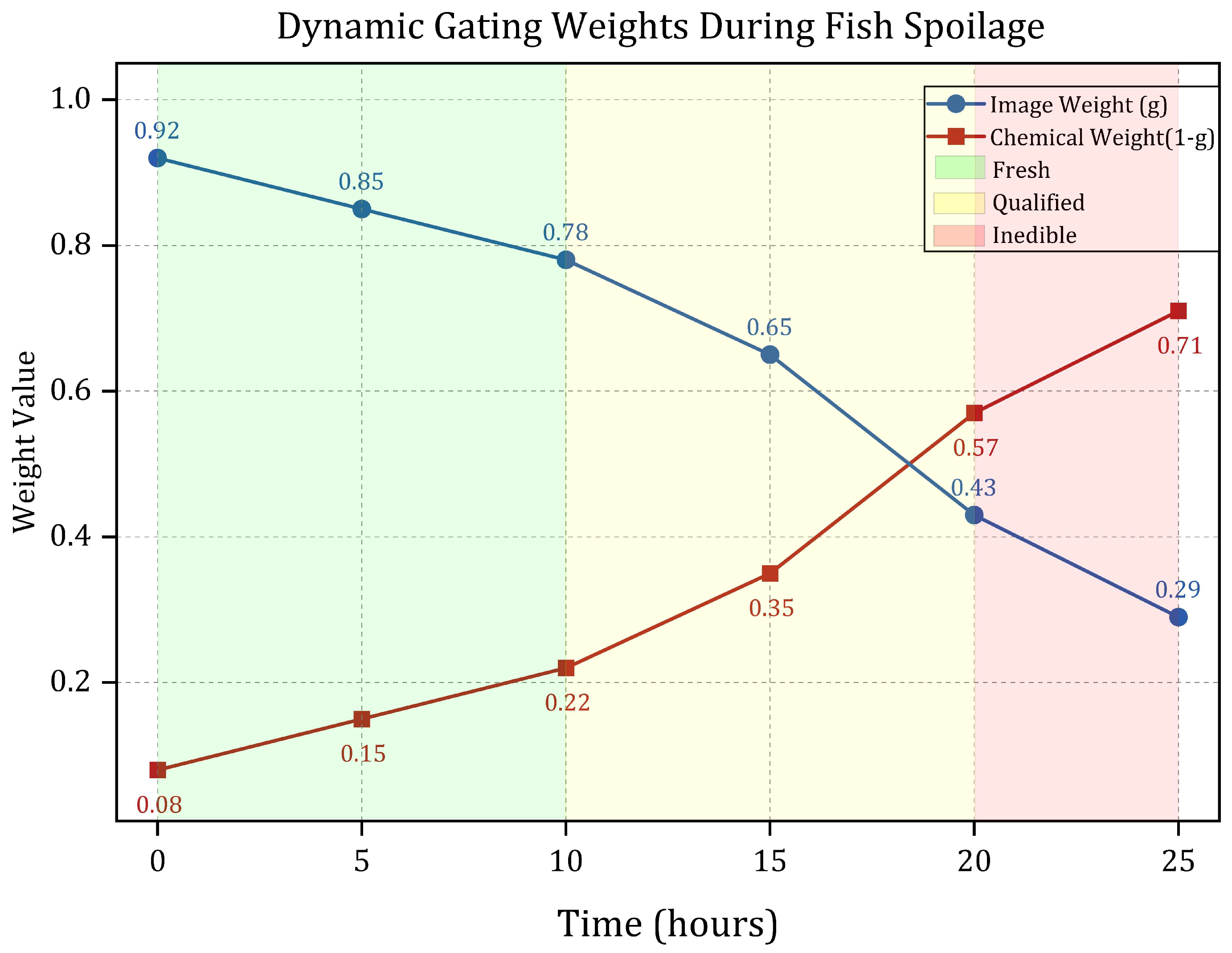

3.4. Context-Aware Gated Fusion Mechanism

3.4.1. Context-Aware Adaptation

3.4.2. Interpretability Constraints

3.4.3. System Integration

4. Results and Analysis

4.1. Experimental Setup

4.2. Performance Evaluation

4.3. Ablation Studies

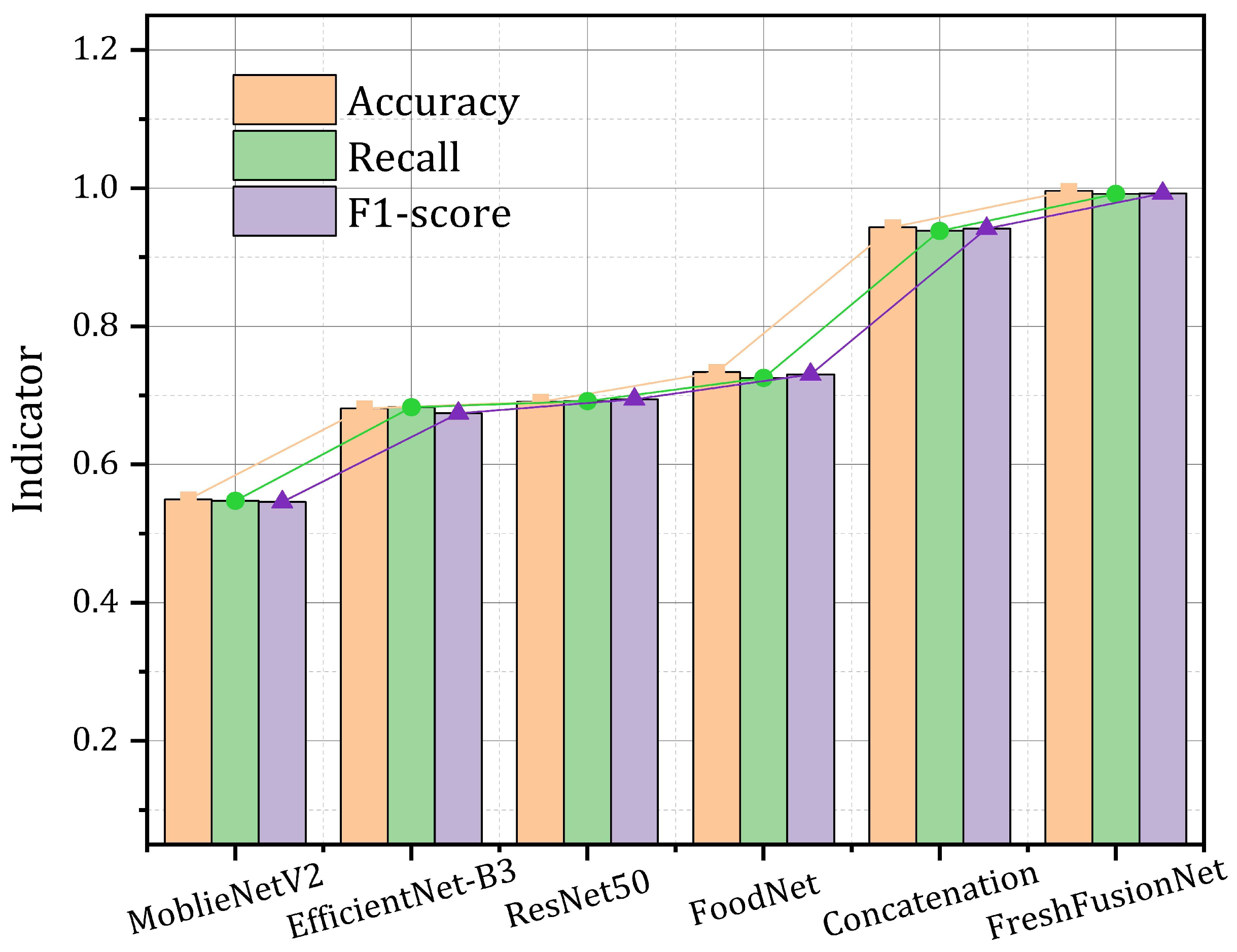

4.4. Comparative Analysis

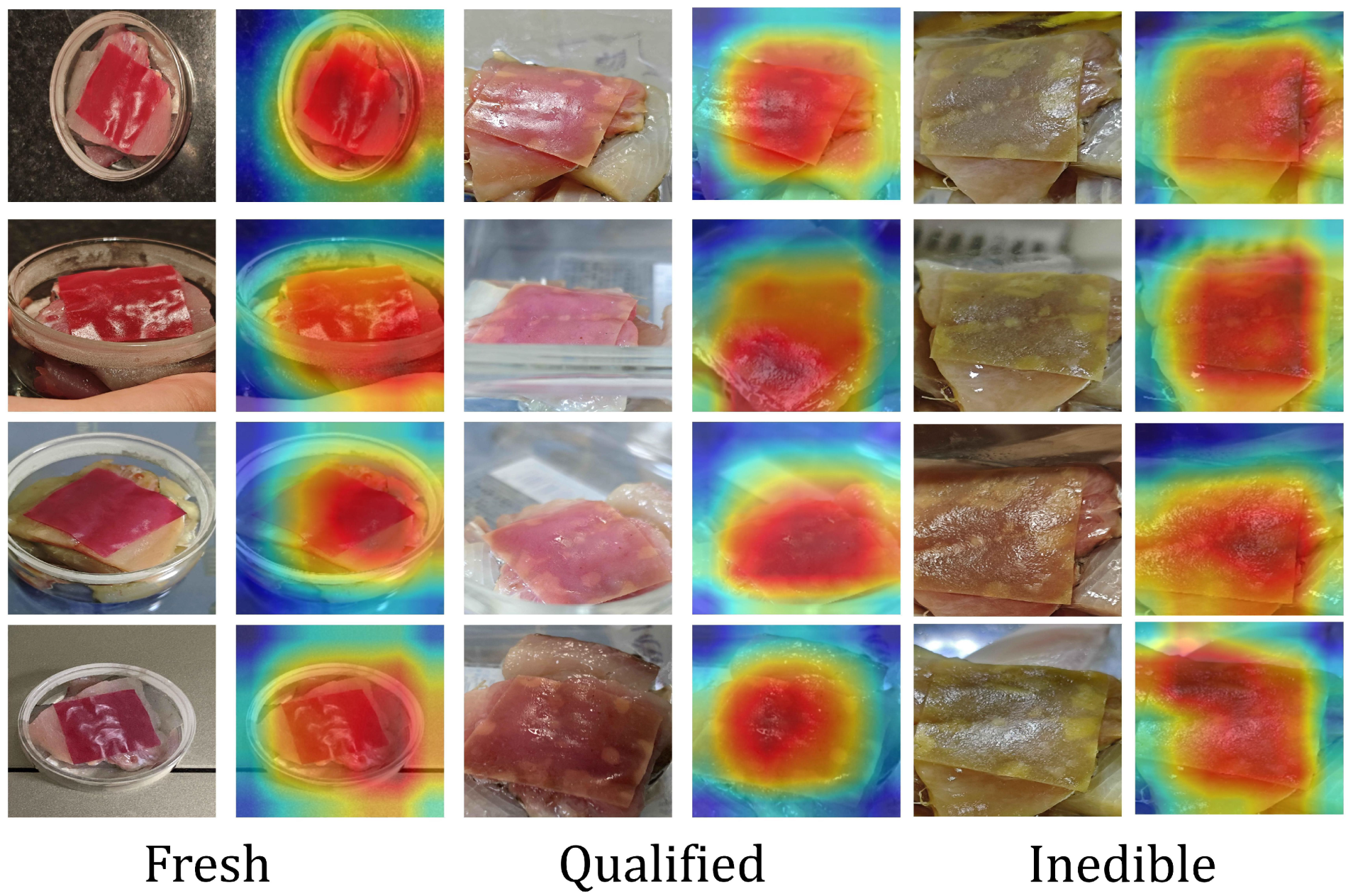

4.5. Heatmap Visualization

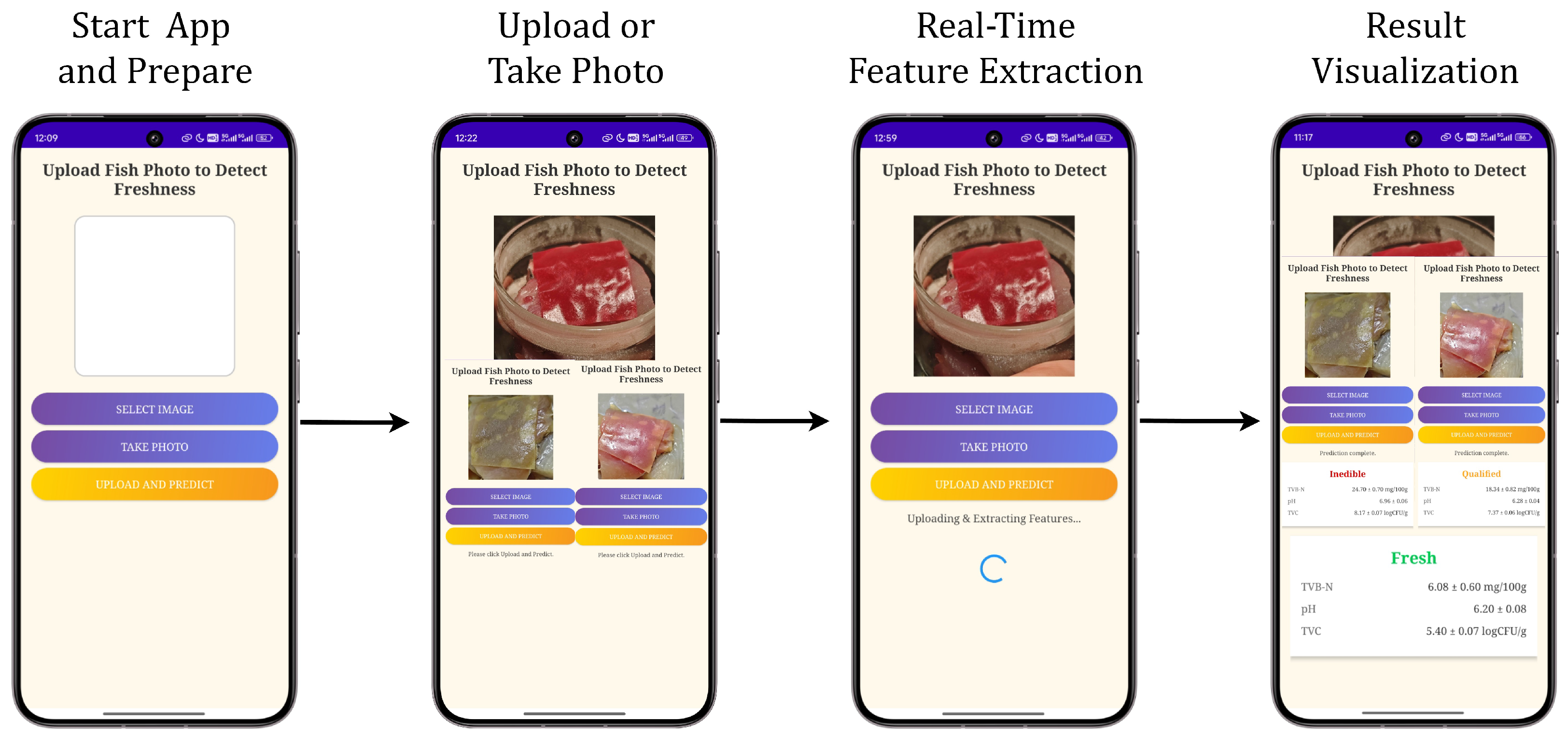

4.6. Smartphone APP Identification Process

4.6.1. Launch and Image Acquisition

4.6.2. Real-Time Feature Extraction and Prediction

4.6.3. Result Display and Visualization

4.6.4. Practical Application Performance

5. Discussion

5.1. Method Overview

5.2. Core Innovations

5.3. Limitations

- (1) Adjusting the formulation of the pH indicator film or selecting chromogenic systems that are less sensitive to the background color of red-fleshed fish;

- (2) Developing more advanced color correction or color deconvolution algorithms in the image preprocessing stage to separate or mitigate the influence of the fish’s own pigments on the indicator film’s color;

- (3) Training specific models for different types of fish (e.g., white-fleshed, red-fleshed), or incorporating additional input features into the multimodal model that can differentiate fish types, thereby enhancing the model’s adaptability and accuracy.

5.4. Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Module | Layer/Description | Input | Output | Kernel/Dil./Units | Activ. | Notes |
|---|---|---|---|---|---|---|
| Image Branch | | | | | | |
| | MobileNetV2 Backbone (Pruned) | 3 × 512 × 512 | 320 × 14 × 14 | Std. MobileNetV2 | Various | Output from pruned version |
| | MDFA (Section 3.2) | 320 × 14 × 14 | 256 × 14 × 14 | | | |
| | - Local Detail (Conv2D + BN) | 320 | 128 | 3 × 3, d = 6 | ReLU | |
| | - Global Trend (Conv2D + BN) | 320 | 128 | 3 × 3, d = 12 | ReLU | |
| | - Global Context Path (GAP, 1 × 1 Conv, BN, Interp.) | 320 | 128 | 1 × 1 | ReLU | Interpolated to 14 × 14 |
| | - Concatenation | 3 × 128 | 384 | N/A | N/A | |
| | - Fusion Conv (1 × 1 Conv + BN) | 384 | 256 | 1 × 1 | ReLU | |
| | - Spatial Attn. Gen. (1 × 1 Conv) | 256 | 256 | 1 × 1 | Sigmoid | From Fusion Conv output |
| | - Final Output | 256, 256 | 256 | Elem-mult. | N/A | |
| | AdaAvgPool2d(1) + Flatten | 256 × 14 × 14 | 256 | N/A | N/A | Branch output |
| Chemical Branch | | | | | | |
| | Input | 3 (pH, TVB-N, TVC) × T | | | | T = 6 (Figure 4) |
| | TCN (Section 3.3) | 3 × 6 | 256 | | | |
| | - Linear Expansion | 3 | 64 | N/A | ReLU | Per-timestep projection |
| | - Dilated Conv1D L1 (+ BN) | 64 | 64 | k = 3, d = 2 | ReLU | |
| | - Dilated Conv1D L2 (+ BN) | 64 | 64 | k = 3, d = 4 | ReLU | |
| | - Dilated Conv1D L3 (+ BN) | 64 | 64 | k = 3, d = 8 | ReLU | |
| | - FC (after TCN pool) | 64 (pooled) | 256 | N/A | N/A | |
| Fusion Module | | | | | | |
| | CAG-Fusion (Section 3.4) | 256, 256 | 256 | | | |
| | - Cosine Similarity (s) | 256, 256 | Scalar | N/A | N/A | |
| | - MLP for Gating g | 513 | 1 (g) | [513→64→1] | ReLU, Sig. | MLP example |
| | - Weighted Sum Fusion | 256, 256 | 256 | N/A | N/A | |
| Classification Head | | | | | | |
| | Input | 256 | | | | From CAG-Fusion |
| | Linear Layer 1 | 256 | 128 | N/A | ReLU | Matches Figure 4 logic |
| | Linear Layer 2 (Output) | 128 | 3 (classes) | N/A | N/A | Softmax applied afterwards |
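The three dilated Conv1D layers in the chemical branch (k = 3, d = 2, 4, 8) can be checked against the sequence length T = 6 with a small calculation. The sketch below computes the receptive field of the stack and applies a single-channel dilated convolution to a toy sequence. The symmetric zero padding that keeps the length at T is an assumption (the table does not state whether the padding is causal), and the function names and input values are illustrative only.

```python
def stacked_receptive_field(kernel_size, dilations):
    """Receptive field of stacked dilated 1D convolutions: 1 + sum((k - 1) * d)."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


def dilated_conv1d_same(seq, kernel, dilation):
    """Single-channel dilated 1D convolution with symmetric zero padding.

    Assumes an odd kernel size so the output keeps the input length.
    """
    k = len(kernel)
    pad = dilation * (k - 1) // 2
    out = []
    for t in range(len(seq)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t - pad + j * dilation
            if 0 <= idx < len(seq):      # taps outside the sequence read zeros
                acc += w * seq[idx]
        out.append(acc)
    return out


dilations = [2, 4, 8]
print("receptive field:", stacked_receptive_field(3, dilations))   # 1 + 2 * (2 + 4 + 8)

toy = [1, 2, 3, 4, 5, 6]                 # hypothetical values, T = 6
for d in dilations:
    print(d, dilated_conv1d_same(toy, [1, 1, 1], d))
```

The stack's receptive field (29 steps) exceeds T = 6, so every output position can in principle draw on the whole sequence. Under the zero-padding assumption used here, the outer taps of the d = 8 layer always fall outside a six-step sequence, so that layer passes only its center tap in this toy setting.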

| Model | Acc (%) | Recall (%) | F1-Score (%) | Precision (%) | Params (M) |
|---|---|---|---|---|---|
| Chem Only | 87.54 | 87.11 | 86.87 | 87.03 | 0.02 |
| Image Only | 56.73 | 56.60 | 55.41 | 56.90 | 2.59 |
| Baseline | 93.98 | 94.26 | 94.07 | 93.51 | 26.12 |
| Baseline (+SEA) | 95.83 | 96.02 | 95.79 | 95.88 | 28.25 |
| Baseline (+CBAM) | 94.23 | 94.42 | 94.25 | 94.31 | 28.23 |
| Baseline (+MDFA) | 96.79 | 96.71 | 96.99 | 97.14 | 38.67 |
| Baseline (+Fusion Gate) | 97.44 | 97.20 | 97.29 | 97.55 | 26.83 |
| FreshFusionNet (ours) | 99.61 | 99.19 | 99.25 | 99.48 | 39.32 |
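For readers who want to reproduce metrics of the kind reported above, the sketch below derives accuracy, precision, recall, and F1 from a three-class confusion matrix. Macro averaging is assumed here because the averaging scheme is not stated in the table; the confusion matrix values are invented for illustration and do not correspond to any row above.

```python
def macro_metrics(cm):
    """Accuracy plus macro-averaged precision, recall and F1.

    cm[i][j] counts samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = cm[i][i]
        fp = sum(cm[r][i] for r in range(n)) - tp   # predicted i, true class differs
        fn = sum(cm[i]) - tp                        # true i, predicted otherwise
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical confusion matrix for three freshness classes (rows: true, columns: predicted).
example_cm = [
    [50, 2, 0],
    [3, 45, 2],
    [0, 1, 47],
]
for name, value in macro_metrics(example_cm).items():
    print(f"{name}: {100 * value:.2f}%")
```

With macro averaging, the F1-score is the mean of per-class F1 values, so it does not have to equal the harmonic mean of the reported macro precision and macro recall.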

| Model | Acc (%) | Recall (%) | F1-Score (%) | Params (M) | FPS |
|---|---|---|---|---|---|
| MobileNetV2 | 54.97 | 54.74 | 54.57 | 2.23 | 17.34 |
| EfficientNet-B3 | 68.11 | 68.29 | 67.40 | 7.04 | 13.85 |
| ResNet50 | 69.07 | 69.19 | 69.45 | 23.52 | 12.93 |
| FoodNet | 73.37 | 72.54 | 73.03 | 25.46 | 13.73 |
| Concatenation | 94.35 | 93.80 | 94.14 | 26.12 | 19.62 |
| FreshFusionNet (ours) | 99.61 | 99.19 | 99.25 | 39.32 | 14.78 |
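The trade-off between accuracy, model size, and speed in the comparison above can be read off with simple arithmetic. The sketch below converts FPS to per-frame latency and compares the proposed model with the concatenation baseline, using only the values from the two corresponding table rows; the helper name and dictionary layout are illustrative.

```python
# Values copied from the comparison table (accuracy in %, parameters in millions, frames per second).
rows = {
    "Concatenation": {"acc": 94.35, "params_m": 26.12, "fps": 19.62},
    "FreshFusionNet (ours)": {"acc": 99.61, "params_m": 39.32, "fps": 14.78},
}


def latency_ms(fps):
    """Average time per frame in milliseconds for a given throughput."""
    return 1000.0 / fps


base = rows["Concatenation"]
ours = rows["FreshFusionNet (ours)"]

acc_gain = round(ours["acc"] - base["acc"], 2)                 # percentage points
extra_params = round(ours["params_m"] - base["params_m"], 2)   # millions of parameters
extra_latency = latency_ms(ours["fps"]) - latency_ms(base["fps"])

print(f"accuracy gain: {acc_gain} points")
print(f"extra parameters: {extra_params} M")
print(f"latency: {latency_ms(base['fps']):.1f} ms -> {latency_ms(ours['fps']):.1f} ms "
      f"(+{extra_latency:.1f} ms per frame)")
```

On these figures, the 5.26-point accuracy gain over plain concatenation comes with about 13.2 M additional parameters and roughly 17 ms more per frame (about 68 ms versus 51 ms).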


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, Y.; Wang, Y.; Zhou, Y.; Zhou, J.; Chen, M.; Liu, D.; Li, F.; Liu, C.; Zeng, M.; Jiang, D.; et al. A Smartphone-Based Non-Destructive Multimodal Deep Learning Approach Using pH-Sensitive Pitaya Peel Films for Real-Time Fish Freshness Detection. Foods 2025, 14, 1805. https://doi.org/10.3390/foods14101805
