Abstract

This paper presents a new evolutionary-based approach, the Subtraction-Average-Based Optimizer (SABO), for solving optimization problems. The fundamental inspiration of the SABO is to use the subtraction average of searcher agents to update the positions of population members in the search space. The steps of the SABO's implementation are described and then mathematically modeled for optimization tasks. The performance of the SABO is tested on fifty-two standard benchmark functions: twenty-three classical functions of the unimodal, high-dimensional multimodal, and fixed-dimensional multimodal types, and the twenty-nine functions of the CEC 2017 test suite. The optimization results show that the SABO effectively solves these problems by balancing exploration and exploitation during the search of the problem-solving space. The results of the SABO are compared with those of twelve well-known metaheuristic algorithms. The analysis of the simulation results shows that the SABO provides superior results for most of the benchmark functions and is clearly more competitive than its competitor algorithms. Additionally, the SABO is applied to four engineering design problems to evaluate its ability to handle real-world optimization tasks. The results show that the SABO can handle real-world applications and provides better designs than its competitor algorithms.

1. Introduction

Optimization is a pervasive concept across the sciences. An optimization problem is one that has more than one feasible solution, so the goal of optimization is to find the best solution among all the feasible ones. Mathematically, an optimization problem consists of three parts: decision variables, constraints, and an objective function [1]. The techniques for solving optimization problems fall into two groups: deterministic and stochastic approaches [2].

Deterministic approaches, which are divided into gradient-based and non-gradient-based classes, are effective for linear, convex, simple, low-dimensional, continuous, and differentiable optimization problems [3]. However, as these problems become more complex, the performance of deterministic approaches degrades, and these methods get stuck in inappropriate local optima. Many optimization problems in science and real-world applications have characteristics such as high dimensionality, high complexity, non-convex, non-continuous, non-linear, and non-differentiable objective functions, and non-linear, unknown search spaces [4]. These characteristics, together with the difficulties of deterministic approaches, have led researchers to introduce new techniques called stochastic approaches.

Metaheuristic algorithms are among the most widely used stochastic approaches for solving complex optimization problems. They are efficient at solving non-linear, non-convex, non-differentiable, high-dimensional, and NP-hard optimization problems. Their efficiency in discrete, non-linear, and unknown search spaces, the simplicity of their concepts, their easy implementation, and their independence from the type of problem are among the advantages that have made metaheuristic algorithms popular [5]. Metaheuristic algorithms are employed in various scientific applications, such as index tracking [6], energy [7,8,9,10], protection [11], energy carriers [12,13], and electrical engineering [14,15,16,17,18,19].

The optimization process of metaheuristic algorithms is based on random search in the problem-solving space and the use of random operators. First, candidate solutions are generated randomly. Then, in an iterative process governed by the steps of the algorithm, the positions of the candidate solutions in the problem-solving space are updated to improve their quality. At the end, the best candidate solution found is returned as the solution to the problem. Because the search is random, a metaheuristic algorithm is not guaranteed to reach the global optimum; for this reason, the solutions obtained from metaheuristic algorithms are called pseudo-optimal [20]. To search the problem-solving space effectively, a metaheuristic algorithm must manage its search well at both the global and local levels. Global search (exploration) scans the problem-solving space comprehensively and lets the algorithm escape from local optima. Local search (exploitation) examines the neighborhoods of promising solutions closely to converge toward better solutions. Since exploration and exploitation pursue opposite goals, the key to the success of a metaheuristic algorithm is to balance them during the search process [21].

The random nature of the search and the quasi-optimal quality of its solutions, together with the desire to obtain better quasi-optimal solutions, have led researchers to develop numerous metaheuristic algorithms.

The main research question is whether, given the many metaheuristic algorithms that have already been designed, there is still a need to introduce new ones. In response, the No Free Lunch (NFL) theorem [22] states that the success of a particular algorithm on one set of optimization problems does not guarantee the same performance on other optimization problems. Nothing guarantees in advance that applying a given algorithm to an optimization problem will succeed. According to the NFL theorem, no single metaheuristic algorithm is the best optimizer for all optimization problems. The NFL theorem therefore motivates researchers to seek better solutions by designing new metaheuristic algorithms. It has also inspired the authors of this paper to create a new metaheuristic algorithm that deals with optimization problems more effectively.

The novelty of this paper lies in the introduction of a new metaheuristic algorithm, the Subtraction-Average-Based Optimizer (SABO), for solving optimization problems in different sciences. The main contributions of this study are as follows:

- The design of the SABO is based on mathematical concepts, in particular a special subtraction average of the algorithm's search agents.

- The steps of the SABO’s implementation are described and its mathematical model is presented.

- The efficiency of the proposed SABO approach has been evaluated for fifty-two standard benchmark functions.

- The quality of the SABO’s results has been compared with the performance of twelve well-known algorithms.

- To evaluate the capability of the SABO in handling real-world applications, the proposed approach is implemented for four engineering design problems.

The rest of this paper is organized as follows. Section 2 presents the literature review. Section 3 introduces and models the proposed SABO approach. Section 4 presents the simulation studies. Section 5 evaluates the performance of the SABO on real-world applications. Section 6 provides the conclusions and several suggestions for future research.

2. Literature Review

Metaheuristic algorithms have been developed with inspiration from various sources: natural phenomena, the behaviors of living organisms, concepts from biology and the physical sciences, the rules of games, and human interactions. In a general classification based on the idea employed in their design, metaheuristic algorithms fall into six groups: swarm-based, evolutionary-based, physics-based, human-based, game-based, and mathematics-based approaches.

Swarm-based metaheuristic algorithms are inspired by natural swarming phenomena, such as the behaviors of animals, birds, aquatic animals, insects, and other living organisms. Among the most famous swarm-based approaches are particle swarm optimization (PSO) [23], ant colony optimization (ACO) [24], and artificial bee colony (ABC) [25]. PSO is inspired by the movement of flocks of birds or schools of fish searching for food. ACO is inspired by the ability of ant colonies to find the shortest path between a food source and the colony. ABC is derived from the hierarchical organization of honey bee colonies and their activities in finding food sources. Hunting and foraging strategies, migration, and chasing among living organisms are some of the most characteristic swarm behaviors in nature, and they have inspired numerous swarm-based algorithms, such as the Reptile Search Algorithm (RSA) [26], Orca Predation Algorithm (OPA) [27], Marine Predator Algorithm (MPA) [28], African Vultures Optimization Algorithm (AVOA) [29], Honey Badger Algorithm (HBA) [30], White Shark Optimizer (WSO) [31], Whale Optimization Algorithm (WOA) [32], Tunicate Swarm Algorithm (TSA) [33], Grey Wolf Optimizer (GWO) [34], and Golden Jackal Optimization (GJO) [35].

Evolutionary-based metaheuristic algorithms are developed by simulating concepts from the biological and genetic sciences. The foundational methods of this group are evolution strategies (ES) [36], genetic algorithms (GA) [37], and differential evolution (DE) [36]. These methods and their generalizations are inspired by biology, natural selection, Darwin's theory of evolution, and reproduction, and they use stochastic operators such as selection, crossover, and mutation.

Physics-based metaheuristic algorithms are designed by modeling phenomena, processes, concepts, and forces in physics. Simulated annealing (SA) [38] is one of the most widely used physics-based methods. It is inspired by the annealing process of metals, in which the metal is first melted under heat and then gradually cooled to obtain an ideal crystal structure. The modeling of physical forces and the laws of motion underlies physics-based algorithms such as the gravitational search algorithm (GSA) [39] and momentum search algorithm (MSA) [40]. The spring search algorithm (SSA) is developed by modeling the tensile force and Hooke's law between bodies connected by springs. The GSA is inspired by the gravitational force that masses at different distances exert on each other. The MSA is designed by modeling the force that results from the momentum of colliding balls. The transformations between physical states in the natural water cycle are employed in the design of the water cycle algorithm (WCA) [41]. Concepts from cosmology and black holes are the primary sources for algorithms such as the Black Hole Algorithm (BHA) [42] and the Multi-Verse Optimizer (MVO) [43]. Other physics-based algorithms include the Equilibrium Optimizer (EO) [44], Thermal Exchange Optimization (TEO) [45], the Archimedes optimization algorithm (AOA) [46], the Lichtenberg Algorithm (LA) [47], Henry Gas Optimization (HGO) [48], Electro-Magnetism Optimization (EMO) [49], and nuclear reaction optimization (NRO) [50].

Human-based metaheuristic algorithms are inspired by the interactions, relationships, and thoughts of humans in social and individual life. Teaching–learning-based optimization (TLBO) [51] is one of the most familiar and widely used human-based approaches. It is inspired by the scientific interactions between teachers and students in an educational system. The efforts of two social classes, the poor and the rich, to improve their economic situations are the main idea behind poor and rich optimization (PRO) [45]. Cooperation and interaction among teammates working toward a shared goal are the main idea behind the Teamwork Optimization Algorithm (TOA) [52]. Several other methods follow the same pattern:

- Collective decision optimization (CDO) [45] is inspired by human decision-making behavior.
- The queuing search algorithm (QSA) [45] mimics human actions in a queuing process.
- The political optimizer (PO) [50] imitates a human political framework.
- The Election-Based Optimization Algorithm (EBOA) [45] mimics the voting process for leader selection.

Other human-based algorithms include the gaining–sharing knowledge-based algorithm (GSK) [53], Ali Baba and the Forty Thieves (AFT) [54], Driving Training-Based Optimization (DTBO) [4], the Coronavirus herd immunity optimizer (CHIO) [55], and War Strategy Optimization (WSO) [56].

Game-based metaheuristic algorithms are based on modeling the rules of individual and group games and the strategies of players, coaches, referees, and other people who influence the games. Simulations of popular group sports such as football and volleyball have been employed in the design of the League Championship Algorithm (LCA) [57], Volleyball Premier League (VPL) [57], and Football-Game-Based Optimization (FGBO) [58].

Mathematics-based metaheuristic algorithms are designed based on mathematical concepts, foundations, and operations. The Sine Cosine Algorithm (SCA) [51] is one of the most familiar mathematics-based approaches, and its design is inspired by the transcendental functions $\sin$ and $\cos$. The Arithmetic Optimization Algorithm (AOA) [51] uses the distribution behavior of the four basic arithmetic operators (multiplication, division, subtraction, and addition). The Runge Kutta optimizer (RUN) [51] uses the logic of the slope variations computed by the Runge–Kutta (RK) method as a searching mechanism for global optimization. The main idea behind the Average and Subtraction-Based Optimizer (ASBO) [51] is to compute averages and subtractions of the best and worst population members and use them to guide the population through the search space.

To the best of our knowledge, no metaheuristic algorithm has been developed based on the mathematical concept of "an average of subtractions of search agents". The primary idea of the proposed algorithm is therefore to update the population using a special average over all the search agents. This keeps the algorithm from depending on specific population members. Moreover, by improving the algorithm's exploration, it can help the algorithm avoid getting stuck in local optima. To address this research gap, this paper designs a new metaheuristic algorithm based on the mathematical concept of a special subtraction arithmetic average, as discussed in the next section.

3. Subtraction-Average-Based Optimizer

In this section, the theory of the proposed Subtraction-Average-Based Optimizer (SABO) is explained, and its mathematical model for optimization tasks is presented.

3.1. Algorithm Initialization

Each optimization problem has a solution space, called the search space. The search space is a subset of $\mathbb{R}^m$, where $m$ is the number of decision variables of the given problem. The position of each searcher agent (population member) in the search space determines the values of the decision variables. Therefore, each search agent contains the information of the decision variables and is mathematically modeled as a vector. The set of search agents together forms the population of the algorithm, which can be represented as a matrix according to Equation (1). The initial positions of the search agents in the search space are randomly generated using Equation (2).

$$
X = \begin{bmatrix} X_1 \\ \vdots \\ X_i \\ \vdots \\ X_N \end{bmatrix}_{N \times m}
= \begin{bmatrix}
x_{1,1} & \cdots & x_{1,j} & \cdots & x_{1,m} \\
\vdots & \ddots & \vdots & & \vdots \\
x_{i,1} & \cdots & x_{i,j} & \cdots & x_{i,m} \\
\vdots & & \vdots & \ddots & \vdots \\
x_{N,1} & \cdots & x_{N,j} & \cdots & x_{N,m}
\end{bmatrix}_{N \times m} \tag{1}
$$

$$
x_{i,j} = lb_j + r_{i,j} \cdot \left(ub_j - lb_j\right), \quad i = 1, 2, \dots, N, \quad j = 1, 2, \dots, m \tag{2}
$$

where $X$ is the SABO population matrix, $X_i$ is the $i$th search agent (population member), $x_{i,j}$ is its $j$th dimension in the search space (decision variable), $N$ is the number of search agents, $m$ is the number of decision variables, $r_{i,j}$ is a random number in the interval $[0, 1]$, and $lb_j$ and $ub_j$ are the lower and upper bounds of the $j$th decision variable, respectively.
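Equations (1) and (2) can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code. The function name `initialize_population` is hypothetical, and the population is stored as a list of rows, one row per agent $X_i$. Python's `random.random()` draws each $r_{i,j}$ uniformly from $[0, 1)$.

```python
import random


def initialize_population(n_agents, lb, ub, rng=random):
    """Return an N x m population (a list of rows) built with Equation (2).

    Each entry is x_ij = lb_j + r_ij * (ub_j - lb_j), where r_ij is drawn
    uniformly from [0, 1), so every agent starts inside the box [lb, ub].
    """
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
        for _ in range(n_agents)
    ]


rng = random.Random(42)  # seeded generator so the example is reproducible
X = initialize_population(5, lb=[-10.0, 0.0], ub=[10.0, 1.0], rng=rng)
print(len(X), len(X[0]))  # 5 2
```

Passing an explicit `random.Random` instance keeps independent runs reproducible, which is convenient when repeating the twenty runs used in Section 4.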

Each search agent is a candidate solution that suggests values for the decision variables, so the objective function of the problem can be evaluated for each search agent. The objective function is evaluated at the values that each population member specifies for the decision variables, and the results are stored in the vector $F$ of Equation (3). The number of elements of $F$ is therefore equal to the number of population members $N$.

$$
F = \begin{bmatrix} F_1 \\ \vdots \\ F_i \\ \vdots \\ F_N \end{bmatrix}_{N \times 1}
= \begin{bmatrix} F(X_1) \\ \vdots \\ F(X_i) \\ \vdots \\ F(X_N) \end{bmatrix}_{N \times 1} \tag{3}
$$

where $F$ is the vector of objective function values and $F_i$ is the value of the objective function evaluated for the $i$th search agent.

The evaluated values for the objective function are a suitable criterion for analyzing the quality of the solutions that are proposed by the search agents. Therefore, the best value that is calculated for the objective function corresponds to the best search agent. Similarly, the worst value that is calculated for the objective function corresponds to the worst search agent. Considering that the position of the search agents in the search space is updated in each iteration, the process of identifying and saving the best search agent continues until the last iteration of the algorithm.
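The evaluation step of Equation (3) and the identification of the best and worst agents can be sketched as below, assuming a minimization problem. The `sphere` objective and the helper name `evaluate` are hypothetical and serve only to make the example concrete.

```python
def evaluate(population, objective):
    """Equation (3): F_i = F(X_i) for every search agent X_i."""
    return [objective(x) for x in population]


def sphere(x):
    """Hypothetical test objective (sum of squares), minimized at the origin."""
    return sum(v * v for v in x)


X = [[1.0, 2.0], [0.5, -0.5], [3.0, 0.0]]
F = evaluate(X, sphere)
best = min(range(len(F)), key=F.__getitem__)   # smallest F: best search agent
worst = max(range(len(F)), key=F.__getitem__)  # largest F: worst search agent
print(F, best, worst)  # [5.0, 0.5, 9.0] 1 2
```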

3.2. Mathematical Modelling of SABO

The basic inspiration for the design of the SABO comes from mathematical concepts such as averages, the differences between the positions of the search agents, and the sign of the difference between two objective function values. The idea of updating the positions of all the search agents (i.e., constructing all the population members of the $(t+1)$th iteration) from the arithmetic mean position of all the search agents (i.e., the population members of the $t$th iteration) is not new. The usual alternative is to use only the position of, e.g., the best or worst search agent. The SABO's way of computing the arithmetic mean is, however, unique. It is based on a special operation "$\underset{v}{-}$", called the $v$-subtraction of search agent $B$ from search agent $A$, which is defined as follows:

$$
A \underset{v}{-} B = \operatorname{sign}\big(F(A) - F(B)\big)\left(A - \vec{v} * B\right) \tag{4}
$$

In Equation (4):

- $\vec{v}$ is a vector of dimension $m$ whose components are random numbers generated from the set $\{1, 2\}$.
- The operation "$*$" is the Hadamard product of two vectors, i.e., each component of the result is the product of the corresponding components of the two given vectors.
- $F(A)$ and $F(B)$ are the objective function values of the search agents $A$ and $B$, respectively.
- $\operatorname{sign}$ is the signum function.

Because $\vec{v}$ has components from $\{1, 2\}$, the result of this operation is one of the points of a set with cardinality of at most $2^m$.
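A direct transcription of the $v$-subtraction $A \underset{v}{-} B = \operatorname{sign}(F(A) - F(B))\,(A - \vec{v} * B)$ is sketched below. It draws a fresh random vector $\vec{v}$ with components in $\{1, 2\}$ on every call, which is our reading of the definition. The names `sign` and `v_subtract` are hypothetical.

```python
import random


def sign(value):
    """Signum function: returns -1, 0, or +1."""
    return (value > 0) - (value < 0)


def v_subtract(a, b, f_a, f_b, rng=random):
    """v-subtraction: A -_v B = sign(F(A) - F(B)) * (A - v * B).

    v is a random vector with components drawn from {1, 2}, and the product
    v * B is taken component-wise (Hadamard product).
    """
    s = sign(f_a - f_b)
    v = [rng.choice((1, 2)) for _ in a]
    return [s * (a_k - v_k * b_k) for a_k, v_k, b_k in zip(a, v, b)]


rng = random.Random(0)
A, B = [1.0, 2.0], [0.5, 0.5]
print(v_subtract(A, B, f_a=5.0, f_b=0.5, rng=rng))
```

For $A = (1, 2)$ and $B = (0.5, 0.5)$ with $F(A) > F(B)$, repeated calls can only return $(0.5, 1.5)$, $(0.5, 1.0)$, $(0.0, 1.5)$, or $(0.0, 1.0)$, that is, $2^2 = 4$ points. When $F(A) = F(B)$, the sign is zero and the result is the zero vector.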

In the SABO, the displacement of each search agent $X_i$ in the search space is computed as the arithmetic mean of the $v$-subtractions of each search agent $X_j$, $j = 1, 2, \dots, N$, from $X_i$. Thus, the new position of each search agent is calculated using Equation (5):

$$
X_i^{new} = X_i + \vec{r}_i * \frac{1}{N}\sum_{j=1}^{N}\left(X_i \underset{v}{-} X_j\right), \quad i = 1, 2, \dots, N \tag{5}
$$

where $X_i^{new}$ is the new proposed position of the $i$th search agent $X_i$, $N$ is the total number of search agents, and $\vec{r}_i$ is a vector of dimension $m$ whose components are random numbers from the interval $[0, 1]$.

Then, if the proposed new position improves the value of the objective function, it is accepted as the new position of the corresponding agent, according to Equation (6):

$$
X_i = \begin{cases} X_i^{new}, & F_i^{new} < F_i, \\ X_i, & \text{otherwise}, \end{cases} \tag{6}
$$

where $F_i$ and $F_i^{new}$ are the objective function values of the search agents $X_i$ and $X_i^{new}$, respectively.
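The sketch below shows one SABO step for a single agent. It computes the proposal $X_i^{new} = X_i + \vec{r}_i * \frac{1}{N}\sum_{j}(X_i \underset{v}{-} X_j)$, with $X_i \underset{v}{-} X_j = \operatorname{sign}(F_i - F_j)(X_i - \vec{v} * X_j)$, and then applies greedy acceptance. Two details are our assumptions rather than statements of the text:

- The components of $\vec{r}_i$ are drawn uniformly from $[0, 1)$.
- A fresh $\vec{v}$ is drawn for every pair $(i, j)$.

The function names and the `sphere` objective are hypothetical.

```python
import random


def sign(value):
    """Signum function: returns -1, 0, or +1."""
    return (value > 0) - (value < 0)


def propose(i, X, F, rng):
    """Proposal: X_i_new = X_i + r_i * (1/N) * sum_j (X_i -_v X_j)."""
    n, m = len(X), len(X[i])
    mean = [0.0] * m
    for j in range(n):
        s = sign(F[i] - F[j])  # j == i (or equal fitness) gives s = 0, so no contribution
        for k in range(m):
            v = rng.choice((1, 2))  # component of v drawn from {1, 2}
            mean[k] += s * (X[i][k] - v * X[j][k]) / n
    r = [rng.random() for _ in range(m)]  # assumption: r_i components ~ U[0, 1)
    return [x_k + r_k * mu_k for x_k, r_k, mu_k in zip(X[i], r, mean)]


def greedy_accept(i, x_new, X, F, objective):
    """Greedy acceptance: replace X_i only if the proposal lowers the objective."""
    f_new = objective(x_new)
    if f_new < F[i]:
        X[i], F[i] = x_new, f_new
        return True
    return False


def sphere(x):
    """Hypothetical objective used only for this illustration."""
    return sum(v * v for v in x)


rng = random.Random(1)
X = [[1.0, 2.0], [0.5, -0.5], [3.0, 0.0]]
F = [sphere(x) for x in X]
for i in range(len(X)):
    greedy_accept(i, propose(i, X, F, rng), X, F, sphere)
print(F)
```

Because of the greedy rule, no agent's objective value can get worse. Agents are updated in place, so later agents in the same sweep already see the updated positions of earlier ones.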

The $v$-subtraction $X_i \underset{v}{-} X_j$ is a vector, so Equation (5) can be read as the equation of motion of the search agent $X_i$. It can be rewritten as $X_i^{new} = X_i + \vec{r}_i * \vec{M}_i$, where the mean vector $\vec{M}_i = \frac{1}{N}\sum_{j=1}^{N}(X_i \underset{v}{-} X_j)$ determines the direction in which $X_i$ moves to its new position $X_i^{new}$. The search mechanism based on "the arithmetic mean of the $v$-subtractions" in Equation (5) has the essential property of realizing both the exploration and the exploitation phases when searching the promising areas of the search space. The exploration phase is realized by the "$v$-subtraction" operation (i.e., the vector $X_i \underset{v}{-} X_j$), see Figure 1A. The exploitation phase is realized by the "arithmetic mean of the $v$-subtractions" (i.e., the vector $\vec{M}_i$), see Figure 1B.
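It can help to expand the mean vector of Equation (5), $\vec{M}_i = \frac{1}{N}\sum_{j=1}^{N}(X_i \underset{v}{-} X_j)$, by the sign of each term. We write $\vec{v}_{ij}$ for the random vector drawn in the subtraction $X_i \underset{v}{-} X_j$; this is our notation and assumes a fresh draw per pair. Since $\operatorname{sign}(F_i - F_j)$ is $+1$ when $F_j < F_i$, $-1$ when $F_j > F_i$, and $0$ when $F_j = F_i$:

$$
\vec{M}_i = \frac{1}{N}\left[\sum_{j:\,F_j < F_i}\left(X_i - \vec{v}_{ij} * X_j\right) \;-\; \sum_{j:\,F_j > F_i}\left(X_i - \vec{v}_{ij} * X_j\right)\right]
$$

Agents with the same objective value as $X_i$ contribute nothing, and this includes $X_i$ itself. The sum therefore has fewer than $N$ nonzero terms but is still divided by $N$. For the best agent of the population, every other agent with a different objective value falls into the second sum.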

Figure 1.

Schematic illustration of the exploration phase by "$v$-subtraction" (A) and the exploitation phase by "arithmetic mean of the $v$-subtractions" (B).

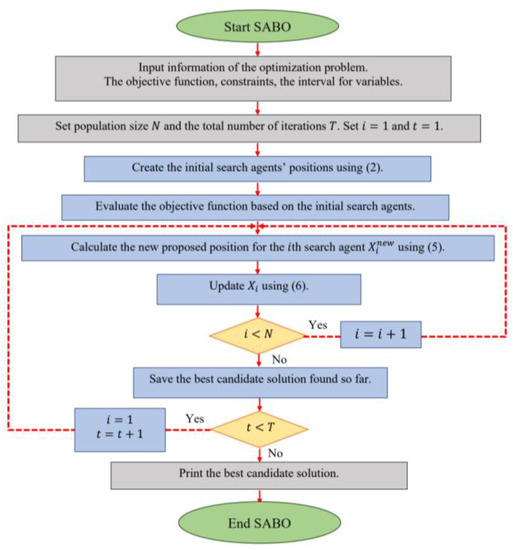

3.3. Repetition Process, Pseudocode, and Flowchart of SABO

Once all the search agents have been updated, the first iteration of the algorithm is complete. The algorithm then enters the next iteration with the updated positions of the search agents and their objective function values. In each iteration, the best search agent found so far is stored as the best candidate solution. The search agents are updated according to Equations (4) to (6) until the last iteration of the algorithm. Finally, the best candidate solution stored during the iterations is presented as the solution to the problem. The implementation steps of the SABO are shown as a flowchart in Figure 2 and as pseudocode in Algorithm 1.

| Algorithm 1. Pseudocode of SABO. |
|---|
| Start SABO. |
| 1. Input problem information: variables, objective function, and constraints. |
| 2. Set SABO population size (N) and iterations (T). |
| 3. Generate the initial search agents' matrix at random using Equation (2). |
| 4. Evaluate the objective function. |
| 5. For t = 1 to T |
| 6. For i = 1 to N |
| 7. Calculate the new proposed position for the ith SABO search agent using Equation (5). |
| 8. Update the ith SABO search agent using Equation (6). |
| 9. end |
| 10. Save the best candidate solution so far. |
| 11. end |
| 12. Output the best quasi-optimal solution obtained with the SABO. |
| End SABO. |
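The pseudocode can be turned into a compact, runnable Python sketch that follows Algorithm 1 step by step. It is not the authors' reference implementation. Three details are our assumptions, not statements from the text:

- The components of $\vec{r}_i$ are drawn uniformly from $[0, 1)$.
- A fresh $\vec{v} \in \{1, 2\}^m$ is drawn for each pair of agents.
- Proposals that leave the box $[lb, ub]$ are clipped back to the bounds.

The function name `sabo` and its parameters are hypothetical.

```python
import random


def sabo(objective, lb, ub, n_agents=30, n_iter=200, seed=None):
    """Minimal SABO sketch following Algorithm 1 (minimization).

    Returns (best_position, best_value).
    """
    rng = random.Random(seed)
    m = len(lb)

    def sign(z):
        return (z > 0) - (z < 0)

    def clip(x):
        # Assumption: keep proposals inside [lb, ub] component-wise.
        return [min(max(x_k, lo), hi) for x_k, lo, hi in zip(x, lb, ub)]

    # Steps 3-4: random initial population (Equation (2)) and objective values
    X = [[lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
         for _ in range(n_agents)]
    F = [objective(x) for x in X]
    best_i = min(range(n_agents), key=F.__getitem__)
    best_x, best_f = list(X[best_i]), F[best_i]

    for _ in range(n_iter):                      # Step 5: iterations t = 1..T
        for i in range(n_agents):                # Step 6: agents i = 1..N
            # Step 7: mean of the v-subtractions X_i -_v X_j (Equations (4)-(5))
            mean = [0.0] * m
            for j in range(n_agents):
                s = sign(F[i] - F[j])
                if s == 0:
                    continue                     # includes j == i: zero contribution
                for k in range(m):
                    v = rng.choice((1, 2))
                    mean[k] += s * (X[i][k] - v * X[j][k]) / n_agents
            x_new = clip([X[i][k] + rng.random() * mean[k] for k in range(m)])
            # Step 8: greedy acceptance (Equation (6))
            f_new = objective(x_new)
            if f_new < F[i]:
                X[i], F[i] = x_new, f_new
        # Step 10: save the best candidate solution found so far
        i_min = min(range(n_agents), key=F.__getitem__)
        if F[i_min] < best_f:
            best_x, best_f = list(X[i_min]), F[i_min]
    return best_x, best_f


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


x_best, f_best = sabo(sphere, lb=[-100.0] * 5, ub=[100.0] * 5,
                      n_agents=20, n_iter=100, seed=1)
print(f_best)
```

Step 7 averages over all $N$ agents for each of the $N$ agents. A direct sketch like this one therefore performs on the order of $N^2 m$ arithmetic operations per iteration, in addition to $N$ objective evaluations.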

Figure 2.

Flowchart of SABO.

3.4. Computational Complexity of SABO

In this subsection, the computational complexity of the SABO is evaluated. For an optimization problem with $m$ decision variables, the initialization of the SABO has a complexity of $O(Nm)$, where $N$ is the number of search agents. The process of updating the search agents has a complexity of $O(NmT)$, where $T$ is the total number of iterations of the algorithm. Therefore, the computational complexity of the SABO is $O(Nm(1 + T))$.

4. Simulation Studies and Results

In this section, the effectiveness of the SABO in solving optimization problems is tested. For this purpose, a set of fifty-two standard benchmark functions is employed. It consists of seven unimodal functions (F1 to F7), six high-dimensional multimodal functions (F8 to F13), ten fixed-dimensional multimodal functions (F14 to F23), and twenty-nine functions from the CEC 2017 test suite [59]. To analyze the quality of the SABO's performance, its results are compared with those of twelve well-known metaheuristic algorithms: GA, PSO, GSA, TLBO, GWO, MVO, WOA, MPA, TSA, RSA, WSO, and AVOA. The values of the control parameters of the competitor algorithms are specified in Table 1.

Table 1.

Control parameter values.

The SABO and each competitor algorithm are run twenty independent times on every benchmark function, with 1000 iterations per run. The optimization results are reported using six indicators: mean, best, worst, standard deviation (std), median, and rank. The algorithms are ranked by the mean indicator, so a better mean value gives a better rank.
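The indicators and the mean-based ranking can be computed as in the following sketch, assuming minimization. The text does not specify two of the choices, so both are assumptions. The standard deviation is the sample version with an $n - 1$ denominator. Algorithms with equal means share a rank, giving competition-style ranks such as 1, 2, 2. The data are made-up placeholders, not results from the tables, and the function names are hypothetical.

```python
import statistics


def summarize(final_values):
    """Per-function indicators for one algorithm over independent runs (minimization)."""
    return {
        "mean": statistics.mean(final_values),
        "best": min(final_values),
        "worst": max(final_values),
        "std": statistics.stdev(final_values),  # assumption: sample standard deviation
        "median": statistics.median(final_values),
    }


def rank_by_mean(results):
    """Rank algorithms by mean final value; 1 is best, tied means share a rank."""
    means = {name: statistics.mean(vals) for name, vals in results.items()}
    return {name: 1 + sum(other < mu for other in means.values())
            for name, mu in means.items()}


# Hypothetical final objective values from three runs of three algorithms
results = {"A": [1.0, 2.0, 3.0], "B": [0.5, 0.5, 0.8], "C": [2.0, 2.0, 2.0]}
print(summarize(results["A"]))
print(rank_by_mean(results))  # {'A': 2, 'B': 1, 'C': 2}
```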

4.1. Evaluation of Unimodal Functions

The unimodal objective functions F1 to F7 have no local optima, which makes them suitable for analyzing the exploitation ability of metaheuristic algorithms. The optimization results of the SABO and the competitor algorithms on F1 to F7 are reported in Table 2.

Table 2.

Optimization results of unimodal functions (F1–F7).

Based on the obtained results, the SABO, with its high exploitation ability, reached the global optimum for the F1, F2, F3, F4, and F6 functions. It is also the best optimizer for the F5 and F7 functions. A comparison of the simulation results shows that the SABO ranked first overall and outperformed the competitor algorithms on the unimodal problems F1 to F7.

4.2. Evaluation of High-Dimensional Multimodal Functions

The high-dimensional multimodal objective functions, F8 to F13, due to having a large number of local optima, are suitable options for evaluating the exploration ability of the metaheuristic algorithms. The results of implementing the SABO and its competitor algorithms on the functions F8 to F13 are reported in Table 3.

Table 3.

Optimization results of high-dimensional multimodal functions (F8–F13).

Based on the optimization results, the SABO reached the global optimum for the F9 and F11 functions, reflecting its high exploration ability. It is also the best optimizer for the functions F8, F10, F12, and F13. The analysis of the simulation results shows that the SABO outperformed its competitor algorithms on the high-dimensional multimodal problems.

4.3. Evaluation of Fixed-Dimensional Multimodal Functions

The fixed-dimensional multimodal objective functions F14 to F23 have fewer local optima than the functions F8 to F13. They are suitable for evaluating the ability of metaheuristic algorithms to balance exploration and exploitation. The optimization results of the SABO and its competitor algorithms on F14 to F23 are presented in Table 4.

Table 4.

Optimization results of fixed-dimensional multimodal functions (F14–F23).

Based on the obtained results, the SABO is the best optimizer for the functions F15 and F21. On the other functions of this group, the SABO achieved the same mean values as some of its competitor algorithms, but it performed better by providing lower standard deviations. Overall, the analysis of the simulation results shows that the SABO balanced exploration and exploitation and outperformed the competitor algorithms on the fixed-dimensional multimodal problems.

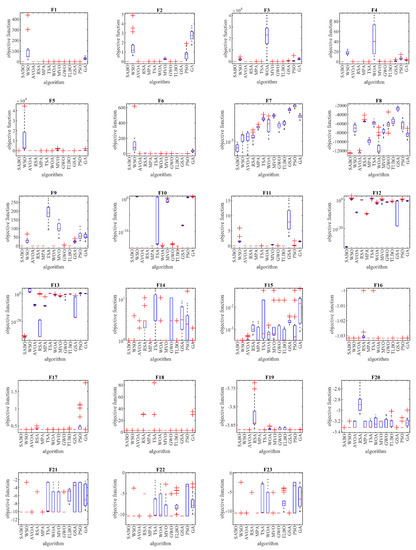

The performances of the proposed SABO approach and the competitor algorithms in solving the functions F1 to F23 are presented in the form of boxplot diagrams in Figure 3.

Figure 3.

Boxplot diagrams of the proposed SABO and competitor algorithms for F1 to F23 test functions.

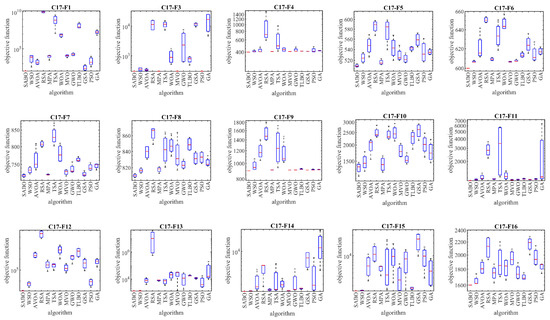

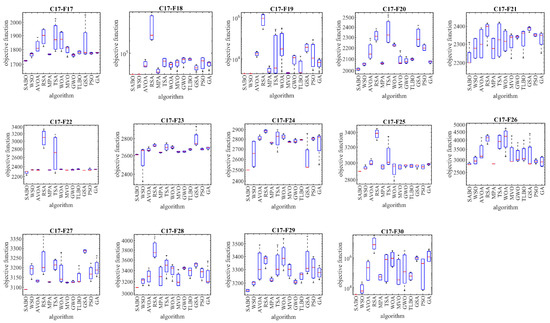

4.4. Evaluation of the CEC 2017 Test Suite

In this subsection, the efficiency of the SABO in solving the complex optimization problems of the CEC 2017 test suite is evaluated. The CEC 2017 test suite has thirty benchmark functions:

- three unimodal functions (C17-F1 to C17-F3);
- seven multimodal functions (C17-F4 to C17-F10);
- ten hybrid functions (C17-F11 to C17-F20);
- ten composition functions (C17-F21 to C17-F30).

The C17-F2 function was excluded from this study due to its unstable behavior. Full details of the CEC 2017 test suite are given in [59]. The results of the SABO and its competitor algorithms on the CEC 2017 test suite are reported in Table 5.

Table 5.

Optimization results of the CEC 2017 test suite.

Based on the obtained results, the SABO is the best optimizer for the functions C17-F1, C17-F3 to C17-F23, and C17-F25 to C17-F30. The analysis of the simulation results shows that the SABO provided better results for most of the benchmark functions. By ranking first overall, it outperformed the competitor algorithms on the CEC 2017 test suite. The performances of the SABO and its competitor algorithms on the CEC 2017 test suite are plotted as boxplot diagrams in Figure 4.

Figure 4.

Boxplot diagram of SABO and competitor algorithms on the CEC 2017 test suite.

4.5. Statistical Analysis

In this subsection, statistical analyses of the results of the SABO and its competitor algorithms are presented to determine whether the superiority of the SABO is statistically significant. For this purpose, the Wilcoxon rank-sum test [60] is used. It is a non-parametric test that determines whether two independent samples differ significantly. The test reports a $p$-value, which is used to decide whether the difference is significant. The results of the Wilcoxon rank-sum test for the SABO against each competitor algorithm are presented in Table 6.

Table 6.

Wilcoxon rank sum test results.

Based on the simulation results, in the cases where the $p$-value is less than 0.05, the SABO has a statistically significant superiority over the corresponding metaheuristic algorithm.
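For readers who want to reproduce this kind of comparison, the sketch below implements the two-sided Wilcoxon rank-sum test with the usual large-sample normal approximation, using only the standard library. SciPy's `scipy.stats.ranksums` is based on the same normal approximation. This illustrates the test and is not the authors' analysis script. The function names and the run values are hypothetical, ties receive average ranks, and no tie correction is applied to the variance.

```python
import math


def average_ranks(values):
    """Ranks 1..n of `values`, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # extend the block while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1  # mean of ranks start+1 .. end+1
        start = end + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                             # rank sum of sample x
    mean_w = n1 * (n1 + n2 + 1) / 2                 # E[W] under the null hypothesis
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # sd of W, no tie correction
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))            # two-sided p-value
    return z, p


# Hypothetical final objective values from 10 runs of two algorithms
sabo_runs = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08, 0.12, 0.11, 0.10]
other_runs = [0.30, 0.28, 0.35, 0.31, 0.29, 0.33, 0.27, 0.32, 0.30, 0.34]
z, p = rank_sum_test(sabo_runs, other_runs)
print(f"z = {z:.3f}, p = {p:.2e}, significant at 0.05: {p < 0.05}")
```

A negative $z$ means the first sample tends to have smaller (better, for minimization) values. The 0.05 threshold is then applied to the two-sided $p$-value.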

4.6. Advantages and Disadvantages of SABO

The SABO is a population-based metaheuristic algorithm that performs optimization through an iterative process.

Its advantages are as follows. Apart from the population size $N$ and the maximum number of iterations $T$, which are common to all the algorithms, it has no control parameters, so it requires no parameter tuning. Its equations are simple, its implementation is easy, and its concepts are simple. It can also balance exploration and exploitation during the search of the problem-solving space.

The SABO also has several disadvantages. As a stochastic technique for solving optimization problems, it is not guaranteed to reach the global optimum. In addition, according to the NFL theorem, it cannot be claimed that the SABO performs best in all optimization applications. Finally, newer metaheuristic algorithms may always be designed that outperform the SABO on some optimization tasks.

5. SABO for Real-World Applications

In this section, the capability of the SABO to handle real-world optimization tasks is tested on four engineering design optimization problems.

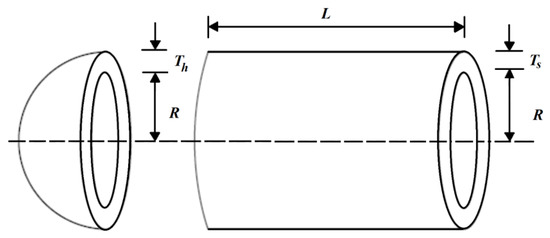

5.1. Pressure Vessel Design Problem

The pressure vessel design is an optimization challenge with the aim of minimizing construction costs. The pressure vessel design schematic is shown in Figure 5.

Figure 5.

Schematic of the pressure vessel design.

The mathematical model of the pressure vessel design problem is as follows [61]:

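Consider: $X = [x_1, x_2, x_3, x_4] = [T_s, T_h, R, L]$.

Minimize: $f(X) = 0.6224\, x_1 x_3 x_4 + 1.7781\, x_2 x_3^2 + 3.1661\, x_1^2 x_4 + 19.84\, x_1^2 x_3$.

Subject to:

$$g_1(X) = -x_1 + 0.0193\, x_3 \le 0, \qquad g_2(X) = -x_2 + 0.00954\, x_3 \le 0,$$

$$g_3(X) = -\pi x_3^2 x_4 - \frac{4}{3}\pi x_3^3 + 1296000 \le 0, \qquad g_4(X) = x_4 - 240 \le 0,$$

with

$$0 \le x_1, x_2 \le 100, \qquad 10 \le x_3, x_4 \le 200.$$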

The optimization results for the pressure vessel design, using the SABO and its competing algorithms, are reported in Table 7 and Table 8.

Table 7.

Performance of optimization algorithms for the pressure vessel design problem.

Table 8.

Statistical results of optimization algorithms for the pressure vessel design problem.

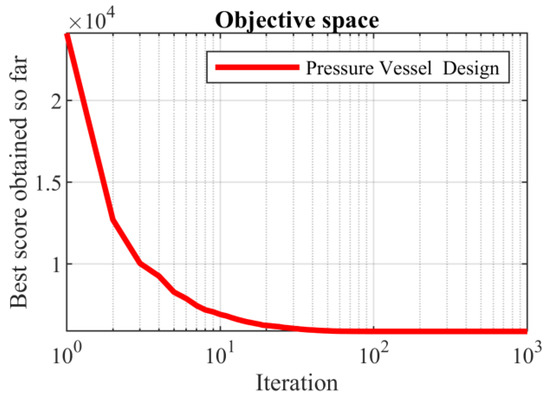

Based on the obtained results, the SABO obtained a solution with design variables equal to (0.778027075, 0.384579186, 40.3122837, 200) and an objective function value of 5882.901334. The analysis of the simulation results shows that the SABO handled the pressure vessel design more effectively than its competing algorithms. The convergence curve of the SABO during the pressure vessel design optimization is drawn in Figure 6.

Figure 6.

SABO’s performance convergence curve for the pressure vessel design.
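To make the reported figures checkable, the sketch below evaluates the pressure vessel model at the SABO design reported above. It assumes the commonly used literature formulation of this problem [61], with cost coefficients 0.6224, 1.7781, 3.1661, and 19.84 and four inequality constraints written as g_i(x) ≤ 0. The function names are hypothetical, and no constraint-handling scheme of the SABO itself is implied.

```python
import math


def pressure_vessel_cost(x):
    """Total cost for x = [Ts, Th, R, L] (assumed standard formulation)."""
    x1, x2, x3, x4 = x
    return (0.6224 * x1 * x3 * x4 + 1.7781 * x2 * x3**2
            + 3.1661 * x1**2 * x4 + 19.84 * x1**2 * x3)


def pressure_vessel_constraints(x):
    """Inequality constraints g_i(x) <= 0."""
    x1, x2, x3, x4 = x
    return [
        -x1 + 0.0193 * x3,                                          # shell thickness
        -x2 + 0.00954 * x3,                                         # head thickness
        -math.pi * x3**2 * x4 - (4 / 3) * math.pi * x3**3 + 1296000,  # minimum volume
        x4 - 240,                                                   # maximum length
    ]


# Design reported for the SABO in the text.
reported_pv = [0.778027075, 0.384579186, 40.3122837, 200]
print(f"f = {pressure_vessel_cost(reported_pv):.6f}")
for i, g in enumerate(pressure_vessel_constraints(reported_pv), start=1):
    print(f"g{i} = {g:.6g}")
```

If the printed f agrees with the reported 5882.901334, the assumed formulation is consistent with the one behind the reported result; the printed g_i values let the reader inspect each constraint at that design.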

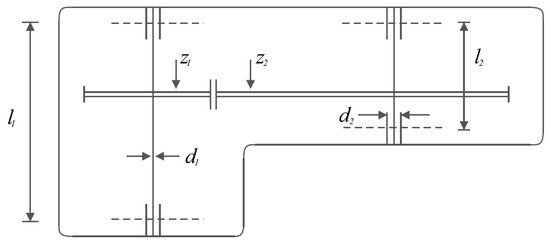

5.2. Speed Reducer Design Problem

The speed reducer design is a real-world application within engineering science with the aim of minimizing the weight of the speed reducer. The speed reducer design schematic is shown in Figure 7.

Figure 7.

Schematic of the speed reducer design.

The mathematical model of the speed reducer design problem is as follows [62,63]:

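Consider: $X = [x_1, x_2, x_3, x_4, x_5, x_6, x_7] = [b, M, p, l_1, l_2, d_1, d_2]$.

Minimize: $f(X) = 0.7854\, x_1 x_2^2 (3.3333\, x_3^2 + 14.9334\, x_3 - 43.0934) - 1.508\, x_1 (x_6^2 + x_7^2) + 7.4777\, (x_6^3 + x_7^3) + 0.7854\, (x_4 x_6^2 + x_5 x_7^2)$.

Subject to:

$$g_1(X) = \frac{27}{x_1 x_2^2 x_3} - 1 \le 0, \qquad g_2(X) = \frac{397.5}{x_1 x_2^2 x_3^2} - 1 \le 0,$$

$$g_3(X) = \frac{1.93\, x_4^3}{x_2 x_3 x_6^4} - 1 \le 0, \qquad g_4(X) = \frac{1.93\, x_5^3}{x_2 x_3 x_7^4} - 1 \le 0,$$

$$g_5(X) = \frac{1}{110\, x_6^3}\sqrt{\left(\frac{745\, x_4}{x_2 x_3}\right)^2 + 16.9 \times 10^6} - 1 \le 0,$$

$$g_6(X) = \frac{1}{85\, x_7^3}\sqrt{\left(\frac{745\, x_5}{x_2 x_3}\right)^2 + 157.5 \times 10^6} - 1 \le 0,$$

$$g_7(X) = \frac{x_2 x_3}{40} - 1 \le 0, \qquad g_8(X) = \frac{5 x_2}{x_1} - 1 \le 0, \qquad g_9(X) = \frac{x_1}{12 x_2} - 1 \le 0,$$

$$g_{10}(X) = \frac{1.5\, x_6 + 1.9}{x_4} - 1 \le 0, \qquad g_{11}(X) = \frac{1.1\, x_7 + 1.9}{x_5} - 1 \le 0,$$

with

$$2.6 \le x_1 \le 3.6,\quad 0.7 \le x_2 \le 0.8,\quad 17 \le x_3 \le 28,\quad 7.3 \le x_4 \le 8.3,\quad 7.8 \le x_5 \le 8.3,\quad 2.9 \le x_6 \le 3.9,\quad 5 \le x_7 \le 5.5.$$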

The results of implementing the proposed SABO approach and its competing algorithms on the speed reducer design problem are presented in Table 9 and Table 10.

Table 9.

Performance of optimization algorithms for the speed reducer design problem.

Table 10.

Statistical results of optimization algorithms for the speed reducer design problem.

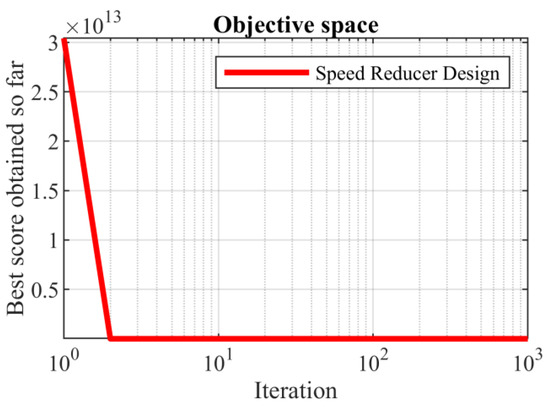

Based on the obtained results, the SABO obtained a solution with design variables equal to (3.5, 0.7, 17, 7.3, 7.8, 3.350214666, 5.28668323) and an objective function value of 2996.348165. The comparison of the simulation results shows that the proposed SABO approach provided better results than the competing algorithms in dealing with the speed reducer design problem. The convergence curve of the SABO while reaching this solution for the speed reducer design problem is drawn in Figure 8.

Figure 8.

SABO’s performance convergence curve for the speed reducer design.
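The sketch below evaluates the speed reducer weight and its eleven constraints at the SABO design reported in the text. It assumes the standard literature formulation of this problem [62,63] for x = [b, M, p, l1, l2, d1, d2]; the function names are hypothetical, and the constraint labels in the comments follow the usual interpretation of this benchmark.

```python
import math


def speed_reducer_weight(x):
    """Gearbox weight for x = [b, M, p, l1, l2, d1, d2] (assumed standard formulation)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2**2 * (3.3333 * x3**2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6**2 + x7**2)
            + 7.4777 * (x6**3 + x7**3)
            + 0.7854 * (x4 * x6**2 + x5 * x7**2))


def speed_reducer_constraints(x):
    """Inequality constraints g_i(x) <= 0."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27 / (x1 * x2**2 * x3) - 1,                                        # tooth bending stress
        397.5 / (x1 * x2**2 * x3**2) - 1,                                  # tooth surface stress
        1.93 * x4**3 / (x2 * x3 * x6**4) - 1,                              # shaft 1 deflection
        1.93 * x5**3 / (x2 * x3 * x7**4) - 1,                              # shaft 2 deflection
        math.sqrt((745 * x4 / (x2 * x3))**2 + 16.9e6) / (110 * x6**3) - 1,   # shaft 1 stress
        math.sqrt((745 * x5 / (x2 * x3))**2 + 157.5e6) / (85 * x7**3) - 1,   # shaft 2 stress
        x2 * x3 / 40 - 1,                                                  # dimensional limits
        5 * x2 / x1 - 1,
        x1 / (12 * x2) - 1,
        (1.5 * x6 + 1.9) / x4 - 1,                                         # shaft design rules
        (1.1 * x7 + 1.9) / x5 - 1,
    ]


# Design reported for the SABO in the text.
reported_sr = [3.5, 0.7, 17, 7.3, 7.8, 3.350214666, 5.28668323]
print(f"f = {speed_reducer_weight(reported_sr):.6f}")
for i, g in enumerate(speed_reducer_constraints(reported_sr), start=1):
    print(f"g{i} = {g:.6g}")
```

Several of the design variables sit on their bounds, so a quick evaluation like this is a convenient way to see which constraints are active at the reported design.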

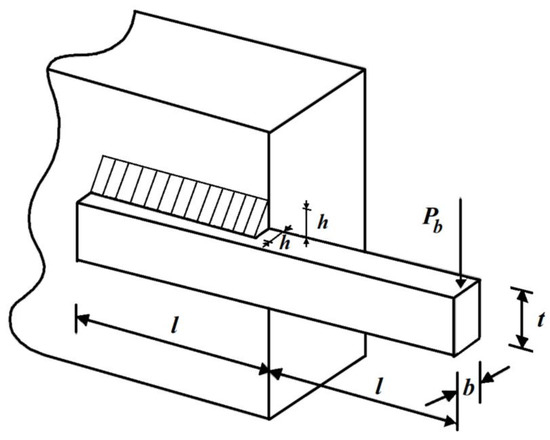

5.3. Welded Beam Design

The welded beam design is a real-world engineering problem whose aim is to minimize the production cost of the welded beam. A schematic of the welded beam design is shown in Figure 9.

Figure 9.

Schematic of the welded beam design.

The mathematical model of the welded beam design problem is as follows [32]:

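Consider: $X = [x_1, x_2, x_3, x_4] = [h, l, t, b]$.

Minimize: $f(X) = 1.10471\, x_1^2 x_2 + 0.04811\, x_3 x_4 (14 + x_2)$.

Subject to:

$$g_1(X) = \tau(X) - 13600 \le 0, \qquad g_2(X) = \sigma(X) - 30000 \le 0, \qquad g_3(X) = x_1 - x_4 \le 0,$$

$$g_4(X) = 0.10471\, x_1^2 + 0.04811\, x_3 x_4 (14 + x_2) - 5 \le 0, \qquad g_5(X) = 0.125 - x_1 \le 0,$$

$$g_6(X) = \delta(X) - 0.25 \le 0, \qquad g_7(X) = 6000 - P_c(X) \le 0,$$

where

$$\tau(X) = \sqrt{(\tau')^2 + 2\tau'\tau''\frac{x_2}{2R} + (\tau'')^2}, \qquad \tau' = \frac{6000}{\sqrt{2}\, x_1 x_2}, \qquad \tau'' = \frac{MR}{J},$$

$$M = 6000\left(14 + \frac{x_2}{2}\right), \qquad R = \sqrt{\frac{x_2^2}{4} + \left(\frac{x_1 + x_3}{2}\right)^2}, \qquad J = 2\sqrt{2}\, x_1 x_2 \left[\frac{x_2^2}{12} + \left(\frac{x_1 + x_3}{2}\right)^2\right],$$

$$\sigma(X) = \frac{504000}{x_4 x_3^2}, \qquad \delta(X) = \frac{65856000}{30 \times 10^6\, x_4 x_3^3},$$

$$P_c(X) = \frac{4.013 \times 30 \times 10^6}{196}\sqrt{\frac{x_3^2 x_4^6}{36}}\left(1 - \frac{x_3}{28}\sqrt{\frac{30 \times 10^6}{4 \times 12 \times 10^6}}\right),$$

with

$$0.1 \le x_1, x_4 \le 2, \qquad 0.1 \le x_2, x_3 \le 10.$$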

The results of using the SABO and its competitor algorithms on the welded beam design problem are reported in Table 11 and Table 12.

Table 11.

Performance of optimization algorithms for the welded beam design problem.

Table 12.

Statistical results of optimization algorithms for the welded beam design problem.

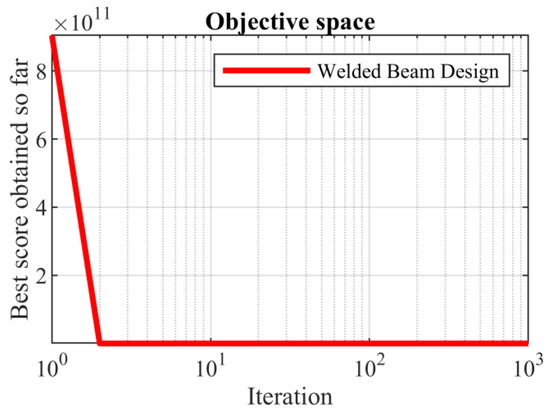

Based on the obtained results, the SABO obtained a solution with design variables equal to (0.20572964, 3.470488666, 9.03662391, 0.20572964) and an objective function value of 1.724852309. A comparison of these optimization results indicates the superior performance of the SABO over the competing algorithms in optimizing the welded beam design. The SABO convergence curve for the welded beam design problem is drawn in Figure 10.

Figure 10.

SABO’s performance convergence curve for the welded beam design.
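The welded beam model involves several intermediate quantities (shear stresses, bending stress, deflection, and buckling load), so a small evaluator makes the chain of definitions concrete. The sketch below assumes the widely used literature formulation of this problem with load P = 6000 lb, length L = 14 in, E = 30 × 10^6 psi, and G = 12 × 10^6 psi; other papers use slightly different variants, and the function and constant names are hypothetical.

```python
import math

# Assumed constants: load P (lb), overhang length L (in), Young's and shear moduli (psi).
P_LOAD, BEAM_LEN, E_MOD, G_MOD = 6000.0, 14.0, 30e6, 12e6


def welded_beam_cost(x):
    """Fabrication cost for x = [h, l, t, b] (assumed standard formulation)."""
    h, weld_len, t, b = x
    return 1.10471 * h**2 * weld_len + 0.04811 * t * b * (14.0 + weld_len)


def welded_beam_constraints(x):
    """Inequality constraints g_i(x) <= 0."""
    h, weld_len, t, b = x
    tau_p = P_LOAD / (math.sqrt(2) * h * weld_len)            # primary shear stress
    m = P_LOAD * (BEAM_LEN + weld_len / 2)                      # bending moment
    r = math.sqrt(weld_len**2 / 4 + ((h + t) / 2) ** 2)
    j = 2 * math.sqrt(2) * h * weld_len * (weld_len**2 / 12 + ((h + t) / 2) ** 2)
    tau_pp = m * r / j                                          # secondary shear stress
    tau = math.sqrt(tau_p**2 + 2 * tau_p * tau_pp * weld_len / (2 * r) + tau_pp**2)
    sigma = 6 * P_LOAD * BEAM_LEN / (b * t**2)                  # bending stress
    delta = 4 * P_LOAD * BEAM_LEN**3 / (E_MOD * b * t**3)       # end deflection
    p_c = (4.013 * E_MOD * math.sqrt(t**2 * b**6 / 36) / BEAM_LEN**2
           * (1 - t / (2 * BEAM_LEN) * math.sqrt(E_MOD / (4 * G_MOD))))  # buckling load
    return [
        tau - 13600,
        sigma - 30000,
        h - b,
        0.10471 * h**2 + 0.04811 * t * b * (14.0 + weld_len) - 5.0,
        0.125 - h,
        delta - 0.25,
        P_LOAD - p_c,
    ]


# Design reported for the SABO in the text.
reported_wb = [0.20572964, 3.470488666, 9.03662391, 0.20572964]
print(f"f = {welded_beam_cost(reported_wb):.9f}")
for i, g in enumerate(welded_beam_constraints(reported_wb), start=1):
    print(f"g{i} = {g:.6g}")
```

Keeping the intermediate quantities as named local variables mirrors the "where" definitions of the model and makes it easy to swap in a different variant of the formulation if needed.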

5.4. Tension/Compression Spring Design

The tension/compression spring design is an engineering challenge with the aim of minimizing the weight of the tension/compression spring. The tension/compression spring design schematic is shown in Figure 11.

Figure 11.

Schematic of the tension/compression spring design.

The mathematical model of the tension/compression spring design problem is as follows [32]:

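Consider: $X = [x_1, x_2, x_3] = [d, D, P]$.

Minimize: $f(X) = (x_3 + 2)\, x_2 x_1^2$.

Subject to:

$$g_1(X) = 1 - \frac{x_2^3 x_3}{71785\, x_1^4} \le 0, \qquad g_2(X) = \frac{4 x_2^2 - x_1 x_2}{12566\,(x_2 x_1^3 - x_1^4)} + \frac{1}{5108\, x_1^2} - 1 \le 0,$$

$$g_3(X) = 1 - \frac{140.45\, x_1}{x_2^2 x_3} \le 0, \qquad g_4(X) = \frac{x_1 + x_2}{1.5} - 1 \le 0,$$

with

$$0.05 \le x_1 \le 2, \qquad 0.25 \le x_2 \le 1.3, \qquad 2 \le x_3 \le 15.$$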

The results of employing the SABO and the competing algorithms to handle the tension/compression spring design problem are presented in Table 13 and Table 14.

Table 13.

Performance of optimization algorithms for the tension/compression spring design problem.

Table 14.

Statistical results of optimization algorithms for the tension/compression spring design problem.

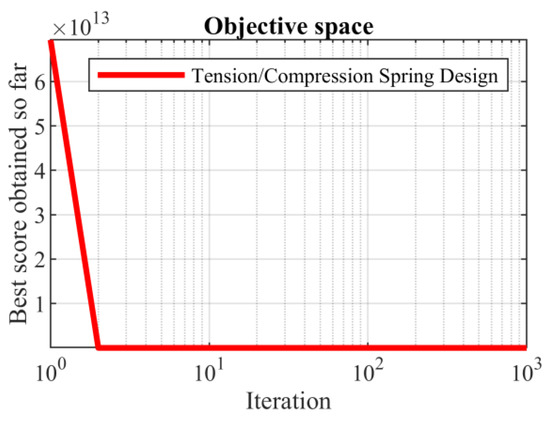

Based on the obtained results, the SABO obtained a solution with design variables equal to (0.051689061, 0.356717736, 11.28896595) and an objective function value of 0.012665233. The analysis of the simulation results shows that the SABO was more effective than the competing algorithms in optimizing the tension/compression spring design. The SABO convergence curve in reaching this design for the tension/compression spring problem is drawn in Figure 12.

Figure 12.

SABO’s performance convergence curve for the tension/compression spring.
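The sketch below evaluates the spring weight and its four constraints at the SABO design reported in the text. It assumes the standard literature formulation of this problem with x = [d, D, n] (wire diameter, mean coil diameter, number of active coils); the function names and the constraint labels in the comments are illustrative assumptions.

```python
def spring_weight(x):
    """Spring weight for x = [d, D, n] (assumed standard formulation)."""
    d, D, n = x
    return (n + 2) * D * d**2


def spring_constraints(x):
    """Inequality constraints g_i(x) <= 0."""
    d, D, n = x
    return [
        1 - D**3 * n / (71785 * d**4),                       # minimum deflection
        (4 * D**2 - d * D) / (12566 * (D * d**3 - d**4))
        + 1 / (5108 * d**2) - 1,                             # shear stress
        1 - 140.45 * d / (D**2 * n),                         # surge frequency
        (d + D) / 1.5 - 1,                                   # outside diameter
    ]


# Design reported for the SABO in the text.
reported_tcs = [0.051689061, 0.356717736, 11.28896595]
print(f"f = {spring_weight(reported_tcs):.9f}")
for i, g in enumerate(spring_constraints(reported_tcs), start=1):
    print(f"g{i} = {g:.6g}")
```

Because the objective is tiny (about 0.0127), printing it with nine decimals is needed to compare it meaningfully with the value reported in the text.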

6. Conclusions and Future Works

In this paper, a new metaheuristic algorithm called the Subtraction-Average-Based Optimizer (SABO) was designed. The main idea behind the SABO is to use mathematical concepts and information on the average differences between searcher agents to update the population of the algorithm. The mathematical model of the proposed SABO approach was presented for optimization applications. The SABO's ability to solve optimization problems was evaluated on fifty-two standard benchmark functions, including unimodal, high-dimensional multimodal, and fixed-dimensional multimodal functions, and on the CEC 2017 test suite. The optimization results indicated the SABO's ability to balance exploration and exploitation while scanning the search space, thereby providing suitable solutions for the optimization problems. Twelve well-known metaheuristic algorithms were employed for comparison with the proposed SABO approach. The simulation results showed that the SABO performed better than its competitor algorithms, providing better results for most of the benchmark functions. Finally, the implementation of the proposed method on four engineering design problems demonstrated the SABO's ability to handle optimization tasks in real-world applications.

With the introduction of the proposed SABO approach, several research avenues are opened for further study. Designing binary and multi-objective versions of the SABO is one of the most promising directions for future research. Employing the SABO to solve optimization problems in various sciences and real-world applications is another suggestion for further studies.

Author Contributions

Conceptualization, P.T.; methodology, M.D.; software, M.D. and P.T.; validation, P.T. and M.D.; formal analysis, M.D.; investigation, P.T.; resources, M.D.; data curation, P.T.; writing—original draft preparation, M.D. and P.T.; writing—review and editing P.T.; visualization, P.T.; supervision, M.D.; project administration, P.T.; funding acquisition, P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Project of Excellence of the Faculty of Science, University of Hradec Králové, Czech Republic, grant number 2209/2023-2024.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank the University of Hradec Králové for its support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sergeyev, Y.D.; Kvasov, D.; Mukhametzhanov, M. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 1–9.

- Liberti, L.; Kucherenko, S. Comparison of deterministic and stochastic approaches to global optimization. Int. Trans. Oper. Res. 2005, 12, 263–285.

- Koc, I.; Atay, Y.; Babaoglu, I. Discrete tree seed algorithm for urban land readjustment. Eng. Appl. Artif. Intell. 2022, 112, 104783.

- Dehghani, M.; Trojovská, E.; Trojovský, P. A new human-based metaheuristic algorithm for solving optimization problems on the base of simulation of driving training process. Sci. Rep. 2022, 12, 9924.

- Zeidabadi, F.-A.; Dehghani, M.; Trojovský, P.; Hubálovský, Š.; Leiva, V.; Dhiman, G. Archery Algorithm: A Novel Stochastic Optimization Algorithm for Solving Optimization Problems. Comput. Mater. Contin. 2022, 72, 399–416.

- Yuen, M.-C.; Ng, S.-C.; Leung, M.-F.; Che, H. A metaheuristic-based framework for index tracking with practical constraints. Complex Intell. Syst. 2022, 8, 4571–4586.

- Dehghani, M.; Montazeri, Z.; Malik, O.P. Energy commitment: A planning of energy carrier based on energy consumption. Electr. Eng. Electromechanics 2019, 2019, 69–72.

- Dehghani, M.; Mardaneh, M.; Malik, O.P.; Guerrero, J.M.; Sotelo, C.; Sotelo, D.; Nazari-Heris, M.; Al-Haddad, K.; Ramirez-Mendoza, R.A. Genetic Algorithm for Energy Commitment in a Power System Supplied by Multiple Energy Carriers. Sustainability 2020, 12, 10053.

- Dehghani, M.; Mardaneh, M.; Malik, O.P.; Guerrero, J.M.; Morales-Menendez, R.; Ramirez-Mendoza, R.A.; Matas, J.; Abusorrah, A. Energy Commitment for a Power System Supplied by Multiple Energy Carriers System using Following Optimization Algorithm. Appl. Sci. 2020, 10, 5862.

- Rezk, H.; Fathy, A.; Aly, M.; Ibrahim, M.N.F. Energy management control strategy for renewable energy system based on spotted hyena optimizer. Comput. Mater. Contin. 2021, 67, 2271–2281.

- Ehsanifar, A.; Dehghani, M.; Allahbakhshi, M. Calculating the leakage inductance for transformer inter-turn fault detection using finite element method. In Proceedings of the 2017 Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 2–4 May 2017; pp. 1372–1377.

- Dehghani, M.; Montazeri, Z.; Ehsanifar, A.; Seifi, A.R.; Ebadi, M.J.; Grechko, O.M. Planning of energy carriers based on final energy consumption using dynamic programming and particle swarm optimization. Electr. Eng. Electromechanics 2018, 2018, 62–71.

- Montazeri, Z.; Niknam, T. Energy carriers management based on energy consumption. In Proceedings of the 2017 IEEE 4th International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 22 December 2017; pp. 539–543.

- Dehghani, M.; Montazeri, Z.; Malik, O. Optimal sizing and placement of capacitor banks and distributed generation in distribution systems using spring search algorithm. Int. J. Emerg. Electr. Power Syst. 2020, 21, 20190217.

- Dehghani, M.; Montazeri, Z.; Malik, O.P.; Al-Haddad, K.; Guerrero, J.M.; Dhiman, G. A New Methodology Called Dice Game Optimizer for Capacitor Placement in Distribution Systems. Electr. Eng. Electromechanics 2020, 2020, 61–64.

- Dehbozorgi, S.; Ehsanifar, A.; Montazeri, Z.; Dehghani, M.; Seifi, A. Line loss reduction and voltage profile improvement in radial distribution networks using battery energy storage system. In Proceedings of the 2017 IEEE 4th International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 22 December 2017; pp. 215–219.

- Montazeri, Z.; Niknam, T. Optimal utilization of electrical energy from power plants based on final energy consumption using gravitational search algorithm. Electr. Eng. Electromechanics 2018, 2018, 70–73.

- Dehghani, M.; Mardaneh, M.; Montazeri, Z.; Ehsanifar, A.; Ebadi, M.J.; Grechko, O.M. Spring search algorithm for simultaneous placement of distributed generation and capacitors. Electr. Eng. Electromechanics 2018, 2018, 68–73.

- Premkumar, M.; Sowmya, R.; Jangir, P.; Nisar, K.S.; Aldhaifallah, M. A New Metaheuristic Optimization Algorithms for Brushless Direct Current Wheel Motor Design Problem. CMC-Comput. Mater. Contin. 2021, 67, 2227–2242.

- de Armas, J.; Lalla-Ruiz, E.; Tilahun, S.L.; Voß, S. Similarity in metaheuristics: A gentle step towards a comparison methodology. Nat. Comput. 2022, 21, 265–287.

- Trojovská, E.; Dehghani, M.; Trojovský, P. Zebra Optimization Algorithm: A New Bio-Inspired Optimization Algorithm for Solving Optimization Algorithm. IEEE Access 2022, 10, 49445–49473.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95 – International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Dorigo, M.; Maniezzo, V.; Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. Part B 1996, 26, 29–41.

- Karaboga, D.; Basturk, B. Artificial bee colony (ABC) optimization algorithm for solving constrained optimization problems. In Proceedings of the International Fuzzy Systems Association World Congress, Cancun, Mexico, 18–21 June 2007; pp. 789–798.

- Abualigah, L.; Abd Elaziz, M.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

- Jiang, Y.; Wu, Q.; Zhu, S.; Zhang, L. Orca predation algorithm: A novel bio-inspired algorithm for global optimization problems. Expert Syst. Appl. 2022, 188, 116026.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

- Braik, M.; Hammouri, A.; Atwan, J.; Al-Betar, M.A.; Awadallah, M.A. White Shark Optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl. Based Syst. 2022, 243, 108457.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Chopra, N.; Ansari, M.M. Golden Jackal Optimization: A Novel Nature-Inspired Optimizer for Engineering Applications. Expert Syst. Appl. 2022, 198, 116924.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Goldberg, D.E.; Holland, J.H. Genetic Algorithms and Machine Learning. Mach. Learn. 1988, 3, 95–99.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Dehghani, M.; Samet, H. Momentum search algorithm: A new meta-heuristic optimization algorithm inspired by momentum conservation law. SN Appl. Sci. 2020, 2, 1–15.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm–A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166.

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inf. Sci. 2013, 222, 175–184.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl. Based Syst. 2020, 191, 105190.

- Kaveh, A.; Dadras, A. A novel meta-heuristic optimization algorithm: Thermal exchange optimization. Adv. Eng. Softw. 2017, 110, 69–84.

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

- Pereira, J.L.J.; Francisco, M.B.; Diniz, C.A.; Oliver, G.A.; Cunha Jr, S.S.; Gomes, G.F. Lichtenberg algorithm: A novel hybrid physics-based meta-heuristic for global optimization. Expert Syst. Appl. 2021, 170, 114522.

- Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 2019, 101, 646–667.

- Cuevas, E.; Oliva, D.; Zaldivar, D.; Pérez-Cisneros, M.; Sossa, H. Circle detection using electro-magnetism optimization. Inf. Sci. 2012, 182, 40–55.

- Wei, Z.; Huang, C.; Wang, X.; Han, T.; Li, Y. Nuclear reaction optimization: A novel and powerful physics-based algorithm for global optimization. IEEE Access 2019, 7, 66084–66109.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011, 43, 303–315.

- Dehghani, M.; Trojovský, P. Teamwork Optimization Algorithm: A New Optimization Approach for Function Minimization/Maximization. Sensors 2021, 21, 4567.

- Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

- Braik, M.; Ryalat, M.H.; Al-Zoubi, H. A novel meta-heuristic algorithm for solving numerical optimization problems: Ali Baba and the forty thieves. Neural Comput. Appl. 2022, 34, 409–455.

- Al-Betar, M.A.; Alyasseri, Z.A.A.; Awadallah, M.A.; Abu Doush, I. Coronavirus herd immunity optimizer (CHIO). Neural Comput. Appl. 2021, 33, 5011–5042.

- Ayyarao, T.L.; RamaKrishna, N.; Elavarasan, R.M.; Polumahanthi, N.; Rambabu, M.; Saini, G.; Khan, B.; Alatas, B. War Strategy Optimization Algorithm: A New Effective Metaheuristic Algorithm for Global Optimization. IEEE Access 2022, 10, 25073–25105.

- Moghdani, R.; Salimifard, K. Volleyball premier league algorithm. Appl. Soft Comput. 2018, 64, 161–185.

- Dehghani, M.; Mardaneh, M.; Guerrero, J.M.; Malik, O.; Kumar, V. Football game based optimization: An application to solve energy commitment problem. Int. J. Intell. Eng. Syst. 2020, 13, 514–523.

- Awad, N.; Ali, M.; Liang, J.; Qu, B.; Suganthan, P. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2016.

- Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 196–202.

- Kannan, B.; Kramer, S.N. An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des. 1994, 116, 405–411.

- Gandomi, A.H.; Yang, X.-S. Benchmark problems in structural optimization. In Computational Optimization, Methods and Algorithms; Springer: Berlin/Heidelberg, Germany, 2011; pp. 259–281.

- Mezura-Montes, E.; Coello, C.A.C. Useful infeasible solutions in engineering optimization with evolutionary algorithms. In Proceedings of the Mexican International Conference on Artificial Intelligence, Monterrey, Mexico, 14–18 November 2005; pp. 652–662.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).