Abstract

Random terms in many natural and social science systems have distinct Markovian characteristics; examples include Markov jumps, which take values in a finite or countable set, and Wiener processes, which take values in a continuous set. In general, such systems can be viewed as Markov-process-driven systems, which can describe more complex phenomena. In this paper, a discrete-time stochastic linear system driven by a homogeneous Markov process is studied, and the corresponding linear quadratic (LQ) optimal control problem is solved. First, the relations between the well-posedness of the LQ problem and some linear matrix inequality (LMI) conditions are established. Then, based on the equivalence between the solvability of the generalized difference Riccati equation (GDRE) and the LMI condition, it is proven that the solvability of the GDRE is sufficient and necessary for the well-posedness of the LQ problem. Moreover, the equivalence among the well-posedness and attainability of the LQ problem, the solvability of the GDRE, and the feasibility of the LMI condition is established, and it is proven that the LQ problem is attainable through a certain feedback control when any of these four conditions is satisfied; the optimal feedback control of the LQ problem is given using the properties of homogeneous Markov processes and the smoothing property of the conditional expectation. Finally, a numerical example and a practical example are used to illustrate the validity of the theory.

1. Introduction

Linear quadratic (LQ) optimal control problems play an important role in control theory and practical applications [1,2]. The Riccati equation method is a well-known method for studying the LQ problem for deterministic systems described by ordinary differential equations [3,4,5], and this method can be extended to stochastic cases for Itô-type stochastic systems [6,7,8,9]. With the development of control theory, the LQ problem for discrete-time stochastic systems has also been studied by many scholars [10,11,12]. In these studies, the value functions and optimal controls for finite- or infinite-horizon indefinite LQ problems were obtained from the solutions to algebraic, differential, or difference Riccati equations [13,14,15,16,17,18,19]. In system controller design, external disturbances and parameter uncertainties should be addressed to eliminate or reduce their impact on the system’s performance [20,21,22]. In some studies, the uncertain parts of the systems are treated as unknown disturbances; e.g., Ref. [22] presented a robust non-linear generalized predictive control method to improve the system’s robustness, and Ref. [23] used an H∞ control design method. Many studies describe these complex systems as stochastic systems in which the random or fluctuating terms are usually modeled by white noise, Markov chains, or Wiener processes [24,25,26,27,28,29,30,31,32].

In practice, random terms in many natural and social science systems exhibit distinct Markovian characteristics. Markovian processes find applications in various fields, such as economics, finance, and engineering. For example, Markov jumps, which take values in a finite or countable set, are widely used to describe the jumping phenomena of various systems [33,34,35]. In other situations, there are processes that possess Markovian properties but take values in a continuous set, such as Wiener processes and fractional Brownian motions, which are used to describe different types of noise in continuous- or discrete-time systems [36,37,38]. All of these systems are driven by a stochastic process with Markovian properties. In general, such systems are therefore called Markov-process-driven systems, in which the Markovian processes are seen as an extension of white noise. This paper discusses the LQ problem for discrete-time linear systems driven by Markov processes. In more detail, the following LQ optimal control problem is considered: minimize the cost function

subject to

where , , , and . is the state variable, is the control, and is a Markovian process. Compared to the stochastic systems discussed in [10], the random disturbance in system (2) is no longer an independent process but rather a Markovian process, i.e., the probability distributions of are related to . Compared to the system discussed in [39], in (2) is a Markovian process that can take values in a continuous set rather than a countable set. So, the novelties of this paper can be summarized as follows: (1) A discussion of more general systems driven by Markovian processes, extending beyond systems driven by white noise, Markov jumps, Wiener processes, etc. (2) A derivation of more general results with distribution forms to describe the probability distributions of Markov processes.

This paper is organized as follows. In Section 2, the basic theoretical knowledge involved in this paper is introduced. In Section 3, the equivalence of the well-posedness and attainability of the LQ problem in (1) and (2) is discussed, and the optimal controller and minimum value of the cost function are obtained. In Section 4, two examples are used to illustrate the feasibility of the theory.

The following notations are used in this paper. : the set of all real n-dimensional vectors; : the set of real matrices; , : transpose of a matrix A or vector x; : inverse of matrix A; : A is a positive (positive semi-) definite matrix; : indicator function of set D with when and when ; : the expectation of a random variable X; : the conditional expectation of X under the condition ; and : the complete space of random variables with , where .

2. Preliminaries

Let be a homogeneous Markovian process defined on a complete probability space with a one-step transition probability density function , , and the initial distribution is . For every given , the joint probability density function of is

where denotes the conditional probability density function of under the conditions of , . Because is a Markovian process, the conditional probability density function only depends on , i.e.,

and is just the one-step transition probability density function. So,

The joint probability density function of can be represented by

Suppose that X is a random variable generated by , i.e., . The conditional expectation of X is

In the following discussion, the conditional expectation is abbreviated as

The following lemma is used in this paper.

Lemma 1.

Given a series of random symmetric matrices , the following results hold

Proof.

According to the definition of a Markovian process, the conditional probability density function of satisfies , and according to the definition of the conditional expectation,

We obtain

This ends the proof. □
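
For reference, the factorization and the smoothing property used in this section can be written compactly as follows. This is only a sketch in assumed notation: the symbols \xi_k (the Markov process), p_0 (its initial density), and p(\cdot \mid \cdot) (its one-step transition density) are chosen here for illustration, since the original symbols are not reproduced in this text.

```latex
% Assumed notation: \xi_k is the Markov process, p_0 its initial density,
% p(\cdot \mid \cdot) its one-step transition density, f a test function.
\begin{align}
  p(\xi_0,\xi_1,\dots,\xi_k)
    &= p_0(\xi_0)\prod_{i=1}^{k} p(\xi_i \mid \xi_{i-1}), \\
  E\bigl[f(\xi_{k+1}) \mid \xi_0,\dots,\xi_k\bigr]
    &= \int f(y)\, p(y \mid \xi_k)\,\mathrm{d}y, \\
  E\bigl[\,E[X \mid \xi_0,\dots,\xi_{k+1}] \bigm| \xi_0,\dots,\xi_k\bigr]
    &= E[X \mid \xi_0,\dots,\xi_k].
\end{align}
```

The first line is the chain rule combined with the Markov property, the second expresses a one-step conditional expectation through the transition density, and the third is the smoothing (tower) property of the conditional expectation.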

Let be the field generated by and .
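
The smoothing property can also be checked numerically on a discretized state space. The following is a minimal sketch, not part of the original development: the Gaussian transition density, the grid, and the names `gaussian_kernel` and `cond_expect` are assumptions made for illustration only.

```python
import numpy as np

def gaussian_kernel(grid, rho=0.8, sigma=0.5):
    """Row-stochastic matrix approximating p(y | x) = N(y; rho*x, sigma^2) on a grid.

    The Gaussian form is an assumption made for this illustration.
    """
    diff = grid[None, :] - rho * grid[:, None]
    dens = np.exp(-0.5 * (diff / sigma) ** 2)
    return dens / dens.sum(axis=1, keepdims=True)  # each row sums to 1

def cond_expect(kernel, values):
    """E[f(xi_{k+1}) | xi_k = grid[i]] for every grid point i."""
    return kernel @ values

grid = np.linspace(-3.0, 3.0, 121)
P = gaussian_kernel(grid)
f = grid ** 2  # any test function of the state

# Smoothing (tower) property: conditioning in two one-step stages
# equals conditioning once with the two-step kernel P @ P.
two_stage = cond_expect(P, cond_expect(P, f))
one_shot = cond_expect(P @ P, f)
tower_gap = float(np.max(np.abs(two_stage - one_shot)))
print("largest discrepancy:", tower_gap)
```

Because the process is homogeneous, the same kernel `P` is reused at every step; this is what allows the backward recursions of Section 3 to be written with a single transition density.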

3. LQ Problem of the Discrete-Time Linear Stochastic Systems Driven by a Homogeneous Markovian Process

In this section, the well-posedness and attainability of the LQ problem are studied. We suppose that in the optimal control problem in (1) and (2), the admissible control set , , and are the homogeneous Markovian processes given in Section 2. We also assume that is the optimal control of the problem in (1) and (2), and the corresponding optimal trajectory is . Under the premise that the optimal cost function is finite, the LQ problem is always attainable through optimal control. Next, we establish the relationship between the well-posedness of the LQ problem and an LMI condition and then prove that the LMI condition is equivalent to both the solvability of the GDRE and the attainability of the LQ problem. In other words, we establish the equivalence among the well-posedness and attainability of the LQ problem, the solvability of the GDRE, and the feasibility of the LMI condition, and we find the optimal control and optimal value function. In the following discussion, we denote

as the corresponding symmetric matrix

3.1. Well-Posedness

The following provides a connection between the well-posedness and the feasibility of an LMI involving unknown symmetric matrices [40].

Theorem 1.

Proof.

Let satisfy (6). Then, by adding the following trivial equality,

to the cost function,

and using the system in Equation (2), we can rewrite the cost function as follows:

where . From the above equality, we can see that the cost function is bounded from below by ; hence, the LQ problem in (1) and (2) is well-posed. □

We have shown in the proof of Theorem 1 that the proposed LMI condition (6) is sufficient for the well-posedness of the LQ problem. Next, we show that the LMI condition is equivalent to the solvability of the GDRE, and then provide a connection between the well-posedness of the LQ problem and the solvability of the GDRE. Meanwhile, the necessity of the LMI condition (6) for the well-posedness of the LQ problem is also proven.

Lemma 2

(Extended Schur’s lemma [41]). Let and C and be given matrices of appropriate sizes. Then, the following conditions are equivalent:

- (i)

- (ii)

- (iii)
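
As an illustration, the generalized Schur complement test with a pseudo-inverse, in the form usually attributed to [41], can be checked numerically. The sketch below assumes the common statement that the block matrix [[M, L], [L', N]] is positive semidefinite if and only if N is positive semidefinite, L(I - N N^+) = 0, and M - L N^+ L' is positive semidefinite; the symbols M, L, N and the function names are illustrative and may differ from the notation of Lemma 2.

```python
import numpy as np

def is_psd(S, tol=1e-9):
    """True if the symmetric matrix S is positive semidefinite up to tol."""
    return bool(np.min(np.linalg.eigvalsh((S + S.T) / 2)) >= -tol)

def schur_conditions(M, L, N, tol=1e-9):
    """Check N >= 0, L (I - N N^+) = 0 and M - L N^+ L' >= 0."""
    N_pinv = np.linalg.pinv(N)
    kernel_ok = np.allclose(L @ (np.eye(N.shape[0]) - N @ N_pinv), 0.0, atol=tol)
    return is_psd(N, tol) and kernel_ok and is_psd(M - L @ N_pinv @ L.T, tol)

def block_is_psd(M, L, N, tol=1e-9):
    """Direct test of [[M, L], [L', N]] >= 0."""
    return is_psd(np.block([[M, L], [L.T, N]]), tol)

# Small example with a singular N, where an ordinary inverse would not exist.
M = np.array([[2.0]])
N = np.diag([1.0, 0.0])
L_ok = np.array([[1.0, 0.0]])   # satisfies the kernel condition
L_bad = np.array([[1.0, 1.0]])  # violates the kernel condition

print(schur_conditions(M, L_ok, N), block_is_psd(M, L_ok, N))
print(schur_conditions(M, L_bad, N), block_is_psd(M, L_bad, N))
```

In both cases the two tests agree, which is the content of the equivalence; the pseudo-inverse is what allows a singular weighting block to be handled.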

Lemma 3.

Let and H and be given matrices of appropriate sizes. Consider the following quadratic form:

where x and u are random variables belonging to the space . Then, the following conditions are equivalent:

- (i)

- for any random variable x.

- (ii)

- There exists a symmetric matrix , such that for any random variable x.

- (iii)

- and .

- (iv)

- There exists a symmetric matrix , such that

Moreover, if and any of the above conditions holds, then (ii) is satisfied by , and for any random variable x, the random variable is optimal with the following optimal value:

Proof.

: Suppose there is a v such that . Then, for any scalar , we have . This contradicts the assumption, so G must be positive.

Suppose now . That is, there exists u, such that and . Take any scalar . Then, we have , which contradicts (i).

: Through a simple calculation, we can obtain the following:

Let . Then, it is immediate that for any random variable x.

: Since that is,

the condition (iv) holds with .

: Since

for every x and u, there exists

i.e.,

So, for every

and

This proves that (i) is true.

Furthermore, if , by applying the completing square method to with respect to u, we obtain

Let . We can directly obtain . This ends the proof. □
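
The completing-the-square step can be illustrated on a deterministic quadratic form. The sketch below assumes q(x, u) = x'Mx + 2x'Hu + u'Gu with G positive semidefinite and H(I - G G^+) = 0, and uses the pseudo-inverse minimizer u* = -G^+ H'x with value x'(M - H G^+ H')x; the symbol M and the helper names are assumptions for illustration, and the expectation in the lemma is dropped for simplicity.

```python
import numpy as np

def quad(x, u, M, H, G):
    """Quadratic form q(x, u) = x'Mx + 2 x'Hu + u'Gu."""
    return float(x @ M @ x + 2 * x @ H @ u + u @ G @ u)

def kernel_condition(H, G, tol=1e-9):
    """Check H (I - G G^+) = 0, needed when G is singular."""
    return np.allclose(H @ (np.eye(G.shape[0]) - G @ np.linalg.pinv(G)), 0.0, atol=tol)

def minimize_over_u(x, M, H, G):
    """Minimizer u* = -G^+ H'x and minimum value x'(M - H G^+ H')x."""
    G_pinv = np.linalg.pinv(G)
    u_star = -G_pinv @ H.T @ x
    S = M - H @ G_pinv @ H.T
    return u_star, float(x @ S @ x)

# Example with a singular but positive semidefinite G.
M = np.array([[1.0, 0.0], [0.0, 2.0]])
H = np.array([[1.0, 0.0], [0.0, 0.0]])
G = np.diag([2.0, 0.0])
x = np.array([1.0, -1.0])

assert kernel_condition(H, G)
u_star, v_star = minimize_over_u(x, M, H, G)
print("u* =", u_star, " minimum value =", v_star)
```

For this data, u* = (-0.5, 0) and the minimum value is 2.5; any other u gives a value at least as large because the residual term is a positive semidefinite quadratic form in u - u*.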

The following provides a connection between the well-posedness of the LQ problem and the solvability of the GDRE.

Theorem 2.

Proof.

We prove by induction that the solvability of the GDRE is necessary for the well-posedness of the LQ problem. To this end, consider the cost function from stage l to N. Suppose that

Then is also finite for any when is finite. This fact is used at each step of the induction: the LQ problem is assumed to be well-posed at the initial time, so the cost function is finite at any stage .

First, we consider the case of , and let . There exists

So,

According to Lemma 3, we have the following conditions:

with , where

Because is the transition probability density function, there exists . So, for every , we have , and the following results can be obtained:

Recalling that , we have . So, Equation (8) satisfies the GDRE for .

Next, suppose that we have found a sequence of symmetric matrices , which solves the GDRE for , and satisfies

Then, the following results are derived:

Since is finite, according to Lemma 3, we have

In addition,

By induction, the necessity of the GDRE for the well-posedness of the LQ problem is proven.

According to Lemma 2, the solution of the GDRE also satisfies the LMI condition (6), which, according to Theorem 1, implies the well-posedness of the LQ problem. □

Remark 1.

The following constrained difference equation is called a generalized difference Riccati equation (GDRE):

where

Compared to the Riccati equations obtained in [7], the forms of (9) are more complex, and the probability distributions of the Markov processes are necessary. Specifically, if and are independent with identical distributions and with the expectation and the variance , we have for all , which implies independence between and , and and for all . In this case, we can take as deterministic matrices that are not dependent on or ξ. The forms of the Riccati equations are given more simply:

where

which is the form of the GDRE provided in [10]. Because the systems discussed in this paper are only for finite-time cases, the system’s stability is not discussed. However, the stability of such Markov-process-driven systems is also a topic worthy of further research, and excellent relevant results can be found in [42,43].
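
For the independent-noise special case, the backward recursion can be sketched as follows. The code assumes the system class usually associated with [10], namely x_{k+1} = A x_k + B u_k + (C x_k + D u_k) w_k with w_k independent, zero mean, and unit variance, and a cost E[sum_{k<N}(x_k'Q x_k + u_k'R u_k) + x_N'Q_N x_N]; these forms, the time-invariant coefficients, and the function names are assumptions for illustration rather than a transcription of the equations above.

```python
import numpy as np

def gdre_backward(A, B, C, D, Q, R, QN, N):
    """Backward Riccati recursion with pseudo-inverse (assumed i.i.d.-noise form)."""
    P = [None] * (N + 1)
    K = [None] * N
    P[N] = QN
    for k in range(N - 1, -1, -1):
        Pn = P[k + 1]
        G = R + B.T @ Pn @ B + D.T @ Pn @ D
        H = A.T @ Pn @ B + C.T @ Pn @ D
        # Constraint of the GDRE: G must be positive semidefinite.
        if np.min(np.linalg.eigvalsh((G + G.T) / 2)) < -1e-9:
            raise ValueError(f"constraint G >= 0 violated at k={k}")
        G_pinv = np.linalg.pinv(G)
        K[k] = -G_pinv @ H.T                       # feedback gain, u_k = K[k] x_k
        P[k] = A.T @ Pn @ A + C.T @ Pn @ C + Q - H @ G_pinv @ H.T
    return P, K

def expected_cost(A, B, C, D, Q, R, QN, K, x0):
    """Exact expected cost of u_k = K[k] x_k via second-moment propagation."""
    X = np.outer(x0, x0)                           # X_k = E[x_k x_k']
    J = 0.0
    for Kk in K:
        J += np.trace((Q + Kk.T @ R @ Kk) @ X)
        Acl, Ccl = A + B @ Kk, C + D @ Kk
        X = Acl @ X @ Acl.T + Ccl @ X @ Ccl.T
    return float(J + np.trace(QN @ X))

# Hypothetical data, chosen only to exercise the recursion.
A = np.array([[1.0, 0.1], [0.0, 1.0]]); B = np.array([[0.0], [0.1]])
C = 0.1 * np.eye(2);                    D = np.array([[0.0], [0.05]])
Q = np.eye(2); R = np.eye(1); QN = np.eye(2)
x0 = np.array([1.0, -1.0])

P, K = gdre_backward(A, B, C, D, Q, R, QN, N=20)
J = expected_cost(A, B, C, D, Q, R, QN, K, x0)
print("x0' P_0 x0 =", float(x0 @ P[0] @ x0), " closed-loop cost =", J)
```

The two printed numbers agree up to rounding, which reflects the statement that the optimal value is the quadratic form of the initial state defined by the first matrix of the recursion. The kernel condition of the GDRE is not checked separately here because G is positive definite for this data.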

Remark 2.

From Lemma 2 and Theorem 2, the LMI condition (6) is also necessary for the well-posedness of the LQ problem. Hence, the LMI condition (6) is a sufficient and necessary condition for the well-posedness of the LQ problem.

3.2. Attainability

The following result shows the equivalent relationship between the well-posedness and attainability of the LQ problem, the solvability of the GDRE, and the feasibility of the LMI condition and provides the optimal control by which the LQ problem is attainable, as well as the optimal value function.

Theorem 3.

The following are equivalent:

- (i)

- (ii)

- (iii)

- The LMI condition (6) is feasible.

- (iv)

- The GDRE (9) is solvable.

Proof.

According to Theorem 1 and Theorem 2, the equivalences are straightforward. Next, we prove that the LQ problem is attainable through the feedback control law (10). To this end, by introducing the symmetric matrices , , where the randomness of is generated by , and the randomness of is generated by , we have

Through the smoothing property of the conditional expectation, we have

since the randomness of , , and is generated by , but the randomness of is generated by . According to Lemma 1, we can obtain

Denote

and then

Summing both sides of Equation (12) over k from 0 to , we obtain

i.e.,

So,

Completing the square for , we have

where

Let solve the GDRE (9), and then when , we can obtain the optimal cost function

This shows that the optimal value equals and the LQ problem is attainable through the feedback control □

4. Examples

Example 1.

Consider the following one-dimensional system:

with the cost function

where the coefficients of , and r take values in where , , and are the state and control, respectively, is a Markovian process with transition probability density with initial probability density , where the set and . According to Theorem 2, the Riccati equation of the LQ problem in (13) and (14) is

and the optimal control is given by

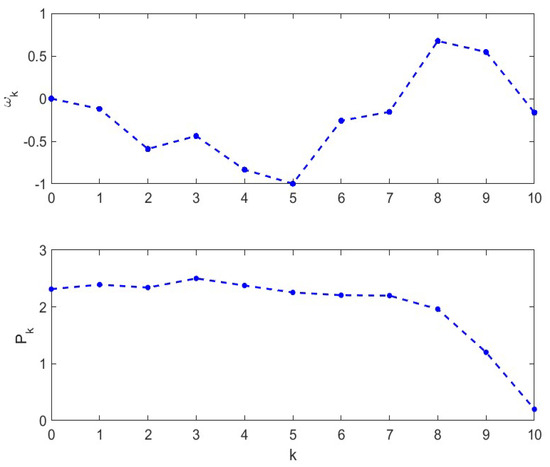

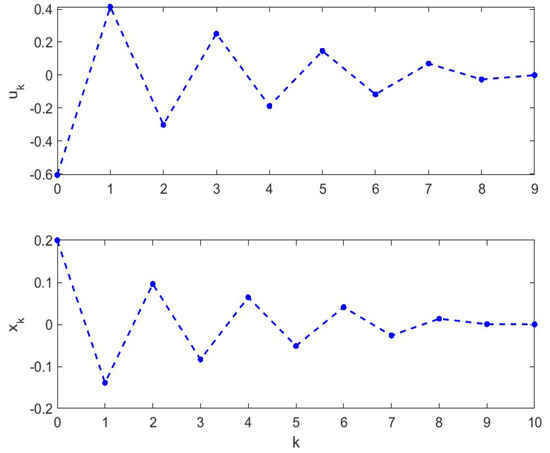

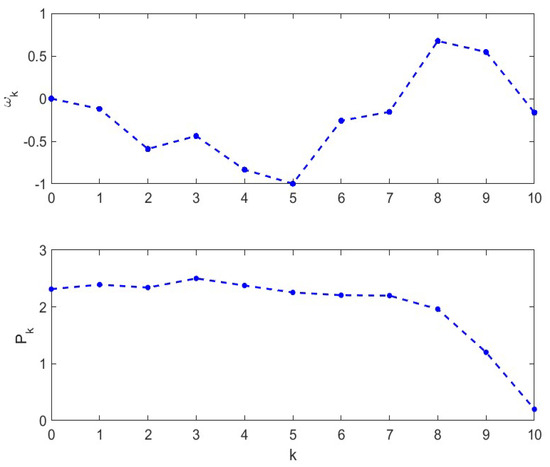

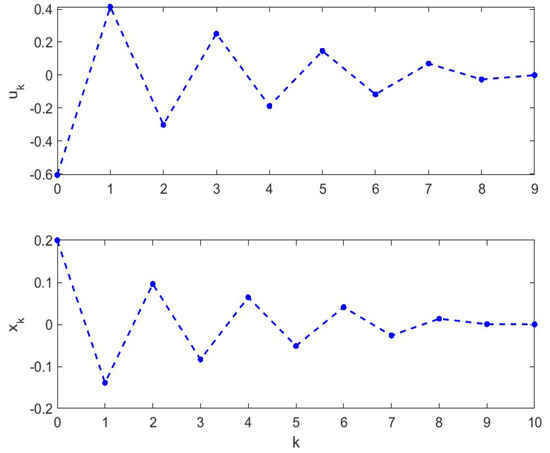

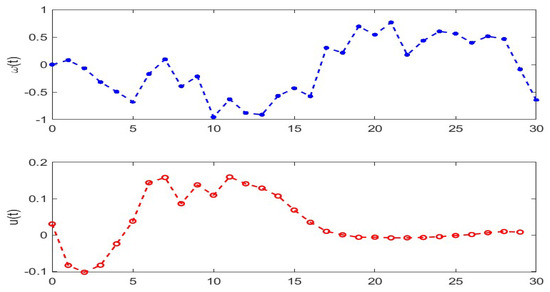

Figure 1 shows the profile of the Markovian process and the trajectories of which is the solution of the Riccati Equation (15) with coefficients , , , , , and . Figure 2 shows the corresponding trajectories of the optimal control and the state .

Figure 1.

Profiles of Markov process and solutions of for the LQ problem in (13) and (14).

Figure 2.

Trajectories of and for the LQ problem in (13) and (14).
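
A recursion of this kind can be sketched numerically for a scalar system. Because the equations of Example 1 are not reproduced in this text, the code below works with an assumed model, x_{k+1} = (a + c ξ_k) x_k + (b + d ξ_k) u_k with cost E[sum_{k<N}(q x_k² + r u_k²) + q_N x_N²], where u_k may depend on x_k and ξ_{k-1} but not on ξ_k, and where ξ takes values on a finite grid. The model, the grid approximation, and all names are hypothetical.

```python
import numpy as np

def markov_lq_scalar(a, b, c, d, q, r, qN, grid, T, p0, N):
    """Backward recursion for an ASSUMED scalar model (not taken from the paper):
        x[k+1] = (a + c*xi[k]) * x[k] + (b + d*xi[k]) * u[k],
        cost   = E[ sum_{k<N} (q*x[k]**2 + r*u[k]**2) + qN*x[N]**2 ].
    grid: possible values of xi; T[i, j] ~ P(xi[k]=grid[j] | xi[k-1]=grid[i]);
    p0: distribution of xi[0].
    Returns P and K with P[k][i], K[k][i] indexed by the value of xi[k-1]
    (P[0] and K[0] have a single entry because xi[-1] does not exist).
    """
    fa = a + c * grid                      # state coefficient for each value of xi[k]
    fb = b + d * grid                      # control coefficient for each value of xi[k]
    P_next = np.full(grid.shape, float(qN))  # terminal weight does not depend on xi
    P, K = [None] * (N + 1), [None] * N
    P[N] = P_next
    for k in range(N - 1, -1, -1):
        W = T if k > 0 else p0[None, :]    # conditional law of xi[k]
        m_aa = W @ (fa * fa * P_next)
        m_ab = W @ (fa * fb * P_next)
        m_bb = W @ (fb * fb * P_next)
        g = r + m_bb
        if np.any(g <= 0):                 # simplification: require strict positivity
            raise ValueError(f"control weight not positive at k={k}")
        K[k] = -m_ab / g                   # u[k] = K[k][i] * x[k]
        P[k] = q + m_aa - m_ab ** 2 / g
        P_next = P[k]
    return P, K

# Hypothetical two-state process with persistent transitions.
grid = np.array([-1.0, 1.0])
T = np.array([[0.9, 0.1], [0.1, 0.9]])
p0 = np.array([0.5, 0.5])
P, K = markov_lq_scalar(1.0, 0.5, 0.2, 0.1, 1.0, 1.0, 1.0, grid, T, p0, N=5)
print("P_1 as a function of xi_0:", P[1])
```

When every row of `T` equals `p0` and the process has zero mean and unit variance, the weights no longer depend on the previous value of the process and the recursion collapses to the classical scalar form for independent noise, consistent with the discussion in Remark 1.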

Example 2.

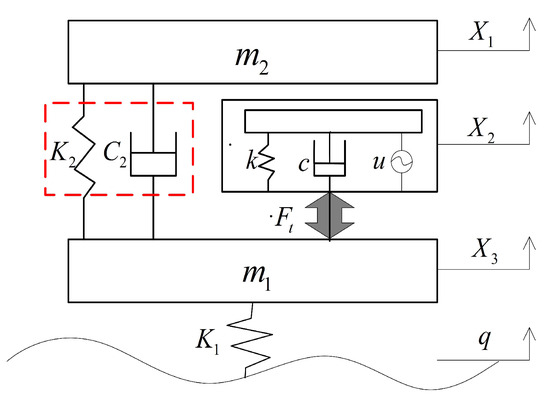

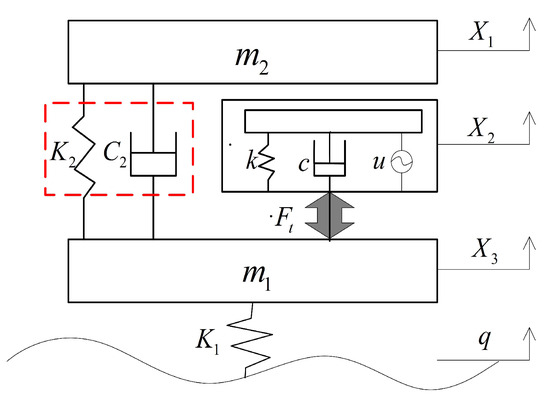

In automobile manufacturing industrial design, automobile suspension is an important piece of equipment. Figure 3 shows the hybrid active suspension studied in [44].

Figure 3.

Schematic diagram of hybrid active suspension mechanics.

represents the non-spring-loaded mass; represents the spring-loaded mass; is the equivalent stiffness of the tire; is the suspension stiffness; is the suspension damping; m, k, c, and u are, respectively, the mass block, spring stiffness, damper damping coefficient, and electromagnetic driving force of the electromagnetic reaction force actuator. When the electromagnetic coil is energized, the electromagnetic driving force u is generated. The force u drives the mass block m to vibrate so that the electromagnetic actuator as a whole generates a reaction force . q, , , and represent the displacement of the road surface, wheel, body, and electromagnetic actuator mass block, respectively. The external force of the electromagnetic actuator acts only on the non-spring-loaded mass as an active control force. Therefore, by controlling the magnitude and direction of the current of the electromagnetic actuator, the corresponding active control force can be generated to adjust the vibration of the entire system. According to Newton’s second law, the differential equation of motion (16) of the hybrid active suspension shown in Figure 3 can be obtained:

where is the displacement in the vertical direction of the road surface, and here, it is assumed that satisfies the following model [45]:

where is the road roughness coefficient and is the speed of the vehicle. By selecting , , , and as the state variables and taking as the notation, the system is discretized as

where the coefficient matrices A, B, and are obtained as follows:

and is the time difference between this moment and the previous moment; is the active control force as the control input; represents the uncertainty input, generally white noise; is a Markovian process; and its transition probability density function is .
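
A discretization with a time step of this kind can be sketched with a forward-Euler scheme. The exact scheme used for the suspension model is not recoverable from this text, so the code below only illustrates the idea on a hypothetical two-state mass-spring-damper; the parameter values, the deterministic continuous-time model, and the function names are assumptions, and the noise and Markov terms are omitted.

```python
import numpy as np

def euler_discretize(A_c, B_c, T):
    """Forward-Euler discretization of x' = A_c x + B_c u with step T:
    x[k+1] = (I + T*A_c) x[k] + T*B_c u[k]."""
    n = A_c.shape[0]
    return np.eye(n) + T * A_c, T * B_c

def simulate(A_d, B_d, x0, u, steps):
    """Iterate the discrete model under a constant scalar input u."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x = A_d @ x + B_d[:, 0] * u
    return x

# Hypothetical mass-spring-damper (not the suspension parameters of [44,45]).
m, k_s, c_s = 1.0, 4.0, 0.5
A_c = np.array([[0.0, 1.0], [-k_s / m, -c_s / m]])
B_c = np.array([[0.0], [1.0 / m]])
x0 = [1.0, 0.0]

A_d, B_d = euler_discretize(A_c, B_c, T=0.01)
print(A_d)
print(B_d)
print("state at t = 1:", simulate(A_d, B_d, x0, u=1.0, steps=100))
```

The Euler scheme is first-order accurate, so halving the step roughly halves the discretization error; an exact zero-order-hold discretization is a common alternative when the step cannot be made small.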

represents the output of the LQ metric, and our goal is to find the optimal control that minimizes under the premise that is as small as possible.

According to Theorem 3, we can deduce that the optimal control of the system is

where

So, we can obtain

According to [44,45], the coefficients of system (19) take the following values: , , , , , , , , and . By solving the Riccati Equation (21), the matrix is obtained as follows:

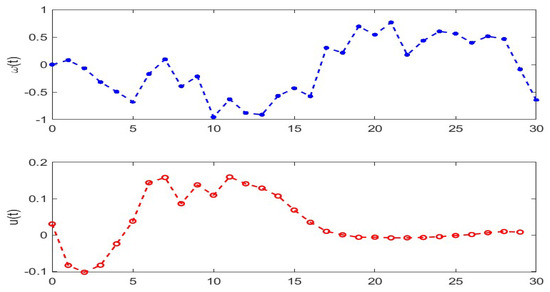

Figure 4 illustrates the profiles of the Markovian process with the transition probability density function and the trajectories of the optimal control in the optimal control problem in (18) and (19), with the optimal cost value .

Figure 4.

Profiles of and the trajectories of the optimal control of system (19).

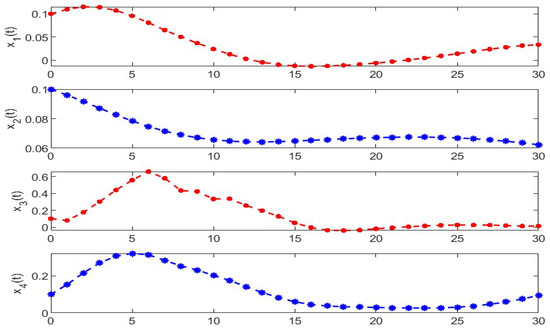

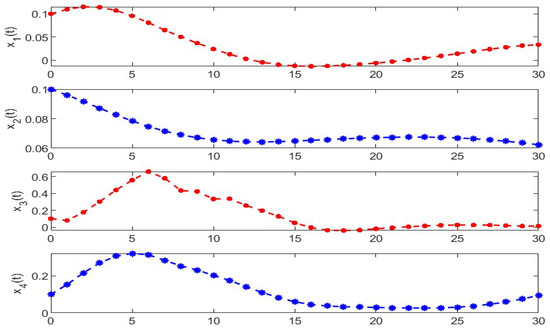

Figure 5 illustrates the trajectories of the optimal control and the trajectories of , , , and , which are the components of the optimal states of the optimal control problem in (18) and (19), with the initial state . This shows that under the effect of the optimal control, the fluctuations of the system’s states change in a small range, and less energy from the optimal controller is needed.

Figure 5.

Trajectories of the control of system (19) and the corresponding components of state .

In the above two examples, Example 1 covers one-dimensional systems and Example 2 covers four-dimensional systems. These two examples show that the main results of this paper can be used in different dimensional systems. However, in Example 2, the computational simulations focus on a primitive model of a suspension, where the connections with Markov processes are completely artificial. The more demanding applications of these systems need further exploration and research.

5. Conclusions

A type of discrete-time stochastic system driven by general Markov processes, known as Markov-process-driven systems, is proposed to describe more complex noises. By using the properties of the probability distribution of Markov processes, the LQ problem of discrete-time stochastic systems driven by homogeneous Markovian processes is studied. The equivalent relationship between the well-posedness and attainability of the LQ problem, the solvability of the GDRE, and the feasibility of the LMI condition is established. By applying the completing square method to these linear systems, the relationship between the well-posedness of the LQ problem and the LMI condition is obtained: if there exists a series of positive definite matrices satisfying the LMI condition, the LQ problem is well-posed. These results extend the GDRE to the general forms in which the probability distributions of Markov processes are needed to describe the impacts of Markovian properties. In addition, the equivalent relationship between the well-posedness of the LQ problem and the solvability of the GDRE is also obtained, and the necessity of the LMI condition for the well-posedness is also proven. Moreover, by using the properties of Markov processes and the method of completing squares, we have proven that such LQ problems are attainable and optimal state-feedback control can be obtained. Finally, a numerical example and a practical example are used to illustrate the effectiveness and validity of the theory.

Author Contributions

Conceptualization, X.L. and R.Z.; methodology, X.L.; software, X.L. and D.R.; validation, L.S., X.L. and R.Z.; formal analysis, L.S. and X.L.; investigation, W.Z.; resources, X.L.; data curation, X.L.; writing—original draft preparation, X.L. and L.S.; writing—review and editing, X.L. and R.Z.; visualization, W.Z.; supervision, X.L. and W.Z.; project administration, X.L.; funding acquisition, W.Z. and R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62273212; the Research Fund for the Taishan Scholar Project of Shandong Province of China; the Natural Science Foundation of Shandong Province of China, grant number ZR2020MF062.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the anonymous reviewers for their constructive suggestions to improve the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kalman, R.E. Contributions to the theory of optimal control. Bol. Soc. Mat. Mex. 1960, 5, 102–119.

- Lewis, F.L. Optimal Control; John Wiley & Sons: New York, NY, USA, 1986.

- Wonham, W.M. On a matrix Riccati equation of stochastic control. SIAM J. Control Optim. 1968, 6, 312–326.

- Luenberger, D.G. Linear and Nonlinear Programming, 2nd ed.; Addison-Wesley: Reading, MA, USA, 1984.

- De Souza, C.E.; Fragoso, M.D. On the existence of maximal solution for generalized algebraic Riccati equations arising in stochastic control. Syst. Control Lett. 1990, 14, 233–239.

- Chen, S.P.; Zhou, X.Y. Stochastic linear quadratic regulators with indefinite control weight costs. SIAM J. Control Optim. 2000, 39, 1065–1081.

- Rami, M.A.; Zhou, X.Y. Linear matrix inequalities, Riccati equations, and indefinite stochastic linear quadratic controls. IEEE Trans. Autom. Control 2000, 45, 1131–1143.

- Yao, D.D.; Zhang, S.Z.; Zhou, X.Y. Stochastic linear quadratic control via semidefinite programming. SIAM J. Control Optim. 2001, 40, 801–823.

- Rami, M.A.; Moore, J.B.; Zhou, X.Y. Indefinite stochastic linear quadratic control and generalized differential Riccati equation. SIAM J. Control Optim. 2001, 40, 1296–1311.

- Rami, M.A.; Chen, X.; Zhou, X.Y. Discrete-time indefinite LQ control with state and control dependent noises. J. Glob. Optim. 2002, 23, 245–265.

- Zhang, W. Study on generalized algebraic Riccati equation and optimal regulators. Control Theory Appl. 2003, 20, 637–640.

- Zhang, W.; Chen, B.S. On stabilizability and exact observability of stochastic systems with their applications. Automatica 2004, 40, 87–94.

- Huang, Y.; Zhang, W.; Zhang, H. Infinite horizon LQ optimal control for discrete-time stochastic systems. Asian J. Control 2008, 10, 608–615.

- Li, G.; Zhang, W. Discrete-time indefinite stochastic linear quadratic optimal control: Inequality constraint case. In Proceedings of the 32nd Chinese Control Conference, Xi’an, China, 26 July 2013; pp. 2327–2332.

- Huang, H.; Wang, X. LQ stochastic optimal control of forward-backward stochastic control system driven by Lévy process. In Proceedings of the IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference, Xi’an, China, 3 October 2016; pp. 1939–1943.

- Tan, C.; Zhang, H.; Wong, W. Delay-dependent algebraic Riccati equation to stabilization of networked control systems: Continuous-time case. IEEE Trans. Cybern. 2018, 48, 2783–2794.

- Tan, C.; Yang, L.; Zhang, F.; Zhang, Z.; Wong, W. Stabilization of discrete time stochastic system with input delay and control dependent noise. Syst. Control Lett. 2019, 123, 62–68.

- Zhang, T.; Deng, F.; Sun, Y.; Shi, P. Fault estimation and fault-tolerant control for linear discrete time-varying stochastic systems. Sci. China Inf. Sci. 2021, 64, 200201.

- Jiang, X.; Zhao, D. Event-triggered fault detection for nonlinear discrete-time switched stochastic systems: A convex function method. Sci. China Inf. Sci. 2021, 64, 200204.

- Dashtdar, M.; Rubanenko, O.; Rubanenko, O.; Hosseinimoghadam, S.M.S.; Belkhier, Y.; Baiai, M. Improving the Differential Protection of Power Transformers Based on Fuzzy Systems. In Proceedings of the 2021 IEEE 2nd KhPI Week on Advanced Technology (KhPIWeek), Kharkiv, Ukraine, 13 September 2021; pp. 16–21.

- Belkhier, Y.; Nath Shaw, R.; Bures, M.; Islam, M.R.; Bajaj, M.; Albalawi, F.; Alqurashi, A.; Ghoneim, S.S.M. Robust interconnection and damping assignment energy-based control for a permanent magnet synchronous motor using high order sliding mode approach and nonlinear observer. Energy Rep. 2022, 8, 1731–1740.

- Djouadi, H.; Ouari, K.; Belkhier, Y.; Lehouche, H.; Ibaouene, C.; Bajaj, M.; AboRas, K.M.; Khan, B.; Kamel, S. Non-linear multivariable permanent magnet synchronous machine control: A robust non-linear generalized predictive controller approach. IET Control Theory Appl. 2023, 2023, 1–15.

- Lin, X.; Zhang, T.; Zhang, W.; Chen, B.S. New Approach to General Nonlinear Discrete-Time Stochastic H∞ Control. IEEE Trans. Autom. Control 2019, 64, 1472–1486.

- Lv, Q. Well-posedness of stochastic Riccati equations and closed-loop solvability for stochastic linear quadratic optimal control problems. J. Differ. Equ. 2019, 267, 180–227.

- Tang, C.; Li, X.Q.; Huang, T.M. Solvability for indefinite mean-field stochastic linear quadratic optimal control with random jumps and its applications. Optim. Control Appl. Methods 2020, 41, 2320–2348.

- Chen, X.; Zhu, Y.G. Multistage uncertain random linear quadratic optimal control. J. Syst. Sci. Complex. 2020, 33, 1–26.

- Zhang, W.; Zhang, L.Q. A BSDE approach to stochastic linear quadratic control problem. Optim. Control Appl. Methods 2021, 42, 1206–1224.

- Meng, W.J.; Shi, J.T. Linear quadratic optimal control problems of delayed backward stochastic differential equations. Appl. Math. Optim. 2021, 84, 1–37.

- Li, Y.B.; Wahlberg, B.; Hu, X.M. Identifiability and solvability in inverse linear quadratic optimal control problems. J. Syst. Sci. Complex. 2021, 34, 1840–1857.

- Li, Y.C.; Ma, S.P. Finite and infinite horizon indefinite linear quadratic optimal control for discrete-time singular Markov jump systems. J. Frankl. Inst. 2021, 358, 8993–9022.

- Tan, C.; Zhang, S.; Wong, W.; Zhang, Z. Feedback stabilization of uncertain networked control systems over delayed and fading channels. IEEE Trans. Control Netw. Syst. 2021, 8, 260–268.

- Tan, C.; Yang, L.; Wong, W. Learning based control policy and regret analysis for online quadratic optimization with asymmetric information structure. IEEE Trans. Cybern. 2022, 52, 4797–4810.

- Bolzern, P.; Colaneri, P.; Nicolao, G.D. Almost sure stability of Markov jump linear systems with deterministic switching. IEEE Trans. Autom. Control 2013, 58, 209–214.

- Dong, S.; Chen, G.; Liu, M.; Wu, Z.G. Cooperative adaptive H∞ output regulation of continuous-time heterogeneous multi-agent Markov jump systems. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 3261–3265.

- Wu, X.; Shi, P.; Tang, Y.; Mao, S.; Qian, F. Stability analysis of semi-Markov jump stochastic nonlinear systems. IEEE Trans. Autom. Control 2022, 67, 2084–2091.

- Øksendal, B. Stochastic Differential Equations: An Introduction with Applications; Springer: New York, NY, USA, 2005.

- Bertoin, J. Lévy Processes; Cambridge University Press: New York, NY, USA, 1996.

- Han, Y.; Li, Z. Maximum Principle of Discrete Stochastic Control System Driven by Both Fractional Noise and White Noise. Discret. Dyn. Nat. Soc. 2020, 2020, 1959050.

- Ni, Y.H.; Li, X.; Zhang, J.F. Mean-field stochastic linear-quadratic optimal control with Markov jump parameters. Syst. Control Lett. 2016, 93, 69–76.

- Rami, M.A.; Chen, X.; Moore, J.B.; Zhou, X.Y. Solvability and asymptotic behavior of generalized Riccati equations arising in indefinite stochastic LQ controls. IEEE Trans. Autom. Control 2001, 46, 428–440.

- Albert, A. Conditions for positive and nonnegative definiteness in terms of pseudo-inverse. SIAM J. Appl. Math. 1969, 17, 434–440.

- Yu, X.; Yin, J.; Khoo, S. Generalized Lyapunov criteria on finite-time stability of stochastic nonlinear systems. Automatica 2019, 107, 183–189.

- Yin, J.; Khoo, S.; Man, Z.; Yu, X. Finite-time stability and instability of stochastic nonlinear systems. Automatica 2011, 47, 2671–2677.

- Bu, X.F.; Xie, Y.H. Study on characteristics of electromagnetic hybrid active vehicle suspension based on mixed H2/H∞ control. J. Manuf. Autom. 2018, 40, 129–133.

- Chen, M.; Long, H.Y.; Ju, L.Y.; Li, Y.G. Stochastic road roughness modeling and simulation in time domain. Mech. Eng. Autom. Chin. 2017, 201, 40–41.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).