Abstract

The Firefly Algorithm (FA) is a prominent nature-inspired, swarm-based technique for solving many real-world global optimization problems. This paper presents an overview of constraint-handling techniques. It also proposes a hybrid algorithm, the Stochastic Ranking with Improved Firefly Algorithm (SRIFA), for solving constrained real-world engineering optimization problems. The stochastic ranking approach is widely used to maintain a balance between penalty and fitness functions. FA is widely used because it converges faster than many other metaheuristic algorithms. The basic FA is modified by incorporating opposition-based learning and a random-scale factor to improve diversity and performance. Furthermore, SRIFA uses feasibility-based rules to maintain a balance between penalty and objective functions. To evaluate and analyze its effectiveness, SRIFA is tested on the 24 CEC 2006 standard functions and five well-known constrained engineering design problems from the literature. The overall computational results of SRIFA are better than those of the basic FA. Statistically, SRIFA significantly outperforms the other evolutionary algorithms on both the benchmark functions and the engineering design problems in terms of performance, quality and efficiency.

1. Introduction

Nature-Inspired Algorithms (NIAs) are very popular for solving real-life optimization problems, and designing efficient NIAs has therefore become an active research area. Evolutionary algorithms (EAs) and swarm intelligence (SI) algorithms are together commonly referred to as NIAs, and they are widely and efficiently used to solve optimization problems [1]. EAs are inspired by Darwinian theory; the most popular EAs are the genetic algorithm [2], evolutionary programming [3], evolution strategies [4] and genetic programming [5]. The term SI was coined by Gerardo Beni [6]; SI algorithms mimic the collective behavior of biological agents such as birds, fish and bees. The most popular SI algorithms are particle swarm optimization [7], the firefly algorithm [8], ant colony optimization [9], cuckoo search [10] and the bat algorithm [11]. Recently, many new population-based algorithms have been developed to solve various complex optimization problems, such as the killer whale algorithm [12], the water evaporation algorithm [13] and the crow search algorithm [14]. The No-Free-Lunch (NFL) theorem states that no single algorithm is best for all optimization problems. Consequently, choosing a suitable NIA for a particular optimization problem involves a lot of trial and error, and many NIAs have been studied and modified to improve their efficiency and convergence rate on particular problems. The two primary components of NIAs are intensification (exploitation) and diversification (exploration) [15]. Exploitation refers to finding good solutions within local search regions, whereas exploration refers to searching the global search space to generate diverse solutions [16].

Optimization algorithms can be classified in different ways. Broadly, they can be divided into two types: stochastic and deterministic [17]. Stochastic (in particular, metaheuristic) algorithms always involve some randomness; for example, the firefly algorithm uses α as a randomness parameter. Such algorithms usually provide only a probabilistic guarantee of finding the global minimum or maximum, which in general holds only as the running time tends to infinity. Deterministic algorithms, in contrast, aim to guarantee that the global optimal solution is found after a finite time. They follow a fixed procedure, so the search path and the values of the problem variables and the objective function are repeatable. Hill climbing is a good example of a deterministic algorithm: it follows the same path (from the same starting point to the same ending point) whenever the program is executed [18].

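Real-world engineering optimization problems contain a number of equality and inequality constraints, which restrict the search space. Such problems are termed Constrained-Optimization Problems (COPs). A minimization COP is defined as:

$$
\begin{aligned}
\min_{z}\quad & f(z), \qquad z = (z_1, \dots, z_n) \in \mathbb{R}^n \\
\text{subject to}\quad & g_j(z) \le 0, \qquad j = 1, \dots, m, \\
& h_k(z) = 0, \qquad k = 1, \dots, q, \\
& z_i^{L} \le z_i \le z_i^{U}, \qquad i = 1, \dots, n,
\end{aligned} \tag{1}
$$

where $f(z)$ is the objective function given in Equation (1), $z$ is the vector of $n$ design variables, $z_i^{L}$ and $z_i^{U}$ are the lower and upper bounds of $z_i$, $g_j$ are the $m$ inequality constraints and $h_k$ are the $q$ equality constraints.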

The feasible region $\mathcal{F}$ is the subset of the search space $\mathcal{S}$ whose points satisfy all $m$ inequality and $q$ equality constraints; any point in $\mathcal{S}$ is either feasible or infeasible. An inequality constraint $g_j$ is said to be active at a point $z$ if $g_j(z) = 0$. In the feasible region, all equality constraints are active at every point.

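In NIAs, most constraint-handling techniques deal with inequality constraints. Hence, each equality constraint is transformed into an inequality constraint using a tolerance value $\varepsilon$:

$$
|h_k(z)| - \varepsilon \le 0, \qquad k = 1, \dots, q,
$$

where $\varepsilon > 0$ is the allowed tolerance, chosen for the equality constraints of the given optimization problem. The constraint violation of an individual $z$ with respect to the $j$th constraint can then be calculated as

$$
G_j(z) =
\begin{cases}
\max\{0,\ g_j(z)\}, & j = 1, \dots, m, \\
\max\{0,\ |h_{j-m}(z)| - \varepsilon\}, & j = m+1, \dots, m+q.
\end{cases}
$$

The maximum violation of each constraint over all individuals in the population $P$ is given as:

$$
G_j^{\max} = \max_{z \in P} G_j(z), \qquad j = 1, \dots, m+q.
$$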
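The violation measures above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: constraints are passed as plain Python callables, the tolerance `eps = 1e-4` is an assumed default, and the helper names (`violations`, `total_violation`, `max_violation_per_constraint`) are hypothetical. The per-constraint violation is max(0, g_j(z)) for an inequality and max(0, |h_k(z)| − ε) for a relaxed equality; the summed violation returned by `total_violation` is the kind of quantity (φ, the sum of constraint violations) that the stochastic-ranking step compares.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]


def violations(z: Vector, ineq: List[Constraint], eq: List[Constraint],
               eps: float = 1e-4) -> List[float]:
    """Per-constraint violation of one individual z.

    Inequalities g_j(z) <= 0 contribute max(0, g_j(z)); equalities h_k(z) = 0 are
    relaxed to |h_k(z)| - eps <= 0 and contribute max(0, |h_k(z)| - eps).
    """
    g_part = [max(0.0, g(z)) for g in ineq]
    h_part = [max(0.0, abs(h(z)) - eps) for h in eq]
    return g_part + h_part


def total_violation(z: Vector, ineq: List[Constraint], eq: List[Constraint],
                    eps: float = 1e-4) -> float:
    """Sum of all per-constraint violations; 0.0 means z is feasible."""
    return sum(violations(z, ineq, eq, eps))


def max_violation_per_constraint(population: List[Vector], ineq: List[Constraint],
                                 eq: List[Constraint], eps: float = 1e-4) -> List[float]:
    """Largest violation of each constraint over the whole population."""
    rows = [violations(z, ineq, eq, eps) for z in population]
    return [max(column) for column in zip(*rows)]


# Example problem: g(z) = z0 + z1 - 1 <= 0 and h(z) = z0 - z1 = 0
ineq = [lambda z: z[0] + z[1] - 1.0]
eq = [lambda z: z[0] - z[1]]
print(total_violation([0.5, 0.5], ineq, eq))  # a feasible point
print(max_violation_per_constraint([[0.5, 0.5], [1.0, 0.2], [0.0, 0.3]], ineq, eq))
```

Keeping the per-constraint vector separate from its sum makes it easy to normalize each constraint by its population maximum before summing, if constraints live on very different scales.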

With this background, the rest of the paper is organized as follows: Section 2 explains the classification of Constraint-Handling Techniques (CHT); Section 3 gives an overview of constrained FA; Section 4 outlines the SR and OBL approaches; Section 5 describes the proposed SRIFA with OBL; and Section 6 presents the experimental setup and the computational results of SRIFA on the 24 CEC 2006 benchmark test functions, together with a comparison of SRIFA with existing metaheuristic algorithms in terms of performance and effectiveness. Section 7 examines the computational results of SRIFA on five engineering design problems. Finally, Section 8 concludes the paper.

2. Constraint-Handling Techniques (CHT)

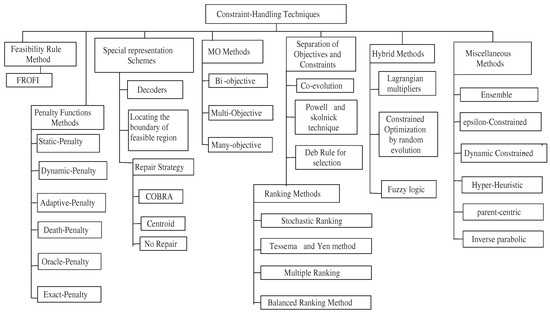


In this section, we survey the CHT approaches that have been adapted into NIAs to solve COPs. Their classification is shown in Figure 1. In the past few decades, various CHTs have been developed, particularly for EAs. Mezura-Montes and Coello conducted a comprehensive survey of constraint handling in NIAs [19].

Figure 1.

The classification of Constraint-Handling Techniques.

- Feasibility Rules Approach: One of the most effective CHTs was proposed by Deb [20]. When two solutions $z_u$ and $z_v$ are compared, $z_u$ is better than $z_v$ under the following conditions:

  - (a) $z_u$ is a feasible solution and $z_v$ is not.
  - (b) Both $z_u$ and $z_v$ are feasible, and $z_u$ has a better objective value than $z_v$.
  - (c) Both $z_u$ and $z_v$ are infeasible, and $z_u$ has a lower sum of constraint violations than $z_v$.

Wang and Li [21] proposed the Feasibility Rule with Objective Function Information (FROFI), in which Differential Evolution (DE) is used as the search algorithm together with the feasibility rule.

- Penalty Function Method: COPs can be transformed into unconstrained problems using a penalty function. Penalty methods include static-penalty, dynamic-penalty [22], adaptive-penalty [23], death-penalty [24], oracle-penalty and exact-penalty methods.

- Special representation scheme: This approach includes decoders, methods that locate the boundary of the feasible region [25] and repair methods [26]. Newer repair methods can be classified into three types: Constrained Optimization by Radial Basis Function Approximation (COBRA) [27], the centroid method and the no-pair method.

- Multi-objective (MO) Methods, also called vector optimization or Pareto optimization: These methods treat the COP as an optimization problem with two or more objectives [28]. There are roughly two types of MO methods: bi-objective and many-objective.

- Split-up objective and constraints: Several techniques handle the objective and the constraints separately, such as co-evolution, the Powell and Skolnick technique, Deb's rules and ranking methods. Ranking methods include stochastic ranking, the Tessema and Yen method, multiple ranking and the balanced ranking method.

- Hybrid Method: NIAs combined with a classical constrained method or a heuristic method are called hybrid methods. Examples include Lagrangian multipliers, constrained optimization by random evolution and fuzzy logic [25].

- Miscellaneous Methods: These include ensemble [29], ε-constrained [30], dynamic constrained, hyper-heuristic, parent-centric and inverse parabolic [31] methods.
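Deb's feasibility rules and a static penalty function, the two simplest CHTs listed above, can be sketched as follows. This is an illustrative Python sketch, assuming minimization and a precomputed total constraint violation `phi` (0 for feasible solutions); the function names and the fixed penalty coefficient `r` are hypothetical choices, not values from the paper.

```python
def is_feasible(phi: float) -> bool:
    """A solution is feasible when its total constraint violation is zero."""
    return phi == 0.0


def deb_better(f_a: float, phi_a: float, f_b: float, phi_b: float) -> bool:
    """True if solution a is preferred over solution b under Deb's rules (minimization)."""
    if is_feasible(phi_a) and not is_feasible(phi_b):
        return True                 # rule (a): feasible beats infeasible
    if is_feasible(phi_a) and is_feasible(phi_b):
        return f_a < f_b            # rule (b): better objective value wins
    if not is_feasible(phi_a) and not is_feasible(phi_b):
        return phi_a < phi_b        # rule (c): smaller total violation wins
    return False                    # a is infeasible while b is feasible


def static_penalty(f: float, phi: float, r: float = 1e6) -> float:
    """Penalized objective f + r * phi with a fixed (static) coefficient r."""
    return f + r * phi


# A feasible solution wins even though its objective value is worse
print(deb_better(5.0, 0.0, 1.0, 0.3))
```

The comparator needs no penalty coefficient, which is the main practical appeal of Deb's rules; a static penalty's behavior depends strongly on the choice of `r`.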

3. Overview of Constrained FA

3.1. Basic FA

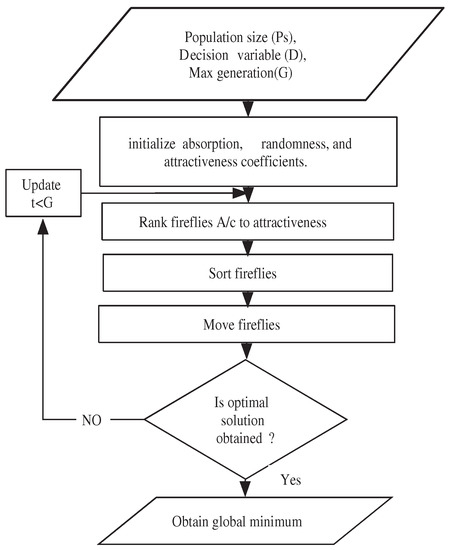

FA is a swarm-based NIA proposed by Xin-She Yang [8]. Fister et al. [32] carried out a comprehensive review of FA. The pseudo-code of the basic FA is shown in Figure 2, and its mathematical formulation is as follows:

Figure 2.

Basic Firefly Algorithm (BFA).

The attractiveness of a firefly is determined by its brightness, which is associated with the fitness function. For two fireflies u and v at distance $r_{uv} = \lVert z_u - z_v \rVert$, the light intensity decreases with distance as:

$$
I(r) = I_0 \, e^{-\gamma r^2},
$$

where $I$ is the light intensity, $\gamma$ is the light-absorption coefficient, $r$ is the distance between fireflies u and v, and $I_0$ is the light intensity at $r = 0$. The attractiveness between two fireflies u and v (u being more attractive than v) is defined as:

$$
\beta(r) = \beta_0 \, e^{-\gamma r^2},
$$

where $\beta_0$ is the attractiveness at $r = 0$.

The movement of the fireflies is based on attractiveness: when firefly u is less attractive (less bright) than firefly v, firefly u moves towards firefly v according to Equation (10):

$$
z_u^{t+1} = z_u^{t} + \beta_0 \, e^{-\gamma r_{uv}^2} \left( z_v^{t} - z_u^{t} \right) + \alpha \, \epsilon_u^{t}, \tag{10}
$$

where the second term is due to attraction, the third term is the randomization term with randomness parameter $\alpha$, and $\epsilon_u^{t}$ is a vector of random numbers drawn from a uniform distribution between 0 and 1.
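The attraction-and-movement rule of the basic FA (Equation (10)) can be sketched in Python as below. It is an illustrative sketch assuming minimization (a lower objective value means a brighter firefly), Euclidean distance and a uniform random term in [0, 1) as described in the text; the function names and default parameter values are hypothetical, not SRIFA's calibrated settings.

```python
import math
import random
from typing import Callable, List, Optional, Sequence


def attractiveness(z_u: Sequence[float], z_v: Sequence[float],
                   beta0: float, gamma: float) -> float:
    """beta(r) = beta0 * exp(-gamma * r^2), r being the Euclidean distance."""
    r2 = sum((a - b) ** 2 for a, b in zip(z_u, z_v))
    return beta0 * math.exp(-gamma * r2)


def move_firefly(z_u: Sequence[float], z_v: Sequence[float], beta0: float = 1.0,
                 gamma: float = 1.0, alpha: float = 0.2,
                 rng: Optional[random.Random] = None) -> List[float]:
    """Equation (10): move firefly u towards the brighter firefly v."""
    if rng is None:
        rng = random.Random()
    beta = attractiveness(z_u, z_v, beta0, gamma)
    # epsilon ~ U(0, 1) as stated in the text; many FA codes use (rand - 0.5) instead
    return [a + beta * (b - a) + alpha * rng.random() for a, b in zip(z_u, z_v)]


def fa_generation(pop: List[Sequence[float]], objective: Callable[[Sequence[float]], float],
                  beta0: float = 1.0, gamma: float = 1.0, alpha: float = 0.2,
                  rng: Optional[random.Random] = None) -> List[List[float]]:
    """One generation of the basic FA for minimization (lower objective = brighter)."""
    if rng is None:
        rng = random.Random()
    pop = [list(z) for z in pop]
    light = [objective(z) for z in pop]
    for u in range(len(pop)):
        for v in range(len(pop)):
            if light[v] < light[u]:            # v is brighter than u
                pop[u] = move_firefly(pop[u], pop[v], beta0, gamma, alpha, rng)
                light[u] = objective(pop[u])
    return pop


sphere = lambda z: sum(x * x for x in z)
print(fa_generation([[2.0, 2.0], [0.5, 0.5], [-1.0, 3.0]], sphere, rng=random.Random(0)))
```

The double loop over u and v is what makes a generation of FA cost O(n²) comparisons for a population of size n. Note that a random term drawn from U(0, 1) always pushes coordinates upwards; Yang's original formulation centres it as α(rand − 1/2).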

3.2. Constrained FA

FA combined with CHTs has been widely used for solving COPs. Some typical constrained FAs (CFAs) are briefly discussed below.

An adaptive FA for solving engineering optimization problems is discussed in [33]. Costa et al. [34] used penalty-based techniques with FA to evaluate different test functions for global optimization. Brajevic et al. [35] combined feasibility rules with FA for COPs. Kulkarni et al. [36] proposed a modified, probability-based feasibility rule for solving COPs. The upgraded FA (UFA) was proposed to solve mechanical engineering optimization problems [37]. Chou and Ngo designed a multidimensional optimization structure with a modified FA (MFA) [38].

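| Algorithm 1 Stochastic Ranking Approach (SRA) |
| --- |
| 1: Input: population size $N$; probability $P_f$ that balances the dominance of two solutions between objective and penalty; $\phi$, the sum of constraint violations; $m$, the number of individuals to be ranked |
| 2: Goal: rank the individuals based on $P_f$ and $\phi$ |
| 3: Initialize $I_j = j$ for $j = 1, \dots, m$ /* $I_j$ indexes the individual whose variables $z$ are evaluated by $f(z)$ */ |
| 4: for $i = 1$ to $N$ do |
| 5: for $k = 1$ to $m - 1$ do |
| 6: draw $R \sim U(0, 1)$ /* random number generator */ |
| 7: if ($\phi(I_k) = \phi(I_{k+1}) = 0$) or ($R < P_f$) then |
| 8: if $f(I_k) > f(I_{k+1})$ then swap($I_k$, $I_{k+1}$) end if |
| 9: else if $\phi(I_k) > \phi(I_{k+1})$ then |
| 10: swap($I_k$, $I_{k+1}$) |
| 11: end if |
| 12: end for |
| 13: if no swap was made in this sweep then break end if |
| 14: end for |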

4. Stochastic Ranking and Opposite-Based Learning (OBL)

This section presents an overview of SR and OBL.

4.1. Stochastic Ranking Approach (SRA)

The SRA, introduced by Runarsson and Yao [39], balances the objective (fitness) function against the dominance of the penalty. It ranks the individuals with a simple bubble-sort procedure. To rank the individuals, a probability $P_f$ is introduced, which allows individuals in the infeasible region of the search space to be compared by their objective values. When two adjacent individuals are compared, three cases can arise:

(a) if both individuals are in the feasible region, the one with the smaller objective value is given the higher priority; (b) if both individuals are in the infeasible region, the one with the smaller constraint violation ($\phi$) is given the higher priority; and (c) if one individual is feasible and the other is infeasible, the feasible individual is given the higher priority. In cases (b) and (c), with probability $P_f$ the two individuals are compared by objective value instead. The pseudo-code of the SRA is given in Algorithm 1.
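Algorithm 1 can be sketched in Python as follows. This is a minimal sketch assuming minimization, precomputed objective values `f` and total violations `phi` (the sum of constraint violations), and a number of sweeps that defaults to the population size; the default `p_f = 0.45` is only an illustrative value (Table 2 lists the value actually used by SRIFA), and the function name is hypothetical.

```python
import random
from typing import List, Optional, Sequence


def stochastic_rank(f: Sequence[float], phi: Sequence[float], p_f: float = 0.45,
                    sweeps: Optional[int] = None,
                    rng: Optional[random.Random] = None) -> List[int]:
    """Return individual indices ordered best-first by stochastic ranking (minimization).

    f[j] is the objective value and phi[j] the total constraint violation of individual j.
    """
    if rng is None:
        rng = random.Random()
    m = len(f)
    idx = list(range(m))                       # I_j = j
    for _ in range(m if sweeps is None else sweeps):
        swapped = False
        for k in range(m - 1):
            a, b = idx[k], idx[k + 1]
            both_feasible = phi[a] == 0 and phi[b] == 0
            if both_feasible or rng.random() < p_f:
                if f[a] > f[b]:                # compare by objective value
                    idx[k], idx[k + 1] = b, a
                    swapped = True
            elif phi[a] > phi[b]:              # compare by constraint violation
                idx[k], idx[k + 1] = b, a
                swapped = True
        if not swapped:                        # no swap in this sweep: stop early
            break
    return idx


f = [3.0, 1.0, 2.0, 0.0]
phi = [0.0, 0.0, 0.0, 0.5]                     # individual 3 is infeasible
print(stochastic_rank(f, phi, rng=random.Random(1)))
```

With `p_f = 0` the procedure reduces to a deterministic feasibility-first ordering that mirrors Deb's rules, and with `p_f = 1` it sorts purely by objective value; intermediate values let some good infeasible individuals survive.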

4.2. Opposition-Based Learning (OBL)

OBL was proposed by Tizhoosh and is inspired by the relationship between a candidate solution and its opposite. The main aim of OBL is to reach an optimal solution of the fitness function and to enhance the performance of the algorithm [40]. Let $z \in [z^{L}, z^{U}]$ be a real number; its opposite, denoted $\breve{z}$, is defined as

$$
\breve{z} = z^{L} + z^{U} - z.
$$

Let $P = (z_1, z_2, \dots, z_n)$ be an n-dimensional decision vector, in which $z_i \in [z_i^{L}, z_i^{U}]$ and $i = 1, 2, \dots, n$. The opposite vector $\breve{P} = (\breve{z}_1, \breve{z}_2, \dots, \breve{z}_n)$ is defined by

$$
\breve{z}_i = z_i^{L} + z_i^{U} - z_i, \qquad i = 1, 2, \dots, n.
$$
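Opposition-based initialization can be sketched as follows: draw a random population, form the opposite of every point (for z in [lower, upper], the opposite is lower + upper − z, applied per coordinate), and keep the best n points of the union. Which score SRIFA uses to select the survivors is not stated here, so `score` is an assumed, user-supplied callable (for example the objective or a penalized objective); the function names are hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence


def opposite(z: Sequence[float], lower: Sequence[float],
             upper: Sequence[float]) -> List[float]:
    """Opposite point: each coordinate z_i becomes lower_i + upper_i - z_i."""
    return [lo + up - zi for zi, lo, up in zip(z, lower, upper)]


def obl_init(n: int, lower: Sequence[float], upper: Sequence[float],
             score: Callable[[Sequence[float]], float],
             rng: Optional[random.Random] = None) -> List[List[float]]:
    """Keep the n best of n random points and their n opposite points."""
    if rng is None:
        rng = random.Random()
    population = [[lo + rng.random() * (up - lo) for lo, up in zip(lower, upper)]
                  for _ in range(n)]
    candidates = population + [opposite(z, lower, upper) for z in population]
    candidates.sort(key=score)          # lower score = better (assumed criterion)
    return candidates[:n]


# Usage: 5 fireflies in [-5, 5]^2, scored by a shifted sphere (illustrative only)
shifted_sphere = lambda z: sum((x - 3.0) ** 2 for x in z)
print(obl_init(5, [-5.0, -5.0], [5.0, 5.0], shifted_sphere, rng=random.Random(42)))
```

Evaluating the opposites doubles the cost of initialization only, which is the trade-off OBL makes instead of permanently enlarging the population.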

5. The Proposed Algorithm

A key factor in NIAs is maintaining the diversity of the population in the search space to avoid premature convergence. From the intensification and diversification viewpoints, an increase in population diversity indicates that an NIA is in the diversification (exploration) phase, while a decrease in population diversity indicates that it is in the intensification (exploitation) phase. An adequate balance between exploration and exploitation can be achieved by maintaining a diverse population. To balance intensification and diversification, different approaches have been proposed, such as diversity maintenance, diversity learning, diversity control and direct approaches [16]. Diversity maintenance can be performed using a varying population size, duplicate removal and the selection of a randomness parameter.

On the other hand, when the basic FA runs with insufficient diversification (exploration), the solutions can become stuck in local optima or suboptimal regions. To address these issues, a new hybrid algorithm is proposed by improving the basic FA.

5.1. Varying Size of Population

A very common and simple technique is to increase the population size in NIAs to maintain the diversity of population. However, due to an increase in population size, computation time required for the execution of NIAs is also increased. To overcome this problem, the OBL concept is applied to improve the efficiency and performance of basic FA at the initialization phase.

5.2. Improved FA with OBL

Premature convergence to a local optimum is a common problem in population-based algorithms. In the basic FA, every firefly moves towards the brighter ones with an added random step, so population diversity is high at the start. After some generations, however, the population diversity decreases, which can trap the solutions in local optima; this loss of diversification leads to premature convergence. To overcome this problem, OBL is applied in the initial phase of FA to increase the diversity of the firefly individuals.

The proposed Improved Firefly Algorithm (IFA) aims to balance intensification and diversification for better performance and efficiency. For exploration, the randomization parameter is used to escape local optima and to explore the global search space. To balance intensification and diversification, a random-scale factor (R) is applied when generating the population randomly. Das et al. [41] used a similar approach in DE:

where $z_{u,v}$ is the vth parameter of the uth firefly, $z_v^{U}$ and $z_v^{L}$ are the upper and lower bounds of the vth parameter, and rand(0, 1) is a uniformly distributed random number between 0 and 1.

The movement of fireflies using Equation (10) will be modified as

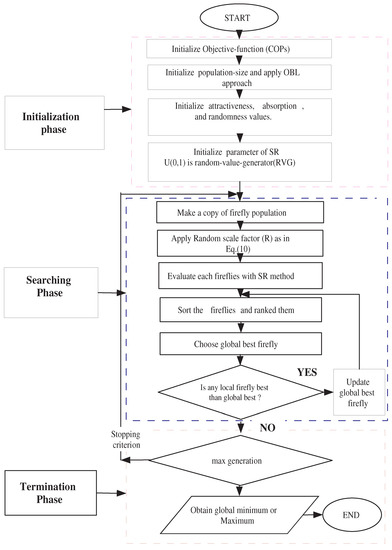

5.3. Stochastic Ranking with an Improved Firefly Algorithm (SRIFA)

Many studies on solving COPs with EAs and FA have been published in the literature. However, handling constraints effectively with these approaches remains challenging. FA produces good results on COPs and is well known for its fast convergence rate [42]. Given the fast convergence of FA and the popularity of stochastic ranking as a CHT, we propose a hybrid technique for constrained optimization problems, the Stochastic Ranking with an Improved Firefly Algorithm (SRIFA). The flowchart of SRIFA is shown in Figure 3.

Figure 3.

The flowchart of the SRIFA algorithm.

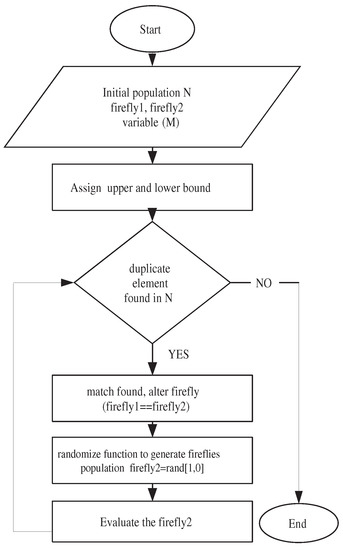

5.4. Duplicate Removal in SRIFA

Duplicate individuals are eliminated from the population, and newly generated random individuals are inserted in their place. Figure 4 shows the duplicate-removal procedure in SRIFA.

Figure 4.

The flowchart of duplication removal in SRIFA.
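The duplicate-removal step in Figure 4 can be approximated by the sketch below. It assumes that two individuals are duplicates when all coordinates agree within a small tolerance `tol`, and that replacements are drawn uniformly within the variable bounds; both are assumptions rather than details taken from the flowchart, and the function name is hypothetical.

```python
import random
from typing import List, Optional, Sequence


def remove_duplicates(pop: List[Sequence[float]], lower: Sequence[float],
                      upper: Sequence[float], tol: float = 1e-12,
                      rng: Optional[random.Random] = None) -> List[List[float]]:
    """Keep the first copy of each individual; replace later copies with random ones."""
    if rng is None:
        rng = random.Random()
    result: List[List[float]] = []
    for z in pop:
        duplicate = any(all(abs(a - b) <= tol for a, b in zip(z, kept)) for kept in result)
        if duplicate:
            # fresh individual drawn uniformly inside the variable bounds
            result.append([lo + rng.random() * (up - lo) for lo, up in zip(lower, upper)])
        else:
            result.append(list(z))
    return result


print(remove_duplicates([[1.0, 1.0], [1.0, 1.0], [2.0, 2.0]], [0.0, 0.0], [10.0, 10.0],
                        rng=random.Random(0)))
```

The pairwise check costs O(n²·D) per call for n individuals of dimension D, which matches the O(n²) per-generation cost of the FA loop itself.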

6. Experimental Results and Discussions

To compare the performance of SRIFA with that of existing NIAs, the proposed algorithm is applied to the 24 numerical benchmark test functions of CEC 2006 [43]. These benchmark functions have been studied thoroughly by various authors.

Table 1 summarizes the main characteristics of the 24 test functions: the fitness function (f(z)); the number of variables or dimensions (D); the feasibility ratio $\rho$ between the feasible region (Feas) and the search region (SeaR); the numbers of linear inequality (LI), nonlinear inequality (NI), linear equality (LE) and nonlinear equality (NE) constraints; the number of active constraints (a); and the optimal value of the fitness function (OPT). For convenience, all equality constraints $h_k(z) = 0$ are transformed into inequality constraints $|h_k(z)| - \varepsilon \le 0$, where $\varepsilon$ is a tolerance value ($\varepsilon = 0.0001$ in CEC 2006) whose purpose is to make a feasible solution attainable [43].
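The feasibility ratio can be estimated by uniform random sampling of the search region, which is how such ratios are typically obtained for benchmark suites. The sketch below is an illustrative Monte Carlo estimator; the function name, the default sample count and the toy constraint are hypothetical.

```python
import random
from typing import Callable, Sequence


def estimate_feasibility_ratio(is_feasible: Callable[[Sequence[float]], bool],
                               lower: Sequence[float], upper: Sequence[float],
                               samples: int = 1_000_000, seed: int = 0) -> float:
    """Monte Carlo estimate of rho = |feasible region| / |search region| (box sampling)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        z = [lo + rng.random() * (up - lo) for lo, up in zip(lower, upper)]
        if is_feasible(z):
            hits += 1
    return hits / samples


# Toy example: unit square with the single constraint z0 + z1 - 1 <= 0 (exact rho = 0.5)
rho = estimate_feasibility_ratio(lambda z: z[0] + z[1] - 1.0 <= 0.0,
                                 [0.0, 0.0], [1.0, 1.0], samples=100_000)
print(f"estimated rho = {rho:.3f}")
```

Because an equality constraint defines a set of zero volume, uniform sampling essentially never satisfies it exactly, so the ratio is (close to) zero for problems with equality constraints; this is one motivation for the ε-relaxation mentioned above.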

Table 1.

Characteristics of the 24 standard functions.

6.1. Experimental Design

To investigate the performance and effectiveness of SRIFA, it is tested on 24 standard functions and five well-known engineering design problems. All experiments were run on an Intel Core(TM) processor @ 3.40 GHz with 8 GB RAM; SRIFA was implemented in MATLAB 8.4 (R2014b) under Windows 7 (x64). Table 2 lists the parameters used in the computational experiments of SRIFA. For all experiments, 30 independent runs were performed for each problem. To evaluate the efficiency and effectiveness of SRIFA, several statistics were used: best, worst, mean, global optimum and standard deviation (Std). Results in bold indicate the best results obtained.

Table 2.

Experimental parameters for SRIFA.

6.2. Calibration of SRIFA Parameters

In this section, we calibrate the parameters of SRIFA. Following the strategy of SRIFA described in Figure 3, SRIFA has eight parameters: population size ($N$), initial randomization value ($\alpha_0$), initial attractiveness value ($\beta_0$), absorption coefficient ($\gamma$), maximum number of generations ($G$), total number of function evaluations (NFEs), probability ($P_f$) and varphi ($\phi$). To derive suitable values, we fine-tuned each parameter over the following ranges: $N$ from 5 to 100 with a step of 5; $\alpha_0$ from 0.10 to 1.00 with a step of 0.10; $\beta_0$ from 0.10 to 1.00 with a step of 0.10; $\gamma$ from 0.01 to 1 with a step of 0.01, and then from 5 to 100; $G$ from 1000 to 10,000 with a step of 1000; NFEs from 1000 to 240,000; $P_f$ from 0.1 to 0.9 with a step of 0.1; and $\phi$ from 0.1 to 1.0 with a step of 0.1. The parameter values were selected according to the best solutions SRIFA obtained on the various test functions, and Table 2 lists the best parameter values used in the SRIFA experiments.

6.3. Experimental Results of SRIFA Using a GKLS (GAVIANO, KVASOV, LERA and SERGEYEV) Generator

In this experiment, SRIFA is compared with other algorithms using two approaches: operational characteristics and aggregated operational zones. Operational characteristics are used to compare deterministic algorithms, whereas aggregated operational zones extend the idea of operational characteristics to compare metaheuristic algorithms.
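The operational characteristic of an algorithm on a class of test problems can be computed as the fraction of problems solved within a given budget of trials (evaluations or generations). The sketch below also builds a simple aggregated zone for a stochastic algorithm from several runs by taking the minimum, mean and maximum of the per-run characteristics; this envelope construction is an assumption about how the zone is formed, the precise definitions in [45] may differ, and all names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def operational_characteristic(evals: Sequence[Optional[int]],
                               budgets: Sequence[int]) -> List[float]:
    """Fraction of test problems solved within each budget k (None = never solved)."""
    n = len(evals)
    return [sum(1 for e in evals if e is not None and e <= k) / n for k in budgets]


def aggregated_operational_zone(runs: Sequence[Sequence[Optional[int]]],
                                budgets: Sequence[int]
                                ) -> Tuple[List[float], List[float], List[float]]:
    """Lower envelope, mean and upper envelope of the per-run characteristics."""
    curves = [operational_characteristic(r, budgets) for r in runs]
    per_budget = list(zip(*curves))
    lower = [min(c) for c in per_budget]
    mean = [sum(c) / len(c) for c in per_budget]
    upper = [max(c) for c in per_budget]
    return lower, mean, upper


# Trials needed by one run on four problems; the third problem was never solved
print(operational_characteristic([10, 50, None, 200], [10, 100, 1000]))
```

A deterministic method yields a single curve, whereas repeated runs of a stochastic method yield a band; the upper and lower envelopes show how much the outcome depends on the random seed.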

The proposed algorithm is compared with some widely used NIAs (DE, PSO and FA) and with well-known deterministic algorithms: DIRECT, DIRECT-L (its locally-biased version) and ADC (adaptive diagonal curves). The GKLS test-class generator is used in these experiments; it randomly generates classes of 100 test instances with a prescribed number of local minima and a given dimension. Eight classes (simple and hard) are used, with dimensions n = 2, 3, 4 and 5 [45]. Each class contains 100 functions and is defined by the following control parameters: the problem dimension (N), the radius of the convergence region, the distance (r) between the paraboloid vertex and the global minimum, and the tolerance. The values of the control parameters are given in Table 3.

Table 3.

Control parameters of the GKLS generator.

Table 4 reports the mean number of generations required by each deterministic and metaheuristic algorithm to solve the 100 instances of each class produced by the GKLS generator. An entry “>m(i)” indicates that the algorithm failed to solve the global optimization problem i times in 100 × 100 instances (i.e., 1000 runs for deterministic and 10,000 runs for metaheuristic algorithms). The maximum number of generations is set to be . SRIFA requires fewer generations on average than the other algorithms, indicating that it performs better than the compared deterministic and metaheuristic algorithms.

Table 4.

Statistical results obtained by deterministic and metaheuristic algorithms using GKLS generator.

6.4. Experimental Results of FA and SRIFA

In our computational experiment, the proposed SRIFA is compared with the basic FA. SRIFA differs from the basic FA in the following points. First, SRIFA uses the OBL technique to enhance the initial population, while FA uses fixed generation to search for optimal solutions. Second, SRIFA uses a chaotic map (the logistic map) to improve the absorption coefficient $\gamma$, while FA applies a fixed $\gamma$ throughout its iterations to explore the global solution. Third, a random scale factor (R) is used to enhance the performance of SRIFA. Finally, SRIFA applies Deb's rules in the form of the stochastic ranking method.
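A logistic-map sequence of the kind mentioned above can be generated as follows. The text does not state how the chaotic values are mapped to γ, so the linear scaling in `chaotic_gammas` (and its `gamma_scale` parameter) is a hypothetical choice, as are both function names. With μ = 4 the map is chaotic on (0, 1) for most seeds, but seeds such as 0, 0.25, 0.5, 0.75 and 1 fall onto fixed points and should be avoided.

```python
from typing import List


def logistic_sequence(x0: float, steps: int, mu: float = 4.0) -> List[float]:
    """Iterate the logistic map x_{t+1} = mu * x_t * (1 - x_t), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(mu * xs[-1] * (1.0 - xs[-1]))
    return xs


def chaotic_gammas(x0: float, steps: int, gamma_scale: float = 1.0) -> List[float]:
    """Hypothetical schedule: absorption coefficient gamma_t = gamma_scale * x_t."""
    return [gamma_scale * x for x in logistic_sequence(x0, steps)]


print(chaotic_gammas(0.7, 5))
```

Compared with a fixed γ, a chaotic sequence keeps changing how quickly attraction decays with distance, which alternates between more local and more global moves without adding a tuning schedule.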

The experimental results of SRIFA and the basic FA are shown in Table 5. The comparison between SRIFA and FA is conducted on the 24 CEC (Congress on Evolutionary Computation) 2006 benchmark test functions [43]. Table 5 reports the global optimum of each CEC 2006 function together with the best, worst, mean and standard deviation (Std) of the results produced by SRIFA and FA over 25 runs.

Table 5.

Statistical results obtained by SRIFA and FA on 24 benchmark functions over 25 runs.

Table 5 shows that SRIFA provides better results than the basic FA on the benchmark test functions. Over 25 runs, the proposed algorithm found the optimal or best solution on all test functions except G20 and G22, for which no optimal solution was found. Note that ‘N-F’ denotes that no feasible result was found.

6.5. Comparison of SRIFA with Other NIAs

To investigate the performance and effectiveness of SRIFA, its results are compared with those of five metaheuristic algorithms: stochastic ranking with particle swarm optimization (SRPSO) [46], self-adaptive mix of particle swarm optimization (SAMO-PSO) [47], the upgraded firefly algorithm (UFA) [37], an ensemble of constraint-handling techniques for evolutionary programming (ECHT-EP2) [48] and a novel differential evolution algorithm (NDE) [49]. For a fair comparison, the same number of function evaluations (NFEs = 240,000) is used for all algorithms.

The statistical outcomes achieved by SRPSO, SAMO-PSO, UFA, ECHT-EP2, NDE and SRIFA on the 24 standard functions are listed in Table 6. Bold entries indicate the best or optimal solution, and N-A denotes “Not Available”. Functions G20 and G22 are excluded from the analysis because no feasible results were obtained for them.

Table 6.

Statistical outcomes achieved by SRPSO, SAMO-PSO, ECHT-EP2, UFA, NDE and SRIFA.

Comparing SRIFA with SRPSO on the 22 functions in Table 6 shows that SRIFA's statistical outcomes are better in most cases. SRIFA obtained the best or the same optimal values among the compared metaheuristic algorithms. In terms of mean outcomes, SRIFA performs better than all four remaining algorithms (SAMO-PSO, ECHT-EP2, UFA and NDE) on test functions G02, G14, G17, G21 and G23. SRIFA obtained a worse mean outcome than NDE on test function G19. On all remaining test functions, SRIFA performed at least as well as all compared metaheuristic algorithms.

6.6. Statistical Analysis with Wilcoxon’s and Friedman Test

Statistical tests can be classified as parametric or non-parametric (also known as distribution-free). Parametric tests make assumptions about the distribution of the data, whereas non-parametric tests do not. We analyzed the data with non-parametric tests: the Wilcoxon signed-rank test (pairwise comparison) and the Friedman test (multiple comparisons) [50].

Table 7 shows the outcomes of the Wilcoxon test between SRIFA and the other five metaheuristic algorithms. $R^+$ is the sum of ranks of the functions on which the first algorithm performs better than the second, whereas $R^-$ is the sum of ranks of the functions on which the second algorithm performs better than the first. Table 7 shows that $R^+$ is higher than $R^-$ in all cases, so the comparison favors SRIFA over every compared metaheuristic algorithm; whether each difference is significant is judged from the test's p-value at the chosen significance level.
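The rank sums $R^+$ and $R^-$ can be computed as in the sketch below, assuming both algorithms minimize and each list holds one summary value (for example, the mean) per test function. Zero differences are discarded here (the classic signed-rank convention); some tutorials instead split the ranks of zero differences evenly between $R^+$ and $R^-$, so results may differ slightly depending on the convention. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks 1..n of values in ascending order; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1     # average of positions i+1 .. j+1
        i = j + 1
    return ranks


def wilcoxon_rank_sums(first: Sequence[float], second: Sequence[float]) -> Tuple[float, float]:
    """R+ and R- for paired results of two minimizers (lower is better).

    d_i = second_i - first_i, so d_i > 0 means the first algorithm won on problem i.
    Zero differences are discarded (classic signed-rank convention).
    """
    diffs = [b - a for a, b in zip(first, second) if b != a]
    ranks = average_ranks([abs(d) for d in diffs])
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus


print(wilcoxon_rank_sums([1.0, 2.0, 3.0, 4.0], [2.0, 2.5, 2.0, 4.0]))
```

For n non-zero differences, $R^+ + R^- = n(n+1)/2$, which is a quick sanity check on any reported pair.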

Table 7.

Results obtained by a Wilcoxon-test for SRIFA against SRPSO, SAMO-PSO, ECHT-EP2, UFA and NDE.

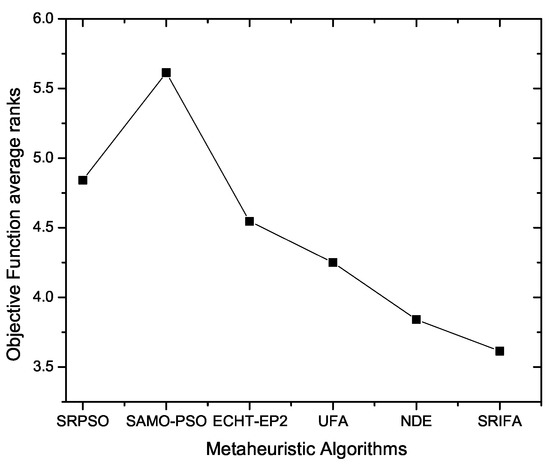

The results of the Friedman test are shown in Table 8. The metaheuristic algorithms were ranked according to their mean values. As Table 8 shows, SRIFA obtained the first rank (the lowest value corresponds to the first rank) among all metaheuristic algorithms over the 22 test functions. The average rankings based on the Friedman test are shown in Figure 5.
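The Friedman average ranks and the associated χ² statistic can be sketched as follows, assuming a table of mean values with one row per test function and one column per algorithm (lower is better) and averaging ranks over ties; the function names are hypothetical.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks 1..n of values in ascending order; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_average_ranks(results: Sequence[Sequence[float]]) -> List[float]:
    """results[i][j]: mean value of algorithm j on function i (lower is better)."""
    totals = [0.0] * len(results[0])
    for row in results:
        for j, r in enumerate(average_ranks(row)):
            totals[j] += r
    return [t / len(results) for t in totals]


def friedman_statistic(avg_ranks: Sequence[float], n_problems: int) -> float:
    """Friedman chi-square statistic (k - 1 degrees of freedom for k algorithms)."""
    k = len(avg_ranks)
    return (12.0 * n_problems / (k * (k + 1))
            * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4.0))


table = [[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 3.0, 2.0]]   # 3 functions x 3 algorithms
ranks = friedman_average_ranks(table)
print(ranks, friedman_statistic(ranks, len(table)))
```

The algorithm with the lowest average rank is placed first, and the statistic is compared with a χ² distribution with k − 1 degrees of freedom to decide whether the ranks differ significantly.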

Table 8.

Results obtained by the Friedman test for all metaheuristic algorithms.

Figure 5.

Average ranking of the proposed algorithm with various metaheuristic algorithms.

6.7. Computational Complexity of SRIFA

To reduce the complexity of the given problem, the constraints are normalized. Let n be the population size and t the number of iterations. In NIAs generally, the evaluation cost is $O(FE_{\max} \cdot C_f)$, where $FE_{\max}$ is the maximum number of function evaluations allowed and $C_f$ is the cost of evaluating the objective function. In the initialization phase of SRIFA, generating the random population and its opposite with the OBL technique costs $O(n)$ (treating the dimension as a constant). In the search and termination phase, the two inner loops of FA and the stochastic ranking based on bubble sort cost $O(n^2 t) + O(n^2 t)$. The total computational complexity of SRIFA is therefore $O(n) + O(n^2 t) + O(n^2 t) \approx O(n^2 t)$.

7. SRIFA for Constrained Engineering Design Problems

In this section, we evaluate the efficiency and performance of SRIFA on five widely used constrained engineering design problems: (i) tension/compression spring design [51]; (ii) the welded-beam problem [52]; (iii) the pressure-vessel problem [53]; (iv) the three-bar truss problem [51]; and (v) the speed-reducer problem [53]. For each problem, statistical outcomes were calculated over 25 independent runs. The mathematical formulations of all five constrained engineering design problems are given in Appendix A.

Every engineering problem has unique characteristics. The best design-variable, constraint and objective values obtained by SRIFA for the five engineering problems are listed in Table 9. The statistical outcomes and the number of function evaluations (NFEs) of SRIFA for the five problems, obtained over 25 independent runs, are listed in Table 10.

Table 9.

Best parameter, objective and constraint values for the five engineering problems.

Table 10.

Statistical outcomes achieved by SRIFA for all five engineering problems over 25 independent runs.

7.1. Tension/Compression Spring Design

The tension/compression spring design problem is to minimize the weight of the spring subject to four constraints: shear stress, deflection, surge frequency and outside diameter. There are three design variables: the mean coil diameter (D), the wire diameter (d) and the number of active coils (N).
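For reference, the sketch below evaluates the spring problem in the form commonly stated in the literature, with x = (d, D, N) and bounds 0.05 ≤ d ≤ 2, 0.25 ≤ D ≤ 1.3 and 2 ≤ N ≤ 15. It is not copied from Appendix A, which is not reproduced here, so the coefficients should be checked against it; the function names are hypothetical. The reference point used in the check is a widely reported near-optimal design (weight ≈ 0.012665), not a result claimed for SRIFA.

```python
from typing import List, Sequence

BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]   # (d, D, N)


def spring_weight(x: Sequence[float]) -> float:
    """Objective to minimize: (N + 2) * D * d^2 for x = (d, D, N)."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def spring_constraints(x: Sequence[float]) -> List[float]:
    """Constraints g_j(x) <= 0: deflection, shear stress, surge frequency, outside diameter."""
    d, D, N = x
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)
    g4 = (D + d) / 1.5 - 1.0
    return [g1, g2, g3, g4]


x_ref = (0.051689, 0.356718, 11.288965)             # widely reported near-optimal design
print(spring_weight(x_ref), spring_constraints(x_ref))
```

At such a design the first two constraints are (nearly) active, which is why published solutions often report tiny positive or negative values for g1 and g2 depending on rounding.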

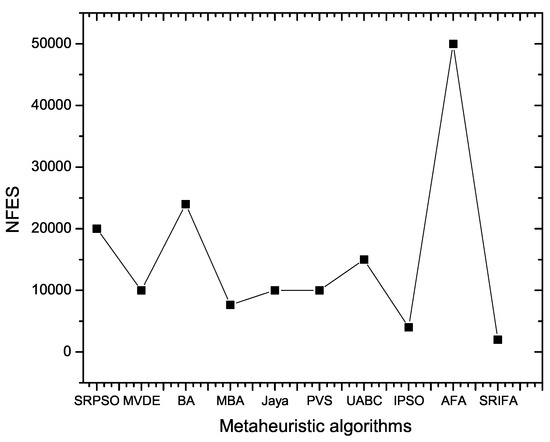

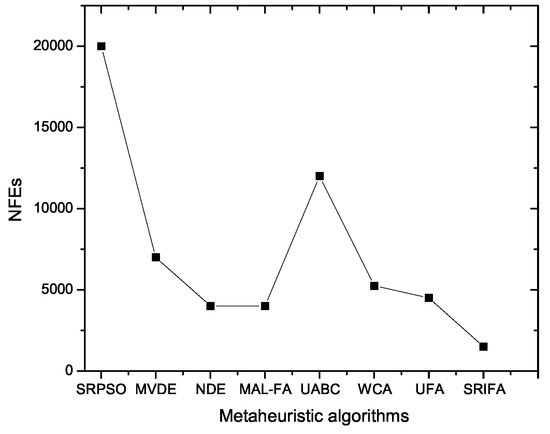

SRIFA is compared with SRPSO [46], MVDE [54], BA [44], MBA [55], JAYA [56], PVS [57], UABC [58], IPSO [59] and AFA [33]. The comparative results of SRIFA and these nine NIAs are given in Table 11. SRIFA provides the best results among all the algorithms, and its mean, worst and SD values are better than those of the other algorithms. Hence, SRIFA performs better in terms of these statistics. The numbers of function evaluations (NFEs) of the various NIAs are compared in Figure 6.

Table 11.

Statistical results of comparison between SRIFA and NIAs for tension/compression spring design problem.

Figure 6.

NIAs with NFEs for the tension/compression problem.

7.2. Welded-Beam Problem

The objective of the welded-beam problem is to minimize the fabrication cost subject to seven constraints: the bending stress in the beam ($\sigma$), the shear stress ($\tau$), the deflection of the beam ($\delta$), the buckling load on the bar ($P_c$), side constraints, the weld thickness and the member thickness (L).

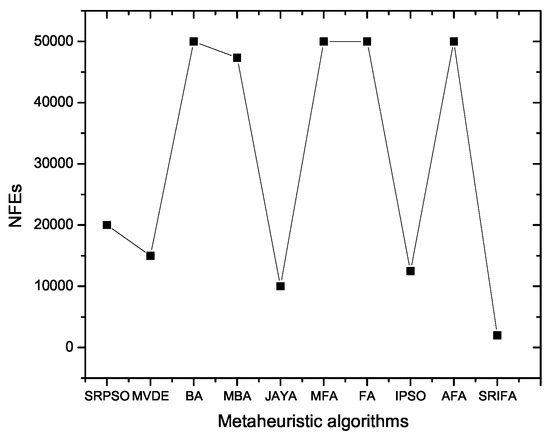

Many researchers have attempted to solve the welded-beam design problem. SRIFA is compared with SRPSO, MVDE, BA, MBA, JAYA, MFA, FA, IPSO and AFA, and the statistical results of SRIFA and these nine metaheuristic algorithms are given in Table 12. Overall, SRIFA performs better than the other metaheuristic algorithms on most statistics.

Table 12.

Statistical results of comparison between SRIFA and NIAs for welded-beam problem.

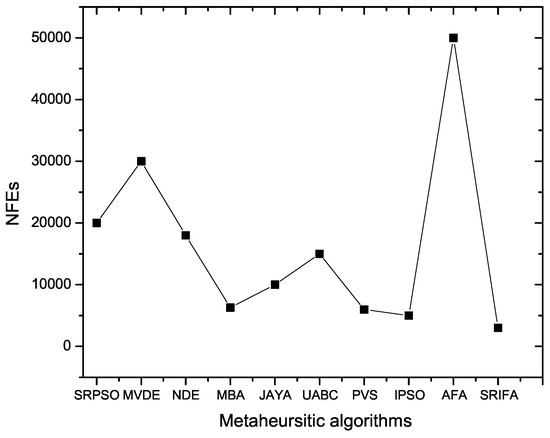

In terms of the best value, SRIFA outperforms seven algorithms (SRPSO, MVDE, BA, MBA, MFA, FA and IPSO) and obtains almost the same best value as JAYA and AFA. In terms of mean results, SRIFA performs better than all metaheuristic algorithms except AFA, which attains the same mean value. The standard deviation (SD) of SRIFA is slightly worse than that of MBA but better than those of all remaining algorithms (Table 12). SRIFA also requires the smallest number of function evaluations of all the metaheuristic algorithms. The NFEs of all NIAs are compared in Figure 7.

Figure 7.

NIAs with NFEs for welded-beam problem.

7.3. Pressure-Vessel Problem

The purpose of the pressure-vessel problem is to minimize the manufacturing cost of a cylindrical vessel subject to four constraints. The four design variables are the thickness of the head ($T_h$), the thickness of the vessel shell ($T_s$), the length of the cylindrical section without the head (L) and the inner radius of the vessel (R).

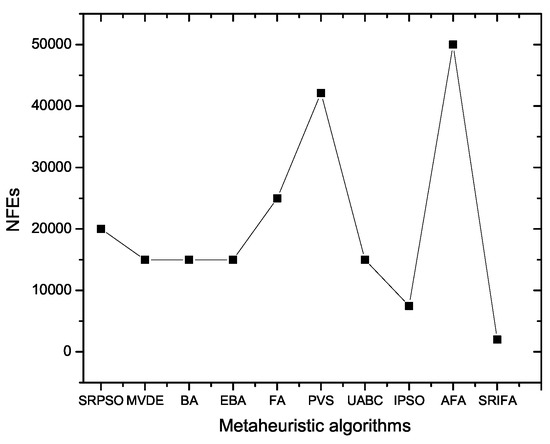

SRIFA is compared with SRPSO, MVDE, BA, EBA [60], FA [44], PVS, UABC, IPSO and AFA. The statistical results of SRIFA and these nine metaheuristic algorithms are listed in Table 13. SRIFA attains the same best value as six algorithms (MVDE, BA, EBA, PVS, UABC and IPSO), and its mean, worst, SD and NFE results are better than those of all the other NIAs. The NFEs of all NIAs are compared in Figure 8.

Table 13.

Statistical results of comparison between SRIFA and NIAs for the pressure-vessel problem.

Figure 8.

NFEs required by SRIFA and the compared NIAs for the pressure-vessel problem.

7.4. Three-Bar-Truss Problem

The aim of the three-bar-truss problem is to minimize the volume of a three-bar truss subject to three stress constraints.
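Because Appendix A.4 is not reproduced here, the following sketch assumes the three-bar-truss formulation commonly cited in the literature: by symmetry the two outer bars share cross-sectional area x1, the middle bar has area x2, and the usual constants are bar length l = 100, load P = 2 and allowable stress σ = 2. The function name `three_bar_truss` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def three_bar_truss(
    x: Sequence[float], length: float = 100.0, load: float = 2.0, stress: float = 2.0
) -> Tuple[float, List[float]]:
    """Volume and stress constraints (feasible when g <= 0); x = (x1, x2) cross-sectional areas."""
    x1, x2 = x
    sqrt2 = math.sqrt(2.0)
    # Two outer bars (length sqrt(2) * l each, area x1) plus the middle bar (length l, area x2).
    volume = (2.0 * sqrt2 * x1 + x2) * length
    denom = sqrt2 * x1 ** 2 + 2.0 * x1 * x2
    g = [
        (sqrt2 * x1 + x2) / denom * load - stress,  # stress constraint 1
        x2 / denom * load - stress,                 # stress constraint 2
        1.0 / (sqrt2 * x2 + x1) * load - stress,    # stress constraint 3
    ]
    return volume, g


volume, g = three_bar_truss([0.78867513, 0.40824828])
print(round(volume, 4), g)
```

At the frequently reported optimum (0.78867513, 0.40824828) the volume is about 263.896 and the first stress constraint is active, while the other two are slack.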

SRIFA is compared with seven NIAs: SRPSO, MVDE, NDE [49], MAL-FA [61], UABC, WCA [62] and UFA; the statistical results are given in Table 14. SRIFA attains almost the same best value as all of them except UABC. In terms of the mean and worst values, SRIFA performs better than all the algorithms except NDE and UFA, which reach the same mean and worst values. The standard deviation (SD) of SRIFA is better than that of all the compared algorithms, and SRIFA requires the smallest number of NFEs. The NFEs of all NIAs are compared in Figure 9.

Table 14.

Statistical results of comparison between SRIFA and NIAs for the three-bar truss problem.

Figure 9.

NFEs required by SRIFA and the compared NIAs for the three-bar-truss problem.

7.5. Speed-Reducer Problem

The aim of this problem is to minimize the weight of a speed reducer subject to eleven constraints. The problem has seven design variables: the face width of the gear, the number of teeth in the pinion, the module of the teeth, the length of the first shaft between bearings, the diameter of the first shaft, the length of the second shaft between bearings, and the diameter of the second shaft.
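Appendix A.5 is not reproduced here, so the sketch below assumes the speed-reducer formulation commonly cited in the literature. It orders the variables differently from the list above: x1 face width, x2 module of teeth, x3 number of teeth in the pinion, x4 and x5 lengths of the first and second shafts between bearings, x6 and x7 diameters of the first and second shafts. In the original problem x3 is an integer; the sketch does not enforce this. The function name `speed_reducer` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def speed_reducer(x: Sequence[float]) -> Tuple[float, List[float]]:
    """Weight and the eleven constraint values (feasible when every g <= 0)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    weight = (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
              - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
              + 7.4777 * (x6 ** 3 + x7 ** 3)
              + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))
    g = [
        27.0 / (x1 * x2 ** 2 * x3) - 1.0,                 # bending stress of the gear teeth
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1.0,           # surface stress
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1.0,       # deflection of shaft 1
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1.0,       # deflection of shaft 2
        math.sqrt((745.0 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110.0 * x6 ** 3) - 1.0,   # stress in shaft 1
        math.sqrt((745.0 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85.0 * x7 ** 3) - 1.0,   # stress in shaft 2
        x2 * x3 / 40.0 - 1.0,                             # space limitation
        5.0 * x2 / x1 - 1.0,                              # lower bound on width-to-module ratio
        x1 / (12.0 * x2) - 1.0,                           # upper bound on width-to-module ratio
        (1.5 * x6 + 1.9) / x4 - 1.0,                      # shaft 1 design rule
        (1.1 * x7 + 1.9) / x5 - 1.0,                      # shaft 2 design rule
    ]
    return weight, g


weight, g = speed_reducer([3.5, 0.7, 17.0, 7.3, 7.715320, 3.350215, 5.286654])
print(round(weight, 2), len(g))
```

At the widely reported best design (3.5, 0.7, 17, 7.3, 7.715320, 3.350215, 5.286654) the weight evaluates to about 2994.47.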

The proposed SRIFA is compared with nine metaheuristic algorithms: SRPSO, MVDE, NDE, MBA, JAYA, UABC, PVS, IPSO and AFA; the statistical results are listed in Table 15. SRIFA obtains a better best value than eight of these algorithms and the same best value as JAYA. The best, mean and worst values of SRIFA and JAYA are almost identical, but SRIFA requires fewer NFEs. Hence, SRIFA performs better overall on this problem. The numbers of function evaluations (NFEs) of the compared algorithms are plotted in Figure 10.

Table 15.

Statistical results of comparison between SRIFA and NIAs for the speed-reducer problem.

Figure 10.

NFEs required by SRIFA and the compared NIAs for the speed-reducer problem.

8. Conclusions

This paper presents a review of constraint-handling techniques and proposes a new hybrid algorithm, the Stochastic Ranking with an Improved Firefly Algorithm (SRIFA), for solving constrained optimization problems. In population-based algorithms, stagnation and premature convergence occur when exploration and exploitation are unbalanced during the search, which traps the solution in a local optimum. To overcome this problem, the opposite-based learning (OBL) approach was applied to the basic FA. OBL was applied to the initial population, which increases population diversity and improves the performance of the proposed algorithm.
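Opposite-based learning, as introduced by Tizhoosh [40], pairs each random point x in the box [a, b] with its opposite a + b - x. The following is a minimal sketch of opposition-based initialization, assuming the common scheme of keeping the n fittest of the 2n candidates and ranking them by a plain objective. In SRIFA itself, constrained candidates would be compared with its constraint-handling rules, which this sketch omits. The function `obl_initialize` is hypothetical.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def obl_initialize(
    n: int,
    lower: Sequence[float],
    upper: Sequence[float],
    fitness: Callable[[Vector], float],
    rng: random.Random,
) -> List[Vector]:
    """Opposition-based initialization for minimization: keep the n fittest of 2n points."""
    # Uniformly random population inside the box [lower, upper].
    population = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n)]
    # Opposite of each point, component-wise: x_opp = lo + hi - x.
    opposites = [[lo + hi - xi for xi, lo, hi in zip(x, lower, upper)] for x in population]
    # Rank the union of both sets and keep the n best (lowest fitness).
    candidates = population + opposites
    candidates.sort(key=fitness)
    return candidates[:n]


rng = random.Random(42)
sphere = lambda x: sum(v * v for v in x)
init = obl_initialize(20, [-5.0, -5.0], [5.0, 5.0], sphere, rng)
print(len(init), sphere(init[0]))
```

Evaluating the opposites doubles the initialization cost (2n evaluations instead of n), which is the usual price paid for the more diverse starting population.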

A random scale factor was incorporated into the basic FA to balance intensification and diversification; it helps to avoid premature convergence and improves the performance of the proposed algorithm. SRIFA was applied to the 24 CEC 2006 benchmark test functions and five constrained engineering design problems, and various computational experiments were conducted to assess its effectiveness and solution quality. The statistical results show that SRIFA clearly outperforms the basic FA.
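This section does not state exactly where the random scale factor enters the FA update. One plausible reading, assumed here, borrows the random scale factor of Das et al. [41], s = 0.5(1 + U(0, 1)), and applies it to the attraction term of the standard FA move, x_i ← x_i + s·β0·exp(-γ r_ij²)(x_j - x_i) + α(U(0, 1) - 0.5). The function `move_firefly` and its parameters are hypothetical and are not the authors' implementation.

```python
import math
import random
from typing import List, Sequence


def move_firefly(
    xi: Sequence[float],
    xj: Sequence[float],
    beta0: float,
    gamma: float,
    alpha: float,
    lower: Sequence[float],
    upper: Sequence[float],
    rng: random.Random,
) -> List[float]:
    """Move firefly i toward a brighter firefly j (hypothetical random-scale-factor variant)."""
    r2 = sum((a - b) ** 2 for a, b in zip(xi, xj))  # squared Euclidean distance r_ij^2
    beta = beta0 * math.exp(-gamma * r2)             # attractiveness at distance r_ij
    scale = 0.5 * (1.0 + rng.random())               # random scale factor in [0.5, 1.0)
    moved = []
    for a, b, lo, hi in zip(xi, xj, lower, upper):
        step = scale * beta * (b - a) + alpha * (rng.random() - 0.5)
        moved.append(min(max(a + step, lo), hi))     # clip back into the search box
    return moved


rng = random.Random(7)
print(move_firefly([0.0, 0.0], [1.0, 1.0], beta0=1.0, gamma=1.0, alpha=0.2,
                   lower=[-5.0, -5.0], upper=[5.0, 5.0], rng=rng))
```

Drawing one scale factor per move shrinks the attraction step by a random amount, so fireflies do not all jump the same fraction of the way toward brighter ones; the α term still supplies independent per-coordinate randomization.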

Furthermore, the computational experiments demonstrated that SRIFA performed better than five other NIAs, and its performance and efficiency were significantly superior to those of other metaheuristic algorithms from the literature. The statistical analysis of SRIFA was conducted using the Wilcoxon and Friedman tests, and the results showed that the efficiency, solution quality and performance of SRIFA are statistically superior to those of the compared NIAs. SRIFA also solved the five constrained engineering design problems efficiently. In future work, SRIFA can be modified and extended to solve multi-objective problems.
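The Friedman test ranks the algorithms on every problem (rank 1 for the best, with ties sharing the average rank) and checks whether the mean ranks differ. The following is a minimal sketch of the mean ranks and of the Friedman statistic χ²_F = 12N / (k(k+1)) · [Σ_j R_j² - k(k+1)²/4], as given in the tutorial of Derrac et al. [50], for N problems, k algorithms and a lower-is-better metric. The function names are hypothetical and the scores are made up.

```python
from typing import List, Sequence


def average_ranks(results: Sequence[Sequence[float]]) -> List[float]:
    """Mean Friedman rank per algorithm; results[p][a] is algorithm a's score on problem p."""
    k = len(results[0])
    totals = [0.0] * k
    for row in results:
        order = sorted(range(k), key=lambda a: row[a])  # best (lowest) score first
        i = 0
        while i < k:
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1                                  # extend the block of tied scores
            shared = (i + j) / 2.0 + 1.0                # average of 1-based ranks i+1 .. j+1
            for t in range(i, j + 1):
                totals[order[t]] += shared
            i = j + 1
    return [t / len(results) for t in totals]


def friedman_statistic(results: Sequence[Sequence[float]]) -> float:
    """Friedman chi-square statistic for N problems (rows) and k algorithms (columns)."""
    n, k = len(results), len(results[0])
    ranks = average_ranks(results)
    return 12.0 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4.0)


# Made-up scores for 3 problems x 3 algorithms (lower is better), not data from the paper.
scores = [[0.1, 0.3, 0.2], [0.0, 0.5, 0.5], [0.2, 0.4, 0.3]]
print(average_ranks(scores), friedman_statistic(scores))
```

For p-values in practice, `scipy.stats.friedmanchisquare` (overall test) and `scipy.stats.wilcoxon` (pairwise signed-rank test) can be used; SciPy's Friedman implementation applies a tie correction, so its statistic can differ slightly from this sketch when ties occur.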

Author Contributions

Conceptualization, U.B.; methodology, U.B. and D.S.; software, U.B. and D.S.; validation, U.B. and D.S.; formal analysis, U.B. and D.S.; investigation, U.B.; resources, D.S.; data curation, D.S.; writing—original draft preparation, U.B.; writing—review and editing, U.B. and D.S.; supervision, D.S.

Acknowledgments

The authors would like to thank Dr. Yaroslav Sergeev for sharing the GKLS generator. The authors would also like to thank Mr. Rohit for his valuable suggestions, which contributed substantially to the technical improvement of the manuscript.

Conflicts of Interest

The authors have declared no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| COPs | Constrained Optimization Problems |
| CHT | Constraint-Handling Techniques |
| EAs | Evolutionary Algorithms |
| FA | Firefly Algorithm |
| OBL | Opposite-Based Learning |
| NIAs | Nature-Inspired Algorithms |
| NFEs | Number of Function Evaluations |
| SRA | Stochastic Ranking Approach |
| SRIFA | Stochastic Ranking with Improved Firefly Algorithm |

Appendix A

Appendix A.1. Tension/Compression Spring Design Problem

Appendix A.2. Welded-Beam-Design Problem

Appendix A.3. Pressure-Vessel Design Problem

Appendix A.4. Three-Bar-Truss Design Problem

Appendix A.5. Speed-Reducer-Design Problem

References

1. Slowik, A.; Kwasnicka, H. Nature Inspired Methods and Their Industry Applications—Swarm Intelligence Algorithms. IEEE Trans. Ind. Inform. 2018, 14, 1004–1015.

2. Goldberg, D.E.; Holland, J.H. Genetic Algorithms and Machine Learning. Mach. Learn. 1988, 3, 95–99.

3. Fogel, D.B. An introduction to simulated evolutionary optimization. IEEE Trans. Neural Netw. 1994, 5, 3–14.

4. Beyer, H.G.; Schwefel, H.P. Evolution strategies—A comprehensive introduction. Nat. Comput. 2002, 1, 3–52.

5. Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT Press: Cambridge, MA, USA, 1992.

6. Beni, G. From Swarm Intelligence to Swarm Robotics. In Swarm Robotics; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–9.

7. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

8. Yang, X.S. Firefly algorithm, stochastic test functions and design optimisation. Int. J. Bio-Inspired Comput. 2010, 2, 78–84.

9. Dorigo, M.; Birattari, M. Ant Colony Optimization. In Encyclopedia of Machine Learning; Springer: Boston, MA, USA, 2010; pp. 36–39.

10. Yang, X.; Deb, S. Cuckoo Search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 210–214.

11. Yang, X.S. A New Metaheuristic Bat-Inspired Algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74.

12. Biyanto, T.R.; Irawan, S.; Febrianto, H.Y.; Afdanny, N.; Rahman, A.H.; Gunawan, K.S.; Pratama, J.A.; Bethiana, T.N. Killer Whale Algorithm: An Algorithm Inspired by the Life of Killer Whale. Procedia Comput. Sci. 2017, 124, 151–157.

13. Saha, A.; Das, P.; Chakraborty, A.K. Water evaporation algorithm: A new metaheuristic algorithm towards the solution of optimal power flow. Eng. Sci. Technol. Int. J. 2017, 20, 1540–1552.

14. Abdelaziz, A.Y.; Fathy, A. A novel approach based on crow search algorithm for optimal selection of conductor size in radial distribution networks. Eng. Sci. Technol. Int. J. 2017, 20, 391–402.

15. Blum, C.; Roli, A. Metaheuristics in Combinatorial Optimization: Overview and Conceptual Comparison. ACM Comput. Surv. 2003, 35, 268–308.

16. Črepinšek, M.; Liu, S.H.; Mernik, M. Exploration and Exploitation in Evolutionary Algorithms: A Survey. ACM Comput. Surv. 2013, 45, 35:1–35:33.

17. Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. Emmental-Type GKLS-Based Multiextremal Smooth Test Problems with Non-linear Constraints. In Learning and Intelligent Optimization; Battiti, R., Kvasov, D.E., Sergeyev, Y.D., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 383–388.

18. Kvasov, D.E.; Mukhametzhanov, M.S. Metaheuristic vs. deterministic global optimization algorithms: The univariate case. Appl. Math. Comput. 2018, 318, 245–259.

19. Mezura-Montes, E.; Coello, C.A.C. Constraint-handling in nature-inspired numerical optimization: Past, present and future. Swarm Evol. Comput. 2011, 1, 173–194.

20. Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 311–338.

21. Wang, Y.; Wang, B.; Li, H.; Yen, G.G. Incorporating Objective Function Information Into the Feasibility Rule for Constrained Evolutionary Optimization. IEEE Trans. Cybern. 2016, 46, 2938–2952.

22. Tasgetiren, M.F.; Suganthan, P.N. A Multi-Populated Differential Evolution Algorithm for Solving Constrained Optimization Problem. In Proceedings of the 2006 IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 33–40.

23. Farmani, R.; Wright, J.A. Self-adaptive fitness formulation for constrained optimization. IEEE Trans. Evol. Comput. 2003, 7, 445–455.

24. Kramer, O.; Schwefel, H.P. On three new approaches to handle constraints within evolution strategies. Natural Comput. 2006, 5, 363–385.

25. Coello, C.A.C. Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: A survey of the state of the art. Comput. Methods Appl. Mech. Eng. 2002, 191, 1245–1287.

26. Chootinan, P.; Chen, A. Constraint handling in genetic algorithms using a gradient-based repair method. Comput. Oper. Res. 2006, 33, 2263–2281.

27. Regis, R.G. Constrained optimization by radial basis function interpolation for high-dimensional expensive black-box problems with infeasible initial points. Eng. Optim. 2014, 46, 218–243.

28. Mezura-Montes, E.; Reyes-Sierra, M.; Coello, C.A.C. Multi-objective Optimization Using Differential Evolution: A Survey of the State-of-the-Art. In Advances in Differential Evolution; Springer: Berlin/Heidelberg, Germany, 2008; pp. 173–196.

29. Mallipeddi, R.; Das, S.; Suganthan, P.N. Ensemble of Constraint Handling Techniques for Single Objective Constrained Optimization. In Evolutionary Constrained Optimization; Springer: New Delhi, India, 2015; pp. 231–248.

30. Takahama, T.; Sakai, S.; Iwane, N. Solving Nonlinear Constrained Optimization Problems by the ε Constrained Differential Evolution. In Proceedings of the 2006 IEEE International Conference on Systems, Man and Cybernetics, Taipei, Taiwan, 8–11 October 2006; Volume 3, pp. 2322–2327.

31. Padhye, N.; Mittal, P.; Deb, K. Feasibility Preserving Constraint-handling Strategies for Real Parameter Evolutionary Optimization. Comput. Optim. Appl. 2015, 62, 851–890.

32. Fister, I.; Yang, X.S.; Fister, D. Firefly Algorithm: A Brief Review of the Expanding Literature. In Cuckoo Search and Firefly Algorithm: Theory and Applications; Springer International Publishing: Cham, Switzerland, 2014; pp. 347–360.

33. Baykasoğlu, A.; Ozsoydan, F.B. Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl. Soft Comput. 2015, 36, 152–164.

34. Costa, M.F.P.; Rocha, A.M.A.C.; Francisco, R.B.; Fernandes, E.M.G.P. Firefly penalty-based algorithm for bound constrained mixed-integer nonlinear programming. Optimization 2016, 65, 1085–1104.

35. Brajevic, I.; Tuba, M.; Bacanin, N. Firefly Algorithm with a Feasibility-Based Rules for Constrained Optimization. In Proceedings of the 6th WSEAS European Computing Conference, Prague, Czech Republic, 24–26 September 2012; pp. 163–168.

36. Deshpande, A.M.; Phatnani, G.M.; Kulkarni, A.J. Constraint handling in Firefly Algorithm. In Proceedings of the 2013 IEEE International Conference on Cybernetics (CYBCO), Lausanne, Switzerland, 13–15 July 2013; pp. 186–190.

37. Brajević, I.; Ignjatović, J. An upgraded firefly algorithm with feasibility-based rules for constrained engineering optimization problems. J. Intell. Manuf. 2018.

38. Chou, J.S.; Ngo, N.T. Modified Firefly Algorithm for Multidimensional Optimization in Structural Design Problems. Struct. Multidiscip. Optim. 2017, 55, 2013–2028.

39. Runarsson, T.P.; Yao, X. Stochastic ranking for constrained evolutionary optimization. IEEE Trans. Evol. Comput. 2000, 4, 284–294.

40. Tizhoosh, H.R. Opposition-Based Learning: A New Scheme for Machine Intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 28–30 November 2005; Volume 1, pp. 695–701.

41. Das, S.; Konar, A.; Chakraborty, U.K. Two Improved Differential Evolution Schemes for Faster Global Search. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation, Washington, DC, USA, 25–29 June 2005; pp. 991–998.

42. Ismail, M.M.; Othman, M.A.; Sulaiman, H.A.; Misran, M.H.; Ramlee, R.H.; Abidin, A.F.Z.; Nordin, N.A.; Zakaria, M.I.; Ayob, M.N.; Yakop, F. Firefly algorithm for path optimization in PCB holes drilling process. In Proceedings of the 2012 International Conference on Green and Ubiquitous Technology, Jakarta, Indonesia, 30 June–1 July 2012; pp. 110–113.

43. Liang, J.; Runarsson, T.P.; Mezura-Montes, E.; Clerc, M.; Suganthan, P.N.; Coello, C.C.; Deb, K. Problem definitions and evaluation criteria for the CEC 2006 special session on constrained real-parameter optimization. J. Appl. Mech. 2006, 41, 8–31.

44. Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Mixed variable structural optimization using Firefly Algorithm. Comput. Struct. 2011, 89, 2325–2336.

45. Sergeyev, Y.D.; Kvasov, D.; Mukhametzhanov, M. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 453.

46. Ali, L.; Sabat, S.L.; Udgata, S.K. Particle Swarm Optimisation with Stochastic Ranking for Constrained Numerical and Engineering Benchmark Problems. Int. J. Bio-Inspired Comput. 2012, 4, 155–166.

47. Elsayed, S.M.; Sarker, R.A.; Mezura-Montes, E. Self-adaptive mix of particle swarm methodologies for constrained optimization. Inf. Sci. 2014, 277, 216–233.

48. Mallipeddi, R.; Suganthan, P.N. Ensemble of Constraint Handling Techniques. IEEE Trans. Evol. Comput. 2010, 14, 561–579.

49. Mohamed, A.W. A novel differential evolution algorithm for solving constrained engineering optimization problems. J. Intell. Manuf. 2018, 29, 659–692.

50. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

51. Ray, T.; Liew, K.M. Society and civilization: An optimization algorithm based on the simulation of social behavior. IEEE Trans. Evol. Comput. 2003, 7, 386–396.

52. Huang, F.Z.; Wang, L.; He, Q. An effective co-evolutionary differential evolution for constrained optimization. Appl. Math. Comput. 2007, 186, 340–356.

53. Lee, K.S.; Geem, Z.W. A new meta-heuristic algorithm for continuous engineering optimization: Harmony search theory and practice. Comput. Methods Appl. Mech. Eng. 2005, 194, 3902–3933.

54. de Melo, V.V.; Carosio, G.L. Investigating Multi-View Differential Evolution for solving constrained engineering design problems. Expert Syst. Appl. 2013, 40, 3370–3377.

55. Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. 2013, 13, 2592–2612.

56. Rao, R.V.; Waghmare, G. A new optimization algorithm for solving complex constrained design optimization problems. Eng. Optim. 2017, 49, 60–83.

57. Savsani, P.; Savsani, V. Passing vehicle search (PVS): A novel metaheuristic algorithm. Appl. Math. Model. 2016, 40, 3951–3978.

58. Brajevic, I.; Tuba, M. An upgraded artificial bee colony (ABC) algorithm for constrained optimization problems. J. Intell. Manuf. 2013, 24, 729–740.

59. Guedria, N.B. Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl. Soft Comput. 2016, 40, 455–467.

60. Yılmaz, S.; Küçüksille, E.U. A new modification approach on bat algorithm for solving optimization problems. Appl. Soft Comput. 2015, 28, 259–275.

61. Balande, U.; Shrimankar, D. An oracle penalty and modified augmented Lagrangian methods with firefly algorithm for constrained optimization problems. Oper. Res. 2017.

62. Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm—A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110–111, 151–166.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).